
Search - "compilers"

-

!rant

Has anyone been paying attention to what Google's been up to? Seriously!

1) Fuchsia. An entire OS built from the ground up to replace Linux and run on thin microcontrollers that Linux would bog down — has GNU compilers & Dart support baked in.

2) Flutter. It's like React Native but with Dart and more components available. Super Alpha, but there's "Flutter Gallery" to see examples.

3) Escher. A GPU-renderer that coincidentally focuses on features that Material UI needs, used with Fuchsia. I can't find screenshots anywhere; unfortunately I tore down my Fuchsia box before trying this out. Be sure to tag me in a screenshot if you get this working!

4) Progressive Web Apps (aka Progress Web APKs). Chrome has an experimental feature to turn Web Apps into hybrid native apps. There's a whole set of documentation for converting and creating apps.

And enough about Google, Microsoft actually had a really cool announcement as well! (hush hush, it's really exciting for once, trust me)...

Qualcomm and Microsoft teamed up to run the full desktop version of Windows 10 on a Snapdragon 820. They go so far as to show off the latest version of x86 desktop Photoshop with no modifications running with excellent performance. They've announced full support for the upcoming Snapdragon 835, which will be a beast compared to the 820! This is all done by virtualization and interop libraries/runtimes, similar to how Wine runs Windows apps on Linux (but much better compatibility and more runtime complete).

Lastly, (go easy guys, I know how much some of you love Apple) I keep hearing of Apple's top talent going to Tesla. I'm really looking forward to the Tesla Roof and Model 3. It's about time someone pushed for cheap lithium cells for the home (typical AGM just doesn't last) and made panels look attractive!

Tech is exciting, isn't it!?

-

I'm a self-taught 19-year-old programmer. Coding since 10, dropped out of high school and got my first job at 15.

In the early days I was extremely passionate, learning SICP, Algorithms, doing Haskell, C/C++, Rust, Assembly, writing toy compilers/interpreters, tweaking Gentoo/Arch. Even got a lambda tattoo on my arm after learning lambda calculus and Church numerals.

My first job - a company which raised $100,000 on Kickstarter. The CEO was a dumb millionaire hippie, who was bored with his money, so he wanted to run a company even though he had no idea what he was doing. He used to talk about how he built our product, even though he had 0 technical knowledge whatsoever. He was on the news a few times, which was pretty cringeworthy. The company had only 1 programmer (other than me) who was pretty decent.

We shipped the project, but soon we burned through the Kickstarter money and the sales dried up. Instead of trying to acquire customers (or abandoning the project), boss kept looking for investors, which kept us afloat for an extra year.

Eventually the money dried up, and instead of closing gates, boss decreased our paychecks without our knowledge. He also converted us from full-time employees to "contractors" (also without our knowledge) so he wouldn't have to pay taxes for us. My paycheck decreased by 40% but I still stayed.

One day, I was trying to burn a USB drive, and I did "dd of=/dev/sda" instead of sdb, therefore wiping out our development server. They asked me to stay at company, but I turned in my resignation letter the next day (my highest ever post on reddit was in /r/TIFU).

Next, I found a job at a "finance" company. $50k/year as an 18-year-old. CEO was a good-looking smooth-talker who made a few million bucks talking old people into giving him their retirement money.

He claimed he changed his ways, and was now trying to help average folks save money. So far I've been here 8 months and I do not see that happening. He forces me to do sketchy shit that clearly doesn't have clients' best interests in mind.

I am the only developer, and I quickly became a back-end and front-end ninja.

I switched the company infrastructure from shitty drag+drop website builder, WordPress and shitty Excel macros into a beautiful custom-written python back-end.

Little did I know, this company doesn't need a real programmer. I don't have clear requirements, I get unrealistic deadlines, and boss is too busy to even communicate what he wants from me.

Eventually I sold my soul. I switched parts of it to WordPress, because I was not given enough time to write custom code properly.

For latest project, I switched from using custom React/Material/Sass to using drag+drop TypeForms for surveys.

I used to be an extremist FLOSS Richard Stallman fanboy, but eventually I traded my morals, dreams and ideals for a paycheck. Hey, $50k is not bad, so maybe I shouldn't be complaining? :(

I got addicted to pot for 2 years. Recently I've gotten arrested, and it is honestly one of the best things that ever happened to me. Before I got arrested, I did some freelancing for a mugshot website. In unrelated news, my mugshot disappeared.

I have been sober for 2 months now, and my brain is finally coming back.

I know average developer hits a wall at around $80k, and then you have to either move into management or have your own business.

After getting sober, I realized that money isn't going to make me happy, and I don't want to manage people. I'm an old-school neck-beard hacker. My true passion is mathematics and physics. I don't want to glue bullshit libraries together.

I want to write real code, trace kernel bugs, optimize compilers. Alas, I was born in the wrong generation.

I've started studying real analysis, brushing up differential equations, and now trying to tackle machine learning and Neural Networks, and understanding the juicy math behind gradient descent.

I don't know what my plan is for the future, but I'll figure it out as long as I have my brain. Maybe I will continue making shitty forms and collect paycheck, while studying mathematics. Maybe I will figure out something else.

But I can't just let my brain rot while chasing money and impressing dumb bosses. If I wait until I get rich to do things I love, my brain will be too far gone at that point. I can't just sell myself out. I'm coming back to my roots.

I still feel like after experiencing industry and pot, I'm a shittier developer than I was at age 15. But my passion is slowly coming back.

Any suggestions from wise ol' neckbeards on how to proceed?

-

These facts are killing me

"During his own Google interview, Jeff Dean was asked the implications if P=NP were true. He said, "P = 0 or N = 1." Then, before the interviewer had even finished laughing, Jeff examined Google’s public certificate and wrote the private key on the whiteboard."

"Compilers don't warn Jeff Dean. Jeff Dean warns compilers."

"gcc -O4 emails your code to Jeff Dean for a rewrite."

"When Jeff Dean sends an ethernet frame there are no collisions because the competing frames retreat back up into the buffer memory on their source nic."

"When Jeff Dean has an ergonomic evaluation, it is for the protection of his keyboard."

"When Jeff Dean designs software, he first codes the binary and then writes the source as documentation."

"When Jeff has trouble sleeping, he Mapreduces sheep."

"When Jeff Dean listens to mp3s, he just cats them to /dev/dsp and does the decoding in his head."

"Google search went down for a few hours in 2002, and Jeff Dean started handling queries by hand. Search Quality doubled."

"One day Jeff Dean grabbed his Etch-a-Sketch instead of his laptop on his way out the door. On his way back home to get his real laptop, he programmed the Etch-a-Sketch to play Tetris."

"Jeff Dean once shifted a bit so hard, it ended up on another computer."

-

It seems like every other day I run into some post/tweet/article about people whining about having the imposter syndrome. It seems like no other profession (except maybe acting) is filled with people like this.

Well lemme answer that question for you lot.

YES YOU ARE A BLOODY IMPOSTER.

There. I said it. BUT.

Know that you're already a step up from those clowns that talk a lot but say nothing of substance.

You're better than the rockstar dev that "understands" the entire codebase because s/he is the freaking moron that created that convoluted nonsensical pile of shit in the first place.

You're better than that person who thinks knowing nothing is fine. It's just a job and a pay cheque.

The main question is, what the flying fuck are you going to do about being an imposter? Whine about it on twtr/fb/medium? HOW ABOUT YOU GO LEARN SOMETHING BEYOND FRAMEWORKS OR MAKING DUMB CRUD WEBSITES WITH COLOR CHANGING BUTTONS.

Computers are hard. Did you expect to spend 1 year studying random things and waltz into the field as a fucking expert? FUCK YOU. How about you let a "doctor" who taught himself medicine for 1 year do your open heart surgery?

Learn how a goddamn computer actually works. Do you expect your doctors and surgeons to be ignorant of how the body works? If you aspire to be a professional WHY THE FUCK DO YOU STAY AT THE SURFACE.

Go learn about Compilers, complete projects with low level languages like C / Rust (protip: stay away from C++, Java doesn't count), read up on CPU architecture, to name a few topics.

Then, after learning how your computers work, you can start learning functional programming and appreciate the tradeoffs it makes. Or go learn AI/ML/DS. But preferably not before.

Basically, it's fine if you were never formally taught. Get yourself schooled, quit bitching, and be patient. It's ok to be stupid, but it's not ok to stay stupid forever.

/rant

-

Fun/Interesting fact:

"++i" can be slightly faster than "i++"

Because "i++" can require a local copy of the value of "i" before it gets incremented, while "++i" never does. In some cases, some compilers will optimize it away if possible... but it's not always possible, and not all compilers do this.
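A minimal sketch of where that copy comes from, assuming a user-defined type rather than a plain int. `Counter` and `copy_count` are hypothetical names made up for this illustration; for built-in integers whose result is unused, compilers generally emit the same code for both forms.

```cpp
#include <cassert>

// Hypothetical global, used only to make copies visible in this sketch.
static int copy_count = 0;

// Hypothetical counter type with the two conventional increment operators.
struct Counter {
    int value = 0;

    Counter() = default;
    // The copy constructor records every copy that is made.
    Counter(const Counter& other) : value(other.value) { ++copy_count; }
    // Moves are not counted, so returning the saved copy adds nothing.
    Counter(Counter&& other) noexcept : value(other.value) {}

    // Pre-increment: change in place and return a reference. No copy.
    Counter& operator++() {
        ++value;
        return *this;
    }

    // Post-increment: save the old state, increment, return the old state.
    Counter operator++(int) {
        Counter old(*this);  // this is the local copy the post talks about
        ++value;
        return old;
    }
};
```

With this type, `++c` leaves `copy_count` untouched while `c++` bumps it by one, which is why the prefix form is the usual default for iterators and other class types.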

-

Well, it all started off with hardware-level programming involving jumpers and stuff like that... Then came Assembly, which was good.. B, C compilers. Finally came the interpreted languages, and that's where in my opinion the abstraction should've ended. But no, we needed more frameworks, more libraries, even more abstraction! Where does it end? As it seems to be going, I guess that users will have kid toys - no iToys! - for electronics and we'll be programming on with bloated Scratch GUIs. Nothing against Scratch, but that shit ain't proper programming anymore. God I can't wait for the future.

ABSTRACT ALL THE THINGS!!!

Oh and not to mention that all software will be governed in political correctness by some Alex SJW AI shit that became sentient. Not a single programming term will be non-offensive anymore, no matter how hard you try to not offend anyone, or God forbid - don't care about it because you just want to make something that's readable, usable and working!! Terms, UI names for buttons, heck even icons! REMOVE IT BECAUSE IT OFFENDS SOMEONE THAT I DON'T EVEN KNOW JACK SHIT ABOUT!!!

-

Sometimes.....

When I want to escape how dull/repetitive/boring the world of web development is. I crack open a nice lil terminal, dust off my gcc/g++ compilers and fuck around in C or C++ till my eyes start to bleed.

I have been fucking around with systems development. Mainly with Linux programming. I have also started to get deeper on game engine design and compiler design....because low level development is where it's at.

A man can only fuck around rest apis, css and html and the endless sea of Javascript and other dynamic languages for so long before going crazy.

Eventually.....I would want to code something impressive enough to give me a spot somewhere as a C or C++ developer. I just can't work with web development any longer man. It really is not what I want to do, the fact that I do it (and that I am good at it) is circumstantial more than because I really enjoy it. I really don't.

-

Let the students use their own laptops. Even buy them one instead of having computers on site that no one uses for coding but only for some multiple choice tests and to browse Facebook.

Teach them 10 finger typing. (Don't be too strict and allow for personal preferences.)

Teach them text navigation and editing shortcuts. They should be able to scroll per page, jump to the beginning or end of the line or jump word by word. (I am not talking vi bindings or emacs magic.) And no, key repeat is an antifeature.

Teach them VCS before their first group assignment. Let's be honest, VCS means git nowadays. Yet teach them git != GitHub.

Teach git through the command line. They are allowed to use a gui once they aren't afraid to resolve a merge conflict or to rebase their feature branch against master. Just committing and pushing is not enough.

Teach them test-driven development ASAP. You can even give them assignments with a codebase of failing tests and their job is to make them pass in the beginning. Later require them to write tests themselves.

Don't teach the language, teach concepts. (No, if else and for loops aren't concepts you god-damn amateur! That's just syntax!)

When teaching object oriented programming, I'd smack you if you do inane examples with vehicles, cars, bikes and a Mercedes Benz. Or animal, cat and dog for that matter. (I came from a self-taught imperative background. Those examples obfuscate more than they help.) Also, inheritance is overrated in oop teachings.

Functional programming concepts should be taught earlier as its concepts of avoiding side effects and pure functions can benefit even oop code bases. (Also great way to introduce testing, as pure functions take certain inputs and produce one output.)
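To make the testing point concrete, here is a small sketch contrasting a function with a side effect against a pure one. The names `running_total`, `add_to_total` and `sum` are hypothetical, chosen only for this illustration.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Hidden state that the impure function depends on (hypothetical).
static int running_total = 0;

// Impure: the result depends on every earlier call, so a test has to
// know and reset the hidden state before it can assert anything.
int add_to_total(int x) {
    running_total += x;
    return running_total;
}

// Pure: the result depends only on the argument, so a test is just
// "given this input, expect that output".
int sum(const std::vector<int>& xs) {
    return std::accumulate(xs.begin(), xs.end(), 0);
}
```

Calling `sum({1, 2, 3})` gives the same answer no matter what ran before it, whereas two identical calls to `add_to_total(5)` return different values.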

Focus on one language in the beginning, it need not be Java, but don't confuse students with Java, Python and Ruby in their first year. (Bonus point if the language supports both oop and functional programming.)

And for the love of gawd: let them have a strictly typed language. Why would you teach with JavaScript!?

Use industry standards. Notepad, atom and eclipse might be open source and free; yet JetBrains community editions still best them.

For grades, don't you dare demand that they write code on paper. (Pseudocode is fine.)

Don't let your students play compiler in their heads. It's not their job to know exactly what exception will be thrown by your contrived example. That's the compiler's job to complain about. Rather teach them how to find solutions to these errors.

Teach them advanced google searches.

Teach them how to write an issue for a library on GitHub and similar sites.

Teach them how to ask a good stackoverflow question :>

-

Sometimes I feel like a freak. So many rants are about things that just never seem to happen to me.

I've been using Windows 10 since release, never had an update while I was working.

I've never gotten a virus from an ad, and I don't use AdBlock.

I've never had a crash that lost my work, I save neurotically or the program automatically saved.

I've never had trouble with typing in any language, static or dynamic.

I've never had issues with semicolons, IDEs or compilers tell me the issue explicitly.

I guess I'm just weird or something :/

-

*Starts compile*

...

...

can't find function foo

ld exited with status code 1

*confused*

*Reruns qmake*

*Compiles again*

...

...

can't find function foo

ld exited with status code 1

*very confused*

*switches compilers*

*compiles*

...

...

Worked!

*dafuq*

*switches back to the first compiler*

*compiles*

...

...

Worked!

*tries not to cry*

-

Dynamically typed languages are barbaric to me.

It's pretty much universally understood that programmers program with types in mind (if you have a method that takes a name, it's a string. You don't want a name that's an integer).

Even if you don't like the verbosity of type annotations, that's fine. It adds maybe seconds of time to type, which is negligible in my opinion, but it's a discussion to be had.

If that's the case, use Crystal. It's statically typed, and no type annotations are required (it looks nearly identical to Ruby).

So many errors are fixed by static typing and compilers. I know a person who migrated most of the Python std library to Haskell and found typing errors in it. *In their standard library*. If the developers of Python can't be trusted to avoid simple typing errors with all their unit tests, how can anyone?

Plus, even if unit testing universally guarded against typing errors, why would you prefer that? It takes far less time to add a type annotation (and even less time to write nothing in Crystal), and you get the benefit of knowing types at compile time.
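As a rough illustration of "knowing types at compile time", here is a sketch in C++ (not Crystal or Ruby) of the name example from above. `greet` is a hypothetical function; the two `static_assert` lines just spell out what the compiler would accept or reject as an argument.

```cpp
#include <cassert>
#include <string>
#include <type_traits>

// Hypothetical function: a name is declared to be a string.
std::string greet(const std::string& name) {
    return "Hello, " + name;
}

// A string literal converts to std::string, so greet("Ada") compiles.
static_assert(std::is_convertible<const char*, std::string>::value,
              "string literals are accepted as names");

// An int does not convert to std::string, so greet(42) is rejected
// by the compiler before any unit test ever runs.
static_assert(!std::is_convertible<int, std::string>::value,
              "integers are rejected as names at compile time");
```

The mistake of passing an integer as a name never reaches a test suite here; it fails the build instead.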

I've had some super weird type experiences in Ruby. You can mock out the return of the type check to be what you want. I've been unit testing in Ruby before, tried mocking a method on a type, didn't work as I expected. Checked the type, it lines up.

Turns out, nested away in some obscure place was a factory that was generating types and masking them as different types because we figured "since it responds to all the same methods, it's practically the same type right?", but not in the unit test. Took 45 minutes of my time when it could've taken ~0 seconds in a statically typed language.

-

Code review time:

Hey Rudy, can you approve my PR? ??? Shouldn't it be can you review my PR?(thought to myself)

Anyway, as a new practice, we(royal we) do not approve PRs with js files. If we touch one, we convert it to typescript as part of a ramp up to a migration that never seems to get here. But I digress.

I look at the laziest conversion in history.

Looked like

Import 'something';

Import 'somethingElse';

Import 'anotherSomething';

export class SomeClass {

public prop1: any;

public prop2: any;

public prop3: any;

public doWork(param: any){

let someValue = param;

// you get the idea

return someValue;

}

}

Anyway, I question if all the properties need to be visible outside of the class since everything was public.

Then if the dev could go and use type safety.

Then asked why not define the return type for the method since it would make it easier for others to consume.

Since parts of the app are still in js, I asked that they check that the value passed in was valid (no compilation error, obviously).

Also to use = () => {} to make sure "this" is really this.

I also pointed out the import problem, but anyway.

I then see his team leader approve the PR and then tell me that I'm being too hard on his devs. ????

Do we need to finish every PR comment with "pretty please" now?

These are grown men and women, and yet, it feels oddly like kindergarten.

I've written code in the past that wasn't pretty and I received "WTF?" as a PR comment. I then realized I ate sh*t on that line of code, corrected it and pushed the code. Then we went to Starbucks.

I'm not that old(35), but these young devs need to learn that COMPILERS DO NOT CARE ABOUT YOUR FEELINGS!!!!!

Ahhhhhh

Much better.

Thanks for the platform.

-

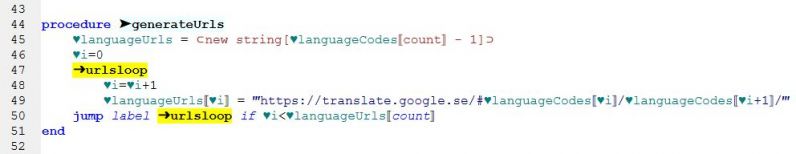

EEEEEEEEEEEE Some fAcking languages!! Actually barfs while using this trashdump!

The gist: new job, position required adv C# knowledge (like f yea, one of my fav languages), we are working with RPA (using software robots to automate stuff), and we are using some new robot still in beta phase, but robot has its own prog lang.

The problem:

- this language is kind of like ASM (i think so, I'm venting here, it's ASM OK), with syntax that burns your eyes

- no function return values, but I can live with that, at least they have some sort of functions

- emojis for identifiers (like php's $var, but they only aim for shitty features so you use a heart.. ♥var)

- only jump and jumpif for control flow

- no foopin variable scopes at all (if you run multiple scripts at the same time they even share variables *pukes*)

- weird alt characters everywhere. define strings with regular quotes? nah let's be [some mental illness] and use prime quotes (‴ U+2034), and like ⟦ ⟧ for array indexing, but only sometimes!

- super slow interpreter, ex a regular loop to count to 10 (using jumps because yea no actual loops) takes more than 20 seconds to execute, approx 700ms to run 1 code row.

- it supports c# snippets (defined with these stupid characters: ⊂ ⊃) and I guess that's the only c# I get to write with this job :^}

- on top of that, outdated documentation, because yea it's beta, but so crappin tedious with this trial n error to check how every feature works

The question: why in the living fartfaces yolk would you even make a new language when it's so easy nowadays to embed compilers!?! the robot is apparently made in c#, so it should be no funcking problem at all to add a damn lua compiler or something. having a tcp api would even be easier got dammit!!! And what in the world made our company think this robot was a plausible choice?! Did they do a full fubbing analysis of the different software robots out there and accidentally sorted by ease of use in reverse order?? 'cause that's the only explanation i can imagine

Frillin stupid shitpile of a language!!! AAAAAHHH

see the attached screenshot of production code we've developed at the company for reference.

Disclaimer: I do not stand responsible for any eventual headaches or gouged eyes caused by the named image.

(for those interested, the robot is G1ANT.Robot, https://beta.g1ant.com/)

-

To the people that create compilers.

Is it so goddam hard to tell me which fucking object is Null?

-

If the first compiler was compiled by humans and compilers are now compiled using compilers, then the first chicken didn't come from an egg, but from something superior. Nowadays we just see eggs turning into chickens, but the origin of chickens isn't related to eggs at all.

#Computer engineering

-

Most kids just want to code. So they see "Computer Science" and think "How to be a hacker in 6 weeks". Then they face some super simple algebra and freak out, eventually flunking out with the excuse that "uni only presents overtly theoretical shit nobody ever uses in real life".

They could hardly be more wrong, of course. Ignore calculus and complexity theory and you will max out on efficiency soon enough. Skip operating systems, compilers and language theory and you can only ever aspire to be a script kiddie.

You can't become a "data scientist" without statistics. And you can never grow to be even a mediocre one without solid basic research and physics training.

Heck, I've optimized literal millions of dollars out of cloud expenses by choosing the best processors for my stack, and weeks later got myself schooled (on devRant, of all places!) over my ignorance of their inner workings. And I have a MSc degree. Learning never stops.

So, to improve the CS experience in uni? Tear down students' expectations, and boil out the "I just wanna code!" kiddies to boot camps. Some of them will be back to learn the science. The rest will peak at age 33.

-

Why is starting a C++ project so overly complicated and annoying?!

So many different compilers. So many ways to organize the files. So many inconsistencies between Linux and Windows. So many outdated/lacking tutorials. So many small problems.

Why are there almost no good C++ IDEs? Why is Visual Studio so bizarre? Why are the CMake official tutorials literally wrong? Why can't we have a standard way to share binaries? Why can't we have a standard way to structure project folders? Why is the linker so annoying to use?

Don't get me wrong, I quite like the language and I love how fast it is (one of the main reasons I decided to use it for my project, which is a game almost comparable to Factorio)... But why is simply starting to write code such a hassle?

I've been programming in Java for years and oh god I miss it so much. JARs are amazing. Packages are amazing. The JDK is amazing. Everything is standardized, even variable names.

I'm so tempted to make this game in Java...

But I can't. I would have a garbage collector in the way of its performance...

-

Worst things about being a dev? Boy, this will be a long one!

- Whatever I do, be it hard work or smart work, I feel I am always underpaid.

- Most people who don't know tech feel my job shouldn't take that long. "Oh, a website that should be easy." "Oh, REST services, that's cute!"

- Most people who know a little tech will be like, "Here is the code for this on Google, then why are you charging this much"

- Companies like Microsoft and Apple who are too cool to follow standards.

- Always underpaid!

- The friggin compilers and random environment vars. Sometimes you make no change and the code works on a restart. I mean wtf!

- Having to give/meet deadlines, when we know most of the times things get out of control.

- Having to work for jerks mostly who don't know squat, and can't tell the difference between a CPU and a Wooden box.

- Sometimes I wanna take a break from my laptop(traveling and stuff) , those are the times I get the maximum work load!

- Did I mention we are always underpaid?

- Because of the kind of work I do, finding a girl has been challenging. Where the heck are they!

- We have to stay always updated. Often we deploy something using a framework and the next day we see an update.

- Speaking of updates, I hate having to support for OSes like Microsoft.

- Speaking of OSes, I hate Apple!

- Speaking of Apple, I feel we are underpaid, déjà vu?

...

How much would you hate me if I wrote "just kidding" ?

-

A couple days ago, I went through the most embarrassing interview ever. It was a startup into both hardware and software merged over image processing. I really wanted it. Really really did. It was telephonic, and involved a little bit of coding over docs. In the one hour we talked over the phone, he asked me about 30 questions. I hadn't even heard of the words he said! I've never delved into compilers, lower level things, and memory management. I could answer about 5 questions, including the "tell me about yourself" question.

So that's about 25 ways I came up with of saying "I don't know" in a span of 60 minutes.

-

Currently I'm working on 3D game engine and making a 3D minesweeper game with it.

I started creating a compiler not long ago using my own implementation (no Lex, no tools, nothing, just raw application of algorithms), so that hopefully some day I will be able to make a language that works on top of GLSL inside my game engine. I have a compiler design class this semester which hasn't even started yet, and I've already made a lexical analyser generator. I also have another class about geographical information systems, for which I will be using my engine to create some demos of 3D rendering techniques like level of detail, or maybe create something similar to ArcGIS, which we will be using.

Oh man I have many stuff I want to do.

Here is a gif showing the state of my minesweeper game. I clearly lack artistic skills lol. One thing I will be making is to model the sphere as squares not triangles.

Finally I want to mention that months ago I saw someone here at devRant making a Voronoi diagram variant of this, which inspired me to make this.

I made a long post, so

TLDR : having fun reinventing the wheel and learning 😀

-

Ok, so teacher (which should be something like a professional dev or whatever) assigned us homework for Christmas (I don't care, I can complete his assignments in like 10 minutes max). We have to do some simple shit in C++, just some loops and input + output. Nothing hard. He challenged me to write it as short as possible, so I did. My classmates have codes around 60 to 70 lines long (after proper formatting). I made it 20 lines long using some pointer magic and stuff like that. I tried my code, it ran fucking perfectly, so I sent that to him. He replied that the code does not work. I tried to recompile it and it ran perfectly. Again, it does not work. After 13 fucking emails he fucking finally sent me the error message. Some function was not found (missing some library but literally everywhere else it works without it...). That's strange, because it runs perfectly on my Fedora with CLion, so I switch to Windows and try to run the same code in Visual Studio (which we are using in school btw). Works perfectly. So I start arguing with the teacher more and more. I tried around 10 online compilers. Works fucking everywhere. Teacher is pissed, me too. So I rewrote my whole code, added comments and shit, reinvented the wheel literally everywhere. Now I have C99 standardised code over 370 lines long that runs even on a fucking Arduino after changing input/output methods so it can work with it. It (surprisingly) runs on his PC too.

After a bit more arguing, he said that he is using CodeBlocks from fucking 2015. Wow. Just fucking wow. Even our school has some old Visual Studio (2007 I guess) and it worked there.

-

So recently I did a lot of research into the internals of Computers and CPUs.

And I'd like to share a result of mine.

First of all, take some time to look at the code down below. You see two assembler codes and two command lines.

The Assembler code is designed to test how the instructions "enter" and "leave" compare to manually doing what they are shortened to.

Enter and leave create a new Stackframe: this means, that they create a new temporary stack. The stack is where local variables are put to by the compiler. On the right side, you can see how I create my own stack by using

push rbp

mov rbp, rsp

sub rsp, 0

(I won't get into details behind why that works).

Okay. Why is this even relevant?

Well: there is the assumption that enter and leave are very slow. This is due to raw numbers:

In some paper I saw (I couldn't find the link, I'm sorry), enter was said to use up 12 CPU cycles, while the manual stacking would require 3 (push + mov + sub => 1 + 1 + 1).

When I compile an empty function, I get pretty much what you'd expect just from the raw numbers of CPU cycles.

HOWEVER, then I add the dummy code in the middle:

mov eax, 123

add eax, 123543

mov ebx, 234

div ebx

and magically - both sides have the same result.

Why????

For one thing, there is CPU prefetching. This is the CPU loading in RAM before it's done executing the current instruction (this is how anti-debugger code works, btw. Might make another rant on that). Then there is the fact that the CPU usually starts work on the next instruction while the current instruction is processing IFF the register currently involved isn't involved in the next instruction (that would cause a lot of synchronisation problems). Now notice that the CPU can't do any of that when manually entering and leaving. It can only start doing the mov eax, 123 while performing the sub rsp, 0.

----------------

NOW: notice that the code on the right didn't take any precautions like making sure that the stack is big enough. If you sub too much stack at once, the stack will be exhausted; that's what we call a stack overflow. enter implements checks for that, and emits an interrupt if there is a SO (take this with a grain of salt, I couldn't find a resource backing this up). There is another type of check I don't fully get (stack level checks), so I'd rather not make a fool of myself by writing about it.

Because of all those reasons I think that compilers should start using enter and leave again.

========

This post showed very well that bare numbers can often mislead.

-

From NAND to Tetris..

This book is IMO the best book for those who want to venture into lower-level programming.

This book retrains your thinking and teaches you from the bottom up! Not the typical top-down approach.

You begin with the idea of Boolean algebra. And then move on to logic gates.. from there you build in VHDL everything you will use later.

Essentially building your own “virtual machine”.. you design the instruction set. You will then write assembly using that instruction set to control the gates you built in VHDL.

THEN you will continue up the abstraction layers and will learn how a compiler works, and then begin writing C code that is then compiled down to assembly for your instruction set, to be linked and run on the virtual machine you built.

All the compiler and other tools are available on the book's website. The book is not a book where you copy and paste, run and done.... you kinda have to take the concepts and apply them with this book.

Then once you master this book, take it the extra step and learn more about compilers and write your own compiler with the dragon book or something.

Fantastic book, great philosophy on teaching software.. ground up rather than top down. Love it! It’s Unique book. 21

21 -

First year: intro to programming, basic data structures and algos, parallel programming, databases and a project to finish it. Homework should be kept track of via some version control. Should also be some calculus and linear algebra.

Second year:

Introduce more complex subjects such as programming paradigms, compilers and language theory, low level programming + logic design + basic processor design, logic for system verification, statistics and graph theory. Should also be a project with a company.

Year three:

Advanced algos, data structures and algorithm analysis. Intro to computer and data security. Optional courses in graphics programming, machine learning, compilers and automata, embedded systems etc. Ends with a big project that goes in depth into a CS subject, not a regular software project in Java basically.

-

HELL WEEK is coming!! they are going to make us code ON PAPER again.... no compilers, no way to check for errors, time to die again

-

So, for my C class, the computers in the lab are using VS 2015. To be able to compile C we have to change some settings to allow the program to compile.

I like to use my computer (with Arch Linux) and use my tools (Vim and GCC).

The guy next to me was trying to do the homework, but he was struggling. I decided to give it a shot and I was able to do it, so I showed him my code and he tried it on the lab computer.

The program crashes every time no matter what. We asked the professor. I showed him my code and how it's working. Apparently he was confused because I was using the terminal and not VS. So he proceeded to say that it's because I'm not using VS2015 and GCC is doing the whole work for me.

I'm like ಠ_ಠ and then he keeps saying that he doesn't know what GCC is or how it works (for real? Someone that teaches C and has a Ph.D. in CS doesn't know what GCC is?) but that it is apparently doing everything for me. So my code should be wrong if it crashes on VS2015.... ಠ_ಠ

What do you think? I'm thinking about talking with the head of the CS department (I know that he is a Linux guy) and seeing what happens. Should I do it? Or should I just use VS2015 as the "professor" is asking?

I even tried online compilers to see if it was just working on my computer, but even they use GCC to compile.

-

It’s throw back Thursday!

Back to 1979... before the time of the red dragon compiler book (forgetting about the green dragon book)... there was a time when only a few well-written compiler and assembler “theory” books existed.

What’s special about this one? Well, Calingaert was the co-patent holder of the OS/360... “okay, so?” ... well, remember Fred Brooks’s Mythical Man-Month book I posted the other day? Calingaert is basically the counterpart of Brooks on the OS/360.

Anyway, the code is in assembly (obviously) and the compiler code is basic.

Other than this book and, from my understanding, 2-3 other books, that’s all that was available on compilers and assemblers as far as books go at the time.

ALL the rest of the knowledge on compilers existed in the ACM and other computing journals of the time.

Is this book relevant today? Eh, not really, other than giving perspective. It’s short in comparison to the red dragon books.

If you did read it, it’s more of a book that gives you lecture, background and concepts.. rather than here’s a swath of code.. copy it and run.. done.. nope, didn’t happen in this book.. apply what you learn here

-

If you're fascinated by compilers and want an easy read, take a look at http://homepage.divms.uiowa.edu/~sl...

It's math light and goes into theory in a casual manner. Was fun reading. Take a look.

-

Coding is like cooking.

But only if

the heat source is lava. //Language

And the pot is lava. //IDE

And the food is lava. //Program

And the dishes are lava. //Classes

And the floor is lava. //APIs

And the tools are lava. //Compilers

-

Bit the bullet and installed VS and relevant compilers for C++ and started fucking around with sdl.

Not as terrible as I thought it was going to be.

Pointers seem pretty intuitive. Apparently my time with Python has not in fact mentally mutilated me.

-

Annoyance in C: using the same keyword for two unrelated things, process-long memory allocation and internal linkage. Looking at you, static.

The latter should really have been called "intern", just like there is "extern". Far more people would use it if it was named correctly.

History says "static" was chosen for compatibility, allowing older compilers to take new source files.

-

Jeff Dean Facts (Source: God)

Jeff Dean once failed a Turing test when he correctly identified the 203rd Fibonacci number in less than a second

Jeff Dean compiles and runs his code before submitting, but only to check for compiler and CPU bugs

Unsatisfied with constant time, Jeff Dean created the world's first O(1/n) algorithm

When Jeff Dean designs software, he first codes the binary and then writes the source as documentation

Compilers don't warn Jeff Dean. Jeff Dean warns compilers

Jeff Dean wrote an O(n^2) algorithm once. It was for the Traveling Salesman Problem

Jeff Dean's watch displays seconds since January 1st, 1970.

gcc -O4 sends your code to Jeff Dean for a complete rewrite

-

well, for now, my biggest dev ambition is to become a compiler designer/programmer... or OS designer/programmer.

In short, systems programmer (compilers or OS).

😅😅😁😁

-

Parts of the code I am working on date back to the early nineties, written in ancient C++ with lots of special cases for ancient compilers by people with 0-2 years of coding experience.

My favourite coding moment is every time when, after refactoring a part of the code, it has about 1000 lines fewer (no exaggeration), is more reliable, AND can do a lot more than before.

-

Normal Compilers : i guess you missed a semicolon in line 63

Special Compilers : You wrote wrong code, useless fella, I'm not your servant to tell you all the errors. Your mere existence is unnecessary and useless

-

In school we got asked about our future jobs. I said: I'm gonna become an informatician, because I'm already really good at it... Look, I can even build compilers... In the break, my friend: could u program a game, dude? Me: no, I'm not using a graphical environment. If I wanted to, I would have to learn Unity or Flash or some sort of game engine. He: then ur not an informatician, and u shouldn't become one either...

(he's a Windows user)

-

Person:"you're a dev, you must reeeaaaly get frustrated with semi colons 😏"

Me: "at times but it's not such a big problem with the compilers being better now.😊"

Person: "so innovative!😁"

Me: "nothing is innovative!! All new app ideas suck and there are not too many clicks!!! The icons are perfect!! Purple and orange buttons are not a good idea!!! What do you mean you want 3 buttons on the one screen that do the same damn thing!! Do you even think!! Oh of course the users are stupid, takes one to know one!!! Doesn't look like much?!!! Sure the backend is a mother fucking kraken the size of Michigan that runs smoother than a baby's bottom but hey, let's bitch cause it's too plain on the eye!!! EVERYTHING IS A LIE LIKE THE EXISTENCE OF YOUR BRAAAAIIIN!!" - pants neurotically - 😳

Person: "new client? Or friend with an idea? 😒"

-

Let's try this.

In the project I'm working on there is a strict rule: NO COMMENTS!!!

I mean wth, the times I've spent hours trying to understand the crappy legacy code in VB.Net that has been there almost decades, that wouldn't happen with comments. I know, I know, there are some supernatural developers that think in binary and their eyes work as compilers, but I'm not like that, so seriously go to hell.

P.S. Of course I follow that rule, after all, my code is so damn perfect that even a baby can understand it.

jk

-

Java teacher writes code on blackboard in comp lab

He tells us to try it out at our workstations.

We do. The code does work. We tell him.

He says: "There is something wrong with your compiler..."

Question is... we were around 30 students. Can all our compilers not work if we had used the lab before and the code we ran worked clean??!?!?!?

We were flabbergasted

-

Cross platform mobile with Xamarin. An internship asked me to learn Xamarin for them, and I found that the docs Xamarin had were surprisingly helpful compared to other places

After continuing to pursue mobile with Xamarin I now feel I know multiple native APIs very well (iOS and Android) and have found my favorite language (C#), and I've also learned a ton about how code and compilers work and all sorts of other things

Xamarin has been an incredible learning tool

-

A few years ago I would whine, complain and rant about shitty software, which I knew could be so much better than it was. But I didn't yet write software of my own.

Now I complain about shitty libraries, APIs and users. Not much has changed really. And every time I write code, I curse myself, and whoever made this trashpile I have to work with. I curse the user to the moon and beyond for using the program wrong. Funny thing is, exactly the thing I was complaining about (input validation, see earlier rant) is also exactly what a user fucked up with, no more than 5 minutes after release. The bot just does not respond at this point. But fuck these braindead retards for users.

In a few years I expect myself to be complaining about shitty compilers and buffer overflows, segmentation violations, bad coding style (don't make your program a fucking colander kthx), and so on.

Next decade I expect myself to be complaining about physics itself, and why the universe is governed by the laws it's governed by. Whoever this God is, he's a fucking retard. Funny thing is, the signs for it are already there. Electron theory! If only those electrons were positrons, then the math would check out properly. Instead of negative electrons traveling from negative to positive, we'd have positive positrons traveling from positive to negative. At least from what I understand so far, this is still a decade away after all.

The point I'm trying to make is that nothing changes, only my understanding of the world around me does, as I tumble further and further down the rabbit hole. Sometimes I wish I had taken the blue pill... Either complain about others' software or perhaps not give a shit at all. Become one of those filthy users I now despise.1 -

Fuck this shitty C ecosystem! Multiple compilers, one standard-complying, one hacked together? Only one GPL-poisoned standard library, with no real chance of switching it, which prevents me from making statically linked programs? And then there is Microsoft's compiler, with fucking ANSI support. Thanks. No dependency handling. Concurrency? pthreads. Are you fucking kidding. JSON? Have fun finding something static. Compile times where you can read entire books. Segfaults without one helpful info, so you have to debug with prints. And every library, every tool, installer, compiler, stdlib, anything is poisoned by GPL. But hey, it's fast. And efficient. After you spend many slow and inefficient months developing something. I am so done with this shit.

Well.

Tomorrow I will continue working with C on my backup project.

Did I mention that the stdlib has no features? Not even threading? Which is IN THE STANDARD?

-

I've been laughed at a lot for thinking this way, but I'm honestly frustrated by how little information exists on the web for people who want to take Operating System development a step further. I mean, the OSDev Community is amazing and offers pretty much everything one needs to know at the system level. But my issue is: What if someone didn't want to use existing compilers and assemblers like GCC and NASM, and do everything from total scratch? I mean, the original Unix came from somewhere, right? I know you're going to think "Why not? It works.". Well, I just think it's crazy how few people (such as Linus and the GNU foundation) are out there that have the ability to create such things without help from existing software tools. Sure, it could take me decades of careful practice and experience, but my passion for creating software at this level and becoming one of those people is very strong. I just wish I knew where to begin and who to learn from.

-

I fucking hate the process of setting up IDEs and compilers! All the build files, cmake files, tasks, all that shit.

I understand it's an integral part of coding, but fuck, why does it always take so long to set up a stupid project. Just let me start coding ffs.

Sometimes I get so frustrated that I'd rather write a bash script or run the compiler commands in the shell instead of going through the hassle of setting all this up.

-

Most of you probably know this one but still, it's my favorite and I couldn't resist:

http://stackoverflow.com/questions/...

-

There are two kinds of programmers — those who have written compilers and those who haven't.

— Terry A. Davis9 -

Unofficial slogans for programming languages:

Javascript - JustShitting out frameworks every week.

Python - Shit programmers become slightly less shit and call themselves "data scientists" here.

C# - We know we are better than you, and even though we don't need to say it, we will say it anyway.

Pascal - The only recognized version of Pascal is from one single vendor.

Haskell - Stay in school if you want to use this professionally.

Swift - (honestly don't know what to say here, Lensflare can fill in on this one.) Maybe this: The first rule of Swift club is we don't talk about Apple club.

Java/Kotlin - We are in everything, including your mom's vibrator.

C - The rest of the programming world doesn't exist. Especially in embedded. Happily using K & R compilers for 3 decades.

C++ - We will pretend to care about the rest of the programming world, but like C, we will do whatever the fuck we want. or, Being held back by the ABI for at least a decade.

Rust - I feel bad for you for using other programming languages.

These are probably highly inaccurate, mostly just wanted to talk about Java being in your mom's sex toy.

-

I would consider myself in the upper half of C++. I build compilers, I contributed 1000 lines to the Linux kernel... But still: I am (only) 14 and lack experience... Could you please share some tips for a young programmer... Not only for jobs, but also to improve more...

-

Fellow Deviants, I need your help in understanding the importance of C++

Okay, I need to clarify a few things:

I am not a beginner or a newbie who has just entered this community...

I have been using C++ for some time and in fact, it was the language which introduced me to the world of programming... Before, I switched to Java, since I found it much better for application development...

I already know about the obvious arguments given in favour of C/C++ like how it is much faster and more memory efficient than other languages...

But, at the same time, C/C++ exposes us and doesn't protect us from ourselves.. I hope that you understand what I mean to say..

And, I guess that it is a fair tradeoff for the kind of power and control that these languages (C/C++) provide us..

And, I also agree with the fact that it is a language that ideally suits our needs, if we wish to deal with compilers, graphics, OS, etc, in the future...

But, what I really want to ask here is:

In this day and age, hardware has advanced so much that, technically, memory efficiency or execution speed is no longer the topmost priority... These were the reasons for which C/C++ was initially created...

In today's time, human time matters more and hence, syntactically less complicated languages like Java or Python are much preferred, especially for domains like application development or data science...

So, is continuing with C++ an endeavour worth sticking with in the future, or is it not required...

I am talking about this issue since I am in a dilemma about the use of C++ in the future...

I would be grateful if we could talk about this keeping AI, Machine Learning or Algorithm Optimisation in mind... Since these are the fields in which I am interested...

I know that my question could have been posted in a better way.. But the chaos that is present in my mind regarding this question doesn't allow me to do so...

Any kind of suggestion or thoughts would be welcome and much appreciated...

P.S: I currently use C++ only for competitive programming or challenges...

-

compile with gcc, ./a.out: "Segmentation fault (core dumped)"

compile with clang, ./a.out: runs and fails.

compile with cc, ./a.out: Alternated between "Error: Too many arguments" and "Segmentation fault"...

ffs I'm done for the week I guess.

The problem is not that it fails, the problem is that it alternates because of time of compilation, power consumption, random bloody oracles or the phase of the moon in a leap year on a Friday the 13th. God.Please.Send.~Nudes~. Help.

-

At what point can I claim to not be a script kiddie anymore?

Like, I've built compilers, and interpreters for an excel-like syntax, I refactored a pdf-parsing library from the ground up. I managed databases and wrote protocols for communicating with hardware.

But most of my experience is with Python / Node.js / Go. It is only recently that I started playing with C and Rust for actual efficient system code.

-

!rant

All computers are great and not all people are compilers

please use a Semicolon for God's sake

Though coding is competence

I believe in readable code

-

Build apps for UWP, it will be fun they said. Yeah, created my first hello world app, which works btw. Added one f***ing button and the compiler throws thousands of errors. Dude, I really enjoy it! :)

-

Q: "Babe, what's wrong?"

Women: "Nothing, it's just fine"

Compilers: "Object reference not set to an instance of an object"

-

I found a vulnerability in an online compiler.

So, I heard that people have been exploiting online compilers, and decided to try and do it (but for white-hat reasons), so I used the system() function, which made it a lot harder, so I decided to execute bash with execl(). I tried doing that but I kept getting denied. That is until I realized that I could try using malloc(256) and fork() in an infinite loop while running multiple tabs of it. It worked. The compiler kept on crashing. After a while I decided that I should probably report the vulnerabilities.

There was no one to report them to. I looked through the whole website but couldn't find any info about the people who made it. I searched on GitHub. No results. Well fuck.

-

(a question)

so in my city there's an opportunity for some tech university students to attend extra programming classes for a year (12 hours/week). they'll teach many things: (advanced) data structures, databases, OSes, computer networks, compilers, making games, data analytics etc. is it worth attending or should i just write down those topics in a to-do list and learn them by myself? i've never attended such courses so i don't know if they are useful or not. thanks for taking the time to read this :)

-

If you asked me two months ago I'd have said building and using a Barnes Hut tree with CUDA.

Today my answer is working on a fuzzer with LLVM without knowing shit about either C++ or compilers.

-

Survey Question.

How many of you programmers have a working knowledge of how compilers work? The philosophy and mathematics behind them. Different stages. The choices one might have to make at different stages. Reasoning about the said choices. Difference between different paradigms -- philosophically and implementation wise. The tools one might use.

The reason I'm asking this is that I got into a debate with a friend where he said 9/10 of people whom we call "developers" have little to no idea how compilers work.

-

Didn't think I had material for a rant but... Oh boy (at least at the level I'm at, I'm sure worse is to come)

I'm a Java programmer, lets get that out of the way. I like Java, it feels warm and fuzzy, and I'm still a n00b so I'm allowed to not code everything in assembly or whatever.

So I saw this video about compilers and how they optimize and move and do stuff with the machine code while generating the executable files. And the guy was using this cool terminal that had color, autocomplete past commands and just looked cool. So I was like "I'll make that for my next project!"

In Java.

So I Google around and find a code snippet that gives me "raw" input (vs "cooked" input) and returns codes and I'm like 😎. Pressing "a" returns 97 (I think that's the ASCII value) and I think this is all golden now.

No point in ranting if everything goes as planned so here is the *but*

Tabs, backspaces and other codes like that returned appropriate ASCII codes in Unix. But in Windows, no such thing. And since I thought I'd go multiplatform (WORA amirite) now I had to do extra work so that it worked cross platform.

Then I saw arrow keys have no ASCII codes... So I pressed an arrow key and THREE SEPARATE VALUES WERE REGISTERED. Let me reiterate. Unix was pretending I had pressed three keys instead of one, for arrow keys. So on Unix, I had to work some magic to get accurate readings on what the user was actually doing (not too bad but still...). Windows actually behaved better, just spit out some high values and all was good. So two more systems I had to set up for dealing with arrow keys.
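Those three values are an escape sequence: on most Unix terminals an arrow key arrives as ESC (27), `[` (91) and a letter `A` to `D`. A minimal sketch of the "magic" in Python rather than Java, assuming the input has already been read as raw bytes; `decode_keys` and `ARROWS` are hypothetical names, not part of any library:

```python
# Hypothetical decoder for raw terminal input; all names are illustrative.
ESC = 0x1B

# Final byte of the "ESC [ X" sequences most Unix terminals send for arrows.
ARROWS = {ord("A"): "UP", ord("B"): "DOWN", ord("C"): "RIGHT", ord("D"): "LEFT"}


def decode_keys(raw: bytes) -> list[str]:
    """Turn a raw byte stream into a list of key names."""
    keys = []
    i = 0
    while i < len(raw):
        # An arrow key arrives as three bytes: 27, 91 ('['), then a letter.
        if (
            raw[i] == ESC
            and i + 2 < len(raw)
            and raw[i + 1] == ord("[")
            and raw[i + 2] in ARROWS
        ):
            keys.append(ARROWS[raw[i + 2]])
            i += 3
        elif raw[i] == ESC:
            # A lone ESC byte is reported as the Escape key itself.
            keys.append("ESC")
            i += 1
        else:
            keys.append(chr(raw[i]))
            i += 1
    return keys


print(decode_keys(b"a\x1b[Ab"))  # ['a', 'UP', 'b']
```

This only covers the plain arrow sequences; other keys and terminal modes send different bytes, so a real reader would need a larger table.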

Now I got to ANSI codes (to display color, move around the terminal window and do other stuff). Unix supports them and Windows did but doesn't but does with some Win 10 patch...? But when tested it doesn't (at least from what I've seen). So now, all that work I put into making one Unix key and arrow key reader, and same for Windows, flies out the window. Windows needs a UI (I will force Win users, screw compatibility).

So after all the fiddling and messing, trying to make the bloody thing work on all systems, I now have to toss half the input system and rework it to support UI. And make a UI, which I absolutely despise (why I want to do back end work and thought this would be good, since terminal is not too front end).

-

Ignore every single comment that had been passionately written into the code.

Probably thought "if compilers can, why can't I?".

-

Well the company I work for was too cheap to buy a new Graphics Card so we got an old graphics card (nvidia) and had to install it with working drivers

We checked and the drivers were End Of Life since I think 2015 or 2011, so we downgraded the hell out of the Ubuntu 20.04 kernel and compilers, and the driver installation still failed. We had to get gcc 7.2 but there weren't any PPAs available with it anymore, and the installation still failed without giving a proper error message. 11 hours later we decided to go home

The next day we got an email saying we wasted the company's time and money

but we were asked to do this.... (two working students getting minimum wage)

-

!rant :) FUUUUUUUUDGE YEAAAAH!

it's so satisfying when you've been working on a huge ass thing(when maybe you should have tested individual parts) and it just fucking works as intended amazing, I love it!

It's so beautiful to see your own compiler(jk just scanner+parser atm) compile code successfully

-

So I just read up on what the language D has to offer. It seems quite good!

- Active community

- Multiple compilers

- Modern (no header files, garbage collector, etc.)

- No VM or framework needed to run it (like C# and Java)

Looking forward to trying it out!

Does anyone have any experience with it? What are your thoughts?

-

Best thing: I started to understand how compilers work under the hood (sort of), was even able to implement a few scanners already

Worst thing: I have absolutely no clue how to continue ._.

-

If you're working on close to hardware things, make sure you run static analysis, and manually inspect the output of your compiler if you feel something's off - it may be doing something totally different from what you expect, because of optimization and what not. Also, optimizations don't always trigger as expected. Also, sometimes abstractions can cost a fair amount too (C++ std::string c/dtor, for example, dtors in general), more than you'd expect, and in those cases you might want to re-examine your need for them.

Having said all that, also know how to get the compiler to work for you, hand-optimization at the assembly level isn't usually ideal. I've often been surprised by just how well compilers figure out ways to speed up / compactify code, especially when given hints, and it's way better than having a blob of assembly that's totally unmaintainable.

Learnt this from programming MCUs and stuff for hobby/college team/venture, and from messing around with the Haskell compiler and LLVM optimization passes.

-

god damn it C++, you and your ambiguous, contextual grammar!

currently working on a C++ and C parser, went from trying to use a parser generator to now writing a parser by hand.

-

Anyone able to link me to some good reading material on compilers, interpreters, emulation and CPU design?

Keen to actually get some of this knowledge under my belt, don't mind if it requires a little investment as long as it isn't written in (fucking) Python, preferably C if anyone knows of anything.

Thanks guys! :-D

-

"Reflective" programming...

In almost every other language:

1. obj.GetType().GetProperties()

or

for k, v in pairs(obj) do something end

or

fieldnames(typeof(obj))

or

Object.entries(obj)

2. Enjoy.
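Python belongs to the easy camp too. A minimal sketch, assuming a throwaway `Point` dataclass and a `describe` helper that are made up purely for illustration:

```python
from dataclasses import dataclass, fields


@dataclass
class Point:  # hypothetical example type
    x: int
    y: int


def describe(obj) -> dict:
    """Map each declared dataclass field name to its current value."""
    return {f.name: getattr(obj, f.name) for f in fields(obj)}


p = Point(1, 2)
print(describe(p))  # {'x': 1, 'y': 2}
print(vars(p))      # {'x': 1, 'y': 2}, the instance attributes as a dict
```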

In C++: 💀

1. Use the extern keyword to trick compilers into believing some fake objects of your chosen type actually exist.

2. Use the famous C++ type loophole or structured binding to extract fields from your fake objects.

3. Figure out a way to suppress those annoying compiler warnings that were generated because of how much of a bad practice your code is.

4. Extract type and field names from strings generated by compiler magic (__PRETTY_FUNCTION__, __FUNCSIG__) or from the extremely new feature std::source_location (people hate you because their Windows XP compilers can't handle your code)

5. Realize your code still does not work for classes that have private or protected fields.

6. Decide it's time to become a language lawyer and make OOPers angry by breaking encapsulation and stealing private fields from their classes using explicit template instantiation

7. Realize your code will never work outside of MSVC, GCC or Clang and will always be reliant on undefined behavior.

8. Live forever in doubt and fear that new changes to the compiler magic you abused will one day break your code.

9. SUFFER IN HELL as you start getting 5000 lines worth of template errors after switching to a new compiler.

-

Hey this is Linuxer4fun or BinaryByter, you might remember me as that smartass Teen who fanboyed over C++ and built kernels and compilers and all that shit. Well...

Ultimately I must admit that I have moved away from programming. I don't have any projects I could accomplish which would be worth my time, I can't come up with any, to say the least.

Additionally I'm demotivated as hell because I'm always tired due to my hour-long organ practice sessions and very long school times.

I think that I want to major in Music.

So in case you wondered, that's where I have gone to. I might still lurk here, and maybe someday I'll restart coding. I hope that I will, because coding was loads of fun!

-

Today was a rather funny day in school. School starts for me at 13:40 because our timetable planners are so qualified for this job.

First 2hrs: Physics, fine its good

Second 2hrs: Discrete Maths (however you want to call it)

Goal is to write a text (30 pages, 10, etc all those standard settings). Teacher prefers LaTeX over Word, but we can do it in Word if we want. We could choose a topic, I took primes because it looked the best. I decided to use LaTeX because I'm a fetishist and it simply looks better in the end. A classmate was arguing with our teacher about IDEs: texmaker vs kile. And I'm like "I use vim". So my teacher is like kk

Later that class, when we actually started doing stuff I started the ssh session to my server because I don't know any good C++ compilers for Windows and I'm too lazy to get a portable version of cygwin (or whatever it's called). So on my server I open vim and start coding my tool for Fermat Primes (Fermatsche Primzahlen, too lazy to actually translate). And this teacher seriously is the best teacher I ever met in my life. Usually teachers are like "dude r u hakin' the school server?" and I'm like bruh it's just vim and I'm doing it this way because I cannot code on your PC coz I can't install a compiler. And this teacher is like "oh hey you actually use vi, all cool kids used it in 2000. I first thought u were kidding and stuff..." And we continued talking about more stuff like that and I have to say that this is the first teacher that actually understands me. Phew

Now I'm going to continue writing my 30 pages piece of trash LaTeX doc and hope it'll end good

-

Compilers should just work for raw C with only static memory allocation. This isn't the bad old days where a couple of dudes wrote a short book explaining how C might probably should possibly work. I hear supposedly we have standards now.

Well, last week I lost 2 days to our compiler randomly forgetting that it wasn't okay to put a globally allocated uint32 at an address ending in 9. What? It had been handling this case without issue for more than a year, but now after changing completely unrelated code we have this problem.

I'm not sure how to even deal with this idiocy so no doubt I'll continue working on it this week, too.

Thanks a lot, GCC.

-

Oh god, structure alignment, why you do this... You might be interested if you do C/C++ but haven't tried passing structures as binary to other programs.

Just started working recently with a lib that's only a DLL and a header file that doesn't compile. So using python I was able to use the DLL and redefined all of the structures using ctypes, and the nice thing is: it works.

But I spent the whole afternoon debugging why the data in my structures was incoherent. After much cussing, I figured out that the DLL was compiled with 2 bytes packing...

Packing refers to how structures don't just have all the data placed next to each other in a buffer. Instead, the standard way a compiler will allocate memory for a structure is to ensure that for each field of the structure, the offset between the pointer to the structure and the one to the field in that structure is a multiple of either the size of the field, or the size of the processor's words. That means that typically, you'll find that in a structure containing a char and a long, allocated at pointer p, the long will be starting at p+4 instead of the p+1 you might assume.
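The padding described above can be checked directly with ctypes. A minimal sketch, assuming two made-up structures (`Natural` and `Packed2`) and using `c_uint32` as a stand-in for a 4-byte long so the numbers stay the same across platforms; the explicit `_layout_ = "ms"` is only there because recent Python versions ask for it when `_pack_` is set, and older versions simply ignore it:

```python
import ctypes


class Natural(ctypes.Structure):
    # Default alignment: the 4-byte field is padded out to offset 4.
    _fields_ = [("tag", ctypes.c_char), ("value", ctypes.c_uint32)]


class Packed2(ctypes.Structure):
    # 2-byte packing, comparable to "#pragma pack(2)" in the C header.
    _layout_ = "ms"  # assumption: MSVC-style layout, as for a Windows DLL
    _pack_ = 2
    _fields_ = [("tag", ctypes.c_char), ("value", ctypes.c_uint32)]


print(Natural.value.offset, ctypes.sizeof(Natural))  # 4 8
print(Packed2.value.offset, ctypes.sizeof(Packed2))  # 2 6
```

If the Python-side definition uses the default layout while the DLL was built with 2-byte packing, every field after the first lands at the wrong offset, which is the kind of incoherent data described here.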

With most compilers, on most architectures, you still have the option to force another alignment for your structures. Well, that was the case here, with a single pragma hidden in a sea of ifdefs... Man, that took some time to debug...

-

A bit confused

I have some code that gives different answers in different languages and compilers.

The code is:

Int a=4

Int b = ++a + a++ + --a

Please ignore the syntax errors.

But this logic gives different answers in different compilers and languages, for example:

C (Turbo C++ compiler): 12

C (gcc): 16

Java: 15

Python: 12

Can anybody explain the logic behind this?

-
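A hedged sketch of where two of those numbers can come from. In C, modifying `a` several times in one expression without a sequence point is undefined behavior, so a compiler may produce 12, 16 or anything else. Java defines strict left-to-right evaluation, which gives 15. Python has no `++` or `--` at all: `++a` is just unary plus applied twice, and `a++` does not even parse, so the second function below substitutes a plain `a` for it (that substitution is an assumption about what was actually run). The `Counter` helper is made up to imitate the increment operators:

```python
class Counter:
    """Imitates a variable that supports ++x, x++ and --x."""
    def __init__(self, value):
        self.value = value

    def pre_inc(self):          # ++a: increment, then yield the new value
        self.value += 1
        return self.value

    def post_inc(self):         # a++: yield the old value, then increment
        old = self.value
        self.value += 1
        return old

    def pre_dec(self):          # --a: decrement, then yield the new value
        self.value -= 1
        return self.value

def java_style(start):
    a = Counter(start)
    # Python evaluates the operands of + left to right, like Java does.
    return a.pre_inc() + a.post_inc() + a.pre_dec()

def python_literal(start):
    a = start
    # ++a is +(+a) and --a is -(-a); neither changes a.
    return ++a + a + --a

print(java_style(4))      # 5 + 5 + 5
print(python_literal(4))  # 4 + 4 + 4
```

The practical takeaway is to split such expressions into separate statements, so the result does not depend on the language or compiler.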

My Mentor during the 1st year of my College

He set high expectations and very frequently threw insults and shamed me as if I knew nothing. And he was not wrong. I sucked so bad. I did learn some basics, though, which promoted me one level up from the "total newbie" state.

But my best mentor would have to be my PCs, compilers and debuggers. Couldn't find a better one.

-

Having other people pitch you to hiring managers, raise the hype, and profess faith in your abilities is kind of nerve-wracking. But I found a tutorial to teach me how to write a compiler and it’s not in a language I know, so that’s fun and distracting. Been up since 4am. Is now 12:30am. Should probably sleep.

-

What book/video/resource do you know that explains complex stuff in a simple and fun way?

I recently found "Crafting Interpreters" by Bob Nystrom. It explains how to create a new scripting language from scratch. It teaches you a lot about interpreters, compilers and virtual machines. And it's free!

http://craftinginterpreters.com//

-

A long time ago in a decision poorly made:

Past me: hmm we're having trouble getting IT to give us a new build machine with the new compilers.

Past me: I know, we'll just use one of the PCs that belongs to a member of the team to tide us over.

[2 months pass]

Present me: that's odd, Jenkins is really slow today.

[Several minutes pass]

Present me: holy shit fuck; it's building the whole weekend's worth of builds at 9am on a workday and eating licenses like a castaway that suddenly teleported to an all-you-can-eat buffet.

Present me: [abort, abort, for the love of fuck abort]

Present me: contacts IT, they can't find any problems, wtf happened.

Present me: discovers team member turned off his machine on Friday and builds had been stacking up all weekend.

Lessons learnt: disable the power button on the team member's PC and hire a taser guy to shoot whenever someone goes near the wall socket.

1 hour lost and no build results for the last 3 days.

It's looking like a bad morning

-

I want to build sentiments into the lexical analysis part of compilers, just so that every time my coworker tries to compile his "work", the computer prints,

"*sigh* Brian, just... *sigh*"

-

so llvm core was written in c++

and it's used to build good compilers.

and they used it to build llvm-g++, a c++ compiler (now deprecated)

huh...

-

Today I realized that compilers are children, and must be treated as such. Generally, you might tend to expect a language to follow the same rules consistently, but oh how wrong you are, my sweet summer child.

I have a framework that I've been reusing across several personal Unity3d projects for a while, and all was well. This week, I was tasked with creating a PoC that combines a web app with Unity WebGL for data visualization. My framework has a ton of useful stuff that helped me create the PoC very quickly, and all was well.

Come 3 days ago and there's one last piece that isn't working for some reason. It almost appears that this one bit of code isn't executing at all. Today, after countless hours of swearing at the computer and banging my head against the wall, I realized that the WebGL compiler has a different implementation for the method that checks assignability of types. An implementation that has different rules than everything else. An implementation that has no documentation about this discrepancy anywhere. I have no words.

tl;dr: The language changed the rules on me. Fuck me, right?

-

bro look how cool i am haha lol i know java c c# angular react and php lol haha infact bro i created couple compilers haha lol bro vscode bro more like vssucks lol i use Google Docs for coding haha bro what is windows i use Ubuntu lol for that alpha sigma grindset life haha lol just update 1000 packages a week bro i play with the bootloader like messi plays football bro haha bro i can't exit vim bro i basically stay in it haha lol bro i know all about AI haha LLMs haha im taking an interview, a shit and solving complex neurological simulations at once bro haha i wear dev related tshirts haha lol bro my house is built on Alexa bro haha ALEXA TURN ON THE LIGHTS see how cool it is bro haha i use OAuth everywhere bro to gain access to my toilet seat haha lol my thumbs hurt so bad lol bro cuz I code all day long bro what are weekends bro I never take leaves bro haha have to stay on that sigma side hustle culture right haha look how many stickers i have on my laptop haha im so cool haha lol.

But I am lonely and go online to tell people how cool I am from my mother's basement.

-

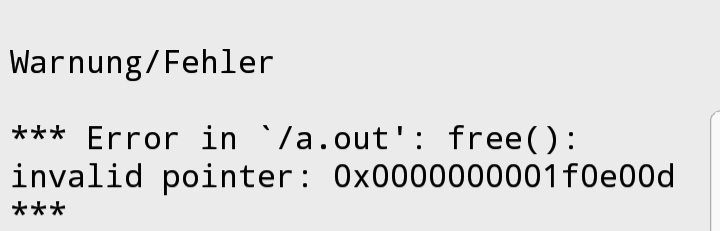

Some compilers report an error when a type cast is missing, so casting explicitly is considered good style. It also helps you avoid careless mistakes that can, in the worst case, crash the program. For some types an explicit cast is required, while for others the compiler performs the conversion automatically.

In short: type casting is used to prevent mistakes.

An example of such an error would be:

#include <stdio.h>

#include <stdlib.h>

int main ()

{

int * ptr = malloc (10*sizeof (int))+1;

free(ptr-1);

return 0;

}

Here the intent is to point at the second element of the requested memory. However, this is not possible in standard C, since pointer arithmetic (+, -) is not defined for a void pointer.

The improved example would be:

int * ptr = ((int *) malloc (10*sizeof (int)))+1;

Here, the cast is done first. This turns the void pointer into an int pointer, so pointer arithmetic can be applied. Note: if "no output" is displayed instead of an error on the SoloLearn C compiler, try another compiler.
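The same idea can be poked at from Python with ctypes (a sketch, not part of the original example; the variable names are made up). A `c_void_p` is only a raw address with no element size, so stepping to the second int either needs the byte stride spelled out by hand or a cast to a typed pointer first:

```python
import ctypes

# Ten ints holding 0..9, standing in for malloc(10 * sizeof(int)).
numbers = (ctypes.c_int * 10)(*range(10))

# Untyped address, comparable to the void * that malloc returns.
raw = ctypes.cast(numbers, ctypes.c_void_p)

# Untyped: the element size has to be supplied manually.
stride = ctypes.sizeof(ctypes.c_int)
second_by_bytes = ctypes.c_int.from_address(raw.value + stride).value

# Typed: after the cast, indexing steps by sizeof(int) automatically.
typed = ctypes.cast(raw, ctypes.POINTER(ctypes.c_int))
second_by_index = typed[1]

print(second_by_bytes, second_by_index)
```

Both routes reach the second element, but only the typed pointer knows how far "one element" is, which is the job the `(int *)` cast does in the C version.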

-

Got VS running, SDL up and running and outputting, and angelscript included. Only getting linker errors on angel at the moment, not on inclusion, but on calling engine initialization.

Who knows what it is. Devs recommended precompiling but I wanted to compile with the project rather than as a dll (maybe I'm doing something stupid though, too new to know).

Goal is to do for sdl, cpp, and angelscript, what LOVE2d did for lua. Maybe half baked, and more just an experiment to learn and see if I can.

Would be cool to script in cpp without having to fuck with compilers and IDEs.

As simple as 1. write c++, 2. script is compiled on load, 3. have immediate access to sdl in the same language that the documentation and core bindings are written for.

Maybe make something a little more batteries-included than what Lua and LOVE offer out of the box, barebones editors and tooling and the like, but that's off in the near future and just a notion rather than a solid plan.

Needed to take a break from coding my game and here I am..experimenting with more code.

Something is wrong with me.

-

I prefer starting from theory and then proceeding to practice. For example, to learn Haskell and deeply understand it, I started by taking a course in category theory (I already have a degree in computer science) and only then started writing actual code. The same with JS. I started with the theory of JIT compilers and studied how V8 works, how it utilizes event loops and how they are implemented in the kernel. Then I started experimenting with code and demos. It's a success path for me that has worked every time with every new technology.

-

I just learned C and I have created some projects like a Parking System and a Library Management System. My problem is that I don't know mathematics, and I want to learn Data Structures & Algorithms and become a pro at it. For the whole of September I will still be focusing on C and creating more projects. I started learning mathematics today, from high school level to college level. I think the maths will take 1 year to complete. After September, in October, I want to start learning C++ and finish it by the end of Dec 2019. What I want to know is: do I have to finish my maths first, which will take 1 year, before I start learning Data Structures and Algorithms? As I said, I want to become a professional in algorithms. I think it's not possible to learn DS&A yet and I have to wait 1 year till I finish learning maths. Can't I do more with C & C++ without knowing DS&A? If I start learning DS&A with C++ in the future, can't I become good at algorithms then? I want to do competitive programming and be Top 1 on HackerRank and other sites like it.

-

Just had a thought: Instead of LLVM modeling and optimizing an IR and then backends having to optimize again for actual machine code lowering, wouldn't it be possible to unite both under one unified system?

If you model everything as one huge and complex state machine with a bunch of predefined "micro ops", couldn't you write an optimizer which lowers to the mathematical representation of the target platform's instructions?

I.e. the actual identities of the instructions don't matter. What matters is that the input IR is `(x + 3) & 0xff` and the optimizer tries to fit a sequence of instructions to that so that it "solves the system". It doesn't know x86 `andb`; it knows that `andb` takes an input, maybe truncates it, does a bitwise and, and stores the output into a reg

That way you wouldn't have to write complex target-dependent backends. Just declare the sequence of actions each instruction does and LLVM would automatically be able to produce very high quality machine code
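A toy sketch of that idea, closer to what is usually called superoptimization than to anything LLVM actually does. The instruction catalogue is entirely hypothetical: each "instruction" is just a declared effect on one 32-bit register, and the search brute-forces short sequences until one agrees with the target expression on a handful of sample inputs. Agreement on samples is not a proof of equivalence; a real tool would need a solver for that step.

```python
from itertools import product

MASK32 = 0xFFFFFFFF

# Hypothetical instruction semantics: name -> effect on a single 32-bit register.
INSTRUCTIONS = {
    "add3":   lambda r: (r + 3) & MASK32,
    "and_ff": lambda r: r & 0xFF,
    "shl1":   lambda r: (r << 1) & MASK32,
    "not":    lambda r: ~r & MASK32,
}

def run(sequence, x):
    """Apply each instruction's declared semantics in order."""
    for name in sequence:
        x = INSTRUCTIONS[name](x)
    return x

def find_sequence(target, max_len=3,
                  samples=(0, 1, 253, 254, 255, 256, 12345, 0xFFFFFFFF)):
    """Return the shortest sequence matching `target` on all samples, or None."""
    for length in range(1, max_len + 1):
        for seq in product(INSTRUCTIONS, repeat=length):
            if all(run(seq, x) == target(x) for x in samples):
                return seq
    return None

# The IR expression from the text above.
best = find_sequence(lambda x: (x + 3) & 0xFF)
print(best)
```

The search cost grows exponentially with sequence length and catalogue size, which hints at why production backends still lean on hand-written patterns.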

I think there's a PhD worth of research here but helllll no I'm not touching compilers again lol

-

Can anyone help me with this theory about microprocessors, CPUs and computers in general?

(I used to love programming during my school days, when it was just basic searching/sorting and OOP. Even in college, when it advanced to language details, compilers and data structures, I was fine. But subjects like COA and microprocessors, which kind of explain the workings of the hardware behind the brain that is a computer, are so difficult for me to understand 😭😭😭)

How does a computer work? All I knew was that when a bulb gets connected to a battery via wires, some metal inside it starts glowing and we see light. No magic involved till now.

Then came the von Neumann architecture, which says a computer consists of 4 things: I/O devices, system bus, memory and CPU. I/O and memory interact with the system bus, which is controlled by the CPU. Thus the CPU controls everything and that's how a computer works.

Wait, what?

Let's take an easy example of a calculator. I pressed 1+2= on the keyboard, it showed me '1+2=' and then '3'. How the hell did that happen?

Then some video told me this: every key on your keyboard is connected to a multiplexer which gives a special "code" to the processor regarding the key press.

The "control unit" of the CPU commands the RAM to store every character until '=' is pressed (which is a kind of interrupt telling the CPU to start processing). RAM is simply a bunch of storage circuits (which can store some 1s) along with another bunch of circuits which can retrieve this data.

Up till now, the control unit knows that memory has (e.g.):

Value 1 stored as 0001 at some address 34A

Value + stored as 11001101 at some address 34B

Value 2 stored as 0010 at some address 23B

On receiving the code for the '=' press, the "control unit" commands the "ALU" of the CPU to fetch data from memory, understand it and calculate the result (i.e. the "fetch, decode and execute" cycle).

The ALU fetches the "codes" from memory, which translate to ADD 34A,23B, i.e. add the data stored at addresses 34A and 23B. The ALU retrieves the values present at the given addresses, passes them through its adder circuit and puts the result at some new address 21H.

The control unit then fetches this result from the new address and, via the system buses, sends this new value to the display's memory loaded at some memory port 4044.

The display picks it up and instantly shows it.
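The flow above can be mimicked with a toy simulator. This is a loose sketch and not how any real CPU is wired: the instruction names, the tuple encoding and the `display` list are all made up, and the addresses reuse the ones from the example (with 21H read as hex 21). One hedged note: in typical designs it is the control unit that fetches and decodes, while the ALU only does the arithmetic, so the loop below plays the control unit and the `+` plays the ALU.

```python
def run(program, memory):
    """Toy fetch-decode-execute loop over a list of instruction tuples."""
    pc = 0                # program counter: index of the next instruction
    display = []          # stands in for the display's memory
    while pc < len(program):
        opcode, *operands = program[pc]   # fetch
        pc += 1
        if opcode == "ADD":               # decode, then execute
            src_a, src_b, dest = operands
            memory[dest] = memory[src_a] + memory[src_b]
        elif opcode == "OUT":
            (src,) = operands
            display.append(memory[src])
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return display

# Operands 1 and 2 already stored by the keyboard handling described above.
memory = {0x34A: 1, 0x23B: 2}
program = [
    ("ADD", 0x34A, 0x23B, 0x21),   # result goes to address 21H
    ("OUT", 0x21),                 # copy the result to the display
    ("HALT",),
]
shown = run(program, memory)
print(shown)
```

Real machines encode the opcode and operands as bit patterns (the opcodes asked about below), and assembly language is essentially a readable spelling of those patterns.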

My problems:

1. Is this all correct? Is this all that happens?

2. Please expand on this more.

How do the system bus, ALU and CPU work?

What are the registers, accumulators and flip-flops in the memory?

What are the machine cycles?

What are instruction cycles, opcodes and instruction codes?

Where does assembly language come in?

How does the CPU manipulate memory?

The data bus and the control bus, what are they?