
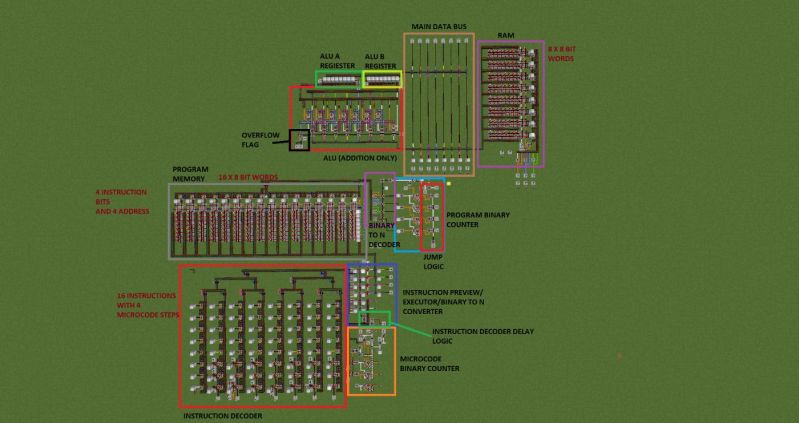

Here it is. My latest creation: an 8-bit microcontroller made in Minecraft.

Features:

(1.0 version without control room)

-8-bit full adder + overflow flag

-8x8-bit RAM

-16x8 program memory (4-bit instruction, 4-bit address)

-64 possible microinstructions (16 instructions with 4 steps each)

-unconditional and if-overflow jumps (target determined by the address written with the instruction)

-1/3Hz clock speed 😨
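To make the ALU part concrete, here is a minimal Python sketch of what an "8-bit full adder + overflow flag" computes. It assumes the flag means two's-complement signed overflow (the post doesn't say whether it means that or the carry out, so both are returned), and that the 4-bit instruction sits in the high nibble of each 8-bit program word. `add8` and `decode` are hypothetical names, not part of the build.

```python
def add8(a: int, b: int) -> tuple[int, bool, bool]:
    """Add two 8-bit values the way an 8-bit ripple-carry adder would.

    Returns (sum, carry_out, signed_overflow).
    """
    a &= 0xFF
    b &= 0xFF
    raw = a + b
    result = raw & 0xFF   # keep only the low 8 bits, like the hardware
    carry = raw > 0xFF    # carry out of bit 7 (unsigned overflow)
    # Signed overflow: both inputs share a sign bit that differs from the result's.
    overflow = bool(~(a ^ b) & (a ^ result) & 0x80)
    return result, carry, overflow


def decode(word: int) -> tuple[int, int]:
    """Split an 8-bit program word into (opcode, address), opcode in the high nibble."""
    return (word >> 4) & 0x0F, word & 0x0F


print(add8(100, 50))   # (150, False, True): 150 doesn't fit in a signed byte
print(add8(200, 100))  # (44, True, False): carry out, but -56 + 100 = 44 is fine
print(decode(0xA7))    # (10, 7)
```

With these semantics, an "if overflow" jump would test the third value returned by `add8`.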

The new working version (2.0) has a 1 Hz clock and a new, faster instruction decoder.

In 3.0, in addition to that, the useless bus was replaced with a 16x8-bit "hardware" stack that can store addresses and data. The clock is going to be yeeted out because it is unnecessary #clocklessisbetter (WIP tho)

Might add more documentation and post it as a learning model for CS wannabes 🤔. What do you think?

Picture: Old working version 1.0

(the only one with a fancy diagram)

Newer version screenshots in comments.

-

The story of the $500,000,000 error.

In 1996, an unmanned Ariane 5 rocket was launched by the European Space Agency.

Onboard was software written to analyze the horizontal velocity of the rocket. A conversion from a 64-bit floating-point value to a 16-bit signed integer within this software ultimately caused an overflow error just forty seconds after launch, leading to the catastrophic loss of the vehicle.
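A minimal Python sketch of the failure mode: narrowing a 64-bit float into a signed 16-bit integer only works for values between -32768 and 32767, so it either needs a range check or it goes wrong for large inputs. The function names and sample values are hypothetical illustrations, not the flight software; the post doesn't say how the flight code handled the error, so the sketch just contrasts a checked conversion with a silently wrapping one.

```python
def to_int16_checked(value: float) -> int:
    """Narrow a float to a signed 16-bit integer, refusing out-of-range values."""
    truncated = int(value)  # drop the fractional part (rounds toward zero)
    if not -32768 <= truncated <= 32767:
        raise OverflowError(f"{value} does not fit in a signed 16-bit integer")
    return truncated


def to_int16_wrapping(value: float) -> int:
    """Keep only the low 16 bits, i.e. what a silent, unchecked narrowing yields."""
    low_bits = int(value) & 0xFFFF
    return low_bits - 0x10000 if low_bits >= 0x8000 else low_bits


print(to_int16_checked(1234.7))    # 1234
print(to_int16_wrapping(40000.0))  # -25536: a large positive value turns negative
try:
    to_int16_checked(40000.0)
except OverflowError as err:
    print("rejected:", err)
```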

That day, $7 billion of development met its match: a data type conversion.

-

If all you have is a hammer, everything looks like a nail!

This was something my tech lead used to tell me when I was so obsessed with NoSQL databases a few years back. I would try to find problems to solve that had a use case for NoSQL databases, or even try to convince myself (I didn’t realise it back then) that I needed a NoSQL DB for a new idea I had, without thinking deeply enough about whether the data in question was better represented using an SQL schema.

Now, leading a team of young developers, I come across similar suggestions from a few of my team members who have just discovered this new and shiny tech and want to use it in production projects.

While I am not against new and shiny, it’s not good practice to jump right into it without exploring it deeply enough or considering all the shortcomings. The most important question to ask is whether some of the problems you are trying to solve can be solved with the current stack.

Modifying your stack requires more than a week’s experience of playing around with the getting-started guide and Stack Overflow replies. It needs to be carefully considered after taking input from the people who would be supporting it, including operations, sysadmins and teams that are gonna interface with your stack indirectly.

I am not talking about delaying adoption by waiting on a long list of approvals for something that would bring immediate value, but about a carefully orchestrated plan for why and how to migrate to a new stack.

Just because one of the tech giants made a move to a new stack and wrote about it in their engineering blog doesn’t mean that you need to make a switch in the same direction. Take a moment to analyse the possible reasons that motivated them to do it, ask yourself if your organisation is struggling with the exact same problems, observe how others facing the same issue are addressing it, and then make an informed decision.

Collect enough data to support your proposal.

Ask yourself again if you are the one holding the hammer.

If the answer is no, forge ahead!

-

A group of security researchers has officially fucked the hardware-level Intel botnet officially branded as "Intel Management Engine". They did so by gathering all the autism they could get from StackOverflow mods... though they officially call it a buffer overflow.

On Wednesday, in a presentation at Black Hat Europe, Positive Technologies security researchers Mark Ermolov and Maxim Goryachy plan to explain the firmware flaws they found in Intel Management Engine 11, along with a warning that vendor patches for the vulnerability may not be enough.

Two weeks ago, the pair received thanks from Intel for working with the company to disclose the bugs responsibly. At the time, Chipzilla published 10 vulnerability notices affecting its Management Engine (ME), Server Platform Services (SPS), and Trusted Execution Engine (TXE).

The Intel Management Engine, which resides in the Platform Controller Hub, is a coprocessor that powers the company's vPro administrative features across a variety of chip families. It has its own OS, MINIX 3, a Unix-like operating system that runs at a level below the kernel of the device's main operating system.

It's a computer designed to monitor your computer. In that position, it has access to most of the processes and data on the main CPU. For admins, it can be useful for managing fleets of PCs; it's equally appealing to hackers for what Positive Technologies has dubbed "God mode."

The flaws cited by Intel could let an attacker run arbitrary code on affected hardware that wouldn't be visible to the user or the main operating system. Fears of such an attack led Chipzilla to implement an off switch, to comply with the NSA-developed IT security program called HAP.

But having identified this switch earlier this year, Ermolov and Goryachy contend it fails to protect against the bugs identified in three of the ten disclosures: CVE-2017-5705, CVE-2017-5706, and CVE-2017-5707.

The duo say they found a locally exploitable stack buffer overflow that allows the execution of unsigned code on any device with Intel ME 11, even if the device is turned off or protected by security software.

For the complete story, go here:

https://blackhat.com/eu-17/...

https://theregister.co.uk/2017/12/...

I post mostly daily news, commentaries and such on my site, for anyone who wishes to drop by.

-

In the beginning of time, when The Company was small and The Data could fit in some fucking Excel sheets, Those Who Came Before implemented some Java tool to issue invoices, notify customers and clear received payments.

Then came the Time Of The Great Expanse, when The Company grew to unthinkable levels. Headcount increased with each passing day, and The Data shows that everything was going great!

But when the future seemed bright, came The Stall-Out. The days when The Company could not expand as fast as it did before. And Those Who Came Before left, abandoning their Undocumented Java Tool to its own luck.

Those Who Came After knew nothing of the inner workings of the Undocumented Java Tool. They knew only that the magical Jar would take a couple of fucking Excel spreadsheets and spit out reports and send emails like magic.

And those were The Dark Days.

In the darkness, The Data grew into a monster. Soon a fucking Excel spreadsheet could not contain The Data any longer. Those Who Came After, fearing the wrath of The Undocumented Java Tool, dared not mess with its code. Instead, they fucking cut the lowest-volume transactions from the fucking input spreadsheet, and left the company to report the unbilled invoices as "surprise losses". Fucking script kiddies, were Those Who Came After.

Then, at The Darkest of Days (literally, Dec 21st), marched into the project The Six Witchers, who fear not the Demon of Refactoring.

This story is still unfolding. Will The Six Witchers manage to unravel the mysteries of The Undocumented Java Tool? Will they be able to reverse engineer the fucking black box, and scale its magic into a modern application?

Will they decrease revenue forecasting error by at least 2% in a single strike?

Only the future will tell.

-

So, day 2 of my Python automations.

I have spent 6 hours and a lot of Stack Overflow “research” to save myself 45 minutes a day on file downloads (web & FTP and Outlook emails), Excel spreadsheets and data manipulation macros, all stored in a nice tidy zip file at the end.
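The final "nice tidy zip file" step needs only the standard library. A minimal sketch, assuming the downloaded files and processed spreadsheets already sit in one output folder; the folder layout and the name `bundle_outputs` are hypothetical, not the poster's 90 lines.

```python
import zipfile
from pathlib import Path


def bundle_outputs(folder: Path, archive: Path) -> list[str]:
    """Zip every regular file directly inside `folder` into `archive`.

    Returns the sorted list of names stored in the archive.
    """
    stored = []
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(folder.iterdir()):
            # Skip subfolders, and the archive itself if it lives in the same folder.
            if path.is_file() and path.resolve() != archive.resolve():
                zf.write(path, arcname=path.name)  # store without the folder prefix
                stored.append(path.name)
    return stored
```

Usage would look like `bundle_outputs(Path("out/today"), Path("out/today.zip"))`, with both paths being placeholders for wherever the earlier steps write their files.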

Now to find a way to send to a web server for digestion 😎

And all of this in a mere 90 lines 😧

God damn, why didn’t I look into this earlier?

-

I'm exhausted.

One and a half years after my last rant, I'm here again. I left my previous job as a web developer after almost 12 years. At the time I had 3 job offers as a developer; I chose the one at the largest company, and the prospects looked really good. My 3 interviews went excellently. But what I found next was almost a nightmare.

I was literally "confined" for the first 2 months: no internet connection, no email address, very little communication with colleagues. The colleague next to me shared the code I would be working on via a USB stick. All this for "safety" purposes, because "here you start this way".

For me it was not so bad: I could take my time to study my work and do it (without Stack Overflow, only with reference guides when needed; I felt proud in an old-fashioned way). But the next months were really tough: no help understanding what I was missing about the work I was doing. Consider that I was working on a large database, previously used by an old ERP, on which other developers before me had written a lot of code so the company could keep using all the data after the ERP licences expired. We're talking about a Java application from around the year 2000.

Now I find myself struggling, because the main project I was working on has been set aside (apparently due to budget decisions); my team constantly has me do maintenance on the old code, but the main tasks are done by my longtime colleague, "because deadlines are always pressing and there wouldn't be enough time to explain anything to you". I'm not growing.

I'm really becoming reluctant to write code, and whenever I do, I constantly feel under pressure, which makes me nervous and prone to errors.

Don't get me wrong, I was/am good at my work, but it's like I'm losing the spark I had until a few years ago.

When I'm at home I try to study or write code, just to keep my mind trained, but I'm really struggling, and I'm worried that I'm losing my aptitude for this job. I constantly forget things and lose focus.

I've never felt this way. I'm thinking about switching again and looking for another company.

-

For fuck's sake, management is now asking us to provide data, expressed as a percentage, on how much more efficient genAI is actually making us as developers. How the fuck do you even measure that empirically?

It is already BS enough that they track how much we query these AI tools every day in our development environments, but now they want genAI to produce most of the code templates in our SDK. It can barely produce a working regex or a working Python script, let alone a small piece of code that won't stack overflow itself into oblivion. It sometimes takes more time to debug and refactor than to do it myself from scratch.

They ask for our professional opinion, we tell them, they don't give a fuck about it, proceed to think all is rainbows and unicorns, and still ask us the same moronic things as if they were the new messiahs on earth.

Don't get me wrong, genAI can be useful, but why the fuck does management think it will magically solve all our problems when they don't understand how it works even on a surface level?

The only explanation that makes sense is that a lot of them have money at stake in some AI investment sales-pitch bullshit, and they try to jam it down our collective throats because otherwise they will lose their investments like there is no tomorrow.

Fuck all of this, I just want to do engineering and build something useful to society. Is it too much to ask?

-

If only people understood the amount of effort that goes into building a simple app.

Even if it's a simple notes app, I have to design the UI (at least 2 different activities: 1 for the list and the other for editing notes), write the code that makes it run (without which the app is just an empty design), think about what data structures to use (the notes you are saving need to be stored somehow), and then club everything together and hope nothing breaks (spoiler alert: something will definitely break).

People need to understand that it's not just putting some fancy buttons and boxes around. Also, I'm not just making the app for one device. I have to make sure it works on different screen sizes and different versions of the OS (a user can't imagine how many functions need to be rewritten because something got deprecated and I had to switch to something different).

Also, I'm not just sitting at my computer converting coffee to code. I have to think about the flow, structure, design, navigation, backend, etc. of the app; most of my time isn't spent writing code but thinking about and studying how to write it. I also need to wait while the project compiles/builds every time I want to test it.

A function you think is hard to implement might be really easy, while something you claim is easy might be a nightmare. Oh, and I didn't even mention that I need to stick to design guidelines to make the app look consistent with the rest of the OS.

If you're wondering why a developer spends most of his time in a browser: he isn't playing internet games or browsing Reddit (at least you'd better hope not), he's probably looking at the docs/Stack Overflow to get something working or to fix something!

Wow! That was long. Thanks!

-

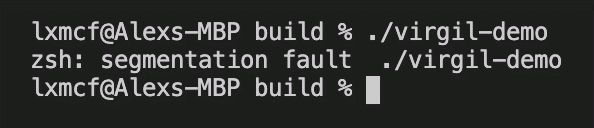

Well, after years of programming, I've hit my first runtime error that provides no info: the code fails before it can even generate an error, so this is fucking fine :-)

And of course, the one time I need Stack Overflow, it tells you to initialise the class with data... Yet the class doesn't contain a fucking constructor... Smiley face

-

I can’t believe that Python takes 3rd place among the most commonly used languages in 2023.

Stack Overflow Developer Survey:

https://survey.stackoverflow.co/202...

Wtf?! I would have thought that it’s just used for small scripts occasionally that don’t change frequently and just rot in some project for years.

And of course there are some niche Data Science and Math areas where Python is used primarily.

Maybe Machine Learning and "AI" as well.

But 3rd place? Almost 50% of devs use Python?

Can someone explain?

-

!rant

Sorry but I'm really, really angry about this.

I'm an undergrad student in the United States at a small state college. My CS department is kinda small but most of the professors are very passionate about not only CS but education and being caring mentors. All except for one.

Dr. John (fake name, of course) did not study in the US. Most professors in my department didn't. But this man is a complete and utter a****le. His first semester teaching was my first semester at the school. I knew more about basic programming than he did. There was more than one occasion where I said "Prof, I was taught that x was actually x because x. Is that wrong?", knowing that what I was suggesting was actually the right answer (I googled to verify first). He said that my old teachings were all wrong and that everything he said was correct. I called BS on that, waited until after class to be polite, and showed him that I was actually correct. He denied it.

His accent was also really problematic. I'm not one of those people who feel that a good teacher needs a native accent by any standard (literally only 1 prof in the whole department has one), but his English was *awful*. He couldn't lecture for his life, and I, a straight-A student in high school, was almost bored to sleep on more than one occasion. Several others actually did fall asleep. This... wasn't a good first impression.

It got worse. Much, much worse.

I got away with not having John for another semester before the bees were buzzing again. Operating Systems was the second most poorly taught class I've ever been in. Dr. John hadn't gotten any better. He'd gotten worse. In my first semester he was still receptive when you asked for help, was polite about explaining things, and was generally a decent guy. This didn't last. In Operating Systems, his replies to people asking for help became slightly more hostile. He wouldn't answer questions with much useful information and started saying "it's in chapter x of the textbook, go take a look". I mean, sure, I can read the textbook again, and many of us did, but the textbook became the default answer to everything. Sometimes it wasn't worth asking. His homework assignments became more and more confusing, irrelevant to the course material, or just downright strange. We weren't allowed to use mutexes. Only semaphores? It just didn't make much sense, since we never needed multiple threads in a critical section at any time. Lastly for that class, the lectures were absolutely useless. I understood the material better if I didn't pay attention at all and taught myself what I needed to know. Usually the class was nothing more than time to do other coursework, and I wasn't alone in this. It was the general consensus. I was so happy to be done with Prof. John.
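For context on the "only semaphores" rule: a semaphore initialized to 1 (a binary semaphore) can guard a critical section much like a mutex, so the restriction was workable, just odd. A minimal Python sketch using the standard `threading.Semaphore`; the shared counter and the thread count are made up for illustration.

```python
import threading

counter = 0
guard = threading.Semaphore(1)  # initial value 1: at most one thread inside at a time


def work(iterations: int) -> None:
    global counter
    for _ in range(iterations):
        with guard:       # acquire() on entry, release() on exit, like a mutex
            counter += 1  # the critical section


threads = [threading.Thread(target=work, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # 40000
```

Unlike a mutex, a semaphore has no owner, so any thread may release it; that is what makes counting semaphores useful when several threads may enter at once, which, as the post says, the coursework never needed.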

Until AI was listed as taught by "staff". I rolled the dice, and it came up snake eyes.

AI was the worst course I've ever been in. Our first project was converting old Python 2 code to Python 3 and replicating the solution the professor wanted. No matter how much debugging I did, I could never get his answer. Thankfully, he had been lazy and just grabbed some code off Stack Overflow from an old commit, took the output and test data from the repo, and called it an assignment. Being the sneaky piece of garbage I am, I knew that 2to3 was a thing, and used it for most of the conversion. Then the edits we needed to make for the assignment came into play, but they weren't all that bad. Just some CSP and backtracking. Until I couldn't replicate the answer at all. I tried over and over and *over*, trying to figure out what I was doing wrong, and could find nothing. Eventually I smartened up, found the source on GitHub, and copy-pasted the solution. And... it matched mine? Now I was seriously confused, so I ran the test data on the official solution code from GitHub. Well, what do you know? My solution is right.

So now what? Well, I went on a scavenger hunt to figure out why. Turns out it was a shift in the way streaming happens for some data structures between Python 2 and Python 3, and he had never tested the code. He refused to accept my answer, so I made a lovely document using the repo to prove I was right. Got a 100. lol.

Lectures were just plain useless. He asked us to solve multivariable calculus problems that no one had seen before, and of course no one did them. He wasted 2 months on MDPs (Markov decision processes). I'd continue, but I'm running out of characters.

And now for the kicker. He became an a**hole, telling my friends doing research that they were terrible programmers who would never get anywhere doing this, etc. People were *crying*, and the guy kept driving the nail deeper over code that was honestly very good, because "his was better". He treats women like delicate objects and it's disgusting. YOU MADE MY FRIEND CRY, GAVE HER A BOX OF TISSUES, AND THEN JUST CONTINUED.

Want to know why we have issues with women in CS? People like this a****le. Don't be Prof. John. Encourage, inspire, and don't suck. I hope he's fired for discrimination.

-

We need an open-source alternative to Stack Overflow. They have fucking monopolizing pieces-of-ratshit admins there and lame-ass bots.

I HAD A FUCKING 450 REP :/ and now I have "reached my question limit"

I mean, it's okay if you want to keep Stack Overflow clean, but straight-out rejecting new questions should be against your goddamn principles, if those mofos have any!

If it's so easy for the mods to downvote and delete a question, why can't they create a trash site called dump.stackoverflow.com? Whenever a question doesn't follow their stupid guidelines, downvote it to oblivion. After a certain limit, the question goes to the dump space, where it is automatically removed after 30 days. At least give us 30 fucking days to gather the audience's attention!
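The proposed dump is essentially a two-stage lifecycle. A minimal Python sketch under loud assumptions: the "certain limit" is taken to be a score of -5, and `Question`, `DUMP_SCORE` and `moderate` are hypothetical names; only the 30-day retention comes from the proposal above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

DUMP_SCORE = -5                 # hypothetical "certain limit" of downvotes
RETENTION = timedelta(days=30)  # the 30 days proposed in the rant


@dataclass
class Question:
    title: str
    score: int = 0
    dumped_at: Optional[datetime] = None  # set when moved to the dump site


def moderate(questions: list[Question], now: datetime) -> list[Question]:
    """Move heavily downvoted questions to the dump and purge expired ones.

    Returns the questions that survive this pass.
    """
    survivors = []
    for q in questions:
        if q.dumped_at is None and q.score <= DUMP_SCORE:
            q.dumped_at = now  # starts a 30-day countdown instead of instant deletion
        if q.dumped_at is not None and now - q.dumped_at >= RETENTION:
            continue  # expired: removed automatically
        survivors.append(q)
    return survivors
```

Running `moderate` once a day (for example) would give every dumped question its full 30 days of visibility before removal.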

And how does one question define someone's character so that you downright ban the person from asking new questions? Is there a PhD we should be doing in our mother's womb to qualify as a legitimate question author?

"No questions are stupid" is what we usually hear in our school/college life. And that's a stretch, i agree. Some questions are definitely stupid. But "Your questions are so stupid we are removing you from the site" is the worst possible way to deal with a question asker.

Bloody assholes.

Now, can anyone tell me: if I am passing a Parcelable list of objects in an Intent before starting a new activity, how can I retrieve it in the new activity without getting any Kotlin warnings?

The compiler says that the data coming via the intent is a List<Type!>, i.e. a list of a platform type, so how do I deal with this warning?

-

!rant ?

So I had 2 Stack Overflow questions open about Rails / Webpack data communication, plus one issue open on Webpacker's GitHub for 3 days, desperately looking for an answer or an idea. No answers.

Today I talked a bit with my flatmate about my problem; the dude gives me a perfect, easy-to-implement solution, and life seems bright again. Thank you Alex 😥.

-

The company I work for uses ColdFusion, which is a dead technology in my opinion. I was tasked with using a data grid for data from our MSSQL databases. The data grid I was trying out uses Ajax to call the server and expects the data back in JSON format. Well, ColdFusion sucks balls, because its serializeJSON function returns an outdated JSON structure and I can't use it. So obviously the data grid throws errors, and when I look up ColdFusion solutions online or scope out Stack Overflow, the posts date back like 6 years because no one fucking uses CF anymore. My boss seems to love jerking to it; he refuses to change languages because it's all they have ever used. -_- this is 2016 bitch lol
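A common workaround is to reshape the query output before the grid sees it. A minimal sketch, in Python for illustration only, assuming the "outdated" structure is ColdFusion's default column/row serialization of a query, `{"COLUMNS": [...], "DATA": [[...], ...]}`, and that the grid wants an array of row objects; check what your grid actually expects. The name `cf_query_to_rows` and the sample data are hypothetical.

```python
import json


def cf_query_to_rows(payload: str) -> list[dict]:
    """Turn {"COLUMNS": [...], "DATA": [[...], ...]} into [{column: value, ...}, ...]."""
    parsed = json.loads(payload)
    columns = [name.lower() for name in parsed["COLUMNS"]]  # CF upper-cases column names
    return [dict(zip(columns, row)) for row in parsed["DATA"]]


cf_json = '{"COLUMNS":["ID","NAME"],"DATA":[[1,"Widget"],[2,"Gadget"]]}'
print(json.dumps(cf_query_to_rows(cf_json)))
# [{"id": 1, "name": "Widget"}, {"id": 2, "name": "Gadget"}]
```

Lower-casing the keys is a design choice, not a requirement; the same zip-columns-with-each-row loop can be written server-side in CFML so the Ajax endpoint returns the row-object shape directly.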

-

When someone (and this is typically someone non-dev and not a very structured person) says "Can I ask you a question" and, upon an affirmative answer to that, fires away a whole bunch of questions without waiting for an answer to the first of the questions.

Pleeeease! If you expect an answer, ask me one. question. at. a. time. Or write a freaking e-mail.

-

I like how software is smart so I have to do things twice instead of once.

Automatic quote insertion works only if you type the quotes first and then paste inside them. The problem is that I usually paste first, then type the opening quote, and then need to remove the auto-inserted closing quote and put it at the end instead.

Video "continue from where you left off" automatically kicks in and shows the closing credits, because you obviously want to see them.

Evaluate Variable removes the old evaluation, because why would you want the old one when you have a new one?

Collapsing imports or functions in the IDE, so you need to expand them all the time, because who needs to look at functions when we have AI?

AI models suggesting and adding meaningless annotations and code suggestions to distract me.

Randomly running some console command because I hit a keyboard shortcut I didn’t even know existed.

Literally every web browser address bar has become an advertising network instead of showing me history results.

Shadowing browsing history when you click back and forward button.

Search results are now buy results.

Suggesting useless crap for me to watch because I watched one video on that topic.

Showing me 10-minute videos as the solution to my problem when I just want to find the exact line of text to copy-paste. If I’m lucky, I only need to retype the text from the video.

Stack Overflow’s infinite loop of "answered in #some-different-question".

I think it’s about time for me to slowly retire from programming and software as a whole, or switch to Notepad, because I don’t want to use this crap anymore.

Looks like software is now meant for entertainment and distraction instead of doing actual work where you need precise data and information.

Luckily, if everything goes well, I can retire soon and throw everything away for a while.

-

2018: Data Scientist = Stack Overflow copy-pasting: "I followed a 12-hour 'DS' course on Lynda!"

lm() # science

-

First post on devRant... Aaaaand it's university hw... I can't wrap my head around this...

So, the problem is: I have to implement reading and printing 64-bit decimal integers (negative and positive, in two's complement) in NASM Assembly. There are no input parameters, and the result should be in EDX:EAX. The use of 64-bit registers is prohibited.

There is a library which I can use: mio.inc

It has these functions:

- mio_writechar (writes the character which corresponds to the ASCII code stored in AL to console)

- mio_readchar (reads an ASCII character from console to AL)

It also has to handle overflow and backspace. An input can be considered valid or invalid only after the user hits Enter... It's actually a lot of work, and it's just the first exercise out of 10... 😭

The problem is actually just the input - printing should be easy, once I have valid data...
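Since the input side is the hard part, it can help to get the algorithm right in a high-level language first and then translate it. A minimal Python sketch of that algorithm, not NASM and not a solution to hand in: it consumes characters as successive `mio_readchar` calls would return them, assumes backspace arrives as `\b` (ASCII 8) and Enter as CR or LF, and accepts a single optional leading sign. `read_int64` is a hypothetical name. In the assembly version the `value * 10 + digit` step has to be carried out across two 32-bit registers, which is exactly where the overflow checks belong.

```python
def read_int64(keys: str) -> tuple[int, int]:
    """Simulate reading a signed 64-bit decimal, one keystroke at a time.

    `keys` is what successive mio_readchar calls would return. A backspace
    deletes the previous character; the input is judged only once Enter arrives.
    Returns (edx, eax): the high and low 32 bits of the two's-complement value.
    Raises ValueError for a malformed number, 64-bit overflow, or a missing Enter.
    """
    buffer = []
    for ch in keys:
        if ch in ("\r", "\n"):  # Enter (CR or LF, depending on the console)
            break
        if ch == "\b":          # backspace: drop the last accepted character
            if buffer:
                buffer.pop()
            continue
        buffer.append(ch)
    else:
        raise ValueError("input never ended with Enter")

    text = "".join(buffer)
    negative = text.startswith("-")
    digits = text[1:] if text[:1] in ("-", "+") else text
    if not digits or not all("0" <= d <= "9" for d in digits):
        raise ValueError(f"not a decimal integer: {text!r}")

    # Build the magnitude as value = value * 10 + digit and check the limit after
    # every digit: once a 64-bit register pair wraps, the overflow is undetectable.
    limit = 2**63 if negative else 2**63 - 1
    value = 0
    for d in digits:
        value = value * 10 + (ord(d) - ord("0"))
        if value > limit:
            raise ValueError("overflow: value does not fit in 64 bits")

    bits = (-value if negative else value) & 0xFFFF_FFFF_FFFF_FFFF  # two's complement
    return bits >> 32, bits & 0xFFFF_FFFF  # EDX gets the high half, EAX the low half


edx, eax = read_int64("-12\b5\n")  # user typed -12, backspace, 5, Enter
print(hex(edx), hex(eax))  # 0xffffffff 0xfffffff1
```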

Please help me!

-

I figured I would share my Capstone from this semester with a community that might be interested. An Eclipse plugin that was developed in our lab can implicitly track developers' eye gazes as they work in an IDE (Eclipse in this case). Before I began work on it, source code, bug reports, and Stack Overflow documents could be tracked, with all of the data in those documents being extracted. For example, if source code is being tracked, everything from the file name and class/method name down to statement types is collected. The tracking isn't on still images. Since it's within an IDE, you can open multiple files, scroll, and modify them, all while tracking collects accurate data based on the (x, y) gaze coordinate and the handler assigned to the type of document/file being viewed.

My job was to extend this functionality to track gazes on UML class diagram documents. This means I had to gather data at the highest level: the class/connection being looked at, down to the lowest level: members/methods, their types and containing classes.
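The UML handler boils down to a hit test: map the (x, y) gaze coordinate to the most specific diagram element under it, from class down to member. A minimal Python sketch of that idea only, not the lab's Eclipse/GEF code: it assumes every class box and member row is available as an axis-aligned rectangle in the same coordinate space as the gaze data, ignores connections, and uses hypothetical names (`Element`, `hit_test`) and made-up coordinates.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """A diagram element (class, attribute, method) with a screen-space bounding box."""

    kind: str
    name: str
    x: int
    y: int
    width: int
    height: int
    children: list["Element"] = field(default_factory=list)

    def contains(self, gx: int, gy: int) -> bool:
        return self.x <= gx < self.x + self.width and self.y <= gy < self.y + self.height


def hit_test(elements: list[Element], gx: int, gy: int) -> list[Element]:
    """Return the chain of elements under the gaze point, outermost first."""
    for element in elements:
        if element.contains(gx, gy):
            return [element] + hit_test(element.children, gx, gy)
    return []


diagram = [
    Element("class", "Order", 100, 100, 200, 120, children=[
        Element("attribute", "total: double", 105, 130, 190, 20),
        Element("method", "checkout(): void", 105, 170, 190, 20),
    ]),
]
print([f"{e.kind}:{e.name}" for e in hit_test(diagram, 150, 175)])
# ['class:Order', 'method:checkout(): void']
```

Returning the whole chain rather than only the innermost hit gives both levels the post mentions at once: the containing class and the member with its type.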

Being new to Eclipse's EMF and GEF frameworks and to Eclipse plugin development, I had a bit of a learning curve. Anyways, here is the poster of the functionality I added. 🙃

Not much of a rant haha.

-

OK I need some help. I need to make sure I’m not losing my mind.

We are using an ERP which is hosted by another company. We are supposed to be able to access the data via a REST API. This works fine using Insomnia or Postman, but when I attempt to hit the API from my web application, CORS blocks the localhost origin.

I contacted the company’s technical team to request that they change the CORS configuration to allow localhost. They keep running me around in circles telling me that I don’t know what I’m talking about because localhost isn’t a DNS resolvable name and I’m doing something wrong and they don’t need to change any configuration.

They insist that if anything needed to be whitelisted, it would be my IP, not localhost.

I sent them screenshots, Stack Overflow posts and documentation links, showing them exactly which headers need to be set and where the configuration needs to be set in the ERP. They tell me I don’t know what I’m talking about.

They tell me that if I can hit the API from Postman, I can hit it from my browser.
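The disagreement comes down to who enforces CORS. Postman and Insomnia are not browsers, so they ignore CORS entirely. A browser sends the page's origin (scheme, host and port, e.g. `http://localhost:3000`) in an `Origin` header and only lets the page read the response if the server answers with a matching `Access-Control-Allow-Origin`. The check compares origin strings; no IP whitelist or DNS lookup is involved. A minimal Python sketch of the server-side decision, with a hypothetical allow-list and function name (`cors_headers`); it is not the ERP vendor's configuration.

```python
from typing import Optional

# Hypothetical allow-list; a real server reads this from its own configuration.
ALLOWED_ORIGINS = {
    "http://localhost:3000",
    "https://app.example.com",
}


def cors_headers(origin: Optional[str], method: str) -> dict[str, str]:
    """Return the CORS headers a server would add to its response.

    `origin` is the request's Origin header (None when the client sent none).
    An empty dict means a browser will not let the page read the response;
    non-browser clients such as Postman ignore these headers either way.
    """
    if origin is None or origin not in ALLOWED_ORIGINS:
        return {}
    headers = {
        "Access-Control-Allow-Origin": origin,  # must match the page's origin exactly
        "Vary": "Origin",  # the response differs per requesting origin
    }
    if method == "OPTIONS":  # preflight sent before non-simple requests
        headers["Access-Control-Allow-Methods"] = "GET, POST, PUT, DELETE"
        headers["Access-Control-Allow-Headers"] = "Authorization, Content-Type"
    return headers


print(cors_headers("http://localhost:3000", "GET"))
print(cors_headers("http://127.0.0.1:3000", "GET"))  # {}: a different origin string
```

The second call shows why "whitelist my IP" misses the point: even the same machine reached as `127.0.0.1` is a different origin from `localhost`.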

Am I losing my mind? Have I fundamentally misunderstood CORS all these years? I’m sure I’m right. But I’m starting to feel like I’m crazy.

-

!rant (I got downvoted for this on Stack Overflow, so I'm trying to discuss the issue with a more professional crowd.)

In a Software Engineering class, we had an assignment to read Parnas' seminal paper on modularization [0]. In this paper, two approaches of dividing a software into modules are discussed:

Traditional Approach: A flow chart is drawn to work out the single processing steps and the program's high-level flow. Then every processing step is turned into a module. This approach doesn't yield very good results.

New Approach: Every design decision will be turned into a module by the means of information hiding. This approach leads to much better results.

My personal interpretation of the term design decision is that the modules are identified as data structures rather than as processing steps of an algorithm. This makes sense, because data structures are much more suitable for information hiding than processing steps of an algorithm. (The information inside a data structure is hidden behind functions, whereas a function only hides more detailed processing steps and no information; the information is actually passed in as arguments.)

Why does the second approach work so much better than the first approach? Here comes my second interpretation: The single processing steps of an algorithm are not replaceable (and thus not reusable), whereas it's possible to convert data structures into other data structures.
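Parnas' own example is a KWIC index, and his second decomposition hides how lines are stored behind a small set of access functions. A minimal Python sketch of that idea, not code from the paper: two hypothetical storage classes make different design decisions behind the same interface, and the client (`circular_shifts`) works with either one unchanged. That swap is the replaceability the question attributes to data-structure modules.

```python
import re
from typing import Protocol


class LineStorage(Protocol):
    """What other modules may rely on; how lines are stored stays hidden."""

    def add_line(self, line: str) -> None: ...
    def line_count(self) -> int: ...
    def word_count(self, line_no: int) -> int: ...
    def word(self, line_no: int, word_no: int) -> str: ...


class ListOfWords:
    """Design decision A: keep each line pre-split into a list of words."""

    def __init__(self) -> None:
        self._lines: list[list[str]] = []

    def add_line(self, line: str) -> None:
        self._lines.append(line.split())

    def line_count(self) -> int:
        return len(self._lines)

    def word_count(self, line_no: int) -> int:
        return len(self._lines[line_no])

    def word(self, line_no: int, word_no: int) -> str:
        return self._lines[line_no][word_no]


class PackedText:
    """Design decision B: one character buffer plus (start, end) offsets per word."""

    def __init__(self) -> None:
        self._text = ""
        self._spans: list[list[tuple[int, int]]] = []

    def add_line(self, line: str) -> None:
        base = len(self._text)
        self._text += line + "\n"
        self._spans.append(
            [(base + m.start(), base + m.end()) for m in re.finditer(r"\S+", line)]
        )

    def line_count(self) -> int:
        return len(self._spans)

    def word_count(self, line_no: int) -> int:
        return len(self._spans[line_no])

    def word(self, line_no: int, word_no: int) -> str:
        start, end = self._spans[line_no][word_no]
        return self._text[start:end]


def circular_shifts(store: LineStorage) -> list[str]:
    """KWIC-style client: sorted circular shifts of every line, via the interface only."""
    shifts = []
    for i in range(store.line_count()):
        words = [store.word(i, j) for j in range(store.word_count(i))]
        for k in range(len(words)):
            shifts.append(" ".join(words[k:] + words[:k]))
    return sorted(shifts)


for storage in (ListOfWords(), PackedText()):
    storage.add_line("modules hide decisions")
    print(circular_shifts(storage))
# ['decisions modules hide', 'hide decisions modules', 'modules hide decisions'] (twice)
```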

And here's my question: Could that be the reason why software development using workflow engines (based on BPMN, for example) never really took off?

My personal experience is that the activities created in such workflows are hardly ever reused, but there often are big data structures passed around all the involved activities, even if most of the activities use only one or two of them.

My question exaggerated: Could we get rid of all those clumsy workflow engines by giving managers Parnas' paper to read?

[0]: D. L. Parnas, "On the Criteria to Be Used in Decomposing Systems into Modules", Communications of the ACM, 1972.

-

Sydochen has posted a rant saying he isn't really sure why people hate Java, and I decided to publicly post my explanation of this phenomenon, from my point of view.

So there is this quite large domain, on which one or two academic fields are built, such as business informatics and applied systems engineering, which I find extremely interesting and fun, and it is called, ironically, SAD (systems analysis and design). And then there are videos on YouTube by programmers who just can't settle the fuck down. The videos I'm talking about are rants about OOP in general, which, as we all know, is a huge part of studies in the aforementioned domain. What are these people even talking about?

Obviously, there is no sense in writing software in a linear pattern. Since Bikelsoft conveniently supplied consumers with GUI-based software, whose core concept is EDP (event-driven programming, or at least OS event queueing), a completely functional, linear approach in such an environment does not make much sense in terms of maintainability. Uhm, raise your hand if you ever tried to build a complex GUI system linearly in a single function call with GTK, which does allow you to disregard any of SAD's separation-of-responsibility patterns, such as the long-loved MVC...

Additionally, OOP is mandatory in business because it does allow us to mount abstraction levels and encapsulate actual dataflow behind them, which, of course, lowers the costs of the development.

What happy programmers are usually talking about is the complexity of doing OOP right: an overflow of pure pass-through composition classes (that do nothing but forward data from lower to upper abstraction levels and vice versa), and responsibility chain breaks (when a class from a lower level directly!! notifies a class of a higher level about something, ignoring the fact that there is a chain of other classes between them). And that's it. These guys also vouch for functional programming, which is a completely different argument, and of course there is no reason not to use it in the algorithmic, implementation part of the project, but yeah...

So where does Java kick in you think?

Well, guess what language popularized programming in general and OOP in particular. Java is doing a lot of things in a modern way. Of course, if it's 1995 outside *lenny face*. Yeah, fuck AOT, fuck memory management responsibility, all to the maximum towards solving the real applicative tasks.

Have you ever tried to learn to apply TextWatchers in Android with Java? Then you know about inline method overriding and inline anonymous class implementations. This is not right. It reduces readability and reusability.

Have you ever used Volley on Android? Newbies to Android programming surely should have. Quite verbose boilerplate in google docs, huh?

Have you seen intents? The Android API is, to say the least, messy, with all the support libs and Context class descendants. Remember how many times the language has helped you orient yourself in all of this hierarchy, when overriding a method requires you to use 2 lines instead of 1. Too verbose, too hesitant, distracting: that's what the language and the API are. Fucking toString() is hilarious. Reference comparison is unintuitive. Obviously poor practices are not banned. Ancient tools. Import hell. Slow evolution.

C# has ripped Java off like an utter cunt, yet it's a piece of cake to maintain solid patterns and structure in it and keep your code clean and readable. C# 6 was already okay, featuring nullable fields and safe optional dereferencing, while we finally got lambda expressions in Java 8, in 20-fucking-14.

Java did well back then, but when we joke about dumb Indian developers, they are coding it in Java. So yeah.

To sum up, it's easy to make code unreadable with Java, and Java is a tool with which developers usually disregard the patterns of SAD.

-

Yo, DevRat! Python is basically the rockstar of programming languages. Here's why it's so dope:

1. **Readability Rules**: Python's code is like super neat handwriting; you don't need a decoder ring. Forget those curly braces and semicolons – Python uses indents to keep things tidy.

2. **Zen Vibes**: Python has its own philosophy called "The Zen of Python." It's like Python's personal horoscope, telling you to keep it simple and readable. Can't argue with cosmic coding wisdom, right?

3. **Tools Galore**: Python's got this massive toolbox with tools for everything – web scraping, AI, web development, you name it. It's like a programming Swiss Army knife.

4. **Party with the Community**: Python peeps are like the coolest party crew. Stuck on a problem? Hit up Stack Overflow. Wanna hang out? GitHub's where it's at. PyCon? It's like the Woodstock of coding, man!

5. **All-in-One Language**: Python isn't a one-trick pony. You can code websites, automate stuff, do data science, make games, and even boss around robots. Talk about versatility!

6. **Learn It in Your Sleep**: Python's like that subject in school that's just a breeze. It's beginner-friendly, but it also scales up for the big stuff.

So, DevRat, Python's the way to go – it's like the coolest buddy in the coding world. Time to rock and code! 🚀🐍💻

-

https://shiftmag.dev/unhappy-develo...

Semi-interesting read covering some of the data points gleaned from the last SO survey. I hadn't seen this.