Search - "decimal"

-

When you say "almost 2" instead of 1.7 because you're not sure your customers understand the decimal system.

-

"42", the answer to life, the universe and everything, is the decimal representation for "*" in the ascii table. Got it? How cool is that?20

-

The GET /users endpoint will return a page of the first 13 users by default.

To request other pages, add a |-separated querystring with the limit and offset, as roman numerals enclosed in double quotation marks. Response status is always equal to 200, plus the total count of the resource, or zero when there's an error.

You can include an array of friends of the user in the result by setting the request header "friends" to the base64-encoded value of the single white pixel png.

Other metadata is not included by default in responses, but can be requested by appending ?meta.json to any endpoint, which will return an xml response.

If you want to update the user's profile picture, you can request an OAuth token per fax machine, followed by a pigeon POST capsule containing a filename and a rolled up Polaroid picture. The status code attached to the return postal dove will be the decimal ASCII code for a happy smiley on success, and a sad smiley if any field fails form validation.

-- Every single external REST API I've ever worked with.

-

Wonder why my colleague threw this book on my desk?

I accidentally changed all product prices from decimal(10,4) to decimal(10,0) on two live web shops with several thousands of products.

And it's Friday, 4 pm.

Have a nice weekend!
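Roughly what that column change does to the stored prices, sketched with Python's `decimal` module. The sample prices are made up, and the sketch assumes the database rounds half up when the scale drops from 4 to 0; engines differ (some truncate), so check yours.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical prices as stored in a decimal(10,4) column
prices = [Decimal("19.9900"), Decimal("4.4950"), Decimal("0.4999")]

# Rescaling to decimal(10,0) keeps only whole units
rescaled = [p.quantize(Decimal("1"), rounding=ROUND_HALF_UP) for p in prices]

for before, after in zip(prices, rescaled):
    print(before, "->", after)
```

Whatever the rounding rule, the fractional digits are gone once the column is altered, which is why a backup is the only way back.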

-

Halfway through a timed midterm (no computers or calculators):

Convert this 5-digit decimal number to binary

Convert this 10-digit decimal number to binary

Convert this 20-digit decimal number to binary

Convert this even longer decimal number to binary

-

In a programming contest I forgot how to round numbers in Java, and I needed to round to 3 decimal places. So I multiplied the number by 1000, added 0.5 and converted it to an integer so the decimal part would be gone. Finally, I printed the number as a string except for the last 3 digits, put a period, and printed the other 3 digits.
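For comparison, here is the multiply-by-1000 trick next to a library rounding call, sketched in Python rather than Java. `hack_round3` and `proper_round3` are illustrative names. In Java the usual tools would be `BigDecimal.setScale(3, RoundingMode.HALF_UP)` or `String.format("%.3f", x)`.

```python
from decimal import Decimal, ROUND_HALF_UP

def hack_round3(x: float) -> str:
    """The contest trick: scale by 1000, add 0.5, truncate, then re-insert the point.
    Only behaves for non-negative x."""
    n = int(x * 1000 + 0.5)
    s = str(n).rjust(4, "0")        # pad so there is at least one digit before the point
    return s[:-3] + "." + s[-3:]

def proper_round3(x: float) -> str:
    """Round half up to 3 decimal places with the decimal module."""
    return str(Decimal(str(x)).quantize(Decimal("0.001"), rounding=ROUND_HALF_UP))

print(hack_round3(2.71828), proper_round3(2.71828))
print(hack_round3(0.05), proper_round3(0.05))
```

The trick works for small positive values, but it silently breaks on negatives, which the library call handles.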

I must say I'm not proud of that.

-

🍿🍿 Pull up a chair and get comfy. This was a few years ago and anger has filled in some details, so bear with me...

One day, during one of my rare afternoons off work, I was in the library to work on a group project for school. This was maybe a month before it was due, so we were on track for decent progress and one less stressor during finals. It was about 80° F out, with the perfect breeze for the beach, but school comes first.

I'm team lead (which is terrifying, but less important) and my bro C shows up early to be ready to go on time because he's professional. I'M SO BAD I FORGOT DOUCHEBAG'S NAME, so he's A (for asshole). A shows up AN HOUR AND 15 MINUTES LATE. But it's not the end of the world: C and I worked from our database schema (which A sent us and we approved), so we could iron out kinks as we went.

A gets there... Fucking finally.

Fucker didn't have the database built (he had 2 months to do it, and we all agreed on the schema a month prior. We're trying to be the adults our ages claim we are).

*breathe in, count to 10* not a problem, A, just go ahead and start it now so we can at least check what we have.

Ok, my queen, I'll have it done in 10 minutes...

🤔🤔

We needed an id (sku... Which, in 99.9999% of companies is numeric), a short name (xBox one, Macbook, don't smart tv), a description and a price (with 2 decimals). All approved by all 3 of us.

His SKUs ranged from 3 to 9 ALPHANUMERIC CHARACTERS, the names were even more generic than expected (item1, item 2, Item_3), there was no description, and he somehow thought US currency had 5 decimal places!!! (it's more accurate...)

There was an epic, royal, and expensive fight scene in the library (may have been during the Lenten season I decided to give up caffeine AND fast for 40 days to prove a point to an ass wipe of a history teacher, don't recall). I made him cry, he failed the class because C and I wound up fixing everything he touched (graded by commits, because it was also an intro to git, but also, a classmate saw it all), and I had to buy multiple people coffee for yelling in the library.

A tried making our buttons work (I was fed up and done thinking for the day, so I moved to documentation), but he fucked those up. I then made them worse by nesting buttons, but I deleted all his shit, started over, and fixed it.

I then cried, but C and I survived and still have each other's backs.

-

POSTMORTEM

"4096 bit ~ 96 hours is what he said.

IDK why, but when he took the challenge, he posted that it'd take 36 hours"

As @cbsa wrote, and nitwhiz wrote "but the statement was that op's i3 did it in 11 hours. So there must be a result already, which can be verified?"

I added time because I was in the middle of a port involving ArbFloat so I could get arbitrary precision. I had a crude Desmos graph doing projections on what I'd already factored in order to get an idea of how long it'd take to do larger bit lengths.

@p100sch speculated about the walked-back time and about my overstating the rig's capabilities. In reality, I had spent a lot of time trying to get it 'just-so'.

Worse, because I had to resort to "Decimal" in python (and am currently experimenting with the same in Julia), both of which are immutable types, the GC was taking > 25% of the cpu time.

Performancewise, the numbers I cited in the actual thread, as of this time:

largest product factored was 32bit, 1855526741 * 2163967087, took 1116.111s in python.

Julia build used a slightly different method, & managed to factor a 27 bit number, 103147223 * 88789957 in 20.9s, but this wasn't typical.
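The bit counts above appear to describe the factors rather than the products; Python's `int.bit_length()` makes that easy to check for the two quoted pairs.

```python
# Factor pairs quoted in the post
pairs = [(1855526741, 2163967087), (103147223, 88789957)]

for a, b in pairs:
    # bit_length() is the number of binary digits needed for a positive int
    print(a.bit_length(), b.bit_length(), (a * b).bit_length())
```

For the first pair this gives 31- and 32-bit factors and a 62-bit product; for the second, two 27-bit factors and a 54-bit product.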

What surprised me was the variability. One bit length could take 100s or a couple thousand seconds even, and a product that was 1-2 bits longer could return a result in under a minute, sometimes in seconds.

This started cropping up, ironically, right after I posted the thread. What's a man to do?

So I started trying a bunch of things, some of which worked. Shameless as I am, I accepted the challenge. Things weren't perfect, but it was going well enough. At that point I hadn't slept in ~30 hours, so when I thought I had it, I let it run and went to bed. 5 AM comes, I check the program. Still calculating, and way overshot. Fuuuuuuccc...

So here we are now, and it's safe to say the world's not gonna burn if I explain it, seeing as it doesn't work, or at least only works some of the time.

Other people, much smarter than me, mentioned it may be a means of finding more secure pairs. Maybe so; I'm not familiar enough to know.

For everyone that followed and commented, those who contributed, even the doubters who kept a sanity check on this, without whom this would have been an even bigger embarrassment, and the people with their pins and tactical dots: thanks.

So here it is.

A few assumptions first.

Assuming p = the product,

a = some prime,

b = another prime,

and r = a/b (where a is smaller than b)

w = 1/sqrt(p)

(I also experimented with w = 1/sqrt(p)*2, but I kept overshooting by a very small margin)

x = a/p

y = b/p

1. For every two numbers, there is a ratio (r) that you can search for among the decimals, starting at 1.0 and counting down. You can use this to find the original factors, e.g. p*r=n, p/n=m (assuming the product has only two factors), instead of having to do a sieve.

2. You don't need the first number you find to be the precise value of a factor (we're doing floating-point math): a large subset of decimal values for a or b will naturally 'fall' into the value of a (or b) plus some fractional part, which is lost. Some of you will object, "But if that's wrong, your result will be wrong!" but hear me out.

3. You round the first factor 'found', and from there you take the result and do p/a to get b. If 'a' is actually a factor of p, then mod(b, 1) == 0, and then naturally a*b SHOULD equal p.

If not, you throw out both numbers, rinse and repeat.
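Steps 2 and 3 can be sketched with Python's `decimal` module. `check_candidate` is a hypothetical helper, and it uses the ratio of a to p (x = a/p from the definitions above), which is what the post later settles on, rather than a/b.

```python
from decimal import Decimal, getcontext

getcontext().prec = 60  # plenty of digits for small demo products

def check_candidate(p, r):
    """Round r*p to a candidate factor a, derive b = p/a, and keep the pair
    only if b is whole and a*b gives back p (steps 2 and 3)."""
    p = Decimal(p)
    a = round(Decimal(r) * p)      # step 2: the fractional part is thrown away
    if a == 0:
        return None
    b = p / a
    if b % 1 == 0 and a * b == p:  # step 3: mod(b, 1) == 0 and a*b == p
        return a, int(b)
    return None                    # otherwise throw out both numbers

print(check_candidate(697, "0.0245"))  # 0.0245 * 697 = 17.0765 rounds to 17
print(check_candidate(697, "0.03"))    # 20.91 rounds to 21, which does not divide 697
```

The first call shows the "fall into" effect from step 2: an inexact ratio still lands on the factor 17 (and so 41).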

Now I knew this could be faster. I realized that the finer the representation, the less important the fractional digits further right in the number were; it was just a matter of how much precision I could AFFORD to lose and still get an accurate result for r*p=a.

Fast forward through a lot of experimentation: I was hitting a lot of worst-case time complexities, where the most significant digits had a bunch of zeroes in front of them, so starting at 1.0 was a no-go in many situations. I started looking and realized I didn't NEED the ratio of a/b, I just needed the ratio of a to p.

Intuitively it made sense, but starting at 1.0 was blowing up the calculation time, and this made it so much worse.

I realized I could start at r=1/sqrt(p) instead, and that, because of certain properties, (1) the fractional result of this, r, would ALWAYS be close to one of the factors' fractional values of n/p, and (2) it looked like r=1/sqrt(p) was guaranteed to ALWAYS be less than at least one of the primes, putting a bound on the worst case.

The final result in executable pseudo code (Python, lol) looks something like the above variables plus:

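from decimal import Decimal, getcontext

getcontext().prec = 50       # arbitrary precision, as with the "Decimal" type mentioned above

p = Decimal(697)             # example product (17 * 41); substitute your own
w = 1 / p.sqrt()             # start at r = 1/sqrt(p), so w*p starts at sqrt(p)
i = 1 / p                    # assumed step size (not given in the post): moves w*p up by ~1 per pass

while w <= 1:                # w*p never needs to exceed p, so stop there
    x = round(w * p)         # candidate factor
    if (p / x) % 1 == 0:     # p/x has no fractional part
        y = p / x
        if x * y == p:
            print("factors found!")
            print(x)
            print(y)
            break
    w = w + i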

Still working on it, but if anyone sees obvious problems I'd LOVE to hear about it.

-

Me: Hans, Get ze Flammenwerfer!!!

Hans: Why?

Me: They use long instead of BigDecimal!

Hans: How do they handle the floating values then?

Me: Before sending the request they multiply by 100 and on receiving they divide by 100.

-

Just got a lecture from my manager.

Today he sent me an email with a request to change the validation on one field from decimal(5,3) to an int, which will be a 5-digit number. OK, I did that: I changed it in the UI, changed the validation, changed the mappings, changed the DTOs, created migration files, and changed it in the database. After I did all of that, I replied to his email and said that I'd changed the validation and adjusted it in the database.
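For context on why the database had to change: a SQL decimal(precision, scale) column stores at most precision - scale digits before the point. A rough Python check, with hypothetical helper names, ignoring how a given database rounds surplus fractional digits:

```python
from decimal import Decimal

def max_decimal(precision: int, scale: int) -> Decimal:
    """Largest magnitude a DECIMAL(precision, scale) column can hold."""
    return Decimal(10) ** (precision - scale) - Decimal(10) ** -scale

def fits(value: str, precision: int, scale: int) -> bool:
    # Compares magnitude only; rounding of surplus fractional digits is ignored
    return abs(Decimal(value)) <= max_decimal(precision, scale)

print(max_decimal(5, 3))        # 99.999
print(fits("12345", 5, 3))      # a 5-digit number does not fit decimal(5,3)
print(fits("12345", 5, 0))
```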

After my email, here comes a rage mail from the manager with every fuckin important person in CC, I kid you not. The manager is asking why the fuck I changed the database when I could have just used a different validation for that field in the UI.

I almost flipped the fuckin table. What good does validation do if you can't save the form? A decimal(5,3) column only holds values up to 99.999, so a 5-digit number would never fit. And the form has like 150 fields. If I had changed only the validation, everything would fuckin break.

Sometimes I think it's better not to think.

FML

-

math be like:

"Addition (often signified by the plus symbol "+") is one of the four basic operations of arithmetic; the others are subtraction, multiplication and division. The addition of two whole numbers is the total amount of those values combined. For example, in the adjacent picture, there is a combination of three apples and two apples together, making a total of five apples. This observation is equivalent to the mathematical expression "3 + 2 = 5" i.e., "3 add 2 is equal to 5".

Besides counting items, addition can also be defined on other types of numbers, such as integers, real numbers and complex numbers. This is part of arithmetic, a branch of mathematics. In algebra, another area of mathematics, addition can be performed on abstract objects such as vectors and matrices.

Addition has several important properties. It is commutative, meaning that order does not matter, and it is associative, meaning that when one adds more than two numbers, the order in which addition is performed does not matter (see Summation). Repeated addition of 1 is the same as counting; addition of 0 does not change a number. Addition also obeys predictable rules concerning related operations such as subtraction and multiplication.

Performing addition is one of the simplest numerical tasks. Addition of very small numbers is accessible to toddlers; the most basic task, 1 + 1, can be performed by infants as young as five months and even some members of other animal species. In primary education, students are taught to add numbers in the decimal system, starting with single digits and progressively tackling more difficult problems. Mechanical aids range from the ancient abacus to the modern computer, where research on the most efficient implementations of addition continues to this day."

And you think like... easy, but then you turn the page:

-

React is a nice JS framework, but I constantly find myself with 50 different files open because every component and its related CSS file are spread across the world. I get why our frontend guy built it with so many modular components, but it makes me feel like I'm back in the day when libraries were a thing and you had to flip through those giant Dewey Decimal System drawers of cards to pull out the one little card you wanted, to then hunt for the one little book you wanted, to find that one little line.

-

In my previous rant about IPv6 (https://devrant.com/rants/2184688 if you're interested) I got a lot of very valuable insights in the comments and I figured that I might as well summarize what I've learned from them.

So, there are 128 bits of IP space to go around in IPv6, where 64 bits are assigned to the internet and 64 bits to the private network of end users. Private as in behind a router of some kind, equivalent to the bogon address spaces in IPv4. Which is nice: it ensures that everyone has the same address space to play with... but in my opinion it should have been assigned differently. The internet is orders of magnitude larger than private networks. Most SOHO networks only have a handful of devices in them that need addressing. The internet, on the other hand, has, well, billions of devices in it. As mentioned before, I doubt that this total number will be more than a multiple of the total world population. Not many people or companies use more than a few public IP addresses (again, what's inside the SOHO networks is separate from that). Consider this the equivalent of the number of public IPs you currently control. In my case that would be 4: one for my home network and 3 for the internet-facing servers I own.

There are various ways in which overall network complexity is reduced in IPv6. This includes IPsec, which is now part of the protocol suite and thus no longer an extension. Standardizing this is a good thing, and honestly I'm surprised that this wasn't the case before.

Many people seem to oppose the way IPv6 addresses are presented; hexadecimal is not something many people use every day. Personally, I've grown quite fond of the decimal representation of IPv4. Then again, there is a binary conversion involved in classless IPv4, and hexadecimal makes this conversion easier.
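To illustrate the point about conversions, Python's standard `ipaddress` module shows the same kind of prefix in both notations. The networks are arbitrary examples (2001:db8::/32 is the documentation range). A /20 in IPv4 splits the third octet (240 = 11110000 in binary), while each hex digit in IPv6 is exactly 4 bits, so prefixes on nibble boundaries can be read off directly.

```python
import ipaddress

v4 = ipaddress.ip_network("192.168.16.0/20")
print(v4.netmask)                         # 255.255.240.0 in dotted decimal
print(format(int(v4.netmask), "032b"))    # the same mask in binary

v6 = ipaddress.ip_network("2001:db8:abcd:1000::/52")
print(v6.netmask)                         # ffff:ffff:ffff:f000::, 4 bits per hex digit

lan = ipaddress.ip_network("2001:db8:abcd:12::/64")
print(lan.num_addresses == 2 ** 64)       # a single /64 subnet
```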

There seems to be opposition to memorizing IPv6 addresses, for which DNS can be used. I agree, I use this for my IPv4 network already. Makes life easier when you can just address devices by a domain name. For any developers out there with no experience with administration that think that this is bullshit - imagine having to remember the IP address of Facebook, Google, Stack Overflow and every other website you visit. Add to the list however many devices you want to be present in the imaginary network. For me right now that's between 20 and 30 hosts, and gradually increasing. Scalability can be a bitch.

Any other things... Oh yeah. The average number of devices in a SOHO network is not quite 1 anymore: there are currently about half a dozen devices in a home network that need to be addressed. This number increases as more devices become smart devices. That said, of course, it's nowhere close to needing 64 bits and will likely never need it. Again, for any devs that think that this is bullshit - prove me wrong. I happen to know of one particular instance where they have centralized all their resources into a single PC. This seems to be common with developers, and I think it's normal. But it also reduces the chances of seeing what networks with many devices in them are like. Again, scalability can be a bitch.

Thanks a lot, everyone, for your comments on the matter; I've learned a lot and really appreciate it. Do check out the previous rant and particularly the comments on it if you're interested. See ya!

-

Am I the only one that gets freaked out by this decimal point? Either stick with the comma or with the dot, but don't mix them!


-

I remember those times when I was a developer for an R&D company: I used to have calculations in my lines of C code determining the bits and the conversions to binary, hexadecimal, decimal...😂


-

What grinds my gears:

IEEE-754

This, to me, seems retarded.

Take the value 0.931 for example.

Its represented in binary as

00111111011011100101011000000100

See those last three bits? Well, they cause it to come out in decimal like so:

0.93099999~

Which, because bankers' rounding is now standard, actually works out to 0.930, because with bankers' rounding we round to the nearest even number? Makes sense? No. Anyone asked for it? No (well, maybe the banks). Was it even necessary? Fuck no. But did we get it anyway?

Yes.

And worse, that's not even the most accurate way to represent our value of 0.931, owing to how fucked up rounding now is, because everything has to be pure shit these days.

A better representation would be

00111101101111101010101100110111 <- good

00111111011011100101011000000100 <- shit

The new representation works out to

0.093100004

or 0.093100003898143768310546875 when represented internally.

What's this mean? Because of rounding, you don't lose accuracy anymore.
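Decoding the two bit patterns from the post is a useful sanity check. This Python sketch uses the standard `struct` module to read each 32-bit string as an IEEE-754 single; `bits_to_float32` is an illustrative helper. The first pattern turns out to be the nearest single to 0.931 (about 0.9309999943), and formatted to three decimals it prints as 0.931, not 0.930. The second pattern decodes to about 0.0931, a number ten times smaller, so the two are not competing encodings of the same value.

```python
import struct

def bits_to_float32(bits: str) -> float:
    """Interpret a 32-character bit string as an IEEE-754 single-precision value."""
    return struct.unpack(">f", int(bits, 2).to_bytes(4, "big"))[0]

first = bits_to_float32("00111111011011100101011000000100")   # the "shit" pattern
second = bits_to_float32("00111101101111101010101100110111")  # the "good" pattern

print(repr(first), f"{first:.3f}")
print(repr(second))

# Packing 0.931 as a single gives back the first pattern
print(struct.pack(">f", 0.931) == int("00111111011011100101011000000100", 2).to_bytes(4, "big"))
```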

Am I mistaken, or is IEEE-754 shit?

-

Why would you ask me the difference between parseInt and parseFloat, when you were the first person who was unable to fix the decimals to two places using parseFloat? 😝

-

Every language ever:

"You can't compare objects of type A and B"

Swift, on the other hand:

"main.swift:365:34: note: overloads for '==' exist with these partially matching parameter lists: (Any.Type?, Any.Type?), ((), ()), (Bool, B ool), (Character, Character), (Character.UnicodeScalarView.Index, Character.UnicodeScalarView.Index), (CodingUserInfoKey, CodingUserInfoKey ), (OpaquePointer, OpaquePointer), (AnyHashable, AnyHashable), (UInt8, UInt8), (Int8, Int8), (UInt16, UInt16), (Int16, Int16), (UInt32, UIn t32), (Int32, Int32), (UInt64, UInt64), (Int64, Int64), (UInt, UInt), (Int, Int), (AnyKeyPath, AnyKeyPath), (Unicode.Scalar, Unicode.Scalar ), (ObjectIdentifier, ObjectIdentifier), (String, String), (String.Index, String.Index), (UnsafeMutableRawPointer, UnsafeMutableRawPointer) , (UnsafeRawPointer, UnsafeRawPointer), (UnicodeDecodingResult, UnicodeDecodingResult), (_SwiftNSOperatingSystemVersion, _SwiftNSOperatingS ystemVersion), (AnyIndex, AnyIndex), (AffineTransform, AffineTransform), (Calendar, Calendar), (CharacterSet, CharacterSet), (Data, Data), (Date, Date), (DateComponents, DateComponents), (DateInterval, DateInterval), (Decimal, Decimal), (IndexPath, IndexPath), (IndexSet.Index, IndexSet.Index), (IndexSet.RangeView, IndexSet.RangeView), (IndexSet, IndexSet), (Locale, Locale), (Notification, Notification), (NSRange,

NSRange), (String.Encoding, String.Encoding), (PersonNameComponents, PersonNameComponents), (TimeZone, TimeZone), (URL, URL), (URLComponent s, URLComponents), (URLQueryItem, URLQueryItem), (URLRequest, URLRequest), (UUID, UUID), (DarwinBoolean, DarwinBoolean), (DispatchQoS, Disp atchQoS), (DispatchTime, DispatchTime), (DispatchWallTime, DispatchWallTime), (DispatchTimeInterval, DispatchTimeInterval), (Selector, Sele ctor), (NSObject, NSObject), (CGAffineTransform, CGAffineTransform), (CGPoint, CGPoint), (CGSize, CGSize), (CGVector, CGVector), (CGRect, C GRect), ((A, B), (A, B)), ((A, B, C), (A, B, C)), ((A, B, C, D), (A, B, C, D)), ((A, B, C, D, E), (A, B, C, D, E)), ((A, B, C, D, E, F), (A , B, C, D, E, F)), (ContiguousArray<Element>, ContiguousArray<Element>), (ArraySlice<Element>, ArraySlice<Element>), (Array<Element>, Array <Element>), (AutoreleasingUnsafeMutablePointer<Pointee>, AutoreleasingUnsafeMutablePointer<Pointee>), (ClosedRangeIndex<Bound>, ClosedRange Index<Bound>), (LazyDropWhileIndex<Base>, LazyDropWhileIndex<Base>), (EmptyCollection<Element>, EmptyCollection<Element>), (FlattenCollecti onIndex<BaseElements>, FlattenCollectionIndex<BaseElements>), (FlattenBidirectionalCollectionIndex<BaseElements>, FlattenBidirectionalColle ctionIndex<BaseElements>), (Set<Element>, Set<Element>), (Dictionary<Key, Value>.Keys, Dictionary<Key, Value>.Keys), ([Key : Value], [Key : Value]), (Set<Element>.Index, Set<Element>.Index), (Dictionary<Key, Value>.Index, Dictionary<Key, Value>.Index), (ManagedBufferPointer<Hea der, Element>, ManagedBufferPointer<Header, Element>), (Wrapped?, Wrapped?), (Wrapped?, _OptionalNilComparisonType), (_OptionalNilCompariso nType, Wrapped?), (LazyPrefixWhileIndex<Base>, LazyPrefixWhileIndex<Base>), (Range<Bound>, Range<Bound>), (CountableRange<Bound>, Countable Range<Bound>), (ClosedRange<Bound>, ClosedRange<Bound>), (CountableClosedRange<Bound>, CountableClosedRange<Bound>), (ReversedIndex<Base>, ReversedIndex<Base>), 
(_UIntBuffer<Storage, Element>.Index, _UIntBuffer<Storage, Element>.Index), (UnsafeMutablePointer<Pointee>, UnsafeMut ablePointer<Pointee>), (UnsafePointer<Pointee>, UnsafePointer<Pointee>), (_ValidUTF8Buffer<Storage>.Index, _ValidUTF8Buffer<Storage>.Index) , (Self, Other), (Self, R), (Measurement<LeftHandSideType>, Measurement<RightHandSideType>)"17 -

Full stack developer.

I know what it's supposed to mean, but I feel like it discredits the devs who perfect their area (frontend, backend, DB, infrastructure). To me, it's like calling myself a chef because I can cook dinner.

The depth, analysis and customization of the domain to shape an API for a website is never appreciated. Neither are the finicky tweaks on the frontend that add those final touches. Then comes a brat who says they are full stack and can do all those things. Bullshit. 99.9% of them have never done anything but move data through layers and present it.

Throw these wannabes an enterprise system with monoliths and microservices willy-nilly, orchestrate that shit with a vertical-slice nginx SSI with disaster recovery, horizontal scaling, domain modeling, version management, a busy little bus and events flowing to all decimal points of 2pi. Then, if you fully master everything going on there, I believe you are full stack.

Otherwise you have just scratched the surface of the complexities software development is about. Everyone who can read a tutorial can scrape together an "in-out" website. But if your DB looks the same as your API and your highest complexity is the alignment of an infobox, I will laugh out loud at your full stack.

And if you told me in an interview that you are full stack, you'd better have 10+ years of experience and a good list of failed and successful projects before I'd let you stay for the next two minutes.

-

I didn't leave, I just got busy working 60 hour weeks in between studying.

I found a new method called matrix decomposition (not the known method of the same name).

The premise is that you break a semiprime down into its component numbers and magnitudes; let's say 697, for example. It becomes 600, 90, and 7.

Then you break each of those down into their prime factorizations (with exponents).

So you get something like

>>> decon(697)

offset: 3, exp: [[Decimal('2'), Decimal('3')], [Decimal('3'), Decimal('1')], [Decimal('5'), Decimal('2')]]

offset: 2, exp: [[Decimal('2'), Decimal('1')], [Decimal('3'), Decimal('2')], [Decimal('5'), Decimal('1')]]

offset: 1, exp: [[Decimal('7'), Decimal('1')]]
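The post doesn't show `decon` itself, so here is a hypothetical Python sketch that reproduces the output above: split the number into digit-times-power-of-ten parts, then factor each part by trial division. It returns plain ints where the original prints `Decimal` values.

```python
def prime_factorization(n: int) -> list[list[int]]:
    """Trial division; returns [[prime, exponent], ...] in ascending order."""
    factors = []
    p = 2
    while p * p <= n:
        if n % p == 0:
            exp = 0
            while n % p == 0:
                n //= p
                exp += 1
            factors.append([p, exp])
        p += 1
    if n > 1:                      # whatever is left is itself prime
        factors.append([n, 1])
    return factors

def decon(n: int) -> list[tuple[int, list[list[int]]]]:
    """Split n into digit * 10**k parts (offset = k + 1) and factor each part."""
    digits = str(n)
    parts = []
    for i, ch in enumerate(digits):
        if ch == "0":
            continue               # a zero digit contributes nothing
        offset = len(digits) - i
        parts.append((offset, prime_factorization(int(ch) * 10 ** (offset - 1))))
    return parts

for offset, exp in decon(697):
    print(f"offset: {offset}, exp: {exp}")
```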

And it turns out that in larger numbers there are distinct patterns that act as maps at each offset (or magnitude) of the product, mapping to the respective magnitudes and digits of the factors.

For example I can pretty reliably predict from a product, where the '8's are in its factors.

Apparently there's a whole host of rules like this.

So what I've done is go and start writing an interpreter with some pseudo-assembly I defined. This has been ongoing for maybe a month, and I've had very little time to work on it around my job (which I'm about to be late for if I don't start getting ready, lol).

Anyway, the long and the short of it: the plan is to generate a large data set of primes and their products, then write a rules engine to generate sets of my custom assembly language, and then fitness-test and validate them, winnowing out what doesn't work.

The end product should be a function that lets me map from the digits of a product to all the digits of its factors.

It technically already works: I've printed out a ton of products and eyeballed patterns to derive custom rules; it's just not the complete set yet. And instead of spending months or years doing that, I'm just gonna finish the system to derive them automatically for me. The rules I've found so far have tested out successfully every time, and whether or not the engine finds those will be the test case for whether the broader system is viable, but everything looks legit.

I wouldn't have pursued this, except that when I realized the production of semiprimes *must* be non-Eulerian (long story), it occurred to me that there must be rich internal representations mapping products to factors that we were simply missing.

I'll go into more details in a later post, maybe not today, because I'm working till close tonight (won't be back till 3 am), but after 4 1/2 years the work is bearing fruit.

Also, it's good to see you all again. I fucking missed you guys.

-

A new mathematical constant was discovered recently: Bruce's constant

I took some code from the paper and adapted it in python.

from math import e, log

def bruce(n):
    # 1.333333333333333 approximates 4/3; n must be positive and not 1
    J = log(n, 1.333333333333333) / log(n, 2)
    K = log(n, 1.333333333333333) / log(n, 3)
    return ((J + K) - e) + 1

This gives e every time for ((J+K)-bruce)+1, regardless of the value of n.

bruce can always be approximated with the decimal 4.5, telling you how close n can be used to approximate e (usually to two digits).

Bruce's constant is equal to 4.5099806905005
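Why the value never depends on n: both ratios are changes of logarithm base, so ln n cancels (for any positive n other than 1, where the code divides by zero). Treating 1.333333333333333 as 4/3:

```latex
J = \frac{\log_{4/3} n}{\log_2 n} = \frac{\ln n / \ln(4/3)}{\ln n / \ln 2} = \frac{\ln 2}{\ln(4/3)},
\qquad
K = \frac{\ln 3}{\ln(4/3)}

\text{bruce} = (J + K) - e + 1 = \frac{\ln 6}{\ln(4/3)} - e + 1 \approx 6.22826 - 2.71828 + 1 \approx 4.50998
```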

It is named after that famous mathematician, Bruce Lee.

You'll start with four limbs and end up with two in a wheelchair!

-

This.

Not the worst but almost all of us (including me) handle strings like fucking morons.

If the input doesn't need to be an exact match, we use a strict comparison operator; when the input should match exactly, we use a loose comparison operator.

I'll format the crap out of a number, convert it, validate decimal places, check for float rounding hell, give it an absolute value and return it correctly formatted for the user's locale, but half the time I forget to trim their input. 🤦‍♂️
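A minimal Python sketch of the habit being described: trim first, then decide deliberately whether the comparison is exact or loose. The function names are hypothetical, and case-insensitive matching via `str.casefold()` stands in for "loose" here.

```python
def exact_match(user_input: str, expected: str) -> bool:
    # Trim surrounding whitespace, then require an exact match
    return user_input.strip() == expected

def loose_match(user_input: str, expected: str) -> bool:
    # Trim and ignore case for inputs that don't need to match exactly
    return user_input.strip().casefold() == expected.strip().casefold()

print(exact_match("  ABC123\n", "ABC123"))   # True once trimmed
print(exact_match("abc123", "ABC123"))       # False: case matters here
print(loose_match(" Yes ", "yes"))           # True
```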

Like I said - just a tad fucking moronic, isn't it?

-

The human in me knows casting a datetime to a decimal(20, 12) is fairly future-proof.

The dev in me is worried someone working at the same company in 273669 years will get mad at him.

-

Reading another rant about scrolling and decimal values I felt an urge to write about a bad practice I often see.

Load on demand when scrolling has been popular for quite some years, but when implementing it, take some time to consider the page's overall layout.

I have several times encountered sites with this “helpful” feature that at the same time follows another staple feature of pages, especially news sites, of putting contact and address information in the footer ...

Genius right :)

I scroll down to find contact info and just as it comes in view new content gets loaded and pushes it out of view.

If you plan to use load on demand, make sure there is nothing below anyone will try to reach, no text or links or even pictures, you will frustrate the visitor ;)

The rant I was inspired by probably did not do this, but it's what got me thinking.

https://devrant.com/rants/1356907/...

-

Stop fucking with the numbers.

A number is a number, integer or float.

Just because you wanted your stupid decimal places doesn't mean you need to fuck things up and make the front-end break because now it's sending a string to the server instead of a float. For fuck's sake. How long have we been doing this?

-

The more I work with performance, the less I like generated queries (incl. ORM-driven generators).

Like this other team came to me complaining that some query takes >3minutes to execute (an OLTP qry) and the HTTP timeout is 60 seconds, so.... there's a problem.

Sure, a simple EXPLAIN ANALYZE suggests that some UIDPK index is queried repeatedly, ~1M times (the query plan was generated for 300k expected invocations), and each Index Scan lasts 0.15ms; ~1M × 0.15ms alone is ~150s. So there you go. Ofc I'd really like to see more decimal zeroes rather than just 0.15, but still...

Rewriting the query with a CTE cut the execution time down to a pathetic 0.04sec (40ms) w/o any loops in the plan.

I suggest that change to the team and get a big fat NO in response: they cannot make any query changes, since they don't have any control over their queries.

....

*sigh*

....

*sigh*

but down to 0.04sec from 3+ minutes....

*sigh*

alright, let's try to VACUUM ANALYZE, although I doubt this will be of any help. IDK what I'll do if that doesn't change the execution plan :/ Prolly suggest finding a DBA (which they won't, as the client has no € for a DBA).

All this because developers, the very people who should have COMPLETE control over the product's code, have no control over the SQL.

This sucks!

-

When I was in 11th grade, my school got a new setup for the school PCs. Instead of just resetting them every time they were shut down (to a state in which they contained a virus, great) and having shared files on a network drive (where everyone could delete anything), they used iServ. Apparently many schools started using it around that time, and I heard many bad things about it, not only from my school.

Since school is sh*t and I had nothing better to do in computer class (they never taught us anything new anyway), I experimented with it. My main target was the storage limit. Logins on the school PCs were made with domain accounts, which also logged you in with the iServ account; then the user folder was synchronised with the iServ server. The storage limit there was given as 200MB or something of that order. To have some dummy files, I downloaded every program from portableapps.com; that was an easy way to get a lot of data without much manual effort. Then I copied that folder, which was located on the desktop, and pasted it onto the desktop. Then I took all of that and duplicated it again. And again and again and again... I watched the amount increase: 170MB, 180, 190, 200, I got a mail saying that my storage was full, 210, 220, 230, ... It just kept filling up with absolutely zero consequences.

At some point I started using the web interface to copy the files, which had even more interesting side effects: apparently, while the server was copying huge amounts of files to itself, nobody in the entire iServ system could log in, neither on the web interface nor on the PCs. But I didn't notice that at first; I thought just my account was busy, and of course I didn't expect it to be so badly programmed that a single copy operation could lock the entire system. I was told later, but at that point the headmaster had already called in someone from the actual police, because they thought I had hacked into whatever. He basically said "don't do again pls" and left again. In the meantime, a teacher had told me to delete the files by a certain date, but he locked my account way earlier so that I couldn't even do it.

Btw, I now own a Minecraft account for which I can never change the security questions or reset the password, because the mail address doesn't exist anymore and I'm no longer in contact with the person who gave it to me. I got that account as a prize because I made the best program in a project week about Java, which really showed how much the computer classes had helped the students learn programming: of the ~20 students, only one other person actually had a program at the end of the challenge, and it was something like hello world. I had translated a TI-BASIC program for approximating fractions from decimal numbers to Java.

The big irony about sending the police to me as the 1337_h4x0r: a classmate actually tried to hack into the server. As far as I know, he even managed to make it send a mail from someone else's account. And he found a way to put a file into any account, which he briefly considered using to put a shutdown command into autostart. But of course, I must be the great hacker.

Been engaged in a silly-client-request vs. stubborn-developer war since last week. They wanted a textbox where they enter decimals (generally in the form 1.234) to automatically put the decimal point after the first digit.

"What if it's 10.xxx or 100.xxx?"

"That won't happen"

"How much time will it really save them having to press another key?"

"Why, how long will it take you to do the fix?"

Etc., with ridiculousness and rage increasing exponentially...

Common sense finally prevailed today. Just think of all those wasted milliseconds having to press the "." key.

Got an exam next Friday; 1/3 of the points are for converting decimal numbers to binary.
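
The pen-and-paper method for these questions is repeated division by 2, reading the remainders from last to first. A small Python sketch of the same procedure, which can be checked against the built-in `format(n, "b")`:

```python
def to_binary(n: int) -> str:
    """Convert a non-negative decimal integer to binary by repeated division by 2."""
    if n == 0:
        return "0"
    bits = []
    while n > 0:
        bits.append(str(n % 2))  # the remainder is the next bit, least significant first
        n //= 2
    return "".join(reversed(bits))


print(to_binary(42))  # 101010
```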


A buddy of mine sent this to me from a first year procedural programming course.

The email was in regards to an assignment where they had to print some statistics. The caps letters are the response from the lecturer...

Integers with decimal places, hey?

I was going crazy for not understanding it.

I went to binary to decimal converters.

I tried applying my own messed up logic.

I was about to post a rage rant.

Now I realize how it works... fml

And yes, I took a picture of my monitor, enjoy the eye cancer :)

What will happen if every school starts teaching with binary numbers before the easy decimal number system?

I think it would be challenging initially, but it could have a much greater impact on how we think, and it could open up completely new possibilities for faster algorithms that computers can understand directly.

The reason people hate the binary system is that all their lives they've been in the habit of using the decimal system, which makes them reluctant to learn binary in much depth later on.

Just a thought. But I really believe that if I had learned the binary system before the decimal system, my brain would see things in a totally different way than it does now.

It sounds a little geeky, yet thoughtful.

When you work on a web design by a graphic designer, expect to see 12px used for article paragraphs and 9px for navs... And of course decimal pixel values and asymmetric layouts that don't fit any grid system... On top of that, layers upon layers, clipping masks and all the weird stuff in Illustrator...

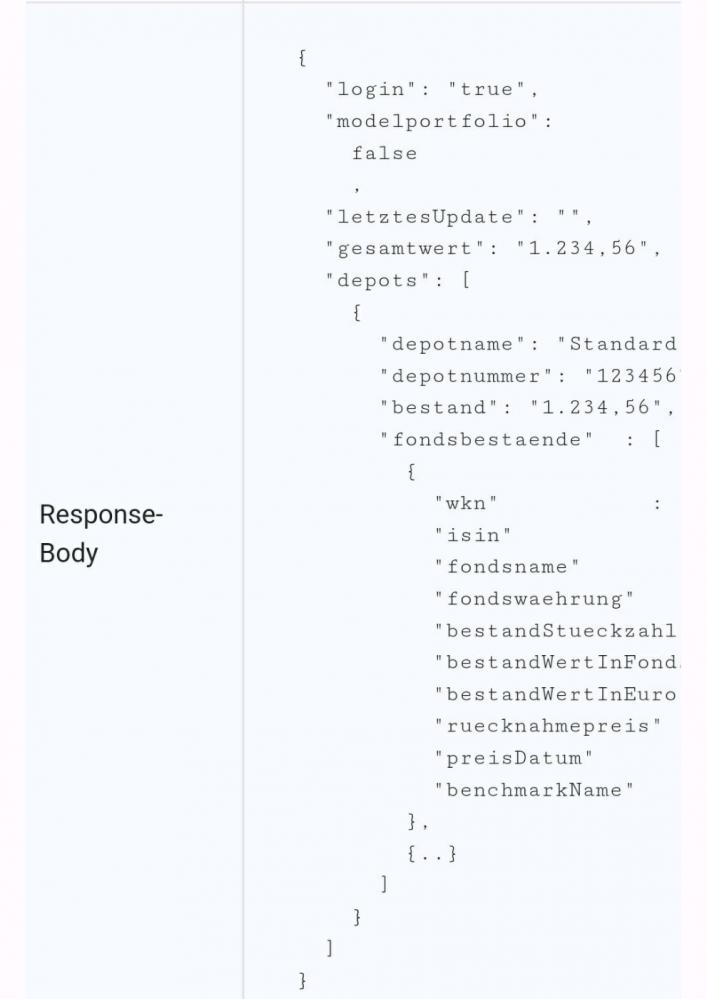

I once tried to create a client for the fonds bank of Frankfurt. But I didn't expect their JSON API to be designed by a trainee.

Look at the API.

Stringified numbers, decimal commas (Germany), dots as thousands separators, and one even breaks as if it came from a PHP script where they just put an if condition on each line.

I documented the API and tried to create a JAX-RS client, but stopped before completing it. Not usable atm. Just look at what I spoofed.

https://github.com/bmhm/...
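
The actual API responses are only in the screenshot, so the input format here is an assumption: if a field arrives as a stringified number with dots for thousands and a comma for decimals (e.g. "1.234,56"), a client has to normalise it before parsing. A minimal Python sketch that uses `Decimal` to avoid float rounding:

```python
from decimal import Decimal


def parse_german_number(text: str) -> Decimal:
    """Parse a German-formatted number string such as "1.234.567,89".

    Assumes '.' is only ever a thousands separator and ',' the decimal separator.
    """
    cleaned = text.strip().replace(".", "").replace(",", ".")
    return Decimal(cleaned)


print(parse_german_number("1.234,56"))  # 1234.56
```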


Working with surds recently, and found some cool new identities that I don't think were known before now.

if n = x*y, and z = n.sqrt(), assuming n is known but x and y are not..

q = (surd(n, (1/(1/((n+z)-1))))*(n**2))

r = (surd(n, (surd(n, x)-surd(n, y)))*surd(n, n))

s = abs(surd(abs((surd(n, q)-q)), n)/(surd(n, q)-q))

t = (abs(surd(abs((surd(n, q)-q)), n)/(surd(n, q)-q)) - abs(surd(n, abs(surd(n, q)+r)))+1)

(surd(n, (1/(1/((n+z)-1))))*(n**2)) ~=

(surd(n, (surd(n, x)-surd(n, y)))*surd(n, n))

for every n I checked.

Likewise:

s/t == r.sqrt() / q.sqrt()

and

(surd(n, q) - surd(s, q)) ==

(surd(n, t) - surd(s, t))

Even without knowing x, y, r, or t.

Not sure if it's useful, but it's cool.

surd() is just:

def surd(j, k): return (j + k.sqrt()) * (j - k.sqrt())

and d() is just Python's Decimal (from the decimal module), for ease of use.
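
Since (j + √k)(j − √k) is a difference of squares, surd(j, k) equals j² − k exactly for any k ≥ 0. Computing it that way avoids the square roots and their rounding, which may help when checking identities like the ones above. A small check with the decimal module (the precision of 50 is an arbitrary choice):

```python
from decimal import Decimal, getcontext

getcontext().prec = 50  # arbitrary, but plenty for this comparison


def surd(j: Decimal, k: Decimal) -> Decimal:
    """(j + sqrt(k)) * (j - sqrt(k)), as defined in the post (requires k >= 0)."""
    return (j + k.sqrt()) * (j - k.sqrt())


def surd_exact(j: Decimal, k: Decimal) -> Decimal:
    """The same value via the difference of squares: j**2 - k, no square root needed."""
    return j * j - k


j, k = Decimal(5), Decimal(3)
print(surd(j, k))        # close to 22, possibly with rounding noise in the last digits
print(surd_exact(j, k))  # 22
```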

CEO offers me a position

CTO sends me 7 logic interview questions, including asking me to write a program that converts binary to decimal in Node...
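
The question asks for Node, but the idea carries over to any language; here it is in Python for consistency with the other examples. Each new bit shifts the running value left by one place (Horner's rule). The built-in `int(bits, 2)` does the same in one call:

```python
def binary_to_decimal(bits: str) -> int:
    """Convert a string of '0'/'1' characters to an integer using Horner's rule."""
    value = 0
    for bit in bits:
        if bit not in "01":
            raise ValueError(f"not a binary digit: {bit!r}")
        value = value * 2 + int(bit)  # shift left by one place, then add the new bit
    return value


print(binary_to_decimal("101010"))  # 42
```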

Can anyone see where this repeats predictably?

Decimal('507.724375') / Decimal('173.2336')

I set the precision to 500+ digits and I'm not seeing any repetitions at a glance.

If there isn't, this is my new favorite irrational number.
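
Worth noting: the quotient of two terminating decimals is a rational number, so its decimal expansion must either terminate or eventually repeat; the period can simply be longer than what fits on screen. A sketch that reduces the fraction exactly with `fractions.Fraction` and computes the period length as the multiplicative order of 10 modulo the denominator, after removing the denominator's factors of 2 and 5:

```python
from fractions import Fraction


def repeating_period(numerator: str, denominator: str) -> int:
    """Length of the repeating block in the decimal expansion of numerator/denominator.

    Returns 0 if the expansion terminates.
    """
    q = Fraction(numerator) / Fraction(denominator)  # exact rational value
    d = q.denominator
    # Factors of 2 and 5 only produce a non-repeating prefix, not the cycle.
    for p in (2, 5):
        while d % p == 0:
            d //= p
    if d == 1:
        return 0
    # Multiplicative order of 10 mod d: smallest k with 10**k % d == 1.
    k, r = 1, 10 % d
    while r != 1:
        r = (r * 10) % d
        k += 1
    return k


print(repeating_period("507.724375", "173.2336"))
```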

My team leader was yelling at me because I couldn't relate two tables: the first has a decimal(15,5) PK and the second has a char(20) FK.

WELL TELL ME HOW THE FUCK SHOULD I RELATE THEM YOU STUPID FUCKING CUNT ??

Decimal!!! I could not believe my fucking eyes! And fuck keeping the clients satisfied!

Well, sorry, I just wanted to let it out.

Here are some of the variable types in Java, if someone needs them :)

- String myString = "Hello";

// integers

- byte myByte = 127; [up to 127] -> 1 byte

- short myShort = 530; [up to 32,767] -> 2 bytes

- int myInt = 8021; [up to 2,147,483,647] -> 4 bytes

- long myLong = 213134; [up to 9,223,372,036,854,775,807] -> 8 bytes

// decimal numbers

- float myFloat = 3.14f; -> 4 bytes

- double myDouble = 23.45; -> 8 bytes

P.S.

I hate double... idk why

Bye
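
The limits above come straight from two's complement: an n-bit signed integer ranges from -2^(n-1) to 2^(n-1) - 1, and the Java spec fixes the bit widths of byte, short, int and long. A quick Python check of the numbers:

```python
# Bit widths of Java's signed integer primitives.
JAVA_INT_BITS = {"byte": 8, "short": 16, "int": 32, "long": 64}


def signed_range(bits: int) -> tuple:
    """Min and max of an n-bit two's-complement integer."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


for name, bits in JAVA_INT_BITS.items():
    lo, hi = signed_range(bits)
    print(f"{name:<5} {bits // 8} byte(s)  {lo:,} .. {hi:,}")
```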

I want to call out the absolute retard at Oracle who decided that file modes should be read from the envvar called UMASK and be in decimal by default.

They probably never cared about file permissions.
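
To see why the base matters: umask values are conventionally written in octal, so "022" means 0o22 (18 in decimal). If the same text were read as decimal 22, that is 0o26, and new files get different permissions. How Oracle's tooling actually parses UMASK isn't shown in the post; this Python illustration only shows what a decimal reading would do, assuming the common 0o666 base mode for new files:

```python
DEFAULT_FILE_MODE = 0o666  # assumed base mode for new regular files


def apply_umask(umask: int) -> int:
    """Permission bits of a new file created with the given umask."""
    return DEFAULT_FILE_MODE & ~umask


intended = int("022", 8)   # what people mean by "022": 18 in decimal
misread = int("022", 10)   # the same text parsed as decimal: 22 == 0o26

print(oct(apply_umask(intended)))  # 0o644
print(oct(apply_umask(misread)))   # 0o640
```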

If you want a really obtuse method for inverting numbers in python, here you go:

z0 = Decimal('78.56341431805592768684300246940000494686645802145994376216014333')

z1 = Decimal('1766612.615660056866253324637112675800498420645531491441947721134')

z2 = Decimal('1766891.018224248707391295083927186110075710886547799081463050088')

z3 = Decimal('15658548.51274600264911287951105338985790517559073271799766738859')

z4 = Decimal('1230189034.426242656418217548674280136397763003160663651728899428')

z5 = Decimal('1.000157591178577552497828947885508869785153918881552213564806501')

(((((z0/(z1/(z2/(n)))))*(z3))/z4)/z5

From what I can see, it works for any value of n.

I have no clue why it works.

Also have a function to generate the z values for any n input.
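
A possible explanation for why it works: z0/(z1/(z2/n)) equals z0·z2/(z1·n), so the whole chain collapses to (z0·z2·z3)/(z1·z4·z5) · 1/n. It inverts n exactly when that constant equals 1, which would make it independent of n. A sketch that checks the algebra with exact fractions (the small z values are arbitrary, not the ones from the post):

```python
from fractions import Fraction


def chain(z0, z1, z2, z3, z4, z5, n):
    """The expression from the post, with its parentheses balanced."""
    return (((z0 / (z1 / (z2 / n))) * z3) / z4) / z5


def constant(z0, z1, z2, z3, z4, z5):
    """chain(...) == constant(...) / n, because z0 / (z1 / (z2 / n)) == z0 * z2 / (z1 * n)."""
    return (z0 * z2 * z3) / (z1 * z4 * z5)


zs = [Fraction(k) for k in (2, 3, 5, 7, 11, 13)]
n = Fraction(17)
print(chain(*zs, n), constant(*zs) / n)  # the same value twice
```

Plugging the post's z0..z5 into constant() (with Decimal at high precision) should give 1, up to rounding, if this explanation is right.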

Shitpost studios.

Bringing you QUALITY math posts since 2019!

"we shitpost because we care."

Officially faster bruteforcing:

https://pastebin.com/uBFwkwTj

Provided toy values for others to try. Haven't tested whether it works with cryptographically secure prime pairs (gcf(p, q) == 1).

It's a 50% reduction in time to bruteforce a semiprime. But I also have some inroads to a/30.

It's not "broke prime factorization for good!" levels of fast, but it's still pretty nifty.

Could use Decimal support with higher precision so I don't cause massive overflows on larger numbers, but this is just a demonstration after all.
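
The pastebin method isn't reproduced here. For context only, the textbook way to halve brute-force trial division is to test 2 once and then only odd candidates up to √n (wheels such as mod 30 push the same idea further by also skipping multiples of 3 and 5). A plain Python baseline that could be timed against:

```python
def smallest_factor(n: int) -> int:
    """Smallest prime factor of n > 1 by trial division, testing 2 and then only odd candidates."""
    if n % 2 == 0:
        return 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return d
        d += 2  # skipping even candidates halves the number of divisions
    return n  # no divisor up to sqrt(n): n is prime


print(smallest_factor(65261233))  # 6229 (65261233 = 6229 * 10477)
```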

Fuck you c++...!!!

TL;DR:

float a = 1.0/10.0;

float b = a*10.0;

a == b returns false

😐

Story:

A C++ beginner here

Wrote about 1000 lines of code (spread across multiple files, I'm not dumb)

Passed 90% of cases

Took one and a half days to figure out what's wrong

Turns out C++ doesn't give accurate results (as perceived by a human who thinks in decimal) when comparing 2 floats for equality with ==

Shouldn't that be the first thing taught in schools?
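
The same thing happens in any language using IEEE 754 binary floating point, including Python, whose float is a C double. The usual fix is to compare within a tolerance instead of with ==. A small Python illustration (the tolerances are arbitrary defaults, not universal values):

```python
import math


def nearly_equal(x: float, y: float, rel_tol: float = 1e-9, abs_tol: float = 1e-12) -> bool:
    """Float comparison within a relative/absolute tolerance instead of exact equality."""
    return math.isclose(x, y, rel_tol=rel_tol, abs_tol=abs_tol)


total = 0.1 + 0.2
print(total == 0.3)              # False: 0.1, 0.2 and 0.3 are all rounded binary values
print(nearly_equal(total, 0.3))  # True
```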

"What's occurring?" asked my friend.

"A reoccurring decimal!" I said.

*Awkward silence for the next hour*

Greetings my good people.

I'm currently making a calculator app. Do you have any suggestions for me?

I've already added a square root function, factorial, area of a circle, volume of a cone, decimal-to-binary conversion, a temperature converter, and a BMI function.

How do you guys organise your hard drive? I recently found Johnny.Decimal. Gonna try it on my new lappy, let's see how it goes.

https://johnnydecimal.com/

What other ways are there?

Was supposed to be studying for a test at 1am today (it's in a few hours). Instead I started writing the date in binary, hexadecimal and octal (and then decimal). Go me, watch me fail that test LoL

PS: those dry-erase pens were the best thing I could ever buy. I love writing on my windows
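
For anyone who'd rather let the computer do the 1am conversions: Python's `format()` handles binary, octal and hex directly. A small sketch (the date is an arbitrary example):

```python
from datetime import date


def date_in_bases(d: date) -> dict:
    """Year, month and day written in binary, octal, decimal and hexadecimal."""
    parts = (d.year, d.month, d.day)
    return {
        "binary": "-".join(format(p, "b") for p in parts),
        "octal": "-".join(format(p, "o") for p in parts),
        "decimal": "-".join(str(p) for p in parts),
        "hex": "-".join(format(p, "X") for p in parts),
    }


print(date_in_bases(date(2019, 5, 17)))
```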

Up all damn night making the script work.

Wrote a non-sieve prime generator.

The thing kept outputting one or two numbers that weren't prime, related to something called Carmichael numbers.

Anyway, got it to work; god damn, was it a slog though.

It generates next and previous primes pretty reliably regardless of the size of the number

(haven't gone over 31 bit because I haven't had a chance to implement decimal for this).

Don't know if the sieve is the only reliable way to do it. This seems to do it without a hitch, and doesn't seem to use a lot of memory. I don't have to constantly return to a lookup table of small factors or their multiples either.

Technically it generates the primes out of the integers, and not the other way around.

It takes 0.01-0.02 seconds per prime up to around the 100 million mark, and then it gets into the 0.15-1 second range per generation.

At primes of around a couple billion, it's averaging about 1 second per bit to calculate 1. whether the number is prime or not, and 2. what the next or previous prime is. Although I'm sure there's room for optimization or improvement here.

Seems reliable, but obviously I don't have the resources to check it beyond the first 20k primes I confirmed.

From what I can see it didn't drop any primes, and it didn't include any errant non-primes.

Code's here:

https://pastebin.com/raw/57j3mHsN

Your go-tos should be nextPrime(), lastPrime(), isPrime(), genPrimes() (up to but not including some N), and genNPrimes(), which generates x primes for you.

The speed definitely seems to top out at 1 second per bit for a prime once the numbers are in the billions, but I don't know if that's the ceiling, again, because Decimal support still needs to be implemented.

I think the core method, in calcY (terrible name, I know), could probably be optimized in some clever way if it's given an adjacent prime and the parameters that were used. There's probably some pattern I'm not seeing, but eh.

I'm also wondering if I could use those fancy aberrations, 'Carmichael numbers' or whatever the hell they are, to calculate some sort of offset, and by doing so, figure out a given prime's index.

And all my brain says is "sleep"

But family wants me to hang out, and I have to go talk a manager at Home Depot into an interview, because wanting to program for a living and actually getting someone to give you the time of day are two different things.
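
On the Carmichael issue: these numbers pass the Fermat test a^(n-1) ≡ 1 (mod n) for every base a coprime to n, so a primality check built on that test will let them through. The code in the pastebin isn't reproduced here; as a swapped-in alternative, Miller-Rabin with the first 12 primes as bases is known to be deterministic for n below about 3.3 × 10^24:

```python
def fermat_probable_prime(n: int, base: int = 2) -> bool:
    """Fermat test: every prime n satisfies base**(n-1) % n == 1 (for base coprime to n)."""
    return pow(base, n - 1, n) == 1


def is_prime(n: int) -> bool:
    """Deterministic Miller-Rabin for n < 3.3 * 10**24, using the first 12 primes as bases."""
    if n < 2:
        return False
    small_primes = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37)
    for p in small_primes:
        if n % p == 0:
            return n == p
    # Write n - 1 as d * 2**s with d odd.
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for a in small_primes:
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = x * x % n
            if x == n - 1:
                break
        else:
            return False  # a is a witness that n is composite
    return True


# 561 = 3 * 11 * 17 is the smallest Carmichael number.
print(fermat_probable_prime(561), is_prime(561))  # True False
```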

Probably pure coincidence, but look at the deconstruction of the Dedekind numbers:

>>> decon(6)

offset: 1, exp: [[Decimal('2'), Decimal('1')], [Decimal('3'), Decimal('1')]]

>>> decon(20)

offset: 2, exp: [[Decimal('2'), Decimal('2')], [Decimal('5'), Decimal('1')]]

offset: 1, exp: []

>>> decon(168)

offset: 3, exp: [[Decimal('2'), Decimal('2')], [Decimal('5'), Decimal('2')]]

offset: 2, exp: [[Decimal('2'), Decimal('2')], [Decimal('3'), Decimal('1')], [Decimal('5'), Decimal('1')]]

offset: 1, exp: [[Decimal('2'), Decimal('3')]]

>>> decon(7581)

offset: 4, exp: [[Decimal('2'), Decimal('3')], [Decimal('5'), Decimal('3')], [Decimal('7'), Decimal('1')]]

offset: 3, exp: [[Decimal('2'), Decimal('2')], [Decimal('5'), Decimal('3')]]

offset: 2, exp: [[Decimal('2'), Decimal('4')], [Decimal('5'), Decimal('1')]]

offset: 1, exp: []

>>> decon(7828354)

offset: 7, exp: [[Decimal('2'), Decimal('6')], [Decimal('5'), Decimal('6')], [Decimal('7'), Decimal('1')]]

offset: 6, exp: [[Decimal('2'), Decimal('8')], [Decimal('5'), Decimal('5')]]

offset: 5, exp: [[Decimal('2'), Decimal('5')], [Decimal('5'), Decimal('4')]]

offset: 4, exp: [[Decimal('2'), Decimal('6')], [Decimal('5'), Decimal('3')]]

offset: 3, exp: [[Decimal('2'), Decimal('2')], [Decimal('3'), Decimal('1')], [Decimal('5'), Decimal('2')]]

offset: 2, exp: [[Decimal('2'), Decimal('1')], [Decimal('5'), Decimal('2')]]

offset: 1, exp: [[Decimal('2'), Decimal('2')]]

>>> decon(d('2414682040998'))

offset: 13, exp: [[Decimal('2'), Decimal('13')], [Decimal('5'), Decimal('12')]]

offset: 12, exp: [[Decimal('2'), Decimal('13')], [Decimal('5'), Decimal('11')]]

offset: 11, exp: [[Decimal('2'), Decimal('10')], [Decimal('5'), Decimal('10')]]

offset: 10, exp: [[Decimal('2'), Decimal('11')], [Decimal('5'), Decimal('9')]]

offset: 9, exp: [[Decimal('2'), Decimal('9')], [Decimal('3'), Decimal('1')], [Decimal('5'), Decimal('8')]]

offset: 8, exp: [[Decimal('2'), Decimal('10')], [Decimal('5'), Decimal('7')]]

offset: 7, exp: [[Decimal('2'), Decimal('7')], [Decimal('5'), Decimal('6')]]

offset: 6, exp: []

offset: 5, exp: [[Decimal('2'), Decimal('6')], [Decimal('5'), Decimal('4')]]

offset: 4, exp: []

offset: 3, exp: [[Decimal('2'), Decimal('2')], [Decimal('3'), Decimal('2')], [Decimal('5'), Decimal('2')]]

offset: 2, exp: [[Decimal('2'), Decimal('1')], [Decimal('3'), Decimal('2')], [Decimal('5'), Decimal('1')]]

offset: 1, exp: [[Decimal('2'), Decimal('3')]]

The powers in the 2's column go:

1, 2, 2, 2, 3, 3, 2, 4, 6

which are predicted by:

https://oeis.org/search/...

Again, probably only a coincidence, but kinda beautiful.
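
decon() itself isn't shown. Judging from the printed rows, it seems to split the number into digit × 10^(offset-1) and factor each part, with a zero digit (or a 1) giving exp: []. A hypothetical re-creation using plain ints instead of Decimal, which reproduces the rows above for the smaller inputs:

```python
def factorize(n: int) -> list:
    """Prime factorization of n >= 2 as [[prime, exponent], ...] via trial division."""
    factors = []
    p = 2
    while p * p <= n:
        if n % p == 0:
            e = 0
            while n % p == 0:
                n //= p
                e += 1
            factors.append([p, e])
        p += 1
    if n > 1:
        factors.append([n, 1])
    return factors


def decon(n: int) -> list:
    """Hypothetical decon(): factor each digit * 10**(offset - 1) of n, highest offset first."""
    digits = str(int(n))
    rows = []
    for offset in range(len(digits), 0, -1):
        digit = int(digits[len(digits) - offset])
        value = digit * 10 ** (offset - 1)
        # 0 and 1 have no prime factors, matching "exp: []" in the output above.
        exp = factorize(value) if value > 1 else []
        rows.append((offset, exp))
        print(f"offset: {offset}, exp: {exp}")
    return rows


decon(168)
```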

First post on devRant... aaaand it's university homework... I can't wrap my head around this...

So, the problem is: I have to implement reading and printing 64-bit decimal integers (negative and positive, with two's complement) in NASM assembly. There are no input parameters, and the result should be in EDX:EAX. The use of 64-bit registers is prohibited.

There is a library which I can use: mio.inc

It has these functions:

- mio_writechar (writes the character which corresponds to the ASCII code stored in AL to console)

- mio_readchar (reads an ASCII character from console to AL)

It also has to handle overflow and backspace. An input can be considered valid or invalid only after the user hits Enter... It's actually a lot of work, and it's just the first exercise out of 10... 😭

The problem is actually just the input - printing should be easy, once I have valid data...

Please help me!
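
Not a NASM solution, just a Python model of the input logic that might help structure the asm: buffer characters until Enter, let backspace remove the last one, validate only at the end, accumulate digits with an overflow check, then split the two's complement result into EDX (high 32 bits) and EAX (low 32 bits). The backspace and Enter codes are assumptions about what mio_readchar returns; on Windows, Enter may arrive as 13 rather than 10:

```python
def read_int64(keys: str):
    """Model of reading a signed 64-bit decimal integer one key at a time.

    Assumed key codes: backspace ('\\b') deletes the previous character, '\\n' ends input.
    Returns (edx, eax), the high and low 32 bits of the two's complement value,
    or None if the input is empty, malformed, or out of range.
    """
    buffer = []
    for ch in keys:
        if ch == "\n":
            break
        if ch == "\b":
            if buffer:
                buffer.pop()  # backspace only edits the buffer; validation waits for Enter
            continue
        buffer.append(ch)

    text = "".join(buffer)
    negative = text.startswith("-")
    digits = text[1:] if negative else text
    if not digits or any(c not in "0123456789" for c in digits):
        return None

    # |INT64_MIN| = 2**63 is one more than INT64_MAX = 2**63 - 1.
    limit = 2 ** 63 if negative else 2 ** 63 - 1
    value = 0
    for c in digits:
        value = value * 10 + (ord(c) - ord("0"))
        if value > limit:
            return None  # overflow, checked after every digit as an asm loop would
    if negative:
        value = -value

    bits = value & 0xFFFF_FFFF_FFFF_FFFF  # two's complement bit pattern
    return bits >> 32, bits & 0xFFFF_FFFF
```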

Anyone used base 12 in a project before?

I read about it on the internet (duodecimal, dodecimal, dozenal, etc.) and I wonder:

* Are there usable implementations?

* What are actual use cases?

* Have you used it in projects before?

Trivia: before Napoleon raged in Europe, a lot of citizens counted in 12. Think of your clock or legacy money.

I'm especially interested in the use cases. I bet there are implementations, just haven't bothered to Google them yet.
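
No specific library is vouched for here, but a base-12 conversion is short to write by hand. A Python sketch using X and E for ten and eleven (one dozenal convention among several; A and B also show up):

```python
DOZENAL_DIGITS = "0123456789XE"  # X = ten, E = eleven


def to_base12(n: int) -> str:
    """Write an integer in base 12."""
    if n == 0:
        return "0"
    sign = "-" if n < 0 else ""
    n = abs(n)
    out = []
    while n:
        n, r = divmod(n, 12)
        out.append(DOZENAL_DIGITS[r])
    return sign + "".join(reversed(out))


def from_base12(s: str) -> int:
    """Parse a base-12 string written with the digits above (case-insensitive)."""
    sign = -1 if s.startswith("-") else 1
    value = 0
    for ch in s.lstrip("-"):
        value = value * 12 + DOZENAL_DIGITS.index(ch.upper())
    return sign * value


print(to_base12(144), to_base12(23))  # 100 1E
```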

Today a co-worker asked me how we can set a value in the registry, since this other program we are working with encrypts it. My response was, "It isn't encrypted, it's little-endian." Then I went into the whole endianness topic. After I finally told him how to convert the hex values in the registry back to the original decimal value that the program is storing, he said, "I'll just take your word for it."
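
For anyone in the co-worker's position: a little-endian value stores its least significant byte first, so the bytes have to be read in reverse order to get the number back. A sketch, assuming the value is visible as a plain hex byte dump (the example bytes are made up):

```python
def registry_bytes_to_int(hex_bytes: str) -> int:
    """Interpret a space-separated hex byte dump as a little-endian unsigned integer."""
    return int.from_bytes(bytes.fromhex(hex_bytes), byteorder="little")


print(registry_bytes_to_int("e8 03 00 00"))  # 1000 (0x000003E8)
```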


This morning I was exploring dedekind numbers and decided to take it a little further.

Wrote a bunch of code and came up with an upper-bound estimator for the Dedekind numbers.

It's in python, so forgive me for that.

The bound starts low (x1.95) for D(4) and grows steadily from there, but from what I see it remains an upper bound throughout.

This leads me to an upper bound on D(10) of:

106703049056023475437882601027988757820103040109525947138938025501994616738352763576.33010981

The basics of the code are in the pastebin link below. I also imported the decimal module, set 'd = Decimal', and then did 'getcontext().prec=256' so Python wouldn't round any values into exponent notation once they outgrew the default precision.

https://pastebin.com/2gjeebRu

The upper bound on D(9) is just a little shy of D(9)*10,000,

which isn't bad, all things considered.
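
Why the precision setting matters: Decimal rounds every arithmetic result to the context precision (28 significant digits by default), and a rounded result with more digits than that is printed in exponent notation. A short illustration with two arbitrary 20-digit numbers:

```python
from decimal import Decimal, localcontext

a = Decimal("12345678901234567890")
b = Decimal("98765432109876543210")

with localcontext() as ctx:
    ctx.prec = 28   # Python's default: the 40-digit product gets rounded
    rounded = a * b

with localcontext() as ctx:
    ctx.prec = 256  # the setting from the post: enough digits to keep the product exact
    exact = a * b

print(rounded)  # exponent notation, the last 12 digits are lost
print(exact)    # all 40 digits
```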

About 4 years ago I worked in IT at a company that used a Windows desktop app with SQL Server 2008 (yep, that old) to manage their sales. The app was written in WPF, and it was good because it was customizable with reports.

One day the boss wanted to keep some extra data in the customer invoice, so they contacted the app developers to add it. They did, but in their own way: instead of modifying the app itself (even though it would have been a useful feature for the app and the companies that use it), they just reused other unused fields in the invoice to hold this data. One of the fields the boss was interested in was the currency rate, and I later verified in the DB that this rate was saved as a string.

The boss wasn't interested in reports yet because he wanted to test it first and give it time to figure out what he would need in them, so at the end of the year they would contact the devs again to talk about the reports.

So at the end of that year the boss contacted the devs to talk about invoice reports using the currency rate. Until then the rate was just printed on the invoice, nothing more; that's what the boss wanted and that's what the devs did. But when asked to do the reports, they said they couldn't because the data was saved as a string in the DB o_O

Well, that was one of the most stupid excuses I ever heard...

So I started digging into it and found out why... the reason is that they were just lazy. In the end I did it myself, but it took some work. The main problem was that the rate was saved like this: 1,01. Here we use a comma as the decimal separator, but in SQL you must use the dot (.), like this: 1.01. There was also a problem with exact numbers: for example, if the rate was exactly 1, it should have been saved as just 1, but it was saved as 1,00. So it wasn't enough to replace all the commas with dots; I also had to get rid of all the ,00 endings. With all that done I built the reports for my boss, and everyone was happy.

Some programmers just want to do easy things...
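
Assuming the stored strings only ever use a comma as the decimal separator (no thousands separators), the cleanup described above can be sketched in Python. `Decimal.normalize()` also takes care of the 1,00 → 1 case, since it strips trailing zeros:

```python
from decimal import Decimal


def rate_for_sql(raw: str) -> str:
    """Turn a comma-decimal rate string like '1,01' or '1,00' into a SQL numeric literal."""
    value = Decimal(raw.strip().replace(",", "."))
    # normalize() drops trailing zeros (1.00 -> 1); format 'f' avoids exponent notation (1E+2 -> 100)
    return format(value.normalize(), "f")


print(rate_for_sql("1,01"), rate_for_sql("1,00"))  # 1.01 1
```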

So I promised a post after work last night, discussing the new factorization technique.

As before, I use a method called decon() that takes any number, like 697 for example, and first breaks it down into its respective digits and magnitudes.

697 becomes -> 600, 90, and 7.

It then factors *those* to give a decomposition matrix that looks something like the following when printed out:

offset: 3, exp: [[Decimal('2'), Decimal('3')], [Decimal('3'), Decimal('1')], [Decimal('5'), Decimal('2')]]

offset: 2, exp: [[Decimal('2'), Decimal('1')], [Decimal('3'), Decimal('2')], [Decimal('5'), Decimal('1')]]

offset: 1, exp: [[Decimal('7'), Decimal('1')]]

Each entry is a pair of numbers representing a prime base and an exponent.

Now the idea was that, in theory, at each magnitude of a product, we could actually search through the *range* of the product of these exponents.

So for offset three (600) here, we're looking at

2^3 * 3^1 * 5^2.

But actually we're searching

2^3 * 3^1 * 5^2

2^3 * 3^1 * 5^1

2^3 * 3^1 * 5^0

2^3 * 3^0 * 5^2

2^3 * 3^0 * 5^1

etc.

On the basis that whatever it generates may be the digits of another magnitude in one of our target product's factors.

And the first optimization or filter we can apply is to notice that, assuming our factors satisfy pq = n

with p <= q, it will always be more efficient to search for the digits of p (because it's under n^0.5, the square root) than for the larger factor q.

So by implication we can filter out any product of this exponent search that is greater than the square root of n.

Writing this code was a bit of a headache because I had to deal with potentially very large lists of bases and exponents, so I couldn't just use loops within loops.

Instead I resorted to writing a three-state state machine that 'counted down' across these exponents, and it just works.
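
An alternative to the hand-written state machine (swapped in here, not the author's code) is `itertools.product` over descending exponent ranges. It handles any number of bases without nested loops, counts down with the last exponent changing fastest, and the √n filter can be applied as each product is generated:

```python
from itertools import product
from math import isqrt


def exponent_search(factors, n):
    """Yield (exponents, value) for every product of the given prime powers,
    with each exponent counting down to 0, skipping values above sqrt(n).

    factors: [[prime, max_exponent], ...], e.g. one row printed by decon().
    """
    limit = isqrt(n)  # the smaller factor p of n = p*q is at most sqrt(n)
    primes = [p for p, _ in factors]
    ranges = [range(e, -1, -1) for _, e in factors]  # e, e-1, ..., 0
    for exps in product(*ranges):
        value = 1
        for p, e in zip(primes, exps):
            value *= p ** e
        if value <= limit:
            yield exps, value


for exps, value in list(exponent_search([[2, 3], [3, 1], [5, 2]], 10**6))[:5]:
    print(exps, value)
```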

And now, in practice this doesn't immediately give us anything useful. I had hoped this would at least give us *upper bounds* to start our search from, for any particular digit of a product's factors at a given magnitude. So the 12th digit (or pick a magnitude out of a hat) of an example product might give us an upper bound on the 2's exponent for that same digit in our lowest factor q of n.

It didn't work out that way. Sometimes there would be 'inversions', where the exponent of a factor on a magnitude of n, would be *lower* than the exponent of that factor on the same digit of q.

But when I started tearing into examples and generating test data I started to see certain patterns emerge, and immediately I found a way to not just pin down these inversions, but get *tight* bounds on the 2's exponents in the corresponding digit for our product's factor itself. It was like the complications I initially saw actually became a means to *tighten* the bounds.

For example, for one particular semiprime n=pq, this was some of the data:

n - offset: 6, exp: [[Decimal('2'), Decimal('5')], [Decimal('5'), Decimal('5')]]

q - offset: 6, exp: [[Decimal('2'), Decimal('6')], [Decimal('3'), Decimal('1')], [Decimal('5'), Decimal('5')]]

It's almost like the base 3 exponent in [n:7] gives away the presence of 3^1 in [q:6], even though there's no subsequent presence of 3^n in [n:6] itself.

And I found this rule held each time I tested it.

Other rules, not so much, and other rules still would fail in the presence of yet other rules, almost like a giant switchboard.

I immediately realized the implications: rules had precedence, acted predictably in isolated instances, and changed in specific instances in combination with other rules.

This was ripe for a decision tree generated through random search.

Another product n=pq, with more data:

q(4)

offset: 4, exp: [[Decimal('2'), Decimal('4')], [Decimal('5'), Decimal('3')]]

n(4)

offset: 4, exp: [[Decimal('2'), Decimal('3')], [Decimal('3'), Decimal('2')], [Decimal('5'), Decimal('3')]]

This suggests that a nontrivial base 3 exponent (**2 rather than **1) means the exponent on the 2 in the relevant digit of [n] is one less than the base 2 exponent at the same digit of [q].

And so it was clear from the get go that this approach held promise.

From there I discovered a bunch more rules and made some observations.

The bulk of the patterns, regardless of how large the product grows, should be present in the smaller bases (some bound of primes, say the first dozen), because the exponents in the factorization of any magnitude of a number lean overwhelmingly toward the lower prime bases.

It was as if the entire vulnerability had been hiding in plain sight for four+ years, and we'd been approaching factorization all wrong from the beginning by trying to factor a number, and all its digits at all its magnitudes, all at once, when, like addition or multiplication, factorization could be done piecemeal if we knew the patterns to look for.

A few weeks ago a friend was teaching me about 16-bit numbers because we were making one in C# with a function, but he said we need two numbers for the function to work (as an example, we're gonna use 0 and 2).

Now, I didn't understand how two numbers were supposed to make one, and my friend couldn't explain it to me. So I researched the topic all day, and then the epiphany happened: I realized I was looking at it all wrong. I shouldn't be looking at it as 2 decimal numbers but as 2 binary values, two 8-bit arrays forming one array of 16 bits.
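
With the example values 0 and 2: a 16-bit number is just two 8-bit bytes side by side, one holding the high 8 bits and one the low 8 bits, so which byte counts as "high" changes the result. A Python sketch (the C# code from the post isn't shown; note that C#'s BitConverter follows the machine's byte order, which is little-endian on most PCs):

```python
def combine_bytes(high: int, low: int) -> int:
    """Join two 8-bit values into one 16-bit value: high byte shifted left by 8 bits."""
    if not (0 <= high <= 0xFF and 0 <= low <= 0xFF):
        raise ValueError("each part must fit in one byte (0..255)")
    return (high << 8) | low


print(combine_bytes(0, 2))  # 2   (0x0002)
print(combine_bytes(2, 0))  # 512 (0x0200)
# Reading the bytes [0, 2] as little-endian treats the 2 as the high byte:
print(int.from_bytes(bytes([0, 2]), "little"))  # 512
```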

Lol, devRant, good move converting all the likes that one had received into binary.

Rekindled old memories of converting binary to decimal in undergrad classes. 😂😂😂

Excel is a powerful and extremely versatile application, but one thing that really SUCKS about it is that formulas are language-specific! So if you use Excel in, for example, Swedish, you can't write "IF(<expression>,<then>,<else>)" but have to write "OM(<expression>;<then>;<else>)" instead. Note the semicolon instead of the comma (because in Swedish the comma is used as the decimal separator). AAARGGH! This pisses me off!

If you're using random in Python and need arbitrary precision, use mpmath.

If you're using it with the decimal module, it doesn't convert automatically, just so you know.

Instead, convert the output of arb_uniform to a string before passing it to the decimal module.
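
The same string round trip applies to plain floats, which is easy to show with only the standard library (the mpmath part isn't reproduced here). Passing a float straight to Decimal keeps its exact binary value, representation error included, while going through str() gives the short form you probably expect:

```python
from decimal import Decimal

x = 0.1  # stands in for a float coming out of a random generator

direct = Decimal(x)        # exact value of the binary double, with all its representation error
via_str = Decimal(str(x))  # str() gives the shortest repr that round-trips: '0.1'

print(direct)
print(via_str)  # 0.1
```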

Just spent 3 fucking hours trying to find out why my tests are failing. I'm mocking EF with an in-memory SQLite DB, as THE FUCKING .NET docs say to.

My code does a simple decimal comparison in a LINQ statement and returns bullshit. Why? SQLite does not have a decimal type; it does some sort of BULLSHIT to convert it into some sort of text value.

I change all my models to use doubles instead of decimals and all my tests turn green.

WTF IS THIS SHIT. If it doesn't work, don't tell me to use it. I expect better of the .NET docs. Wtf are they doing.
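
EF Core's exact storage format for decimal on SQLite isn't shown in the post, so this only illustrates the general pitfall, using Python's built-in sqlite3 module: SQLite has no decimal type, and when numbers are stored as TEXT, comparisons are done as strings, not numbers:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE prices (as_text TEXT, as_real REAL)")
for value in ("9.50", "10.50"):
    conn.execute("INSERT INTO prices VALUES (?, ?)", (value, float(value)))

# TEXT column compared with a text literal: lexicographic, so '10.50' < '9.75'.
text_hits = conn.execute("SELECT as_text FROM prices WHERE as_text > '9.75'").fetchall()
# REAL column: numeric comparison, as expected.
real_hits = conn.execute("SELECT as_real FROM prices WHERE as_real > 9.75").fetchall()

print(text_hits)  # []
print(real_hits)  # [(10.5,)]
```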

Floating point numbers! 😖

Writing geometric algorithms for CNC machining... you'll find those 10th-decimal-place inconsistencies real quick!
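
A common remedy in geometry code is to compare coordinates within a tolerance. A small Python illustration: rotating a point by a quarter turn four times should bring it back exactly, but cos(π/2) is not exactly 0 in floating point. The tolerance of 1e-9 is an arbitrary choice and would need to match the machine's units and precision:

```python
import math


def rotate(x: float, y: float, angle: float) -> tuple:
    """Rotate the point (x, y) about the origin by angle radians."""
    c, s = math.cos(angle), math.sin(angle)
    return x * c - y * s, x * s + y * c


def same_point(p, q, tol: float = 1e-9) -> bool:
    """Compare coordinates with an absolute tolerance instead of ==."""
    return all(math.isclose(a, b, abs_tol=tol) for a, b in zip(p, q))


p = (1.0, 0.0)
q = p
for _ in range(4):  # four quarter turns = one full turn
    q = rotate(*q, math.pi / 2)

print(q == p)            # False: q ends up with a tiny nonzero y coordinate
print(same_point(q, p))  # True
```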

So I have an array of length 20 which stores a 64-bit decimal number digit by digit. Let's say the starting address is array64. When I try to build up my number in ECX:EBX, I use EDX as the iterator to access the individual elements of the array (I load them into AL):

.LOOP:

....

MOV AL, [array64+EDX]

INC EDX

....

JMP .LOOP

The problem is: somehow EDX can't get higher than 10, so the program just stops and waits for input when I try to work with a 12-digit number...

This module is outside the main function, and I thought about some far pointer problems, but all of my ideas just failed...

What is wrong here?
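
One thing worth checking (a guess, since the full loop isn't shown): building a number digit by digit usually means multiplying by 10, and a 32-bit MUL writes its 64-bit result into EDX:EAX, which overwrites EDX if it is also the loop counter. Here is a Python model of multiplying a 64-bit value kept in two 32-bit halves (like ECX:EBX) by 10 and adding a digit, using only 32-bit-sized operations:

```python
MASK32 = 0xFFFF_FFFF


def mul32(a: int, b: int) -> tuple:
    """Model of x86 'MUL r32': returns (EDX, EAX), the high and low halves of a * b."""
    product = (a & MASK32) * (b & MASK32)
    return product >> 32, product & MASK32


def times10_plus_digit(hi: int, lo: int, digit: int) -> tuple:
    """(hi:lo) * 10 + digit using 32-bit multiplies and an add with carry.

    Returns (hi, lo, overflow), where overflow means the result no longer fits in 64 bits.
    """
    lo_hi, lo_lo = mul32(lo, 10)  # low half * 10; lo_hi carries into the high half
    hi_hi, hi_lo = mul32(hi, 10)  # high half * 10; a nonzero hi_hi means 64-bit overflow
    new_hi = hi_lo + lo_hi
    new_lo = lo_lo + digit
    if new_lo > MASK32:           # like ADD lo, digit followed by ADC hi, 0
        new_lo &= MASK32
        new_hi += 1
    overflow = hi_hi != 0 or new_hi > MASK32
    return new_hi & MASK32, new_lo, overflow


hi = lo = 0
for ch in "12345678901234":
    hi, lo, _ = times10_plus_digit(hi, lo, int(ch))
print((hi << 32) | lo)  # 12345678901234
```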

So I made a couple slight modifications to the formula in the previous post and got some pretty cool results.

The original post is here:

https://devrant.com/rants/5632235/...

The default transformation from p to the new product (call it p2) leads to *very* large products (even for products of the first 100 primes).

Take for example

a = 6229, b = 10477, p = a*b = 65261233

While the new product the formula generates has a factor tree that contains our factor (a), the product is huge.

How huge?

6489397687944607231601420206388875594346703505936926682969449167115933666916914363806993605...

and

So huge I put the whole number in a pastebin here:

https://pastebin.com/1bC5kqGH

Now, that number DOES contain our example factor 6229. I demonstrated that in the prior post.

But first, it's huge, 2972 digits long, and second, many of its factors are huge too.

Right from the get-go I had a hunch, and did (p2 mod p), and the result was surprisingly small, much closer to the original product. Then, just to see what happens, I subtracted this result from the original product.

The modification looks like this:

(p-(((abs(((((p)-(9**i)-9)+1))-((((9**i)-(p)-9)-2)))-p+1)-p)%p))

The result is '49856916'

That's within the ballpark of our original product.

And then I factored it.

1, 2, 3, 4, 6, 12, 23, 29, 46, 58, 69, 87, 92, 116, 138, 174, 276, 348, 667, 1334, 2001, 2668, 4002, 6229, 8004, 12458, 18687, 24916, 37374, 74748, 143267, 180641, 286534, 361282, 429801, 541923, 573068, 722564, 859602, 1083846, 1719204, 2167692, 4154743, 8309486, 12464229, 16618972, 24928458, 49856916

Well damn. It's not a-smooth or b-smooth (where 'smoothness' is defined as 'all factors are beneath some number n')

but this is far more approachable than just factoring the original product.

It still requires a value of i equal to

i = floor(a/2)

But the results are actually factorable now, if this works for other products.

I rewrote the script and tested on a couple million products and added decimal support, and I'm happy to report it works.

Script is posted here if you want to test it yourself:

https://pastebin.com/RNu1iiQ8

What I'll do next is probably add some basic factorization of trivial primes

(say the first 100), and then figure out the average number of factors in each derived product.

I'm also still working on getting to values of i < a/2, but with only sporadic success.

It also means *very* large numbers (either a subset of them or universally) with *lots* of factors may be reducible to unique products with just two non-trivial factors, but that's a big question mark for now.

@scor if you want to take a look.
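
A possible explanation of the result, assuming the transcription below matches the formula: for 9**i > p the abs() term equals 2·9^i − 2p − 3, so the whole expression reduces to p − ((2·9^i − 2) mod p). With i = (a − 1)/2, which is floor(a/2) for odd a, we get 9^i = 3^(a−1), and Fermat's little theorem gives 3^(a−1) ≡ 1 (mod a) for any prime a other than 3. So a divides 2·9^i − 2 and therefore the result, which is consistent with 6229 appearing in the factor list above. A sketch that checks this:

```python
from math import gcd


def p2_mod(p: int, i: int) -> int:
    """The modified formula from the post, transcribed as written."""
    return (p-(((abs(((((p)-(9**i)-9)+1))-((((9**i)-(p)-9)-2)))-p+1)-p)%p))


def p2_simplified(p: int, i: int) -> int:
    """Algebraically equal form when 9**i > p: p - ((2 * 9**i - 2) mod p)."""
    return p - ((2 * pow(9, i, p) - 2) % p)


a, b = 6229, 10477
p = a * b
i = a // 2  # floor(a/2) = 3114, as in the post

r = p2_mod(p, i)
print(r == p2_simplified(p, i), r % a, gcd(r, p))
```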

Not dev related.

Two incidents that I'd like to share.

So here in India the two major streams for college are engineering and medicine (others do exist). Admission to colleges in both is based on entrance exams. Here are two "events" that happened this year that are worth mentioning.

Incident 1:

The exam for the engineering stream had a section where the answer is a number with up to two decimal places, in the range 0.00 - 9.99. Apparently this two-decimal precision created some confusion, and the court decided that if the answer is precisely "seven", only the candidates who marked 7.00 are given the marks, while those who marked 7.0 or 7 were marked wrong.

Incident 2:

For the medical entrance, the exam was for 720 marks (180 questions * 4 marks each). Every candidate from the state of Tamil Nadu was given a full 196 marks as a bonus because the translations from English to Tamil were inaccurate.

Now I need to mention that around 300 marks would fetch a decent seat in a government college.

What the fuck is happening? The one thing they're supposed to conduct every year is messed up too. And who the fuck came up with complicated shit like 7.00 being correct while 7.0 and 7 are wrong? I mean, should the candidate worry about getting the answer or about marking it?

For those who don't know, wrong answers are penalized heavily and there's huge competition.

https://m.timesofindia.com/home/...

https://m.timesofindia.com/india/...

How hard is it for people to understand that rounding individual decimal numbers and then adding them is not the same as adding them all and then rounding?

FFS
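
A tiny counterexample, in case anyone needs convincing: three values of 0.4 each round to 0, but their total of 1.2 rounds to 1. Decimal is used so binary floating point doesn't muddy the example:

```python
from decimal import Decimal

values = [Decimal("0.4"), Decimal("0.4"), Decimal("0.4")]

sum_of_rounded = sum(round(v) for v in values)  # 0 + 0 + 0 = 0
rounded_sum = round(sum(values))                # round(1.2) = 1

print(sum_of_rounded, rounded_sum)  # 0 1
```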

decimal discount = line.Discount - (line.StandardPrice - line.CustomerPrice);

2 fucking hours working out what the fuck this line of code does and why. Fml

Top 12 C# Programming Tips & Tricks

Programming can be described as the process that takes a computing problem from its original formulation to an executable computer program. This process involves activities such as developing understanding, analysis, generating algorithms, verifying the algorithms' requirements (including their accuracy and resource utilization), and coding the algorithms in the chosen programming language. The source code can be written in one or more programming languages. The purpose of programming is to find a series of instructions that can automate solving specific problems or performing a particular task. Programming requires competence in various subjects, including formal logic, understanding of the application, and specialized algorithms.

1. Write Unit Test for Non-Public Methods

Many developers do not write unit tests for non-public methods, because those are invisible to the test project. C# lets you make an assembly's internals visible to other assemblies. The trick is to include //Make the internals visible to the test assembly [assembly: InternalsVisibleTo("MyTestAssembly")] in the AssemblyInfo.cs file.

2. Tuples

Many developers build a POCO class in order to return multiple values from a method. Tuples, introduced in .NET Framework 4.0, can be used instead.

3. Do not bother with Temporary Collections, Use Yield instead

When developers want to pick items from a collection, they may create a temporary list that holds the selected items and then return it.

To avoid the temporary collection, developers can use yield, which returns the results one at a time as the result set is enumerated.

Developers also have the option of using LINQ.

4. Making a retirement announcement

Developers who own redistributable components and want to deprecate a method in the near future can decorate it with the Obsolete attribute to communicate this to their clients:

[Obsolete("This method will be deprecated soon. You could use XYZ alternatively.")]

Upon compilation, a client gets a warning with the message. To fail a client build that uses the deprecated method, pass the additional Boolean parameter as true.

[Obsolete("This method is deprecated. You could use XYZ alternatively.", true)]
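Python has no compiler warning like [Obsolete], but a rough runtime analogue is a decorator that emits a DeprecationWarning. The obsolete decorator and my_component_legacy_method below are hypothetical helpers, not standard-library APIs; warnings.warn, DeprecationWarning and functools.wraps are standard.

```python
import functools
import warnings


def obsolete(message: str):
    """Hypothetical decorator: a runtime stand-in for C#'s [Obsolete(message)]."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # stacklevel=2 attributes the warning to the caller's line.
            warnings.warn(message, DeprecationWarning, stacklevel=2)
            return func(*args, **kwargs)
        return wrapper
    return decorator


@obsolete("This method will be deprecated soon. You could use XYZ alternatively.")
def my_component_legacy_method(master_collection: list[int]) -> int:
    # Hypothetical legacy method; the body is only a placeholder.
    return sum(master_collection)
```

DeprecationWarning is hidden by default in many contexts. To approximate the "fail the build" variant, the warning can be turned into an exception, for example by running Python with -W error::DeprecationWarning.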

5. Deferred Execution While Writing LINQ Queries

When a LINQ query is written in .NET, it is only executed when its result is accessed. This is known as deferred execution. Developers should understand that every time the result set is enumerated, the query is executed again. To prevent this repeated execution, convert the LINQ result to a List (for example with ToList()) once and reuse that list. Below is an example:

public void MyComponentLegacyMethod(List<int> masterCollection)
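The re-execution behaviour can be reproduced in Python with a small class whose __iter__ runs the filter from scratch on every enumeration. DeferredQuery is a hypothetical helper written for this illustration, not a library type; it counts executions so the effect of materializing once (the ToList() idea) is visible.

```python
from typing import Callable, Iterable, Iterator


class DeferredQuery:
    """Hypothetical stand-in for a LINQ query: nothing runs until the query
    is enumerated, and every enumeration re-runs the whole filter."""

    def __init__(self, source: Iterable[int], predicate: Callable[[int], bool]) -> None:
        self.source = source
        self.predicate = predicate
        self.executions = 0  # counts how often the filter pipeline actually ran

    def __iter__(self) -> Iterator[int]:
        self.executions += 1
        for item in self.source:
            if self.predicate(item):
                yield item


numbers = [1, 2, 3, 4, 5]
query = DeferredQuery(numbers, lambda n: n % 2 == 1)  # nothing has run yet

numbers.append(7)          # still picked up: the query has not executed
total = sum(query)         # 1st execution: 1 + 3 + 5 + 7 = 16
count = len(list(query))   # 2nd execution: the filter runs again
odds = list(query)         # 3rd execution: materialize once, then reuse `odds`
```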

6. Explicit Keyword Conversions for Business Entities

Use the explicit keyword to define the conversion of one business entity to another. The conversion method is invoked when an explicit cast is applied in code.

7. Preserving the Exact Stack Trace

In the catch block of a C# program, if an exception is rethrown as shown below and the fault actually occurred in the method ConnectDatabase, the rethrown exception's stack trace only indicates that the fault happened in the method RunDataOperation. This is what happens when a caught exception is rethrown with throw ex;, while a bare throw; keeps the original stack trace.

8. Enum Flags Attribute

Decorating an enum in C# with the Flags attribute lets it be used as a set of bit fields, so developers can combine enum values. One can use the following C# code.

The output for this code will be “BlackMamba, CottonMouth, Wiper”. When the Flags attribute is removed, the output will be 14 instead.
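For comparison, Python's enum.Flag behaves much like a [Flags] enum. The member values 2, 4 and 8 are an assumption (they are consistent with the combined value 14 quoted in the tip), since the original C# snippet is not shown.

```python
from enum import Flag


class Snakes(Flag):
    # Assumed values: each member is a distinct power of two (one bit each).
    BlackMamba = 2
    CottonMouth = 4
    Wiper = 8


combined = Snakes.BlackMamba | Snakes.CottonMouth | Snakes.Wiper
names = ", ".join(m.name for m in Snakes if m in combined)

print(names)           # BlackMamba, CottonMouth, Wiper
print(combined.value)  # 14: the raw integer behind the combination
```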

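9. Enforcing a Base Type for a Generic Type

When developers want to require that the type argument supplied to a generic class implements a particular interface, they can add a where constraint to the class declaration, for example where T : ISomeInterface (ISomeInterface is a placeholder name).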

10. Exposing a Property as IEnumerable Doesn't Make It Read-Only

When a class exposes a List through a property typed as IEnumerable, client code can still cast the property back to a List.

Such client code can then modify the list, for example by adding a new name to it. To avoid this, return the list through AsReadOnly() rather than AsEnumerable().
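The same trap exists in Python: annotating a property as Iterable does not stop a caller from treating the returned object as the underlying list. Returning a tuple snapshot is one way to avoid it; note that this is a copy, whereas AsReadOnly() returns a read-only wrapper over the original list. The Team class and its names are hypothetical.

```python
from typing import Iterable, cast


class Team:
    def __init__(self) -> None:
        self._names = ["Ada", "Linus"]  # hypothetical internal state

    @property
    def names_as_iterable(self) -> Iterable[str]:
        # Only the annotation says "iterable"; the caller gets the real list.
        return self._names

    @property
    def names(self) -> tuple[str, ...]:
        # An immutable snapshot: callers cannot change the internal list.
        return tuple(self._names)


team = Team()
cast(list, team.names_as_iterable).append("Grace")  # mutates team._names

try:
    team.names.append("Grace")  # type: ignore[attr-defined]
except AttributeError:
    pass  # tuples have no append(), so the internal list stays protected
```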

11. Data Type Conversion

Developers often have to convert between data types for different reasons, for example converting a decimal variable that holds a set value to an int.
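In Python, converting a decimal value to an integer likewise forces a choice between truncating and rounding. A minimal sketch with the standard decimal module; the price values are made up for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

price = Decimal("3.99")

truncated = int(price)                 # int() drops the fraction: 3
rounded = int(price.quantize(Decimal("1"), rounding=ROUND_HALF_UP))  # 4
negative = int(Decimal("-3.99"))       # truncates toward zero: -3, not -4
```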

Source: https://freelancer.com/community/...

-

Algolia says:

"So our price widget doesn't allow decimals, you'll have to create a custom widget"

I do it.

"Hey, It's not working and I verified it's applying the filter correctly. I noticed my price is a string in your index, maybe that's incorrect and causing it to not work?"

They say: "Yep, you'll need to run an update to fix that and change all to floats" (charges an arm and a leg for the thousands of index operations needed to update the data type)

I clear the index and send a single one as a test, verifying it's a float by casting it using (float) then var_dumping. It shows "double(3.99)", but when it gets to Algolia, it's 0.

So I contact support.

"Hi, I'm sending across floats like you say but it's receiving it as 0, am I doing something wrong? Here's my code and the result of the var_dump"

They respond: "Looks like you're doing it right, but our log shows us receiving 3.999399593939, maybe check your PHP.ini for "serialize_precision" and make sure it's set to -1"

I check and it's fine, then I realize that var_dump is probably rounding to 2 decimal places, so I change my cast to (float) number_format($row['Price'], 2) and voilà...it works.

Now I've wasted days of paying for their service, a ton of charges for indexing operations, and it was such a simple fix.

If they had thrown an error for the infinite decimal, that would have helped, but instead I had to reach out to find out that was the issue.

#Frustrated.

-

What's it called when you subtract 1 or more from a number and get its decimal component back as the result?

For example, let's say

((x+y)/y) - 1 == x/y

For example

((x+y)/y) == d('1.000041160213365071474861471983496528380123531238424473420976789')

and the right hand side is

(x/y) == d('0.000041160213365071474861471983496528380123531238424473420976789')
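The identity follows from splitting the fraction. Assuming 0 ≤ x < y (consistent with the example, where (x+y)/y = 1.00004...), floor((x+y)/y) = 1, so subtracting 1 leaves what is usually called the fractional part of (x+y)/y:

```latex
\frac{x+y}{y} - 1 = \frac{x}{y} + \frac{y}{y} - 1 = \frac{x}{y}

0 \le x < y \;\Longrightarrow\; 0 \le \frac{x}{y} < 1
\;\Longrightarrow\; \frac{x}{y} = \frac{x+y}{y} - \left\lfloor \frac{x+y}{y} \right\rfloor
```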

Is this trivial, something interesting, or a concept that others are familiar with and I just haven't learned?

-

Need opinions: when your knowledgeable backend-developer colleague chooses 1, 2, 4, 8, 16 as enum values instead of 1, 2, 3, 4, 5 (for roles associated with permissions, which may be combined) in order to be able to do bitwise operations, is it a sound decision for this scenario? Is it a best practice, just as good, or pedantic?
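A minimal Python sketch of why powers of two are used: with one bit per role, OR combines roles, AND tests them, and AND with the complement revokes them. The Role names are hypothetical placeholders, and enum.IntFlag stands in for whatever enum type the backend actually uses.

```python
from enum import IntFlag


class Role(IntFlag):
    # Hypothetical roles; each value is a distinct bit: 1, 2, 4, 8, 16.
    READER = 1
    WRITER = 2
    REVIEWER = 4
    PUBLISHER = 8
    ADMIN = 16


def has_role(granted: Role, wanted: Role) -> bool:
    # AND keeps only the shared bits; if all wanted bits survive, it's granted.
    return (granted & wanted) == wanted


user = Role.READER | Role.WRITER  # OR combines roles: 1 | 2 == 3
user |= Role.REVIEWER             # grant another role: 3 | 4 == 7
user &= ~Role.WRITER              # revoke a role by clearing its bit: 7 -> 5

# With sequential values (1, 2, 3, ...) this breaks: 1 | 2 == 3, so
# "reader plus writer" would be indistinguishable from whatever role is 3.
```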

I want to master bitwise operations but have a hard time grasping them as quickly as logical ones.

-

I'm thinking I know why the approximation graph of my ML goes sideways.

It can never reach the solution set completely because the solution set has decimal places down to 1x10^(-12).

-

I know it's not really related to development, but I got in a discussion on Twitter and one dude tweeted "in science, 1 isn't 1"

And so I was like "mate what? science is highly dependent on math and in math, 1 is kinda always worth 1"

And then this girl comes in and just says:

"it's not true that 1 is always 1 because there's binary code as well"

And I was like totally astonished, like, have you even studied something? 1b = 1d = 1x and it's always 1 in whatever base!

(she even says she's some sort of engineer in her bio)

-

Commas being used as a decimal point are the absolute bane of my damn existence. And what's worse, they are used in CSV files with a semicolon as a delimiter. It is comma separated values ffs, not semicolon separated comma separated random fucking shit that's against the fucking syntax of every damn language. Fuck whichever dimwit thought using commas as a decimal separator was a good idea.
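A minimal sketch of coping with this in Python, assuming a semicolon delimiter, a comma as the decimal separator and a dot as the thousands separator; the sample data and the parse_decimal_comma helper are hypothetical.

```python
import csv
import io
from decimal import Decimal

# Hypothetical sample: ";" separates fields, "," is the decimal separator,
# "." is the thousands separator.
raw = "product;price\nWidget;3,99\nGadget;1.234,50\n"


def parse_decimal_comma(text: str) -> Decimal:
    # Drop thousands separators first, then turn the decimal comma into a dot.
    return Decimal(text.replace(".", "").replace(",", "."))


rows = [
    (record["product"], parse_decimal_comma(record["price"]))
    for record in csv.DictReader(io.StringIO(raw), delimiter=";")
]
```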

-

!rant

I'm a beginner programmer. So when my supervisor asked me to write a report and test against 50 coordinates written in little-endian hex form in Excel, I got lazy and wrote some code that gives me all the coordinates in decimal so I can enter them into my Excel sheet.
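A short script along those lines, sketched in Python. It assumes each coordinate is a hex string of little-endian bytes; whether those bytes encode a signed integer or an IEEE 754 float is not stated in the post, so both readings are shown. The function names are hypothetical.

```python
import struct


def le_hex_to_int(hex_str: str, signed: bool = True) -> int:
    """Read a little-endian hex string such as '78563412' as an integer."""
    return int.from_bytes(bytes.fromhex(hex_str), byteorder="little", signed=signed)


def le_hex_to_float32(hex_str: str) -> float:
    """Read 4 little-endian bytes as an IEEE 754 single-precision float."""
    (value,) = struct.unpack("<f", bytes.fromhex(hex_str))
    return value


print(le_hex_to_int("78563412"))      # 305419896, i.e. 0x12345678
print(le_hex_to_float32("0000803f"))  # 1.0
```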

Anyone know an easier method tho?

-

Why do we still use floating-point numbers? Why not use fixed-point?

Floating-point has precision errors, and for some reason each language has a different level of error, despite all running on the same processor.

Fixed-point numbers don't have precision issues (unless you get way too big, but then you have another problem), and while they might be a bit slower, I don't think there is enough of a difference in speed to justify the (imho) stupid, continued use of floating-point numbers.

Did you know some (low-power) processors don't have a floating-point unit? That effectively makes it pointless to use floating-point; it offers no advantage over fixed-point.

Please, use a type like Decimal, or suggest that your language of choice adds support for it, if it doesn't yet.
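To illustrate the difference in Python: the standard decimal module does base-10 arithmetic, so 0.1 + 0.2 comes out exactly 0.3. Strictly speaking, Python's Decimal is decimal floating point with configurable precision rather than fixed point, and it still has to round values such as 1/3.

```python
from decimal import Decimal

float_sum = 0.1 + 0.2                          # binary floating point
decimal_sum = Decimal("0.1") + Decimal("0.2")  # base-10 arithmetic
# Build Decimals from strings: Decimal(0.1) would copy the float's binary error.

print(float_sum == 0.3)               # False: 0.1 and 0.2 have no exact binary form
print(decimal_sum == Decimal("0.3"))  # True

# Decimal still rounds values with no finite base-10 form,
# to 28 significant digits under the default context.
third = Decimal(1) / Decimal(3)
print(third * 3 == 1)                 # False
```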

There's no need to suffer from floating-point accuracy issues.

-

Hang with me! This is *not* a math shitpost, I repeat, it is NOT a math shitpost, not entirely anyway.

It appears that, for products of two non-trivial factors, there is a real number n (well, a rational number anyway) such that p/n = i (some number in the set of integers), whose factor chain is apparently no greater than floor(log(log(p))**2)-2, and whose largest factor is never greater than p^(1/4).

And that this number is at least derivable, laboriously, with the following:

where p=a*b

https://pastebin.com/Z4thebha

And assuming you have the factors of p/z = jkl..

then instead of doing

p/(jkl..) = z

you can do

p-(jkl) to get the value of [result] whose index is a-1

Getting the actual factor tree of p/z is another matter, but it's a start.

Edit: you have to provide your own product.

Preferably import Decimal first.

-

How come, when implementing merge sort, mid doesn't need to deal with odd/even division?

I know int will always round down if there is a decimal part, but how does it still cover the whole array?

Full code:

https://gist.github.com/allanx2000/...
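A minimal top-down merge sort sketch in Python (not the linked gist's code). The key point is that floor division plus half-open slices [0, mid) and [mid, n) always meet exactly at mid, so odd and even lengths are both covered with nothing skipped or duplicated.

```python
def merge(left: list[int], right: list[int]) -> list[int]:
    """Merge two already-sorted lists into one sorted list."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One side is exhausted; whatever remains on the other side is already sorted.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def merge_sort(items: list[int]) -> list[int]:
    """Return a new sorted list using top-down merge sort."""
    if len(items) <= 1:
        return items[:]
    # Floor division: for 7 items mid == 3, giving [0, 3) and [3, 7).
    # The half-open ranges meet exactly at mid, so for odd or even lengths
    # every index lands in exactly one half.
    mid = len(items) // 2
    return merge(merge_sort(items[:mid]), merge_sort(items[mid:]))
```

With inclusive bounds (lo..hi) the same idea is usually written as mid = (lo + hi) // 2 with halves lo..mid and mid+1..hi.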

I guess in general, array indexing that involves dynamic cutoffs always confuses me.

How do you think about them without having to try things out on paper?

-

Has anyone else used the Decimal module in python?

And if so, do you know why it returns

"AttributeError: type object 'decimal.Decimal' has no attribute 'power'"?

According to the documentation

https://docs.python.org/3/library/...

...there's a power() function.

Doing

decimal.power()

Decimal.power()

power(x, y)
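For reference, the decimal module documents power() as a method of the Context class, not of Decimal or of the module itself, which would explain the AttributeError for all three calls. A minimal sketch of working alternatives; the operand values are arbitrary.

```python
import decimal
from decimal import Decimal

x = Decimal("1.5")
y = Decimal("2")

# power() is a method of the Context object returned by getcontext().
ctx = decimal.getcontext()
via_context = ctx.power(x, y)            # Decimal('2.25')

# The ** operator and the built-in pow() use the current context as well.
via_operator = x ** y                    # Decimal('2.25')
via_builtin = pow(x, y)                  # Decimal('2.25')

# Three-argument form: (3 ** 4) % 5, which requires integral operands.
modular = ctx.power(Decimal(3), Decimal(4), Decimal(5))  # Decimal('1')
```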

No matter how I call it, it always returns an error indicating power() doesn't exist, and I'm scratching my head.

-

Is there any reason why the actual fuck the OracleDataAccess Driver for C# returns everything as either decimal or string, and anything else is a TypeConversionError? Why does it even have the functionality to return an int if that can literally never happen?

-

What's your idea of a perfect number?

Mine is:

default decimal, prefix for binary, octal, hex

Integers in natural notation with strictly positive exponent

All bases allow natural notation, where the exponent is always a decimal number and represents the power of the base (0b101e3 = 0b101000)

Floats in all bases allow a combination of natural notation and dot notation

Underscores allowed anywhere except the beginning and end, for easier reading

-

I'm beginning to feel like any kind of specific approximation via neural networks is a myth: that if you can't reduce the output to simple categorical values that can be broadly interpreted between two points, it doesn't work.

I have some questions and they don't seem to be getting answered about the design of the net. How many layers should I use? How many neurons per layer? How does this relate to the number of desired quantitative scalar outputs I'm looking to create? Even if they are normalized, they can vary GREATLY, and will if I'm approximating the output of several mathematical expressions. Based on this, the expected error ranges of these numbers, and how many possible major digits could be produced within the domain of the variable inputs being introduced, how many neurons per layer? What does having more layers do? In PyTorch there don't seem to be a lot of layer types per se, but there are a crap ton of activation functions. Should I just be using these at the tail end, or should they actually be inserted between layers so the input of the next layer passes through another series of activation functions? What does this do to the range of the output?

Do I need to be a mathematician to do this?

The successes I remember removed quantifiable scalars entirely from the output, meaning that I could interpret successful results from ranges of decimal points.

But I've had no success with actual multivariable regression as of yet, even when there are only 2 input variables on limited value ranges, e.g. [0, 100] and [0, 2pi].

And then there are training epochs to avoid overfitting, and a reasonable expectation of how many batches it takes before quality results start to form.

-

Need help for a task

I need to write code in JavaScript that decrements a user value, e.g. 0.2 > 0.1 > 0.09 > 0.08, etc.

How do I decrement 0.1 to 0.09?

Thanks.
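The post asks for JavaScript; to keep one language across these examples, here is the same idea sketched with Python's decimal module. Plain binary floats can leave small rounding errors when subtracting values like 0.01, which is why base-10 arithmetic is used here. The step rule (one unit of the leading digit, shrinking tenfold at 0.1, 0.01, ...) is an assumption read off the example sequence, and next_lower is a hypothetical name.

```python
from decimal import Decimal


def next_lower(value: Decimal) -> Decimal:
    """Step down by one unit of the leading digit: 0.2 -> 0.1 -> 0.09 -> 0.08.

    Assumption: when the value is exactly one unit of its leading digit
    (0.1, 0.01, ...), the step shrinks tenfold so the value never hits 0.
    """
    if value <= 0:
        raise ValueError("value must be positive")
    step = Decimal(1).scaleb(value.adjusted())  # e.g. 0.09 -> step 0.01
    if value == step:
        step = step.scaleb(-1)                  # e.g. 0.1 -> step 0.01
    return value - step


sequence = [Decimal("0.2")]
for _ in range(4):
    sequence.append(next_lower(sequence[-1]))
# sequence now holds 0.2, 0.1, 0.09, 0.08, 0.07
```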