Search - "cache everything"

-

I absolutely HATE "web developers" who call you in to fix their FooBar'd mess, yet can't stop themselves from dictating what you should and shouldn't do, especially when they have no idea what they're doing.

So I get called in to a job improving the performance of a Magento site (and let's just say I have no love for Magento for a number of reasons) because this "developer" enabled Redis and expected everything to be lightning fast. Maybe he thought "Redis" was the name of a magical sorcerer living in the server. A master conjurer capable of weaving mystical time-altering spells to inexplicably improve the performance. Who knows?

This guy claims he spent "months" trying to figure out why the website couldn't load faster than 7 seconds at best, and his employer is demanding a resolution so he stops losing conversions. I usually try to avoid Magento because of all the headaches that come with it, but I figured "sure, why not?" I mean, he built the website less than a year ago, so how bad can it really be? Well...let's see how fast you all can facepalm:

1.) The website was built brand new on Magento 1.9.2.4...what? I mean, if this were built a few years back, that would be a different story, but building a fresh Magento website in 2017 in 1.x? I asked him why he did that...his answer absolutely floored me: "because PHP 5.5 was the best choice at the time for speed and performance..." What?!

2.) The ONLY optimization done on the website was Redis cache being enabled. No merged CSS/JS, no use of a CDN, no image optimization, no gzip, no expires rules. Just Redis...

3.) To say the website was poorly coded would be an understatement. This wasn't the worst coding I've seen, but it was far from acceptable. There was no organization whatsoever. Templates and skin assets were being called from 12 different locations on the server, which made tracking down a snippet to fix downright annoying.

But not only that, the home page itself had 83 custom database queries to load the products on the page. He said this was so he could load products from several different categories and custom tables to show on the page. I asked him why he didn't just call a few join queries, and he had no idea what I was talking about.
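To make the "few join queries" point concrete, here is a minimal sketch using Python's built-in sqlite3. The schema, table names and function names are made up for illustration (this is not Magento's schema); it only shows the general pattern of replacing one-query-per-category loops with a single JOIN.

```python
import sqlite3

# Hypothetical schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE categories (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE products (id INTEGER PRIMARY KEY, category_id INTEGER, name TEXT);
    INSERT INTO categories VALUES (1, 'shoes'), (2, 'hats'), (3, 'bags');
    INSERT INTO products VALUES
        (1, 1, 'sneaker'), (2, 1, 'boot'), (3, 2, 'cap'), (4, 3, 'tote');
""")


def home_page_products_many_queries(conn, category_ids):
    """Two queries per category: round trips grow with the number of categories."""
    rows = []
    for cid in category_ids:
        (cat_name,) = conn.execute(
            "SELECT name FROM categories WHERE id = ?", (cid,)
        ).fetchone()
        for (prod_name,) in conn.execute(
            "SELECT name FROM products WHERE category_id = ? ORDER BY id", (cid,)
        ):
            rows.append((cat_name, prod_name))
    return rows


def home_page_products_one_join(conn, category_ids):
    """The same rows from a single JOIN, however many categories are requested."""
    placeholders = ",".join("?" * len(category_ids))
    sql = (
        "SELECT c.name, p.name FROM products p "
        "JOIN categories c ON c.id = p.category_id "
        f"WHERE c.id IN ({placeholders}) ORDER BY c.id, p.id"
    )
    return conn.execute(sql, category_ids).fetchall()
```

Both functions return the same rows when the category IDs are passed in ascending order; the difference is only in how many statements hit the database.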

4.) Almost every image on the website was a .PNG file, 2000x2000 px and lossless. The home page alone was 22MB just from images.

There were several other issues, but those 4 should be enough to paint a good picture. The client wanted this all done in a week for less than $500. We laughed. But we agreed on the price only because of a long relationship and because they have some referrals they got us in the door with. But we told them it would get done on our time, not theirs. So I copied the website to our server as a test bed and got to work.

After numerous hours of bug fixes, recoding queries, disabling Redis and opting for a larger InnoDB cache (more on that later), image optimization, js/css/html combining, render-unblocking and minification, lazyloading images, tweaking Magento to work with PHP 7, installing OPcache and setting up basic htaccess optimizations, we smashed the loading time down to 1.2 seconds total, and most of that time was for external JavaScript plugins deemed "necessary". Time to First Byte went from a staggering 2.2 seconds to about 45ms. Needless to say, we kicked its ass.

So I show their developer the changes and he's stunned. He says he'll tell the hosting provider to set up a new server that we can migrate the optimized site to and then cut over, because taking the live website down for maintenance for even an hour or two in the middle of the night is "unacceptable".

So trying to be cool about it, I tell him I'd be happy to configure the server to the exact specifications needed. He says "we can't do that". I look at him confused. "What do you mean we 'can't'?" He tells me that even though this is a dedicated server, the provider doesn't allow any access other than a jailed shell account and cPanel access. What?! This is a company averaging 3 million+ per year in revenue. Why don't they have an IT manager overseeing everything? Apparently for them, they're too cheap for that, so they went with a "managed dedicated server", "managed" apparently meaning "you only get to use it like a shared host".

So after countless phone calls arguing with the hosting provider, they agree to make our changes. Then the client's developer starts getting nasty out of nowhere. He says my optimizations are not acceptable because I'm not using Redis cache, and now the client is threatening to walk away without paying us.

So I guess the overall message from this rant is not so much about the situation, but the developer and countless others like him that are clueless, but try to speak from a position of authority.

If we as developers stop challenging each other in a measuring contest and learn to let go when we need help, we can get a lot more done and avoid losing clients. </rant>14 -

Boss: we have to cache everything.

Me: but some parts of the page are dynamic, we cannot cache them

Boss: EVERYTHING!!!!

Few days later...

Boss: This part of the page displays the wrong content!

---------------------------

Well duh. If content changes with every request caching is probably not a good idea...9 -

Boss wants to scale our webservers because it seems they're having performance/capacity issues....

I'VE BEEN TELLING HIM FOR WEEKS IT'S NOT THE SERVERS!!! IT'S THE FACT THAT EVERY SINGLE QUERY HITS A SINGLE MONGODB... AND NO CACHE EITHER... AND THE DB CAN'T BE ENTIRELY LOADED INTO MEMORY AS IT'S TOO BIG FOR RAM ON A SINGLE SERVER...

HOW THE FUCK CAN YOU SCALE IF EVERYTHING HAS A DEPENDENCY ON 1 NON-DISTRIBUTED DATABASE?6 -

!!oracle

I'm trying to install a minecraft modpack to play with a friend, and I'm super psyched about it. According to the modpack instructions, the first step is to download the java8 jre. Not sure if I actually need it or not, but it can download while I'm doing everything else, so I dutifully go to the download page and find the appropriate version. The download link does point to the file, but redirects to a login page instead. Apparently I need an oracle account to download anything on their site. stupid.

So I make an account. It requires my life story, or at least full name and address and phone number. stupid. So my name is now "fuck off" and I live in Hell, Michigan. My email is also "gofuckyourself" because I'm feeling spiteful. Also, for some reason every character takes about 3/4ths of a second to type, so it's very slow going. Passwords also cannot contain spaces, which makes me think they're doing some stupid "security" shenanigans like custom reversible encryption with some 5th grade math. or they're just stupid. Whatever, I make the stupid account.

Afterwards, I try to log in, but apparently my browser-saved credentials are wrong? I try a few more times, try enabling all of the javascripts, etc. No beans. Okay, maybe I can't use it until I verify the email? That actually makes some sense. Fine, I go check the throwaway inbox. No verification email. It's been like five minutes, but it's oracle so they probably just failed at it like everything else, so I try to have them resend the email. I find the resend link, and try it. Every time I enter my email address, though, it either gives me a validation error or a server error. I try a few more times, and give up. I try to log in again; no dice. Giving up, I go do something else for a while.

On a whim later, I check for the verification email again. Apparently it just takes bloody forever, but it did show up. Except instead of the first name "Fuck" I entered, I'm now "Andrew", apparently. okay.... whatever. I click the verify button anyway, and to my surprise it actually works, and says that I'm now allowed to use my account. Yay!

So, I go back to the login page (from the download link) and enter my credentials. A new error appears! I cannot use redirects, apparently, and "must type in the page address I want to visit manually." huh? okay, i go to the page directly, and see the same bloody error because of course i do because oracle fucking sucks. So I close the page, go back to the download list, click the link, wait for the login page redirect (which is so totally not allowed, apparently, except it works and manual navigation does not. yay backwards!), and try to log in.

Instead of being presented with an error because of the redirect, it lets me (try to) log in. But despite using prefilled creds (and also copy/pasting), it tells me they're invalid. I open a new tab container, clear the cache (just to be thorough), and repeat the above steps. This time it redirects me to a single signon server page (their concept of oauth), and presents me with a system error telling me to contact "the Administrator." -.- Any second attempts, refreshes, etc. just display the same error.

Further attempts to log in from the download page fail with the same invalid credentials error as before.

Fucking oracle and their reverse Midas touch.10 -

Well... In over 15 years of programming I've had a lot of PHP / HTML projects where I asked myself: What psychopath could have written this?

(PHP haters: Just go trolling somewhere else...)

In my current job I've "inherited" a project which had been running for ~15 years. Code base looked solid to me... (Article system for ERP, huge company / branches system, lot of other modules for internal use... All in all: Not small.)

The original goal was to port to PHP 7 and to give it a fresh layout. Seemed doable...

The first days passed by - porting to an asset system, cleaning up the base system (login / logout / session & cookies... you know the drill).

And that was where it all went haywire.

I really have no clue how someone could have been so ignorant to not even think twice before setting cookies or doing other "header related" stuff without at least checking the result codes...

Basically the authentication / permission system was fully fucked up. It relied on redirecting the user via header modification to the login page with an error set in a GET variable...

Uh boy. That ain't funny.

Ported to session flash messages, checked if headers were sent, hard exit otherwise - redirect.

But then I got to the first layers of the whole "OOP class" related shit...

It's basically "whack a mole".

Whoever wrote this was dumb and ignorant enough to build up a daisy chain of commands for fixing corner cases of corner cases of the regular command... If you don't understand what I mean, take the following example:

Permissions are based on group (accumulation of single permissions) and single permissions - to get all permissions from a user, you need to fetch both and build a unique array.

Well... The "names" for permissions are not unique. I'd never expected someone to be so stupid. Yes. You could have two permissions named "article_search" - while relying on uniqueness.

All in all, all permissions are fetched once for the lifetime of the script and stored in a cache...

To fix this corner case… There is another function that fetches the results from the cache and returns simply "one" of the rights (getting permission array).

In case you need to get the ID of the other (yes... two identifiers used in the project for permissions - name and ID (auto increment key))...

Let's write another function on top of the function on top of the function.
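For contrast, a minimal sketch of what the permission merge could look like if the auto-increment ID is treated as the only identity and names are allowed to repeat. The `Permission` class and both helper functions are hypothetical, not taken from the project described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Permission:
    id: int    # auto-increment key: the only thing assumed to be unique
    name: str  # human-readable label: may legitimately repeat


def effective_permissions(group_perms, single_perms):
    """Merge group and single permissions into one dict keyed by ID."""
    by_id = {}
    for perm in list(group_perms) + list(single_perms):
        by_id[perm.id] = perm  # duplicates by ID collapse, duplicates by name survive
    return by_id


def ids_for_name(perms_by_id, name):
    """Return every ID carrying this name instead of silently picking 'one' of them."""
    return sorted(pid for pid, perm in perms_by_id.items() if perm.name == name)
```

With one structure keyed by ID, looking up by name becomes a plain filter rather than another function layered on top of the cache.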

My brain is seriously in deep fried mode.

Untangling this mess is basically like getting pumped up with pain killers and trying to solve logic riddles - it just doesn't work....

So... Instead of just redesigning and porting to PHP 7, I'm basically rewriting the whole base system to MVC, porting and touching every script, untangling this dumb shit of "functions" / "OOP" [or whatever you call this garbage] and then hoping everything works...

A huge thanks to AURA. http://auraphp.com/

It's incredibly useful in this case, as it has no dependencies and makes it very easy to get a solid ground without writing a whole framework by myself.

Amen.2 -

I was working on a site just moments ago and everything was fine. Then I move my laptop from my living room to my bedroom and on refreshing the page everything seems broken! Disorganized cards, and everything looks 10x bigger. I panic because I was supposed to deploy it on Thursday and I was just doing some final touches and angular has given me one hell of a time. I fucking rolled back all my commits to yesterday and cleared Chrome's cache....still nothing works. Then I realized there's a new button on my address tab and on clicking it, it showed I had (accidentally) zoomed in to a fucking 175% when moving the laptop *facepalm*. On resetting it everything was ok. Now I have lost all of today's commits and my chrome cache. One box of tic-tacs down and I still can't overcome my rage... So I wrote this rant 😠😩😩

I need a stress ball😩😩

7 -

I messed up carelessly in production. Learnt how SQL queries bite you in the ass when they know you are under pressure.

Was hosting an online quiz kinda thing during my college techfest. Tens of thousands of people participating.

Using MySQL as the database, and thousands of queries were being executed. Everyone was pretty excited as the event had just opened up.

None of the teams could solve one particular level. Turns out the solution was wrong and I was asked by the organisers to change the solution for that particular level. Usual stuff, right?

Was too lazy to open up the web UI for the back office and so, straight ahead logged in to the MySQL server and ran the UPDATE query on the table consisting of the solutions.

It had been a couple of hours and the organisers came to me with a weird problem. There were no changes in the scoreboard for the last two hours. Everyone was stuck wherever they were. Weird, right?

I then realized.

Fk.

In that dreaded query, I had only run

UPDATE `qa` SET answer = 'something'

leaving out the where clause, specifying the question to update, like

WHERE qno=13

As a result, solutions to all the questions were updated to the same answer. After hastily fixing everything back, I had the dreaded conversation.
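One cheap guard against this kind of slip is to run the statement in a transaction and refuse to commit unless it touched the expected number of rows. A minimal sketch using Python's built-in sqlite3 rather than MySQL; the `guarded_update` helper is hypothetical and the `qa` / `qno` / `answer` names simply mirror the query above.

```python
import sqlite3


def guarded_update(conn, sql, params=(), expected_rows=1):
    """Run an UPDATE and commit only if it touched exactly expected_rows rows."""
    cur = conn.execute(sql, params)  # sqlite3 opens a transaction implicitly here
    touched = cur.rowcount
    if touched != expected_rows:
        conn.rollback()  # undo the statement, e.g. when the WHERE clause is missing
        raise RuntimeError(
            f"expected {expected_rows} row(s), statement touched {touched}; rolled back"
        )
    conn.commit()
    return touched
```

Called with `"UPDATE qa SET answer = ? WHERE qno = ?"` it commits a single-row change; called without the WHERE clause on a table with more than one row, it rolls back and raises instead of overwriting every answer.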

Org: What was the problem?

Me: It was the cache.

Org: Damn thing. Always messes up.

Me: *sheepishly* yeah

Probably the most embarrassing moment in my life, wrt coding 😑4 -

I had spent the last year working on an online store powered by WooCommerce with over 100k products from various suppliers. This online store utilized a custom API that would take the various formats that suppliers offer their inventory in and make them consistent. Now everything was going swimmingly initially, but then I began adding more and more products using a plug-in called WP All Import. I reached around 100k products and the site would take up to an entire minute to load, sometimes timing out. I got desperate so I installed several caching plugins, but to no avail. The site was originally only supposed to take three to four months but ended up taking an entire year. Then, just yesterday, I found out what went wrong and why this WooCommerce website with all of these optimizations was still taking anywhere from 60 to 90 seconds to load, or just timing out entirely. I had initially thought that I needed a beefier server so I moved it to a high CPU DigitalOcean VM. While this did help a little bit, the site was still very slow and now I had very high CPU usage, high RAM usage and high disk IO. I was seriously stumped: the Apache process was using a high amount of CPU and IO, along with MySQL as well. It wasn't until I started digging deeper into the database that I actually found out what the issue was. As I was loading the site I would run 'SHOW PROCESSLIST' in the SQL terminal, and I began to notice a very significant load time for one of the tables, so I went to check it out. I ran a select all query on that particular table just to see how full it was, and SQL returned an error saying that I had exceeded the maximum packet size. So I was like okay what the fuck...

So I exited MySQL and re-entered it, this time with a higher packet size. I ran a query that would count how many rows were in this particular table and the number came out to being in the millions. I was surprised, and what's worse is that this table belonged to a plugin that I had attempted to use early in the development process to cache the site. The plugin was deactivated but apparently it had left PHP files within the wp-content directory outside of the actual plugin directory, so it was still executing scripts even though the plugin itself was disabled. Basically every time I would change anything on the site, it would recache the whole thing, and it didn't delete any old records. So 100k+ products caching on saves with no garbage collection... You do the math, it's gonna be a heavy ass database. Not only that but it was serialized data, so when it did pull this metric shit ton of spaghetti from the database, PHP then had to deserialize it. Hence the high ass CPU load. I had caching enabled on the MySQL end of things so that ate the RAM. I was really desperate to get this thing running.
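The failure mode described above (a new cache row on every save, nothing ever deleted) can be avoided with two small habits: overwrite by key and prune expired rows. A minimal sketch with Python's built-in sqlite3; the `page_cache` table and the function names are hypothetical and do not reflect how the plugin in question actually worked.

```python
import sqlite3
import time


def make_cache(conn):
    """Create a cache table where each key can exist only once."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS page_cache ("
        "key TEXT PRIMARY KEY, payload TEXT NOT NULL, expires_at REAL NOT NULL)"
    )


def cache_put(conn, key, payload, ttl_seconds, now=None):
    """Overwrite the entry for key, then delete everything that has expired."""
    now = time.time() if now is None else now
    conn.execute(
        "INSERT OR REPLACE INTO page_cache (key, payload, expires_at) VALUES (?, ?, ?)",
        (key, payload, now + ttl_seconds),
    )
    conn.execute("DELETE FROM page_cache WHERE expires_at <= ?", (now,))
    conn.commit()


def cache_get(conn, key, now=None):
    """Return the cached payload, or None if it is missing or expired."""
    now = time.time() if now is None else now
    row = conn.execute(
        "SELECT payload FROM page_cache WHERE key = ? AND expires_at > ?", (key, now)
    ).fetchone()
    return row[0] if row else None
```

Re-saving the same product replaces its row instead of adding another one, so the table size is bounded by the number of live keys rather than the number of saves.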

Honest to God the main reason why this website took so long was because the load times made it miserable to work on. I just thought that the hardware that I had the site on was inadequate. I had initially started the development on a small Linux VM which apparently wasn't enough, which is why I moved it to DigitalOcean which also seemed to not be enough, so from there I moved to a dedicated server which still didn't seem to be enough. I was probably a few more 60-second wait times or timeouts from recommending a server cluster to my client, who I know would not be willing to purchase it. The client who I promised would have this site completed in 3 months and who has waited a year. Seriously, I would tell people the struggles that I would go through with this particular site and they would just tell me to just drop the site; just take the money, just take the loss. I refused to, this was really the only thing that was kicking my ass. I present myself as this high-and-mighty developer like I'm just really good at what I do but then I have this WordPress site that's just beating the shit out of me for a year. It was a very big learning experience and it was also very humbling, it made me realize that I really don't know as much as I think I might. It was evidence that there is still so much more to learn out there. I did learn a lot from that experience, especially about optimizing websites and the different methods to do that, particularly on the server side, and I'll be able to utilize this knowledge in the future.

I guess the moral of the story is, never really give up. Ultimately things might get so bad that you're running on hopes and dreams. Those experiences are generally the most humbling. Now I can finally present the site that I am basically a year late on to the client who will be so happy that I did not give up on the project entirely. I'll have experienced this feeling of pure euphoria, and help the small business significantly grow their revenue. Helping others is very fulfilling for me, even at my own expense.

Anyways, gonna stop ranting. Running out of characters. If you're still here... Ty for reading :')7 -

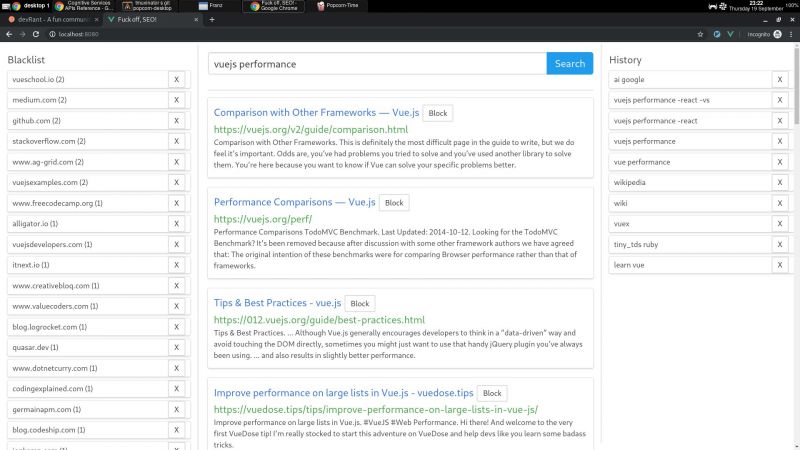

I wrote a node + vue web app that consumes bing api and lets you block specific hosts with a click, and I have some thoughts I need to post somewhere.

My main motivation is that the search results I've been getting from the big search engines are seriously lacking in quality. The SEO situation right now is very complex but the bottom line is that there is a lot of white hat SEO abuse.

Commercial companies are fucking up the internet very hard. Search results have become way too profit oriented and thus not neutral. Personal blogs are becoming very rare. Information is losing quality and sites are losing identity. The internet is consolidating.

So, I decided to write something to help me give this situation the middle finger.

I wrote this because I consider the ability to block specific sites a basic universal right. If you were ripped off by a website or you just don't like it, then you should be able to block said site from your search results. It's not rocket science.

Google used to have this feature integrated but they removed it in 2013. They also had an extension that did this client side, but they removed it in 2018 too. We're years past the time where Google forgot their "Don't be evil" motto.

AFAIK, the only search engine on earth that lets you block sites is millionshort.com, but if you block too many sites, the performance degrades. And the company that runs it is a for profit too.

There is a third party extension that blocks sites called uBlacklist. The problem is that it only works on google. I wrote my app so as to escape google's tracking clutches, ads and their annoying products showing up in between my results.

But aside from that, uBlacklist does the same thing as my app, including the limitation that this isn't an actual search engine; it's just filtering search results after they are generated.

This is far from ideal, because filtering before the results are generated would be much preferable.

But developing a search engine is prohibitively expensive to both index and rank pages for a single person. Which is sad, but can't do much about it.

I'm also thinking of implementing the ability to promote certain sites, the opposite of blocking, so these promoted sites would get more priority within the results.

I guess I would have to move the promoted sites from all the pages I fetched to the first page/s, but client side.

But this is suboptimal compared to having actual access to the rank algorithm, where you could promote sites in a smarter way, but again, I can't build a search engine by myself.
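The block and promote ideas above amount to a post-filter plus a stable reorder. A minimal sketch in Python (the app itself is node + vue, so this is just an illustration), assuming each result is a dict with a `url` key; that shape is hypothetical and not the actual Bing API response format.

```python
from urllib.parse import urlparse


def host_of(url):
    """Lower-cased hostname of a URL, or an empty string if it has none."""
    return (urlparse(url).hostname or "").lower()


def curate(results, blocked_hosts, promoted_hosts):
    """Drop results from blocked hosts, then move promoted hosts to the front.

    sorted() is stable, so the engine's original ranking is preserved
    within the promoted group and within the remaining group.
    """
    kept = [r for r in results if host_of(r["url"]) not in blocked_hosts]
    return sorted(kept, key=lambda r: host_of(r["url"]) not in promoted_hosts)
```

Because this runs after the results come back, it can only reshuffle what the engine already returned, which is exactly the limitation described above.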

I'm using mongo to cache the results, so with a click of a button I can retrieve the results of a previous query without hitting bing. So far, a couple of queries don't seem to cause any performance or space issues.

On using bing: bing is basically the only reliable API option I could find that was hobby cost worthy. Most microsoft products are usually my last choice.

Bing is giving me a 7 day free trial of their search API until I register a CC. They offer a free tier, but I'm not sure if that's only for these 7 days. Otherwise, I'm gonna need to pay like 5$.

Paying or not, having to use a CC to use this software I wrote sucks balls.

So far the usage of this app has resulted in me becoming more critical of sites and finding sites of better quality. I think overall it helps me to become a better programmer, all the while having better protection of my privacy.

One downside is that I'm the only one curating for myself, whereas I could benefit from the block/promote lists of other people that I trust.

I will git push it somewhere at some point, but it does require some more work:

I would want to add a docker-compose script to make it easy to start, and I didn't write any tests unfortunately (I did use eslint for both apps, though).

The performance is not excellent (the app has not experienced blocks so far, but it does make the coolers spin after a bit) because the algorithms I wrote were very POC.

But it took me some time to write it, and I need to catch some breath.

There are other more open efforts that seem to be more ethical, but they are usually hard to use or just incomplete.

commoncrawl.org is a free index of the web. one problem I found is that it doesn't seem to index everything (for example, it doesn't seem to index the blog of a friend I know that has been writing for years and is indexed by google).

it also requires knowledge on reading warc files, which will surely require some time investment to learn.

it also seems kinda slow for responses,

it is also generated only once a month, and I would still have little idea on how to implement a pagerank algorithm, let alone code it.

4 -

Want to make someone's life a misery? Here's how.

Don't base your tech stack on any prior knowledge or what's relevant to the problem.

Instead design it around all the latest trends and badges you want to put on your resume because they're frequent key words on job postings.

Once your data goes in, you'll never get it out again. At best you'll be teased with little crumbs of data but never the whole.

I know, here's a genius idea: instead of putting data into a normal database and then using a cache, let's put it all into the cache, and by the way it's a volatile cache.

Here's an idea. For something as simple as a single log, let's make it use a queue that goes into a queue that goes into another queue that goes into another queue, all of which are black boxes. No rhyme or reason, queues are all the rage.

Have you tried: Let's use a newfangled tangle, trust me it's safe, INSERT BIG NAME HERE uses it.

Finally it all gets flushed down into this subterranean cunt of a sewerage system and good luck getting it all out again. It's like hell except it's all shitty instead of all fiery.

All I want is to export one table, a simple log table with a few GB to CSV or heck whatever generic format it supports, that's it.

So I run the export table to file command and off it goes only less than a minute later for timeout commands to start piling up until it aborts. WTF. So then I set the most obvious timeout setting in the client, no change, then another timeout setting on the client, no change, then i try to put it in the client configuration file, no change, then I set the timeout on the export query, no change, then finally I bump the timeouts in the server config, no change, then I find someone has downloaded it from both tucows and apt, but they're using the tucows version so its real config is in /dev/database.xml (don't even ask). I increase that from seconds to a minute, it's still timing out after a minute.

In the end I have to make my own and this involves working out how to parse non-standard binary formatted data structures. It's the umpteenth time I have had to do this.

These aren't some no name solutions and it really terrifies me. All this is doing is taking some access logs, storing them in one place and then indexing them by timestamp. These things are all meant to be blazing fast but grep is often faster. How the hell is such a trivial thing turned into a series of one nightmare after another? Things that should take a few minutes take days of screwing around. I don't have access logs any more because I can't access them anymore.

The terror of this isn't that it's so awful, it's that all the little kiddies doing all this jazz for the first time and using all these shit wipe buzzword driven approaches have no fucking clue it's not meant to be this difficult. I'm replacing entire tens of thousands to million line enterprise systems with a few hundred lines of code that's faster, more reliable and better in virtually every measurable way time and time again.

This is constant. It's not one offender, it's not one project, it's not one company, it's not one developer, it's the industry standard. It's all over open source software and all over dev shops. Everything is exponentially becoming more bloated and difficult than it needs to be. I'm seeing people pull up a hundred cloud instances for things that'll be happy at home after a few minutes to a week of optimisation effort. Queries that are N*N and would only take a few minutes to turn into LOG(N), but instead people rent a fucking off huge ass SQL cluster that not only costs gobs of money but takes a ton of time to maintain and configure, which isn't going to be done right either.
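As a small illustration of the N*N versus LOG(N) point, here is a sketch of looking up log entries by timestamp. It assumes the entries are already sorted by timestamp and held in memory as `(timestamp, message)` tuples; both function names are made up for this example.

```python
from bisect import bisect_left, bisect_right


def entries_between_scan(entries, start, end):
    """Linear scan: every lookup walks all N entries, so N lookups cost about N*N."""
    return [e for e in entries if start <= e[0] <= end]


def entries_between_bisect(entries, timestamps, start, end):
    """Binary search on the sorted timestamps: about log N steps to find the range."""
    lo = bisect_left(timestamps, start)
    hi = bisect_right(timestamps, end)
    return entries[lo:hi]
```

Both return the same slice; the second only needs `timestamps = [e[0] for e in entries]` built once up front, which is the in-memory equivalent of adding an index instead of renting more hardware.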

I think most people are bullshitting when they say they have impostor syndrome but when the trend in technology is to make every fucking little trivial thing a thousand times more complex than it has to be I can see how they'd feel that way. There's so bloody much you need to do that you don't need to do these days that you either can't get anything done right or the smallest thing takes an age.

I have no idea why some people put up with some of these appliances. If you bought a dish washer that made washing dishes even harder than it was before you'd return it to the store.

Every time I see the terms enterprise, fast, big data, scalable, cloud or anything of the like I bang my head on the table. One of these days I'm going to lose my fucking tits.10 -

I was already about to hit my head against the wall: was trying to install nginx all the time, but was greeted by apache default page, over and over again I re-installed the servers, tried connecting directly to the server ip, changed server hardware, picked different distros, manually build from source, did everything possible, even searched the whole system for "apache" and different regex...

It was chrome cache........ after I wiped cache I was greeted by welcome to nginx...... 10 hours wasted.......3 -

tl;dr - install ‘Pop!_os’ and try it out if you haven’t yet, it’s pretty damn good!

Heavy Micro$haft user here, have tried using ubuntu a bunch of times in the past and fucking regretted it every time. Ran into issues with stupid shit like the apt cache growing exponentially until the drive was full, or something like the system python getting borked.

To be fair, I’m 120% certain my dumb-assery is what caused the problems. I’m definitely not trying to blame the OS. But my experience was shitty, even if it was at my own hands lol.

Started playing around with Pop!_os from the system76 team. And I’m seriously in freakin’ love with this OS. It’s clean, is performant, feels way less buggy or just feels more stable somehow. I know it’s based on ubuntu, but I’ve had a great time thus far using it. I’ve got ansible, docker, aws toolkit, aws cli, sam-cli, vscode, dynamodb-local, serverless, npm, brew, and working on steam now.

Everything has been a breeze and again the system feels really fast and snappy. It feels a lot like mac on the smoothness scale, but snappy like a windows box with beefy hardware specs.

I’m still just in the testing phase on a VM, but I’m seriously thinking about blowing away my windows install for Pop!_os.

(I’ll try arch someday when I’m up for some hardcore masochism)8 -

!(!(!(!(!(!(!(!rant)))))))

My new HTC smartphone hates me.

First it started to shut down all of a sudden yesterday night, when I was solving quadratic equations on my laptop.

I thought that it might be due to low battery. So I restarted it. After putting itself into a bootloop for 4 start sequences, it was able to fully start to the page where it told me to enter the security pin to decrypt my files. I also had 30 attempts left. Like a ransomware.

I was like "tf I didn't set anything up".

So I decided to use my first attempt as I had 30 attempts left.

I entered the pin (I can swear that it's correct) and it told me that it has to wipe the /data partition.

I did that. I pressed that button. After waiting for 30 minutes I gave up and rebooted into the bootloader.

Bootloader -> Download Mode -> wipe /data (stock rom + stock recovery btw.)

Some error with "e: mount /cache failed[...]e: mount /data failed"

So, I tried using the adb sideload - no success.

Fastbooted into RUU Mode - HTC keeps rebooting itself into the RUU Mode - no success

Tried to flash the firmware and twrp recovery from Download mode - no success

Then I tried to flash all these things from the sd card - no success

Searched for revolutionary (I know this from my old HTC sensation device).

It wasn't much help.

Then someone on xda recommended htcDev (htc's developer-friendly lol site)

I followed every step. Everything seemed to be okay.

I got to the last step.

I needed to get my encrypted token by entering "fastboot oem get_identifier_token" so I could submit it to HTC, after which they would email me a .bin file that would let me unlock the bootloader and flash my way through all this headache-giving fucking piece of dog shit!

But I can't get back into the phone settings to tick the bootloader activation box that would let me get my token... so nah.

FML

------------

Sent by using the devRant web app (:\) -

Is your code green?

I've been thinking a lot about this for the past year. There was recently an article on this on slashdot.

I like optimising things to a reasonable degree and avoid bloat. What are some signs of code that isn't green?

* Use of technology that says it's fast without real expert review and measurement. Lots of tech out there claims to be fast but actually isn't, or gets there by saturating resources while being inefficient.

* It uses caching. Many might find that counterintuitive. In technology it is surprisingly common to see people scale or cache rather than directly fixing the thing that's watt expensive, which is compounded when the cache has weak coverage.

* It uses scaling. Originally scaling was a last resort. The reason is simple: it introduces excessive complexity. Today it's common to see people scale things rather than make them efficient. You end up needing ten instances when a bit of skill could bring you down to one, which could scale as well but likely won't need to.

* It uses a non-trivial framework. Frameworks are rarely fast. Most will fall in the range of ten to a thousand times slower in terms of CPU usage. Memory bloat may also force the need for more instances. Frameworks written in already slow high-level languages may be especially bad.

* Lacks optimisations for obvious bottlenecks.

* It runs slowly.

* It lacks even basic resource usage measurement.

Unfortunately smells are not enough on their own, but they are a start. Real measurement and expert review are the only way to get an idea of whether your code is reasonably green.

I find it not uncommon to see things require tens, hundreds or even thousands of times more resources than needed, if not more.

In terms of cycles that can be the difference between needing a single core and a thousand cores.

This is common in the industry but it's not because people didn't write everything in assembly. It's usually leaning toward the extreme opposite.

Optimisations are often easy and don't require writing code in binary. In fact the resulting code is often simpler. Excess complexity and inefficient code tend to go hand in hand. Sometimes a code cleaning service is all you need to enhance your green.

I once rewrote a data parsing library, which had to parse a hundred MB and was a performance hotspot, from an interpreted language into C. I measured it and the results were good. The interpreted version had been optimised as much as possible, but the C version was still at least 50 times faster.

I recently stumbled upon someone's attempt to do the same and I was able to optimise the interpreted version in five minutes to be twice as fast as the C++ version.

I see opportunity to optimise everywhere in software. A billion KG of CO2 could easily be saved if a few green code shops popped up. It's also often a net win. Faster software, lower costs, lower management burden... I'm thinking of starting a consultancy.

The problem is that, after witnessing the likes of Greta Thunberg, if that's what the next generation has in store then as far as I'm concerned the world can fucking burn and her generation along with it. -

👦🏻 : I Enter office.

🕵🏻 : 8 emails from client with subject line "Urgent Fire! Fix ASAP".

👦🏻 : Opens Application and everything seems normal.

-- Another email 5 mins later --

🕵🏻 : Oops sorry! It was my browser cache.

👦🏻 : 🙄 -

It's not that big of a deal, but it's kinda embarrassing since I was one of the best students in the class.

Took a web design (HTML, CSS & a tiny bit of JS) class. I never really struggled; more like polished everything I already knew to become a bit better.

I was in class working on an assignment. We have a dedicated folder on a server just for this class, accessible as long as you're on the school network or using the VPN. My assignment lives there. I dropped a copy onto my desktop, because I had worked on it since the last time I was at this computer.

I opened the project in VSCode and began changing things. The HTML file I had open wasn't updating. "That's odd," I thought. Cleared the cache, closed and reopened the browser. Still nothing. I called the professor over to see if they had any clue what was going on.

My dumbass self was editing the file that was on my desktop, but I had opened the HTML file from the server. I felt so stupid but we both just laughed it off and went on. -

This was originally a reply to a rant about the excessive complexity of webdev.

The complexity in webdev is mostly necessary to deal with Javascript and the browser APIs, coupled with the general difficulty of the task at hand, namely to let the user interact with amounts of data far beyond network capacity. The solution isn't to reject progress but to pick your libraries wisely and manage your complexity with tools like type safe languages, unit tests and good architecture.

When webdev was simple, it was normal to have the user redownload the whole page every time you wanted to change something. It was also normal to have the server query the database every time a new user requested the same page even though nothing could have changed. It was an inefficient sloppy mess that only passed because we had nothing better and because most webpages were built by amateurs.

Today webpages are built like actual programs, with executables downloaded from a static file server and variable data obtained through an API that's preferably stateless by design and has a clever stateful cache. Client side caches are programmable and invalidations can be delivered through any of three widely supported server-client message protocols. It's not to look smart, it's engineering. Although 5G gets a lot of media coverage, most mobile traffic still flows through slow and expensive connections to devices with tiny batteries, and the only reason our ever increasing traffic doesn't break everything is the insanely sophisticated infrastructure we designed to make things as efficient as humanly possible. -

Upscaling a prod database which was running on an 8 year old Dell desktop used as server. It had about 2MB of RAM and an Intel Core 2 processor...

That was the day I learned a lot about querying a database as efficiently as humanly possible. -

I hate these modern forums

if I follow a link and go back I lose my place and have to scroll because MoDeRn PaGinAtIoN

I would like to browse months of posts over several days but tomorrow my browser will lose the cache and I'll have to scroll past weeks of posts to find my place again and keep going

literally everything gets worse somehow through time. fewer features, less intuitive, less convenient, more walled-garden, everybody is more confused, yet less opinionated and less unique. before you'd have people at least making fun of each other, inside jokes, familiarities. people would give multiple right answers, trying to outdo each other with their version to gain a cultural foothold. now companies hold the cultural foothold and just ban you if your opinion is different, and every user is just another nameless generic blob -

[Rust]

I have a bunch of computational steps in a Rust program, all very expensive. They all depend on each other, forming a cycle-free and rather small graph of dependencies which is not a tree. The results of each of them for a given input are likely used tens of times by the others, so I would like to cache the subresults dynamically.

How would I go about doing this, considering that caching (rightfully) requires mutable access to the cache and multiple operations often refer to the same subresult?

I can't ask SO because they'd just tell me to use another language or recalculate everything every time, fully convinced that difficult questions can only emerge from design mistakes. -

so here’s the tea.

i’m a Chinese dev working in a Japanese company. they’ve got this decently sized project a full web app and backend stack and yeah, I’m handling both ends. full-stack life. not a problem. I’ve seen worse.

but the maturity level in this place? the passive-aggressiveness? is different level. have you ever worked somewhere where your coworkers act all sweet on the surface, but lowkey make it feel like everything is your fault in the most obvious way possible?

so here comes the fun part.

the Stripe exchange rate endpoint we were using? deprecated. not globally — just regionally in Japan.

i did my homework. contacted Stripe support. got the chats, screenshots, docs, confirmations, evidence, not .........vibes.

solution? easy. i integrated a third-party API that returns the same exchange rate data. built a cron job to pull and cache the values daily. stored it locally. frontend grabs the user’s currency via IP, backend returns the rate, no stress, no wasted API calls. boom. problem solved.
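
For anyone curious what that kind of daily cache can look like, here is a minimal sketch in Python. It is not the code from this project: `DailyRateCache`, `fetch_rates` and the injectable `clock` are hypothetical names, and the third-party API call is left as a function you pass in, so nothing here assumes a specific provider.

```python
import time
from typing import Callable, Dict, Optional


class DailyRateCache:
    """Keeps exchange rates in memory and refetches them at most once per max_age seconds."""

    def __init__(
        self,
        fetch_rates: Callable[[], Dict[str, float]],  # hypothetical: wraps the third-party API call
        max_age: float = 86400.0,                     # one day, matching the daily cron idea
        clock: Callable[[], float] = time.time,       # injectable so the sketch can be tested
    ) -> None:
        self._fetch = fetch_rates
        self._max_age = max_age
        self._clock = clock
        self._rates: Dict[str, float] = {}
        self._fetched_at: Optional[float] = None

    def refresh(self) -> None:
        """Pull fresh rates (what the cron job would call) and remember when."""
        self._rates = dict(self._fetch())
        self._fetched_at = self._clock()

    def rate(self, currency: str) -> Optional[float]:
        """Return the cached rate for a currency code, refreshing first if the data is stale."""
        stale = self._fetched_at is None or self._clock() - self._fetched_at >= self._max_age
        if stale:
            self.refresh()
        return self._rates.get(currency.upper())
```

The point of the design is the same as in the rant: the upstream API gets hit once per day no matter how many users ask for a rate.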

my manager? totally got it. said it was efficient.

but the founder? man acted like a toddler.

he flipped. said it was my fault.

told me i just "no communicate well...uh...very confuse..." like bro… what even? do I look like I own stripe, or like I'm secretly working for them? plus, I explained it in full and he still didn't understand.

he got heated in meeting, so I clapped back in the meeting: you want to argue all day and get nothing done? or you want to understand what’s going on, and let me go back to building stuff that actually works? pick one.

he didn’t like that.

pretty sure he’s shopping for my replacement now. well, doesn’t take a genius to see it.

but I’m not here to babysit egos. I’ll do my job clean, document everything, and keep it professional. meanwhile yeah, I’ve already started looking for something else. -

!dev

TL;DR: Today my phone, a Kruger&Matz Live 3+, got ebola. Has anyone had the same issues?

I woke up and unplugged my phone from the charger as always, but it was hot as hell. I was not worried; I thought it had heated up from charging, as I had plugged it in a few hours before waking up.

Then things got serious. I was unable to use the phone: it froze randomly, opened apps I had hovered over while it was frozen, etc. I thought that was because it was hot, so I turned it off and put it into the fridge (I do it sometimes).

I was leaving the house in an hour, so I hoped that would help. I turned it back on when leaving, but nothing changed and it was getting hot again. I checked processes and was deleting apps like mad, thinking it was some bug in an update of one of them, and cleared the cache partition too. That did not help, so I was forced to factory reset. Guess what... same issues.

I tried everything possible and lost all hope, and was ready to send it in for service. So I turned it off, so it wouldn't burn a hole in my pocket.

A few hours later I was complaining to my dad about the issue and tried to show him what was wrong, but... it was all right again. No freezes, no heating.

Later that day my sister told me she had issues with her phone, a Live 3, and described the same thing as mine. Even weirder, my girlfriend had no issues with her Flow 4+ from the same company.

Two phones from the same company, almost the same product line, with the exact same issues in the same time frame? Any ideas what happened? -

Let's see what's on the menu today:

* Web Application Catastrophe Special *

Includes, but not limited to:

- Orphaned server processes in the configuration management cluster

- Microservice back-end architecture with no API documentation

- Poorly implemented cache microservice with no documentation

- Stale data causing everything to be shown as down in production, despite everything running fine

Cost: 1 developer's sanity -

There are always days when everything related to school projects seems to hate me. For example, we have to create a tunnel from our own computers to access the uni's private server, and suddenly mine stopped working. My classmate and I spent 1.5 hours trying to figure it out, and even our teacher didn't understand what the hell was happening. In the end we found out that even though we had deleted the faulty line from the hosts file, Firefox still had it in its cache.

-

My phone got stuck in a funky restart boot loop yesterday. The first 2 restarts were odd, but after 3 cycles I started panicking. Went on my PC and googled all kinds of button combinations of power button, volume button, back button, and home button to get into fastboot, recovery and safe mode, to see if I could clear stuff or at least get a backup of my stuff. I also tried taking the battery out. Nothing worked, except a factory reset.

Everything was new again, and as it booted up I had to remember to change my authentication keys in LastPass and my private SSH key in Krypt. Fortunately, Google remembered all my apps and offered to install them again, since it recognized the phone as an old one. Thanks for tracking me, Google. And now since it's a reset, everything is clean: no cache, no cookies, and some of my music files are gone. Well, at least it's fast like before. -

I'm going to re-try my ConsoleWidgets/ CursesWidgets project from complete scratch. Here are some things I learned and will do better this time with:

- Keep people updated on progress to maintain motivation (Hence this post)

- Centralize drawing, eliminate curses entirely besides in this static class.

- Don't worry about complicated rendering until basic rendering is done. I really got stuck up on text rendering last time.

- Sort out a color system from the very beginning, and make it as simple as possible. Working with curses, it is a good idea to have a color manager.

- Research how to logically render two items - both sized to 50% of the screen - when there are an odd amount of pixels available.

- Only make one type of widget at the beginning. Don't worry about Buttons and Sliders and such until the base Widget class is completed.

- Truly decide if I want to call them Widgets or Controls

- Don't worry about supporting multiple curses windows. Got hung up on that too. stdscr will do for everything I need.

- Cache inflation values so that they need not be re-calculated each render. Re-calculate on resize.

- This is more of a C++ thing, but drop pointers in favor of references. It's 2018. I have already started to do this in other projects but THIS IS THE ONE. -
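
On the "two items at 50% with an odd number of cells" point in the list above, one common approach is to round the running edge positions instead of each size, so the leftover cell always lands somewhere and the sizes add up to the total. A minimal sketch follows, written in Python for brevity rather than the project's C++; `split_extent` is a hypothetical name and the integer weights (percentages) are an assumption.

```python
from typing import List, Sequence


def split_extent(total: int, weights: Sequence[int]) -> List[int]:
    """Split `total` cells among items by integer weight so the sizes sum exactly to `total`.

    Each item's right edge is rounded (half up) from the cumulative weight,
    and its size is the distance from the previous edge.
    """
    weight_sum = sum(weights)
    sizes: List[int] = []
    previous_edge = 0
    cumulative = 0
    for weight in weights:
        cumulative += weight
        # Integer round-half-up of total * cumulative / weight_sum.
        edge = (total * cumulative + weight_sum // 2) // weight_sum
        sizes.append(edge - previous_edge)
        previous_edge = edge
    return sizes
```

With 81 columns and two 50% items this gives 41 and 40. The result only depends on the total size and the weights, so it is also a natural thing to cache and recompute on resize, as the list suggests.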

Magento Debugging Horror!

Changing lots of things in Magento with no problem. Continuing development for quite some time. Suddenly decide to clear the cache to see the effect of a change to a template in the frontend. Suddenly Magento crashes! There's no error message. No exception log. No log in any file anywhere on the disk. All that happens is that Magento suddenly returns you to the home page!

Reverting all the changes to the template. Clear the cache. Nope! Still the same! Why? Because the problem has happened somewhere in your code. Magento just didn't hit it, because it was using an older version of your code. How? Because Magento 2 even caches code! Not the PHP opcache. Don't get me wrong. It has its own cache for code, in a folder called generated. Now that you have cleared all the caches including this folder, you realize that somewhere something is wrong. But there is no way for you to know where, as there is absolutely no exception logged anywhere!

So you debug the code, from index.php, down to the deepest levels of hell. In a normal php code, once the exception happens, you should see the control jumps to an exception handler, there, you can see the exception object and its call stack in your debugger. But that's not the case with magento.

Your debugger suddenly jumps to a function named:

write_close();

That's all. No exception object. No call stack. No way to figure out why it failed. So you decide to debug into each and every step to figure out where it crashes. The way magento renders response to each request is that, it calls a plugin, which calls a plugin loop, which calls another plugin, which calls a list of plugins, which calls a plugin loop, which calls another plugin.....

And if in any step, just by accident, you use the step over command of your debugger instead of step into, the crash happens suddenly and you end up at the same freaking write_close() function with no idea what went wrong and where the error happened! You spend a whole day figuring out that this is actually a bug in the core of Magento, which they introduced with your recent update of Magento core to the latest STABLE version!!! It was not your mistake. They ruined their own code for the thousandth time. You just didn't notice it, because as I said, you hadn't cleared the `generated` folder and were therefore using an older version of everything!

Now that you have spent 7 hours figuring out what failed, with absolutely no standard way of debugging and within a spaghetti of GOTO commands (Magento calls them plugins), why not report it on GitHub? So you report it with a pull request. This also takes 1 hour of your time. Just to get informed the next day that your pull request is rejected, because another person already fixed the bug and made the same pull request. It was just not in the latest stable version yet!

So you decide to avoid updating magento as much as possible. Because you know that the next Stable version will make your life and career unstable. But then the customer complains that the Admin Panel is warning him of using old Magento version which might pose SECURITY THREATS! -

!rant

TL;DR: Can anyone recommend or point at any resources which deal with best practices and software design for non-beginners?

I started out as a self-taught programmer 7 years ago when I was 15, now I'm computer science student at a university.

I'd consider myself pretty experienced when it comes to designing software as I have already made lots of projects, from small things which can be done in a week to a project which I worked on for more than a year. I don't have any problems with coming up with concepts for complex things. To give you an example, I recently wrote a cache system for an Android app I'm working on in my free time, which can cache everything from REST responses to images on persistent storage, combined with a memcache for even faster access to often accessed stuff, all in a heavily multithreaded environment. I'd consider the system solid. It uses a request pattern where everything which needs to be done is represented by a CacheTask object which can be committed, and all responses are packed into CacheResponse objects.

Now that you know what I mean by "non-beginner", let's get on to the problem:

In the last weeks I developed the feeling that I need to learn more. I need to learn more about designing and creating solid systems. The design phase is the most important part during development and I want to get it right for a lot bigger systems.

I already read a lot how other big systems are designed (android activity system and other things with the same scope) but I feel like I need to read something which deals with these things in a more general way.

Do you guys have any recommended readings on software design and best practices? -

Disconnect from everything that you're thinking of for a moment. Step away from your computer, and dump all of your thoughts out for about five minutes.

In better terms: clear your cache. Your devices need it, and you need it too. Bonus points if you give your eyes a stretch and look outside your window. -

So... we are changing our cache system to a faster one.

I suggested an update method (with code samples included), as we currently only support insert and remove... which makes it impossible to modify a list in the cache without getting the entire list, modifying whatever we want and inserting everything again.
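
To make the idea concrete, here is a rough sketch of what such an update method could look like. It is not the code I actually submitted: `ListCache` and its in-memory dict are stand-ins for the real cache, and all names are hypothetical.

```python
from typing import Any, Callable, Dict, List


class ListCache:
    """Toy cache of lists keyed by string, standing in for the real cache system."""

    def __init__(self) -> None:
        self._store: Dict[str, List[Any]] = {}

    def insert(self, key: str, items: List[Any]) -> None:
        """Existing behavior: store a whole list under a key."""
        self._store[key] = list(items)

    def remove(self, key: str) -> None:
        """Existing behavior: drop a key if present."""
        self._store.pop(key, None)

    def get(self, key: str) -> List[Any]:
        """Return a copy of the cached list, or an empty list if the key is missing."""
        return list(self._store.get(key, []))

    def update(self, key: str, mutate: Callable[[List[Any]], None]) -> bool:
        """Proposed addition: modify the cached list in place.

        Returns False if the key is not cached, so callers can fall back to insert.
        """
        items = self._store.get(key)
        if items is None:
            return False
        mutate(items)
        return True
```

With this, appending one element is `cache.update("users", lambda items: items.append("cy"))` instead of a get, a local modification and a full re-insert.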

Answer: 'It could work, but we want to keep the previous system behavior'

Why. SMH -

Last weekend I was working on a small project for a friend of mine: a dockerized webapp, plus API backend and DB. I had some problems with the installation on the vps and had to try out different images and never really did a complete setup of my usual dotfiles. Got it running on an Ubuntu distro. Everything great.

It was the first release so I still had to check that every configuration worked ok, like the letsencrypt companion container, the reverse proxy and all that stuff, so I decided to clone the whole project on the server to make the changes there and then commit them from there.

Docker compose, 10 lines of code, change the hosts and password. Boom everything working. Great... Except for the images in the webapp.

WTF? Check the repo, here they are, all ok. I try different build tactics. Nothing. Even building the app on another docker, always the same. Checked browser cache, all the correct ports are open. I even thought that maybe react was still using some weird websocket I didn't know about, but no.

Damn, I spent 5 hours checking why the f*** the server wouldn't make it out.

Then, finally, the realization...

I hadn't installed the f******* git-lfs plugin, so all I was working with were stupid LFS pointer files instead of the actual images! Webpack never even threw an error for any of the stupid images, and the browser would only show a corrupted image when decoding the base64 string.

Literally the solution took 5 minutes.

F*** changes on production, now I do everything on a fully automated CI. -

so, I am trying to implement a caching solution for my CI/CD (because, you know, BitBucket CI caching sucks ass big time). This time I was writing a module in Python. I spent 2 evenings building it, debugging and testing, implementing several features to make it a flexible solution.

So, yesterday I had a pretty much working version. Before pushing changes I wanted to drop the cache and give it another round of testing, just to be sure I was pushing truly working code. I rm -rf the cache directory, restart the engine and I'm greeted with an error message saying the module I was working on cannot be found.

wtf..?

All of a sudden the IDE stopped showing all the project files as well.

wtf happened....?

oh, of course.. I rm -rf'ed my project directory, not the cache directory. Deleting EVERYTHING I had.

fuck.

I should not be working half-asleep -

First let me start this rant by saying: Don't use SharePoint lists as your primary data store if you can avoid it. You're gonna have a bad time.

My coworkers and I work on a system where we need to pull tons of data down from a SharePoint site and run various algorithms and operations on it. Generate reports, that sort of thing. This is all done in the browser using a Typescript React SPFX webpart. Basically using SharePoint as a DB/DAL.

Because of the sheer amount of data we end up pulling down (our system in production is the single source of truth for one of the largest companies in Canada, and they're currently building a pipeline as we speak), in order to maintain a reasonable speed while using it, we have some pretty intense caching logic implemented, logic that ensures we get new items when new items are detected, and merges changes to already existing objects. It's pretty brilliant, and that's before we even consider the custom paging that my coworker implemented in order to get around the IndexedDB max size of 100MB.

Well that's all well and good, and works great in production, but it is a horror to work with. Because EVERYTHING we touch on the server is cached locally, it can be IMPOSSIBLE to detect data anomalies, be they local or server side -.- You don't know how many hours I have completely WASTED fixing a "bug" that didn't really exist... Just incorrect data in the cache -

I hate group projects so much.

I yet again successfully stirred up a big drama in my project group. For the project, I proposed a CDN cache system for a post-only database server. Super simple. I wanted to see what ideas other people would come up with, so I said I am not good at the content and the idea is dumb. Oh man, what a horrible mistake. One group member wants to build a chat app with distributed storage. We implemented get/put for a terribly designed key value store and now they want to build a freaking chat app on top of an even more stupid KV store using the golang standard lib. I don't think any of those fools understand the challenges that come with distributed storage.

I sent a video explaining part of crdt. "That's way too complicated. Why are you making everything complicated."

Those fools leave too many details open to the course staff's interpretation and say

"course staff will only grade the project according to the proposal. It's in the project description".

I asked why they don't just take baby steps and go with their underlying terribly designed KV store.

"Messaging app is more interesting and designing a KV store with a generic API is just as difficult"

😂 Fucking egos

Then I successfully pissed off all group members with relatively respectful words, then pissed off myself and joined another group. -

It often feels like the logic and the equivalent final application code have nothing to do with each other.

Logic: Find the only element in this list that matches criterion, or the first element in this other list, or none. If the first list has multiple matches, fail.

Application: Produce information about the criterion checks for all elements in both lists for info logging. Find any elements in first list that match. Save the number of matches for an optimization that relies on a lot of assumptions about the search criterion that are only ever expressed in doc text. If one, return, if multiple, fail. Otherwise find first match in second list, produce debug hint on why the preceding elements in that list didn't match by aggregating the criterion check info. If multiple matched in second list, check highly specific interdependency, and if absent, produce warning about ambiguity. Return first match if any.

The first can be beautifully expressed as a 5 line iterator transform. The second takes 3 mutable arguments (cache, logger, criterion because it also may cache and log), must compute everything eagerly and has constraints that are neither strictly necessary for a correct implementation nor expressible in the type system. -
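
For reference, the "Logic" version really is about that small. Below is a sketch in Python rather than whatever language the real code is in; `pick` and `AmbiguousMatch` are hypothetical names, and it assumes "the first element in this other list" means the first element there that matches the criterion.

```python
from itertools import islice
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


class AmbiguousMatch(Exception):
    """Raised when the first list contains more than one match."""


def pick(primary: Iterable[T], fallback: Iterable[T], matches: Callable[[T], bool]) -> Optional[T]:
    # Pull at most two hits lazily: two are enough to prove ambiguity.
    hits = list(islice(filter(matches, primary), 2))
    if len(hits) > 1:
        raise AmbiguousMatch("more than one match in the first list")
    if hits:
        return hits[0]
    # No match in the first list: first match in the second list, or None.
    return next(filter(matches, fallback), None)
```

None of the logging, debug hints or cached match counts from the "Application" version fit into this shape without threading extra mutable state through it, which is exactly the gap being complained about.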

I'm building a script parser to make mods for a game I like. The first step is to write an importer.

The documentation is nonexistent and I'm delving into byte manipulation, which I'm not familiar with - at all. I'm porting existing code from Java to C#, and everything is similar but different enough that I can't always just do a 1:1 transfer.

I get everything working, cleaned up and split into classes so I can write the exporter.

I do an import and the file won't parse. I try all previously known working files and still no good. I clean, rebuild, clean, rebuild, run, debug, restart my computer, clear my cache, clean, rebuild. No good.

IT WAS WORKING 5 MINUTES AGO

Proceed to revert to every version from the last hour. No dice.

I was in the wrong folder the whole time.

Navigate to the proper folder, open the filename I know to be good and bingo, works like a charm.

The same project caused me headaches because I had a "== -1", when it should have been "== 1". Between my inexperience with byte manipulation and my untreated astigmatism, I was nearly sent to the shadow realm fixing that. -

Oh, tryna debug that XSD validation fuckup in your DNF cache XML? How pathetic; even Git's laffing its commits off at your tab-loving ass breaking everything like a noob.3

-

Question about cache (Redis or other distributed cache).

So I would like to find a solution with “partitioning”, but without coding it myself (ofc)

Ok, example :

In the application you have clients, each client has users, each user has role.

So right now it’s in the cache with the key “User:<userId>” = role

Sometimes, when you change client settings, all entries should be removed.

So what I would love to have :

Client_Id/UsersRoles/UserId as a key

And I would love to be able to delete “all keys after /” :

Basically, delete client_id/ would delete everything in cache for this client

Delete client_id/UsersRoles would clean up all saved roles.

I’m pretty new to working with Redis, but it doesn’t seem possible out of the box.

Any reading material you could recommend?
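
One way to get close to this without hierarchical keys is to store each client's roles in a single Redis hash (so one DEL wipes all roles for that client) and to keep a per-client set listing the keys that belong to it (so "delete everything for this client" is one lookup plus one DEL). The sketch below is only that, a sketch: the key naming and helper functions are made up, and `FakeRedis` is a tiny in-memory stand-in so it runs without a server. The method names it uses (`hset`, `hget`, `sadd`, `smembers`, `delete`) mirror the redis-py client, which is assumed here as the real thing; note that redis-py returns bytes unless it is created with `decode_responses=True`.

```python
from typing import Dict, Optional, Set


class FakeRedis:
    """In-memory stand-in exposing the few redis-py-style methods the sketch needs."""

    def __init__(self) -> None:
        self.data: Dict[str, object] = {}

    def hset(self, name: str, key: str, value: str) -> None:
        self.data.setdefault(name, {})[key] = value

    def hget(self, name: str, key: str) -> Optional[str]:
        return self.data.get(name, {}).get(key)

    def sadd(self, name: str, *values: str) -> None:
        self.data.setdefault(name, set()).update(values)

    def smembers(self, name: str) -> Set[str]:
        return set(self.data.get(name, set()))

    def delete(self, *names: str) -> None:
        for name in names:
            self.data.pop(name, None)


def roles_key(client_id: str) -> str:
    return f"client:{client_id}:UsersRoles"  # one hash per client: user id -> role


def index_key(client_id: str) -> str:
    return f"client:{client_id}:keys"  # set of every cache key owned by this client


def set_role(r, client_id: str, user_id: str, role: str) -> None:
    key = roles_key(client_id)
    r.hset(key, user_id, role)
    r.sadd(index_key(client_id), key)  # register the key so clear_client can find it


def get_role(r, client_id: str, user_id: str) -> Optional[str]:
    return r.hget(roles_key(client_id), user_id)


def clear_roles(r, client_id: str) -> None:
    """Equivalent of 'delete client_id/UsersRoles': drops every cached role at once."""
    r.delete(roles_key(client_id))


def clear_client(r, client_id: str) -> None:
    """Equivalent of 'delete client_id/': drops every registered key plus the index."""
    keys = r.smembers(index_key(client_id))
    r.delete(*keys, index_key(client_id))
```

The other common route is to keep flat keys with a prefix and iterate them with SCAN and a MATCH pattern before deleting, which needs no index set but walks the keyspace.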