Search - "pattern"

-

My girlfriend saw me coding in Xcode.

GF: What are you doing?

Me: Ahmm. Coding.

GF: *sees the colors in every line of code*

GF: That's easy. You just need to follow the color pattern. Green, Blue, Red and Yellow.

Me:

Macbook:

Xcode:

Charger:

BTW, she's a preschool teacher. Hahahaha

-

4+ years of programming.

Still have no clue how to make my own regex pattern.

Every single time I need to, I open 4 cheat sheets and/or Stack Overflow.

-

Client: About this QR code for my website, can we change it?

Me: Well we could redirect, but what's wrong with it?

Client: I just don't like the pattern, it's too noisy...

Me: 🙃

-

The original story:

"When I got my very first Android phone, I was downloading any shit from the Play Store. There was an app called pattern security or something like that. The app took a selfie every time the power button was pressed several times, and then sent the photo by email. One day I left my old phone at home, and at the office this is the photo I received."

-

One of my worst meetings, as the sheer rage was unbelievable.

Backstory:

Architect: "Stop duplicating code", "stop copy pasting code", "We need to reuse code more", "We need to look at a new pattern for unit tests" etc.

Meeting:

Architect: What did you want to talk about?

Me: I built a really simple lightweight library to solve a lot of our problems. It's built to make unit testing our code much easier; devs only need to change a small bit of how they work.

Architect: I like the pattern a lot, looks great ... but why a library? can we not just copy the code from project to project?

... do you have a twin or something?

-

Gahaa!!! Finally back home, after 7 fucking hours of sitting in busses and trains!

BUT I GOT MY NEXUS 6P!! Yoo-hoo!!! :D

And I've got a nice story about it.

So when I bought it, the guy selling it to me was a nontechnical type (I think?) whose wife was the previous owner. So I thought to myself, cool a nontechnical user used it.. probably no hardware mods or anything to worry about. Apparently they even factory reset it for me :)

Now, when I left to go back home, I of course immediately booted up the thing and did the whole doodad of logging into it, setting up the device etc.

Then it struck me. When I booted up the device and wanted to log in, there was a lock from Google that required me to first authenticate as either a previous account of the device, or their unlock pattern. So I figured, eh fuck it, I'll just flash some AOSP without GApps or send the owner an email asking what the previous pattern is.

But I still had to wait 30 minutes at the bus stop so I thought to myself.. previous owner was a nontechnical woman.. maybe I could crack it. No way to know if I don't try. So I started putting in random unlock patterns.

3 attempts later - I shit you not! - pattern accepted.

Do you want to add this account?

Oh boy Google, of course I do! Thanks for letting me in pal!

3 fucking attempts. That's all it took to crack the unlock pattern of an unknown person. 😎

-

To all the design pattern nazis..

Don't you ever tell me that something is impossible because it violates some design pattern! Those design principles are there to make your life easier, not something you have to obey by law.

Don't get me wrong, you should respect those best practices wherever possible, because that keeps your software maintainable.

But your software should foremost solve real world problems and real world problems can be far more complex than any design pattern could address. So there are cases where you can consciously decide to disregard a best practice in order to provide value to the world.

Thanks for reading if you got this far.

-

Microsoft announced Visual Studio 2017. Yeah, Party.

I am so hyped, because with it comes C# 7.0. I am speaking of tuples, pattern matching and local functions. This will be great. Soon ☺️🎉

-

Cook A:

1 - Makes a soup

2 - Leaves a mess

Company: ☺️ What a nice cook, here's your promotion to senior Cook.

Cook B:

1 - Cleans kitchen

2 - Makes soup

3 - Cleans after themself

Company: 😡 What took you so long!? Cook A made it in 1/3 of the time.

This is the pattern I've seen so far in development... and it's sad

-

Lesson learnt :

Never ever do "rm -rf <pattern>" without doing "ls <pattern>" first.
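The same habit (look at what a pattern matches before deleting it) can be sketched in Python. This is only an illustration under stated assumptions: the helper name `preview_then_delete` and its `confirm` callback are hypothetical, and the demo runs inside a throwaway temporary directory.

```python
import glob
import os
import shutil
import tempfile


def preview_then_delete(pattern, confirm):
    """Expand a glob pattern, let the caller inspect the matches, then delete.

    `confirm` is a hypothetical callback: it receives the list of matched
    paths and returns True to proceed. Returns the list of deleted paths.
    """
    matches = sorted(glob.glob(pattern))
    if not matches or not confirm(matches):
        return []
    for path in matches:
        if os.path.isdir(path) and not os.path.islink(path):
            shutil.rmtree(path)
        else:
            os.remove(path)
    return matches


# Demo in a temporary directory so nothing real is touched.
workdir = tempfile.mkdtemp()
for name in ("a.log", "b.log", "keep.txt"):
    open(os.path.join(workdir, name), "w").close()

deleted = preview_then_delete(
    os.path.join(workdir, "*.log"),
    # Refuse to proceed if any match falls outside the directory we expect.
    confirm=lambda paths: all(p.startswith(workdir) for p in paths),
)
remaining = sorted(os.listdir(workdir))
shutil.rmtree(workdir)
```

The point of the `confirm` step is the same as running `ls <pattern>` first: the expansion is shown (or checked) before anything is removed.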

I had to delete all the contents of my current directory.

I did "rm -rf /*" instead of "rm -rf ./*".

-

Legends -> I: Interviewer

I: what is mvc architecture

Me: model.. view.. controller... and blah blah

I: mvc is not an architecture.. its a design pattern.. architecture is blah blah

I: srry U r rejected.. god bless you

Me: 😥😢

after 1hr

Me: googled 'Is mvc a design pattern or architectural pattern'

Google: shows stack overflow link

Stackoverflow: mvc is architectural pattern blah blah... accepted answer

Me: hopeless about my future

GOD BLESS THE INTERNET and SOFTWARE DEVELOPERS

-

*fortunes and tons of research spent on machine learning, signal processing and pattern recognition*

People:

-

I think I'm doomed when it comes to posting the kinds of rants that get upvotes. The pattern I've noticed is that profanity-laden rants get more love than ones that are more tame. I was raised old-school, so profanity isn't my thing. Guess I'll just sit back and watch.

-

Write comments you dumb fucks.

If you change shit that is different from the original pattern, fucking write a comment.

1 minute vs. 45 minutes you mother fucker.

-

Worst error message management.

Can't you just display the valid pattern for a username instead of showing a different error message every time?

If "plain ASCII" and "only letters and numbers" mean the same thing to them, just show "only letters and numbers". And what's that hourly limit?

I just couldn't sign up after wasting time thinking of a username.

-

!rant

Finally finished the blanket I’ve spent a month crocheting without a pattern after teaching myself to crochet at, like, the beginning of the month. It’s huge (this is it laying on a King sized bed) and I made so many mistakes that seem super obvious now, but I’m still weirdly proud of it.

-

Introducing my everyday weapon against bugs.

Colour pattern to change depending on my mood or my rage against PEBKAC.

-

I'm seeing a pattern here... We devs/testers/sysadmins/etc. don't get to spend too much time outside... We talk about different stuff than most people... We are more intelligent than most people so we don't get their dumb jokes... Most of us like to work at night because that's the time when nobody bothers us...

We don't get a chance to find a girlfriend, we don't understand how it works...

We are doomed

-

At my previous job a coworker left positive comments alongside any negative ones on my code. “Nice job here. Very clean”, or “nice use of X design pattern here!” Kinda made me look forward to his code reviews.

-

Procrastinating while waiting for my robot parts to arrive

(The lights make a nice pattern, but I can't upload videos here :()

-

!rant I think I may have a way smaller head than the pattern I was using seemed to assume. 😅

(Or my stitches got messed up along the way. Either is possible.)

-

Started with C, then C++... forgot some C. Then on to Java, forgot some C++, then on to Objective-C, learned some Swift... forgot some Objective-C... do you see the pattern? 😎

-

In an interview, when you throw a simple piece of code to a candidate...

Candidate: ...

ME: (maybe I'm too hard on them)

Candidate: ...

ME: (ok, I definitely have to simplify this little pattern example)

Candidate: ...

ME: ...explaining the short piece of code and give'm the answer

Candidate: haaaaa that's what I was thinking but I used that long time ago...

ME: (Yeah... nice try)

-

I opened a post starting with a "NO TOFU" logo and I was wondering what relationship existed between the SSH protocol and anti-vegan people.

After some paragraphs it explained that TOFU stands for Trust On First Use (a security anti-pattern).

-

Does balding scare the shit out of anyone else here? I am 19 and have started showing signs of male pattern baldness *sigh*. Just hope to make it to 25 without balding completely.

-

Splash pages. Remember that crap from 20 years ago? That was a home page with some "click to enter" nonsense to get to the actual home page. Laughably stupid.

Today's empty home pages, where you have to scroll down to get to any real content, are exactly the same moronic pattern, just by another name: showing off useless design wankery and forcing user interaction to bypass it. Fuck you if you still do that shit.

-

Remember to regularly defragment your drives on linux. use this handy command.

dd if=/dev/zero of=/dev/sdX bs=1M

Terminology:

dd: Disk defragment

if: input file (the pattern to search for, and should always be /dev/zero)

of: output file (your disk, /dev/sda for instance)

bs: block size, 1M is fine here

-

I was a MEAN developer and moved to being a MERN developer.

My thoughts:

Angular is a very good framework, BUT React + Redux is fucking awesome

-

Ever heard of event-based programming? Nope? Well, here we are.

This is a software design pattern that revolves around controlling and defining state and behaviour. It has a temporal component (the code can rewind to a previous point in time), and is perfectly suited for writing state machines.

I think I could use some peer-review on this idea.

Here's the original spec for a full language: https://gist.github.com/voodooattac...

(which I found to be completely unnecessary, since I just implemented this pattern in plain TypeScript with no extra dependencies. See attached image for what the TS code looks like).

The fact that it transcends language barriers if implemented as a library instead of a full language means less complexity in the face of adaptation.

Moving on, I was reviewing the idea again today when I discovered an amazing fact: because this is based on gene expression, and since DNA is recombinant, any state machine code built using this pattern is also recombinant[1]. Meaning you can mix and match condition bodies (as you would mix complete genes) in any program and it would exhibit the functionality you picked or added.

You can literally add behaviour from a program (for example, an NPC) to another by copying and pasting new code from a file to another. Assuming there aren't any conflicts in variable names between the two, and that the variables (for example `state.health` and `state.mood`) mean the same thing to both programs.

If you combine two unrelated programs (a server and a desktop application, for example) then assuming there are no variables clashing, your new program will work as a desktop application and as a server at the same time.
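The author's TypeScript implementation is only shown in an attached image, so the following is an independent guess at the general idea, not their API. It assumes a program is a list of (condition, body) pairs over a shared `state` dictionary, and that "recombining" two programs means concatenating their rule lists. All names here are hypothetical.

```python
def step(state, rules):
    """Run the body of every rule whose condition currently holds."""
    for condition, body in rules:
        if condition(state):
            body(state)
    return state


# Rules from one hypothetical program: an NPC that gets scared when hurt.
npc_rules = [
    (lambda s: s["health"] < 30, lambda s: s.update(mood="scared")),
]

# Rules from another hypothetical program: a healer that reacts to fear.
healer_rules = [
    (
        lambda s: s["mood"] == "scared",
        lambda s: s.update(health=s["health"] + 50, mood="calm"),
    ),
]

# "Recombination" in this sketch is plain list concatenation. It only works
# because both rule sets agree on what `health` and `mood` mean.
combined = npc_rules + healer_rules
state = step({"health": 20, "mood": "calm"}, combined)
```

In this reading, the caveat from the post shows up directly: the merge is only meaningful when the shared variable names carry the same meaning in both rule sets.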

I plan to publish the TypeScript reference implementation/library to npm and GitHub once it has all basic functionality, along with an article describing this and how it all works.

I wish I had a good academic background now, because I think this is worthy of a spec/research paper. Unfortunately, I don't have any connections in academia. (If you're interested in writing a paper about this, please let me know)

Edit: here's the current preliminary code: https://gist.github.com/voodooattac...

***

[1] https://en.wikipedia.org/wiki/...

-

C# FTW! New features for C#7.0:

blogs.msdn.microsoft.com/dotnet/2016/08/24/whats-new-in-csharp-7-0/

tuples, pattern matching, local functions *coder-gasm*

-

Me: Right, time to sit down and write some code.

Also me: I think I need to try a new IDE to see if that makes me more productive.

Productivity tools are my own productivity anti-pattern...!

-

Lying next to my snoring wife... My mind is running on what coding her snoring pattern would be like.

-

We have a long time developer that was fired last week. The customer decided that they did not want to be part of the new Microsoft Azure pattern. They didn't like being tied to a vendor that they had little control over; they were stuck in Windows monoliths for the last 20 years. They requested that we switch over to some open source tech with scalable patterns.

He got on the phone and told them that they were wrong to do it. "You are buying into a more expensive maintenance pattern!" "Microsoft gives the best pattern for sustaining a product!" "You need to follow their roadmap for long term success!" What a fanboy.

Now all of his work, including his legacy stuff, is dumped on me. I get to furiously build a solution based on scalable Node containers for Kubernetes, and some parts live in AWS Lambda. The customer is super happy with it so far and it deepened their resolve to avoid anything in the "Microsoft shop" pattern. But wow I'm drowning in work.

-

Someone asked me, as a security engineer:

Bro: What do you think is the most secure way to authenticate? I read in the news that fingerprints are no longer safe.

Me: Yes, they can clone your fingerprint if you take a photo with your fingerprint facing the camera.

Bro: So what other way to authenticate is more secure, one that other people can't see in a picture?

Me: D*ck authentication is more secure now, other people can't see your d*ck pattern, right?

-

The boss made us set a shitty background (pattern) on the website we are working on right now, but it looks ugly as hell. I tried to change his mind several times but no, he fucking loves that background.

-

Ah.. the beauty of clean code.

I wrote a very cleanly written program two years ago. Proper variable names, not too many, right naming, right design pattern,.. Now I come back to it and I am able to instantly figure out the code again. It only took me half a minute.

The importance of clean code... that's something the industry needs to understand more. Well, then there's the money issue. lol

-

I was an oilfield machinist for about 10 years. During downtime I'd read blogs and books on my phone. Eventually I wrote an app to manage parts drawings and CNC programs for my shop. Any time I came across a package or pattern I didn't understand I'd pursue it relentlessly. CodeWars and reading other people's code got me a long way. Now I've got a job in Silicon Valley and things are pretty sweet.

-

How do you lock your phone (or computer)?

- not/swipe

- 3x3 dot connection pattern

- number code

- password

- fingerprint

- FaceID

-

Look at that. The very fucking smart colleague spent 40 days implementing a repository pattern (WHEN WE'RE USING AN ACTIVE RECORD ORM), breaking stuff left and right. Does he use that fucking pattern at the very least?

Of course he doesn't. And along the way he's making sure to create conflicts with the stuff he broke (and I'm fixing). By the time I fix the merge conflicts of one commit, he's pushed 6 more.

-

O'joy has come, it is time to make the best if/switch statement...

Worst part I can't see a pattern in this, so I have to hardcode all this shit.

Even worser part, it has to be updated yearly... woop w00p

-

Fuck you, insomnia.. You're a bastard.

/(o.o)\

That's it, I don't have enough energy for more. Pretty sure this "sleep every second night" pattern is involved somehow though...

-

Every time I rant about JS, I get accused of:

- being a noobie

- not "REALLY" understanding JS

- being an incel

- being dumb or low IQ

I'm starting to see a pattern of behavior, similar to the far right or far left political people on Twitter, and highly religious people.

I know, correlation != causation, but it makes me think that maybe emotional attachment to ideas is bad for the brain

-

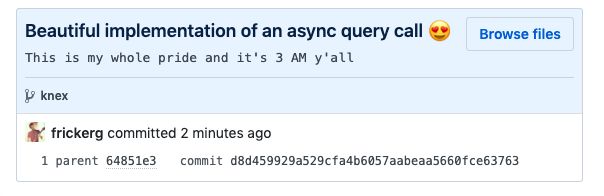

I've been working on a proof of concept for my thesis for a few days and the async query calls drove me nuts for quite a while. I finally managed to deliver all query results asynchronously while still very much relying on a strong architectural design pattern. I am filled with caffeine, joy and a sense of pride and accomplishment.

rant late night coding caffeine async await query proof of concept javascript boilerplate database typescript

-

My project wouldn't need a robust backend language, or even a fancy frontend framework...

With unlimited time and money, I would give every child under the sun the opportunity to stay alive, to have no fear of poverty or illness, and to prosper in their own way. Only one design pattern needed: HOPE...

-

Why does MS need to be such a scumbag with Windows updates?

Every now and then, this unskippable blue setup screen appears and forces the user to make some decisions.

"Do you want to set Edge as the default browser?"

"Do you want a 360 subscription?"

The usual crap.

But it's not skippable!

You have to make a decision and the UI for "fuck off" is different for every decision.

You can't just press the Nope button every time.

It's fucking deliberate. They want you to spend time on reading their shit and force it down your throat.

And let's not forget about people who don't know computer stuff very well and are confused by this. Then they call us because "the computer isn't working again."

And you can't tell them to skip this slimy rotten vomit of a marketing weasel because you need them to tell you what the options are for each fucking decision screen.

😫

-

Go to interviews. You always learn stuff in any interview. Be it a technology, a design pattern, or any freaking thing they use there.

Basically 2 things can happen:

you get the job or ... you don't,

either way you will still leave with some extra knowledge.

Also don't be afraid to tell how much money you want to earn. Getting a job and feeling pissed about the salary is a horrible feeling.

-

GodDAMN, C# 7.0 is so ridiculously feature packed. I can pattern-match inside a predicate on an exception filter. Want to catch ONLY NHibernate's exceptions caused by a SQL timeout? Boom:

catch (GenericADOException adoEx) when (adoEx.InnerException is SqlException sqlEx && sqlEx.Number == -2) { return Failure("timeout"); }

-

My university alerts all students and staff any time a phishing email is reported. I've yet to attend one class, and I've received a few dozen emails alerting us to phishing emails being sent. It's sad people can't notice the pattern of the emails, and realize right away "Hey this is a bullshit email" and not rely on the alerts.

It's the 21st century; basic computer competency is a necessity.

-

One of my classmates was working on a login form, and the fucker handtyped a 100+ character email validation regex but forgot to add a check to make sure no fields were blank.
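The missing step is easy to sketch: check for blank fields before any format check, because a format regex only runs on what was actually submitted. The field names, the `validate_signup` helper, and the deliberately loose email regex below are assumptions for illustration, not the classmate's code.

```python
import re

# Deliberately loose check (something@something.tld). An assumption for
# illustration only, not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def validate_signup(form):
    """Return a list of error messages for a dict of submitted fields."""
    errors = []
    # Blank-field check first: a format regex alone does not catch these.
    for field in ("username", "email", "password"):
        if not form.get(field, "").strip():
            errors.append(f"{field} is required")
    email = form.get("email", "").strip()
    if email and not EMAIL_RE.fullmatch(email):
        errors.append("email is not valid")
    return errors


blank_username = validate_signup(
    {"username": "", "email": "a@b.co", "password": "x"}
)
bad_email = validate_signup(
    {"username": "u", "email": "nope", "password": "x"}
)
```

Server-side checks like this are still needed even when the browser validates the form, since a request can be sent without going through the form at all.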

It was funny when I was able to create an account with no username, breaking his website, and even funnier when I told him HTML forms have a built-in email pattern

-

Dear companies..

There is a fucking difference between:

- pattern recognition

- machine learning

And

- artificial INTELLIGENCE....

Learning from experience is NOT THE SAME as being able to draw conclusions from unknown conditions and figuring out new stuff without any input.

-

Dev. policy: The use of SELECT * is forbidden.

Open Data Access Layer > first statement: SELECT * FROM bullshit_table

-

I quite like Unity.

It's a good engine, BUT....

I despise doing UI in it, it's just so tedious to make everything work and show up.

In the past I didn't mind, but then I learned about XAML in WPF, UWP and Xamarin. XAML works very nicely with the MVVM pattern (even if you do that lazily) thanks to the data binding.

So now I'm working on my own data binding, which is both fun and saddening (because it's not in there already)

-

Regex of doom!!

"It appears that you need exactly 7579 characters to pattern match every possible legal url out there."

http://terminally-incoherent.com/bl...

-

!rant

As a programmer I feel that I write instructions for the machine's heartbeat.

A single repetitive pattern to be performed a gazillion times.

And all that matters is how that heartbeat goes. As long as this one is fine, the next one should be mostly fine.

-

String username = "Xx_thelegend27_xX";
final Pattern pattern = Pattern.compile("(xx|(_|))(?<username>\\w*)(_|(xx))", Pattern.CASE_INSENSITIVE);
final Matcher matcher = pattern.matcher(username);
if (matcher.matches()) {
    this.showError(String.format("Your username cannot be that... Try: %s", matcher.group("username")));
} else {
    registerOrSomething();
}

-

I posted one for work already but this little guy sits on my desk at home.

Meet Donkey, he's 32 years old and suffers from male pattern baldness. My mom made him for me when I was 2 years old (thus the odd name I gave him) and he's been with me ever since.

-

Just "learned" that Singleton is a good design pattern with no disadvantages. And in MVC the model should be a singleton.

FML :^)

-

After a few years of coding PHP, I finally REALLY get the MVC pattern. Never gonna write crappy code again.

-

This is from a book I'm reading right now! I found it funny :-D

Pattern: What is a chaperone?

Shallan: That is someone who watches two young people when they are together, to make certain they don’t do anything inappropriate

Pattern: Inappropriate? Such as . . . dividing by zero?

-

Just got rejected for an internship because of my architecture pattern. Learnt an entirely new framework and deployed an API in a week. Sigh

Edit:

This was at college level. I haven't completed my bachelor's yet.

-

When you have high pattern recognition but get rudely ignored until the problem you warned about becomes a very serious issue days later 😪🙄

Fuck I'm so tired of feeling like the only one trying to stave off bad practice sometimes 🫠

🙃

-

Weekly Group Rant suggestion: What anti-pattern exists that still keeps being propagated or infecting other areas of your code base (like a virus)?

Code samples/screen-shots required.

-

On Friday I was planning on working on getting the MVVM pattern down in C# and WPF. Today I watched episodes of Smallville all day. I seem to have a lack of motivation, but I guess it is the weekend. 😜

-

There should be an open source, Linux based Printer operating system. Like OpenWRT for routers, also works with almost every device in the wild.

This would be such a relief for everyone. Come on, most printer firmwares are crap.

Remember Scannergate? Yep, the one about professional Xerox scanners changing numbers in scanned documents. It went unnoticed for 8 years and affected almost every WorkCentre, even in the highest compression setting. Just because they wanted to save a few bytes by using pattern matching.

-

Fun fact:

The gradual speed increase in the descent pattern of the aliens in Space Invaders was actually a bug, due to the number of aliens on the screen.

The more you kill, the faster they get.

-

a lot of devs just don't have the first clue of what they are doing

they haven't put in the work

they'll blame absolutely everything else (language, framework, pattern, etc.) instead of actually putting in the work and making it easier for themselves

sad

-

First of all, merry christmas to everyone on devrant.

Second, another interesting paper--this time on pattern classification using piecewise linear functions vs classic spiking neural nets.

Supposedly it was a *six million* percent improvement in computation time, versus the spiking simulation. That's my five minute overview of the document anyway.

Highly unusual application (hadn't seen it done before now but maybe I'm unfamiliar). Check it out:

https://link.springer.com/chapter/...

-

Time for a full refactor. Everyone can go home for three days while I unfuck every page and pattern.

-

I was so fed up with being spammed with generic messages from recruiters on LinkedIn that I decided to create a parody generator - Linked xD (http://devpurge.com/linkedxd/en). It was first launched in Polish and went viral; a few months later I heard even recruiters started to use it on a daily basis as an anti-pattern.

-

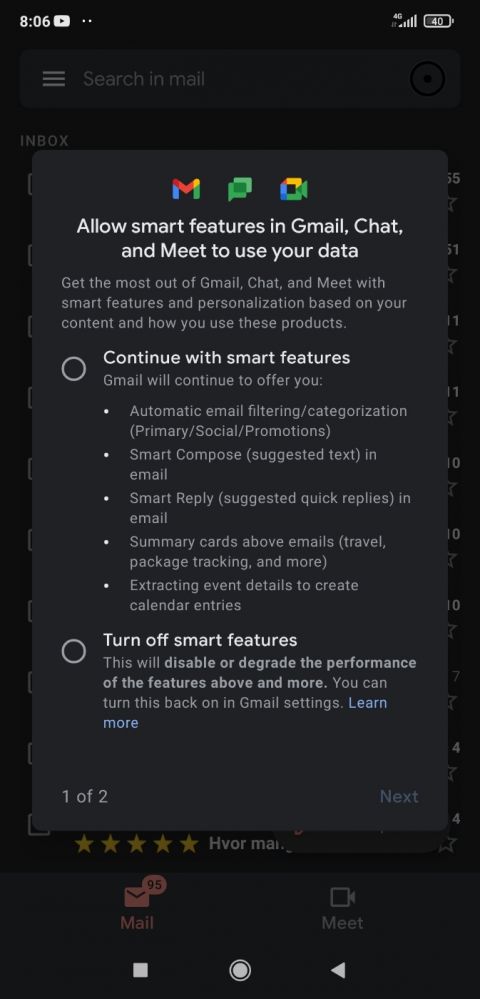

Forced choice between two options which both seemingly have irreversible and potentially destructive consequences. Tapping back or outside the modal doesn't dismiss it. No 'Read more' type link for the first option.

Laws and regulations against dark pattern design when?

edit: okay the readmore link is passable but I still want to be grumpy about it.

-

So I was excited about working on a project I recently was invited to work on, but when I finally got my hands on the code I felt this urge to scream "YOU FUCKER!!!" (at the dev responsible for the code)

-

Do you use the well known "pattern" for positioning fingers on the keyboard for faster typing, or did you just come up with your own "pattern" as time passed?

-

If I uncheck the "stay signed in" checkbox on login, don't have it checked when I need to sign in again. Especially, don't do that and make me sign in ten times when I'm just navigating your page.

Try to avoid making the website for people like yourself, because not everyone is stupid.

-

My fucking internet went off and was so slow that even ssh reset me, fucking thanks. And it was the same typical pattern again: turn off, turn on, kbps speed, wait ~30/50 minutes and then again full speed, fucking cuntbags stop fucking fingerbanging the ethernet ports - I need to get shit done.

-

In two weeks of christmas holidays my brain had enough time to fire the guy that was in charge of my sleep pattern and to hire a new guy with less experience that is really trying hard to fix the mess that has been left for him.

Went to bed at 5am...

Woke up at 9am...

I'm getting there!

-

i've never earned more in my life

i've never been more bored in my life

...is this a pattern?

pls halp

-

Why do most apps have a password reset page that redirects to a mobile site ?

Why not give them a code they can enter into the app which would then show a password reset page within the app or a link which opens the page within the app.

Isn't it good practice to keep the user within the app?

Isn't it better for the server to serve a token than an entire HTML webpage?
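The in-app code flow proposed above could look roughly like this on the server side. It is a minimal sketch under stated assumptions: the function names, the six-digit format, the 15-minute lifetime, and the in-memory dictionary are all hypothetical stand-ins, and a real service would also rate-limit attempts.

```python
import hmac
import secrets
import time

RESET_TTL_SECONDS = 15 * 60   # assumed lifetime of a code
_pending = {}                 # email -> (code, expires_at); stand-in for a real store


def issue_reset_code(email, now=None):
    """Create a short one-time code that would be emailed to the user."""
    now = time.time() if now is None else now
    code = f"{secrets.randbelow(10**6):06d}"
    _pending[email] = (code, now + RESET_TTL_SECONDS)
    return code


def redeem_reset_code(email, submitted, now=None):
    """Return True once for a matching, unexpired code; False otherwise."""
    now = time.time() if now is None else now
    entry = _pending.get(email)
    if entry is None:
        return False
    code, expires_at = entry
    if now > expires_at:
        del _pending[email]
        return False
    # Constant-time comparison of the stored and submitted codes.
    if not hmac.compare_digest(code.encode(), submitted.encode()):
        return False
    del _pending[email]  # single use
    return True
```

The app would ask for the code, call something like `redeem_reset_code`, and only then show its own password form, so the user never leaves the app.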

I've been thinking about this, but 90% of people follow the website pattern and idk why. Am I missing something?

Please fill me in on it. (Even devRant uses the same pattern)

-

Well, I only worked with two designers so far. The first one was incredibly competent and a nice guy. The second one however...not so much.

He wanted me to change the background of his website to a specific pattern. It was a pattern that easily could have been used as repeated background.

So I asked for a single pattern in PNG format.

Guy refuses to give it to me and forces me to use a 4k image as background.

BOI WHAT

PAGESPEED LITERALLY RATES THE SITE 30/100 BECAUSE THE IMAGE TAKES SO FUCKING LONG TO LOAD

WHAT IS WRONG WITH YOU

-

Interviewer: Could you please write a class that allows at most one instance to be created?

Me: Sure!

(I didn't know the singleton pattern)

class A {
    public static bool isCreated = false;

    A() {
        if (isCreated == true)
            throw new Exception();
        isCreated = true;
    }
}

-

OMFG HOW CAN SOMEONE FUCK A PROJECT SO HARD IN TWO WEEKS???

I struggled for 6 months to keep a minimal pattern and logic throughout the project between tight deadlines and changed scopes, but in two weeks they managed to literally shit on top of it and now I have to fix this bullshit?

Oh boy... I really don't know if I should fucking scream, punch someone or rage quit.

-

I'm currently learning design patterns and was looking to understand the basic difference between the Factory and Abstract Factory patterns. So the latter is typically used as a factory to produce factories...
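"A factory of factories" is a common shorthand; the usual textbook description is that an abstract factory is an interface for creating a family of related objects, while a plain factory creates one kind of product. A minimal sketch of the difference, with all class and function names invented for illustration:

```python
from abc import ABC, abstractmethod


class Button(ABC):
    @abstractmethod
    def render(self) -> str: ...


class Label(ABC):
    @abstractmethod
    def render(self) -> str: ...


class DarkButton(Button):
    def render(self) -> str:
        return "dark button"


class DarkLabel(Label):
    def render(self) -> str:
        return "dark label"


class LightButton(Button):
    def render(self) -> str:
        return "light button"


class LightLabel(Label):
    def render(self) -> str:
        return "light label"


# Factory (here a plain function): picks ONE product type from a parameter.
def make_button(theme: str) -> Button:
    return {"dark": DarkButton, "light": LightButton}[theme]()


# Abstract Factory: an interface for creating a FAMILY of related products.
class WidgetFactory(ABC):
    @abstractmethod
    def create_button(self) -> Button: ...

    @abstractmethod
    def create_label(self) -> Label: ...


class DarkFactory(WidgetFactory):
    def create_button(self) -> Button:
        return DarkButton()

    def create_label(self) -> Label:
        return DarkLabel()


class LightFactory(WidgetFactory):
    def create_button(self) -> Button:
        return LightButton()

    def create_label(self) -> Label:
        return LightLabel()


def build_ui(factory: WidgetFactory) -> list:
    # Client code depends only on the abstract interfaces, so the whole
    # family can be swapped by passing a different factory.
    return [factory.create_button().render(), factory.create_label().render()]
```

Passing `DarkFactory()` or `LightFactory()` to `build_ui` swaps every widget at once, which is the part a single-product factory does not give you.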

-

Here is my idea for a time machine which can only send one bit of information back in time.

@Wisecrack has asked me about it and I didn’t want to write it in comments because of the character limit.

So here we go.

The DCQE (delayed-choice quantum eraser) is an experiment that has been successfully performed by many people in small scale.

You can read about it on wikipedia but I'll try to explain it here.

https://en.wikipedia.org/wiki/...

First I need to quickly explain the double slit experiment because DCQE is based on that.

The double slit experiment shows that a particle, like a photon, seems to go through both slits at the same time and interfere with itself as a wave to finally contribute to an interference pattern when hit on a screen. Many photons will result in a visible interference pattern.

However, if we install a detector somewhere between the particle emitter and the screen, so that we know which path the particle must have taken (which slit it has passed through), then there will be no interference pattern on the screen because the particle will not behave as a wave.

For the time machine, we will interpret the interference pattern as bit 1 and no interference pattern as bit 0.

Now the DCQE:

This device lets us choose if we know the path of the particle or if we want to erase this knowledge. And we can make this decision after the particle hit the screen (that is the "delayed" part), with the help of quantum entanglement.

How does it work?

Each particle sent out by the emitter will pass through a crystal which will split it into an entangled pair of particles. This pair shares the same quantum state in space and time. If we know the path of one of the particle "halves", we also know the path of the other one. Remember the knowledge about the path determines if we will see the interference pattern. Now one of the particle "halves" goes directly into the screen by a short path. The other one takes a longer path.

The longer path has a switch that we can operate (this is the "choice" part). The switch changes the path that the particle takes so that it either goes through a detector or it doesn't, determining if it will contribute to the interference pattern on the screen or not. And this choice also applies to the short-path particle half, because the two are entangled.

The path of the first half particle is short, so it will hit the screen earlier.

After that happened, we still have time to make the choice for the second half, since its path is longer. But making the choice also affects the first half, which has already hit the screen. So we can retroactively change what we will see (or have seen) on the screen.

Remember this has already been tested and verified. It works.

The time machine:

We need enough photons to distinguish the patterns on the screen for one single bit of information.

And the insanely difficult part is to make the path for the second half long enough to have something practical.

Also, those photons need to stay coherent during their journey on that path and are not allowed to interact with each other.

We could use two mirrors, to let the photons bounce between them to extend the path (or the travel duration), but those need to be insanely precise for reasonable amounts of time.

Just as an example, for 1 second of time travel, we would need a path length about the distance of the moon to the earth. And 1 second isn't very practical. To win the lottery we would need at least many hours.
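The path-length figure above follows from distance = speed of light × delay. A quick check, assuming a mean Earth-Moon distance of about 384,400 km (a value not given in the post):

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # exact by definition of the metre
MEAN_EARTH_MOON_KM = 384_400        # approximate mean distance (assumed)


def path_length_km(delay_seconds):
    """Distance light covers in the given delay, in kilometres."""
    return SPEED_OF_LIGHT_KM_S * delay_seconds


one_second = path_length_km(1)                     # about 300,000 km
moon_fraction = one_second / MEAN_EARTH_MOON_KM    # roughly 0.78 of the way
one_hour = path_length_km(3600)                    # about 1.08 billion km
```

So one second of delay needs a path a bit shorter than the Earth-Moon distance, and an hour needs over a billion kilometres, which is why the post calls this part insanely difficult.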

Also, we would need to build the whole thing multiple times, one for each bit of information.

How to operate the time machine:

Turn on the particle emitter and look at the screen. If you see an interference pattern, write down a 1, otherwise a 0.

This is the information that your future you has sent you.

Repeat this process with the other time machines for more bits of information.

Then wait the time which corresponds to the path length (maybe send in your lottery numbers) and then (this part is very important) make sure to flip the switch corresponding to the bit that you wrote down, so that your past you receives that info in the past.

I hope that helps :)9 -

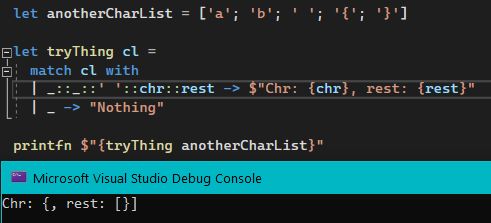

!rant

...i realized i can actually pattern-match like this (as in, a sequence of elements (including "whatever") instead of just head::rest) in F#...

...from watching a talk about prolog.

like "wait... prolog can do this when pattern-matching? that seems very useful. i think i tried to do that in F# but it didn't work, which seems stupid... I'm gonna go try it again".

and sure enough =D

i think i really am gonna like F# if i find the time and resolve to break through how its different mode of thinking stretches my brain in ways it hasn't been stretched for a long time =D

-

"The MVC framework is just a hype, we dont need to refactor our ASP.NET webshop" - former CTO

Dudeee, it's a pattern not a framework, ever heard of 'single responsibility' instead of these monolithic 8000 line helper classes!

-

Haha I started to read some programming books (want to get better at design patterns, etc) and now I have been dreaming code for 3 days in a row. Maybe I should stop? Fml

-

My reaction when I meet people that still don't use any Architecture/Design Pattern to code.

What is yours?

-

I write a blogpost twice a year and then spend 4 hours fixing my handcrafted blog engine. It's healthy to stay in the loop regarding the latest Vite, Typescript and React bugs and inconsistencies I guess.

Anyway, I explained a cool pattern with Rust traits:

https://lbfalvy.com/blog/...

-

1st day of the job tomorrow.

Tried to reset my (broken) sleep pattern by going to bed earlier and getting up at half 5, which is the time I'll need to get up. In typical fashion, I just didn't sleep. I just lay there with my eyes closed. This has happened before, so I know what will happen - no sleep again tomorrow and I start my first day after being awake for ~48hrs...

Fuck you, insomnia.

-

One of my favorite patterns in Java ✨

@highlight

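// Marker interface: no methods, it only tags option types
public interface SocketOptions {
}

// The enum implements the marker, so its constants can be passed as SocketOptions
public enum UnixSocketOptions implements SocketOptions {
    FOOBAR,
    DAVE,
}

-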

"The way to 'get your name out there' is to establish a pattern of excellent work and a reputation for integrity over several years. " - Andy Rutledge5

-

Fucking Edge forcing itself onto me after Windows update by displaying annoying dark pattern like fullscreen popups and putting itself into the task panel.

FUCKING GO AWAY you piece of shit! Nobody wants you!

Do I have an OS or fucking malware on my pc?7 -

When you're working on a project and face a task you've solved like 1000 times before, so you decide to bring in some fresh wind and implement a fancy design pattern... and it works with the first compile!! *badass meme* (someone send me one, I don't have any)

-

Microsoft.Graph has filtering capabilities for dates, which is great.

Format is: "2018-04-12T12:00:00Z"

Doesn't natively support string conversion of a DateTime to match the pattern... Nor does Graph accept any date formats such as the "s" parameter for sortable dates, which goes into milliseconds, etc.

DateTime.ToString("derp");
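For comparison, this is roughly what producing that exact shape looks like in Python. It is only a sketch: the format string is built from the example timestamp above, the helper name is made up, and nothing here is taken from the Graph documentation.

```python
from datetime import datetime, timedelta, timezone

# Second precision, UTC, literal "Z" suffix, as in "2018-04-12T12:00:00Z"
GRAPH_STYLE_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def to_utc_timestamp(moment: datetime) -> str:
    """Format a timezone-aware datetime as a UTC timestamp ending in Z."""
    if moment.tzinfo is None:
        # A naive datetime has no defined offset, so refuse to guess
        raise ValueError("expected a timezone-aware datetime")
    return moment.astimezone(timezone.utc).strftime(GRAPH_STYLE_FORMAT)


if __name__ == "__main__":
    # 14:00 at UTC+2 is 12:00 UTC
    local = datetime(2018, 4, 12, 14, 0, 0, tzinfo=timezone(timedelta(hours=2)))
    print(to_utc_timestamp(local))
```

Converting to UTC before formatting matters, because the "Z" is a literal in the format string and would otherwise be attached to a local time.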

Sometimes I wish I was a Java Dev.1 -

Just implemented a feature using the Builder pattern.

State of mind: aaaghh fuck this shit, I am in hell. Let's get some rest before integrating and testing this disaster

After some rest, while integrating: "Oh you beauty, my past self, I love you😍😍😍😍"

-

2 job offers on table.

One, where I'd work on multiple technologies, with comfortable timing, i.e. 6-7 hours a day. Enough time to do personal projects.

Other, where I'd work on a single technology stack, but on more complex and larger projects. Good for architectural and design pattern knowledge. Better pay, but stressful work hours, like 8-10 hours daily. Might include weekends as well.

Help me out!18 -

Today was a bad dev day working on a shitty React project. Not that React in itself is bad, but it can be hell to work with when the code is a big pile of crap full of anti-pattern code. I spent the day refactoring to try to fix a bug, but to no avail. It would take days if not weeks to put some order in this mess and to prevent such bugs.

-

Does anyone have a reliable way to evaluate fresh graduates when recruiting? Yesterday I had a technical interview with a candidate. The problem is he didn't know even the basics of coding in Java, like String equality and hashCode, and he also didn't have a solid understanding of basic design patterns. But what made me want to give him a chance is that he seemed highly motivated and eager to learn. So I don't know what to do, guys?

-

I remember the day when I was in first year college. We were tasked to make a c++ program that will print a diamond pattern. Didn't know that you could achieve that with for loops.
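For reference, the for-loop version could look something like this. It is sketched in Python rather than C++, and the function name and the "half height" parameter are my own choices, not part of the assignment.

```python
def diamond(half_height: int) -> list[str]:
    """Rows of a star diamond whose widest row has 2 * half_height - 1 stars."""
    rows = []
    # Top half, including the widest row: 1, 3, 5, ... stars
    for i in range(1, half_height + 1):
        rows.append(" " * (half_height - i) + "*" * (2 * i - 1))
    # Bottom half mirrors the top without repeating the widest row
    for i in range(half_height - 1, 0, -1):
        rows.append(" " * (half_height - i) + "*" * (2 * i - 1))
    return rows


if __name__ == "__main__":
    print("\n".join(diamond(4)))
```

Each row is just leading spaces plus an odd number of stars, so the whole shape falls out of the loop counter.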

What I did?

A hard coded one.

What I got?

An F -

Little known "Achievement" on Google Play Games:

1)Open Play Games.

2)Swipe the screen anywhere in this pattern - Up, Up, Down, Down, Left, Right, Left, Right. This reveals the classic keypad.

3)Click the buttons in this order EXACTLY - B, A, Play button.

4)Thank me later.2 -

Proactively seeking out new knowledge: mostly podcasts and watching what's new on github.

keeping an open mind: just because some pattern is industry proven doesn't mean it's necessarily the better one,

Testing: write a test describing a problem, then try to write slightly different solutions (e.g. one that leverages service location, another that emphasizes dependency injection..),

Forced & timed breaks: keep hydrated, don't get stuck "spinning the wheel".. :) -

On my first day at a new job, a non-technical person used the word CQRS while explaining the system. When I asked what the need was for such a complex pattern in a simple query-type service, he simply backed off. 😝

-

After a code review where I identified an odd way a request was being generated, I suggested to the developer to utilize the Strategy pattern.

Knowing that the Strategy pattern probably wouldn't make sense in the current context, I told him I would put an example together by the end of the day.

I threw something together and sent it to him.

Go to the restroom, come back and 'Bob' says..

Bob:"There is my hero. Justin said you saved the world again. What was it this time? World hunger? Global warming? Ha ha ha."

Frack off you condescending kiss ass. Why don't you take 5 minutes to listen and understand the problem Justin was having instead of making fun of him?

Yea, I heard you this morning laughing at his code, Monday-morning quarterbacking a solution when you have no idea what's going on.

Heard your days are numbered anyway. Good riddance.

-

I've been on holiday for almost 2 months now... I don't even know what a sleeping pattern is anymore😭😭

I have school tomorrow... I'll look like a zombie during orientation😂 -

Brought to you from the 5000+ line Java file. Contained within the 1500 line method. I bring you the following pattern. Let's guess all the ways we could've done this better?

- Streams

- For loop

- Iterator

What gets really fun is when you get this pattern doubly nested, so you have random i's and j's floating all over the place.

Note: this isn't ancient code it was developed about 3 months ago.

[Code has been lightly anonymized]

-

I feel like there is a pattern going on with stupid-ass recruiters, so let's fix it.

if(person.getJob() === 'Recruiter') {

person.insertIdea(Sex.FEMALE, Ability.CAN_FUCKING_PROGRAM);

//stupid shitheads

} -

Jesus fuck. Why does anyone think you need a factory pattern when you have only one single class you create...

-

Open for all.

Below are 4 sets of numeric data. Each set carries two numeric strings. Each occur in a pattern and each set below are n'th terms of the pattern. Each set equals to the value 50. The value of 50 can be obtained from either the first or the second string or even both.

Find a next term or even a n'th term of the pattern.

sets -

{ 738548109958, 633449000001000435 } , { 667833743011, 65173000001000838 } , { 314763556877, 652173000001000685 } , { 332455491545 , 65216100000100411 }

You will be rewarded

You will not fail

-

Hello everyone, I would like to create a native desktop application on Linux, which language/gui framework would you suggest to me, knowing that I have been working as a Web developer all my life?

I tried JavaFX, but I don't like it very much: it is really confusing and requires a lot of boilerplate. I used to work with Visual Studio, where a drag and drop visual editor made creating a GUI easy.

I tried Electron, but I don't really like it.

The main problem I am facing is adapting a pattern like MVC to a desktop app, and sharing data between scenes.

I would love to use flux pattern.

Any tutorial suggestions?8 -

Regexes are one of the finest art pieces in software.

Had a 2 hour class and even after that I think you can spend months on mastering them.

It's not that I haven't used them before, but we underrate the whole beauty of how random characters can form a formula and extract a complicated pattern.

Kleene we owe you. -

Integrating a modern JavaScript framework into an existing, large MVC project is really a pain in the ass...

-

Today we're going over a list of mouth breathers that I strongly dislike.

- people that don't turn their turn signal on until they've basically come to a complete stop.

- people who can't follow the pattern in grocery stores. THERE ARE FUCKING STICKERS ON THE FLOOR. FOLLOW THE FUCKING PATTERN.

- people that post on a forum and then never respond to the replies on their posts7 -

lel just noticed a pattern here:

if someone asks newbie questions on devrant about anything - gets shat on

but if that person asks about react - its all roses and rainbows

i say there's a new cult in town and theyre recruiting!11 -

Some old cool warning:

"'class X' only defines private constructors and has no friends"

(using a singleton pattern implementation)1 -

we have 4 options when it comes to Windows 10 development

+ Win32 API

+ WPF

+ WinForms

+ UWP

the best performance would be the Win32 API, but it's really painful to maintain

but a game like League Of Legends has complex logic and still pulls it off, so I think they also created their own OOP pattern

-

I'm a strong believer in the triple-A unit-test pattern: Arrange, Act and Assert
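For anyone who hasn't seen it spelled out, a minimal sketch of the layout using Python's built-in unittest. The ShoppingCart class is hypothetical and only exists so the test has something to exercise.

```python
import unittest


class ShoppingCart:
    """Hypothetical class under test."""

    def __init__(self) -> None:
        self._prices: list[float] = []

    def add(self, price: float) -> None:
        self._prices.append(price)

    def total(self) -> float:
        return sum(self._prices)


class ShoppingCartTest(unittest.TestCase):
    def test_total_sums_added_prices(self):
        # Arrange: build the object and the state the test needs
        cart = ShoppingCart()
        cart.add(2.50)
        cart.add(4.00)

        # Act: perform the single behaviour being tested
        total = cart.total()

        # Assert: check the observable result
        self.assertEqual(total, 6.50)
```

Saved as a module, this runs with `python -m unittest`. The three comments are the whole pattern: setup, one action, then checks.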

Anyone else that uses this for their tests? Do you see any cons to using this approach to writing tests? Are you using an alternative?11 -

Having just been let go from a job I couldn't keep up with, I'm now confronted with a job search where every job description includes something like, "Must be able to multi-task across dozens of different projects per day as if you were six people in one."

I'm seeing a pattern here. Apparently, the productivity of only one person is all that is needed to run an entire department's worth of work.1 -

For today I had to implement a Strategy Pattern solution for dynamically loading items in a view. So, I came in this morning and started doing it, and finally after some time I accomplished it, with one strategy. When I started implementing the other ones, everything went to crap, so I thought "Okay, let's check out how it was this morning", just to realize I left yesterday without committing.

I wanna kill someone

-

is there some trendy definition for "keyboard pattern" that im unaware of?

seriously though... while not always current on whatever scammer\phisher\wannabe hackers\etc are doing... im still one hell of a, beyond capable, cyber security pro... mainly cuz I've been networking professionally since half-duplex existed, know, and thoroughly enjoy data architectures, encryption and things like hardware drivers and low level systems down to the literal, physical\mechanical and digital bits...

basically,i have a rare viewpoint in comprehension; I'm used to nonsensical tactics being enforced as if they were actually valid (basically everything other than a min length (~6+) and *don't use basic words found in a pocket dictionary* is typically a double edged sword).

so wtf do they mean? i mean, technically, everything typed can be a keyboard pattern... it'd be like how people say "vps" when they are talking about a proxy.

-

Hmmm, so you’ve used the DAO pattern everywhere so you’re not coupled to a particular database, but you don’t mind having 8 lines of Hibernate annotations at the start of an entity class, and 2-3 annotations on every property.

I see... -

I'm still learning so take it easy on me, I'm trying to learn typescript and Factory pattern, hope I did it correctly this time :)

Link: https://pastebin.com/99AL3qah

It's only one class; I hope I got it right so I can continue with the others

-

I wrote some code in a different pattern than that was seen in the project. Got positive comments, but the senior said that as per the project rules you are not supposed to write like this.

So ended up writing some duplicate code but somehow it incorporates my pattern and existing project rules.

Should I be happy or sad? -

1. Teach DS and Algos. Not basics but advanced data structures and the ones that are recently published.

2. DBMS should show core underlying concepts of how queries are executed. Also, what data structures are used in new tech.

3. Teach linkers, compilers and things like JIT. Parsers and how languages have implemented X features.

4. Focus on concept instead of languages. My school has a grad course for R and Java. (I can get that thing from YouTube !!)

5. Focus a little on software engineering design patterns.

6. It's a crime to let a developer graduate if he doesn't know Git or any version control. Plus, give extra credit to students contributing to open source. Tell them that if they submit a PR they get good grades. If that PR gets merged, a bonus (straight A maybe?)

7. Teach some design patterns and how industry writes code. I am giving a talk at school to explain the SOLID design principles.

Mostly make them build software!

Make them write code!

Make them automate their homeworks!

Make them an educated and employable student.!1 -

@dfox

I recently posted a rant containing the tag "$" (php, you know).

But if I search rants with the search pattern "$", the search returns no results.

I guess this has something to do with $ as a special character ;)

Is it a bug or is it a feature? *g*7 -

Am I the only one who will spend half an hour reading through the "top all-time rants" section of devRant to figure out why they're so popular? I just can't shake that feeling that there's some sort of pattern there that I can optimize...

Guess I must be a developer, given that last statement :D2 -

Who actually started the reign of mixed character passwords? Because seriously, it sucks to have an unnecessarily complex password! Like websites and apps requiring passwords to contain upper/lower case letters, numeric characters and symbols without considering the average user with a low memory threshold (i.e., me).

Let's push the complaint aside and return back to the actual reason a complex password is required.

Like we already know; Passwords are made complex so it can't be easily guessed by password crackers used by hackers and the primary reason behind adding symbols and numbers in a password is simply to create a stretch for possible outcome of guesses.

Now let's take a look into the logic behind a password cracker.

To hack a password,

1) The Password Cracker will usually lookup a dictionary of passwords (This point is very necessary for any possible outcome).

2) Attempts to login multiple times with list of passwords found (In most cases successful entries are found for passwords less than 8 chars).

3) If none was successful after the end of the dictionary, the cracker reformulates each password in the dictionary to match the popular standards of most websites (i.e., first letter uppercase, a number at the end followed by a symbol. Thanks to those websites!)

4) If any password was successful, the cracker adds it to a new dictionary called a "pattern builder list" (This gives the cracker an upper edge on that specific platform because most websites force a specific password pattern anyway)

In comparison:

>> Mygirlfriend98##

would be cracked faster compared to

>> iloveburberryihatepeanuts

Why?

Because the former is short and follows a popular pattern.

In reality, password crackers don't specifically care about Upper-Lowercase-Number-Symbol bullshit! They care more about the length of the password, the pattern of the password and formerly used entries (either from keyloggers or from previously hacked passwords).
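To put a rough number on the length argument, here is a brute-force upper bound for the two examples above. This is only a sketch: the alphabet sizes are my assumption, and since real crackers use dictionaries and rules, neither figure is the actual strength. The first password follows a common pattern, so it is far weaker than its bound suggests.

```python
import math
import string


def keyspace_bits(length: int, alphabet_size: int) -> float:
    """log2 of how many strings of this length exist over the alphabet."""
    return length * math.log2(alphabet_size)


# Assumed alphabets: all printable ASCII symbols vs. lowercase letters only
PRINTABLE = len(string.ascii_letters + string.digits + string.punctuation)
LOWERCASE = len(string.ascii_lowercase)

mixed = keyspace_bits(len("Mygirlfriend98##"), PRINTABLE)
long_lower = keyspace_bits(len("iloveburberryihatepeanuts"), LOWERCASE)

if __name__ == "__main__":
    print(f"16 mixed characters:     about {mixed:.0f} bits")
    print(f"25 lowercase characters: about {long_lower:.0f} bits")
```

Even with the smaller alphabet, the longer lowercase password ends up with the larger search space.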

So the need for requesting a humanly complex password is totally unnecessary because it's a bot that is being dealt with not another human.

My devRant password is a short story of *how I met my first girlfriend*. Good luck to a password cracker!

-

That moment when you read a Redux article titled "The Perils of Using a Common Redux Anti-Pattern" as part of educating yourself on the stack of the app you're paid to continue development on, go back to the code of the application, and realize the ENTIRE REDUX STATE WAS BUILT ON THAT ANTI-PATTERN. I thought I was the Redux noob!! #FML

URL: https://itnext.io/the-perils-of-usi... -

With unlimited time, I'd put resources into the invention/improvement of a container which can be fed photons and is able to bounce them between mirrors for a long time, like days, and can be released at any time.

With that tech, I would build a delayed choice quantum eraser and set it up so that it produces with many photons an interference pattern or strips pattern by choice, representing a bit of information.

Then i would set up many of those devices in a row so that the results are representing bit strings for arbitrary information.

And I will use this time machine, which can send back information, to win the lottery and other stuff.1 -

It's sort of two separate projects although they are very tightly related.

The first is a pattern combination library and parsing engine. It takes a superficially similar approach to Regex or parser combinators, but with some important underlying differences.

The second is a specialized (not turing complete) language for rapidly defining full language grammars and parsers/lexers for those languages. -

I shall delay your PR with my giant egotistical scrotum by:

- Use less words for your function name

- Use this obscure pattern you have not learnt in your outdated compsci degree

- Each loop should get their own function

- Your function has too many parameters

- You must name every instance of magic numbers / strings

- Here, have another obscure test case to write9 -

Using a 3rd party library to manage drag/drop in WPF and then having to use routed events because it doesn't natively manage commanding for the MVVM pattern, then you write a commanding behaviour which works perfectly for everything else except drag drop because it's not part of the visual tree so binding is a nightmare!! 😡😡😡😡😡😡😡😡

-

Theregister.com is wrestling with gpus that need 700 Watts of juice and how to cool them. 50 years ago I was reading an excellent magazine called "Electronics". And I remember that IBM came up with a scheme to absorb enormous amounts of heat from chips. You simply score the underside of the chip in a grid pattern and pump water through it. Hundreds of watts per degree Celsius can be removed. Problem solved.4

-

Need to use new module or pattern:

1. Read the online documentation

2. Have no fu*kin clue what I just read

3. Try for 2 hours and fail.

4. Go home and sleep

5. Wake up at 3am from a fever dream with the solution to the problem

6. Go to work and implement it in 10 min

I guess I learn when I sleep -

TeamLeader : "Come two seconds"

TL : "I'd like you to check that out when you do that"

Me: "But it's your job to do this"

TL: "Yeah, but I'd like you to just check in case I forgot"

THEN DO YOUR FKING TASK PROPERLY WHATS THE POINT IF YOU FORGOT AND I DO IT

Edit my english sucks1 -

My first ever system... It was bad, no coding standards, no reusing, no pattern, no nothing. I really don't know how I managed to make it work.

-

If you have 5 classes using the same postfix... and inside, 4 methods in each, called EXACTLY THE SAME... Please, create at least an abstract class!! or else next week you'll have to make the EXACT same change 5 times!!! or more!!... I don't know man! just saying... Patterns exist for a reason!
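A minimal sketch of what that could look like, in Python with made-up names (the rant doesn't say what the five classes actually do): the shared step lives once in the base class and each subclass only supplies the part that differs.

```python
from abc import ABC, abstractmethod


class ReportExporter(ABC):
    """Hypothetical base class for a family of *Exporter classes."""

    def export(self, rows: list) -> str:
        # Shared logic lives here once, so a change is made in one place
        cleaned = [str(row).strip() for row in rows]
        return self.join(cleaned)

    @abstractmethod
    def join(self, rows: list[str]) -> str:
        """The only step that differs between exporters."""


class CsvExporter(ReportExporter):
    def join(self, rows: list[str]) -> str:
        return ",".join(rows)


class TsvExporter(ReportExporter):
    def join(self, rows: list[str]) -> str:
        return "\t".join(rows)
```

Next week's change to the cleaning step then happens once, in `export`, instead of five times.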

-

!rant

TL;DR: Can anyone recommend or point at any resources which deal with best practices and software design for non-beginners?

I started out as a self-taught programmer 7 years ago when I was 15, now I'm a computer science student at a university.

I'd consider myself pretty experienced when it comes to designing software as I have already made lots of projects, from small things which can be done in a week to a project which I worked on for more than a year. I don't have any problems with coming up with concepts for complex things. To give you an example, I recently wrote a cache system for an Android app I'm working on in my free time. It can cache everything from REST responses to images on persistent storage, combined with a memcache for even faster access to frequently used stuff, all in a heavily multithreaded environment. I'd consider the system solid. It uses a request pattern where everything which needs to be done is represented by a CacheTask object which can be committed, and all responses are packed into CacheResponse objects.

Now that you know what i mean by "non-beginner" lets get on to the problem:

In the last weeks I developed the feeling that I need to learn more. I need to learn more about designing and creating solid systems. The design phase is the most important part during development and I want to get it right for a lot bigger systems.

I already read a lot how other big systems are designed (android activity system and other things with the same scope) but I feel like I need to read something which deals with these things in a more general way.

Do you guys have any recommended readings on software design and best practices?3 -

is it ok if im the only person who codes an android app and i code it by my own free will and skills?

meaning im not following any design pattern while doing so.

i dont like following design pattern because it narrows down my freedom of writing code the way i want to write it.

its like, imagine, you have a strict schedule or a dad who says at:

5:59am: get up

7:15am: study

9:01am: eat breakfast

11:00am: go to college

3:07pm: eat lunch

5:14pm: come home

8:02pm: eat dinner

9:00pm: brush your teeth

10:58pm: go to bed

11:59pm: you must sleep before midnight

IMAGINE THAT. be honest, could you actually follow this schedule in its exact hour and minute as it was written down for the rest of your life every day, no exceptions?

if you're a sane person, you would answer - no, of fcking course not.

life is much broader and more dynamic than following a static pattern every day forever.

and so is coding an app: it doesn't have to follow a static design pattern.

-

Is noop actually a user, or is she someone's "second ++"? I noticed a pattern in her behavior, that she always ++'d my rants right after {she256}5d106eb069.4

-

you have 6 problems

you introduce rust, now you have 30 problems

you worked on it for about a month and maybe this is the 6th rewrite, and you now have 300 problems and also you have less functionality than every one of the previous versions

why and how

this pattern seems to be consistent no less16 -

So apparently, the next version of C# is gonna have list pattern matching more powerful than F#...

...so... my motivation to learn F# drops back down to curiosity, since C#'s list pattern matching seems like it will have all I needed and wanted for my parser, as opposed to F# which seems to not have it...

also fuck Russia and China, but I don't want to think about the impending apocalypse, thankyouverymuch. -

I am finally getting to learn Web API with MVC 6, .NET Core or whatever they call it. Why do all the examples show the repository pattern as the data access layer? I have not used that for several years.

-

When the company running my student accommodation not only stores the passwords for their resident portal in plain text and emails them straight to you in the case of a forgotten password, but also generates your password at sign up according to a specific general pattern...

-

Just finished the code tests. I swear all of them could have just been regex. The first 2 I know can be because I did.

The last one was pattern matching in an array of strings (think battleships)

I actually fucked up the second test because I decided that they probably want code instead of regex...8 -

Any data scientists here? Need a non ML/AI answer. What is the modern alternative to Dynamic Time Warping for pattern recognition?

-

I have searched the universe for how Go developers modularize their API servers.... I couldn't find anything.. Except for this git repo https://github.com/velopert/...

So, what kind of architecture or pattern do you use? Oh, and I am more interested in MVC4 -

I was just asked to make a method for the "logical or" (aka "||") in JavaScript "because it's used multiple times".

How dense do you have to be to argue against "it creates additional closures" and "creating functions for built-in operators is an anti-pattern".
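One more argument against it, sketched in Python since `or` there short-circuits in the same way JavaScript's `||` does: a function wrapper evaluates both operands before the call, so the short-circuit is lost. The helper names are made up for the demo.

```python
def logical_or(left, right):
    """Function wrapper around `or`: the caller evaluates both arguments."""
    return left or right


def expensive_fallback(log: list) -> str:
    # Records that it ran, standing in for a slow or side-effecting call
    log.append("fallback evaluated")
    return "fallback"


log_operator: list = []
log_function: list = []

# Operator: the right-hand side is skipped because the left is truthy
via_operator = "cached" or expensive_fallback(log_operator)

# Wrapper: the fallback runs before logical_or is even entered
via_function = logical_or("cached", expensive_fallback(log_function))
```

Both give the same result, but only the operator version avoids running the fallback.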

Come on!!! At least it's my last week here, I'll be done with this soon enough.1 -

Had to factory reset my phone as I added a pattern password. I used that password all day and right as I am getting ready for bed, I FORGET IT!! Stupid me did not turn on USB debugging and I am like... Seriously!!

-

one of the few times i follow the existing structure and pattern i get told to fuck off and hardcode it instead because we want something temporary

-

Writes regex pattern at regex101.com: match

Writes same pattern on site: No matches

Trying to fetch text from foreign site to detect when the text changes. -

Dependency injection is the most useless piece of crap ever invented. Convention over configuration my ass.

It simplifies nothing a good architecture and pattern can't solve. It's just the current trend but it's the hugest pain in the ass I've ever experienced. It just adds complexity to the project.

I think it's just a thing for masochists and lazy devs, but then why not sticking a huge dido up your ass it's the same fucking thing.12 -

Why the hell does every IT/CS professor teach pattern codes like pyramids, stars and many weird kinds of designs to print on the console? Just why? Why not teach basic implementation problems, which are what most companies are really going to ask for SDE jobs?

-

I'm curious about which kind of pattern/architecture you are using in your react native apps (big ones).

-

my biggest insecurity... I don't know, I have some problems with people who only stay with one idea, technology, pattern and cannot change, I am afraid of becoming that3

-

Love pattern matching, esp. in function clauses. No programming language can be considered feature rich without it. Yes, I'm looking at you, <mainstream programming language>1

-

Recommended PHP ORM?

Had a couple bad experiences with Sequelize (I know it's JS, save it). Also, I'd like to try the decorators pattern. I'm also looking for good (and I mean GOOD) relationships management (you are allowed ONE pun about it).

Anyone? :((3 -

So...I kinda started a huge project and it requires a pattern recognition for the users' schedules as the cherry on top.

I have no idea from where could I start learning this. I tried lots of stuff, I have basic knowledge of ai, but I need to get to the next level.

Any advice?2 -

Why do I get super depressed when stuck on a hard bug? It affects my life, sleeping pattern, etc..1

-

Let's make an animated fractal pattern that spins and resizes, I said. It'll be a fun and easy way to brush up on raw JavaScript and to try the HTML5 canvas, I said.

Provides a lot more learning opportunities than I had thought :)2 -

Thoughts about the strangler pattern?

I just came across it today and it sounds like a neat thing.

My main concern is with redundancy and the risk that old classes or methods would further be used and expanded. I've come across enough obsolete classes which have been further expanded because the dev ignored the flag and didn't want to search further or create new implementations. I wonder how this could be avoided.2 -

Why is it so common for people to insist on following particular patterns at PR when they have no concept of why the pattern originated or when and why it should apply?9

-

Test Driven Development, Pattern Driven Development, Domain Driven Development, Design Domain Driven Development.

When do we eventually get to the development part??3 -

How do I solve this board without guessing? Always get stuck on these and what the pattern to look for is.

-

Lensflare, look at how those lines in between menu elements don’t protrude all the way to the left edge, instead stopping before icons, so there is no line in between icons. Telegram uses the same UI pattern for “all chats” screen, and it looks good. What about using it in JoyRant in notifications screen?

-

@devrant team - how are the tags sorted? I've noticed that they sometimes get sorted somehow but I can't find a pattern.1

-

In javascript, is there a difference between separate function calls that mimic a "chain pattern" or state changes using if/else if/else and using the chain or state pattern directly? The internet gave me no real/helpful response to that.

Suppose that:

if (isThingA(thing)) {
    makeThingB();
} else if (isThingB(thing)) {
    makeThingC();
} else {
    makeThingA();
}

That code is always executed e.g. after a user mouse click. "thing" gets defined in some other code.

It can be seen as a state machine that goes back to its starting point.

Is a pattern with objects/classes/prototypes even needed/preferred instead?
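One middle ground between the if/else chain and a full State pattern is a plain transition table. A sketch in Python rather than JavaScript, with made-up state names standing in for the things above:

```python
# Table-driven version of the A -> B -> C -> A cycle.
TRANSITIONS = {"A": "B", "B": "C"}


def next_thing(thing: str) -> str:
    # Anything that is neither A nor B falls back to A, like the final else
    return TRANSITIONS.get(thing, "A")


if __name__ == "__main__":
    state = "A"
    for _ in range(4):
        print(state, "->", next_thing(state))
        state = next_thing(state)
```

For three states with no per-state behaviour, the table (or the if/else) is arguably enough. Separate state objects start to pay off once each state carries its own logic.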

It's partly a problem I'm facing in my code but it's also interesting to know ideas/thoughts on this.3 -

for fellow Data Scientists/Analysts..

I was wondering... which is the longest maintained time series data of all time? I am just learning about trends, seasonality, etc. in a time series, and wondered if the pattern still exists in fairly large data, like for 100 years or 100,000 days, or if our present forecasting models like ARMA/ARIMA would cover them -

stateless design is another part of programming or web development I haven't quite been able to grasp fully. I understand what it is and its capabilities, but I can't seem to say "hey, to implement stateless design on project xyz, an actual project with real-life usage, this is how to go about it". It's easy to build any web app like a story or like a building, from the ground up to the roof. But what about a web app that has really unpredictable data and is so fluid that the UI just moves around and adapts to whatever data is thrown at it, as long as the data makes sense and is applicable to the situation on the ground? You can't just build such a UI from the ground up from a template; you'll end up with a lot of if-elses until the code is bloated and probably unreadable.

there has to be common sense in what I'm trying to say, maybe I'm not using the right words

-

Had a particularly strange one about hose pipes flailing wildly but in a very specific pattern.

I’d been working on a bit of a monolithic multithreaded data pipeline and I couldn’t work out what I wanted to do with the thread pool. When I woke up I had a picture of those hose pipes, and that's basically how it now works😂 -

I always have multiple accounts thanks to Single-Sign-On, so I don't find my event tickets, logins, and contacts. To make it worse, those sites regularly log me out for no reason and some force logging in using my Google account although I have a main account with my business email address.

I suspect that's another deceptive pattern that they let happen on purpose so they can claim to have more users than they really have.1 -

SonarQube is obnoxious in its moronic ideas that demonstrate a lack of understanding of the languages it's analyzing.

In C# there exists a special kind of switch-case statement where the switch is on an object instance and the cases are types the instance could polymorphically be, along with a name to refer to that cast instance throughout the case. Pattern matching, basically.

SonarQube will bitch about short switch-case statements done in this way, saying if-else statements should be used instead. Which would absolutely be right if this was the basic switch-case statement.

This is a language with excellent OOP features. Why are your tests not aware of this?

I can't realistically ignore the pattern because that would also ignore actual cases where it's right. And ignoring the issue doesn't sit right with me. How does it look when a project ignores tons of issues instead of fixing them? -

My project setup:

-Entity Framework (Code first)

-Autofac (Dependency Injection)

-Asp.Net MVC C# with service layer pattern.

How is your setup? :)1 -

Not using a design pattern on a school project because he was too busy understanding what the fuck Smalltalk was, since no one understood it in classes.

Yes, it was me. I don't blame myself, I really took too much time understanding that (and I was the only one to do that, the others just asked me. ALL OF THEM). But I should, I guess. -

You know what is awesome? React with Redux. It is such a clean and scalable pattern for building UI. Conceptually not that easy, but once you have it, it's so much better than any other pattern I have seen.

But what fucks me off is working with other libraries with it: not hard, just fucking annoying.2 -

After rejoining, this place really does seem a bit deserted. So I'll try to bring some controversy to this place.

AI, a hype? Machine learning wearing a mask? Pattern recognition on steroids?

What do y'all think? In my opinion it's an awesome technology that has many practical applications, but it is far from what they try to tell us it is. It's awesome, yes. But under the hood it's still mostly pattern recognition, classification etc. LLMs seem a bit more complex but are still the same thing.

Sure, it's easy to write a program that does a given task a lot better than a human, however it's limited to doing exactly that.. So is a calculator.

What I think of when hearing AI is what is now known as general intelligence, but it's just a question of time until they come up with something that can do more than AI and call that general intelligence, and actual general intelligence will be called something else.. You get me?5 -

Websites nowadays feel and look the same in their structure, how they're designed, and the elements around them

It's almost like software interface designers follow a pattern

I feel like "hand crafted" software for a niche will be the way to go to differentiate yourself and give a unique experience to end users -

Today's story.

1. Git commit all changes

2. Need to git pull because of a change on master

3. Mistakenly undid my git commits.

All my changes fucked up.

At last

Ctrl+Z saved my ass -

I was new to iOS so they hired a senior guy to lead the project ... I tried to listen to him but when he started to talk about the "Gingleton" pattern I just put my headphones back on

-

Sigh, what is it with these cowboy SQL Devs? Why the fuck is this a pattern for anything?

New contract, new idiots, sigh.

EDIT: Had to change picture because Prod is different to Dev (but no dev has been done since release....smh) 4

4 -

Not ranting. Just wondering: Dapper + repository pattern, a good idea? And how does it relate to unit of work? Dapper doesn't have IQueryable like Entity Framework has. C# dev ranters, enlighten me, show me the way 🐒💡💡

-

Rant("

I wonder if Orchardcms with its great idea to replace the mvc pattern with an mpvchds pattern (model part view controller handler driver shape) should inject dependencies of IHateble, IBullShitService and IFuckingFuckshitCMS Interfaces.

");2 -

My standard pattern for using 'time saving' things (frameworks, scripts, new technologies) seems to be:

1) Get excited by how easy <new thing> is to use.

2) Spend the next 12 hours trying to work out how to work with this new shit.

3) Realise I'm now further back than I started.

Today has been docker.

Easy to run my new Wildfly docker container.... can I find the IP address to connect to? Can I fuck.2 -

Just askin:

If you have a method which returns a value from an array. What do you prefer in a case when the item is not found?

A)Throw an exception

B)return null

C)return a special value like a null object or some primitive type edge value like Integer.MIN_VALUE14 -
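The three options above can be put side by side. This is only a minimal sketch: the function names, the `ItemNotFound` exception and the `NOT_FOUND` constant are made up for illustration, and `NOT_FOUND` merely stands in for Java's `Integer.MIN_VALUE`.

```python
from typing import Callable, Optional, Sequence


class ItemNotFound(LookupError):
    """Hypothetical exception used by option A."""


# Hypothetical sentinel for option C; same value as Java's Integer.MIN_VALUE.
NOT_FOUND = -(2 ** 31)


def find_or_raise(items: Sequence[int], matches: Callable[[int], bool]) -> int:
    # Option A: a miss is treated as exceptional, so the caller cannot ignore it.
    for item in items:
        if matches(item):
            return item
    raise ItemNotFound("no matching item")


def find_or_none(items: Sequence[int], matches: Callable[[int], bool]) -> Optional[int]:
    # Option B: a miss is a normal outcome; the caller must check for None.
    for item in items:
        if matches(item):
            return item
    return None


def find_or_sentinel(items: Sequence[int], matches: Callable[[int], bool]) -> int:
    # Option C: return a reserved value; only safe if it can never be real data.
    for item in items:
        if matches(item):
            return item
    return NOT_FOUND
```

Option C silently breaks as soon as the sentinel is a legitimate value in the array, which is the usual argument against it.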

My model for one part of my project has become so crazy big that I get lost every time I add a new feature or debug :-/

-

Just finished the night job at 5am. Looking forward to a good rest tomorrow! Not looking forward to correcting my sleeping pattern for Monday 😭1

-

Can we please pick a consistent pattern across the same app? I don't have enough short term memory to deal with this.

-

I just don't get why CSS shorthands don't get to follow the X-Y pattern, such as margin 10px 5px, as 10px for width and 5px height. WHY DOES IT HAVE TO BE THE INVERSE?

I don't use CSS very often, but when I do, I remember how stupid and nonsensical that is. -
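For reference, the CSS margin shorthand goes clockwise from the top (top, right, bottom, left), and with two values the first is vertical and the second horizontal. A small sketch of that expansion, where `expand_margin` is a made-up helper and not part of any CSS tooling:

```python
def expand_margin(shorthand: str) -> dict:
    """Expand a CSS margin shorthand string into its four sides."""
    parts = shorthand.split()
    if len(parts) == 1:
        # One value: all four sides.
        top = right = bottom = left = parts[0]
    elif len(parts) == 2:
        # Two values: vertical first, then horizontal.
        top = bottom = parts[0]
        right = left = parts[1]
    elif len(parts) == 3:
        # Three values: top, horizontal, bottom.
        top, right, bottom = parts
        left = right
    elif len(parts) == 4:
        # Four values: clockwise from the top.
        top, right, bottom, left = parts
    else:
        raise ValueError("margin takes one to four values")
    return {"top": top, "right": right, "bottom": bottom, "left": left}


print(expand_margin("10px 5px"))
```

So `margin: 10px 5px` means 10px above and below, 5px left and right.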

Reading comments here and subsequently looking here I’m wondering if I’ve been doing MVC wrong. I’ve always thought the View calls data from the Model. However I see people load model data and then call the view via the controller.

Which is right?2 -

So I've worked a bit too hard today, some thing with production being down...

Does anyone have some sort of relax/wind down pattern they don't mind sharing?

My current one is watching youtube the rest of the evening, but I am interested what everyone else does to calm their minds after a long workday4 -

- Learned how to use Git properly;

- Learning how to use SASS and building the stylesheet of this all by myself;

- Learned how to reuse my code all over the project;

- Made my first design pattern and ruleset to create and maintain a project. -

I'm a mid-level developer (4 years of work experience in a mid-sized company)

With little design pattern knowledge.

How fucked am I, and what are the best resources to learn them? What are the essential design patterns I should know?3 -

I'd had some weirder experiences with Rust where errors would surface late or in odd places so now I can't tell whether this is actually legal or the error is being discarded because it's in dead code.

When did Rust start extending the lifetime of temporaries outside enclosing blocks to match their borrows?

(in case it's not obvious, pattern and full_pattern are both slices) 5

5 -

Instagram's UI is like an extremely hard game that kicks you out of the process just a second before you're about to finish, just to make you start over and try harder. It's a known deceptive pattern to make people invest more energy and engage more intensively; some will leave in frustration, but the rest will feel more attached to your product thanks to the sunk cost fallacy. What a pathetic waste of time! Sad.

-

Which way do you prefer to write code?

1.

server.addHandler(...)

server.addSerializer(...)

server.addCompressor(...)

...

2.

server.add("handler",...)

server.add("serializer",...)

server.add("compressor",...)

...

3. Both. (as per mood) :p15 -
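To make the trade-off between the two styles concrete, here is a rough sketch. The `Server` class and its `parts` dictionary are invented for illustration and are not from any real library.

```python
class Server:
    """Hypothetical server that supports both registration styles."""

    def __init__(self):
        self.parts = {"handler": [], "serializer": [], "compressor": []}

    # Style 1: one explicit method per kind. A misspelled method name fails
    # immediately with AttributeError, and editors can autocomplete it.
    def add_handler(self, handler):
        self.parts["handler"].append(handler)

    def add_serializer(self, serializer):
        self.parts["serializer"].append(serializer)

    def add_compressor(self, compressor):
        self.parts["compressor"].append(compressor)

    # Style 2: one generic method keyed by a string. New kinds need no new
    # method, but a misspelled kind is only caught by this runtime check.
    def add(self, kind, part):
        if kind not in self.parts:
            raise ValueError(f"unknown kind: {kind!r}")
        self.parts[kind].append(part)


server = Server()
server.add_handler("json-handler")
server.add("serializer", "json")
```

Nothing stops a class from offering both, with the explicit methods delegating to `add`.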

Facebook is testing out showing conversations in tabs to make sure you don't miss any conversation.

Looks like another good UX pattern.

-

Is it a good idea to learn two programming languages at the same time? I have created a learning schedule where I alternate between 2 languages during the week. For example, Python on Monday, Wednesday, Friday and Java on Tuesday, Thursday and Saturday. Is this the right approach to learn a new programming language or practice an already-learnt one? Any suggestions? Developers following a similar pattern of learning, please share your sample schedule.14

-

SQLAlchemy is such a bloated piece of crap. Even without the fact that many consider ORMs an anti-pattern, this library is extremely janky, salty and uncomfortable to work with.5

-

Which makes more sense: Coding a website membership system based on a design pattern of renewing 1 year to the day of initial registration? Or coding one that renews everyone on June 30th regardless of when they first registered?3
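Both rules are only a few lines each, so the choice is more about fairness than effort. A minimal sketch, assuming a Feb 29 registration falls back to Feb 28 in non-leap years (my assumption, not something stated above); the function names are made up:

```python
from datetime import date


def anniversary_renewal(registered: date) -> date:
    """Renew one year to the day after registration."""
    try:
        return registered.replace(year=registered.year + 1)
    except ValueError:
        # Feb 29 has no counterpart next year; fall back to Feb 28 (assumption).
        return registered.replace(year=registered.year + 1, day=28)


def fixed_renewal(registered: date, month: int = 6, day: int = 30) -> date:
    """Renew on the first June 30 strictly after registration."""
    candidate = date(registered.year, month, day)
    if candidate <= registered:
        candidate = date(registered.year + 1, month, day)
    return candidate


print(anniversary_renewal(date(2024, 3, 15)))
print(fixed_renewal(date(2024, 6, 1)))
```

With the fixed date, someone who registers on June 1 gets a 29-day first term unless you also prorate or skip the first renewal, which is the part that needs a policy decision.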

-

!rant

Go's tuple-style returns. ❤️

What's an exception? I don't even know what those are anymore. 😄

I've started using this pattern in JavaScript / TypeScript too.

also... supabase anyone? ❤️5 -
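For anyone who hasn't seen the style, a rough sketch of Go-like (value, error) returns, written here in Python. `parse_port` is a made-up example function; the point is that the error comes back as a value instead of being raised.

```python
from typing import Optional, Tuple


def parse_port(text: str) -> Tuple[int, Optional[str]]:
    """Return (port, error); error is None on success."""
    try:
        port = int(text)
    except ValueError:
        return 0, f"not a number: {text!r}"
    if not 0 < port < 65536:
        return 0, f"out of range: {port}"
    return port, None


# The caller checks the error right where the call happens.
port, err = parse_port("8080")
if err is not None:
    print("failed:", err)
else:
    print("listening on", port)
```

The cost is that nothing forces the caller to look at `err`, which is the same trade-off Go makes.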

So let's say I kinda came up with a pattern/architecture for Unity scripting which I find really useful and elegant.

It uses some features which are quite new, and I can't find anything similar on Google. So I suspect I might actually have invented something new.

What should I do?6 -

How do you handle error checking? I always feel sad after I add error checking to code that was beautifully simple and legible before.

It still remains so but instead of each line meaning something it becomes if( call() == -1 ) return -1; or handleError() or whatever.

Same with try catch if the language supports it.

It's awful to look at.

So awful I end up evading it forever.

"Malloc can't fail right? I mean it's theoretically possible but like nah", "File open? I'm not gonna try catch that! It's a tmp file only my program uses come oooon", all these seemingly reasonable arguments cross my mind and make it hard to check the frigging errors. But then I go to sleep and I KNOW my program is not complete. It's intentionally vulnerable. Fuck.

How do you do it? Is there a magic technique or one has to reach dev nirvana to realise certain ugliness and cluttering is necessary for the greater good sometimes and no design pattern or paradigm can make it clean and complete?15 -

!rant

To all the devs that got their stickers + @dfox and @trogus: do the stickers adhere to uneven surfaces without leaving glue or bits of the sticker material behind? Because I've reached the amount of ++ needed to ask for my stickers (I'm gonna ask them around xmas time so it can be like a xmas present 😀 and also so my brother doesn't take them) but I don't know if I can safely stick them there and remove them if need be

My computer has this circular pattern around the ASUS logo and the area around the touchpad is also uneven, it has some grid pattern

I don't have any stickers on it so devRant's may be the first :D5 -

Just to piss off people some more.

Since everything applies across industries.

How could design patterns apply to non software industries?3 -

1. Went to try chat.openai.com/chat

2. "Write a Java program that uses a singleton pattern to calculate the sum of the first 100 prime numbers"

3. ???? HOW DOES IT DO THAT8 -
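For comparison, the prompt is small enough to do by hand. A sketch in Python rather than Java, with `PrimeSummer` as a made-up class name; the singleton part is just `__new__` handing back the same instance every time.

```python
class PrimeSummer:
    """Singleton: every call to PrimeSummer() returns the same object."""

    _instance = None

    def __new__(cls):
        if cls._instance is None:
            cls._instance = super().__new__(cls)
        return cls._instance

    @staticmethod
    def _is_prime(n: int) -> bool:
        # Plain trial division; fine for numbers this small.
        if n < 2:
            return False
        divisor = 2
        while divisor * divisor <= n:
            if n % divisor == 0:
                return False
            divisor += 1
        return True

    def sum_first(self, count: int) -> int:
        """Sum the first `count` prime numbers."""
        total, found, candidate = 0, 0, 2
        while found < count:
            if self._is_prime(candidate):
                total += candidate
                found += 1
            candidate += 1
        return total


print(PrimeSummer() is PrimeSummer())  # True
print(PrimeSummer().sum_first(100))    # 24133
```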

Online resources that discuss testing recommend the following pattern when writing your unit test method names:

given[ExplainYourInput]When[WhatIsDone]Then[ExpectedResult]()

This makes developers write extremely long test method names.. and this is somehow the acceptable standard? There must be something better.. I think I've seen annotations being used instead of this.5 -
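One way the annotation idea could look, sketched with Python's standard `unittest`: the method name stays short and the given/when/then text moves into a decorator. The `scenario` decorator and `CartTest` are hypothetical, written only for this example; `shortDescription()` is the real `unittest.TestCase` method that reports the first line of a test's docstring.

```python
import unittest


def scenario(given: str, when: str, then: str):
    """Hypothetical decorator: stores the scenario text as the test's docstring."""
    def decorate(test_func):
        test_func.__doc__ = f"Given {given}, when {when}, then {then}."
        return test_func
    return decorate


class CartTest(unittest.TestCase):
    @scenario(given="an empty cart", when="one item is added", then="the count is 1")
    def test_add_item(self):
        cart = []
        cart.append("book")
        self.assertEqual(len(cart), 1)


# unittest shows the first docstring line next to the test in verbose output.
print(CartTest("test_add_item").shortDescription())
```

The long sentence still exists, it just stops being an identifier you have to type and read in stack traces.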

As blood rushes through my face, hundreds of tiny capillaries bursting under my skin create a beautiful freckles pattern that has to be seen to be believed.

This is what happens to me when I vomit. That’s a great way to get a striking look on a budget when I’m late to a party!2 -