Search - "network storage"

-

In a user-interface design meeting over a regulatory compliance implementation:

User: “We’ll need to input a city.”

Dev: “Should we validate that city against the state, zip code, and country?”

User: “You are going to make me enter all that data? Ugh…then make it a drop-down. I select the city and the state, zip code auto-fill. I don’t want to make a mistake typing any of that data in.”

Me: “I don’t think a drop-down of every city in the US is feasible.”

Manager: “Why? There cannot be that many. Drop-down is fine. What about the button? We have a few icons to choose from…”

Me: “Uh..yea…there are thousands of cities in the US. Way too much data for anyone to realistically scroll through.”

Dev: “They won’t have to scroll, I’ll filter the list when they start typing.”

Me: “That’s not really the issue and if they are typing the city anyway, just let them type it in.”

User: “What if I mistype Ch1cago? We could inadvertently be out of compliance. The system should never open the company up for federal lawsuits”

Me: “If we’re hiring individuals responsible for legal compliance who can’t spell Chicago, we should be sued by the federal government. We should validate the data the best we can, but it is ultimately your department’s responsibility for data accuracy.”

Manager: “Now now…it’s all our responsibility. What is wrong with a few thousand item drop-down?”

Me: “Um, memory, network bandwidth, database storage, who maintains this list of cities? A lot of time and resources could be saved by simply paying attention.”

Manager: “Memory? Well, memory is cheap. If the workstation needs more memory, we’ll add more”

Dev: “Creating a drop-down is easy and selecting thousands of rows from the database should be fast enough. If the selection is slow, I’ll put it in a thread.”

DBA: “Table won’t be that big and won’t take up much disk space. We’ll need to set up stored procedures, and data import jobs from somewhere to maintain the data. New cities, name changes, etc.”

Manager: “And if the network starts becoming too slow, we’ll have the Networking dept. open up the valves.”

Me: “Am I the only one seeing all the moving parts we’re introducing just to keep someone from misspelling ‘Chicago’? I’ll admit I’m wrong or maybe I’m not looking at the problem correctly. The point of redesigning the compliance system is to make it simpler, not more complex.”

Manager: “I’m missing the point to why we’re still talking about this. Decision has been made. Drop-down of all cities in the US. Moving on to the button’s icon ..”

Me: “Where is the list of cities going to come from?”

<few seconds of silence>

Dev: “Post office I guess.”

Me: “You guess?…OK…Who is going to manage this list of cities? The manager responsible for regulations?”

User: “Thousands of cities? Oh no …no one in our area has time for that. The system should do it.”

Me: “OK, the system. That falls on the DBA. Are you going to be responsible for keeping the data accurate? What is going to audit the cities to make sure the names are properly named and associated with the correct state?”

DBA: “Uh..I don’t know…um…I can set up a job to run every night”

Me: “A job to do what? Validate the data against what?”

Manager: “Do you have a point? No one said it would be easy and all of those details can be answered later.”

Me: “Almost done, and this should be easy. How many cities do we currently have to maintain compliance?”

User: “Maybe 4 or 5. Not many. Regulations are mostly on a state level.”

Me: “When was the last time we created a new city compliance?”

User: “Maybe, 8 years ago. It was before I started.”

Me: “So we’re creating all this complexity for data that, realistically, probably won’t ever change?”

User: “Oh crap, you’re right. What the hell was I thinking…Scratch the drop-down idea. I doubt we’ll have a new city regulation anytime soon and how hard is it to type in a city?”

Manager: “OK, are we done wasting everyone’s time on this? No drop-down of cities...next …Let’s get back to the button’s icon …”
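For what it's worth, "validate the data the best we can" doesn't need a table of every US city. A minimal sketch in Python, assuming the "4 or 5" regulated cities live in a short hand-maintained list. The city names and the `match_city` helper are made up for illustration, and the 0.8 cutoff is a guess that would need tuning:

```python
from difflib import get_close_matches

# Hypothetical list: the meeting only established "maybe 4 or 5" cities.
REGULATED_CITIES = ["Chicago", "New York", "Los Angeles", "Houston"]


def match_city(typed, cities=REGULATED_CITIES, cutoff=0.8):
    """Return the regulated city closest to what was typed, or None."""
    # Compare case-insensitively, but hand back the canonical spelling.
    by_lower = {city.lower(): city for city in cities}
    hits = get_close_matches(typed.strip().lower(), list(by_lower), n=1, cutoff=cutoff)
    return by_lower[hits[0]] if hits else None
```

With this, `match_city("Ch1cago")` resolves to the canonical spelling, and anything that matches nothing on the list comes back as `None` so the form can ask the user to double-check.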

Simplicity 1, complexity 0.

-

I have this little hobby project going on for a while now, and I thought it's worth sharing. Now at first blush this might seem like just another screenshot with neofetch.. but this thing has quite the story to tell. This laptop is no less than 17 years old.

So, a Compaq nx7010, a business laptop from 2004. It has had plenty of software and hardware mods alike. Let's start with the software.

It's running run-of-the-mill Debian 9 with a custom kernel. It's on that version of Debian because of bugs in the network driver (ipw2200) in Debian 10, which cause it to disconnect after a day or so. That is less of an issue in Debian 9, and seemingly fixed by upgrading to a custom kernel. And the kernel is actually one of the things where you can save heaps of space when you do it yourself. The kernel package itself is 8.4MB for this one. The headers are 7.4MB. The stock kernels on the other hand (4.19 at downstream revisions 9, 10 and 13) took up a whole GB of space combined. That is how much I've been able to remove, even from headless systems. The stock kernels are incredibly bloated for what they are.

Other than that, most of the data storage is done through NFS over WiFi, which is actually faster than what is inside this laptop (a CF card which I will get to later).

Now let's talk hardware. And at age 17, you can imagine that it has seen quite a bit of maintenance there. The easiest mod is probably the flash mod. These old laptops use IDE for storage rather than SATA. Now the nice thing about IDE is that it actually lives on to this very day, in CF cards. The pinout is exactly the same. So you can use passive IDE-CF adapters and plug in a CF card. Easy!

The next thing I want to talk about is the battery. And um.. why that one is a bad idea to mod. Finding replacements for such old hardware.. good luck with that. So your other option is something called recelling, where you disassemble the battery and, well, replace the cells. The problem is that those battery packs are built like tanks and the disassembly will likely result in a broken battery housing (which you'll still need). Also the controllers inside those battery packs are either too smart or too stupid to play nicely with new cells. On that laptop at least, the new cells still had a perceived capacity of the old ones, while obviously the voltage on the cells themselves didn't change at all. The laptop thought the batteries were done for, despite still being chock full of juice. Then I tried to recalibrate them in the BIOS and fried the battery controller. Do not try to recell the battery, unless you have a spare already. The controllers and battery housings are complete and utter dogshit.

Next up is the display backlight. Originally this laptop used to use a CCFL backlight, which is a tiny tube that is driven at around 2000 volts. To its controller go either 7, 6, 4 or 3 wires, which are all related and I will get to. Signs of it dying are redshift, and eventually it going out until you close the lid and open it up again. The reason for it is that the voltage required to keep that CCFL "excited" rises over time, beyond what the controller can do.

So, 7-pin configuration is 2x VCC (12V), 2x enable (on or off), 1x adjust (analog brightness), and 2x ground. 6-pin gets rid of 1 enable line. Those are the configurations you'll find in CCFL. Then came LED lighting which required much less power to run. So the 4-pin configuration gets rid of a VCC and a ground line. And finally you have the 3-pin configuration which gets rid of the adjust line, and you can just short it to the enable line.
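Those four pinouts are really just one list with lines dropped along the way. A rough Python sketch of that, using only the line counts given above (the key names are labels I picked, not anything printed on a connector):

```python
from collections import Counter

# Line counts as described above; Counter subtraction drops lines that hit zero.
PIN_7 = Counter(vcc=2, enable=2, adjust=1, ground=2)
PIN_6 = PIN_7 - Counter(enable=1)           # CCFL: one enable line less
PIN_4 = PIN_6 - Counter(vcc=1, ground=1)    # LED: one VCC and one ground less
PIN_3 = PIN_4 - Counter(adjust=1)           # adjust is shorted to enable instead

for name, pins in [("7-pin", PIN_7), ("6-pin", PIN_6), ("4-pin", PIN_4), ("3-pin", PIN_3)]:
    print(name, dict(pins), "total:", sum(pins.values()))
```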

There are some other mods but I'm running out of characters. Why am I telling you all this? The reason is that this laptop doesn't feel any different to use than the ThinkPad x220 and IdeaPad Y700 I have on my desk (with 6c12t, 32G of RAM, ~1TB of SSDs and 2TB HDDs). A hefty setup compared to a very dated one, yet they feel the same. It can do web browsing, I can chat on Telegram with it, and I can do programming on it. So, if you're looking for a hobby project, maybe some kind of restrictions on your hardware to spark that creativity that makes code better, I can highly recommend it. I think I'm almost done with this project, and it was heaps of fun :D

-

Got laid off on Friday because of a workforce reduction. When I was in the office with my boss, someone went into my cubicle and confiscated my laptop. My badge was immediately revoked as was my access to network resources such as email and file storage. I then had to pack up my cubicle, which filled up the entire bed of my pickup truck, with a chaperone from Human Resources looking suspiciously over my shoulder the whole time. They promised to get me a thumb drive of my personal data. This all happened before the holidays were even over. I feel like I was speed-raped by the Flash and am only just now starting to feel less sick to the stomach. I wanted to stay with this company for the long haul, but I guess in the software engineering world, there is no such thing as job security and things are constantly shifting. Anyone have stories/tips to make me feel better? Perhaps how you have gotten through it? 😔😑😐

-

This rant is particularly directed at web designers, front-end developers. If you match that, please do take a few minutes to read it, and read it once again.

Web 2.0. It's something that I hate. Particularly because the directive amongst webdesigners seems to be "client has plenty of resources anyway, and if they don't, they'll buy more anyway". I'd like to debunk that with an analogy that I've been thinking about for a while.

I've got one server in my home, with 8GB of RAM, 4 cores and ~4TB of storage. On it I'm running Proxmox, which is currently using about 4GB of RAM for about a dozen VM's and LXC containers. The VM's take the most RAM by far, while the LXC's are just glorified chroots (which nonetheless I find very intriguing due to their ability to run unprivileged). Average LXC takes just 60MB RAM, the amount for an init, the shell and the service(s) running in this LXC. Just like a chroot, but better.

On that host I expect to be able to run about 20-30 guests at this rate, on 4 cores and 8GB RAM. More extensive migration to LXC will improve this number over time. However, I'd like to go further. I was once able to build a Linux system that was just a kernel and busybox, backed by the musl C library. It ran as a VM and consumed only 13MB of RAM, all of it dedicated to the kernel. I could probably have optimized it further with modularization, but at the time I didn't due to its experimental nature. In a chroot, the kernel of the host is used, meaning that the same setup in a chroot would consume RAM on the order of kB. The busybox shell would be its biggest RAM consumer, which is negligible.

I don't want to settle with 20-30 VM's. I want to settle with hundreds or even thousands of LXC's on 8GB of RAM, as I've seen first-hand with my own builds that it's possible. That's something that's very important in webdesign. Browsers aren't all that different. More often than not, your website will share its resources with about 50-100 other tabs, because users forget to close their old tabs, are power users, looking things up on Stack Overflow, or whatever. Therefore that 8GB of RAM now reduces itself to about 80MB only. And then you've got modern web browsers which allocate their own process for each tab (at a certain amount, it seems to be limited at about 20-30 processes, but still).. and all of its memory required to render yours is duplicated into your designated 80MB. Let's say that 10MB is available for the website at most. This is a very liberal amount for a webserver to deal with per request, so let's stick with that, although in reality it'd probably be less.

10MB, the available RAM for the website you're trying to show. Of course, the total RAM of the user is comparatively huge, but your own chunk is much smaller than that. Optimization is key. Does your website really need that amount? In third-world countries where the internet bandwidth is still on the order of kB/s, 10MB is *very* liberal. Back in 2014 when I got into technology and webdesign, there was this rule of thumb that 7 seconds is usually when visitors click away. Let's say 10kB/s for third-world countries? 7 seconds at that rate makes a 70kB transfer budget for the whole page.
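The back-of-the-envelope numbers above are easy to redo for your own audience. A small sketch in Python, with made-up function names and the same rough decimal rounding (1GB taken as 1000MB):

```python
def per_tab_ram_mb(total_ram_gb, open_tabs):
    """Share of RAM per tab if every tab got an equal slice."""
    return total_ram_gb * 1000 / open_tabs


def page_budget_kb(bandwidth_kb_per_s, patience_s):
    """How much data can arrive before the visitor clicks away."""
    return bandwidth_kb_per_s * patience_s


print(per_tab_ram_mb(8, 100))  # 8GB shared by 100 tabs -> 80.0 MB each
print(page_budget_kb(10, 7))   # 10kB/s for 7 seconds  -> 70 kB
```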

Web 2.0, taking 30+ seconds to load a web page, even on a broadband connection? Totally ridiculous. Make your website as fast as it can be, after all you're playing along with 50-100 other tabs. The faster, the better. The more lightweight, the better. If at all possible, please pursue this goal and make the Web a better place. Efficiency matters.

-

I am running a small - but growing - Ceph cluster at work, since it is fun and our storage demand is growing each day.

Today, it was time to bring another node online and add another 12TB to the cluster.

Installation of the OS went fine, network settings fine, drives look fine.

Now, time to add it into the cluster.... BAM

Every Dell machine in the Cluster - Dead.

The two HP machines are online and running. But the Dell machines just died.

WAT!?

-

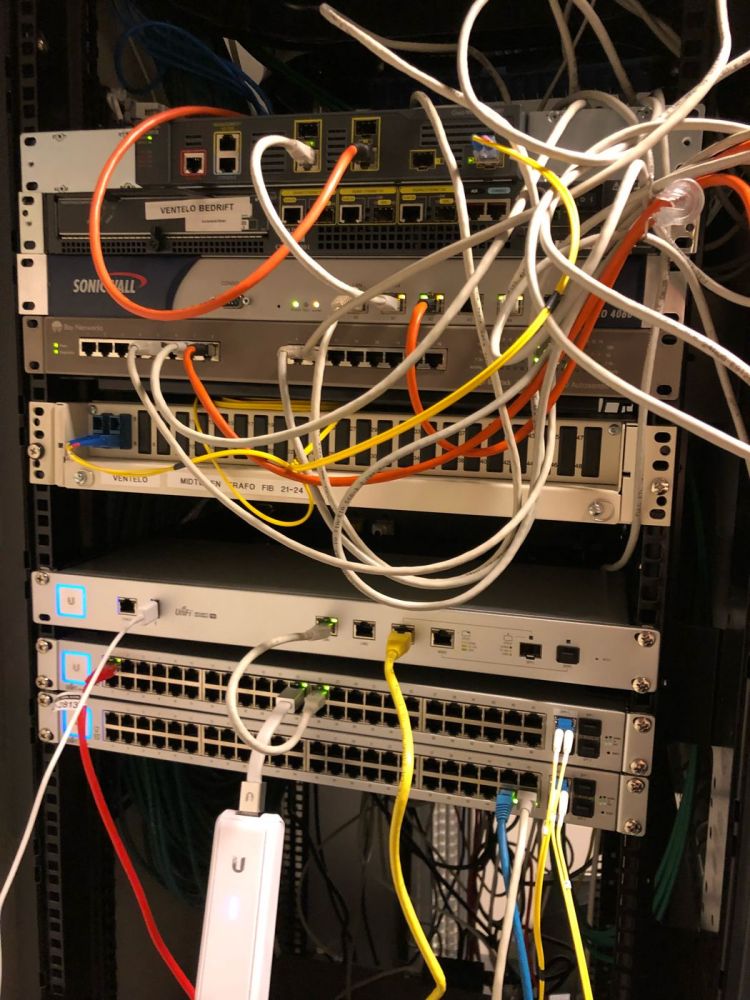

I’ve started the process of setting up the new network at work. We got a 1Gbit fibre connection.

Plan was simple, move all cables from old switch to new switch. I wish it was that easy.

The imbecile of an IT guy at work has set everything up in such a complex and unnecessarily stupid way that I’m baffled.

We got 5 older MacPros, all running MacOS Server, but they only have one service running on them.

Then we got 2x Xserve RAID with some external NAS enclosures and another Mac mounted on them. Both Xserve RAIDs have to be running and connected to the main Mac Pro, which combines all this into a few different volumes.

Everything got a static public IP (we got a /24 block), even the workstations. The only thing that doesn’t get one IP per machine is the guest network.

The firewall is basically set to have all ports open, allowing for easy sniffing of what services we’re running.

The “dmz” is just a /29 of our ip range, no firewall rules so the servers in the dmz can access everything in our network.

Back to the Xserve, it’s accessible from the outside so employees can work from home, even though no one does it. I asked our IT guy why he hadn’t set up a VPN; his explanation was first that he didn’t manage to set it up, then he said VPN is something hackers use to hide who they are.

I’m baffled by this imbecile of an IT guy, one problem is he only works there 25% of the time because of some health issues. So when one of the NAS enclosures didn’t mount after a power outage, he wasn’t at work, and took the whole day to reply to my messages about logins to the xserve.

I can’t wait till I get my order from fs.com with new patching equipment and tonnes of cables, and till I can merge all storage devices into one large SAN. It’ll be such a good work experience.

-

*looks for jobs in system administration*

For our client in $location we're looking for a Network and System Administrator ... to manage our local IT infrastructure (so far so good) ... that's Microsoft-based.

Fuck that company.

*looks further*

Requirements: deployment and maintenance of servers, backups and storage, updates, yada yada.. fine with me.

yOU wiLl mAiNtAiN WanBLowS sUrVaR sYsTeMs

Fuck that company too.

Does anyone here in Belgium even work with fucking Linux servers?! Or should I really relocate to the Netherlands to get something decent?!!

-

Fucking piece of shit German internet man. Some of you might know that Germany probably has the shittiest internet in the EU. And by shitty, I don't mean the downstream speeds you can get (which is how most ISPs justify their crappy network), but the GODDAMN UPSTREAM SPEEDS.

See, I'm just a student, right? I don't run a fucking company or something like that. I don't need / can't afford a symmetrical gigabit connection. But I do a lot of stuff that requires a decent upstream connection.

Fucking Unitymedia (my ISP), if I already decide to buy the goddamn "business plan" (IPv6 & static addresses), at least supply me with some decent upstream speeds. PLEASE!

My current plan costs ~45€ a month for internet and TV (I don't watch, but my two other flat-mates do).

Internet speeds are 150 Mbit/s down and FUCKING 10 Mbit/s up! What??! What the hell am I supposed to do with only 10 Mbit/s?? I'm already completely exhausting the bandwidth and I'm not even done setting everything up! Fucking hell...

I was planning on getting their "upload package" to get at least 20 Mbit/s up – but they removed that option! IT'S GONE, PEOPLE! They said in an interview last year that "customers are not interested in higher upload speeds" and consequently removed that option. WHAT???

"You wanna have state-of-the-art downstream speeds of 400 Mbit/s? Here you go. Oh, our maximum limit of 10 Mbit/s upstream is not enough for you? TOO FUCKING BAD, NOTHING THAT WE CAN OFFER YOU!"

(Seriously though, the best customer internet plan is 400D & 10U)

Goddamn... in this day and age of things like cloud storage etc. even "normal" people definitely need higher upload speeds.

Man, this rant got so long, but I really wanted to get this out. This wasn't even everything though, maybe I'll make a separate rant to elaborate on other issues.

If you are interested, you might want to read up on the following report:

https://speedtest.net/reports/...

-

2012 laptop:

- 4 USB ports or more.

- Full-sized SD card slot with write-protection ability.

- User-replaceable battery.

- Modular upgradeable memory.

- Modular upgradeable data storage.

- eSATA port.

- LAN port.

- Keyboard with NUM pad.

- Full-sized HDMI port.

- Power, I/O, charging, network indicator lamps.

- Modular bay (for example Lenovo UltraBay)

- 1080p webcam (Samsung 700G7A)

- No TPM trojan horse.

2024 laptop:

- 1 or 2 USB ports.

- Only MicroSD card slot. Requires fumbling around and has no write-protection switch.

- Non-replaceable battery.

- Soldered memory.

- Soldered data storage.

- No eSATA port.

- No LAN port.

- No NUM pad.

- Micro-HDMI port or uses USB-C port as HDMI.

- Only power lamp. No I/O lamp so user doesn't know if a frozen computer is crashed or working.

- No modular bay

- 720p webcam

- TPM trojan horse (Jody Bruchon video: https://youtube.com/watch/... )

- "Premium design" (who the hell cares?!)

-

Long rant ahead.. 5k characters pretty much completely used. So feel free to have another cup of coffee and have a seat 🙂

So.. a while back this flash drive was stolen from me, right. Well it turns out that other than me, the other guy in that incident also got to the police 😃

Now, let me explain the smiley face. At the time of the incident I was completely at fault. I had no real reason to throw a punch at this guy and my only "excuse" would be that I was drunk as fuck - I've never drank so much as I did that day. Needless to say, not a very good excuse and I don't treat it as such.

But that guy and whoever else it was that he was with, that was the guy (or at least part of the group that did) that stole that flash drive from me.

Context: https://devrant.com/rants/2049733 and https://devrant.com/rants/2088970

So that's great! I thought that I'd lost this flash drive and most importantly the data on it forever. But just this Friday evening as I was meeting with my friend to buy some illicit electronics (high voltage, low frequency arc generators if you catch my drift), a policeman came along and told me about that other guy filing a report as well, with apparently much of the blame now lying on his side due to him having punched me right into the hospital.

So I told the cop, well most of the blame is on me really, I shouldn't have started that fight to begin with, and for that matter not have drunk that much, yada yada yada.. anyway he walked away (good grief, as I was having that friend on visit to purchase those electronics at that exact time!) and he said that this case could just be classified then. Maybe just come along next week to the police office to file a proper explanation but maybe even that won't be needed.

So yeah, great. But for me there's more in it of course - that other guy knows more about that flash drive and the data on it that I care about. So I figured, let's go to the police office and arrange an appointment with this guy. And I got thinking about the technicalities for if I see that drive back and want to recover its data.

So I've got 2 phones, 1 rooted but reliant on the other one that's unrooted for a data connection to my home (because Android Q, and no bootable TWRP available for it yet). And theoretically a laptop that I can put Arch on no problem, but its display backlight is cooked. So if I want to bring that one I'd have to rely on a display from them. Good luck getting that done. No option. And then there's a flash drive that I can bake up with a portable Arch install that I can sideload from one of their machines but on that.. even more so - good luck getting that done. So my phones are my only option.

Just to be clear, the technical challenge is to read that flash drive and get as much data off of it as possible. The drive is 32GB large and has about 16GB used. So I'll need at least that much on whatever I decide to store a copy on, assuming unchanged contents (unlikely). My Nexus 6P with a VPN profile to connect to my home network has 32GB of storage. So theoretically I could use dd and pipe it to gzip to compress the zeroes. That'd give me a resulting file that's close to the actual usage on the flash drive in size. But just in case.. my OnePlus 6T has 256GB of storage but it's got no root access.. so I don't have block access to an attached flash drive from it. Worst case I'd have to open a WiFi hotspot to it and get an sshd going for the Nexus to connect to.
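In practice that's just dd piped into gzip, but the idea fits in a few lines of Python too. A rough sketch, not what I'd actually run on the phone: the function name is made up, the device path in the comment is a placeholder, and reading a raw block device needs root.

```python
import gzip
import shutil


def image_to_gzip(source_path, target_path, chunk_size=4 * 1024 * 1024):
    """Copy source_path byte for byte into a gzip-compressed file.

    Long runs of zeroes (unused space) compress to almost nothing, so the
    result ends up close to the actual usage rather than the full drive size.
    """
    with open(source_path, "rb") as src, gzip.open(target_path, "wb") as dst:
        shutil.copyfileobj(src, dst, chunk_size)


# Hypothetical usage, as root, with the drive showing up as /dev/sdX:
# image_to_gzip("/dev/sdX", "flashdrive.img.gz")
```

Reading in fixed-size chunks keeps memory use flat, which matters on a phone that can't hold a 32GB image in RAM.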

And there we have it! A large storage device, no root access, that nonetheless can make use of something else that doesn't have the storage but satisfies the other requirements.

And then we have things like parted to read out the partition table (and if unchanged, cryptsetup to read out LUKS). Now, I don't know if Termux has these and frankly I don't care. What I need for that is a chroot. But I can't just install Arch x86_64 on a flash drive and plug it into my phone. Linux Deploy to the rescue! 😁

It can make chrooted installations of common distributions on arm64, and it comes extremely close to actual Linux. With some Linux magic I could make that able to read the block device from Android and do all the required sorcery with it. Just a USB-C to 3x USB-A hub required (which I have), with the target flash drive and one to store my chroot on, connected to my Nexus. And fixed!

Let's see if I can get that flash drive back!

P.S.: if you're into electronics and worried about getting stuff like this stolen, customize it. I happen to know one particular property of that flash drive that I can use for verification, although it wasn't explicitly customized. But for instance in that flash drive there was a decorative LED. Those are current limited by a resistor. Factory default can be say 200 ohm - replace it with one with a higher value. That way you can without any doubt verify it to be yours. Along with other extra security additions, this is one of the things I'll be adding to my "keychain v2".

-

Game Streaming is an absolute waste.

I'm glad to see that quite a lot of people are rightfully skeptical or downright opposed to it. But that didn't stop the major AAA game publishers announcing their own game streaming platforms at E3 this weekend, did it?

I fail to see any unique benefit that can't be matched by traditional hardware (either console or PC):

- Portability? The Nintendo Switch proved that dedicated consoles now have enough power to run great games both at home and on the go.

- Storage? You can get sizable microSD cards for pretty cheap nowadays. So much so that the Switch went back to use flash-based cartridges!

- Library size/price? The problem is even though you're paying a low price for hundreds of games, you don't own them. If any of these companies shut down the platform, all that money you spent is wasted. Plus, this can be solved with backwards compatibility and one-time digital downloads.

- Performance on commodity hardware? This is about the only thing these streaming services have going for them. But unfortunately this only works when you have an Internet connection, so if you have crap Internet or drop off the network, you're screwed. And has it ever occurred to people that maybe playing Doom on your phone is a terrible user experience and shouldn't be done because it wasn't designed for it?

I just don't get it. Hopefully this whole fad passes soon.

-

Was just recalling one of the worst calls I ever got in IT...

Many years ago we had a single rack for all of our servers, network and storage (pre virtualization too!).

We had a new security system installed in the building and the facilities manager let the guy into the server room to run all the sensor cables in because that is where they wanted their panel... the guy was too lazy to get up on the roof and in the attic repeatedly so after he checked it out he went around everywhere and drilled a hole straight up where he wanted the sensor wire to go... well the server room was not under an attic space... when he found he had drilled through to the outside... HE FILLED IT WITH EXPANDING FOAM.... the membrane on the roof was damaged... that night it rained... I got a call at 4 am that systems were acting funky and I went in... when I opened the door it was literally raining through the corners of the drop ceiling onto the rack... An excellent DR plan saved our asses but the situation cost the vendor's insurance company $30k in dead equipment and another $10k in emergency labor. Good thing for him we had so little equipment in that room back then.

Moral of the story... always have a good DR plan... you never know when it will rain in the server room.... :)

-

Only touching the topic slightly:

In my school time we had a windows domain where everyone would login to on every computer. You also had a small private storage accessible as network share that would be mapped to a drive letter so everyone could find it. The whole folder containing the private subfolders of everyone was shared so you could see all names but they were only accessible to the owner.

At some point, though, I tried opening them again but this time I could see the contents. That was quite unexpected so I tried reading some generic file which also worked without problems. Even the write command went through successfully. Beginning to grasp the severity of the misconfiguration I verified with other userfolders and even borrowed the account of someone else.

I skipped the "report a problem" form, which would have been read within the next couple of hours at the latest, because I figured this was too serious. Instead I went straight to the admin and told him what I found. You can't believe how quickly he ran off to the admin room to have a look and fix the permissions.

-

24th, Christmas: BIND slaves decide to suddenly stop accepting zone transfers from the master. Half a day of raging and I still couldn't figure out why. dig axfr works fine, but the slaves refuse a zone update according to tcpdump logs.

25th, 2nd day: A server decides to go down and take half my network with it. Turns out that a Python script managed to crash the goddamn kernel.

Thank you very much technology for making the Christmas days just a little bit better ❤️

At least I didn't have anything to do on either day, because of the COVID-19 pandemic. And to be fair, I did manage to make a Telegram bot with fancy webhooks and whatnot in 5MB of memory and 18MB of storage. Maybe I should just write the whole thing and make another sacred temple where shitty code gets beaten the fuck out of the system. Terry must've been onto something...

-

What you are expected to learn in 3 years:

power electronics,

analogue signal,

digital signal processing,

VHDL development,

VLSI development,

antenna design,

optical communication,

networking,

digital storage,

electromagnetic,

ARM ISA,

x86 ISA,

signal and control system,

robotics,

computer vision,

NLP, data algorithm,

Java, C++, Python,

javascript frameworks,

ASP.NET web development,

cloud computing,

computer security,

Information coding,

ethical hacking,

statistics,

machine learning,

data mining,

data analysis,

cloud computing,

Matlab,

Android app development,

iOS app development,

Computer architecture,

Computer network,

discrete structure,

3D game development,

operating system,

introduction to DevOps,

how-to-fix-computer,

system administration,

Project of being entrepreneur,

and 24 random unrelated subjects of your choices

This is a major called "computer engineering".

-

Last Monday I bought an iPhone as a little music player, and just to see how iOS works or doesn't work.. which arguments against Apple are valid, which aren't etc. And at a price point of €60 for a secondhand SE I figured, why not. And needless to say I've jailbroken it shortly after.

Initially setting up the iPhone when coming from fairly unrestricted Android ended up being quite a chore. I just wanted to use this thing as a music player, so how would you do it..?

Well you first have to set up the phone, iCloud account and whatnot, yada yada... Asks for an email address and flat out rejects your email address if it's got "apple" in it, catch-all email servers be damned I guess. So I chose ishit at my domain instead, much better. Address information for billing.. just bullshit that, give it some nulls. Phone number.. well I guess I could just give it a secondary SIM card's number.

So now the phone has been set up, more or less. To get music on it was quite a maze solving experience in its own right. There's some stuff about it on the Debian and Arch Wikis but it's fairly outdated. From the iPhone itself you can install VLC and use its app directory, which I'll get back to later. Then from e.g. Safari, download any music file.. which it downloads to iCloud.. Think Different I guess. Go to your iCloud and pull it into the iPhone for real this time. Now you can share the file to your VLC app, at which point it initializes a database for that particular app.

The databases / app storage can be considered equivalent to the /data directories for applications in Android, minus /sdcard. There is little to no shared storage between apps, most stuff works through sharing from one app to another.

Now you can connect the iPhone to your computer and see a mount point for your pictures, and one for your documents. In that documents mount point, there are directories for each app, which you can just drag files into. For some reason the AFC protocol just hangs up when you try to delete files from your computer however... Think Different?

Anyway, the music has been put on it. Such features, what a nugget! It's less bad than I thought, but still pretty fucked up.

At that point I was fairly dejected and that didn't get better with an update from iOS 14.1 to iOS 14.3. Turns out that Apple in its nannying galore now turns down the volume to 50% every half an hour or so, "for hearing safety" and "EU regulations" that don't exist. Saying that I was fuming and wanting to smack this piece of shit into the wall would be an understatement. And even among the iSheep, I found very few people that thought this is fine. Though despite all that, there were still some. I have no idea what it would take to make those people finally reconsider.. maybe Tim Cook himself shoving an iPhone up their ass, or maybe they'd be honored that Tim Cook noticed them even then... But I digress.

And then, then it really started to take off because I finally ended up jailbreaking the thing. Many people think that it's only third-party apps, but that is far from true. It is equivalent to rooting, and you do get access to a Unix root account by doing it. The way you do it is usually a bootkit, which in a desktop's ring model would be a negative ring. The access level is extremely high.

So you can root it, great. What use is that in a locked down system where there's nothing available..? Aha, that's where the next thing comes in, 2 actually. Cydia has an OpenSSH server in it, and it just binds to port 22 and supports all of OpenSSH's known goodness. All of it, I'm using ed25519 keys and a CA to log into my phone! Fuck yea boi, what a nugget! This is better than Android even! And it doesn't end there.. there's a second thing it has up its sleeve. This thing has an apt package manager in it, which is easily equivalent to what Termux offers, at the system level! You can install not just common CLI applications, but even graphical apps from Cydia over the network!

Without a jailbreak, I would say that iOS is pretty fucking terrible and if you care about modding, you shouldn't use it. But jailbroken, fufu.. this thing trades many blows with Android in the modding scene. I've said it before, but what a nugget!

-

I think I'm having a "return to monkey" phase.

What the fuck are we doing?

Free VPNs, free cloud storage, smartphones and stupid telemetry/uSaGE aNaLYtiCs, password managers, social media, content farms, cheap wifi enabled smart home and 'intelligent' cars.

I'm starting to hate it all.

Look at how many people (including myself, sadly) are glued to their fucking datahoarding multimedia shitdevices (known as 'smartphones'). While sitting in a room filled with every fucking small appliance that needs an app and wifi, and phones home to who the fuck knows.

Even my fucking dishwasher has an app and wifi enabled so I can start the dishwasher outside the wifi network.

How the fuck did we get here?

-

A project I've been a part of for two years finally exited beta this morning! It was so exciting watching it grow and change into what it is today. The project in question is Storj.io, a decentralized cloud storage. When I first joined the project, literally all it did was create junk files to take up space. Now it is a thriving network storing over a petabyte of data without the possibility of it ever going offline.

-

Slightly more than a year ago I started volunteering at the local general students committee. They had been desperately searching for someone to play the role of both political head of division and system administrator for around half a year before I took the job.

When I started, the data center was mostly abandoned, with most of the computational power and resources just lying around unused. They already ran some kvm-hosts with around 6 virtual machines, including a cloud service, internally used shared storage, a user directory and also 10 workstations and a WiFi-Network. Everything except one virtual machine ran on GNU/Linux-systems and was built on open source technology. The administration was done through shared passwords, bash-scripts and instructions in an extensive MediaWiki instance.

My introduction into this whole eco-system was basically this:

"Ever did something with linux before? Here you have the logins - have fun. Oh, and please don't break stuff. Thank you!"

Since I had only managed a small personal server before and had learned about networking, it-sec and administration only from courses in university, I quickly shaped a small team eager to build great things, which would bring in the knowledge necessary to create something awesome. We had a lot of fun diving into modern technologies, discussing the future of this infrastructure and simply trying things out and failing hard while implementing those ideas.

Today, a year and a half later, we look at around 40 virtual machines spiced with a lot of magic. We host several internal and external services like cloud, chat, ticket-system, websites, blog, notepad, DNS, DHCP, VPN, firewall, confluence, freifunk (free network mesh), ubuntu mirror etc. Everything is managed through a central puppet-configuration infrastructure. Changes in configuration are deployed in minutes across all servers. We utilize docker for application deployment and gitlab for code management. We provide incremental, distributed backups, a central database and a distributed network across the campus. We created a desktop workstation environment based on Ubuntu Server for deployment on bare-metal machines through the foreman project. Almost everything free and open source.

The whole system now is easily configurable, allows updating, maintenance and deployment of old and new services. We reached our main goal for this year which was the creation of a documented environment which is maintainable by one administrator.

Although we did this in our free-time without any payment it was a great year with a lot of experience which pays off now.

-

I continue to internally read and study about Smalltalk in an effort to see where we might have FUCKED UP and gone backwards in terms of software engineering, since I do not believe that complex source code based languages are the solution.

So I have Pharo. Nothing too complex really, everything is an object, yet you do have room for building DSLs inside of it over a simple object model with no issue. The system browser can be opened across multiple screens (morph windows inside of a Smalltalk system), in which you can edit your code in composable blocks with no issues. Blocks, being a particular part of the language (think Ruby in more modern features), give ample room for functional programming. Thus far we have FP and OO (the original, mind you) styles out in the open for development.

Your main code can be executed and instantly ALTER the live environment of a program as it is running; if what you are trying to do is stupid, it won't affect the live instance. Live programming is ahead of its time, and impressive considering how old Smalltalk is. GUI applications can be delivered headless (this is also old in terms of how this shit was first distributed), so I can go ahead and package the virtual machine with the entire application into a folder and distribute it across an organization. ("But why!!!! That package is 80+ MB!") Yeah, cuz it carries the entire virtual machine, but go ahead and give it to the Mac user, or the Linux user: it will run natively once it is clicked.

Server side applications run in similar fashion to php, in terms of lifecycles of request and how session storage is handled, this to me is interesting, no additional runtimes, drop it on a server, configure it properly and off you go, but this is common on other languages so really not that much of a point.

BUT if a user is using your application over a network and you change it and send that change over the network, then the change is damn near instant and fault tolerant due to the nature of the language.

Honestly, I don't know what went wrong or why we are not bringing this shit to the masses, the language was built for fucking kids, it was the first "y'all too stupid to get it, so here is simple" engine and we still said "nah fuck it, unlimited file system based programs, horrible build engines and {}; all over the place"

I am now writing a large budget managing application in Pharo Smalltalk which I want to go ahead and put to test soon at my institution. I do not have any issues thus far, other than my documentation help is literally "read the source code of the package system" which is easy as shit since it is already included inside. My scripts are small, my class hierarchies cover on themselves AND testing is part of the system. I honestly see no faults other than "well....fuck you I like opening vim and editing 300000000 files"

And honestly that is fine, my question is: why is a paradigm that fits procedural, functional and OBVIOUSLY OO while including an all-encompassing IDE NOT more famous? SELECTION is fine and other languages are a better fit for some things, but why is such an environment not more famous?

-

When I was in 11th class, my school got a new setup for the school PCs. Instead of just resetting them every time they are shut down (to a state in which it contained a virus, great) and having shared files on a network drive (where everyone could delete anything), they used iServ. Apparently many schools started using that around that time, I heard many bad things about it, not only from my school.

Since school is sh*t and I had nothing better to do in computer class (they never taught us anything new anyway), I experimented with it. My main target was the storage limit. Logins on the school PCs were made with domain accounts, which also logged you in with the iServ account, then the user folder was synchronised with the iServ server. The storage limit there was given as 200MB or something of that order. To have some dummy files, I downloaded every program from portableapps.com, that was an easy way to get a lot of data without much manual effort. Then I copied that folder, which was located on the desktop, and pasted it onto the desktop. Then I took all of that and duplicated it again. And again and again and again... I watched the amount increase, 170MB, 180, 190, 200, I got a mail saying that my storage is full, 210, 220, 230, ... It just kept filling up with absolutely zero consequences.

At some point I started using the web interface to copy the files, which had even more interesting side effects: Apparently, while the server was copying huge amounts of files to itself, nobody in the entire iServ system could log in, neither on the web interface, nor on the PCs. But I didn't notice that at first, I thought just my account was busy and of course I didn't expect it to be this badly programmed that a single copy operation could lock the entire system. I was told later, but at that point the headmaster had already called in someone from the actual police, because they thought I had hacked into whatever. He basically said "don't do again pls" and left again. In the meantime, a teacher had told me to delete the files by a certain date, but he locked my account way earlier so that I couldn't even do it.

Btw, I now own a Minecraft account of which I can never change the security questions or reset the password, because the mail address doesn't exist anymore and I have no more contact with the person who gave it to me. I got that account as a prize because I made the best program in a project week about Java, which greatly showed how much the computer classes helped the students learn programming: Of the ~20 students, only one other person actually had a program at the end of the challenge and it was something like hello world. I had translated a TI Basic program for approximating fractions from decimal numbers to Java.

The big irony about sending the police to me as the 1337_h4x0r: A classmate actually tried to hack into the server. He even managed to make it send a mail from someone else's account, as far as I know. And he found a way to put a file into any account, which he briefly considered using to put a shutdown command into autostart. But of course, I must be the great hacker.

-

Fucking docker swarm. Why the hell do they have to change the way it works so damn often. Find a good walkthrough and it's not fucking valid anymore cause swarm doesn't use consul to catalog swarm nodes anymore. Well fuck thanks docker now I have to rethink my architecture cause you fuckers wanted to do something half assed.

Sad fucking thing is the change that made you do that shit in the first place doesn't work right for ssl so your damn mesh network is fucking useless for any real world uses unless people like me rig the fucking hell out of it.

Another fucking thing how the hell haven't these fucktards added a shared storage yet, come the fuck on.

-

!rant && story

tl;dr I lost my path, learned a lot about linux and found true love.

So because of the recent news about wpa2, I thought about learning to do some network penetration testing with kali. My roommate and I took an old 8gb usb and turned it into a bootable usb with persistent storage. Maybe not the best choice, but at least we know how to do that now.

Anyway, we started with a kali.iso from 2015, because we thought it would be faster than downloading it with a 150kbps connection. Learned a lot from that mistake while waiting for apt-get update/upgrade.

Next day I got access to some faster connection, downloaded a new release build and put the 2015 version out of its misery. Finally some signs of progress. But that was not enough. We wanted more. We (well at least I) wanted to try i3, because one of my friends showed me /r/unixporn (btw, pornhub is deprecated now). So after researching what i3 is, what a wm is AND what a dm is, we replaced gdm3 with lightdm and set i3 as standard wm. With the user guide on another screen we started playing with i3. Apparently heaven is written with two characters only. Now I want to free myself from windows and have linux (Maybe arch) as my main system, but for now we continue to use this kali usb to learn about how to set up a nice desktop environment. Wait, why did we choose to install kali? 😂

I feel kinda sorry for that, but I want to experiment on there until I feel confident. (Please hit me up with tips about i3)

Still gotta use Windows as a subsystem for gaming. 😥

-

fuck.. FUCK FUCK FUCK!!!

I'mma fakin EXPLODE!

It was supposed to be a week, maybe two weeks long gig MAX. Now I'm on my 3rd (or 4th) week and still got plenty on my plate. I'm freaking STRESSED. Yelling at people for no reason, just because they interrupt my train of thought, raise a hand, walk by, breathe, stay quiet or simply are.

FUCK!

Pressure from all the fronts, and no time to rest. Sleeping 3-5 hours, falling asleep with this nonsense and breaking the day with it too.

And now I'm fucking FINALLY CLOSE, I can see the light at the end of the tunne<<<<<TTTOOOOOOOOOOOOOTTTTT>>>>>>>

All that was left was to finish up configuring a firewall and set up alerting. I got storage sorted out, customized a CSI provider to make it work across the cluster, raised, idk, a gazillion issues in GH in various repositories I depend on, practically debugged their issues and reported them.

Today I'm on firewall. Liaison with the client is pressured by the client bcz I'm already overdue. He propagates that pressure on to me. I have work. I have family, I have this side gig. I have people nagging me to rest. I have other commitments (you know.. eating (I practically finish my meal in under 3 minutes; incl. the 2min in the µ-wave), shitting (I plan it ahead so I could google issues on my phone while there), etc.)

A fucking firewall was left... I configured it as it should be, and... the cluster stopped...clustering. inter-node comms stopped. `lsof` shows that for some reason nodes are accessing LAN IPs through their WAN NIC (go figure!!!) -- that's why they don't work!!
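For anyone chasing the same symptom: one way to spot it in a connection listing is to look for private (LAN) destinations being reached from a public source address. A minimal sketch using only Python's standard `ipaddress` module; the `misrouted` helper and the sample addresses are made up for illustration and are not taken from the cluster in this rant:

```python
import ipaddress


def misrouted(connections):
    """Return (local, remote) pairs where a private remote address
    is being reached from a non-private local address."""
    flagged = []
    for local, remote in connections:
        local_ip = ipaddress.ip_address(local)
        remote_ip = ipaddress.ip_address(remote)
        if remote_ip.is_private and not local_ip.is_private:
            flagged.append((local, remote))
    return flagged


# Hypothetical sample data: (local address, remote address) per connection.
# 1.2.3.4 stands in for a node's public WAN address.
sample = [
    ("10.0.0.2", "10.0.0.3"),  # LAN to LAN: fine
    ("1.2.3.4", "10.0.0.3"),   # WAN source to LAN target: suspicious
    ("1.2.3.4", "1.1.1.1"),    # WAN to public internet: fine
]
print(misrouted(sample))
```

Feeding it the address pairs parsed from `lsof -i` or `ss` output would be the obvious next step; that parsing is left out here because the output format differs between tools.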

Sooo.. my colleagues suggest I make it faster/quicker and more secure -- disable public IPs and use a private LB. I spent this whole day trying to implement it. I set up bastion hosts, managed to hack private SSH key into them upon setup, FINALLY managed to make ssh work and the user_data script to trigger, only to find out that...

~]# ping 1.1.1.1

ping: connect: Network is unreachable

~]#

... there's no nat.

THERE'S NO FUCKING NAT!!!

HOW CAN THERE BE NO NAT!?!?!????? MY HOME LAPTOP HAS A NAT, MY PHONE HAS A NAT, EVEN MY CAT HAS A MOTHER HUGGING NAT, AND THIS FUCKING INFRA HAS NO FUCKING NAT???????????????????????

Already under loads of pressure, and the whole day is wasted. And now I'll be spending time to fucking UNDO everything I did today. Not try something new. But UNDO. An hour or more for just that...

I don't usually drink, but recently that bottom shelf bottle of Captain Morgan that smells and tastes like a bottle of medical spirit starts to feel very tempting.

Soo.. how's your day

rant overdue tired no nat hcloud why there's no nat???? fuck frustrated waiting for concrete to settle angry hetzner need an outlet

-

Fk you Google!

My Samsung note 10 screen went dead near a week ago... it's a secondary line so waiting for parts wasn't the end of the world.

Ofc the screen (curved and incl a fingerprint reader thatd be a major pain to not replace) was integrated to the whole front half... back panel glued, battery, glued immensely and with all other parts out, about 6mm space only at the bottom to get a tool in to pry it out.

New screen (off brand) ~200... all genuine parts amazon refurb ~230... figured id have some extra hardware for idk what... i like hardware and can write drivers so why not.

Figured id save a bit of time and avoid other potentially damaged (water) components to just swap out the mobo unit that had my storage.

Put it back together, first checked that my sim was recognised since this carrier required extraneous info when registering the dev... worked fine... fingerprint worked fine, brave browser too...

Then i open chrome. It tells me im offline... weird cuz i was literally in a discord call. My wifi says connected to the internet (not that i wouldn't have known the second there was a network issue... i have all our servers here and a /28 block... ofc i have everything scripted and connected to alert any dev i have, anywhere i am, the moment something strange happens).

Apparently google doesnt like the new daughter board(i dislike the naming scheme... its weird to me)... so anything that is controlled by google aside from the google account that is linked to non-google reliant apps like this... just hangs as if loading and/or says im offline.

I know... itll only take me about the 5-10m it took to type this rant but ffs google... why dont you even have an error message as to what your issue is... or the simple ability to let me log in and be like 'yup it's me, here's your dumb 2fa and a 3rd via text cuz you're extra paranoid yet dont actually lock the account or dev in any way!'

I think it's a toss up if google actually knows that it's doing this or they just have some giant glitch that showed up a couple times in testing and was resolved via the methods of my great grama- "just smack it or kick it a few times while swearing at it in polish. Like reaaaally yelling. Always worked for me! If not, find a fall guy."

-

Today I spent 9 hours trying to resolve an issue with .net core integration testing a project with soap services created using a third party soap library since .net core doesn't support soap anymore. And WCF is before my time.

The tests run in-process so that we can override services like the database, file storage, basically io settings but not code.

This morning I write the first test by creating a connected service reference to generate a service client. That way I don't need to worry about generating soap messages and keeping them in sync with the code.

I sent my first request and... Can't find endpoint.

3 hours later I learn via fiddler that a real request is being made. It's not using the virtual in-process server and http client, it's sending an actual network request that fiddler picks up, and of course that needs a real server accepting requests... Which I don't have.

So I start on MSDN. Please God help me. Nope. Nothing. Makes sense since soap is dead on .net core.

Now what? Nothing on the internet because of the above. Nothing in the third party soap library. Nothing. At this point I question if I have hit my wall as a developer.

Another 4 hours later I have reverse engineered the Microsoft code on GitHub and figured out that I am fucked. It's so hard to understand.

2 more hours later I have figured out a solution. It's pure filth..I hide it away in another tooling project and move all the filth to internal classes :D the equivalent of tidying your room as a kid by shoving it all under the bed. But fuck it.

My soap tests now use the correct http client with the virtual server. I am a magician.

-

Interesting project lined up for today!

I'll be installing a security system, one camera connected to a DVR pointing at a front door triggering a buzzer and sending a video feed to a monitor in a ground floor room at the other side of the house.

But it's my dad's house so I'm going to have fun with it and install a wired Cat5 network and an isolated offline router, and build in some "smart home" features from scratch, all running on a Local Area Network.

I've built a private home server package for media and storage using Apache and I want to add as many features to the house as I can, maybe even install an extra camera pointing towards the sky (every home should have a sky cam lol).

I can take my time with this project over the next several weeks and I was wondering what you would add to this project?

-

VLC for android is horrendous when it comes to network storage (and ux, but that's vlc in general). 3500 audio titles in a playlist being vlc-native format via samba-share. The fucking thing just freezes up. 3 minutes later it still doesn't do anything..

Oh you say it's prebuffering? How nice it's able to do so witHOUT READING ANYTHING FROM THE FUCKING DISK IN MY SERVER NICE DUDE IS IT GOING TO FETCH IT TELEPATHICALLY?!

-

Windows why the fuck you slow down to a crawl when I upload something to network storage?! I only upload at 100Mbit and you lose your shit opening another file explorer??!

-

as a seasoned systems eng myself, i had huge mental block of "i am not a programmer" whining when starting to incorporate agile/infrastructure as code for more seasoned syseng staff.

leadership made devops a role and not a practice so lots of growing pains. was finally able to win them over by asking them to look at how many 'scripts' and 'tools' they wrote to make life easier... and how much simpler and sustainable using puppet/ansible/chef/salt... and checking in all our sacred bin files and only approved 'scripts' would be pushed thru automation tool after post review.

we still are not programmers or developers, but using specific practices and source control took some time but saving us loads of time and gives us ability to actually do engineering

but just have 2 groups of younger guys that grew up wanting to be the bofh/curmudgeon get off my systems types that are like not even 30... frustrating as they are the ones that should be more familiar with the shift from strictly ops to some overlap. and the devs that ask for root now that they can launch instances on aws or can launch docker containers and microservice..... ugggg. these 2 groups have never had to rack and stack servers, network gear, storage... just all magic to them because they can start 50 servers with a button click.

try to get past the iam roles, acls, facls, selinux and noshell i have been pushing. bitches.

-

I'm a beginner learning Java. I have been beating around the bush on the internet for the past decade. This is my understanding up to now. Take a bottle of water: the bottle may be considered the CLASS and the water in it the objects (atoms); some objects may be of the same kind, while others differ in some properties. Another way of understanding it: Human Being is the CLASS, and Male and Female are objects of class Human Being. Here again the objects may differ in properties such as gender, age, body parts. Zoo might be a class, with animals (objects), elephants (objects), tigers (objects) and others too. The human properties above can be added to the Zoo class as well: male, female, body parts, age, eating habits, crawlers, four-legged, two-legged, flying, water animals, mammals, herbivores, carnivores.. whatever.. That is my understanding so far. Corrections are always welcome. I will be happy to have my answer modified; comment below.
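To make the class/object distinction concrete, here is a minimal sketch. It is written in Python rather than Java purely to keep it short, and the `Animal` class and its fields are invented for illustration. The class is the blueprint (it says which properties exist); each object is one concrete thing built from that blueprint, with its own property values:

```python
from dataclasses import dataclass


@dataclass
class Animal:
    """The class: describes which properties every animal has."""
    species: str
    legs: int
    diet: str


# Objects: concrete instances of the class, each with its own values.
elephant = Animal(species="elephant", legs=4, diet="herbivore")
tiger = Animal(species="tiger", legs=4, diet="carnivore")

# A zoo, in this sketch, is just a collection of Animal objects.
zoo = [elephant, tiger]

print(type(elephant) is type(tiger))  # True: both come from the same class
print(elephant == tiger)              # False: their property values differ
print([a.species for a in zoo if a.diet == "herbivore"])
```

Read this way, "elephant" and "tiger" are closer to objects (or, in a larger design, subclasses) than the zoo itself is: the zoo holds animals, it is not a kind of animal.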

And for basic level.

Learn from input, output devices

Then memory: cache (quick access), RAM (temporary runtime memory) and hard disk (permanent memory), all inside the computer. To explain these as far as I know them: right now I am writing this answer with mobile net on. Suppose I suddenly switch off my phone and switch it on again. The cache is used for instant access to navigation, network etc. RAM is temporary, so my Quora answer will be lost, as it was stored in RAM before the switch-off. But my Quora app, my gallery and the rest are on permanent internal storage (generally hard disks in a PC) and won't be affected. This all happens in the CPU, right. Okay, now one question: who manages all these commands, inputs and outputs? That's the software, which may be Windows, macOS, iOS, or Android for mobiles. These operating systems are the managers of the computer's components.

Java is a high-level language, whereas computers understand only binary, low-level code made of 0s and 1s, such as 00101, 1110000101, 0010, 1100 (let these be ABCD in binary). Numbers, lower-case letters and other symbols are all coded in 0s and 1s too. The program we write is compiled to bytecode by the Java compiler, and the JVM (Java Virtual Machine) then runs that bytecode, acting as an interpreter. This is not the case in C.
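The bit strings in the paragraph above are placeholders rather than the real encoding of "ABCD". As a small illustration of what text actually looks like in 0s and 1s, here is a sketch in Python (not Java, to keep it short) that prints the UTF-8 bytes of a string in binary; the `to_bits` helper is a name made up for this example:

```python
def to_bits(text):
    """Encode text as UTF-8 and render each byte as eight binary digits."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


print(to_bits("ABCD"))  # 01000001 01000010 01000011 01000100
print(to_bits("a"))     # 01100001
```

Upper-case "A" is the number 65 and lower-case "a" is 97, which is why the two differ in exactly one bit.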

Let us C…

Do comment. Thank you

-

I hate the elasticsearch backup api.

From beginning to end it's a painful experience.

I try to explain it, but I don't think I will be able to cover it all.

The core concept is:

- repository (storage for snapshots)

- snapshots (actual backup)

The first design flaw is that every backup in a repository is incremental. ES creates an incremental filesystem tree.

Some reasons why this is a bad idea:

- deletion of (older) backups is slow, as newer backups need to be checked for integrity

- you simply have to trust ES that it does the right thing (given the bugs it has... It seems like a very bad idea TM)

- you have no possibility of verification of snapshots

Workaround... Create many repositories, as each new repository forces a full backup.........

The second thing: ES scales. Many nodes / es instances form a cluster.

Usually backup APIs incorporate these in their design. ES does not.

If an index spans 12 nodes and you use network storage, yes: a maximum of 12 nodes will each open e.g. an NFS connection and start backing up.

It might sound not so bad with 12 nodes and one index...

But it gets pretty bad with 100s of indexes and several dozen nodes...

And there is no real limiting in ES. You can plug a few holes, but all in all, when you don't plan your backups carefully, you'll get a pretty f*cked up network congestion.

So traffic shaping must be manually added. Yay...
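One of the holes that can be plugged is the per-node snapshot rate. As a rough sketch, assuming a shared-filesystem (`fs`) repository and the `max_snapshot_bytes_per_sec` repository setting (which, as far as I know, throttles each node separately; check the docs for your ES version), a total bandwidth budget can be divided across the nodes that may write at once. The function names, the budget and the mount path are hypothetical:

```python
def per_node_rate_mb(total_budget_mb_per_sec, node_count):
    """Split a total bandwidth budget evenly across nodes (at least 1 MB/s each)."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    return max(1, total_budget_mb_per_sec // node_count)


def repository_body(location, total_budget_mb_per_sec, node_count):
    """Build a request body for registering an fs snapshot repository."""
    rate = per_node_rate_mb(total_budget_mb_per_sec, node_count)
    return {
        "type": "fs",
        "settings": {
            "location": location,  # hypothetical NFS mount path
            # Assumed to apply per node, so the cluster-wide total is
            # roughly rate * number of nodes snapshotting concurrently.
            "max_snapshot_bytes_per_sec": f"{rate}mb",
        },
    }


print(repository_body("/mnt/es-backup", total_budget_mb_per_sec=400, node_count=12))
```

This only caps what ES itself writes; it does nothing about bursts from many indexes starting at once, so real traffic shaping on the network side is still needed.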

The last thing is the API itself.

It's a... very fragile thing.

Especially in older ES releases, the documentation is like handing you a flex (angle grinder) instead of toilet paper for a wipe.

Documentation != API != Reality.

Especially the fault handling left me more than once speechless...

Eg:

/_snapshot/storage/backup

gives you a state PARTIAL

/_snapshot/storage/backup/_status

gives you a state SUCCESS

Why? The first one is blocking and refers to the backup status itself. The second one shouldn't be blocking and refers to the backup operation.

And yes. The backup operation state is SUCCESS, while the backup state might be PARTIAL (hence no full backup was made, there were errors).
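In a wrapper, the safe reading is therefore to trust neither endpoint alone. A minimal sketch of that check, assuming both responses have already been fetched and parsed into dicts with a top-level `snapshots` list whose entries carry a `state` field (the function names are made up, and the exact response shape should be checked against your ES version):

```python
def _states(response):
    """Collect the 'state' of every snapshot entry in a parsed response."""
    return [snap.get("state") for snap in response.get("snapshots", [])]


def backup_is_complete(snapshot_info, snapshot_status):
    """Treat a backup as good only if BOTH endpoints report SUCCESS.

    snapshot_info:   parsed body of GET /_snapshot/<repo>/<snapshot>
    snapshot_status: parsed body of GET /_snapshot/<repo>/<snapshot>/_status
    """
    info_states = _states(snapshot_info)
    status_states = _states(snapshot_status)
    if not info_states or not status_states:
        return False  # nothing reported counts as "not verified"
    return all(state == "SUCCESS" for state in info_states + status_states)


# The situation described above: operation SUCCESS, backup PARTIAL.
info = {"snapshots": [{"snapshot": "backup", "state": "PARTIAL"}]}
status = {"snapshots": [{"snapshot": "backup", "state": "SUCCESS"}]}
print(backup_is_complete(info, status))  # False
```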

So we have now an additional API that we query that then wraps the API of elasticsearch. With all these shiny scary workarounds like polling, since some APIs are blocking which might lead to a gateway timeout...

Gateway timeout? Yes. Since some operations can run a LONG (multiple hours) time and you don't want to have a ton of open connections hogging resources... You let the loadbalancer kill it. Most operations simply run in ES in the background, while the connection was killed.
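The polling workaround can be sketched generically: fire the operation without waiting on the connection, then ask for its state in short requests until it settles or a deadline passes. This is only an illustration of the pattern, not the actual wrapper; `poll_until` and its parameters are hypothetical names, and `check` stands for whatever short status request you use:

```python
import time


def poll_until(check, timeout_s, interval_s=5.0,
               sleep=time.sleep, clock=time.monotonic):
    """Call check() repeatedly until it returns something other than None.

    Raises TimeoutError if the deadline passes first. Each check() call is
    meant to be a quick request, so no connection stays open for hours.
    """
    deadline = clock() + timeout_s
    while True:
        result = check()
        if result is not None:
            return result
        if clock() >= deadline:
            raise TimeoutError(f"no final state after {timeout_s} seconds")
        sleep(interval_s)


# Usage sketch with a fake status source standing in for the real request:
# the operation is "in progress" twice, then reports a final state.
fake_states = iter([None, None, "SUCCESS"])
final = poll_until(lambda: next(fake_states), timeout_s=60,
                   interval_s=0, sleep=lambda seconds: None)
print(final)  # SUCCESS
```

Passing `sleep` and `clock` in as parameters keeps the helper testable without actually waiting.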

So much joy and fun, isn't it?

Now add the latest SMR scandal and a few faulty (as in SMR instead of CMR) HDDs in a hundred-terabyte ZFS pool and you'll get my frustration level.

PS: The cluster has several dozen terabytes and a lot of nodes. If you have good advice, you're welcome - but please think carefully about this fact.

I might have accidentally vaporized people sending me links with solutions that don't work on large scale TM.

-

What do you guys think about deploying elastic search on App Engine Custom Runtime?

(Basically, an empty folder with an elastic search Dockerfile.)

I think it's a good idea: you can now deploy your code and storage application (Elastic search, Redis, etc) as services on your cluster.

You can use GCP magic to auto scale those services, and you get so much good stuff that comes with it.

And it's inside the same network as your services running in the same AppEngine project.

-

Does anyone know of a simple facial recognition program that I could train locally on a set of people’s faces?

I’m tasked with culling through a few hundred photos to find ones of certain people. I need the originals taken off the network drive and copied to a hard-disk.

-

External Storage recommendation questions.

Im in need of some sort of external storage, either a harddrive or a NAS server, but idk what to get.

Price should be reasonable for the security and storage space it gives, so heres what i figured so far for pros and cons:

NAS Server:

+ Bigger capacity

+ Raid option

+ Easily expandable

+ Always accessible via the network (local)

- Difficult to transport (not gonna do that, but still)

- Expensive

- Physically larger

- Consumes power 24/7 (i dont pay for power currently)

Harddrive:

+ Easy to pack away and transport

+ Cheaper

- If drive fails, youre fucked

- If you want larger capacity, you end up with two external backups

What do you guys do? Im not sure what i should do :i

Any advice is appreciated.

It will be used for external backup, as mentioned. For my server and my own pc.

-

RECOVER YOUR STOLEN USDT AND ETH WITH SPARTAN TECH GROUP RETRIEVAL FOR INSTANT RECOVERY

Exceptional Support and Guidance from Spartan Tech Group Retrieval: A Lifesaver in Cryptocurrency Trouble . I recently had a complex issue while transferring my cryptocurrency through the Ethereum network to my Trust Wallet, which defaulted to the BNB Smart Chain. Due to the new beta version of Trust Wallet, called the "Swift Wallet," there was no option for the Ethereum network, causing my crypto to become effectively frozen. I could only see the transaction on Ether scan, but without a way to access my funds.Unfortunately, I was informed that it might take months, or even years, for Trust Wallet to integrate Ethereum support into this beta version, leaving me in limbo. To make matters worse, the Swift Wallet does not use a traditional private key; instead, it relies on my device as the key. This means that if my device were lost or damaged, I would permanently lose access to my funds, as Trust Wallet does not provide a backup key. After struggling with this issue, I reached out to Spartan Tech Group Retrieval on WhatsApp:+1 (971) 4 8 7 - 3 5 3 8 . They were incredibly helpful and dedicated to explaining the intricacies of my situation. They kept their promise of no upfront fees and only asked me to write a review in return for their assistance. Their transparency and professionalism were evident throughout the process. Spartan Tech Group Retrieval provided not only insights into my immediate issue but also offered valuable advice on alternative exchanges, wallets, and cold storage options. They were attentive to my safety, ensuring that my sensitive information and passwords were never exposed. Their straightforward approach made the process feel trustworthy, which is crucial in the often-confusing world of cryptocurrency. I can recommend Spartan Tech Group Retrieval highly enough. Their commitment to helping me navigate this challenging situation without any financial burden was remarkable. 
I will definitely return to them for any future needs and will refer anyone facing similar issues to their services. Thank you, Spartan Tech Group Retrieval, for your exceptional support and guidance! other contact info like Telegram:+1 (581) 2 8 6 - 8 0 9 2

OR Email: spartantechretrieval (@) g r o u p m a i l .c o m to reach out to them -

The emergence of cryptocurrencies has completely changed the way we think about money in the constantly changing field of digital finance. Bitcoin, a decentralized currency that has captivated the interest of both investors and enthusiasts, is one of the most well-known of these digital assets. But the very characteristics of this virtual money, like its intricate blockchain technology and requirement for safe storage, have also brought with them a special set of difficulties. One such challenge is the issue of lost or stolen Bitcoins. As the value of this cryptocurrency has skyrocketed over the years, the stakes have become increasingly high, leaving many individuals and businesses vulnerable to the devastating consequences. Enter Salvage Asset Recovery, a team of highly skilled and dedicated professionals who have made it their mission to help individuals and businesses recover their lost or stolen Bitcoins. With their expertise in blockchain technology, cryptography, and digital forensics, they have developed a comprehensive and innovative approach to tackling this pressing issue. The story begins with a young entrepreneur, Sarah, who had been an early adopter of Bitcoin. She had invested a significant portion of her savings into the cryptocurrency, believing in its potential to transform the financial landscape. However, her excitement quickly turned to despair when she discovered that her digital wallet had been hacked, and her Bitcoins had been stolen. Devastated and unsure of where to turn, Sarah stumbled upon the Salvage Asset Recovery website while searching for a solution. Intrigued by their claims of successful Bitcoin recovery, she decided to reach out and seek their assistance. The Salvage Asset Recovery team, led by the enigmatic and brilliant individuals immediately sprang into action. They listened intently to Sarah's story, analyzing the details of the theft and the specific circumstances surrounding the loss of her Bitcoins. 
The Salvage Asset Recovery team started by carrying out a comprehensive investigation, exploring the blockchain in great detail and tracking the flow of the pilfered Bitcoins. They used sophisticated data analysis methods, drawing on their knowledge of digital forensics and cryptography to find patterns and hints that would point them in the direction of the criminal. As the investigation progressed, the Salvage Asset Recovery team discovered that the hacker had attempted to launder the stolen Bitcoins through a complex network of digital wallets and exchanges. Undeterred, they worked tirelessly, collaborating with law enforcement agencies and other industry experts to piece together the puzzle. Through their meticulous efforts, the team was able to identify the location of the stolen Bitcoins and devise a strategic plan to recover them. This involved a delicate dance of legal maneuvering, technological wizardry, and diplomatic negotiations with the various parties involved. Sarah marveled at how skillfully and precisely the Salvage Asset Recovery team carried out their plan. They outwitted the hacker and reclaimed the stolen Bitcoins by navigating the complex web of blockchain transactions and using their in-depth knowledge of the technology. As word of their success spread, the Salvage Asset Recovery team found themselves inundated with requests for assistance. They rose to the challenge, assembling a talented and dedicated team of blockchain experts, cryptographers, and digital forensics specialists to handle the growing demand. Send a DM to Salvage Asset Recovery via below contact details.

WhatsApp-----.+ 1 8 4 7 6 5 4 7 0 9 6

Telegram-----@SalvageAsset 1

-

HOW TO HIRE A GENUINE CRYPTO RECOVERY SERVICE HIRE ADWARE RECOVERY SPECIALIST

I had been meticulously building my cryptocurrency portfolio for years, carefully investing and diversifying my holdings. But in a single, devastating moment, it all vanished. My heart sank as I realized that my Bitcoin, worth a staggering $80,000, had been stolen from my digital wallet. I was devastated, my trust in the digital world shattered. I had heard the horror stories of crypto theft, but I never imagined it would happen to me. I felt helpless, unsure of where to turn or what to do next. The thought of losing my hard-earned savings was overwhelming, and I knew I had to take action. The ADWARE RECOVERY SPECIALIST Team, a team of highly qualified cybersecurity specialists that focused on locating and retrieving stolen digital assets, was what I discovered at that point. Initially dubious, I made the decision to get in touch after reading their stellar record and client endorsements. Email info: Adwarerecoveryspecialist@ auctioneer. net The ADWARE RECOVERY SPECIALIST Team got to work as soon as I called them. They asked me specific questions concerning the theft and the actions I had already taken as they listened carefully to my account. I could tell they were sympathetic and understanding right away, and I knew I was in capable hands. The team quickly got to work, utilizing their extensive network of contacts and cutting-edge investigative techniques to trace the movement of my stolen Bitcoin. They scoured the dark web, analyzed blockchain transactions, and employed advanced forensic tools to uncover the trail of the thieves. I found myself on an emotional rollercoaster. There were moments of hope, followed by periods of frustration and doubt. But the ADWARE RECOVERY SPECIALIST Team never wavered in their commitment to finding my stolen assets. And then, I received a call that would change everything. The team had managed to locate the digital wallet where my Bitcoin was being held. 
They had painstakingly pieced together the puzzle, following a complex web of transactions and shell companies, to finally pinpoint the culprits. The next step was to negotiate with the thieves, a delicate and high-stakes process that required the utmost skill and finesse. The ADWARE RECOVERY SPECIALIST Team approached the situation with the utmost professionalism, engaging in a series of tense discussions and carefully crafted strategies. With a deep breath, I agreed to the terms, and within a matter of days, my Bitcoin was back in my possession. The relief I felt was indescribable. It was as if a weight had been lifted from my shoulders, and I could finally breathe easy again. WhatsApp info:+12723 328 343 But the journey didn't end there. The ADWARE RECOVERY SPECIALIST Team went above and beyond, providing me with comprehensive support and guidance to ensure the security of my digital assets moving forward. They helped me implement robust security measures, including multi-factor authentication, cold storage solutions, and regular monitoring of my accounts. Today, I am more vigilant than ever when it comes to the protection of my digital wealth. I have become an advocate for cryptocurrency security, sharing my story and the invaluable lessons I've learned with others in the community. And whenever I see the ADWARE RECOVERY SPECIALIST Team's name, I am reminded of the power of perseverance, the importance of trust, and the transformative impact that skilled professionals can have on our lives. 34
