
Search - "slow query"

-

After listening to two of our senior devs play ping pong with a new member of our team for TWO DAYS!

DevA: "Try this.."

Junior: "Didn't work"

DevB: "Try that .."

Junior: "Still not working"

I ask..

Me:"What is the problem?"

Few ums...uhs..awkward seconds of silence

Junior: "App is really slow. Takes several seconds to launch and searching either crashes or takes a really long time."

DevA: "We've isolated the issue with Entity Framework. That application was written back when we used VS2010. Since that application isn't used very often, no one has had to update it since."

DevB: "Weird part is the app takes up over 3 gigs of RAM. It's obviously a caching issue. We might have to open up a ticket with Microsoft."

Me: "Or remove EF and use ADO."

DevB: "That would be way too much work. The app is supposed to be fully deprecated and replaced this year."

Me: "Three of you for the past two days seems like a lot of work. If EF is the problem, you remove EF."

DevA: "The solution is way too complicated for that. There are 5 projects and 3 of those have circular dependencies. It's a mess."

DevB: "No fracking kidding...if it were written correctly the first time. There aren't even any fracking tests."

Me: "Pretty sure there are only two tables involved, maybe 3 stored procedures. A simple CRUD app like this should be fairly straightforward."

DevB: "Can't re-write the application, company won't allow it. A redesign of this magnitude could take months. If we can't fix the LINQ query, we're going to have the DBAs change the structures to make the application faster. I don't see any other way."

Holy frack...he didn't just say that.

Over my lunch hour, I stripped the WPF application down to the basics (too much to write about, but the included projects only had one or two files), and created an integration test for refactoring the data access to use ADO. With the tests in place and EF removed, the app starts up instantly and searches are also instant. Didn't click through all the UI, but the basics worked.

Sat with Junior, pointed out my changes (the 'why' behind the 'what') ...and how he could write unit tests around the ViewModel behavior in the UI (and make any changes to the data access as needed).

Today's standup:

Junior: "Employee app is fixed. Had some help removing Entity Framework and now it starts up fast and searches are instant. Going to write unit tests today to verify the UI behavior. I'll be able to deploy the application tomorrow."

DevA: "What?! No way! You did all that yesterday?"

Me: "I removed the Entity Framework over my lunch hour. Like I said, it's basic CRUD and mostly in stored procedures. All the data points are covered by integration tests, but didn't have time for the unit tests. It's likely I broke some UI behavior, but the unit tests should catch those."

DevB: "I was going to do that today. I knew taking out Entity Framework wouldn't be a big deal."

Holy fracking frack. You fracking lying SOB. Deeeep breath...ahhh...thanks devRant. Flame thrower event diverted.

-

Hey, Root? How do you test your slow query ticket, again? I didn't bother reading the giant green "Testing notes:" box on the ticket. Yeah, could you explain it while I don't bother to listen and talk over you? Thanks.

And later:

Hey Root. I'm the DBA. Could you explain exactly what you're doing in this ticket, because I can't understand it. What are these new columns? Where is the new query? What are you doing? And why? Oh, the ticket? Yeah, I didn't bother to read it. There was too much text filled with things like implementation details, query optimization findings, overall benchmarking results, the purpose of the new columns, and I just couldn't care enough to read any of that. Yeah, I also don't know how to find the query it's running now. Yep, have complete access to the console and DB and query log. Still can't figure it out.

And later:

Hey Root. We pulled your urgent fix ticket from the release. You know, the one that SysOps and Data and even execs have been demanding? The one you finished three months ago? Yep, the problem is still taking down production every week or so, but we just can't verify that your fix is good enough. Even though the changes are pretty minimal, you've said it's 8x faster, and provided benchmark findings, we just ... don't know how to get the query it's running out of the code, or how to check the query logs to find it. So, we just don't know if it's good enough.

Also, we goofed up when deploying and the testing database is gone, so now we can't test it since there are no records. Never mind that you provided snippets to remedy exactly this scenario in the ticket description you wrote three months ago.

And later:

Hey Root: Why did you take so long on this ticket? It has sat for so long now that someone else filed a ticket for it, with investigation findings. You know it's bringing down production, and it's kind of urgent. Maybe you should have prioritized it more, or written up better notes. You really need to communicate better. This is why we can't trust you to get things out.

*twitchy smile*

rant useless people you suck because we are incompetent what's a query log? it's all your fault this is super urgent let's defer it ticket notes too long; didn't read

-

I’m surrounded by idiots.

I’m continually reminded of that fact, but today I found something that really drives that point home.

Gather ‘round, everybody, it’s story time!

While working on a slow query ticket, I perused the code, finding several causes, and decided to run git blame on the files to see what dummy authored the mental diarrhea currently befouling my screen. As it turns out, the entire feature was written by mister legendary Apple golden boy “Finder’s Keeper” dev himself.

To give you the full scope of this mess, let me start at the frontend and work my way backward.

He wrote a javascript method that tracks whatever row was/is under the mouse in a table and dynamically removes/adds a “.row_selected” class on it. At least the js uses events (jQuery…) instead of a `setTimeout()` so it could be worse. But still, has he never heard of :hover? The function literally does nothing else, and the `selectedRow` var he stores the element reference in isn’t used elsewhere.

This function allows the user to better see the rows in the API Calls table, for which there is also a search feature: the very thing I’m tasked with fixing.

It’s worth noting that above the search feature are two inputs for a date range, with some helpful links like “last week” and “last month” … and “All”. It’s also worth noting that this table is for displaying search results of all the API requests and their responses for a given merchant… this table is enormous.

The search field for this table queries the backend on every character the user types. There’s no debouncing, no submit event, etc., so it triggers on every keystroke. The actual request runs through a layer of abstraction to parse out and log the user-entered date range, figure out where the request came from, and to map out some column names or add additional ones. It also does some hard to follow (and amazingly not injectable) ORM condition building. It’s a mess of functional ugly.
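For readers unfamiliar with the term, debouncing means a query is only sent once the user has paused typing. The sketch below is a minimal illustration in Python rather than the page's actual JavaScript, with the timing simulated so it runs deterministically. The function name, the 0.3 second wait and the sample keystrokes are all assumptions made up for the example.

```python
from typing import List, Tuple


def debounced_queries(keystrokes: List[Tuple[float, str]], wait: float) -> List[str]:
    """Return the search strings that would actually be sent to the backend.

    keystrokes: (timestamp_seconds, text_in_box_after_keystroke), sorted by time.
    A query fires only when no further keystroke arrives within `wait` seconds.
    """
    fired = []
    for i, (t, text) in enumerate(keystrokes):
        is_last = i == len(keystrokes) - 1
        if is_last or keystrokes[i + 1][0] - t >= wait:
            fired.append(text)
    return fired


# Hypothetical typing session: five keystrokes with one pause after "ord".
events = [(0.00, "o"), (0.10, "or"), (0.20, "ord"), (0.90, "orde"), (1.00, "order")]
sent = debounced_queries(events, wait=0.3)
print(sent)  # two queries instead of five
```

Without debouncing, the same session would hit the backend once per keystroke.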

The important columns in the table this query ultimately searches are not indexed, despite it only looking for “create_order” records — the largest of twenty-some types in the table. It also uses partial text matching (again: on. every. single. keystroke.) across two varchar(255)s that only ever hold <16 chars — and of which users only ever care about one at a time. After all of this, it filters the results based on some uncommented regexes, and worst of all: instead of fetching only one page’s worth of results like you’d expect, it fetches all of them at once and then discards what isn’t included by the paginator. So not only is this a guaranteed full table scan with partial text matching for every query (over millions to hundreds of millions of records), it’s that same full table scan for every single keystroke while the user types, and all but 25 records (user-selectable) get discarded — and then requeried when the user looks at the next page of results.
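As a rough illustration of the pagination point, here is a sketch that asks the database for one page at a time instead of fetching everything and discarding all but 25 rows. It uses Python's built-in sqlite3 as a stand-in for MySQL, and the table name, column names and sample data are hypothetical, not taken from the real codebase.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE api_calls (id INTEGER PRIMARY KEY, call_type TEXT, order_ref TEXT)"
)
# Hypothetical data: half the rows are "create_order" records.
conn.executemany(
    "INSERT INTO api_calls (call_type, order_ref) VALUES (?, ?)",
    [("create_order" if i % 2 == 0 else "refund", f"ORD{i:05d}") for i in range(1000)],
)


def search_page(prefix, page, page_size=25):
    """Fetch a single page of matches; the database does the slicing."""
    rows = conn.execute(
        "SELECT order_ref FROM api_calls "
        "WHERE call_type = 'create_order' AND order_ref LIKE ? "
        "ORDER BY id LIMIT ? OFFSET ?",
        (prefix + "%", page_size, page * page_size),
    )
    return [ref for (ref,) in rows]


first_page = search_page("ORD001", page=0)
second_page = search_page("ORD001", page=1)
print(len(first_page), first_page[0], second_page[0])
```

Only `page_size` rows cross the wire per request. On a table this size the columns in the WHERE clause would still need an index for the query itself to be fast.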

What the bloody fucking hell? I’d swear this idiot is an intern, but his code does (amazingly) actually work.

No wonder this search field nearly crashed one of the servers when someone actually tried using it.

Asdfajsdfk.

rant fucking moron even when taking down the server hey bob pass me all the paperclips mysql murder terrible code slow query idiot can do no wrong but he’s the golden boy idiots repeatedly murdered mysql in the face

-

Time for an actual rant:

During an internship I heard from my PM that my assignment for the week after was going to be working on a specific sql query to add some features and fix some bugs.

When talking with colleagues about that assignment later, they laughed and referred to the query as the "query of doom" (QoD). Naive as I was back then, I thought that one of my colleagues had the QoD displayed on his screen because the query he was working on looked rather large (about 20 lines). They all laughed and told me I was in for a treat.

Starting my assignment the week after I was horrified to find out the QoD was huge, and by huge I mean, printing that specific query resulted in 8 A4 pages font size 10, front and back.

There were over 100 UNION statements, no proper aliases, no documentation, not a single foreign key in the entire database, and naming that made no sense. And everything was written manually by 10 different developers over the past years, who all fell off the face of the earth.

And this was only the query of doom. The entire product was a complete clusterfuck of forms with queries directly behind action buttons, because we weren't allowed to make classes (yes, you read that correctly. We couldn't make classes, unless we had a very compelling reason). Everything was created by over 30 different devs who only managed to stay just long enough to get some work done.

And all of this was the result of a PM who didn't believe in frameworks, ORMs, OOP, classes, ... because that made the software slow. To this day he still manages that product, but I'm glad that I quickly decided to move on.

-

At the moment I'm trying to optimize a slow old MySQL query from a project I made several years ago. The execution time is in excess of 55 seconds. Not good :(

After about an hour of experimenting I finally set a good index and reduced the time to < 3 seconds.

Chuffed :)

-

Customer : c

Me : m

*Few weeks ago*

C: the server is slow, it sometimes takes 7 seconds before I see our data

(the project is 7+ years old and wasn't written by someone who is very good at SQL)

M: yeah I see that, our servers are busy with this one "process" (SQL query)

C: make it faster

M: well that's possible but it will take a few days (massive SQL spaghetti that I first have to untangle)

C: 😡 nvm then

*Yesterday*

C: server is down !

M: 🤔 *loads data from server and waits ~ 7 seconds*

M: Well what's the problem?

C: I need the data but it's so slow

WELL YOU MINDLESS IMBECILE... If something is slow it doesn't mean our god damn production server is down !

That just means that you have to give us a day or two so we can optimise the (ALSO BY YOUR REQUEST) rushed project... And save you YOUR money that YOU waste on the processing time on our server...

-

I just can't understand what would lead a so-called software company, one that provides for my local government by the way, to use a cloud server (AWS EC2 instance) like it were a bare metal machine.

They have had it working, non-stop, for over 4 years or so. Just one instance. Running MySQL, PostgreSQL, Apache, PHP and a f* Tomcat server with no less than 10 HUGE apps deployed. I just can't believe this instance is still up.

By the way, they don't do backups, most of the data is on the ephemeral storage, they use just one private key for every dev, no CI, no testing. Deployments are nightmares using scp to upload the .war...

But still, they are running several apps for things like registering citizen complaints that come in via hotlines. The system is incredibly slow as they use just Hibernate without query optimizations to look up and search things (n+1 query problems).
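For anyone who hasn't met the n+1 query problem, the sketch below shows its shape: one query for the list, then one more per row, compared with a single JOIN. It uses Python's built-in sqlite3 instead of Hibernate, and the `citizens` and `complaints` tables, their columns and the row counts are assumptions invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE citizens (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE complaints (id INTEGER PRIMARY KEY, citizen_id INTEGER, body TEXT);
    """
)
conn.executemany(
    "INSERT INTO citizens (id, name) VALUES (?, ?)",
    [(i, f"citizen-{i}") for i in range(1, 51)],
)
conn.executemany(
    "INSERT INTO complaints (citizen_id, body) VALUES (?, ?)",
    [(i, f"complaint from {i}") for i in range(1, 51)],
)

query_count = 0


def run(sql, params=()):
    """Execute a query and count it, so the two approaches can be compared."""
    global query_count
    query_count += 1
    return conn.execute(sql, params).fetchall()


def n_plus_one():
    # 1 query for the list, then 1 extra query per complaint (what a lazy ORM tends to do).
    result = []
    for _id, citizen_id, body in run("SELECT id, citizen_id, body FROM complaints ORDER BY id"):
        (name,) = run("SELECT name FROM citizens WHERE id = ?", (citizen_id,))[0]
        result.append((body, name))
    return result


def single_join():
    # The same data in one round trip.
    return run(
        "SELECT c.body, z.name FROM complaints c "
        "JOIN citizens z ON z.id = c.citizen_id ORDER BY c.id"
    )


query_count = 0
slow = n_plus_one()
n_plus_one_queries = query_count

query_count = 0
fast = single_join()
join_queries = query_count

print(n_plus_one_queries, join_queries)
```

Both functions return identical rows; only the number of round trips differs, and that number grows with the size of the list.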

They didn't even bother to get a proper domain. They use an IP address and expose the port for Tomcat directly. No reverse proxy here! (No SSL either.)

I've been out of this company for two years now, it was my first job as a developer, but they needed help with an app that I worked on during my time there. I was really surprised to see that everything was still the same. Even the old private key that they emailed me (?!?!?!?!) back then still worked. All the passwords were still the same too.

I have some good rants from the time I was there, and about the general level of the developers in my region. But I'll leave them for later!

Is it just me or is this whole shit crazy af?

-

> TeamLeader1: I just discovered SQL is actually super fast! The low responsiveness I've experienced comes from our ORM!

> IHateForALiving: well of course SQL is blazingly fast. SQL has been refined by the best engineers in the world for the past 50 years, its performance is unparalleled for everything you could possibly need, unless you want to scale REALLY big. Sequelize, instead, is an Active Record ORM, so it's bound to struggle with huge amounts of data, because every single row gets a significant amount of black magic attached to it to make sure everything syncs correctly. Why is that?

> TeamLeader1: I have a problem with this frontend component, it doesn't allow pagination. I tried downloading the whole DB to bypass that, but the ORM is slow... so I will bypass the ORM and download the whole table with a raw query. Look at that! It works like a charm, it's super duper fast!

This mf is downloading some 35 thousand rows every time some user loads a page because he doesn't know how to paginate the fucking table with Angular, there's no way these people are real.

-

While writing up this quarter's performance review, I re-read last quarter's goals, and found one my boss edited and added a minimum to: "Release more features that customers want and enjoy using, prioritized by product; minimum 4 product feature/bug tickets this quarter."

... they then proceeded to give me, not four+ product tickets, but: three security tickets (two of which are big projects), a frontend ticket that should have been assigned to the designer, and a slow query performance ticket -- on top of my existing security tickets from Q3.

How the fuck was I supposed to meet this requirement if I wasn't given any product tickets? What, finish the monster tickets in a week instead of a month or more each and beg for new product tickets from the product manager who refuses to even talk to me?

Fuck these people, seriously.

-

Switched to DuckDuckGo, because Google thought it would be nice to ban the proxy IP of our company (because you know, many requests) from searching and put us behind these captcha monstrosities. I don't want to captcha myself out of every query I have for goddamn 2 minutes with slow ass fading images.

Turns out, I like their service even more.

-

Not about favorite language but about why PHP is not my favorite language.

I recently launched a web shop built on Prestashop. I found that some product pages are so god damn slow, like taking 50 fuckin' seconds to load. So I started investigating and analyzing the problem. Turns out that for some products we have so many different combinations that it results in a cartesian product totalling about 75K of unique combinations.

Prestashop did a real bad job coding the product controller because for every combination they fetch additional data. So that results in 75K queries being executed for just 1 product detail page. Crazy, even more when you know that the query that loads all these combinations, before iterating through them, takes 7 fuckin' seconds to execute on my dev machine which is a very very fast high end machine.

That said I analyzed the query and now I broke the query down into 3 smaller queries that execute in a much faster 400 ms (in total!) fetching the exact same data.

So what does this have to do with PHP? As PHP is also OO why the fuck would you always put stuff in these god damn associative arrays, that in turn contain associative arrays that contain more arrays containing even more arrays of arrays.

Yes I could do the same in C# and other languages as well but I have never ever encountered that in other languages but always seem to find this in PHP. That's why I hate PHP. Not because of the language but all those fucking retarded assholes putting everything in arrays. Nothing OO about that.2 -

I had spent the last year working on an online store powered by WooCommerce with over 100k products from various suppliers. This online store utilized a custom API that would take the various formats that suppliers offer their inventory in and make them consistent. Now everything was going swimmingly initially, but then I began adding more and more products using a plug-in called WP All Import. I reached around 100k products and the site would take up to an entire minute to load, sometimes timing out. I got desperate so I installed several caching plugins, but to no avail. The site was originally only supposed to take three to four months but ended up taking an entire year.

Then, just yesterday I found out what went wrong and why this WooCommerce website with all of these optimizations was still taking anywhere from 60 to 90 seconds to load, or just timing out entirely. I had initially thought that I needed a beefier server so I moved it to a high-CPU DigitalOcean VM. While this did help a little bit, the site was still very slow and now I had very high CPU usage, RAM usage and disk IO. I was seriously stumped: the Apache process was using a high amount of CPU and IO, along with MySQL as well.

It wasn't until I started digging deeper into the database that I actually found out what the issue was. As I was loading the site I would run 'SHOW PROCESSLIST' in the SQL terminal. I began to notice a very significant load time for one of the tables, so I went to check it out. What I did was run a select-all query on that particular table just to see how full it was, and SQL returned an error saying that I had exceeded the maximum packet size. So I was like okay what the fuck...

So I exited MySQL and re-entered it, this time with a higher packet size. I ran a query that would count how many rows were in this particular table and the number came out in the millions. I was surprised, and what's worse is that this table belonged to a plugin that I had attempted to use early in the development process to cache the site. The plugin was deactivated but apparently it had left PHP files within the wp-content directory outside of the actual plugin directory, so it was still executing scripts even though the plugin itself was disabled. Basically every time I would change anything on the site, it would recache the whole thing, and it didn't delete any old records. So 100k+ products caching on saves with no garbage collection... You do the math, it's gonna be a heavy ass database. Not only that but it was serialized data, so when it did pull this metric shit ton of spaghetti from the database, PHP then had to deserialize it. Hence the high ass CPU load. I had caching enabled on the MySQL end of things so that ate the RAM. I was really desperate to get this thing running.

Honest to God, the main reason why this website took so long was because the load times made it miserable to work on. I just thought that the hardware that I had the site on was inadequate. I had initially started the development on a small Linux VM which apparently wasn't enough, which is why I moved it to DigitalOcean, which also seemed to not be enough, so from there I moved to a dedicated server which still didn't seem to be enough. I was probably a few more 60-second wait times or timeouts from recommending a server cluster to my client, who I know would not be willing to purchase it. The client who I promised to have this site completed for in 3 months and who has waited a year. Seriously, I would tell people the struggles that I would go through with this particular site and they would just tell me to drop the site; just take the money, just take the loss. I refused to, this was really the only thing that was kicking my ass. I present myself as this high-and-mighty developer like I'm just really good at what I do, but then I have this WordPress site that's just beating the shit out of me for a year. It was a very big learning experience and it was also very humbling, it made me realize that I really don't know as much as I think I might. It was evidence that there is still so much more to learn out there. I did learn a lot from that experience, especially about optimizing websites and the different methods for doing that, particularly on the server side, and I'll be able to utilize this knowledge in the future.

I guess the moral of the story is, never really give up. Ultimately things might get so bad that you're running on hopes and dreams. Those experiences are generally the most humbling. Now I can finally present the site that I am basically a year late on to the client who will be so happy that I did not give up on the project entirely. I'll have experienced this feeling of pure euphoria, and help the small business significantly grow their revenue. Helping others is very fulfilling for me, even at my own expense.

Anyways, gonna stop ranting. Running out of characters. If you're still here... Ty for reading :')

-

I don't know if I'm being pranked or not, but I work with my boss and he has the strangest way of doing things.

- Only use PHP

- Keep error_reporting off (for development). Site cannot function if it is on.

- 20,000 lines of functions in a single file, 50% of which was unused, mostly repeated code that could have been reduced massively.

- Zero Code Comments

- Inconsistent variable names, function names, file names -- I was literally project searching for months to find things.

- There is nothing close to a normalized SQL Database, column ID names can't even stay consistent.

- Every query is done with a mysqli wrapper to use legacy mysql functions.

- Most used function is to escape strings

- Type-hinting is too strict for the code.

- Most files packed with Inline CSS, JavaScript and PHP - we don't want to use an external file otherwise we'd have to open two of them.

- Do not use a package manager (Composer) because he doesn't have it installed.. Though I told him it's easy on any platform and I'll explain it.

- He downloads a few composer packages he likes and drag/drop them into random folder.

- Uses $_GET to set values and pass them around like a message container.

- One file is 6000 lines which is a giant if statement with somewhere close to 7 levels deep of nesting.

- Never removes his old code that bloats things.

- Has functions from a decade ago he would like to save to use some day. Just regular, plain old, PHP functions.

- Always wants to build things from scratch, and re-using a lot of his code that is honestly a weird way of doing almost everything.

- Using CodeIntel, Mess Detectors, Error Detectors is not good or useful.

- Would not deploy to production through any tool I setup, though I was told to. Instead he wrote bash scripts that still make me nervous.

- Often tells me to make something modern/great (reinventing a wheel) and then ends up saying, "I think I'd do it this way..." and refers to his code from 5 years ago.

- Using isset() breaks things.

- Tens of thousands of undefined variables exist because arrays are created like $this[][][] = 5;

- Understanding the naming of functions required me to write several documents.

- I had to use #region tags to find places in the code quicker since a router was about 2000 lines of if else statements.

- I used Todo Bookmark extensions in VSCode to mark and flag everything that's a bug.

- Gets upset if I add anything to .gitignore; I tried to tell him it ignores files we don't want, he thought it deleted them for a while.

- He would rather explain every line of code in a mammoth project that follows no human known patterns, includes files that overwrite global scope variables, and has me do the documentation.

- Open to ideas but when I bring them up such as - This is what most standards suggest, here's a literal example of exactly what you want but easier - He will passively decide against it and end up working on tedious things not very necessary for project release dates.

- On another project I try to write code but he wants to go over every single nook and cranny and stay on the phone the entire day as I watch his screen and I'm trying to code.

I would like us all to do well but I do not consider him a programmer but a script-whippersnapper. I find myself trying to debate the most basic of things (you shouldn't 777 every file), and I need all kinds of evidence before he will do something about it. We need "security" and all kinds of buzz words but I'm scared to death of this code. After several months it's a nice place to work but I am convinced I'm being pranked or my boss has very little idea what he's doing. I've worked in a lot of disasters but nothing like this.

We are building an API, I could use something open source to help with anything from validations, routing, ACL but he ends up reinventing the wheel. I have never worked so slow, hindered and baffled at how I am supposed to build anything - nothing is stable, tested, and rarely logical. I suggested many things but he would rather have small talk and reason his way into using things he made.

I could have this project 50% done in a Node API in two weeks, pretty fast in a PHP or Python one, but we, for reasons I have no idea about, would rather go slow and literally "build a framework". Two knuckleheads are going to build a PHP REST framework and compete with tested, tried and true open source tools used by tens of millions?

I just wanted to rant because this drives me crazy. I have so much stress my neck and shoulder seems like a nerve is pinched. I don't understand what any of this means. I've never met someone who was wrong about so many things but believed they were right. I just don't know what to say so often on call I just say, 'uhh..'. It's like nothing anyone or any authority says matters, I don't know why he asks anything he's going to do things one way, a hard way, only that he can decipher. He's an owner, he's not worried about job security.

-

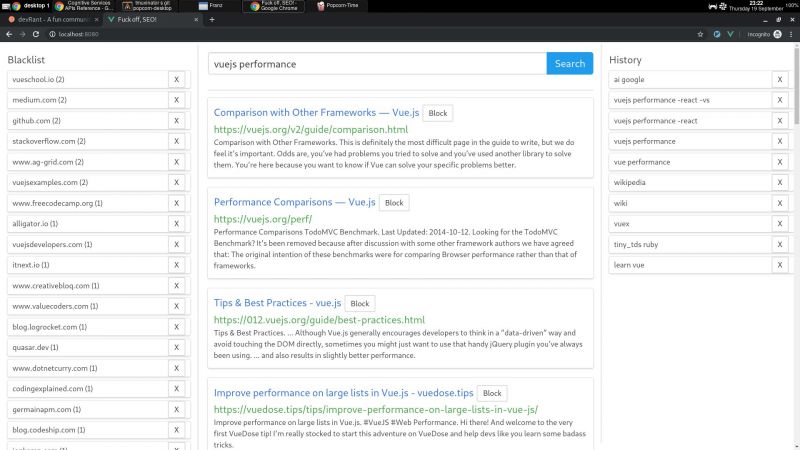

I wrote a node + vue web app that consumes bing api and lets you block specific hosts with a click, and I have some thoughts I need to post somewhere.

My main motivation for this it is that the search results I've been getting with the big search engines are lacking a lot of quality. The SEO situation right now is very complex but the bottom line is that there is a lot of white hat SEO abuse.

Commercial companies are fucking up the internet very hard. Search results have become way too profit oriented and thus no longer neutral. Personal blogs are becoming very rare. Information is losing quality and sites are losing identity. The internet is consolidating.

So, I decided to write something to help me give this situation the middle finger.

I wrote this because I consider the ability to block specific sites a basic universal right. If you were ripped off by a website or you just don't like it, then you should be able to block said site from your search results. It's not rocket science.

Google used to have this feature integrated but they removed it in 2013. They also had an extension that did this client side, but they removed it in 2018 too. We're years past the time where Google forgot their "Don't be evil" motto.

AFAIK, the only search engine on earth that lets you block sites is millionshort.com, but if you block too many sites, the performance degrades. And the company that runs it is a for profit too.

There is a third party extension that blocks sites called uBlacklist. The problem is that it only works on google. I wrote my app so as to escape google's tracking clutches, ads and their annoying products showing up in between my results.

But aside from that, uBlacklist does the same thing as my app, including the limitation that this isn't an actual search engine, it's just filtering search results after they are generated.

This is far from ideal, because filtering sites out before the results are generated would be much preferable.

But developing a search engine is prohibitively expensive to both index and rank pages for a single person. Which is sad, but can't do much about it.

I'm also thinking of implementing the ability to promote certain sites, the opposite of blocking, so these promoted sites would get more priority within the results.

I guess I would have to move the promoted sites between all pages I fetched to the first page/s, but client side.

But this is suboptimal compared to having actual access to the rank algorithm, where you could promote sites in a smarter way, but again, I can't build a search engine by myself.
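The client-side block and promote idea can be sketched in a few lines. This is not the app's code (which is Node and Vue and isn't shown in the post), just a minimal Python illustration under assumed names: the `rerank` function, the result dictionaries and the example hosts are all hypothetical.

```python
from urllib.parse import urlparse


def host(url):
    """Hostname of a result URL, e.g. 'blog.example'."""
    return urlparse(url).hostname


def rerank(results, blocked, promoted):
    """Drop blocked hosts, then move promoted hosts to the front.

    sorted() is stable, so the search engine's original order is kept
    within the promoted group and within the rest.
    """
    kept = [r for r in results if host(r["url"]) not in blocked]
    return sorted(kept, key=lambda r: host(r["url"]) not in promoted)


# Hypothetical results, already fetched from the search API across pages.
results = [
    {"title": "Spammy listicle", "url": "https://seo-farm.example/top-10"},
    {"title": "Vendor landing page", "url": "https://bigcorp.example/product"},
    {"title": "Personal blog post", "url": "https://blog.example/notes"},
    {"title": "Vendor docs", "url": "https://bigcorp.example/docs"},
]

ranked = rerank(results, blocked={"seo-farm.example"}, promoted={"blog.example"})
titles = [r["title"] for r in ranked]
print(titles)
```

As the post says, this only reshuffles what the engine already returned; it cannot surface pages the engine never ranked.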

I'm using mongo to cache the results, so with a click of a button I can retrieve the results of a previous query without hitting bing. So far a couple of queries don't seem to bring much performance or space issues.

On using Bing: Bing is basically the only reliable API option I could find that was hobby cost worthy. Most Microsoft products are usually my last choice.

Bing is giving me a 7 day free trial of their search API until I register a CC. They offer a free tier, but I'm not sure if that's only for these 7 days. Otherwise, I'm gonna need to pay like 5$.

Paying or not, having to use a CC to use this software I wrote sucks balls.

So far the usage of this app has resulted in me becoming more critical of sites and finding sites of better quality. I think overall it helps me to become a better programmer, all the while having better protection of my privacy.

One downside is that I'm the only one curating for myself, whereas I could benefit from the block/promote lists of other people I trust.

I will git push it somewhere at some point, but it does require some more work:

I would want to add a docker-compose script to make it easy to start, and I didn't write any tests unfortunately (I did use eslint for both apps, though).

The performance is not excellent (the app has not experienced blocks so far, but it does make the coolers spin after a bit) because the algorithms I wrote were very POC.

But it took me some time to write it, and I need to catch some breath.

There are other more open efforts that seem to be more ethical, but they are usually hard to use or just incomplete.

commoncrawl.org is a free index of the web. One problem I found is that it doesn't seem to index everything (for example, it doesn't seem to index the blog of a friend I know that has been writing for years and is indexed by Google).

It also requires knowledge of reading WARC files, which will surely require some time investment to learn.

It also seems kinda slow for responses.

It is also generated only once a month, and I would still have little idea on how to implement a PageRank algorithm, let alone code it.

-

Saw a question on SO asking why foreach was slow with big data.

The code provided was 6 nested foreachs (basically a cartesian product between an array of arrays, and 4 other arrays).

Inside, a select query and an "update or create" operation.

"But why is foreach so slow?"

-

I find it weird that some developers never ask why when facing a problem. "What do you mean, never ask why?" Here's a story.

Let's say a developer works on a simple app, with Laravel as the backend and PostgreSQL as the database. He faces a problem: the app is very slow when searching data.

To solve that, he implements a cache using Redis, but finds that the search is only fast occasionally. To solve that, he implements Elasticsearch because he thinks Elasticsearch is very good for search, but he finds another problem: sometimes the data in PostgreSQL is out of sync with the data in Elasticsearch. To solve that, he implements cron jobs to fix the out-of-sync data, but he finds yet another problem: cron jobs cannot fix out-of-sync data in real time. And so on...

Do you see the problem? He never asks why the app is slow. Which part searches the data, the backend or the database? (Searching in the backend is usually slower than in the database, because the backend has to fetch all the data from the database first.) Has the query been optimized (limit/offset, indexing)? How about the internet connection? Etc.
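To make the "backend or database" question concrete, here is a small sketch that runs the same search both ways. It uses an in-memory SQLite database as a stand-in for PostgreSQL, and the `products` table, its columns and both function names are made up for illustration.

```python
import sqlite3

# In-memory SQLite stands in for the real database (an assumption for this demo).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO products (name) VALUES (?)",
    [(f"item {i}",) for i in range(10_000)],
)


def search_in_backend(term, limit=20):
    """Pull every row into the application, then filter and cut in Python."""
    rows = conn.execute("SELECT id, name FROM products ORDER BY id").fetchall()
    matches = [row for row in rows if term in row[1]]
    return matches[:limit], len(rows)  # (results, rows transferred)


def search_in_database(term, limit=20):
    """Let the database filter and limit, so only the needed rows come back."""
    rows = conn.execute(
        "SELECT id, name FROM products WHERE name LIKE ? ORDER BY id LIMIT ?",
        (f"%{term}%", limit),
    ).fetchall()
    return rows, len(rows)  # (results, rows transferred)


backend_rows, backend_transferred = search_in_backend("item 99")
db_rows, db_transferred = search_in_database("item 99")
```

Both calls return the same 20 results, but the first one moves all 10,000 rows out of the database to get them. Measuring that difference is the kind of "why" that comes before adding Redis or Elasticsearch.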

For me it's important to ask why when facing a problem and to try to solve it as simply as possible.

-

>Be client

>Have an issue with incredibly slow webpage load time

>Blame memcache issues

So... I look into the problem. Yes, the page either loads up fast, or times out. So, into the logs I go. Webserver is fine (except the timeout), PHP though... Error log is fine (just notices), but the slow log shows the issue is the database (of course... it's always the database... ugh)

So, checking the database, there is one ugly query that seems to be an issue. 5 joins and a huge where condition.

So I run EXPLAIN on the query and... Proceed to bang my head against the wall.

OF COURSE IT'S SLOW YOU FU******, NONE OF YOUR TABLES HAVE ANY INDEXES.

What do they expect when the database always has to scan the whole table and do everything in memory, until it runs out and has to dump it all to disk and work with it there?
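A quick way to see what a missing index does to a query plan, sketched with SQLite's `EXPLAIN QUERY PLAN` instead of the client's actual database (which the rant doesn't name beyond "EXPLAIN"). The `orders` table, its columns and the index name are invented for the demo.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(5_000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"


def query_plan(sql, params):
    """Return the human-readable plan; the detail text is the last column of each row."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


# Without an index the only option is to walk the whole table.
plan_before = query_plan(QUERY, (42,))

# Add an index on the column used in the WHERE clause and ask again.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(QUERY, (42,))

print(plan_before)
print(plan_after)
```

The first plan reports a scan of the table, the second a search using `idx_orders_customer`. The exact wording of the plan text varies between SQLite versions.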

Ugh... Some clients...

-

In the first month of the project we suggested testing that Entity Framework generates reasonable DB queries, because the system will need to handle a lot of records. “Not a priority in this sprint because we need features.” The devs try to get it into every sprint. In the last week of the project they want us to dump in a ton of records so they can test it. The N+1 SELECT query issue is on the main queries. It is so bad and slow with more records that a single simple query causes the container management to auto-scale the application. They can have max 8 users in the system at a time, and it takes 10 seconds to do a simple page refresh.
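For anyone who hasn't met the N+1 SELECT problem: a rough sketch of the pattern and its fix, using plain SQLite rather than Entity Framework. The `authors`/`posts` schema and the `run` helper that counts queries are assumptions for the demo. In EF the single-query form is usually reached through eager loading with `Include`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE posts (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
""")
conn.executemany(
    "INSERT INTO authors (id, name) VALUES (?, ?)",
    [(i, f"author {i}") for i in range(1, 101)],
)
conn.executemany(
    "INSERT INTO posts (author_id, title) VALUES (?, ?)",
    [(i, f"post by {i}") for i in range(1, 101)],
)

query_count = 0


def run(sql, params=()):
    """Execute a query and count it, so the two approaches can be compared."""
    global query_count
    query_count += 1
    return conn.execute(sql, params).fetchall()


def titles_n_plus_one():
    """One query for the posts, then one more query per post for its author."""
    result = []
    for _post_id, author_id, title in run(
        "SELECT id, author_id, title FROM posts ORDER BY id"
    ):
        (name,) = run("SELECT name FROM authors WHERE id = ?", (author_id,))[0]
        result.append((title, name))
    return result


def titles_single_join():
    """The same data fetched with a single JOIN."""
    return run(
        "SELECT p.title, a.name FROM posts p "
        "JOIN authors a ON a.id = p.author_id ORDER BY p.id"
    )


query_count = 0
slow_result = titles_n_plus_one()
n_plus_one_queries = query_count

query_count = 0
fast_result = titles_single_join()
join_queries = query_count
```

With 100 posts the first version issues 101 queries and the second issues 1, for identical results. The gap grows linearly with the number of records, which is why it only shows up once someone dumps in a ton of data.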

They get on our case and we dredge up all of the correspondence where they completely ignored our advice. Fix it now! We need another sprint. Fix it free! No.

-

Colleague asked me to look at his eCommerce search filtering system as the customer was complaining it was slow taking 5-6 seconds to find results.

Delving into the code deeper, I discovered he was querying the results, sticking them in an array and then sub querying the results looking at all the combinations.

On top of that each sub query looked at the database fields using "DESCRIBE" to then search them each time it found a pair!

The total query count for one page search was 14,512!!!

Why oh why? One SQL query could have done all that in one go.

I look at other code bits he's done and he's very good in other areas. I just don't get how sometimes a good developer can make such a weird decision? It's almost as if he wanted to make it as complex as possible.

-

This was originally a reply to a rant about the excessive complexity of webdev.

The complexity in webdev is mostly necessary to deal with Javascript and the browser APIs, coupled with the general difficulty of the task at hand, namely to let the user interact with amounts of data far beyond network capacity. The solution isn't to reject progress but to pick your libraries wisely and manage your complexity with tools like type safe languages, unit tests and good architecture.

When webdev was simple, it was normal to have the user redownload the whole page every time you wanted to change something. It was also normal to have the server query the database every time a new user requested the same page even though nothing could have changed. It was an inefficient sloppy mess that only passed because we had nothing better and because most webpages were built by amateurs.

Today webpages are built like actual programs, with executables downloaded from a static file server and variable data obtained through an API that's preferably stateless by design and has a clever stateful cache. Client side caches are programmable and invalidations can be delivered through any of three widely supported server-client message protocols. It's not to look smart, it's engineering. Although 5G gets a lot of media coverage, most mobile traffic still flows through slow and expensive connections to devices with tiny batteries, and the only reason our ever increasing traffic doesn't break everything is the insanely sophisticated infrastructure we designed to make things as efficient as humanly possible.

-

Found a dumb mistake I made in a query in my project.

Reduced page load from 10 sec to < 1 sec with an hour's restructuring.

Had just assumed it was slow because of my super cheap Azure DB subscription... 🙄

-

Eloquent is so fucking slow, my (dead) grandma queries faster. It takes fucking double the time on a simple query with only one join for maybe ~200 rows... From now on I go fucking back to raw SQL in my Laravel projects. 😤

-

The CTO at my previous company thought that the WordPress-based website took a long time to load.

I suggested using caching and fixing a ton of abusive queries. He refused. He spun up more VMs and upgraded the EC2 instance level to the max, then said that he had resolved the problem. But the problem actually still persisted.

He blamed me for the slow website response, and blamed me for the late deployment because the data was not ready (there was a lot of spam in there that we needed to clean first).

After I left the company, a coworker told me that he had just installed a bunch of caching plugins.

He took the website down for an entire day and didn't understand what was happening. He asked another developer to fix it quickly, doing unpaid overtime.

Once the site was back in business, he told the whole team that he had already fixed it.

Whenever something good happened, he claimed that it was his idea.

And the best part: he lists 'ssh' as a skill on his personal site.

-

Reminded again why every professional developer should at least read and understand basic algorithms...

Colleague: I don't understand why this aggregation query is so slow, the counting is on the DB.

This function used to work fine... Now it sometimes hangs.

Me thinking: why does everyone assume db has unlimited resources and computing power so everything should be quick (no time or space complexity)...

Maybe if everyone understood this stuff our code base wouldn't be so shitty from the start...

-

When updating the activities log:

- Working on the clients report feature 8:00 am - 9:30 am

- Improving slow query 9:45 am - 10:45 am

- Filling in this piece of shit because my boss cares what other departments say and wants to demonstrate that we are actually working, since he's a fucking square-minded dinosaur who thinks more hours = more productivity FML 11:00 am - 11:15 am

-

So I'm currently "assigned" a task in which I need to fix a slow query problem, which isn't a big deal. The biggest problem is that the original team on this project never had any means of developing on a local machine. Looking at their docs and scripts, it seems like everything was deployed to a dev server. But whilst looking for details of this server, I found out that the network team has decommissioned it!

So my dilemma right now is that I can't test any of my fixes anywhere besides staging, or possibly production! Inheriting projects is the bloody worst!

-

I looked at an SQL server today from a customer, talked with one of their devs and he said that he's unable to understand why the server misbehaves... All (!) queries were optimized, but they have 'big data queries'... Migraine started, I had a very bad feeling. Monitoring? Nooooppeeee. Migraine kicks in. Connected to server. SHOW GLOBAL VARIABLES...

After a bit of scrolling I found a lot of misconfigured variables (e.g. extremely large join buffers, unrealistic buffer sizes), a high slow query count (nearly 60 % of COM_SELECT) and a few variables that were unknown to me.

Then came the version line.

5.0.46

Yes. 5.0.46.

Big data? Well... 30 GB of usage data.

I called the company back... The dev told me sternly that this was the production server (I had hope...) and that I was lying: neither the version nor the variables could be the problem.

A coworker had to verify it and our manager had to do the communication... Worst, most traumatic working day I ever had.

-

I had to optimize an SQL query and I was able to cut the amount of time in half.

The business part of the company said it is still too slow, so I researched deeper and looked into the functions that are called everywhere value X is calculated.

Found 20 similar selects to get values which are summed up afterwards into three different variables. Thought this could be done in three selects with "sum", but then I found the dumbest thing in this function:

V1, V2 have the values from the selects

V3 is a given value

X is the return value

functionCall returns another value

help = functionCall(parameter) - V1 - V2

X = help + V1 + V2 + V3

WTF, I mean, it does the selects for nothing...

X = functionCall(parameter) + V3

-

I turned on slow query log just for funsies and was immediately humbled.

Looks like I've got my next sprint lined up.

-

If you make a db query and it goes surprisingly fast, that means it somehow fucked up your db. A database _never_ works faster than when it fucks itself up. Normal queries are always slow, but wrong queries are oh so quick.

-

Is GraphQL worth it? It promises to keep some load away from backend programmers, but what about this scenario:

There's a list of items with scroll load/infinite scroll. There can be several filters as well as the option to change the ordering of the list items.

With "traditional" REST, I'll hit the DB with one request, get the data in the right order to the backend, might iterate over it once to add additional information, cache the result in Redis/Memcache and send it off.

Using GraphQL, the frontend has to load all entries first, sort them in JS (which probably is slow on mobile devices), and then display it. No matter how "expensive" the query is, there's no caching.

Is that about it? Did I get something backwards?

-

I hate the elasticsearch backup api.

From beginning to end it's a painful experience.

I try to explain it, but I don't think I will be able to cover it all.

The core concept is:

- repository (storage for snapshots)

- snapshots (actual backup)

The first design flaw is that every backup in a repository is incremental. ES creates an incremental filesystem tree.

Some reasons why this is a bad idea:

- deletion of (older) backups is slow, as newer backups need to be checked for integrity

- you simply have to trust ES that it does the right thing (given the bugs it has... It seems like a very bad idea TM)

- you have no possibility of verification of snapshots

Workaround... Create many repositories, as each new repository forces a full backup.........

The second thing: ES scales. Many nodes / es instances form a cluster.

Usually backup APIs incorporate these in their design. ES does not.

If an index spans 12 nodes and you use network storage, yes: up to 12 nodes will each open e.g. an NFS connection and start backing up.

It might not sound so bad with 12 nodes and one index...

But it gets pretty bad with 100s of indexes and several dozen nodes...

And there is no real limiting in ES. You can plug a few holes, but all in all, when you don't plan your backups carefully, you'll get pretty f*cked up network congestion.

So traffic shaping must be manually added. Yay...

The last thing is the API itself.

It's a... very fragile thing.

Especially in older ES releases, the documentation is like handing you an angle grinder instead of toilet paper for a wipe.

Documentation != API != Reality.

Especially the fault handling left me more than once speechless...

Eg:

/_snapshot/storage/backup

gives you a state PARTIAL

/_snapshot/storage/backup/_status

gives you a state SUCCESS

Why? The first one is blocking and refers to the backup status itself. The second one shouldn't be blocking and refers to the backup operation.

And yes. The backup operation state is SUCCESS, while the backup state might be PARTIAL (hence no full backup was made, there were errors).

So we have now an additional API that we query that then wraps the API of elasticsearch. With all these shiny scary workarounds like polling, since some APIs are blocking which might lead to a gateway timeout...

Gateway timeout? Yes. Since some operations can run a LONG (multiple hours) time and you don't want to have a ton of open connections hogging resources... You let the loadbalancer kill it. Most operations simply run in ES in the background, while the connection was killed.
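The polling wrapper described above boils down to something like this. It's a rough sketch, not our actual code: `fetch_json` is a hypothetical callable that performs the GET and returns parsed JSON, the `snapshots[0].state` response shape is an assumption to verify against your ES release, and so is the set of "still running" states.

```python
import time

# Assumption: states that mean "still running"; check them against your ES version.
RUNNING_STATES = {"INIT", "STARTED", "IN_PROGRESS"}


def wait_for_snapshot(fetch_json, repo, snapshot, poll_seconds=30.0,
                      max_polls=1000, sleep=time.sleep):
    """Poll the non-blocking _status endpoint, then read the snapshot's own state.

    fetch_json(path) is a hypothetical helper; each call is a short request,
    so no connection stays open long enough for the load balancer to kill it.
    """
    status_path = f"/_snapshot/{repo}/{snapshot}/_status"
    info_path = f"/_snapshot/{repo}/{snapshot}"

    for _ in range(max_polls):
        operation_state = fetch_json(status_path)["snapshots"][0]["state"]
        if operation_state not in RUNNING_STATES:
            break
        sleep(poll_seconds)
    else:
        raise TimeoutError(f"snapshot {snapshot!r} still running after {max_polls} polls")

    # The operation is over, so this call should no longer block for hours.
    backup_state = fetch_json(info_path)["snapshots"][0]["state"]
    return operation_state, backup_state


def is_backup_complete(operation_state, backup_state):
    """Only trust the backup if BOTH states say SUCCESS (operation SUCCESS + backup PARTIAL is a failure)."""
    return operation_state == "SUCCESS" and backup_state == "SUCCESS"
```

The point of returning both states is exactly the PARTIAL/SUCCESS mismatch: checking only the operation state would report a broken backup as fine.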

So much joy and fun, isn't it?

Now add the latest SMR scandal and a few faulty (as in SMR instead of CMR) HDDs in a hundred terabyte ZFS pool and you'll get my frustration level.

PS: The cluster has several dozen terabytes and a lot of nodes. If you have good advice, you're welcome - but please think carefully about this fact.

I might have accidentally vaporized people sending me links with solutions that don't work on large scale TM.

-

In Postgres, parallel query works by having multiple workers....

As such, depending on workers available, queries can run theoretically fast (workers available) or slow (workers not available) - right?

Question is: are there more optimizations like this... And is my assumption correct?

It seems like a major pain in the ass... Scalability and reliability wise. (?!)

-

SQL is amazing.

I'll toss out some bassakwards query and the optimizer will make sense of it and suddenly I'm searching an amazonillian records in no time.

Then rando one day (today) I fire up what I think is really not the most wonky query I've ever written and ... "Well shit this is surprisingly slow."

So then I go full n00b and add some fields to the query that I know would limit the number of possible records to way low thinking that might help and ... nope no faster...

Guess it's time to bust open some books about SQL....

-

Relatively often the OpenLDAP server (slapd) behaves a bit strange.

While it is a little bit slow (I didn't do a benchmark, but Active Directory seemed to be a bit faster, though it has other quirks and is Windows only), with a small number of users it's fine. slapd is the reference implementation of the LDAP protocol and I didn't expect it to be much better.

Some years ago slapd migrated to a different configuration style: instead of a configuration file and a required restart after every change, it now uses an additional database for "live" configuration, which also allows the deployment of multiple servers with the same configuration (I guess this is nice for larger setups). Much of the documentation online does not reflect the new configuration, so using the new configuration style requires some knowledge of LDAP itself.

It is possible to revert to the old file based method but the possibility might be removed by any future version - and restarts may take a little bit longer. So I guess, don't do that?

To access the configuration over the network (only using the command line on the server to edit the configuration is sometimes a bit... annoying), an additional internal user has to be created in the configuration database (while working on the local machine as root you are authenticated over a Unix domain socket). I mean, I had to create an administration user during the installation of the service, but apparently this is only for the main database...

The password in the configuration can be hashed as usual, but strangely it only accepts hashes of some passwords (a hashed version of "123456" is accepted, but not hashes of different passwords, I mean what the...?), so I have to use a single plaintext password... (secure password hashing works for normal user and normal admin accounts).

But even worse are the default logging options: by default (at least on Debian) the log level is set to DEBUG. Additionally, if slapd detects optimization opportunities it writes them to the logs, at least once per connection, if not per query. Together with an application that made a lot of connections and queries (this was not intended and got fixed later) THIS RESULTED IN 32 GB LOG FILES IN ≤ 24 HOURS! That was enough to fill up the disk and crash other services (lessons learned: add more monitoring, monitoring, and monitoring, and /var/log should be an extra partition). I mean, logging optimization hints is certainly nice, and it runs faster now (again, I did not do any benchmarks), but the verbosity was way too high.

The worst parts are the error messages: when entering a query string with a syntax error, slapd returns error code 80 without any additional text. The documentation reveals a SO MUCH BETTER meaning: "other error". THIS IS SO HELPFUL... In the end I was able to find the reason why the input was rejected, but in my experience most error messages are a little bit more precise.

-

Switching jobs is part of our career growth and as developers, we do this every two years on average.

I know that after announcing my resignation my colleagues won't treat me the same.

It's like I'm an entity in the system that you don't have to query it anymore but you have to exhaust its knowledge transfer limit within the notice period.

All the facades and presenter layers will fade out and you will know which models care about you and want to keep the existent associations. Those models only deserve to publish your contact payload with them.

My requests will be faced by slow response HR endpoints and I'll have to rely on a retriable solution to access the required document data.

I was mentally ready for it but it's still painful as I have to endure this for 2 months, yes, the EU has longer notice periods.

Do you guys have tips to share from your experience?

-

Not a rant, but seeking advice...

Should I abandon 2 years' worth of work on migrating a personal project from SQL (M$) to a Graph database, and just stick to SQL? And only consider migrating when/if I need graph capabilities?

The project is a small social media platform. It has around 50 monthly active users.

Why I started the migration in the first place:

• When researching databases, I read that for social media, graph is more suitable. It was, at least in terms of query structure. It was more natural, there were no "joins", and queries were much simpler than their SQL counterparts.

• In case the project got big, I didn't want to have to panic-deal with database issues that come with growth. I had some indexing issues with MSSQL, and it got me worried that at 50MAU I'm having these issues, what would happen if I get more?

• It's a personal project, and the Gremlin language and graph databases looked cool and I was motivated to learn something new.

----

Why I'm considering aborting the migration:

• It's taking too damn long. I'm unable to work on other features because this migration is taking up all my free time. Sunk cost fallacy is hitting me hard with this one.

• In local testing within Docker, it's extremely slow. I tried various graph engines (JanusGraph, official TinkerPop, OrientDB), and the fastest one takes 4-6 minutes to complete my server tests. SQL finishes the same tests in under 2 minutes, same Docker environment. I also tried running my tests on a remote server (AWS Neptune) and it was just as slow. Maybe my queries are bad, but can I afford to spend even more time fine tuning all queries?

• I now realise that "graph = no scalability issues" was naïve of me, and 100% wishful thinking. Scalability issues don't care what database I use, but about how well tuned and configured the whole system is.

• I really want to move on. My tech stack is falling behind and becoming outdated. I'm unable to maintain dependencies.

• I'm worried about losing those 50 MAU because they're essential to gaining traction once I release the platform. I keep telling them about the migration but at some point (2 years later) they're going to get bored I feel.

I guess partially it's a rant because I feel like I shouldn't stop now having spent 2 years on this, but at the same time I feel like I'm heading towards a dead end.

If you made it this far, thank you for reading :)