Search - "crawler"

-

We are making a hardcore dungeon crawler with our little game studio. First time using Godot in production, I'm really excited.

Also have more cat memes.

-

Found a security hole....

A fast food delivery service had an ID for every order. The URL said:

"example.com/order/9237". I went to 9236... and found another person's order, address, and phone number.

So what should I do?

I thought of making a crawler, compiling statistics on everyone's orders, and sending them a link 😂

-

The gift that keeps on giving... the Custom CMS Of Doom™

I've finally seen enough evidence why PHP has such a bad reputation to the point where even recruiters recommended me to remove my years of PHP experience from the CV.

The completely custom CMS written by company <redacted>'s CEO and his slaves features the following:

- Open for SQL injection attacks

- Remote shell command execution through URL query params

- Page-specific strings in most core PHP files

- Constructors containing hundreds of lines of code (mostly used to initialize the hundreds of properties)

- Class methods containing more than 1000 lines of code

- Completely free of namespaces or package managers (uber elite programmers use only the root namespace)

- Random includes in any place imaginable

- Methods containing 1 line: the include of the file which contains the method body

- SQL queries in literally every source file

- The entrypoint script is in the webroot folder where all the code resides

- Access to sensitive folders is "restricted" by robots.txt 🤣🤣🤣🤣

- The CMS has its own crawler which runs as a cron job and requests ALL HTML links (yes, full content, including videos!) to fill a database of keywords (I found out because the server traffic was >500 GB/month for this small website)

- Hundreds of config settings are literally defined by "define(...)"

- LESS is transpiled into CSS by PHP on requests

- .......

I could go on, but yes, I've seen it all now.

-

CEO - So... We'll have a new side project, it's a small thing, I spoke with a guy, he needs just a small thing...

Me - Ok....... So, what do you need me for?

CEO - Not much, this guy started a project but wants your help on a small part, and you already did something similar for us, so it should be fast, just copy and paste and change a little bit. You probably know better than me.

Me - *Sigh* ... So `friend`, what do you need from me?

Friend - So, I made a crawler that is storing some information on a local file, I just need to add multiprocessing and multithreading, a producer-consumer system with a queue so I can automatically add new links every now and then, a failback system, so when a process doesn't finish, it should be re-queued and crawled later, store all the information on a database cluster (that is not set up), [......]
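For what it's worth, the queue-with-re-queue part of that wish list can be sketched in a few lines of Python. This is only a thread-based sketch under assumptions: `crawl_all`, `fetch`, and `MAX_ATTEMPTS` are hypothetical names, `fetch` stands in for whatever the friend's crawler does per URL, and multiprocessing and the database cluster are left out entirely.

```python
import queue
import threading

MAX_ATTEMPTS = 3  # assumption: give up on a URL after three failed tries


def crawl_all(urls, fetch, workers=4):
    """Crawl urls with worker threads; URLs whose fetch fails are re-queued."""
    tasks = queue.Queue()
    for url in urls:
        tasks.put((url, 1))  # (url, attempt number)

    results, failed = {}, []
    lock = threading.Lock()

    def worker():
        while True:
            item = tasks.get()
            if item is None:  # sentinel: no more work
                tasks.task_done()
                return
            url, attempt = item
            try:
                page = fetch(url)
                with lock:
                    results[url] = page
            except Exception:
                if attempt < MAX_ATTEMPTS:
                    tasks.put((url, attempt + 1))  # crawl it again later
                else:
                    with lock:
                        failed.append(url)
            finally:
                tasks.task_done()

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    tasks.join()  # returns once every queued item, retries included, is done
    for _ in threads:
        tasks.put(None)
    for t in threads:
        t.join()
    return results, failed
```

A failed URL goes back on the queue until it has been tried `MAX_ATTEMPTS` times, after which it is reported in the second return value instead of being retried forever.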

Me - And when is this supposed to be up and running?

Friend - Your CEO told me you could do this by the weekend. Can we finish this by Friday?

Me - *facepalm* FML

-

Ah yes, write your own fucking website crawler in PHP and deoptimize so hard that it uses some gigabytes of RAM and takes about 1 hour to crawl the very own website it's running on.

Oh and don't forget to download every single image and video file in order to "crawl" it for extremely valuable text content.

What a genius move! I'm really impressed.

-

I wrote a web crawler to find a few problems on my company's blog. I accidentally DoS'd the piece of shit with a single thread. Fuck WordPress.

-

We got DDoS attacked by some spam bot crawler thing.

Higher ups called a meeting so that one of our seniors could present ways to mitigate these attacks.

- If a custom, "obscure" header is missing (from API endpoints), send back a basic HTTP challenge. Deny all credentials.

- Some basic implementation of rate limiting on the web server
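The "basic rate limiting" bullet is usually some variant of a token bucket. A minimal sketch, assuming one bucket per client and hypothetical names (`TokenBucket`, `rate`, `capacity`); real web servers ship their own rate-limiting modules, and this is not the senior's actual proposal:

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the bucket can be tested without sleeping
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        """Return True if the request may pass, False if it should be rejected."""
        now = self.clock()
        # Refill according to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Keeping one bucket per client IP in a dictionary is the usual next step. It still runs on the machine being attacked, which is exactly the complaint in this rant.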

We can't implement DDoS protection at the network level because "we don't even have the new load balancer yet and we've been waiting on that for what... Two years now?" (See: spineless managers don't make the lazy network guys do anything)

So now we implement security through obscurity and DDoS protection... Using the very same machines that are supposed to be protected from DDoS attacks.

-

It works. It finally works!

After an 8 hour session I got my crawler to work and give me basically every anime OP/ED I long for.

All that using Elixir, even though I'm still fairly inexperienced with both Elixir and functional programming.

I'll try to create an API and eventually maybe even a database tomorrow (or rather in a couple of hours)

-

Just finished my third year of my comp sci degree when a friend found me a position at a very small startup. I was asked to build a web crawler to take job postings off Kijiji and Craigslist and place them in our database for our clients to find. It didn't take long to build (even with limited experience). It was pretty shady. I didn't think I'd have to deal with the ethics of a task so soon in my new dev-life! Luckily it never made it to the live site. After that they got me to work on their Android app (not so shady)

4 years later I still work for that company building apps. It's still a small team, and I love 'em 🤙

-

!rant

2 days ago I made an image crawler with a web interface that scrapes whatever URL you put in and returns a list of the images it found on that website. Right now it's limited to img elements.
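Limiting the scrape to img elements needs nothing beyond the standard library. A rough sketch of that idea, not the poster's actual code; `ImgCollector` and `find_images` are made-up names, and fetching the page is left out:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImgCollector(HTMLParser):
    """Collect the src of every <img> element, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.images = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before calling this.
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.images.append(urljoin(self.base_url, src))


def find_images(html, base_url):
    """Return absolute URLs of all img elements found in the HTML text."""
    collector = ImgCollector(base_url)
    collector.feed(html)
    return collector.images
```

Images referenced from CSS backgrounds, `srcset`, or injected by scripts are not picked up by this approach.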

It was pretty fun to work on it during the weekend :3

-

I get an email about an hour before I get into work: Our website is 502'ing and our company email addresses are all spammed! I log in to the server and test whether static files (served separately from the site) work (they do). This means that my upstream proxy'd PHP-FPM process was fucked. I killed the daemon, checked the web root for sanity, and ran it again. Then, I set up rate limiting. Who knew such a site would get hit?

Some fucking script kiddie set up a proxy, ran Scrapy behind it, and crawled our site for DDoS-able URLs - even out of forms. I say script kiddie because no real hacker would hit this site (it's minor tourism in New Jersey), and the crawler was too advanced for Joe Schmoe to write. You're no match for well-tuned rate-limiting, asshole!

-

!Rant

Wrote a crawler and now have 18 million records in the queue. About 500,000 files with metadata.

1 month until the deadline and we still have a shit ton of things to do.

Now we discover we have a flaw in our crawler (I don't see it as a bug)... We don't know how much metadata we missed, but now we have to write a script that scrapes every webpage we've already visited and gets that metadata...

What's the flaw, you ask? Some people find it funny to put capital letters in their attribute names... *cough* Microsoft.com!! *cough*
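A sketch of one way to tolerate capitalized attribute names without touching the values: match case-insensitively instead of rewriting the page. The regex, `META_RE`, and `extract_meta` are assumptions for illustration (the rant does not say which tags or attributes were involved), and a regex like this only handles double-quoted `name`/`content` pairs in that order:

```python
import re

# Hypothetical pattern: <meta name="..." content="..."> in any letter case.
META_RE = re.compile(
    r'<meta\s+name\s*=\s*"([^"]+)"\s+content\s*=\s*"([^"]*)"',
    re.IGNORECASE,
)


def extract_meta(html):
    """Map lowercased meta names to their content values, case preserved."""
    return {name.lower(): content for name, content in META_RE.findall(html)}
```

Unlike lowercasing the whole document first, this keeps the extracted values in their original case. A real HTML parser would be more robust than a regex here.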

And what didn't we do? We didn't lowercase each entire webpage and then, only then, search the webpage for data...

-

Trying to get some information about a product:

1. Open the manufacturer's webpage, get presented with a crawler protection page, 30 kB

2. The actual website opens, 600 kB + an endless amount of JavaScript.

3. Try to download a PDF, an extra download page opens, another 600 kB and even more JS.

4. Download the PDF, 3 MB

5. Read the information in that PDF which would fit into 8 kB.

-

*Finished the deploy*

*Dusts collar*

"Easy peasy"

Few hours later

*slack tone*

Production inaccessible! Blackbox crawler failed with message 5xx.

And that was the day little Charlie learnt DevOps is not fun and thrilling.

-

Lab needs a crawler to download some assets, none of my business though

But why not

Haven't touched a crawler for two years

Googled the latest state of the art

Found Scrapy

I have to define a class for a crawling script?

Got scared

Went back to BeautifulSoup and requests

Got the job done in 20 mins

Fuck yeah

-

Too lazy to come up with a better name...

Yea... the app still works...

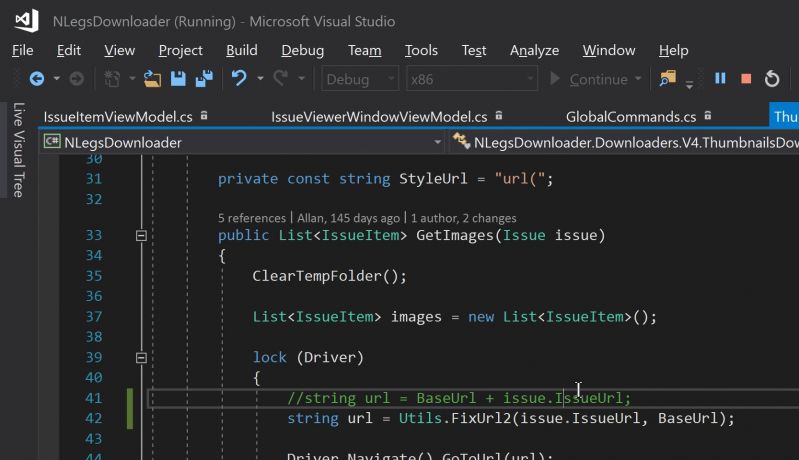

5th time editing the crawler logic... 2 years now?

-

So I go into Google Search Console to try to determine why it's saying pages are not compliant with mobile and whatnot.

3 hours later I come out realizing that what Google REALLY wants is for everyone to build every web page as static HTML with no script tags and never a call to an external website. Just dump all that JavaScript and CSS and HTML into one BIG FRIGGIN FILE so their crawler feels satisfied that it's loading everything all in one request.

No CMSes allowed unless GOOGLE built it.

Let's just all revert to HTML 1.0 and be done with it.

-

Question about Google crawler (?)

So, got a question, hopefully you have an answer.

I have a personal website that went up about 2 months ago that has a contact form.

Today I got two emails sent to me. This is the way I have coded it up.

But take a look at the name and message fields. I wonder if this is a Google crawler submitting the form by any chance. I also got another email around the same time where the message and name fields are reversed.

Anyone else experience this?

-

Person: *has issues with bots* (probably just stuff like Google's crawler n stuff)

People trying to "help": "Use CloudFlare"

...

...

Could you all please bugger off with CloudFail?

-

I remember back when I was in precalculus I decided to take a class online. My teacher's website was made by him and ran on GoDaddy. He taught Precalculus, Calculus, Algebra, Algebra II, and Computer Science. I decided to penetration test his website and use a web crawler. His directory that had the tests, test answers, exams, exam answers, and homework answers, as well as all the books he's written as PDFs, was unprotected. I could access and download them all. He also had a database directory that contained all the students' phone numbers, email addresses, home addresses, and full names.

I alerted him to this and didn't get anything in return :P

-

Just posted this in another thread, but I think you'll all like it too:

I once had a dev who was allowing his site elements to be embedded everywhere in the world (intentional), and it was vulnerable to clickjacking (not intentional). I told him to restrict frame origin and then implement a whitelist.
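In current browsers, "restrict frame origin and implement a whitelist" usually means sending a `Content-Security-Policy` header with a `frame-ancestors` directive. A minimal sketch, assuming a hypothetical helper name `frame_ancestors_header`; any origins passed in are placeholders, not the ones from this story:

```python
def frame_ancestors_header(allowed_origins):
    """Build a (name, value) header pair that whitelists embedding origins.

    With an empty whitelist the header forbids framing entirely.
    """
    sources = " ".join(allowed_origins) if allowed_origins else "'none'"
    return ("Content-Security-Policy", f"frame-ancestors {sources}")
```

Called with `["https://partner.example"]`, this yields `frame-ancestors https://partner.example`. The directive is checked against every ancestor frame, not just the top-level page, so an embed that is wrapped in an intermediate frame needs that intermediate origin whitelisted as well.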

My man comes back a month later with this issue of someone on Google Sites not being able to embed the element. GOOGLE FUCKING SITES!!!!! I didn't even know that shit existed! So naturally I go through all the extremely in-depth and nuanced explanations first: we start looking at web traffic logs and find out that it's not the Google Sites name that's trying to access the element, but one of Google's web crawler-type things. Whatever. Whitelist that URL. Nothing.

Another weird thing was that Google Sites referenced the iframe through a copy of it stored on a Google subsite???? Something like "googleusercontent.com" instead of the actual site we were referencing. Whatever. Whitelisted it. Nothing.

We even looked at other solutions, like opening the whitelist completely for a span of time to see if we could get it to work without the whitelist, as the dev was convinced that the whitelist was the issue. It STILL didn't work!

Because of this development I got more frustrated, because this wasn't tested beforehand, and finally asked the question: do other web template sites like Squarespace or Wix have this issue?

Nope. Just Google Sites.

We concluded it's not an issue with the whitelist, but merely an issue with either Google Sites or the way the webapp is designed, but considering it works on LITERALLY ANYTHING ELSE, I doubt that the latter is the answer.

-

Following my former rant https://devrant.com/rants/1816992/ I implemented some features that enable a specialized search engine to process the content of my wife's custom site like a WordPress site. Since the crawler relies on class names, I made a dynamic implementation of additional - unused but crawlable - class names. I called that method "classmate". I am a bit desperate.

-

Need suggestions for Nutch vs StormCrawler. I have to scrape information for 5000 companies listed on BSE

-

Recently we got a new project assigned and as always you are hyped, really really hyped...........

We were supposed to find all kinds of driver updates (especially BIOS ones) for all devices the company owns. So first of all we thought:

EAAAASY! A little bit of web crawling, regex, etc.

.

.

.

.

B

U

U

U

U

T

!

We were sooooo soooo wrong. These fucking manufacturer websites are absolutely awful to crawl or parse, and nowadays there are no proper FTP servers or anything else you could use to get the information. Every subsite is a little bit different...

While coding and literally brute forcing possible URLs (there was some kind of vague pattern) we learned AGAIN to appreciate properly developed and designed websites. Especially by devs who may have some more usage scenarios in mind for their site than simple human clients.

So thank you to all of you awesome web developers who design proper websites and web tools!

All in all it took us 2 weeks to come up with a proper solution (by the way we are a small team of 3 devs) which works somewhat reliably and can deal with site changes etc.

-

I'm just dumping 10 GB of data remotely from a MySQL DB, because my el cheapo VPS ran out of space

can you suggest a good book?

oh, actually I already found one, the title is "Prepare your fucking server/workspace properly if you want to play around with a lot of data"

-

I wrote a site-specific web crawler for my job (debt collection agency) and spent well over 175 hours on it: database integration, remote spider deployment, easy GUI. I need a major raise if I'm going to give it to them though. I'm currently making 12/hr under the title of legal assistant, and I think I could get a raise to 23/hr. What would you do?

-

When I browsed for a Food Recipes (Especially Indian Food) Dataset, I could not find one (that I could use) online. So, I decided to create one.

The dataset can be found here: https://lnkd.in/djdh9nX

It contains the following fields (self-explanatory) - ['RecipeName', 'TranslatedRecipeName', 'Ingredients', 'TranslatedIngredients', 'Prep', 'Cook', 'Total', 'Servings', 'Cuisine', 'Course', 'Diet', 'Instructions', 'TranslatedInstructions']. The dataset contains a csv and an xls file. Sometimes, the content in Hindi is not visible in the csv format.

You might be wondering what the columns with the prefix 'Translated' are. A lot of entries in the dataset were in Hindi. To handle such entries and translate them to English for consistency, I used 'googletrans'. It is a Python library that implements the Google Translate API underneath.

The code for the crawler, cleaning and transformation is on GitHub (Repo: https://lnkd.in/dYp3sBc) (@kanishk307).

The dataset has been created using Archana's Kitchen Website (https://lnkd.in/d_bCPWV). It is a great website and hosts a ton of useful content. You should definitely consider viewing it if you are interested.

#python #dataAnalytics #Crawler #Scraper #dataCleaning #dataTransformation

-

Does Google crawl every website and page on the internet?

I want to know whether the Google crawler will visit all my pages or only a few. I have more than 10 million pages, so approximately how long will it take to index all of them?

-

Anyone know how to use a proxy for a web crawler written in native Java for Android? I have a bug in an app in production that only surfaced after being used for a couple of days and I urgently need to fix it.

HELP!!!

-

I have never seen core coding questions here, so this is one of my shots in the dark, this time because I have a phobia of Stack Overflow, and specifically of discussing this objective with a wider audience

Here it goes: Ever since Elon Musk overpriced the Twitter APIs, the 3rd-party app I used to unfollow non-followers broke. So I wrote a nifty crawler that cycles through those following me and fishes out traitors who found me unpleasant enough to unfollow. The script works fine, I suspect, because I only follow a small number of accounts

The challenge lies in me preemptively trying to delete some of the elements before the DOM can overflow. Realistically, you want to do this every 1000 rows or so. The problem is, tampering with the rows causes the page's lazy loader to break. Apparently, it has some indicator somewhere using information on one of the rows to determine details of the next fetch

I've tried doing many things when we reach that batch limit:

1) wiping either the first or last

2) wiping only even rows

3) logging read rows and wiping them when it reaches batch limit

4) Emptying or hiding them

5) Accessing siblings of the last element and wiping them

I've tried adding custom selectors to the incoming nodes but something funny occurs. During each iteration, at some point, their `.length` gets reset, implying those selectors were removed or the contents were transferred to another element. I set up a MutationObserver to track changes but it reports nothing

I hope there are no Twitter devs here, cuz I went to great pains to decipher their classes. I don't want them throwing in another cog that would disrupt the crawler. So you can post any suggestions you have that could work and I will try them out. Or if it's impossible to assist without running the code, I will have no choice but to post it here