Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

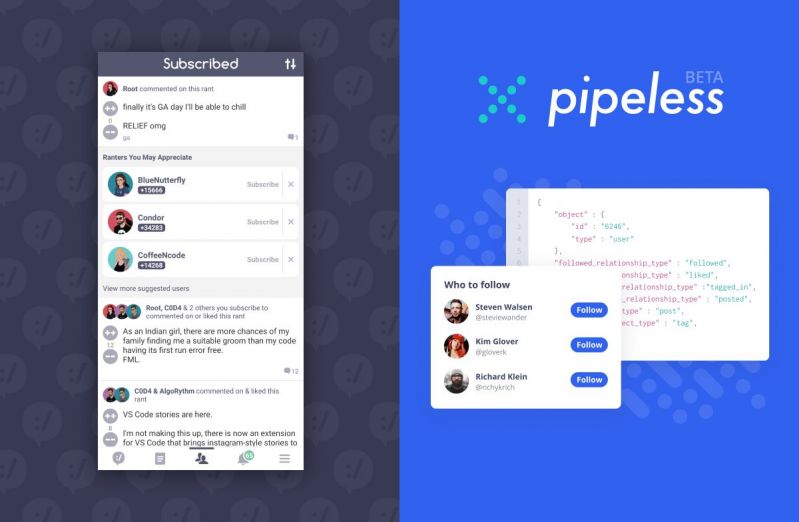

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Search - "dataset"

-

Hey everyone,

We have a few pieces of news we're very excited to share with everyone today. Apologies for the long post, but there's a lot to cover!

First, as some of you might have already seen, we just launched the "subscribed" tab in the devRant app on iOS and Android. This feature shows you a feed of the most recent rant posts, likes, and comments from all of the people you subscribe to. This activity feed is updated in real-time (although you have to manually refresh it right now), so you can quickly see the latest activity. Additionally, the feed also shows recommended users (based on your tastes) that you might want to subscribe to. We think both of these aspects of the feed will greatly improve the devRant content discovery experience.

This new feature leads directly into this next announcement. Tim (@trogus) and I just launched a public SaaS API service that powers the features above (and can power many more use-cases across recommendations and activity feeds, with more to come). The service is called Pipeless (https://pipeless.io) and it is currently live (beta), and we encourage everyone to check it out. All feedback is greatly appreciated. It is called Pipeless because it removes the need to create complicated pipelines to power features/algorithms, by instead utilizing the flexibility of graph databases.

Pipeless was born out of the years of experience Tim and I have had working on devRant and from the desire we've seen from the community to have more insight into our technology. One of my favorite (and earliest) devRant memories is from around when we launched, and we instantly had many questions from the community about what tech stack we were using. That interest is what encouraged us to create the "about" page in the app that gives an overview of what technologies we use for devRant.

Since launch, the biggest technology powering devRant has always been our graph database. It's been fun discussing that technology with many of you. Now, we're excited to bring this technology to everyone in the form of a very simple REST API that you can use to quickly build projects that include real-time recommendations and activity feeds. Tim and I are really looking forward to hopefully seeing members of the community make really cool and unique things with the API.

Pipeless has a free plan where you get 75,000 API calls/month and 75,000 items stored. We think this is a solid amount of calls/storage to test out and even build cool projects/features with the API. Additionally, as a thanks for continued support, for devRant++ subscribers who were subscribed before this announcement was posted, we will give some bonus calls/data storage. If you'd like that special bonus, you can just let me know in the comments (as long as your devRant email is the same as Pipeless account email) or feel free to email me (david@hexicallabs.com).

Lastly, and also related, we think Pipeless is going to help us fulfill one of the biggest pieces of feedback we’ve heard from the community. Now, it is going to be our goal to open source the various components of devRant. Although there’s been a few reasons stated in the past for why we haven’t done that, one of the biggest reasons was always the highly proprietary and complicated nature of our backend storage systems. But now, with Pipeless, it will allow us to start moving data there, and then everyone has access to the same system/technology that is powering the devRant backend. The first step for this transition was building the new “subscribed” feed completely on top of Pipeless. We will be following up with more details about this open sourcing effort soon, and we’re very excited for it and we think the community will be too.

Anyway, thank you for reading this and we are really looking forward to everyone’s feedback and seeing what members of the community create with the service. If you’re looking for a very simple way to get started, we have a full sample dataset (1 click to import!) with a tutorial that Tim put together (https://docs.pipeless.io/docs/...) and a full dev portal/documentation (https://docs.pipeless.io).

Let us know if you have any questions and thanks everyone!

- David & Tim (@dfox & @trogus) 52

52 -

Seven months ago:

===============

Project Manager: - "Guys, we need to make this brand new ProjectX, here are the specs. What do you think?"

Bored Old Lead: - "I was going to resign this week but you've convinced me, this is a challenge, I never worked with this stack, I'm staying! I'll gladly play with this framework I never used before, it seems to work with this libA I can use here and this libB that I can use here! Such fun!"

Project Manager: - "Awesome! I'm counting on you!"

Six months ago:

====================

Cprn: - "So this part you asked me to implement is tons of work due to the way you're using libA. I really don't think we need it here. We could use a more common approach."

Bored Old Lead: - "No, I already rewrote parts of libB to work with libA, we're keeping it. Just do what's needed."

Cprn: - "Really? Oh, I see. It solves this one issue I'm having at least. Did you push the changes upstream?"

Bored Old Lead: - "No, nobody uses it like that, people don't need it."

Cprn: - "Wait... What? Then why did you even *think* about using those two libs together? It makes no sense."

Bored Old Lead: - "Come on, it's a challenge! Read it! Understand it! It'll make you a better coder!"

Four months ago:

==============

Cprn: - "That version of the framework you used is loosing support next month. We really should update."

Bored Old Lead: - "Yeah, we can't. I changed some core framework mechanics and the patches won't work with the new version. I'd have to rewrite these."

Cprn: - "Please do?"

Bored Old Lead: - "Nah, it's a waste of time! We're not updating!"

Three months ago:

===============

Bored Old Lead: - "The code you committed doesn't pass the tests."

Cprn: - "I just run it on my working copy and everything passes."

Bored Old Lead: - "Doesn't work on mine."

Cprn: - "Let me take a look... Ah! Here you go! You've misused these two options in the framework config for your dev environment."

Bored Old Lead: - "No, I had to hack them like that to work with libB."

Cprn: - "But the new framework version already brings everything we need from libB. We could just update and drop it."

Bored Old Lead: - "No! Can't update, remember?"

Last Friday:

=========

Bored Old Lead: - "You need to rewrite these tests. They work really slow. Two hours to pass all."

Cprn: - "What..? How come? I just run them on revision from this morning and all passed in a minute."

Bored Old Lead: - "Pull the changes and try again. I changed few input dataset objects and then copied results from error messages to assertions to make the tests pass and now it takes two hours. I've narrowed it to those weird tests here."

Cprn: - "Yeah, all of those use ORM. Maybe it's something with the model?"

Bored Old Lead: - "No, all is fine with the model. I was just there rewriting the way framework maps data types to accommodate for my new type that's really just an enum but I made it into a special custom object that needs special custom handling in the ORM. I haven't noticed any issues."

Cprn: - "What!? This makes *zero* sense! You're rewriting vendor code and expect everything to just work!? You're using libs that aren't designed to work together in production code because you wanted a challenge!?? And when everything blows up you're blaming my test code that you're feeding with incorrect dataset!??? See you on Monday, I'm going home! *door slam*"

Today:

=====

Project Manager: - "Cprn, Bored Old Lead left on Friday. He said he can't work with you. You're responsible for Project X now."24 -

I’m so mad I’m fighting back anger tears. This is a long rant and I apologize but I’m so freaking mad.

So a few weeks ago I was asked by my lead staff person to do a data analysis project for the director of our dept. It was a pretty big project, spanning thousands of users. I was excited because I love this sort of thing and I really don’t have anything else to do. Well I don’t have access to the dataset, so I had to get it from my lead and he said he’d do it when he had a chance. Three days later he hadn’t given it to me yet. I approach him and he follows me to my desk, gives me his login and password to login to the secure freaking database, then has me clone it and put it on my computer.

So, I start working on it. It took me about six hours to clean the database, 2 to set up the parameters and plan of attack, and two or three to visualize the data. I realized about halfway through that my lead wasn’t sure about the parameters of the analysis, and I mentioned to him that the director had asked for more information than what he was having me do. He tells me he will speak with director.

So, our director is never there, so I give my lead about a week to speak with her, in the mean time I finish the project to the specifications that the director gave. I even included notes about information that I would need to make more accurate predictions, to draw conclusions, etc. It was really well documented.

Finally, exasperated, and with the project finished but just sitting on my computer for a week, I approached my director on a Saturday when I was working overtime. She confirmed that I needed to what she said in the project specs (duh), and also mentioned she needed a bigger data set than what I was working with if we had one. She told me to speak to my lead on Monday about this, but said that my work looked great.

Monday came and my lead wasn’t there so I spoke with my supervisor and she said that what I was using was the entire dataset, and that my work looked great and I could just send it off. So, at this point 2/3 of my bosses have seen the project, reviewed it, told me it was great, and confirmed that I was doing the right thing.

I sent it off to the director to disseminate to the appropriate people. Again, she looked at it and said it was great.

A week later (today) one of the people that the project was sent to approaches me and tells me that i did a great job and thank you so much for blah blah blah. She then asks me if the dataset I used included blahblah, and I said no, that I used what was given to me but that I’d be happy to go in and fix it if given the necessary data.

She tells me, “yeah the director was under the impression that these numbers were all about blahblah, so I think there was some kind of misunderstanding.” And then implied that I would not be the one fixing the mistake.

I’m being taken off of the project for two reasons: 1. it took to long to get the project out in the first place,

2. It didn’t even answer the questions that they needed answered.

I fucking told them in the notes and ALL THROUGH THE VISUALIZATIONS that I needed additional data to compare these things I’m so fucking mad. I’m so mad.15 -

That awkward moment when you realize the code you have been debugging for an hour actually works fine and updating the entire dataset. You've just been returning only the top 1000 rows.2

-

This was WAY back in my first job as a programmer where I was working on a custom built CMS that we took over from another dev shop. So a standard feature was of course pagination for a section that had well over 400,000 records. The client would always complain about this section always being very slow to load. My boss at that job would tell me to not look at the problem as it wasn't a part of the scope.

But being a young enthusiastic programmer, I decided to delve into the problem anyway. What I came to discover was that the pagination was simply doing a select all 400,000 records, and then looping through the entire dataset until it got to the slice it needed to display.

So I fixed the pagination and page loads went from around 1 min to only a few seconds. I felt pretty proud about that. But I later got told off by my boss as he now can't bill for that fix. Personally I didn't care since I learned a bit about SQL pagination, and just how terrible some developers can be.5 -

I've optimised so many things in my time I can't remember most of them.

Most recently, something had to be the equivalent off `"literal" LIKE column` with a million rows to compare. It would take around a second average each literal to lookup for a service that needs to be high load and low latency. This isn't an easy case to optimise, many people would consider it impossible.

It took my a couple of hours to reverse engineer the data and implement a few hundred line implementation that would look it up in 1ms average with the worst possible case being very rare and not too distant from this.

In another case there was a lookup of arbitrary time spans that most people would not bother to cache because the input parameters are too short lived and variable to make a difference. I replaced the 50000+ line application acting as a middle man between the application and database with 500 lines of code that did the look up faster and was able to implement a reasonable caching strategy. This dropped resource consumption by a minimum of factor of ten at least. Misses were cheaper and it was able to cache most cases. It also involved modifying the client library in C to stop it unnecessarily wrapping primitives in objects to the high level language which was causing it to consume excessive amounts of memory when processing huge data streams.

Another system would download a huge data set for every point of sale constantly, then parse and apply it. It had to reflect changes quickly but would download the whole dataset each time containing hundreds of thousands of rows. I whipped up a system so that a single server (barring redundancy) would download it in a loop, parse it using C which was much faster than the traditional interpreted language, then use a custom data differential format, TCP data streaming protocol, binary serialisation and LZMA compression to pipe it down to points of sale. This protocol also used versioning for catchup and differential combination for additional reduction in size. It went from being 30 seconds to a few minutes behind to using able to keep up to with in a second of changes. It was also using so much bandwidth that it would reach the limit on ADSL connections then get throttled. I looked at the traffic stats after and it dropped from dozens of terabytes a month to around a gigabyte or so a month for several hundred machines. The drop in the graphs you'd think all the machines had been turned off as that's what it looked like. It could now happily run over GPRS or 56K.

I was working on a project with a lot of data and noticed these huge tables and horrible queries. The tables were all the results of queries. Someone wrote terrible SQL then to optimise it ran it in the background with all possible variable values then store the results of joins and aggregates into new tables. On top of those tables they wrote more SQL. I wrote some new queries and query generation that wiped out thousands of lines of code immediately and operated on the original tables taking things down from 30GB and rapidly climbing to a couple GB.

Another time a piece of mathematics had to generate all possible permutations and the existing solution was factorial. I worked out how to optimise it to run n*n which believe it or not made the world of difference. Went from hardly handling anything to handling anything thrown at it. It was nice trying to get people to "freeze the system now".

I build my own frontend systems (admittedly rushed) that do what angular/react/vue aim for but with higher (maximum) performance including an in memory data base to back the UI that had layered event driven indexes and could handle referential integrity (overlay on the database only revealing items with valid integrity) or reordering and reposition events very rapidly using a custom AVL tree. You could layer indexes over it (data inheritance) that could be partial and dynamic.

So many times have I optimised things on automatic just cleaning up code normally. Hundreds, thousands of optimisations. It's what makes my clock tick.4 -

Context: we analyzed data from the past 10 years.

So the fuckface who calls himself head of research tried to put blame on me again, what a surprise. He asked for a tool what basically adds a lot of numbers together with some tweakable stuff, doesnt really matter. Now of course all the datanwas available already so i just grabbed it off of our api, and did the math thing. Then this turdnugget spends 4 literal weeks, tryna feed a local csv file into the program, because he 'wanted to change some values'. One, this isnt what we agreed on, he wanted the data from the original. When i told him this, he denied it so i had to dig out a year old email. Two, he never explicitly specified anything so i didnt use a local file because why the fuck would i do that. Three, i clearly told him that it pulls data from the server. Four, what the fuck does he wanna change past values for, getting ready for time travel? Five, he ranted for like 3 pages, when a change can be done by currentVal - changedVal + newVal, even a fucking 10 year old could figure that out. Also, when i allowed changes in a temporary api, he bitched about how the additional info, what was calculated from yet another original dataset, doesnt get updated, when he fucking just randimly changes values in the end set. Pinnacle of professionalism.2 -

Look, I'm not even mad that your dataset is the spaghettiest of all spaghetti, but why do you have ten different jupyter notebook files lying around?

I mean, I'm not implying that a monkey has more brain in his armpit than you have in your entire body, but like, you call this a dataset while all over seen so far is half-processed garbage. You could've just dipped your pc in sewage and the results would still be cleaner than this.

Luckily, your paper is half decent so what the hell, let's see if I can fish anything useful out of this. But I swear to god if I come across another static path in this... And here we go! Another static path! Ladies and gentlemen, I propose we get this guy's phd back until he learns to fucking do a decent code.

(It's actually a massively complicated project, so it kinda makes sense to be this big of a mess. But still!)6 -

Someone put a fucking \b in this dataset I'm working with, which just so happens to be an illegal character for xml.

FUCKING HOW. FUCKING WHY. FUCKING WHERE ARE YOU AND WHY DO YOU WANT TO SEE ME SUFFER THIS MUCH4 -

If anyone has been keeping up with my data warehouse from hell stories, we're reaching the climax. Today I reached my breaking point and wrote a strongly worder email about the situation. I detailed 3 separate cases of violated referential integrity (this warehouse has no constraints) and a field pulling from THE WRONG FLIPPING TABLE. Each instance was detailed with the lying ER diagram, highlighted the violating key pairs, the dangers they posed, and how to fix it. Note that this is a financial document; a financial document with nondeterministic behavior because the previous contractors' laziness. I feel like the flipping harbinger of doom with a cardboard sign saying "the end is near" and keep having to self-validate that if I was to change anything about this code, **financial numbers would change**, names would swap, description codes would change, and because they're edge cases in a giant dataset, they'll be hard to find. My email included SQL queries returning values where integrity is violated 15+ times. There's legacy data just shoved in ignoring all constraints. There are misspellings where a new one was made instead of updating, leaving the pk the same.

Now I'd just put sorting and other algos, but the data is processed by a crystal report. It has no debugger. No analysis tools. 11 subreports. The thing takes an hour to run and 77k queries to the oracle backend. It's one of the most disgusting infrastructures I've ever seen. There's no other solution to this but to either move to a general programming language or get the contractor to fix the data warehouse. I feel like I've gotten nowhere trying to debug this for 2 months. Now that I've reached what's probably the root issue, the office beaucracy is resisting the idea of throwing out the fire hazard and keeping the good parts. The upper management wants to just install sprinklers, and I'm losing it. -

Dude, publish your damn dataset with your damn ML study!!!! I'm not even asking for your Godforsaken model!

😡😡🗡️🗡️⚔️🔫🔫🏹🔨4 -

So I just finished a prototyp for my thesis. Still need to segment the real data myself and collect some statistics stuff to write about the network, but I am pretty proud of the result considering the dataset is very small.

For now I need some god damn sleep. 5

5 -

Fucking incompetence

Senior level developer with 15 years of software development experience ...

ends up writing brute force search on a sorted data - when questioned he's like yeah well dataset is not that large so performance degradation will be marginal

He literally evades any particularly toil heavy task like fixing the unit test cases , or managing the builder node versions to latest ( python 2 to 3 ) because it's beneath him and would rather work on something flashy like microservice microfrontend etc. -- which he cannot implement anyway

Or will pick up something very straightforward like adding a if condition to a particular method just to stay relevant

And the management doesn't really care who does what so he ends up getting away with this

The junior guys end up taking up the butt load of crappy tasks which are beneath the senior guy

And sometimes those tasks are not really junioresque - so we end up missing deadlines and getting questioned as to why we are are not able to deliver.

Fuck this shit ... My cortisol shoots up whenever I think of him4 -

when you start machine learning on you laptop, and want to it take to next level, then you realize that the data set is even bigger that your current hard-disk's size. fuuuuuucccckkk😲😲

P. S. even metadata csv file was 500 mb. Took at least 1 min to open it. 😭😧11 -

So I downloaded the handwritten character dataset from EMNIST, took an hour to extract. Found out it has 814k + pngs. I haven't optimized all of them with Gimp 😕 change them to idx3-ubyte format, or make labels for each char in a c++ automation.. While Gimp was frozen in bulk process, I started hearing crackling sounds from my desk 😨😨 I'm like fckkk shits gonna blow..

That's when the poorly taped 0x7E2 calendar fell from the wall.🤣🤣🤣2 -

While scraping web sources to build datasets, has legality been ever a concern?

Is it a standard practice for checking whether a site prohibits scraping?22 -

Data Disinformation: the Next Big Problem

Automatic code generation LLMs like ChatGPT are capable of producing SQL snippets. Regardless of quality, those are capable of retrieving data (from prepared datasets) based on user prompts.

That data may, however, be garbage. This will lead to garbage decisions by lowly literate stakeholders.

Like with network neutrality and pii/psi ownership, we must act now to avoid yet another calamity.

Imagine a scenario where a middle-manager level illiterate barks some prompts to the corporate AI and it writes and runs an SQL query in company databases.

The AI outputs some interactive charts that show that the average worker spends 92.4 minutes on lunch daily.

The middle manager gets furious and enacts an Orwellian policy of facial recognition punch clock in the office.

Two months and millions of dollars in contractors later, and the middle manager checks the same prompt again... and the average lunch time is now 107.2 minutes!

Finally the middle manager gets a literate person to check the data... and the piece of shit SQL behind the number is sourcing from the "off-site scheduled meetings" database.

Why? because the dataset that does have the data for lunch breaks is labeled "labour board compliance 3", and the LLM thought that the metadata for the wrong dataset better matched the user's prompt.

This, given the very real world scenario of mislabeled data and LLMs' inability to understand what they are saying or accessing, and the average manager's complete data illiteracy, we might have to wrangle some actions to prepare for this type of tomfoolery.

I don't think that access restriction will save our souls here, decision-flumberers usually have the authority to overrule RACI/ACL restrictions anyway.

Making "data analysis" an AI-GMO-Free zone is laughable, that is simply not how the tech market works. Auto tools are coming to make our jobs harder and less productive, tech people!

I thought about detecting new automation-enhanced data access and visualization, and enacting awareness policies. But it would be of poor help, after a shithead middle manager gets hooked on a surreal indicator value it is nigh impossible to yank them out of it.

Gotta get this snowball rolling, we must have some idea of future AI housetraining best practices if we are to avoid a complete social-media style meltdown of data-driven processes.

Someone cares to pitch in?13 -

Random af project idea that will see me burned alive by the internet (because if I do it I intend to put it in dev.to which is full of "that offends me" people):

Generate a classifier that will scan text from different websites and categorize where the person might be from.

Example: "plz send bob and vagene" <--- we all know

"mami que ricas nalgas" <--- Mexican for the most part.

"there, their, they're and similar text" <--- my fellow Americans for the most part....

"cyka blyat" <--- 0.o we know

"pompous statement about the way Americans do shit" <--- European, meaning, from Yurop.

"angry as fuck rant/banter" <-- German

"lol whatever Trump is the best president ever" <--- some moron from the south of the U.S (south much like myself but I am not a Trump supporter nor a republican)

etc etc.

What makes this complex is that I would have to put together my own dataset in the highly likely chance of something like that not existing already for me to use.

Can you imagine the chaos?11 -

A few days ago PM started asking me once a day when we will have fixed an error he saw at customer site.

I always tell him that it is not a software error, just missing data. I try to explain the issue and that the root cause is the incomplete data given by the customer.

Then he says he will talk with the data import guys if they can fix something and I tell him that from my point of view the data import is fine, but the customer has to provide a full dataset and the "error" will vanish immediately.

He walks to the data import colleague anyway and gets told that everything is ok with the import.

Next day he appears at my desk to tell me that the import seems ok and asks me how we could fix the error and I tell him that it's not a software issue, try to explain it...

I wonder how long he will keep up on it.3 -

Today I had to present my final year project called segmentation and detection of glioma using deep learning.

It took 15 minutes for the evaluators to understand what an mri image dataset (BRaTs) dataset looks like (they are voxels and not pixels). My god, these peasants...

And I was there expecting them to understand down sampling convolutions and up scaling convolutions of U Net model 😂. Life is so convoluted right now!2 -

You know. I think people trashing chatgpt detracts from the fact that if it is actually functioning as stated it's still a goddamm miracle of science!

I mean are we all really that jaded ?

You can type a plain English request in and a COMPUTER PROGRAM sends you a Novel response that may reflect the bias of its dataset and the bias of people trying to keep "secrets" but otherwise it sends a response formulated from extensive data.

That's a breakthrough

Quick acting like an alpha that was frozen years ago apparently is the RTM!22 -

I recently tried to apply the same data analytics rationale that I use at work to my personal life. This is not a rant, it is more like an data storytelling of an actual use case I would like some input on.

I set a goal - gotta thin up a bit and calm down my ticker - and got a (almost unreasonably expensive) field expert consultant to yell at me about it for a couple hours.

I unravel the metrics - there is like a million weight-related KPIs and most say nothing at all. I have never seen an non-infrastructure measurable subject that could not be resumed to 2-5 performance metrics. I got overall weight, how well my nine-years-old business suit fits me, heart rate, and day-after relative muscle pain (it will make sense soon).

Then its data-pipeline time. I bought a cheap weight scale and smartwatch, and every morning I input the data in an app. Yes, I try to put on the suit every morning. It still does not fit.

After establishing a baseline, I tried to fit different approaches. Doing equipment-free exercises, going to the gym, dieting. None was actually feasible in the long run, but trying different approaches does highlight the impacts and the handling profile of each method.

Looking at the now-gathered data, one thing was obvious - can't do dieting because it is not doable to have a shopping list and meals for me and another for the family.

Gym is also off the table - too much overhead. I spend more time on the trip there and back than actually there.

And home exercise equipment is either super crappy or very expensive. But it is also the most reasonable approach.

So it is solutions time. I got a nice exercise bycicle (not a peloton), an yoga mat (the wife already had that one) and an exercise program that uses only those two resources. Not as efficient without dieting, not as measurable and broad as the gym, but it fits my workflow. Deploy to production!

A few months pass and the dataset grows. The signal is subtle but has support - it works! The handling, however, needs improvement, since I cannot often enough get with the exercise program. Some mornings are just after some hard days.

I start thinking about what else I can improve in the program, but it is already pretty lean and full of compromises.

So I pull an engineer and start thinking about the support systems and draft profile. What else could be draining my willpower and morning time?

Chores. Getting the kids ready for school, firing up the moka pot, setting the off-brand roomba, folding the overnight-dried clothes, cooking breakfast, doing the dishes, cleaning the toilets. All part of my morning routine. It might benefit from some automation.

Last month I got that machine our elders call "wasteful" and "useless crap lazy entitled Americans invented because they feel oh-so-insulted for simply doing something by hand like everyone always did" - a "dish-washer".

Heh, I remember how hard was to convince my mother-in-law that an remote-controled electric garage door would not make she look like an spoiled brat.

Still to early to call, but I think that the dishwasher just saved me about 25 mins every morning. It might be enough to save willpower for me to do more exercise.

This is all so reflective of all data analytics cases really are out in the wild - the analytics phase seems so small compared to the gathering and practical problem-solving all around. And yet d.a. is what tells you that you are doing the wrong thing all along. Or on what you should work next.7 -

Probably had my worst half-week ever this week.

Customer's CRM system, the read and edit masks just...stopped existing on last week friday. CRM fell back on some default masks for the dataset. No way to create new masks directly without putting the whole system upside down.

We couldn't do anything anyway because they reported the issue literally as we all were about to leave for weekend and our boss was like "Ah nah, well do it next week."

Our brains were already fried anyway...

I mail the reporter that we've registered their issue, will investigate and report back ASAP once we've got news.

Monday rolls around, I'm whacking my head against their system trying to figure the fuck out, what went wrong and how to solve it, I come up empty; Not that terrible since the masks only stopped existing in the webclient version of the system and they can still use the windows client, so they can still work.

Tuesday rolls around, I'm at an on site training for an ERP system with my boss at a remote company. Get an email in midst of the training, I was doing protocol.

Guy from the afflicted company goes and tells me that the issue has somehow spread to his colleague and him...IN THE WINDOWS CLIENT.

I'm fucking flabbergasted, so to speak, since the masks for the windows client and the web client are totally isolated from one another.

After we're back at our company, I investigate, less efficiently this time because my brain got fried at the training. I come up empty again.

NOW TODAY: Discuss further proceedings with my boss, he's not pissed at me or anything, just to say, but we're both worried, obviously.

Then at 10:20, a guy from the afflicted company mails me in an annoyed tone that the masks are still broken.

11:00, we figure out a workaround so the windows client users can at least work again, albeit limited.

11:10, I mail the guy, telling him that although we're still not able to fully work everything out and are still investigating, we've made a workaround so they can at least work again.

11:20, the guy mails me in a pissed tone around the lines of "This is very very important and must be fixed ASAP or else we'll not be able to work at all [...]"

And I think like "Dude I literally just told you like 8 minutes ago that there's are workaround so you'll be able to at least work again..."

Forward the mail to boss, we meet up quickly to discuss how in God's name we can deescalate this mfer.

11:31, the guy mails me again, all apologetically this time "Stop! All is good, I just now fully read you mail, thanks for implementing the workaround, nothing will come to a standstill [...]"

BRUH CAN YOU NOT FUCKING READ BEFORE ESCALATING SHIT

Fuck customers. Dumb fucking cretins unable to fucking read.

The issue is still unresolved. Support of the CRM software lets us sit on our collective asses and wait.

There is no such thing as stable software, it's a myth.

Every corporate software is like an ever-decaying semi-corpse of a brain dead patient slowly getting worse and worse but not fucking dying.

Rant over. -

python machine learning tutorials:

- import preprocessed dataset in perfect format specially crafted to match the model instead of reading from file like an actual real life would work

- use images data for recurrent neural network and see no problem

- use Conv1D for 2d input data like images

- use two letter variable names that only tutorial creator knows what they mean.

- do 10 data transformation in 1 line with no explanation of what is going on

- just enter these magic words

- okey guys thanks for watching make sure to hit that subscribe button

ehh, the machine learning ecosystem is burning pile of shit let me give you some examples:

- thanks to years of object oriented programming research and most wonderful abstractions we have "loss.backward()" which have no apparent connection to model but it affects the model, good to know

- cannot install the python packages because python must be >= 3.9 and at the same time < 3.9

- runtime error with bullshit cryptic message

- python having no data types but pytorch forces you to specify float32

- lets throw away the module name of a function with these simple tricks:

"import torch.nn.functional as F"

"import torch_geometric.transforms as T"

- tensor.detach().cpu().numpy() ???

- class NeuralNetwork(torch.nn.Module):

def __init__(self):

super(NeuralNetwork, self).__init__() ????

- lets call a function that switches on the tracking of math operations on tensors "model.train()" instead of something more indicative of the function actual effect like "model.set_mode_to_train()"

- what the fuck is ".iloc" ?

- solving environment -/- brings back memories when you could make a breakfast while the computer was turning on

- hey lets choose the slowest, most sloppy and inconsistent language ever created for high performance computing task called "data sCieNcE". but.. but. you can use numpy! I DONT GIVE A SHIT about numpy why don't you motherfuckers create a language that is inherently performant instead of calling some convoluted c++ library that requires 10s of dependencies? Why don't you create a package management system that works without me having to try random bullshit for 3 hours???

- lets set as industry standard a jupyter notebook which is not git compatible and have either 2 second latency of tab completion, no tab completion, no documentation on hover or useless documentation on hover, no way to easily redo the changes, no autosave, no error highlighting and possibility to use variable defined in a cell below in the cell above it

- lets use inconsistent variable names like "read_csv" and "isfile"

- lets pass a boolean variable as a string "true"

- lets contribute to tech enabled authoritarianism and create a face recognition and object detection models that china uses to destroy uyghur minority

- lets create a license plate computer vision system that will help government surveillance everyone, guys what a great idea

I don't want to deal with this bullshit language, bullshit ecosystem and bullshit unethical tech anymore.11 -

Started off with a prototype with about 20 backend data points per hour and 10 concurrent front-end users. Total dataset in the 10,000s.

Now about 5 backend data points per second and 500 concurrent front-end users. Total dataset in excess of 20,000,000. -

When your teacher tells you to run a model that uses a 1gb dataset on a computer that has 256 mb ram.

Ah, what sin have I done, my lord? :/ -

Well... I can think of several bugs that I found on a previous project, but one of the worst (if not the worst, because the damage scope) it's one bug that only appears for a couple of days at the end of every month.

What happens is the following: this bug occurs in a submodule designed (heh) to control the monthly production according the client requirements (client says "I want 1000 thoot picks", that submodule calculates the daily production requirements in order to full fill the order).

Ideally, that programming need to be done once a week (for the current month), because the quantities are updated by client on the same schedule, and one of the edge cases is that when the current date is >= 16th of the month, the user can start programming the production of the following month.

So, according to this specific case, there's an unidentified, elusive, and nasty bug that only shows up on the two last days of every month, when it doesn't allow to modify/create anything for the following month. I mean, normally, whenever you try to edit/create new data, the application shows either an estimated of the quantities to produce, or the previous saved data. But on those specific days it doesn't show any information at all, disregarding of there's something saved or not.

The worst thing is that such process involves both a very overcomplicated stored procedure, and an overcomplicated functionality on the client side (did I mentioned that it dynamically generates a pseudo-spreadsheet with the procedure dataset? Cell by cell), that absolutely no one really fully understands, and the dude that made those artifacts is no longer available (and by now, I'm not so sure that he even remember what he done there).

One of the worst thing is that at this point, it's easier to handle with that error rather to redesign all of that (not because technical limitations, but for bureaucratic and management issues).

The another worst thing (the most important none) is that this specific bug can create a HUGE mess as it prevents the programming of the production to be done the next day (you know, people tends to procrastinate and start doing things at the very end of the day/week/month)... And considering that the company could lose a huge amount of money by every minute without production, you can guess the damage scope of this single bug.

Anyway, this bug has existed since, I don't know, 2015 (Q4?) and we have tried so many things trying to solve it, but that spaghettis refuse to be understood (specially the stored procedure, as it has dynamically generated queries). During my tenure (that ended last year) I spent a good amount of time (considering what I mentioned on the last rant, about the toxic environment) trying to solve that, just giving up after the first couple of weeks.

Anyway... I'm guessing that this particular bug will survive another 4-ish years, or even outlive the current full development team... But, who knows ¯\_(ツ)_/¯ ? -

Had some fun with textgenrnn (Tensorflow text generating thingy on Github). So I created a tiny dataset with some example c# code and let it train for a while.

Sorry people, but I ruined our jobs. We don't need to write code anymore.

Update: image was unreadable due to compression. Let me find an alternative. 5

5 -

-----------Jr Dev Fucked by Sr Dev RANT------

Huge data set (300X) that looks like this :

( Primary_key, group_id,100more columns) .

Dataset to be split in records of X sized files such that all primary_key(s) of same group_id has to go in same file.

Sde2 with MS from Australia, 12 years of 'experience' generates an 'algo'. 70% Test case FAILED.

I write a bin packing algo with 100% test case pass, raises pull request to MASTER in < 1 day. Same sde2 does not approve, blocking same day release.

|-_-| What the fuck |-_-| Incompetent people getting 2x my salary with <.5x my work2 -

So I decided to run mozilla deep speech against some of my local language dataset using transfer learning from existing english model.

I adjusted alphabet and begin the learning.

I have pc with gtx1080 laying around so I utilized that but I recommend to use at least newest rtx 3080 to not waste time ( you can read about how much time it took below ).

Waited for 3 days and error goes to about ~30 so I switched the dataset and error went to about ~1 after a week.

Yeah I waited whole got damn week cause I don’t use this computer daily.

So I picked some audio from youtube to translate speech to text and it works a little. It’s not a masterpiece and I didn’t tested it extensively also didn’t fine tuned it but it works as I expected. It recognizes some words perfectly, other recognize partially, other don’t recognize.

I stopped test at this point as I don’t have any business use or plans for this but probably I’m one of the couple of companies / people right now who have my native language speech to text machine learning model.

I was doing transfer learning for the first time, also first time training from audio and waiting for results for such long time. I can say I’m now convinced that ML is something big.

To sum up, probably with right amount of money and time - about 1-3 months you can make decent speech to text software at home that will work good with your accent and native language. -

"Our Data Service comes PRE-P0WN'D"

Those SHIT-FOR-BRAINS data service providers GLOAT that their data can be natively integrated into most BI platforms, no code required.

How? Because they will EXPOSE THE ENTIRE FUCKING THING ON THE INTERNET.

LITERALLY.

UNAUTHENTICATED URL WITH THE ENTIRE DATASET.

STATIC. WON'T EVER FUCKING CHANGE.

NO VPN REQUIRED. NO AUTHENTICATION HEADERS. NO IN-TRANSIT ENCRYPTION.

"It is safe! No one will know the secret token that is a parameter in the url"

BLOODY BYTE BUTTS, BATMAN! IT IS A FUCKING UNAUTHENTICATED URL THAT DOES NOT REQUIRES RENEWAL NOR A VPN, IT WILL LEAK EVENTUALLY!

That is the single fucking worst SELF-P0WN I have ever seen.

Now I know why there are fucking toddlers "hacking" large scale databases all over the globe.

Because there are plenty of data service providers that are FUCKING N00BS.4 -

AI is dumb and is not going to rob your work as a programmer.

Expanding on this:

https://devrant.com/rants/12459112/...

Don't know about the others, but programming and IT is mostly safe unless you're a secretary answering to mails pressing 1 keystroke at time with index finger.

Bullshit.

I’ve tried EVERYTHING. As a developer, I know exactly what instructions to give and how to explain them. I tried this stuff for years.

I abandoned the idea to give Ai a full blown workspace to vscode with copilot, even with experimental LLMS (Claude 3.5, Gpt4o, o1, as per my linked post, copilot is dumb as a rock), because it fucks up every fucking time so bad.

I tried getting an AI to build a simple project, something that has plenty of samples of code around, something that I was sure it could have been in its training dataset. A copy of Arkanoid, in HTML/CSS/JS, even reformulating the prompts over and over with different LLMs that claim to have reasoning abilities. I provided detailed feedback step by step, pointed out the errors, improvements, and problems in-depth to: o3, o1, 4o, deepseekv3+R1, and Qwen 2.5 Ultra. I even activated web search and suggested scanning GitHub repos when necessary. I gave examples of code after several failed attempts.

And guess what? Nothing. A total mess. Half the time, the game didn’t even run, and when it did, everything was wrong—bricks overlapping, barely anything working the way I asked. Even though the internet is full of similar code, and I gave it part of the solution myself when it couldn’t figure it out.

Don’t worry, AI isn’t going to steal your job—it’s just a broken toy. Fine for repetitive, simple tasks, but nothing more.

It's years that they make hyped up bold statements that the next model will revolutionize everything and it's years that I get delusional results.

It's just good at replacing some junior bovine work like mapping some classes or writing some loops with not too much variables and logics involved.

Sigh. My error was getting too comfortable using it and trusting/hoping that this ramp up in AI developement would have brought an easier life to dev.

Silly mistake.2 -

Downloaded 130gb of movie subtitles zip files.

If I find some power deep in my heart I would normalize data and launch training on generative transformer to see if it produces decent dialogues.

It will probably stop on planning phase because I’m diving deeper towards depression.5 -

Late night kaggle session, and I'm enjoying how cute and clean this dataset is!

I'm jealous if data scientists always get to work with such neat sets! Dude! I got .95 acc without any effort! This is so... Weird. 🤔4 -

I used to be a big security guy, not allowing stuff like most of the social media, not bringing my phone anywhere, carrying a RPi tablet for privacy reasons. Very Stallman stuff.

Recently I noticed that I don't care so much.. I see these things as opportunities, for instance Microsoft products could be benefitial for job opportunities, I have some workout sessions on my phone.

I could restrict myself... but is it worth it just to decline some capitalist/politician's row in a dataset for analysis?

But then again I feel as a society I think we should either do this or request this data to be distributed to us as well.

Should you be playing a game of cards, when the enemy can see your hand? What do u think?4 -

"""Itty bitty frustration"""

# wannabe mode on

import sklearn

iris_dataset = sklearn.datasets.load_iris() -

This is why code reviews are important.

Instead of loading a relevant dataset from the database once, the developer was querying the database for every field, every time the method interacted with it.

What should have been one call for 200k records ended up as 50+ calls for 200k records for every one of 300+ users.

The whole production application server was locked.2 -

Started doing deep learning.

Me: I guess the training will take 3 days to get about 60% accuracy

Electricity: I dont think so! *Power cut every day lately

My dataset: I dont think so! *Running training only achieve 30% of learning accuracy and 19% of validation accuracy

Project submission: next week

😑😑😑2 -

Today I came across a very strange thing or a coincidence(maybe).

I was working on my predictive analytics project and I had registered on Kaggle(repository for datasets) long back and was searching on how to scrape websites, as I couldn't find any relevant dataset. So, while I was searching for ways to scrape a website, suddenly after visiting a few websites, I get notifications of a new email. And it was from Kaggle with the subject line

"How to Scrape a Tidy Dataset for Analysis"

Now I don't how to feel about it. Mixed feelings! It is either a wild coincidence, or Kaggle is tracking all the pages visited by the user. The latter makes more sense. By the way, Kaggle wasn't open in any of the tabs on my browser.1 -

So when I am pissed at everything in life, I take out my frustration at my laptop.

I give it to clone 2 400MBs repository.

I give it to load 2 400 MBs dataset to load and train a resNet and say "yeah Multiprocess bitch"1 -

I’d like to have a DevRant dataset so I can make some great visualizations, text analysis, etc. of the things we hate the most. This is top priority, thanks in advance.4

-

Looks like unzipping on disk drive where you keep your dataset can crash machine learning training session.

Thanks nvidia for your great drivers.

I like your solutions. -

What is this format? Spaces are separated by + sign and columns are separated by & sign and it’s value is followed by = sign. Can I directly convert it to Spark dataframe?

8

8 -

I’m expanding my storage with 8x 20TB hard drives. With raid5 on it I would get approximately 126TB of storage space.

This would allow me to download full common crawl dataset and play with it locally.11 -

I've noticed loading half of a small dataset twice is faster than loading the full small dataset once from files around half a terabyte.

Any tips?11 -

One of my minions (erm, I mean, "a valued junior member of my team") asked to be assigned to tasks more "data science related".

Regardless of the very last-decade sounding request, I tried to explain to the Jr that there is more to "data science" than distilling custom llms and downloading pytorch models. There are several entire fields of study. And those are all sciences. In this context, science equals math.

But they said they were not scared of math.

I've seen them using their phones to calculate freaking tips. If you can't do 15% of a lunch bill in your head, hypothesis tests might be a bit more than challenging.

But, ok then. Here we go.

So I had them do some semi-supervisioned clustering. On a database as raw as dirt, but with barely 5Gb, few dimensions and regarding subjects with easily available experts.

Even better, we had hundreds of manually classified training and test cases.

The Jr came back a month later with some convoluted mess of convoluted networks; just the serialized weights of the poor thing were about as large as the database itself.

And when I tried it on some other manually classified test datasets... Freaking 41% error rate, for something that should be a slam dunk. Little better than a coin toss.

One month of their time wasted on an overfitted unusable mess.

I had to re-assign the task to someone else, more experienced, last friday. It was monday when they came up with an iterative KNN approach giving error rates for several values of K... some of them with less than 15% error on the test dataset.

WTF are schools teaching and calling "data science" nowadays?!?!?

I reeeeally need to watch those juniors more closely. Maybe ask for middle-sprint demonstrations. But those are soooo boring and waste so much time from people who know what they are doing...

Does anyone have a better idea to prevent this type of off-track deviation? Without being a total bore, that is.

And... should I start asking people "gotcha" data analysis questions before giving them free reign on this type of tasks? Or is it an asshole boss move? I would hate someone giving me a pop quizz before letting me work... But I got no other ideas.1 -

Would it make sense in order to regulate AI to force companies to publish the dataset they used to train a specific model?4

-

!!rant

Just spent a week creating a distributed api architecture which I found out won't work due to a singular issue which can't be solved - not unless I hack stuff to a degree where I might as well write my own frameworks.

I've been aiming the user application's requests towards my wsgi, which based on a custom header will proxy it towards the correct api. Each customer base has their own api and dataset, but they all visit the same address.

I've handled CORS manually, just picking up when there's an options request, asserting the origin, then returning the correct headers. Cool everyone's happy. Turns out, socket.io includes session id and handshake info as part of their options preflight, which I can't pair with my api header (or cookie, for that matter) which means my wsgi doesn't know where to send it. You get a 400! You get a 400! You get a 401! </oprah>

So my option is to either roll my own sockets engine or just assign each api to a subdomain or give it some url prefix or something. Subdomains are probably pretty clean and tidy, but that doesn't change having to rewrite a bunch of stuff and the hours I spent staring at empty headers in options preflights.

At least this discussion saved me some time in trying to make it work. One of my bad habits is getting in those grooves of "but surely... what the hell, surely there's a way. There has to be"

https://github.com/socketio/... -

Have been working all day long on a dataset.. Worked like charm in the end.. Went for dinner, now it's outputs have changed all together and I haven't touch the code, I think Skynet is here.

-

How hard can it be to let sql just multiply some values and sum the results, right? As it turns out, damn hard!

I hear you thinking, surely you can just do select SUM(price*amount) AS total right? Nope! I mean, yes you can, but it fucks up. Oddly. It always ends up giving me wrong results. Always. Wtf sql? And it's not like I'm running a massive dataset or anything, it's like 100 records at most?27 -

Just because I didn't know the direction to work on doesn't mean I didn't do shit

Also, aren't you the professor so you please tell me what to do

And no you don't need to focus on the sample dataset I'm working on. Yes its name is "Breast Cancer" SO WHAT!!!2 -

Deep learning. Working on an image classification problem for a big company. The "boss" ask me to teach an AI to classify images into a few classes.

"Mmm, ok...I just need to create the dataset and then build the AI...so.."

Where is the problem??

The problem is that the classes are so perfectly similar that no one knows how to help me create the dataset and I have to do it alone.

That's how you spend your weeks in a loop where you look at thousands of images over and over just to have something decent start your work.

After that I felt like...

"I'm the hero they deserves, but not the one they need right now" - Cit2 -

Today i received a hard drive contraining one million malicious non-PE files for a ML baed project.

It's going to be a fun week.14 -

So a couple of months ago I had some stability issues which seems to have caused Baloo go crazy and create an 1.7 exabyte index file. It was apparently mainly empty as zfs compressed it down to 535MB

Today I spent some time trying to reproduce the "issue" and turns out that wasn't that hard.

So this little program running on FreeBSD with a compressed (lz4) zfs dataset creates an 1.9 Exabyte large file, nicely compressed down to 45KB :)

#include <stdio.h>

#include <fcntl.h>

#include <unistd.h>

#include <sys/limits.h>

int main(int argc, char** argv) {

int fd = open("bigfile.lge", O_RDWR|O_CREAT, 0644);

for (int i = 0 ; i < 1000000000; i++) {

lseek(fd, INT_MAX, SEEK_CUR);

}

write(fd, " ",1);

close(fd);

}3 -

There are sites that list different datasets, this makes them dataset of datasets.

As there are multiple of these sites, and they are listed on Google, that made Google dataset of dataset datasets. -

I just ended up making a program that shows news depending on the user's current emotions.

I implemented OpenCV and CK+ dataset to make this possible. Also, Feedparser for getting news. Now looking into PyQt for making all of this into a GUI app.

So happy that all of this was completed within a week and without much hitches. -

Any idea to get around the cluster storage limitation?

I have to train a model on a large dataset, but the limit I have is about 39GB. There is space on my local disk but I don't know if I can store the data on my computer and have the model train on the cluster resources.1 -

based on my previous rant about dataset I downloaded

https://devrant.com/rants/9870922/...

I filtered data from single language and removed duplicates.

The first problem I spotted are advertisements and kudos at movie start and at end in the subtitles.

The second is that some text files with subtitles don’t have extensions.

However I managed to extract text files with subtitles and it turned out there is only 2.8gb of data in my native language.

I postponed model training for now as it will be long, painful process and will try to get some nice results faster by leveraging different approach.

I figured out I can try to load this data to vector database and see if I can query it with text fragment. 2.8gb will easily fit into ram so queries should be fast.

Output I want is time of this text fragment, movie name and couple lines before and after.

It will be faster and simpler test to find out if dataset is ok.

Will try to make it this week as I don’t have much todo besides sending CVs and talking with people.2 -

Reading over a note I left myself "Numbers returned will be slightly off due to new implementation and the Fuck that we use a different dataset"

Oh boy. This was there for 2 weeks, Im so lucky no one saw this. -

I finished my graduation project

We developed app for skin disease classification, we used Flutter & Python for training the model on a dataset called SD-198

We tried to use Transfer Learning to hit the highest accuracy but actually IT DIDN'T WORK SURPRISINGLY!!

After that's we tried build our CNN model with a few of layers, we scored %24

We couldn't improve it more, we are proud of ourself but we want to improve it moreee

Any suggestions?

Thank you for reading.2 -

I'm looking for a image segmentation and classification web based tool to create ground truth for my dataset in next Deep Learning project, what tool do You use?1

-

So I wrote a while ago .ndjson shapes dataset player.

https://quickdraw.withgoogle.com/ this site contain peoples shitdrawings of particular object.

Grab dataset from here in .ndjson format logging into google account

https://console.cloud.google.com/st...

go to here

https://jsfiddle.net/ywmp6bju/

browse the file and press play when it's enabled.

Attached picture is a frog. 2

2 -

When I browsed for a Food Recipes (Especially Indian Food) Dataset, I could not find one (that I could use) online. So, I decided to create one.

The dataset can be found here: https://lnkd.in/djdh9nX

It contains following fields (self-explanatory) - ['RecipeName', 'TranslatedRecipeName', 'Ingredients', 'TranslatedIngredients', 'Prep', 'Cook', 'Total', 'Servings', 'Cuisine', 'Course', 'Diet', 'Instructions', 'TranslatedInstructions']. The datset contains a csv and a xls file. Sometimes, the content in Hindi is not visible in the csv format.

You might be wondering what the columns with the prefix 'Translated' are. So, a lot of entries in the dataset were in Hindi language. To take care of such entries and translating them to English for consistency, I went ahead and used 'googletrans'. It is a python library that implements Google Translate API underneath.

The code for the crawler, cleaning and transformation is on Github (Repo:https://lnkd.in/dYp3sBc) (@kanishk307).

The dataset has been created using Archana's Kitchen Website (https://lnkd.in/d_bCPWV). It is a great website and hosts a ton of useful content. You should definitely consider viewing it if you are interested.

#python #dataAnalytics #Crawler #Scraper #dataCleaning #dataTransformation -

Power BI: wonderful tool, pretty graphics, and can do a lot of powerful stuff.

But it’s also quite frustrating when you want to do advanced things, as it’s such a closed platform.

* No way to run powerquery scripts in a command line

* Unit testing is a major pain, and doesn’t really test all the data munging capabilities

* The various layers (offline/online, visualisation, DAX, Powerquery, Dataset, Dataflow) are a bit too seamless: locating where an issue is happening when debugging can be pain, especially as filtering works differently in Query Editing mode than Query Visualisation mode.

And my number 1 pet peeve:

* No version control

It’s seriously disconcerting to go back to a no version control system, especially as you need to modify “live code” sometimes in order to debug a visual.

At best, I’ve been looking into extracting the code from the file, and then checking that into git, but it’s still a one-way street that means a lot of copying and pasting back into the program in order to roll back, and makes forking quite difficult.

It’s rewarding to work with the system, but these frustrations can really get to me sometimes2 -

can you please help me with this.

I'm creating dataset of [Leet words][1].

This code is for generating [Leet words][1]. it is working fine with less number of strings but I've nearly 3,800+ strings and my pc is not capable to do so. I've Tried to run this on cloud(30gb RAM) not worked for me. but I think possible solution is to convert this code into numpy but I don't know how. if you know any other efficient way to do this it will be helpful.

Thanks!

from itertools import product

import pandas as pd

REPLACE = {'a': '@', 'i': '*', 'o': '*', 'u': '*', 'v': '*',

'l': '1', 'e': '*', 's': '$', 't': '7'}

def Leet2Combos(word):

possibles = []

for l in word.lower():

ll = REPLACE.get(l, l)

possibles.append( (l,) if ll == l else (l, ll) )

return [ ''.join(t) for t in product(*possibles) ]

s="""india

love

USA"""

words = s.split('\n')

print(words)

lst=[]

# ['india', 'love', 'USA']

for word in words:

lst.append(Leet2Combos(word))

k = pd.DataFrame(lst)

k.head()3 -

Anyone ever tried using user-to-user collaborative filtering to classify the mnist digits dataset?

This is about as far as I got:

https://hastebin.com/obinoyutuw.py

It's literal copy-and-paste frankencode because this is only the second time I've ever done something like this, so pardon the hatchjob.1 -

Is a picture worth a thousand words?

Super fun data driven analysis based on Google's Conceptual Captions Dataset.

https://kanishk307.medium.com/is-a-...

#python #dataanalysis #exploratorydataanalysis #statistics #bigdata3 -

Part 3

https://devrant.com/rants/9881158/...

I dropped subtitles and started extracting audio from movie, after that I use whisper to convert speech to text.

I parse srt from whisper, adjust timestamps to get >= arbitrary amount of voice seconds. I put text to vector database with timestamps and movie file name.

I query database by ex. “I don’t know” and extract first n results, after that I walk trough movies and extract parts with found text.

I normalize and merge parts into one movie.

Results are satisfying so now I decided to try to find a common dialogue that I can watch by combining multiple persons speaking from multiple movies.

Might also try to extract person from one movie and put it to other movie.2 -

It feels good to be a gangsta. Convinced my coworkers to use Dapper.net (used by StackOverflow) as ORM of the choice instead of old internal ORM which heavily utilizes DataSet.

-

Why do clients expect that they would get a high quality machine learning model without a properly cleaned dataset? I usually get the response, ‘just scrape data and train it. It shouldn’t take long’3

-

Grrrrrrr!!!!!!! How you frustrate me SQL SERVER REPORTING SERVICES! Designing a report changed query on dataset to include new field, fields started displaying all sorts of random stuff, booleans in text fields etc. Just spent 20 mins "checking" by rebuilding the first few bits of report and first dataset it's something weird with SSRS. Bye bye Sunday evening!!!

-

I have a project in need of machine learning. It takes an image and turns it into text. How do I begin acquiring the data needed to feed the machine? Should I just start taking pictures of this particular item on many different devices and get as many friends to do the same? How do I begin gathering my data is the question?4

-

What ai model would I use to propagate a series of survival factors and decision making scenarios that if the optimal order of activities are pursued would lead survival and even prosperity and the worst set of possibilities would lead to death where the environment and sensations being experienced would always lead to specific pitfalls but wherein some of these pathways would lead to later reward and where the obstacles like predators could be overcome by simple combinations of objects which would be a crude mimicry of the invention ?

Neural nets don’t see to fit this given my understanding but there is a training aspect I’m looking for where the creature being simulated dies, develops fear responses, feels pain, avoids pain, remembers things, develops behaviors related to characteristics in creatures, has unborn motivations that weight decisions, and learns to prioritize.

I had created a massive dataset of objects including memories and aspects of semantic memory and episodic memory colored by emotion inspired by past conflict and reward with the idea that a running average would affect behavior and decide on various behaviors all the way down to perceptual differences

Any thoughts again ? Or will wolf try to steal these too ?29 -

That moment when you waste two hours of your work life trying to find a dataset in a sea of crap to answer your bosse's question...

-

#Suphle Rant 7: transphporm failure

In this issue, I'll be sharing observations about 3 topics.

First and most significant is that the brilliant SSR templating library I've eyed for so many years, even integrated as Suphle's presentation layer adapter, is virtually not functional. It only works for the trivial use case of outputting the value of a property in the dataset. For instance, when validation fails, preventing execution from reaching the controller, parsing fails without signifying what ordinance was being violated. I trim the stylesheet and it only works when outputting one of the values added by the validation handler. Meaning the missing keys it can't find from controller result is the culprit.

Even when I trimmed everything else for it to pass, the closing `</li>` tag seems to have been abducted.

I mail project owner explaining what I need his library for, no response. Chat one of the maintainers on Twitter, nothing. Since they have no forum, I find their Gitter chatroom, tag them and post my questions. Nothing. The only semblance of a documentation they have is the Github wiki. So, support is practically dead. Project last commit: 2020. It's disappointing that this is how my journey with them ends. There isn't even an alternative that shares the same philosophy. It's so sad to see how everybody is comfortable with PHP templating syntax and back end logic entagled within their markup.

Among all other templating libraries, Blade (which influenced my strong distaste for interspersing markup and PHP), seems to be the most popular. First admission: We're headed back to the Blade trenches, sadly.

2nd Topic: While writing tests yesterday, I had this weird feeling about something being off. I guess that's what code smell is. I was uncomfortable with the excessive amount of mocking wrappers I had to layer upon SUT before I can observe whether the HTML adapter receives expected markup file, when I can simply put a `var_dump` there. There's a black-box test for verifying the output but since the Transphporm headaches were causing it to fail, I tried going white-box. The mocking fixture was such a monstrosity, I imagined Sebastian Bergmann's ghost looking down in abhorrence over how much this Degenerate is perverting and butchering his creation.

I ultimately deleted the test travesty but it gave rise to the question of how properly designed system really is. Or, are certain things beyond testing white box? Are there still gaps in the testing knowledge of a supposed testing connoisseur? 2nd admission.

Lastly, randomly wanted to tweet an idea at Tomas Votruba. Visited his profile, only to see this https://twitter.com/PovilasKorop/.... Apparently, Laravel have implemented yet another feature previously only existing in Suphle (or at the libraries Arkitekt and Deptrac). I laughed mirthlessly as I watch them gain feature-parity under my nose, when Suphle is yet to be launched. I refuse to believe they're actually stalking Suphle 3

3 -

Not enough disk space error..just when I am done writing code and unzipping the bigger dataset.

Angry me.

Hours later.. Now mounted 200Gigs to machine.

Feels like a boss.! -

It was my first time doing an NLP task / implementing a RNN and I was using the torchtext library to load and do sentiment analysis on the IMDB dataset. I was able to use collate_fn and batch_sampler and create a DataLoader but it gets exhausted after a single epoch. I’m not sure if this is the expected behavior, if it is then do I need to initialize a new DataLoader for every epoch? If not is something wrong with my implementation, please provide me the correct way to implement the same.

PS. I was following the official changelog() of torchtext from github

You can find my implementation here

changelog - https://github.com/pytorch/text/...

My implementation - https://colab.research.google.com/d... -

I am applying CNN-LSTM model to predict the level of interest of a person in a particular image or video. But, I don't have any dataset on EEG sensors

nor do I have any facility to gather such data. Can anyone help me in any way?1 -

Just Started learning unsupervised learning algorithms, and i write this: Unsupervised Learning is an AI procedure, where you don’t have to set the standard. Preferably, you have to allow the model to take a chance at its own to see data.

Unsupervised Learning calculations allow you to make increasingly complex planning projects contrasted with managed learning. Albeit, Unsupervised Learning can be progressively whimsical contrasted and other specific learning plans.

Unsupervised machine learning algorithm induces patterns from a dataset without relating to known or checked results. Not at all like supervised machine learning, Unsupervised Machine Learning approaches can’t be legitimately used to loss or an order issue since you have no proof of what the conditions for the yield data may be, making it difficult for you to prepare the estimate how you usually would. Unsupervised Learning can preferably be used to get the essential structure of the data. -

Are there some good tools to analyze a big dataset of json files? I mean i could normalize the dataset into a SQL database but are there some secret weapons to make life simpler?9

-

Here's the dataset, model training, and output phases for a generative adversarial network I wrote that basically learned about...Me, and subsequently created a custom social media avatar.

I wrote the damn thing and it still couldn't figure it out. I'm too complex. My therapist was right. 4

4