
Search - "big data"


buzzword translations:

"cloud" -> someone's computer

"big data" -> lots of somewhat irrelevant data

"ai" -> if if if if if if if if if if if if if else

"algorithm" -> something that works but you don't know why

"secure" -> https://

"cyber security" -> kali linux + black hoodie

"innovation" -> adding something completely irrelevant such as making a poop emoji talk

"blockchain" -> we make lots of backups

"privacy" -> we store your data, we just don't tell you about it

Buzzword dictionary to deal with annoying clients:

AI—regression

Big data—data

Blockchain—database

Algorithm—automated decision-making

Cloud—Internet

Crypto—cryptocurrency

Dark web—Onion service

Data science—statistics done by nonstatisticians

Disruption—competition

Viral—popular

IoT—malware-ready device

I miss old internet.

- without politics

- without robots

- without money

- without big portals

- without commercials

- without advertising

- without data centers

- without ipv6

but with great usenet and community

Shit fuck, I’m old.

I'd like to extend my heartfelt fuck-you to the following persons:

- The recruiter who told me that at my age I wouldn't find a job anymore: FUCK YOU. I'll send you my 55th-birthday cake candles, and you can put all of them in your ass, lit.

- The Project Manager who, after 5 rounds of interviews and technical tests, told me I didn't have enough experience for his project: be fucked in an Agile way by all members of your team, standing up, every morning for 15 minutes, and every 2 weeks by all stakeholders.

- The unemployment officer who advised me to take low-level jobs and cut my expenses and salary expectations: you can cut your cock and suck it, so you'll stop telling people bullshit.

- The moron who gave me a monster technical assignment on Big Data, which I delivered, and didn't give me any feedback: shove all your BIG DATA in your ass and open it to external integrations.

- The architect who told me I should broaden my horizons because I didn't like React: put a reactive mix in your ass and close it, so your shit will explode in your mouth.

- The countless recruiters who used my CV to grow their DB by offering fake jobs: print your whole DB on paper and stuff your ass with it, and you'll see how big you get.

To all of them: really, really, fuck you.

*Interview*

Interviewer: We have an opening. Are you interested in working with us?

Me: What is it that I'll be doing?

I: What technologies and languages do you know?

Me: I know Scala, Java, Spark, Angular, Typescript, blah blah. What is your tech stack?

I: Any experience working on frontend?

Me: Yes. But what do you use for it?

I: Can you work with databases?

Me: I can, SQL-based ones. What are yours?

I: Can you do big data processing?

Me: I know Spark, if that's what you are asking for. What is it that you actually do?

I: Any experience in cloud development?

Me: Yes. AWS? Azure? GCP?

I: Do you know CI CD?

Me: Excuse me... I've been asking a lot of questions, but you're not paying attention to what I'm asking. Can you please answer the questions I asked?

I: Yes. Go ahead.

Me: What will be my position?

I: A full stack developer.

Me: What technologies do you use in your project?

I: We use all the latest tech.

Me: Like?

I: All latest tech.

Me: You mentioned big data processing?

I: Yes. Processing data from DB and generating reports.

Me: What do you use for that?

I: Java.

Me: Are you planning to rebuild it using Spark or something and deploy in the cloud?

I: No we're not rebuilding it. Just some additions to the existing.

Me: Then what's with cloud? Why did you ask for that?

I: Just to know if you're familiar.

Me: So I'll be working with Java. Okay. What do you use for UI?

I: Flash

Me: 🙄

I sat for a couple of minutes contemplating life.

I: Are you willing to join?

Me: No. Not at all. Thank you for the offer.

This can annoy the hell out of me. When people ask if they can have my Facebook or WhatsApp or something, I'm like 'sorry, I don't have that', and they ask why, and I explain it's for privacy reasons, and they go like 'oh, you're a little paranoid, are ya?'.

There's a motherfucking big difference between wanting control over your data as much as possible and being paranoid.

Fucking hell.

Me: You should not open that log file in Excel, it's almost 700 MB.

Client: It's okay, my computer has 4 GB of RAM.

Me: *looking at the client's computer crashing*

Client: The file is broken!

Me: No, you just need to use a more memory-efficient tool, like R, SAS, Python, C#, or, like, anything else!

I guess that's what you get for bringing up security issues on someone's website.

Not like I could read, edit or delete customer or company data...

I mean, what the shit... all I did was try to help, and he gives me THIS? I even offered to help... Maybe he got angry because I kind of threw it in his face that the whole fucking system is shit and that you can create admin accounts with ease. No, it's not a framework or anything, just one big PHP file that uses GET parameters to decide which function to run. One fucking file that everything goes into.

If Big O notations were emojis: this chart shows common big-Os, with emoji showing how they'll make you feel as your data scales. Source: blog.honeybadger.io
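The chart image itself did not survive. As a rough stand-in, here is a small Python sketch that tabulates approximate step counts for common complexity classes at a few input sizes. The list of classes and the sizes are illustrative choices of this sketch, not taken from the original chart, and constant factors are ignored.

```python
import math

# Approximate step counts for common complexity classes (constant factors ignored).
GROWTH = {
    "O(1)": lambda n: 1,
    "O(log n)": lambda n: math.log2(n),
    "O(n)": lambda n: n,
    "O(n log n)": lambda n: n * math.log2(n),
    "O(n^2)": lambda n: n ** 2,
    "O(2^n)": lambda n: 2 ** n,
}


def growth_table(sizes=(10, 100, 1000)):
    """Return one formatted row per complexity class, one column per input size."""
    rows = []
    for name, steps in GROWTH.items():
        # ".3g" keeps huge values like 2**1000 readable in scientific notation.
        cells = "  ".join(f"{steps(n):>10.3g}" for n in sizes)
        rows.append(f"{name:<11}{cells}")
    return rows


if __name__ == "__main__":
    for row in growth_table():
        print(row)
```

Even at n = 1000, O(n^2) is a million steps, while O(2^n) is already about 1.07e+301.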


Was programming on the privacy site's REST API.

Needed a break and started searching for a good movie or documentary.

Found a documentary about big data/mass surveillance.

I now have loads of motivation to work on this again, as it showed me the importance of secure services/software.

A few years ago:

In the process of transferring MySQL data to a new disk, I accidentally rm'ed the actual MySQL directory, instead of the symlink that I had previously set up for it.

My guts felt like they were dropping through the floor.

In a panic, I asked my colleague: "What did those databases contain?"

C: "Raw data of load tests that were made last week."

Me: "Oh.. does that mean that they aren't needed anymore?"

C: "They already got the results, but might need to refer to the raw data later... why?"

Me: "Uh, I accidentally deleted all the MySQL files... I'm in Big Trouble, aren't I?"

C: "Hmm... with any luck, they might forget that the data even exists. I got your back on this one, just in case."

Luck was indeed on my side, as nobody ever asked about the data again.
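A minimal Python sketch of a guard against that kind of slip, assuming a POSIX system: it only ever unlinks a path that is itself a symlink, so pointing it at the real data directory raises an error instead of deleting anything. `remove_symlink_only` and the demo paths are hypothetical, not something MySQL provides.

```python
import os
import tempfile


def remove_symlink_only(path: str) -> None:
    """Delete a symlink itself, refusing to touch anything that is not a symlink.

    A trailing slash makes the OS resolve the path *through* the link to its
    target, so it is stripped before the check.
    """
    path = path.rstrip(os.sep) or os.sep
    if not os.path.islink(path):
        raise ValueError(f"{path!r} is not a symlink; refusing to remove it")
    os.unlink(path)  # removes only the link entry; the target directory is untouched


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data_dir = os.path.join(tmp, "mysql-data")  # hypothetical real data directory
        os.mkdir(data_dir)
        open(os.path.join(data_dir, "ibdata1"), "w").close()
        link = os.path.join(tmp, "mysql")  # hypothetical symlink pointing at it
        os.symlink(data_dir, link)

        remove_symlink_only(link + "/")
        print("link still exists:", os.path.lexists(link))
        print("data still exists:", os.path.exists(os.path.join(data_dir, "ibdata1")))
```

Running the demo should report that the link is gone and the data file is still there.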

So Facebook provided unlimited data access to loads of companies, including Spotify, Microsoft and other big names.

Although there are privacy rules, those companies had deals that exempted them from these rules.

I don't think my custom DNS server or a Pi-hole is enough anymore; let's firewall-block all of Facebook's fucking IP ranges.

Source: https://fossbytes.com/facebook-gave...

Manual Data Entry: Most boring job

This reminds me of one conversation with one of my faculty..

Faculty: Why not try some Machine Learning Project?

Me: Cool. Any ideas you've already thought of?

Faculty: Comes up with a really noble idea

Me: Awesome idea. But we need data

Faculty: Don't worry. I will get it. Just help me setup Hadoop (see the irony.. no data yet, and he wants big data setup)

Me: But we don't have data. Let's focus on data collection, Sir.

Faculty: I will get it. Don't worry. Trust me.

(I did the setup for him twice, because he formatted the system I had set it up on the first time.)

After 6 months,

Me: (same question) Sir, Data??

Faculty: I got it.

Me: Great. Give me, I can start looking into it from today.

Faculty: Actually, it's in a register written manually in a different language (which even I can't understand) I will hire data entry guys to convert it into English digital contents.

Me: *facepalm*

The road from manual data entry to Big Data.

Dedicating this pencil to the individuals keeping the register up to date, and to Sir, in hopes of converting it into big data...

Long way to go...

Some companies be like-

.. In job posting - We are the next big thing. We are going to change the industry. We are like Google / Facebook etc...

..in Introduction - We are the next big thing. We are going to change the industry. We are like Google / Facebook etc...

.. in Interviews - We are the next big thing. We are already changing the industry. Think of us like Google / Facebook etc...

.. during Interviews - Our interview process is rigorous because we are the next big thing. We are going to change the industry. We are like Google / Facebook etc...

.. questions in interviews - Since we are Google / Facebook, please answer questions on Java, C/C++, JS, react, angular, data structure, html, css, C#, algorithms, rdbms, nosql, python, golang, pascal, shell, perl...

.. english, french, japanese, arabic, farsi, Sinhalese..

.. analytics, BigData, Hadoop, Spark,

.. HTTP(s), tcp, smpp, networking,.

..

..

..

.. starwars, dark-knight, scarface, someShitMovie..

You must be willing to work anytime. You must have a 'no-excuses' attitude.

.........................................

Now in Salary - Oh... well... yeah... see.... that actually depends on your previous package. Stocks will be given after 24 re-births. Joining bonus will be given once you lease your kidneys.

But hey, look... We got free food.

Well, SHOVE THAT FOOD UP YOUR ASS.

FUCK YOU...

FUCK YOUR 'COOL aka STUPID PIZZA BEER - CULTURE'.

FUCK YOUR 'FLAT- HIERARCHY'.

FUCK YOUR REVOLUTIONARY-PRODUCT.

FUCK YOU!

Although it might not get much follow-up (probably a few fines, but that will be about it), I still find this awesome.

The part of the Dutch government which keeps an eye on data leaks, how companies handle personal data, if companies comply with data protection/privacy laws etc (referring to it as AP from now on) finished their investigation into Windows 10. They started it because of privacy concerns from a few people about the data collection Microsoft does through Windows 10.

It's funny: whenever operating systems (or privacy/security) come up and we get to why I don't 'just' use Windows 10 (something I actually get asked sometimes), and I say it's largely for privacy reasons, people always go 'it's not that bad', 'oh well, as long as it's lawful', 'but it isn't illegal, right!'.

Well, that changed today (for the Netherlands).

AP has concluded that Windows 10 does not comply with the Dutch privacy and personal data protection law.

I'm going to quote this one (trying my best to translate):

"It appears that Microsoft's operating system follows every step you take on your computer. That gives a very invasive picture of you." "What does that mean? Do people know that, do they want that? Microsoft should give people a fair chance to decide this for themselves."

They also say that unless explicit lawful consent is given (with enough information on what is collected, for what reasons and what it can be used for), Microsoft is, according to the law, not allowed to collect its telemetry through Windows 10.

"But you can turn it off yourself!" - True, but as the paragraph above says, Dutch law requires that people are given more than enough information to decide what happens to their data, and collection is not allowed until it has been explicitly and lawfully OK'd by a person who had enough information to make a well-educated decision.

I'm really happy about this!

Source (Dutch, sorry; I only found it on a Dutch, well-respected security site): https://security.nl/posting/534981/...

My client is trying to force me to sign an ethics agreement that would allow them to sue me if found in breach of it. At the same time they are scraping eBay's data without their consent and refuse to sign the licence agreement. Apparently they don't understand irony.

Just wanted to say a 'thank you' to all the people who bear with my privacy stuff! I know quite a few people who installed messaging apps, signed up to privacy services and so on, solely because --> I <-- want to communicate in private, and I realise (I've always realised it, though) that that can be tough sometimes.

Also a thank you to those people for not requiring me to get data fed into the big companies :).

Thanks!

National Health Service (NHS) in the UK got hacked today... Workers at the hospitals could not access patient- and appointment-related data... How big a cheapskate do you gotta be to hack a free public health service that is already dying from funding shortages anyway...

Mum: Is this the big data?

Father: Do you know anything about Bitcoin? Can you explain to me what it is?

Overheard some guy talking about robotics on the phone; turns out it was all about MS Excel macros.

People need to stop abusing terms like big data, AI etc. to make themselves sound 'smart' 🙄

When I was 10, my younger brother saved over my fully completed Pokédex in Pokémon Blue.

My first big data loss taught me a good life lesson. Now I back up everything on a local server.

I've dreamed of learning business intelligence and handling big data.

So I went to a university info event today for "MAS Data Science".

Everything sounded great. Finding insights in complex datasets, check! Great opportunities and salary.

Yay! 😀

Only after an hour did they explain that the main focus of this course is on running a library, museum or archive. 😟 Huh, why? WTF?

Turns out, they've relabeled their librarian course as Data Science to get people's attention.

Hey you cocksuckers! I want my 2 hours of wasted life back!

Fuck this "English title whitewashing".

You may know about my dumb CTO, if not, read here: https://devrant.io/rants/854361/...

Anyway, the dumbass emailed me this weekend asking “what is big data?”

So I replied: “...it’s when you use a large font in your code...”

He thanked me. I bet he will be at some presentation somewhere and will reference using large fonts in an IDE!!!

Today a colleague was making weird noises because he was modifying some data files where half of the data needed to be updated with a name field; there were 4 files, each about 1,200 lines long.

I asked how he was doing and he said he was ready to kill himself. After he explained why, I asked why he was doing it manually. He said he normally uses regex for this kind of thing, but he couldn't do this one with a regex.

I opened VS Code for him, used the multi-select thing (Ctrl+D) and changed one of the files in about 2 minutes, something he had already been working on for over half an hour... He thanked me about a million times for showing it to him.

If you ever find yourself facing a tedious task that takes hours, please ask whether somebody knows a quicker way. Doing something in 2 minutes is quite a bit cheaper and better for your mental state than doing the same thing manually in 3 hours (our estimate).

"Big data" and "machine learning" are such big buzzwords. Employers be like "we want this! Can you use this?" but they give you shitty, ancient PCs and messy, MESSY data. Oh? You want to know why it's taken me five weeks to clean data and run ML algorithms? Have you seen how bad your data is? Are you aware of the lack of standardisation? DO YOU KNOW HOW MANY PEOPLE HAVE MISSPELLED "information"?!!! I DIDN'T EVEN KNOW THERE WERE MORE THAN 15 WAYS OF MISSPELLING IT!!! I HAD TO MAKE MY OWN GODDAMN DICTIONARY!!! YOU EVER FELT THE PAIN OF TRAINING A CLASSIFIER FOR 4 DAYS STRAIGHT AND THEN YOUR GODDAMN DEVICE CRASHES, LOSING ALL YOUR TRAINED MODELS?!!

*cries*
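A hand-built dictionary like that can be partly approximated with fuzzy matching. This is a swapped-in technique, not the author's method: a minimal sketch using Python's standard `difflib.get_close_matches`, where the vocabulary list and the 0.8 cutoff are hypothetical and would need tuning on real data.

```python
from difflib import get_close_matches

# Hypothetical canonical vocabulary; a real one would be built from the dataset's domain.
VOCABULARY = ["information", "invoice", "department"]


def normalize(token: str, vocabulary=VOCABULARY, cutoff: float = 0.8) -> str:
    """Map a possibly misspelled token to its closest canonical spelling.

    Uses difflib's similarity ratio; if no vocabulary word scores at least
    `cutoff`, the stripped, lowercased token is returned unchanged.
    """
    word = token.strip().lower()
    matches = get_close_matches(word, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


if __name__ == "__main__":
    for raw in ["infromation", "Informaton", "infomation", "banana"]:
        print(f"{raw!r} -> {normalize(raw)!r}")
```

A cutoff that is too low starts "correcting" legitimate words into vocabulary entries, so it is worth checking the mappings on a sample before applying them to the whole dataset.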

I always thought that this could only happen at big orgs with precious data. One of my coworkers sent me this last night.

What kind of cum gargling gerbil shelfer stores and transmits user passwords in plain text, as well as displays them in the clear, Everywhere!

This, alongside other numerous punishable by death, basic data and user handling flaws clearly indicate this fucking simpleton who is "more certified than you" clearly doesn't give a flying fuck about any kind of best practice that if the extra time was taken to implement, might not totally annihilate the company in lawsuits when several big companies gang up to shower rape us with lawsuits over data breaches.

Even better than that is the login fields don't even differentiate between uppercase or lowercase, I mean WHAT THE ACTUAL FUCK DO YOU SELF RIGHTEOUS IGNORANT CUNTS THINK IS GOING TO HAPPEN IN THIS SCENARIO?

Developer vs Tester

(Spoiler alert: developer wins)

My last development was quite big and is now with our system testing department. So last week I got a call from the tester every 20 minutes saying that something did not work as expected. About 90% of the time I looked at the test setup or the logs and told him that the data was wrong or that he had used the tool wrong. After a couple of days his frequent interruptions made me mad, so I decided to keep a list. Every time he came to me with an "error", I checked it and added a tally mark for "User Error" or "Programming Error". He did not like that much, because the User Error column started to grow fast:

User Errors: ||||| |||

Programming Errors: |||

Now he checks his test data and the logs 3 times before he calls me, and he hardly finds any "errors" anymore.

I absolutely hate the way we are taught programming in Indian colleges.

FML #1: I'm pursuing a UG CS course, and this semester I had only one Computers subject, and that was only 1 credit. All the rest were electronics.

FML #2: In that 1-credit course, we had to make a C++ project with "data handling". No one cares whether you build something cool, just that the project makes "extensive use" of data handling.

FML #3: The source code had to be >= 1000 lines. This is the only place where ADDING MORE LINES OF CODE RATHER THAN REDUCING THEM is appreciated. I had to stuff my code with all kinds of comments and violate the basic principle of DRY.

So yeah, we're fucked big time. 😥

Whoever implemented the data import in Numbers on Mac needs to be lined up against a wall and shot with needles until they wish they were dead.

Why on all of God's unholy green and shitty earth would I want data I import (EVEN IN CSV, FOR FUCK'S SAKE) to be delimited by an arbitrary text width? WHAT THE ACTUAL FUCK

WHY WHY why would I EVER want to delimit my carefully structured data by fucking text width instead of new line or comma? AAAAARRRHHH

And what fucking big-brain genius made this the DEFAULT SETTING for imported text AND CSV FILES? IT STANDS FOR COMMA-SEPARATED VALUES, YOU FUCK BOI, MAYBE JUST MAYBE I WANT IT SEPARATED BY FUCKING COMMMMMMMAAAAASSSSSS
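For contrast, this is what a CSV import is expected to do: split rows on newlines and fields on commas, while keeping quoted fields that contain commas intact. A minimal sketch with Python's standard `csv` module; the sample data is made up for illustration.

```python
import csv
import io

# A made-up export: note the quoted field that itself contains a comma.
RAW = 'name,city,amount\n"Doe, Jane",Oslo,12.50\nRoe,Bergen,7\n'


def parse_csv(text: str) -> list[list[str]]:
    """Split rows on newlines and fields on commas, honoring double-quoted fields."""
    return list(csv.reader(io.StringIO(text, newline="")))


if __name__ == "__main__":
    for row in parse_csv(RAW):
        print(row)
    # A naive split on "," breaks the quoted field into two columns:
    print(RAW.splitlines()[1].split(","))
```

The last line shows why "just split on commas" is not quite enough either: the quoted name turns into two fields unless a real CSV parser handles it.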

GOT AN A+ FOR MY LAST PROJECT OF HIGH SCHOOL!!! SO FUCKING HAPPY!!!

(By the way, we built a search engine for this project. A pretty big and fast one, too.)

A company gave a placement talk in college today.

First, they talked about their company's facts and figures, which no one was interested in.

Second, they talked about Amazon and Jeff's vision, AirBnB and their revolutionary idea, more than their own company and products.

Third, they showed some testimonial videos of their employees and customers.

"What the fuck is going on?" I thought. We were there to get information about a placement test.

Buzzwords started coming in. Machine Learning, Artificial Intelligence, Big Data and what not.

Last 15 minutes, a guy came. He talked about test date, test format and test topics, finally.

An hour and a half wasted for 15 minutes of information.

Fuck placement talks.

You've heard it!! To become a Web 3.0 daveluper, you have to do Blockchain programming, and you get additional investor points when you do artificial intelligence, Big Data and IoT 😝

Everybody's talking about Machine Learning like everybody talked about Cloud Computing and Big Data in 2013.

From a design meeting yesterday:

MyBoss: "The estimate hours seem low for a project of this size. Is everything accounted for?"

WebDev1: "Yes, we feel everything for the web site is accounted for."

-- ding ding...my spidey sense goes off

Me: "What about merchandising?"

MerchDevMgr: "Our estimate pushed the hours over what the stakeholders wanted to spend. Web department nixed it to get the proposal approved."

MyBoss: "WTF!? How the hell can this project go anywhere without merchandising entering the data!?"

WebDev2: "It's fine. We'll just get the data from merchandising and enter it by hand. It will only be temporary."

Me: "Temporary for who? Are you expecting developers to validate and maintain data?"

WebDev1: "It won't be a big deal."

MyBoss: "Yes it is! When the data is wrong, who are they going to blame!?"

WebDev1: "Oh, we didn't really think of that."

MerchDevMgr: "I did, but the CEO really wants this project completed, but the Web VPs would only accept half the hours estimated."

Me: "Then you don't do it. Period. It's better to do it right the first time than to half-ass it. How do you think the CEO will react to finding out developers are responsible for the data entry?"

MerchDevMgr: "He would be pissed."

MyBoss: "I'm not signing off on this design. You can proceed without my approval, but I'll make a note on the document as to why. If you talk to Eric and Tom about the long-term implications, they'll listen. At the end of the day, the MerchVPs are responsible to the CEO."

WebDev1: "OK, great. Now, the database, it should be SQLServer ..."

I checked out after that... daydreamed I was a viking.

True story.

Some clients (especially in India) don't want to pay, but they want everything to be implemented in the project.

Big data.... Check

Machine learning.... Check

Deep learning..... Check

Espresso maker.... Check.

They want all the buzzwords that are buzzing to be put in your project, and they want you to put it in the 'cloud', which you have to pay for.....

I just got four CSV reports sent to me by our audit team, one of them zipped because it was too large to attach to email.

I open the three smaller ones and it turns out they copied all the (comma separated) data into the first column of an Excel document.

It gets better.

I unzip the "big" one. It's just a shortcut to the report, on a network share I don't have access to.

They zipped a shortcut.

Sigh. This'll be a fun exchange.

When there are no widely accepted Swedish translations of big data terminology, such as "big data" itself. When discussing these terms you have to resort to the English words, which results in a horrible language mix: Swenglish.

Creating an anonymous analytics system for the security blog and privacy site together with @plusgut!

It's fun to see a very simple API come alive with querying some data :D.

Big thanks to @plusgut for doing the frontend/graphs side on this one!

My co-workers hate it when I ask this on a technical interview, but my usual one is "what is the difference between a varchar(max) and a varchar(8000) when they are both storing 8000 characters?"

Answer: you cannot use a varchar(max) as an index key column. A varchar(max) and a varchar(8000) both store the data in the row, but a varchar(max) value goes to LOB (blob) storage once it is larger than 8000 bytes.

No one ever knows the answer, but I like to ask it to see how people think. Then I tell them that no one ever gets it right and that it isn't a big deal that they don't know it, as I give them the answer.

We are building a big-data engine for a client's product, using a cluster on GCP, and they were billed ~$1,100 for last month's usage.

The CTO, the CHIEF FUCKING TECHNOLOGY OFFICER, told us to hook up 5-6 laptops in our server room and create our own cluster because they cannot afford such a big bill.

Client: we need a big data implementation in AWS to be fully HA and DR.... Money is no object

*3 weeks later when the bill comes in *

Client: It's too expensive. We don't need this HA stuff, we don't even know what it stands for anyhow, so can you take it out? But the system still needs 24/7 availability....

Spend 14 hours a week studying in my free time.

Things to be studied:

-discrete math

-data structures

-algorithms

-coding challenges

-problem defining

-abstraction

-other relevant maths

Other things I want to improve:

-confidence at work

-reaching out to teams with questions

-social skills

-time management

-enjoying the little things

-patience

-consistency (with everything above)

Last big thing would be being more conscious of what type of data/platforms I am digesting every day. Just like with a good diet, I want to get into the habit of consuming “good”, useful content that’s thought-provoking or informative rather than fast-food social media carbs.

Wish everyone a productive New Year!

Coworker: since the last data update this query kinda returns 108k records, so we gotta optimize it.

Me: The api must return a massive json by now.

C: Yeah we gotta overhaul that api.

Me: How big do you think that json response is? I'd say 300Kb

C: I guess 1.2Mb

C: *downloads json response*

Filesize: 298Kb

Me: Hell yeah!

PM: Now start giving estimates this accurate!

Me: 😅😂

A lot of brainwashed people don't care about privacy at all and always say: "I've got nothing to hide, fuck off...". But that is not true. Any information can be used against you in the future when "authorities" release some kind of China-style social credit system. Stop giving your data away for free to big companies.

https://medium.com/s/story/...

Currently, a classmate and I are working on our technical thesis.

It is all about industry 4.0, IIoT, big data and stuff.

This week, we presented our interim results to our supervisor. He is very pleased with our work and made the following suggestion:

He thinks it would be awesome to publish our work on our own GitHub repository and make it open source because he is convinced that this thesis is able to kind of "set a new standard" in some specific fields of using big data analysis in production processes.

I guess I'm kind of proud :)

Remove all the outdated and unwanted topics that were taught during the Indus Valley civilization, like: 8080 microprocessor, Java 6, Software Testing principles etc. And add more interesting and realistic topics like: Algorithm design, graphs and other data structures, Java 8 (at least for now), big data, Basics about AI, etc.

veekun/pokedex

https://github.com/veekun/pokedex

It's essentially all the data you need to make a Pokémon game, in CSV files.

AFAIK, they ripped the information from the original games, so you can be sure of its validity.

I love how easy it is to use; it isn't some weird-ass-formatted wiki, and it even has scripts to load it into your database.

Me being a huge Pokémon fan, that's the ne plus ultra.
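The repo ships its own loader scripts; as an independent illustration (not the repo's tooling), here is a minimal sketch that loads one CSV file into SQLite using only Python's standard library. The `load_csv` helper, the `pokemon` table name and the sample rows are hypothetical; check the real files for their actual columns. Header names are assumed not to contain double quotes.

```python
import csv
import io
import sqlite3


def load_csv(conn: sqlite3.Connection, table: str, csv_file) -> int:
    """Create `table` from the CSV header row and insert every data row.

    Column names come from the header and are double-quoted so that names
    like "order" do not clash with SQL keywords. All values are stored as text.
    Returns the number of rows inserted.
    """
    reader = csv.reader(csv_file)
    header = next(reader)
    cols = ", ".join(f'"{name}"' for name in header)
    marks = ", ".join("?" for _ in header)
    conn.execute(f'CREATE TABLE "{table}" ({cols})')
    cur = conn.executemany(f'INSERT INTO "{table}" ({cols}) VALUES ({marks})', reader)
    conn.commit()
    return cur.rowcount


if __name__ == "__main__":
    # Hypothetical excerpt; a real file would be opened with
    # open(path, newline="", encoding="utf-8") instead.
    sample = io.StringIO("id,identifier\n1,bulbasaur\n4,charmander\n")
    conn = sqlite3.connect(":memory:")
    print(load_csv(conn, "pokemon", sample))
    print(conn.execute('SELECT identifier FROM "pokemon" ORDER BY rowid').fetchall())
```

Storing everything as text keeps the sketch short; a real import would declare column types so that numeric comparisons behave as expected.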

My code review nightmare?

All of the reviews that consisted of a group of devs+managers in a conference room and a big screen micro-analyzing every line of code.

"Why did you call the variable that? Wouldn't it be more efficient to use XYZ components? You should switch everything to use ServiceBus."

and/or using the 18+ page coding standard document as a weapon.

PHB: "On page 5, paragraph 9, sub-section A-123, the standards dictate to select all the necessary data from the database. Your query is only selecting 5 fields from the 15-field table. You might need to access more data in the future, and this approach reduces the amount of code change."

Me: "Um, if the data requirements change, wouldn't we have to change code anyway?"

PHB: "Application requirements are determined by our users, not you. That's why we have standards."

Me: "Um, that's not what I ..."

PHB: "Next file, oh boy, this one is a mess. On page 9, paragraph 2, sub-section Z-987, the standards dictate to only select the absolute minimum amount of the data from the database. Your query is selecting 3 fields, but the application is only using 2."

Me: "Yes, the application isn't using the field right now, but the user stated they might need the data for additional review."

PHB: "Did they fill out the proper change request form?"

Me: "No, they ...wait...Aren't the standards on page 9 contradictory to the standards on page 5?"

PHB: "NO! You'll never break your cowboy-coding mindset if you continue to violate standards. You see, standards are our promise to customers to ensure quality. You don't want to break our promises...do you?"

This might actually be my first real rant.

Whatever fucking cockgoblin decided to make Dynamics GP so fucking confusing needs to suck a big bag of dicks. I'm so fucking tired of having to google every damned table name and column name because nothing makes any motherfucking sense.

Am I supposed to instinctively know what PM20201 does? What data it holds? I don't mind reading documentation. But it's hard to even know where to start when the shitbird API and database are more complicated than calculating orbital fucking decay.

I am done. Fuck you, GP. Fuck you and your nonsense. I guess our sales people don't get to know when an invoice was paid.

User: Hey, we got a big issue with one of your tools. One of your pages isn't loading.

Me: Ok, so when did this happen?

User: We don't know? It's been like that for a long time, though, so we thought it was normal 😃

Me: ....ok. So do you know what data is supposed to appear?

User: Uhhh, we're not sure either. Since, you know, it's been like that for a while.

Just great 😑

One thing I've noticed about devRant is the ratio of web dev/mobile dev posts to database/architecture/big data dev posts. There are A LOT of you web peeps out there and not enough data dudes, which I guess justifies my constant demand, salary and lack of competition. Just an observation.

DEAR CTOs, PLEASE ASK THE DEVELOPER OF THE SOFTWARE YOU ARE PLANNING TO BUY WHAT LANGUAGE AND WHAT VERSION IT IS WRITTEN IN.

Background: I worked for a LONG time at a software company that developed a BIG CRM software suite for a very niche sector. The software company was quite successful and got many customers; even big companies bought our software. The thing is: the software is written in Ruby 1.8.7 and Rails 2. Some customer servers are even running Debian Squeeze... Yes, this setup is still in production use in 2022 (Rails 7 is the current version). I really don't get why no one asked about the specific setup; they just bought it. We always told our boss that we needed time to upgrade, but every time he told us that no one pays for a tech upgrade... So there it is: many TBs of customer data sit on systems that are totally old, not updated and possibly full of security issues.

Me - Yeah great so you say it's big data we are gonna be analyzing and having to store, are you currently utilizing a service and aggregating any of it into smaller manageable segments?

Client - well yeah, it's lots and lots of data, we can share it with you if you sign an NDA.

Me - ok... sure, how are you gonna share it with me.

Client - oh I can email you the spreadsheet.

Me - .... Spreadsheet ... Um... Ok... 'Stands up and walks away to tell this as the most interesting meeting of the month to someone who will get it'

--

Buzzword for the win!

Client from a big company requested that all sensitive data be encrypted, passwords included.

We agreed that was OK, and that we were already saving the hashes for the passwords.

The reply was "Hashes should be encrypted too"
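For context, a password hash is one-way: it is stored and recomputed at login for comparison, never decrypted. A minimal sketch of salted hashing with Python's standard `hashlib.pbkdf2_hmac`; the function names and the iteration count are illustrative assumptions, and dedicated schemes such as bcrypt, scrypt or Argon2 are common alternatives.

```python
import hashlib
import hmac
import os

# Illustrative work factor; tune it so one hash takes a noticeable fraction of a second.
ITERATIONS = 600_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) to store. The digest is one-way: there is nothing to decrypt."""
    salt = os.urandom(16)  # fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Re-derive the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)


if __name__ == "__main__":
    salt, digest = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, digest))
    print(verify_password("Correct horse battery staple", salt, digest))
```

Because each password gets its own random salt, two users with the same password still end up with different stored digests.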

Dear Friends,

As a husband, I've sat next to my wife through eight miscarriages, and while drowning my sorrows on Facebook, faced an inundation of pregnancy and baby ads. It's heartbreaking, depressing, and outright unethical.

How can we, as developers who conquer the world with software solutions, not solve this problem? Let's be honest, it's not that we cannot solve this problem, it's that we won't solve it.

We're really screwing this one up, and I'm issuing a challenge - who's out here on devRant that can make the first targeted "Shiva" ad campaign? Don't tell me you don't have the data in your system, because we all know you do. Your challenge is to identify the death of a loved one, or a miscarriage, and respectfully mourn the loss with no desire to make money from those individuals.

Fucking advertise flower delivery services and fancy chocolates to the people in THEIR inner circle, but stop fucking advertising pregnancy clothes to my wife after a miscarriage. You know you can do it. Don't let me down.

https://washingtonpost.com/lifestyl...

Best exp:

( ͡ ͡° ͜ ʖ ͡ ͡°)

\╭☞ \╭☞ learning python and working with big data

Worst one:

(╯°□°)╯︵ learning php and attending programming classes at my college

Every 2019 tech startup: We do deep machine learning with big data in the cloud.

Investors: Please take our money!

Someone at work snuck something past the censors.

Our Hadoop servers all have "bigd" in their name 😂

Goals for the next 100 weeks eh?

- Teach my 8yo and 11yo to be awesome java coders

- Take my 2yo to her first day at school

- Grow my department in work to over 40 people worldwide

- Start and finish my Masters degree in Big Data*

- Speak at 5 major international conferences (1000+ ppl)

fuck code.org.

here are a few things that my teacher said last class.

"public keys are used because they are computationally hard to crack"

"when you connect to a website, your credit card number is encrypted with the public key"

"digital certificates contain all the keys"

"imagine you have a clock with x numbers on it. now, wrap a rope with the length of y around the clock until you run out of rope. where the rope runs out is x mod y"

bonus:

"crack the code" is a legitimate vocabulary word

we had to learn modulus in an extremely weird way before she told the class that it was just the remainder, but more importantly, we weren't even told why we were learning mod. the only explanation was that "it's used in cryptography"

i honestly doubt she knows what aes is.

to sum it up:

she thinks everything we send to a server is encrypted via the public key.

she thinks *every* public key is inherently hard to crack.

she doesn't know https uses symmetric encryption for the actual traffic.

i think that she doesn't know that the authenticity of certificates must be checked.
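The rope picture is easy to check in code. A minimal sketch, assuming the clock's positions are numbered 0 to x - 1 and the rope starts at 0 and covers one position per unit of length: under that model the rope runs out at y mod x (rope length mod clock size), i.e. the remainder of y divided by x, not x mod y as quoted. `rope_end_position` is a hypothetical helper for illustration.

```python
def rope_end_position(clock_size: int, rope_length: int) -> int:
    """Wrap a rope of `rope_length` units around a clock with `clock_size` positions
    (numbered 0..clock_size-1), one position per unit, and return where it runs out."""
    position = 0
    for _ in range(rope_length):
        position = (position + 1) % clock_size  # step forward, wrapping past the top
    return position


# Walking the rope lands on the plain remainder of rope_length / clock_size.
print(rope_end_position(12, 30), 30 % 12)  # 6 6
```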

At work the other day...

Guy: "Oh hey I was thinking if you could help me with an application to visualize some data."

Me: "Ooookay...what did you have in mind?"

Guy: "I think we have XML files that could be turned into graphs...oh and we could add some trend lines. (Getting more excited) And maybe we could supplement it with live data...oh hey and maybe we could add real time alerts via email..."

Me: *thinks to self...there is no way in hell I am starting to work on something whose requirements he is literally coming up with as he talks* "I need specifics...so go take some time, think it through and get back to me with concrete details and examples."

Guy: "Ok. That should be enough to get you started for now at least."

That would be a big fuck no, good sir. I haven't started it and won't start it. He has never mentioned it to me since.

I hate it when marketing people decide they're technical - quote from a conference talk I regrettably sat through:

"The fourth industrial revolution is here, and you need to make sure you invest in every aspect of it - otherwise you'll be left in the dust by companies that are adopting big data, blockchain, quantum computing, nanotech, 3D printing and the internet of things."

Dahhhhhhhhhh

The GitHub GraphQL API is pretty neat, mostly because it's a great example of a product where GraphQL has advantages over REST. As a code reviewer for repos with hundreds of simultaneous PRs, I use it to filter through branches for stuff that needs my attention the most.

NewRelic's NRQL API is also quite nice, as it provides an unusual but very direct interface into the underlying application metrics.

I'm also a big fan of launchlibrary, purely because I love spaceflight, and their API is an extremely rich and actively maintained resource. This makes it a great data source for playing around with plotting & statistics libraries — when I'm learning new languages or tools, I prefer to make something "real" rather than following a tutorial, and I often use launchlibrary as a fun and useful data backend.

Hey!

A big question:

Assume we have an Android app that graphs a sound file.

The point is: the user is able to zoom in/out, so all of the data must be read at the beginning, but as the file gets longer, the load time increases.

What can I do to prevent this?
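One common way to attack this (a general suggestion, not something from the original thread) is to avoid drawing from raw samples when zoomed out: compute a coarse (min, max) summary per bucket of samples once, draw the overview from that, and read only the visible slice of raw samples when zoomed in. A minimal Python sketch over an in-memory list; `build_peaks`, `visible_window` and the bucket size are hypothetical names and parameters:

```python
from typing import List, Sequence, Tuple


def build_peaks(samples: Sequence[float], bucket_size: int) -> List[Tuple[float, float]]:
    """Summarise every `bucket_size` samples as a (min, max) pair for zoomed-out drawing."""
    if bucket_size <= 0:
        raise ValueError("bucket_size must be positive")
    peaks = []
    for start in range(0, len(samples), bucket_size):
        bucket = samples[start:start + bucket_size]
        peaks.append((min(bucket), max(bucket)))
    return peaks


def visible_window(samples: Sequence[float], start: int, width: int) -> Sequence[float]:
    """When zoomed in, only the slice that is on screen is needed."""
    return samples[start:start + width]


samples = [0.0, 0.5, -0.25, 1.0, -1.0, 0.75, 0.1]
print(build_peaks(samples, 3))  # [(-0.25, 0.5), (-1.0, 1.0), (0.1, 0.1)]
```

In a real Android app the summary would presumably be computed once in the background and cached, and the zoomed-in slice read from the file on demand rather than from a list held in memory.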

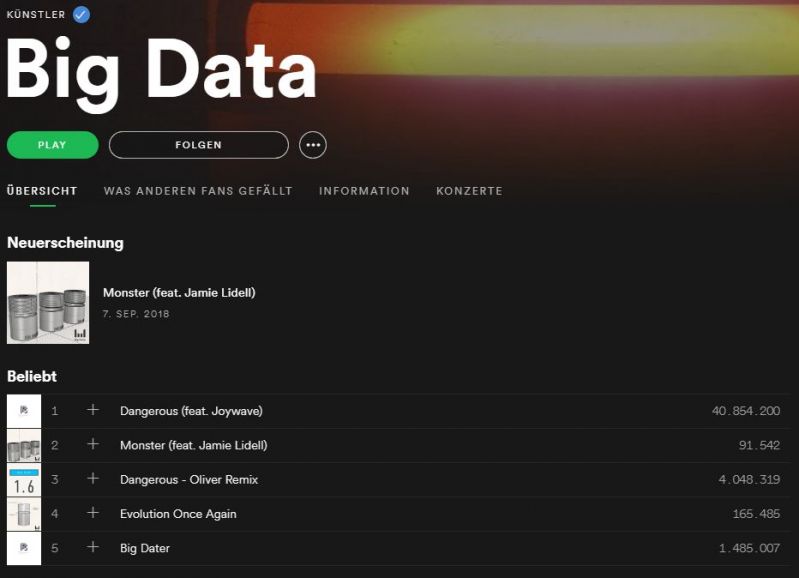

Recently found an artist called "Big Data". His song titles describe what Big Data actually is really well:

"How we use Tensorflow, Blockchain, Cloud, nVidia GPU, Ethereum, Big Data, AI and Monkeys to do blah blah... "

BUZZWORD BUZZWORD AAAAAH

ARTIFICIAL INTELLIGENCE

BLOCKCHAIN

ALGORITHM

CLOUD

IOT

BIG DATA

SaaS

DEVOPS

5G

AR

VR

AAAAH BUZZWORD HERE BUZZWORD THERE

Oath (Yahoo) just open-sourced their data-processing & search engine!

It looks fricken cool, can't wait to play with it... and even more I can't wait to see what people make with it!

Yahoo!

[announcement](https://oath.com/press/...)

[docs](http://docs.vespa.ai/documentation/...)

The world is talking about AI, self-driving cars, big data and IoT, and there are robots driving around on Mars.

And here I stand, trying to figure out why a small change in a silly batch-script works on Windows7 and raises an error on Windows XP.

In 2020.

Time for a soap box rant.

I just found this in one of our projects. I've simplified the example to make it more anonymous.

When I see code like this it automatically means there is a lack of attention to enumerations and/or understanding of what they are.

One may argue that in a certain execution of code it's a minor performance hit and therefore insignificant. It's still a performance hit. Furthermore, it takes even less time to do it the right way than it does to do it the wrong way.

Every one of these lines will enumerate the list from the beginning to try and find that one element you're interested in. Big O notation, people.

Throw that crap into a dictionary or hashset or similarly applicable data structure with direct reads at the beginning of your logic so that it only gets enumerated ONCE when the data structure instance is created. Then access it however many times you want.

Soap box rant over.
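The original snippet was an image and isn't reproduced here, so the `Item` type and its `key` field below are hypothetical. This is a minimal Python sketch of the point being made, with a `dict` standing in for a C# `Dictionary`/`HashSet`: a linear search re-scans the list on every call, while building the index enumerates it once and each later lookup is O(1) on average.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Item:
    key: str
    value: int


def find_linear(items: List[Item], key: str) -> Optional[Item]:
    # O(n) per call: scans from the start of the list every single time
    return next((item for item in items if item.key == key), None)


def build_index(items: List[Item]) -> Dict[str, Item]:
    # Enumerates the list ONCE; assumes keys are unique (a later duplicate would win)
    return {item.key: item for item in items}


items = [Item("a", 1), Item("b", 2), Item("c", 3)]
index = build_index(items)        # build once at the start of the logic...
print(index["b"].value)           # ...then look up as often as needed: 2
print(find_linear(items, "b").value)  # same answer, but a full scan per call: 2
```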


How to get investors wet:

“My latest project utilizes the microservices architecture and is a mobile first, artificially intelligent blockchain making use of quantum computing, serverless architecture and uses coding and algorithms with big data. also devOps, continuous integration, IoT, Cybersecurity and Virtual Reality”

Doesn’t even need to make sense

I received 2 job offers:

1: C++ / C# / Unity developer for a VR studio, tons of VR visors and shit to use

2: Python / Java / something-else developer for machine learning, IoT, big data

Offer 1 is from a small business with 35 employees, casual outfit.

Offer 2 is from a medium/big business with 100-200 employees, suit and tie for all.

Same salary offer, 2 different and divergent paths on different but trending topics.

What would you choose, and why?

I see the industry popularizing Machine Learning programs using AI to implement ethical Blockchain as a Javascript framework using Scrum techniques for Big Data Web2.0 in Responsive Virtual Reality for your IoT Growth Hacking operations.


"Our company encourages cryptocurrency big data agile machine learning, empowerment diversity, celebrate wellness and synergy, unpack creative cloud real-time front-end bleeding edge cross-platform modular success-driven development of digital signage, powered by an unparalleled REST API backend, driven by a neural network tail recursion AI on our cloud based big data linux servers which output real time data to our Wordpress template interactive dynamic website TypeScript applet, with deep learning tensor flow capabilities.

Don't get what the fuck I just said? Udemy offers countless courses on python based buzzwords. Be the first out of 13 people to sell your soul and private information, and you'll get the first three minutes of the course free!"

Perhaps more of a wishlist than what I think will actually happen, but:

- Everyone realises that blockchain is nothing more than a tiny niche, and therefore everyone but a tiny niche shuts up about it.

- Starting a new JS framework every 2 seconds becomes a crime. Existing JS frameworks have a big war, until only one is left standing.

- Developing for "FaaS" (serverless, if I must use that name) type computing becomes a big thing.

- Relational database engines get to the point where special handling of "big data" isn't required anymore. Joins across billions of rows don't present an issue.

- Everyone wakes up one day and realises that Wordpress is a steaming pile of insecure cow dung. It's never used again, and burns in a fire.

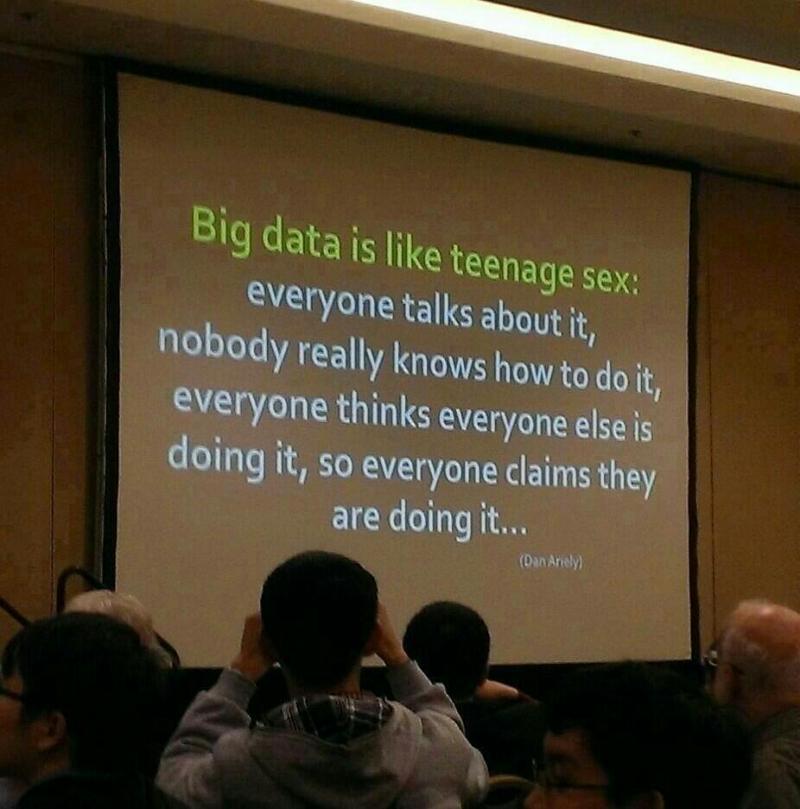

Big data is like teenage sex: everyone talks about it, nobody really knows how to do it, everyone thinks everyone else is doing it, so everyone claims they are doing it… - Dan Ariely


First year: intro to programming, basic data structures and algos, parallel programming, databases and a project to finish it. Homework should be kept track of via some version control. Should also be some calculus and linear algebra.

Second year:

Introduce more complex subjects such as programming paradigms, compilers and language theory, low level programming + logic design + basic processor design, logic for system verification, statistics and graph theory. Should also be a project with a company.

Year three:

Advanced algos, data structures and algorithm analysis. Intro to computer and data security. Optional courses in graphics programming, machine learning, compilers and automata, embedded systems, etc. Ends with a big project that goes in depth into a CS subject, not basically a regular software project in Java.

Fuck you Intel.

Fucking admit that your hardware has a problem!

"Intel and other technology companies have been made aware of new security research describing software analysis methods that, when used for malicious purposes, have the potential to improperly gather sensitive data from computing devices that are operating as designed. Intel believes these exploits do not have the potential to corrupt, modify or delete data"

With Meltdown one process can fucking read everything that is in memory. Every password and every other sensitive bit. Of course you can't change sensitive data directly. You have to use the sensitive data you gathered... Big fucking difference, you dumb shits.

Meltdown occurs because of hardware-implemented speculative execution.

The solution is to fucking separate kernel and user address space.

And you're saying that your hardware works how it should.

Shame on you.

I'm not saying that I don't tolerate mistakes like this. Shit happens.

But not having the balls to admit that it is because of the hardware makes me fucking angry.

Worked as a student at a big company, just doing data entry and checking product data. It was a nice part-time job alongside my computer science studies. After a year I asked for something else and switched to the Android team. I said I could do a little bit of Java, and for another half year I wrote unit tests. That was the point where I really learned to code and got experienced. I would never have learned so much in my studies, because I was lazy. Now I can call myself an Android developer. I still love the company for giving me this opportunity.


PyTorch.

2018: uh, what happens when someone uses a same name attack? - No big deal. https://github.com/pypa/pip/...

2020: I think that's a security issue. - Nanana, it's not. https://github.com/pypa/pip/...

2022: malicious package extracts sensitive user data on nightly. https://bleepingcomputer.com/news/...

You had years to react, you clowns.

Want to make someone's life a misery? Here's how.

Don't base your tech stack on any prior knowledge or what's relevant to the problem.

Instead design it around all the latest trends and badges you want to put on your resume because they're frequent key words on job postings.

Once your data goes in, you'll never get it out again. At best you'll be teased with little crumbs of data but never the whole.

I know, here's a genius idea: instead of putting data into a normal database and then using a cache, let's put it all into the cache, and by the way it's a volatile cache.

Here's an idea. For something as simple as a single log, let's make it use a queue that goes into a queue that goes into another queue that goes into another queue, all of which are black boxes. No rhyme or reason, queues are all the rage.

Have you tried: let's use a newfangled tangle, trust me it's safe, INSERT BIG NAME HERE uses it.

Finally it all gets flushed down into this subterranean cunt of a sewerage system and good luck getting it all out again. It's like hell except it's all shitty instead of all fiery.

All I want is to export one table, a simple log table with a few GB to CSV or heck whatever generic format it supports, that's it.

So I run the export-table-to-file command and off it goes, only for timeouts to start piling up less than a minute later until it aborts. WTF. So then I set the most obvious timeout setting in the client: no change. Then another timeout setting on the client: no change. Then I try putting it in the client configuration file: no change. Then I set the timeout on the export query: no change. Then I finally bump the timeouts in the server config: no change. Then I find that someone has downloaded it from both Tucows and apt, but they're using the Tucows version, so its real config is in /dev/database.xml (don't even ask). I increase that from seconds to a minute, and it's still timing out after a minute.

In the end I have to make my own and this involves working out how to parse non-standard binary formatted data structures. It's the umpteenth time I have had to do this.

These aren't some no name solutions and it really terrifies me. All this is doing is taking some access logs, store them in one place then index by timestamp. These things are all meant to be blazing fast but grep is often faster. How the hell is such a trivial thing turned into a series of one nightmare after another? Things that should take a few minutes take days of screwing around. I don't have access logs any more because I can't access them anymore.

The terror of this isn't that it's so awful, it's that all the little kiddies doing all this jazz for the first time and using all these shit-wipe, buzzword-driven approaches have no fucking clue it's not meant to be this difficult. Time and time again I'm replacing entire enterprise systems of tens of thousands to millions of lines with a few hundred lines of code that's faster, more reliable and better in virtually every measurable way.

This is constant. It's not one offender, it's not one project, it's not one company, it's not one developer, it's the industry standard. It's all over open source software and all over dev shops. Everything is exponentially becoming more bloated and difficult than it needs to be. I'm seeing people spin up a hundred cloud instances for things that would be happy at home after anywhere from a few minutes to a week of optimisation. Queries are N*N when it would take only a few minutes to turn them into LOG(N), but instead people rent a fucking huge-ass SQL cluster that not only costs gobs of money but takes a ton of time to maintain and configure, which isn't going to be done right either.

I think most people are bullshitting when they say they have impostor syndrome but when the trend in technology is to make every fucking little trivial thing a thousand times more complex than it has to be I can see how they'd feel that way. There's so bloody much you need to do that you don't need to do these days that you either can't get anything done right or the smallest thing takes an age.

I have no idea why some people put up with some of these appliances. If you bought a dish washer that made washing dishes even harder than it was before you'd return it to the store.

Every time I see the terms enterprise, fast, big data, scalable, cloud or anything of the like I bang my head on the table. One of these days I'm going to lose my fucking tits.

"What app is that?"

"It's like yik yak for developers..."

"Like FML for programmers..."

"Like one big groupme for computer scientists..."

"Like Josh from Intro to Data Structures..."

I'm running out of ways to describe devRant.

My manager's boss just committed to a delivery date a month from now. We don't know what is to be delivered, nor does the client. We are supposed to work on a platform that we know nothing about. And of course the catchphrase is: yeah, just use big data and Spark. I'm dying...


Saw a question on SO asking why foreach was slow with big data.

The code provided was 6 nested foreachs (basically a cartesian product between an array of arrays, and 4 other arrays).

Inside, a select query and an "update or create" operation.

"But why is foreach so slow?"

Fucking shit, uni is such a waste of time. We are learning Apache Spark in the Big Data module. The fucking losers have Spark 1.6.0 installed while the latest version is 2.2.1 right now.

What a bunch of cunts. We are paying tons of money to study deprecated shits and a degree. A fucking degree that is not even on a piece of paper anymore.

Fuck this shit man.

Pffff...... Wanna make an app tomorrow...

Got no clue what to make....

Maybe something with big AI learning data machine. Yeah I think that hits all the right buzzwords :P

Any ideas you're willing to share?

Years ago, we were setting up an architecture where we fetch certain data as-is and throw it in CosmosDb. Then we run a daily background job to aggregate and store it as structured data.

The problem is the volume. The calculation step is so intense that it will bring down the host machine, and the insert step will bring down the database in a manner where it takes 30 min or more to become accessible again.

Accommodating for this would need a fundamental change in our setup. Maybe rewriting the queries, data structure, containerizing it for auto scaling, whatever. Back then, this wasn't on the table due to time constraints, and nobody wanted to be the person to open that Pandora's box of turning things upside down when it "basically works".

So the hotfix was to do a 1-second thread sleep for every iteration where needed. It makes the job take upwards of 12 hours, where, if the system could endure it, it would normally take a couple of minutes.

The solution has grown around this behavior ever since, making it even harder to properly fix now. Whenever there is a new team member there is this ritual of explaining this behavior to them, then discussing solutions until they realize how big of a change it would be, and concluding that it needs to be done, but...

not right now.
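The arithmetic behind the hotfix: 12 hours is 43,200 seconds, so a 1-second sleep per iteration implies on the order of 43,000 iterations spent mostly waiting. One possible middle ground, sketched below under assumptions (it is not what the team did, and whether the database tolerates larger batches is exactly the unknown), is to pause once per batch instead of once per row. `write_throttled`, `insert_batch` and the batch sizes are hypothetical:

```python
import time
from typing import Callable, List, Sequence


def write_throttled(
    rows: Sequence[dict],
    insert_batch: Callable[[List[dict]], None],
    batch_size: int,
    pause_seconds: float,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Insert rows in batches, pausing once between batches instead of once per row.

    Returns the number of pauses taken. `sleep` is injectable so the pacing can be
    checked without actually waiting.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    pauses = 0
    for start in range(0, len(rows), batch_size):
        insert_batch(list(rows[start:start + batch_size]))
        if start + batch_size < len(rows):  # no pause needed after the final batch
            sleep(pause_seconds)
            pauses += 1
    return pauses


def no_wait(seconds: float) -> None:
    """Stand-in for time.sleep so the demo runs instantly."""


def discard(batch: List[dict]) -> None:
    """Stand-in for the real database insert."""


rows = [{"id": i} for i in range(43_200)]  # illustrative: ~12 hours at 1 s per row
print(write_throttled(rows, discard, 1, 1.0, no_wait))    # 43199 pauses
print(write_throttled(rows, discard, 500, 1.0, no_wait))  # 86 pauses
```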

Google.

They’re doing amazing things but they are just too big now... Too much of a monopoly and the data is scary too.

i want to get my own social network up and running.

so far i've got -

login 100% securely

register (1000% securely)

view someone’s profile (10^7% securely)

to add -

scrypt (maybe bcrypt, however scrypt looks like the better option)

friend a user

track their every move (i'll use facebook's and google's apis for that)

to describe my product -

ai

blockchain

iot

big data

machine learning

secure

empower

analysis

call me when i'm a gazillionaire

but seriously, i'm making a social network and i hope it's done by wk105 tbh
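Since scrypt is on the to-add list: Python's standard library exposes it as `hashlib.scrypt` (available when Python is built against OpenSSL 1.1 or newer). A minimal sketch of salted password hashing and verification; the cost parameters are illustrative assumptions, not a recommendation for this app, and `hash_password`/`verify_password` are hypothetical names:

```python
import hashlib
import hmac
import os
from typing import Tuple

# Illustrative cost parameters (about 16 MiB of memory per hash), not a tuned recommendation
SCRYPT_N, SCRYPT_R, SCRYPT_P = 2**14, 8, 1


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest); store both, never the password itself."""
    salt = os.urandom(16)  # fresh random salt per password
    digest = hashlib.scrypt(password.encode("utf-8"), salt=salt, n=SCRYPT_N, r=SCRYPT_R, p=SCRYPT_P)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Re-derive with the stored salt and compare in constant time."""
    candidate = hashlib.scrypt(password.encode("utf-8"), salt=salt, n=SCRYPT_N, r=SCRYPT_R, p=SCRYPT_P)
    return hmac.compare_digest(candidate, digest)


salt, digest = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, digest))  # True
print(verify_password("hunter2", salt, digest))                       # False
```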

Hi.. one month ago I started to learn JavaScript (my first programming language).

In the 2nd project we created a data dashboard. I did my very best to write functional JS code, and two other girls worked on the CSS and HTML.

I'm really proud of my work (1st time!)

A few guys told me JavaScript is awful and difficult, but in a few weeks we will start on jQuery.

In 2 weeks I'm gonna participate in the AngelHack Santiago Hackathon 2018.

I need some advice; for me it's a really big step.

So here I am testing some python code and writing to a file. No big deal. But damn is it taking a long time to get data back from this API. Ah it's fine I'll let it work in the background.

40 minutes later.

Oh! The requests timed out. No big deal. I'll just cut out the parts that are already done.

1st request in.

I wonder what the file is looking like.

Only showing 1 request.

waitaminute.jpeg

I should have more than that.

*Suddenly realizes that I was writing to the file and not appending.

Fuuuuuuuuuuuuuuuuuuck
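The bug in a nutshell: in Python, opening a file with mode `"w"` truncates it, while `"a"` appends. A minimal sketch that reopens the file once per batch, as happens when re-running only the unfinished requests; `write_batches` and the file names are hypothetical:

```python
import os
import tempfile
from typing import List


def write_batches(path: str, batches: List[List[str]], mode: str) -> List[str]:
    """Open the file once per batch (like re-running after a timeout) and return its final lines."""
    for batch in batches:
        with open(path, mode, encoding="utf-8") as f:
            for line in batch:
                f.write(line + "\n")
    with open(path, encoding="utf-8") as f:
        return f.read().splitlines()


batches = [["request 1"], ["request 2"], ["request 3"]]
with tempfile.TemporaryDirectory() as tmp:
    # "w" truncates the file on every open, so only the last batch survives
    print(write_batches(os.path.join(tmp, "w.txt"), batches, "w"))  # ['request 3']
    # "a" appends, so every batch is kept
    print(write_batches(os.path.join(tmp, "a.txt"), batches, "a"))  # ['request 1', 'request 2', 'request 3']
```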


Client: THIS IS CRITICAL, SOME DATA HAS BEEN DELETED, WHAT ZE FUUK HAPPENED, UNDO THIS FAST

Us: so after carefully reviewing the code, related resources and the network traffic, we conclude that it was never sent in the first place.

*closes issue*

I'm glad we got such a meaningful bug report on the same day a production system started failing, one big deployment that was like a boss fight with 3 phases, an unnecessarily long meeting, and an app developer who wanted me to break HTTP standards.


If you're currently in college and wish to get placed in a major tech giant like Amazon or Facebook:

Don't learn React.js, instead learn Linked lists.

Don't learn Flutter, instead learn Binary search trees.

Don't learn how to perform secure Authorization with JWTs, instead learn how to recursively reverse a singly linked list.

Don't learn how to build scalable and fault tolerant web servers, instead learn how to optimally invert a binary search tree.

These big tech companies don't really care what real world development technologies you've mastered. Your competence in competitive programming and data structures is all that matters.

The system is screwed. Or at least I am.
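For reference, the recursive linked-list reversal mentioned above fits in a few lines. A minimal Python sketch; `Node` and the helper functions are hypothetical names, and since CPython caps recursion depth (about 1,000 frames by default), production code would usually reverse iteratively instead:

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class Node:
    value: int
    next: Optional["Node"] = None


def reverse(head: Optional[Node]) -> Optional[Node]:
    """Recursively reverse a singly linked list and return the new head."""
    if head is None or head.next is None:
        return head                # empty list, or the last node, which becomes the new head
    new_head = reverse(head.next)  # reverse everything after `head` first
    head.next.next = head          # the old successor now points back at `head`
    head.next = None               # `head` is now the tail
    return new_head


def from_values(values: Iterable[int]) -> Optional[Node]:
    head = None
    for value in reversed(list(values)):
        head = Node(value, head)
    return head


def to_values(head: Optional[Node]) -> List[int]:
    out = []
    while head is not None:
        out.append(head.value)
        head = head.next
    return out


print(to_values(reverse(from_values([1, 2, 3, 4]))))  # [4, 3, 2, 1]
```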

Yeah, so it seems like huge companies are literally just throwing around tech buzzwords like "blockchain", "cloud" and "big data" for marketing purposes. This annoys me.


When I wrote my first algorithm that learns...

So in order to onboard our customers onto our software, we have to link the products in their database to the products in ours. This seems easy enough, but when you actually start looking at their data you find it's a fuck-up of duplicates, bad naming conventions, and only 10% or so have distinct identifiers like a supplier code, model number or barcode. After a week or 2 they find they can't do it and ask for our help, and we take over. On average it took 2 of our staff 1-2 weeks to complete the task, manually searching our db for one of their records at a time. This was a big problem, since we only had enough resources to onboard 2-4 customers a month, meaning slow growth.

I realized when looking at different customers' databases that although the data was badly captured, it was consistently badly captured, similar to how crap file names will usually contain the letters 'asd' because they're typed with the left hand.

I then wrote an algorithm that fuzzy matched against our data and the past matches of other customers' data, creating a ranking algorithm similar to Google search. After auto-matching the majority of results, the top 10 ranked search results for each product in their db are shown to a human one at a time, and they either click the correct result or select "no match", repeating until it is done, at which point the algo includes the captured data when ranking future results.

It now takes a single staff member 1-2 hours to fully onboard a customer with 10-15k products, and it will continue to get faster and adapt to changes in language and naming conventions. Making it learn wasn't really my intention at the time; it was more a side effect of what I was trying to achieve. Completely blew my mind.
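The post doesn't show the actual algorithm, so the following is only a rough sketch of the idea as described: rank catalogue products by fuzzy string similarity, then boost products that humans previously confirmed for the same normalised name, so each confirmed match improves later rankings. It uses `difflib.SequenceMatcher` from Python's standard library as a stand-in for whatever matcher was really used; `rank_candidates`, `record_match` and `history_weight` are hypothetical:

```python
from collections import Counter
from difflib import SequenceMatcher
from typing import Dict, List, Tuple


def normalise(name: str) -> str:
    """Lower-case and collapse whitespace so trivial formatting differences don't count."""
    return " ".join(name.lower().split())


def rank_candidates(
    customer_name: str,
    catalogue: List[str],
    past_matches: Dict[str, Counter],
    top_n: int = 10,
    history_weight: float = 0.1,
) -> List[Tuple[str, float]]:
    """Score every catalogue product by string similarity, plus a bonus for each time a
    human previously confirmed that product for the same (normalised) customer name."""
    key = normalise(customer_name)
    history = past_matches.get(key, Counter())
    scored = [
        (product, SequenceMatcher(None, key, normalise(product)).ratio() + history_weight * history[product])
        for product in catalogue
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


def record_match(past_matches: Dict[str, Counter], customer_name: str, product: str) -> None:
    """Feed a human-confirmed match back in so it influences future rankings."""
    past_matches.setdefault(normalise(customer_name), Counter())[product] += 1


catalogue = ["Widget 10mm Steel", "Widget 12mm Steel", "Bolt M10"]
past: Dict[str, Counter] = {}
print(rank_candidates("widget 10mm stl", catalogue, past, top_n=3))
```

This sketch scores every catalogue product for every record; with 10-15k products per customer a real version would presumably narrow the candidates first (for example by a cheap index) before the fuzzy comparison.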

Why is everyone happy about Google Clips? It's the single scariest instance of a Big Brother appliance that exists today. What are they going to do with the data? They say it's to save memories of your kid or your dog. There's already something like that. It's called a brain and paying attention to your damn life. I don't want to be saved in your shitty memories just bc you are so insecure about remembering your fuck*ng memories.

I'm sorry for the outburst but that sh*t is solving a problem nobody had and it's getting applauded like those heaven's gate motherf*ckrs that say that life is improved by these shitty beliefs.

Building an interface for a client between industrial power quality meters and a database that serves a webapp of data.

The client had heard about a way of sending data between the meter and the Raspberry Pi from some manager at a big firm.

At the time we were using Modbus to connect the meter to a Raspberry Pi. This method was tested and proven to work: both devices could talk to each other in Modbus.

The client kept demanding that we use M-Bus and would not listen to any reason, because the firm had suggested it. In the end we went from Modbus to M-Bus to send the data to the Raspberry Pi, where the M-Bus was converted back to Modbus, because the meter could not communicate in M-Bus.

Really weird experience to program something so useless. But protesting about it was going nowhere and taking more time than the changes would take to implement.

Data Disinformation: the Next Big Problem

Automatic code generation LLMs like ChatGPT are capable of producing SQL snippets. Regardless of quality, those are capable of retrieving data (from prepared datasets) based on user prompts.

That data may, however, be garbage. This will lead to garbage decisions by lowly literate stakeholders.

Like with network neutrality and pii/psi ownership, we must act now to avoid yet another calamity.

Imagine a scenario where a middle-manager level illiterate barks some prompts to the corporate AI and it writes and runs an SQL query in company databases.

The AI outputs some interactive charts that show that the average worker spends 92.4 minutes on lunch daily.

The middle manager gets furious and enacts an Orwellian policy of facial recognition punch clock in the office.

Two months and millions of dollars in contractors later, and the middle manager checks the same prompt again... and the average lunch time is now 107.2 minutes!

Finally the middle manager gets a literate person to check the data... and the piece of shit SQL behind the number is sourcing from the "off-site scheduled meetings" database.

Why? because the dataset that does have the data for lunch breaks is labeled "labour board compliance 3", and the LLM thought that the metadata for the wrong dataset better matched the user's prompt.

Given the very real-world scenario of mislabeled data, LLMs' inability to understand what they are saying or accessing, and the average manager's complete data illiteracy, we might have to take some action to prepare for this type of tomfoolery.

I don't think that access restriction will save our souls here, decision-flumberers usually have the authority to overrule RACI/ACL restrictions anyway.

Making "data analysis" an AI-GMO-Free zone is laughable, that is simply not how the tech market works. Auto tools are coming to make our jobs harder and less productive, tech people!

I thought about detecting new automation-enhanced data access and visualization, and enacting awareness policies. But it would be of poor help, after a shithead middle manager gets hooked on a surreal indicator value it is nigh impossible to yank them out of it.

Gotta get this snowball rolling, we must have some idea of future AI housetraining best practices if we are to avoid a complete social-media style meltdown of data-driven processes.

Does anyone care to pitch in?

A couple of years ago, we decided to migrate our customers' data from one data center to another; this is the story of how it went well.

The product was a Facebook canvas and mobile game with 200M users, which represented approximately 500 GiB of data to move, stored in MySQL and Redis. The source was in Dallas, and the target was New York.

Because downtime prevents users from spending their money on our "free" game, we decided to avoid it as much as possible.

In our main MySQL table (manually sharded across 100 tables), we had a modification TIMESTAMP column. We decided to use it to check whether a user needed to be copied to the new database. The rest of the data consisted of a savegame stored as gzipped JSON in a LONGBLOB column.

We developed a program in Go to continuously track whether a user's data needed to be copied again every time progress had been made on their savegame. The process went like this: first the JSON was unzipped to detect bot users with no progress, which we simply dropped; then the data was exported to a custom binary file with fast-compressed data to reduce the file size. Next, the exported file was copied to the new servers using rsync, and a second Go program did the import on the new MySQL instances.

The 1st loop took 1 week to copy; the 2nd took 1 day; the 3rd a couple of hours, and so on. In the end, copying the latest versions of all the savegames took roughly a couple of minutes.
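The repeated-pass idea can be sketched with a watermark on the modification column: each pass copies only rows modified since the previous pass, so passes shrink as only recently active users are left. A minimal sketch using Python's built-in `sqlite3` in place of the sharded MySQL setup; it skips the bot filtering, custom binary export and rsync steps described above, and the `users` table, its columns and `copy_pass` are hypothetical:

```python
import sqlite3
from typing import Tuple

SCHEMA = "CREATE TABLE users (id INTEGER PRIMARY KEY, savegame BLOB, modified INTEGER)"


def copy_pass(source: sqlite3.Connection, target: sqlite3.Connection, since: int) -> Tuple[int, int]:
    """Copy every row modified after `since` and return (rows_copied, new_watermark).

    Caveat: with a strict `>`, a row updated again within the same timestamp tick as the
    watermark could be skipped, so a real cutover still needs a final consistency check.
    """
    rows = source.execute(
        "SELECT id, savegame, modified FROM users WHERE modified > ? ORDER BY modified",
        (since,),
    ).fetchall()
    # Upsert, so a user copied in an earlier pass is simply overwritten with newer progress
    target.executemany("INSERT OR REPLACE INTO users (id, savegame, modified) VALUES (?, ?, ?)", rows)
    target.commit()
    new_since = rows[-1][2] if rows else since
    return len(rows), new_since


source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for db in (source, target):
    db.execute(SCHEMA)
source.executemany("INSERT INTO users VALUES (?, ?, ?)", [(1, b"a", 1), (2, b"b", 2), (3, b"c", 3)])
source.commit()

copied, since = copy_pass(source, target, 0)      # first pass copies every user (3 rows)
source.execute("UPDATE users SET savegame = ?, modified = ? WHERE id = ?", (b"a2", 4, 1))
source.commit()
copied, since = copy_pass(source, target, since)  # next pass copies only user 1
print(copied, since)  # 1 4
```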

On the Redis side, some data was cache that we knew could be dropped without impacting the user experience. The rest was big bunches of data, so we simply SCANned each Redis instance and produced the same kind of custom binary files. The process was fast enough to launch just once during the migration: it took 15 minutes because we were able to parallelise across the 22 instances.

It took 6 months of meticulous preparation. On D-day the process went smoothly, but we shut down our service for one long hour because of a typo in a domain name.

I fixed my big data processing code, I think. If all works as planned, I'll wake up to some processed data that I can do some statistical analysis on... I hope...


I never knew that I was a good SQL mentor, especially at PL/SQL.

I gave a new member of my team the task of filling 5 tables with data from 15 other tables.

I briefed him well on the tables' contents and structure. He spent about 3 days creating 25 different queries in order to fill the 5 tables.

After I saw the 25 queries, I told him that he could do it with 1 main query and 5 insert statements.

So I spent 1 hour of training to build, run and explain how to create the best SQL statements for this task.

(First 5 minutes)

It looked so simple at the beginning, starting with 1 simple join; after some steps he lost track of what I was doing.

(Remaining 55 minutes)

I explained the SQL statements I had created and how Oracle works.

Now, every time he meets me, he is so thankful that I taught him all those Oracle SQL tips in 1 hour.

Now he works only with big data and he loves SQL.
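One way to read "1 main query and 5 insert statements" is: run the expensive join once into a staging table, then fill each target table with an `INSERT ... SELECT` from it. A minimal sketch using Python's built-in `sqlite3`, with a SQLite temp table standing in for whatever staging mechanism was used in Oracle (the story doesn't say); the two source tables, two target tables and their columns are all hypothetical, scaled down from the 15 and 5 in the story:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
CREATE TABLE sales_by_country (country TEXT, total REAL);
CREATE TABLE big_customers (name TEXT, total REAL);

INSERT INTO customers VALUES (1, 'Ann', 'NL'), (2, 'Bob', 'DE'), (3, 'Cid', 'NL');
INSERT INTO orders VALUES (1, 1, 100), (2, 1, 50), (3, 2, 20), (4, 3, 300);

-- The one main query: do the join once and keep the result in a staging table
CREATE TEMP TABLE staged AS
SELECT c.name, c.country, SUM(o.amount) AS total
FROM customers c JOIN orders o ON o.customer_id = c.id
GROUP BY c.id, c.name, c.country;

-- Each target table is then filled by a single INSERT ... SELECT from the staged rows
INSERT INTO sales_by_country SELECT country, SUM(total) FROM staged GROUP BY country;
INSERT INTO big_customers SELECT name, total FROM staged WHERE total >= 150;
""")

print(sorted(conn.execute("SELECT country, total FROM sales_by_country").fetchall()))
# [('DE', 20.0), ('NL', 450.0)]
```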

The scrum master for the project I'm working on decided to help out with changing some code (I'll add that he's got a master's in software engineering and is very proud of it... aka... big ego). It took him two days... yes, two days to write the attached code.

I reviewed his code and sent back a response (the code took about 15 seconds to write), including the link to the logging documentation explaining which fields were and were not necessary. Not sure how it will look on devRant...

```csharp
var data = new InformationalDataPoint
{
    Properties =
    {
        ["RMANumber"] = rma,
        ["InvoiceID"] = invoiceId
    }
};

Logger.Log(data);
```

He's stopped talking to me. Our next scrum meeting with the product owner should be ...um...awkward.


Encryption, Data, Servers, Protection, Certificate

oOOO WEE, I use big ol' words, so I must be a hacker.

I've just realised I don't care if Facebook are sharing my data... I mean, what, I get more ads for men with big penises and ripped abs? So what...

INTERVIEW. It tells you everything about the company. I recently applied to a "big" company for the position of ML Engineer. The job description was like "someone with good knowledge of visual recognition, deep learning, advanced ML stuff, etc." I thought, great, I might be a good fit. A guy called me the next day. He introduced himself as the manager of the Data Science team, with 8+ years of experience, and started the talk by saying "it is just an informal intro". But things escalated very quickly. He started firing Data Science questions at me, asking them in a very bookish way, and told me to recite formulas (like, big formulas). When I explained a concept to him, he didn't understand any of it; he wanted a very bookish answer. I quickly realized that I know more about ML stuff than he does (not a big deal) and that he is arrogant as fuck (not accepting my answers). Plus, he has no knowledge of Deep Learning. At the very end, he told me, "man, you need to clear up your fundamentals". WTH??? My fundamentals. Okay, I am not Einstein or Hinton, but I know I was answering correctly. I have read books, research papers, blogs and all. When I don't know something, I say so straight away. I don't cook up answers. So the "interview" ended. I looked the man up on LinkedIn and found out he teaches Data Science and ML to college students for a fee of 50,000 INR. That's a big amount, considering the things he teaches!! You can find the same stuff (of far higher quality) free of cost (on Coursera, Udacity, YouTube, free books, what not). He is a cheater, making fools of college students. That is why I sometimes hate "experience". 8+ years of exp and he is such an a**hole!! BTW, I thanked God for saving me from that company. Can't imagine having such an arrogant boss.

TL;DR: Be vigilant during interviews. They tell you a lot about the company.

At one point, my laptop's hard drive went down. Turns out, Windows had written some garbage data to the MFT and fucked up the file structure. Luckily I was able to restore a big chunk of the data using Recuva. After saving the most important files, I cleaned the disk and reinstalled Windows. All good so far. I put the laptop's drive and my recovery disk into my desktop to copy the files back. During the install, Windows had forced me to make an account, which I wanted to delete. So I ran "rmdir /users /s" and went to grab a cup of coffee. Turns out, cmd was pointed at my recovery disk instead of my laptop disk. My whole backup: wiped.

What if people, life, humanity, the universe is just a cluster of CPUs running a giant Recurrent Neural Network algorithm? 🤔

-Sun and food == power source

-People == semiconductors

-Earth/a Galaxy == a single CPU

-Universe == a local grouping of nearby nodes; so far the ones we've discovered are dead or not using the same data transport protocol/port as us

-Universal Expansion == the search algorithm

-Black holes == sector failures

-Big Bang == God turns on his PC, starts the program

-Big Crunch == rm -rf

https://youtu.be/hkDD03yeLnU?t=8s

"I'll create a GUI interface using Visual Basic, see if I can track an IP address." 🤨🤔

I'll just blockchain a neural network for AI using big data in Delphi.

The word "code" being used as a verb,

"Cloud",

"Big Data",

Recruiters,

Scratch,

Any other crappy "Teach your kid to code!" Product,

And finally, mondays.

This is the comprehensive list of buzzwords and things in general that make me want to die right now.

Is it just me, or are the media / journalists once again putting a stupidly unfair pessimistic spin on that SpaceX launch?

"SpaceX rocket launches but explodes shortly into flight"

"Musk's SpaceX big rocket explodes on test flight"

"SpaceX rocket explosion: None injured or killed"

They've said time and time again, it's the first test of a massively complex rocket that's bigger than anything that's ever gone before it, and success is just defined as "getting off the launch pad" and collecting data. They did that and then some.

But instead of spreading excitement about the data, the fact it launched, that it's a world first, etc. - it's all doom and gloom, implying that the whole thing was a failure and people could have died 🙄

And people wonder why I have a low opinion of journalists.

So I joined a course in big data analysis, and they set up a lab specifically for us, pulling us away from the usual computer labs.

AND GUESS WHAT, THEY DON'T EVEN BLOODY HAVE MYSQL INSTALLED. THEY'VE CHARGED ME A FORTUNE AND THEY DIDN'T EVEN CARE TO INSTALL THE BASIC SOFTWARE, AND IT'S ALREADY BEEN 2 WEEKS SINCE THE START OF THE COURSE AND NOTHING.

F**king hate this man!!!!

Testers in my team have been told like 1000 times to follow the style guides that we all follow. That's not that big a deal. The big deal is that they were put on this project without any mathematics background, when the project is all about geometric stuff. So after I, as a developer, have had to put in so many hours explaining to them why the tests don't cover the requirements, or why the tests are red because they initialize the data completely wrong, I ask them, pretty please, to do the coding-style checks themselves. And I have already spent 4 hours reviewing code, because not only do I have to go through the maths and really obscure test code to make sure the tests are correct, but for every line I have to write at least 4 or 5 style corrections. And some aren't even about the code being clean, but about using the wrong namespaces or not sticking to the internal data types. For fuck's sake, this is embedded software and has to comply with certain security standards...

Can't get over how many big companies get away with poor/no documentation for their own APIs. This past week I have been working with a large insurance company that explained only via email threads which endpoint to send files to and which username I could use to get this to work.

Last month I also worked with a major courier service that had only a two-page document for all their methods, and one of the pages explained the transportation of data via imagery, haha.

!rant

Does anyone know what the **day-to-day** differences are between working in IT (banks, hedge funds) vs tech (Google, Facebook, Netflix)?

In my mind, I see Hell and Heaven. And there's a giant wall in between called "technical interviews + algorithms and data structures".

I'm on the Hell side... And not sure if I should climb the wall 😔

Is the wall even that big?

Well, my country has a degree called Bsc.CSIT, which stands for Bachelor of Science in Computer Science and Information Technology. I completed that degree and was employed right after graduating. I have worked in two offices and no one cares what degree I have.

So I think a degree is not that necessary here in Nepal, as long as you can get the job done.

Now I am about to pursue a Big Data-related degree; I hope it is not as worthless as my current one.

A big project at my company had an annoying race condition that caused data to get deleted: when two processes finished in the wrong order, they hit the DB and overwrote each other's work.

Long story short: I fixed the bug, and in the process the codebase shrank by 60%. I didn't have to delete the rest of the code, but the bug was caused by a function in the legacy section, and I found out that it was the only function from that section still in use.

So I deleted the legacy section and rewrote the function so it upserts. And bam: smaller, cleaner code :)
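To make the upsert fix concrete, here is a minimal in-memory sketch in Java. The story's real code and database aren't shown, so a `Map` stands in for the table, and `UpsertSketch`, `replaceAll` and `upsert` are hypothetical names. `replaceAll` mimics a job that wipes the table and writes back only its own rows, so whichever job finishes last wins; `upsert` inserts each row if its key is new and updates it otherwise, leaving other jobs' rows alone. In an actual database the equivalent is usually `INSERT ... ON CONFLICT ... DO UPDATE` (PostgreSQL, SQLite) or a `MERGE` statement, depending on the engine.

```java
import java.util.Map;
import java.util.TreeMap;

public class UpsertSketch {

    // Hypothetical buggy write path: wipe the whole "table", then write only this job's rows.
    // If two jobs finish in the wrong order, the later one erases the earlier one's work.
    public static void replaceAll(Map<String, String> table, Map<String, String> jobRows) {
        table.clear();
        table.putAll(jobRows);
    }

    // Upsert write path: insert each row if its key is new, otherwise update it in place.
    // Rows written by other jobs are never deleted.
    public static void upsert(Map<String, String> table, Map<String, String> jobRows) {
        for (Map.Entry<String, String> row : jobRows.entrySet()) {
            table.put(row.getKey(), row.getValue()); // put() is insert-or-update for a Map
        }
    }

    public static void main(String[] args) {
        Map<String, String> jobA = Map.of("order-1", "shipped", "order-2", "shipped");
        Map<String, String> jobB = Map.of("order-3", "pending");

        Map<String, String> buggy = new TreeMap<>(); // TreeMap only so the printout is sorted
        replaceAll(buggy, jobA);
        replaceAll(buggy, jobB); // job B finishes last and wipes out job A's rows
        System.out.println("replaceAll: " + buggy); // {order-3=pending}

        Map<String, String> fixed = new TreeMap<>();
        upsert(fixed, jobA);
        upsert(fixed, jobB);
        System.out.println("upsert:     " + fixed); // {order-1=shipped, order-2=shipped, order-3=pending}
    }
}
```

This only removes the "last writer deletes everything" failure; if two jobs can legitimately write the same key, you still have to decide which value should win.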

Manager:

Hey, this client sent us a list of all their employees in this format... we would tell them to input it themselves, but they're a pretty big client, so could you do it?

Me: Sure

*3 hours later*

... why am I taking so long...?

I look back at my code and see that I've built a whole framework for inputting data into our system. It not only accepts the client's format but is actually pretty abstract and extensible to any format you'd like, all with thorough documentation.

*FACEPALM*

Why can I do this for menial jobs and not for our main code?

Agile development of a decentralised AI, using a neural network based on Blockchain technology for big data.

Is that enough buzzwords to make an employer happy? :p

I will major in AI. No, I will major in Big Data... wait, I want to major in cloud too. I think I should first complete the courses I enrolled in on Cloud Academy, or the dozens of courses I enrolled in on Udemy covering every possible domain! So many technologies, so many dreams!

I don't know why they asked so many algorithms, data structures, and big-O questions during the interview, when all they wanted me to do was maintain some legacy, tightly coupled spaghetti code with no architecture, documentation, tests, or any kind of engineering behind it :/

What would be the better approach in Java for loading files that are very big, or for loading a very large number of files?

1) Loading data in parallel through multiple threads

2) Loading data in series in a single thread

3) Any other approach?

Just asking because the loading time varies between the two cases.
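There probably isn't one right answer: whether threads help depends mostly on the storage (an SSD or network share often benefits from several concurrent reads, while a spinning disk may get slower because of extra seeking) and on whether the work done after reading is CPU-bound. Measuring both options on the real data is the safest way to decide. Below is a minimal sketch, assuming Java 11+; `FileLoading` and its methods are hypothetical names. `loadSerial` reads the files one after another, `loadParallel` submits one read per file to a fixed-size thread pool, and `countLines` shows a third option for a single huge file: streaming it line by line instead of loading it all into memory.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.stream.Stream;

public class FileLoading {

    // Option 2: read every file, one after another, on the calling thread.
    public static long loadSerial(List<Path> files) throws IOException {
        long totalBytes = 0;
        for (Path p : files) {
            totalBytes += Files.readAllBytes(p).length; // whole file in memory: fine for small/medium files
        }
        return totalBytes;
    }

    // Option 1: one read task per file, executed by a bounded thread pool.
    public static long loadParallel(List<Path> files, int threads) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<Integer>> pending = new ArrayList<>();
            for (Path p : files) {
                pending.add(pool.submit(() -> Files.readAllBytes(p).length));
            }
            long totalBytes = 0;
            for (Future<Integer> f : pending) {
                totalBytes += f.get(); // an IOException surfaces here wrapped in an ExecutionException
            }
            return totalBytes;
        } finally {
            pool.shutdown(); // let the worker threads exit once queued tasks are done
        }
    }

    // Option 3, for a single very large file: stream it line by line instead of loading it whole.
    public static long countLines(Path file) throws IOException {
        try (Stream<String> lines = Files.lines(file)) {
            return lines.count();
        }
    }

    public static void main(String[] args) throws Exception {
        // Throwaway demo data; point this at the real files when measuring.
        Path dir = Files.createTempDirectory("load-demo");
        List<Path> files = new ArrayList<>();
        for (int i = 0; i < 8; i++) {
            Path p = dir.resolve("part-" + i + ".txt");
            Files.writeString(p, "line\n".repeat(10_000));
            files.add(p);
        }

        long t0 = System.nanoTime();
        long serial = loadSerial(files);
        long t1 = System.nanoTime();
        long parallel = loadParallel(files, 4);
        long t2 = System.nanoTime();

        System.out.printf("serial:   %d bytes in %d ms%n", serial, (t1 - t0) / 1_000_000);
        System.out.printf("parallel: %d bytes in %d ms%n", parallel, (t2 - t1) / 1_000_000);
        System.out.println("lines in part-0: " + countLines(files.get(0)));
    }
}
```

Note that the serial run warms the OS page cache, so in this demo the parallel run that follows gets an unfair head start; for a fair comparison, repeat the runs in both orders or test on a cold cache.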

I hate doing front-end development...

I was hired along with another dev to build a webapp to manage the personnel of this big (2000+ employees) company.

I made the backend and some of the frontend (mainly handling the data movement between the two), but my partner was let go after we delivered a first version because "there was not enough work for both of us".

The backlog is months of work for me and now I have to do everything and it's wearing me down...

I want to quit but it's paying well and I don't want to search for something new.

What do?

For all the hate that Java gets, this *not rant* is to appreciate Spring Boot/Cloud & Netty, without which I would not be half as productive as I am at my job.

Just to highlight a few of these life savers:

- Spring Security: many features, but I will just mention robust authorization out of the box.

- Netflix Feign & Hystrix: easy circuit breaking & fallback pattern.

- Spring Data: consistent data access patterns & out-of-the-box functionality regardless of the data source (e.g. relational & document DBs, Redis, etc.), with integrations for managed offerings as well. The abstraction here is something to marvel at.

- Spring Boot Actuator: out-of-the-box health checks for all integrations (DB, Redis, Mail, Disk, RabbitMQ, etc.), which are crucial for Kubernetes readiness/liveness health checks.

- Spring Cloud Stream: another abstraction, this time for the messaging layer, that decouples application logic from the binder, i.e. Kafka, RabbitMQ, etc.

- SpringFox Swagger: fantastic Swagger documentation integration that keeps API docs always up to date via annotations, which can be converted to a swagger.yml if need be.

- Last but not least, Netty: implementing secure non-blocking network applications is not trivial. This framework has made it easier for us to implement a protocol server on top of UDP using Java & all the support that comes with Spring.

For these & many more, I am grateful for Java & the big, big community of devs that love & support it.
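For anyone who hasn't used Hystrix, the circuit-breaking and fallback idea from the Feign & Hystrix bullet can be sketched in plain Java. This is not Hystrix's API; it's a hypothetical, minimal breaker assuming a simple policy: after `failureThreshold` consecutive failures the circuit opens and calls go straight to the fallback, and once `openMillis` has elapsed one probe call is let through (half-open), which closes the circuit again if it succeeds.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

public class CircuitBreakerSketch {
    private final int failureThreshold;
    private final long openMillis;
    private final LongSupplier clock; // injectable so the behaviour can be tested without sleeping
    private int consecutiveFailures = 0;
    private long openedAt = -1; // -1 while the circuit is closed

    public CircuitBreakerSketch(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    public synchronized <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (openedAt >= 0 && clock.getAsLong() - openedAt < openMillis) {
            return fallback.get(); // open: fail fast without touching the remote service
        }
        // Closed, or open long enough that this call goes through as a probe (half-open).
        try {
            T result = action.get();
            consecutiveFailures = 0;
            openedAt = -1; // success closes the circuit
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (openedAt >= 0 || consecutiveFailures >= failureThreshold) {
                openedAt = clock.getAsLong(); // trip, or re-trip after a failed probe
            }
            return fallback.get();
        }
    }

    public synchronized boolean isOpen() {
        return openedAt >= 0;
    }

    public static void main(String[] args) {
        CircuitBreakerSketch breaker = new CircuitBreakerSketch(3, 5_000, System::currentTimeMillis);
        Supplier<String> flakyRemoteCall = () -> { throw new RuntimeException("service down"); };
        for (int i = 0; i < 5; i++) {
            String answer = breaker.call(flakyRemoteCall, () -> "cached default");
            System.out.println("call " + i + ": " + answer + ", open=" + breaker.isOpen());
        }
    }
}
```

Injecting the clock is just a testability choice. Libraries such as Hystrix go well beyond this sketch: they also enforce timeouts, compute error rates over rolling windows, and publish metrics.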

Client: "...and you will send us the big data via mail... right?"

In the end we sent a 100 KB file.

Can anyone tell me everything a developer should follow in order to stay up to date? It's just too long a list.

I started as a back-end developer, became a big data developer, and am now moving to full-stack development. Now I don't know who I am anymore.

Is Python a good language for building a REST API? Personally, I don't have any experience with Python yet, but from what I've gathered, Python is great for scripting and big data.

I have a bit of knowledge of Node.js, and I really like its structure; it's so easy to make an API using Express.js.

I've already read a bunch of articles about it, but I'd like to know how the community feels about the two languages.