My face when a developer suggests using Windows Server instead of Linux for speed and security reasons

I absolutely HATE "web developers" who call you in to fix their FooBar'd mess, yet can't stop themselves from dictating what you should and shouldn't do, especially when they have no idea what they're doing.

So I get called in to a job improving the performance of a Magento site (and let's just say I have no love for Magento for a number of reasons) because this "developer" enabled Redis and expected everything to be lightning fast. Maybe he thought "Redis" was the name of a magical sorcerer living in the server. A master conjurer capable of weaving mystical time-altering spells to inexplicably improve the performance. Who knows?

This guy claims he spent "months" trying to figure out why the website couldn't load faster than 7 seconds at best, and his employer is demanding a resolution so he stops losing conversions. I usually try to avoid Magento because of all the headaches that come with it, but I figured "sure, why not?" I mean, he built the website less than a year ago, so how bad can it really be? Well...let's see how fast you all can facepalm:

1.) The website was built brand new on Magento 1.9.2.4...what? I mean, if this were built a few years back, that would be a different story, but building a fresh Magento website in 2017 in 1.x? I asked him why he did that...his answer absolutely floored me: "because PHP 5.5 was the best choice at the time for speed and performance..." What?!

2.) The ONLY optimization done on the website was Redis cache being enabled. No merged CSS/JS, no use of a CDN, no image optimization, no gzip, no expires rules. Just Redis...

3.) Now, to say the website was poorly coded would be an understatement. This wasn't the worst code I've seen, but it was far from acceptable. There was no organization whatsoever. Templates and skin assets were being called from 12 different locations across the server, which made tracking down a snippet to fix downright annoying.

But not only that, the home page itself had 83 custom database queries to load the products on the page. He said this was so he could load products from several different categories and custom tables to show on the page. I asked him why he didn't just call a few join queries, and he had no idea what I was talking about.
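
To illustrate the "few join queries" point: instead of one query per category or custom table, a single query can join the product, category and extra tables and filter on several categories at once. This is a minimal sketch, assuming SQLite as a stand-in for MySQL and a made-up three-table schema (`categories`, `products`, `promos`); Magento's real catalog schema is far more involved.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """In-memory catalog with a hypothetical schema, just for the demonstration."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE categories (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE products (
            id INTEGER PRIMARY KEY,
            name TEXT NOT NULL,
            category_id INTEGER NOT NULL REFERENCES categories(id)
        );
        CREATE TABLE promos (product_id INTEGER REFERENCES products(id), label TEXT);
        INSERT INTO categories VALUES (1, 'Shoes'), (2, 'Hats'), (3, 'Bags');
        INSERT INTO products VALUES
            (10, 'Runner', 1), (11, 'Boot', 1), (20, 'Cap', 2),
            (30, 'Tote', 3), (31, 'Backpack', 3);
        INSERT INTO promos VALUES (10, 'New'), (30, 'Sale');
        """
    )
    return conn


def home_page_products(conn: sqlite3.Connection, category_ids: list) -> list:
    """Products from several categories plus an optional promo label, in ONE query."""
    placeholders = ",".join("?" for _ in category_ids)  # only "?" marks are interpolated
    sql = f"""
        SELECT c.name, p.name, pr.label
        FROM products AS p
        JOIN categories AS c ON c.id = p.category_id
        LEFT JOIN promos AS pr ON pr.product_id = p.id  -- keep products without a promo
        WHERE p.category_id IN ({placeholders})
        ORDER BY c.name, p.name
    """
    return conn.execute(sql, list(category_ids)).fetchall()


if __name__ == "__main__":
    db = build_demo_db()
    for row in home_page_products(db, [1, 3]):
        print(row)
```

One round trip replaces a loop of per-category queries; the `LEFT JOIN` keeps products that have no row in the extra table.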

4.) Almost every image on the website was a .PNG file, 2000x2000 px and lossless. The home page alone was 22MB just from images.

There were several other issues, but those 4 should be enough to paint a good picture. The client wanted this all done in a week for less than $500. We laughed. But we agreed on the price only because of a long relationship and because they have some referrals they got us in the door with. But we told them it would get done on our time, not theirs. So I copied the website to our server as a test bed and got to work.

After numerous hours of bug fixes, recoding queries, disabling Redis in favor of a larger InnoDB cache (more on that later), image optimization, JS/CSS/HTML combining, render-unblocking and minification, lazy-loading images, tweaking Magento to work with PHP 7, installing OPcache and setting up basic .htaccess optimizations, we smashed the loading time down to 1.2 seconds total, and most of that time was spent on external JavaScript plugins deemed "necessary". Time to First Byte went from a staggering 2.2 seconds to about 45 ms. Needless to say, we kicked its ass.

So I show their developer the changes and he's stunned. He says he'll have the hosting provider set up a new server to migrate the optimized site to and cut over to, because taking the live website down for maintenance for even an hour or two in the middle of the night is "unacceptable".

So trying to be cool about it, I tell him I'd be happy to configure the server to the exact specifications needed. He says "we can't do that". I look at him confused. "What do you mean we 'can't'?" He tells me that even though this is a dedicated server, the provider doesn't allow any access other than a jailed shell account and cPanel access. What?! This is a company averaging 3 million+ per year in revenue. Why don't they have an IT manager overseeing everything? Apparently for them, they're too cheap for that, so they went with a "managed dedicated server", "managed" apparently meaning "you only get to use it like a shared host".

So after countless phone calls arguing with the hosting provider, they agree to make our changes. Then the client's developer starts getting nasty out of nowhere. He says my optimizations are not acceptable because I'm not using Redis cache, and now the client is threatening to walk away without paying us.

So I guess the overall message from this rant is not so much about the situation, but the developer and countless others like him that are clueless, but try to speak from a position of authority.

If we as developers stop challenging each other to measuring contests and learn to let go when we need help, we can get a lot more done and avoid losing clients. </rant>

A typical demo...

Me: We added validation, server communication, caching....

Customer: Meh...

Me: We fixed bugs, sped up queries, implemented X features.

Customer: Meh...

Me: We surpassed the speed of light, transcended to another plane of reality, cured cancer, brought peace to the galaxy.

Customer: Meh...

UI Designer: I prepared these sketches for the UI

Customer: Wow, so innovative, look at those beautiful transitions, even mobile design, just wow

Me: *dies*

A fellow intern recommended using Windows Server for security and speed reasons.

A few details about the situation: the Windows server had been hacked through a vulnerability that had no patch released yet, and this had happened multiple times that year. Also, the company was migrating everything to Linux (servers).

The senior/lead programmer literally gave him a GTFO face and pointed at the door.

Everyone was giving him the GTFO face, by the way. He didn't know how fast he had to get out 🤣

Dev: Hey our current server is starting to chug a bit. Can I get approved for $1200 additional spend to double the speed?

Manager: *Sharp inhale*. We need this project to cost as little as possible, we really can’t justify spending any additional money for any reason right now.

*2 days later*

Manager: YOU ARE APPROVED FOR $100,000 TO IMMEDIATELY IMPLEMENT SOMETHING RELATED TO NFTs IN ANY OF OUR APPS. THE BUSINESS NEEDS TO EXPAND INTO THE METAVERSE ASAP IMMEDIATELY. I NEED AN ETA BY EOD AS TO WHEN THIS CAN BE ROLLED OUT.

Dev: …

[This makes me sound really bad at first, please read the whole thing]

Back when I first started freelancing, I worked for a client who ran a game server hosting company. My job was to improve their system for updating game servers. This was one of my first clients, and I didn't dare question the fact that he had me working on the production environment, since they didn't have a development one set up. I came to regret that decision when, out of nowhere during the first test, files just started deleting. I panicked, as one would, and tried to stop the webserver it was running on, but oh no, he hadn't given me access to any of that. I thought, well shit, I might as well see where I fucked up, since it was midnight for him and I couldn't get hold of him. I looked at every single line hundreds of times trying to see why it would have started deleting files. I found no cause. Exhausted (it was 6am by this point), I pretty much passed out.

I woke up around 5 hours later with my face on my keyboard (I know you've all done that), only to see a good 30 messages from the client screaming at me. It turns out that during that time every single client's game server had been deleted. Before responding and begging for forgiveness, I decided to take another crack at finding the root of the problem. It wasn't my fault. I had found the cause! It turns out a previous programmer had a script that would run "rm -rf" + (insert file name here) on the old server files, only he had fucked up the line and it would run "rm -rf /". I have never felt more relieved in my life. This script had been disabled by the original programmer, but the client had set it to run again so that I could remake the system. Now, I was never told about this specific script, as it was for a game they didn't host anymore.
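
The rant doesn't show the exact broken line, so here is only a generic sketch of the kind of guard that stops "rm -rf" + name from ever turning into "rm -rf /": refuse empty names, and refuse any target that resolves to, or escapes, the intended base directory. It is written in Python, and the names `resolve_delete_target`, `delete_old_server_files` and the `/srv/games` path are hypothetical.

```python
import shutil
from pathlib import Path


def resolve_delete_target(base_dir: str, name: str) -> Path:
    """Return base_dir/name, or raise if deleting it could hit anything outside base_dir."""
    if not name or not name.strip():
        raise ValueError("refusing to delete: empty file name")
    base = Path(base_dir).resolve()
    # Joining an absolute name ("/", "/etc") replaces base entirely; ".." can climb out of it.
    target = (base / name).resolve()
    if target == base or base not in target.parents:
        raise ValueError(f"refusing to delete {target}: not strictly inside {base}")
    return target


def delete_old_server_files(base_dir: str, name: str) -> None:
    """Hypothetical safe replacement for running `rm -rf` + name."""
    shutil.rmtree(resolve_delete_target(base_dir, name))


if __name__ == "__main__":
    print(resolve_delete_target("/srv/games", "old_game"))  # fine
    try:
        resolve_delete_target("/srv/games", "/")              # the disaster case
    except ValueError as err:
        print(err)
```

If the script stays in shell, bash's `${var:?}` expansion gives a similar safety net: `rm -rf -- "${base:?}/${name:?}"` aborts instead of running when either variable is empty.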

I realise this is getting very long so I'll speed it up a bit.

He didn't want to take the blame and said I had added the code and it was all my fault. He told me I could either work live chat support at his company for 3 months or pay $10,000. Through all of this, I had at least made sure to document what I was doing and back up every single file before I touched it, which saved my ass when he started threatening legal action. I showed him my proof, which resulted in him trying to guilt-trip me into working for him for free, as he had lost about 80% of his clients. By this point I had been abused constantly for 4 weeks by this son of a bitch. As I was underage, he said that if we went to court he'd take my parents' house and make them live on the street. So how does one respond? A simple "Fuck off you cunt" and a block.

That was over 8 years ago and I haven't heard from him since.

If you've made it this far, congrats, you deserve a cookie!

One comment from @Fast-Nop made me remember something I had promised myself I wouldn't. Specifically, the USB thing.

So there I was, Lieutenant Jr on a warship (not the one my previous rants refer to), with my main duties as navigation officer, and secondary (and unofficial) ones as tech support and all-around "computer guy".

To those of you who don't know what horrors this demonic brand pertains to: I envy you. But I digress. On the ship, we had Ethernet cabling and switches, but no DHCP, no server, not a thing. My proposal was shot down by the CO within 2 minutes. Yet we had a curious "network". As my fellow... colleagues had invented it, we had something akin to token ring, but instead of tokens, we had low-rank personnel running around with USB sticks, and as for "rings", well, anyone could snatch up a USB carrier and load his data and instructions onto the "token". What on earth could go wrong with that system?

What indeed.

One USB stick got infected with malware from a nearby ship; I still don't know how. Said malware performed the following observable actions (yes, I did some malware analysis; as I said before, I am not paid enough):

- It moved the contents of any writable medium into a folder with an empty (or space) name on that medium. Windows didn't show that folder, so it became "invisible"; Linux/Mac showed it just fine.

- It created a shortcut on the root folder of said medium, right to the malware. Executing the shortcut executed the malware and opened a new window with the "hidden" folder.

Childishly simple, right? If only you knew. If only you knew the horrors, the loss of faith in humanity (which is really bad when you have access to munitions, explosives and heavy weaponry).

People executed the malware ON PURPOSE. Some actually DISABLED their AV to "access their files". I ran amok for an entire WEEK to try to keep this contained. But... I underestimated the USB-token-ring-whatever protocol's speed and the strength of a user's stupidity. PCs that I cleaned got infected AGAIN within HOURS.

I had to ask the CO to order a total shutdown and have all USB sticks and PCs turned over to me. I spent the most fun weekend cleaning 20-30 PCs and 9 USB sticks. What fun!

What fun, morons. Now I'll have nightmares about those days again.

Never in my life have I been as scared as today.

I recently left a big company to work for a small one as the first internal developer.

Had a small issue on the production server. The fix was easy: just remove a single table entry. And... *drum roll*... I forgot to add a WHERE clause. All orders were lost.
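
A habit that would have caught the missing WHERE clause: run the DELETE inside a transaction and commit only if it touched exactly the number of rows you expected. This is a minimal sketch, assuming SQLite via Python's `sqlite3` as a stand-in for whatever database the company actually ran; `guarded_delete` and the `orders` table are hypothetical.

```python
import sqlite3


def guarded_delete(conn: sqlite3.Connection, sql: str, params=(), expected: int = 1) -> int:
    """Run a DELETE in a transaction; commit only if it hit exactly `expected` rows."""
    if not sql.lstrip().upper().startswith("DELETE"):
        raise ValueError("guarded_delete only runs DELETE statements")
    cur = conn.execute(sql, params)  # sqlite3 implicitly opens a transaction before DML
    if cur.rowcount != expected:
        conn.rollback()              # nothing has been committed yet, so this undoes it
        raise RuntimeError(
            f"refused: statement matched {cur.rowcount} rows, expected {expected}"
        )
    conn.commit()
    return cur.rowcount


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
    db.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0), (3, 7.25)])
    db.commit()
    try:
        guarded_delete(db, "DELETE FROM orders")  # the forgotten WHERE clause
    except RuntimeError as err:
        print(err)
    print(guarded_delete(db, "DELETE FROM orders WHERE id = ?", (2,)))
```

Most SQL clients allow the same pattern by hand: `BEGIN`, run the DELETE, check the affected-row count, then `COMMIT` or `ROLLBACK`.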

No idea if we had backups or anything, I quickly called the one other IT dude in the company.

He had no clue where the backups were or how to find them.

Having some experience with Nmap, I quickly scanned our network and found a NAS device.

There was a backup, a whole VHD backup. 300GB of it, and the download speed was around 512kb/s. No way I could fix it before management found out, but then an idea came to mind: good old glorious 7zip. I managed to extract only the database files, sent them to the server and quickly swapped them in. Everything was fine... The manager connected 5 minutes later. Scariest 45 minutes of my life...

Sooooo me and the lead dev got placed in the wrong job classification at work.

Without sounding too mean, we are placed under the same descriptor and pay scale reserved for secretaries, janitors and the maintenance staff at work (we work for a college as developers), whilst our coworker who manages the CMS got the correct classification.

The manager went apeshit because the guidelines state that:

- Making software products

- Administration of DBs

- Server maintenance and troubleshooting

- Security (network)

And a lot more shit is covered on the exemption list, all of it things we do by a wide fucking margin. The classification would technically prohibit us from developing software, and the whole IT department went apeshit over it, since he (the lead developer) refuses (rightfully so) to touch anything and does basically nothing other than generate reports.

It's a fun situation. While we both got a substantial raise in salary (go figure), we also got demoted at the same time.

There is a department in IT that deals with the databases for other major applications. Their title is "programmers", yet for some reason the lead and I end up writing all the SQL code they ever need. They make waaaaay more money than the lead and I do, even in the correct classification.

Resolution: the manager is working with the head of the department to correct this blasphemy, WHILE asking for higher pay than even the "programmers" get.

I love this woman. She has balls, man. When the president of the school paraded around the office asking for an update on a high-priority app, she said I was being gracious enough to work on it even though I'm not supposed to. The fucking prick asked if I could speed it up, to which she said that I do most of my work on my off time, which by law I now cannot do for the school, and that she does not expect any of her devs to do jack shit unless this gets fixed quick. With the correct pay.

Naturally, the president did not like that predicament and thus urged the HR department (which is now globally hated, since they fucked up everyone's classification) to fix it.

Dunno if I will get above the pay that she requested. But seeing that royal amount of LADY BALLS really means something to me, which is why I would not trade that woman for a job at any of my dream workplaces.

Meanwhile, the stress put my lead dev, a diabetic with 12 years of service, in the hospital. Fuck the HR department for real, fuck the VPs of the school who fucked this up royally, and fuck people in this city in general. I really care about my team, and the lead dev is one of my best friends and a good developer. This shit will not fucking go unnoticed, and the HR department is now low priority for the software we build for them.

Still, I am amazed to have a manager who actually looks out for us instead of putting on a nice face for the pricks who screwed us over.

I have been working since I was 16, went through the Army, am 27 now, and this is the first time I have seen such a manager.

She can't read this, but she knows how much I appreciate her.

"Fuck JavaScript, it's such a shitty language" seems to be quite a common rant today. It seems as if JS is actually getting more hate than PHP, which is certainly odd, considering the stereotype.

So, as someone who has spent a lot of time in JS and a lot of time elsewhere, here are my views. Please, discuss your opinions with me as well. I am genuinely interested in an intelligent conversation about this topic.

So here's my background: I learned HTML/CSS/JS, in that order, when I was 12 because I liked computers. I was pretty shitty at JS until I was at least 15, but you get the point: I've had it sploshing about in my brain for a while.

Now, JS certainly has its quirks, no doubt, but there's nothing about the language itself that I would say makes it shitty. It's a very easy language to use, but it isn't overdeveloped like VB.net (or, as I like to call it, TheresAFunctionForThat).

Most of the hate is centered around JS being used for a very broad range of systems. I doubt JS would be in the rant feed so often if it were to stay in its native ecosystem of web browsers. JS can be used in server backends, web frontends, desktop and mobile applications, and even some system services (although this isn't very popular as of yet). People seem to be terrified that one very easy-to-learn language can go so far. And, oh god, it's interpreted... How can a system app run on an interpreted language? That's absurd.

My opinion on JSEverything is that it's progress. That's what we're all about, right? The technologies already in place are unthreatened by JS; it isn't a game changer. The only thing JS integration is doing is making tedious and simple tasks easier. Big companies with large systems aren't going to jump ship and migrate to JS. A startup, however, could save a fucking ton of development time by using a JS framework. I want to live in a world where startups can become the next Google, because technology stagnates when you're trying to protect your fortune (look at Apple, for fuck's sake), but innovation is born of small people with big ideas.

I have a feeling the hate for JS is coming from fear of abandoning what you're already doing. You don't have to do that. JS is only another option (And a very good one, which is why it's becoming so popular).

As for my personal opinion from my experience... I've left this part till the end on purpose. I love programming and learning and creating, so I've never really hated a language. It all depends on what I want to do. In the times I've played around with JS, I've loved it. Very, very easy. The idea of having it on both ends of web development makes a lot of sense too: no conversion, just direct communication. I would imagine this really helps with speed as well. I wouldn't use it in a complicated system, though. Small things, medium-size projects: perfect. Running a bank? No.

So what do you think about this JSUniverse?

I hate Wordpress. I hate Wordpress. I hate Wordpress.

Wordpress can take a big shit on itself and crawl into a deep dark hole far away from all that is good.

Who even uses Wordpress? Bloggers? Come on, let's be honest, they're using more intuitive sites like Weebly, Wix, and Squarespace. So WHAT is Wordpress for? I'll tell you, it's just to FUCKING TORTURE PEOPLE.

So, being the “techy guy” of the family, a relative contacts me asking for some help with their website because they need to install an SSL certificate but they don’t know how to. I tell them I’d gladly do it because, sure, they’re family and how long can it possibly take to install a certificate? I’ve done it before!

Well, I get to work, log into the sluggish Wordpress dashboard and try to use a plugin that would issue a LetsEncrypt certificate, because they are free and just as good as any other SSL certificate. But plugin after plugin, I keep getting errors saying my hosting won't allow it.

So I contact GoDaddy (don’t get me fucking started) and ask them about the issue. The guy tells me it’s “policy” to only be able to use GoDaddy’s certificates. How much do they cost? Oh, how about $100 a year?! Fuck you.

I figured out the only way to escape this hell was to ask them to open an economy Linux hosting account with cPanel on GoDaddy (the site was formerly hosted on a “Managed Wordpress” account which is just bullshit for not wanting to give you any control over your own goddamn content). So now I have to deal with migrating the site.

GoDaddy representative tells me that it should only take 20 minutes for me to do this (I’ve already spent way too much time on this but whatever) so I go forward with the new account. I decide I should migrate the site by exporting a backup and manually placing everything on the new server. Doesn’t it end up taking an entire hour to back up a 200MB site because GoDaddy throttled the processing speed?!

So, it’s another hour later and I’ve installed all the databases and carried over all the files. At this point, I’m really at the end of my rope and can’t wait to install the certificate and be done with this fuckery.

I install the certificate and finally get ready to be on my way, but then I see it. A warning. A warning from my browser telling me the site is only partially secure. It turns out the certificate was properly installed but whoever initially made the site HARDCODED ALL THE LINKS to images, websites, and style sheets to be http instead of https.

I’m gonna explode.

I swear, I’m gonna fucking explode.

After a total of 5 hours of work, I finally get the site secure by using search and replace on every fucking file.
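
The search-and-replace step can be scripted instead of done file by file. This is a minimal sketch, assuming the hardcoded links all point at the site's own host (`example.com` below is a placeholder) and that the themes and templates are UTF-8 text files; `upgrade_links` and `upgrade_tree` are hypothetical helpers, and links to other hosts are deliberately left alone because they may not support HTTPS. It only touches files on disk, which is what the rant describes.

```python
import re
from pathlib import Path


def upgrade_links(text: str, host: str) -> str:
    """Rewrite http:// URLs for `host` (and www.`host`) to https://; other hosts untouched."""
    # The lookahead stops "example.com" from also matching "example.com.evil.net".
    pattern = re.compile(r"http://((?:www\.)?" + re.escape(host) + r")(?=[/:?#\"'\s)]|$)")
    return pattern.sub(r"https://\1", text)


def upgrade_tree(root: str, host: str, suffixes=(".php", ".html", ".css", ".js")) -> list:
    """Apply upgrade_links to every matching file under `root`; return the paths changed."""
    changed = []
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            old = path.read_text(encoding="utf-8", errors="surrogateescape")
            new = upgrade_links(old, host)
            if new != old:
                path.write_text(new, encoding="utf-8", errors="surrogateescape")
                changed.append(str(path))
    return changed


if __name__ == "__main__":
    print(upgrade_links('<img src="http://example.com/logo.png">', "example.com"))
```

Returning the list of changed files makes it easy to review the diff (or back the files up first) before uploading anything.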

Wordpress can go suck a big one. Actually, Wordpress can go suck the largest fuckin one in existence and choke on it.

TL;DR I agree to install an SSL certificate but end up with much more work than I bargained for.

New programming language alarm!

The V Language sets out to compete with Rust and Go. Its main advantages are apparently its efficiency and speed. You can build a basic web server with a file size of only 65kb.

https://vlang.io

Last year at work we started migrating our backend from PHP on a dedicated server to Node.js on AWS Lambda functions.

We went from 10 second calls to 70ms calls...

At this point our frontend isn't even ready for this kind of speed 😅

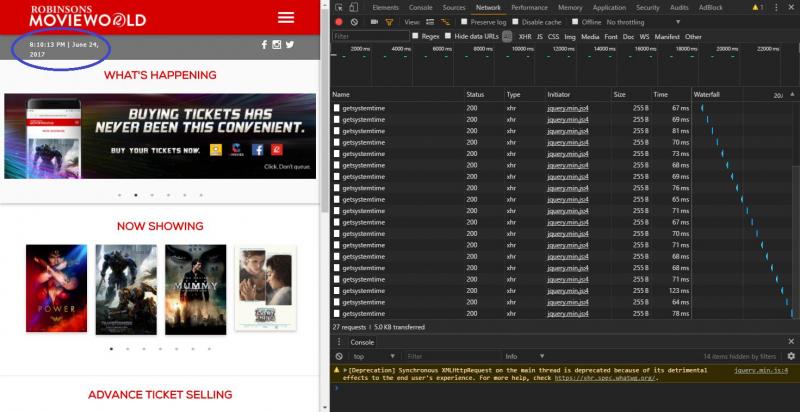

RIP servers of https://robinsonsmovieworld.com//

I guess he must've gotten fired. It requests the system time from the server, gets a response, and then displays it on the page.

My worst experience was at a job where they told me I had to move to a permanent position after 3 years of contracting, without a specific offer.

Why is that bad? In my country it means an approximately 40% lower wage.

I came into the job with PHP knowledge when they were looking for Perl on a project that was a year behind schedule. I learned the language and finished a working demo in 6 weeks.

After that, every project ever assigned to me was done within 5-15% of the allocated time. I'm not kidding here. My manager loved me, because I was reliable and fast, and I even 'accidentally' solved other problems. For instance, I developed a simple syslog search tool and benchmarked zip algorithms for reading speed, and the fastest had 70% better compression than the algorithm used before (switching from gzip to plzip on 1-2 GB files). That solved another problem: the syslog servers did not have enough disk space, and they didn't have the money to upgrade them.
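
A benchmark like the one described can be sketched with the Python standard library: compress a log sample with each codec, then time decompression ("reading speed") and compare ratios. This is an assumption-laden sketch, not the author's tool: the stdlib has no lzip/plzip, so `lzma` (the .xz container, same LZMA family) stands in for it, and the sample data is synthetic.

```python
import bz2
import gzip
import lzma
import time

# Stdlib codecs only; lzma (.xz) is a stand-in for lzip/plzip, which the stdlib lacks.
CODECS = {
    "gzip": (gzip.compress, gzip.decompress),
    "bz2": (bz2.compress, bz2.decompress),
    "xz": (lzma.compress, lzma.decompress),
}


def benchmark(data: bytes, repeats: int = 3) -> dict:
    """Compressed size, ratio and best-of-N decompression ("reading") time per codec."""
    results = {}
    for name, (compress, decompress) in CODECS.items():
        blob = compress(data)
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            restored = decompress(blob)
            best = min(best, time.perf_counter() - start)
        if restored != data:
            raise RuntimeError(f"{name}: round trip changed the data")
        results[name] = {
            "compressed_bytes": len(blob),
            "ratio": len(data) / len(blob),
            "read_seconds": best,
        }
    return results


if __name__ == "__main__":
    # Synthetic syslog-like sample; point this at a real log file for meaningful numbers.
    sample = "".join(
        f"Jan  1 00:00:{i % 60:02d} host sshd[{1000 + i % 7}]: session opened for user{i % 5}\n"
        for i in range(20000)
    ).encode()
    for name, r in benchmark(sample).items():
        print(f"{name:5} ratio={r['ratio']:.1f} read={r['read_seconds'] * 1000:.1f} ms")
```

Best-of-N timing reduces noise from caches and other processes; for multi-gigabyte files you would stream instead of loading everything into memory.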

The number of projects I touched or developed was over 20.

I also led and developed our team's most successful tool, which every customer was throwing money at, while the company was cutting costs everywhere.

And after three years of that, my manager says there is no more money for contractors, and the only possibility is employment. Without any specific offer! Just 'we can't do this anymore'.

Which I understand; that can happen in a corporation. But ffs, after all I've done, I expected a warmer attitude. Not 'you may have to leave, since we don't really care'.

I liked the people there, even though the corporate environment was lacking in many respects, and I wanted to help our local branch with everything I could, and they gave up on me like that.

So I started looking elsewhere and found a startup that offered 6 times the money I had at my previous job and promised to relocate me to the USA. That's the best thing that happened to me that year, and the second best in my whole life!

Thanks to @C0D4 I rediscovered Folding@Home!

I've been running this on a very powerful server at home at full-speed for a few days now (quite some cores being used to the max right now, it's like I have a vacuum cleaner running full-time in my place 😄)

Then, last night it hit me that I have quite a few servers running close to idle (rented ones).....

I'm now running a total of 4 servers at full capacity with Folding@Home.

Can recommend!

After moving, I don't have DSL yet, so I have to use mobile data for internet access. On top of that, I had to finish a freelancing job. In Germany you have one of the most expensive and least reliable mobile networks in Europe.

I had to upload the software I'd developed to a remote server.

So I was suddenly sent back in time. A single call would have disrupted the download (I can't use internet and phone at the same time; might be a phone issue). Even though my phone had "high speed" data volume left and showed at least HSPA, the upload rate was prehistoric.

Got my first Webdev job at a small marketing company, felt very lucky as I didn't have much experience. Turns out I'm the only one that could program. The other guys just use Wordpress. It felt wrong at first, using plugins instead of developing, but we got results and clients were happy. I felt like there was a lot less to this development thing than I'd previously thought! And so we continued.

But I noticed that some of our more plugin heavy sites (not made by me - these were made in some drag/drop Wordpress interface) were running slow. I mean 15 seconds load time slow. I joined devRant around the same time and discovered that no - this is not what normal development actually is. Wordpress seems universally hated. Thank god, because something seemed very wrong!

So with us getting complaints all over the place over page speed from relatively high-profile clients, I've gone and set up a script on a server that downloads the whole front end of these Wordpress sites and serves them up instead of the 'real' thing. Did I mention that there's basically no dynamic content on most of these sites? It works like a charm! I'm now trying to figure out how to get forms and route them into the real, hidden version of the site, as well as automatically updating the html views whenever the client changes anything in the Wordpress backend. Not sure if this has fixed the problem or just enabled bad practice, but I don't think I'm going to be able to stop the others from doing things this way...

For the record, yes there are plugins that do similar stuff but I thought it'd be nice to never use plugins again! And hey, I got to learn all about bash scripting so I can't complain.

For real though, I didn't quite realise how bad the Wordpress thing really was until I came here. Thanks for making me aware, all!

.Net is masterrace.

C# gives me frequent orgasms.

Use SQL Server for DB, add to that parallel querying and NoSQL capabilities.

Incredible development speed with EF

Incredibly powerful web framework...check

AI and neural networks...check

App development...check

If you want to do some of that functional programming, F# is the language for you.

And the best thing: .NET Core runs on Linux too

Got my first serious project about a year ago. I made it clear to the client that we were developing a Windows app. After around 80-100 hours of work, the client just goes "how about we make this a web app?" I got "financial support" instead of the agreed payment, around a quarter of the money we had agreed upon. They never ended up using some parts of the software (I ran the server, so I knew they weren't using it).

I once had a nightmare explaining to the client that he couldn't use a 30+ MB image as his home page background. The average internet speed in my country is around 1-2 MB/s. I even had to do the calculation for him because he couldn't figure out how long it would take a visitor to load the image.
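
The calculation itself is just size divided by speed. Assuming the visitor's whole connection goes to that one image and "MB/s" means megabytes per second, a 30 MB image takes at least:

$$
t = \frac{S}{v}, \qquad \frac{30\ \text{MB}}{2\ \text{MB/s}} = 15\ \text{s}, \qquad \frac{30\ \text{MB}}{1\ \text{MB/s}} = 30\ \text{s}
$$

If the quoted speed is really in megabits per second, the image is $30 \times 8 = 240$ Mb and the wait grows to 120 to 240 s.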

EoS1: This is the continuation of my previous rant, "The Ballad of The Six Witchers and The Undocumented Java Tool". Catch the first part here: https://devrant.com/rants/5009817/...

The Undocumented Java Tool, created by Those Who Came Before to fight the great battles of the past, is a swift beast. It reaches systems unknown and impacts many processes, unbeknownst even to said processes' masters. All from within its lair, a foggy Windows Server swamp of moldy data streams and boggy flows.

One of The Six Witchers, the Wild One, scouted ahead to map the input and output data streams of the Unmapped Data Swamp. Accompanied only by his animal familiars, NetCat and WireShark.

Two others, bold and adventurous, raised their decompiling blades against the Undocumented Java Tool beast itself, to uncover its data processing secrets.

Another of the witchers, of dark complexion and smooth speech, followed the data upstream to find where the fuck the limited Excel sheets that feed The Beast come from, since its handlers only know that "every other day a new one appears on this shared Active Directory location". Why the fuck do people so often have NPC-level unawareness of their own fucking jobs?!?!

The other witchers left to tend to the Burn-Rate Bonfire, for The Sprint is dark and full of terrors, and some bigwigs always manage to shoehorn their whims/unrelated stories into an otherwise lean sprint.

At the dawn of the new year, the witchers reconvened. "The Beast breathes a currency conversion API" - said The Wild One - "And its claws and fangs strike mostly at two independent JIRA clusters, sometimes upserting issues. It uses a company-deprecated API to send emails. We're in deep shit."

"I've found The Source of Fucking Excel Sheets" - said the smooth witcher - "It is The Temple of Cash-Flow, where the priests weave the Tapestry of Transactions. Our Fucking Excel Sheets are but a snapshot of the latest updates on the balance of some billing accounts. I spoke with one of the priestesses, and she told me that The Oracle (DB) would be able to provide us with The Data directly, if we were to learn the way of the ODBC and the Query"

"We struck at the beast" - said the bold and adventurous witchers, now deserving of the bragging rights to be called The Butchers of Jarfile - "It is actually fewer than twenty classes and modules. Most are API drivers. And less than 40% of the code is ever even fucking used! We found fucking JIRA API tokens and URIs hard-coded. And it is all synchronous and monolithic - no wonder it takes almost 20 hours to run a single fucking Excel sheet".

Together, the witchers figured out that each new billing account was morphed by The Beast into a new JIRA issue, if none was open for it yet. Transactions were used to update the outstanding balance on the issues for the billing accounts. The currency conversion API was called too often, and its only purpose was to give a rough estimate of the total balance of each JIRA issue in USD, since each issue could have transactions in several currencies. The Beast would consume the Excel sheet, perform some cryptic transformations on it, and for each resulting line call the currency API and upsert a JIRA issue. The secrets of those transformations were still hidden from the witchers. When and why The Beast would send emails was still a mystery.

As the Witchers Council approached an end and all were armed with knowledge and information, they decided on the next steps.

The Wild Witcher, known in every tavern in the land and by the sea, would create a connector to The Red Port of Redis, where every currency conversion is already updated by other processes and can be quickly retrieved inside the VPC. The Greenhorn Witcher is to follow him and build an offline process to update balances in JIRA issues.

The Butchers of Jarfile were to build The Juggler, an automation that should be able to receive a parquet file with an insertion plan and asynchronously update the JIRA API with scores of concurrent requests.
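The Juggler's core idea, many concurrent API updates without flooding JIRA, can be sketched with asyncio and a semaphore that caps how many requests are in flight. This is a hedged illustration: `juggle` is a hypothetical name, and `upsert` stands for whatever async JIRA client call the team ends up writing. The plan rows could come from, for example, `pandas.read_parquet(path).to_dict("records")`.

```python
import asyncio


async def juggle(plan, upsert, max_in_flight=20):
    """Apply upsert(row) to every row of an insertion plan, at most max_in_flight at a time.

    Failures are collected instead of aborting the run, so they can be retried later.
    Returns a list of (row, exception) pairs.
    """
    gate = asyncio.Semaphore(max_in_flight)

    async def run_one(row):
        async with gate:  # waits here while max_in_flight requests are already running
            try:
                await upsert(row)
            except Exception as exc:
                return (row, exc)
            return None

    results = await asyncio.gather(*(run_one(row) for row in plan))
    return [r for r in results if r is not None]
```

The cap matters more than the concurrency itself: JIRA Cloud rate-limits clients, so `max_in_flight` is the knob to tune against whatever limit the instance enforces.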

The Smooth Witcher, proud of his new lead, was to build The Oracle Watch, an order that would guard the Oracle (DB) at the Temple of Cash-Flow and report every qualifying transaction to parquet files in AWS S3. The Data would then be pushed to cross The Event Bridge into The Cluster of Sparks and Storms.

This Witcher Who Writes is to ride the Elephant of Hadoop into The Cluster of Sparks and Storms, to weave the signs of Map and Reduce and, with speed and precision, transform The Data into The Insertion Plan.

However, exactly how The Data is to be transformed is not yet known.

Will the Witchers be able to build The Data's New Path? Will they figure out the mysterious transformation? Will they discover the Undocumented Java Tool's secrets on notifying customers and aggregating data?

This story is still afoot. Only the future will tell, and I will keep you posted.

In fact, I'm such a sinful dev that I can't easily decide which sin is the worst: from indenting with tabs, or using nano instead of vim/emacs, to hardcoding database credentials on the server, to the many hacks and workarounds I use as actual "fixes" when the deadline is upon me and I've tried everything I could. But they have only ever led to my own regret. For instance, my latest sin was that I preferred Debian over Arch and used proprietary graphics drivers to speed up my new setup. I ended up with a curse from St. Ignucius. (Check my last rant.)

But my worst sin probably goes to when I was "printf-debugging" an issue with a GSM controller on a Raspberry Pi. I forgot to remove one little print line and deployed the new "fixed" version. I didn't follow that project for a month or so after that, until the client sent the device back and said that "it just doesn't work anymore". It turned out Raspbian didn't boot because the SD card was corrupted. I dd'ed through the card and noticed billions of lines of "DEBUG:: reading stream from 192.some.shitty.ip", which took up almost all of the 32 GB SD card. As I suddenly remembered the cursed line I had added a month earlier, I declared the SD card dead without hesitation, dunce-commented the line (so the history would remember), implemented a timeout for the thread containing it, set up a journald unit for my service, and removed the redirection of the process output to a log file. Then I found a new SD card, installed everything again, and finally sent the new "fix" back to the client.

Moral: Never console yourself about the sins you have committed in the past, kids; they will certainly come back to you. And also: don't do any I/O, especially writing to a file on an SD card with an ext filesystem, in a potentially infinite loop with no timeout.
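The two lessons in the moral, bound the loop and bound the log, can be shown in a small Python sketch. The author's actual fix used a thread timeout plus journald; this swaps journald for the standard library's `RotatingFileHandler`, a different technique that also caps disk usage. `read_chunk` is a hypothetical callable standing in for the GSM stream read.

```python
import logging
import logging.handlers
import time


def make_bounded_logger(path, name="gsm-reader", max_bytes=1_000_000, backups=3):
    """Logger whose files never grow past about max_bytes * (backups + 1) bytes in total."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    logger.propagate = False  # don't also send records to the root logger
    handler = logging.handlers.RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups)
    logger.addHandler(handler)
    return logger


def read_stream(read_chunk, logger, timeout_s=30.0, poll_s=0.01):
    """Poll read_chunk() until it returns data, but give up after timeout_s seconds."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        chunk = read_chunk()
        logger.debug("DEBUG:: reading stream")
        if chunk:
            return chunk
        time.sleep(poll_s)
    raise TimeoutError(f"no data within {timeout_s} s")
```

Even if a stray debug line survives into production, the worst case is a few megabytes of rotated logs rather than a full SD card.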

P.S.: I'd posted my last rant just before the new weekly rant last night. I really liked the St. Ignucius meme, so I decided to create a new one. He's very adorable :)


I feel so guilty.

I had to make a hotfix today. It is the ugliest piece of shit code I ever intentionally created. But there was no other way. I swear there was no other fucking way!

My boss just assigned this to me. But because she thinks this needs to be a hotfix and can't wait for the next release, we could only change the server side of our application, not the client side.

So I had to add a memory to our server so that it knows which high-level client method the multiple low-level calls are coming from.
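The rant doesn't show the hotfix, so this is only one way such a server-side "memory" could look: buffer the low-level writes per client session and write them to the DB once the session goes quiet. It assumes, hypothetically, that calls from the same session arriving less than `idle_s` seconds apart belong to the same high-level client method. `WriteCoalescer` and the `flush` callback are illustrative names.

```python
import time


class WriteCoalescer:
    """Buffers low-level writes per session and flushes them as one DB write once the session is idle.

    Assumption (hypothetical): calls from one session less than idle_s apart belong
    to the same high-level client method.
    """

    def __init__(self, flush, idle_s=0.5, clock=time.monotonic):
        self._flush = flush          # callable(session_id, {record_id: value})
        self._idle_s = idle_s
        self._clock = clock
        self._pending = {}           # session_id -> {record_id: latest value}
        self._last_seen = {}         # session_id -> time of the last call

    def write(self, session_id, record_id, value):
        # A later write to the same record overwrites the earlier one: fewer DB writes.
        self._pending.setdefault(session_id, {})[record_id] = value
        self._last_seen[session_id] = self._clock()

    def tick(self):
        """Call periodically; flushes every session that has been idle for at least idle_s."""
        now = self._clock()
        idle = [s for s, t in self._last_seen.items() if now - t >= self._idle_s]
        for session_id in idle:
            self._flush(session_id, self._pending.pop(session_id))
            del self._last_seen[session_id]
```

The injectable `clock` makes the timing logic testable; the guess about what counts as "one high-level call" is exactly the part that makes such a hotfix feel illogical.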

It just doesn't make sense logically.

I mean, I feel like I killed someone. And all just so that we get fewer writes to our DB. I mean, yes, in some edge cases it is a huge speed-up...

But nothing this fix solves is a new bug.

I'm gonna take a shower now. For like an hour.

Can someone help me understand?

I subscribed to a nifty IT-related magazine, and on its back cover there's an ad for "Dedicated root server hosting". Nothing unusual at first glance, but after I read the issue, I decided to humor them and see what it is that they offered, and... It just... Doesn't make sense to me!

An ad for "Dedicated Root Server" - What is a dedicated root server first of all? Root servers of any infrastructure sound pretty important.

But, the ad also boasts "High speed performance with the new Intel Core i9-9900K octa-core processor", that's the first weird thing.

Why would anyone responsible enough want to put an i9 into a highly-reliable root server, when the thing doesn't even support ECC? Also, come on, octa-core isn't much, I deal with servers that have anywhere between 2 and 24 cores. 8 isn't exactly a win, even if it has a higher per-core clock.

Oh, also, further down, the ad has a list of seeming advantages/specs of the servers, and they proclaim that the CPU "incl. Hyper-Threading-Technology"... Isn't that... standard when it comes to servers? I have never seen a server without hyperthreading so far at my job.

"64 GBs of DDR4 RAM" - Fair enough, 64 gigs is a good amount, but... Again, it's not ECC, something I would never put into a server.

"2 x 8 TB SATA Enterprise Hard Drive 7200 rpm" - Heh, "enterprise hard drive", another cheap marketing word, would impress me more if they mentioned an actual brand/model, but I'll bite, and say that at least the 7200 rpm is better than I expected.

"100 GBs of Backup Space" - That's... Really, really little. I've dealt with clients whose single database backup is larger than that. Especially with 2x8 TB HDDs (even accounting for software RAID on top).

This one cracks me up - "Traffic unlimited"

Whaaaat?! You are not gonna give me a limit to the total transferred traffic to the internet for my server in your data center? Oh, how generous of you, only, the other case would make the server just an expensive paperweight! I thought this ad was for semi-professionals at least, so why mention traffic, and not bandwidth, the thing that matters much more when it comes to servers? How big of a bandwidth do I get? Don't tell me you use dialup for your "Dedicated Root Server"s!

"Location Germany or Finland" - Fair enough, geolocation can matter when it comes to latency.

"No minimum contract" - Oooh, how kiiiind of you, again, you are not gonna charge me extra for using the server only as long as I pay? How nice!

"Setup Fee £60" - I guess, fair enough, the server is not gonna set itself up, only...

The whole ad is for "monthly from £55.50", so that's quite a large setup fee.

Oh, and a cherry on top, the tiny print on the bottom mentions: "All prices exclude VAT and are a subject to..." blah blah blah.

Really? I thought that this sort of borderline customer deceit was present only in the consumer sphere!

I must say, there's being unimpressed, and then... there's this. Why, just... Why? Does anyone understand this? Because I don't...

The RPi 4 is hard to get your hands on, it seems.

I'm really debating buying it, though. 4 GB is enticing, but I just don't see a place for it. I have a surplus of machines which are much more powerful and accessible (full-size display ports, not micro HDMI).

And let's not forget the sub-2GHz clock speed. My desktop goes to 5, and my server isn't far behind. And my laptop isn't far behind that. And my other laptop isn't far behind that. But this new Pi would be far far behind that.

Not to mention the ARM architecture. There have been leaps and bounds made in ARM support since the Pi first came out (most certainly fueled by the Android craze), but it still isn't x64, is it?

If I were 13 again and didn't have all of the toys that I do now, I would be elated at the launch of the Pi 4. But as it stands, I don't see a use for it. Maybe nostalgia.

- Launch the new version of the system I have been refactoring for 2 years and counting, then ceremoniously burn (literally) the legacy code as well as the cluster fuck of hardware it runs on.

- Decrease my stress + bus factor by bringing another up to speed on my code & the new version (his cluster fuck now).

- Pay attention to & take better care of my health, my wrists in particular.

- Find a mentor and mentor someone else.

- Get out of crisis management mode and find the time to write tuts, experiment and live a little.

- Find & join a local dev meetup, maybe make a local dev friend.

- Book leave and actually take it, preferably without having to take my laptop to the beach - actually, preferably at least have the choice to take an offline vacation.

- Sort through the drives containing ALL the code I have ever written, and migrate the useful, interesting bits to GitHub.

Phew, that bit of self-reflection was intense! I'm adding a cron job to my server to SMS & email me this rant in a year to remind me what hope looks like.

Warning: This contains spoilers for Silicon Valley S4 E10:

.

.

.

.

.

.

.

.

.

.

.

.

.

At 5:35 Gilfoyle says: "In order to hold that much data we would have to go RAID 0. [...] If we lose even one platter, we lose Melcher's data. Permanently."

But my question is: Why use RAID at all? Just storing the data without RAID would reduce the complexity, and if one disk fails, only the data on that one disk is lost. Also, I doubt speed is a priority at that point, since the whole thing is running on a home broadband connection, which something like a WD Gold data-center hard drive (200+ MB/s) can easily max out.
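The argument can be made concrete with a little probability. Assuming independent disk failures, each with a hypothetical probability `p` over some period, RAID 0 loses everything if any one disk fails, while separate non-RAID disks lose only the failed disk's share.

```python
def raid0_loss_probability(p_disk, n_disks):
    """RAID 0 stripes every file across all disks: any single failure loses all data."""
    return 1 - (1 - p_disk) ** n_disks


def separate_disks_total_loss_probability(p_disk, n_disks):
    """Independent, non-RAID disks: everything is gone only if every disk fails."""
    return p_disk ** n_disks


def separate_disks_expected_loss_fraction(p_disk):
    """Without RAID, each disk's share of the data is lost with probability p_disk."""
    return p_disk
```

With ten disks at an assumed 2% failure probability each, RAID 0 has about an 18% chance of losing everything, while separate disks lose on average 2% of the data and essentially never all of it.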

Also, wouldn't it be easier to pay the broadband bill out of their own pocket, instead of moving tons of server equipment to Stanford?

!Rant #motivation #hugeProject

Yesterday I started a new app and designed some of it, and the classes I coded will speed up coding the other parts.

Anyways, today I needed to work on the server side of the project, and while I was setting up the database structures I realized how big this project is (it uses like 3 APIs). So I was unmotivated, because it's a side project, it takes a lot of time, overall it isn't worth it, and the app may fail or may be successful anyway.

So I said: I don't care how it will turn out.

I'm gonna do it, and I'm gonna do it right now.

So I did. Now it's 6 am and the server part is almost finished! 75% done.

It has a secure login and signup system with verification and more security stuff, the code that provides the server status, most of the user parts, and some of the features of the app.

The hardest thing remaining is setting up the in-app purchases and the APIs.

So if you see a project that is huge,

don't give up. Just keep at it as long as you can,

and you will see how much progress you make!

And the huge project will become a big project ;)

Then a normal project, then a tiny project :P

Good night

Ok, first rant, about my struggles getting reliable internet over the past 6 years. It's not too interesting of a topic, but here we go:

I'm living in a more rural part of Germany and the internet here is shit. I pay more than 50 bucks a month for 700kb/s downstream (let's just not talk about upstream...), which is meh by itself, but it gets worse. Before this I had roughly 230kb/s downstream using DSL. My provider came out with a new oh-so-fucking-fancy solution for giving people faster internet without upgrading their lame-ass fucking backbone and POS infrastructure from 70 years ago: they sell you hybrid internet, which combines your shit DSL with an LTE connection using TCP Multicast. Not only do I get just 6 of my promised (and paid-for) 50 Mbit, no, it's also a fucking piece of nonworking shit!!!

Let me illustrate:

You constantly have problems with web content (or any remote content) not loading because the host server does not support TCP Multicast. It either refuses the connection altogether or it takes about 30-50 seconds to establish one. Think about your life when it takes two or three fucking minutes to load 5 YouTube thumbnails or new tweets at the bottom of the Twitter page! Also, you never know whether a) you have an error in your implementation of a new API or b) the remote host doesn't support TCPMC (there's never an error for that! Fuck you!). Your SSH sessions ALWAYS drop at the most inopportune fucking moments because the LTE thing lost its connection, you always have to turn on a VPN if you want to visit specific websites (for example your school's website), and so on....

Oh, and also, my provider started throttling specific services again these days, with Netflix and YouTube struggling to display 240p, fucking 240p video, without buffering, while I get 600kbit down on Steam (ofc the Steam download is paused when watching videos). When using a VPN, YouTube 720p and Netflix HD work like a charm again. Fucking Telekom bastards.

Then there is the problem with VPNs. The good thing about them is that they solve all the TCP Multicast problems. Yay. Now for the bad things:

First of all, as soon as I use a VPN, access times to remote hosts go up by like fucking 500%. A fucking DNS lookup takes 8-15 seconds!!! The bandwidth is there but it takes forever... because reasons, I guess. Then the speed drops to DSL speeds after a while, because the router turns off my LTE connection when it is unused and it does not count VPN traffic as traffic (again because... reasons?). And also, the VPN just dies after an hour and you have to manually reconnect (with every VPN provider so far).

And as if that wasn't enough, now the LAN is dying on me too, with the router (the fucking expensive hybrid piece of shit, 230 bucks..) not providing DHCP service anymore, or completely refusing all Wi-Fi connections, or randomly dropping 5GHz devices, or.....

You get the point.

The worst thing is, they recently laid down 400mbit fiber in my neighborhood. Guess where the FUCKING PIECE OF SHIT CABLE ENDS??? YEAH, RIGHT IN FRONT OF MY NEIGHBOR'S HOUSE. STREET NUMBER 19 IS SERVED WITH 400MBIT AND MY HOME, NUMBER 20, IS NOT IN THEIR FUCKING SERVICE REGION. Even though there is a fucking cable with the cable company's name on it on my property, even leading up to my house! They still refuse to acknowledge it! FUCK YOU!!!!

Well, anyway, thanks for reading. Any of you got the same problems? :/

So recently I installed Windows 7 on my thiccpad to get Hyperdimension Neptunia to run (yes 50GB wasted just to run a game)... And boy did I love the experience.

ThinkPads are business hardware, remember that. And it's been booting Debian rock solid since.. pretty much forever. There are no hardware issues here. Just saying.

With that out of the way I flashed Windows 7 Ultimate on a USB stick and attempted to boot it... Oh yay, first hurdle to overcome. It can't boot in UEFI mode. Move on Debian, you too shall boot in BIOS mode now! But okay, whatever right. So I set it to BIOS mode and shuffled Debian's partitions around a bit to be left with 3 partitions where Windows could stick in one more.

Installed, it asks for activation. Now my ThinkPad comes with a Windows 7 Pro license key, so fuck it let's just use that and Windows will be able to disable the features that are only available for Ultimate users, right? How convenient would that be, to have one ISO for all the half a dozen editions that each Windows release has? And have the system just disable (or since we're in the installer anyway, not install them in the first place) features depending on what key you used? Haha no, this is Microsoft! Developers developers developers DEVELOPERS!!! Oh and Zune, if anyone remembers that clusterfuck. Crackhead Microsoft.

But okay whatever, no activation then and I'll just fetch Windows Loader from my webserver afterwards to keygen my way through. Too bad you didn't accept that key Microsoft! Wouldn't that have been nice.

So finally booted into the installed system now, and behold finally we find something nice! Apparently Windows 7 Enterprise and Ultimate offer a native NFS driver. That's awesome! That way I don't have to adjust my file server at all. Just some fuckery with registry keys to get the UID and GID correct, but I'll forgive it for that. It's not exactly "native" to Windows after all. The fact that it even has a built-in driver for it is something I found pretty neat already.

Fast-forward a few hours and it's time to Re Boot.. drivers from Lenovo that required reboots and whatnot. Fire the system back up, and lo and behold, the network drive doesn't mount anymore. I've read that this is apparently due to Windows (not always, but often) mounting the network drive before the network comes up. Absolutely brilliant! Move over, shitstaind, have you seen this beauty of an init, Mr. Poet?

But fuck it we can mount that manually after every single boot.. you know, convenient like that. C O P E.

With it now manually mounted, let's watch a movie! I've recently seen Pyro's review of The Platform and I absolutely loved it. The movie itself is quite good too. Open the directory on my file server and.. oh. Windows.. you just put Thumbs.db on it, and Thumbs.db:encryptable. I shit you not, with the colon and everything. I thought that file names couldn't contain colons, Windows! I thought that was illegal in NTFS. Why you doing this in NFS, mate? And "encryptable", am I already infected with ransomware??? If it wasn't for the fact that it could also be disabled with something as easy as a registry key, I would've thought I'd contracted ransomware!

Oh and sound to go with that video, let's pair up some Bluetooth headphones with that Bluetooth driver I installed earlier! Except.. haha nope. Apparently you don't get that either.

Right so let's just navigate the system in its Aero glory... Gonna need to flick the mouse for that. Except it's excruciatingly slow, even the fastest speed is slower than what I'm used to on Linux.. and it's jerky as hell (Linux doesn't have any of that at higher speed). But hey it can compensate for that! Except that slows down the mouse even more. And occasionally the mouse driver gets fucked up too. Wanna scroll on Telegram messages in a chat where you're admin? Well fuck you mate, let me select all these messages for you and auto scroll at supersonic speeds! And God forbid that you press delete with that admin access of yours. Oh maybe I'll do it for you, helpful OS I am!

And the most saddening part of it all? I'd argue that Windows 7 is the best operating system that Microsoft ever released. Yeah. That's the best they could come up with. But at least it plays le games!

First they make it difficult to do your job: "Only use this server for your testing with twenty other engineers". And when you adjust to that, they make it impossible: "The server is off limits while demos are ongoing".. But you're expected to keep working at full speed ahead.


Finally I write my first rant about dev stuff.

My mom works as a shop clerk in an optician's shop (they sell glasses). It is a small family business (not my mom's, she is only employed there). She had been constantly complaining about the poor PC performance and how the program they use for inventory management always hangs.

Her boss decided to "upgrade" the PCs by buying Macs, but he was stopped by me and my mom. (I was helping them with some IT stuff, so I had a bit of influence over that.)

The program they are using was written by some amateur programmer who is the boss of a similar shop somewhere in the country.

So I recommended installing SSDs to speed up their PCs, and it did nothing. Of course I blamed the poorly written program next.

The program hangs when you type in the search field. I wanted to check if my gut feeling was right, so I asked them to keep Task Manager open while they type. And my feeling was right.

When you change anything in the search bar, the program sends a crap ton of requests to the local server, and that server sends a crap ton of packets back, enough to saturate the local connection...
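The usual cure for a search box that fires a request per keystroke is debouncing: wait until the user pauses, then send one query for the latest text. The shop app's language isn't known, so this is a Python sketch; `Debouncer` is an illustrative name and the wrapped function stands in for the app's real search call.

```python
import threading


class Debouncer:
    """Calls fn(*args) only after wait_s seconds pass without another call."""

    def __init__(self, fn, wait_s=0.3):
        self._fn = fn
        self._wait_s = wait_s
        self._timer = None
        self._lock = threading.Lock()

    def __call__(self, *args):
        with self._lock:
            if self._timer is not None:
                self._timer.cancel()  # drop the pending search for the previous keystroke
            self._timer = threading.Timer(self._wait_s, self._fn, args)
            self._timer.daemon = True
            self._timer.start()
```

Typing "glass" then produces one request instead of five; a minimum query length and server-side result limits would cut the traffic further.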

I will try to rewrite the app myself, just for the challenge of it. I want to see if I can write a better one than this POS. They still want to buy better PCs, but those won't help them... Well, I will help them with that anyway (having good PCs is good anyway). I hope I can create an app that will fix their problems...

Shit week....

Defragmenting several applications' codebases, sifting out duplicate code and creating a library out of it. Bash.

Not funny.

Yesterday while cooking I was too fast.

Chopping board with adjustable cutting depth, was at 6mm. Right thumb. Full speed. :(

Boy, that wasn't pretty. It bled for half an hour and I created quite a mess while trying to find a band-aid to get pressure on it. Guess I'll have fun the next few weeks, as having no thumb is pretty handicapping.

And today we have a pretty severe snowstorm in Germany.

I really hope that the server rooms @ company don't get flooded or shit like that.

Have a question about my career:

So far my career out of uni has been like this:

8 months at my first job, working as a C# .NET dev creating native desktop apps for Windows. The job was shitty and I wasn't picking up any best-practice skills, so I left.

12 months at my 2nd job, working as an Android dev in a startup. I was working all alone and had to rebuild my app 5-6 times to learn best practices. The startup didn't care about the Android app at all, so I left, and now I'm just doing some small freelance work for them.

3 months at a new startup as an Android dev. Today I was told that it's been decided to focus on iOS and do all marketing (including the new design uplift) only on iOS. Basically, for the next 3-4 months they don't plan to do much on the Android side. They saw that I showed some interest in the backend, and now they are asking me to talk with two other senior guys about starting on some small backend tasks.

Our backend mainly uses Python. Also, the backend guys will be pretty busy, because they have to deliver many new features in the next few months. I've talked with one of them and he said that it's a bad idea to force a frontend dev to start working on the backend. However, I feel that he's sort of gatekeeping and probably just doesn't want to help me get up to speed.

In my defense, my knowledge doesn't end with C# .NET desktop apps and native mobile apps for android.

I have hobby projects (game servers) where I worked on websites (PHP, HTML, CSS, JavaScript, MySQL) and also took care of a Java-based game server hosted on a Linux VPS.

Also, I had a small hosting "company" where, with the available tools, I managed to automate VPS (virtual private server) ordering, web hosting ordering and domain ordering. Basically, I owned a dedicated server and did everything using WHMCS, cPanel and Proxmox virtualization.

I trust myself to learn this backend stuff and do what's required; however, I learned everything by myself and I won't be following all of these best practices.

Should I take on more backend responsibility, or should I continue focusing on Android?

IMAGE COMPRESSION QUESTION

Let's say I upload a 100x100 photo from my Android device. This image has a size of e.g. 2MB. Not a lot. If I compress it, the size will be e.g. 300kB. Cool. The upload is lightning fast at any internet speed.

Let's consider this case: a random-ass motherfucker decides it is cool to upload a 10000x10000 image that has a size of e.g. 300MB. Compressing this would give e.g. 150MB, which is still a fuckton for one pic.

Here's my question: where should the compression be handled? On the backend (REST API server) or on the client (Android image compression library)?

Because if I try to send a 150MB pic to the server and their internet sucks (but to be fucking honest, even with the best internet speed it would take way too long to upload), is it better to do the compression on the backend or on the client?

Or should I do the compression in Android? If I should do the compression on the client, then should I:

1) do the compression on the main thread with a progress dialog, making them wait until the compression PLUS the fucking upload is done, or

2) do the compression + THE upload in a background thread, in which case a verbose amount of fuckups (internet dies, phone explodes, etc.) can be dangerous and the app crashes?

Which (one) option of the 2 suboptions from the second parent option branch?

Of course this is an extremely unrealistic case. It is possible, but that's not my point: my point is WHERE SHOULD THE COMPRESSION (as some kind of universal standard) BE HANDLED?
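A common answer, offered as a judgment call rather than a universal standard: downscale on the client, in a background worker rather than the main thread, before uploading, and still enforce a hard size limit on the server, because clients can't be trusted. The arithmetic shows why downscaling comes before compression: a 10000x10000 image decoded as ARGB_8888 needs 400 MB of RAM, which can crash the app before any compression happens. The helper below is modeled on the `calculateInSampleSize` example from Android's "Loading large bitmaps efficiently" guide, where the result is passed as `BitmapFactory.Options.inSampleSize`; it is written in Python here only to show the logic.

```python
def decoded_bytes(width, height, bytes_per_pixel=4):
    """Memory for the decoded bitmap; ARGB_8888 uses 4 bytes per pixel."""
    return width * height * bytes_per_pixel


def sample_size(width, height, req_width, req_height):
    """Largest power-of-two subsampling factor that keeps both sides >= the requested size."""
    factor = 1
    if width > req_width or height > req_height:
        half_w, half_h = width // 2, height // 2
        while half_w // factor >= req_width and half_h // factor >= req_height:
            factor *= 2
    return factor
```

For the 10000x10000 upload with a 2048x2048 target, the factor is 4, so the phone decodes a 2500x2500 bitmap (25 MB) instead of a 400 MB one, and then compresses that.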

After learning a bit about alife I was able to write another one. It took some false starts to understand the problem, but afterward I was able to refactor the problem into a sort of alife that measured and carefully tweaked various variables in the simulator as the algorithm explored the parameter space. After a few hours of letting the thing run, it successfully returned a remainder of zero on 41.4% of semiprimes tested.

This is the bad boy right here:

tracks[14]

[15, 2731, 52, 144, 41.4]

As they say, "he ain't there yet, but he got the spirit."

A 'track' here is just a collection of critical values and a fitness score that was found given a few million runs. These variables are used as input to a factoring algorithm that attempts to factor any number you give it. These parameters tune or configure the algorithm to try slightly different things. After some trial runs, the results are stored in the last entry in the list, and the whole process is repeated with slightly different numbers, ones that have been modified and mutated so we can explore the space of possible parameters.

Naturally this is a bit of a hodgepodge, but the critical thing is that for each configuration of numbers representing a track (and its results), I chose the lowest fitness of three runs.

That means there's hypothetically room for improvement with a tweak of the core algorithm, or even modifications or mutations to the track variables. I have no clue whether this scales up to very large semiprime products, so that would be one of the next steps to test.

Fitness also doesn't account for return speed. Some of these tracks may have a lower overall fitness but might in fact have a lower basis (the value of 'i' that needs to be found in order for the algorithm to return rem%a == 0) for correctly factoring a semiprime.

The key thing here is that because all the entries generated here depend on an outer loop that specifies [i] must never be greater than a/4 (for whatever the lowest factor generated in this run is), we can potentially push the value of i down further with some modification.

The entire exercise took 2.1735 billion iterations (3-4 hours, wasn't paying attention) to find this particular configuration of variables for the current algorithm, but as before, I suspect I can push the fitness value (percentage of semiprimes covered) higher, either with a few additional parameters or with a modification of the algorithm itself (with a necessary rerun to find another track of equivalent or greater fitness).
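The track search described above can be sketched as a simple hill climber: mutate the best parameter set, score each candidate pessimistically by its lowest fitness over three runs, and record accepted tracks in the same `[params..., fitness]` layout as `tracks[14]`. This is an illustration, not the author's code: the factoring algorithm isn't shown, so `fitness_fn` is a hypothetical stand-in, and only strict improvements are kept.

```python
import random


def evaluate_track(params, fitness_fn, runs=3):
    """Pessimistic score: the lowest fitness over `runs` (possibly noisy) trials."""
    return min(fitness_fn(params) for _ in range(runs))


def jiggle(params, rng, scale=0.1):
    """Return a mutated copy: each integer moves by up to +/- scale of its size (at least 1)."""
    mutated = []
    for p in params:
        step = max(1, int(abs(p) * scale))
        mutated.append(p + rng.randint(-step, step))
    return mutated


def search(start, fitness_fn, generations=200, seed=0):
    """Simple hill climber over integer parameters.

    Returns the list of accepted tracks, each laid out as [params..., fitness].
    """
    rng = random.Random(seed)
    best = list(start)
    best_fit = evaluate_track(best, fitness_fn)
    tracks = [best + [best_fit]]
    for _ in range(generations):
        candidate = jiggle(best, rng)
        fit = evaluate_track(candidate, fitness_fn)
        if fit > best_fit:  # keep only strict improvements
            best, best_fit = candidate, fit
            tracks.append(best + [best_fit])
    return tracks
```

A pure hill climber can stall on a local optimum; accepting occasional worse tracks, as in simulated annealing, is a common variation if that happens.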

I'm starting to bump up to the limit of my resources, I keep hitting the ceiling in my RAD-style write->test->repeat development loop.

I'm primarily using the limited number of identities I know and my gut intuition, combined with looking at the numbers themselves, to deduce relationships as I improve these and other algorithms, instead of relying strictly on memorizing identities like most mathematicians do.

I'm thinking if I want to keep that rapid write->eval loop I'm gonna have to upgrade, or go to a server environment to keep things snappy.

I did find that "jiggling" the parameters after each trial helped to explore the parameter space better, so I wrote some methods to do just that. But what I wouldn't mind doing is taking this a step further and writing some code to optimize the variables of the jiggle method itself, by automating the observation of real-time track fitness and discarding those changes that lead the system to find tracks with lower fitness.
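Tuning the jiggle itself resembles step-size adaptation from evolution strategies. One classic, swapped-in technique (not something the author says they use) is Rechenberg's 1/5th success rule: if more than a fifth of recent mutations improved fitness, widen the jiggle; if fewer did, narrow it. `adapt_scale` and its default bounds are illustrative assumptions.

```python
def adapt_scale(scale, successes, trials, target=0.2, factor=1.5, lo=0.001, hi=1.0):
    """Rechenberg-style 1/5th success rule for the jiggle size.

    If more than `target` of recent jiggles improved the track, the search is being
    too timid, so widen the jiggle; if fewer did, it is overshooting, so narrow it.
    The result is clamped to [lo, hi].
    """
    if trials == 0:
        return scale
    rate = successes / trials
    if rate > target:
        scale *= factor
    elif rate < target:
        scale /= factor
    return min(hi, max(lo, scale))
```

It could be called every, say, 20 trials with the count of accepted versus attempted jiggles, feeding the new value back in as the jiggle's relative step size.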

I'd also like to break up the entire regime into a training vs. test set, but for now the results are pretty promising.

I knew if I kept researching I'd likely find extensions like this. Of course, tested on billions of semiprimes instead of simply millions, or on very large semiprimes, the effect might disappear, though the more I've tested, and the larger the numbers I've given it, the more prevalent the effect has become.

Hitko suggested in the earlier thread, based on a simplification, that the original algorithm was a tautology, but something told me that for a change I got one right. Without that initial challenge I might have chalked this up to another false start instead of pushing through and making further breakthroughs.

I'd also like to thank all those who followed along, helped, or cheered on the madness:

In no particular order: demolishun, scor, root, iiii, karlisk, netikras, fast-nop, hazarth, chonky-quiche, Midnight-shcode, nanobot, c0d4, jilano, kescherrant, electrineer, nomad, vintprox, sariel, lensflare, jeeper.

The original write up for the ideas behind the concept can be found at:

https://devrant.com/rants/7650612/...

If I left your name out, you'd better speak up; there are only so many invitations to the orgy.

Firecode already says we're past max capacity!

Hello guys, newbie to the app here. I would like to ask, or rather start a conversation with you, about adopting new technologies: should we follow along or just wait? I am a PHP developer; I would put myself around mid to senior level. Since I graduated and started working at a Marketing/Development company, I have developed a lot of websites and platforms with pure PHP, JavaScript and SQL. Later I started using frameworks like Laravel. Now I am thinking about JS technologies such as Node, Vue, React and Angular, and maybe later NoSQL. The problem is that companies require many new technologies when you apply. I want to learn new technologies, but I don't know if that would be more helpful than focusing on LAMP and getting better and better at it. Many orgs have implemented their own technologies, and every company is going crazy over them. You see companies adopt these new technologies even if they don't need them or their projects don't require them. So my question is: are we talking about dramatically better speed and lighter server load when we use new frameworks like the ones mentioned above? Or are companies just trying to look cool by listing many technologies that their projects could never need? (Nothing serious, I am just trying to make conversation and clear my thoughts by getting others' opinions.)


Under pressure for a big feature that should have been merged into develop like one month ago. But I couldn't merge it because of issues I discover every single fucking day.

Today's issue is that a Cucumber test fails. I try reproducing it on my machine, it fails with a different error. Apparently I need to download some 10GB database file from some company server.

Alright, let's download it. But it's damn too slow. Well, let's have lunch in the meantime.

I come back, the download timed out at basically the same point I left it at.

I don't wanna try again. Not without trying to improve things. The download speed is ridiculous. Switching from Wi-Fi to Ethernet will definitely help, I thought.

The cable doesn't work. The port LEDs are both off. Is that cable even connected to something? So I follow that damn cable throughout my colleagues' desks. I'm now doing things without even remembering why.

I finally find the other end. It is plugged into the wall. I try another socket, but that fucking LED is still off. A colleague tells me: not all the sockets are actually connected to the switch, you have to call IT to have yours patched. Stay calm, stay caaaaalm...

A small lamp turns on in my head. Maybe something in my laptop is broken. So I try with a colleague's ethernet. That fucking LED is still off. A-ha.

Turns out, the shitty macbook adapter has this Ethernet port that DOESN'T work out of the box. It needs a driver to even realize there's a port. I look for it, I find it. I finally have wired connection. It's like having drinking water again.

I turn off Wi-Fi and retry downloading that fucking database.

Nope, it's still stupidly slow. The bottleneck was in the dumbfuck internal server.

FUCK.

At least I have Ethernet now.

-

Since I got my 100 Mbit/s down / 25 Mbit/s up connection, I've noticed that some servers don't even send faster than 30 Mbit/s. Is it just me, or do some of you have the same issue?

-

How do you diagnose speed issues?

I've been lumped with looking after a legacy app.

It connects to our ERP system to handle raising invoices, etc. In June it developed some slowness, which we sort of fixed by making one section load later (the SQL was a horrible view inside a view inside a view).

We then upgraded our ERP software, and the SQL issues are resolved (we had to upgrade SQL Server etc. at the same time), but now the legacy app is running really slowly.

I know the slowdown happens when it loops through a data set to set column values, etc.

One particular project has 1,900 time transactions and takes up to 2 minutes to load.
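A usual first step is to time the data fetch and the per-row loop separately instead of guessing. Below is a minimal Python sketch of that idea; the app's real language isn't stated, and `fetch_rows` / `set_column_values` are hypothetical stand-ins for the query and for the column-setting work done in the loop:

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated wall-clock seconds per labelled section.
timings: dict = defaultdict(float)

@contextmanager
def timed(label: str):
    """Add the elapsed wall-clock time of the with-block to timings[label]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[label] += time.perf_counter() - start

def fetch_rows(n: int) -> list:
    # Hypothetical stand-in for the query that loads the time transactions.
    return [{"id": i, "hours": i % 8} for i in range(n)]

def set_column_values(row: dict) -> None:
    # Hypothetical stand-in for the per-row work done inside the loop.
    row["cost"] = row["hours"] * 50

with timed("fetch"):
    rows = fetch_rows(1900)

for row in rows:
    with timed("per-row"):
        set_column_values(row)

# Print the sections, slowest first.
for label, seconds in sorted(timings.items(), key=lambda kv: -kv[1]):
    print(f"{label:>8}: {seconds:.4f} s")
```

If the per-row bucket dominates, it is worth checking whether each iteration triggers its own query or lookup (an N+1 pattern); if the fetch dominates, comparing the query plan before and after the SQL Server upgrade is a reasonable next step.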

This part of the program hasn't been changed in over a year, and has only started running slowly since the upgrade.

Is there any good way I can investigate and diagnose exactly why it has suddenly started running slowly?

-

Some languages get completely lost in minutiae, disposable preciosity that looks pretty but mischievously gobbles development cycles. Now, there's no doubt they make for skinnier, trustworthy, low-maintenance code; yes, congratulations, Haskell. But you see, Haskell, not every language out here is de facto an academic one. You hear me, Rust? So, for fuck's sake, Rust dear. You've got macros, sis; you don't need a new language feature every other naughty day. You need prototyping speed, not more complexity. I'm not complaining, not really... It's your fucking language server, your compiler... They can't take this shit anymore. Have you seen their overeating problems? Please, Rust, stop choosing plastic surgery over make-up, and use macros instead.

--

And Google, dear, your autocompletion sucks ass.

-

Technical question that I just can't find the answer to anywhere.

I have a load balancer and want it to pass the IP of the original caller to the server. Usually this is done by modifying the headers of the HTTP request (I think?) and adding X-Forwarded-For: ....
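For reference, X-Forwarded-For is a comma-separated list: each proxy appends the address it received the request from, so the original client ends up first. A minimal Python sketch of that append step; it uses a plain dict as a simplified header map (real HTTP header names are case-insensitive, which this sketch ignores), and `append_forwarded_for` is a hypothetical helper, not any load balancer's API:

```python
def append_forwarded_for(headers: dict, client_ip: str) -> dict:
    """Return a copy of headers with client_ip appended to X-Forwarded-For."""
    updated = dict(headers)  # leave the caller's headers untouched
    existing = updated.get("X-Forwarded-For")
    # If an upstream proxy already set the header, append instead of overwriting,
    # so the chain reads "client, proxy1, proxy2, ...".
    updated["X-Forwarded-For"] = f"{existing}, {client_ip}" if existing else client_ip
    return updated

print(append_forwarded_for({"X-Forwarded-For": "198.51.100.1"}, "203.0.113.7"))
# {'X-Forwarded-For': '198.51.100.1, 203.0.113.7'}
```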

The LB team, though, says it needs to modify X-Originating-IP, and that this somehow has a noticeable impact on the speed of all requests.

I don't know the details, but it should only need to modify the first packet, the one carrying the HTTP headers, and append X-Forwarded-For. If it only needs to modify the header packet, how can it slow down the whole interaction this much:

- Adds 100 ms to a 200 ms request

- Increases a 10-minute download to like 20-30 minutes

-

Sooo I think my VPS is slowly dying. Disk r/w performance has gone to shit: we're talking ~10 MB/s write speed on a fucking SSD.
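To put a number on the write speed, `fio` or `dd` are the usual tools. A rough Python equivalent, as a sketch: it writes a temp file in fixed-size chunks, calls `os.fsync` so the data actually reaches the device instead of sitting in the page cache, and reports MiB/s. The function name and defaults are illustrative; point `directory` at a path on the disk under test, since the default temp directory may live on tmpfs:

```python
import os
import tempfile
import time
from typing import Optional

def measure_write_mib_per_s(size_mib: int = 64, chunk_mib: int = 4,
                            directory: Optional[str] = None) -> float:
    """Write about size_mib MiB to a temp file, fsync it, and return MiB/s."""
    # Random bytes, so storage-level compression can't flatter the result.
    chunk = os.urandom(chunk_mib * 1024 * 1024)
    chunks = max(1, size_mib // chunk_mib)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(chunks):
                f.write(chunk)
            f.flush()             # push Python's buffer to the OS
            os.fsync(f.fileno())  # wait until the OS has written it to the device
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    return chunks * chunk_mib / elapsed
```

For example, `measure_write_mib_per_s(directory="/some/dir/on/the/ssd")` with a real path on the SSD; running it a few times at different hours helps tell a consistently degraded disk from a noisy neighbour on the host.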

Is there any way to bring it back to life? Or should I just give up?

Note: I've been keeping this VPS alive for years because it only costs me 1 eur/month, and they don't have this offer anymore - it costs triple now, so I reeeeally don't want to lose that price.

Also, I'm using it as a DNS server (among other things), and I really like the IP address, so I don't really wanna lose that either...

What do?

-

Rule of thumb when buying things: research a lot and compare the product/service against its competitors.

In short, I forgot to research pCloud's upload speed. They throttle my upload speed to only 1 Mbps, which is really slow (I'm in Asia, by the way, and their server is in the US). I got tempted by their $350 2 TB lifetime plan.

I tested basic upload speed through the browser with a 33.9 MB file:

- pCloud 1 min 9 sec

- Dropbox 13 sec

- Google Drive 12 sec

- Mega.nz 1 min 56 sec

- (I'll test sync.com next)
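Converting those timings into average throughput makes the comparison easier. A small sketch, assuming the 33.9 MB figure is decimal megabytes and ignoring protocol overhead:

```python
FILE_MB = 33.9  # test file size from the post, assumed decimal megabytes

# Upload times from the post, in seconds.
upload_seconds = {
    "pCloud": 69,        # 1 min 9 sec
    "Dropbox": 13,
    "Google Drive": 12,
    "Mega.nz": 116,      # 1 min 56 sec
}

def mbit_per_s(size_mb: float, seconds: float) -> float:
    """Average throughput in megabits per second (1 byte = 8 bits)."""
    return size_mb * 8 / seconds

for service, seconds in upload_seconds.items():
    print(f"{service:<12} {mbit_per_s(FILE_MB, seconds):6.2f} Mbit/s")
```

By this arithmetic pCloud averaged roughly 3.9 Mbit/s and Mega.nz roughly 2.3 Mbit/s, against about 21 to 23 Mbit/s for Dropbox and Google Drive.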

I have 100 Mbps upload and 35 Mbps download speed. So it means a "lifetime" of slow upload speed.

Side question: which cloud backup service do you use, and why? Thanks!

-

When I urgently have to upload files to a client's server, the internet sucks. But the upload speed goes nitro when I'm uploading my own data to OneDrive :-/

-

My manager had someone else manage me for my whole time at the company so far. Nearly two years now. Anything I’d come to him with, he’d direct me to this other person.

Fair enough, dude’s really good and I learn a lot from him. I see why they trust him with so much. I think he’s a genius. I’ll never be that good. Embarrassed I’m only a few years his junior. Wonder why he’s okay with being a manager for employee pay. Don’t think about it much, normal corporate BS.

Well, it got way more “normal” when his ass got laid off without notice. Feel terrible. He and 70% of my branch’s full-timers. Wonder how I got so lucky. Everyone’s gone. We barely have enough people to do a standup. They all had 5+ years on their belts minimum. Only the contractors are left.

My manager calls an emergency meeting with me. Tells me all his best staff are gone and I am now the only front-end guy on the team. He tells me he isn’t confident about me being responsible for all of the old guys’ work, and he is worried. He thinks I can’t do it cause he thinks I suck. Fuck me man.

My manager is pissing himself realizing he has lost the only people keeping HIS job for him. He has no clue about my skill level. He sees my PRs take a bit longer to merge, yet doesn’t realize I asked that friend of mine who was managing me to critique my code a bit harder, mentorship if you will, so we’d often chat about how to make the code better or about different ways of approaching problems, straight from his brain, which I appreciated. He has seen non-blocking errors come through in our build pipelines, like a quota being reached for our kube cluster (some server BS idfk, all I know is I message this Chinese man on Slack when I get this error and he refreshes the pods for me), which means we can only run a build 8x in one day before we are capped. Of all people, he should be aware of this error message and what is involved in fixing it, but he sees it and nope, he reaches out to me (after the other guy had already logged out, of course) stating my merged code changes broke the build, and reverts them before EOD. Next day, the build works fine. He has the other guy review my PR and approve it, and goes on assuming he helped me fix my broken code.

Additionally, he’s been off the editor for so long this fool wouldn’t even pass an intro to JavaScript course if he tried. He doesn’t know what I’m doing because HE just doesn’t know what I’m doing. Fuck me twice man.

I feel awful.

The dude who got fired has been called in for pointless meetings TO REVIEW MY CODE still. Like a few a week since he was laid off. When I ask my manager to approve my proposals, or check to verify the sanity of something (lots of new stuff, considering I’m the new manager *coughs*) he tells me he will check with him and get back to me (doesn’t) or he tells me to literally email him myself, but not to make any changes until he signs off on them.

It’s crazy cause he still gets on me about the speed of stuff. Bro we got NOTHING coming from top down because we just fired the whole damn corp and you have me emailing an ex-employee to verify PATCH LEVEL CHANGES TO OUR FUCKING CODE.

GET ME OUT

-

First let me start this rant by saying: Don't use SharePoint lists as your primary data store if you can avoid it. You're gonna have a bad time.

My coworkers and I work on a system where we need to pull tons of data down from a SharePoint site and run various algorithms and operations on it. Generate reports, that sort of thing. This is all done in the browser using a TypeScript React SPFx web part. Basically using SharePoint as a DB/DAL.

Because of the sheer amount of data we end up pulling down (our system in production is the single source of truth for one of the largest companies in Canada, and they're currently building a pipeline as we speak), in order to maintain a reasonable speed while using it, we have some pretty intense caching logic implemented: logic that ensures we get new items when new items are detected, and merges changes into already existing objects. It's pretty brilliant, and that's before we even consider the custom paging that my coworker implemented in order to get around the IndexedDB max size of 100MB.
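The merge-and-page approach described above can be sketched roughly like this in Python (the real code is TypeScript and isn't shown). Assumptions: each cached item carries an `id` and a `modified` ISO-8601 UTC timestamp string in one consistent format, so plain string comparison orders them correctly; `merge_into_cache`, `split_into_pages` and `max_page_bytes` are hypothetical names, and the page limit is illustrative rather than tied to any real IndexedDB quota:

```python
import json

def merge_into_cache(cache: dict, fetched: list) -> dict:
    """Add new items, and replace cached ones only when the fetched copy is newer."""
    merged = dict(cache)
    for item in fetched:
        cached = merged.get(item["id"])
        # Uniform ISO-8601 UTC strings compare correctly as plain strings.
        if cached is None or item["modified"] > cached["modified"]:
            merged[item["id"]] = item
    return merged

def split_into_pages(items: list, max_page_bytes: int) -> list:
    """Group items into pages whose compact JSON size is at most max_page_bytes
    (a single item larger than the limit still gets a page of its own)."""
    pages, current, current_size = [], [], 2  # 2 bytes for the enclosing "[]"
    for item in items:
        # +1 for the separating comma (slightly overestimates, so pages stay under).
        size = len(json.dumps(item, separators=(",", ":")).encode("utf-8")) + 1
        if current and current_size + size > max_page_bytes:
            pages.append(current)
            current, current_size = [], 2
        current.append(item)
        current_size += size
    if current:
        pages.append(current)
    return pages
```

Keeping the merge keyed on a last-modified value means a stale fetch can never overwrite newer cached data, which is also exactly why a wrong timestamp in the cache is so hard to spot later.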

Well that's all well and good, and works great in production, but it is a horror to work with. Because EVERYTHING we touch on the server is cached locally, it can be IMPOSSIBLE to detect data anomalies, be they local or server side -.- You don't know how many hours I have completely WASTED fixing a "bug" that didn't really exist... Just incorrect data in the cache

-

!rant, so I'm trying to decide which caching system I should use. It's for a PHP app using Symfony as the framework, together with Doctrine for the DB. The caches in question are Memcached, APCu and Redis.

The goal: speed shit up.

The app currently uses Symfony 2.8 and is hosted on a single server (so no distributed system is needed). I'd currently opt for APCu, mainly because it isn't distributed, so there's no overhead from that. A nice thing about Memcached would be the ability to store user sessions, even if we decided to have multiple servers in the future.

What would you recommend, and why?

-

You know you work at a good job when you download a DVD-sized ISO image and the only speed limit is the server on the other end that you're downloading from.

-

-- Have you ever self-hosted a Linux/FreeBSD server at home?

-- What was the maintenance like in terms of operation and cost compared to an online service?

My partner and I are planning to self-host our Ubuntu server locally. We currently spend less than $1,100 a month on Azure with moderate CPU usage, but we plan to scale out and believe the server costs might skyrocket.

We've set a budget of $25k for the setup, which includes the cost of hardware, bandwidth and power.
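A rough break-even check can help frame the decision. A sketch, assuming the Azure bill stays flat (the post expects it to grow, which would shorten the payback) and using a hypothetical monthly self-hosting running cost, since the post doesn't say what period the budget's bandwidth and power cover:

```python
def break_even_months(upfront: float, cloud_monthly: float,
                      self_host_monthly: float) -> float:
    """Months until the upfront spend is recovered by the monthly savings."""
    savings = cloud_monthly - self_host_monthly
    if savings <= 0:
        return float("inf")  # self-hosting never pays for itself at these rates
    return upfront / savings

UPFRONT = 25_000         # setup budget from the post
AZURE_MONTHLY = 1_100    # current Azure bill from the post (stated as "less than")
SELF_HOST_MONTHLY = 300  # hypothetical running cost (power, bandwidth, spares)

print(f"{break_even_months(UPFRONT, AZURE_MONTHLY, SELF_HOST_MONTHLY):.1f} months")
```

With these placeholder numbers the payback takes a little over 31 months; even with zero running costs it is about 23 months.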

We also did some research on the most commonly used hardware for home servers, because we really are newbies when it comes to hardware. What we found were options built around Intel Xeon CPUs; some others say to use a NAS, while some results are more like advertising.

With $25k on the table, we care more about speed than space. How can we make the setup totally worth it? You don't have to hand us a complete plan, just some headlights and a direction to go in.

Your advice is needed. Thank you.

-

Any tips to speed up a WordPress site? I have googled and tried as many solutions as I can, except adding a CDN. I have optimized images and minified HTML, CSS and JS. I have set up server-side caching with LiteSpeed Cache. There are not many plugins on the site.

The plugins installed are Elementor, LiteSpeed Cache, Orbit Fox, WP-Optimize, UpdraftPlus and WPForms Lite. The site takes around 4 to 5 seconds to fully load. I am doing this for a relative (don't worry, he is sane, and I am doing pretty simple stuff for him, which is simply not worth charging for). I can't use the Cloudflare CDN since it needs nameserver access, and the host is Hostinger, which has set up a lot of DNS records that I don't understand and don't want to mess with unless it's the last option.

-

I am super frustrated and don't have the energy to translate into a common language, so here goes some Hinglish venting:

Fucking bosses have made my life hell... I came back from vacation so nicely relaxed, and within 2 days they've wrecked my mood with their antics.

Yes, bosses, plural. The damn "systummm" here runs on boss on top of boss on top of boss.

It's winter and they're all sitting around idle, so they hold a meeting every half hour.

Almost the whole team has shown their true colors: one is on 5 days' leave, another on 7, so these PowerPoint fuckers just keep hammering whoever they can get hold of.

I've been given a feature to build... First they delegated every damn thing here and there and turned 15 days of work into 45, and now that the release deadline has passed, they're stacking meeting on top of meeting. You assholes, do you think holding meetings will get your code written for you?

On top of that it's fucking cold.... Who's going to the office in this cold to lick your balls? Call in whoever is going anyway, and let me work from home under my quilt. My ass has shriveled up from the cold, and they still want someone in the office after a 2-hour metro ride. The team has only 7 dicks (technically 5 dicks and 2 !dicks), and 5 of them have made excuses and are sitting at home... A monkey of a manager shows up to suck up to her boss's boss... and expects me to come in and suck up alongside her. I don't want to suck up, man :'(

Make me waste 4 hours on travel; ask for a parking space and they say your band level is too low. In the office, their Wi-Fi with the VPN crawls along at a fucking 2 kbps. The emulators are a pain in the ass, and they still want someone in the office and the feature ready in 2 days.

If they aren't screwing you over themselves, their policies do it for them... all the damn dev servers shut down at 9 PM... Oh mama, after holding 5 hours of meetings a day, do you think any work got done? And at home a person spends 1 to 2 hours just eating, shitting and pissing... I'm trying to make up for the time you waste by working at night of my own free will, but even that bugs you... So keep crying, fuckers. Like hell I'll build you the feature before Christmas... pfft.

Screw it, I'll just take off my underwear and bend over; come on, all you bosses, take turns screwing me.

-

VICTIMIZED BY CRYPTO SCAM: RECOVER YOUR LOST FUNDS WITH TRUST GEEKS HACK EXPERT