Search - "#asm"


I hate everybody who says JavaScript is the best language because of loose typing and because it's easy to learn. YES, OF COURSE IT IS EASY!

IT'S FUCKING JAVASCRIPT! IT WAS MEANT TO BE EASY! AND THEN SOME ASSHOLE CAME ALONG, CREATED NODE AND THOUGHT THAT IT WAS A GOOD IDEA!

NOW WE HAVE TO DEAL WITH THIS SHIT EVERYWHERE BECAUSE PEOPLE WHO WROTE CODE FOR UX NOW THINK THEY KNOW WHAT NEEDS TO HAPPEN ON THE SERVER SIDE!!

GOD FUCKING DAMNIT I HATE THIS ANALTOY OF A LANGUAGE.

YOU THINK JAVASCRIPT IS THE BEST?! DO YOU REALLY??!!! OH YEAH!?!

WELL FUCK YOU AND GO TO HELL, YOU ARE NOT A DEVELOPER IN MY EYES, GO HOME KIDDO, LEARN C OR ASM OR HOW A FUCKING COMPUTER ACTUALLY WORKS!!

AND THEN TELL ME AGAIN JAVASCRIPT IS A WELL DESIGNED AND PROPER LANGUAGE!!

I'M OUT!

In Russia we have a huge techno-nazi community. They often can be found on some programming forums and a website called Habr.

They’ll shame you if you’re a web developer and don’t write in Asm or C. They spread toxic memes and insult “stupid humanitarians”. One particular guy constructed a whole ideology centered on tech enthusiasts being the “master race”, which implies recycling non-technical people in so-called “bioreactors” for energy.

Please don’t be like them.

I am sure this has happened to all of us to some extent, with some variations.

Colleague not writing comments on code.

I'd ask him something like "How am I supposed to understand that piece of garbage you have written when there are no comments or documentation?"

This kept happening for a long time. Some time later, I wrote a kernel module (for an RTOS) using idiomatic C with ASM blocks for optimization, and purposely wrote neither documentation nor comments.

When he asked for an explanation, I answered everything he questioned as vaguely as I could, calling it "that trivial piece of code".

After that, he always documented his code!

Win! 🏆

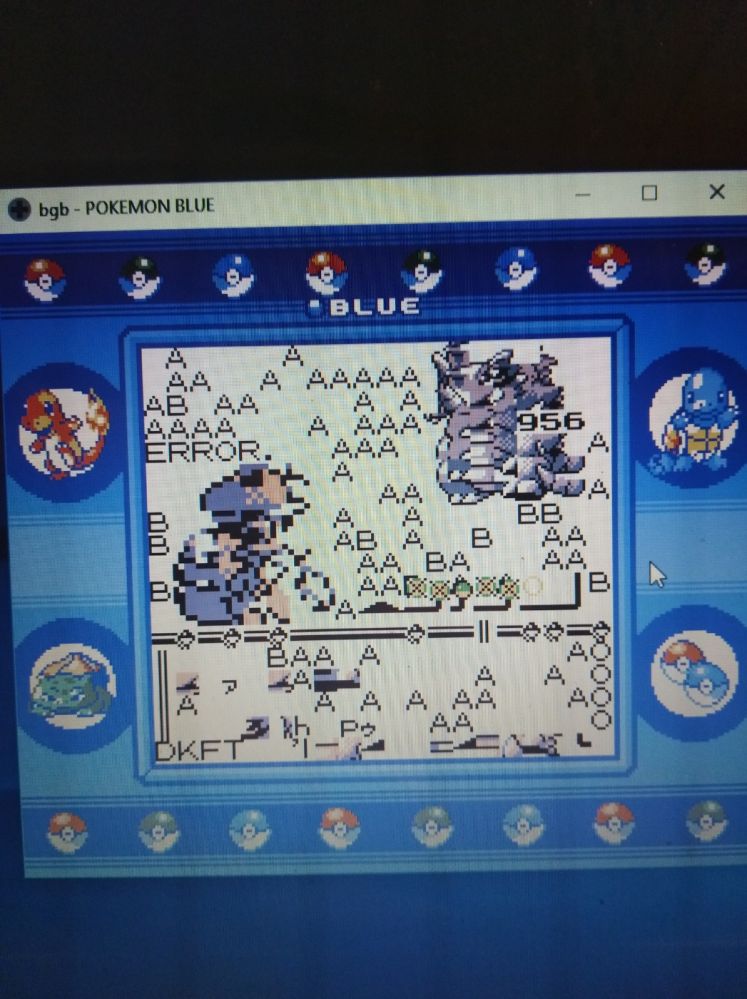

EEEEEEEEEEEE Some fAcking languages!! I actually barf while using this trashdump!

The gist: new job, position required adv. C# knowledge (like f yea, one of my fav languages), we are working with RPA (using software robots to automate stuff), and we are using some new robot still in beta phase, but the robot has its own programming language.

The problem:

- this language is kind of like ASM (I think so, I'm venting here, it's ASM OK), with syntax that burns your eyes

- no function return values, but I can live with that, at least they have some sort of functions

- emojis for identifiers (like PHP's $var, but they only aim for shitty features so you use a heart.. ♥var)

- only jump and jumpif for control flow

- no foopin variable scopes at all (if you run multiple scripts at the same time they even share variables *pukes*)

- weird alt characters everywhere. define strings with regular quotes? nah let's be [some mental illness] and use prime quotes (‴ U+2034), and like ⟦ ⟧ for array indexing, but only sometimes!

- super slow interpreter, e.g. a regular loop to count to 10 (using jumps, because yea, no actual loops) takes more than 20 seconds to execute, approx. 700 ms to run 1 line of code.

- it supports c# snippets (defined with these stupid characters: ⊂ ⊃) and I guess that's the only c# I get to write with this job :^}

- on top of that, outdated documentation, because yea it's beta, but it's so crappin tedious with all this trial n error to check how every feature works

The question: why in the living fartfaces yolk would you even make a new language when it's so easy nowadays to embed compilers!?! The robot is apparently made in C#, so it should be no funcking problem at all to add a damn Lua compiler or something. Having a TCP API would be even easier, got dammit!!! And what in the world made our company think this robot was a plausible choice?! Did they do a full fubbing analysis of the different software robots out there and accidentally sort by ease of use in reverse order?? 'Cause that's the only explanation I can imagine.

Frillin stupid shitpile of a language!!! AAAAAHHH

see the attached screenshot of production code we've developed at the company for reference.

Disclaimer: I do not stand responsible for any eventual headaches or gouged eyes caused by the named image.

(for those interested, the robot is G1ANT.Robot, https://beta.g1ant.com/)

I'm struggling with a bug. It's not my bug, it's in a lib - in an assembly function commented as "this is where the magic happens". After 27 hours of trying to determine what triggers said bug, my best guess is that it's a relationship between the position of Mimas and the mood of my neighbour's chonker. If it's not triggered by Saturn's moon and a pet animal... I'm out of ideas.

Now how do I break it to my PM that we have to kill a cat?

Q: How can you be a malware analyst without knowing x86 ASM?

A: Start learning 32-bit ASM instead.

Did you know Chris Sawyer developed RollerCoaster Tycoon almost entirely in assembly?

Almost as impressive as TempleOS.

Short one, but it really gets me every time:

PLEASE tell me that I am not the only one typing hex-numbers in all caps!!!

I literally can't stand to see them in lowercase!!!

In every piece of code with hex numbers that I use (primarily ASM and C), I HAVE TO convert them to uppercase!!!

Is it just me and my stupid OCD, or are there others like me????
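In C at least, the case of hex output is a format choice rather than something to convert by hand: `%X` prints the digits A-F in uppercase, `%x` in lowercase. A minimal sketch (`format_hex_upper` is a hypothetical helper) that keeps the `0x` prefix lowercase, which `%#X` would not do since it prints `0X`:

```c
#include <assert.h>
#include <inttypes.h>
#include <stdio.h>

/* Write `value` as "0x" followed by 8 zero-padded uppercase hex digits.
   PRIX32 is the uppercase-hex conversion for uint32_t from <inttypes.h>.
   Returns the number of characters snprintf would have written. */
int format_hex_upper(char *out, size_t out_size, uint32_t value) {
    assert(out != NULL && out_size > 0);
    return snprintf(out, out_size, "0x%08" PRIX32, value);
}
```

Using `PRIX32` rather than a bare `%X` keeps the format string correct for `uint32_t` regardless of how wide `unsigned int` is on the platform.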

College student here.

What are the most important skills/assets one should bring to the workplace, both as a developer and as a colleague?

I started learning x86_64 ASM, so I chose MASM with VS 2013 because it's the best for debugging.

So I just wasted like 2 hours trying to make a simple program, like printing Fibonacci numbers, to start with ASM.

The problem started when using the printf function: after calling printf, my local vars became garbage. After googling and looking for the ultimate answer to the problem, I found the site with the ultimate answer (https://cs.uaf.edu/2017/fall/...): in MASM64, when calling a function you must allocate stack space for the current function's own locals plus the space the called function (printf) needs.
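What this runs into is the Windows x64 calling convention: before every `call`, the caller must reserve 32 bytes of "shadow" (home) space directly above the return address, and RSP must be 16-byte aligned at the call. printf is free to spill its register arguments into that space, so locals kept there get clobbered. A sketch of the frame-size arithmetic in C, assuming the function pushes `pushes` 8-byte registers before a single `sub rsp, N`, keeps its locals 8-byte aligned and passes no stack arguments beyond the four register ones (`win64_frame_size` is a hypothetical helper, not part of any toolchain):

```c
#include <assert.h>
#include <stddef.h>

#define SHADOW_SPACE 32u /* bytes the caller reserves for the callee (Win64 ABI) */

/* Amount to subtract from RSP in the prologue of a Win64 function that calls
   other functions. On entry RSP % 16 == 8 because of the pushed return address;
   after the pushes and the sub, RSP must be 16-byte aligned again. */
size_t win64_frame_size(size_t locals, size_t pushes) {
    assert(locals % 8 == 0); /* simplifying assumption for this sketch */
    size_t n = SHADOW_SPACE + locals;
    size_t misalign = (8 + 8 * pushes + n) % 16;
    if (misalign != 0)
        n += 16 - misalign; /* pad so the next call sees an aligned RSP */
    return n;
}
```

For a function with no locals and no pushes this gives the familiar `sub rsp, 40`; the shadow space is then `[rsp]` to `[rsp+31]`, and locals belong at `[rsp+32]` and above, out of printf's reach.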

Just talked to a guy who codes microcontrollers in assembler. He wants to use a Raspberry Pi as an interface device between TCP and a serial port. He asked me how fast that would run over the internet, and I told him that it depends on the connection and other things; it takes at least a few milliseconds to transmit.

He is like:

What the fuck do you mean milli secs?

I poll data on my 20 MHz controller every microsecond or so, and that Pi has 1 GHz.

Me like: Yea go fucking code that shit on your own with ASM.

Chrome 57 adds WebAssembly support.

I just know I'm going to be asked to use it soon. I just know it. It's not even close to a finished specification!

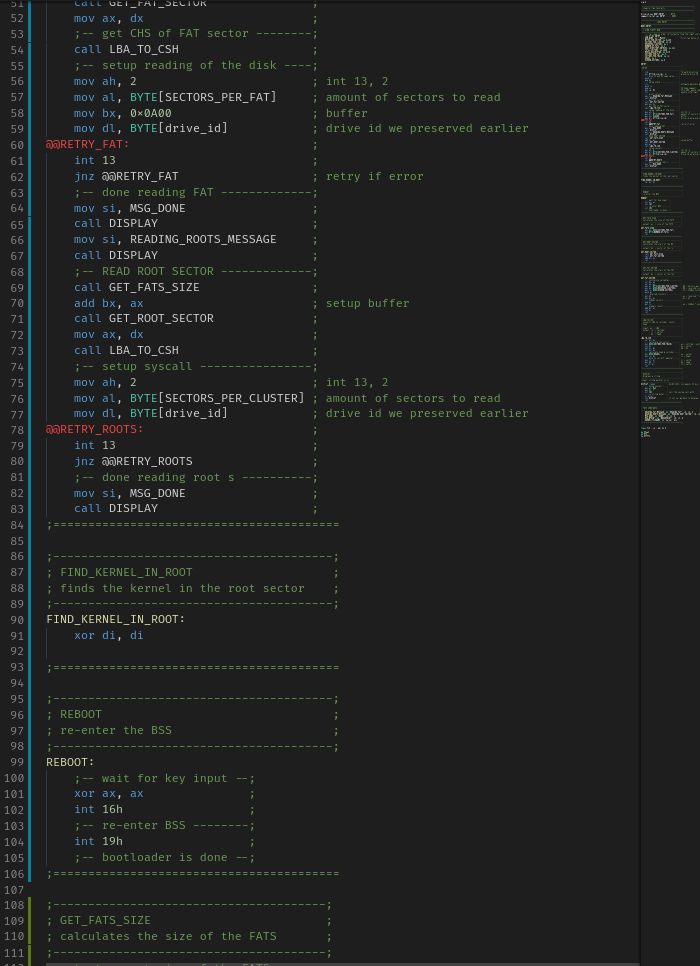

Here is another rather big example of how C++ is WAY slower than assembler (picture)

Sure - std::copy is convenient

but asm is just way faster.

This code should be compatible with EVERY x86_64 CPU.

I even do Duff's device without having the loop:

The loop happens in the rep opcode, which allows for prefetching (meaning that it doesn't destroy the prefetch queue and can even allow for preprocessing).

BTW: for those who commented on my comment porn last time: I made sure to satisfy your cravings ;-)

To those who can't make sense of my command line:

C++ 1m24s

ASM 19s

To those who tell me to call clang with -O<something>:

1) clang removes the call to copy at -O3 or -O2

2) the result isn't better at -O1 (well... one second, but that might be due to so many other things, and even if... one second isn't that much)
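For anyone who wants to reproduce the comparison, here is a minimal sketch of the same idea in C using GCC/Clang extended inline assembly: `rep movsb` copies RCX bytes from [RSI] to [RDI]. It assumes an x86-64 target and non-overlapping buffers, falls back to `memcpy` elsewhere, and `copy_rep_movsb` is a hypothetical name. When timing it against `std::copy`, compile both sides with the same flags, and expect results to vary by CPU (for example, whether it supports Enhanced REP MOVSB, ERMSB).

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Copy n bytes from src to dst; the regions must not overlap. */
void copy_rep_movsb(void *dst, const void *src, size_t n) {
    assert(dst != NULL || n == 0);
#if defined(__x86_64__) && (defined(__GNUC__) || defined(__clang__))
    /* RDI = destination, RSI = source, RCX = count. The x86-64 System V and
       Windows ABIs require the direction flag to be clear at call boundaries,
       so the copy runs forward. All three registers are modified, hence "+". */
    __asm__ volatile("rep movsb"
                     : "+D"(dst), "+S"(src), "+c"(n)
                     :
                     : "memory");
#else
    memcpy(dst, src, n); /* portable fallback on other targets */
#endif
}
```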

!rant

TFW your graphics ASM code works on the first try.

Yes, it just happened to me, and it's a relatively annoying rectangle drawing routine.

Writing complicated ASM code and making it work on the first try is definitely a new thing to me, I feel so powerful! >:D

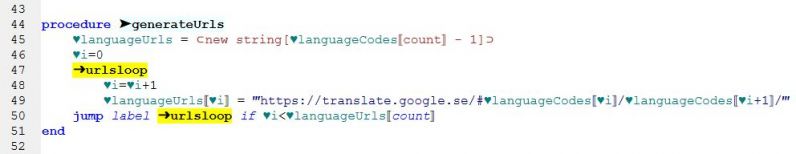

My goal was to hack the game to increment a random byte of memory every frame if SELECT was pressed. Mission accomplished!

(Done with ASM hacks, not recompiling from the pokeblue disasm)

Saw a post in which some dude said that he lost his virginity to C++. Well, I got it the hard way - I actually lost mine to assembler.

A /thread.

I have to say something important. As the story progresses, the rage will keep fueling up and get more spicy. You should also feel your blood boil more. If not, that's because you're happy to be a slave.

This is a clusterfuck story. I'll go back and forth between some paragraphs to talk about more details and why everything, INCLUDING OUR DEVELOPER JOBS, IS A SCAM. We're getting USED as SLAVES because it's standardized AS NORMAL. IT IS EVERYTHING *BUT* NORMAL.

START:

As I'm watching the 2022 World Cup, I noticed something that has enraged me as a software engineer.

The camera pointed to the crowd, where there were old football players such as Ronaldinho, Kaká, old (fat) Ronaldo and other assholes I don't give a shit about.

These men are old (old for football) and therefore they don't play sports anymore.

These men don't do SHIT in their lives. They have retired at like 39 years old with MULTI MILLIONS OF DOLLARS IN THEIR BANK ACCOUNT.

And that's not all. Despite them not doing anything in life anymore, THEY ARE STILL EARNING MILLIONS AND MILLIONS OF DOLLARS PER MONTH. FOR WHAT?????

Meanwhile I, as a backend software engineer, get used as a slave to do extreme, hard-as-SHIT jobs for a slave salary.

$500-600 MAX PER MONTH is for junior BACKEND engineers! By the law of my country, software businesses are not allowed to pay less than $500 for IT jobs. If that's for backend, imagine how much lower it is for frontend! I'll tell you, 'cause I used to be a frontend dev in 2016: $200-400 PER MONTH IS FOR FRONTEND DEVELOPERS.

A BACKEND SOFTWARE ENGINEER with at least 7-9 years of professional experience, is allowed to have $1000-2000 PER MONTH

In my country, if you want to have a salary of MORE THAN $3000/Month as SOFTWARE ENGINEER, you have to have a minimum of Master's Degree and in some cases a required PhD!!!!!!

Are you fucking kidding me?

Also (btw, I have a BSc in comp. sci. from a valuable university), I have taken a SHIT ton of interviews. NOT ONE OF THEM HAS ASKED ME IF I HAVE A DEGREE. NO ONE. All HRs and lead devs have asked me about myself, what I want to learn, and about my past dev experience, projects I worked on, etc., so they can approximate my knowledge complexity.

EVEN TOPTAL! Their HR NEVER asked me about my fucking degree because no one gives a SHIT about your fucking degree. Do you know how you can tell if someone has a degree? THEY'LL FUCKING TELL YOU THEY HAVE A DEGREE! LMAO! It was all a Fucking scam designed by the Matrix to enslave you and mentally break you. Besides wasting your Fucking time.

This means that companies put degree requirement in job post just to follow formal procedures, but in reality NO ONE GIVES A SHIT ABOUT IT. NOOBOODYYY.

ALSO: I GRADUATED AND I STILL DID NOT RECEIVE MY DEGREE PAPER BECAUSE THEY NEED AT LEAST 6 MONTHS TO MAKE IT. SOME PEOPLE EVEN WAITED 2 YEARS. A FRIEND OF MINE WHO GRADUATED IN FEBRUARY 2022 STILL DIDN'T RECEIVE HIS DEGREE TODAY, IN DECEMBER 2022. ALL THEY CAN DO IS PRINT ME A PAPER CONFIRMING THAT I DO HAVE A DEGREE, AS PROOF FOR COMPANIES WHO HIRE ME. WHAT THE FUCK ARE THEY MAKING FOR SO LONG, DIAMONDS???

Are you fucking kidding me? You fucking bitch. The sole paper I can use to wipe my asshole with, the one they call a DEGREE, and in the end I CAN'T EVEN HAVE IT???

Fuck You.

This system, which values how much BULLSHIT you can memorize in the short term, is called an "EDUCATION" system, NOT a "MEMORIZATION" system.

Think about it. Don't believe me? Are you one of those nerds with A+ grades who loves school and defends this education system? Here, I'll fuck you with a single question: if I gave you a task from linear algebra, math analysis, probability and statistics, physics, or theory, or a task to write ASM code, would you know how to do it? No, you won't. Because you "learned" that months or years ago. You don't know shit. CHECKMATE. You can answer those questions by googling. Even the most experienced software engineers still use Google. ALL of my friends with A+ grades always answered "I don't know" or "I don't remember". HOW, IF YOU PASSED IT WITH AN A+ 6 DAYS AGO? If so, WHY THE FUCK ARE WE WASTING YEARS OF AN ALREADY SHORT HUMAN LIFE TO TEMPORARILY MEMORIZE GARBAGE? WHY DON'T WE LEARN THAT PROCESS THROUGH WORKING ON PRACTICAL PROJECTS??? WOULDN'T YOU AGREE THAT'S A BETTER SOLUTION, YOU MOTHERFUCKER BITCH ASS SLAVE SUCKA???

I can't even afford to buy my first fucking car with this slave salary. Inflation is up so much that 1 bag of BASIC groceries from Walmart costs $100. IF BASIC GROCERIES ARE $100, HOW DO I LIVE WITH $500-600/MONTH IF I HAVE OTHER EXPENSES?

Now, back to slavery. Here's what I learned.

1800s: slaves are directly forced to work in exchange for food to survive.

2000s: slaves are indirectly forced to work in exchange for money as a MIDDLEMAN that can be used to buy food to survive.

????

This means: slavery has not gone anywhere. Slavery has just evolved. And you're fine with it.

Will post part 2 later.

WHY IS IT SO FUCKIN ABSURDLY HARD TO PUSH BITS/BYTES/ASM ONTO A PROCESSOR?

I have bytes that I want run on the processor. I should:

1. write the bytes to a file

2a. run a single command (starting a virtual machine (that installed with no problems (and is somewhat usable out-of-the-box))) that would execute them, OR

2b. run a command that would image those bytes onto (bootable) persistent storage

3b. restart and boot from that storage

But nooo, that's too sensible, too straightforward. Instead I need to write those bytes as a parameter into a c function of "writebytes" or whatever, wrap that function into an actual program, compile the program with gcc, link the program with whatever, whatever the program, build the program, somehow it goes through some NASM/MASM "utilities" too, image the built files into one image, re-image them into hdd image, and WHO THE FUCK KNOWS WHAT ELSE.

I just want... an emulator? Probably. Something. Something which works out of the box, in a way that I provide a file with bytes and it just starts executing them the same way an empty processor starts executing stuff.

What's so fuckin hard about it? I want the iron here, and I want a byte funnel into that iron, and I want that iron to run the bytes I put into the fuckin funnel.

Fuckin millions of indirection layers. Fuck off. Give me an iron, or a sensible emulation of that iron, and give me the byte funnel, and FUCK THE FUCK AWAY AND LET ME PLAY AROUND.
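Something close to that byte funnel already exists on x86: a PC BIOS (including the one QEMU emulates) loads the first 512-byte sector of a disk to address 0x7C00 and jumps to it, as long as bytes 510 and 511 are 0x55 and 0xAA. A minimal C sketch that writes such a raw image; the four bytes are hand-assembled 16-bit code (`cli`, then a `hlt` loop), and `build_boot_sector`/`write_boot_image` are hypothetical names:

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define SECTOR_SIZE 512

/* Fill `sector` with a bootable 512-byte image: code at offset 0,
   zero padding, and the 0x55 0xAA boot signature at the end. */
void build_boot_sector(unsigned char sector[SECTOR_SIZE]) {
    static const unsigned char code[] = {
        0xFA,      /* cli          : ignore maskable interrupts      */
        0xF4,      /* hlt          : sleep until the next interrupt  */
        0xEB, 0xFD /* jmp short -3 : back to the hlt, forever        */
    };
    assert(sizeof code <= 510); /* code must not overlap the signature */
    memset(sector, 0, SECTOR_SIZE);
    memcpy(sector, code, sizeof code);
    sector[510] = 0x55;
    sector[511] = 0xAA;
}

/* Write the image to `path`; returns 0 on success, -1 on failure. */
int write_boot_image(const char *path) {
    unsigned char sector[SECTOR_SIZE];
    build_boot_sector(sector);
    FILE *f = fopen(path, "wb");
    if (f == NULL)
        return -1;
    size_t written = fwrite(sector, 1, SECTOR_SIZE, f);
    int closed = fclose(f);
    return (written == SECTOR_SIZE && closed == 0) ? 0 : -1;
}
```

The resulting file can be run with `qemu-system-x86_64 -drive format=raw,file=boot.bin`; it should boot and then simply sit there, since the code only halts. NASM's `-f bin` output format produces the same kind of flat file directly from assembly source.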

The best/worst code comments you have ever seen?

Mine:

//Upload didn't work, have to react:

system.println('no result');

//$Message gives out a message in the compiler log.

{$Message Hint 'Feed the cat'}

//Not really needed

//Closed source - Why even comments?

//Looks like bullshit, but it has to be done this way.

//This one's really fucked up.

//If it crashes, click again.

asm JMP START end; //because no goto XP

catch {

//shit happens

}

//OMG!!! And this works???

asm

...

mov [0], 0 //uh, maybe there is a better way of throw an exception

...

mov [0], 0 //still a strange way to notify of an error

// this makes it exiting -- in other words: unstable !!!!!

//Paranoic - can't happen, but I trust no one.

else {

//please no -.-

sleep(0);

}

//wuppdi

for (int i = random(500); i < 1000 + random(500 + random(250)); i++)

{

// Do crap, so its harder to decompile

}

//This job would be great if it wasn't for the f**king customers.

//TODO: place this peace of code somewhere else...

// Beware of bugs in the code above; I have only proved it correct, not tried it.

{$IFDEF VER93}

//Good luck

{$DEFINE VER9x}

{$ENDIF}

//THIS SHIT IS LEAKING! SOMEONE FIX IT! :)

/* no comment */

My boss keeps pushing me to do "any" courses..

I’d say I’m doing my job exceptionally well. In fact, he even told me so before he promoted me.

I had to tell him what I wanna learn in the next 2-3 years. I told him I wanna be decent in C++ because I love the language, and in my opinion every dev can improve by learning a low-level language.

I have some MITx courses and stuff I wanna do (I actually want to do them), but he keeps pushing me to send him the courses so he can push me and (I think) monitor my progress..

C/Cpp and asm have always been my love, I wanna improve and learn. But I wanna do it for myself, not for my boss. The company doesn’t have any use for it anyway..

And those courses are 4 weeks to 12 months with scheduled assessments.

I shouldn’t have mentioned it. Now it’s an expectation they have.

Now I have to force myself into doing those courses in time.. on a schedule..?

90% of them will bore the shit out of me 'cause I already know it, and the remaining 10% is stuff I wanna look at when I feel like it. But I don’t have a paper that says I know those 90%, so yeah..

Why can’t he just be happy with the work I do during working hours and leave my free time up to me???

Probably when I was younger and got my AI working for her checkers game I made in Z80 asm for the TI-86. Spent so much time on it not working, and then seeing it finally working, taking jumps and scoring the board positions, it seemed semi-intelligent even though it was a very weak AI... Still, I was excited 😁

I'm curious: what was the most ridiculously otherworldly, least understandable, eye-opening code you have ever seen?

BUT, I mean that in a good way. And what did you learn from it?

For me personally, I would probably say some of the C++ stdlib implementations. Just totally not English in some places. I mean seriously, sometimes asm is more readable than C++.

So I planned on going out drinking to wind down from programming for the week, but couldn't find anyone to go out with... So instead I stayed at home researching x86_64 and ARM assembly while drinking hard cider on my own... Well... A night well spent?

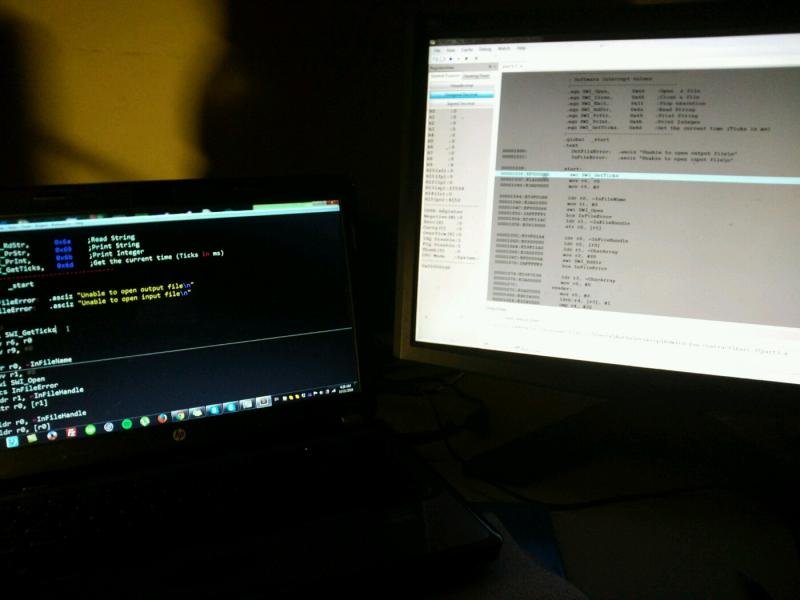

I'm pretty sure I've said this before but I'm attempting to transition parts of my Patchwork OS to a new one that supports GNU GRUB / Multiboot.

I think I am finally going insane.

The "click" moment always feels fucking amazing. TI made some retarded ASM routines (as usual) for drawing various things to the screen; most of the time, whatever you try to draw takes upwards of 3 frames at 15 MHz. A LINE KNOWN TO BE 100% STRAIGHT SHOULD NOT TAKE 1/3 OF A FRAME TO PLOT EACH PIXEL OF. I managed to get it down to 300-some cycles per pixel on the 2 I've messed with, which still isn't great, but it's a massive increase in efficiency, so fuck it, I'm happy. The "click" was when I managed to get a serious optimization working that took over 3 hours to debug.
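Why a known-straight line shouldn't need a per-pixel plot: in a packed 1-bit-per-pixel buffer, a horizontal run can be filled a whole byte (8 pixels) at a time, with masks only for the partial bytes at each end. A sketch in C, assuming a 96×64 monochrome buffer with 12 bytes per row and bit 7 as the leftmost pixel (the layout of the TI-83 Plus/84 Plus graph buffer; the post doesn't say which model or screen format it targets), with `hline` as a hypothetical name:

```c
#include <assert.h>
#include <stdint.h>

#define LCD_W 96
#define LCD_H 64
#define ROW_BYTES (LCD_W / 8) /* 12 bytes per row */

/* Set pixels x0..x1 (inclusive) on row y of a 1bpp buffer. */
void hline(uint8_t *buf, int y, int x0, int x1) {
    assert(y >= 0 && y < LCD_H);
    assert(x0 >= 0 && x0 <= x1 && x1 < LCD_W);
    uint8_t *row = buf + y * ROW_BYTES;
    int b0 = x0 / 8, b1 = x1 / 8;
    uint8_t left = (uint8_t)(0xFF >> (x0 % 8));      /* x0 to end of its byte */
    uint8_t right = (uint8_t)(0xFF << (7 - x1 % 8)); /* start of byte to x1   */
    if (b0 == b1) { /* the whole run fits inside one byte */
        row[b0] |= left & right;
        return;
    }
    row[b0] |= left; /* partial first byte */
    for (int b = b0 + 1; b < b1; b++)
        row[b] = 0xFF; /* 8 pixels per store */
    row[b1] |= right; /* partial last byte */
}
```

A full-width line then costs 12 byte stores instead of 96 individual pixel plots.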

I've been BSing my skillset for so long to myself, it's a veritable toolbox of mixed knowledge but no complete sets...

I wonder if it's too late for me to catch up or if I will ever actually complete any learning...

I have yet to finish learning:

Html

CSS

PHP

Ruby

C#

ASM I can do i386 but not x86

VB

Pascal if you can believe

C

C++

Java

JS

Python

Powershell

Bash

My main skill is basically just remembering anything I do, including code syntax and example code fragments, well enough to quote them at people, which makes me a lazy learner.

I've read that devRant is using JavaScript and the like; what I'm wondering is how one uses JavaScript for Android apps. I know PhoneGap exists, but I also read that there are some performance issues with it. What does devRant use?

First "computer": Elektronika BK. Had some fun with table software and some BASIC.

First x86: Intel 80286 with a whopping 1 MB of RAM and a 40 MB hard drive.

First fun experience:

Me : "I'm gonna clean folders"

Me : "What are these files on the c: ? I'll move them into a folder"

(You know, like io.sys, autoexec.bat)

Reboots :

Computer : "Please insert a boot drive"

Me : "The what now?"

Needed some help to fix it.

At least I learnt how a boot loader works and wrote my own small thingy in asm.

*le me being frustrated af trying to compile asm.

.section won't work for fasm, and some other things won't work for NASM.

Now I've got the .obj aka .o from the .asm, but ldrdf.exe from the NASM package isn't working properly, and I can't find any troubleshooting help online. Seems like this will be a sleepless night...

- I have done this, this and this. I'm an amazing programmer, even though I copied it from SO.

- Alright, could you explain this part, since you did not write one single comment?

- (insert generic bullshit excuse)

You don't think he's the one getting the internship and the summer job since he's the loudest? Dear god, my fist, his face.

My friend tried to disassemble FakeSMC (hackintoshers where are u at) into assembly code.

My friend: yo dude, let's look at FakeSMC's ASM!

Me: u stoopid or wut

My friend: don't worry, it's gonna be so much fun!

My friend, after an hour and an accidental modification to the file through ASM: bro, I need your help, my hackintosh won't boot and I need your backup

I haven't seen Gary Bernhardt's presentations linked in here yet, hopefully that's because everyone's already seen them - but if you haven't, prepare yourself:

https://destroyallsoftware.com/talk...

Everyone: *circle jerking over cyberpunk 2077 and Red dead redemption 2*

Me: yeah looks cool but I think it'd be more interesting to build a modern operating system in pure unadulterated ASM...

Am I the only one not getting hyped over those games and just brain-dead, or is anyone else in the same boat?

Learned ARM assembly in just a day. I guess I proved myself wrong when I thought it's gonna be hard to jump to another architecture.

PS. Originally worked with 16-bit Windows Assembly. 😂

A big development company needed summer interns; the job required Java and the like, and it was the first big interview I've had. This wasn't a problem, I thought, until I got there. Worth noting is that I'm still in school, and the last time I used Java extensively was a year prior to the interview. I completely blanked on the, rather basic, questions. Needless to say, I didn't get it.

How do you discover and exploit vulnerabilities in programs or IoT firmware? C++, asm, writing zero-days: I have always been amazed by that. Art.

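SYS_WRITE equ 1 ; Linux x86-64 syscall number for write

STDOUT equ 1 ; file descriptor for standard output

section .data

msg: db "ASM, Love Or Hate?",10

msglen: equ $-msg

section .text

GLOBAL _start

_start:

mov rsi, msg ; buffer address ([msg] would load the bytes themselves)

mov rdx, msglen ; number of bytes to write

call print_stdout

mov rax, 0x3c ; syscall number for exit

mov rdi, 0 ; exit status

syscall

print_stdout:

mov rax, SYS_WRITE

mov rdi, STDOUT ; fd in rdi; buffer (rsi) and length (rdx) are set by the caller

syscall

ret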
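For comparison, the same write from C, assuming Linux on x86-64, where `write` is system call 1 (`SYS_write`) and `exit` is 60 (the `0x3c` above). The kernel receives the arguments in the same registers the assembly uses: RDI for the file descriptor, RSI for the buffer, RDX for the length. This sketch goes through glibc's `syscall()` wrapper; `print_stdout` mirrors the label above and is otherwise a hypothetical name:

```c
#define _GNU_SOURCE /* exposes syscall() in glibc's <unistd.h> */
#include <assert.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Equivalent of the print_stdout routine: write(STDOUT, buf, len).
   Returns the number of bytes written, or -1 on error. */
long print_stdout(const char *buf, size_t len) {
    assert(buf != NULL);
    return syscall(SYS_write, STDOUT_FILENO, buf, len);
}
```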

Hitting `git commit -asm` when there are still untracked files.

And you knew that you fucked up the moment you saw the result of your `git status`.

2005, after I tried to program my computer to be quicker. By the time I realized that it was impossible, I was hooked.


Can someone explain to me how a global descriptor table is implemented in a kernel? My problem is basically that I want to build a kernel that can run separate programs in userland, but I don't quite understand how the GDT and LDT should be implemented.
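A GDT is just an array of 8-byte descriptors that the `lgdt` instruction points the CPU at; each descriptor scatters a 32-bit base, a 20-bit limit, an access byte and 4 flag bits across its 8 bytes. A sketch of the encoding in C for a flat 32-bit protected-mode setup with kernel (ring 0) and user (ring 3) code and data segments; `gdt_entry` and `build_flat_gdt` are hypothetical names. To run userland programs you will also need a TSS descriptor, because the CPU loads the kernel stack (SS0:ESP0) from the TSS when an interrupt or system call switches from ring 3 to ring 0; most hobby kernels skip the LDT entirely.

```c
#include <assert.h>
#include <stdint.h>

/* Pack one segment descriptor.
   access: present bit, DPL (ring 0-3) and type, e.g. 0x9A = ring-0 code.
   flags:  0xC = 4 KiB granularity + 32-bit segment. */
uint64_t gdt_entry(uint32_t base, uint32_t limit, uint8_t access, uint8_t flags) {
    assert(limit <= 0xFFFFF); /* the limit field is only 20 bits wide */
    uint64_t e = 0;
    e |= (uint64_t)(limit & 0xFFFF);            /* bits  0-15: limit 0-15  */
    e |= (uint64_t)(base & 0xFFFFFF) << 16;     /* bits 16-39: base 0-23   */
    e |= (uint64_t)access << 40;                /* bits 40-47: access byte */
    e |= (uint64_t)((limit >> 16) & 0xF) << 48; /* bits 48-51: limit 16-19 */
    e |= (uint64_t)(flags & 0xF) << 52;         /* bits 52-55: flags       */
    e |= (uint64_t)((base >> 24) & 0xFF) << 56; /* bits 56-63: base 24-31  */
    return e;
}

/* Flat model: every segment spans 0-4 GiB; only the privilege level differs. */
void build_flat_gdt(uint64_t gdt[5]) {
    gdt[0] = 0;                                /* mandatory null descriptor */
    gdt[1] = gdt_entry(0, 0xFFFFF, 0x9A, 0xC); /* 0x08: kernel code         */
    gdt[2] = gdt_entry(0, 0xFFFFF, 0x92, 0xC); /* 0x10: kernel data         */
    gdt[3] = gdt_entry(0, 0xFFFFF, 0xFA, 0xC); /* 0x18: user code (load 0x1B, RPL 3) */
    gdt[4] = gdt_entry(0, 0xFFFFF, 0xF2, 0xC); /* 0x20: user data (load 0x23, RPL 3) */
}
```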

Brrruh "mov" only works when both registers have the same size.

Could've told me this beforehand, dude.
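When the sizes differ, x86 has dedicated widening moves: `movzx` fills the upper bits with zeros, while `movsx` (and `movsxd` for 32 to 64 bits) copies the sign bit. Also worth knowing: on x86-64, writing a 32-bit register such as EAX implicitly zeroes the upper half of RAX. A C sketch of the two semantics; optimizing compilers for x86-64 typically lower these conversions to `movzx`/`movsx`, though that mapping is typical rather than guaranteed, and the function names are hypothetical:

```c
#include <assert.h>
#include <stdint.h>

/* Zero extension (typically movzx): the byte 0xFF becomes 0x000000FF = 255. */
uint32_t widen_unsigned(uint8_t b) { return b; }

/* Sign extension (typically movsx): 0xFF read as int8_t is -1 and stays -1,
   i.e. 0xFFFFFFFF, because the sign bit is copied into the upper bits. */
int32_t widen_signed(int8_t b) { return b; }

/* Narrowing needs no special instruction: just use the low part of the
   register (e.g. AL out of EAX). */
uint8_t narrow(uint32_t x) { return (uint8_t)x; }
```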

When I get the assignment of debugging my group members' uncommented Java Swing application, I seriously have to untangle that mess for days.

Well, not best experience per se, but most memorable one.

So I am accepted to CS program at the university - happy days!

First lecture of the first day of the first semester in the first year...

...It just had to be that guy. He was famous among the faculty for his strictness, as we later found out.

But, the lecture. It's 8.25 am, I am making my way into the auditorium, and it's filled with freshmen like me, of course. Instead of cheerful chatter I hear literal silence. What the? I catch a glimpse of the blackboard: the professor is there, hard at work writing out some stuff that I can't comprehend. Double-checked the name of the lecture: computer architecture.

8.30 - so it begins, I remember taking a place along the front rows in order to see more clearly. Professor turns to us and just starts the lecture, saying that he'll introduce himself later at the end and there is no time to waste. OK...

And he just dumps the layout of x86 computer architecture and a mixture of basic ASM jargon on us WITHOUT TURNING TO US FOR LIKE 30 MINS while writing things out on the blackboard.

Then he finally turns 180 degrees very quickly, evaluates our expressions (I know mine was "WTF is this, I don't even understand half the words"), sighs, turns back and continues with the lecture.

Anybody wanna collaborate?

I am currently developing a (MASM) assembler-only kernel for i386 targets. My goal is to build a kernel that is made for web applications by design.

https://github.com/wittmaxi/maxos

If anybody wants to help me, feel free to shoot me a message on Telegram! I'm also happy to teach you some ASM :)

The project is mostly a fun project anyway, so no big pressure.

Just finished an Assembly homework... For the first (and hopefully the last) time in my life, I complained about arrays starting from 0.

So for one of my uni courses we're writing arm assembly. That's pretty cool. What's not cool is the shit textbook written by the instructor, the asshat elitists on every single fucking help thread, and the fucking garbage documentation.

But hey, maybe I'm doing something wrong. I mean, after all, you should need to spend 5+ hours to discover where the fuck you should place a label for a god damn binary constant. Oh, and once you've finally got code that'll link, good fuckin luck getting it to load the address of that constant in a register.

At least I have a good explanation for why those guys on the forums are such fucking dicks: Stockholm syndrome.

What is the best source for learning x86 asm and binary exploitation? Got any recommendations for me (books)? I already know godbolt.org. I'd also be interested in optimisation.

hey devs,

Today I stumbled on something that piqued my interest, but I couldn't find an answer on Google:

Intel is throttling MMX instructions on its newer CPUs.

my question is: why?

I haven't found either an answer to that question or even an acknowledgement of that fact yet...

https://youtube.com/watch/...

(the interesting stuff starts at 8:30)