
Search - "i know binary"


*Now that's what I call a Hacker*

MOTHER OF ALL AUTOMATIONS

This seems like a long post, but you will definitely +1 it after reading.

xxx: OK, so, our build engineer has left for another company. The dude was literally living inside the terminal. You know, that type of a guy who loves Vim, creates diagrams in Dot and writes wiki-posts in Markdown... If something - anything - requires more than 90 seconds of his time, he writes a script to automate that.

xxx: So we're sitting here, looking through his, uhm, "legacy"

xxx: You're gonna love this

xxx: smack-my-bitch-up.sh - sends a text message "late at work" to his wife (apparently). Automatically picks reasons from an array of strings, randomly. Runs inside a cron-job. The job fires if there are active SSH-sessions on the server after 9pm with his login.

xxx: kumar-asshole.sh - scans the inbox for emails from "Kumar" (a DBA at our clients). Looks for keywords like "help", "trouble", "sorry" etc. If keywords are found - the script SSHes into the client's server and rolls back the staging database to the latest backup. Then sends a reply "no worries mate, be careful next time".

xxx: hangover.sh - another cron-job that is set to specific dates. Sends automated emails like "not feeling well/gonna work from home" etc. Adds a random "reason" from another predefined array of strings. Fires if there are no interactive sessions on the server at 8:45am.

xxx: (and the oscar goes to) fuckingcoffee.sh - this one waits exactly 17 seconds (!), then opens an SSH session to our coffee-machine (we had no frikin idea the coffee machine is on the network, runs Linux and has SSHD up and running) and sends some weird gibberish to it. Looks binary. Turns out this thing starts brewing a mid-sized half-caf latte and waits another 24 (!) seconds before pouring it into a cup. The timing is exactly how long it takes to walk to the machine from the dude's desk.

xxx: holy sh*t I'm keeping those

Credit: http://bit.ly/1jcTuTT

The bash scripts weren't bogus; you can find his scripts at this GitHub URL:

https://github.com/narkoz/...

EDIT: devRant April Fools joke (2018)

-------------------------

Hey everyone! As some of you have already noticed, @trogus and I made a decision (based on the suggestion of some members of the community) to start transitioning all scores on the app to binary. We have started by making all user scores in our mobile apps and website (if you’re logged in) appear in binary. We think this makes the app more fun while remaining usable at the same time!

Please let us know what you think and happy binary-reading!

Lads, I will be real with you: some of you show absolute contempt for the actual academic study of the field.

A previous rant from another ranter brought up the question of writing a binary search implementation.

Asking a senior in the field of software engineering and computer science such a question should get you a simple answer, depending on the type of job application in question. Especially if you are applying as a SENIOR.

I am tired of this strange self-learner mentality that those who have a degree or a deep grasp of these fundamental concepts are somehow beneath you because you learned to push out a website using the New Boston tutorials on youtube. FOR every field THAT MATTERS a license or degree is held in high regard.

"Oh I didn't go to school, shit is for suckers, but I learned how to chop people up and kinda fix it from some tutorials on youtube" <---- try that for a medical position.

"Nah it's cool, I can fix your brakes, learned how to do it by reading blogs on the internet" <--- maintenance shop

"Sure can write the controller processing code for that Boeing plane! Just got done with a low level tutorial on some websites! what can go wrong!"

(The same goes for military devices which in the past have actually killed mfkers in the U.S)

Just recently a series of people were sent to jail because of a bug in software. Industries NEED to make sure a mfker has aaaall of the bells and whistles needed for running and creating software.

During my masters degree, it fucking FASCINATED me how many mfkers were absolutely completely NEW to the concept of testing code, some of them with years in the field.

And I know what you are thinking "fuck you, I am fucking awesome" <--- I AM SURE YOU BLOODY WELL ARE but we live on a planet with billions of people and millions of them have fallen through the cracks into software related positions as well as complete degrees, the degree at LEAST has a SPECTACULAR barrier to entry during that intro to Algos and DS that a lot of bitches fail.

NOTE: NOT knowing the ABSTRACTIONS over the tools that we use WILL eventually bite you in the ASS because you do not fucking KNOW how these are implemented internally.

Why do you think compiler designers, kernel designers and embedded developers make the BANK they made? Because they don't know memory efficient ways of deploying a product with minimal overhead without proper data structures and algorithmic thinking? NOT EVERYTHING IS SHITTY WEB DEVELOPMENT

SO, if a mfker talks shit about a so called SENIOR for not knowing that the first mamase mamasa bloody simple as shit algorithm THROWN at you in the first 10 pages of an algo and ds book, then y'all should be offended at the mkfer saying that he is a SENIOR, because these SENIORS are the same mfkers that try to at one point in time teach other people.

These SENIORS are the same mfkers that left me a FUCKING HORRIBLE AND USELESS MESS OF SPAGHETTI CODE

Especially to most PHP developers (my main area) y'all would have been well motherfucking served in learning how not to forLoop the fuck out of tables consisting of over 50k interconnected records, WHAT THE FUCK

"LeaRniNG tHiS iS noT neeDed!!" yes IT fucking IS

being able to code a binary search (in that example) from scratch lets me know fucking EXACTLY how well your thought process is when facing a hard challenge, knowing the basemotherfucking case of a LinkedList will damn well make you understand WHAT is going on with your abstractions as to not fucking violate memory constraints, this-shit-is-important.
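For reference, a minimal sketch of the binary search being argued about, written in Python. The function name and return convention (index, or -1 when absent) are illustrative choices, not anything the rant specifies.

```python
def binary_search(items, target):
    """Return the index of target in the sorted list items, or -1 if absent."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2          # middle of the remaining window
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1              # target can only be in the right half
        else:
            hi = mid - 1              # target can only be in the left half
    return -1                         # window is empty: not found
```

The window halves on every pass, so a sorted list of n items needs at most about log2(n) + 1 comparisons.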

So, will your royal majesties at least for the sake of completeness look into a couple of very well made youtube or book tutorials concerning the topic?

You can code an entire website, fine as shit, you will get tested by my ass in terms of security and best practices, run these questions now, and it had very motherfucking well better be as efficient as I think it should be (I HIRE, NOT YOU, or your fucking blog posts concerning how much MY degree was not needed, oh and btw, MY degree is what made sure I was able to make SUCH decisions)

This will make a loooooooot of mfkers salty, don't worry, I will still accept you as an interview candidate, but if you think you are good enough without a degree, or better than me (has happened, told that to my face by a candidate) then get fucking ready to receive a question concerning: BASIC FUCKING COMPUTER SCIENCE TOPICS

* gays away into the night

I'm 20, and I consider myself to be as junior as they come. I only started programming seriously in June 2016, and since then, I've been doing mainly Android work, and making my own servers and backends (using AWS/Firebase and stuff).

For the first time in my life, I was approached by a recruiter for a company on LinkedIn. They "stumbled upon" my Github profile and wanted to see if I was interested in an internship opportunity. This company is an early stage start up, by that I mean a dude with an idea calling himself the CEO and a guy who "runs a tech blog" and only knows college level C programming (explanation follows).

So they want me to make the app for their startup, and for that, I was first asked to solve a couple problems to prove my competence and a "technical interview" followed.

They gave me 3 questions, all textbook, GCD of 2 numbers, binary search and Adding an element to the linked List, code to be written on a piece of paper. As the position was that of an Android Developer, I assumed that Java should be the language of choice. Assumed because when I asked, the 'tech blogger' said, yeah whatever.

But wait, that ain't all, as soon as I was done, Mr. Blogger threw a fit, saying I shouldn't assume and that I must write it in C. I kept my cool (I'm not the most patient person), and wrote the whole thing in C.

He read it, and asked me what I'd written and then told me how wrong I was to write 2 extra lines instead of recursion for GCD. I explained that with numbers large enough, we run the risk of getting a stack overflow and it's best to apply a non-recursive solution if possible. He just heard stackoverflow and accused me of cheating. I should have left right then, but I don't know why, I apologized and again, in detail explained what was happening to this fucktard. Once this was done, he asked me how, if I had to, I'd use this exact code in my Android App. I told him that I'd rather write this in Java/Kotlin since those are the languages native to Android apps. I also said that I'd export these as a Library and use JNI for the task. (I don't actually know how, I figured I can study if I have to).
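The loop-versus-recursion point can be sketched like this, in Python rather than the C used in the interview. The function name is made up for illustration. Worth noting: with the modulo form of Euclid's algorithm the number of steps grows only logarithmically with the inputs, so a recursive version rarely overflows in practice, but the loop removes the question entirely.

```python
def gcd_iterative(a, b):
    """Greatest common divisor via Euclid's algorithm, without recursion."""
    while b:
        # Replace (a, b) with (b, a mod b) until the remainder is zero.
        a, b = b, a % b
    return abs(a)
```

The "2 extra lines" are the loop and the tuple swap; no call stack grows no matter how large the inputs are.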

Here's his reply, "WTF! We don't want to make the app in Java, we will use C (Yeh, not C++, C). and Don't use these fancy TOOLS like JNI or Kotlin in front of me, make a proper application."

By this I was clear that this guy is not fit to be technical lead and that I should leave. I said, "Sir, I don't know how, if even possible, can we make an Android App purely in C. I am sorry, but this job is not for me".

I got up and was about to leave the room, when he said, "Yeah okay, I was just testing you".

Yeah right, the guy's face looked like a howling monkey when I said Library for C, and it has been easier for me to explain code to my 10 year old cousin than this dumbfuck.

He then proceeded to ask me about my availability, and I said that I can at max do 15-20 hours a week since my college schedule is pretty tight. He asked me to get him a prototype in 2 months and also offered me a full time job after I graduate. (That'd be 2 years from now). I said thank you for the offer, but I am still not sure if I am the right person for this job.

He then said, "Oh you will be when I tell you your monthly stipend."

I stopped for a second, because, money.

And then he proceeded to say 2 words which made me walk out without saying a single word.

"One Thousand".

I live in India, 1000 INR translates to roughly $15. I made 25 times that by doing nothing more than add a web view to an activity and render a company's responsive website in it so it looks like an app.

If this wasn't enough, the recruiter later had the audacity to blame me for it and tell me how lucky I am to even get an offer "so good".

Fuck inexperienced assholes trying shit they don't understand and thinking that the other guy is shitsworth.

Me talking to a recruiter (even though I am not looking for a job)

Me: If I walk into an interview, and they ask me to reverse a binary tree for a frontend React or Vue position or something along those lines, I will end the call and/or walk away from it.

Him: I get similar feelings from other programmers, I don't quite understand why the notion is as common

Me: Because it is fucking useless, it serves no purpose to a dev to know about that when building frontends with react, I link my github profile, in which they can find advanced backend-frontend related projects, compiler and interpreter projects, plus the title I currently have at my workplace and a bunch of other shit, I am not interviewing for a teaching position at an institute, but an actual place of work, for which if they want to know about DS and A they can review my profile which has a repo of DS and A in about 5 different languages including plain C++. I do not need to be offended by such notions since they serve no purpose on the frontend, and neither do other devs. If anything it should be a casual conversation during the interview, not a basis for employment.

Recruiter: .........thank you for explaining this to me, I am sure I can bring it up to the agencies doing the reviews and interviews. Are you still interested?

Me: Are they going to give me a coding assignment for a project or a bs question like what I mentioned?

Him: I don't know

Me: then I am not interested
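For context, the "reverse a binary tree" question is commonly taken to mean swapping every node's left and right children. A minimal Python sketch under that assumption follows; the `Node` class and `invert` name are hypothetical, invented for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def invert(node: Optional[Node]) -> Optional[Node]:
    """Mirror the tree in place by swapping left and right at every node."""
    if node is None:
        return None
    # Both subtrees are inverted first, then assigned to the opposite side.
    node.left, node.right = invert(node.right), invert(node.left)
    return node
```

That is the whole exercise, which is arguably part of why it says little about frontend work either way.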

( rant || !rant ) && idiots

console.info( this.isLongRant );

console.warn( "contains strong language and wordpress" );

A friend of mine sent two of his "friends" to me because they wanted me to build a website for their new business (~idea).

So I had a meeting with them.

First of all they wanted me to have a look on the current (work in progress) site.

First impression of the frontend:

OH BOY!?

Well, imagine this:

- a 90s/2k background (dotted/pixelated cloud in baby-blueish as background with repeat)

- the logo was made by the sister of one of the guys, it wasn't too bad, but badly aligned, asymmetrical

- some obvious $offTheShelfShopPlugin with $randomStockContent

- the fucking slider had a small loading bar to indicate changes, it appears like a hyperanxious child with ADHD

- below the logo TWO FUCKING GIF SPINNERS to indicate nothing else but how fucking brain amputated these two dudes are, including the dev who is responsible for adding this. (to this point, they only told me, that a webagency did the setup and some basic work on the site, more on that later)

- no styling concept at all, random fonts and stuff everywhere including default styles of the shop plugin.

- FUUUUUCK WTF will come further in this meeting?

After seeing a pile of binary puke fisted out of a 60yo nonstop-intern who changed his jobtitle from dildo-traveling-salesman to fullstack-frontend-dev by writing it on a post-it-note, I imagined, there has to be something wrong with the backend as well.

Boy was I right!

Yes, you guessed it! A random Wordpress adminpanel login appeared! OH NO....

I really wanted to leave this meeting immediately.

I was not able to hold my disgust back and I told them right in their face, what a shit pile of nutty squirrel turds this current page is. And that Wordpress is not the right choice at all for a shop.

Then came the best part: They basically told me, that they terminated the previous contract with the webagency because they were too expensive (they are cheap, compared to others, I know people who know their prices) and that they wanted to create A BIG MARKETPLACE with multiple resellers who can have their shop in their website. Something similar to FUCKING AMAZON. ON FUCKING WORDPRESS!?!?!?

They even asked me if I wanted to be their partner & developer and that they can't pay much at the moment until the marketplace starts to grow.

I more or less told them to go fuck themselves with a rusty pitchfork.

Some fucker installed a keylogger on my Ubuntu laptop at home and registered it as a systemd service. According to Wireshark, it's sending each keystroke to a server in France using IRC. Tried accessing the server but the moron shut it down immediately. It's the last time I'm fucking installing code from prebuilt binaries. If I can't build it from source then fuck off you sniffing cunt. I was about to log into a database from that machine.

UPDATE: I found the actual file sending the keystrokes but it's binary. Anyone know how I can decode a binary file?
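One low-risk first step for a question like this is to pull the printable strings out of the file without ever executing it, much like the `strings` command-line tool does. A minimal Python sketch, assuming plain ASCII strings; the function name and the 4-character minimum are arbitrary choices for illustration.

```python
import re


def printable_strings(data: bytes, min_len: int = 4):
    """Return runs of printable ASCII at least min_len bytes long."""
    # 0x20-0x7e covers space through tilde, i.e. printable ASCII.
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [match.group().decode("ascii") for match in pattern.finditer(data)]


# Usage on a file read in binary mode (the path here is a placeholder):
# with open("suspicious.bin", "rb") as fh:
#     for text in printable_strings(fh.read()):
#         print(text)
```

Hostnames, IRC commands and file paths embedded in a binary often show up this way. Real disassembly needs dedicated tooling, which this sketch does not attempt.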

Boy, this Monday morning was crazy...

At 7 am, as I just left my flat, I received an ultra urgent email from the CEO of a company we exchanged the fileserver for, that the network shares are not available.

I instantly turned around, went back to my flat, fired up my HAL9000 supercomputer and connected remotely.

4 levels deep (PC => VPN => Remotedesktop => vSphere Client => VM) I felt like I was in the movie Inception and tried to figure out what happened.

I don't know why, but in the logs it said that the fileserver VM was down since 4am. Holy sithlord... why?

After restarting and the usual problems with Windows Network Names, everything was back online.

My special thanks go to Mr. Coffee, who is always a great companion during Monday mornings, Mr. VPN, the great fellow who invented the VPN and last but not least "The Internet" for connecting me to a world of binary, where every idea finds a listener and where Ajit Pai can be memed without consequences.

FUCK YOU Ajit. Harlem Shake is so 2013.

My System Analysis professor wants to fail me because I refuse to store PDF files in the database in my project.

He wants me to store THE WHOLE BINARY FILE in the database instead of on the filesystem.

When I tried to explain why that would be bad, he interrupted me and began the "you think you know more than I do? I've been teaching this for X years" speech.

How do such people become professors?

I love Linux, but its community can be so full of incompetent assholes..

Just now I asked in Freenode ##linux how to get the process ID of my current running process in bash. I got my answer - it's a shell built-in called "$$".

Then people start to nitpick some more - why do you need it? How is that different from an exit? - to which my response was.. well I know the whole idea behind exit codes, and I'd use it whenever possible, in all defined behavior that allows my program to terminate itself whenever it can. This pidfile however would be used to exit itself and provide diagnostic information whenever the program enters undefined behavior - a segfault in C language. Scenarios in which I don't have full control over the script's behavior anymore, such as the system entering an unworkable state where the system stalled, still got some binaries in RAM but the rootfs got unwritable, such as now - very helpfully, thanks HP! - when my laptop likely overheated and shat itself. I issued sudo reboot into it, but even that wouldn't issue properly anymore due to the /sbin/poweroff binary becoming inaccessible too. I had to issue a hard power cycle.. one of the few times in which I'm thankful to HP for actually causing shit like this, lol.

Point is, that undefined behavior is what I'm trying to mitigate against. I certainly can't let any files other than diagnostics remain in nonvolatile storage like that, especially when their state should be predictable in order to ensure good operation (like files expressing whether the script is already running or not, i.e. lock files).
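The pidfile-as-lock idea can be sketched as follows, in Python instead of bash (where `$$` gives the same number as `os.getpid()` here). The function name and the path are hypothetical. Note that the cleanup hook only runs on a normal interpreter exit, so after a SIGKILL or a hard power cycle the stale file stays behind, which is exactly the failure mode described above.

```python
import atexit
import os


def write_pidfile(path):
    """Create path exclusively, store our PID in it, remove it on clean exit."""
    pid = os.getpid()
    # Mode "x" raises FileExistsError if the file is already there,
    # so an existing pidfile doubles as a "script already running" lock.
    with open(path, "x") as fh:
        fh.write(f"{pid}\n")

    def cleanup():
        if os.path.exists(path):
            os.remove(path)

    atexit.register(cleanup)
    return pid
```

A caller that hits `FileExistsError` can read the stored PID and check whether that process is still alive before deciding the lock is stale.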

Back to that IRC chat. Aside from the answer, I got ridicule from people who probably don't even know how to properly compile a kernel. Ubuntu users, overconfident scum. Sometimes I feel like I should ask questions in channels like #archlinux only, where such incompetency is ridiculed on its own.

Yesterday (or the day before that depending on your timezone and day-night schedule - this Friday) my OnePlus 6T arrived. After only 2 days of time between placing the order and actually getting the phone, quite impressive!

The DHL guy asked me upon receipt - is it the OnePlus 6T? - Yes it is!! - "An amazing device it is!", he said. And honestly.. he couldn't be more right.

I might be a bit biased on this because after all I did just spend €630 on this phone. But it feels so snappy, high quality, the 8GB of RAM is just.. it blows my mind. But I'm sure that the other reviews did this sort of jazz already.

The things that set this phone apart for me though were the following.

When I get a new phone or tablet, usually the first thing I do is rooting it. This one was no different, about an hour after receipt it was successfully rooted and loaded with Magisk. Currently I'm still in the phase of "getting to know the phone", wherein fuckups are usual. This time again being no different - I removed some apps and apparently did something to it that the search engines - both Google and DuckDuckGo - didn't quite like, as both of them would crash upon application launch. Me in full panic mode of course, desperately trying to find the stock ROM (which doesn't seem to be present in its usual form) or a new set of GApps (which didn't resolve the issue). OnePlus does seem to offer its OTA updates in zip archives though. So I downloaded its latest update (same as what was on the device) and applied it.

That's when the nerdgasm happened.

The "update" was simply a matter of going into the settings, tapping this and that and applying the update. No recovery, no unrooting, no nothing. The update just went like that despite the phone being rooted and just having had TWRP flashed to it. I always wanted this sort of thing, which even the Nexus couldn't offer - having the cake and eating it too. Being able to root the device and muck around with it while still being able to update the device timely without too many hurdles. This fucking thing does it!!!

That is to say, after my initial nerdgasm I did find that it bulldozed over my su binary (effectively unrooting the thing), custom emoji I've set (iOS 12 because fuck Google's most recent emoji set) and some other things. But those are easy to install back, much more so than it would've been to download a whole Android release and dirty flash it, as it was on the Nexus.

Other than that, battery life, dash charging (edit: on that topic, it does remain cool like a cucumber despite getting 15-20W of power jammed into it, quite impressive!), snappiness, the usual jazz.. eh, as I said earlier that's the usual reviewer stuff. But this feature of being able to upgrade the phone while it's modified, that's something which seems to be severely underrated by those.

Oh and during kernel builds, I couldn't quite get the source to work - probably due to my lack of experience with builds of Android kernels - but I did find that this phone actually exposes its kernel config through /proc/config.gz as it should. None of my MediaTek devices do this, so that's something that I found really appealing. Always nice to see when a manufacturer exposes this information to give you a stock sort of config that you can rest assured will work configuration-wise. And it allows you to see what the stock kernel is actually built with, which again is really nice. I quite like this! It really encourages further development.
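Reading that exposed config can be sketched in a few lines of Python. The file only exists when the kernel was built with `CONFIG_IKCONFIG_PROC`; the function names below are made up for illustration, and only lines of the form `CONFIG_X=value` are kept.

```python
import gzip


def parse_kernel_config(text):
    """Turn kernel .config text into a dict of the options that are set."""
    options = {}
    for line in text.splitlines():
        line = line.strip()
        # Unset options appear as comments ("# CONFIG_FOO is not set").
        if line.startswith("CONFIG_") and "=" in line:
            key, _, value = line.partition("=")
            options[key] = value.strip('"')
    return options


def read_kernel_config(path="/proc/config.gz"):
    """Decompress and parse the running kernel's config."""
    with gzip.open(path, "rt") as fh:
        return parse_kernel_config(fh.read())
```

On a device that exposes the file, `read_kernel_config().get("CONFIG_LOCALVERSION")` would show the stock local version string, if one is set.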

Fuck Apple and its review system

So, this started in december. We wanted to publish an app, after years of development.

Submit to review, and passes on the first try. Well, what do you know. We are on manual release option, so we can release together with the android counterpart. Well yes, but someone notices that the app name is not what was agreed (App Name instead of AppName). Okay, should be easy, submit the same app, just the name changed. If it passed once, it will pass again, right? HAH

Rejected, because the description, why we use the device’s camera is too general. Well... its the purpose of the app... but whatever, i read the guidelines, okay, its actually documented with examples. BUT THEN WHY THE FUCK COULDNT YOU SAY THAT ON THE FIRST UPLOAD?

Whatever, fix it, new version, accepted, ready to release just in time.

It does indeed roll out, but of course, we notice that the app has a giant issue, but only on specific phones. None of our test phones had this problem, but those who have it essentially cannot use our program. Nasty as it is, the fix is really easy, done in 5 minutes. Upload it asap, literally nothing changed from the user's point of view, except now it doesnt crash on said devices. Meanwhile 1 star reviews are arriving from these users - of course with every right. Apple should allow this patch quickly, right? HAH

THE REAL BULLSHIT COMES NOW

With only config files changed, the same binary uploaded we get rejected? What now? Lets read it. “Metadata rejected, no need to upload new binary”.... oh fine only the store page is wrong? Easy. Read the message, what went wrong. “Referencing third party content is not permitted on the app store” meaning that no android test device should be shown. Fine, your rules. They even send a picture of the offending element. BUT ITS NOT EVEN ON THE STORE. THATS A SCREENSHOT OF THE APP. HOW IS THAT METADATA? I ask about this, and i get a reply, from either a bot, or a person who cant speak or read english, and only pasted a sample answer, repeating the previous message. WTF. Fine, i guess you are dumb, but since they stop replying to our queries, do the only sensible thing, re-record the offending tutorial video that actually contained an android device. This is about 2 weeks, after the first try to apply a simple patch to a broken app. And still, how did it pass the review 2 times?

Whatever, reupload again, play the waiting game for a week, when the promised average wait time is 2 days, they hit us with a message, that they want to know what patent we use in our apps core functionality. WTF WHY NOW? It didnt bother you for a month, you let it release to production and now you delay a simple patch for this? We send them what they know. Aaaaand they reply: sorry we need more time to review your app. FUUUUUUCKKK YOUUU. You are reviewing a PATCH with close to zero functional change!!! Then, this shit goes on, every week we ask about an ETA, always asking for patience... at the end it took another 3 weeks... so december 15 to jan 21 in total...

FOR. A. SINGLE. FUCKING. PATCH

Bottom line is what is infuriating, apple cares that there is an android device in the tutorial video, but they dont care that a significant percentage of our users simply cannot use the app.

Im done

FUCK LINUX

now that I have your attention, and you’re probably angry, too, please, even if you don’t read this rant, never use code.org again. now, onto the rant…

god dammit, code.org sucks. I mean, anyone who created it or associates with it should, well, be considered a terrorist. they’re bombing students' futures in computer science with false, useless, bullshit information. not to mention, their sponsors like bill gates, mark zuckerberg, and other rich asses, talk in a video about some boring ass shit that is hard to understand for anyone who doesn’t program, and not to mention, they use a fucking five dollar microphone. ear rape. even if you look at a textual version of it, then read the information on it, it’s practically useless because it's so terribly explained, and also useless. ironically enough, they focus on their animations more than their actual explanations, or their students for that matter. the fact that we had to encode a picture in binary, made me about 50% dumber, give or take a 0 or 1. then, we had to do it in hex, which wasn’t really much better, although more realistic I suppose. what's really the most depressing thing about this class is its application in the real world. I've learnt nothing whatsoever that will help me in the real world, or in computer science. I suppose there's two things that may be useful (that I already knew): hex, and that TCP retransmits lost packets. that's it. those two things. five seconds worth of knowledge from the first quarter of the year. the ideas just make me want to throw up. teaching the main ideas of computer science without actually teaching it? one of the teachers (probably a good one) enrolled her students in an online programming course just so they could understand, because the explanations are just so terrible. this is the only [high school] computer science course offered by code.org, and I signed up because it's an AP computer science class (tried to get into AP Java, the day I was supposed to take the test to get into an upper level class, I was told it didn't count as a tech credit). seriously, fuck code.org. it makes you dumber.

their 'app lab' environment is pointless, just like everything else. the app lab is basically where you have a set of commands and have to make a dog bark() or a storm trooper miss() [and that's hell when they haven't introduced while loops yet]. the app lab is literally code.org going out of their way to make everything that their students are learning pointless in the real world. seriously, why can't we just use a <canvas> like an ACTUAL PROGRAMMER would do if they were to make a browser game, not use an app engine so slow it would be faster to update windows and android studio each time I run an 'app' in their 'environment'. their excuse is that the skills "transfer over" to the real world. BITCH! IF I DIDN'T KNOW JAVA, AND I WANTED TO MAKE A GAME IN JAVA, I'M NOT GOING TO LEARN PYTHON, THEN "TRANSFER" THE SKILLS I LEARNT, I'M GOING TO LEARN FUCKING JAVA. AND THAT GOES FOR EVERY OTHER LANGUAGE, PROJECT, ETC.

I'm begging you code.org, stop, get help.

BEEP-BEEP

Every now and then, periodically

BEEP-BEEP

and then quiet. Get into working mode, concentrate again, a...

BEEP-BEEP

wtf is that.. Took down my smoke alarm, prolly the battery is getting low. Put it next to me, waiting...

BEEP-BEEP

nope, it's gotta be smth else. How can I hunt it down when I can't even tell which direction it's coming from?!? I know. Play smart. Measure the period.

BEEP-BEEP

it's been 3 minutes 5 seconds since the last BEEP-fucking-BEEP. Now I can plan my time ahead. Go to one room, wait fo..

BEEP-BEEP

Nope, it's not there. Carry on with all the other rooms, waiting for that annoying beeping.

BEEP-BEEP

I think I at least know the room. Good, narrowed it down.

BEEP-BEEP

this is getting really annoying. I've been playing with this nonsense for an hour already. Alright, it's in my kid's room. The PC is off, toys are off. What could it be....? Binary search the f

BEEP-BEEP

uck out of it! aight, I first try to identify from which part of the room it's coming from. Stand in the middle and tu

BEEP-BEEP

ahh, right, it's behind me then. Fine. That's the PC corner. But it's off, it can't be making sounds. Esp when it has no speakers plugged in - it's got only Bluetooth speakers which haven't been turned on for what, a year already? but then w

BEEP-BEEP

hat could it be... Sounds like it's indeed coming from the PC corner. Checking all the LEDs -- all are off, nothing's turned on. Move the speakers away, try digging around to see if the kiddo didn't leave his toys behind the

BEEP-BEEP

PC. Wait, the sound has moved behind me... And I've only moved my BT speakers. Which are turned off. That's odd... could it be? Put one to one ear and another one to another and wait for the remaining 15 seconds

BEEP-(you are a fucking idiot)-BEEP

whispers in my left ear.

Turns out, some BT speakers can make low-battery sounds even when they are turned off.

Interview:

Candidate claims being seasoned "senior".

Him: i don't know how to solve this

Me: you have to use binary search

Him: ahhaaa

Me: do you know binary search?

Him: yes

Me: can you please explain binary search?

Him: eghm, hmm, sorry I can't

brain: ABSTRACTION ABSTRACTION ABSTRACTION too much ABSTRACTION!

me: jeez calm down a lil i just deployed a boilerplate ember web app with cli tools with next to nothing amount of 'my' code.

b: YES U SUCKER THAT'S WHAT WENT WRONG U DON'T KNOW SHIT ABOUT THE LIL STUFF THAT HAPPENS BEHIND THE SCENES THE FUCK MAN U CALL YOURSELF A CS STUDENT YOU CAN'T EVEN WRITE A COMPILER YET

m: sooo remember when we were studying logic gates and binary conversions and you sigkilled all my threads cuz it was 'boring'?

b: why yes why do you ask

m: WELL that's where we'll end up again if you don't stop nagging me about going down. Trust me, I KNOW how to starve you and you'll beg me to use Python again. You start making advanced data structures in C and the next thing you know you're writing assembly code 'just for fun'.

I have a hackathon coming right up and I have to use a framework or my team loses the advantage. Are we good?

b: well if you put it that way...BUT AFTER THAT YOU'RE TAKING ME TO AN ALGORITHM SESSION

m: *eerily stares at the dusty book in the corner*

you... have a deal

-

I don't know if you guys caught this but everyone's binary scores are negative. The first bit is 1.

Yay
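For anyone who didn't catch why a leading 1 reads as negative: a minimal sketch, assuming the scores were shown as fixed-width two's complement (the rant implies this but doesn't say so). The function name `from_twos_complement` is made up for illustration.

```python
def from_twos_complement(bits: str) -> int:
    """Interpret a bit string as a signed two's complement integer."""
    value = int(bits, 2)
    if bits[0] == "1":
        # Sign bit is set, so the pattern stands for value - 2**width.
        value -= 1 << len(bits)
    return value


print(from_twos_complement("00000111"))  # 7
print(from_twos_complement("11111001"))  # -7
```

Same width, same low bits pattern family, and the only thing deciding the sign is that first bit.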

-

In my previous rant about IPv6 (https://devrant.com/rants/2184688 if you're interested) I got a lot of very valuable insights in the comments and I figured that I might as well summarize what I've learned from them.

So, there's 128 bits of IP space to go around in IPv6, where 64 bits are assigned to the internet, and 64 bits to the private network of end users. Private as in, behind a router of some kind, equivalent to the bogon address spaces in IPv4. Which is nice, it ensures that everyone has the same address space to play with.. but it should've been (in my opinion) differently assigned. The internet is orders of magnitude larger than private networks. Most SOHO networks only have a handful of devices in them that need addressing. The internet on the other hand has, well, billions of devices in it. As mentioned before I doubt that this total number will be more than a multiple of the total world population. Not many people or companies use more than a few public IP addresses (again, what's inside the SOHO networks is separate from that). Consider this the equivalent of the amount of public IP's you currently control. In my case that would be 4, one for my home network and 3 for the internet-facing servers I own.
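To make the 64/64 split concrete, here is a small sketch using Python's standard `ipaddress` module. The prefix `2001:db8::/32` is the reserved documentation range and the host address is invented; neither comes from the original rant.

```python
import ipaddress

# Example /64 inside the documentation prefix (an assumption for illustration).
lan = ipaddress.IPv6Network("2001:db8:0:1::/64")
host = ipaddress.IPv6Address("2001:db8:0:1::42")

network_bits = lan.prefixlen                    # bits routed on the internet side
host_bits = lan.max_prefixlen - lan.prefixlen   # bits left for the local network

print(network_bits, host_bits)        # 64 64
print(lan.num_addresses == 2 ** 64)   # True
print(host in lan)                    # True

# Split one address into its two 64-bit halves.
as_int = int(host)
prefix_half = as_int >> 64
interface_half = as_int & ((1 << 64) - 1)
print(hex(prefix_half), hex(interface_half))  # 0x20010db800000001 0x42
```

A single /64 holds 2**64 addresses, which is the "nowhere close to needing it" part for a home network with a handful of devices.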

There's various ways in which overall network complexity is reduced in IPv6. This includes IPSec which is now part of the protocol suite and thus no longer an extension. Standardizing this is a good thing, and honestly I'm surprised that this wasn't the case before.

Many people seem to oppose the way IPv6 addresses are presented; hexadecimal is not something many people use every day. Personally I've grown quite fond of the decimal representation of IPv4. Then again, there is a binary conversion involved in classless IPv4, and hexadecimal makes this conversion easier.
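A quick sketch of that conversion point, again with the standard `ipaddress` module. The helper name `mask_views` and the example network are assumptions for illustration. Every hex digit is exactly 4 bits, so a /20 mask is five `f` digits followed by zeros, while the dotted-decimal form needs some mental arithmetic.

```python
import ipaddress


def mask_views(cidr: str) -> tuple[str, str, str]:
    """Return the netmask of a CIDR block as dotted decimal, binary and hex."""
    net = ipaddress.IPv4Network(cidr)
    mask = int(net.netmask)
    return str(net.netmask), f"{mask:032b}", f"{mask:08x}"


decimal, binary, hexa = mask_views("192.168.16.0/20")
print(decimal)  # 255.255.240.0
print(binary)   # 11111111111111111111000000000000
print(hexa)     # fffff000
```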

There seems to be opposition to memorizing IPv6 addresses, for which DNS can be used. I agree, I use this for my IPv4 network already. Makes life easier when you can just address devices by a domain name. For any developers out there with no experience with administration that think that this is bullshit - imagine having to remember the IP address of Facebook, Google, Stack Overflow and every other website you visit. Add to the list however many devices you want to be present in the imaginary network. For me right now that's between 20 and 30 hosts, and gradually increasing. Scalability can be a bitch.

Any other things.. Oh yeah. The average number of devices in a SOHO network is not quite 1 anymore - there are currently about half a dozen devices in a home network that need to be addressed. This number increases as more devices become smart devices. That said of course, it's nowhere close to needing 64 bits and will likely never need it. Again, for any devs that think that this is bullshit - prove me wrong. I happen to know in one particular instance that they have centralized all their resources into a single PC. This seems to be common with developers and I think it's normal. But it also reduces the chances to see what networks with many devices in them are like. Again, scalability can be a bitch.

Thanks a lot everyone for your comments on the matter, I've learned a lot and really appreciate it. Do check out the previous rant and particularly the comments on it if you're interested. See ya!

-

I am a firmware developer with 4 years experience. C and sometimes assembly is my bread and butter.

Like 2 years ago, I was really interested to make a switch to application development. Got referred by my friend to her startup.

But I was a bit rusty with my data structures, high level languages and interpersonal skills.

The first question was to find the number of occurrences of each word in a paragraph. The language choice was Java. But I was allowed to use C++ since it was the closest relative to Java that I knew.

And I started implementing a binary search tree from scratch and started inserting each tokenised word into it, wrote a traversal algorithm.

The interviewer, luckily, was a patient guy. After I completed my whole mess, he asked whether it was possible to do this in a slightly better way, with constant-time access and without traversal.

I said yes, we can with a hash table, but I don't know how to implement one. He replied, "I don't expect you to implement the hash table, but to see you use it." I asked him if I was allowed to use the standard library, to which he said of course.

*facepalm*.

Finally I understood his expectation, referred to cppreference.com and used an unordered_map.
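For comparison, a rough sketch of the hash-table version. The interview answer used C++'s `unordered_map`; this is a Python stand-in where `collections.Counter` (a `dict` subclass, so also a hash table) plays the same role. The tokenising regex and the sample sentence are assumptions, not what was asked in the interview.

```python
import re
from collections import Counter


def word_counts(paragraph: str) -> Counter:
    """Count occurrences of each word using a hash table (average O(1) per update)."""
    words = re.findall(r"[a-z']+", paragraph.lower())
    return Counter(words)


counts = word_counts("The cat saw the other cat. The end.")
print(counts["the"])  # 3
print(counts["cat"])  # 2
```

No tree, no traversal: each word is one hashed lookup and increment, which is the "constant time access" the interviewer was hinting at.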

Later there were some questions on databases, which I tried my best to answer. And I frankly replied that I am not comfortable with JS frameworks as of now. Got rejected.

So the mistake is that I never asked basic questions like what time complexity was expected or whether I was allowed to use the standard library, didn't spend some extra time studying the stuff needed for the domain switch and, most importantly, I panicked.

-

Me in school: Math? When do I need to know those details? I can look them up and just code it.

Me in high school: Computer science is way too math-y. I want to code!

Me coding php: Just make it work.

Me coding typescript: Just make it work.

Me coding scala: Just make it ... what ... how do I make it work!?!

Me asking stackoverflow: How do I do X in scala, with some functional programming stuff in mind, in order to keep immutability?

Somebody way smarter than I: "In scalaz, a function A => A is called an endomorphism and is a Monoid whose associative binary operation is function composition and whose identity is the identity function"

Me now: Fuck my old arrogant self.

-

You wanna know what the fuck we did in our goddamn code.org class today, wait no, the last whole fucking week. YES OR NO QUESTIONS. I GET BINARY IS FUCKING 0'S AND 1'S. FOR GOD SAKES I KNOW BINARY. I EVEN KNOW FUCKING TERNARY. AND. YOU KNOW WHAT TEACHER? EVERYONE ELSE COULD LEARN BINARY IN FIVE GODDAMN MINUTES. "Is code.org worthy of being kicked in the ass and tied up on a railroad when the train's coming?" is a perfect binary question. This whole fucking class I feel like I'm in an English class for five year olds in Spain. HEY TEACHER I DON'T CARE IF BILL GATES OR MARK SUCKERBURG OR BARACK OBAMA OR GODDAMN CHRIS BOSH SUPPORTS IT. IT'S FOR THEIR FUCKING REPUTATION. PEOPLE WITH HALF A BRAIN KNOW THESE PEOPLE DON'T GIVE A FUCK. THEY EACH HAVE HUNDREDS OF MILLIONS OR EVEN BILLIONS OF DOLLARS, BUT THEY ALL CHOSE TO USE A FIVE DOLLAR MIC JUST TO FUCK WITH US. EVERY TIME I WALK IN THAT CLASS I FEEL DEGRADED LIKE I'VE BEEN PUT BACK IN PRESCHOOL. THANK YOU TEACHER, I ALWAYS WANTED TO LEARN BINARY TO MAKE MY FUCKING SIMPLE JAVASCRIPT APP AS MY FINAL PROJECT FOR THE REST OF THE YEAR.

-

Want to make someone's life a misery? Here's how.

Don't base your tech stack on any prior knowledge or what's relevant to the problem.

Instead design it around all the latest trends and badges you want to put on your resume because they're frequent key words on job postings.

Once your data goes in, you'll never get it out again. At best you'll be teased with little crumbs of data but never the whole.

I know, here's a genius idea, instead of putting data into a normal database then using a cache, let's put it all into the cache and by the way it's a volatile cache.

Here's an idea. For something as simple as a single log let's make it use a queue that goes into a queue that goes into another queue that goes into another queue all of which are black boxes. No rhyme or reason, queues are all the rage.

Have you tried: Let's use a new fangled tangle, trust me it's safe, INSERT BIG NAME HERE uses it.

Finally it all gets flushed down into this subterranean cunt of a sewerage system and good luck getting it all out again. It's like hell except it's all shitty instead of all fiery.

All I want is to export one table, a simple log table with a few GB to CSV or heck whatever generic format it supports, that's it.

So I run the export table to file command and off it goes, only for timeout commands to start piling up less than a minute later, until it aborts. WTF. So then I set the most obvious timeout setting in the client, no change, then another timeout setting on the client, no change, then I try to put it in the client configuration file, no change, then I set the timeout on the export query, no change, then finally I bump the timeouts in the server config, no change, then I find someone has downloaded it from both tucows and apt, but they're using the tucows version so its real config is in /dev/database.xml (don't even ask). I increase that from seconds to a minute, it's still timing out after a minute.

In the end I have to make my own and this involves working out how to parse non-standard binary formatted data structures. It's the umpteenth time I have had to do this.

These aren't some no name solutions and it really terrifies me. All this is doing is taking some access logs, storing them in one place then indexing by timestamp. These things are all meant to be blazing fast but grep is often faster. How the hell is such a trivial thing turned into a series of one nightmare after another? Things that should take a few minutes take days of screwing around. I don't have access logs any more because I can't access them anymore.

The terror of this isn't that it's so awful, it's that all the little kiddies doing all this jazz for the first time and using all these shit wipe buzzword driven approaches have no fucking clue it's not meant to be this difficult. I'm replacing entire tens of thousands to million line enterprise systems with a few hundred lines of code that's faster, more reliable and better in virtually every measurable way time and time again.

This is constant. It's not one offender, it's not one project, it's not one company, it's not one developer, it's the industry standard. It's all over open source software and all over dev shops. Everything is exponentially becoming more bloated and difficult than it needs to be. I'm seeing people pull up a hundred cloud instances for things that'll be happy at home with a few minutes to a week's optimisation efforts. Queries that are N*N would only take a few minutes to turn into LOG(N), but instead people are renting out a fucking off huge ass SQL cluster that not only costs gobs of money but takes a ton of time maintaining and configuring, which isn't going to be done right either.

I think most people are bullshitting when they say they have impostor syndrome but when the trend in technology is to make every fucking little trivial thing a thousand times more complex than it has to be I can see how they'd feel that way. There's so bloody much you need to do that you don't need to do these days that you either can't get anything done right or the smallest thing takes an age.

I have no idea why some people put up with some of these appliances. If you bought a dish washer that made washing dishes even harder than it was before you'd return it to the store.

Every time I see the terms enterprise, fast, big data, scalable, cloud or anything of the like I bang my head on the table. One of these days I'm going to lose my fucking tits.

-

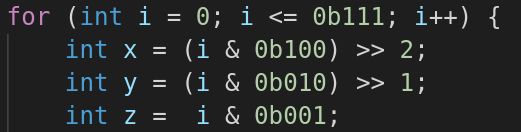

Is this a bad way to traverse corners of a cube?

The objective is obvious from the context and I assume that programmers know how binary works.
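The code in the image isn't reproduced here, so the following is only a guess at the general idea: count from 0 to 7 and treat the three low bits of the counter as the x, y and z choice for each corner. The function name `cube_corners` and the `size` parameter are made up for this sketch.

```python
def cube_corners(size: float = 1.0) -> list[tuple[float, float, float]]:
    """Enumerate the 8 corners of an axis-aligned cube using the bits of 0..7."""
    corners = []
    for i in range(8):
        x = (i >> 0) & 1  # bit 0 picks the near or far face along x
        y = (i >> 1) & 1  # bit 1 does the same along y
        z = (i >> 2) & 1  # bit 2 does the same along z
        corners.append((x * size, y * size, z * size))
    return corners


for corner in cube_corners():
    print(corner)
```

Whether this is "bad" mostly depends on the reader: it is compact and has no duplicated corner table, but it does rely on everyone being comfortable with shifts and masks.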

-

So, I work in a game development studio, right?

We're trying to launch the title on as many platforms as reasonable, because as a social VR app we're kinda rowing upstream.

So far, Steam and Oculus have been fairly reasonable, if oddly broken and inconsistent.

Enter store 3.

Basically no in-game transaction support (our asking prompted them to *start* developing it. No, it's not very complete). No patch-update system (You want an update? Gotta download the whole fsckin' thing!). No beta-testing functionality for most of their stuff ("Just write the code like the example, it will work, trust us!"). No tools besides the buggy SDK (Wanna upload that new build? Say hello to this page in your web browser!).

So, in other words: Fun.

We've been trying to get actively launched for two months now. Keep in mind that the build has been up on Steam and Oculus for over a year and half a year (respectively), so the actual binary functionality is, presumably, fine.

The best feedback we get back tends to be "Well, when we click the Launch button it crashes, so fail."

Meanwhile we're going back and forth, dealing with other-side-of-the-world timezone lag, trying to figure out what is so different between their machines and ours. Eventually we get them to start sending logs (and no, Windows Event logs are not sufficient for GAMES, where did you even get that idea????) except the logs indicate that the program is getting killed so terribly that the engine's built-in crash handler can't even kick in to generate memory dumps or even know it died.

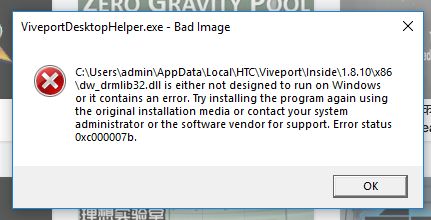

All this boils down to today, where I get a screenshot of their latest attempt.

I just can't even right now.

-

Once again I have loads.

My best teachers were...

The contractor that taught me C#, ASP MVC and SQL Server. Dude was a legend, so calm and collected. He wanted to learn JQuery and Bootstrap so at the same time as teaching, he was learning from me. Such an inspirational person, to know your subordinates still have something to teach you. He also taught me a lot about working methodically and improving my pragmatism.

The other, in school I studied computing A-Level. Scored 100% on at least one of the exams... basically I knew my stuff.

But, as a kid, I didn’t know how to formulate my answers, or even string together coherent answers for the exams. This dude noticed, first thing he did was say “well you’re better at this bit than me, practice but you’ll be fine” (manually working out two’s complement binary of a number).

Second thing he did was say “you know what man, you know what you’re on about but nobody else is ever going to know that”.

He helped me on the subjects I wasn’t perfect on, then he helped me on formulating my answers correctly.

He also put up with my shit attendance, being a teenager with a motorcycle who thinks he knows it all, has its downsides.

As a result, I aced the hell out of that course, legendary grades and he got himself a bit of a bonus for it to use on his holiday. Everyone’s a winner.

Liam, Jason, if you guys are out there I owe you both thanks for making me the person I am today.

The worst, I’ve had too many to name... but it comes down to this:

- identify your students’ strengths and weaknesses, focus on the weaknesses

- identify your own and know when to ask for help yourself

- be patient, learning hurts.

You can always tell a passionate teacher from one who’s there for the paycheck.

-

Let's try this.

In the project I'm working on there is a strict rule: NO COMMENTS!!!

I mean wth, the times I've spent hours trying to understand the crappy legacy code in VB.Net that has been there for almost decades, that wouldn't happen with comments. I know, I know, there are some supernatural developers that think in binary and whose eyes work as compilers, but I'm not like that, so seriously go to hell.

P.S. Of course I follow that rule, after all, my code is so damn perfect that even a baby can understand it.

jk

-

(long post is long)

This one is for the .net folks. After evaluating the technology top to bottom and even reimplementing several examples I commonly use for smoke testing new technology, I'm just going to call it:

Blazor is the next Silverlight.

It's just beyond the pale in terms of being architecturally flawed, and yet they're rushing it out as hard as possible to coincide with the .Net 5 rebranding silo extravaganza. We are officially entering round 3 of "sacrifice .Net on the altar of enterprise comfort." Get excited.

Since we've arrived here, I can only assume the Asp.net Ajax fiasco is far enough in the past that a new generation of devs doesn't recall its inherent catastrophic weaknesses. The architecture was this:

1. Create a component as a "WebUserControl"

2. Any time a bound DOM operation occurs from user interaction, send a payload back to the server

3. The server runs the code to process the event; it spits back more HTML

Some client-side js then dutifully updates the UI by unceremoniously stuffing the markup into an element's innerHTML property like so much sausage.

If you understand that, you've adequately understood how Blazor works. There's some optimization like signalR WebSockets for update streaming (the first and only time most blazor devs will ever use WebSockets, I even see developers claiming that they're "using SignalR, Idserver4, gRPC, etc." because the template seeds it for them. The hubris.), but that's the gist. The astute viewer will have noticed a few things here, including the disconnect between repaints, inability to blend update operations and transitions, and the potential for absolutely obliterative, connection-volatile, abusive transactional logic flying back and forth to the server. It's the bring out your dead approach to seeing how much of your IT budget is dedicated to paying for bandwidth and CPU time.

Blazor goes a step further in the server-side render scenario and sends every DOM event it binds to the server for processing. These include millisecond-scale events like scroll, which, at least according to GitHub issues, devs are quickly realizing requires debouncing, though they aren't quite sure how to accomplish that. Since this immediately becomes an issue with tickets saying things like, "scroll event crater server, Ugg need help! You said Blazorclub good. Ugg believe, Ugg wants reparations!" the team chooses a great answer to many problems for the wrong reasons:

gRPC

For those who aren't familiar, gRPC has a substantial amount of compression primarily courtesy of a rather excellent binary format developed by Google. Who needs the Quickie Mart, or indeed a sound markup delivery and view strategy when you can compress the shit out of the payload and ignore the problem? (Shhh, I hear you back there, no spoilers. What will happen when even that compression ceases to cut it, indeed). One might look at all this inductive-reasoning-as-development and ask themselves, "butwai?!" The reason is that the server-side story is just a way to buy time to flesh out the even more fundamentally broken browser-side story. To explain that, we need a little perspective.

The relationship between Microsoft and its enterprise customers is your typical mutually abusive co-dependent relationship. Microsoft goes through phases of tacit disinterest, where it virtually ignores them. And rightly so, the enterprise customers tend to be weaksauce, mono-platform, mono-language types who come to work, collect a paycheck, and go home. They want to suckle on the teat of the vendor that enables them to get a plug and play experience for delivering their internal systems.

And that's fine. But it's also dull; it's the spouse that lets themselves go, it's the girlfriend in the distracted boyfriend meme. Those aren't the people who keep your platform relevant and competitive. For Microsoft, that crowd has always been the exploratory end of the developer community: alt.net, and more recently, the dotnet core community (StackOverflow 2020's most loved platform, for the haters). Alt.net seeded every competitive advantage the dotnet ecosystem has, and dotnet core capitalized on. Like DI? You're welcome. Are you enjoying MVC? Your gratitude is understood. Cool serializers, gRPC/protobuf, 1st class APIs, metadata-driven clients, code generation, micro ORMs, etc., etc., et al. Dear enterpriseur, you are fucking welcome.

Anyways, b2blazor. So, the front end (Blazor WebAssembly) story begins with the average enterprise FOMO. When enterprises get FOMO, they start to Karen/Kevin super hard, slinging around money, privilege, premiere support tickets, etc. until Microsoft, the distracted boyfriend, eventually turns back and says, "sorry babe, wut was that?" You know, shit like managers unironically looking at cloud reps and demanding to know if "you can handle our load!" Meanwhile, any actual engineer hides under the table facepalming and trying not to die from embarrassment.

-

Well I feel like an idiot thanks to my IT teacher. This guy, this fucking guy thinks that we’re seeing a computer for the first time. He’s literally saying “You see this black bar on the bottom? That’s the taskbar.” It’s like he’s teaching 7-year-old children 😤

But the worst part is my classmates don’t know such basics! They don’t know how binary code works, what a motherboard is, how to log in to the school domain on Windows.

But on the flip side, they look at me like I’m God 😏

-

The saddest and funniest side of our industry is (at least in India): someone works hard and makes it to the best colleges, does great projects on AI, ML; gets a good score on Leetcode, codechef; gets a job in FAANG-like companies...

Changes colors in CSS and texts in HTML.

And, why is there so much emphasis on Data Structures and Algorithms? I mean, a little bit is fine, but why get obsessed with it when you never write algorithms in production code?

Now, don't tell me that, we use libraries and we should know what we are doing, no, we don't use algorithms even in libraries.

Now, before you tell me that MySQL uses B-tree for maintaining indexes, you really don't need to solve tricky questions to be able to understand how a B-tree works.

It's just absurd.

I know a little bit about how to design scalable systems.

I know how to write good code that is both modular and extensible.

I know how to mentor interns and turn them into employees.

I know how to mentor junior engineers (freshers) and help them get started.

Heck I can even invert a binary tree.

But some FAANG company would reject me because I cannot solve a very tricky dynamic programming question.

-

Hey, been gone a hot minute from devrant, so I thought I'd say hi to Demolishun, atheist, Lensflare, Root, kobenz, score, jestdotty, figoore, cafecortado, typosaurus, and the raft of other people I've met along the way and got to know somewhat.

All of you have been really good.

And while I'm here it's time for maaaaaaaaath.

So I decided to horribly mutilate the concept of bloom filters.

If you don't know what that is, you take two random numbers, m and p, both prime, where m < p, and generate two numbers a and b, which together define a function. That function is a hash.

Normally you'd have say five to ten different hashes.

A bloom filter lets you probabilistically say whether you've seen something before, with no false negatives.

It lets you do this very space efficiently, with some caveats.

Each hash function should be uniformly distributed (any value input to it is equally likely to be mapped to any output value).

Then you interpret these output values as bit indexes.

So Hi might output [0, 1, 0, 0, 0]

while Hj outputs [0, 0, 0, 1, 0]

and Hk outputs [1, 0, 0, 0, 0]

producing [1, 1, 0, 1, 0]

And if your bloom filter has bits set in all those places, congratulations, you've (probably) seen that number before.

It's used by big companies like google to prevent re-indexing pages they've already seen, among other things.
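A minimal sketch of the plain, unmutilated version described above, assuming the usual `h(x) = ((a*x + b) mod p) mod m` construction for the hashes. The class name, the default primes and the seed are all my own choices for illustration, not anything from the script discussed below.

```python
import random


class BloomFilter:
    """Toy Bloom filter over non-negative integers."""

    def __init__(self, m=1009, p=1_000_003, num_hashes=5, seed=0):
        # m (number of bits) and p are both prime, with m < p.
        rng = random.Random(seed)
        self.m = m
        self.p = p
        # Each (a, b) pair defines one hash: h(x) = ((a*x + b) mod p) mod m
        self.params = [(rng.randrange(1, p), rng.randrange(p)) for _ in range(num_hashes)]
        self.bits = [False] * m

    def _indexes(self, x):
        return [((a * x + b) % self.p) % self.m for a, b in self.params]

    def add(self, x):
        for i in self._indexes(x):
            self.bits[i] = True

    def __contains__(self, x):
        # True means "probably seen"; False means "definitely not seen".
        return all(self.bits[i] for i in self._indexes(x))


seen = BloomFilter()
for n in (3, 731, 64, 453):
    seen.add(n)

print(731 in seen)  # True: added items are never reported missing
```

A value that was never added can still come back as "seen" if other values happened to set all of its bits, which is the false-positive side of the trade.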

Well I thought, what if instead of using it as a has-been-seen-before filter, we mangled its purpose until a square peg fit in a round hole?

Not long after I went and wrote a script that 1. generates data, 2. generates a hash function to encode it, 3. finds a hash function that reverses the encoding.

And it just works. Reversible hashes.

Of course you can't use it for compression strictly, not under normal circumstances, but these aren't normal circumstances.

The first thing I tried was finding a hash function h0, that predicts each subsequent value in a list given the previous value. This doesn't work because of hash collisions by default. A value like 731 might map to 64 in one place, and a later value might map to 453, so trying to invert the output to get the original sequence out would lead to branching. It occurs to me just now we might use a checkpointing system, with lookahead to see if a branch is the correct one, but I digress, I tried some other things first.

The next problem was 1. long sequences are slow to generate. I solved this by tuning the amount of iterations of the outer and inner loop. We find h0 first, and then h1 and put all the inputs through h0 to generate an intermediate list, and then put them through h1, and see if the output of h1 matches the original input. If it does, we return h0, and h1. It turns out it can take inordinate amounts of time if h0 lands on a hash function that doesn't play well with h1, so the next step was 2. adding an error margin. It turns out something fun happens, where if you allow a sequence generated by h1 (the decoder) to match *within* an error margin, under a certain error value, it'll find potential hash functions hn such that the outputs of h1 are *always* the same distance from their parent values in the original input to h0. This becomes our salt value k.

So our hash-function generator called encoder_decoder() or 'ed' (lol two letter functions), also calculates the k value and outputs that along with the hash functions for our data.

This is all well and good but what if we want to go further? With a few tweaks, along with taking output values, converting to binary, and left-padding each value with 0s, we can then calculate shannon entropy in its most essential form.
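The padding-and-entropy step could look roughly like this. It is a sketch under assumptions: the function name `bit_entropy` is invented, and it computes only the simplest per-bit measure (the frequency of 0s versus 1s), which may not be exactly what the original script does.

```python
import math
from collections import Counter


def bit_entropy(values, width=None):
    """Shannon entropy, in bits per bit, of values written as zero-padded binary."""
    if width is None:
        width = max(values).bit_length() or 1
    # Left-pad every value with 0s so they all have the same width.
    bits = "".join(format(v, f"0{width}b") for v in values)
    total = len(bits)
    counts = Counter(bits)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


print(bit_entropy([0b0101, 0b0011], width=4))  # 1.0
print(bit_entropy([0, 0, 0], width=4))         # 0.0
```

Worth keeping in mind that this only looks at how often each bit value occurs, so it says nothing about structure across bits or across values.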

Turns out with tens of thousands of values (and tens of thousands of bits), the output of h1 with the salt, has a higher entropy than the original input. Meaning finding an h1 and h0 hash function for your data is equivalent to compression below the known shannon limit.

By how much?

Approximately 0.15%

Of course this doesn't factor in the five numbers you need, a0, and b0 to define h0, a1, and b1 to define h1, and the salt value, so it probably works out to the same. I'd like to see what the savings are with even larger sets though.

Next I said, well what if we COULD compress our data further?

What if all we needed were the numbers to define our hash functions, a starting value, a salt, and a number to represent 'depth'?

What if we could rearrange this system so we *could* use the starting value to represent n subsequent elements of our input x?

And that's what I did.

We break the input into blocks of 15-25 items, b/c that's the fastest to work with and find hashes for.

We then follow the math, to get a block which is

H0, H1, H2, H3, depth (how many items our 1st item will reproduce), & a starting value or 1st item in this slice of our input.

x goes into h0, giving us y. y goes into h1 -> z, z into h2 -> y, y into h3, giving us back x.

The rest is in the image.

Anyway good to see you all again.

-

Disclaimer: I hold no grudges or prejudices toward [CENSORED] company. I love the concept of the business model and the perks they give their employees. Unfortunately, the company is very petty, and negligence is at the core of the management. I got an interview for the position of Senior Software Engineer, and the interview wouldn't have taken place if it wasn't for me following up with the person in charge countless times a day. The Vice President of Engineering was the most confused person I have ever encountered. Instead of asking challenging questions that could plausibly show how well I can manage a team, my methodology for working with various technologies, and my problem-solving skills, they asked me questions that suggested they don't even know what they need, or questions whose answers can easily be found with a Google search. I was given 40 hours to build a demo application, and I had to send them a copy of the source code and the binary file. The person who contacted me didn't even bother with what I told her: that it is not good practice to place the binary in cloud storage (Google Drive, OneDrive, etc.), and that I requested extra time to complete the demo application. Since I was required to hand them the repository of the codebase anyway, it is common practice to place the binary in the release section of the Git platform (Jira, Azure DevOps, GitHub, GitLab, etc.), which he surprisingly didn't know about. Then there's the API key, which I placed locally in .env, hidden from the codebase (it's not good practice to place credentials in the codebase). I got a request saying that not only was subscribing to an API necessary, but I had to place the credentials in the codebase. I succeeded in handing over the source code on time, with the quality that 40 hours allows. I told him that I could have done it better, clearer and cleaner if I had been given more time. (They are not the only company asking me to write a demo application prior to the assessment, so I needed the extra time.)

So long story short, during my turn to ask questions I asked him what it is like working at [CENSORED] company. I got told that the "environment is friendly, diverse". But out of utmost curiosity, I contacted several former employees (Software Engineers) on LinkedIn, and I got told that the company has high turnover, despises diversity, and that the nepotism is intense. Most of the favours are done based on how well you create an illusion of working for them and how close you are to the upper management. I requested evidence from those former employees to substantiate what they told me. Seeing the evidence of how they manage the projects, their method of communication, and how biased the upper management actually is led me to withdraw my application. Honestly, I wouldn't want to work for a company where the majority can't communicate.

-

//First rant

So I've been working trying to get a file exporter for a binary file format mostly reverse engineered - 2001 Super Monkey Ball 2 (GameCube) if anyone's interested.

Everything works fine: goals show up in the right places, wormholes work as intended, etc. That is, everything except that every single level you create will be invisible, or crash (depending on which version of Dolphin emu you use).

This happens whenever trying to specify object names for 3D objects. I checked, all the many offsets seem correct, Object names are correct. Tried both null terminated strings and fixed 80 character strings - nothing.

Some other guy also made an exporter that works, however the code is an absolute mess - basically unreadable. It also lacks some newer parts of the file spec, which is the main reason as to why I'm rewriting it.

And as I'm working with an almost entirely unheard of file format, there are few people to go to for help. The 2 I know who are also familiar with the LZ file format have no idea either...

Sigh.

-

If you're going to build an open source command line tool, please for fuck sake publish the Linux x64 artifact to the world. I don't want to waste half my day setting up a box just to compile your project.

I know you build the artifact, I see it in your public CI system. The badge at the top of your GitHub repo even says it's good today. So seriously, why can't you just publish that binary to S3 so I don't have to waste my day ranting.

-

This is gonna get someone illogically upset, but idc about that.

I know it's ignorance of semantics but I'm tired of propagated ignorance changing the meaning of things.

Non-binary is NOT a legitimate term for whatever 'gender' you are!

I get what *whoever-started-it* was going for, but it's NOT valid. If you want to say that you're not male or female, fine... just don't abuse binary systems to do it. Just say you're non-bool/anti-boolean or identify with one of the, apparently 50, shades of gray.

I keep getting into logical loops to nowhere about this nonsense. No one is even defining what's supposed to be the 1 vs the 0. Which then makes me think '1 must be male... genitalia=1 in many ways...' which then sources back to the historic validity of males vs undervalued/less than human interpretations of females...

Then <break>.

Ofc these people aren't going into the historical significance... they don't even realise how binary works! Ofc they'd have no clue that all 0s= no data... and 0s only have significance when viewed in placement to the 1s.

Let's all start using proper terminologies, like non-boolean. Maybe I can start a trend by paying people pennies to learn/teach wtf a boolean value is, and that binary can represent anything. With proper encoding the array is limitless... so being binary is actually a giant spectrum... therefore it makes no sense to be "non-binary".

Ok... I'm done. It had to be said.

Who wants to start identifying as non, or educating wtf is, boolean with me???

-

Major rant incoming. Before I start ranting I’ll say that I totally respect my professor’s past. He worked on some really impressive major developments for the military and other companies a long time ago. Was made an engineering fellow at Raytheon for some GPS software he developed (or led a team on, I should say) and ended up dropping the fellowship because of his health. But I’m FUCKING sick of it. So fucking fed up with my professor. This class is “Data Structures in C++” and keep in mind that I’ve been programming in C++ for almost 10 years, with it being my primary and first language in OOP.

Throughout this entire class, the teacher has been making huge mistakes, either saying things that aren’t right or simply not knowing how to teach. He told the students that “int& varOne = varTwo” was an address getting put into a variable until I corrected him that it’s a reference, and then he proceeded to skip all the reference slides. He also steps through sorting algorithms incorrectly, or doesn’t remember how they work and says, “So then it gets to this part and....it uh....does that and gets this value and so that’s how you do it *doesnt do rest of it and skips slide*”.

My first presentation was on doubly linked lists. I decided to go above and beyond and write my own code with a menu to add, insert at position n, delete, print, etc. for a doubly linked list. When I went to pull out my code, he told me that I hadn’t said anything about a doubly linked list’s head and tail nodes each having a pointer to null, so I was getting docked points. I told him I actually did say it, and another classmate spoke up and said “Ya”, but he cut him off, saying, “No you didn’t”. I started to say I’d show him my slides, but he cut me off mid-sentence and just yelled, “Nope!”. He docked me 20% and gave me a B- because of that. I had 1 slide with a bullet point mentioning it and 2 slides with visual models showing that the head node’s previousNode* and the tail node’s nextNode* pointed to null.

Another classmate that’s never coded in his life had screenshots of code from online (literally all his slides were a screenshot of the next part of code until it finished implementing a binary search tree) and literally read the code line by line, “class node, node pointer node, ......for int i equals zero, i is less than tree dot length er length of tree that is, um i plus plus.....”

Professor yelled at him like 4 times about reading directly from the slide and not saying what the code does, and he would reply with, “Yes sir” and then continue to read again because there was nothing else he could do.

Ya, he got the same grade as me.

Today I had my second and final presentation. I did it on “Separate Chaining”, a hash collision resolution technique. This time I said fuck writing my own code; he didn’t give two shits last time when everyone but me just screenshotted online example code, so I decided I’d focus on the PowerPoint and amp it up with animations on models I made with the shapes in PowerPoint. I get 2 slides in and he goes,

Prof: Stop! Go back one slide.

Me: Uh alright, *click*

(Slide showing the 3 collision resolutions: Open Addressing, Separate Chaining, and Re-Hashing)

Prof: Aren’t you forgetting something?

Me: ....Not that I know of sir

Prof: I see Open addressing, also called Open Hashing, but where’s Closed Hashing?

Me: I believe that’s what Separate Chaining is sir

Prof: No

Me: I’m pretty sure it is

*Class nods and agrees*

Prof: Oh never mind, I didn’t see it right

Get another 4 slides in before:

Prof: Stop! Go back one slide

Me: .......alright *click*

(Professor loses train of thought? Doesn’t mention anything about this slide)

Prof: I er....um, I don’t understand why you decided not to mention the other, er, other types of Chaining. I thought you were going to go back on that slide with all the squares (model of hash table with animations moving things around to visualize inserting a value with a collision that I spent hours on) but you didn’t.

(I haven’t finished the second half of my presentation yet you fuck! What if I had it there?)

Me: I never saw anything on any other types of Chaining professor

Prof: I’m pretty sure there’s one that I think combines Open Addressing and Separate Chaining

Me: That doesn’t make sense sir. *explanation why* I did a lot of research and I never saw any other.

Prof: There are, you should have included them.

(I check after I finish. Google comes up with no other Chaining collision resolution)

He docks me 20% and gives me a B- AGAIN! Both presentation grades have feedback saying, “MrCush, I won’t go into the issues we discussed but overall not bad”.

Thanks for being so specific on a whole 20% deduction prick! Oh wait, is it because you don’t have specifics?

Bye 3.8 GPA

Is it me or does he have something against me?

-

In the 90s most people had touched grass, but few touched a computer.

In the 2090s most people will have touched a computer, but not grass.

But at least we'll have fully sentient dildos armed with laser guns to mildly stimulate our mandatory attached cyber-clits, or alternatively annihilate thought criminals.

In other news my prime generator has been exhaustively checked against all primes from 5 to 1 million. I used Miller-Rabin with k=40 to confirm the results.

The set the generator creates is the join of the quasi-lucas carmichael numbers, the carmichael numbers, and the primes. So after I generated a number I just had to treat those numbers as 'pollutants' and filter them out, which was dead simple.

What's left after filtering is strictly the primes.

I also tested it randomly on 50-55 bit primes, and it always returned true, but that range hasn't been fully tested so far because it takes 9-12 seconds per number at that point.

I was expecting maybe a few failures by my generator. So what I did was I wrote a function, genMillerTest(), and all it does is take some number n, returns the next prime after it (using my functions nextPrime() and isPrime()), and then tests it against miller-rabin. If miller returns false, then I add the result to a list. And then I check *those* results by hand (because miller can occasionally return false positives, though I'm not familiar enough with the math to know how often).

Well, imagine my surprise when I had zero false positives.

Which means either my code is generating the same exact set as miller (under some very large value of n), or the chance of miller (at k=40 tests) returning a false positive is vanishingly small.
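On the "how often" question: a Miller-Rabin verdict of "composite" is always correct, and only "probably prime" can be wrong. Each round with a random base lets a composite slip through with probability at most 1/4, so k=40 rounds bound the error by 4^-40, roughly 8e-25. The cross-check described above could be sketched like this in Python. The trial-division `is_prime` is only a stand-in assumption, since the actual generator isn't shown, and `gen_miller_test` here sweeps a whole range rather than taking a single n.

```python
import random


def miller_rabin(n, k=40, rng=None):
    """False means n is certainly composite; True means probably prime."""
    if n < 2:
        return False
    for p in (2, 3, 5, 7, 11, 13):
        if n % p == 0:
            return n == p
    rng = rng or random.Random()
    # Write n - 1 as d * 2**r with d odd.
    d, r = n - 1, 0
    while d % 2 == 0:
        d //= 2
        r += 1
    for _ in range(k):
        a = rng.randrange(2, n - 1)
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(r - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a is a witness, so n is composite for certain
    return True


def is_prime(n):
    """Stand-in for the generator-based test (assumption: trial division)."""
    if n < 2:
        return False
    if n < 4:
        return True
    if n % 2 == 0:
        return False
    i = 3
    while i * i <= n:
        if n % i == 0:
            return False
        i += 2
    return True


def next_prime(n):
    """Smallest number greater than n that is_prime() accepts."""
    candidate = n + 1
    while not is_prime(candidate):
        candidate += 1
    return candidate


def gen_miller_test(limit, k=40):
    """Collect every generated 'prime' up to limit that Miller-Rabin rejects."""
    disagreements = []
    p = next_prime(4)  # start at 5, as in the post
    while p <= limit:
        if not miller_rabin(p, k):
            disagreements.append(p)
        p = next_prime(p)
    return disagreements
```

Since a rejection is a proof of compositeness, anything that lands in `disagreements` is a definite generator failure and needs no checking by hand. An empty list means the generator produced no composites in that range, up to the 4^-40 per-number bound.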

My next steps should be to parallelize the checking process, and set up my other desktop to run those tests continuously.

Concurrently I should work on figuring out why my slowest primality tests (there are six of them, though I think I can eliminate two) are so slow and whether I can better estimate or derive a pattern that allows faster results through better initialization of the variables used by these tests.

I already wrote some cases to output which tests most frequently succeeded (if any of them pass, then the number isn't prime), and therefore could cut short the primality test of a number. I rewrote the function to put those tests in order from most likely to least likely.
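A rough sketch of that reordering idea in Python, under loud assumptions: the six real tests aren't shown, so three divisibility checks stand in for them, and `rank_tests` and `is_composite` are hypothetical names. A test "passing" here means it proves the number is not prime, matching the convention above.

```python
from collections import Counter


def rank_tests(tests, samples):
    """Return test names ordered from most to least frequent rejection."""
    hits = Counter()
    for n in samples:
        for name, test in tests.items():
            if test(n):  # test passes, so n is not prime
                hits[name] += 1
    return sorted(tests, key=lambda name: hits[name], reverse=True)


def is_composite(n, tests, order):
    """Run tests in ranked order; any() stops at the first one that passes."""
    return any(tests[name](n) for name in order)


# Stand-in tests only (assumption), deliberately listed in a poor order.
tests = {
    "div5": lambda n: n % 5 == 0,
    "div3": lambda n: n % 3 == 0,
    "div2": lambda n: n % 2 == 0,
}
order = rank_tests(tests, range(10, 1000))
```

This counts every test that fires on a number, not just the first. If the tests differ a lot in cost, ranking by hits per second spent may order them better than raw hit counts.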

I'm also thinking that there may be some clues for faster computation in other bases, or perhaps in binary, or inspecting the patterns of values in the natural logs of non-primes versus primes. Or even looking into the *execution* time of numbers that successfully pass as prime versus ones that don't. There's a bevy of possible approaches.

The entire process for the first 1_000_000 numbers ran 1621.28 seconds, or about 1.6 milliseconds per number on average, but I'm sure that's biased toward the head of the list.

If there's any other approach or idea I may be overlooking, I wouldn't know where to begin.

-

If you've ever tried using Go plugins, raise your hand.

If you've ever tried doing plugins in Go, raise your hand.

If you think that the following rant will be interesting, raise your hand.

If you raised your hand, press [Read More]:

This is a tale of pain and sorrow, the sorrow of discovering that what could be a wonderful feature is woefully incomplete, and won't be for a very long time...

Go plugins are a cool feature: dynamically load pre-compiled code, and interact with it in a useful and relatively performant way (e.g. for dynamically extending the capabilities of your program). So far it sounds great, I know right?

Now let me list off some issues (in order of me remembering them):

1. You can't unload them (due to some bs about dlopen), so you need to restart the application...

2. They bundle the stdlib like a regular Go binary, despite the fact that they're meant to be dynamic!

3. #2 wouldn't be so bad if they didn't also require identical versions of all dependencies in both binaries (meaning you'd need to vendor the dependencies, and also hope you are using the right Go version).

4. You need to use -trimpath or everything dies...

All in all, they are broken and no one is rushing to fix it (literally, the Go team said they aren't really supporting it currently...).

So what other options are there for making plugins in Go?

There's the HashiCorp method of using RPC, where you have two separate applications, one the plugin and one the plugin server, and they communicate over RPC. I don't like it. Why? Because it feels like a hack, it's not really efficient and it carries a fear of a limitation that I don't like...

Then we come to a somewhat more clever approach: using Lua (or any other scripting language), it's well known, it's what everyone uses (at least in games...). But, it simply is too hard to use, all the Go Lua VMs I could find were simply too hard to set up...

Now we come to the most creative option I've seen yet: WASM. Now you ask "WASM!? But that's a web thing, how are you gonna make that work?" Indeed, my son, it is a web thing, but that doesn't mean I can't use it! Someone made a WASM VM for Go, and the pros are that you can use any WASM supporting language (i.e. any/all of them). The problems: it's inefficient, a PITA to use, and it also suffers from the same issues that were preventing me from using Lua.

Enter Yaegi, a Go interpreter created by the same guys who made (and named) Traefik. Yes, you heard me right, an INTERPRETER (i.e. like python) so while it's not super performant (and possibly suffering from large inefficiency issues), it's very easy to set up, and it means that my plugins can still be written in Go (yay)! However, don't think this method doesn't have its own issues, there's still the problem of effectively abstracting different types of plugins without requiring too much boilerplate (a hard problem that I'm actively working on, commits coming soon). However, this still feels to be the best option.

As you can see, doing plugins in Go is a very hard problem. In the coming weeks (hopefully), I'm going to (attempt to at least) benchmark all the different options, as well as publish a library that should help make using Yaegi based plugins easier. All of this stuff will go (see what I did there 😉) in a nice blog post that better explains the issues and solutions. But until then I have some coding to do...

Have a good night(/day)!

-

Hopefully, you already know that the company controlled by the alleged reptiloid subhuman and Olympic testicle juggler formerly known as Mister Zuck My Tits is not to be trusted.

But as is always the case in this bitch, I've been forced into cowjizz flooded swamps' worth of stinking shit platforms for the sake of avoiding isolation.

And so, I've just found yet another way in which Facebook **THUNDERSTRIKE** ... the company, not the geriatric ward, is one of the CROWN ACHIEVEMENTS of human civilization.

Let me tell you something: some people are fucking broke. Hell, some people sleep on the streets, live on scraps, and willingly engage in acts of public defecation when provoked. But I'm not even talking about them, no, just plain *broke*.

And so imagine being that guy who doesn't really use his phone much, except maybe for sharing cat pictures with mom because that's what being an absolute chad is all about. You don't get a new phone, because money is a __little__ bit tight. But THEN...

The dreaded CAPITAL strikes, and requests of you to bend and fall onto your knees so as to provide intense, intimate and manual -- as well as oral -- PLEASURE to the [NOT SO] METAPHORICAL PENIS of the """SYSTEM""".

Oh, what an abominable, drooooooling revenant that lies before you!