Search - "data service"

-

Buzzword dictionary to deal with annoying clients:

AI—regression

Big data—data

Blockchain—database

Algorithm—automated decision-making

Cloud—Internet

Crypto—cryptocurrency

Dark web—Onion service

Data science—statistics done by nonstatisticians

Disruption—competition

Viral—popular

IoT—malware-ready device

-

So a few days ago I felt pretty h*ckin professional.

I'm an intern and my job was to get the last 2003 server off the racks (It's a government job, so it's a wonder we only have one 2003 server left). The problem being that the service running on that server cannot just be placed on a new OS. It's some custom engineering document server that was built in 2003 on a 1995 tech stack and it had been abandoned for so long that it was apparently lost to time with no hope of recovery.

"Please redesign the system. Use a modern tech stack. Have at it, she's your project, do as you wish."

Music to my ears.

First challenge is getting the data off the old server. It's a 1995 .mdb file, so the most recent version of Access that would be able to open it is 2010.

Option two: There's an "export" button that literally just vomits all 16,644 records into a tab-delimited text file. Since this option didn't require scavenging up an old version of Access, I wrote a Python script to just read the export file.

And something like 30% of the records were invalid. Why? Well, one of the fields allowed for newline characters. This was an issue because records were separated by newline. So any record with a field containing newline became invalid.

This did not stop me, though. Not even close. I figured it out and fixed it in about 10 minutes. All records read into the program without issue.
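The rant doesn't say how the fix worked, so here is one possible approach as a minimal sketch: keep gluing physical lines together until the expected number of tab delimiters has been seen. The function name `read_records`, the sample rows and the field count are all made up for illustration, and the sketch assumes the newline-bearing field is never the last column (otherwise the split point is ambiguous).

```python
def read_records(lines, field_count, delimiter="\t"):
    """Yield complete records from a delimited export whose fields may contain newlines.

    A physical line with too few delimiters is treated as the start of a record
    that continues on the following line(s).
    """
    expected = field_count - 1  # delimiters in one complete record
    pending = []                # physical lines collected for the current record
    for raw in lines:
        pending.append(raw.rstrip("\r\n"))
        candidate = "\n".join(pending)
        if candidate.count(delimiter) >= expected:
            yield candidate.split(delimiter)
            pending = []
    if pending:
        raise ValueError(f"incomplete trailing record: {pending!r}")


# Hypothetical three-column export; the second record is split across two lines.
sample = [
    "1\tPump housing\tRev A\n",
    "2\tValve, see note:\n",
    "replace seal\tRev B\n",
    "3\tBracket\tRev C\n",
]
records = list(read_records(sample, field_count=3))
```

Here the two physical lines of record 2 come back as one record whose middle field still contains the original newline.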

Next up: designing the database. My stack is MySQL and NodeJS, which my supervisors approved of. There was a lot of data that looked like it would fit into an integer, but one or two odd records would have something like "1050b", which meant that just a few items prevented me from having as slick a database design as I wanted. I designed the tables, about 18 columns per record, mostly varchar(64).

Next challenge was putting the exported data into the database. At first I thought of doing it record by record from my python script. Connect to the MySQL server and just iterate over all the data I had. But what I ended up actually doing was generating a .sql file and running that on the server. This took a few tries thanks to a lot of inconsistencies in the data, but eventually, I got all 16k records in the new database and I had never been so happy.
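For anyone curious what "generating a .sql file" can look like, a rough sketch follows. The table name `documents`, the column names and the helper names are invented; the escaping assumes MySQL's default mode, where backslash escapes are enabled (i.e. `NO_BACKSLASH_ESCAPES` is off). It is an illustration, not the script from the rant.

```python
def sql_literal(value):
    """Render a Python value as a MySQL string literal, or NULL for None."""
    if value is None:
        return "NULL"
    escaped = (
        str(value)
        .replace("\\", "\\\\")  # escape backslashes first
        .replace("'", "''")     # double up single quotes
        .replace("\n", "\\n")   # keep each row on one line of the script
    )
    return f"'{escaped}'"


def build_inserts(table, columns, rows, batch_size=500):
    """Yield multi-row INSERT statements, batch_size rows per statement."""
    column_list = ", ".join(f"`{c}`" for c in columns)
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        values = ",\n".join(
            "(" + ", ".join(sql_literal(v) for v in row) + ")" for row in batch
        )
        yield f"INSERT INTO `{table}` ({column_list}) VALUES\n{values};\n"


# Hypothetical rows, including an awkward "1050b" value and an apostrophe.
rows = [("1050b", "Pump O'Neil"), ("1051", None)]
script = "".join(build_inserts("documents", ["drawing_no", "title"], rows))
```

The resulting text can be written to a file and fed to the `mysql` command-line client. Batching keeps individual statements small enough that one bad row is easy to locate.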

The next two hours were very productive, designing a front end which was very clean. I had just enough time to design a rough prototype that works totally off ajax requests. I want to keep it that way so that other services can contact this data, as it may be useful to have an engineering data API.

Anyways, that was my win story of the week. I was handed a challenge; an old, decaying server full of important data, and despite the hitches one might expect from archaic data, I was able to rescue every byte. I will probably be presenting my prototype to the higher ups in Engineering sometime this week.

Happy Algo!

-

My mobile phone provider called me and offered me a new contract containing more data volume.

Customer service: 'Your current contract has only 1.5GB data volume. That's not much. With this you can only send 1 or 2 pictures and that's it.'

Me: 'What kind of pictures do you use / send? 😨'

-

Although this is gonna sound like bullshit, this happened to me for real. Since that moment I use even more backup services AND I regularly check EVERYTHING.

Had a backup of my important data (still used mainstream services back then) on:

- Hotmail email attachments

- Google Drive

(Both link to another email account).

- A few data backup services

- DVD

- USB

- External HDD.

I wanted to copy some backup data over again:

1. Walked to my stack of HDDs, tried to grab it, somehow missed and knocked the whole fucking pile over. HDD broken.

2. Well fuck, let's go put some of my clothes in the washing machine for clean clothes at study/monday. After this shit being in the washing machine for just a few minutes, I realized my backup USB stick was in one of my pockets, in the washing machine. FUCK. Couldn't stop it so I waited till the end, tried it and well, it wasn't working at all anymore.

Fuck my fucking life slightly right now.

3. *remembers about the backup disc*. I forgot to keep it in its case, very deep scratches and so on, unreadable. FUCKING FUCK.

4. Right, I still have those online services! *tries to log in to all of them (including hotmail/gdrive) but forgot the passwords* Well, let's log in to my backup account then (hadn't used that one in years). Account was suspended for some reason.

Started to get really anxious because every online backup service was linked to that email address.

Contacted customer support. They really couldn't restore it because of some issues they weren't allowed to tell me about. Sorry, but I couldn't regain access.

5. Well this is fucked up. Couldn't get into any of the backup/hotmail/gdrive accounts anymore.

I tried contacting their support but never got any replies.

This was the moment I realized I fucked up big fucking time because damn, this stuff at this level hardly happens to anyone.

FUCK.

-

Worst dev team failure I've experienced?

One of several.

Around 2012, a team of devs was tasked with converting an ASPX service to WCF. The service had one responsibility: returning product data (description, price, availability, etc...simple stuff).

No complex searching, just pass the ID, you get the response.

I was the original developer of the ASPX service, whose API took an XML request and returned an XML response. The 'powers-that-be' decided anything XML was evil and had to be purged from the planet. If this thought bubble popped up over your head "Wait a sec...doesn't WCF transmit everything via SOAP, which is XML?", yes, but in their minds SOAP wasn't XML. That's not the worst WTF of this story.

The team, 3 developers, 2 DBAs, network administrators, several web developers, worked on the conversion for about 9 months using the Waterfall method (3~5 months was mostly in meetings and very basic prototyping) and using a test-first approach (their own flavor of TDD). The 'go live' day was to occur at 3:00AM and it was mandatory that nearly the entire department be on-site (including the department VP) and available to help troubleshoot any system issues.

3:00AM - Teams start their deployments

3:05AM - Thousands and thousands of errors from all kinds of sources (web exceptions, database exceptions, server exceptions, etc), site goes down, teams roll everything back.

3:30AM - The primary developer remembered he made a last minute change to a stored procedure parameter that hadn't been pushed to production, which caused a side effect across several layers of their stack.

4:00AM - The developer found his bug, but the manager decided it would be better if everyone went home and took a fresh look at the problem at 8:00AM (yes, he expected everyone to be back in the office at 8:00AM).

About a month later, the team scheduled another 3:00AM deployment (VP was present again), confident that introducing mocking into their testing pipeline would fix any database related errors.

3:00AM - Team starts their deployments.

3:30AM - No major errors, things seem to be going well. High fives, cheers..manager tells everyone to head home.

3:35AM - Site crashes, like white page, no response from the servers kind of crash. Resetting IIS on the servers works, but only for around 10 minutes or so.

4:00AM - Team rolls back, manager is clearly pissed at this point, "Nobody is going fucking home until we figure this out!!"

6:00AM - Diagnostics found the WCF client was causing the server to run out of resources, with a mix of clogging up server bandwidth, and a sprinkle of N+1 scaling problem. Manager lets everyone go home, but be back in the office at 8:00AM to develop a plan so this *never* happens again.
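The rant doesn't go into what the N+1 problem looked like in that WCF client, so the following is only a generic illustration of the pattern, using an in-memory SQLite table with made-up names: one query fetches a list of IDs, then one more query runs per ID, versus a single query that returns everything.

```python
import sqlite3

# Hypothetical product table, purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE product (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO product VALUES (?, ?, ?)",
    [(i, f"item-{i}", i * 1.5) for i in range(1, 101)],
)


def load_n_plus_one(conn, max_price):
    """1 query for the ID list, then 1 query per ID: N+1 round trips."""
    queries = 1
    ids = [row[0] for row in conn.execute(
        "SELECT id FROM product WHERE price < ? ORDER BY id", (max_price,))]
    products = []
    for product_id in ids:
        products.append(conn.execute(
            "SELECT id, name, price FROM product WHERE id = ?", (product_id,)
        ).fetchone())
        queries += 1
    return products, queries


def load_batched(conn, max_price):
    """Same rows in a single round trip."""
    products = conn.execute(
        "SELECT id, name, price FROM product WHERE price < ? ORDER BY id",
        (max_price,),
    ).fetchall()
    return products, 1


slow_rows, slow_queries = load_n_plus_one(conn, 30.0)
fast_rows, fast_queries = load_batched(conn, 30.0)
```

Both functions return the same rows; the difference is that the first one's query count grows with the result size, which is cheap in-process but adds up quickly when every call crosses the network.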

About 2 months later, a 'real' development+integration environment was set up (previously, any+all integration tests were on the developer's machine) and the team scheduled a 6:00AM deployment, but at a much, much smaller scale with just the 3 development team members.

Why? Because the manager 'froze' changes to the ASPX service while the web team still needed various enhancements, so they bypassed the service (not using the ASPX service at all) and wrote their own SQL scripts that hit the database directly and utilized AppFabric/Velocity caching to allow the site to scale. There were only a couple of client applications using the ASPX service that needed to be converted, so deploying at 6:00AM gave everyone a couple of hours before users got into the office. Service deployed, worked like a champ.

A week later the VP schedules a celebration for the successful migration to WCF. Pizza, cake, the works. The 3 team members received awards (and an envelope, which probably equaled some $$$) and the entire team received a custom Benchmade pocket knife to remember this project's success. Myself and several others just stared at each other, not knowing what to say.

Later, my manager pulls several of us into a conference room

Me: "What the hell? This is one of the biggest failures I've been a part of. We got rewarded for thousands and thousands of dollars of wasted time."

<others expressed the same and expletive sentiments>

Mgr: "I know..I know...but that's the story we have to stick with. If the company realizes what a fucking mess this is, we could all be fired."

Me: "What?!! All of us?!"

Mgr: "Well, shit rolls downhill. Dept-Mgr-John is ready to fire anyone he feels could make him look bad, which is why I pulled you guys in here. The other sheep out there will go along with anything he says and are more than happy to throw you under the bus. Keep your head down until this blows over. Say nothing."

-

It's maddening how many people working with the internet don't know anything about the protocols that make it work. In web work especially, I spend far too much time explaining how status codes, methods, content-types etc work, how they're used, and basic fundamental shit about how to do the job of someone building internet applications and consumable services.

The following has played out at more than one company:

App: "Hey api, I need some data"

API: "200 (plain text response message, content-type application/json, 'internal server error')"

App: *blows the fuck up

*msg service team*

Me: "Getting a 200 with a plaintext response containing an internal server exception"

Team: "Yeah, what's the problem?"

Me: "...200 means success, the message suggests 500. Either way, it should be one of the error codes. We use the status code to determine how the application processes the request. What do the logs say?"

Team: "Log says that the user wasn't signed in. Can you not read the response message and make a decision?"

Me: "The status for that is 401. And no, that would require us to know every message you have verbatim. In this case, it doesn't even deserialize and causes an exception because it's not actually json."

Team: "Why 401?"

Me: "It's the code for unauthorized. It tells us to redirect the user to the sign in experience"

Team: "We can't authorize until the user signs in"

Me: *angermatopoeia* "Just, trust me. If a user isn't logged in, return 401, if they don't have permissions you send 403"

Team: *googles SO* "Internet says we can use 500"

Me: "That's server error, it says something blew up with an unhandled exception on your end. You've already established it was an auth issue in the logs."

Team: "But there's an error, why doesn't that work?"

Me: "It's generic. It's like me messaging you and saying, "your service is broken". It doesn't give us any insight into what went wrong or *how* we should attempt to troubleshoot the error or where it occurred. You already know what's wrong, so just tell me with the status code."

Team: "But it's ok, right, 500? It's an error?"

Me: "It puts all the troubleshooting responsibility on your consumer to investigate the error at every level. A precise error code could potentially prevent us from bothering you at all."

Team: "How so?"

Me: "Send 401, we know that it's a login issue, 403, the user doesn't have permission, 404 we're hitting an endpoint that doesn't exist, 503 we know that the service can't be reached for some reason, 504 means the service exists, but timed out at the gateway or service. In the worst case we're able to triage who needs to be involved to solve the issue, make sense?"

Team: "Oh, sounds cool, so how do we do that?"

Me: "That's down to your technology, your team will need to implement it. Most frameworks handle it out of the box for many cases."

Team: "Ah, ok. We'll send a 500, that sounds easiest"

Me: *..l.. -__- ..l..* "Ok, let's get into the other 5 problems with this situation..."
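For the service team in this story, the idea from the conversation above could be sketched roughly like this. The exception classes and function names are invented for the example, and no particular web framework is assumed; the point is only that each failure gets its own status code and the body is always real JSON.

```python
import json
from http import HTTPStatus


class NotSignedIn(Exception):
    """Hypothetical error: the caller has no valid session."""


class NotPermitted(Exception):
    """Hypothetical error: the caller is signed in but lacks permission."""


def respond(handler):
    """Run a handler and return (status, headers, body) with an honest status code."""
    try:
        status, payload = HTTPStatus.OK, handler()
    except NotSignedIn:
        status, payload = HTTPStatus.UNAUTHORIZED, {"error": "sign-in required"}
    except NotPermitted:
        status, payload = HTTPStatus.FORBIDDEN, {"error": "insufficient permissions"}
    except Exception:
        # Only genuinely unexpected failures end up as a 500.
        status, payload = HTTPStatus.INTERNAL_SERVER_ERROR, {"error": "unexpected server error"}
    # The body is always valid JSON, matching the declared content type.
    return int(status), {"Content-Type": "application/json"}, json.dumps(payload)


def client_next_step(status):
    """What the consuming app can decide from the status code alone."""
    if status == HTTPStatus.UNAUTHORIZED:
        return "redirect to sign-in"
    if status == HTTPStatus.FORBIDDEN:
        return "show a no-access message"
    if status >= 500:
        return "report to the service team"
    return "process the response"


def needs_login():
    raise NotSignedIn()


status, headers, body = respond(needs_login)
```

With this shape the app never has to match on message text: `client_next_step(status)` picks the sign-in redirect from the 401 alone.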

Moral of the story: If this is you: learn the protocol you're utilizing, provide metadata, and stop treating your customers like shit.

-

Boss: Our customer's data is not syncing with XYZ service anymore!

Me: Ok let me check. Did the tokens not refresh? Hmm the tokens are refreshing fine but the API still says that we do not have permissions. The scopes are fine too. I'll use our test account... it's... cancelled? Hey boss, why is our XYZ account cancelled?

Boss: Oh, "I haven’t paid since I didn’t think we needed it" (verbatim)

😐

-

!(short rant)

Look I understand online privacy is a concern and we should really be very much aware about what data we are giving to whom. But when does it turn from being aware to just being paranoid and a maniac about it? I mean okay, I know facebook has access to your data including your whatsapp chat (presumably), google listens to your conversations and snoops on your mail and shit, amazon advertises that you must have their spy system (read alexa) installed in your homes and numerous other cases. But in the end it really boils down to "everyone wants your data but who do you trust your data with?"

For me, facebook and the so-called social media sites are a strict no-no but I use whatsapp as my primary chatting application. I like to use google for my searches because yeah, it gives me more accurate search results compared to ddg because it has my search history. I use gmail as my primary as well as work email because it is convenient and an ad here and there doesn't bother me. Their spam filters, the easy accessibility options, the storage they offer: everything is much more convenient for me. I use linux for my work related stuff (obviously) but I play my games on windows. Alexa and such products are again a big no-no for me but I regularly shop from amazon and unless I am searching for some weird ass shit (which, if you want to, do in some incognito mode) I am fine with coming across some ads about things I searched for. Sometimes it reminds me of things I need to buy which I might have put off and later forgotten. I have an amazon prime account because prime video has some good shows in there. My primary web browser is chrome because I simply love its developer tools and I have gotten used to it. So unless chrome is hogging my ram, in which case I switch over to firefox for some of my tabs, I am okay with using chrome. I have a motorola phone with stock android which means all google apps pre-installed. I use hangouts, google keep, google maps (cannot live without it now), heck even google photos, but I also deny certain accesses to apps which I find fishy: if you are a game, you should not have access to my gps. I live in India where we have aadhar cards (like the social security number in the USA) where the government has our fingerprints and all our data because every damn thing now needs to be linked with your aadhar, otherwise your service will be terminated. Like your mobile number, your investment policies, your income tax, heck even your marriage certificates need to be linked with your aadhar card.
Here, I don't have any option but to give in because somehow "it's in the interest of the nation". Not surprisingly, it recently came to light that you can get your hands on anyone's aadhar details including their fingerprints for just ₹50 ($1). Fuck that shit.

tl;dr

There are and should always be exceptions when it comes to privacy because when you give the other person your data, it sometimes makes your life much easier. On the other hand, people/services asking for your data with the sole purpose of infiltrating your private life and not providing any usefulness should just be boycotted. It all boils down to what extent you wish to share your data (ranging from literally installing a spying device in your house to them knowing that I want to understand how spring security works) and how much you trust the service with your data. Example being, I just shared most of my private data in this rant with a group of unknown people and I am okay with it, because I know I can trust devRant with my posts (unlike facebook).

-

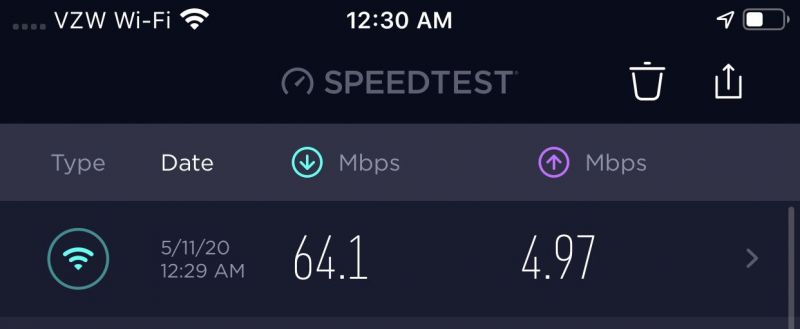

Dear Android:

I know I'm not on wifi. I get it. Sometimes data coverage isn't amazing or the network is congested. It's cool. You can just flash "no service" and I just won't try. Or even "3G" and I'll have some patience. I remember how slow 3G was. It's okay, I'll wait.

But fucking stop showing 4G LTE if you can't make a fucking GET request for a 2kb text file in less than 5 minutes! Fucking really? Don't fucking lie to me with your false hope bullshit, just tell me the truth and I'll probably sigh and say shit and put my phone away.

But fuck you and your progress bar eternally stuck in the middle. As if to say you're making progress! Wasting my time!

If you can't download a kilobyte in a 5min period, why even say I have data at all? What good does that do me?

-

My first testing job in the industry. Quite the rollercoaster.

I had found this neat little online service with a community. I signed up an account and participated. I sent in a lot of bug reports. One of the community supervisors sent me a message that most things in FogBugz had my username all over it.

After a year, I got cocky and decided to try SQL injection. In a production environment. What can I say. I was young, not bright, and overly curious. Never malicious, never damaged data or exposed sensitive data or borked services.

I reported it.

Not long after, I got phone calls. I was pretty sure I was getting charged with something.

I was offered a job.

Three months into the job, they asked if I wanted to do Python and work with the automators. I said I don't know what that is but sure.

They hired me a private instructor for a week to learn the basics, then flew me to the other side of the world for two weeks to work directly with the automation team to learn how they do it.

It was a pretty exciting era in my life and my dream job.

-

Q: Your data migration service from old site to new site costs money.

A: Yes, I have to copy data from old database and import to the new one.

Q: Can I just provide you content separately so you don’t need to do that?

A: Yes, but I will have to charge you for copying and pasting your 100 pages of content manually.

Q: Can it come with part of the web development service and not as an additional service?

A: Yes, but the price for web development service will have to be increased to combine the two. If you don’t want to pay for it, I can just set up a few sample pages with the layout and you can handle your own content entry. Does that work for you?

Q: Well, but then I will have to spend extra time to work on it.

A: Yes you will. (At this point I think she starts to understand the concept of Time = Money...)

-

So we hired a junior engineer. 1 year of experience, this is his second job.

First task: Send some data to a web service using its REST API. Let me know when you've finished.

Two hours later I go to check on him.

- "I'm trying to decode this weird format the server uses"

He was writing a JSON parser in Python from scratch.
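For the record, the "weird format" needs no custom parser: Python's standard library covers both directions. A small sketch, where the URL and the payload fields are made up and the actual network call is left out so it runs offline:

```python
import json
import urllib.request

API_URL = "https://api.example.com/measurements"  # hypothetical endpoint


def build_post(url, record):
    """Serialize a dict to JSON and wrap it in a POST request object."""
    body = json.dumps(record).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_body(raw_bytes):
    """Decode a JSON response body into Python objects."""
    return json.loads(raw_bytes.decode("utf-8"))


request = build_post(API_URL, {"sensor": "t1", "value": 21.5})
# urllib.request.urlopen(request) would send it; skipped here on purpose.
reply = parse_body(b'{"status": "stored", "id": 7}')
```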

:/

-

Every step of this project has added another six hurdles. I thought it would be easy, and estimated it at two days to give myself a day off. But instead it's ridiculous. I'm also feeling burned out, depressed (work stress, etc.), and exhausted since I'm taking care of a 3 week old. It has not been fun. :<

I've been trying to get the Google Sheets API working (in Ruby). It's for a shared sales/tracking spreadsheet between two companies.

The documentation for it is almost entirely for Python and Java. The Ruby "quickstart" sample code works, but it's only for 3-legged auth (meaning user auth), and I need 2-legged auth (server auth with non-expiring credentials). Took a while to figure out that variant even existed.

After a bit of digging, I discovered I needed to create a service account. This isn't the most straightforward thing, and setting it up honestly reminds me of setting up AWS, just with less risk of suddenly and surprisingly becoming a broke hobo by selecting confusing option #27 instead of #88.

I set up a new google project, tied it to my company's account (I think?), and then set up a service account for it, with probably the right permissions.

After downloading its creds, figuring out how to actually use them took another few hours. Did I mention there's no Ruby documentation for this? There's plenty of Python and Java example code, but since they use very different implementations, it's almost pointless to read them. At best they give me a vague idea of what my next step might be.

I ended up reading through the code of google's auth gem instead because I couldn't find anything useful online. Maybe it's actually there and the past several days have been one of those weeks where nothing ever works? idk :/

But anyway. I read through their code, and while it's actually not awful, it has some odd organization and a few very peculiar param names. Figuring out what data to pass, and how said data gets used requires some file-hopping. e.g. `json_data_io` wants a file handle, not the data itself. This is going to cause me headaches later since the data will be in the database, not the filesystem. I guess I can write a monkeypatch? or fork their gem? :/
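One common way around an API that insists on a file handle is an in-memory file object rather than a monkeypatch or a fork. The sketch below shows the idea in Python with a stand-in function (`load_credentials` and the key contents are invented); Ruby's standard library has a `StringIO` class that plays the same role, though whether the gem accepts one is an assumption to verify.

```python
import io
import json


def load_credentials(key_io):
    """Stand-in for a library call that only accepts a readable file-like object."""
    return json.load(key_io)


# Pretend this JSON text came out of a database column instead of a file on disk.
stored_key = '{"client_email": "svc@example.invalid", "private_key_id": "abc123"}'

# Wrap the string so it quacks like an open file.
creds = load_credentials(io.StringIO(stored_key))
```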

But I digress. I finally managed to set everything up, fix the bugs with my code, and I'm ready to see what `service.create_spreadsheet()` returns. (now that it has positively valid and correctly-implemented authentication! Finally! Woo!)

I open the console... set up the auth... and give it a try.

... six seconds pass ...

... another two seconds pass ...

... annnd I get a lovely "unauthorized" response.

asjdlkagjdsk.

> Pic related.

-

Paypal Rant #2

Paypal might just be the only company with 98% of their employees being support staff because not a soul on this planet knows how to work with that fucking piece of shit of a company's service.

No really, if there was a shittiness-rating from 1-10 (10 = worst) you would have to store paypal's rating as a string or invent a new data type because no CPU could fucking work with such a big ass fucking number.

If I had to choose between Paypal and going back to manually trading physical goods/animals for stuff I would gladly choose the latter, because Paypal, go suck a bag of dicks you useless fucking shitpile of a "company".

-

Do you really expect that I can debug in a few minutes, a part of the software that I didn't build and have never seen before and have no knowledge of the external, third-party web service that code is reaching out to?

Dude, flippin' chill, take a walk, grab a drink, pop some popcorn and give me some time to figure out what the hell this code is doing so I can properly debug it!

You know what it turned out to be? Wrong test data used for the 3rd party service. So in essence... Nothing was wrong! Frickity frack!

-

Start a development job.

Boss: "let's start you off with something very easy. There's this third party we need data from. They have an api, just get the data and place it on our messaging bus."

Me: "sure, sounds easy enough"

Third party api turns out to have the most retarded conversation protocol. With us needing a service to receive data on while also having a client to register for the service. With a lot of timed actions like, 'send this message every five minutes' and 'check whether our last message was sent more than 11 minutes ago'.

Due to us needing a service, we also need special permissions through the company firewall. So I have to go around the company to get these permissions, FOR EVERY DATA STREAM WE NEED!

But the worst of it all is... This whole api is SOAP based!!

Also, Hey DevRant!

-

For a week+ I've been listening to a senior dev ("Bob") continually make fun of another not-quite-a-senior dev ("Tom") over a performance bug in his code. "If he did it right the first time...", "Tom refuses to write tests...that's his problem", "I would have wrote the code correctly ..." all kinds of passive-aggressive put downs. Bob then brags how without him helping Tom, the application would have been a failure (really building himself up).

Bob is out of town and Tom asked me a question about logging performance data in his code. I look and see Bob has done nothing..nothing at all to help Tom. Tom wrote his own JSON and XML parser (data is coming from two different sources) and all kinds of IO stream plumbing code.

I used Visual Studio's feature to create classes from JSON/XML, then used the XmlSerializer and Newtonsoft.Json to handle the conversion plumbing.

With several hundred lines gone (down to one line each for the XML/JSON-> object), I wrote unit tests around the business transaction, and integration tests for the service and database access. Maybe a couple of hours' worth of work.
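The story is .NET, but the same "let the library do the plumbing" move can be sketched in Python for illustration. The `Order` class, its fields and the sample documents are invented; the parsing relies only on the standard `json` and `xml.etree.ElementTree` modules.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class Order:
    """Hypothetical business object fed by both data sources."""
    order_id: int
    customer: str
    total: float


def order_from_json(text):
    """Build an Order from a JSON document."""
    data = json.loads(text)
    return Order(int(data["order_id"]), data["customer"], float(data["total"]))


def order_from_xml(text):
    """Build an Order from an XML document with matching element names."""
    root = ET.fromstring(text)
    return Order(
        int(root.findtext("order_id")),
        root.findtext("customer"),
        float(root.findtext("total")),
    )


json_order = order_from_json('{"order_id": 42, "customer": "ACME", "total": 99.5}')
xml_order = order_from_xml(
    "<order><order_id>42</order_id><customer>ACME</customer><total>99.5</total></order>"
)
```

Both sources end up as the same typed object, so the tests around the business logic don't need to care where the data came from.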

I'm 100% sure Bob knew Tom was going in a bad direction (maybe even pushing him that direction), just to swoop in and "save the day" in front of Tom's manager at some future point in time.

This morning's standup ..

Boss: "You're helping Tom since Bob is on vacation? What are you helping with?"

Me: "I refactored the JSON and XML data access, wrote initial unit and integration tests. Tom will have to verify, but I believe any performance problem will now be isolated to the database integration. The problem Bob was talking about on Monday is gone. I thought spending time helping Tom was better than making fun of him."

<couple seconds of silence>

Boss:"Yea...want to let you know, I really, really appreciate that."

Bob, put people first, everyone wins.

-

National Health Service (nhs) in the UK got hacked today... Workers at the hospitals could not access patient and appointment related data... How big a cheapskate you gotta be to hack a free public health service that is almost dying for fund shortages anyway...

-

Yesterday the web site started logging an exception “A task was canceled” when making an HTTP call using the .NET HttpClient class (site calling a REST service).

Emails back n’ forth ..blaming the database…blaming the network..then a senior web developer blamed the logging (the system I’m responsible for).

Under the hood, the logger sends the exception data to another REST service (which sends emails, generates reports etc.). I had to quickly redirect the discussion: if we’re seeing the exception email, the logging didn’t cause the exception, it’s just reporting it. Felt a little sad having to explain it to other IT professionals, but everyone seemed to agree and focused on the server resources.

Last night I get a call about the exceptions occurring again in much larger numbers (from 100 to over 5,000 within a few minutes). I log in, add myself to the large skype group chat going on just to catch the same senior web developer say …

“Here is the APM data that shows logging is causing the http tasks to get canceled.”

FRACK!

Me: “No, that data just shows the logging http traffic of the exception. The exception is occurring before any logging is executed. The task is either being canceled due to a network time out or IIS is running out of threads. The web site is failing to execute the http call to the REST service.”

Several other devs, DBAs, and network admins agree.

The errors only lasted a couple of minutes (exactly 2 minutes, which seemed odd), so everyone agrees to dig into the data further in the morning.

This morning I login to my computer to discover the error(s) occurred again at 6:20AM and an email from the senior web developer saying we (my mgr, her mgr, network admins, DBAs, etc) need to discuss changes to the logging system to prevent this problem from negatively affecting the customer experience...blah blah blah.

FRACKing female dog!

Good news is we never had the meeting. When the senior web dev manager came in, he cancelled the meeting.

Turned out to be a hiccup in a domain controller causing the servers to lose their connection to each other for 2 minutes (1-minute timeout, 1 minute to fully re-sync). The exact two-minute burst of errors explained (and proven via wireshark).

People and their petty office politics piss me off.

-

Uploaded an app to the App Store and it was rejected because the Gender dropdown at registration only has "Male" and "Female" as required selectable options. The reviewer thought it was right to force the inclusion of an "Other" option inside a Medical Service app that is targeting a single country which also recognizes only Male/Female as gender.

Annoyed, I wrote back a dispute on the review:

Hello,

I have read your inclusion request and you really shouldn't be doing this. Our app is a Medical Service app and the Gender option can only be either Male or Female based on platform design, app functionality and data accuracy. We are also targeting *country_name* that recognizes only Male/Female gender. Please reconsider this review.

{{No reply after a week}}

-- Proceeds to include the option for "Other"

-- App got approved.

-- Behind the scene if you select the "other" option you are automatically tagged female.

Fuck yeah!

-

Most ignorant ask from a PM or client?

So, so many. How do I choose?

- Wanting to 'speed up' a web site that we did not own, in Sweden (they used a service I wrote). His 'benchmark' was counting "one Mississippi, two Mississippi" while the home page rendered on his home PC and < 1MB DSL connection (he lives in a rural area).

- Wanted to change the sort order of a column of a report so it 'sometimes' sorted on 'ABC' (alpha) or '123' (numeric) and sometimes, a mix of both. His justification was if he could put the data in the order he wanted in Excel, the computer should be smart enough to do the same thing.

- Wanted a Windows desktop application to run on an android.

- Wanted to write the interface to a new phone system that wasn't going to be installed for months. Even though we had access to the SDK, he didn't understand the SDK required access to the hardware. For several weeks he would send emails containing tutorials on interfacing with COM libraries (as if that was my problem).

- Wanted to write a new customer support application in XML. I told him I would have the application written tomorrow if he could tell me what XML stands for.

-

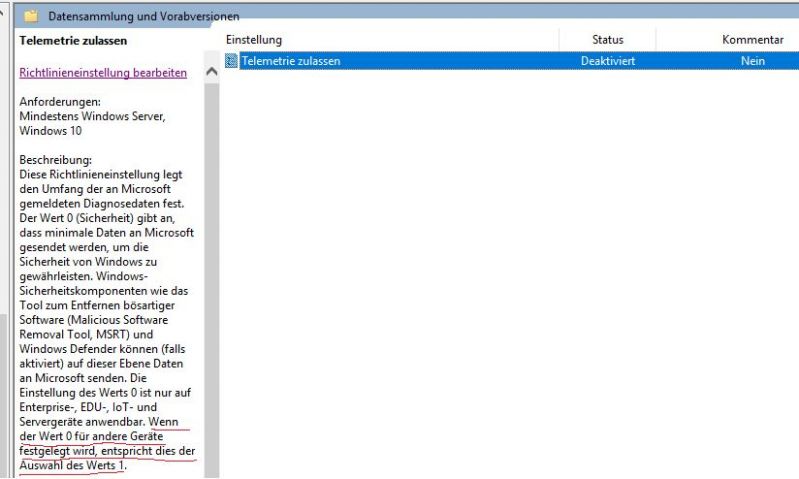

"Thank you for choosing Microsoft!"

No Microsoft, I really didn't choose you. This crappy hardware made you the inevitable, not a choice.

And like hell do I want to run your crappy shit OS. I tried to reset my PC, got all my programs removed (because that's obviously where the errors are, not the OS, right? Certified motherfuckers). Yet the shit still didn't get resolved even after a reset. Installing Windows freshly again, because "I chose this".

Give me a break, Microshaft. If it wasn't for your crappy OS, I would've gone to sleep hours ago. Yet me disabling your shitty telemetry brought this shit upon me, by blocking me from getting Insider updates just because I added a registry key and disabled a service. Just how much are you going to force data collection out of your "nothing to hide, nothing to fear" users, Microsoft?

Honestly, at this point I think that Microsoft under Ballmer might've been better. Because while Linux was apparently cancer back then, at least this shitty data collection for "a free OS" wasn't yet a thing back then.

My mother still runs Vista, an OS that reached EOL a few months ago. Last time she visited me I recommended that she switch to Windows 7, because it looks the same but is better in terms of performance and is still supported. She refused, because it might damage her configurations. Granted, her install is probably full of malware, but at this point I'm glad she did.

Even Windows 7 has telemetry forcibly enabled at this point. Vista may be unsupported, but at least it didn't fall victim to the current status quo - data mining on every Microshaft OS that's still supported.

Microsoft may have been shady ever since they pursued manufacturers into defaulting to their OS, and GPU manufacturers will probably also have been lobbied into supporting Windows exclusively. But this data mining shit? Not even the Ballmer era was as horrible as this. My mother may not realize it, but she unknowingly avoided it.

-

I fucking love my local phone service provider. They have a game (70mb) like Temple run where we get free mobile data for playing it. The 70 mb for downloading it gets reimbursed too !!

-

Sorry for being late, stuff came up in between!

I have done a few privacy rants/posts before but why not another one. @tahnik did one a few days ago so I thought I'd do a new one myself based on his rant.

So, online privacy. Some people say it's entirely dead, that's bullshit. It's up to an individual, though, how far they want to go as for protecting it.

I personally want to retain as much control over my data as possible (this seems to be a weird thing these days for unknown reasons...). That's why I spend quite some time/effort to take precautions, read myself into how to protect my data more and so on.

'Everyone should have the choice of what services they use' - fully agreed, no doubt about that.

I just find one thing problematic. Some services/companies handle data in a way, or have business models, that take away the control some people want over their data as soon as you communicate with someone using that service.

Some people (like me) don't want anything to do with google but even when I want to email my best fucking friend, I lose the control over that email data since he uses gmail.

So, when someone chooses to use gmail and I *HAVE* to email them, my choice is gone.

TO BE VERY CLEAR: I'm not blaming that on the users, I'm blaming that on the company/service.

Then for example, google analytics. It's a very good/powerful tool when you're solely looking at its functions.

I just don't want to be part of their data collection as I don't want to get any data into the google engine.

There's a solution for that: installing an addon in order to opt out.

I'm sorry, WHAT?! --> I <-- have to install an addon in order to opt out of something that is happening on my own motherfucking computer?! What the actual fuck, I don't call that a fucking solution. I'll use Privacy Badger + hosts files to block that instead.

Google vs 'privacy' friendly search engines - I don't trust DDG completely because their backend is closed/not available to the public but I'd rather use them than a search engine which is known to be integrated into PRISM/other surveillance engines by default.

I don't mind the existence of certain services, as long as they don't integrate you with data hungry companies/mass surveillance without you even using their services.

Now let's see how fast the comment section explodes!

-

Well that was a good little time off :)

Decided to go offline for a little and that was a good one, hello again.

Wrote a geoip service because I hate rate limits and such so fuck it, why not write one myself (data is not that accurate as it's free but quite alright if you ask me) (front end still fucking sucks).

So yes, that's it I guess :P

-

I would like to invite you all to test the project that a friend and I have been working on for a few months.

We aim to offer a fair, cheap and trustworthy alternative to proprietary services that perform data mining and sell information about you to other companies/entities.

Our goal is that users can (if they want) remain anonymous to us - because we are not interested in knowing who you are and what you do, like or want.

We also aim to offer a unique payment system that is fair and protects your integrity by letting you pay for the previous month, not for the next month. That way you do not have to pay for a service that you do not really like.

Please note that this is still Free Beta, and we need your valuable experience about the service and how we can improve it. We have no ETA when we will launch the full service, but with your help we can make that process faster.

With this service, we do want to offer the following for now:

Nextcloud with 50 GB storage, yes you can mount it as a drive in Linux :)

Calendar

Email Client that you can connect to your email service (

SearX Instance

Talk ( voice and video chat )

Mirror for various linux distros

We are using free software for our environment - KVM + CEPH on our own hardware in our own facility. That means that we have complete control over the hosting and combined with one of the best ISPs in the world - Bahnhof - we believe that we can offer something unique and/or be a complement to your current services if you want to have more control over your data.

Register at:

https://operationtulip.com

Feel free to use our mirror:

https://mirror.operationtulip.com

Please send your feedback to:

feedback@operationtulip.com

-

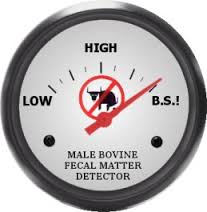

In our morning stand up, dev was bragging about how much code he was refactoring (like over-the-top bragging) and how much the changes will improve readability (WTF does that mean?), performance, blah blah blah. Boss was very impressed, I wasn't. This morning I looked at the change history and yes, he spent nearly two solid days changing code. What code? A service that is over 10 years old, hasn't been used in over 5, mostly auto-generated code (various data contracts from third party systems). He "re-wrote" the auto-generated code, "fixed" various IDisposable implementations and other complete wastes of time. How –bleep-ing needy are people for praise and how –bleep-ing stupid are people for believing such bull-bleep? I think I should get a t-shirt made with a picture of a BS-Meter and when he starts talking, “Wait a sec, I gotta change my shirt. OK…you were saying?”

-

Developer came to our area to rant a bit about a problem he was having with Xamarin. A particular android device was receiving a java runtime error trying to de-serialize data from a WCF contract. The fix he found was to drop WCF and use WebAPI (or a simple REST service that sends JSON back and forth).

When he proposed changing the service (since the data transport didn’t really matter, he could plug the assembly into a WebAPI project in less than an hour), the dev manager shot down the idea pointing him to our service standard that explicitly stated no WebAPI (it’s in bold letters).

I showed him the date on the “standard”, which was 5 years ago. We have versioning on our sharepoint server, so I also showed him my proposal notes on the change request document (almost two years before that) stating we should stop using WCF in favor of REST based web standards. Dev manager at the time had written in his comment “Will never use REST. Enterprise developers prefer RPC.”

He just about fell over laughing when I showed him this gif.

-

So today was the worst day of my whole (just started) career.

We have a huge client like 700k users. Two weeks ago we migrated all their services to our aws infrastructure. I basically did most of the work because I'm the most skilled in it (not sure anymore).

Today I discovered:

- Mail cron was configured the wrong way so 3000 emails were waiting to be sent.

- The elastic search service wasn't yet whitelisted so didn't work for two weeks.

- The cron which syncs data between production db and testing db only partly worked.

Just fucking end me. Makes me wonder what other things are broken. I still have a lot to learn... And I might have fucked their trust in me for a bit.

-

Unaware that this had been occurring for a while, DBA manager walks into our cube area:

DBAMgr-Scott: "DBA-Kelly told me you're still having problems connecting to the new staging servers?"

Dev-Carl: "Yea, still getting access denied. Same problem we've been having for a couple of weeks"

DBAMgr-Scott: "Damn it, I hate you. I got to have Kelly working with data warehouse project. I guess I've got to start working on fixing this problem."

Dev-Carl: "Ha ha..sorry. I've checked everything. It's definitely something on the sql server side."

DBAMgr-Scott: "I guess my day is shot. I've got to talk to the network admin, when I get back, lets put our heads together and figure this out."

<Scott leaves>

Me: "A permissions issue on staging? All my stuff is working fine and been working fine for a long while."

Dev-Carl: "Yea, there is nothing different about any of the other environments."

Me: "That doesn't sound right. What's the error?"

Dev-Carl: "Permissions"

Me: "No, the actual exception, never mind, I'll look it up in Splunk."

<in about 30 seconds, I find the actual exception, Win32Exception: Access is denied in OpenSqlFileStream, a little google-fu and .. >

Me: "Is the service using Windows authentication or SQL authentication?"

Dev-Carl: "SQL authentication."

Me: "Switch it to windows authentication"

<Dev-Carl changes authentication...service works like a charm>

Dev-Carl: "OMG, it worked! We've been working on this problem for almost two weeks and it only took you 30 seconds."

Me: "Now that it works, and the service had been working, what changed?"

Dev-Carl: "Oh..look at that, Dev-Jake changed the connection string two weeks ago. Weird. Thanks for your help."

<My brain is screaming "YOU NEVER THOUGHT TO LOOK FOR WHAT CHANGED!!!">

Me: "I'm happy I could help."

-

Our Service Oriented Architecture team is writing very next-level things, such as JSON services that pass data like this:

<JSON>

<Data>

...

</Data>

</JSON>

-

Couple days ago found the DisneyResearch channel again and it's really addictive, impressive and scary to watch, here's an example: https://youtu.be/E4tYpXVTjxA

wonder how much data could be extracted from disney world/land visitors with that in the food service areas

-

[Dark Rant]

I'm sick of this stupid tech world.

Don't get me wrong, I love tech. I just can't stand anymore the global brainwashing that we're part of.

Think about all the huge companies making profit on our data. For a better service, yeah sure, but do we really understand what the cost is?

Ok sure, you don't care about your data because you trust these companies and the advantages are all worth it. What about the fact that we are all forced to buy the next new smartphone after 2 years?

As if removable batteries were a problem for us users. Or the audio jack. Because now someone decided that the pricey wireless headphones are Just What You Need™.

Do you think you own your smartphone?

No, you don't. You are paying a bunch of money for something that soon will be just a useless brick of glass and metal which you can't repair. But you'll be happy anyway.

Some people are happy to the point that they will defend their favorite company, no matter how it decides to stick it up their ass.

Open your eyes, you've been brainwashed.

-

So my friend was in a hurry when she was setting up the passcode for her phone and later she forgot the code.

So she takes it to the service center.

SC guy: Ma'am we have to do bla bla bla. And you will lose your data. It will cost you around $10.

She just came back and later gave me the phone.

*unlocks bootloader *

*flashes a custom recovery*

*delete passcode file*

Phone is now unlocked with all the data intact.

PS: I got a small treat at McDonald's. 😋

-

For fuck sake, stop complaining about the lack of privacy everywhere.

I'm not saying that worrying about your privacy is bad, I also really want to be protected and I know the risks we run when we put our information on the net, I care about my data, but please stop acting like whoever uses Google, Facebook or Windows is a fool and you're the only genius around.

Because guess what, I use their services and when I use them I'm explicitly authorizing them to process my data, to track me and to create a profile about me. It's an exchange, I know what they're doing and I have control over the data I'm serving them.

If, for some reason, I want to be more protected then I fucking use some open source hyper-safe alternative, and that's it.

Seriously, I'm happy if you use those fancy alternative services for everything (for your reasons, I don't care) and I'm glad if you decided not to use any closed source service anymore, but please, stop screaming at those who use them

-

Setup my port honeypot today finally, including port 22, then wrote a custom dashboard for some data tracking, feels great to have it open on my screen seeing the bans just roll in every 2 seconds of refresh, the highest hits are as expected from china, russia and india, also filed ~700 reports and already got 300 banned from their service. (mainly Microsoft Azure for whatever reason)

I wanted to first automate that (or at least report to various IP blacklists via API), but then I was afraid that one day I'd be stupid enough to somehow get banned - don't want myself to get reported lol

-

I just launched a small web service/app. I know this looks like a promo thing, but it's completely non-profit, open source and I'm only in it for the experience. So...

Introducing: https://gol.li

All this little app offers is a personal micro site that lists all your social network profiles. Basically share one link for all your different profiles. And yes, it includes DevRant of course. :)

There's also an iframe template for easy integration into other web apps and for the devs there's a super simple REST GET endpoint for inclusion of the data in your own apps.

The whole thing is on GitHub and I'd be more than happy for any kind of contribution. I'm looking forward to adding features like more personalization, optimizing stuff and fixing things. Also any suggestions on services you'd like to see. Pretty much anything that involves a public profile goes.

I know this isn't exactly world changing, but it's just a thing I wanted to do for some time now, getting my own little app out there.

-

Worked with a European consulting company to integrate some shared business data (aka. calling a service).

VP of IT called an emergency meeting (IT managers, network admins) deeply concerned about the performance of the international web site since adding our services.

VP: “The partner’s site is much slower than ours. Only common piece that could cause that is your service.”

Me: “Um, their site is vastly different than ours. I don’t think we can compare their performance to ours.”

VP: “Performance is #1! I need your service fixed ASAP!”

Me: “OK, but what exactly is slow? How did you measure their site? The servers are in Germany”

VP: “I measured performance from my house last night.”

Me: “Did you use an application?”

VP: “<laughs> oh no, I was at home. When I opened the page, I counted one Mississippi, two Mississippi, three Mississippi, then the page displayed.”

Me: “Wow…um…OK…uh…how long does our page take to load?”

VP: “Two Mississippi’s”

Me: “Um…wow…OK…wow…uh, no, we don’t measure performance like that, but I’ll work with our partners and develop a performance benchmark to determine if the shared service is behaving differently.”

VP: “Whatever it is, the service is slow. Bill, what do you think is slowing down the service?”

NetworkAdmin-Bill: “The Atlantic Ocean?”

VP got up and left the meeting.

-

@netikras since when does proprietary mean bad?

Lemme tell you 3 stories.

CISCO AnyConnect:

- come in to the office

- use internal resources (company newsletter, jira, etc.)

- connect to client's VPN using Cisco AnyConnect

- lose access to my company resources, because AnyConnect overwrites routing table (rather normal for VPN clients)

- issue a route command updating routing table so you could reach confluence page in the intranet

- route command executes successfully, `route -n` shows nothing has changed

- google this whole WTF case

- Cisco AnyConnect constantly overwrites OS routing table to ENFORCE you to use VPN settings and nothing else.

Sooo basically if you want to check your company's email, you have to disconnect from client's VPN, check email and reconnect again. Neat!

Can be easily resolved by using opensource VPN client -- openconnect

CISCO AnyConnect:

- get a server in your company

- connect it to client's VPN and keep the VPN running for data sync. VPN has to be UP at all times

- network glitch [uh-oh]

- VPN is no longer working, AnyConnect still believes everything is peachy. No reconnect attempts.

- service is unable to sync data w/ client's systems. Data gets outdated and eventually corrupted

OpenConnect (OSS alternative to AnyConnect) detects all network glitches, reports them to the log and attempts to reconnect immediately. Subsequent reconnect attempts are triggered with longer delays so as not to spam the network.
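The retry pattern described above (reconnect right away, then wait longer between attempts) is usually called exponential backoff. Here is a minimal Python sketch of the idea. It is not OpenConnect's actual code, and `backoff_delays` and all of its parameters are hypothetical names picked for illustration:

```python
from typing import Iterator


def backoff_delays(base: float = 1.0, factor: float = 2.0,
                   cap: float = 60.0, attempts: int = 6) -> Iterator[float]:
    """Yield the wait (in seconds) before each reconnect attempt.

    The first attempt is immediate; later waits grow geometrically and
    are clamped to `cap` so the gap between retries stays bounded.
    """
    delay = 0.0
    for _ in range(attempts):
        yield delay
        # After the immediate first try, start at `base` and keep growing.
        delay = base if delay == 0.0 else min(delay * factor, cap)


# Waits before each of the first six attempts.
print(list(backoff_delays()))
```

A real client would sleep for each yielded delay before retrying, and would typically add some random jitter so many clients don't reconnect in lockstep.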

SYMANTEC VIP (alleged 2FA?):

- client's portal requires Sym VIP otp code to log in

- open up a browser in your laptop

- navigate to the portal

- enter your credentials

- click on a Sym VIP icon in the systray

- write down the shown otp number

- log in

umm... in what fucking way is that a secure 2FA? Everything is IN the same fucking device, a single click away.

Can be easily solved by opensource alternatives to Sym VIP app: they make HTTP calls to Symantec to register a new token and return you the whole totp url. You can convert that url to a qr code and scan it w/ your phone (e.g. Google's Authenticator). Now you have a true 2FA.
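To show why any authenticator can take over once you have that totp url: the url carries a shared secret, and the code is just an HMAC over the current 30-second time step (RFC 6238). Below is a rough Python sketch using only the standard library. It assumes the token is plain HMAC-SHA1 TOTP with a Base32 `secret` parameter; the URL in the example is a made-up placeholder, not a real Symantec token:

```python
import base64
import hashlib
import hmac
import struct
import time
from urllib.parse import parse_qs, urlparse


def secret_from_otpauth(url: str) -> bytes:
    """Extract and Base32-decode the `secret` parameter of an otpauth:// URL."""
    query = parse_qs(urlparse(url).query)
    secret = query["secret"][0].upper()
    # Base32 input must be padded to a multiple of 8 characters.
    secret += "=" * (-len(secret) % 8)
    return base64.b32decode(secret)


def totp(key: bytes, now: float, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) for the Unix time `now`."""
    counter = struct.pack(">Q", int(now) // step)
    digest = hmac.new(key, counter, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation, as in RFC 4226
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


# Hypothetical URL; a real one comes out of the token registration step.
url = "otpauth://totp/VIP:example?secret=GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ"
print(totp(secret_from_otpauth(url), now=time.time()))
```

Since the phone and the laptop only need to share the secret and a roughly correct clock, the code generator can live on a separate device, which is the whole point of the second factor.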

Proprietary is not always bad. There are good propr sw too. But the ones that are core to your BAU and are doing shit -- well these ARE bad. and w/o an opportunity to workaround/fix it yourself.

-

I've optimised so many things in my time I can't remember most of them.

Most recently, something had to be the equivalent of `"literal" LIKE column` with a million rows to compare. It would take around a second on average to look up each literal, for a service that needs to be high load and low latency. This isn't an easy case to optimise, many people would consider it impossible.

It took me a couple of hours to reverse engineer the data and write a few-hundred-line implementation that would look it up in 1ms on average, with the worst possible case being very rare and not too distant from this.
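For anyone unfamiliar with the "reversed" LIKE mentioned above: the patterns live in the column and the literal is the thing being tested, so an ordinary index on the column can't narrow the search and every row has to be checked. A small sketch with Python's built-in `sqlite3` illustrates the query shape only. The table and column names are hypothetical, and this does not reproduce the optimised lookup from the rant:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE rules (pattern TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO rules (pattern) VALUES (?)",
    [("foo%",), ("%bar",), ("f_o",), ("baz",)],
)


def matching_patterns(literal: str) -> list[str]:
    """Return every stored LIKE pattern that the given literal matches."""
    rows = conn.execute(
        # The literal is on the left, the column supplies the pattern.
        "SELECT pattern FROM rules WHERE ? LIKE pattern ORDER BY pattern",
        (literal,),
    )
    return [row[0] for row in rows]


print(matching_patterns("foobar"))  # matches both 'foo%' and '%bar'
```

With a million stored patterns this is a full scan per lookup, which is roughly where the one-second figure in the rant would come from.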

In another case there was a lookup of arbitrary time spans that most people would not bother to cache because the input parameters are too short lived and variable to make a difference. I replaced the 50000+ line application acting as a middle man between the application and database with 500 lines of code that did the look up faster and was able to implement a reasonable caching strategy. This dropped resource consumption by at least a factor of ten. Misses were cheaper and it was able to cache most cases. It also involved modifying the client library in C to stop it unnecessarily wrapping primitives in objects for the high level language, which was causing it to consume excessive amounts of memory when processing huge data streams.

Another system would download a huge data set for every point of sale constantly, then parse and apply it. It had to reflect changes quickly but would download the whole dataset, containing hundreds of thousands of rows, each time. I whipped up a system so that a single server (barring redundancy) would download it in a loop, parse it using C which was much faster than the traditional interpreted language, then use a custom data differential format, TCP data streaming protocol, binary serialisation and LZMA compression to pipe it down to points of sale. This protocol also used versioning for catchup and differential combination for additional reduction in size. It went from being 30 seconds to a few minutes behind to being able to keep up to within a second of changes. The old system was also using so much bandwidth that it would reach the limit on ADSL connections then get throttled. I looked at the traffic stats after and it dropped from dozens of terabytes a month to around a gigabyte or so a month for several hundred machines. From the drop in the graphs you'd think all the machines had been turned off. It could now happily run over GPRS or 56K.
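The core of that approach (ship only versioned differences, compressed) can be sketched in a few lines of Python. This is an illustration under loud assumptions, not the system from the rant: it uses JSON instead of a custom binary format, leaves out the TCP streaming and the combining of deltas, and every function name and the sample data are made up:

```python
import json
import lzma


def make_delta(old: dict, new: dict) -> dict:
    """Describe how to turn `old` into `new`: changed/added rows and removed keys."""
    upserts = {k: v for k, v in new.items() if k not in old or old[k] != v}
    deletes = [k for k in old if k not in new]
    return {"upserts": upserts, "deletes": deletes}


def encode(delta: dict, from_version: int, to_version: int) -> bytes:
    """Tag the delta with the versions it bridges and LZMA-compress it."""
    payload = {"from": from_version, "to": to_version, **delta}
    return lzma.compress(json.dumps(payload, sort_keys=True).encode("utf-8"))


def apply_delta(current: dict, current_version: int, blob: bytes):
    """Apply a compressed delta; refuse it if it was built for another version."""
    payload = json.loads(lzma.decompress(blob))
    if payload["from"] != current_version:
        raise ValueError("delta does not apply to this version; catch-up needed")
    updated = {k: v for k, v in current.items() if k not in payload["deletes"]}
    updated.update(payload["upserts"])
    return updated, payload["to"]


# Hypothetical price lists keyed by SKU.
v1 = {"sku-1": 9.99, "sku-2": 4.50, "sku-3": 1.25}
v2 = {"sku-1": 9.99, "sku-2": 4.75, "sku-4": 2.00}
blob = encode(make_delta(v1, v2), from_version=1, to_version=2)
state, version = apply_delta(v1, 1, blob)
print(state == v2, version)
```

The version check is what makes catch-up possible: a point of sale that missed updates can tell the server which version it holds and be sent the deltas it needs, instead of the full dataset.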

I was working on a project with a lot of data and noticed these huge tables and horrible queries. The tables were all the results of queries. Someone wrote terrible SQL, then to optimise it ran it in the background with all possible variable values and stored the results of joins and aggregates in new tables. On top of those tables they wrote more SQL. I wrote some new queries and query generation that wiped out thousands of lines of code immediately and operated on the original tables, taking things down from 30GB and rapidly climbing to a couple of GB.

Another time a piece of mathematics had to generate all possible permutations and the existing solution was factorial. I worked out how to optimise it to run in n*n, which believe it or not made a world of difference. Went from hardly handling anything to handling anything thrown at it. It was nice trying to get people to "freeze the system now".

I built my own frontend systems (admittedly rushed) that do what angular/react/vue aim for but with higher (maximum) performance, including an in-memory database to back the UI that had layered event-driven indexes and could handle referential integrity (an overlay on the database only revealing items with valid integrity) or reordering and repositioning events very rapidly using a custom AVL tree. You could layer indexes over it (data inheritance) that could be partial and dynamic.

So many times have I optimised things on automatic just cleaning up code normally. Hundreds, thousands of optimisations. It's what makes my clock tick.

-

Fuck Optimizely.

Not because the software/service itself is inherently bad, or because I don't see any value in A/B testing.

It's because every company which starts using quantitative user research, stops using qualitative user research.

Suddenly it's all about being data driven.

Which means you end up with a website with bright red blinking BUY buttons, labels which tell you that you must convert to the brand cult within 30 seconds or someone else will steal away the limited supply, and email campaigns which promise free heroin with every order.

For long term brand loyalty you need a holistic, polished experience, which requires a vision based on aesthetics and gut feelings -- not hard data.

A/B testing, when used as some kind of holy grail, causes product fragmentation. There's a strong bias towards immediate conversions while long term churn is underrepresented.

The result of an A/B test is never "well, our sales increased since we started offering free heroin with every sale, but all of our clients die after 6 months so our yearly revenue is down -- so maybe we should offer free LSD instead"

-

"Ad targeters are pulling data from your browser’s password manager"

---

Well, fuck.

"It won't be easy to fix, but it's worth doing"

Just check for visibility, or do it the way other password managers handle it, iirc: assign a unique identifier based on form content and fill that identifier only.

---

"Nearly every web browser now comes with a password manager tool, a lightweight version of the same service offered by plugins like LastPass and 1Password. But according to new research from Princeton's Center for Information Technology Policy, those same managers are being exploited as a way to track users from site to site.

The researchers examined two different scripts — AdThink and OnAudience — both of which are designed to get identifiable information out of browser-based password managers. The scripts work by injecting invisible login forms in the background of the webpage and scooping up whatever the browsers autofill into the available slots. That information can then be used as a persistent ID to track users from page to page, a potentially valuable tool in targeting advertising."

Source: https://theverge.com/2017/12/...

-

"We use WSDL and SOAP to provide data APIs"

- Old-fashioned but ok, gimme the service def file

(The WSDL services definition file describes like 20 services)

- Cool, I see several services. I need those X data entities.

"Those will all be available through the Data service endpoint"

- What you mean "all entities in the same endpoint"? It is a WSDL, the whole point is having self-documented APIs for each entity format!

"No, you have a parameter to set the name of the data entity you want, and each entity will have its own format when the service return it"

- WTF you need the WSDL for if you will have a single service for everything?!?

"It is the way we have always done things"

Certain companies are some outdated-ass backwater tech wannabees.

Usually those that have dominated the market of an entire country since the fucking Perestroika.

The moment I turn on the data pipeline, those fuckers are gonna be overloaded into oblivion. I brought popcorn.

-

So I've decided to go about converting a Java project that I've been working on to Kotlin a little bit at a time. I started out with basic entity classes converting them to simple `data class`es in Kotlin.

Eventually, I got to my first beast of a class to refactor. This class had over 40 service classes depending on it, so even a little hiccup would throw everything into chaos.

I finish all of the changes on all of the dependent classes, update the tests, and the configurations (as necessary), and I was finally ready to spin up the app to test for any breaking changes I may have introduced...

Well - I broke everything! But I was sure I couldn't have! So what the hell happened?

Turns out that as I was building my project with a Gradle watch, at one point something failed to compile, which threw an unhandled exception in the gradle daemon that was never reported.

So when I tried to run my app, gradle would continually re-throw the error in the app I asked it to run...

After turning the daemon off and on again, the app worked like a charm.

-

I just tried to sign up to Instagram. I made a big mistake.

First up with Facebook related stuff is data. Data, data and more data. Initially when you sign up (with a new account, not login with Facebook) you're asked your real name, email address and phone number. And finally the username you'd like to have on the service. I gave them a phone number that I actually own, that is in my iPhone, my daily driver right now (and yes I have 3 Androids which all run custom ROMs, hold your keyboards). The email address is a usual for me, instagram at my domain. I am a postmaster after all, and my mail server is a catch-all one. For a setup like that, this is perfectly reasonable. And here it's no different, devrant at my domain. On Facebook even, I use fb at my domain. I'm sure you're starting to see a pattern here. And on Facebook the username, real name and email domain are actually the same.

So I signed up, with - as far as I'm aware - perfectly valid data. I submitted the data and was told that someone at Instagram will review the data within 24 hours. That's already pretty dystopian to me. It is not how you block bots. It is not how Facebook does it either, at least since last time I checked. But whatever. You'd imagine that regardless of the result, they'd let you know. Cool, you're in, or sorry, you're rejected and here's why. Nope.

Fast-forward to today when I recalled that I wanted to sign up to Instagram to see my girlfriend's pictures. So I opened Chromium again that I already use only for the rancid Facebook shit.. and it was rejected. Apparently the mere act of signing up is a Terms of Service violation. I have read them. I do not know which section I have violated with the heinous act of signing up. But I do have a hunch.

Many times now have I been told by ignorant organizations that I would be "stealing" their intellectual property, or business assets or whatever, just because I sent them an email from their name on my domain. It is fucking retarded. That is MY domain, not yours. Learn how email works before you go educate a postmaster. Always funny to tell them how that works. But I think that in this case, that is what happened.

So I appealed it, using a random link to something on Instagram's help section from a third-party blog. You know it's good when the third-party random blog is better. But I found the form and filled it in. Same shit all over again for info, prefilling be damned I guess. Minor convenience though, whatever.

I get sent an email in German, because apparently browsing through a VPS in Germany acting as a VPN means you're German. Whatever... After translating it, I found that it asks me to upload a picture of myself, holding a paper in my hands, on which I would have a confirmation code, my username, and my email address.. all hand-written. It must not be too dark, it must be clear, it must be in JPEG.. look, I just wanted to fucking sign up.

I sent them an email back asking them to fix all of this. While I was writing it and this rant, I thought to myself that they can shove that piece of paper up their ass. In fact I would gladly do it for them.

Long story short, do not use Instagram. And one final thing I have gripes with every time. You are not being told all the data you'll have to present from the get-go. You're not being told the process. Initially I thought it'd just be email, phone, username, and real name. Once signed up (instantly, not within 24 hours!) I would start setting up my account and adding a profile picture. The right way to ask for a picture of me! And just do it at my own pace, as I please.

And for God's sake, tackle abuse when it actually happens. You'll find out who's a bot and who isn't by their usage patterns soon enough. Do not do any of this at sign-up. Or hell, use a CAPTCHA or whatever, I don't fucking care. There's so many millions of ways to skin this cat.

Facebook and especially Instagram. Both of them are fucking retarded.

-

Okay, that right there is pathetic https://thehackernews.com/2019/02/... .

First of all, Telekom was not able to assure their clients' safety so that some Joe would not access them.

Second of all, after a friendly warning and pointing a finger to the exact problem, Telekom booted the guy out.

Thirdly, Telekom took a defensive position claiming "naah, we're all good, we don't need security. We'll just report any breaches to police hence no data will be leaked nor altered" which I can't decide whether is moronic or idiotic.

Come on boys and girls... If some chap offers a friendly hand by pointing where you've made a mistake - fix the mistake, not the boy. And for fuck's sake, say THANK YOU to the good lad. He could use his findings for his own benefit, to destroy your service or even worse -- sell that knowledge on black market where fuck knows what these twisted minds could have done with it. Instead he came to your door saying "Hey folks, I think you could do better here and there. I am your customer and I'd love you to fix those bugzies, 'cuz I'd like to feel my data is safe with you".

How on earth could corporations be that shortsighted... Behaviour like this is an immediate red flag for me, shouting out loud "we are not safe, do not have any business with us unless you want your data to be leaked or secretly altered".

Yeah, I know, computer misuse act, etc. But there are people who do not give a tiny rat's ass about rules and laws and will find a way to do what they do without a trace back to them. Bad boys with bad intentions and black hoodies behind TOR will not be punished. The good guys, on the other hand, will.

Where's the fucking logic in that...

P.S. It made me think... why wouldn't they want any security vulns reported to them? Why would they prefer to keep it unsafe? Is it intentional? For some special "clients"? Gosh that stinks

-

[Begin Rant] When you show your senior manager your REST Web Service and he says "Oh no nooo... I don't wanna see no code"... Me: Code?? That ain't code you fat silly fucker it's the command line output data which I spent a week parsing, batch processing, and storing into the database! [End Rant] :[

-

I once found a MongoDB cluster open to the internet with no authentication with nearly a terabyte of data that backed a CRM service whose customers included Microsoft and Adobe to name a few.

-

Me - Yeah great so you say it's big data we are gonna be analyzing and having to store, are you currently utilizing a service and aggregating any of it into smaller manageable segments?

Client - well yeah it's lots and lots of data, we can share it with you if you sign a nda.

Me - ok... sure, how are you gonna share it with me.

Client - oh I can email you the spreadsheet.

Me - .... Spreadsheet ... Um... Ok... 'Stands up and walks away to tell this as the most interesting meeting of the month, to someone that will get it'

--

Buzzword for the win!

-

<just got out of this meeting>

Mgr: “Can we log the messages coming from the services?”

Me: “Absolutely, but it could be a lot of network traffic and create a lot of noise. I’m not sure if our current logging infrastructure is the right fit for this.”

Senior Dev: “We could use Log4Net. That will take care of the logging.”

Mgr: “Log4Net?…Yea…I’ve heard of it…Great, make it happen.”

Me: “Um…Log4Net is just the client library, I’m talking about the back-end, where the data is logged. For this issue, we want to make sure the data we’re logging is as concise as possible. We don’t want to cause a bottleneck inside the service logging informational messages.”

Mgr: “Oh, no, absolutely not, but I don’t know the right answer, which is why I’ll let you two figure it out.”

Senior Dev: “Log4Net will take care of any threading issues we have with logging. It’ll work.”

Me: “Um..I’m sure…but we need to figure out what we need to log before we decide how we’re logging it.”

Senior Dev: “Yea, but if we log to SQL database, it will scale just fine.”

Mgr: “A SQL database? For logging? That seems excessive.”

Senior Dev: “No, not really. Log4Net takes care of all the details.”

Me: “That’s not going to happen. We’re not going to set up an entire sql database infrastructure to log data.”

Senior Dev: “Yea…probably right. We could use ElasticSearch or even Redis. Those are lightweight.”

Mgr: “Oh..yea…I’ve heard good things about Redis.”

Senior Dev: “Yea, and it runs on Linux and Linux is free.”

Mgr: “I like free, but I’m late for another meeting…you guys figure it out and let me know.”

<mgr leaves>

Me: “So..Linux…um…know anything about administrating Redis on Linux?”

Senior Dev: ”Oh no…not a clue.”

It was all I could do to keep from doing physical harm to another human being.

I really hate people playing buzzword bingo with projects I’m responsible for.

Only good piece is he’s not changing any of the code.

-

While trying to integrate a third-party service:

Their Android SDK accepts almost anything as a UID, even floats and doubles. Which is odd, who uses those as UIDs? I pass an Integer instead. No errors. Seems like it's working. User shows up on their dashboard.

Next let's move on to using their data import API. Plug in everything just like I did on mobile. Whoa, got an error. "UIDs must be a string". What. Uh, but the SDK accepts everything with no error. Ok fine. Change both the SDK and API calls to pass the UID as a string. No errors returned after changing the UIDs.

Check dashboard for user via UID. Uh, properties haven't been updating. Check search properties. Find out that UIDs can only be looked up as Integers. What? Why do you ask me to send it as a string via the API then? Contact support. Find out it created two distinct records with the UID, one as a string and the other as an Integer.
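For anyone hitting the same thing, one way to avoid the duplicate-record trap is to force every UID through a single canonical form before it leaves your own code, no matter what the vendor's SDK or API will tolerate. A minimal sketch, assuming string is the canonical form (as the import API demanded); `normalize_uid` is a made-up helper, not part of any vendor SDK:

```python
def normalize_uid(uid) -> str:
    """Return one canonical string form for a user ID (hypothetical helper)."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(uid, bool):
        raise TypeError("bool is not a valid UID")
    if isinstance(uid, float):
        # Accept 42.0 but refuse 42.5, which cannot be a sane ID.
        if not uid.is_integer():
            raise ValueError(f"non-integral float UID: {uid!r}")
        uid = int(uid)
    if isinstance(uid, int):
        return str(uid)
    if isinstance(uid, str):
        cleaned = uid.strip()
        if not cleaned:
            raise ValueError("empty UID")
        return cleaned
    raise TypeError(f"unsupported UID type: {type(uid).__name__}")


# Every call site (mobile SDK wrapper, import job) sends the same value.
print(normalize_uid(42), normalize_uid("42"), normalize_uid(42.0))
```

This only guarantees that your side is consistent; it cannot fix a backend that treats the string and the integer as two different users.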

GFG.

-

So I know most of you got some kind of hate for Facebook and Zuckerberg (aka Z U C C) now, but ffs, watching some of the highlights of this congress-thing that went on makes me more or less feel sympathy for him and his idea, even tho I know he wants to achieve exactly this.

Some of the questions asked can suck a big fucken data-dick. "How many Facebook Like-Buttons are there on Non-Facebook pages?", "How many data-categories do you gather?", "How do you sustain a business model and stay free?" - DUDE WHAT IN HEAVENS NAME?? And they ask that shit so serious and so "now-i'm-going-to-bust-you"-esque, but actually the question is just plain stupid and shows how the questioning side has no clue about the shit.

My point of view is that people decided to have an online life and have to take what it does. Having a smartphone with a Facebook service installed (owning an account or not) is enough to track your location, stored under your IMEI or some shit like that. They may not even go that far but that's just my opinion.

If you are online everything can see you and use you that way. Borders are a fictitious thing. A dude in Czechia can easily shoot you when you're on the German side of the border between those two countries. And still we gave up on walls...:p

Welcome to a world which is ruled by dumbass people where nerds who just want to have some fun need to defend themselves because the people up there don't know a single shit.

-

So my client is (was) paying 3500$~ a month to that service that also has an API and we have now been fighting at least 2 months for them to raise the rate limit. (because the new features pull in a lot more records, to basically make their shitty old dashboard obsolete at some point)

He's even willing to pay more, but the ticket and calls just get thrown around from one level to another. When he threatened to quit, all they changed was to send him to another level that was suggesting 3 months 10% off and when he declined it just got thrown into the pool again lol

So what we ended up doing is register his wife on the same service (there aren't really any alternatives that actually have all that weird shit he needs and his wife was co-owner anyway, so it was just a name change basically), but just tick the higher API rate limit and it worked, he's now quitting the old one.

What's funny though, the new contract for the same thing he was paying for costs just ~2450$ (would have been even less, but he's too clingy on that one page I can't recreate without having the data) so they just lost that revenue, just because they didn't want to raise the API rate limit. The client also decided to give me the difference of one month on top of my contract, once the new contract kicks in and the old one expires in 6ish days (at best) or 12ish days at worst

well done support and assigned engineers, not only did you just lose a client with an old contract paying you 12000$/year more, but you also gave me a great free boost in money lol

btw: I hope I put everything in again, I this time decided to be brave (read as "stupid") and wrote it in the devrant webapp, then accidentally clicked twice outside the borders, making everything disappear.. -

*Working on a project with boss, I am working on a mobile app, he is working on web service app.

Me: this service takes user id as parameter to get all account details (all other web services are like that)

Boss: yes, I use the id to filter the data.

Me: but this way, anyone who has the id can do anything! Why don't we use a session token?

Boss: this is a detail, it is not important !

Me:...

*7 years of experience my ass
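For the record, the difference is small in code and huge in practice. A rough sketch of what I was asking for, with in-memory dicts standing in for the real session store and database (`SESSIONS`, `ACCOUNTS`, `login` and `get_account` are all made-up names, not his actual service):

```python
import secrets

# Hypothetical stand-ins for a real session store and account table.
SESSIONS = {}  # token -> user_id
ACCOUNTS = {1: {"name": "alice"}, 2: {"name": "bob"}}


def login(user_id: int) -> str:
    """Issue an unguessable token after the user has authenticated."""
    token = secrets.token_urlsafe(32)
    SESSIONS[token] = user_id
    return token


def get_account(token: str) -> dict:
    """Resolve the caller from the token; never trust a client-sent user id."""
    user_id = SESSIONS.get(token)
    if user_id is None:
        raise PermissionError("invalid or expired session token")
    return ACCOUNTS[user_id]


token = login(1)
print(get_account(token))
```

With the id-as-parameter version, anyone can walk through ids 1, 2, 3... and read every account. With a token, guessing is not a realistic attack, and the server decides whose data comes back.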

-

Best code performance incr. I made?

Many, many years ago our scaling strategy was to throw hardware at performance problems. Hardware consisted of dedicated web server and backing SQL server box, so each site instance had two servers (and data replication processes in place)

Two servers turned into 4, 4 to 8, 8 to around 16 (don't remember exactly what we ended up with). With Windows Server and SQL Server licenses getting into the hundreds of thousands of dollars, the 'powers-that-be' were becoming very concerned with our IT budget. With our IT-VP and other web mgrs being hardware-centric, they simply shrugged and told the company that's just the way it is.

Taking it upon myself, I started looking into utilizing web services, caching data (Microsoft's Velocity at the time), and a service that returned product data, the bottleneck for most of the performance issues. Description, price, simple stuff. Testing the scaling with our dev environment, single web server and single backing sql server, the service was able to handle 10x the traffic with much better performance.
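The idea behind the product-data service was plain cache-aside: check the cache, and only hit SQL on a miss. A minimal sketch of that pattern, in Python rather than the .NET/Velocity stack we actually used; `TTLCache`, the loader function and the 60-second expiry are illustrative assumptions, not the real implementation:

```python
import time


class TTLCache:
    """Cache-aside with per-entry expiry (illustrative, single-process only)."""

    def __init__(self, loader, ttl_seconds=60.0, clock=time.monotonic):
        self._loader = loader      # called on a miss, e.g. a SQL query
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}           # key -> (expires_at, value)

    def get(self, key):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]        # fresh hit: no database round trip
        value = self._loader(key)  # miss or stale: go to the backing store
        self._store[key] = (now + self._ttl, value)
        return value


def load_product(product_id):
    # Hypothetical stand-in for the expensive product lookup.
    return {"id": product_id, "description": "widget", "price": 9.99}


products = TTLCache(load_product, ttl_seconds=60.0)
print(products.get("sku-1"))
```

Description and price change rarely, so even a short expiry takes nearly all of the read load off the database.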

Since the majority of the IT mgmt were hardware centric, they blew off the results saying my tests were contrived and my solution wouldn't work in 'the real world'. Not 100% wrong, I had no idea what would happen when real traffic would hit the site.

With our other hardware guys concerned the web hardware budget was tearing into everything else, they helped convince the 'powers-that-be' to give my idea a shot.

Fast forward a couple of months (lots of web code changes), early one morning we started slowly turning on the new framework (3 load balanced web service servers, 3 web servers, one sql server). 5 minutes...no issues, 10 minutes...no issues, an hour...everything is looking great. Then (A is a network admin)...

A: "Umm...guys...hardly any of the other web servers are being hit. The new servers are handling almost 100% of the traffic."

VP: "That can't be right. Something must be wrong with the load balancers. Rollback!"

A: "No, everything is fine. Load balancer is working and the performance spikes are coming from the old servers, not the new ones. Wow, this is awesome!"

<Web manager 'Stacey'>

Stacey: "We probably still need to rollback. We'll need to do a full analysis of why the performance improved and apply it to the current hardware setup."

A: "Page load times are now under 100 milliseconds from almost 3 seconds. Let's not rollback and see what happens."

Stacey: "I don't know, customers aren't used to such fast load times. They'll think something is wrong and go to a competitor. Rollback."

VP: "Agreed. We don't know why this is so fast. We'll need to replicate what is going on to the current architecture. Good try guys."

<later that day>

VP: "We've received hundreds of emails complimenting us on the web site performance this morning and upset that the site suddenly slowed down again. CEO got wind of these emails and instructed us to move forward with the new framework."

After full implementation, we were able to scale back to only a few web servers and a single sql server, saving an initial $300,000 and a potential future savings of over $500,000. Budget analysis considering other factors, over the next 7 years, this would save the company over a million dollars.

At the semi-annual company wide meeting, our VP made a speech.

VP: "I'd like to thank everyone for this hard fought journey to get our web site up to industry standards for the benefit of our customers and stakeholders. Most of all, I'd like to thank Stacey for all her effort in the design and implementation of the scaling solution. Great job Stacey!"

<hands her a blank white envelope, hmmm...wonder what was in it?>

A few devs who sat in front of me turn around, network guys to the right, all look at me with puzzled looks with one mouthing "WTF?"

-

The German constitutional court (BVerfG) declared many parts of the law regulating the German secret agency "Bundesnachrichtendienst" (Federal Intelligence Service; BND) unlawful and unconstitutional.

The key points: