Search - "k8s"

-

Sometimes it feels like I'm surrounded by idiots.

Got a Ticket:

Support: Please delete installation ABC from Server D.

Me: Checks everything. Installation is on Server E. Asks if this is correct?

Support: Just follow the instructions!

Me: Okey dokey. If you want me to be a hammer, the installation is a nail... Drop the database, remove all files, nuke the K8s resources.

Support: Why did you delete the installation ABC? You should have deleted XYZ!

Me: Cause the ticket said to delete ABC on Server D and YOU told me to follow your instructions!

Support: Yeah but we just reused an old ticket. We wanted XYZ deleted!

It's not a big deal, I can restore the shit, but I hate it when a day starts with this kind of shit!

-

We are moving to kubernetes.

Nothing much has changed except we now get to say

it works on my cluster.

instead of

it works on my machine.

-

Got laid off last week with the rest of the dev team, except one full stack Laravel dev. Investor money is drying up, and the clowns can't figure out how to sell what we have.

I was all of devops and cloud infra. Had a nice k8s cluster, all Terraform and GitOps. The only dev left is being asked to migrate all of it to Laravel Forge: 7 ML microservices, a monolith web app, HashiCorp Vault, Prefect, MLflow, Kubecost, Rancher, and some other random services.

The genius asked the dev to move everything to a single aws account and deploy publicly with Laravel forge... While adding more features. The VP of engineering just finished his 3 year plan for the 5 months of runway they have left.

I already have another job offer for 50k more a year. I'm out of here!

-

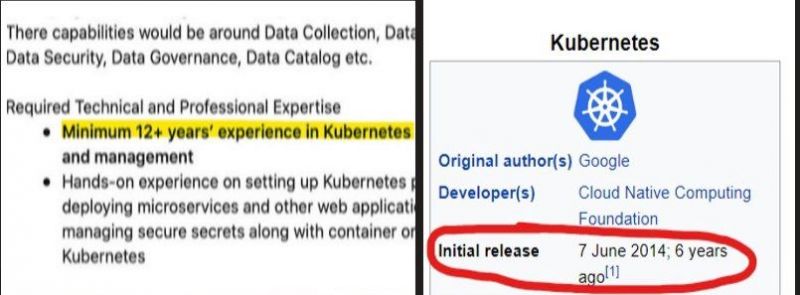

HR: you didn’t write in your job experience that you know kubernetes and we need people who know it.

Me: I wrote k8s

HR: What’s that ?

…

Do you know docker ?

Do you know what docker is ?

Do you use cloud ?

Can you read and write ?

Are you able to open the door with your left hand ?

What if we cut your hands and tell you to open the doors, how would you do that ?

What are your salary expectations?

Do you have questions, I can’t answer but I can forward them. Ask question, ask question, questions are important.

What is minimal wage you will agree to work ?

You wrote you worked with xy, are you comfortable with yx ?

We have fast hiring process consisting of 10 interviews, 5 coding assessments, 3 talks and finally you will meet the team and they will decide if you fit.

Why do you want to work … here ?

Why you want to work ?

How dare you want to work ?

Just find work, we’re happy you’re looking for it.

What databases you know ?

Do you know nosql databases ?

We need someone that knows a,b,c,d….x,y,z cause we use 1,2,3 … 9,10.

We need someone more senior in this technology cause we have more junior people.

Are you comfortable with big data?

We need someone who has spoken at a conference, cause that’s how we validate that people can speak.

I see you haven’t used xy for a while (I have 5 years of experience with xy); we need someone who is more of an expert in xy.

How many years of experience do you have in yz??? (you need to guess how many we want, cause we're looking for a fortune teller)

Not much has changed in job hunting. I'm taking my time to prepare for leetcode questions about graphs to get a job in which they will tell me to move a button 1px to the left.

Need to make up some stories about how I was a bad person at work and my boss was angry and told me to be better, so I became better and we lived happily ever after. How I argued with coworkers, but now I’m not arguing cause I can explain. How bad I was before and how good I am now. Cause you need to be a better person if you want to work in our happy creepy company.

Because you know… the tree of DOOM… The DOMs day.

-

To this day I can't figure out why people still drink the windows koolaid.

It's less secure, slower, bloatier (is that a word?), comes with ads, intrudes on privacy, etc. People say it's easier to use than Linux, but 99% of what anyone does happens in a Chrome-based web browser, which is the same on all systems!

When it comes to dev, it boggles the mind that people will virtualize a Linux kernel in Windows to use npm, docker, k8s, pip, composer, git, vim, etc. What is Windows doing for you but making your life more complicated? All your favorite browsers and IDEs work on Linux, and so will your commands out of the box.

Maybe an argument can be made for gaming, but that's a chicken-and-egg scenario. Games aren't built for Linux because the Linux market is too small to be worth supporting, not because the games wouldn't work on it...

-

Yes I totally care about what some dipshit at [insert conference] has to say, now let me in on my desktop that doesn't run anything that has anything to do with k8s pls

-

Fun day, lots of relief and catharsis!

Client I was wanting to fire has apparently decided that the long term support contract I knew was bullshit from go will instead be handled by IBM India and it's my job to train them in the "application." Having worked with this team (the majority of whom have been out of university for less than a year), I can say categorically that the best of them can barely manage to copy and paste jQuery examples from SO, so best of fucking luck.

I said, "great!," since I'd been planning on quitting anyways. I even handed them an SOW stating I would train them for 2 days on the application's design and structure, and included a rider they dutifully signed that stated, "design and structure will cover what is needed to maintain the application long term in terms of its basic routing, layout and any 'pages' that we have written for this application. The client acknowledges that 3rd party (non-[us]) documentation is available for the technologies used, but not written by [us], effective support of those platforms will devolve to their respective vendors on expiry of the current support contract."

Contract in hand, and client being too dumb to realize that their severing of the maintenance agreement voids their support contract, I can safely share what's not contractually covered:

- ReactiveX

- Stream based programming

- Angular 9

- Any of the APIs

- Dotnet core

- Purescript

- Kafka

- Spark

- Scala

- Redis

- K8s

- Postgres

- Mongo

- RabbitMQ

- Cassandra

- Cake

- pretty much anything not in a commit

I'm a little giddy just thinking about the massive world of hurt they've created for themselves. Couldn't have happened to nicer assholes.

-

There's this huuuge project I was a part of for half a year. I was kicked off along with a few dozen other devs (>60% of total manpower) a while ago for particular company reasons.

Now the remaining devs are oh-so-enjoying their time there..

1. workload has not changed

2. deadlines have not changed

3. no one will have Christmas-NewYear vacation

4. a new k8s-based infra is scheduled to roll out to PROD on Dec 23 (k8s is still far from ready - might need a few more months)

The most fun part is that it's not the client's mgmt who decided on #4 -- it's our own....

Boys.. Girls.. Save yourselves.

-

School gave me 3 DigitalOcean droplets to try out Kubernetes in the cloud, awesome!

Wrote an Ansible script to not only simply install docker and add users but also add kubernetes, nice!

Oh wait, error?! Well I should've known this wasn't going to be easy... ah well no problem. Let's see... Ansible is cryptic as always, it can't connect to the API server? Is it even running?

Let's ssh to the master, ah nothing is running, great. Let's try out kubeadm init and see what happens, oh gosh, my Docker version has not been validated! No problem, let's just downgrade!

How do I do that? Oh I know, change the version in the role! Wait, that version doesn't exist? Let's travel to Docker's website and see what versions of docker-ce exist, oh I see, it needs a subversion, no problem.

Oh that errors too? Wait then what... Oh I need a ~ and a ubuntu and a 0 somewhere, my mistake!

Let's run it again! Fails!

Same ssh process, oh wait...

Oh god no...

Kubernetes requires 2 cores and these things only have 1...

Welp, time to ask the teachers to resize my droplet by a small amount tomorrow, hopefully I'll get a new error!
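
The CPU surprise at the end of that story can be caught before `kubeadm init` ever runs. A minimal sketch, assuming the 2-CPU minimum that kubeadm's preflight check enforces for control-plane nodes; `MIN_CPUS` and `has_enough_cpus` are made-up names for illustration, not part of any tool:

```shell
# Minimum CPU count assumed for a kubeadm control-plane node.
MIN_CPUS=2

# Hypothetical helper: succeeds when the CPU count passed as $1 is large enough.
has_enough_cpus() {
  [ "$1" -ge "$MIN_CPUS" ]
}

# Example call on the droplet (nproc ships with GNU coreutils):
#   has_enough_cpus "$(nproc)" || echo "resize the droplet before running kubeadm init"
```

Dropping a check like this at the top of the Ansible role or a bootstrap script would fail fast instead of after the Docker version dance.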

----------------------------------------------

My adventure so far with Kubernetes. I'm not installing it for any serious/prod reason, just for educational purposes. K8s seems like 'endgame' to me, like one of the 'big guys' that big enterprises use so I'm eager to throw stuff at a droplet and see what happens.

Going further down the rabbit hole tomorrow!

Wish me luck :3

(And yes, I could've figured this all out beforehand with documentation, but this is more fun in my opinion)

-

This begs for a rant... [too bad I can't post actual screenshots :/ ]

Me: Hey k8s team! We're having trouble with our k8s cluster. After scaling up and running h/c and sanity tests, the environment was confirmed as healthy and stable. But once we'd started our load tests, the k8s cluster went out for a walk: most of the replicas got stopped and restarted, and I cannot find in the events log WHY that happened. Could you please have a look?

k8s team [india]: Hello, thank you for reaching out to k8s support. We will check and let you know.

Me: Oh, you're welcome! I'll be just sitting here quietly and eagerly waiting for your reply. TIA! :slightly_smiling_face:

<5 minutes later>

k8s team India: Hi. Could you give me a list of replicas that were failing?

Me: I gave you a Grafana link with a timeframe filter. Look there -- almost all apps show instability at k8s layer. For instance APP_1 and APP_2 were OK. But APP_3, APP_4 and APP_5 were crashing all over the place

k8s team India: ok I will check.

<My shift has ended. k8s team works in different timezone. I've opened up Slack this morning>

k8s team India: Hi. APP_1 and APP_2 are fine. I don't even see any errors in the logs, no restarts. All response codes are 200.

Me: 🤦‍♂️ .... Man, isn't that what I said? ... 🤦‍♂️

-

I’m so fucking sick and tired of !devs telling me how simple a feature should be to implement.

Like motherfucker the most complicated thing you’ve ever done with a computer is attempt (and fail) at working with tables in Microsoft Word and you’re trying to tell me how long a new feature/K8s architecture/noSQL aggregation should take to implement?

A monitor cable wiggling loose paralyses you for hours but I’m supposed to bow down to your understanding of what is causing a bug?

-

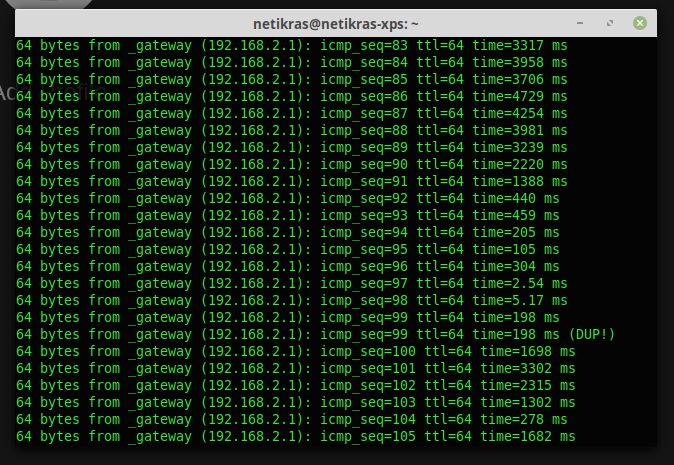

Building my own router was a great idea. It solved almost all of my problems.

Almost.

Just recently I started building a GL CI pipeline for my project. >100 jobs for each commit - quite a bundle. Naturally, I used up all my free runners' time after a few commits, so I had to build myself a runner. "My old i7 should do well" - I thought to myself and deployed the GL runner on my local k8s cluster.

And my router is my k8s master.

And this is the ping to my router (via wifi) every time after I push to GL :)

DAMN IT!

P.S. at least I have Noctua all over that PC - I can't hear a sound out of it while all the CPUs are at 100%

-

Some "engineers'" entire jobs seem to consist only of enforcing ridiculous bureaucracy in multinational companies.

I'm not going to get specific, the flow is basically:

- A developer who actually has to write code and build functionality is given a task and needs X to do it - a Jenkins job, a small k8s cluster, etc.

- Developer needs to get permission from some highly placed "engineer" who hasn't touched a docker image or opened a PR in the last 2 years

- Sends concise documentation on what needs to be built, why X is needed, etc.

Now we enter the land of needless bureaucracy. Everything gets questioned by people who put near 0 effort into actually understanding why X is needed.

They are already so much more experienced than you - so why would they need to fucking read anything you send them.

They want to arrange public meetings where they can flaunt their "knowledge" and beat on whatever you're building publicly while they still have nearly 0 grasp of what it actually is.

I hold a strong suspicion that they use these meetings simply as a way to publicly show their "impact", as they'll always make sure enough important people are invited. X will 99% of the time get approved eventually anyway, and the people approving it just know the boxes are being ticked while still not understanding it.

Just sick of dealing with people like this. Engineers that don't code can be great, reasonable people. I've had brilliant Product Owners, Architects, etc. But some of them are a fucking nightmare to deal with.

-

oh, I have a few mini-projects I'm proud of. Most of them are just handy utilities easing my BAU Dev/PerfEng/Ops life.

- bthread - multithreading for bash scripts: https://gitlab.com/netikras/bthread

- /dev/rant - a devRant client/device for Linux: https://gitlab.com/netikras/...

- JDBCUtil - a command-line utility to connect to any DB and run arbitrary queries using a JDBC driver: https://gitlab.com/netikras/...

- KubICon - KubernetesInContainer - does what it says: runs kubernetes inside a container. Makes it super simple to define and extend k8s clusters in simple Dockerfiles: https://gitlab.com/netikras/KubICon

- ws2http - a stateful proxy server simplifying testing websockets - allows you to communicate with websockets using simple HTTP (think: curl, postman or even netcat (nc)): https://gitlab.com/netikras/ws2http

-

Today was a SHIT day!

Working as ops for my customer, we are maintaining several tools in different environments. Today was the day my fucking Kubernetes Cluster made me rage quit, AGAIN!

We have a MongoDB running on Kubernetes with daily backups. The main node crashed due to a full PVC on the cluster.

Full PVC => Pod doesn't start

Pod doesn't start => You can't get the live data

No live data? => Need Backup

Backup is in S3 => No Credentials

Got Backup from coworker

Restore Backup? => No connection to new MongoDB

3 FUCKING HOURS WASTED FOR NOTHING

Got it working at the end... Now we need to make an incident in the incident management software. Tbh that's the worst part.

And the team responsible for the cluster said monitoring won't be supported because it's unnecessary....

-
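
The MongoDB outage above started with a volume quietly filling up, which even a crude check would have flagged. A rough sketch, assuming the check runs somewhere the volume is mounted; `usage_percent`, `is_nearly_full`, the `/data/db` path and the 85% limit are all illustrative choices, not anything from the rant:

```shell
# Read `df -P` output on stdin and print the use percentage of the first
# filesystem listed, without the trailing "%".
usage_percent() {
  awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Hypothetical helper: succeeds when usage ($1) has reached the limit ($2).
is_nearly_full() {
  [ "$1" -ge "$2" ]
}

# Example call; /data/db is an assumed mount path:
#   used="$(df -P /data/db | usage_percent)"
#   is_nearly_full "$used" 85 && echo "volume at ${used}%, clean up or grow the PVC"
```

This is no substitute for real monitoring, but a cron job or sidecar running something like it would at least turn a surprise crash into a warning.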

Sorry, need to vent.

In my current project I'm using two main libraries [slack client and k8s client], both official. And they both suck!

Okay, okay, their code doesn't really suck [apart from k8s severely violating Liskov's principle!]. The sucky part is not really their fault. It's the commonly used 3rd-party library that's fucked up.

Okhttp3

yeah yeah, here come all the booos. Let them all out.

1. In websockets it hard-caps frame size to 16mb w/o an ability to change it. So.. Forget about unchunked file transfers there... What's even worse - they close the websocket if the frame size exceeds that limit. Yep, instead of failing to send it kills the conn.

2. In websockets they are writing data completely async. Without any control handles.. No clue when the write starts, completes or fails. No callbacks, no promises, no feedback of any kind.

3. In http requests they are splitting my request into multiple buffers. This fucks up the slack client, as I cannot post messages over 4050 chars in size. Thanks to okhttp these long texts get split into multiple messages. Which effectively fucks up formatting [bold, italic, codeblocks, links,...], as the formatted blocks get torn apart. [didn't investigate this deeper: it's friday evening and it's kotlin, not java, so I saved myself the trouble of parsing yet unknown syntax]

yes, okhttp is probably a good library for the most part. Yes, people like it, but hell, these corner cases and weird design decisions drive me mad!

And it's not like I could swap it with another lib.. I don't depend on it -- other libs I need do!

-

Just needing somewhere to let some steam off

Tl;dr: perfectly fine commandline system is replaced by bad ui system because it has a ui.

For a while now we have had a development k8s cluster for the dev team. Using helm as the composing framework, everything worked perfectly via the console. Being able to quickly test new code in existing apps, and even deploy new (and even third-party) apps on a similar-to-production system, was a breeze.

Introducing Rancher

We are now required to commit every helm configuration change to a git repository and merge to master (master is used on dev and prod) before even being able to test the configuration change, as the package is not created until after the merge is completed.

Rolling out new tags now also requires a VCS change as you have to point to the docker image version within a file.

As we now have this awesome new system, the ops didn't see a reason to give us access to kubectl. So the dev team is stuck with a ui, but this should give the dev team more flexibility and independence, and more people from the team can roll releases.

Back to reality: since the new system arrived we have hogged more of ops' time than we had in a while, everyone needs to learn a new unintuitive tool, and the funny thing is that only a few people can actually accept VCS changes, as they impact dev and prod. So the entire reason this was done, making releases reachable to more people, is out the window.

-

I’m currently working with a devops team in the company to migrate our old-ass JBoss server architecture to kubernetes.

They’ve been working on this for about a year now, and it was supposed to be delivered a few months back. No one knew what was going on, and last week they finally managed to have something to show.

I’ve never seen anything so bad in my short life as a developer, to the point that the main devops guy can’t even understand his own documentation for adding ci/cd to a project.

It ranges from manually triggered pipelines in multiple branches for configuration and secrets, to a million unnecessary env variables to set, to docker images lacking almost everything required to run the apps.

You can clearly see the dude goes around the internet copy-pasting stuff without actually understanding what's going on behind it, as every time you ask him about the guts of the architecture he changes the topic.

And worst of all, as my team is their counterpart on the development side, we’ve been fighting for weeks to make them understand that it is impossible to proceed with this process with over 100 apps and 50+ developers.

Long story short, for the last two weeks I’ve been fixing the “dev ops” guy's mess in terms of processes and documentation, but I think this is gonna end really badly. Not to sound cocky or anything, but the developers' level is really low; add docker and k8s on top of that and you have a recipe for disaster.

Still enjoying it, as I'm not at fault there, and the dude got busted.

-

Hey peoples,

Have a look at my new project.

https://github.com/thosebeans/...

It's a docker container to deploy a wireguard interface.

Why would you do something like this, you ask? Well, there are actually 2 main reasons.

1. Distributing wireguard configuration over k8s/swarm/whatever.

2. Bringing up wireguard interfaces automatically on systems that don't normally support that, e.g. Alpine Linux.

-

why the fuck won't those images load? they come out corrupt from K8S, but they are fine if I run the container locally, like... wtf? is Ingress NGINX doing something to them or did I configure something wrong?!

-

- finish my project's backend, make a proto frontend

- throw the proto away and hire someone to do it for me. Or at least make the design...

- learn that freaking k8s ffs...

- if there's spare time left - move from on-prem to aws/gcp, maybe launch an alpha

- improve my people skills

- bump my salary significantly

-

Hello devRant!

Man its been a while, i havent logged in here in like 4 years.

Recently ive been getting into home-labbing, and i thought to myself

"all of these people on youtube/reddit run Plex on pre-built NASs that have awful celerons and whatnot, we can do much better!"

And by "much better" i meant a bare metal k8s cluster.

My hubris knows no bounds apparently.

Turns out this shit is quite hard.

Really gives u an appreciation of just how much stuff cloud providers magically abstract away....

My final goal is to run stableDiffusion on this thing, even though i know full-well the moment i try Nvidia will fuck me raw with some hidden enterprise subscription :)

-

Google acquired two interesting product companies last week.

One makes customizable phone apps from spreadsheets, the other gathers sales data from local shops.

appsheet and pointy

At this point I think they’re still missing a code editor. Microsoft has visual studio, and amazon, as always, was first and acquired c9.io when vscode was one year old.

How the fuck did they miss that code running remotely on multiple machines should come with the ability to connect a debugger to a single node, after they fucked docker with their k8s?

-

Task: Deploy MinIO in k8s cluster

Me: deploys the first docker image found on google: bitnami/minio

MinIO: starts

Me: log in

MinIO: Fuck you! There's a cryptic error: Expected element type <Error> but have <HTML>

Me: spends half a day trying out different vendors, different versions, different environments (works on local BTW)

Me: got tired, restored the manifest to what it was at the beginning. Gave it the last try before signing off

MinIO: works 100%

wtf... So switching it back and forth fixed the problem, whatever it was. Oh well, yet another day.

-

Everyone rides on k8s today....

But in the end it's just like Docker Swarm and Compose combined.

I don't know if Google is playing Elon Musk anymore.

-

Instability of the core technology stack. The more developers there are, the more complicated the architecture they create, which often doesn’t give any significant value besides answering: what if something goes wrong?

What if you make a mistake?

What if the power goes down?

I feel I am the last optimistically thinking software developer on this planet.

I feel that those tools just try to give management some sort of power over the developer's free mind.

Creatures like multicloud, cloud, k8s: I feel that they're just the beginning, not the end of the road. And this beginning is a wrong turn.

It’s just another vendor lock-in.

But I might be wrong.

-

## Learning k8s

Okay, that's kind of obvious, I just have no idea why I didn't think of it..

I've made a cluster out of an rpi, an i7 PC and a dell xps lappy. Lappy is the master and the other two are worker nodes.

I've noticed that the rpi hardly ever runs any of my pods. It's only got 3 of them assigned and none of them work. They all say: "Back-off restarting failed container" as the sole message in the pod's description, and the log only says 'standard_init_linux.go:211: exec user process caused "exec format error"' - also the only entry.

Tried running the same image locally on the XPS, via docker run -- works flawlessly (apart from being detached from the cluster of other instances).

Tried to redeploy k8s.yaml -- still raspberry keeps failing.

wtf...

And then it came to me. Wait.. You idiot.. Now ssh to that rpi and run that container manually. Et voila! "docker: no matching manifest for linux/arm/v7 in the manifest list entries."

IDK whether it's lack of sleep or what, but I have missed the obvious -- while docker IS cross-platform, it's not a VM and it does not change the instruction set supported by the node's cpu. Effectively meaning that a dockerized app is not guaranteed to work on every platform there is!
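
The mismatch described here is easy to check up front: compare the node's architecture with the platforms an image is published for. A small sketch under stated assumptions: `machine_to_platform` and `supports_platform` are hypothetical helpers, the mapping covers only a few common `uname -m` values, and the platform list has to come from somewhere else (for example the output of `docker manifest inspect`, reduced to one `os/arch[/variant]` string per line).

```shell
# Map the output of `uname -m` to the platform string Docker uses.
# Only a few common values are covered; anything else prints "unknown".
machine_to_platform() {
  case "$1" in
    x86_64|amd64)  echo "linux/amd64" ;;
    aarch64|arm64) echo "linux/arm64" ;;
    armv7l)        echo "linux/arm/v7" ;;
    armv6l)        echo "linux/arm/v6" ;;
    *)             echo "unknown" ;;
  esac
}

# Hypothetical helper: reads one platform per line on stdin and succeeds
# if the platform given as $1 is among them.
supports_platform() {
  grep -qxF "$1"
}

# Example call on the node that keeps crashing:
#   machine_to_platform "$(uname -m)"
```

If the Pi's platform is missing from the list, the image has to be rebuilt for it; `docker buildx build --platform` is one way to do that.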

Shit. I'll have to assemble my own image I guess. It sucks, since I'll have to use CentOS, which is oh-so-heavy compared to Alpine :( Since one of the dependencies does not run well there..

Shit.

Learning k8s is sometimes so frustrating :)

-

## Learning k8s

Run on k8s -- fails

Run the same img on Docker -- works

it's been a while since I've been this frustrated...

-

Oh my.. I think I'm enjoying molesting kubernetes :)

A while ago I got pissed at k8s because with 1.24 they brought backward-incompatible changes, rendering my cluster broken. Then I thought to myself: "why not create a Docker image that would run kubernetes inside? Separate images for control plane, agent and client"

Took me a while, but I think tonight I've had a breakthrough (I love how linux works...)!! The control-plane is spinning up!! Running on containerd

Still needs some work and polishing, but hey! Ephemeral k8s installation with a single docker-run command sure sounds tempting!

P.S. Yes, I know there is `kind` and `kinder`, but I'm reluctant to install a separate tool that installs a set of tools for me. Kind of... too shady. Too many moving parts. Too deeply hidden parts I may have to fix. Having a dumb-simple Dockerfile gives me the openness, flexibility and simplicity I want. + I can always use it as a base image to add my customizations later on! Reinstalling a cluster would be a breeeeeeze

-

They say that running the same command over and over again is a sign of insanity.

LIKE HELL IT IS!!!

I've been running `terraform apply` for the last hour (trying to dump an EKS token in plain-text, because my k8s-related providers failed to auth to the cluster), and miraculously the problem went away. Now the error is no more.

Insanity?

I beg to differ!

Narf!

-

"shiny shiny" time... Let's stand up a kubernetes cluster and then find a problem to solve! #BuzzWordDrivenDev

-

Guys, I want to get into a DevOps role.

I'm already looking into Linux, Terraform, Ansible and k8s.

If you are a DevOps Engineer, what kind of tooling or knowledge do I need to know before applying to companies?

Any tip is welcome and I would greatly appreciate it! Thank you!

-

Not a rant, just to mention, if someone is interested in getting started with k8s ( like me ) there is the summer of k8s - https://getambassador.io/summer-of-... if you want to give it a try

-

## Learning k8s

Sooo yeah, 2 days have been wasted only because I did not reset my cluster correctly the first time. Prolly some iptables rules were left that prevented me from using DNS. Nothing worked...
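
`kubeadm reset` itself warns that it does not clean up iptables rules, so leftovers like the ones suspected here are worth looking for before blaming DNS. A quick sketch; `leftover_kube_rules` is a made-up helper, and the `KUBE-` and `FLANNEL` chain-name prefixes are assumptions that fit kube-proxy and flannel but not necessarily other CNI plugins:

```shell
# Read `iptables-save` output on stdin and print only the lines that mention
# chains kube-proxy or flannel typically create. The prefixes are assumptions.
leftover_kube_rules() {
  grep -E 'KUBE-|FLANNEL' || true
}

# Example call on a node right after `kubeadm reset`:
#   sudo iptables-save | leftover_kube_rules
```

Empty output suggests the reset left nothing obvious behind; anything printed is a candidate for manual flushing before the next `kubeadm init`.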

2 fucking days..

2 FUCKING DAYS!!! F!!!11 -

Continuing to learn the k8s ecosystem until I reach an acceptable level,

eventually trying Helm, Argo CD and even an unmanaged k8s setup.

Going through books to learn the theory of being an SRE.

And about data-intensive apps.

Learning and trying Kafka

Learning and trying FastAPI and generally diving into the async python ecosystem

Learning Go.

Reading a few more books to improve code quality and composition.

Getting more practice with monitoring and logging systems by applying them to k8s.

-

Wow.. Kubernetes makes me high!!

VERY literally.

Today I dug into k8s from a devops/admin perspective. Soooo many figures at play! Tried my best to understand it all in one go.

Now I feel like I used to feel back in my student days after successfully finishing a whole bottle of wine.

Dizzy and happy as fuck! 😁 and want to puke a little

go k8s!

-

Finally!! I've managed to molest containerd and kubernetes!!

Now I can run k8s in a container :) yayy!!!

https://gitlab.com/netikras/KubICon

Next: figure out a way to automatically and transparently share certs

-

boi, k8s is hard, even if it is managed by Digital Ocean. Is there something I can install on my droplet that can help me release software from my gitlab repositories?

I'm on Debian 10.114 -

Over the last few weeks, I've containerised the last of our "legacy" stacks, put together a working proof of concept in a mixture of DynamoDB and K8s (i.e. no servers to maintain directly), passing all our integration tests for said stack, and performed a full cost analysis with current & predicted traffic to demonstrate long term server costs can be less than half of what they are now on standard pricing (even less with reserved pricing). Documented all the above, pulled in the relevant higher ups to discuss further resources moving forward, etc. That as well as dealing with the normal day to day crud of batting the support department out the way (no, the reason Bob's API call isn't working is because he's using his password as the API key, that's not a bug, etc. etc.) and telling the sales department that no, we can't bolt a feature on by tomorrow that lets users log in via facial recognition, and that'd be a stupid idea anyway. Oh, and tracking down / fixing a particularly nasty but weird occasional bug we were getting (race hazards, gotta love 'em.)

Pretty pleased with that work, but hey, that's just my normal job - I enjoy it, and I like to think I do good work.

In the same timeframe, the other senior dev & de-facto lead when I'm not around, has... "researched" a single other authentication API we were considering using, and come to the conclusion that he doesn't want to use it, as it's a bit tricky. Meanwhile passed all the support stuff and dev stuff onto others, as he's been very busy with the above.

His full research amounts to a paragraph which, in summary, says "I'm not sure about this OAuth thing they mention."

Ok, fine, he works slowly, but whatever, not my problem. Recently however, I learned that he's paid *more than I am*. I mean... I'm not paid poorly, if anything rather above market rate for the area, so it's not like I could easily find more money elsewhere - but damn, that's galling all the same.

-

Folks are bragging about having 99 microservices. I don't know what joy folks get from creating that many microservices. They may have their own reasons. I'm trying to understand what the workflow is for small companies with few microservices. Could anyone shed some light? I'm thinking of building an orchestrator that doesn't have fancy features like k8s but gets the basic job done. Focused more on simplicity and UX.

-

What CI software are you using?

Are you happy with it, or what do you hate about it?

I tried 5 different CI platforms in the past week, and I did not like any of them..

Any recommendations? (Can also be self hosted, I have a k8s cluster at my disposal)

// a short rant about team city

wE uSe koTliN dSL to reduce how much configuration is needed. Fuck you, I ended up with even more. It's horrible: I have 40+ microservices, and meta runners sounded like an awesome feature until I found out you need to define one for every single fucking project...

Oh and on top of that, you cannot use one from the root parent, and it also cannot be named the same.

Why is all ci software just so retarded - sorry I really cannot put it any other way

-

## Learning k8s

Interesting. So sometimes the k8s network goes down. Apparently it's a pitfall that has been logged with the vendor but not yet fixed. If the networking service is restarted on any of the nodes (e.g. you connect to a VPN, plug in a USB wifi dongle, etc.), you will lose the flannel.1 interface. As a result you will NOT be able to use kube-dns (because it's unreachable), nor will you be able to access ClusterIPs on other nodes. Deleting flannel and allowing it to restart on the control plane brings it back to operational.
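
Spotting the missing interface does not need kubectl at all, since Linux lists every network interface under `/sys/class/net`. A tiny sketch; `iface_exists` is a made-up helper, and its optional second argument exists only so the check can be tried against a scratch directory instead of a real node:

```shell
# Succeeds when a network interface named $1 exists. $2 optionally overrides
# the sysfs directory so the check can be tried without a real cluster.
iface_exists() {
  [ -e "${2:-/sys/class/net}/$1" ]
}

# Example call on a node:
#   iface_exists flannel.1 || echo "flannel.1 is gone; restart the flannel pod on this node"
```

Run from cron or a systemd timer on each node, this would at least tell you which machine lost its overlay after a network restart.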

And yet another note.. If you're making a k8s cluster at home and you are planning to control it via your lappy -- DO NOT set up control plane on your lappy :) If you are away from home you'll have a hard time connecting back to your cluster.

A raspberry pi is perfectly enough for a control plane. And when you are away with your lappy, ssh'ing home and setting up a few iptables DNATs will do the trick

netikras@netikras-xps:~/skriptai/bin$ cat fw_kubeadm

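    #!/bin/bash
    # Reach the home cluster's API server from outside via two chained SSH tunnels.

    FW_LOCAL_IP=127.0.0.15
    FW_PORT=6443
    FW_PORT_INTERMED=16443
    MASTER_IP=192.168.1.15
    MASTER_USER=pi

    # Redirect local traffic aimed at the master to the local tunnel endpoint.
    # FW_RULE is left unquoted below on purpose so it splits into separate arguments.
    FW_RULE="OUTPUT -d ${MASTER_IP} -p tcp -j DNAT --to-destination ${FW_LOCAL_IP}"
    sudo iptables -t nat -A ${FW_RULE}

    # Hop 1: laptop -> home gateway; hop 2: gateway -> master.
    ssh home -p 4522 -l netikras -tt \
        -L ${FW_LOCAL_IP}:${FW_PORT}:${FW_LOCAL_IP}:${FW_PORT_INTERMED} \
        ssh ${MASTER_IP} -l ${MASTER_USER} -tt \
        -L ${FW_LOCAL_IP}:${FW_PORT_INTERMED}:${FW_LOCAL_IP}:${FW_PORT} \
        /bin/bash
    # 'echo "Tunnel is open. Disconnect from this SSH session to close the tunnel and remove NAT rules" ; bash'

    # Remove the NAT rule once the SSH session ends.
    sudo iptables -t nat -D ${FW_RULE}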

And ofc copy control plane's ~/.kube to your lappy :) -

Last week I finished a course on k8s "simply, for developers". Today I decided to fiddle with kubectl to move a project I'm working on to k8s and it was a complete success (the thing runs on minikube and pulls containers from a private Gitlab registry).

I'm happy with the results, though there is still room for learning k8s and devops in general. -

What it looks like in a tutorial:

https://matthewpalmer.net/kubernete...

or

https://howtoforge.com/how-to-insta...

What it looks like on my machine:

https://github.com/kubernetes/...

k8s vs. me

3 :: 0 -

Multi cloud, multi account, VPCs with k8s clusters all tied together with rancher and vault. Deployed in Terraform.

What a monster that was to create! -

DevOps:

How far does the Ops part go in DevOps? Is it like: developers are going to manage k8s clusters (with cloud providers etc.)? Or is it "only" writing deployments etc...? -

Making a distributed scheduler that queues and runs tasks on containers (or other executors in the future) and also pulls new tasks from defined git repositories.

Tasks are added based on a simple YAML configuration.

Need that for my side projects that gather data from multiple sources from time to time.

k8s looks too heavy for that and airflow can’t be configured like I want it to be, so I started writing my own on Monday.

Nearly finished the PoC version. -

FOMO on technology is very frustrating.

i have a few freelance and hobby projects i maintain. mostly small laravel websites, go apis, etc ..

i used to get a 24$/ month droplet from digital ocean that has 4vCPUs and 8GB RAM

it was more than enough for everything i did.

but from time to time i get a few potential clients that want huge infrastructure work on kubernetes with monitoring stacks etc...

and i dont feel capable because i am not using this on the daily, i haven't managed a full platform with monitoring and everything on k8s.

sure u can practice on minikube but u wont get to be exposed to the tiny details that come when deploying actual websites and trying to setup workflows and all that. from managing secrets to grafana and loki and Prometheus and all those.

so i ended up getting a k8s cluster on DO, and im paying 100$ a month for it and moving everything to it.

but what i hate is im paying out of pocket, and everything just requires so many resources!!!! -

Recently many of us may have seen that viral image of a BSOD in a Ford car, saying the vehicle cannot be driven due to an update failure.

I haven't been able to verify the story in established news sources, so I won't be further commenting on it, specifically.

But the prospects of the very concept are quite... concerning.

Deploying updates and patches to software can reasonably be called *the software industry*. We have almost no V0 software in production nowadays, anywhere (except for some types of firmware).

Thus, as cars and other devices become more and more reliant on larger software rather than much shorter onboard firmware, infrastructure for online updates becomes mandatory.

And large-scale, major updates for deployed software on many different runtime environments can be messy even in the most stable situations and connections (even k8s offers rolling updates with tests on cloud infrastructure, so the whole thing won't come crashing down).

Thereby, an update mess on automotive-OS software is a given, we just have to wait for it.

When it comes... it will be a mess. Auto manufacturers will adopt a "move fast and break things" approach, because those who don't will appear to be outcompeted by those who deploy lots of shiny things, very often.

It will lead to mass outages on otherwise dependable transportation - private transportation.

Car owners, the demographic that most strongly overlaps with every other powerful demographic, will put significant pressure on governments to do something about it.

Governments (and I might be wrong here) will likely adapt existing recall implementation laws to apply to automotive OS software updates.

That means having to go to the auto shop every time there is a software update.

If Windows may be used as a reference for update frequency, that means several times per day.

A more reasonable expectation would be once per month.

Still completely impossible for large groups of rural car owners.

That means industry instability due to regulation and shifting demographics, and that could as well affect the rest of the software industry (because laws are pesky like that, rules that apply to cars could easily be used to rein in cloud computing software).

Thus... Please, someone tell me I overlooked something or that I am underestimating the adaptability of the powers at play, because it seems like a storm is on the horizon, straight ahead. -

Restoring a 2TB DEV DB running in k8s on SPOT instances is like trying to aim at a moving target. With a slingshot.

-

Nextjs

I just realized

unit tests and integration tests dont exist in nextjs

So now i wondered

What about integrating AWS cloud functions with nextjs?

What about docker with nextjs?

Kubernetes with nextjs??

How TF do u implement a CI/CD pipeline with nextjs if there is nothing to test?!?!??

Nextjs seems like itself is self sufficient. WTF? Everything has been packed and cluttered into 1 giant pile of horsedump and called Nextjs Framework where you dont need k8s to run it or anything. Might as well then rename this fucking "framework" to GOD of all frameworks since it appears it can fucking do anything and everything without requiring HEAVY DevOps bullshit.

ALL of it can be cluttered in 1 nextjs project and you have a fully functional production working website that can basically do ANYTHING and EVERYTHING.

How???

Am i fucking going insane? Am i missing something here?? -

SO, after finishing uni I joined a startup.

"We'll cover devops stuff! Aws certifications for everyone! And later k8s!"

So I'm here, learning VueJS.

(Tbf, the situation is better than it seems: I like being here, and the boss is an honest person. Still, fuck.) -

Learning to like Manjaro, a lot. Setting up i3 for a workstation and a Kubernetes cluster with a couple of Manjaro workstations with just the CLI installed... A few gotchas on the way: got Hyper-V enhanced mode working but hit a message session error on dbus launch (easy fix: it is already launched by lightdm), and the CLI install doesn't start the network driver by default. But I can get a whole 3-node k8s cluster running in under an hour from scratch and forward i3 to a nice, fast, little Windows X server that I got for free with Microsoft reward points.. winning!

-

I cant believe the project I'm working on does not use kubernetes or terraform. Not even docker. How is this multi trillion dollar project even in business?

I feel so sad for not having the opportunity to work with one of the most fundamental and most important technologies to know as a devops engineer... So sad

I cant advance or improve. Im just stuck in their ecosystem like Apple

This corporation is probably run by 90 year old grandpa men from world war 1. However, considering they are so large and still in business, this gives me hope that anyone can make it even if you're stupid

Think about it

They are proof that you can run a giant business with hundreds of employees, not use k8s and the most modern devops technologies, and still operate just fine.

The devops code i have to maintain is older than the amount of years i exist. Its very messy and most of this shit is not even devops related. Its more of some kind of linux administrative tasks mixed with 3 drops of actual devops (bash scripts, ansible scripts, ci/cd pipeline)

And yet im paid more than i have ever been paid in any job so far

What should i do. Stay due to "high" money or..ask for a project with k8s. I put "high" in quotes because it is extreme luxury in my shithole country, im now among top 1% earners of the country, and yet i make less than 30k a year. With less than 30k a year i cant buy a good car but i can live very comfortably in my country. I cant complain about this salary since i think its finally enough to invest to get a chance to earn more and still have enough left to live comfortably.

Before i was just working to survive. Now im working to live. Its an upgrade.

Due to not working with difficult stuff like k8s i cant demand for more money. It wouldnt feel justified. I'm stuck here

What would u do? -

As if dealing with an unstable infrastructure and being unable to properly test the microservices I work on weren't enraging enough, now developers at my workplace have to double as Ops too and configure the K8s pods and containers in which our code runs themselves. That would be OK with me if it weren't for the fact that we have to do that through the company's poorly documented and totally leaky abstractions.

-

I cant find 1 single normal Fucking tutorial explaining how to code FULL DEVOPS PIPELINE for deployment to AWS.

A pipeline that includes

- gitlab (ci cd)

- jenkins

- gradle

- sonarqube

- docker

- trivy

- update k8s manifest

- terraform

- argocd

- deploy to EKS

- send slack notification

How Fucking hard is it for someone to make a tutorial about this????? How am i supposed to learn how to code this pipeline???? -

Hey guys, I will be running Ubuntu/Debian as my main development environment. Which distro is the best for development with k8s, docker and elixir?

Thanks for the input guys! Really appreciate it! -

A better half of the day wasted.

Why?

Because fabric8 k8s client probably has a bug, where it fails to deliver the last part of the exec() output. Or the whole output, if it's short enough.

brilliant... Aaaaaand we're going back to the official k8s client with ~10 parameters in their methods.

Fucking awesome. -

Are Helm charts supposed to be extended somehow by declaring them as the "base" in a new Helm chart? I'm trying to find documentation about how to refer to a chart at https://artifacthub.io/ as the base in a fresh Helm chart, to overwrite its default values in the fresh values.yaml
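One common way to do this is to list the existing chart as a dependency (subchart) of the new chart and override its values under a key named after the dependency. A minimal sketch, assuming made-up names throughout: `my-wrapper` is the fresh chart, `base-chart`, its version and the `https://example.com/charts` repository stand in for whatever Artifact Hub shows for the real chart, and `replicaCount` is just a hypothetical value to override.

```shell
#!/bin/bash
# Hypothetical chart directory for the fresh "wrapper" chart.
CHART_DIR="${CHART_DIR:-my-wrapper}"
mkdir -p "$CHART_DIR"

# Chart.yaml: declare the existing chart as a dependency (placeholder name/version/repo).
cat > "$CHART_DIR/Chart.yaml" <<'EOF'
apiVersion: v2
name: my-wrapper
version: 0.1.0
dependencies:
  - name: base-chart
    version: "1.2.3"
    repository: "https://example.com/charts"
EOF

# values.yaml: overrides for the dependency go under a key matching its name.
cat > "$CHART_DIR/values.yaml" <<'EOF'
base-chart:
  replicaCount: 2
EOF

echo "Next: helm dependency update $CHART_DIR && helm install my-release $CHART_DIR"
```

`helm dependency update` then pulls the referenced chart into the `charts/` subdirectory, and anything not overridden keeps the dependency's own defaults.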

-

I guess these days I work with Golang, gRPC, and Kubernetes. I guess that's a dev stack. Or turning into one at the very least. The only things that annoy me about this stack are how much Kubernetes deployments differ between CSPs, and the fact that setting up a Kubernetes/Golang dev environment takes a lot of time and effort. And gRPC can be a pain in the ass to work with as well. Since it's fairly new in large scale enterprise use, finding best practices can be pretty hard, and everything is "feet in the fire" and "trial and error" when dealing with gRPC.

And Golang channels can get very hairy and complicated really really fast. As well as the context package in Golang. And Golang drama with package managers. I wish they would just settle on GoDeps or vgo and call it a day.

And for the love of God, ADD FUCKING GENERICS! Go code can be needlessly long and wordy. The alternative "struct function members" can be pretty clunky at times. -

What container/virtualisation technology do you guys prefer?

Also I've been thinking of picking up either k8s or docker, mainly docker for ease of deployment, or do you think it's worth learning both perhaps? I do know they have somewhat different use cases -

Get to know the new company better (Changed job shortly before Christmas).

Learn some DPs, DDD, k8s, finish introduction to hacking course, start doing htb and thm machines, finish and defend my thesis, finish books clean code, thinking in java (reading it to fill in gaps on knowledge), a few books about pentesting.

Among non tech goals: pass drivers license exam for cars, another one for motorcycles, go back to learning russian. -

## Learning k8s

Okay, seriously, wtf.. Docker container boots up just fine, but k8s startup from the same image -- fails. After deeper investigation (wasted a few hours and a LOT of patience on this) I've found that k8s is right.. It should not be working.

Apparently when you run an app in the IDE (IDEA) it creates the ./out/ directory where it stores all the compiled classes and resources. The thing is that if you change your resources in ./src/main/resources -- these changes are NOT reflected in ./out/. You can restart, clean your project -- doesn't matter. Only after you nuke ./out and restart your app from the IDE will it pick up your new resources.

WTF!!!

and THAT's why I was always under an impression that my app's module works well. But it doesn't, not by a tiny rat's ass!

Now the head-scratcher is WHY on Earth does Docker show me what I want to see rather than acting responsibly and shoving that freaking error to my stdout...

Truth be told I was hoping it's k8s that's misbehaving. Oh well..

Time to get rid of legacy modes' support and jump on proper implementation! So much time wasted.. for nothing :( -

Alright, so I'm seeding the DB with a 4GB SQL dump through a k8s port-forward across the world (behind the pond), port-forwarded through socat (a bastion container).

What are the chances I'll succeed? :D -

Spent two days debugging a k8s config. Turns out Rancher doesn't create ingress controllers on EKS instances, and I have to do that manually.

Thank you random stranger in github issues! I've tipped you some BAT! -

hey there

recently I've been thinking about a good way to deploy mass amounts of containers on kubernetes, but before I just go with docker, I want to learn about some alternatives

what other container software do you guys use? -

Just got an email that Datree is closing shop. That's too bad, I considered it a mandatory production k8s tool.

I guess I'll have to whip up some Frankenstein replacement with Open Policy Agent... -

k8s is so good at orchestrating its shit, yet it can't handle a node rolling update with deployments having one replica and a PDB of maxUnavailable=0.

Dude... make a goddamn exception, surge a damn pod for draining the node. -
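A possible workaround, sketched under assumptions: cordon the node first, then trigger a rollout restart so the Deployment itself surges a replacement pod onto another node (with the default rolling update strategy a single-replica Deployment starts the new pod before stopping the old one), and only then drain. The node, deployment and namespace names below are placeholders, and `run` / `drain_with_surge` are made-up helpers. It prints the commands by default; set `DRY_RUN=0` to execute them.

```shell
#!/bin/bash
# Placeholder names: replace with your own node, deployment and namespace.
NODE="${NODE:-worker-1}"
DEPLOY="${DEPLOY:-my-app}"
NS="${NS:-default}"

# Print the command when DRY_RUN=1 (the default), execute it otherwise.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

drain_with_surge() {
  # 1. Stop new pods from being scheduled onto the node.
  run kubectl cordon "$NODE"
  # 2. Restart the rollout; the replacement pod has to land on another node.
  run kubectl -n "$NS" rollout restart "deployment/$DEPLOY"
  # 3. Wait until the new pod is ready and the old one is gone.
  run kubectl -n "$NS" rollout status "deployment/$DEPLOY" --timeout=5m
  # 4. Drain; the PDB no longer blocks since nothing of this deployment is left here.
  run kubectl drain "$NODE" --ignore-daemonsets --delete-emptydir-data
}

drain_with_surge
```

This only helps if another node has room for the surged pod; otherwise the rollout just hangs until the timeout.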

I started a side project recently:

Open Source 2D MMORPG with K8s in Azure (AKS) and Unity:

https://youtube.com/user/...

https://github.com/KDSBest/...