
Search - "containers"

-

I’m kind of pissy, so let’s get into this.

My apologies though: it’s kind of scattered.

Family support?

For @Root? Fucking never.

Maybe if I wanted to be a business major my mother might have cared. Maybe the other one (whom I call Dick because fuck him, and because it’s accurate) would have cared if I suddenly wanted to become a mechanic. But in both cases, I really doubt it. I’d probably just have been berated for not being perfect, or better at their respective fields than they were at 3x my age.

Anyway.

Support being a dev?

Not even a little.

I had hand-me-down computers that were outmoded when they originally bought them: cutting-edge discount resale tech like Win95, 33/66mhz, 404mb hd. It wouldn’t even play an MP3 without stuttering.

(The only time I had a decent one is when I built one for myself while in high school. They couldn’t believe I spent so much money on what they saw as a silly toy.)

Using a computer for anything other than email or “real world” work was bad in their eyes. Whenever I was on the computer, they accused me of playing games, and constantly yelled at me for wasting my time, for rotting in my room, etc. We moved so often I never had any friends, and they were simply awful to be around, so what was my alternative? I also got into trouble for reading too much (seriously), and with computers I could at least make things.

If they got mad at me for any (real or imagined) reason (which happened almost every other day) they would steal my things, throw them out, or get mad and destroy them. Desk, books, decorations, posters, jewelry, perfume, containers, my chair, etc. Sometimes they would just steal my power cables or network cables. If they left the house, they would sometimes unplug the internet altogether, and claim they didn’t know why it was down. (Stealing/unplugging cables continued until I was 16.) If they found my game CDs, those would disappear, too. They would go through my room, my backpack and its notes/binders/folders/assignments, my closet, my drawers, my journals (of course my journals), and my computer, too. And if they found anything at all they didn’t like, they would confront me about it, and often would bring it up for months telling me how wrong/bad I was. Related: I got all A’s and a B one year in high school, and didn’t hear the end of it for the entire summer vacation.

It got to the point that I invented my own language with its own vocabulary, grammar, and alphabet just so I could have just a little bit of privacy. (I’m still fluent in it.) I would only store everything important from my computer on my only Zip disk so that I could take it to school with me every day and keep it out of their hands. I was terrified of losing all of my work, and carrying a Zip disk around in my backpack (with no backups) was safer than leaving it at home.

I continued to experiment and learn whatever I could about computers and programming, and also started taking CS classes when I reached high school. Amusingly, I didn’t even like computers despite all of this — they were simply an escape.

Around the same time (freshman in high school) I was a decent enough dev to actually write useful software, and made a little bit of money doing that. I also made some for my parents, both for personal use and for their businesses. They never trusted it, and continually trashtalked it. They would only begrudgingly use the business software because the alternatives were many thousands of dollars. And, despite never ever having a problem with any of it, they insisted I accompany them every time, and these were often at 3am. Instead of being thankful, they would be sarcastically amazed when nothing went wrong for the nth time. Two of the larger projects I made for them were: an inventory management system that interfaced with hand scanners (VB), and another inventory management system for government facility audits (Access). Several websites, too. I actually got paid for the Access application thanks to a contract!

To put this into perspective, I was selected to work on a government software project about a year later, while still in high school. That didn’t impress them, either.

They continued to see computers as a useless waste of time, and kept telling me that I would be unemployable, and end up alone.

When they learned I was dating someone long-distance, and that it was a she, they simply took my computer and didn’t let me use it again for six months. Really freaking hard to do senior projects without a computer. They begrudgingly allowed me to use theirs for schoolwork, but it had a fraction of the specs — and some projects required Flash, which the computer could barely run.

Between the constant insults, yelling, abuse (not mentioned here), total lack of privacy, and the theft, destruction, etc. I still managed to teach myself about computers and programming.

In short, I am a dev despite my parents’ best efforts to the contrary. -

So I heard that Docker is now a thing and decided to put my servers into containers as well (see image)... but somehow I still don't understand how this is supposed to simplify deployment, vertical scaling and so on...? :D -

enabling firewall on a vps to secure my docker containers and forgetting to add openssh to allowed list --> ssh blocked 😃🔫

-

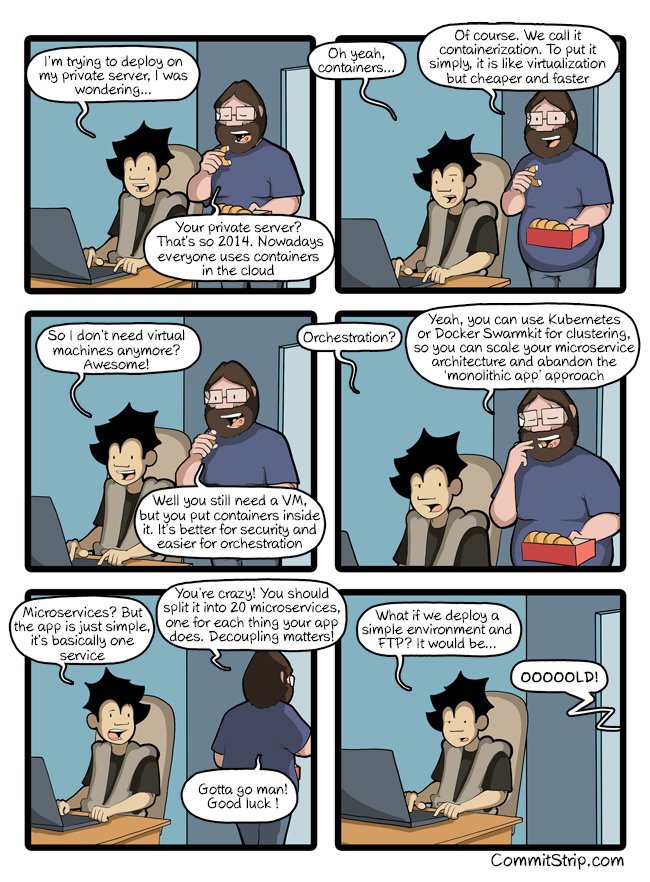

The 2014's called, they want their private server back!

Source: CommitStrip -

That awkward moment when I was able to run three docker containers on a 512MB server:

1. DotNet core web service

2. MySQL

3. OpenVPN

BUT I cannot run:

1. NodeJs web service

2. MongoDB container

Spent two hours configuring the damn server to get hit by this T_T -

I'm not even that old and I've had it with young, cocksure, full-of-themselves language/environment evangelists.

- "C# is always better than Java, don't bother learning it"

- "Lol python is all you need"

- "Omg windows/linux/mac sucks use this instead"

The list goes on, really. At some point you have got to realize that while specialization is great, you have to learn a little bit of everything. It broadens your horizons a lot.

Yea, C# does some nifty stuff, but Java does too; learn both. Yea, I'm sure Linux is better for hosting docker containers, but your clients are on mac or windows; learn to at least navigate and operate all three, etc. Embrace knowledge from all the different tech camps: it can only do you good, and you will be so much more flexible and employable than your closed-minded peers :)

Hell, even PHP has a lot to teach us (even more than just to be a bad example, har har) -

Soooo my little encryption tool makes progress. <3

After a short break from development, we had our first successful loaded container yesterday!

This means:

- Protocol is working

- We can create containers and store/compress files in them

- we're awesome

- I love it

- you are awesome, too!

(Loaded containers will be inaccessible for movement to different directories while our tool is open) -

So this client wanted a demo on Docker. So I gave the demo with some microservices running in different containers. Later the client comes back and says, "Docker is good. But please fit all the microservices in one container." I say that defeats the purpose of microservices. But no, the client says. I tried explaining, but no is no. Shit!! Fine! Have it your way!!

-

DISCLAIMER: UNPOPULAR OPINION

I'm tired of the Linux community; they effectively discourage me from taking part in any discussion online.

I'm currently making Windows-only software, some game stuff, some legacy DirectX stuff, you get it.

Every time I go online, this shitty pattern happens when I stumble upon a problem in a project that I don't know how to fix and I ask for help.

These are responses

- HA, HA, WINDOWS BAD, HA, HA, GET REAL SYSTEM

- In Linux, we can do X too. I mean it has 4x less functionality and way shittier UX and is even harder to implement, but it can probably work on Linux too, so it's better, yes, just move to Linux

- btw you didn't like Linux before? Try this distro man, it's better <links random distro>

Is there anything valuable in the Linux community? I feel like these people don't like Linux anyway, they just hate Windows. Every opinion or tip is purely opinion-based. Anyone who works on internals knows how much better and how much more well thought out the Windows kernel is compared to the Linux kernel. Also, if someone unironically uses a Linux distro on a desktop PC then he's a masochist, because desktop Linux is dying. So many distros ceased work this year alone.

Is it a good tool for servers and docker containers? I don't have my head stuck so far up my ass that I can't admit that yes, it's much better than Windows here.

This community has got me stressed right now. I fear that when I go to the bathroom or open my microwave there's gonna be a Linux distro recommendation there

😠😡😠😴 -

This rant is particularly directed at web designers, front-end developers. If you match that, please do take a few minutes to read it, and read it once again.

Web 2.0. It's something that I hate. Particularly because the directive amongst webdesigners seems to be "client has plenty of resources anyway, and if they don't, they'll buy more anyway". I'd like to debunk that with an analogy that I've been thinking about for a while.

I've got one server in my home, with 8GB of RAM, 4 cores and ~4TB of storage. On it I'm running Proxmox, which is currently using about 4GB of RAM for about a dozen VM's and LXC containers. The VM's take the most RAM by far, while the LXC's are just glorified chroots (which nonetheless I find very intriguing due to their ability to run unprivileged). Average LXC takes just 60MB RAM, the amount for an init, the shell and the service(s) running in this LXC. Just like a chroot, but better.

On that host I expect to be able to run about 20-30 guests at this rate. On 4 cores and 8GB RAM. More extensive migration to LXC will improve this number over time. However, I'd like to go further. I was once able to build a Linux which was just a kernel and busybox, backed by the musl C library. Run as a VM, the thing consumed only 13MB of RAM, all of it dedicated to the kernel. I could probably optimize it further with modularization, but at the time I didn't due to its experimental nature. In a chroot, the kernel of the host is used, meaning that said setup in a chroot would get down to mere kB's of RAM consumption. The busybox shell would be its most important RAM consumer, which is negligible.

I don't want to settle with 20-30 VM's. I want to settle with hundreds or even thousands of LXC's on 8GB of RAM, as I've seen first-hand with my own builds that it's possible. That's something that's very important in webdesign. Browsers aren't all that different. More often than not, your website will share its resources with about 50-100 other tabs, because users forget to close their old tabs, are power users, looking things up on Stack Overflow, or whatever. Therefore that 8GB of RAM now reduces itself to about 80MB only. And then you've got modern web browsers which allocate their own process for each tab (at a certain amount, it seems to be limited at about 20-30 processes, but still).. and all of its memory required to render yours is duplicated into your designated 80MB. Let's say that 10MB is available for the website at most. This is a very liberal amount for a webserver to deal with per request, so let's stick with that, although in reality it'd probably be less.

10MB, the available RAM for the website you're trying to show. Of course, the total RAM of the user is comparatively huge, but your own chunk is much smaller than that. Optimization is key. Does your website really need that amount? In third-world countries where the internet bandwidth is still in the order of kB/s, 10MB is *very* liberal. Back in 2014 when I got into technology and webdesign, there was this rule of thumb that 7 seconds is usually when visitors click away. That'd translate into.. let's say, 10kB/s for third-world countries? 7 seconds makes that 70kB of available network bandwidth.
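The back-of-the-envelope numbers above can be checked with a few lines of Python. This is only a sketch of the rant's own arithmetic; the function names are made up for illustration, and 1GB is taken as 1000MB to match the "about 80MB" figure.

```python
def per_tab_ram_mb(total_ram_gb: float, open_tabs: int) -> float:
    """RAM share per tab if memory were split evenly (1GB taken as 1000MB)."""
    return total_ram_gb * 1000 / open_tabs


def page_budget_kb(bandwidth_kb_per_s: float, patience_s: float) -> float:
    """How much data fits through the pipe before the visitor clicks away."""
    return bandwidth_kb_per_s * patience_s


# 8GB shared by 100 tabs, and a 10kB/s link with 7 seconds of patience.
print(per_tab_ram_mb(8, 100))  # 80.0
print(page_budget_kb(10, 7))   # 70
```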

Web 2.0, taking 30+ seconds to load a web page, even on a broadband connection? Totally ridiculous. Make your website as fast as it can be; after all, you're playing along with 50-100 other tabs. The faster, the better. The more lightweight, the better. If at all possible, please pursue this goal and make the Web a better place. Efficiency matters. -

!rant

I'm just amazed what 512MB of RAM can do :O

That's htop from my VPS. I feel sorry for the CPU though.

It is running three docker containers:

1. Dotnet Core

2. MySQL

3. OpenVPN -

Are you using socat?

Any interesting use case you would like to share?

I am using it to create fake / proxy docker containers for network testing. -

God damn fucking Windows bullshit.

Why the fuck does Microsoft HATE its users?

Latest updates, and no fuck Windows 11, completely BREAKS all of my WSL environments.

Home directories are gone, or the environments are corrupt and won't even run.

99% of the issues these dense shit-fucks cause are because they RaNdOmLy reboot for their dumbass updates instead of scheduling them with the end user. During these reboots, do you think they wait for everything to shut down?

HELL NO!

They just shut that shit down like they fucking own it. Editors? Gone. Browsers? Gone. WSL Consoles? Gone. Docker containers? Gone. IEdge? Hey, we have great news, we made IE your default browser again! BTW, your upgrade to Windows 11 is free until we force you to upgrade!

I'm so fed up with it.....so fucking tired of it...

The only reason why I even use WSL these days is to ssh into my Linux devices or run some quick dev tests in containers. Why not use PuTTY for SSH? Because it fucking SUCKS that's why.

I'm feeling so many emotions right now over bullshit that shouldn't even be happening. I'm literally at the point that I'm just going to install Linux on this device and just create a Windows VM on one of my hosts so I can still do "work" things that involve leadership. -

Now seriously, WHAT THE FUCK???

Every single time I have to work with people from a particular country [you have one guess. Yepp, that's the one], I see A-FUCKING-LOOOOOOT of manual work?!?

"can you reboot the server?"

-"sure, let me help you, sir" <20 minutes later> "done"

"can you unlock my account?"

-"yes, just a moment sir" <20 minutes later> "please check now"

"can you restart this environment w/ 200 instances?"

-"yes sir, let me check" <6 hours later> "please check now"

"you've missed 18 containers"

-"oh okay sir, will restart them now" <2hours later> "please check now"

[I am already OoO]

Why is it that every time I have to work with you guys I am the one who is automating shit? How come you never think of/do any automation? You are fucking technicians, you should know how. WHY DO YOU ENJOY CLICKING ALL-DAY-LONG????

I'm serious. Why??? I'm struggling to understand... -

Our client decided to save some $$. At the end of each business day teams downscale their environments before leaving and the next day scale them up in the morning to start working.

The idea is not bad, but they are a bit too ignorant of the fact that some environments are exceeding AWS API limits already (huge, HUGE accounts, huge environments, each env easily exceeding a /26 netmask, not even taking containers into account). Sooo... scaling up might take a while. Take today for example:

- come in to the office at 7

- start scaling up

- have lunch

- ~15:00 scaleup has finished

- one component is not working, escalating to the respective folks to fix it

- ~17:00 env is ready for work

- 17:01 initiate scaledown process and go home

Sounds like a hell of a productive day!!! -

Junior dev at my workplace keeps telling me how efficient docker is.

He decided to solve his latest task with containers in swarm mode.

As expected, things went sideways, and I had the joy of cleaning up behind him.

A couple of days later, I noticed that I was running low on disk space - odd.

Turns out docker was eating up some 60 GB with a bunch of dangling images - efficient is a funny term for this. -

Manager: "Can we get an accurate report on how many containers we have on the Kubernetes cluster?"

Me: "Well not really since Kubernetes is designed to be dynamic and agile with the number of resources and containers being created and deleted being subject to change at a moment's notice."

Manager: "I want numbers"

Me: "Okay well if we look at a simple moving average over time, we can see how the number of containers changes and then grab a rough answer from that"

Manager: "These numbers look a little round, are you sure these are exact?"

I'm going to throw myself into a pile of used heroin needles and hope i get stuck with whatever the hell this guy has to somehow be a manager while also being this retarded.13 -
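For anyone who wants to try the moving-average idea from the exchange above, here is a minimal Python sketch. The sample counts are invented, and in practice they would come from whatever periodically polls the cluster; the function name is hypothetical too.

```python
from collections import deque
from typing import Iterable, Iterator


def simple_moving_average(samples: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the last `window` samples once the window is full."""
    if window <= 0:
        raise ValueError("window must be positive")
    recent: deque = deque(maxlen=window)
    running_total = 0.0
    for sample in samples:
        if len(recent) == window:
            # The oldest sample is about to be pushed out of the deque.
            running_total -= recent[0]
        recent.append(sample)
        running_total += sample
        if len(recent) == window:
            yield running_total / window


# Hypothetical container counts sampled at regular intervals.
container_counts = [40, 44, 39, 52, 47]
print(list(simple_moving_average(container_counts, window=3)))  # [41.0, 45.0, 46.0]
```

The result is a rough, smoothed answer and not an exact count, which is the whole point of the exchange.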

Go home grafana, you're drunk....

Maybe I shouldn't run 50 containers on a system with 2 cores and 6 GB RAM. -

Sysadmin: Apps on containers and kube is mandatory from now on, scaling is mandatory!

Devs: The systems weren’t designed for containers, we haven’t prepared shit for scaling!

Sysadmin: Hold my beer!

-

Okay guys, this is it!

Today was my final day at my current employer. I am on vacation next week, and will return to my previous employer on January the 2nd.

So I am going back to full time C/C++ coding on Linux. My machines will, once again, all have Gentoo Linux on them, while the servers run Debian. (Or Devuan if I can help it.)

----------------------------------------------------------------

So what have I learned in my 15-month stint as a C++ Qt5 developer on Windows 10 using Visual Studio 2017?

1. VS2017 is the best ever.

Although I am a Linux guy, I have owned all Visual C++/Studio versions since Visual C++ 6 (1999) - if only to use for cross-platform projects in a Windows VM.

2. I love Qt5, even on Windows!

And QtDesigner is a far better tool than I thought. On Linux I rarely had to design GUIs, so I was happily surprised.

3. GUI apps are always inferior to CLI.

Whenever a colleague of mine and I had worked on the same parts in the same libraries, and hit the inevitable merge conflict resolving session, we played a game: Who would push first? Him, with TortoiseGit and BeyondCompare? Or me, with MinTTY and kdiff3?

Surprise! I always won! 😁

4. Only shortly into Application Development for Windows with Visual Studio, I started to miss the fun it is to code on Linux for Linux.

No matter how much I like VS2017, I really miss Code::Blocks!

5. Big software suites (2,792 files) are interesting, but I prefer libraries and frameworks to work on.

----------------------------------------------------------------

For future reference, I'll answer a possible question I may have in the future about Windows 10: What did I use to mod/pimp it?

1. 7+ Taskbar Tweaker

https://rammichael.com/7-taskbar-tw...

2. AeroGlass

http://www.glass8.eu/

3. Classic Start (Now: Open-Shell-Menu)

https://github.com/Open-Shell/...

4. f.lux

https://justgetflux.com/

5. ImDisk

https://sourceforge.net/projects/...

6. Kate

Enhanced text editor I like a lot more than notepad++. Aaaand it has a "vim-mode". 👍

https://kate-editor.org/

7. kdiff3

Three way diff viewer, that can resolve most merge conflicts on its own. Its keyboard shortcuts (ctrl-1|2|3 ; ctrl-PgDn) let you fly through your files.

http://kdiff3.sourceforge.net/

8. Link Shell Extensions

Support hard links, symbolic links, junctions and much more right from the explorer via right-click-menu.

http://schinagl.priv.at/nt/...

9. Rainmeter

Neither as beautiful as Conky, nor as easy to configure or flexible. But it does its job.

https://www.rainmeter.net/

10. WinAeroTweaker

https://winaero.com/comment.php/...

Of course this wasn't everything. I also pimped Visual Studio quite heavily. Same question from my future self: What did I do?

1. AStyle Extension

https://marketplace.visualstudio.com/...

2. Better Comments

Simple patch to make different comment styles look different. Like obsolete ones being shown struck through, or important ones in bold red and such stuff.

https://marketplace.visualstudio.com/...

3. CodeMaid

Open Source AddOn to clean up source code. Supports C#, C++, F#, VB, PHP, PowerShell, R, JSON, XAML, XML, ASP, HTML, CSS, LESS, SCSS, JavaScript and TypeScript.

http://www.codemaid.net/

4. Atomineer Pro Documentation

Alright, it is commercial. But there is not another tool that can keep doxygen style comments updated. Without this, you have to do it by hand.

https://www.atomineerutils.com/

5. Highlight all occurrences of selected word++

Select a word, and all similar get highlighted. VS could do this on its own, but is restricted to keywords.

https://marketplace.visualstudio.com/...

6. Hot Commands for Visual Studio

https://marketplace.visualstudio.com/...

7. Viasfora

This ingenious invention colorizes brackets (aka "Rainbow brackets") and makes their inner space visible on demand. Very useful if you have to deal with complex flows.

https://viasfora.com/

8. VSColorOutput

Come on! 2018 and Visual Studio still outputs monochromatically?

http://mike-ward.net/vscoloroutput/

That's it, folks.

----------------------------------------------------------------

No matter how much fun it will be to do full time Linux C/C++ coding, and reverse engineering of WORM file systems and proprietary containers and databases, the thing I am most looking forward to is quite mundane: I can do what the fuck I want!

Being stuck in a project? No problem, any of my own projects is just a 'git clone' away. (Or fetch/pull more likely... 😜)

Here I am leaving a place where gitlab.com, github.com and sourceforge.net are blocked.

But I will also miss my colleagues here. I know it.

Well, part of the game I guess? -

FUCK capitalist greed! I have fallen for their tricks once again. The daily dosage on my gummy vitamins was three a day but the total gummies in the container wasn't divisible by three so I had to buy three containers and eat one from each per day!

-

So we started looking into docker. As always I needed to do the research and I was fine with it.

We have 4 projects that are sold as one suite, so logically I followed the microservices build structure.

3 months later, after everything has been set up, we get called into a meeting. The whole suite should be a monolith, as microservices don't make sense to the people planning everything.

Ok, pulled my current plans out and made everything a monolith. Just note I also get pulled away to other Business Units to do work for them.

Get pulled into another meeting 2 months later. Why aren't the docker containers in microservices!? It is stupid running as a monolith and we should've done our jobs better etc...

After the meeting my manager and I just sighed and walked to the office. So basically 5 months doing the exact same thing we did in 3 weeks.

Now they want to develop other services and want to strip every method into a microservice and bundle it together.

Life of a DevOps engineer, right! -

"WTF? These records should have been inserted into the table!"

...Hours of checking code, trying to figure out how this is possible, can't find a way to have this scenario happen...

...Add additional debug and troubleshooting code, add more verbose logging, redeploy to all the containers, reset all the tables, many apologies to the boss for the delay....

...Co-worker comes in: "oh, hey, sorry, accidentally deleted some stuff from the database last night before I left." -

I like to come up with superhero names for my docker containers. That makes me feel like the Uber-villain when I run "docker kill elasticman"

-

Between containers, cluster managers and virtual machines we've lost track of where our code even is.

-

Seriously, Ubuntu can go burn in hell far as I care.

I've spent the better part of my morning attempting to set it up to run with the correct Nvidia drivers, Cuda and various other packages I need for my ML-Thesis.

After countless random freezes, updates, downgrades and god-knows-what, I'm going back to Windows 10 (yes, you read that right). It's not perfect but at least I don't have to battle with my laptop to get it running. The only thing which REALLY bothers me about it is the lack of GPU pass-through, meaning running local docker containers relies solely on the CPU. In itself not a huge issue if only I didn't NEED THE GOD DAMN GPU FOR THE TRAINING -

Hololens development forced me into Visual Studio after spending years doing Unity development with MonoDevelop in MacOS.

Why hasn't anyone told me to switch sooner! Thanks to Visual Studio + ReSharper, my brain farts turn into coherent code almost automatically.

I hate that I need MacOS for the iOS development and Win 10 for Hololens. Running Win 10 on Parallels kinda works, but it is a compromise. Developing without headphones/earplugs is out of the question if you don't want to go deaf.

I want all the tools on a single OS so I don't have to maintain multiple computers and, even more importantly, travel with multiple laptops. Just love the security check question "Do you have any electronics with you? Please put it into the container." - "Could I get a couple more containers, please..." -

The internet says "containers are the holy grail, it's cross-platform and you can run your images and get the same result everywhere"

The practice says: nope... it doesn't do that -

So this one made me create an account on here...

At work, there's a feature of our application that allows the user to design something (keeping it vague on purpose) and to request a 3D render of their creation.

Working with dynamically positioned objects, textures and such, errors are bound to happen. That's why we implemented a bug report feature.

We have a small team tasked with monitoring the bug reports and taking action upon it, either by fixing a 3D scene, or raising the issue to the dev team.

The other day, a member of that team told me (since I'm part of the dev team) he had received a complaint that the image a user received was empty. Strange, we hadn't updated the code in a while.

So I check the server, all the docker containers are running fine, the code is fine, no errors anywhere.

Then, as I'm scratching my head, that guy comes back to me and says "I don't know if it can help you, but it's been doing it for a week and a half now".

"And we're only hearing about it now?!", I replied.

"Well, I have bug reports going back to the 15th, but we haven't been checking the reports for a while now since everything was fine", he says as if it was actually a normal thing to say.

"How can you know everything is fine if you're not looking at the thing that says if there's an issue?!", I replied with a face filled with despair.

"Well we didn't receive any new reports in a while, so we just stopped looking. And now the report tool window is actually closed on my machine", he says with a smile and a little laugh in his tone.

In the end, I got to fix the server issue quite easily. But still, the feature wasn't working for 1.5 weeks and more than 330 images weren't sent properly...

So yeah, Doctor, the patient's heart is beating again! Let's unplug the monitor, it should be fine.

Welcome to my little piece of hell :) -

Actually I'm pleasantly surprised about Windows' stability nowadays. It's capable of running for up to a week with no stability issues, whereas systemd on the other hand.. let's just say that my Arch containers could do better right now.

Data mining aside, damn man.. Microsoft is improving for once! Is this the so-many'th unusable/somewhat stable switch? I mean, it's not like we haven't seen that happen yet! Windows 98, shit! Windows 2000, kinda alright! Windows Me, shit! Windows XP, kinda alright! Windows Vista, oh don't even get me started on that pile of garbage! Windows 7, again kinda okay! Windows 8, WHERE THE FUCK DID THAT START MENU GO YOU MOTHERFUCKERS?!!! Windows 10, well at least that Start menu got fixed. Then it got into some severe QA issues, which now seem to have gotten somewhat fixed again.

I'm starting to see a pattern here! 🤔 -

We have a long time developer that was fired last week. The customer decided that they did not want to be part of the new Microsoft Azure pattern. They didn't like being tied to a vendor that they had little control over; they were stuck in Windows monoliths for the last 20 years. They requested that we switch over to some open source tech with scalable patterns.

He got on the phone and told them that they were wrong to do it. "You are buying into a more expensive maintenance pattern!" "Microsoft gives the best pattern for sustaining a product!" "You need to follow their roadmap for long term success!" What a fanboy.

Now all of his work including his legacy stuff is dumped on me. I get to furiously build a solution based on scalable node containers for Kubernetes and some parts live in AWS Lambda. The customer is super happy with it so far and it deepened their resolve to avoid anything in the "Microsoft shop" pattern. But wow I'm drowning in work. -

+ Death of Wix and WordPress (hopefully)

+ Abandonment of Java

- Bajillion JavaScript frameworks

- Software engineers trying to automate everything

+ Cloud blockchain AI containers that mine bitcoin with machine learning technology in high demand -

Thanks to mandatory password change, today:

- My windows account got locked because my phone kept logging into wifi using old password.

- Google Hangouts were silently running in background with old session until I re-opened it. Work of others delayed by 4 hours due to missing message notifications.

- Docker for Windows lost credentials needed to use SMB mounts - 1h of debugging why my containers mount empty folders (now I will know)

- Google G-Sync for Outlook asked for new password on outlook restart - few mails delayed.

All of that for sake of security that could be easily solved with 2FA instead, not faking that "I do not change number at the end of my password" -

I had this weird dream(emphasis on dream)

I was in a resort in bali waiting for my drinks and this cute girl comes over to my table.

Me : omg finally i can get a girlfriend

Me : hello beautiful

Her : hey i have this problem with my website *shows the messed up site with no divisions/containers * "can you fix this?"

Me : okay ;_;

PS : i started learning css, html and 2 other web technologies a week ago, and this is already happening to me, should i quit? -

A while back I took over responsibility for getting one of our developers up to speed, after the other guy basically gave up on him.

Management insisted that this new recruit was our guy. I was kind of going along, since I had been there during the recruit's first meeting with us, and he seemed to know his stuff.

I was very wrong. He was supposed to have been working with kubernetes, but suddenly did not know what a container was. After explaining it to him, he said something along the lines of “yeah, sure, I was only testing you, I know all about this”.

He did the same thing for a number of other technologies. Always said that he knew very well what it was, and that I did not need to teach him those things.

Yet, he always seemed to get stuck with basic stuff, like installing node, setting up env-vars, starting docker-containers locally and that sort of things.

I mean, it is perfectly fine to say that you don’t know. I even consider it a great answer; it shows honesty and makes me trust you more. But with this guy, it was just impossible to get him up and running, since he always “knew”, but yet always needed help.

We had to let him go. Since I had been the one who had spent the most time with him, it was natural that I was to be the one to tell him. I was not looking forward to it; I’m not really a people person. Still, I was calm and honest with him and basically told him, kind of harshly, that I had found it impossible to work with him.

He then asked me if he could put me on as a reference for his future job-applications. I told him politely that I did not think that was a great idea. He asked why, I told him I would be unable to say anything that would benefit him. He then asked me to lie.

I didn’t know what to say, except for “no!”. Never saw him again after that. -

So we found an interesting thing at work today...

Prod servers had 300GB+ in locked (deleted) files. Some containers marked them for deletion but we think the containers kept these deleted files around.

300 GB of ‘ghost’ space being used and `du` commands were not helping to find the issue.

This is probably a more common issue than I realize, as I’m on the newer side to Linux. But we got it figured out with:

`lsof / | grep deleted` -
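For the deleted-but-still-open files rant above: `du` walks directory entries, so it cannot see a file whose name is gone while a process still holds it open, yet the space stays allocated until the last descriptor closes. A minimal Python sketch of roughly what `lsof / | grep deleted` reports, assuming a Linux host with `/proc` mounted. The function name `find_deleted_open_files` is made up for illustration, and without root it only sees processes you are allowed to inspect.

```python
import os
from pathlib import Path


def find_deleted_open_files(proc_root="/proc"):
    """Return (pid, path, size_in_bytes) for open files whose name was deleted."""
    found = []
    for fd_dir in Path(proc_root).glob("[0-9]*/fd"):
        pid = int(fd_dir.parent.name)
        try:
            fds = list(fd_dir.iterdir())
        except OSError:
            # The process exited, or we lack permission to inspect it.
            continue
        for fd in fds:
            try:
                target = os.readlink(fd)
                if not target.endswith(" (deleted)"):
                    continue
                # stat() follows the /proc link to the still-open inode.
                size = os.stat(fd).st_size
            except OSError:
                continue
            found.append((pid, target, size))
    # Largest space hogs first.
    return sorted(found, key=lambda item: item[2], reverse=True)


if __name__ == "__main__":
    for pid, path, size in find_deleted_open_files()[:20]:
        print(f"{size / 1e9:8.2f} GB  pid={pid}  {path}")
```

Restarting (or otherwise closing the descriptor in) the listed process is what actually frees the space.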

Installing my company's microsystems architecture to run locally is a pita because it is 60 GB of docker containers. With my 256 GB Macbook, that's a scaling problem for the years to come.

-

They give you 2 containers, one with one amoeba, the second with 2 amoebas.

Amoebas divide themselves into 2 identical amoebas after 3 minutes.

The container with 2 amoebas gets filled up after 3 hours.

How long does it take the one with one amoeba to get filled up?

The test was named:"Javascript Test"....

I first thought, should I write this in JS?

Spoiler: the answer is 3h and 3 minutes.

But why? What's the link with JS? -
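The reasoning behind the 3h 3min answer in the puzzle above can be checked with a few lines of Python (a sketch, assuming both containers have the same capacity and every amoeba divides exactly every 3 minutes): after its first division the single amoeba is in exactly the state the other container started in, so it is always one division behind.

```python
def minutes_to_fill(start_count, capacity, minutes_per_division=3):
    """Minutes until a population that doubles every division reaches capacity."""
    count, minutes = start_count, 0
    while count < capacity:
        count *= 2
        minutes += minutes_per_division
    return minutes


# The container that starts with 2 amoebas is full after 3 hours = 60 divisions.
divisions = 180 // 3
capacity = 2 * 2 ** divisions

print(minutes_to_fill(2, capacity))  # 180
print(minutes_to_fill(1, capacity))  # 183
```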

Boss only likes stuff he can see and that looks pretty. Doesn't understand code, servers, containers, DBs, etc. Praise is attributed by something looking nice in the frontend, whether or not it does crazy stuff behind the scenes.

Spent a week working on a project whilst boss was away. Got to about Thursday and thought, oh poop, I've built all this API stuff, but not much frontend. So I panic built frontend screens with no functionality just so I had something to show.

Wish I had another dev to share backend progress with (and code review)... -

Debugging a request that got lost in a myriad of containers of a scaled application....

It wouldn't be worth a rant if there wasn't some kinky SM stuff in it, wouldn't it?

Regexes. The fucker who wrote a lot of the NGINX (🤢) configuration decided to use the Perl Regexes with named group matching. A lot.

So now I have to fight wild variables supposedly coming from nowhere (as they stem from the named groups)… fucking single location redirects instead of maps.... And I have to write explanatory documentation while going down the rabbit hole of trying to find out where the fuck that shitty frigging bastard redirected wrong.

I really wish I could eradicate the person who wrote this shit.... -

A few days ago Aruba Cloud terminated my VPS's without notice (shortly after my previous rant about email spam). The reason behind it is rather mundane - while slightly tipsy I wanted to send some traffic back to those Chinese smtp-shop assholes.

Around half an hour later I found that e1.nixmagic.com had lost its network link. I logged into the admin panel at Aruba and connected to the recovery console. In the kernel log there was a mention of the main network link being unresponsive. Apparently Aruba Cloud's automated systems had cut it off.

Shortly afterwards I got an email about the suspension, requesting that I get back to them within 72 hours.. despite the email being from a noreply address. Big brain right there.

Now one server wasn't yet a reason to consider this a major outage. I did have 3 edge nodes, all of which had equal duties and importance in the network. However an hour later I found that Aruba had also shut down the other 2 instances, despite those doing nothing wrong. Another hour later I found my account limited, unable to login to the admin panel. Oh and did I mention that for anything in that admin panel, you have to login to the customer area first? And that the account ID used to login there is more secure than the password? Yeah their password security is that good. Normally my passwords would be 64 random characters.. not there.

So with all my servers now gone, I immediately considered it an emergency. Aruba's employees had already left the office, and wouldn't get back to me until the next day (on-call be damned I guess?). So I had to immediately pull an all-nighter and deploy new servers elsewhere and move my DNS records to those ASAP. For that I chose Hetzner.

Now at Hetzner I was actually very pleasantly surprised at just how clean the interface was, how it puts the project front and center in everything, and just tells you "this is what this is and what it does", nothing else. Despite being a sysadmin myself, I find the hosting part of it insignificant. The project - the application that is to be hosted - that's what's important. Administration of a datacenter on the other hand is background stuff. Aruba's interface is very cluttered, on Hetzner it's super clean. Night and day difference.

Oh and the specs are better for the same price, the password security is actually decent, and the servers are already up despite me not having paid for anything yet. That's incredible if you ask me.. they actually trust a new customer to pay the bills afterwards. How about you Aruba Cloud? Oh yeah.. too much to ask for right. Even the network isn't something you can trust a long-time customer of yours with.

So everything has been set up again now, and there are some things I would like to stress about hosting providers.

You don't own the hardware. While you do have root access, you don't have hardware access at all. Remember that therefore you can't store anything on it that you can't afford to lose, have stolen, or otherwise compromised. This is something I kept in mind when I made my servers. The edge nodes do nothing but reverse proxying the services from my LXC containers at home. Therefore the edge nodes could go down, while the worker nodes still kept running. All that was necessary was a new set of reverse proxies. On the other hand, if e.g. my Gitea server were to be hosted directly on those VPS's, losing that would've been devastating. All my configs, projects, mirrors and shit are hosted there.

Also remember that your hosting provider can terminate you at any time, for any reason. Server redundancy is not enough. If you can afford multiple redundant servers, get them at different hosting providers. I've looked at Aruba Cloud's Terms of Use and this is indeed something they were legally allowed to do. Any reason, any time, no notice. They covered all their bases. Make sure you do too, and hope that you'll never need it.

Oh, right - this is a rant - Aruba Cloud you are a bunch of assholes. Kindly take a 1Gbps DDoS attack up your ass in exchange for that termination without notice, will you? -

A lot of docker containers.

I often have to use Docker containers even though I don't understand Docker that well yet, and quite a few containers come with literally zero documentation or bad docs.

That goes both for how to set the containers up and for how to debug stuff.

This is one of the big reasons why I'm not that big a fan of Docker yet. -

Inherited a simple marketplace website that matches job seekers and hospitals in healthcare. Typically, all you need for this sort of thing is a web server, a database with search.

But the precious devs decided to go micro-services in a container and db per service fashion. They ended up with over 50 docker containers with 50ish databases. It was a nightmare to scale or maintain!

With 50 databases for a simple web application that clearly needs to share data, integration testing was impossible, data loss became common and very hard to pin down, debugging was a nightmare, and it was dangerous to change a service’s schema as dependencies were all tangled up.

The obvious thing was to scale down the infrastructure, so we could scale up properly, in a resource driven manner, rather than following the trend.

We made plans, but the CTO seemed worried about yet another architectural change, so he invested in more infrastructure services (Kubernetes, Zipkin, Prometheus, etc.) without any idea what problems those infra services would solve. -

So apparently I can't test my apps on my own device without paying for an Apple Developer certificate.

I knew you need to pay for it if you want to publish/distribute your app but c'mon... This is ridiculous.

My app was literally a fresh app creation, a fucking white screen one page fucking app, and when I tried to run it on my iPhone, I ended up having this problem:

dyld: Library not loaded: @rpath/libswiftCore.dylib

Referenced from: /var/containers/Bundle/Application/BCD48EAA-82C2-46F6-ADEE-45C740C3B66D/HWorld.app/HWorld

Reason: no suitable image found. Did find:

/private/var/containers/Bundle/Application/BCD48EAA-82C2-46F6-ADEE-45C740C3B66D/HWorld.app/Frameworks/libswiftCore.dylib: code signing blocked mmap() of '/private/var/containers/Bundle/Application/BCD48EAA-82C2-46F6-ADEE-45C740C3B66D/HWorld.app/Frameworks/libswiftCore.dylib'

(lldb)

If any of you guys know how to solve it without paying (even more) PLEASE let me know

THANKS -

My last successful project was a small project I did together with my gf in JavaScript. She needed to write some algorithms for school for transferring freight containers and picking them up. I made some visuals and buttons for her to press, and she added a file with algorithms based on the helper functions I created, such as GetFirstEmptyPosition() or PlaceContainerAt(x, y).

She learned a bit of programming. And I learned a bit of JavaScript. -

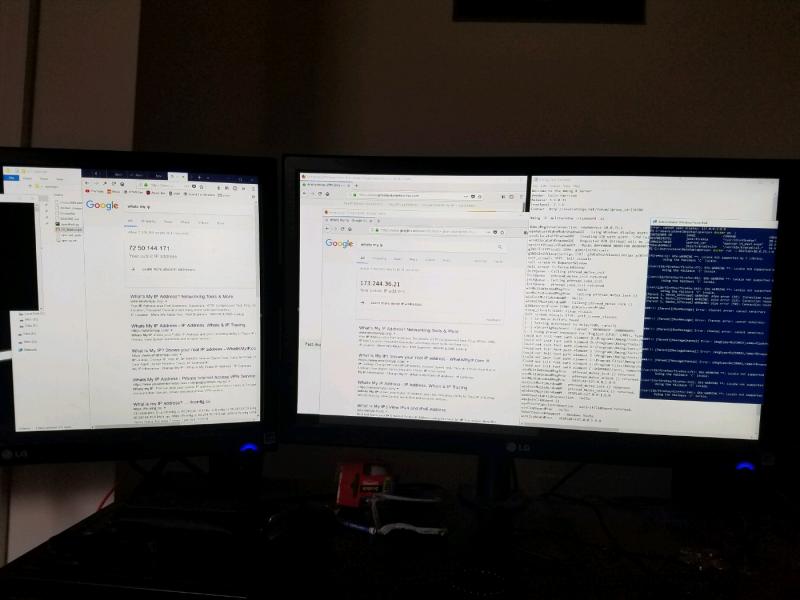

Two weeks of my life! All of this is on a win10 host with docker for windows. This is Docker running openvpn, and docker running Firefox in another container sharing VPN access from first container and also opens an x11 window port for Firefox GUI. Then x11 window server on Windows host to receive GUI. So left is firefox clearnet running native, right is Firefox over vpn in all containers, simultaneously.

-

Management: List your most significant achievement during this cycle.

Me: Developed and implemented a full stack of database micro containers that allow scalability and reusability.

-

I am currently blocked from doing my job by a firewall policy handed down from corporate that prevents WSL2 from connecting to the internet. Three days of no dev environment and counting.

We make linux software to be hosted on linux in linux containers in linux. We use linux command line tools to make it work.

"NO! WE ARE THE ALL-POWERFUL IT DEPARTMENT AND YOU MUST USE WINDOWS BECAUSE FUCK YOU THAT'S WHY."14 -

Between high school and college, working in a circuit board manufacturing storeroom.

Fun fact: when we are bagging small boards, we do not gently lay them in containers, they're usually thrown at least 6 feet into a bin of the same type of board after they're placed in the bag. We also don't remake a board when pins are bent, we just bend them back with tweezers. And you know that rule about not touching the gold connectors... Yeah... So much for that... Did I remember to mention that these boards are for medical equipment?

On the bright side, we at least have electrostatic discharge control going on all the time. -

Fuck i hate myself right now...

> Wanted to install minikube on my homeserver(which doesn't have a graphics card in it)

> Error: Virtualization is not enabled

> Take the server out of my rack, open my desktop pc, take the gpu out, put it in my server, and then go into bios.

> Find that virtualization is enabled.

> Realize that I'm running a dozen docker containers on a daily basis from my server, so OF FREAKING COURSE virtualization is enabled...

So after all that, I figure out that I should probably just google my issue, which leads me to find out it's just an issue with virtualbox, and simply running 'minikube config set vm-driver none' fixes it, and I am now running minikube.............

Took half an hour of work to realize that I'm a complete fucking idiot who shouldn't be allowed near a computer -

Added a bond interface in my Proxmox installation for added cromulence, works, reboot again, works, reboot once more just to be sure, network down.. systemctl restart networking, successfully put the host's network back up.. lxc-attach 100, network in containers is still down apparently.. exit container, pct shutdown 100, pct start 100, lxc-attach again... Network now works fine in containers too.

Systemd's aggressive parallelization that likely tried to put the shit up too early is so amazing!

I'm literally almost crying in despair at how much shit this shitstaind is giving me lately.

Thank you Poettering for this great init, in which I have to manually restart shit on reboot because the "system manager" apparently can't really manage. Or be a proper init for that matter.

/rant

And yes I know that you've never had any issues with it. If you've got nothing better to say than that then please STFU. "Works for me" is also a rant I wrote a while back. -

Got told by a senior engineer to basically fuck off with my standard library containers like vector because they are used by people who don't know how to write code in C++ and don't know how to handle pointers.

Am I wrong for trying to use as much code as possible from the standard library? -

Forget about a missing semicolon. I was forwarding Neo4j to port 4747 and calling it in Asp.Net with port 7474 in Docker containers. It took me 6 hours to figure that one out. Lol, It's time for the weekend.
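A transposed port like 4747 vs 7474 shows up in seconds if you probe both numbers before digging into application code. A minimal Python sketch of such a check; the helper name `is_port_open` and the use of `localhost` are assumptions for illustration, not part of the original setup:

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # The two ports from the rant: one was forwarded, the other was being called.
    for port in (4747, 7474):
        state = "open" if is_port_open("localhost", port) else "closed"
        print(f"localhost:{port} is {state}")
```

If the port the application calls reports closed while its mirror image reports open, the mismatch is in the port mapping rather than in the code.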

-

My productivity has gradually increased until now by using:

Linux-server+VSCode (+Git+Terminal)+tmux+tmux-resurrect

Any further suggestion on dev tooling setup would be appreciated.

I primarily work on DevOps projects - bash scripts, linux server apps, containers, kubernetes,

-

Hello guys. Today I bring you my list of top 3 programs that use too much memory

🪟 Windows 10+

⚛️ Web browsers, Electron

🐋 Docker containers

Honorable mention: ☕Java

The developers of those programs should put more effort into optimizing memory usage -

Containerize everything

My containers are too big, let's just remove some useless binaries...

Later

~ $ less /var/log/foobar.log

/bin/sh: less: not found

~ $ cat /var/log/foobar.log

/bin/sh: cat: not found

~ $ ls /var/log/foobar.log

/bin/sh: ls: not found

~ $ su

/bin/sh: su: not found

~ $ exit

/bin/sh: exit: not found -

I'm learning docker and I just started a container running a Linux distro.

What was the first command I run in the container?

rm -Rf / --no-preserve-root -

"Millions of slaves"

"When you kill it, you kill everything."

-- Guy at work doing presentation about docker -

Today was a good day.

I was told to use in-house BitBucket runners for the pipelines. Turns out, they are LinuxShellRunners and do not support docker/containers.

I found a way to set up containerd, set up all the dependencies and successfully run my CI tasks using dagger.io (w/o direct access to the runner -- only through CI definition yaml and Job logs in the BitBucket console).

Turns out, my endeavour triggered some alerts for the Infra folks.

I don't care. I'm OOO today. And I hacked their runners to do what I wanted them to do (but they weren't supposed to do any of it). All that w/o access to the runners themselves.

It was a good day :)))))

Now I'll pat myself on my back and go get a nice cup of tea for my EOD :) -

Get a request for commit rights for my container repo; another developer would be lovely. Let's see what they know and want to work on.

*reading message*

*reading message*

"...I'd like to enable your containers to hold other containers."

They already do. Stay the fuck away from my code. -

Why the f*** do people add their BD on LinkedIn??? Who wants to know when a f**** recruiter gets another year older??? If I am close to you I will know or have you on FB.... WTF, who anyway had the idea that would be a good feature to add???

-

The fuck is up with venv, conda, pip, pip3, python3, CRYPTOGRAPHY_OPENSSL_NO_LEGACY and "you can't install packages in docker based environments" DUDE STOP WHAT THE FUCK

How the fuck is that the scripting language of choice? It has by far the most confusing and messy runtime setup. Like it's easier to make sense of Javas version-shenanigans than this bullshit.

And then you think well what gives. Runs > python ...

"This environment is externally managed and you can go kill yourself, JUST LOOK UP PEP-666" LIKE NO YOU FUCK, JUST RUN THE FUCKING SCRIPT!

It's nice you thought about separation of versions but DOCKER DOCKER DOCKER THERE ARE CONTAINERS WHY THE FUCK DO YOU DO SOME BULLSHIT WITH ENVS IN FOLDERS REQUIRING SOME RUNTIME BULLSHIT WHAT NO STOP WWHYYY -

Don't you just love it when an official Docker image suddenly switches from one base image to another, and they automatically update all existing tags? Oh you've had it locked to v1.2.3, guess what, v1.2.3 now behaves slightly differently because it's been compiled with OpenSSL 3. Yeah, we updated a legacy version of the software just to recompile it with the latest version of OpenSSL, even though the previous version of OpenSSL is still receiving security fixes.

I don't think it's the image maintainers or Docker's fault though. Docker images are expected to be self-contained, and updating the base image is necessary to get the latest security fixes. They had two options: to keep the old base image which has many outdated and vulnerable libraries, or to update the base image and recompile it with OpenSSL 3.

What really bothers me about the whole thing is that this is the exact fucking problem containers were supposed to solve. But even with all the work that goes into developing and maintaining container images, it still isn't possible to do anything about the fact that the entire Linux ecosystem gives exactly zero fucks about backwards compatibility or the ability to run legacy software. -

In Flutter, there’s something called TextButton.icon, which renders a button that looks like this:

(👍🏼 Like Button)

But this tiny twat decided to use countless nested columns in nested rows and containers just to create a fucking button! This particular class contains 1438 lines of code! Most of the code is redundant, nested fucking shit.

I want to punch this guy so hard but I do not intend to start a ww3 with china.

That means I have no choice but to refactor it as I implement a feature requested by the product team, and every component breaks. It is like a minefield here. One change, and the entire application crashes.

And there are useless mother fucking Sherlock fucking Holmeses who keep telling me “don’t worry about refactoring now, just complete the task.” Like seriously, “how in the name of mother fucking god of all arseholes can I complete my task when I can’t change even one component?”

These people are fucking geniuses. Their intelligence resurrected Einstein and made him die a second time. -

So I just was invited to look at an internal issue at a company that has modified BEM styling rules for their website, because the website started looking very weird on some pages..

I instantly knew that someone had to have fucked with them, because there's no way margin--top-50 all of a sudden isn't working.

Looked at their git history and apparently a senior approved a junior's style change, where he modified things like margin--bottom-0 to be 500px or block--float-left to be float: right in specific containers with an id like "#uw81hf_"

WHY DO YOU APPROVE THIS GARBAGE WITHOUT CHECKING IT?! AND HOW DID THIS PASS A SECOND CHECK?!!! WTF -

We'd just finished a refactor of the gRPC strategy. Upgraded all the containers and services to .Net core 3, pushed a number of perf changes to the base layer and a custom adaptive thread scheduler with a heuristic analyzer to adjust between various strategies.

Went from 1.7M requests/s on 4 cores and 8gb ram to almost 8M requests/s on the same hardware; we ended up having to split everything out into distributed 2-core instances because we were bottlenecking against 10gb/e bandwidth in AWS. -
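A rough back-of-the-envelope check on why the network becomes the limit at that request rate. This is only a sketch under two assumptions that the post does not state: that "10gb/e" means a 10 Gbit/s link, and that the whole link is available to request traffic.

```python
LINK_BITS_PER_SECOND = 10e9  # assumption: "10gb/e" means a 10 Gbit/s link
REQUESTS_PER_SECOND = 8e6    # "almost 8M requests/s" from the post

# Bytes of link capacity available per request, counting both payload and
# protocol overhead, if the link is completely saturated.
bytes_per_request = LINK_BITS_PER_SECOND / 8 / REQUESTS_PER_SECOND
print(f"{bytes_per_request:.0f} bytes per request on the wire")
```

Under those assumptions each request gets only about 156 bytes of wire budget, so splitting the load across several smaller instances, each with its own network allocation, is a plausible way around the bottleneck.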

For whatever ungodly reason my containers library, which has extensive testing, profiling, and benchmarks against other containers libraries receives regular emails directed towards me about it, always one of two things

1) "don't reinvent the wheel" I have to assume these people haven't looked at the performance characteristics or features at all. I didn't waste away weeks of my life. I needed something and couldn't find it anywhere. I'm outperforming many crap implementations by nearly an order of magnitude, and can offer queries upon the containers in both generalized and specialized forms. As an analogy, I made airless 3d printed wheels, and people are regularly telling me I should still be using ancient wooden spoke wheels; they probably would argue in favor of using a horse drawn carriage as well. How is it possible technically minded people can also be so anti-progress?

2) "Please rewrite this in X language." You know what? YOU rewrite it. I chose what I did because it made it easy to do what I needed to do. Hilariously, the languages I get asked to use most often are the same ones whose container libraries perform worst in the benchmarks.

Both sound like half baked developers trying to sound superior. Pull your head out of your ass and actually outperform me and others. I'm so fucking sick of this "all talk no action" bullshit. -

During one of our visits to Konza City, Machakos County in Kenya, my team and I encountered a big problem: access to viable water. Most times we asked for water, we were handed a bottle of bought water. For a day or a few days this would be affordable for some, but over a lifetime it is far too expensive for a middle-income person. Of ten people we encountered, 8 complained about the lack of a proper mechanism for accessing viable water. To us this was a pressing problem that needed to be sorted out immediately. The majority of the people were unable to conduct income-generating activities such as farming because of the quality of the water as well as its scarcity.

Such a scenario demands an immediate solution. Various approaches have been put into practice for water conservation and management, but most of them have proven unsustainable. As part of our research we also looked at the formal mechanisms put in place to ensure proper acquisition of water. One of them was tree planting, which was not sustainable at all; in addition, some piped water was being transported over very long distances, which did not solve the immediate needs of the people. We found out that the area has a large body of salty water that was not viable for any constructive activity. This was hint enough to help us find a way to curb the challenge: the presence of salty water was the first step of our solution.

SOLUTION

We came up with an IoT-based system to help curb this problem. Our system purifies the salty water through electrolysis. The device is placed in an area where the body of water is located; it drills to a suitable depth and allows the salty water to flow into it. Various sets of tanks and valves are situated next to it, and these tanks hold the salty water temporarily. A high-power source is then connected to each tank, which enables the separation of chlorine ions from hydrogen ions by electrolysis. The salt is then separated and allowed to flow out of the lower chamber of the tanks, allowing clean water to flow on to the next tanks, which contain various chemicals to remove any remaining impurities. The entire process is managed by sensors. Water alkalinity, turbidity and pH are monitored and relayed to a mobile phone; a predictive analysis of the stored data history then decides whether to increase or decrease the flow of water through the valves. This being a hot area, we opted to maximize the use of solar power, which is available almost constantly and can provide us with a supply of at least 440V to facilitate faster electrolysis of the salty water.

This being a drought-prone area, it was key that the outlet water be cold and comfortable for consumers to use, so we also coupled our output chamber with cooling tanks. These tanks are managed via our mobile application, to which their temperature and humidity readings are sent. This information is key in helping us produce water in an optimum state, enabling us to fully manage the supply and intake of water from the water bodies.

Using natural language processing, we are able to control the flow and feeding of the valves by voice: one could say “The output water is too hot”, and the system would respond by increasing the speed of the fans and making the tanks provide colder water. In addition to this system, we have prepared short video tutorials and documents enlightening people on how to conserve water and maintain the optimum state of the green economy.

IBM/OPEN SOURCE TECHNOLOGIES

For a start, we have implemented a demonstration of our project using ESP8266 microcontrollers, sensors, transducers and low-payload containers. So far we have used Google’s Firebase cloud platform to relay data to and from the mobile phone in real time. This has proven workable in most cases, whether on a small or a large scale. However, we have met challenges such as changes in the fingerprint keys that render our device unworkable; we intend to overcome this problem by moving to the IBM Bluemix platform.

We use C++ for our microcontrollers and sensor communication; in some cases we use Python to run neural networks for our microcontrollers.

Any feedback concerning this project, please?

-

!dev

Two containers had an accident this morning. Result? Wasted 45 mins in traffic and my 90 min commute to the office became 135 mins.

// I really don't care about casualties and stuff. Is it me alone who is too self centered? 🤔 -

We have a customer that doesn't have a SINGLE linux admin. So now I have to fight with Docker EE on Windows Server 2019 (linux containers). Just fucking kill me already. Nothing works and when it does it just seems so shaky. Not like I didn't try to tell my team that linux containers on Docker EE for windows aren't officially supported and highly experimental.

But wtf do I expect from someone that STILL sells SAP and from someone that is stupid enough to buy it. -

I like how a co-worker is expecting a Windows Container to work in Linux, and vice versa.

No, it doesn't work like that you fucking buffoon, Linux rootfses need the Linux kernel (hence why it runs on WSL2 or Hyper-V using LCOW), and the same can be said for Windows Containers.

How dumb of a human being must you be to assume everything should "just work" in a container? -

Yes its completely necessary to have a spring server with a mysql database with docker containers all over your ass for 3 fucking endpoints and a (url, varchar, varchar) schema. Fuck you. How the fuck do i run all this shit and how do you expect me to create a frontend for something that has no documented endpoints?? Fuck you.

In other news, im now a senior. -

I am sure now, my server hates me T_T

opened an SSH from Vultr portal and after working for a few minutes that thing suddenly rebooted, am I a bad person :(

In its defense I had two docker containers that literally ate all the 512MB ram available :3 -

Don't forget, docker desktop will require paid licenses after 31/1/22

Docker Desktop remains free for small businesses (fewer than 250 employees AND less than $10 million in annual revenue), personal use, education, and non-commercial open source projects.

Just a friendly reminder to fuck docker and migrate to a better solution. -

Hey guys! lambda is amazing! Docker containers! They said the whole amazing point with containers is that they run the same everywhere! Except not really, because lambda 'containers' are an abomination of *nix standards with arbitrary rules that really don't make sense! That's ok though, you can push your shit to fargate, then it will work more like those docker containers you know and love and can run locally! Oh wait! fargate is a pain in the ass x 2 just to set up! You want to expose your REST api running on a container to the world? well ha, you'd better be ready to spend literally 2 weeks to configure every fucking piece of technology that ever existed just to do that!!!! it's great, AWS, i love it, i'm so fucking big brained smart!!!

give me a break.... back in my day you'd set up an nginx instance, put your REST / websocket / graphQL service whatever behind it, and call it a day!!!!!!!

even with tools like pulumi or terraform this is a pain in the ass and a half, i mean what are we really doing here folks

way too complicated, the whole AWS infrastructure is setup for companies who need such a level of granularity because they have 1 billion users daily... too bad there are like 5 companies on the planet who need this level of complexity!!!!!!!

oh, and if your ego is bashed because of this post, maybe reread it and realize you're the 🤡

i'm unhappy because i was lied to. docker containers are docker containers, until they aren't. *nix standards are *nix standards, until they aren't

bed time. -

Hey! This is a followup to my last story.

TL;DR: I'm thinking of quitting my old job; got an offer at a startup, about the same pay, but much better working conditions.

First of all, the meeting with my lead. It was a performance report on her side to me, and I got 100 to 110% in performance in all points. My lead said "this team without you wouldn't be this team anymore" - which makes me feel a little bit bad for her if I decide to quit. She is a great team lead, but I don't believe the old company is worth my time anymore.

Now to the new company. Shortly after that performance report meeting, I had a call with the ceo, and what do I have to say besides: What a cool dude. He listened to me, asked me questions about my previous jobs (not just as programmer) and so on. But because first looks are deceiving, I went to their office last thursday. And wow. They are exactly what I imagined them to be. Cool, young folks, 100% tech enthusiasts, and open minded.

One of the new hires in the new company wanted a 6 months internship between his studies. Instead they offered him a full time job - for the 6 months. They even offered me to pay back my scholarship that I will owe my old company for leaving early. This is awesome.

The only things that will be worse than my old job are, that I have to negotiate payment instead of yearly increases, 4 days less paid vacation, so only 26 days, and 40h weeks. And they have no workers council, which isn't good, but it's not the worst either.

I got them fixed on 57.000€, not including an up to 10.000€ annual bonus. The way you achieve your bonus seems good too. It's split in two parts, internal and external bonus. Internal bonus is when you engage with internal events like tech calls, sharing your knowledge on your main IT topics, etc. External Bonus is a bit more complicated, but also straightforward. You work on projects for customers, and if you have less than 3 weeks a year in which you don't participate in a project, you get the full bonus.

Last Friday, I requested a certificate of employment from my current team lead. This was odd for her because I have never done it before, and she asked why I requested it. I told her that we can talk about it, and she agreed but hasn't called me yet.

Lastly, another good friend of mine will be employed by my team soon, but for a fraction of the pay that I currently receive! He is doing the exact same work, and even worse, he is doing project management for his main developer project too! And he is getting paid less... I just can't...

Yesterday we needed to update a few cloud instances. The only other person besides me who knows about setting up CI/CD and our OpenShift containers works part-time, two days a week, and his trainee didn't know anything, so it was up to me. This isn't hard or anything, but it shows that this system our management maintains will fail soon, maybe even with me going? I sure hope so tbh.

One of you guys said I should go to my team lead and negotiate higher pay, but the truth is that, because we are a big ISP, we have a collective agreement for pay and are grouped by tasks (which is bullshit btw, because I'm doing tasks that are paid much higher than what I currently get). This also means that I cannot simply jump to another group, and can only increase my current pay to about 115%, which happens automatically in 5% steps every year up to 115%. Anything above is considered extra, and I don't think they will go for it.

I will decide this week about my future at the old company, but I really don't know what to do...2 -

Because I am very interested in cyber security and plan on doing my master's in IT security, I always try to stay up to date with the latest news and tools. However, sometimes it's a good idea to ask like-minded people how they approach these things, and maybe I can learn a couple of things. So maybe people like @linuxxx have some advice :D Let's discuss :D

1) What's your go-to OS? I currently use Antergos x64 and a Win10 dual boot. Most likely you guys will recommend Linux, but if so, what distro, and why? I know that people like Snowden use QubesOS. What makes it much better than other distros? Would you use it for everyday tasks or is it overkill? What about Kali or Parrot-OS?

2) Your go-to privacy/security tools? Personally, I am always connected to a VPN with OpenVPN (kill switch on). In my browser (Firefox) I use uBlock and HTTPS Everywhere. I used NoScript for a while but had more trouble than actual use with it (it blocked too much). Search engine is DDG. All of my data is stored in VeraCrypt containers, so even if the system is compromised nobody is able to access any private data. Passwords are stored in KeePass. What other tools would you recommend?

3) What websites do you browse for competent news reports on the IT security scene? What websites can you recommend for finding academic writeups/white papers about certain topics?

4) Google. Yeah, a love-hate relationship, but it's hard to completely avoid it. I do actually have a Google Home device (don't kill me), which I use for calendar entries, timers, alarms, reminders, and weather updates, as well as IoT stuff such as turning my LED lights on and off. I wouldn't mind switching to an open source solution which is equally good, however so far I couldn't find anything that would be a good option. Suggestions?

5) What actions do you take to secure your phone and prevent things such as being tracked/spied on? Personally, so far I haven't really done much except for installing AdAway on my rooted device as well as the same Firefox plugins I use on my desktop PC.

6) Are there ways to create mirror images of my entire Linux system? Every now and then stuff breaks that is tedious to fix, and reinstalling the system takes a couple of hours. I remember from Windows that software such as Acronis or Paragon can create a full image of your system that you can back up and restore at any point to get a stable, healthy system back (without the need to install everything by hand).

7) Would you encrypt the boot partition of your system, even tho all data is already stored in encrypted containers?

8) Any other advice you can give :P ?12 -

At work everybody uses Windows 10. We recently switched from Vagrant to Docker. It's bad enough that I have to use Windows; it's even worse to use Docker for Windows. If, God forbid, you're ever in this situation and have to choose, pick Vagrant. It's way better than whatever Docker is doing...

So upon installing version 2.2.0.0 of Docker for Windows, I found myself in a situation where my volumes would randomly unmount themselves, and I was going crazy as to why my assets were not loading. I tried 'docker-compose restart' or 'down' and 'up -d', and I went into Portainer to check and manually start containers. At some point it works again, but it doesn't last long before it breaks. I checked my yml config and asked my colleagues to take a look. They also experience problems, but not like mine. There is nothing wrong with the configuration. I went to check their GitHub page and saw there were a lot of issues opened on the same subject, so I opened one too. It's been over a week and I have found no solution to this problem. I tried installing an older version but it still didn't work.

Also, I think it might've bricked my computer, as today when I turned on my PC I was greeted by a BSOD right at system startup... I tried startup repair, booting into safe mode, system restore, resetting the PC; nothing works anymore, it just doesn't boot into Windows... I had to use a live USB with Linux Mint to grab my work files. I was thinking that my SSD might have reached its EoL as it is kinda old, but I didn't find any corrupt files, everything is still there. I can't help but point my finger at Docker, since I did nothing with this machine except tinkering with Docker and trying to make it work as it should... When we used Vagrant it also had its problems, but none were of this magnitude... And I can't really go back to Vagrant unless my team also does so...

10 -

Is it just me or is systemd 240 royally fucked up?

My containers running Arch don't get connected to the network and systemd-networkd fails to start. On my laptop, the network is also unable to connect sometimes. And it consistently fails to complete shutdown without a hard poweroff. The only viable temporary solution was rolling back to a snapshot in ALA that still has 239. Is that really how a critical system component like the init is supposed to behave and have its issues taken care of?

Fuck QA, amirite 🤪.. seriously, that's even worse than Windows' "features" 😒12 -

Ok c++ professionals out there, I need your opinion on this:

I've only written C++ as a hobby and never in a professional capacity. The other day I noticed that we have a new C++ developer at the office, of whom my first impression wasn't the greatest. He started off by complaining about having to help people out a lot (which is very odd, as he was brought in to support one of our other developers who isn't as well versed in C++). This triggered me slightly and I decided to look into some of the PRs this guy was reviewing (to see what kind of stuff he had to help with and whether it warranted his complaints).

It turns out it was the usual beginner mistakes of overusing raw pointers/deletes and things like not using various STL containers. I noticed a couple of other issues in the PR that I thought should be addressed early in the project's life cycle, such as perhaps introducing a PCH, as a lot of system header includes were sprinkled everywhere, to which our new C++ developer replied "what is pch?". I of course explained what it is and its use, but I still got the impression that he had never heard of this concept. He also had the opinion that we should always use shared_ptr as both return and argument types for any public API method that returns or takes a pointer. This is a real-time audio app, so I countered that with "maybe it's not always a good idea, as it will introduce overhead due to the number of times certain methods are called, and it also might introduce ABI compatibility issues as it's a public API.". Essentially my point was "let's be pragmatic and not religiously enforce certain things".

Does this sound alarming to any of you professional c++ developers or am I just being silly here?6 -

I give up.

I have to make a bunch of disparate things work together, in an otherwise easy-sounding ask, and they’re all broken. Every one of them is broken. Even links between them are broken. Devs hardcoding incorrect values; devs pushing broken code, broken dependencies, broken configs. The orchestration is broken. The containers are broken. The NATS/gRPC flow is broken. Nothing works out of the box; many of the pieces require config and env hacking to run, and when they do run, the data formats don’t match between services (nor do e.g. account IDs). I can’t do it anymore. I was so burned out before this ticket that I couldn’t look at anything work related without feeling physical pain. And now this.

I’ve spent weeks just getting things to run and talk, and being ignored when I ask for help. There have been walls every step of the way, and I’m still not done. I can’t do this anymore.14 -

Currently learning Kubernetes Cluster. Wow so much to learn.

Much easier to set up any app for a Cluster. Only building the Image takes time 🙄

Containers are the future ✌🏻6 -

So apparently Docker exposes all the forwarded ports on *all* the interfaces, making all running containers available to the entire internet BY DEFAULT.
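For what it's worth, the default can be overridden per port: `docker run -p` accepts an optional host IP in front of the port pair, so a port published as `127.0.0.1:8080:80` is only reachable from the host itself. A minimal sketch that builds and checks such a publish value, assuming IPv4 only; the helper names `publish_arg` and `is_exposed_everywhere` and the port numbers are made up for illustration:

```python
import ipaddress


def publish_arg(host_port: int, container_port: int, host_ip: str = "127.0.0.1") -> str:
    """Build a value for `docker run -p` that pins the published port to one host IP.

    Hypothetical helper; only the HOST_IP:HOST_PORT:CONTAINER_PORT format
    comes from Docker itself.
    """
    ipaddress.IPv4Address(host_ip)  # raises ValueError on a malformed address
    for port in (host_port, container_port):
        if not 0 < port < 65536:
            raise ValueError(f"port out of range: {port}")
    return f"{host_ip}:{host_port}:{container_port}"


def is_exposed_everywhere(publish: str) -> bool:
    """True if a publish value has no host IP or uses the wildcard address."""
    parts = publish.split(":")
    return len(parts) < 3 or parts[0] in ("", "0.0.0.0")


# Example: a bare "8080:80" listens on every interface, the pinned form does not.
loopback_only = publish_arg(8080, 80)
```

The returned string would be passed as `docker run -p <value> ...`; the same `IP:host:container` form also works in the `ports:` list of a compose file.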

I have a question:

WHY???10 -

You can connect to Docker containers directly via IP in Linux, but not on Mac/Windows (no implementation for the docker0 bridged network adapter).

You can map ports locally, but if you have the same service running, it needs different ports. Furthermore if you run your tests in a container on Jenkins, and you let it launch other containers, it has to connect via IP address because it can't get access to exposed host ports. Also you can't run concurrent tests if you expose host ports.

My boss wanted me to change the tests so it maps the host port and changes from connecting to the IP to localhost if a certain environment variable was present. That's a horrible idea. Tests should be tests and not run differently on different environments. There's no point in having tests otherwise!

Finally found a solution where someone made a container that routed traffic to docker containers via a set of tun adapters and openvpn. It's kinda sad Docker hasn't implemented this natively for Mac/Windows yet.4 -

Docker for Mac loves burning my CPU. Even if I delete all images and containers, do a fresh reinstall and JUST have Docker for Mac running, I always see crazy CPU usage on my Late 2016 15" MacBook Pro.

6 -

one thing I don't get from Kubernetes

if a group of containers is called a pod, and pods are containers, and containers in Kubernetes are also inside containers, is it therefore a clusterfuck of containers?1 -

Tonight I thought I'd make bulk iced coffee... A fairly easy task, except I used the wrong container.....

Apparently not all plastic containers can hold hot liquid....

Spent an hour wiping coffee off the counter top, everything that was on it, and the floor... Probably need to give it a good cleaning with floor cleaner tmr too...

But yes there went my evening of relaxing....

16 -

Damn it!!! Fuck! That's 2 hours of my life I'm never getting back... FUCK!

{"op":"replace","path":"/spec/template/spec/containers/0/resources/limit/cpu","value":"4.0"}9 -
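One thing worth checking in a patch like the one above, whether or not it was the culprit here: a JSON Patch (RFC 6902) `replace` requires the target location to already exist, and in a Kubernetes container spec the resource caps live under `resources.limits` (plural). A small pure-Python sketch of that rule; `json_patch_replace` is a hypothetical helper, not a Kubernetes or library API, and the `deployment` dict is a made-up stand-in for the real object:

```python
def json_patch_replace(doc, path, value):
    """Apply one RFC 6902-style "replace" in place; the target must already exist."""
    # Split the JSON Pointer and undo its escaping (~1 is "/", ~0 is "~").
    tokens = [t.replace("~1", "/").replace("~0", "~") for t in path.split("/")[1:]]
    node = doc
    for token in tokens[:-1]:
        # A missing intermediate key raises KeyError, a missing index IndexError.
        node = node[int(token)] if isinstance(node, list) else node[token]
    last = tokens[-1]
    if isinstance(node, list):
        node[int(last)] = value
    elif last in node:
        node[last] = value
    else:
        raise KeyError(f"cannot replace missing path: {path}")
    return doc


# Made-up, heavily trimmed deployment used only for illustration.
deployment = {
    "spec": {"template": {"spec": {"containers": [
        {"name": "app", "resources": {"limits": {"cpu": "2.0"}}}
    ]}}}
}

base = "/spec/template/spec/containers/0/resources"
json_patch_replace(deployment, base + "/limits/cpu", "4.0")  # path exists, value replaced
```

With this sketch, the same call with `/limit/cpu` instead of `/limits/cpu` raises `KeyError`, because there is nothing at that location to replace.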

Starting to migrate apps from a messy structure to Docker containers. I have not worked a lot with Docker before, so I hope it goes as smoothly as people told me

9 -

Docker with nginx-proxy and nginx-proxy-le (Let's Encrypt) is fucking awesome!

I only have to specify environment variables with email and host name when starting new containers with web servers, and the proxy containers will automatically set up a proxy to the new container and generate Let's Encrypt SSL certificates. I don’t have to lift a fucking finger, it is so ducking genius2 -
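Roughly what that looks like per container, assuming the usual nginx-proxy and Let's Encrypt companion variables `VIRTUAL_HOST`, `LETSENCRYPT_HOST` and `LETSENCRYPT_EMAIL` (the rant doesn't name them, so treat them as an assumption). The helper name, image, host name and email below are placeholders:

```python
def web_container_cmd(image, host, email):
    """Build a `docker run` argv for a web container that sits behind nginx-proxy.

    Hypothetical helper; the variable names are assumed from the nginx-proxy
    and Let's Encrypt companion setup, not taken from the rant.
    """
    env = {
        "VIRTUAL_HOST": host,        # host name the proxy should route to this container
        "LETSENCRYPT_HOST": host,    # host name to request a certificate for
        "LETSENCRYPT_EMAIL": email,  # contact address for the certificate
    }
    cmd = ["docker", "run", "-d"]
    for key, value in env.items():
        cmd += ["-e", f"{key}={value}"]
    return cmd + [image]


# Placeholder values for illustration only.
cmd = web_container_cmd("nginx", "blog.example.com", "me@example.com")
```

The resulting list could be handed to `subprocess.run(cmd, check=True)` on a host where the proxy containers are already running.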

I really hate working on tasks that require constant reporting and decision making. I don't understand how people are okay with work like that. Let me present 2 cases. For context, we are working on a complete UI revamp of our app.

case 1: Screen A UI revamp.

task: change the Screen A UI as per the new Figma

me: evaluates, gives estimates, makes the new screen by changing the UI wherever applicable, adding logic for the new screen wherever applicable, and removing old logic that isn't mentioned in the Figma. Finishes the task within standard timelines