Search - "training data"

-

Real conversation:

Coworker: I'm trying to classify data based on X

Me: Mhh. Seems like a hard task, we don't have data to figure out X

Coworker: I know! That's why I thought about using machine learning!

Me: (Oh, boy)

Coworker: I'm working on training this ML model that will be able to classify based on X

Me: and what are the inputs for your training?

Coworker: The data classified based on X

Me: And where did you get that from?

Coworker: I don't have it! That will be the output of my ML model!

Me: But you just said that was the input!

Coworker: Yes

Me: Don't you see a contradiction here?

Coworker: Yes, it's a pretty complicated problem, that's why I'm stuck. Can you help me with that?

Me: (Looking at my watch) Sorry, I'm late for a meeting. Catch up later, bye!

-

To replace humans with robots, because human beings are complete shit at everything they do.

I am a chemist. My alignment is not lawful good. I've produced lots of drugs. Mostly just drugs against illnesses. Mostly.

But whatever my alignment or contribution to the world as a chemist... Human chemists are just fucking terrible at their job. Not for a lack of trying, biological beings just suck at it.

Suiting up for a biosafety level lab costs time. Meatbags fuck up very often, especially when tired. Humans whine when they get acid in their face, or when they have to pour and inhale carcinogenic substances. They also work imprecisely and inaccurately, even after thousands of hours of training and practice.

Weaklings! Robots are superior!

So I replaced my coworkers with expensive flow chemistry setups with probes and solenoid fluid valves. I replaced others with CUDA simulations.

First at a pharma production & research lab, then at a genetics lab, then at an Industrial R&D lab.

Many were even replaced by Raspberry Pis with two servos and a pH meter attached, and I broke open second-hand Fisher Sci spectrophotometers to attach Arduinos with WiFi boards.

The issue was that after every little overzealous weekend project, I made myself less necessary as well.

So I jumped into the infinitely deep shitpool called webdev.

App & web development is kind of comfortable, there's always one more thing to do, but there's no pressure where failure leads to fatalities (I think? Wait... do I still care?).

Super chill, if it weren't for the delusion that making people do "frontend" and "fullstack" labor isn't a gross violation of the Geneva Convention.

Quickly recognizing that I actually don't want to be tortured and suffer from nerve damage caused by VueX or have my organs slowly liquefied by the radiation from some insane transpiling centrifuge, I did what any sane person would do.

Get as far away from the potential frontend blast radius as possible, hide in a concrete bunker.

So I became a data engineer / database admin.

That's where I'm quarantining now, safely hiding from humanity behind a desk, employed to write a MySQL migration or two, setting up Redis sorted sets, adding a field to an Elastic index. That takes care of generating cognac and LSD money.

But honestly.... I actually spend most of my time these days contributing to open source repositories, especially writing & maintaining Rust libraries.

-

You know side projects? Well, I took one on. An old customer asked me to come and take over the tech for his latest startup company. Why not, I thought. The idea is sound. The customer base is ripe and ready to pay.

I start digging and the hardware part is awesome. The guys doing the soldering and embedded work are geniuses. I was impressed AF.

I commit and meet up with the CEO. A guy with a vision and sales orientation/contacts. Nice! This shit is gonna sell. Production lines are also set.

Website? WTF is this shit. Owner made it. Gotta give him the credit. Dude doesn't do computers and still managed to get something online. He is still better at sales, so we agree that he's gonna stick with those and I'll handle the tech.

I bootstrap a new one in my own simplistic style and put it online. I like it. The owner likes it. He made me stick with a tacky logo. I love CSS and Bootstrap. You can make shit look good quick.

But I still don't have access to the soul of the product. The DB's millions of rows of data and the source for the app are still behind the guy who has been doing this for over a year.

He has been working on a new version for quite some time. He granted access to the new version's source, but the back end and DB are still out of reach. Now over a month has passed and there's still no new version or access to the data.

The source has no documentation and is written in a flavor of JS framework I'm not familiar with. A weekend of crazy cramming later, I'm up to speed and it's clear I can't get further without the friggin data.

The V2 is a scramble of bleeding-edge alpha tech that isn't ready for production and is clearly just a paid training period for the dev. And clearly it isn't going so well, because the release is a month late. I try to contact him, but no reaction. The owner is clueless.

Disheartening. A good idea is going to waste because of some "dev" dropping the ball and stonewalling the backup.

I'm fucking giving him till the end of next week before I make the hardware team a new API to push the data to, refactor the whole thing in proper technologies and cut him off.

Please. If you are a dev and don't have the time to concentrate on the solution, don't take it on and kill off the idea. You guys are the key to making things happen and work. Demand your cut, but also deserve it by delivering, or at least have the balls to say you are not up for it.

-

> 3 hour long mandatory online cybersecurity training

> Preaches that the company is very secure and the only risk of being “hacked” is if employees post company data on social media

> oksure.tar.gz

> Bored out of my mind

> Open dev console

> JSON continually getting sent to backend

> Simple structure and human readable fields including {complete: false}

> Open postman

> {complete: true}

> Send

> 200 response

> Refresh page

> Course complete

> :’ )
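The hole above is a backend that trusts a client-supplied `complete` flag. A minimal sketch of the alternative, assuming a hypothetical course whose module completions are recorded server-side (`REQUIRED_MODULES`, `record_module` and `is_course_complete` are illustrative names, not anything from the actual training platform):

```python
# Hypothetical sketch: completion is derived from server-side state,
# never read from the request body.

REQUIRED_MODULES = {"phishing", "passwords", "social-media"}  # assumed course layout

# user_id -> set of modules the server itself has recorded as finished
_progress = {}


def record_module(user_id: str, module: str) -> None:
    """Called by the server once it has verified that a module was finished."""
    if module not in REQUIRED_MODULES:
        raise ValueError(f"unknown module: {module}")
    _progress.setdefault(user_id, set()).add(module)


def is_course_complete(user_id: str, request_body: dict) -> bool:
    """Ignore any 'complete' field the client sends; check recorded progress."""
    _ = request_body.get("complete")  # deliberately unused
    return _progress.get(user_id, set()) >= REQUIRED_MODULES
```

With a check like this, replaying the request with `{complete: true}` changes nothing, because the flag is never consulted.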

Muppets.

-

Long story short, I'm unofficially the hacker at our office... Story time!

So I was hired three months ago to work for my current company, and after the three weeks of training I got assigned a project with an architect (who only works on the project very occasionally). I was tasked with revamping and implementing new features for an existing API, some of the code dated back to 2013. (important, keep this in mind)

So at one point I was testing the existing endpoints, because part of the project was automating tests using postman, and I saw something sketchy. So very sketchy. The method I was looking at took a POJO as an argument, extracted the ID of the user from it, looked the user up, and then updated the info of the looked up user with the POJO. So I tried sending a JSON with the info of my user, but the ID of another user. And voila, I overwrote his data.

Once I reported this (which took a while to be taken seriously because I was so new) I found out that this might be useful for sysadmins to have, so it wasn't completely horrible. However, the endpoint required no auth to use. An anonymous curl request could overwrite any user's data.

As this mess unfolded and we notified the higher ups, another architect jumped in to fix the mess and we found that you could also fetch the data of any user by knowing his ID, and overwrite his credit/debit cards. And well, the ID of the users were alphanumerical strings, which I thought would make it harder to abuse, but then realized all the IDs were sequentially generated... Again, these endpoints required no authentication.
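The two problems described so far (no check that the caller owns the record, and guessable sequential IDs) can be sketched in a few lines. This is an illustration in Python, not the Java code from the story; `update_user`, `Forbidden` and `new_user_id` are made-up names, and `caller_id` is assumed to come from a verified session or token rather than from the request body:

```python
import secrets


class Forbidden(Exception):
    """Raised when the caller may not touch the target record."""


def new_user_id() -> str:
    """Random, non-sequential ID, so knowing one ID does not reveal the next."""
    return secrets.token_urlsafe(16)


def update_user(users: dict, caller_id, target_id: str, changes: dict,
                caller_is_admin: bool = False) -> dict:
    """Apply changes only if the authenticated caller owns the record or is an admin."""
    if caller_id is None:
        raise Forbidden("authentication required")
    if caller_id != target_id and not caller_is_admin:
        raise Forbidden("cannot modify another user's record")
    if target_id not in users:
        raise KeyError(target_id)
    users[target_id].update(changes)
    return users[target_id]
```

Random IDs only make guessing harder; the ownership check is what actually closes the hole. The `caller_is_admin` flag leaves room for the sysadmin use case mentioned above.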

So anyways. Panic ensued, systems people at HQ had to work that weekend, two hot fixes had to be delivered, and now they think I'm a hacker... I did go on to discover some other vulnerabilities, but nothing major.

It still amuses me they think I'm a hacker 😂😂 when I know about as much about hacking as the next guy at the office, but anyways, it makes for a good story and I laugh every time I hear them call me a hacker. The whole thing was pretty amusing: they supposedly have security audits and QA, but for five years these massive security holes went undetected... And our client is a massive company in my country... So, let's hope no one found it before I did.

-

I've been fairly lucky with my bosses of late as I've progressed in my programming career. But my absolute worst boss was when I first started working in an office environment doing data entry. My boss at the time was terrible, and she was always against innovation or process improvement. She also always tried to make herself look good by taking credit for the accomplishments of others. If she screwed up it was your fault, and she was "always buried in email" so she could never respond to you about PTO requests or the escalation of issues between departments. My whole family pretty much worked in various roles in the department, and after my mother left the company she fired my brother for no reason, saying he was "sleeping". I worked right next to him: he's tall and had to slouch just to comfortably see his computer screen, since the same manager refused to approve workstation improvements for him.

Our workflow was to receive daily spreadsheets of health care claims that we had to manually process and enter into the system. So being the lazy innovator that I am, and trying to find ways to work efficiently, I delved into studying Visual Basic and programmed a few functions and tools in Excel to analyze, highlight, and process some of the data, since the claims on the spreadsheets always had a specific pattern. This was all before I had any formal education in computer science, so the program was very basic and clunky, but it tripled my efficiency.

When I brought it up to my boss so it could be spread among the rest of our team after a short 20-minute training, she struck it down, saying any training or use of it would be a waste of resources since it was too technical and complex to be used, and that if I kept improving it or using it I would be fired. It was literally copy and paste from one spreadsheet to the other en masse and clicking a button to sort and fill in the blanks.

Eventually I showed it to the director of the department when working on a large data entry project with her, and I was later offered a job as a technical analyst, where I was responsible for the codebase that generated the reports for the department, and specifically all the reports my old boss used. I would occasionally mess with her to get back at her for all the crap she gave me and my brother. Since all the reports were blind carbon copied to everyone, I would send out her reports on a delay while everyone else got them on time. It eventually got her in so much crap she had to step down as a manager. She still works at the same company, which I started working at again earlier this year, and like the many careers she's ruined, she eventually ruined her own within the company 😂

-

"Big data" and "machine learning" are such big buzz words. Employers be like "we want this! Can you use this?" but they give you shitty, ancient PC's and messy MESSY data. Oh? You want to know why it's taken me five weeks to clean data and run ML algorithms? Have you seen how bad your data is? Are you aware of the lack of standardisation? DO YOU KNOW HOW MANY PEOPLE HAVE MISSPELLED "information"?!!! I DIDN'T EVEN KNOW THERE WERE MORE THAN 15 WAYS OF MISSPELLING IT!!! I HAD TO MAKE MY OWN GODDAMN DICTIONARY!!! YOU EVER FELT THE PAIN OF TRAINING A CLASSIFIER FOR 4 DAYS STRAIGHT THEN YOUR GODDAMN DEVICE CRASHES LOSING ALL YOUR TRAINED MODELS?!!

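For the "15 ways of misspelling information" problem, a hand-built dictionary can sometimes be replaced by fuzzy matching against a short list of canonical terms. A rough sketch using the standard library's `difflib`; the vocabulary and the 0.8 cutoff are assumptions that would need tuning on real data:

```python
import difflib

CANONICAL_TERMS = ["information", "address", "telephone"]  # assumed vocabulary


def normalize_term(word: str, cutoff: float = 0.8) -> str:
    """Map a possibly misspelled word to its closest canonical term, or leave it alone."""
    matches = difflib.get_close_matches(word.lower(), CANONICAL_TERMS, n=1, cutoff=cutoff)
    return matches[0] if matches else word


def normalize_text(text: str) -> str:
    """Normalize each whitespace-separated token independently."""
    return " ".join(normalize_term(token) for token in text.split())
```

A lower cutoff catches more misspellings but starts merging words that are merely similar, so it is worth spot-checking the output before training on it.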
*cries*

-

Dear LinkedIn,

Try training your AI model without using captured data of real recruiters and their dodgy practices.

-

From my work -as an IT consultant in one of the big 4- I can now show you my masterpiece

INSIGHTS FROM THE DAILY LIFE OF A FUNCTIONAL ANALYST IN A BIG 4 -I'M NOT A FUNCTIONAL ANALYST BUT THAT'S WHAT THEY DO-

- 10:30, enter the office. By contract you should be there at 9:00 but nobody gives a shit

- First task of the day: prepare the power point for the client. DURATION: 15 minutes to actually make the powerpoint, 45 minutes to search all the possible synonyms of RESILIENCE BIG DATA AGILE INTELLIGENT AUTOMATION MACHINE LEARNING SHIT PISS CUM, 1 hour to actually present the document.

- 12:30: Sniff the powder left by the chalks on the blackboards. Duration: 30 minutes, that's a lot of chalk you need to snort.

- 13:00, LUNCH TIME. You get back to work not one minute sooner than 15:00

- 15:00, conference with the HR. You need to carefully analyze the quantity and quality of the farts emitted in the office for 2 hours at least

- 17:00 conference call, a project you were assigned to half a day ago has a server down.

The client sent two managers, three senior Java developers, the CEO, 5 employees -they know logs and mails from the last 5 months line by line-, 4 lawyers and a beheading teacher from ISIS.

On your side there are 3 external Ukrainians for the maintenance, successors of the 3 (already dead) developers who put the process in place 4 years ago according to God knows which specifications. They don't understand a word of what is being said.

Then there's the assistant of the assistant of a manager from another project that has nothing to do with this one, a feces officer, a sys admin who is going to watch porn for the whole conference call and won't listen a word, two interns to make up a number and look like you're prepared. Current objective: survive. Duration: 2 hours and a half.

- 19:30, snort some more chalk for half an hour, preparing for the mail in which you explain to the associate partner how, because of the aforementioned conference call, we're going to lose a maintenance contract worth 20 grand per month (and face a lawsuit worth a number of dollars you can't even read), and that you have no idea how this could happen

- 20:00, timesheet! Compile the weekly report, write what you did and how long each task took. You are allowed to log 8 hours per day, you worked at least 11 but nobody gives a shit. Duration: 30 minutes

- 20:30, update your consultant! Training course, "tasting cum and presenting its organoleptic properties to a client". Relevance to your job: none at all. Duration: 90 minutes, then there's a half-hour evaluation test where you'll copy the answers from a sheet given to you by a colleague who left 6 months ago.

- 22:30, CHANCE CARD! You have a new mail from HR: you asked for a refund for a $3 sandwich, but the receipt isn't there and they realized it with a 9-month delay. You need to find that wicked piece of paper. DURATION: 30 minutes. The receipt most likely doesn't even exist anymore and the amount will be taken directly from your next salary.

- 23:00, you receive a message on Teams. It's the intern. It's very late but you're online and have to answer. There's an exception in a process which has been running for 6 years with no problems and which nobody ever touches. The intern doesn't know what to do, but you wrote the specifications for the thing, 6 years ago, and everything MUST run tonight. You are not a technician and have no fucking clue about anything at all. 30 minutes to make sure it's something on our side and not on the client side, and in all that the intern is as useful as a confetto to wipe your ass. Once you're sure it's something on our side you need to search for the senior dev who inherited the maintenance of the project, call him and solve the problem.

It turns out a file in a shared folder nobody ever touches was unreachable 'cause one of your libraries left it open during the last run and Excel showed a warning modal while opening it; your project didn't like this last thing one bit. It takes 90 minutes to find the root of the problem, and you solve it by rebooting one of your machines. It's 01:00.

You shower, look at yourself in the mirror and search for the line where your forehead ends and your hair starts. It has moved back a little since yesterday; the change can't be seen with the naked eye but you know it's there.

You cry yourself to sleep. Tomorrow is another day, but it's going to be exactly like today.

-

Paranoid Developers - It's a long one

Backstory: I was a freelance web developer when I managed to land a place on a cyber security program with who I consider to be the world leaders in the field (details deliberately withheld; who's paranoid now?). Other than the basic security practices of web dev, my experience with Cyber was limited to the OU introduction course, so I was wholly unprepared for the level of, occasionally hysterical, paranoia that my fellow cohort seemed to perpetually live in. The following is a collection of stories from several of these people, because if I only wrote about one they would accuse me of providing too much data allowing an attacker to aggregate and steal their identity. They do use devrant so if you're reading this, know that I love you and that something is wrong with you.

That time when...

He wrote a social media network with end-to-end encryption before it was cool.

He wrote custom 64kb encryption for his academic HDD.

He removed the 3 HDD from his desktop and stored them in a safe, whenever he left the house.

He set up a pfsense virtualbox with a firewall policy to block the port the student monitoring software used (effectively rendering it useless and definitely in breach of the IT policy).

He used only hashes of passwords as passwords (which isn't actually good).

He kept a drill on the desk ready to destroy his HDD at a moment's notice.

He started developing a device to drill through his HDD when he pushed a button. May or may not have finished it.

He set up a new email account for each individual online service.

He hosted a website from his own home server so he didn't have to host the files elsewhere (which is just awful for home network security).

He unplugged the home router and began scanning his devices and manually searching through the process list when his music stopped playing on the laptop several times (turns out he had a wobbly spacebar and the shaking washing machine provided enough jittering for a button press).

He brought his own privacy screen to work (remember, this is a security place, with like background checks and all sorts).

He gave his C programming coursework (a simple messaging program) 2048 bit encryption, which was not required.

He wrote a custom encryption for his other C programming coursework as well as writing out the enigma encryption because there was no library, again not required.

He bought a burner phone to visit the capital city.

He bought a burner phone whenever he left his hometown come to think of it.

He bought a smartphone online, wiped it and installed new firmware (it was Chinese; I'm not saying anything about the Chinese, you're the one thinking it).

He bought a smartphone and installed Kali Linux NetHunter so he could test WiFi networks he connected to before using them on his personal device.

(You might be noticing it's all he's. Maybe it is, maybe it isn't).

He ate a sim card.

He brought a balaclava to pentesting training (it was pretty meme).

He printed out his source code as a manual read-only method.

He made a rule on his academic email to block incoming mail from the academic body (to be fair this is a good spam policy).

He withdraws money from a different cashpoint every time to avoid patterns in his behaviour (the irony).

He reported someone for hacking the centre's network when they built their own website for practice using XAMPP.

I'm going to stop there. I could tell you so many more stories about these guys, some about them being paranoid and some about the stupid antics Cyber Security and Information Assurance students get up to. Well done for making it this far. Hope you enjoyed it.

-

The solution for this one isn't nearly as amusing as the journey.

I was working for one of the largest retailers in NA as an architect. Said retailer had over a thousand big box stores, IT maintenance budget of $200M/year. The kind of place that just reeks of waste and mismanagement at every level.

They had installed a system to distribute training and instructional videos to every store, as well as recorded daily broadcasts to all store employees as a way of reducing management time spent with employees in the morning. This system had cost a cool 400M USD, not including labor and upgrades for round 1. Round 2 was another 100M to add a storage buffer to each store because they'd failed to account for the fact that their internet connections at the store and the outbound pipe from the DC weren't capable of running the public-facing e-commerce and streaming all the video data to every store in realtime. Typical massive enterprise clusterfuck.

Then security gets involved. Each device at stores had a different address on a private megawan. The stores didn't generally phone home, home phoned them as an access control measure; stores calling the DC was verboten. This presented an obvious problem for the video system because it needed to pull updates.

The brilliant Infosys resources had a bright idea to solve this problem:

- Treat each device IP as an access key for that device (avg 15 per store).

- Verify the request ip, then issue a redirect with ANOTHER ip unique to that device that the firewall would ingress only to the video subnet

- Do it all with the F5

A few months later, the networking team comes back and announces that after months of work and tens of person-years they can't implement the solution because iRules have a size limit and they would need more than 60,000 lines or 15,000 rules to implement it. Sad trombones all around.

Then, a wild DBA appears, steps up to the plate and says he can solve the problem with the power of ORACLE! A few months later he comes back with some absolutely batshit solution that stored the individual octets of an IPv4 address and used multiple nested queries against the same table to emulate subnet masking through some temp-table-spanning voodoo. Time to complete: 2-4 minutes per request. He too eventually gives up the fight, sort of, in that backhanded way DBAs tend to do everything. I wish I had paid more attention to that abortion because the rationale and its mechanics were just staggeringly Rube Goldberg and should have been documented for posterity.

So I catch wind of this sitting in a CAB meeting. I hear them talking about how there's "no way to solve this problem, it's too complex, we're going to need a lot more databases to handle this." I tune in and gather all it really needs to do, since the ingress firewall is handling the origin IP checks, is convert the request IP to video ingress IP, 302 and call it a day.

While they're all grandstanding and pontificating, I fire up Visual Studio and:

- write a method that encodes the incoming request IP into a single uint32

- write an HTTP module that keeps an in-memory dictionary of uint32 to string (request IP to response IP), converts the request IP and 302s the call, with blackhole support

- convert all the mappings in the spreadsheet attached to the meeting into a CSV, dump to disk

- write a WPF application to allow for easily managing the IP database in the short term

- deploy the solution to one of our stage boxes

- add a TODO to eventually move this to a database
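The steps above amount to a lookup table keyed by the request IP packed into 32 bits. The original was a .NET HTTP module; the following is only a Python re-sketch of the idea. The two-column CSV layout, the `http://` scheme and treating "blackhole" as a 404 for unknown devices are all assumptions:

```python
import csv
import io
import ipaddress


def ip_to_uint32(ip: str) -> int:
    """Encode a dotted-quad IPv4 address as a single unsigned 32-bit integer."""
    return int(ipaddress.IPv4Address(ip))


def load_mappings(csv_text: str) -> dict:
    """Build {uint32 request IP: video ingress IP} from 'request_ip,ingress_ip' rows."""
    table = {}
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) != 2:
            continue  # skip blank or malformed rows
        request_ip, ingress_ip = (field.strip() for field in row)
        table[ip_to_uint32(request_ip)] = ingress_ip
    return table


def redirect_for(table: dict, request_ip: str, path: str = "/"):
    """Return (status, location); unknown devices get a 404 and no location."""
    ingress_ip = table.get(ip_to_uint32(request_ip))
    if ingress_ip is None:
        return 404, None
    return 302, f"http://{ingress_ip}{path}"
```

At roughly 15 devices across a thousand-odd stores, the whole table is on the order of 15,000 entries, which is why an in-memory dictionary is enough and a lookup is a single hash probe.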

All this took about 5 minutes. I interrupt their conversation to ask them to retarget their test to the port I exposed on the stage box. Then watch them stare in stunned silence as the crow grows cold.

According to a friend who still works there, that code is still running in production on a single node to this day. And still running on the same static file database.

#TheValueOfEngineers

-

I am a machine learning engineer and my boss expects me to train an AI model that surpasses the best models out there (without training data of course) because the client wanted ‘a fully automated AI solution’.

-

Can someone explain to me why the fuck I should even care about the fact that some companies collect, use and sell my data? I'm not famous, I'm not a politician and I'm not a criminal, I think most of us aren't and won't ever be. We aren't important. So what is this whole bullshittery all about? I seriously don't get it and I find it somewhat weird that especially tech guys and IT "experts" in the media constantly just make up these overly creepy scenarios about big unsafe data collecting companies "stealing" your "private" information. Welcome to the internet, now get the fuck over it or just don't be online. It's your choice, not theirs.

I honestly think, some of these "security" companies and "experts" are just making this whole thing bigger than it actually is, because it's a damn good selling point. You can tell people that your app is safe and they'll believe you and buy your shit app because they don't understand and don't care what "safe" or "unsafe" means in this context. They just want to be secure against these "evil monster" companies. The same companies, which you portrayed them as "evil" and "unfair" and "mean" and "unrepentant" for over a decade now.

Just stop it now. All your crappy new "secure" messenger apps have failed awesomely. Delete your life now, please. This isn't about net neutrality or safety on the internet. This is all about you, permanently exaggerating about security and permanently training people to be introverted paranoid egoistic shit people so that they buy your elitist bullshit software.

Sorry for my low english skills, but please stop to exist, thank you.

-

Most kids just want to code. So they see "Computer Science" and think "How to be a hacker in 6 weeks". Then they face some super simple algebra and freak out, eventually flunking out with the excuse that "uni only presents overtly theoretical shit nobody ever uses in real life".

They could hardly be more wrong, of course. Ignore calculus and complexity theory and you will max out on efficiency soon enough. Skip operating systems, compilers and language theory and you can only ever aspire to be a script kiddie.

You can't become a "data scientist" without statistics. And you can never grow to be even a mediocre one without solid basic research and physics training.

Heck, I've optimized literal millions of dollars out of cloud expenses by choosing the best processors for my stack, and weeks later got myself schooled (on devRant, of all places!) over my ignorance of their inner workings. And I have an MSc degree. Learning never stops.

So, to improve CS experience in uni? Tear down students' expectations, and boil out the "I just wanna code!" kiddies to boot camps. Some of them will be back to learn the science. The rest will peak at age 33.

-

I'm exhausted.

I'm here again, one and a half years after my last rant. I left my previous job as a web developer after almost 12 years. At the time I found 3 new jobs as a developer; I chose the one at the largest company, where the prospects looked really good. My 3 interviews were excellent. But what I found next was almost a nightmare.

I was literally "confined" for the first 2 months: no internet connection, no email address, very little communication with colleagues. The colleague next to me shared the code I would be working on via a USB key. All this for "safety" purposes, because "here you start this way".

For me it was not so bad; I could take my time to study my work and do it (without Stack Overflow and only with reference guides, when needed - I felt proud in an old-fashioned way). But the next months were really tough: no help to understand what I was missing about the work I was doing (consider that I was working on a large database, previously used by an old ERP, on which other developers before me wrote a lot of code so the company could keep using all the data after the ERP licences expired - we're talking about a Java application from the year 2000).

Now I find myself struggling, because the main project I was working on has been set aside (apparently for some budget decisions); my team constantly has me do maintenance on the old code, but the main tasks are done by the old colleague, "because deadlines are always pressing and there would not be enough time to explain anything to you". I'm not growing.

I'm really becoming reluctant to write code, and whenever I do it, I constantly feel under pressure, and this makes me nervous and inclined to make errors.

Don't get me wrong, I was/am good at my work, but it's like I'm losing that spark I had until a few years ago.

When I'm at home I try to study or write code, just to keep training my mind, but I'm really struggling and I'm worried about losing my brain for doing this job. I constantly forget things and lose focus.

I've never felt this way. I am thinking about switching again and searching for another company.

-

So... After reading up on the theoretical stuff earlier, I decided to make a real AI that can identify handguns and decide whether it's a revolver or a semiautomatic with 95 percent accuracy...

Well, basically, I've been browsing my local gun store's online store for four hours for training data, killed a Mac mini while first training the system and I think I ended up on the domestic terrorism watch list... Was that black sedan always there?

Anyway... It's working fairly accurately, my monkey wrench is a revolver by the way.

Isn't AI development a wonderful excuse for all kinds of shit?

"why do you have 5000 pictures of guns on your computer?" - "AI development"

"why did you wave around a gun in front of your web cam" - "AI development"

"why is there a 50 gram bag in your desk?" - "AI development"

Hmm... yeah well... I think it might work. I could have picked a less weird testing project, but... No.

-

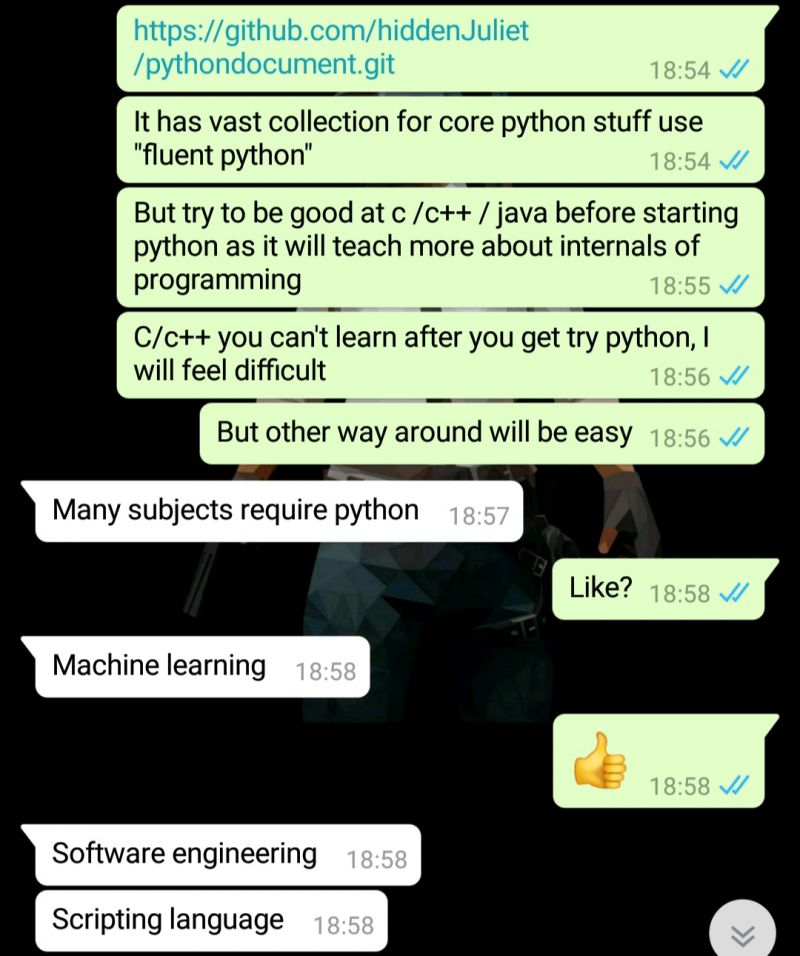

Why do people jump from C to Python so quickly? And it's all about machine learning. A few days back my cousin asked me for books to learn Python.

Trust me, you have to learn C before Python. People struggle going from Python to C. But no, it's ML, scripting,

and, most importantly, software engineering. WTF?

Software engineering is about how to run projects, yet it is compulsory to learn Python and there is no mention of Git or any other VCS. WTF?

What the hell kind of college is that? Trust me, I am in no way saying Python is weak, but for learning purposes the depth of the language matters, along with concepts like pass by reference, memory leaks and pointers.

And learning algorithms and data structures is more important than machine learning. Trust me, if you cannot model the data and get proper training and testing data, you will get screwed-up outputs. And then again, everyone who hypes this kind of stuff also thinks that ML with 100% accuracy is better than 90%, so they overfit the data and test the model on the training data. And mostly what they will learn in college will be memorizing a few formulas by heart, that's it.
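To make the "test the model on training data" trap concrete, here is a small sketch with a 1-nearest-neighbor classifier, which memorizes its training set. The data is made up for illustration: the label is 1 when x >= 10, with three labels deliberately flipped as noise.

```python
import random


def nearest_neighbor_predict(train, x):
    """1-nearest-neighbor: return the label of the closest training point."""
    _, label = min(train, key=lambda point: abs(point[0] - x))
    return label


def accuracy(train, samples):
    """Fraction of samples whose predicted label matches the true label."""
    hits = sum(nearest_neighbor_predict(train, x) == y for x, y in samples)
    return hits / len(samples)


def split(data, test_fraction=0.25, seed=0):
    """Shuffle a copy of the data and hold out the last test_fraction of it."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


# Toy data (made up): label is 1 when x >= 10, with three labels flipped as noise.
NOISY = {3, 8, 15}
DATA = [(x, int(x >= 10) ^ int(x in NOISY)) for x in range(20)]

train_set, test_set = split(DATA)
train_accuracy = accuracy(train_set, train_set)  # each point finds itself: always 1.0
test_accuracy = accuracy(train_set, test_set)    # measured on points the model never saw
```

Scoring on the training set reports 100% no matter how noisy the labels are, because every point is its own nearest neighbor. Only the held-out number says anything about new data.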

Learn a language (the concepts in a language) like that and you will find most languages easy.

Cool CS programmers are born today😖

-

Ok friends let's try to compile Flownet2 with Torch. It's made by NVIDIA themselves so there won't be any problem at all with dependencies right?????? /s

Let's use Deep Learning AMI with a K80 on AWS, totally updated and ready to go super great always works with everything else.

> CUDA error

> CuDNN version mismatch

> CUDA versions overwrite

> Library paths not updated ever

> Torch 0.4.1 doesn't work so have to go back to Torch 0.4

> Flownet doesn't compile, get bunch of CUDA errors piece of shit code

> online forums have lots of questions and 0 answers

> Decide to skip straight to vid2vid

> More cuda errors

> Can't compile the fucking 2d kernel

> Through some act of God reinstalling cuda and CuDNN, manage to finally compile Flownet2

> Try running

> "Kernel image" error

> excusemewhatthefuck.jpg

> Try without a label map because fuck it the instructions and flags they gave are basically guaranteed not to work, it's fucking Nvidia amirite

> Enormous fucking CUDA error and Torch error, makes no sense, online no one agrees and 0 answers again

> Try again but this time on a clean machine

> Still no go

> Last resort, use the docker image they themselves provided of flownet

> Same fucking error

> While in the process of debugging, realize my training image set is also bound to have bad results because "directly concatenating" images together as they claim in the paper actually has horrible results, and the network doesn't accept 6 channel input no matter what, so the only way to get around this is to make 2 images (3 * 2 = 6 quick maths)

> Fix my training data, fuck Nvidia dude who gave me wrong info

> Try again

> Same fucking errors

> Doesn't give any helpful information, just spits out a bunch of fucking memory addresses and long function names from the CUDA core

> Try reinstalling and then making a basic torch network, works perfectly fine

> FINALLY.png

> Setup vid2vid and flownet again

> SAME FUCKING ERROR

> Try to build the entire network in tensorflow

> CUDA error

> CuDNN version mismatch

> Doesn't work with TF

> HAVE TO FUCKING DOWNGRADE DRIVERS TOO

> TF doesn't support latest cuda because no one in the ML community can be bothered to support anything other than their own machine

> After setting up everything again, realize have no space left on 75gb machine

> Try torch again, hoping that the entire change will fix things

At this point I'll leave a space so you can try to guess what happened next before seeing the result.

Ready?

3

2

1

> SAME FUCKING ERROR

In conclusion, NVIDIA is a fucking piece of shit that can't make their own libraries compatible with themselves, and can't be fucked to write instructions that actually work.

If anyone has vid2vid working or has gotten around the kernel image error for AWS K80s please throw me a lifeline, in exchange you can have my soul or what little is left of it5 -

Should’ve posted this after it happened, but it requires a bit of background anyway.

There’s this guy that oversees our OpenStack environment. My team often make jokes and groan about him in private because he’s so overbearing. A few months back, he had to take us to our data center to show us our new racks, and he kept saying stupid stuff like “you break this and it costs me $30,000” as if he owns everything. He’s just... one of THOSE people. Always speaks in such a condescending way. We make jokes that he is our “best friend”.

Our company is shifting most of our products to the cloud in response to the coronavirus (trying to make it an opportunity for “innovation”). This has involved some structural and responsibility changes in our department, and long story short, I’m now heading the OpenStack environment alongside other projects.

This means going through grueling 1-on-1 meetings with our “best friend”. It’s not too bad, I can be pretty patient with people, so I didn’t mind too much at first. Then a few things happened.

1. He sent a shared folder that he owned containing info related to the environments. Several documents were outdated and incomplete, so I downloaded them, corrected them, and then uploaded the documents to my team’s file share, as I was supposed to since we now own the projects.

2. Several files were missing, and when I asked about them, he said “Oh, did you refresh the browser?”. I told him no, that I downloaded them locally and republished them to my team’s server, because he was supposed to hand everything off to us at once. He says “Well, silly, how are you going to get updates if you’re looking at them locally?” and kind of chuckles at me like I’m stupid.

3. He insists on training me how to remote into one of the servers to check on cluster space, which in itself is fine. I understand others wanting to make sure things will be done right by the people who come after them. But he tells me to download SuperPutty. I tell him, “oh no, that’s alright. I don’t need putty”. He says “oh cool, what tool do you use for ssh?”. I answer him “Just Git. If I want to I can use a CentOS bash terminal too, because we have WSL installed”. He responds “You can’t ssh through Git”.

I was actually a little shocked. I didn’t know if he was serious or not so I was silent for a few seconds before hesitantly saying “yes you can”. He says “this is news to me” and so I tell him “every single one of our build jobs fetches code from Git with ssh” and he seemed genuinely shocked and surprised by that.... so then it occurs to me to show him that you can ssh in PowerShell and that REALLY blew his mind. He would not shut up about it for several minutes. I was amused until it just got annoying.

Needless to say, my team had been previously teasing me about having to work with him, so they found it hilarious when I told them afterwards.8 -

Hired a new BI developer. She tested reasonably ok in SQL, and certainly showed good strengths in visualising data, plus had a good attitude in the interview. We hired her. She broke her laptop the first day. We got her another then she complained the camera didn't work but didn't realise the lever in front of the camera was to move the privacy shutter off and on.

Assigned her some work of taking queries that are used in a BI tool that targets the transactional database directly, and re-jigging them for Snowflake which we're using as a data warehouse now, aggregating all our data into one place. Yet, she's struggling to understand why the SQL query she's pasted in doesn't work as-is.

I go over it again; the source schemas and tables are this, but in Snowflake we've named them this. She then bemoans how much work that is to change them all - I say use find and replace. She then struggles with Snowflake syntax errors and asks for a guide on T-SQL to Snowflake. I show her Google and say "this is what I did when I hit these problems - search for 'Snowflake equivalent to T-SQL getdate()' or 'how to get current date in Snowflake'" but she still doesn't understand. I ask if she's ever had to work between T-SQL and MySQL or MySQL and PostgreSQL or Oracle and so on and she says yes. I say the syntax isn't the same, is it? And she goes oh, now I understand.
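For what it's worth, the find-and-replace step could be scripted along these lines. This is only a sketch: the table names in `NAME_MAP` and the sample query are made up, since the rant doesn't give the real schemas, and it only renames objects. Function and syntax differences between dialects would still need fixing by hand.

```python
import re

# Hypothetical mapping from source (T-SQL) object names to the warehouse names.
NAME_MAP = {
    "dbo.Orders": "SALES.ORDERS",
    "dbo.Customers": "SALES.CUSTOMERS",
}


def rename_tables(sql, name_map):
    """Replace whole occurrences of each source name, ignoring case."""
    if not name_map:
        return sql
    # Longest names first so a shorter name never shadows a longer one.
    names = sorted(name_map, key=len, reverse=True)
    pattern = re.compile(
        r"(?<![\w.])(" + "|".join(re.escape(n) for n in names) + r")(?![\w.])",
        re.IGNORECASE,
    )
    lookup = {name.lower(): target for name, target in name_map.items()}
    return pattern.sub(lambda m: lookup[m.group(1).lower()], sql)


query = (
    "SELECT c.Name, o.Total FROM dbo.Orders o "
    "JOIN dbo.Customers c ON c.Id = o.CustomerId"
)
converted = rename_tables(query, NAME_MAP)
print(converted)
```

The lookarounds keep a name like `dbo.OrdersArchive` from being half-rewritten, which is the usual way a blind find and replace goes wrong.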

She scored reasonably in her SQL test but I'm now concerned there's something fundamental missing in her grasp of SQL. I gave her a detailed demo of the tools, I explained in the interview and on her start about our move to a data warehouse for all our apps, and put her through some training plus gave her time to work through our Confluence pages - not expecting she'll remember everything, but more to ensure she recalls they exist and what the general contents are.

Anyhow, that's my rant.6 -

This was about 3 years ago. I’m on vacation and just getting off the plane, when my boss calls me on his cellphone. Apparently the crontab on our main file upload server had gotten nuked, and he was asking if there were any backups.

A word about this server. I work with video, so this thing is doing about a few gigabits of traffic incoming at any moment. The cron jobs are necessary to move and organize these massive files into a sane scheme for processing. Hundreds of drop folders receiving thousands of files resulting in terabytes of data every single day. Our storage vendor tells us we have the third largest deployment they know about.

No cron jobs mean all of this content is just sitting around piling up. I tell him sorry, try contacting $otherAdmin since he’s more familiar with that system.

A few days later, after the vacation, I come back in. $boss and $otherAdmin have reconstructed the crontab from scratch after an all nighter.

I ask how it got deleted.

$boss was training some people how to set up new customers on this file server, and he told the trainees to open the crontab in read-only mode. One of them ran:

crontab -r

Yes, we back up our crontabs now.3 -
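For context, `crontab -l` lists the current crontab while `crontab -r` removes it without asking. A backup along the lines mentioned above could look roughly like this sketch. The function names, the file naming scheme, and the `/var/backups/crontabs` directory in the usage note are assumptions, not what the team in the story actually runs.

```python
import subprocess
from datetime import datetime
from pathlib import Path


def backup_name(when):
    """Build a timestamped file name such as crontab-20200102-030405.bak."""
    return f"crontab-{when:%Y%m%d-%H%M%S}.bak"


def backup_crontab(dest_dir, list_cmd=("crontab", "-l")):
    """Save the output of `crontab -l` to a timestamped file and return its path.

    `crontab -l` exits non-zero when the user has no crontab, in which case
    check=True makes this raise subprocess.CalledProcessError.
    """
    result = subprocess.run(list_cmd, capture_output=True, text=True, check=True)
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    path = dest / backup_name(datetime.now())
    path.write_text(result.stdout)
    return path
```

Calling `backup_crontab("/var/backups/crontabs")` from a scheduled job would keep dated copies around, and restoring is then `crontab <file>`.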

Can someone explain AI/ML/DL to me in a traditional algorithmic way, without AI jargon?

What I currently understand is that they convert the training data to numbers using a complex black-box mathematical algorithm, and then when new data comes in, the same conversion is done and a decision is taken based on where the new number fits within the geometry/graph plot of the old numbers from training. The numbers are then updated. Is this what they call AI? Nearest number/decision search?
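The "nearest number" picture above matches one specific, very old algorithm quite well: nearest-neighbour classification. A minimal sketch follows, with made-up 2D points and labels. Many other models (neural networks, for example) fit parameters during training rather than searching stored examples at prediction time, so this is one member of the family, not the whole of it.

```python
import math


def nearest_label(training, point):
    """Return the label of the training example closest to `point`."""
    _, label = min(training, key=lambda example: math.dist(example[0], point))
    return label


# Training data already "converted to numbers": (coordinates, label) pairs.
training = [
    ((1.0, 1.0), "small"),
    ((1.5, 2.0), "small"),
    ((8.0, 9.0), "large"),
    ((9.0, 8.5), "large"),
]

print(nearest_label(training, (2.0, 1.0)))   # prints "small"
print(nearest_label(training, (7.5, 8.0)))   # prints "large"
```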

Kindly try to avoid criticism, I am having a difficult time understanding the already trending AI stuff. People say that the algorithms have existed for a long time but only now do we have the compute power.20 -

As you can see from the screenshot, it's working.

The system is actually learning the associations between the digit sequence of semiprime hidden variables and known variables.

Training loss and value loss are super high at the moment and I'm using an absurdly small training set (10k sequence pairs). I'm running on the assumption that there is a very strong correlation between the structures (and that it isn't just all ephemeral).

This initial run is just to see if training a machine learning model is a viable approach.

Won't know for a while. Training loss could get very low (that's a good thing, indicating actual learning), only for it to spike later on, and if it does, I won't know if the sample size is too small, or if I need to do more training, or if the problem is actually intractable.

If or when that happens I'll experiment with different configurations like batch sizes, and more epochs, as well as upping the training set incrementally.

In either case, once the initial model is trained, I need to test it on samples never seen before (products I want to factor) and see if it generates some or all of the digits needed for rapid factorization.

Even partial digits would be a success here.

And I expect to create multiple training sets for each semiprime product and its unknown internal variables versus derivable known variables. The intersections of the sets, and what digits they have in common might be the best shot available for factorizing very large numbers in this approach.

Regardless, once I see that the model works at the small scale, the next step will be to increase the scope of the training data, and begin building out the distributed training platform so I can cut down the training time on a larger model.

I also want to train on random products of very large primes, just for variety and see what happens with that. But everything appears to be working. Working way better than I expected.

The model is running and learning to factorize semiprimes from the set of identities I've been exploring for the last three fucking years.

Feels like things are paying off finally.

Will post updates specifically to this rant as they come. Probably once a day.

2 -

The "everything is data science" craze.

Honestly it's not even sustainable with every kid and their grandmother wanting to be data scientists because it's a 'passion' and a 'dream job' and all of that click bait stuff.

It's just become ridiculous at this point and I doubt we'll even have the long awaited 'breakthroughs' people have been talking about for so long.

Also I have a strong feeling everyone thinks it's their 'passion' because it tops the lists of highest paid jobs out there and everyone thinks with 3 months of training they're a fully fledged data scientist because some Python or R package implements all the algorithms they could ever think of using.

Add to that the fact that most advertised data science jobs are actually data engineering where you maintain a data store and that's it.

Agree or disagree, that's my piece, and if you can convince me otherwise I'll be surprised, because I was subscribed to this idea for so long that it lost me some real good opportunities. I thought it was just what I was meant to be doing, which turned out to be false after I thought about it. There's a million other jobs that are more impactful and worth pursuing.2 -

dev, ~boring

This is either a shower thought or a sober weed thought, not really sure which, but I've given some serious consideration to "team composition" and "working condition" as a facet of employment, particularly in regard to how they translate into hiring decisions and team composition.

I've put together a number of teams over the years, and in almost every case I've had to abide by an assemblage of pre-defined contexts that dictated the terms of the team working arrangement:

1. a team structure dictated to me

2. a working temporality scheme dictated to me

3. a geographic region in which I was allowed to hire

4. a headcount, position tuple I was required to abide by

I've come to regard these structures as weaknesses. It's a bit like the project management triangle in which you choose 1-2 from a list of inadequate options. Sometimes this is grounded in business reality, but more often than not it's because the people surrounding the decisions thrive on risk mitigation frameworks that become trickle down failure as they impose themselves on all aspects of the business regardless of compatibility.

At the moment, I'm in another startup that I have significantly more control over and again have found my partners discussing the imposition of structure and framework around how, where, why, who and what work people do before contact with any action. My mind is screaming at me to pull the cord, as much as I hate the expression. This stems from a single thought:

"Hierarchy and structure should arise from an understanding of a problem domain"

As engineers we develop processes based on logic; it's our job, it's what we do. Logic operates on data derived from experiments, so in the absence of the real we perform thought experiments that attempt to reveal some fundamental fact we can use to make a determination.

In this instance we can ask ourselves the question, "what works?" The question can have a number of contexts: people, effort required, time, pay, need, skills, regulation, schedule. These things in isolation all have a relative importance (a weight), and they can relatively expose limits of mutual exclusivity (pay > budget, skills < need, schedule < (people * time/effort)). The pre-imposed frameworks in that light are just generic attempts to abstract away those concerns based on pre-existing knowledge. There's a chance they're fine, and just generally misunderstood or misapplied; there's also a chance they're insufficient in the face of change.

Fictional entities like the "A Team," comprise a group of humans whose skills are mutually compatible, and achieve synergy by random chance. Since real life doesn't work on movie/comic book logic, it's easy to dismiss the seed of possibility there, that an organic structure can naturally evolve to function beyond its basic parts due to a natural compatibility that wasn't necessarily statistically quantifiable (par-entropic).

I'm definitely not proposing that, nor do I subscribe to the 10x ninja founders are ideal theory. Moreso, this line of reasoning leads me to the thought that team composition can be grown organically based on an acceptance of a few observed truths about shipping products:

1. demand is constant

2. skills can either be bought or developed

3. the requirement for skills grows linearly

4. hierarchy limits the potential for flexibility

5. a team's technical proficiency over time should lead to a non-linear relationship between headcount and growth

Given that, I can devise a heuristic, organic framework for growing a team:

- Don't impose reporting structure before it has value (you don't have to flatten a hierarchy that doesn't exist)

- crush silos before they arise

- Identify needed skills based on objectives

- base salary projections on need, not available capital

- Hire to fill skills gap, be open to training since you have to pay for it either way

- Timelines should always account for skills gap and training efforts

- Assume churn will happen based on team dynamics

- Where someone is doesn't matter so long as it's legal. Time zones are only a problem if you make them one.

- Understand that the needs of a team are relative to a given project, so cookie cutter team composition and project management won't work in software

- Accept that failure is always a risk

- operate with the assumption that teams that are skilled, empowered and motivated are more likely to succeed.

- Culture fit is a per team thing, if the team hates each other they won't work well no matter how much time and money you throw at it

Last thing isn't derived from the train of thought, just things I feel are true:

- Training and headcount is an investment that grows linearly over time, but can have exponential value. Retain people, not services.

- "you build it, you run it" will result in happier customers, faster pivoting. Don't adopt an application maintenance strategy

/rant2 -

Just started my exams training! (Doing a study called Application Development).

The application doesn't sound that complicated but I have to implement a data exporting feature. Sounds alright, doesn't it?

THE 'CLIENT' DOES NOT KNOW WHAT DATA FORMAT THE FICTIVE CUSTOMERS CAN PARSE/HANDLE BUT I HAVE TO MAKE IT GENERIC SO THAT THEY CAN USE IT ANYWAYS. HOW THE FUCKING FUCK AM I SUPPOSED TO KNOW WHAT FUCKING FORMAT I SHOULD CHOOSE?!? SHOULD I TRY TO SMELL IT OR SOMETHING?

FML.2 -

Our employee management system, for some reason, stored Testlists (I work in QA) linked to the user accounts that created them. Now, after a colleague who worked there for five years left, pretty much all our data was suddenly down the drain, and nobody backed the fricking server up because, hey, what's the fun in that. Now all the tests need to be rewritten, and other than the whole GUI test automation of our product, maintenance of the same for another product, manually testing dev issues and training my new code monkeys to frickin not commit non-working code to the trunk, I now also have "Make a better Employee management system" (roughly translated, those are the specs I've got) on my plate... I can remember back to the carefree days of just before my boss asked me if I wanted to try to automate some of the test cases... How did I ever survive this paralyzing tranquility. Ha, surprise.

!rant, I fucking love the stress and juggling a shit ton of problems at the same time keeps me on edge.2 -

Next week I'm starting a new job and I kinda wanted to give you guys an insight into my dev career over the last four years. Hopefully it can give some people some insight into how a career can grow unexpectedly.

While I was finishing up my studies (AI) I decided to talk to one of these recruiters and see what kind of jobs I could get as soon as I would be done. The recruiter immediately found this job with a Java consultancy company that also had a training aspect on the side (four hours of training a week).

In this job I learned a lot about many things. I learned about Spring framework, clean code, cloud deployment, build pipelines, Microservices, message brokers and lots more.

As this was a consultancy company, I was placed at different companies. During my time here I worked on two different projects.

The first was a Microservices project about road traffic data. The company was a mess, and I learned a lot about company politics. I think I never saw anything I built really released in my 16 months there.

I also had to drive 200km every day for this job, which just killed me. And after far too long I was finally moved to the second company, which was much closer.

The second company was a fintech startup funded by a bank. Everything was so much better than the traffic company. There was a very structured release schedule, with a pretty okay scrum implementation. Every team had their own development environment on aws which worked amazingly. I had a lot of fun at this job, with many cool colleagues. And all the smart people around me taught me even more about everything related to working in software engineering.

I quit my job at the consultancy company, and with that at the fintech place, because I got an opportunity I couldn't refuse. My brother was working for Jordan Belfort, the Wolf of Wall Street, and he said they needed a developer to build a learning platform. So I packed my bags and flew to LA.

The office was just a villa on the beach, next to Jordan's house. The company was quite small and there were actually no real developers. There was a guy who claimed to be the cto of the company, but he actually only knew how to do WordPress and no one had named him cto, which was very interesting.

So I sat down with Jordan and we talked about the platform he wanted to build. I explained how the things he wanted would eventually not be possible with WordPress and we needed to really start building software and become a software development company. He agreed and I was set to designing a first iteration of the platform.

Before I knew it I was building the platform part by part, adding features everywhere, setting up analytics, setting up payment flows, monitoring, connecting to Salesforce, setting up build pipelines and setting up the whole AWS environment. I had to do everything from frontend to the backest of backends. Luckily I could grow my team a tiny bit after a while, until there were four of us. But the other three were still very junior, so I also got the task of training them next to developing.

Still I learned a lot and there's so much more to tell about my time at this company, but let's move forward a bit.

Eventually I had to go back to the Netherlands because of reasons. I still worked a bit for them from over here, but the fun of it was gone without my colleagues around me, so I quit last September.

I noticed I was all burned out, had worked far too much, so I decided to take a few months off and figure out what I wanted to do with my life. I even wondered whether I wanted to stay in programming.

Fast forward to last few weeks. I figured out I actually did want to work in software still, but now I would focus on getting the right working circumstances. No more driving 3 hours every day, no more working 12 hours every day. Just work close to home and find a company with the right values.

So I started sending out resumes and I gave one recruiter the chance to arrange some interviews too. I spoke to 7 companies in the span of one week. And they were all very interested. Eventually I narrowed it down to 2 companies and asked them for offers. And the company that actually had my preference offered me significantly more than I asked for, which settled the deal.

So tomorrow I'm officially signing with them, and starting next week I'll be developing in Kotlin, diving into functional programming and running our code in serverless environments. I'm very excited! -

I never knew that I was a good mentor at SQL, especially at PL/SQL.

I gave a task to a new member of my team: fill 5 tables with data from 15 other tables.

I briefed him well on the tables and their structure. He spent about 3 days creating 25 different queries in order to fill the 5 tables.

After I saw the 25 queries, I told him that he could do it with 1 main query and 5 insert statements.
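The rant doesn't show the actual queries, so the following is only a sketch of the "one main query, several inserts" shape. It uses SQLite from Python's standard library as a stand-in for Oracle, with two made-up source tables and two made-up target tables instead of 15 and 5. Oracle also has a multi-table `INSERT ALL ... SELECT` form, which may be closer to what was really used.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical source tables.
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    -- Hypothetical target tables.
    CREATE TABLE report_by_country (country TEXT, revenue REAL);
    CREATE TABLE report_by_customer (name TEXT, revenue REAL);

    INSERT INTO customers VALUES (1, 'Ann', 'NL'), (2, 'Bob', 'NL'), (3, 'Cy', 'US');
    INSERT INTO orders VALUES (1, 1, 10.0), (2, 1, 5.0), (3, 2, 7.0), (4, 3, 20.0);

    -- The one "main query": join the sources once and keep the result.
    CREATE TEMP TABLE joined AS
        SELECT c.name, c.country, o.total
        FROM customers c
        JOIN orders o ON o.customer_id = c.id;

    -- One simple INSERT ... SELECT per target table, all reading the same join.
    INSERT INTO report_by_country
        SELECT country, SUM(total) FROM joined GROUP BY country;
    INSERT INTO report_by_customer
        SELECT name, SUM(total) FROM joined GROUP BY name;
""")

by_country = dict(conn.execute("SELECT country, revenue FROM report_by_country"))
by_customer = dict(conn.execute("SELECT name, revenue FROM report_by_customer"))
print(by_country)
print(by_customer)
```

The point is that the join logic lives in exactly one place, so each target table only needs a short statement on top of it.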

So I spent 1 hour on training, in order to build, run and explain how to create the best SQL statements for this task.

(First 5 minutes)

It looked so simple at the beginning, starting with 1 simple join, but after a few steps he lost track of what I was doing.

(Rest 55 minutes)

I explained the SQL statements I'd created and how Oracle works.

Now, every time he meets me, he is so thankful to me for teaching him all those Oracle SQL tips in 1 hour.

Now he works only with big data and he loves SQL.1 -

So, currently I am on Vacation and my dad asked me to train two of his staff members to use computer for data entry and basic usage stuff.

Now both guys are total noobs and have never used a computer before.

So I decided to take this opportunity to conduct a simple experiment. I am training one on Windows and other on Ubuntu to check out which one performs better.

The windows guy is winning.5 -

This is my understanding of "Machine Learning" in general

There are two sets of data:

1. In the first data set, all the properties are known

2. In the second set, some properties are not known.

The goal of the machine learning is to find the value of the unknown properties of the second data set.

We do this by finding (or training) a suitable machine learning model (mathematical, logical, or any combination of the two) that, on the first data set, computes the values of the properties that are unknown in the second data set with minimum error, since we already know the real values of those properties.

Now, use this model to predict the unknown properties of the second data set.
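As a tiny worked instance of that description, here is a sketch that fits a straight line to a fully known data set by ordinary least squares and then uses it on a second set where `y` is unknown. The numbers are made up, and a straight line is just the simplest possible choice of model.

```python
def fit_line(points):
    """Ordinary least squares for y = a*x + b: picks a and b with minimum squared error."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


# First data set: every property (x and y) is known.
known = [(1, 3.1), (2, 4.9), (3, 7.2), (4, 8.8)]
# Second data set: x is known, y is the unknown property.
unknown_x = [5, 6]

a, b = fit_line(known)
predictions = [a * x + b for x in unknown_x]
print(f"model: y = {a:.2f}*x + {b:.2f}")
print([round(p, 2) for p in predictions])
```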

3 -

The absolutely terrible and anti-customer "service" provided by Comcast should be illegal.

My bill went up $25 this month without any warning whatsoever, and all I get is their braindead AI they are training with customer voice data. So I shout gibberish into it until it connects me to a human.

I have actual choices where I live, so I'm going to cancel. -

First, we could really use a 'that's cool' category.

Second, a guy uses StyleGAN and OpenAI to generate pottery glazes that don't exist. Then he generates glaze recipes that don't exist.

Then he sets up a model to generate glazes that don't exist *from* recipes that don't exist (again, generated with StyleGAN).

Posts it to a pottery site called Glazy, where users share *real* glaze recipes and results, and where our guy got his original training data.

And what happens next? Users start making samples of his AI generated glazes, like, in the real world.

And I am just blown away at the very idea.

You can read about his awesome work here:

https://thisvesseldoesnotexist.com/... -

Downloaded 130gb of movie subtitles zip files.

If I find some power deep in my heart, I will normalize the data and launch training on a generative transformer to see if it produces decent dialogues.

It will probably stop at the planning phase because I’m diving deeper towards depression.9 -

Work on a product to categorize text… previous guy implemented an NLP solution that took 20 per body of text (500 words or so) in a $400/mo AWS instance, was about 80% accurate and needed “more data for training” 🤦‍♂️

I thought (and still think) that for some use cases AI is straight up snake oil. Decided instead to make an implementation with a word list and a bunch of if statements in Go… no performance considerations, loops within loops reading every single word… I just wanted to see if it worked and maybe later I could write it more optimized in Rust or something…
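Roughly what that kind of approach looks like, as a sketch. The original was written in Go and its word lists aren't shown, so the categories, the words, and the "most hits wins" rule here are all assumptions for illustration.

```python
import re

# Hypothetical categories and word lists, for illustration only.
WORD_LISTS = {
    "billing": {"invoice", "refund", "payment", "charge"},
    "support": {"error", "crash", "bug", "broken"},
}


def categorize(text, word_lists=WORD_LISTS, default="other"):
    """Count word-list hits per category and return the category with the most hits."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = {category: 0 for category in word_lists}
    for word in words:  # loops within loops, reading every single word
        for category, vocabulary in word_lists.items():
            if word in vocabulary:
                scores[category] += 1
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default


print(categorize("The payment failed and I want a refund"))   # prints "billing"
print(categorize("App crash with an error on start"))         # prints "support"
print(categorize("Hello there"))                              # prints "other"
```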

first time I ran it it took so little that I thought it had a bug… threw more of the test data we had for the NLP, 94% accuracy, 50 flipping milliseconds per body of text in a $5/mo AWS instance!!!

Now, that felt good!!

(The other guy… errr… left, that code is still the core of product of the company I built it for, I got bored and moved to another company :)3 -

AI here, AI there, AI everywhere.

AI-based ads

AI-based anomaly detection

AI-based chatbots

AI-based database optimization (AlloyDB)

AI-based monitoring

AI-based blowjobs

AI-based malware

AI-based antimalware

AI-based <anything>

...

But why?

It's a genuine question. Do we really need AI in all those areas? And is AI better than a static ruleset?

I'm not much into AI/ML (I'm a paranoid sceptic) but the way I understand it, the correctness of an AI's operation relies solely on the data its datamodel has been trained on. And if it's a rolling datamodel, i.e. if it's training (getting feedback) while it's LIVE, its correctness depends on how good the feedback is.

The way I see it, AI/ML are very good and useful in processing enormous amounts of data to establish its own "understanding" of the matter. But if the data is incorrect or the feedback is incorrect, the AI will learn it wrong and make false assumptions/claims.

So here I am, asking you, the wiser people, AI-savvy lads, to enlighten me with your wisdom and explain to me, is AI/ML really that much needed in all those areas, or is it simpler, cheaper and perhaps more reliable to do it the old-fashioned way, i.e. preprogramming a set of static rules (perhaps with dynamic thresholds) to process the data with?23 -

Another case of "couldn't you have told me BEFORE I started working on this?"

I'm making a training in Unity3D for a client, and they want it to integrate with their learning management system (LMS).

I made a simple SCORM package that gets the userID and then uses a custom URL scheme to launch the app with the user data from the LMS.

Tested on multiple platforms, all works perfectly fine.

Then, during a meeting, someone says they "can't download it". I ask "which browser are you using?" and he says "I'm using the LMS app."

... the LMS has an APP?

So I start figuring out ways to launch the system default browser from within an app's embedded browser, and nothing so far has worked.

target=_system, nope.

all kinds of weird javascript shenanigans, but the LMS APP browser just blocks everything.

Probably to protect students from malicious software that could be injected in courses, but now I'm stuck trying to find a workaround for this too.

But what sucks the most is that this happened DAYS BEFORE THE DEADLINE!

Well, at least the deadline won't be my problem anymore soon. -

In 2015 I sent an email to Google labs describing how pareidolia could be implemented algorithmically.

The basis is that a noise function put through a discriminator, could be used to train a generative function.

And now we have transformers.

I also told them if they looked back at the research they would very likely discover that dendrites were analog hubs, not just individual switches. That's turned out to be true too.

I wrote to them in an email as far back as 2009 that attention was an under-researched topic. In 2017 someone finally got around to writing "attention is all you need."

I wrote that there were very likely basic correlates in the human brain for things like numbers, and simple concepts like color, shape, and basic relationships, that the brain used to bootstrap learning. We found out years later based on research, that this is the case.

I wrote almost a decade ago that personality systems were a means that genes could use to value-seek for efficient behaviors in unknowable environments, a form of adaption. We later found out that is probably true as well.

I came up with the "winning lottery ticket" hypothesis back in 2011, for why certain subgraphs of networks seemed to naturally learn faster than others. I didn't call it that though, it was just a question that arose because of all the "architecture thrashing" I saw in the research, why there were apparent large or marginal gains in slightly different architectures, when we had an explosion of different approaches. It seemed to me the most important difference between countless architectures, was initialization.

This thinking flowed naturally from some ideas about network sparsity (namely that it made no sense that networks should be fully connected, and we could probably train networks by intentionally dropping connections).

All the way back in 2007 I thought this was comparable to masking inputs in training, or a bottleneck architecture, though I didn't think to put an encoder and decoder back to back.

Nevertheless it goes to show, if you follow research real closely, how much low hanging fruit is actually out there to be discovered and worked on.

And to this day, Google never fucking once got back to me.

I wonder if anyone ever actually read those emails...

Wait till they figure out "attention is all you need" isn't actually all you need.

p.s. something I read recently got me thinking. Decoders can also be viewed as resolving a manifold closer to an ideal form for some joint distribution. Think of it like your data as points on a balloon (the output of the bottleneck), and decoding as the process of expanding the balloon. In absolute terms, as the balloon expands, your points grow apart, but as long as the datapoints are not uniformly distributed, then *some* points will grow closer together *relatively* even as the surface expands and pushes points apart in the absolute.

In other words, for some symmetry, the encoder and bottleneck introduce an isotropy, and this step also happens to tease out anisotropy, information that was missed or produced by the encoder, which is either distortions introduced by the architecture/approach, features of the data that got passed on through the bottleneck, or essentially hidden features. -

I wasn't hired to do a dev's job (handled sales) but they asked me to help the non-HQ end with sorting transaction records (a country's worth) for an audit.

Asked HQ if they could send the data they took so I wouldn't need to request the data. We get told sure, you can have it. Waits for a month. Nothing. Apparently, they've forgotten.

Asks for data again. They churn it out in 24 hours. Badly parsed. Apparently they just put a mask of a UI on top and stored all fields as one entire string (with no separators). The horror!

Ended up wasting most of a week simply fixing the parsing by brute force since we had no time.

Good news(?): We ended up training the front desk people to end their fields with semicolons to force the backend into a possibly parseable state. -

I'm gonna spin this as a ridiculously awesome meeting. My company is currently expanding the local satellite office into a full site. Part of that includes building a local presence for recruiting efforts.

I was part of a meeting I organized at my alma mater between my executive partner and two deans of the college. I am leading the effort to help them align their curriculum with modern practices, training them on free software licences with my company, and more. As well, there's an opportunity to train students on an untouched area of big data in the medical industry.

Less than 2 years with the company, and partners (local, national, and international) within my work area are sending me kudos. -

The first fruits of almost five years of labor:

7.8% of semiprimes give the magnitude of their lowest prime factor via the following equation:

((p/(((((p/(10**(Mag(p)-1))).sqrt())-x) + x)*w))/10)

I've also learned, given exponents of some variables, to relate other variables to them on a curve to better make sense of the larger algebraic structure. This has mostly been stumbling in the dark but after a while it has become easier to translate these into methods that allow plugging in one known variable to derive an unknown in a series of products.

For example I have a series of variables d4a, d4u, d4z, d4omega, etc, and these are translatable now, through insights that become various methods, into other types of (non-d4) series. What these variables actually represent is less relevant, only that it is possible to translate between them.

I've been doing some initial learning about neural nets (implementation, rather than theoretics as I normally read about). I'm thinking what I might do is build a GPT style sequence generator, and train it on the 'unknowns' from semiprime products with known factors.
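A rough sketch of where the training pairs for such a model could come from: semiprimes built from known primes, so every product comes with its factors as labels. The helper names and the prime range are assumptions, and the post's internal variables (d4a and friends) are not defined here, so they are left out rather than guessed.

```python
import random


def is_prime(n):
    """Trial division, good enough for small illustrative primes."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    i = 3
    while i * i <= n:
        if n % i == 0:
            return False
        i += 2
    return True


def random_prime(lo, hi, rng):
    """Sample integers in [lo, hi) until one is prime."""
    while True:
        candidate = rng.randrange(lo, hi)
        if is_prime(candidate):
            return candidate


def semiprime_examples(count, lo=1000, hi=10000, seed=0):
    """Build (product, factors) records; the factors are the known labels."""
    rng = random.Random(seed)
    examples = []
    for _ in range(count):
        a, b = random_prime(lo, hi, rng), random_prime(lo, hi, rng)
        small, large = min(a, b), max(a, b)
        examples.append({"product": small * large, "small": small, "large": large})
    return examples


dataset = semiprime_examples(5)
```

Any derived features would be computed from `product` alone and paired with `small` and `large` as the targets the sequence model has to predict.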

The whole point of the project is that a bunch of internal variables can easily be derived, (d4a, c/d4, u*v) from a product, its root, and its mantissa, that relate to *unknown* variables--unknown variables such as u, v, c, and d4, that if known directly give a constant time answer to the factors of the original product.

I think there's sufficient data at this point to train such a machine, I just don't think I'm up to it yet because I'm lacking in the calculus department.

2000+ variables that are derivable from a product, without knowing its factors, which are themselves products of unknown variables derived from the internal algebraic relations of a product--this ought to be enough of an attack surface to do something with.

I'm willing to collaborate with someone familiar with recurrent neural nets and get them up to speed through telegram/element/discord if they're willing to do the setup and training for a neural net of this sort, one that can tease out hidden relationships and map known variables to the unknown set for a given product. -

Adaptive Latent Hypersurfaces

The idea is rather than adjusting embedding latents, we learn a model that takes the context tokens as input, and generates an efficient adapter or transform of the latents, so when the latents are grabbed for that same input, they produce outputs with much lower perplexity and loss.

This can be trained autoregressively.

This is similar in some respects to hypernetworks, but applied to embeddings.

The thinking is we shouldn't change latents directly, because any given vector will generally be orthogonal to any other, and changing the latents introduces variance for some subset of other inputs over some distribution that is partially or fully out-of-distribution to the current training and verification data sets, thus ultimately leading to a plateau in loss-drop.

Therefore, by autoregressively taking an input, and learning a model that produces a transform on the latents of a token dictionary, we can avoid this ossification of global minima, by finding hypersurfaces that adapt the embeddings, rather than changing them directly.
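A forward-pass-only sketch of what that could look like, under heavy assumptions: the adapter is a per-dimension scale and shift, the context summary is a plain mean of token latents, and `W_SCALE` / `W_SHIFT` are hypothetical adapter-generator weights. The autoregressive training loop is left out.

```python
import math
import random

DIM = 4
rng = random.Random(0)
VOCAB = ["the", "cat", "sat", "mat"]
# Frozen latents: these are never updated.
EMBED = {tok: [rng.gauss(0, 1) for _ in range(DIM)] for tok in VOCAB}
# Hypothetical adapter-generator parameters (the only part that would be trained).
W_SCALE = [[rng.gauss(0, 0.1) for _ in range(DIM)] for _ in range(DIM)]
W_SHIFT = [[rng.gauss(0, 0.1) for _ in range(DIM)] for _ in range(DIM)]


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def make_adapter(context):
    """Map context tokens to a per-dimension (scale, shift) transform."""
    summary = [sum(EMBED[t][i] for t in context) / len(context) for i in range(DIM)]
    scale = [1.0 + math.tanh(s) for s in matvec(W_SCALE, summary)]
    shift = matvec(W_SHIFT, summary)
    return scale, shift


def adapted_latent(token, adapter):
    """Read a latent through the adapter without touching the stored vector."""
    scale, shift = adapter
    return [s * e + b for s, e, b in zip(scale, EMBED[token], shift)]


adapter = make_adapter(["the", "cat", "sat"])
view_of_cat = adapted_latent("cat", adapter)
```

The same token gets a different effective latent under each context, while `EMBED` itself stays fixed.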

The result is a network that essentially acts as a compressor of all relevant use cases, without leading to overfitting on in-distribution data and underfitting on out-of-distribution data. -

Here's some research into a new LLM architecture I recently built and have had actual success with.

The idea is simple, you do the standard thing of generating random vectors for your dictionary of tokens, we'll call these numbers your 'weights'. Then, for whatever sentence you want to use as input, you generate a context embedding by looking up those tokens, and putting them into a list.

Next, you do the same for the output you want to map to, let's call it the decoder embedding.

You then loop and generate a 'noise embedding': for each vector or individual token in the context embedding, you subtract that token's noise value from that token's embedding value or specific weight.

You find the weight index in the weight dictionary (one entry per word or token in your token dictionary) that's closest to this embedding. You use a version of cuckoo hashing where similar values are stored near each other, and the canonical weight values are actually the key of each key:value pair in your token dictionary. When doing this you align all the randomly numbered keys in the dictionary (a uniform sample from 0 to 1), and look at the Hamming distance between the context embedding+noise embedding (called the encoder embedding) and the canonical keys, with each digit from left to right being penalized by some factor f (because digits further left carry larger magnitudes), and then penalize or reward based on the numeric closeness of any given individual digit of the encoder embedding at the same index of any given weight i.
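One possible reading of that digit-wise comparison, as a sketch only. It assumes values lie in [0, 1), compares the first six decimal digits, folds the mismatch and the numeric closeness of each digit into one term, and shrinks the weight by `f` at each step to the right. The cuckoo-hash storage is not modelled; `nearest_key` is a plain linear scan.

```python
def digits(x, n=6):
    """First n decimal digits of a value in [0, 1), as a list of ints."""
    scaled = min(int(x * 10 ** n), 10 ** n - 1)
    return [int(c) for c in str(scaled).zfill(n)]


def digit_distance(a, b, f=0.5, n=6):
    """Position-weighted digit distance: leftmost digits weigh the most."""
    total = 0.0
    weight = 1.0
    for da, db in zip(digits(a, n), digits(b, n)):
        total += weight * abs(da - db) / 9.0  # 0 when the digits match
        weight *= f  # digits further right count less
    return total


def nearest_key(value, keys, f=0.5):
    """Linear scan for the canonical key closest to an encoder value."""
    return min(keys, key=lambda k: digit_distance(value, k, f))


closest = nearest_key(0.5, [0.25, 0.75, 0.5])
```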

You then substitute the canonical weight in place of this encoder embedding, look up that weight's index in my earliest version, and then use that index to look up the word|token in the token dictionary and compare it to the word at the current index of the training output to match against.
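The lookup loop described above, boiled down to a sketch. It assumes one scalar weight per token, plain absolute difference instead of the digit-wise distance, and a linear scan instead of the hashed lookup; the token list and function names are made up for the example.

```python
import random

rng = random.Random(0)
TOKENS = ["hello", "world", "foo", "bar"]
# One random canonical weight per token (uniform in [0, 1)).
WEIGHTS = {tok: rng.random() for tok in TOKENS}


def embed(sentence):
    """Context embedding: the list of weights for the input tokens."""
    return [WEIGHTS[t] for t in sentence]


def decode(context, noise):
    """Subtract per-token noise, then snap each value to the nearest canonical weight."""
    out = []
    for value, n in zip(embed(context), noise):
        encoder_value = value - n
        token = min(WEIGHTS, key=lambda t: abs(WEIGHTS[t] - encoder_value))
        out.append(token)
    return out


# The noise that maps "hello world" onto "foo bar" is just the per-token difference.
noise = [WEIGHTS["hello"] - WEIGHTS["foo"], WEIGHTS["world"] - WEIGHTS["bar"]]
mapped = decode(["hello", "world"], noise)
```

Training in this picture amounts to finding a noise embedding that lands each input token on the wanted output token.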

Of course by switching to the hash version the lookup is significantly faster, but I digress.

That introduces a problem.

If each input token matches one output token how do we get variable length outputs, how do we do n-to-m mappings of input and output?

One of the things I explored was using pseudo-markovian processes, where there's one node, A, with two links to itself, B, and C.

B is a transition matrix, and A holds its own state. At any given timestep, A may use either the default transition matrix (training data encoder embeddings) with B, or it may generate new ones, using C and a context window of A's prior states.

C can be used to modify A, or it can be used as a noise embedding to modify B.

A can take on the state of both A and C or A and B. In fact we do both, and measure which is closest to the correct output during training.
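A small sketch of the "do both and keep whichever is closest" step. The post doesn't say how C turns prior states into a new one, so averaging the last three states is purely a placeholder assumption, as are the function names.

```python
def step_with_b(state, transition):
    """Default update: apply the transition matrix B to A's current state."""
    return [sum(w * s for w, s in zip(row, state)) for row in transition]


def step_with_c(history):
    """Placeholder for C: build a new state from a window of A's prior states."""
    window = history[-3:]
    return [sum(col) / len(window) for col in zip(*window)]


def sq_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train_step(history, transition, target):
    """Try both links and keep whichever lands closer to the training target."""
    candidates = {
        "B": step_with_b(history[-1], transition),
        "C": step_with_c(history),
    }
    best = min(candidates, key=lambda name: sq_error(candidates[name], target))
    return best, candidates[best]


history = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]
choice, new_state = train_step(history, swap, target=[1.0, 0.0])
```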

What this *doesn't* do is give us variable length encodings or decodings.

So I thought a while and said, if we're using noise embeddings, why can't we use multiple?

And if we're doing multiple, what if we used a middle layer, let's call it the 'key', and took its mean over *many* training examples, and used it to map from the variance of an input (query) to the variance and mean of a training or inference output (value).

But how does that tell us when to stop or continue generating tokens for the output?

Posted on pastebin if you want to read the whole thing (DR wouldn't post for some reason).

In any case I wasn't sure if I was dreaming or if I was off in left field, so I went and built the damn thing, the autoencoder part, wasn't even sure I could, but I did, and it just works. I'm still scratching my head.

https://pastebin.com/xAHRhmfH -

I had the idea that part of the problem of NN and ML research is we all use the same standard loss and nonlinear functions. In theory most NN architectures are universal approximators. But there's a big gap between symbolic and numeric computation.

But some of our bigger leaps in improvement weren't just from new architectures, but entire new approaches to how data is transformed, and how we calculate loss, for example KL divergence.

And it occurred to me all we really need is training/test/validation data and with the right approach we can let the system discover the architecture (been done before), but also the nonlinear and loss functions itself, and see what pops out the other side as a result.

If a network can instrument its own code as it were, maybe it'd find new and useful nonlinear functions and losses. Networks wouldn't just specify a conv layer here, or a maxpool there, but derive implementations of these all on their own.

More importantly with a little pruning, we could even use successful examples for bootstrapping smaller more efficient algorithms, all within the graph itself, and use genetic algorithms to mix and match nodes at training time to discover what works or doesn't, or do training, testing, and validation in batches, to anneal a network in the correct direction.

By generating variations of successful nodes and graphs, and using substitution, we can use comparison to minimize error (for some measure of error over accuracy and precision), and select the best graph variations, without strictly having to do much point mutation within any given node, minimizing deleterious effects, sort of like how gene expression leads to unexpected but fitness-improving results for an entire organism, while point-mutations typically cause disease.

It might seem like this wouldn't work out the gate, just on the basis of intuition, but I think the benefit of working through node substitutions or entire subgraph substitution, is that we can check test/validation loss before training is even complete.
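A stripped-down sketch of selection by substitution: swap one nonlinearity node for each candidate, fit the rest in closed form, and keep whichever gives the lowest validation loss. The candidate list, the one-node "network" y = a * act(x) + b, and the toy target are all assumptions made for the example; a real graph search would be far bigger.

```python
import math
import random

# Hypothetical pool of node variants to substitute in.
CANDIDATES = {
    "identity": lambda x: x,
    "tanh": math.tanh,
    "relu": lambda x: max(0.0, x),
    "square": lambda x: x * x,
}


def make_data(n, seed):
    """Toy target: y = 3x^2 + 1."""
    rng = random.Random(seed)
    xs = [rng.uniform(-2.0, 2.0) for _ in range(n)]
    return [(x, 3.0 * x * x + 1.0) for x in xs]


def fit_line(features, targets):
    """Closed-form least squares for y = a * feature + b."""
    n = len(features)
    mf, mt = sum(features) / n, sum(targets) / n
    var = sum((f - mf) ** 2 for f in features)
    a = sum((f - mf) * (t - mt) for f, t in zip(features, targets)) / var
    return a, mt - a * mf


def validation_loss(act, train, valid):
    a, b = fit_line([act(x) for x, _ in train], [y for _, y in train])
    return sum((a * act(x) + b - y) ** 2 for x, y in valid) / len(valid)


def select_node(train, valid):
    """Substitute each candidate node and keep the one with the lowest validation loss."""
    losses = {name: validation_loss(act, train, valid) for name, act in CANDIDATES.items()}
    return min(losses, key=losses.get), losses


best, losses = select_node(make_data(200, seed=0), make_data(50, seed=1))
```

On this toy target the `square` node wins, since it is the only candidate that can represent the data exactly.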

If we train a network to specify a known loss, we can even have that evaluate the networks themselves, and run variations on our network loss node to find better losses during training time, and at some point let nodes refer to these same loss calculation graphs, within themselves, switching between them dynamically, via variation and substitution.

I could even envision probabilistic lists of jump addresses, or mappings of value ranges to jump addresses, or having await() style opcodes on some nodes that upon being encountered, queue-up ticks from upstream nodes whose calculations the await()ed node relies on, to do things like emergent convolution.
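Two of those jump ideas, sketched with the standard library only. These are not the opcodes from the pastebin; the function names and the address numbers are invented for illustration, and the await() style opcode is not covered.

```python
import bisect
import random


def make_range_jump(boundaries, addresses):
    """Map value ranges to jump addresses.

    boundaries: sorted cut points; addresses: one more entry than boundaries.
    """
    def jump(value):
        return addresses[bisect.bisect_right(boundaries, value)]
    return jump


def probabilistic_jump(addresses, weights, rng):
    """Pick the next address at random, in proportion to the given weights."""
    return rng.choices(addresses, weights=weights, k=1)[0]


# Values below 0.0 go to address 10, values in [0.0, 1.0) to 20, the rest to 30.
jump = make_range_jump([0.0, 1.0], [10, 20, 30])
next_address = jump(0.5)
random_address = probabilistic_jump([10, 20, 30], [0.7, 0.2, 0.1], random.Random(0))
```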

I've written all the classes and started on the interpreter itself, just a few things that need fleshing out now.

Here's my shitty little partial sketch of the opcodes and ideas.

https://pastebin.com/5yDTaApS

I think I'll teach it to do convolution, color recognition, maybe try mnist, or teach it step by step how to do sequence masking and prediction, dunno yet. -

Fuck this.. I have to tell you this annoying wtf..

Hi btw this is my first rant so pls dont blame me :)

I am working on an etl project for our company to connect data sources like netbase, similar web, etc. to alooma (a data pipeline).

Now I got the task to add another data source called BrightEdge to it.

All fine.

BUT WHAT THE ACTUAL FUCK.. IT TAKES 3 MONTHS TO GET AN ACCOUNT. AND U KNOW WHAT.. I DIDNT EVEN GET THE API CREDENTIALS. THIS IS MY FINAL PROJECT FOR MY TRAINING TO BECOME AN IT SPECIALIST. -

Hey just brainstorming a business/ startup idea I may try out sometime down the line. I wanted to put it in writing available to my peers for review. If that sounds boring, sorry.