Search - "robotics"

-

Worst 'advice' from a college recruiter:

"Oh, you want to major in computer science? Well, our school is fantastic for women in comp sci because WHEN they find it too difficult they can easily transition to graphic design. How do you feel about graphic design?"

I decided that school was a bad choice.

Graduating this year with my BS in Comp Sci and going for my Masters in Robotics. Screw that guy.

-

My first job: The Mystery of The Powered-Down Server

I paid my way through college by working every-other-semester in the Cooperative-Education Program my school provided. My first job was with a small company (now defunct) which made some of the very first optical-storage robotic storage systems. I honestly forgot what I was "officially" hired for at first, but I quickly moved up into the kernel device-driver team and was quite happy there.

It was primarily a Solaris shop, with a smattering of IBM AIX RS/6000. It was one of these ill-fated RS/6000 machines which (by no fault of its own) plays a major role in this story.

One day, I came to work to find my team-leader in quite a tizzy -- cursing and ranting about our VAR selling us bad equipment; about how IBM just doesn't make good hardware like they did in the good old days; about how back when _he_ was in charge of buying equipment this wouldn't happen, and on and on and on.

Our primary AIX dev server was powered off when he arrived. He booted it up, checked the logs and ran self-diagnostics, but absolutely nothing indicated why the machine had shut down. We blew a couple of hours trying to figure out what happened, to no avail. Eventually, with other deadlines looming, we just chalked it up to something we'd look into later.

Several days went by, with the usual day-to-day comings and goings; no surprises.

Then, next week, it happened again.

My team-leader was LIVID. The same server was hard-down again when he came in; no explanation. He opened a ticket with IBM and put in a call to our VAR rep, demanding answers -- how could they sell us bad equipment -- why isn't there any indication of what's failing -- someone must come out here and fix this NOW, and on and on and on.

(As a quick aside, in case it's not clearly coming through between the lines, our team leader was always a little bit "over the top" for me. He was the kind of person who "got things done," and as long as you stayed on his good side, you could just watch the fireworks most days - but it became pretty exhausting sometimes.)

Back to our story -

An IBM CE comes out and does a full on-site hardware diagnostic -- tears the whole server down, runs through everything one part at a time. Absolutely. Nothing. Wrong.

I recall, at some point of all this, making the comment "It's almost like someone just pulls the plug on it -- like the power just, poof, goes away."

My team-leader demands the CE replace the power supply, even though it appeared to be operating normally. He does, at our cost, of course.

Another week goes by and all is forgotten in the swamp of work we have to do.

Until one day, the next week... Yes, you guessed it... It happens again. The server is down. Heads are exploding (well, at least one head; we all know whose by now). With all the screaming going on, the entire office staff should have been comped some Advil.

My team-leader demands the facilities team do a full diagnostic on the UPS system and assure we aren't getting drop-outs on the power system. They do the diagnostic. They also review the logs for the power/load distribution to the entire lab and office spaces. Nothing is amiss.

This would also be a good time to draw the picture of where this server is -- this particular server is not in the actual server room; it's out in the office area. That's on purpose, since it is connected to a demo robotics cabinet we use for testing and POC work. And customer demos. This will date me, but these were the days when robotic storage was new and VERY exciting to watch...

So, this is basically a couple of big boxes out on the office floor, with power cables running into a special power-drop near the middle of the room. That information might seem superfluous now, but will come into play shortly in our story.

So, we still have no answer to what's causing the server problems, but we all have work to do, so we keep plugging away, hoping for the best.

The team leader is insisting the VAR swap in a new server.

One night, we (the device-driver team) are working late, burning the midnight oil, right there in the office, and we bear witness to something I will never forget.

The cleaning staff came in.

Anxious for a brief distraction from our marathon of debugging, we stopped to watch them set up and start cleaning the office for a bit.

Then, friends, I Am Not Making This Up(tm)... I watched one of the cleaning staff walk right over to that beautiful RS/6000 dev server, dwarfed in shadow beside that huge robotic disc enclosure... and yank the server power cable right out of the dedicated power drop. And plug in their vacuum cleaner. And vacuum the floor.

We each looked at one-another, slowly, in bewilderment... and then went home, after a brief discussion on the way out the door.

You see, our team-leader wasn't with us that night; so before we left, we all agreed to come in late the next day. Very late indeed.

-

And finally it's set up... Working well with four... hopefully I can convert it to 1x4 instead of 2x2...

-

Where do I even start?

Personal projects?

So many. Shouldn't count.

Unpaid game dev intern?

Unpaid game dev volunteer?

Both worthwhile, if stressful. Shouldn't count either.

Freelancing where clients refused to pay?

That's happened a few times. One of them paid me in product instead of cash (WonderSoil, a company that [apparently still] makes and sells some expanding super potting soil thing). The product turned out to be defective and killed all of the plants I used it on. I'd have preferred getting stiffed instead. Their "factory" (small, almost tiny) was quite cool. The owner was a bitch. Probably still is.

Companies that have screwed me out of pay?

So many. I still curse their names at least once a month. I've been screwed out of about $13k now, maybe more. I've lost track.

I have two stories in particular that really piss me off.

The first: I was working at a large robotics company, and mostly enjoyed my job, though the drive was awful. The pay wasn't high either, but I still enjoyed the work. Schedule was nice, too: 28 hours (four 7-hour days) per week. Regardless, I got a job offer for double my salary, same schedule, and the drive was 11 minutes instead of 40. I took it. My new boss ended up tricking me into being a contractor -- refused to give me a W2, no contracts, etc. Later, he also increased my hours to 40 with no pay increase. He also took forever to pay (weeks to months), and eventually refused to pay me to my face, in front of my coworkers. Asshole still owes me about $5k. Should owe me the difference in taxes, too (W2 vs 1099) since he lied about it and forced me into it when it was too late to back out.

I talked to the BBB, the labor board, legal counsel, the IRS (because he was actively evading taxes), and the fire inspector (because he installed doors that locked if the power went out, installed the exit buttons on the fucking ceiling, and later disconnected all of said exit buttons). Nobody gave a single shit. Asshole completely got away with everything. Including several shady as hell things I can't list here because they're too easy to find.

The second one:

The economy was shit, and I was out of a job. I had been looking for quite awhile, and an ex-coworker (who had worked at google, interestingly) suggested I work for this new startup. It was a "reverse search engine," meaning it aggregated news and articles and whatnot, and used machine learning to figure out what its users are interested in, and provided them with exactly that. It would also help with scheduling, reminders of birthdays, mesh peoples' friends' travel plans and life events, etc. (You and a friend are going on vacation to the same place, and your mutual friend there is having a birthday! You should go to ___ special event that's going on while you're all there! Here's a coupon.) It was pretty cool. The owner was not. He delayed my payments a few times, and screwed me over on pay a few more times, despite promising me many times that he was "not one of those people." He ended up paying me less than fucking minimum wage. Fake, smiling, backstabbing asshole.

The first one still pisses me off more, though, because of all the shit I went through trying to get my missing back pay, and how he conned me every chance he got. And how he yelled at me and told me, to my face, that he wasn't ever going to pay me. Fucking goddamn hell I hate that guy.

-

Dev: I'm going to an engineering and robotics seminar this weekend

Manager: Stupid. Waste of time.

Dev: I also got invited to go to a 2 day tech and innovation conference

Manager: Another stupid waste of time.

Dev: The CEO's son invited me and is paying for it, he said he thought it would be interesting to me.

Manager: ...Well as long as it's not on company time

Dev: It is on company time, I won't have time for tickets

Manager: WHAT!? YOU HAVE TO SAY NO, WE ARE BUSY!! WE CAN'T NOT HAVE YOU FOR 2 DAYS.

Dev: Duly noted that you said that and that you think the whole idea is stupid. Take it up with him, I already RSVP'd yes.

Manager: 😡😡😡😡😡😡

-

Coding won over my first girlfriend!

In my senior year of high school I taught myself C++ and thought it was the coolest thing (lol). So I wrote a stupidly simple program that would ask your name and output a random riddle. But if the name was hers, it gave a riddle whose answer was "a date". Looking back, even though she was on my robotics team, it was the nerdiest thing.

We dated for 8 months and broke up as friends. But to this day it provides a great story as I pursue software development.

-

Overheard some guy talking about robotics on the phone, turns out it was all about MS excel macros.

people need to stop abusing terms like big data, AI etc. to make them sound 'smart' 🙄

-

That time I joined the robotics club and made the PID loop for the robot was one of my favorite projects. I learned ROS, and I created the entire chain of software and hardware to control six motors. That one project set up my experience for the next 4 years and led to a few jobs. I miss robotics.

-

My 8 year old brother started attending a robotics class a few days ago. He already loves it. I'm a proud big sister 😊

(And I'm already planning on how to start teaching him programming.)

-

Ever have the feeling that there is so much interesting stuff out there - Angular, React.js, TypeScript, Rust, Elm, FRP, Machine Learning, Neural Networks, Robotics, Category theory... but no way to ever figure out what they're all about? And there is too little time to even get a good grasp of any single one of them. IT seems to be like a hydra - you learn one thing and 10 new concepts pop up in the meantime.

-

So our robotics team just got some code ready and our robot's servos are reading a hardware fault. Fml

-

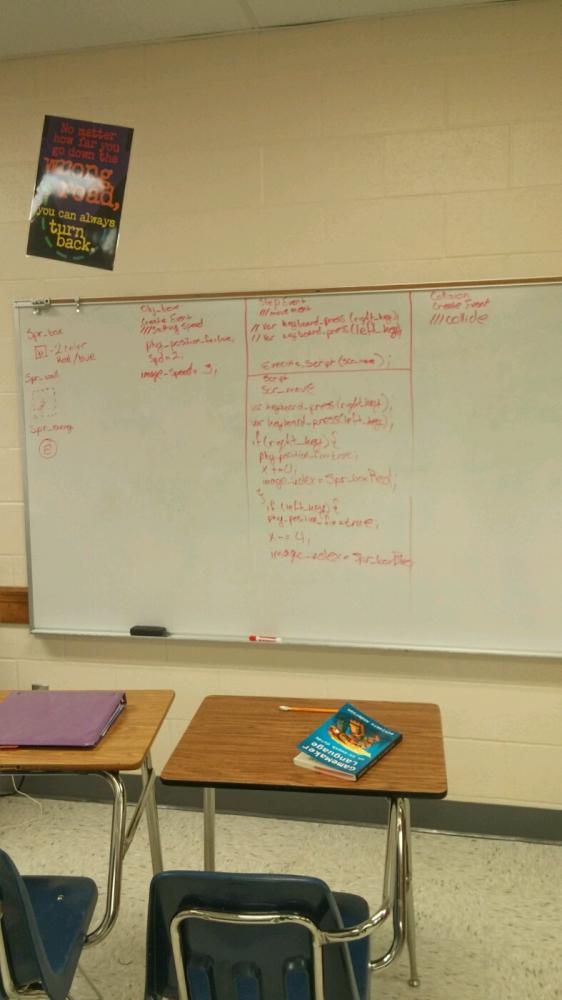

I was at the robotics group of our school when suddenly two other students (both 2 grades above me) started talking:

P1: I always forget the brackets when writing code

P2: I always forget the return statements

P3: yeah, always takes ages to find out why your code doesn't work

P4: haha, in the end it's just something missing that you didn't notice like a semicolon or a bracket

Me (thinking): do you use a fucking toaster to code? Even if you don't use an IDE, the compiler tells you what's wrong

-

Was on edge..

Had no job, no money, got kicked out by my family (what's left of it), depression kicking in, desperately trying to do anything to hold on.

I had studied automation and robotics and had other software skills, but no time to find a company to work for.

Decided to try working at Burger King. I mean, it was that or selling myself. So I got called and passed the interview. (Quick info: 60% of young people in my country can't get a job and have to lie on their CV because they have too many skills. There's still that wrong idea that studies get you a job.)

Since I have too many qualifications for the job, I had to sign a contract saying that I accept being underpaid (by law I'd be paid under the minimum wage for my skills).

This triggered an alert at the social employment center, and two days later I started working for another company as a front-end developer and IT dude.

Refused the BK job. Yup, they weren't happy about it, but I mean, who really wants to do a 1-year traineeship flipping burgers...

-

By far the worst project I've ever worked on was a webapp for a high school robotics club. The project manager had worked for a bit in industry, then switched into teaching for quite a while. Apparently the idea that technology changes and improves over time never quite got to him. According to him the industry standard for all websites was pure HTML and JavaScript. We didn't even get to use an IDE, nor even Notepad++. We used Notepad. I got out quick.

-

I just applied for one of those big big biiiiiiiig companies in robotics...

Something in my mind is telling me that I am actually losing it. Like, my mind. I must be losing my mind. 🤔

Oh well! ¯\_(ツ)_/¯

-

Resumes don't mean jack shit!!

I just got off an interview call with a candidate for a hardware role. On paper this guy is absolute gold, having worked for some of the best robotics companies and research groups (in India at least). It took me an hour to realize that he was just spitting out buzzwords. So I started asking him some very fundamental questions, like Ohm's law and such.. high school stuff. But, phrased in real world terms. And it took me another half an hour to realize that the guy is dumber than a sack of peanuts!

I can't believe how easy it is for people to coast by on paths paved by seniors and teammates. By any objective assessment this guy would be lucky to get a job as an electrician and instead I'm wasting my time interviewing him for a six figure salary (well, the Indian equivalent). Gaaah!!

-

It's March, I'm in my final year of university. The physics/robotics simulator I need for my major project keeps running into problems on my laptop running Ubuntu, and my supervisor suggests installing Mint as it works fine on that.

I back up what's important across a 4GB and a 16GB memory stick. All I have to do now is boot from the Mint installation disk and install from there. But no, I felt dangerous. I was about to kill anything I had, so why not `sudo rm -rf /*` ? After a couple seconds it was done. I turned it off, then back on. I wanted to move my backups to Windows which I was dual booting alongside Ubuntu.

No OS found. WHAT. Called my dad, asked if what I thought happened was true, and learnt that the root directory contains ALL files and folders, even those on other partitions. Gone was the past 2 1/2 years of uni work and notes not on the uni computers and the 100GB+ other stuff on there.
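A note on why this can reach beyond the Linux partition: `rm -rf /*` walks into every filesystem that is currently mounted somewhere under `/`, which can include a mounted Windows partition or the EFI boot partition. The rant doesn't say which of those was mounted, so the sample data below is made up. This is only a rough Python sketch that lists disk-backed mounts from text in the `/proc/mounts` format; the function names `parse_mounts` and `real_disk_mounts` are hypothetical helpers, not part of any tool.

```python
def parse_mounts(mounts_text):
    """Return (device, mount_point, fs_type) for each line of /proc/mounts-style text."""
    entries = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        entries.append((fields[0], fields[1], fields[2]))
    return entries


def real_disk_mounts(mounts_text):
    """Keep only mounts whose device is a path under /dev/ (i.e. backed by a disk)."""
    return [e for e in parse_mounts(mounts_text) if e[0].startswith("/dev/")]


# Hypothetical sample in /proc/mounts format. On a real Linux system you
# could pass open("/proc/mounts").read() instead.
SAMPLE = """\
/dev/sda5 / ext4 rw,relatime 0 0
/dev/sda1 /boot/efi vfat rw,relatime 0 0
/dev/sda3 /media/user/Windows ntfs3 rw 0 0
proc /proc proc rw 0 0
"""

# Everything printed here would be inside the blast radius of `rm -rf /*`.
for device, mount_point, fs_type in real_disk_mounts(SAMPLE):
    print(f"{mount_point} -> {device} ({fs_type})")
```

Checking a list like this (and unmounting anything you want to keep) before a destructive command is cheap insurance.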

At least my current stuff was backed up.

TL;DR : sudo rm -rf /* because I'm installing another Linux distro. Destroys Windows too and 2 1/2 years of uni work.

-

The robotics professor has offered me:

1- joining uni's robotics team

2- joining the startup team

I then asked if I can take both of them and he said this is not recommended.

So what do you think?

Which of the 3 items (3- none) fits best?

-

We won a robotics competition and we went to the next competition in the Netherlands. I saw this while traveling and had to take a picture

-

Can I just say that the absolute most important skill for any kind of programmer or engineer is knowing HOW TO FUCKING GOOGLE!!!

<Background>

I am the head of programming on my school's Robotics Team. We're relatively new; however, almost all of my teammates know how to program and they are all very talented academically. In fact my "Lieutenant" will be the valedictorian.

</Background>

Seriously I missed one meeting yesterday because of the flu. Imagine me lying in bed and suddenly getting multiple calls from the team (even the valedictorian) asking how to fix errors from Android Studio. I asked them if they googled them and they said "No we didn't".

Why is knowing how to google not a part of any kind of CS education! They could have been done after an hour, but NOOO, it took them 5 hours!!

Oh my FUCKING GOD!!

-

//An okay long rant..

So I work at this small robotics start-up company in Copenhagen.

The first dumb part is that it only uses interns as staff, because then they don't need to pay people. (I am working part time, for free. Just to get experience (I am only 20 btw))

So.. I often get into an argument with my boss, since she is a designer with a "passion" for robotics (she has no clue how to do anything related to the work). I often try to explain to her some current limitations of the staff, and what is possible for us to do, but she will never listen. She really wants us to design our own microcontroller board PCB, and she wants it at the size of a coin. However, when I tell her that none of the unpaid workers has the experience or education to design such a thing, she never wants to acknowledge it, and it really pisses me off.

And her dad, who is the top boss, only cares about aesthetics when he is setting up a work environment, which is dumb when we just need to develop stuff...

Sorry if the rant was too long but had to get it out..

-

1 friend who is currently studying in Canada.

I am not aware if he uses devrant or not.

Met him in a national robotic competition 3 years back.

Somehow, we exchanged numbers and nowadays we talk often whenever we get time (considering our busy life and timezone difference)

He is studying robotics and frequently sends me his designs and output with 3D printer.

About me, I left robotics(to be specific embedded) and got a job and working on backend these days.

Though, it's great talking to him and getting to know how the education goes there and his new works.

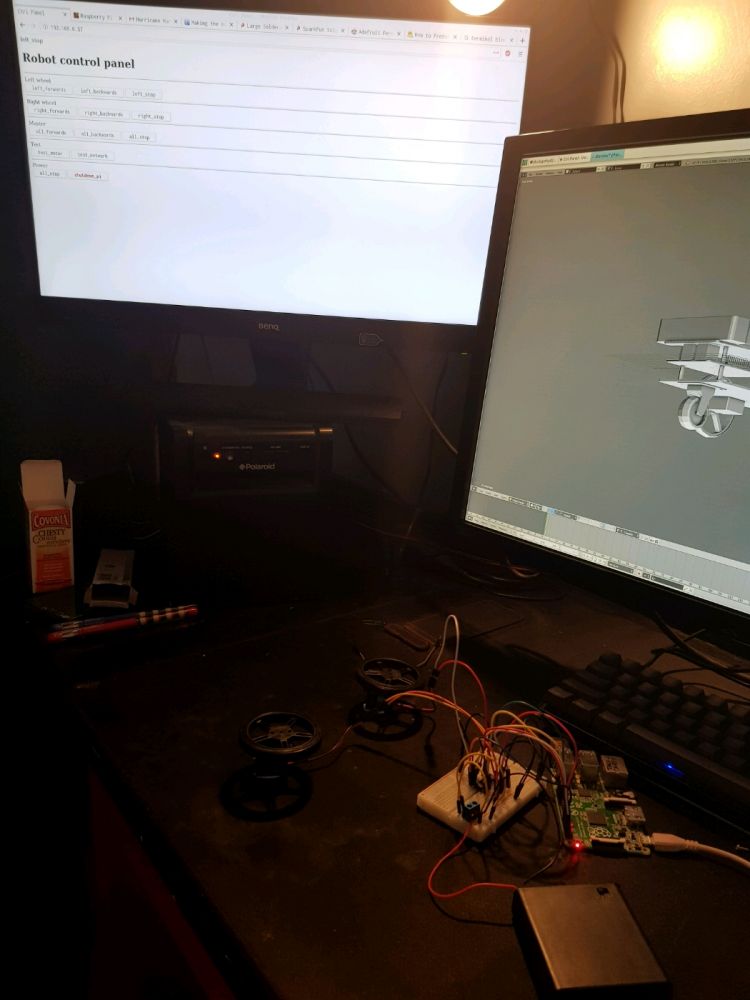

He also has a beautiful love story (not yet completed), which is another fun thing to hear about from him.

-

Progress has been made

Full control from a webserver!

Very precarious though - motors are held in place by blue tack, but occasionally they break free and hit all the circuitry out of the way

Any thoughts on a better way of controlling it? (In terms of UI/UX)

-

Yay! I just got a FB friend request from a really beautiful woman! We have no friends in common, and when I looked at her profile, she didn't have any friends, and she likes robotics. I can be her first friend!

Or not.

-

Today I finished my robotics project. I had in my team a total idiot (the one who used the hidden divs, some might remember from another rant). I wanted to share with you the beginning of a ranting adventure.

Me: "you can begin with a simple task. I will send you the obstacle avoidance sensors values from Arduino, and you will send the data for the Arduino motors to dodge the obstacle".

The sensors give 1 if clear, 0 if obstacle is detected.

Below is his code (which I brutally rewrote in front of him).

Now, in the final version of the robot we have something like 9 sensors of the same type to work with.

Imagine what would have happened if we kept him coding. (Guess it: 2^9 statements! :D)
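For anyone wondering how to avoid one branch per sensor combination, here is a rough Python sketch of the idea, not the code from the project. It assumes the sensors are ordered left to right and that the motor command is a simple (left, right) speed pair; `steering_command`, `base_speed` and the tie-break rule are all made up for illustration. Each blocked sensor (reading 0) pushes the robot away from its side, so the same few lines handle 2 sensors or 9.

```python
def steering_command(readings, base_speed=100):
    """Map readings (1 = clear, 0 = obstacle), ordered left to right,
    to a (left_motor, right_motor) speed pair."""
    n = len(readings)
    blocked = [i for i, r in enumerate(readings) if r == 0]
    if len(blocked) == n:
        return (-base_speed, -base_speed)  # everything blocked: back up

    center = (n - 1) / 2
    # Sum of offsets of blocked sensors: negative means the obstacles are
    # mostly on the left, positive means mostly on the right.
    push = sum(i - center for i in blocked)
    # Largest possible push (every sensor right of center blocked).
    max_push = sum(i - center for i in range(n) if i > center) or 1
    turn = push / max_push  # always within [-1, 1]

    # Assumed tie-break: an obstacle dead ahead gives push == 0,
    # so pick a side and pivot left.
    if push == 0 and any(abs(i - center) < 1 for i in blocked):
        turn = 1.0

    # Obstacle on the right (turn > 0): slow the left wheel to turn left.
    # Obstacle on the left (turn < 0): slow the right wheel to turn right.
    left = round(base_speed * (1 - max(turn, 0.0)))
    right = round(base_speed * (1 + min(turn, 0.0)))
    return (left, right)


print(steering_command([1, 1, 1, 1, 1, 1, 1, 1, 0]))
```

The point is only that the logic scales with the number of sensors in a loop instead of doubling with each one.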

I was not that evil, I tried to give him some chances to prove himself willing to improve. None of them were used rightfully.

I'm so fucking glad we finished. I'm not gonna see him anymore, even if I'd like to be a technical interviewer for hiring just to demolish him.

I'm not always that evil, I promise (?)

Ps. He didn't even have any idea what JSON is, even though we had already seen it during FIVE YEARS of computer engineering. (And should've known anyway if he had a bit of curiosity for the stuff he "studies")

-

I created a curriculum to homeschool myself all the way up to an MSc in AI/ML/Data Engineering for applications in Health, Automobiles, Robotics and Business Intelligence. If you are interested in joining me on this 1.5-year trip, let me know so I can invite you to the Slack channel. University education is expensive.. can't afford that now. So this would help, but no certificate included.

-

that feeling when your new toys from aliexpress get delivered earlier than expected... i feel so happy unpacking those sensors, capacitors, heat sinks, microchips, breadboards and all. i feel like i have a geeky shopping addiction, i probably won't have the time to play with them from all the work and other personal projects, but still i hoarded enough electronics to invade the world with a drone army in case i have a few weeks me-time.

-

We had robotics, or rather an electronics workshop today. Just imagine throwing a bunch of nerds into a room with 3d printers, lots of electronic parts and other tools.

Anyway one of my friends said that his computer wasn't working.

Me: It's running windows so it's broken by default.

Him: come on, Windows isn't that bad

Me: it is

Our teacher walking by: I'd never want to use windows, it's basically malware

I just sat there smiling 😊

-

This is how my Ubuntu desktop looks; the wallpaper is of the project we (team Snappy Robotics) did.

-

My dad is in IT, and when I was younger he realised I had the same logical/analytical skill set as him so had me enrolled in a Lego robotics course and I loved it so I taught myself to code from online tutorials and books!

-

*The Fearless Leader*

I get a call to check up on a robot that has been exceeding weight limits at certain points of its movement (Crashing). As I get to the pendant (robo-game controller thingy I like to call it) and look over the alerts and warnings I notice some oil around the main power box of the Robot.... Nothing around this has oil.. so I start looking around and it turns out that the issue wasn’t a crash at all! It was an oily shorted out wire that kept sparking mad heavy when that servo was called on.. causing a large servo failure that required a full restart of the power box. I called our fearless leader and showed him only to find out that there was a motor leaking oil from the electrical end... My fearless leader runs both the Maintenance and Robotics department. When the motor was eventually fixed we overheard the technicians say that our fearless leader knew about this a week ago and decided to leave it that way.... with oil... coming out of an electrical cable..... *sigh* well Anyway after all the wires were fixed and motors changed. He comes up to me and says that he can’t believe that I didn’t call maintenance and fill a report on negligence of technicians for failing preventative maintenance....

I lost my cool a little, firstly that’s not my job, I’m literally one of the lowest ranking here. I called my next in command to figure out what I should do. Secondly the technicians told me that you told them to leave it like that! So if this place caught on fire this would have been on you!

Later I found out that he was trying to fire a technician and wanted me to do the dirty work.. I’m not going to be the reason another man loses the means for him to feed his family. The technician is a pretty cool and fair guy too!

Our fearless leader was a forklift driver and has no experience in robotics or maintenance... I don’t know how this happens or even why but all I know is this man is running both departments into the ground and management loves him.....

-

I wrote some code using microcontrollers to control peripherals and robotic extremities using an armband called the MYO. It was a fun project I did 2 years ago in high school, I even won a couple of international awards for it!

-

Checked out my college’s robotics team today. I was the only girl in the room, and I feel like I won’t make any close friends like I did in high school. I want my awesome high school robotics team back... 😢

-

What you are expected to learn in 3 years:

power electronics,

analogue signal,

digital signal processing,

VHDL development,

VLSI development,

antenna design,

optical communication,

networking,

digital storage,

electromagnetic,

ARM ISA,

x86 ISA,

signal and control system,

robotics,

computer vision,

NLP, data algorithm,

Java, C++, Python,

javascript frameworks,

ASP.NET web development,

cloud computing,

computer security ,

Information coding,

ethical hacking,

statistics,

machine learning,

data mining,

data analysis,

cloud computing,

Matlab,

Android app development,

iOS app development,

Computer architecture,

Computer network,

discrete structure,

3D game development,

operating system,

introduction to DevOps,

how-to -fix- computer,

system administration,

Project of being entrepreneur,

and 24 random unrelated subjects of your choices

This is a major called "computer engineering"

-

Ever watched the Terminator films and wondered why the machines even have a UI with English words when they seek targets and we see that through their eyes?

It's not that some human uses it and needs UX.

I hate it when films do that.

-

------------Weeklyrant-------------------------------------

So I bought a smart watch to go with my Samsung Galaxy back when I was 12, and upon inspecting the watchface maker app I came across Lua scripting files. This amazed me. Animations, complex math, hexadecimal color system, variables, sensors...

I spent about four months learning/experimenting with Lua until I discovered Arduino and C++.

I am now 14 and have been fascinated with robotics and learned Java and DOS since.

-

A bit different than wk93, but still connected and a fun story.

Back in high school, when everything began to be digitalized, our teachers' journey with technology began too. We, as the IT class, were into these things, but as far as I can say, others in the school, both teachers and students, were like cavemen when it came to IT.

Most of them kept the passwords of the different wifi networks on the Windows desktop, in a file 'wifipassword.txt'. When we were at the robotics seminar, we had to use a teacher's laptop. The wifi network was incredibly fast and powerful, yet so poorly configured that even the configuration page user/pass was the default admin/admin, because the IT admin wasn't the most skilled one.

We got the idea to sell the password of the wifi network to other students. Not for much, about 1 dollar a week. The customer came to us, we took the phone, took note of the MAC address, entered the password, and if the guy stopped paying every week, we just blacklisted that MAC at the next robotics course.

It went well for months, until a new sysadmin came and immediately found it out. We were almost kicked out of the school, but my principal realized how awesome this idea was. You may say that we were assholes, and partially that is true; I'd rather say we made use of our knowledge.

-

Well it’s Official, The Bot has been bagged, It’s the end of the Build season. I hoped to get a picture of it not being in the bag, but too late 😑

-

Well, it was a great experience. Good bye buddy :(

Servers are still working but nobody knows how much time we have.

https://vox.com/2019/4/...

-

Fucking Windows automatic reboots! They seriously need to fuck off with that. As of this morning I have a finance person who can't log on, a floor manager that can't schedule his employees and a robotics controller PC that rebooted and didn't save the changes I made! Seriously, FUCK OFF!

-

Blue Robotics

This company makes underwater thrusters for submarine applications. With their first thruster they made it easy to make a homemade submarine. The motor was powerful, the thruster just worked. They even had a promotion where they created an automated surfboard that made it from Hawaii to somewhere in California with one of their thrusters pushing it the entire way. It was a great product.

Then they created the next version. This was the same thruster, but it had an ESC (Electronic Speed Controller) sealed in an aluminum puck on top of the motor. This ESC could be controlled by servo controls, or by plugging it into an I2C bus. You could pull different stats off of the motor over I2C. It sounded great. So my robotics team trusted this company and bought 8 motors at $220 - $250 each. We lightly tested them since we had not even finished the robot yet. One week before the competition our robot got completely put together and we did our first few tests.

Long story short, we and 22 other teams did roughly the same thing. We bought these motors expecting them to work, but instead the potted aluminum ESCs were found defective. Water somehow got into the completely resin-sealed aluminum puck and destroyed the ESC. We didn't qualify that year due to trusting a competition sponsor to deliver a good product. I will admit that it was our fault for not testing them before going to the competition. Lessons were learned and an inherent distrust of every product I come across was developed.

-

I haven't created anything.

I follow(ed) many courses about programming (Codecademy, Khan Academy, Udacity, Coursera...), but haven't created anything really personal (excluding robotics) and I feel sad.

I have lots of ideas but many of them require me to learn something new (iOS dev, Flutter, ElectronJS etc.), and I'm scared of falling into the loop of just following courses and then never finishing projects.

-

We are trying to run a RoboCup (robot football competition) among university students, and this is my team.

-

Software engineering is slowly being lowered to a basic skill to please corporations that literally want you to automate your job away. The only fruitful areas of software engineering that I can see being relevant in the next 10 years are those mixed with other hard sciences such as bioinformatics, robotics, bleeding edge statistics and mathematics (AI research), physics, etc. The trend I see right now is that software engineering is being integrated with business-oriented degrees or arts degrees, targeted programs towards beginners offered for free or low prices. There's going to be a higher barrier of entry for the jobs that are actually worth the stress and I'm praying I'll be able to catch the train before it leaves the station.

-

While reading Rebecca & Brian's book on object-oriented software, I realised that the programmer is a special kind of person. The complexity he can handle, the struggle to implement a system from input to output: satellite control, AI, robotics, heck, even the planning required for a simple Android app. The complexity is overwhelming at first, then you get your jotter and break it down into parts, and you drive yourself to the edge of sanity figuring out an algorithm, then you go over that edge implementing it. But oh, that great superhero feeling when you finally get something to work exactly as specified! I'm not sure people in other professions can understand the satisfaction. I'm very young in the whole programmer world, but I'm growing fast. I'm just really grateful programming found me. I mean, can you think of something else you'd rather do? Yeah, me neither.

-

Had a stupid argument with a “last year robotics engineer” student that shits on python just because he doesn’t like the syntax and compares the runtime speed with C++

like what the fuck dude, how is that a valid argument

-

My Final Year Project used robotics, speech recognition, body mapping and it was possibly the coolest thing I've ever done. I did it to be balls out ambitious as I wanted an impressive project to help me get a job...

-

So hype for our first First Robotics competition in Barrie Canada with @ewpratten and @hyperlisk. It's going to be awesome!4

-

I bought my first digital assistant, an Echo Dot 3, two days ago. Today I asked her the laws of robotics.

She started naming them in numerical order, starting at 0 😍6 -

For robotics I decided to write a program that would automatically tune a PID loop (a loop with three magic constants that tells you how much power to give the motors).

Being a high school student who hasn't been taught anything about the theory of PID loops or the right way to tune them, I had no clue what I was doing. So instead of actually learning the calculus to do it, I just made an evolutionary tuner that keeps guessing slight variations of the last-best three constants.
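For anyone curious what that kind of tuner looks like, here is a minimal sketch, not the code from this rant. It assumes a made-up first-order motor model in place of the real robot, and the names `simulate` and `evolve`, the starting constants and the mutation size are all hypothetical. It keeps the best three constants found so far, tries a slightly mutated copy, and keeps the copy only if the total error goes down.

```python
import random


def simulate(kp, ki, kd, target=1.0, steps=200, dt=0.02):
    """Run a PID loop against a toy motor model and return the summed absolute error."""
    speed = 0.0
    integral = 0.0
    prev_error = target - speed
    cost = 0.0
    for _ in range(steps):
        error = target - speed
        integral += error * dt
        derivative = (error - prev_error) / dt
        power = kp * error + ki * integral + kd * derivative
        power = max(-1.0, min(1.0, power))  # clamp to the motor's power range
        # Hypothetical plant: speed relaxes toward 2 * power with a 0.5 s time constant.
        speed += dt * (2.0 * power - speed) / 0.5
        prev_error = error
        cost += abs(error) * dt
    return cost


def evolve(start=(0.1, 0.0, 0.0), generations=300, sigma=0.1, seed=0):
    """Keep guessing slight variations of the best-so-far constants."""
    rng = random.Random(seed)
    best = start
    best_cost = simulate(*best)
    for _ in range(generations):
        # Mutate each constant a little; negative gains are not allowed.
        candidate = tuple(max(0.0, g + rng.gauss(0.0, sigma)) for g in best)
        cost = simulate(*candidate)
        if cost < best_cost:  # only ever accept an improvement
            best, best_cost = candidate, cost
    return best, best_cost


if __name__ == "__main__":
    constants, cost = evolve()
    print("kp, ki, kd =", constants, "cost =", cost)
```

On a real robot, `simulate` would be replaced by an actual test run, which is why each generation takes a while.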

Basically, you press start and the robot spins in circles until you come back in 15 minutes.3 -

When you are waiting in the cold for first robotics with a bunch of devrant users and one of them complains about being cold.

6

6 -

At the university we made a robot using Lego, which communicated over Bluetooth with a tablet. We wrote a smile detector for the tablet using OpenCV. The tablet showed the view from its front camera, and when you smiled into the camera, the tablet notified the Lego robot, which gave you a piece of chewing gum. The purpose of this project was to demonstrate at a fair what our robotics group did. People really liked it. 🙂2

-

I started writing code at a young age: modding games, building websites, modifying hex files, hacking, etc... I started my career off tho in high school, writing embedded code for a local medical robotics company, and also got tasked with building the mobile app to control these robots and use them for diagnostics, etc.... This was before the app bubble, before there were app degrees and that bullshit.. Anyway, graduated high school, went to college to get a comp sci degree.

Wanted to teach for the university and research AI...

well I dropped out of college after 3 years, cuz I spent more time at work than in class. (I was a software consultant) in the auto industry in Detroit. I wasn’t learning anything I didn’t already know or could learn from books or a quick google search.

I also didn’t like the approach professors and the department taught software... way none of the kids had a good foundation of what the fuck they were doing... and everyone relied on the god damn IDEs... so I said fuck it and dropped out after getting in plenty of arguments with the professors and department leads.

I probably should have chosen CE.. but whatever. CS imo still needs a solid CE/EE foundation. Without it, I fear what will become of the electronics industry 30 years from now... when all the current-gen folks are retired and there's nobody to write the embedded code that literally ALLLLL consumer electronics run on. Newer generations don’t understand pointers, proper memory management etc.

So I combined both passions, AI and my knowledge of software in general and embedded software, and have been working on my career in the auto industry without a degree. Never looked back.2 -

No. No. And Absolutely No.

The Three Laws of Robotics MUST not be broken.

https://cnn.com/2022/11/...13 -

I gotta say I never understood owning a Roomba until my wife got me an off-brand Deebot one for my birthday. I named it “The Kraken”, as in “release the...” because it sees nothing and devours all. My kids can now rest easy because they won’t hear me complain about how the floor is always dirty and how nobody wants to vacuum but me. Now I just fire up the app, hit “Auto”, and The Kraken cleans my house. It even mops! I feel bad for the doggo, though.6

-

Doing research at my school over summer. Talk to some other researchers from other departments (chem, English, bio, etc). Tell them all about the cool work I do in robotics (I program them, not build them). One by one they proceed to ask me to make them a website/app since I know how to code. *Facepalm*3

-

I do some robotics stuff on the side. We built our robot around a Raspberry Pi last year. We had 2 Python scripts talking over TCP, one for the bot, one for the controller. Overflowed the buffer on the bot and it went berserk once the script crashed.
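The post doesn't say how the buffer overflowed, so the following is only a sketch of one common guard, not what this team ran. It assumes length-prefixed messages with a size cap, plus a receive timeout so the bot can stop its motors when the controller goes quiet. The names `send_command`, `recv_command` and `MAX_MESSAGE_BYTES` are made up for illustration.

```python
import socket
import struct

MAX_MESSAGE_BYTES = 1024  # hypothetical upper bound for a single command


def send_command(sock, payload: bytes) -> None:
    """Send one command as a 4-byte big-endian length followed by the payload."""
    if len(payload) > MAX_MESSAGE_BYTES:
        raise ValueError("command too large")
    sock.sendall(struct.pack("!I", len(payload)) + payload)


def _recv_exactly(sock, count: int) -> bytes:
    """Read exactly `count` bytes, or raise if the peer closes the connection."""
    chunks = []
    remaining = count
    while remaining:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("controller disconnected")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_command(sock, timeout_s: float = 0.5):
    """Return the next command, or None when the bot should fail safe."""
    sock.settimeout(timeout_s)
    try:
        (length,) = struct.unpack("!I", _recv_exactly(sock, 4))
        if length > MAX_MESSAGE_BYTES:
            return None  # refuse oversized messages instead of buffering them
        return _recv_exactly(sock, length)
    except OSError:  # covers timeouts and dropped connections
        return None
```

On the bot side, the main loop would treat `None` as "stop the motors" rather than carrying on with the last command it received.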

-

Once mentored a high school student for a science fair relating to robotics. It was definitely interesting having to relearn many things so I could teach them well, but it was definitely worthwhile! I couldn't be prouder of the work my student (mentee, pupil, ??? Idk how else to say it lmao) achieved. He won several awards for the project, even some scholarships!!1

-

https://www.udacity.com/human

Udacity has launched an Understanding Humans nanodegree. Register at that link; it closes today. So please put all your current threads to sleep and start a task to understand humans.

Good Luck 😉😉3 -

New on HS Robotics team (we went to worlds last year —> we’re good). I come from France.

The lead programmer said that we are gonna stop coding the robot in Java and start C++ this year, so naturally I’m excited!

Lead programmer just told us we’re actually staying with Java... fuck.4 -

I saw a video by Bloomberg regarding Osaka University's new robot named Erica

...

looks like we're losing receptionists in hotels my friends -

Inspiring moment: when the control system I wrote for a robot stopped the thing's EDF mere inches from my nose when the bot went out of control (for other reasons) during testing. Had it not stopped I would probably be without a nose, that EDF (Electric Ducted Fan) had fairly sharp blades. Very scary, but very thrilling too.

Each time my code affects something in the real world, it feels so damn awesome. Thankfully I've not come close to losing my nose (or other body parts) after that incident, but that incident inspired me to continue work on failure-proof control systems that enforce safety.2 -

That surrealist moment when Firefox told me it stopped the international journal of robotics research from tracking my social media...

Dafuq?6 -

Restored from my backup. My home town 2004 setup, floppy disk drives, sound blaster audio card, dial up us robotics modem, nokia 3310 on the chair, lg hifi with cassette tape and cd, unitra amplifier, equalizer and sound columns. Panorama made using olympus c-720 uz.

Funny times ^^

Edit:

high res image

https://vane.pl/content/images/... 4

4 -

For our robotics team we have a college professor (if that's what you even call it) who is teaching our programming subteam how to code the robot in c++. Whenever we mention git he goes on and on about how git is too confusing and we shouldn't use it even if we used pull requests.

What the actual fuck9 -

I was wondering !

As a computer geek I would like to know everything from mathematics to programming, robotics and machine learning, but as I go, new technologies appear and it's just like an endless while loop!

I don't mean I wanna stop learning new things, I'm just looking for a more efficient way of doing this!

Any idea about this?1 -

Just out of curiosity, will anyone be at VEX Worlds in Louisville, Kentucky?

For those of you who don't know, it's a robotics competition.

Currently waiting for my sponsor and rest of the team to get through TSA. (precheck is fun) 3

3 -

As a kid when I was coding I used building blocks and I used some yesterday for Lego robotics at a science center and I felt really dumb because over 2 years since I was 10 I used actual code like typing it and it feels much better than blocks and just over all building blocks just make me feel so stupid😵2

-

So, my son is in the STEM program at school. They are supposed to use engineering methodologies in their learning process, according to the school. Apparently there is a new engineering process: step 1, try to write code for the robot; step 2, build the robot; step 3, make a CAD design of how you will build the robot; step 4, write requirements for how the robot needs to function; step 5, robot doesn't work right; and step 6, lose the robotics competition.

The other thing that is irritating me is they don't require kids to meet deadlines, just whenever you get it done is fine or if you need 10 tries to get it right. This is the second time the whole class has been disqualified from a competition because the teachers can't keep them on task.

I'm starting to really think public schools suck.4 -

!dev

My rough assumptions on wtf is going on with covid changing our lives - maybe leading to some business ideas.

In theory we are indoctrinated from little child that to do something we need to go to special place to do things in community.

Name it :

- school,

- university,

- job,

- college

As a result we build world around communities:

- public transportation

- sidewalks

- 4 seated cars

- parks

- sports

- shopping malls

Now due to pandemic we’re unable to do so and from some time we start indoctrinating people to do lots of things remotely and stay at home:

- remote job

...

- shopping

etc.

Depending on how strong is our character we react to this inception differently but future generations won’t have this indoctrination of commutation deep in their minds.

Interesting 🤔

My first assumption is that robotics market will start growing exponentially.15 -

While in a mechanical engineering university program I was on a RoboCup team (small robots playing soccer against another team of robots using AI).

I designed the mechanical structure of the robots. After 2 years of development (while all those years our goal was to participate in the upcoming international tournament) we realized the software part of the project was mismanaged and really far behind. I couldn't accept that and learned how to code overnight. Couldn't let the project I put so much time in die because of someone else.

With the help of others who came from backgrounds other than software, we made it to the tournament and to the two that followed.

Now my job involves programming more than standard mec engineering. It also pushed me to do a masters in robotics in which I developed my coding skills even more.3 -

I'm part of a robotics team in my highschool. We work on autonomous robots, which are driven with microcontrollers like the Arduino and "bare" Atmel chips.

Last year we were using a protocol called CAN. CAN is a bus which runs at 1Mbps and it is quite easy to connect devices together. (It's a bus ofc it is). BUT... it needs a terminator at the end, mostly 120 ohm.
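Side note for anyone checking a bus like this: high-speed CAN is normally terminated with 120 ohm at each end, so with power off, a meter across CAN_H and CAN_L should read about 60 ohm. A quick sketch of the arithmetic (the function name is just illustrative):

```python
def parallel_resistance(*resistors_ohm):
    """Combined resistance of resistors wired in parallel."""
    return 1.0 / sum(1.0 / r for r in resistors_ohm)


both_ends = parallel_resistance(120.0, 120.0)  # both terminators fitted
one_end = parallel_resistance(120.0)           # one terminator missing
print(f"both terminators: {both_ends:.0f} ohm, one terminator: {one_end:.0f} ohm")
```

A reading near 120 ohm would suggest only one terminator is actually on the bus.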

Every year we are on a deadline and something broke on our current board and we needed to solder up a new one, but we couldn't find the 120 ohm terminator... ANYWHERE!

In the end, after searching for it in the workshop for 4h straight (12am-4am), we finally found it and soldered up the new board, and guess what... it wasn't what we thought; the code was the problem.

After realizing the problem my teammate and I, in silence just stood up, packed our things and went home. Argh!2 -

I was 17 and the class was tasked with programming a calculator in machine code! I was hooked went on to learn Java first, then C and C++. Now finished Uni having studied AI and Robotics and in my first job! I call myself a developer but I know there is still so much to learn in our ever changing industry!

-

Best co-worker is my elder brother; he is an electronics & communication engineer. Working on robotics projects with him is so much fun. He handles the hardware, i.e. electronic circuit design on PCBs, whereas the programming work is left to me.1

-

Guys. I started with JS, now primarily code in Python, and learning Java for robotics. Coding on and off for the past 4 years. I understand most things, I can tell what code does, but I think I’m a shit programmer. I also find myself running out of ideas for simple things. I’m sad because of this cause I get most programming jokes, and live in this community.

The reason why I’m saying this is because of someone in robotics (keep in mind that it’s my first year in robotics, first time coding in Java) said (jokingly) that he thought I “was a good programmer”. Probs overthinking this, but still tears me up, realizing he’s probably right.4 -

After a year in cloud I decided to start a master's degree in AI and Robotics. Happy as fuck.

Yet I got really disappointed by ML and NNs. It's like I got told the magician's trick and now the magic is ruined.

Still interesting though.7 -

because when you are ceo and founder of something at a young age, you need to emphasize that as much as you can

that roughly translates as: "21 years old, international robotics champion, co-founder and CEO @ {put your company name here}" 24

24 -

My FIRST robotics team 5024 is ordering sweaters and I can put anything I want on the back as my code name sortof thing. What is witty that I can put on it?2

-

aagh fuck college subjects. over my last 4 years and 7 sems in college, i must have said this many times : fuck college subjects. But Later i realize that if not anything, they are useful in government/private exams and interviews.

But Human computer Interaction? WHAT THE FUCK IS WRONG WITH THIS SUBJECT???

This has a human in it, a comp in it, and interaction in it: sounds like a cool subject to gain some robotics/ai designing info. But its syllabus, and the info available on the net , is worse than that weird alienoid hentai porn you watched one night( I know you did).

Like, here is a para from the research paper am reading, try to figure out even if its english is correct or not:

============================

Looking back over the history of HCI publications, we can see how our community has broadened intellectually from its original roots in engineering research and, later, cognitive science. The official title of

the central conference in HCI is “Conference on Human Factors in Computing Systems” even though we usually call it “CHI”. Human factors for interaction originated in the desire to evaluate whether pilots

could make error-free use of the increasingly complex control systems of their planes under normal conditions and under conditions of stress. It was, in origin, a-theoretic and entirely pragmatic. The conference and field still reflects these roots not only in its name but also in the occasional use of simple performance metrics.

However, as Grudin (2005) documents, CHI is more dominated by a second wave brought by the cognitive revolution. HCI adopted its own amalgam of cognitive science ideas centrally captured in Card, Moran & Newell (1983), oriented around the idea that human information processing is deeply analogous to computational signal processing, and that the primary computer-human interaction task is enabling communication between the machine and the person. This cognitive-revolution-influenced approach to humans and technology is what we usually think of when we refer to the HCI field, and particularly that represented at the CHI conference. As we will argue below, this central idea has deeply informed the ways our field conceives of design and evaluation.

The value of the space opened up by these two paradigms is undeniable. Yet one consequence of the dominance of these two paradigms is the difficulty of addressing the phenomena that these paradigms mark as marginal.

=============================7 -

My first time doing a pair-programming for uni assignment.

My partner is actually smart (a Mechanical Engineering guy), except when it comes to programming :

1. Don't know how to spell FALSE

2. Don't know how to create array in Matlab

3. Poor variable naming

4. Redundant code everywhere

5. Not using tabs

6. Stealing my idea and spit it again in my face after claiming it as his idea

7. Mansplaining every line of his code like I am a stupid person who never sees a computer before.

He said he has an experience in Matlab, wants to specialize in Robotics and taking several ML classes. What did they teach anyway in class to produce a shitty programmer like him?

Thankfully, despite being an arrogant shitty guy, he still managed to get our code to work.

That's good because if not, then I will happily push his head under water while slowly watching him drown.

🤨5 -

So I've mentioned before that I'm pretty much the sole programmer for my robotics team. I'm on vacation for a week, and the other programmer has to take over.

Is it normal that, since I made the code work and wrote it almost all myself, I am fearful that she's gonna fuck it all up? I kind of want her to work on it slowly so I don't worry too much...3 -

I am a web dev but recently I have had a growing interest in robotics and computer engineering. So I bought a Raspberry Pi 3, installed Raspbian and then Kodi (for testing purposes) on it. Kodi was a bit laggy, and I don't know what to do with it now. Will try to use it as a home server, just like a DigitalOcean droplet. Better suggestions?3

-

So I live in México, and a big university is giving this 8-day course on machine learning and automated robotics and I was accepted!! I'm super pumped, because I really want to work in the industry and love taking any possible opportunity to learn something new.

This is also a perfect excuse to travel to Guadalajara and get all of my questions about the university answered

There it is, I just wanted to be excited somewhere else xd6 -

I'm so happy.

If nothing goes wrong I'm starting a training course on electronics, robotics and hardware at the end of the month...

Oh yeah, exactly what I needed right now. -

Learning programming, networking, robotics, and other technical skills are very important but do not forget that these are future working software developers.

They will need to know a lot more intangibles. Like effective pair programming, performing proper git pull requests and code reviews, estimating work, and general problem-solving skills and more.

These people will be learning technical skills for the rest of their life (if they are smart about it) but what can really get them ahead is the ability to have good foundational skills and then build the technical skills around them over time. -

So c++ isn't really ideal for robotics? I could just not understand c++ correctly. I think it's just my terrible understanding of why a compiler is needed. I am an intermediate Python Dev, so I guess I'd like to download the "language" and go, ya know?3

-

The fact that I need to make this shit multimodal is gonna be a whole different level of shitshow. 🤦🤦🤦🤦🤦

Somebody kill me plz.

Today I tried to concatenate a LSTM unit with a FC and was wondering why it was throwing weird shapes at me. 🤦

Yes, I was THE idiot.

Kill me.16 -

Ok so I am in this robotics course, we meet every 5 weeks from 8 to 15:30 to work on our projects. It's really nice because I can basically do what I want all day and don't have school! Fuck yeah... The only downside is that there are a lot of wannabe scientists who think they know everything about physics and computer science.

However the actual reason I am writing this is to ask you for project ideas. I would like to do something with a Raspberry Pi but I can't come up with any ideas that I would like to do. Also, making it should cost me less than 100€

(I already have a pi so I wouldn't have to pay for that).

So, any ideas? :)1 -

TLDR; sometimes I want to murder my friends.

Pratten: Hey Ethan can you image the robotics programming laptops?

Me: Yeah sure no problem. Let me just make a custom windows iso with all the software we need so I don't have to deal with installers after the fact.

Pratten: Ok great!

Me: *makes custom ISO compiles it and puts it on usbs*

Pratten: hey could you also add drivers station?

Me: uggggg... *Recreates iso and preps bootable flash drives*

Me: IS THERE ANYTHING ELSE YOU NEED?

Pratten: nope that should do it ;;;)

Me: ok great. *flashes laptops and runs install. (they're old so it takes a while)

Pratten: ok good job thanks. Did you install *NOT PREVIOUSLY MENTIONED TOOL SUITE 1* or *NOT PREVIOUSLY MENTIONED NEWER TOOLCHAIN THAT ONLY HE KNOWS HOW TO GET* ? If not I'll have you install those later.

Me: *suicides*4 -

Favorite affordable (<$200) beginner robotics kits?

Played around with Arduino a bit in the past, and Mindstorm, and a little vex.7 -

High school robotics team. Total of three programmers and one coach who understands programming concepts, but not syntax or anything. One programmer, putting it bluntly, is incompetent and doesn't even bother to learn anything. The other one that isn't me is apparently fucking lead programmer and team leader (IM A SENIOR. SHES A FUCKING SOPHOMORE. WTF.) and she has done about 5% of the programming this year. I've done the rest with the help of a programmer from Ford whom we bring in. All she does is tell you to do shit for her, and if you don't, she pulls the authority card on you.

And I have maybe three days, after a full day of school mind you, until I need almost every part working on the robot code. Fuck me.1 -

My robotics team just got a pair of Puma 500 arms, with accompanying control computers from 1987. It looks like my week will be filled with reading manuals and figuring out how this super weird hardware all fits together!2

-

Fuckkkkk.

Do you remember the training course I was going to take? Electronics, robotics and computers... It has been delayed again because there aren't enough people....

No one wants a job in the area where the future lies... Stupid, stupid people. -

Long time reader, first time poster 🙊

2020: I'll complete semesters 2, 3 & 4 out of 6 for my part time MSc computer science while maintaining my current development job.

I want to improve my front end skills and pick up a JavaScript framework as well as getting into Raspberry pi projects to get back in touch with my robotics background prior to development.

Good luck in your own goals everyone!1 -

Saturday evening open debate thread to discuss AI.

What would you say the qualitative difference is between

1. An ML model of a full simulation of a human mind taken as a snapshot in time (supposing we could sufficiently simulate a human brain)

2. A human mind where each component (neurons, glial cells, dendrites, etc) are replaced with artificial components that exactly functionally match their organic components.

Number 1 was never strictly human.

Number 2 eventually stops being human physically.

Is number 1 a copy? Suppose the creation of number 1 required the destruction of the original (perhaps to slice up and scan in the data for simulation)? Is this functionally equivalent to number 2?

Maybe number 2 dies so slowly, with the replacement of each individual cell, that the sub networks designed to notice such a change, or feel anxiety over death, simply aren't activated.

In the same fashion is a container designed to hold a specific object, the same container, if bit by bit, the container is replaced (the brain), while the contents (the mind) remain essentially unchanged?

This topic came up while debating Google's attempt to covertly advertise its new AI. Oops I mean, the engineer who 'discovered Google's AI may be sentient. Hype!'

Its sentience, however limited by its knowledge of the world through training data, may sit somewhere at the intersection of its latent space (its model data) and any particular instantiation of the model. Meaning, hypothetically, if there's even a bit of truth to this, the model "dies" after every prompt, retaining no state in between.16 -

My mum signed me up for a robotics workshop with Lego Mindstorms... I kept going to these workshops, doing some of them multiple times. At some point we went from graphical programming to some other kids' language, then we made a RoboCup Junior team from the kids who, like me, kept showing up at these workshops, and used Arduino and C. Had a break for a year or so from coding so I could finish school, then I went to study computer science at uni. And the rest is history.

-

Honestly, I can't remember. A combo of wanting to do AI and other smart stuff got me here. But like, not even sure I'm there yet.

Always had a knack for robotics tho. That's the only thing that's natural to me.1 -

Starting a PhD at a great robotics institute or getting a job in industry? Any experiences or suggestions?10

-

I came from being a game developer, to doing VR/AR stuff, to mobile app/game development, to website development, to arduino, IoT and robotics.

... And now most of my time is spent on updating a portal site using a shitty cms with each page needing to be crafted manually using html and deadlines are always a few hours away, with revisions on the launch day itself.

I really wanna go back to the interesting stuff. :/2 -

I hate the US education system, its just designed to fuel capitalism. It keeps getting less funding so that actually passionate, intelligent people get kicked out and replaced with people who only want to be a teacher so they can have power over others.

Why do they block news websites? Why do they block github, so their own robotics team can't even access the essential building blocks for the robot? They make everything more complicated and for the reason that it might distract you. Maybe just make topics engaging and not boring asf, just cramming for the exams so the school gets more funding. Maybe prepare students for jobs, allow them to do projects, pursue classes that interest them, and have any sense of individualism.

Anyways, yeah, the school blocked github so I can't do my FBLA project, I can't access the code for programming our robot for competitions, I can't even download software required for half of these classes. I have a Linksys router, is there any way I could set it up to bypass the firewall?16 -

Do you guys think I should go for a Lego Mindstorms set as a way to start getting into robotics?

I know of a lot of people that recommend going through arduino and buying a bunch of shit and throwing it together etc. But the thing that makes me interested in Mindstorms is how everything seems to be in one place. A smart brick programmable through multiple different programming languages(for example Python, java, C) a good kit that can be really modular and built into different components, all sorts of sensors.

I just think its a good option, but if someone were to recommend a particular book or resource for Arduino or some other stuff I would definitely consider it.

So, what do you lads think?14 -

Man using Android Studio is a love and hate situation for robotics, just hoping that it will work before competition even though half of the team is riding up my ass about everything just to make the bot work😤

-

I just received two katanas as a present and I have absolutely no use for them. I was thinking I could make a cool robotics project with them, does anyone have any ideas?8

-

Oh my freaking gosh! Okay so I'm "lead tech" on the robotics team. I've come up with several ways we can improve our system. I had it all planned out and calculated, but when I run it by the teacher running the team, EVERY SINGLE FRICKING TIME they shoot it down and say "that just adds another layer of complexity" and I just want to yell, because sure it's a bit more complicated, but so the fuck what?!?!? It works (theoretically, according to the math) and more efficiently than what they're doing, which is almost unknown to me because why the fuck not?! And omg I swear my entire team has the attention span of an ant, because any time I need them to explain something, they get distracted with whatever the hell they get distracted with and they NEVER SHUT THE FUCK UP. Anyway, that's super annoying but it's not the point. Point is, the fucking teacher is afraid of making things a bit more complicated for no good reason, and every idea I have they shoot down, so (even as lead tech and main programmer) I feel extra useless. I'm not gonna be here next year, so idk what the fuck they're gonna do when I leave. (Like seriously, I'm not even being conceited, I've been programming for several years. The other programmers have no idea what they're doing.) But if they don't learn that complexity isn't bad, this team will NEVER get higher in the competition.4

-

Overall, pretty good actually compared to the alternatives, which is why there's so much competition for dev jobs.

On the nastier end of things you have the outsourcing pools, companies which regularly try to outbid each other to get a contract from an external (usually foreign) company at the lowest price possible. These folks are underpaid and overworked with absolutely terrible work culture, but there are many, many worse things they could be doing in terms of effort vs monetary return (personal experience: equally experienced animator has more work and is paid less). And forget everything about focus on quality and personal development, these companies are here to make quick money by just somehow doing what the client wants, I'm guessing quite a few of you have experienced that :p

Startups are a mixed bag, like they are pretty much everywhere in the world. You have the income tax fronts which have zero work, the slave driver bossman ones, the dumpster fires; but also really good ones with secure funding, nice management, and cool work culture (and cool work, some of my friends work at robotics startups and they do some pretty heavy shit).

Government agencies are also a mixed bag, they're secure with low-ish pay but usually don't have much or very exciting work, and the stuff they turn out is usually sub-par because of bad management and no drive from higher-ups.

Big corporates are pretty cool, they pay very well, have meaningful(?) work, and good work culture, and they're better managed in general than the other categories. A lot of people aim for these because of the pay, stability, networking, and resume building. Some people also use them as stepping stones to apply for courses abroad.

Research work is pretty disappointing overall, the projects here usually lack some combination of funding, facilities, and ambition; but occasionally you come across people doing really cool stuff so eh.

There's a fair amount of competition for all of these categories, so students spend an inordinate amount of time on stuff like competitive programming which a lot of companies use for hiring because of the volume of candidates.

All this is from my experience and my friends', YMMV.1 -

Working on cool emerging technologies such as VR, AI or robotics. Or all of them combined. International environment with developers from all over the world. I find myself working at different locations, yet I'd spend all weekends and holidays at home. 6 K €/month + all travel and lodging expenses paid. Plus a culture that encourages innovation and, of course, ranting! :D5

-

! Dev

I don't know much about the biology, but from what i know, a virus is never treatable. In due course of time we might generate a medicine that will modify our immunity system to fight against it, like polio and when this medicine is available, all the human race would get it and that's how this epidemic ends.

Until then, we all would need a total social isolation at some instance of time, as it is being done now.

But here is my main question: what to do until then? How will the economy survive? General stores, grocery markets, restaurants and fast food, clothing and many other industries predominantly involve direct interaction.

Shutting down and going online is also not the solution. Poor/small businesses can't afford it. companies like amazon , dominos, etc have huge network of delivery guys for e shopping, but won't that be soon banned too?

Looks like our technology in robotics and drone delivery is too slow to be proved effective in this situation . I am hoping the technology would be a solution to such situation.

What are your thoughts about it?4 -

- Remake all my hacky products and finally make those adjustments and improvements I always forget about. (A shitton of maintenance that I always YOLO my way through)

- Potentially finally give digital drawing and design a go as a second career (if money permits, also)

- Move to middle of Asia, dead center of Kazakhstan or wherever there are gypsy tribes, learn their language and teach their kids about computers and robots and make a lot of products that'd make a gypsy's life easier. Or rather, create a modern gypsy life that does not override their traditional ways, rather integrates with it. (This is one of my dreams, which I know will never come true. Gypsies and nomads do settle more and more each year and their culture is basically going extinct. Plus, govts around the world dislike them greatly)

- Do a lot more research projects in robotics. Literally make everyday robotic items and then sell them. (with a sprinkle of AI/ML, that is)

All the above would also need lots of money and effort tho. -

Make your code available for your team members, please.

So we're working on this robotics project using ROS, a framework that lets multiple nodes on a network exchange their functionality with each other over TCP connections. Each node can be implemented and executed on your own machine, and tested with dummy inputs, but in collaboration they make a robot do fancy stuff.

The knowledge base needs data from the image processing unit and provides this data, with semantic context, to high-level planning. Planning uses this semantic data for decision making and calls the robot manipulation node with meaningful input, to navigate the robot's components in the environment. To run each node we use a dedicated machine, which pulls the corresponding repositories and is always kept configured correctly, so that everybody has access to each other's work when needed.
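For anyone who hasn't seen this kind of setup, here is a rough sketch of the data flow described above. It is not ROS and doesn't use any ROS API: it's a tiny in-process message bus in plain JS, and the topic names (detections, facts, commands), the message fields and the "graspable" rule are all made up for illustration. The point is only that each node knows its topics, not the other nodes, and that dummy input can stand in for a missing node.

```javascript
// NOT ROS: a minimal in-process stand-in for topic-based messaging.
// All topic names and message fields below are hypothetical.
class Bus {
  constructor() {
    this.subscribers = new Map();
  }
  subscribe(topic, handler) {
    if (!this.subscribers.has(topic)) this.subscribers.set(topic, []);
    this.subscribers.get(topic).push(handler);
  }
  publish(topic, message) {
    for (const handler of this.subscribers.get(topic) || []) handler(message);
  }
}

// Knowledge base: turns raw detections into facts with semantic context.
function knowledgeBase(bus) {
  bus.subscribe("detections", (d) =>
    bus.publish("facts", { object: d.label, graspable: d.sizeCm < 10 }));
}

// High-level planning: decides what to do based on the facts.
function planner(bus) {
  bus.subscribe("facts", (f) => {
    if (f.graspable) bus.publish("commands", { action: "pick", target: f.object });
  });
}

// Manipulation: here it only records the commands it receives.
function manipulation(bus, log) {
  bus.subscribe("commands", (c) => log.push(`${c.action} ${c.target}`));
}

const bus = new Bus();
const log = [];
knowledgeBase(bus);
planner(bus);
manipulation(bus, log);

// Dummy input standing in for the image processing unit.
bus.publish("detections", { label: "cup", sizeCm: 8 });
bus.publish("detections", { label: "table", sizeCm: 120 });
console.log(log); // [ 'pick cup' ]
```

Because every node only talks to the bus, any one of them can be swapped for a dummy publisher during testing, which is what makes running everything on one shared machine practical.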

So far so good. We have been trying to convince the manipulation guy (let's call him John) to run his code on our central machine, not just for a week, but since the first day, 5 months ago. Our cluster classification was unavailable for 2 months, but my colleague fixed that. We still can't run the whole project without John's computer. If his machine blows up we're fucked.

Each milestone feels like a big-bang-test, fixing issues in interfaces last-minute. We see the whole demo just moments before our supervisors arrive at the door.

I just hope he doesn't get hit by a truck. -

My parents signed me up for a robotics class in 5th grade. I learned TC Logo for the first time and made my Lego creations move with code. It was mind blowing, so much so that I dove face first into anything I could do with PCs. That same summer, I learned how computers worked and took them apart for fun.

-

Doing a little robotics research at school and I needed to pick a faculty advisor.

I pick the prof who teaches the robotics course bc I thought it’d be a great fit

Apparently he’s an assistant prof and he is EXTREMELY unorganized (doesn’t respond to emails, occasionally skips entire meetings without telling me first, etc. )

I send him an email to discuss more about our research...

5 days later he responds and sends me a video invite

He ends up making another kid (a complete stranger to me) work with me.

“Well 2 brains are better than 1 I guess” I said in my mind

Finally meet with the kid and he knows nothing

This is why I like working alone

Every time I join a group (especially for CS stuff) I am the only person who knows what’s going on and I end up doing the whole thing by myself and 5x slower bc I have to explain every fucking thing to my group mates

I’m done w group work -

Started when I was 11 or so. An intro to robotics course at my school, we learned to program BOEBots (ya know, those little robots with wheels and a breadboard) in BASIC. Man they were fun!

-

I attend quite a few conferences throughout the year, and I'm so freaking tired of those companies with their Pepper robot that really don't have a clue what to do with it.

So I wrote a little rant about it.

https://tothepoint.group/news/...

SoftBank acquired Boston Dynamics a while ago.. you really think it's just to get you a robot at a conference with its only purpose to say hello?

Oh yeah, while I'm at it. No, RPA is not what the research area of robotics is really about, and stop calling it AI! -

Anyone else here a high school student that does programming for an FRC team? If so, what number? Also, what language?

-

Playing lego.com games on my family's PowerBook G4, if my memory serves me correctly. I started programming with a Lego robotics kit.

-

Started with flow chart programming in a robotics club after class in middle school.

Joined another club where I spent the first 3-4 weeks learning Python and JS basics on freecodecamp.

Programming classes on algorithms and frameworks in high school and college.

Beyond that, mostly reading documentation, stackoverflow and some udemy courses. -

I'd tinkered with computers for a long time but the breakthrough moment for me was a robotics class in elementary school where I programmed Legos in TC Logo.

That summer, I made a washing machine with multiple cycles and a door sensor to interrupt the cycles.

Soon after, I played with the code for Gorillas in QBasic to fix a race condition when running it on my 486 at home.

-

So at the HS I go to, there are 4~5 programmers (only 3 real "experienced" ones though including me).

So coming from JS & Python, I hate Java (especially for robotics) and prefer C++ (through some basic tutorials).

Programmer Nº2 is great at everything, loves Objective-C, Swift, Python, and to a certain extent Java.

Programmer Nº3 loves Python and used to do lots of C#, dislikes Java and appreciates Go (not much experience).

So naturally I get shit on (playfully) because of my JS background, because they don't understand many aspects of it. They hate the DOM manipulation (which I dislike too tbh), but especially OOP in JS, string/int manipulation, certain methods and HOISTING.

So, IDK if Java or C++ (super limited in them) have hoisting, but if you don't know what hoisting is, it means that a var declaration is moved to the top of its scope. So you can use a variable before the line that declares it and assigns it a value, and the code will still run; the variable is just undefined until the assignment happens.

First, the plain case: in JS you can define a variable, assign no value to it, use it in a function for instance, and only assign a value after it has already been used, like so:

var y;

function hi(x) {
    console.log(y + " " + x);
    y = "hi";
}

hi("bob");

output: undefined bob

And, as said before, you can use a variable before defining it - without causing any errors.

Since I can barely express myself, here is an example:

JS code:

function hi(x) {
    console.log(y + " " + x);
    var y = "hi";
}

hi("bob");

output: undefined bob
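For anyone who wants to see where the line is: a small sketch comparing var with let. The function names withVar and withLet are just made up for this example. With var the declaration is hoisted and the variable starts out as undefined; with let the binding exists too, but reading it before its declaration line throws.

```javascript
// Sketch: `var` hoisting vs. `let` (function names are illustrative only).
function withVar(x) {
  // `var y` is hoisted to the top of the function, so `y` already exists
  // here, but it holds `undefined` until the assignment below runs.
  const seen = y + " " + x;
  var y = "hi";
  return seen;
}

function withLet(x) {
  // `let y` is scoped to the whole function body as well, but it stays
  // uninitialized (the "temporal dead zone") until its declaration line,
  // so reading it earlier throws instead of giving `undefined`.
  try {
    return y + " " + x;
  } catch (err) {
    return err.name;
  }
  let y = "hi"; // never executed; it only creates the binding
}

console.log(withVar("bob")); // undefined bob
console.log(withLet("bob")); // ReferenceError
```

So the "no error" behaviour is specific to var (and function declarations); code written with let or const does produce the error my friends were expecting.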

So my friends are like: WTF?? Doesn't that produce an Error of some sort?

- Well no kiddo, it might not make sense to you, and you can trash talk JS and its architecture all you want, but this somehow, sometimes IS useful.

No real point/punchline to this story, but it makes me laugh (internally), and since I really want to say it and my family is shit with computers, I posted it here.

I know many of you hate JS BTW, so I'm prepared to get trashed/downvoted back to the Earth's crust like a StackOverflow question. -

I don't get how people fall for that AI robot Sophia.. anybody with the slightest idea of coding, or who keeps up with the advancements in AI applications, would know Sophia is too good to be true. How do people even fall for it...

https://theverge.com/2017/11/...

https://liljat.fi/2017/11/... -

My robotics mentor who had never said anything about computers asks some of our good programmers where he can buy 20 raspberry pi zeros.

The next day the PoisonTap exploit goes public.

Coincidence? -

Telegram or Signal? I essentially got blocked from Messenger. I was stupid enough to fold to peer pressure and get it for robotics, and I enabled it with a GV number. They have since stopped allowing that number specifically for security checks, while still allowing it even to reset a password. I somehow triggered a security check, and with no customer support and no ability to call with a code, I'm looking to switch. Even if I get Facebook back, I want to move to something that at least doesn't randomly trigger security checks and then offer no customer support.

-

I graduated university and feel I still don't know shit about computer science. Like sure, I consider myself to be a good developer and all that, but what's the point of calling yourself a computer scientist if you can't build a robot and program some AI into it?

-

When the business team promotes the robot: “programmed in Arm Assembly with support for all UTF-8 Character Sets”

(Seen in the info of my high school’s robotics team)

-

Hey guys,

Excuse me for my bad English in advance. I am not a native speaker.

I wanted to ask if someone has experience with humanoid robots.

I am currently searching for a master's thesis in IT and have stumbled upon an offer in which you are supposed to build a humanoid robot. At the end, the robot is supposed to be able to bring coffee to people. To come to the point: on the one hand, I have always wanted to do something like that and I think it would be a lot of fun. On the other hand, I fear that the project might be too difficult. The offer says that you should assemble the robot yourself. I have a little bit of experience with Arduino, but in general probably not very much electrical knowledge; I only know the basic principles. The time limit would be 6 months, which in my opinion might be very little time.

So my actual question is: do you think that such a project is realizable within 6 months, or something comparable, with some help from the engineers? I fear that the task itself would be a handful in this time span even with a fully assembled robot. -

So I’m heading off to college soon, any tips for diving into robotics engineering or just college life in general?

-

I hate when lecturers compare their students with their own children who are pros at coding, making robots and stuff. I mean, man, of course that child is better than someone who only learned about coding in uni. Just imagine: that child has been exposed to coding and robotics since they were little, ofc they can do better. I mean, man.. Stop comparing.

-

Does anybody have an opinion on CMU for a machine learning or robotics PhD? You think they'll let me in? (I've heard horror stories about their selection process tbh)

Also, any good Canadian unis and degrees for AI/robotics combo PhDs? -

How can I get into environmental protection as a software engineer? Coral reefs and 🌊 life in general hold my interest the most, but it can be anything really.

I would really love to do something meaningful with my skills.

P.S. Not related to protection, but would be really cool to get into sea exploration software + robotics. -

Maybe someone can help me out here...

I'm doing a small robotics project, where I'm building a line following robot.

That in itself is fine, and it works, however, the robot also needs to navigate through a maze.

The thing is, I only have access to two sensors, 1 light sensor, and 1 color sensor, meaning that I can't detect junctions.

Does anyone have any advice or ideas on how I could approach this with my restricted sensor count? -

So I wanted to get into Lego again. I loved it as a kid and got a bit into robotics again, so I thought why not, maybe I can collect some parts for future robot builds.

I went looking for videos about models and stuff, and in the end I found one I liked and thought yeah, why not.

Went ahead to check its model number..

It's 42069. -

So I was hired as a robotics developer.. Blue Prism, UiPath and AutoIt. Now they want me to do backend stuff in JavaScript/Node instead.. problem is, I haven't ever written a single line of JS. I know C# tho. How should I tackle this to get up to speed with JS quickly? Any suggestions on where and how to start learning it in the most efficient way?

-

Start teaching as early as possible. Cut the repetitive ICT courses too, and put teachers who know more than 'This is Word and how you open the internet' in the front of the class.

Also, there should be more extracurricular things that focus on CS. Maybe have a once-a-week meet-and-hack, or a hackathon every semester. We have something like FIRST Robotics here, so why not more of that? Just something to engage children more and provide more opportunities for them to discover CS in the classroom. -

"Robot, let us pray! Can and should robots have religious functions? An ethical exploration of religious robots" by Anna Puzio.