
Search - "calculation"

-

Yesterday I was in a meeting with project stakeholders, and a dev was demoing his software when an unhandled exception occurred, causing the app to crash.

Dev: “Oh… that’s weird. Doesn’t do that on my machine. Better look at the log.”

- Dev looks at the log and sees the exception was a divide by zero error.

Dev: “Ohhh…yea…the average price calculation, it’s a bug in the database.”

<I burst out laughing>

Me: “That’s funny.”

<Dev manager was not laughing>

DevMgr: “What’s funny about bugs in the database?”

Me: “Divide by zero exceptions are not an indication of a data error, it’s a bug in the code.”

Dev: “Uhh…how so? The price factor is zero, which comes from a table, so that’s a bug in the database”

Me: “Jim, will you have sales with a price factor of zero?”

StakeholderJim: “Yea, for add-on items that we’re not putting on sale. Hats, gloves, things like that.”

Dev: “Steve, did anyone tell you the factor could be zero?”

DBA-Steve: “Uh...no…just that the value couldn’t be null. You guys can put whatever you want.”

DevMgr: “So, how will you fix this bug?”

DBA-Steve: “Bug? …oh…um…I guess I could default the value to 1.”

Dev: “What if the user types in a zero? Can you switch it to a 1?”

Me: “Or you check the factor value before you try to divide. That will fix the exception and Steve won’t have to do anything.”

<awkward couple of seconds of silence>

DevMgr: “Let’s wrap this up. Steve, go ahead and make the necessary database changes to make sure the factor is never zero.”

StakeholderJim: “That doesn’t sound right. Add-on items should never have a factor. A value of 1 could screw up the average.”

Dev: “Don’t worry, we’ll know the difference.”

<everyone seems happy and leaves the meeting>

I completely lost any brain power to say anything after Dev said that. All the little voices kept saying was ‘WTF? WTF just happened? No really… W T F just happened!?’ over and over. I still have no idea how to explain what happened to anyone with any sort of sense. Thanks, devRant, for letting me rant.
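The fix suggested in the meeting (check the factor before dividing) is only a couple of lines. A minimal Python sketch, assuming a hypothetical data model where each item is a `(price, price_factor)` pair and the average is taken over `price / price_factor`; the post doesn't show the real formula:

```python
from typing import Iterable, Optional, Tuple


def average_price(items: Iterable[Tuple[float, float]]) -> Optional[float]:
    """Average of price / factor, skipping items whose factor is zero.

    Hypothetical model: each item is (price, price_factor). A factor of 0 is a
    legitimate value (add-on items such as hats and gloves), so those items are
    excluded instead of crashing the division or being rewritten to 1 in the DB.
    """
    ratios = [price / factor for price, factor in items if factor != 0]
    if not ratios:
        return None  # nothing to average; let the caller decide what to display
    return sum(ratios) / len(ratios)


print(average_price([(100.0, 2.0), (30.0, 0.0), (60.0, 3.0)]))  # 35.0
```

The zero-factor item simply doesn't contribute, so the database can keep storing 0 and the average isn't skewed by a fake factor of 1.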

-

Probably the biggest one in my life.

TL;DR at the bottom

A client wanted an online retirement calculator. Sounds easy enough, I said sure.

A few days later I get an email with an Excel file, saying the online version has to work exactly like this and they're on a tight deadline.

Having a little experience with Excel, I thought, eh, what could possibly go wrong? If anything, I can just take the calculations out of the Excel file.

I WAS WRONG !!!

17 sheets, linking to each other, passing data between sheets to make the calculation

(Sure, they had a lot of stuff to calculate, like age, gender, financial group, etc.)

The first thing I said to myself was: WHAT THE FREAKING FUCK IS THIS? WHAT YEAR IS THIS?

After messing with it for a couple of hours just to get one calculation out of it, I gave up.

I thought about making a MySQL database with the cell data and doing the calculations there, but NOOOO.

Whoever made it decided to put an Excel formula in every cell (so even if I managed to get it into a database and recode all the calculations, it would be wayyy past the deadline).

Then I had an epiphany:

"What if I could just parse the Excel file and get the data?"

Did a bit of research, and sure enough, there's a PHP project.

(But I think it was outdated; it took about 15-25 seconds to parse and made a copy of the original file.)

But this seemed like the best option at the time.

So I downloaded the library, finished the whole thing, wrote a cron job to delete the temporary files, and added a loading spinner for that delay, so people know something is happening

(and had a few days to spare).

Sent the demo link to the client. They were very happy with it, because it worked the same as their cute little Excel file and gave the same results.

It's been live on their website for almost a year now, lots of submissions, no complaints.

I was feeling a bit guilty right after finishing it, because I could've done better, but not anymore.

Sorry for making it so long; to understand the whole thing, you need to know the full story.

TL;DR - Replicated the functionality of a 17-sheet Excel calculator in PHP, hack-ishly.

-

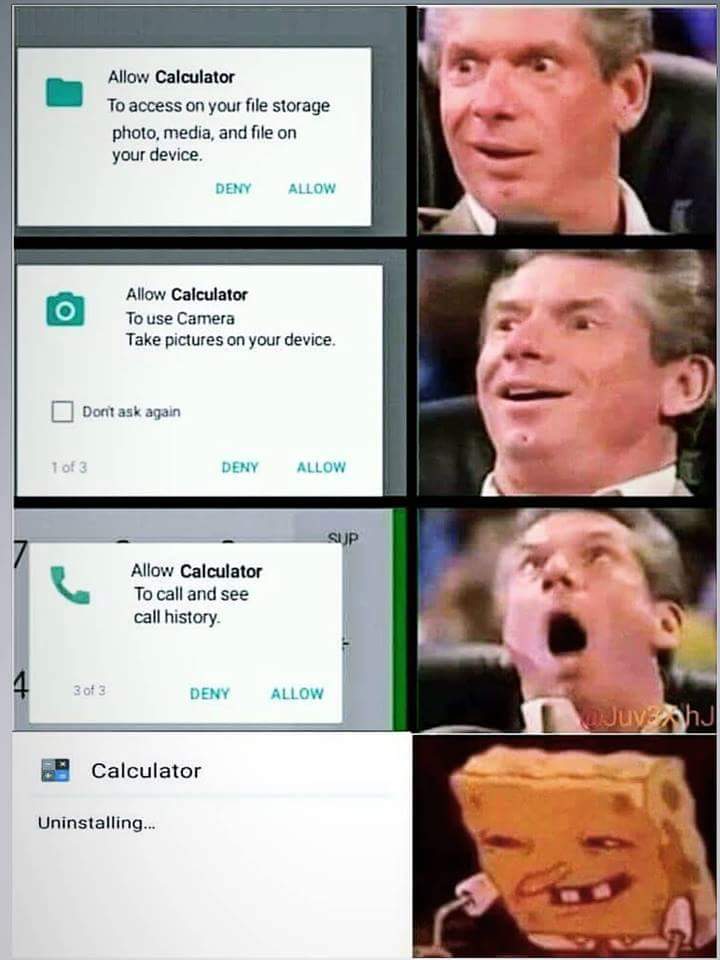

Maybe it does some sort of calculation to let us know who is going to call next by accessing calls? #facepalm

-

Flashback to when I was in 7th grade

Art teacher: Taco, your focal point is wrong

Me: *looks* ....no it's not

Art teacher: *looks* ...oh, you're right

Fast forward to C++ class

Prof: Taco your calculation is incorrect

Me: *looks* ....no it's not

Prof: *looks* ...oh, you're right

-

I AM SUCH AN IDIOT, I USED 88 INSTEAD OF 8.8 IN MY CALCULATION AND DIDN'T FIND THE ISSUE UNTIL 1 FUCKING WEEK LATER!!!!!

-

A client asked me to work on a new website for them. They set up a WordPress site with a basic theme and asked for the following additions:

- Job application system

- Employee management

- Employee scheduling/holidays

- Online clock-in/out and pay calculation

- Training videos/modules for employees with progress tracking

And their budget... $75

😄🔫

-

It's funny how doing something for ages, but technically kinda the wrong way, makes you hate that thing with a fucking passion.

In my case I am talking about documentation.

In my studies, we were required to write documentation for every project, which is actually quite logical. But although I am fine with some documentation and project/architecture design, they took this shit to the fucking limit.

Just an example of what we had to write every single time (YES, FOR EVERY MOTHERFUCKING PROJECT) and roughly how many pages each one took (of custom content; yes, we all had templates):

Phase 1 - Application design (before doing any programming at all):

- PvA (general plan for how to do the project, from who is participating to how you report to your clients and so on) - pages: 7-10.

- Functional design: well, the application design in an understandable way. We were also required to design interfaces. (Yes, I am a backender, can only grasp the basics of GIMP and don't care about doing frontend) - pages: 20-30.

- Technical design (including DB schema, class diagrams and so fucking on); mostly self-explanatory, I think - pages: 20-40.

Phase 2 - 'Writing' the application

- Well, writing the application of course.

- Test plan (so yeah, no actual fucking cases yet, just how you fucking plan to test it, what tools you need and so on. Needed? Yes. But not as ridiculous as this) - pages: 7-10.

- Test cases: one for every function you have (read: every button click etc. is a 'function') - pages: one Excel sheet, usually at least about 20 test cases.

Phase 3 - Application Implementation

- Implementation plan, describes what resources will be needed and so on (yes, I actually had to write down 'keyboard' a few times, like what the actual motherfucking fuck) - pages: 7-10.

- Acceptance test plan (the plan and the actual tests, so two files, one of which is an Excel/LibreOffice Calc file) - pages: 7-10.

- Implementation evaluation, well, an evaluation. Usually about 7-10 FUCKING pages long as well (!?!?!?!)

Phase 4 - Maintaining/managing of the application

- Management/maintenance document - well, every FUCKING rule. Usually 10-20 pages.

- SLA (Service Level Agreement) - 20-30 pages.

- Content Management Plan - explains itself, same as above so 20-30 pages (yes, what the fuck).

- Archiving Document, aka, how are you going to archive shit. - pages: 10-15.

I still can't grasp why they were surprised that students lost all motivation after realizing they'd have to spend about 1-2 weeks BEFORE being allowed to write a single line of code!

The calculation (mostly taking the worst-case scenario, i.e. the most pages possible) comes to about 230 pages. Keep in mind that some pages will be screenshots etc. as well, but a lot are full text.

Yes, I understand that documentation is needed, but the way we had to do it, sorry, that's just not how you motivate students to work for their studies!

Hell, students who wrote the entire project in one night, working perfectly and even with Easter eggs and so on, sometimes got bad grades BECAUSE THEIR DOCUMENTATION WASN'T GOOD ENOUGH.

For comparison, at my last internship I had to write documentation for the REST API I was writing. Three pages, enough for the person who had to work with it! YES, THREE PAGES FOR THE WHOLE MOTHERFUCKING PROJECT.

This is why I FUCKING HATE the word 'documentation'.

-

These fuckface wantrepreneurs, posting jobs (paying to do so) and then offering bullshit like:

- We have no funding, so you'll work for free for some time.

- Paying in fucking crypto.

- Wanting a full-stack, rainbow-puking and -shitting unicorn for peanuts.

- Fucking scammers posing as legit companies and asking you to install AnyDesk.

- Absurd interview tasks and time frames (a couple of days' worth of work for one task).

- Whiteboard and live-coding interviews with bullshit questions, thinking they're Google while having 20 devs.

- Negotiating a salary, then presenting a contract with the salary reduced by double the amount.

- Having idiotic shit on their company websites, like a fucking dog listed as a team member with the title "happiness asshole". (One idiot even had his labrador at the video interview and was cuddling him.)

- Companies asking you to install tracking software with cam recording to keep you in check. (Yeah, you can go fuck yourselves.)

- Having absurd compensation schemes, like pay calculation based on the "impact" your work has.

Either I'm unlucky or job hunting has become something else since I last started searching.

-

So I have a teacher whose "C++" is basically C with a .cpp file extension and the -O0 compiler flag.

The last assignment was to implement some arbitrary, lengthy calculation with a tight requirement of at most 1 second of runtime, to force us to basically hand-roll C code without std or any form of abstraction. But because the language didn't freeze in time in 1998, there is a little keyword named "constexpr" that folded all my classes, arrays, iterators, virtual methods, std algorithms, etc. into a single return statement, making my code the fastest one submitted.

Moral of the story: use the language to the fullest and always turn on the damn optimizer.

Ok, now I'm done 😚

-

POSTMORTEM

"4096 bit ~ 96 hours is what he said.

IDK why, but when he took the challenge, he posted that it'd take 36 hours"

As @cbsa wrote, and nitwhiz wrote "but the statement was that op's i3 did it in 11 hours. So there must be a result already, which can be verified?"

I added time because I was in the middle of a port involving ArbFloat so I could get arbitrary precision. I had a crude Desmos graph doing projections from what I'd already factored, to get an idea of how long larger bit lengths would take.

@p100sch speculated about the walked-back time and about me overstating the rig's capabilities. In reality, I spent a lot of time trying to get it 'just so'.

Worse, because I had to resort to "Decimal" in Python (and am currently experimenting with the same in Julia), both of which are immutable types, the GC was taking > 25% of the CPU time.

Performance-wise, the numbers I cited in the actual thread, as of this time:

The largest product factored, with roughly 32-bit factors, was 1855526741 * 2163967087; it took 1116.111 s in Python.

The Julia build used a slightly different method and managed to factor a product of 27-bit numbers, 103147223 * 88789957, in 20.9 s,

but this wasn't typical.

What surprised me was the variability. One bit length could take 100s or a couple thousand seconds even, and a product that was 1-2 bits longer could return a result in under a minute, sometimes in seconds.

This started cropping up, ironically, right after I posted the thread. What's a man to do?

So I started trying a bunch of things, some of which worked. Shameless as I am, I accepted the challenge. Things weren't perfect, but it was going well enough. At that point I hadn't slept in ~30 hours, so when I thought I had it, I let it run and went to bed. 5 AM comes, I check the program. Still calculating, and way overshot. Fuuuuuuccc...

So here we are now, and it's safe to say the world's not gonna burn if I explain it, seeing as it doesn't work, or at least only works some of the time.

Other people, much smarter than me, mentioned it may be a means of finding more secure pairs, and maybe so; I'm not familiar enough to know.

To everyone who followed and commented, those who contributed, even the doubters who kept a sanity check on this, without whom this would have been an even bigger embarrassment, and the people with their pins and tactical dots: thanks.

So here it is.

A few assumptions first.

Assuming p = the product,

a = some prime,

b = another prime,

and r = a/b (where a is smaller than b)

w = 1/sqrt(p)

(also experimented with w = 1/sqrt(p)*2, but I kept overshooting by a very small margin)

x = a/p

y = b/p

1. For every two numbers, there is a ratio (r) that you can search for among the decimals, starting at 1.0 and counting down. You can use it to recover the original factors instead of having to do a sieve (assuming the product has only two factors): since p*r = a*a, a = sqrt(p*r), and then b = p/a.

2. You don't need the first number you find to be the precise value of a factor (we're doing floating-point math): a large subset of decimal values for a or b will naturally 'fall' into the value of a (or b) plus some fractional part, which is lost. Some of you will object, "But if that's wrong, your result will be wrong!", but hear me out.

3. You round to get the first factor 'found', and from there you take the result and do p/a to get b. If 'a' is actually a factor of p, then mod(b, 1) == 0, and then, naturally, a*b SHOULD equal p.

If not, you throw out both numbers, rinse and repeat.

Now I knew this could be faster. I realized that the finer the representation, the less the fractional digits further right in the number mattered; it was just a matter of how much precision I could AFFORD to lose and still get an accurate result for r*p=a.

Fast forward through a lot of experimentation: I was hitting a lot of worst-case running times, where the most significant digits had a bunch of zeroes in front of them, so starting at 1.0 was a no-go in many situations. I started looking and realized

I didn't NEED the ratio of a/b, I just needed the ratio of a to p.

Intuitively it made sense, but starting at 1.0 was blowing up the calculation time, and this made it so much worse.

I realized I could start at r = 1/sqrt(p) instead, and that, because of certain properties, this starting value would ALWAYS be (1) close to the fractional value n/p of one of the factors, and (2) it looked guaranteed that r = 1/sqrt(p) would ALWAYS fall below at least one of the factors' ratios to p, putting a bound on the worst case.
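With the definitions above (p = ab with a ≤ b, x = a/p, y = b/p, w = 1/sqrt(p)), the second property is just a ≤ √p ≤ b divided through by p, and stepping w by 1/p moves w·p by exactly 1 per step:

$$
a \le \sqrt{p} \le b
\;\Longrightarrow\;
x = \frac{a}{p} = \frac{1}{b} \;\le\; w = \frac{1}{\sqrt{p}} \;\le\; \frac{1}{a} = \frac{b}{p} = y,
\qquad
\left(w + \frac{k}{p}\right) p = \sqrt{p} + k
$$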

The final result in executable pseudocode (Python, lol) looks something like this, using the variables above:

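```python
from math import sqrt

p = 1000003 * 1000033   # the product to factor (example value)
w0 = 1 / sqrt(p)        # start at w = 1/sqrt(p), so w*p starts near sqrt(p)
i = 1 / p               # step size: each step moves w*p up by about 1
k = 0
w = w0

while w < 1.0:          # w = x/p, so there is nothing to find past 1.0
    x = round(w * p)    # candidate factor
    if 1 < x < p and p % x == 0:   # exact integer test instead of (p / x) % 1 == 0
        y = p // x      # x*y == p is guaranteed once p % x == 0
        print("factors found!")
        print(x)
        print(y)
        break
    k += 1
    w = w0 + k * i      # recompute instead of w = w + i, so float error doesn't accumulate
```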

Still working on it, but if anyone sees obvious problems, I'd LOVE to hear about it.
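Stepping w by 1/p means round(w*p) just walks the integers one at a time from about sqrt(p), which amounts to trial division starting at the square root. A minimal integer-only sketch of that same walk (the name `factor_near_sqrt` is hypothetical, not from the post) needs neither Decimal nor floats, since Python ints are arbitrary precision. It walks down toward a rather than up toward b: because a + b ≥ 2·sqrt(p), the gap sqrt(p) - a is never larger than b - sqrt(p), so the downward walk takes no more steps (give or take one for rounding). The worst case is still on the order of sqrt(p) steps.

```python
from math import isqrt
from typing import Optional, Tuple


def factor_near_sqrt(p: int) -> Optional[Tuple[int, int]]:
    """Return (a, b) with a * b == p and a <= b, searching down from isqrt(p).

    For p >= 0. Returns None when p has no divisor other than 1 and p
    (p prime, or p < 4). Exact integer arithmetic, so no rounding issues.
    """
    x = isqrt(p)                 # floor(sqrt(p)): the integer counterpart of w*p
    while x > 1:
        b, rem = divmod(p, x)    # one exact division per candidate
        if rem == 0:
            return x, b          # x <= sqrt(p) <= b
        x -= 1                   # walk down toward the smaller factor a
    return None


print(factor_near_sqrt(1000003 * 1000033))  # (1000003, 1000033)
```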

-

So I once had a job as a C# developer at a company that rewrote its legacy software in .NET after years of running VB3 code - the project had originally started in 1994 and ran on Windows 3.11.

As one of only two guys on the team who actually knew VB, I was eventually put in charge of bug-for-bug compatibility. Since our software did some financial estimations that were impossible to do without it (because they were not well defined), our clients didn't much care if the results were slightly wrong, as long as they were exactly compatible with the previous version - compatibility proved the results were correct.

This job mostly consisted of finding rounding errors caused by the old VB3 code, but that's not what I'm here to talk about today.

One day, after dealing with many smaller functions, I felt I was ready to finally tackle the most complicated function in our code. This was a beast of a function, called Calc, which was called from everywhere in the code, did a whole bunch of calculations, and returned a single number. It consisted of 500 or so lines of spaghetti.

This function had a very peculiar structure:

```vb
Function Calc(...)
    ...
    If SomeVariable Then
        ...
        If Not SomeVariable Then
            ...
            ' (the most important bit of calculation happened here)
            ...
        End If
        ...
    End If
    ...
End Function
```

But for some reason it actually worked. For days I tried to find out what was going on, where SomeVariable was being changed, or whether the nesting indentation was actually wrong and didn't match the source, but to no avail. Eventually, though, after many days, I did find the answer.

SomeVariable = 1

Somehow, the makers of VB3 thought it would be a good idea for Not X to be calculated as (-1 - X). So if a variable was not a proper Boolean (-1 for True, 0 for False), both X and Not X could be truthy, non-zero values.

And kids these days complain about JavaScript's handling of ==...
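Python's `~` on integers is defined as -(x + 1), which is exactly the -1 - X rule described above, so it can stand in for VB3's `Not`. A small sketch (the helpers `vb_not` and `vb_truthy` are hypothetical; `vb_truthy` models VB treating any non-zero value as True in an `If`) showing why only SomeVariable = 1 reaches the inner block:

```python
def vb_not(x: int) -> int:
    """VB3-style Not on an integer: bitwise complement, i.e. -1 - x."""
    return ~x  # Python defines ~x as -(x + 1) for ints


def vb_truthy(x: int) -> bool:
    """An If condition in VB treats any non-zero value as True."""
    return x != 0


for some_variable in (-1, 0, 1):  # True, False, and a "not quite Boolean" 1
    reaches_inner = vb_truthy(some_variable) and vb_truthy(vb_not(some_variable))
    print(some_variable, vb_not(some_variable), reaches_inner)
# -1 0 False
# 0 -1 False
# 1 -2 True
```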

-

Developed my own programming language to teach programming at a community college.

I needed an easy-to-learn language with as few brackets as possible, because brackets caused the most problems for beginners. Called it robocode. =)

Then I built an IDE around it where you program a little sheep to eat all the grass in an area. The goal was to teach the syntax, the library, and debugging, and to let students "see" the code run while the program and the little sheep run: halt the program, inspect variables, check the positions on the grass... I think you get the picture.

Later I built another IDE where you can program Tetris.

robocode now also powers the calculations in our business application.

...I think that's my most successful project so far.

Here's a screenshot of the RoboSheep IDE (be nice, it's a few years old) and the links to the download sites. Sorry, it's all in German because I never localized it.

-

I've decided that whenever a non-technical person, be it a client or a non-technical PM, tells me it's easy or that it'll take only x hours, I'm going to tell them to either do it themselves or let me do my own estimate calculation. You don't fucking understand one line of what I do, yet you can magically calculate the amount of time I'll take on the task? No fucking thank you, sir.

-

Web Developer with no common sense: “I’m going to query the currency calculator API for each of the 1000 records to convert.”.

Web Developer with common sense: “I’m going to query the currency rates API and use the calculation to convert each of the 1000 records.”
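The second approach as a minimal sketch: `fetch_rates` is a hypothetical stand-in for whatever currency-rates API is used (assumed to return, per currency code, how many units of the target currency one unit buys), and records are assumed to be `(amount, currency)` pairs:

```python
from typing import Callable, Dict, List, Tuple

Record = Tuple[float, str]  # hypothetical shape: (amount, currency code)


def convert_all(records: List[Record], target: str,
                fetch_rates: Callable[[str], Dict[str, float]]) -> List[float]:
    """Convert every record into `target` using a single rates lookup.

    `fetch_rates` is a hypothetical stand-in for the currency-rates API call.
    Real money code would probably use decimal.Decimal instead of float.
    """
    rates = fetch_rates(target)  # one API call for the whole batch, not one per record
    return [amount * rates[currency] for amount, currency in records]


if __name__ == "__main__":
    made_up_rates = {"EUR": 1.0, "USD": 0.5}  # illustrative numbers only
    print(convert_all([(10.0, "USD"), (3.0, "EUR")], "EUR",
                      lambda target: made_up_rates))  # [5.0, 3.0]
```

Whatever the batch size, the network cost stays at one request; the per-record work is a multiplication.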

-

Well, if your test fails because it got 1557525600000 instead of the expected 1557532800000 for a date, it tells you exactly: NOTHING.

Unix timestamps have their place, yet in some cases human readability is a feature. So why the fuck don't you display them in a human-readable format?

Now if you'd see:

2019-05-10T22:00:00+00:00

vs the expected

2019-05-11T00:00:00+00:00

you'd know right away that the first date is wrong by an offset of 2 hours because somebody fucked up timezones and wasn't using a UTC calculation.

So even if you want your code to rely on timestamps, at least show your failures in a human-readable way. (In most cases I'd argue that keeping dates as ISO strings would be JUST FUCKING FINE performance-wise.)

Why make me parse numbers? Show me the meaningful data.

Timestamps are for computers, dates are for humans.
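A minimal sketch of that idea: keep comparing raw millisecond timestamps, but render both sides as ISO 8601 in UTC when the check fails. `iso_utc` and `assert_same_instant` are hypothetical helper names; the values are the ones from the post:

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def iso_utc(ms: int) -> str:
    """Render a Unix timestamp in milliseconds as an ISO 8601 string in UTC."""
    return (EPOCH + timedelta(milliseconds=ms)).isoformat()


def assert_same_instant(actual_ms: int, expected_ms: int) -> None:
    """Compare raw timestamps, but describe a mismatch in human-readable form."""
    if actual_ms != expected_ms:
        hours_off = (actual_ms - expected_ms) / 3_600_000
        raise AssertionError(
            f"got {iso_utc(actual_ms)}, expected {iso_utc(expected_ms)} "
            f"(off by {hours_off:+g} h)"
        )

# assert_same_instant(1557525600000, 1557532800000) fails with:
# got 2019-05-10T22:00:00+00:00, expected 2019-05-11T00:00:00+00:00 (off by -2 h)
```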

-

Dear fellow mate,

I can't reject your request for leave. You have the right to request casual/medical or any suitable form of leave. It's up to HR to do their calculation and process your leave.

All I can do is give you an overview of our team's task status, the status of the tasks assigned to you, and the task status of colleagues who could cover your position.

The reason I shared those with you is that you act like you have no knowledge about them.

That's all I have for you. Decide on your own what to do, but take this new information into consideration.

Regards,

Cursee

-

Coworker: so once the algorithm is done I will append new columns in the sql database and insert the output there

Me: I don't like that, can we put the output in a separate table and link it using a foreign key. Just to avoid touching the original data, you know, to avoid potential corruption.

C: Yes sure.

< Two days later - over text >

C: I finished the algo. I decided to append it to the original data in order to avoid redundancy and save on space. I think this makes more sense.

Me: ahdhxjdjsisudhdhdbdbkekdh

No. Learn this principle:

"The original data generated by the client should be treated like the god damn Bible! DO NOT EVER CHANGE ITS SCHEMA FOR A 3RD-PARTY CALCULATION!"

Put simply: D.F.T.T.O

Don't. Fucking. Touch. The. Origin!
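A minimal sketch of the separate-table layout from the chat, using Python's built-in `sqlite3`; the table and column names are hypothetical, and the scores are placeholders rather than any real algorithm's output:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# The client's data: schema stays exactly as delivered (hypothetical table).
conn.execute("CREATE TABLE measurements (id INTEGER PRIMARY KEY, value REAL NOT NULL)")

# The algorithm's output gets its own table and points back via a foreign key.
conn.execute("""
    CREATE TABLE algo_output (
        measurement_id INTEGER PRIMARY KEY REFERENCES measurements(id),
        score REAL NOT NULL
    )
""")

conn.executemany("INSERT INTO measurements (id, value) VALUES (?, ?)",
                 [(1, 2.0), (2, 3.5)])
conn.executemany("INSERT INTO algo_output (measurement_id, score) VALUES (?, ?)",
                 [(1, 4.0), (2, 7.0)])  # placeholder scores, not a real algorithm

# Joining gives the combined view without ever altering `measurements`.
rows = conn.execute("""
    SELECT m.id, m.value, o.score
    FROM measurements AS m
    LEFT JOIN algo_output AS o ON o.measurement_id = m.id
    ORDER BY m.id
""").fetchall()
print(rows)  # [(1, 2.0, 4.0), (2, 3.5, 7.0)]
```

Re-running or throwing away the algorithm's results only ever touches `algo_output`; the original table never changes shape.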

-

*tries to shrink an NTFS volume in preparation for a new BTRFS volume*

(shameless ad: check out https://github.com/maharmstone/...! BTRFS on Windows, how cool is that?)

Windows Disk Management: ah surely, I can do that for you.

*clicks "shrink"*

…

Well that disk calculation process is taking a long time...

*checks Task Manager*

*notices a pretty disk-intensive defrag process*

… Yeah.. defragging. Seems reasonable. Guess I'll just let it finish its defragmentation process. After that it should just be able to shrink the NTFS filesystem and modify the partition table without any issues. After all, I've done this manually in Linux before, and after defragging (to relocate the files on the leftmost sectors of the disk) it finished in no time.

*defrag finishes*

Alright, time to shrink!

….

Taking a shitton of time...

*checks Task Manager again*

System taking a lot of disk this time.. not even a defrag? How long can this shit take at 40MB/s simultaneous read and write?

…

*many minutes passed, finished that episode of Elfen Lied, still ongoing...*

Fucking piece of Microshit. Are you really copying over the entire 1.3TB that that disk is storing?! Inefficient piece of crap.. living up to the premise of Shitware indeed!!!

-

Real-time physics calculation of 250,000+ blocks on an AMD Athlon.

No worries, boys, I had a fire extinguisher.

-

While writing a raytracing engine for my university project (a fairly long and complex program in C++), there was a subtle bug where, under very specific conditions, the ray energy calculation would return 0 or NaN, and the corresponding pixel would be slightly dimmer than it should be.

Now you might think that this is a trifling problem, but when it happened to random pixels across the screen at random times it would manifest as noise, and as you might know, people who render stuff Absolutely. Hate. Noise. It wouldn't do. Not acceptable.

So I worked at that thing for three whole days and finally located the bug, a tiny gotcha-type thing in a numerical routine in one corner of the module that handled multiple importance sampling (basically, mixing different sampling strategies).

Frustrating, exhausting, and easily the most gruelling bug hunt I've ever done. Utterly worth it when I fixed it. And what's even better, I found and squashed two other bugs I hadn't even noticed, lol

-

Just a heads up - there was a problem with the calculation of the "top" tab day, week and month calculations the last couple of days so there might have been some rants not showing up.

It has been fixed and everything has been recalculated now.

-

Got a ticket from a client reporting a calculation giving the wrong outcome.

In return I ask her what she thinks the outcome should be and why.

"The right answer because I said so."

Yeah, thanks, that's going to help a lot.

-

Got my first serious project about a year ago. Made it clear to the client that we were developing a Windows app. After around 80-100 hours of work, the client just goes "how about we make this a web app?" Got "financial support" instead of the agreed payment: around a quarter of the money we agreed on. They never ended up using some parts of the software (I ran the server, so I knew they weren't using it).

I once had a nightmare of a time explaining to a client that he couldn't use a 30+ MB image as his home page background. The average internet speed in my country is around 1-2 MB/s. I even had to do the calculation for him because he couldn't figure out how long it would take a visitor to load the image.

-

When a project manager asks for an effort calculation due to changed requirements, but the calculation itself takes longer than the implementation would...

-

God bless being a student. I just moved a massive calculation to the uni's Jupyter servers. Saved me from a shitton of effort and from burning my laptop down. 🙏

-

So OK, here it is, as asked for in the comments.

Setting: customer (huge electronics chain) wants a huge migration from custom software to SAP ERP, hybris Commerce for B2B and ... Azure cloud

Timeframe: ~10 months….

My colleague and I had the glorious task of making the evaluation result of the B2B approval process (like, you can only buy up to € 1000, then someone has to approve) available in the cart view, not just at the end of the checkout. Well, I thought, easy: we have the results, just put them in the cart … hmm :-\

The thing is, the storefront, called the accelerator (although it should rather be called the decelerator), is a buggy, 10-year-old(-looking) interface that promises customers it solves all their problems and just needs some minor customization. Fact is, it’s an abomination that makes us spend 2 months in every project to „rip it apart“ and fix/repair/rebuild major functionality (which changes every 6 months because of „updates“).

After a week of reading the scarce (aka non-existent) docs and decompiling and debugging hybris code, we found out (besides dozens of bugs) that this was not going to be easy. The domain model is fucked up: both CartModel and OrderModel extend AbstractOrderModel. Though we only need functionality that is in AbstractOrderModel, the hybris guys decided (for an unknown reason) to use OrderModel in every single fucking method (about 30 nested calls ….). So what shall we do? We don’t have an order yet, only a cart. Fuck it, let’s fake an order, push it through, use the results and dismiss the order … good idea!? BAD IDEA (don’t ask …). So after a week or two we changed our strategy: create duplicate interfaces for nearly all (Spring) services, with changed method signatures, that override the hybris beans and allow us to use CartModels (which is possible, because within the super methods, they actually „cast“ it to AbstractOrderModel *facepalm*).

After about 2 months (2 people full time) we have a working „prototype“. It works with the default sample accelerator data. Unfortunately, the customer wanted to have its own dataset in the system (what a shock). Well, you guessed it … everything collapsed. The way the customer wanted to „have it working“ was just incompatible with the way hybris wants it (yeah yeah SAP, hybris is sooo customizable …). Well, we basically had to rewrite everything again.

Just in case you’re wondering … the requirements were clear in the beginning (stick to the standard! [configuration/functionality]). Well, then the customer found out that this is shit … and well …

So some months later, next big thing. I was appointed technical sub-lead (is that a word?)/sub-PM for the topics ‚delivery service‘ (cart, delivery time calculation, you name it) and customer registration - a reward for my great work on the B2B approval process???

Customer's office: 20+ people, mostly SAP-related, a few C# guys, and drumroll .... the main (external) overall superhero ‚I'm the greatest and you're shit‘ architect.

Average age 45+; me, the ‚hybris guy’ (he really just called me that all the time), age 32.

He PowerPoints his „tables“ and other weird, out-of-this-world stuff onto the wall and talks and talks. Everyone is in awe (or fear?). Everything he says is just bullshit, and I see it in the eyes of the others. Finally the hybris guy interrupts him as he explains the overall architecture (which is just wrong) and points out how it should be (according to my docs, which were more up to date). From then on, he didn't just "not like" me anymore. (Good first day.)

I remember the looks of the other guys - they were relieved that someone pointed that out - it saved them weeks of useless work ...

Instead of speaking the customer's language, he just spoke SAP gibberish … argh (common in SAP land, as I had to learn the hard way).

Outcome, about 5 (useless) meetings later: we are going to push data from Informatica to SAP to Azure to Data Hub to hybris ... hmpf, needless to say it's fucking super slow.

But who cares, I‘ll get my own rest endpoint that‘ll do all I need.

First try: error 500. Second try: 20 seconds later, an error message in HTML with content type JSON. A few days later the C# guy manages to deliver a kinda-working, still slow service, only the results are wrong; the customer blames the hybris team. Hmm, we're just using their fucking results ...

The SAP guys (customer service) just don't seem to be able to activate/configure the OOTB OData service (so I was told).

Several rounds of emails and meetings later, about 2 months, still no working hybris integration (all my emails with detailed checklists and deadlines for every participant were unanswered/ignored or answered with unrelated stuff). The customer is pissed at us (god knows why; I tried, I really did!). So I decide to fly up there and handle it all by myself.

-

Wow, I still remember some math after decades. Today, I needed some parameter calculation in an interval with a smooth transition at both ends (i.e. continuously differentiable). So I used a 3rd-degree polynomial where the values and derivatives at both ends gave a 4x4 linear equation system. I lazily hacked that into WolframAlpha, and it works nicely.
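The four conditions p(x0) = y0, p(x1) = y1, p'(x0) = d0, p'(x1) = d1 are exactly that 4x4 system, and its solution has a well-known closed form: the cubic Hermite basis. A minimal sketch (the function name and parameter order are hypothetical; `d0`/`d1` are the end slopes, defaulting to 0 for a flat, smooth start and end):

```python
def cubic_transition(x, x0, x1, y0, y1, d0=0.0, d1=0.0):
    """Cubic p on [x0, x1] with p(x0)=y0, p(x1)=y1, p'(x0)=d0, p'(x1)=d1.

    Uses the cubic Hermite basis, which is the closed-form solution of the
    4x4 linear system those four conditions define.
    """
    h = x1 - x0
    t = (x - x0) / h                 # map x onto [0, 1]
    h00 = 2 * t**3 - 3 * t**2 + 1    # weight of y0
    h10 = t**3 - 2 * t**2 + t        # weight of the start slope
    h01 = -2 * t**3 + 3 * t**2       # weight of y1
    h11 = t**3 - t**2                # weight of the end slope
    return h00 * y0 + h10 * h * d0 + h01 * y1 + h11 * h * d1


print(cubic_transition(4.0, 2.0, 6.0, 10.0, 20.0))  # 15.0
```

Slopes are scaled by the interval width h because the basis is defined on t in [0, 1] and dx = h·dt.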

-

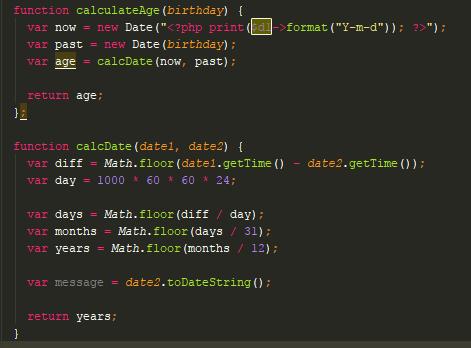

Came across this on a site that work took over from another agency. A bad JS calculation of someone's age; there were many other terrible date-manipulation parts throughout the site.

-

Years ago, we were setting up an architecture where we fetch certain data as-is and throw it in Cosmos DB. Then we run a daily background job to aggregate it and store it as structured data.

The problem is the volume. The calculation step is so intense that it will bring down the host machine, and the insert step will bring down the database in a manner where it takes 30 min or more to become accessible again.

Accommodating for this would need a fundamental change in our setup. Maybe rewriting the queries, data structure, containerizing it for auto scaling, whatever. Back then, this wasn't on the table due to time constraints, and nobody wanted to be the person to open that Pandora's box of turning things upside down when it "basically works".

So the hotfix was to do a 1-second thread sleep in every iteration where needed. It makes the job take upwards of 12 hours where, if the system could endure it, it would normally take a couple of minutes.

The solution has grown around this behavior ever since, making it even harder to properly fix now. Whenever there is a new team member there is this ritual of explaining this behavior to them, then discussing solutions until they realize how big of a change it would be, and concluding that it needs to be done, but...

not right now.

-

I hate the feeling you get when you do a lengthy, drooling task that once finished got you nowhere.

My day was mostly productive for a Sunday, woke up late as all Sundays, spent the afternoon writing a proposal and exercising when I saw a notification for a homework for tonight at 12.

A research paper about Dijkstra's dining philosophers problem, 8 pages minimum. To be honest, I saw the problem a long time ago while studying C++ and already had the theory down, and that is my issue: it becomes inherently boring and useless in my head. It is in these situations that my mind gets lazy.

I wrote the first 3 pages in half an hour, but then I was done. I started revising the proposal and fixed a calculation error, checked out Rust's take on the philosophers problem and decided to save it for winter break along with learning Rust (although I've got some basics down), made rough budget approximations for the next 3 months, lost myself a little in deep house music (notable tracks: Tadow by Masego, Nevermind by Dennis Lloyd and Gold by Chet Faker), etc. All in all, it took me 3 more hours to finish the assignment, including breaks and dinner.

I am working on a lot of stuff lately, my main project's sprint ends this Tuesday, and it pisses me off: after all that, I learnt nothing new, got nowhere with my project, and will probably get an 80 because Google Docs has no margin setting. Worse than being lazy for fun is being inevitably lazy because you're compelled to do tasks that are low priority by your own head's standards.

-

A becomes B

B becomes C

C becomes A

D becomes B

E becomes A

Now add real hostnames... Make this list longer (roughly 15-18)

Add resource calculation, migration of VMs, organizing new hardware, removing and rebuilding hosts, etc.

I think my brain is permanently damaged and cannot be repaired.

Hardware migration finally over tomorrow.

I really won't miss the fuckton of Excel lists, the constant slips of the tongue, having sore fingers from mutilating the desk calculator, etc.

I'm too tired to be happy. But... It's over.

-

Almost burnout story? What about right now...

The customer was really positive about the new site we are creating for them. Then, out of the blue, they panic and complain about features (calculations) that aren't implemented yet (they didn't provide any information for the calculations until 1 week ago). And they complain that the site doesn't have any content.

THAT IS RIGHT YOU DUMB FUCKS...

I can't magically create content for YOUR site...

-

We'll build a math calculation game for 6th graders in IT class ("Math-Tetris").

In Java.

I hate Java.

-

At the institute where I did my PhD, everyone had to take on some role apart from research to keep the infrastructure running. My part was admin for the Linux workstations and supporting the admin of the calculation cluster we had (about 11 machines with 8 cores each... hot shit at the time).

At some point the university had some euros of budget left that had to be spent so the institute decided to buy a shiny new NAS system for the cluster.

I wasn't really involved with the stuff, I was just the replacement admin so everything was handled by the main admin.

A few months on and the cluster starts behaving ... weird. Huge CPU loads, lots of network traffic. No one really knows what's going on. At some point I discover a process on one of the compute nodes that apparently receives commands from an IRC server in the UK... OK code red, we've been hacked.

First thing we needed to find out was how they had broken in, so we looked at the logs of the compute nodes. There was nothing obvious, but the fact that each compute node had its own public IP address and was reachable from all over the world certainly didn't help.

After a few hours of poking around, not really knowing what I was looking for, I resorted to tcpdump to find out whether there was any actor on the network that I might have overlooked. And indeed, I found an IP address that I couldn't match with any of the machines.

Long story short: It was the new NAS box. Our main admin didn't care about the new box, because it was set up by an external company. The guy from the external company didn't care, because he thought he was working on a compute cluster that is sealed off behind some uber-restrictive firewall.

So our shiny new NAS system, filled to the brim with confidential research data (and also, as it turns out, a lot of login credentials), was sitting there with its quaint little default config and a DHCP-assigned public IP address, waiting for the next rookie hacker to try U:admin/P:admin to take it over.

Looking back, this could have gotten a lot worse, and we were extremely lucky that these guys either didn't know what they had there or didn't care.

-

So recently I had an argument with gamers about how much memory a graphics card needs. The guy suggested an 8GB model of... idk, I already forgot the GPU model, some Nvidia crap.

I argued: well, why does memory size matter so much? I know that it takes bandwidth to generate and store a frame, and I know how much size and bandwidth that is. It's a fairly simple calculation: you take your horizontal and vertical resolution (e.g. 2560x1080, which I'll go with for the rest of the rant), times the number of subpixels (red, green and blue), times the bit depth (i.e. the number of bits per subpixel, which sets how many brightness values it can take; usually 8 bits, i.e. 0-255).

The calculation would thus look like this.

2560*1080*3*8 = the resulting size in bits. You can omit the last 8 to get the size in bytes, but only for an 8-bit display.

The resulting number is exactly 8100 KiB, or roughly 8MB to store a frame. There is no more to storing a frame than that. Your GPU renders the frame (it might need some memory for that, but not 1000x the size of the frame itself, that's ridiculous) and stores it in a memory area known as a framebuffer, from which the display eventually takes it to put it on the screen.

Assuming that the refresh rate of the display is 60Hz, and that you didn't overbuild your graphics card to render a bazillion lost frames, you need to display 60 frames a second at 8MB each. Now that is significant: 8MB x 60 = 480MB/s (closer to 498MB/s with the exact 8,294,400-byte frame). For a higher framerate (hopefully coupled with a display capable of showing it) you need higher bandwidth, and for a higher resolution and/or bit depth you'd need more memory to fit your frame. But it's not a lot, certainly not 8GB of video memory.
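The same arithmetic as a small Java sketch (method names are illustrative). It assumes an uncompressed RGB framebuffer with 8 bits per channel and no row padding:

```java
class FramebufferMath {
    /** Bytes needed for one uncompressed frame. */
    static long bytesPerFrame(int width, int height, int channels, int bitsPerChannel) {
        return (long) width * height * channels * bitsPerChannel / 8;
    }

    /** Bytes per second needed to push every frame at the given refresh rate. */
    static long bytesPerSecond(long frameBytes, int refreshHz) {
        return frameBytes * refreshHz;
    }

    public static void main(String[] args) {
        long frame = bytesPerFrame(2560, 1080, 3, 8);
        System.out.println(frame + " bytes = " + frame / 1024 + " KiB per frame");
        System.out.println(bytesPerSecond(frame, 60) + " bytes/s at 60Hz");
    }
}
```

For 2560x1080 this gives 8,294,400 bytes per frame (exactly 8100 KiB) and 497,664,000 bytes/s at 60Hz.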

Question time for gamers: suppose you run your fancy game from an iGPU in a laptop or whatever, with 8GB of memory in that system you're resorting to running off the filthy iGPU from. Are you actually using all that shared general-purpose RAM for frames and "there's more to it" juicy game data? Where does the rest of the operating system's memory fit in such a case? Ahhh.. yeah it doesn't. The iGPU magically doesn't use all that 8GB memory you've just told me that the dGPU totally needs.

I compared it to displaying regular frames, yes. After all that's what a game mostly is, a lot of potentially rapidly changing frames. I took the entire bandwidth and size of any unique frame into account, whereas the display of regular system tasks *could* potentially get away with less, since most of the frame is unchanging most of the time. I did not make that assumption. And rapidly changing frames is also why the bitrate on e.g. screen recordings matters so much. Lower bitrate means that you will be compromising quality in rapidly changing scenes. I've been bit by that before. For those cases it's better to have a huge source file recorded at a bitrate that allows for all these rapidly changing frames, then reduce the final size in post-processing.

I've even proven that driving a 2560x1080 display doesn't take oodles of memory because I actually set the timings for such a display in order for a Raspberry Pi to be able to drive it at that resolution. Conveniently the memory split for the overall system and the GPU respectively is also tunable, and the total shared memory is a relatively meager 1GB. I used to set it at 256MB because just like the aforementioned gamers, I thought that a display would require that much memory. After running into issues that were driver-related (seems like the VideoCore driver in Raspbian buster is kinda fuckulated atm, while it works fine in stretch) I ended up tweaking that a bit, to see what ended up working. 64MB memory to drive a 2560x1080 display? You got it! Because a single frame is only 8MB in size, and 64MB of video memory can easily fit that and a few spares just in case.

I must've sucked all that data out of my ass, though; I've only seen people build GPUs out of discrete components, and gone down to the realm of manually setting display timings.

Interesting build log / documentary style video on building a GPU on your own: https://youtube.com/watch/...

Have fun!

-

I just realized I made QA wait for 5 minutes while testing session handling on the app, when I had missed a single zero and made the session expire after 30 seconds instead (the calculation produced 30000 ms instead of 300000 ms).
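One way to avoid counting zeros by hand is to declare the timeout in its natural unit. Here is a minimal Java sketch using `java.time.Duration`. The class and constant names are hypothetical, and the app's real configuration mechanism is unknown.

```java
import java.time.Duration;

class SessionConfig {
    // Declared in minutes, so there are no zeros to miscount.
    static final Duration SESSION_TIMEOUT = Duration.ofMinutes(5);

    /** The same timeout in milliseconds, for APIs that expect a raw number. */
    static long timeoutMillis() {
        return SESSION_TIMEOUT.toMillis();
    }

    public static void main(String[] args) {
        System.out.println(SESSION_TIMEOUT + " = " + timeoutMillis() + " ms");
    }
}
```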

He tried 3 times and then opened a ticket, not knowing why the session always expired.

wonder if I should tell him >_>

-

I have been debugging for like hours, trying to figure out the cause of an unknown bug that was spoiling my UI by making my elements overlap.

I'm working on a unit converter that takes kWh and converts it to MWh (logical conversion: 1000 kWh = 1 MWh).

Easy shit, I thought. Using JavaScript, I passed the dynamically generated kWh value to a function that takes a maximum of 6 chars and multiplies it by 0.001 to get the required result, but this was where my problem started. All values came out as expected until my app hit a particular value (8575) and output a very long string (8.575000000000001). I couldn't figure out the cause until I checked my console log and found the culprit value; then I changed the calculation logic from multiplying by 0.001 to dividing by 1000, and it came out as expected (8.575).

My question is:

Is my math logically wrong, or is this another JavaScript calculation setback?
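The math is right. The likely cause affects JavaScript and any other language that uses IEEE 754 doubles: 0.001 has no exact binary representation, so multiplying by it can add a rounding error in the last digit. Dividing by 1000 works here because 8575 and 1000 are both exact, and IEEE 754 division is correctly rounded, so the result is the double closest to 8.575.

For displayed values, decimal arithmetic is more robust. This minimal Java sketch converts kWh to MWh with `java.math.BigDecimal` instead of the original JavaScript, so no binary rounding happens; the class and method names are hypothetical.

```java
import java.math.BigDecimal;

class EnergyConverter {
    private static final BigDecimal KWH_PER_MWH = BigDecimal.valueOf(1000);

    /** Exact decimal conversion: 8575 kWh -> 8.575 MWh, with no binary rounding error. */
    static BigDecimal kwhToMwh(BigDecimal kwh) {
        // Dividing by 1000 always terminates in decimal, so divide() without a MathContext is safe here.
        return kwh.divide(KWH_PER_MWH);
    }

    public static void main(String[] args) {
        // Parse the input as a decimal string instead of a double.
        BigDecimal kwh = new BigDecimal("8575");
        System.out.println(kwhToMwh(kwh).toPlainString() + " MWh");
    }
}
```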

-

Background story:

One of the projects I develop generates advice based on energy usage and a questionnaire with 300 questions.

Over 400 different variables determine what kind of advice is given. Lots of user input, and over a thousand text blocks that need to be shown or not.

Rant:

WTF do you want me to do when you tell me, "It's not giving the right advice for the lights"?

Why, for the love of..., do I need to ask you every time? If something is not working, tell me what and for which user. Don't tell me "calculation whatever" is not working; I don't know that calculation. Your calculations are maintained in your CMS.

And how, I really wonder, do you expect me to find and fix it when you don't tell me which user is having this problem? Do you just want me to randomly guess one of the thousands of users who should be given that specific advice?

FCK, like 80% of my time solving problems is spent trying to figure out wtf you're talking about.

And then, what a miracle: the function is doing exactly what it is supposed to do, but you forgot a variable. It's not like the code I write suddenly decides it does not feel like giving the right answer.

-

Have you ever been working on something really important and, in the middle of a calculation, you suddenly remember... that you had saved the last muffin from 2 days ago inside a container on the shelf where you never, ever store stuff... out of the blue... and you wonder about it until you get home and find that muffin?

...ah, what a beautiful mind... Seems analogous to a Google Now notification, but in your brain...

-

My department VP announced to our sales team that our company would have an online, web-based catalog capable of custom style configuration, pricing calculation and quote generation. The catalog would manage all of our customers' different discount rates automatically and notify the correct sales rep any time a customer completes a quote. This was announced on September 30(ish) with a launch date of December 1. I'm the only web developer, and I found out after the announcement. :/

-

TIL how time-delay relays are used in circuits with a hell of a lot of power, for example to power and control the motor of a machine that lifts 20 people up to the sky and back down to the ground again.

FYI, this is a simple circuit. We will hopefully start experimenting with SPS (Speicherprogrammierbare Steuerung, i.e. programmable logic controllers). I cannot wait to use our predesigned circuits with complex logic gates in the new unit we will get to know.

Unfortunately, we will do practical networking (testing cables for signal strength and speed, building a network of telephones and calling each other, which I guess is going to be the funniest part lol, etc.) before the SPS and LOGO software phase.

Btw, I am pulling an all-nighter rn, going over everything we did in our recent signal calculation lesson. We have another exam tomorrow.

-

Me: *changes a long and complex calculation to fix old mistakes*

Program: *keeps outputting the same wrong result*

Me: *goes mad for a good hour trying to discover the problem by debugging it like an angry rat*

Also me after one hour of debugging: *discovers he never changed the output source of the function and it's still outputting the old result*

-

Rant rant rant!

Le me subscribe to website to buy something.

Le register, email arrives immediately.

*please not my password as clear text, please not my password as clear text *

Dear customer your password is: ***

You dense motherfucker, you special breed of idiotic asshole, it's frigging 2017 and you send your customers' passwords in an email!???

They frigging even have a nice banner on their website stating that they protect their customers with 128-bit cryptography (sigh).

Protect me from your brain the size of a dried pea.

Le me calm down, search for a way to delete my profile. Nope, no way.

Search for another shop that sells the good, nope.

Try to change my info: nope you can only change your gender...

Get mad, modify the HTML and send a tampered form: it submits... and fails because of a calculation on my fiscal code.

I wanna die, rise as a zombie, find the developers of that website, kill them and then discard their heads, because not even a hungry zombie would use those brains for anything.

-

How can you explain to a senior dev with more than 15 years of experience that for money calculations (like VAT) you can't use the fucking floating-point types?!?!?!?!?
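Here is a minimal Java sketch of the alternative (class and method names are hypothetical). `BigDecimal` keeps amounts in exact decimal, and rounding to cents is explicit. Two things are assumptions here: the 2-decimal `HALF_UP` rounding, and rounding per amount rather than per invoice. The correct rule depends on the jurisdiction.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

class VatCalculator {
    /** VAT on a net amount, rounded to cents (HALF_UP is an assumed rule). */
    static BigDecimal vat(BigDecimal net, BigDecimal rate) {
        return net.multiply(rate).setScale(2, RoundingMode.HALF_UP);
    }

    /** Net amount plus its rounded VAT. */
    static BigDecimal gross(BigDecimal net, BigDecimal rate) {
        return net.add(vat(net, rate));
    }

    public static void main(String[] args) {
        // Binary floating point cannot represent most cent values exactly.
        System.out.println(0.1 + 0.2 == 0.3); // false
        BigDecimal net = new BigDecimal("19.99");  // construct from a String, not from a double
        BigDecimal rate = new BigDecimal("0.20");
        System.out.println(vat(net, rate) + " VAT, " + gross(net, rate) + " gross");
    }
}
```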

-

Spending half the day wondering why the longest JavaScript calculation in existence doesn't match the client-supplied Excel sheet by 0.22 percent... Realised one number was parseInt'd instead of parseFloat'd... FML

-

TLDR; I was editing the wrong file, let's go to bed.

We have this huge system that receives data from an API endpoint, does a whole bunch of stuff, going through three other servers, and then via some calculation based on the data received from the UI, and data received from the endpoint, it finally sends the calculated fields to the UI via websocket.

Poor me sitting for over 4 hours debugging and changing values in the logic file trying to understand why one of the fields ends up being null.

Of course, every change needs a reboot of all 4 servers involved, and a hard refresh of the UI.

I even tried to search for the word null in that file, but to no avail.

After scattering hundreds of console logs, and pulling my hair out, I found out that I am editing the wrong file.

I guess it's time for some sleep.

-

Code reviewing, came across this:

Double val = item.getPrice() * pointMultiplier;

String[] s = val.toString().split("\\.");

points = Integer.parseInt(s[0]);

if(Integer.parseInt(s[1]) > 0) { points++; }

Usually I'd leave it at that, but to add insult to injury this was a level 3 developer who had been there for four (4!!!!) years.

She argued with me that we shouldn't round up loyalty points if there's only 0.00001 in the calculation.

I argued that since it's a BigDecimal, we can set its rounding mode.
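Here is a minimal sketch of the reviewer's suggestion. It assumes the price and multiplier are available as `BigDecimal`; the snippet above uses `Double`, so that part is an assumption, and the class and method names are hypothetical. `RoundingMode.CEILING` rounds any fractional part up. The second method shows how a policy of ignoring tiny fractions could also be expressed as a rounding step instead of string splitting.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

class LoyaltyPoints {
    /** Any fractional point, however small, rounds up to the next whole point. */
    static int points(BigDecimal price, BigDecimal multiplier) {
        return price.multiply(multiplier).setScale(0, RoundingMode.CEILING).intValueExact();
    }

    /**
     * Hypothetical alternative policy: first round to `scale` decimals, so dust
     * such as 0.0000001 disappears before the ceiling is applied.
     */
    static int pointsIgnoringDust(BigDecimal price, BigDecimal multiplier, int scale) {
        return price.multiply(multiplier)
                .setScale(scale, RoundingMode.HALF_UP)
                .setScale(0, RoundingMode.CEILING)
                .intValueExact();
    }

    public static void main(String[] args) {
        BigDecimal multiplier = new BigDecimal("1.5");
        System.out.println(points(new BigDecimal("10.01"), multiplier)); // 15.015 -> 16
    }
}
```

Either policy is one line of intent and sidesteps the string-splitting code's failure modes, such as `Double.toString` switching to scientific notation for large values.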

She didn't understand that solution, refused to hear it.

The code is probably still there.

-

Worst architecture I've seen?

The worst ones (working here) follow the academic pattern of trying to be perfect, when the only measure of 'perfect' should be the user saying "Thank you", or an architecture no one knows about (the 'it just works' architectural pattern).

A senior developer with a master's degree in software engineering developed a class/object architecture for representing an Invoice in our system. It took almost 3 months to come up with:

- Contained over 50 interfaces (IInvoice, IOrder, IProduct, etc. mostly just data bags)

- Abstract classes that implemented the interfaces

- Concrete classes that injected behavior via the abstract classes (constructors, Copy methods, converter functions, etc)

- Various data access (SQL server/WCF services) factories

During code reviews, I kept saying this design was too complex and too brittle for the changes everyone knew were coming. The web team that would ultimately be using the framework had, at best, vague requirements. Because he had a master's degree, he knew best.

He was proud of nearly perfect academic design (almost 100% test code coverage, very nice class diagrams, lines and boxes, auto-generated documentation, etc), until the DBAs changed table relationships (1:1 turned into 1:M and M:M), field names, etc, and users changed business requirements (ex. concept of an invoice fee changed the total amount due calculation, which broke nearly everything).

That change caused a ripple effect that resulted in a major delay in the website feature release.

By the time the developer fixed all the issues, the web team wrote their framework and hit the database directly (Dapper+simple DTOs) and his library was never used.

-

There was some erroneous calculation of the leave taken for the month in her account certificate, even after repeated correction requests from her end. This had happened twice.

She just stood up, shouted at the HR person (who was responsible for this) and headed straight to the co-founder's cabin. After 4 hours of discussions, she came out, whispered to me that she had just quit, and went away. Never saw her again.

-

So, I've been reading all these complaints about microservices, which got loud thanks to the mad CEO of Twitter.

I keep reading, but I am curious about your opinion as well.

To me, the point of microservices has never been about improving speed; in fact, they might have a negative impact on the performance of an application. I think that, given the computing power we have nowadays, it's not a big deal.

However on the other side, it makes all the rest so much easier.

When there's a problem on one service, I can just debug the given service without spending hours starting a huge slow turtle

If something goes down, it doesn't make the whole app unhealthy, and if I am lucky, it's not going to be a critical service (so very few people will be pissed).

I have documentation for each of them so it's easier to find what I am looking for.

If I have to work on that particular service, I don't have to go through thousands of tangled lines of code unrelated to each other but instead work on an isolated, one-purpose service.

Releasing takes minutes, not hours, and without risk of crashing everything.

So I understand the complaint about the fact that it's making the app run slower but all the rest is just making it easier.

Before you bite my ass: I am not working at Twitter, and I don't know the state of their application (which seems to be extremely complicated for an app designed to post a bit of text and a few pictures), but I'm talking about a company with skilled people and a well-designed architecture.

-

In an IT management class, the professor wanted us to estimate the operation costs for a small IT company, breaking them down by service offered. I remember creating a markdown file, multiple times executing the line `echo $RANDOM >> estimations.md`. We rounded the numbers slightly, pimped the document a bit and submitted a nice PDF. When we had to present our work, the professor asked us how we had proceeded to calculate those results. We told him a story about an Excel file we worked on, but did not submit, because we thought he'd be interested in the end result and not care about those details. He asked us to submit that Excel calculation, because he wanted to comprehend our method. So we got together, created an Excel sheet, copied our "estimations" into column C and called it "service cost". For column B, we used the same "cost per man hour" value (scientifically estimated using the RAND() function) for every row. Finally, we divided the "service cost" by the "cost per man hour" for every row, put the result in column A and called it "effort (in man hours)". The professor, being able to "reproduce" our estimation, accepted our solution.

-

Forgot to bring my laptop to college, so I had to use the age-old Turbo C compiler on a college PC. I had to do some power calculation, used the pow() function, and forgot to include math.h...

And bam!!! It compiled, and I had no idea why the pow function wasn't giving the correct value!!

Lesson learnt: never trust anything!!

-

Forgot to do server side verification.

As the service (an injectable game) was expanding, and the old system relied on server-side calculation without anything returned from the user, the expansion was done a little too fast.

The result could have been anyone passing wrong data and receiving the grand prize, like a holiday worth $10k. Quick fix...

-

The previous developer didn't write a freaking single test for a system that does a lot of calculations. Performance was shit so I got tasked with re-writing everything from DB queries to the actual calculation functions.

This has been the worst developer hell I've ever been in. Without tests, I cannot change anything and know whether something breaks!!!

I gotta understand first the mess this guy left behind, then freaking write the tests that are missing and finally refactor the stuff. FML.

Btw, it's Python, and the guy didn't even bother to add basic type annotations, so it's even worse. Function arguments are "data", "score"; some are dicts, some are floats, some are lists.

Faaaaaaaaaaaaaack!!!!

-

Having a degree in math helps immensely with programming. Abstract reasoning, calculation simplification, succinct data representation: nice things to have.

-

By any chance, can someone explain logarithms to me and how they relate to complexity growth? I can do the calculation, but only from memorisation. I just don't understand how it relates to a graph.
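One way to build intuition: log2(n) is roughly how many times you can halve n before reaching 1. An algorithm that discards half of its remaining input at each step, such as binary search, therefore needs about log2(n) steps. That is why O(log n) looks so flat on a graph: multiplying n by 10 adds only about 3.3 steps. Here is a small illustrative Java sketch (class and method names are made up):

```java
class LogGrowth {
    /** How many times n can be halved (integer division) before reaching 1: floor(log2 n) for n >= 1. */
    static int halvings(long n) {
        int steps = 0;
        while (n > 1) {
            n /= 2;
            steps++;
        }
        return steps;
    }

    public static void main(String[] args) {
        // Each row multiplies n by 10, but the step count grows only by about 3.3.
        for (long n = 1; n <= 1_000_000; n *= 10) {
            System.out.printf("n=%,d  halvings=%d  log2(n)=%.2f%n",
                    n, halvings(n), Math.log(n) / Math.log(2));
        }
    }
}
```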

-

That feeling when your newly added calculation module now has 93% code coverage in unit tests, with the client working on a test case for the last bit.

-

Killing people is bad. But, there should be a law to allow killing people who don't write proper unit tests for their code. And also those "team leaders" who approve and merge code without unit tests.

Little backstory. Starts with a question.

What is the most critical part of a quoting tool (tool for resellers to set discounts and margins and create quotations)? The calculations, right?

If one formula is incorrect in one use case, people lose real money. This is the component which the user should be able to trust 100%. Right?

Okay. So this team was supposed to create a calculation engine to support all these calculations. The development was done, and the system was handed over to the QA team. For the last two months, the QA team has been finding bugs and assigning them to the development team, and the development team has been fixing them and assigning them back to the QA team. But then the QA team realizes that something else has been broken, a different calculation.

Upon investigation, today, I found out that the developers did not write a single unit test for the entire engine. There are at least 2000 different test cases involving the formulas and the QA team was doing all of that manually.

Now, our continuous integration tool mandates 75% coverage. What the developer did was write a dummy test case so that the entire code was covered.

I really really really really really think that developers should write unit tests, and proper unit tests, for each of the code lines (or, “logical blocks of code”) they write.

-

Approaching the limit of what TI-BASIC can do without busting out the modern calcs. Doing a simple operation on the whole 95x63 1bpp graph mode screen, say, turning all pixels on or off, takes over a minute. Add any sort of calculation to that...

I'm already using BASIC-callable machine code snippets to scroll the screen one pixel (which finish nigh instantly), and I'm so fucking tired of scrolling effects...

God, adding sound is gonna be a nightmare...

EDIT: for reference, dev machine is a TI-84+ Silver Edition. Not one of the ones with the eZ80 and backlit color screens, the greenish Z80 1bpp screened one. The one that's an 8MHz Zilog that TI decided to make multitask. The one where oscillating the screen at an integer division of full framerate fries it in seconds?

-

Yep....nothing wrong here.

Seems like a good time calculation.

Just Origin being Origin.

And that's when you know it's time to go back to Steam :)

-

I had the idea that part of the problem of NN and ML research is that we all use the same standard loss and nonlinear functions. In theory, most NN architectures are universal approximators. But there's a big gap between symbolic and numeric computation.

But some of our bigger leaps in improvement weren't just from new architectures, but entire new approaches to how data is transformed, and how we calculate loss, for example KL divergence.

And it occurred to me that all we really need is training/test/validation data, and with the right approach we can let the system discover the architecture (been done before), but also the nonlinear and loss functions themselves, and see what pops out the other side as a result.

If a network can instrument its own code, as it were, maybe it'd find new and useful nonlinear functions and losses. Networks wouldn't just specify a conv layer here or a maxpool there, but derive implementations of these all on their own.

More importantly with a little pruning, we could even use successful examples for bootstrapping smaller more efficient algorithms, all within the graph itself, and use genetic algorithms to mix and match nodes at training time to discover what works or doesn't, or do training, testing, and validation in batches, to anneal a network in the correct direction.

By generating variations of successful nodes and graphs, and using substitution, we can use comparison to minimize error (for some measure of error over accuracy and precision), and select the best graph variations, without strictly having to do much point mutation within any given node, minimizing deleterious effects, sort of like how gene expression leads to unexpected but fitness-improving results for an entire organism, while point-mutations typically cause disease.

It might seem like this wouldn't work out of the gate, just on the basis of intuition, but I think the benefit of working through node substitutions or entire subgraph substitutions is that we can check test/validation loss before training is even complete.

If we train a network to specify a known loss, we can even have that evaluate the networks themselves, and run variations on our network loss node to find better losses during training time, and at some point let nodes refer to these same loss calculation graphs, within themselves, switching between them dynamically..via variation and substitution.

I could even envision probabilistic lists of jump addresses, or mappings of value ranges to jump addresses, or await()-style opcodes on some nodes that, upon being encountered, queue up ticks from upstream nodes whose calculations the await()ed node relies on, to do things like emergent convolution.

I've written all the classes and started on the interpreter itself; just a few things need fleshing out now.

Here's my shitty little partial sketch of the opcodes and ideas.

https://pastebin.com/5yDTaApS

I think I'll teach it to do convolution, color recognition, maybe try mnist, or teach it step by step how to do sequence masking and prediction, dunno yet.

-

I grew up in Russia. We don't use imperial units in any way, shape or form: we're fully metric. Every single person who taught me at school and whatnot was born and raised in Soviet Union that was also fully metric, and science was worshiped. We used to laugh at imperial units.

Given all that, I... don't hate imperial system. Inches, feet, miles, Fahrenheit degrees, gallons, all units based on human proportion. Just think where the word for "feet" came from.

Zero C is meh, nothing in particular. A hundred C boils your blood and kills you instantly. It's useless: it's a "shit ton of heat" on a human scale.

Zero F is chilly, a hundred F is toasty. It's based around human perception. To me, there is no difference between 100 and 90 C, but the difference between 100 and 90 F is more perceptible, and thus more useful to a human being.
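For reference, the standard conversion is C = (F - 32) x 5/9, and a temperature difference converts without the 32 offset. This minimal Java sketch (names are illustrative) shows that a 10 °F step is only about 5.6 °C, which is the finer, human-scale granularity described above. The 90 °F vs 100 °F example works out to roughly 32.2 °C vs 37.8 °C.

```java
class Temperature {
    /** Converts a temperature reading from Fahrenheit to Celsius. */
    static double fahrenheitToCelsius(double f) {
        return (f - 32) * 5 / 9;
    }

    /** Converts a temperature difference: the 32-degree offset cancels out. */
    static double fahrenheitDeltaToCelsius(double deltaF) {
        return deltaF * 5 / 9;
    }

    public static void main(String[] args) {
        System.out.printf("90 F = %.1f C, 100 F = %.1f C%n",
                fahrenheitToCelsius(90), fahrenheitToCelsius(100));
        System.out.printf("a 10 F step is %.2f C%n", fahrenheitDeltaToCelsius(10));
    }
}
```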

Same with every other unit. What's a gallon? A gallon is enough for an average Joe to get drunk, that's what a gallon is.

Where it all falls apart is when you're trying to create something. When you're trying to get some calculation going. When you're making — not consuming — you need your units to add up, e.g. to have the SYSTEM in place. Imperial system is not a system.

Imperial units are perfect for _consuming_ stuff: a gallon of milk, a pint of beer, a (real) footlong sandwich, a pound of meat. Six foot high dude with seven inch dick.

Metric units are indispensable when you're _making_ stuff, at any scale. That's the difference. Imperial units are the tool of consumption, metric system is the tool of creation.

Only the time units seem to be the same for everyone on earth right now. Time itself in its mercilessness gives the same treatment to all entities, doesn't matter if you're a human or a grain of sand.

-

That calculation engineer who knows some programming and thinks he can tell you how to do your job 🙄

-

Trying to explain to a game developer in a community why it's a bad idea to only make client-side patches for equipment to appear correctly..

me: because the server needs to be aware of the changes made to avoid faulty calculations, for instance if the client calculates a fatal but the server disagrees..

dev: but it works...

me: yes, but not optimally.

dev: Working as intended (TM)

me: ... teh fuq?

not sure if he's a bad troll or wut..

-

I'm covering for a colleague who has 2 weeks of vacation. Everything is made with Drupal 7, and it's a backend + frontend chimera with no head and 50 anuses.

So, last Monday I get told I have to show a value based on the formula:

value * (rate1 - rate2) / 2

On Thursday, every calculation on that page is suddenly wrong and I get blamed for it. Turns out, now it has to be:

value * (rate1 - rate2) / rate1 / 2

Today, I get told again the calculations are wrong. "It has to be wrong, the amount changes when rate1 changes!". There'll be a meeting later today to discuss such behaviour.

All these communications happened via e-mail, so I'm quite sure it's not my fault... But, SERIOUSLY! Do they think programmers' time is worthless? Now I'll have to waste at least 1 hour in a useless meeting because they cba to THINK before giving out specs?!

Goddammit. Nice Monday.

-

You know what's hard...

Fixing a bug which occurred in production without having any logs because you log that useful info at debug level. 😧

Now take pen and paper, do the calculations yourself and speculate about what would have happened in production.

-

So I recently finished a rewrite of a website that processes donations for nonprofits. Once it was complete, I would migrate all the data from the old system to the new system. This involved iterating through every transaction in the database and making a cURL request to the new system's API. A rough calculation yielded 16 hours of migration time.

The first hour or two of the migration (while it was creating users) was fine, no issues. But once it got to the transactions, the API server would start using more and more RAM. Eventually (after about 30 minutes), it would start hitting OOMs and such. For a while, I just assumed the issue was a lack of RAM, so I upgraded the server to 16 GB of RAM.

Running the script again, it would approach the 7 GiB mark and max out all 8 CPUs. At this point, I assumed there was a memory leak somewhere and the garbage collector was doing its best to free up anything it could find. I scanned my code time and time again, but there was no place where I was storing strong references to anything!

At this point, I sort of gave up. Every 30 minutes, I would restart the server to fix the RAM and CPU issue, and all was fine. But then there was this one time when I tried to kill it and got the error: "fork failed: resource temporarily unavailable". Up until this point, I believed this was simply a lack of memory... but none of my swap was in use! And I had 4 GiB of cached stuff!

Now this made me really confused. So I did a quick search on the Internet, and apparently this can be caused by many things, such as a lack of file descriptors or even too many threads. So I did some digging, and apparently my app was using over 31 thousand threads!!!!! WTF!

I did some more digging, and as it turns out, I never called close() on my network objects, leaving ~30 new "worker" threads behind per iteration of the migration script. Thanks Java, if only finalize() were utilized properly.

-

So I had a Skype interview today. They asked me to write a very basic calculation function... As I was looking at the question, I was like, that's too easy... so I started coding and it just didn't want to work... As soon as the Skype meeting was over and they gave me the usual "we'll get back to you"... I looked at my code again, and in less than a minute I wrote new code that solved it... 😓😭

-

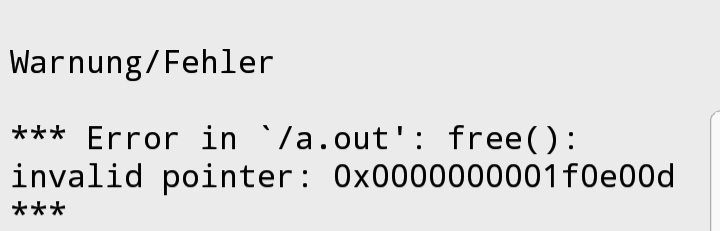

Some compilers give an error message when a type cast is forgotten, which shows that explicit casting is good style. It also helps you avoid careless mistakes that, in the worst case, can crash the program. With some types a cast is necessary; with other types, however, the compiler does the conversion automatically.

In short: type casting is used to prevent mistakes.

An example of such an error would be:

```c
#include <stdio.h>
#include <stdlib.h>

int main ()
{
    /* malloc returns void *, so the +1 here is arithmetic on a void pointer */
    int * ptr = malloc (10*sizeof (int))+1;
    free(ptr-1);
    return 0;
}
```

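Here, the intent is to point at the second element of the allocated memory. However, this does not work, since pointer arithmetic (+, -) is not defined for a void pointer in standard C. (GCC accepts it as an extension but treats sizeof(void) as 1, so the pointer moves by one byte instead of one int, and free(ptr-1) then receives an address that malloc never returned.)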

The improved example would be:

```c
int * ptr = ((int *) malloc (10*sizeof (int)))+1;
```

Here, the cast is applied first, which turns the void pointer into an int pointer, so pointer arithmetic can be applied. Note: if the SoloLearn C compiler displays "no output" instead of an error, try another compiler.

-

Sometimes it feels like MS Office is just made to piss off common people when usage gets near its limits. Why does recalculating an Excel file with 20 tabs take 20 minutes..!? And why can't I do anything with Excel at all, in any sheet, for those 20 minutes? And why can't I cancel the recalculation for a fkin minute..? If I press Escape, the calculation stops and immediately resumes 3 seconds later.. And for the love of god.. I will never understand why there is no global setting to turn off auto recalculation, and when I find something similar, it resets on restart.. WTF...

-

Fucking hell my insights are late ones...

So I am working on a fluid dynamics simulation. I went home, fired up the laptop and started the calculations. This is how the events went:

9 PM: starting the calculation

10 PM: checking on the graphs to see whether everything will be alright if I leave it running. Then went to sleep.

2 AM: waking up in shock, realizing that I forgot to turn on autosave after every time step. Then reassured myself that this is only a test and I won't need the previous results anyway.

5 AM: waking up, everything seems to be fine. I paused the calculation, hibernated the laptop and went to work.

6:40 AM: on my way to the front door, a stray thought struck me... What if it lost contact with the license server while entering hibernation? Bah, never mind... It will establish a new connection when I switch it back on.

6:45 AM: switching on the laptop. Two error messages greet me:

1. Lost contact with license server.

2. Abnormal exit.

Looking at the tray, the paused simulation is gone. Since I didn't enable autosave, I have to start all over again. Well, lesson learned, I guess. Too bad it cost 8 hours of CPU time.

-

Stop calling data analysis tools AI... Stop calling it AI when you drop notifications based on an occurrence calculation over the % of new events!

-

My psychopathic college gave me a task: based on the given data, calculate the probability that we will die from cancer. And the worst part is that this calculation is accurate.

-

2 days ago I started solving the problems on https://projecteuler.net/, recommended to me by @AlmondSauce, and I already regret knowing Python: a relatively simple(-ish) calculation took roughly 4 hours to compute.

-

By simply making the bias random on the second input of a two-bit binary input during the activation calculation, it's possible to train a neural net to calculate the XOR function in one layer.

I know for a fact. I just did it.

-

In an e-commerce project, my client asked for the tax calculation to use a static value, some x, all the time.

Me: As promised your site is live and please check it.

Client: Checks everything. I want this tax to be dynamic.

Me: That was not mentioned earlier. Now I need to redo the design which takes much time.

Client: You just need to change the addition at the end, so what's with the design change?

Me: (I already killed him in my mind)

True story 🍻

-

!rant

I finally published my first open source project: a package for calculating the geohash of a geolocation in Pharo Smalltalk.

I know that most users don't know Smalltalk, but it's the best OOP language you can code with. And geohash is such a great algorithm. Lovely combination.

-

Little calculation:

You probably have around 20 days of holiday per year.

After 60, everything is more or less a gift, so I wouldn't count on it.

Let's say you are going to work 30 years. That is 600 days to do whatever you want.

It's less than 2 years.
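Written out, assuming 365-day years, the estimate is:

$$20\ \tfrac{\text{days}}{\text{year}} \times 30\ \text{years} = 600\ \text{days} \approx \tfrac{600}{365}\ \text{years} \approx 1.64\ \text{years}$$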

Shit.

Try counting how many days of freedom you have left.

Shit.

Suddenly all the things I have to work on today have lost all priority.

-

I recently met with a client (a UK-focused homewares company sold by the likes of Next etc.) who were meeting with Amazon the next day. Amazon had told them that people search for their name on Amazon every 6 minutes. By my calculation, that is c. 7200 searches a month. There are 8,100 searches monthly on Google globally for their brand name, according to Google Keyword Planner, suggesting that Amazon is close to becoming the major search destination for shopping in the UK (if it isn't already!).
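Assuming a 30-day month, the c. 7200 figure works out as follows:

$$\frac{60\ \text{min/h}}{6\ \text{min}} = 10\ \text{searches/h}, \quad 10 \times 24 = 240\ \text{searches/day}, \quad 240 \times 30 = 7200\ \text{searches/month}$$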

-

What would you say to an employer offering a job that starts at only a percentage of the top salary you'll earn once the project is finished? Let's say they offered to pay you $40k to work full time building a highly complex website with multiple API integrations and sophisticated estimate calculation workflows, plus you'd be doing marketing and copywriting, with a goal of achieving 300 signups a day. In the middle of the project they boost the pay to, say, a $60k salary rate. At the 12-month mark, which is the anticipated final "launch" date (and onward), you get $100k/year and you're the only person paid to do All The Things.

Oh, and also, the previous person they hired to do it failed to deliver and was let go.

Would you turn that down because earning so little at first, on the speculation that the venture will be a success and that you'll eventually get to the $100k level, plus their failure with the previous person, is too much of a gamble? Asking for a friend. ;)

-

>making bruteforce MD5 collision engine in Python 2 (requires MD5 and size of original data, partial-file bruteforce coming soon)

>actually going well, in the ballpark of 8500 urandom-filled tries/sec for 10 bytes (because urandom may find it faster than a zero-to-FF fill due to in-practice files not having many 00 bytes)

>never resolves

>SOMEHOW manages to cut off the first 2 chars of all generated MD5 hashes

>fuck, fixed

>implemented tries/sec counter at either successful collision or KeyboardInterrupt

>implemented "wasted roll" (duplicate urandom rolls) counter at either collision success or KeyboardInterrupt

>...wait

>wasted roll counter is always at either 0% or 99%

>spend 2 hours fucking up a simple percentage calculation

>finally fixed

>implement pre-bruteforce calculation of maximum try count assuming 5% wasted rolls (after a couple hours of work for one equation because factorials)

>takes longer than the bruteforce itself for 10 bytes

this has been a rollercoaster but damn it's looking decent so far. Next is trying to further speed things up using Cython! (owait no, MicroPeni$ paywalled me from Visual Studio fucking 2010)

-

Started learning Reinforcement Learning from level 0: the Atari Pong game. Stopped and thought a bit about the gradient calculation part of the blog.... hmm, I guess it's been almost a year since my basic Machine Learning course. The good thing is that old memories eventually came back and everything started to make sense again.

Wish me luck...

Following this blog:

https://karpathy.github.io/2016/05/...

-

Some programmer or QA person somewhere in the world is having a moment of great reflection on the subject of thoroughly testing their code. If not that, then on the subject of a super crappy manager who knew better and pushed to production anyway.

“Then in September this year, nearly three years later, he got a letter from Wells Fargo. "Dear Jose Aguilar," it read, "We made a mistake… we're sorry." It said the decision on his loan modification was based "on a faulty calculation" and his loan "should have been" approved.

"It's just like, 'Are you serious? Are you kidding me?' Like they destroyed my kids' life and my life, and now you want me to – 'We're sorry?'"

https://cbsnews.com/news/...

-

On https://reactjs.org/docs/... it is stated that useEffect runs after rendering is done.

However... if you put an expensive calculation or operation into useEffect, e.g. "add +1 to x billion times", it gets stuck after updating the data but before the re-render is done.

This leads to an inconsistency between the DOM and the state, which I believe is a foundational point of React. Moreover, the statement that "useEffect runs after render" is false.

See also: https://stackoverflow.com/questions...

The solution is to add a timeout to that expensive operation, e.g. 50 ms, so the re-render can finish first.

My belief in React took some shrapnel today. Argh :D Guys, how can this be? It seems that useEffect is not being run after re-render.

-

I have one question to everyone:

I am basically a full stack developer who works with cloud technologies for platform development. For the past 5-6 months I have been working on a product which uses machine learning algorithms to generate metadata from video.

One of the algorithms uses TensorFlow to predict the locale from an image. It takes an image of ~500 KB and takes around 15 seconds to predict the 5 possible locales from a pre-trained model. Now when I send more than 100 requests to the code concurrently, it stops working and TensorFlow throws an error. I am using a 32-core vCPU machine with 120 GB of RAM. When I ask the decision scientists on my team, they say that the processing load is high: a lot of calculation is happening behind the scenes, and it requires a GPU.

As far as I understand, GPUs make sense for training, but for prediction or testing I do not think we need such heavy infra. Please help me understand if I am wrong.

PS: all the decision scientists on the team are basically dumb fucks, and they always have one answer: use a GPU.

-

Welp, how much longer until someone builds some magic to crack any modern encryption in the blink of an eye?

...

tl;dr Google claimed it has managed to cut calculation time to 200 seconds from what it says would take a traditional computer 10,000 years to complete.

https://nydailynews.com/news/...

-