Search - "memory used"

Interviewer: Welcome, Mr X. Thanks for dropping by. We like to keep our interviews informal. And even though I have all the power here, and you are nothing but a cretin, let’s pretend we are going to have fun here.

Mr X: Sure, man, whatever.

I: Let’s start with the technical stuff, shall we? Do you know what a linked list is?

X: (Tells what it is).

I: Great. Can you tell me where linked lists are used?

X: Sure. In interview questions.

I: What?

X: The only time linked lists come up is in interview questions.

I: That’s not true. They have lots of real world applications. Like, like… (fumbles)

X: Like to implement memory allocation in operating systems. But you don’t sell operating systems, do you?

I: Well… moving on. Do you know what the Big O notation is?

X: Sure. It’s another thing used only in interviews.

I: What?! Not true at all. What if you want to sort a billion records a minute, like Google has to?

X: But you are not Google, are you? You are hiring me to work with 5 year old PHP code, and most of the tasks will be hacking HTML/CSS. Why don’t you ask me something I will actually be doing?

I: (Getting a bit frustrated) Fine. How would you do FooBar in version X of PHP?

X: I would, er, Google that.

I: And how do you call library ABC in PHP?

X: Google?

I: (shocked) OMG. You mean you don’t remember all the 97 million PHP functions, and have to actually Google stuff? What if the Internet goes down?

X: Does it? We’re in the 1st world, aren’t we?

I: Tut, tut. Kids these days. Anyway, looking at your resume, we need at least 7 years of ReactJS. You don’t have that.

X: That’s great, because React came out last year.

I: Excuses, excuses. Let’s ask some lateral thinking questions. How would you go about finding how many piano tuners there are in San Francisco?

X: 37.

I: What?!

X: 37. I Googled it before coming here. Also Googled other puzzle questions. You can fit 7,895,345 balls in a Boeing 747. Manhole covers are round because that is the shape that won’t fall in. You ask the guard what the other guard would say. You then take the fox across the bridge first, and eat the chicken. As for how to move Mount Fuji, you tell it a sad story.

I: Ooooooooookkkkkaaaayyyyyyy. Right, tell me a bit about yourself.

X: Everything is there in the resume.

I: I mean other than that. What sort of a person are you? What are your hobbies?

X: Japanese culture.

I: Interesting. What specifically?

X: Hentai.

I: What’s hentai?

X: It’s a televised art form.

I: Ok. Now, can you give me an example of a time when you were really challenged?

X: Well, just the other day, a few pennies from my pocket fell behind the sofa. Took me an hour to take them out. Boy was it challenging.

I: I meant technical challenge.

X: I once spent 10 hours installing Windows 10 on a Mac.

I: Why did you do that?

X: I had nothing better to do.

I: Why did you decide to apply to us?

X: The voices in my head told me.

I: What?

X: You advertised a job, so I applied.

I: And why do you want to change your job?

X: Money, baby!

I: (shocked)

X: I mean, I am looking for more lateral changes in a fast moving cloud connected social media agile web 2.0 company.

I: Great. That’s the answer we were looking for. What do you feel about constant overtime?

X: I don’t know. What do you feel about overtime pay?

I: What is your biggest weakness?

X: Kryptonite. Also, ice cream.

I: What are your salary expectations?

X: A million dollars a year, three months paid vacation on the beach, stock options, the lot. Failing that, whatever you have.

I: Great. Any questions for me?

X: No.

I: No? You are supposed to ask me a question, to impress me with your knowledge. I’ll ask you one. Where do you see yourself in 5 years?

X: Doing your job, minus the stupid questions.

I: Get out. Don’t call us, we’ll call you.

All Credit to:

http://pythonforengineers.com/the-p...

Being paid to rewrite someone else's bad code is no joke.

I'll give the dev this, the use of gen 1,2,3 Pokemon for variable names and class names is beyond fantastic in terms of memory and childhood nostalgia. It would be even more fantastic if he spelt the names correctly, or used it to make a Pokemon game and NOT A FUCKING ACCOUNTANCY PROGRAM.

There's no correspondence in name according to type, or even number. Dev has just gone batshit, left zero comments, and now somehow Ryhorn is shitting out error codes because of errors existing in Charmeleon's asshole.

The things I do for money...

We had a Commodore 64. My dad used to be an electrical engineer and had programs on it for calculations, but sometimes I was allowed to play games on it.

When my mother passed away (late 80s, I was 7), I closed up completely. I didn't speak, locked myself into my room, skipped school to read in the library. My dad was a lovely caring man, but he was suffering from a mental disease, so he couldn't really handle the situation either.

A few weeks after the funeral, on my birthday, the C64 was set up in my bedroom, with the "Programmer's Reference Guide" on my desk. I stayed up late every night to read it and try the examples, and thought about those programs while in school. I memorized the addresses of the sound and sprite buffers, and learnt how programs were managed in memory and stored on cassette.

I worked on my own games, got lost in the stories I was writing, mostly scifi/fantasy RPGs. I bought 2764 EPROMs and soldered custom cartridges so I could store my finished work safely.

When I was 12 my dad disappeared, was found, and hospitalized with lost memory. I slipped through the cracks of child protection, felt responsible to take care of the house and pay the bills. After a year I got picked up and placed in foster care in a strict Christian family who disallowed the use of computers.

I ran away when I was 13, rented a student apartment using my orphanage checks (about €800/m), got a bunch of new and recycled computers on which I installed Debian, and learnt many new programming languages (C/C++, Haskell, JS, PHP, etc). My apartment mates joked about the 12 CRT monitors in my room, but I loved playing around with experimental networking setups. I tried to keep a low profile and attended high school, often faking my dad's signatures.

After a little over a year I was picked up by child protection again. My dad was living on his own again, partly recovered, and in front of a judge he agreed to be my provisional legal guardian, despite his condition. I was ruled to be legally an adult at the age of 15, and got to keep living in the student flat (a nation-wide foster parent shortage played a role).

OK, so this sounds like a sob story. It isn't. I fondly remember my mom, and my dad is doing pretty well, enjoying his old age together with a nice woman in some communal landhouse place.

I had a bit of a downturn from age 18-22 or so, lots of drugs and partying. Maybe I just needed to do that. I never finished any school (not even high school), but managed to build a relatively good career. My mom was a biochemist and left me a lot of books, and I started out as lab analyst for a pharma company, later went into phytogenetics, then aerospace (QA/NDT), and later back to pure programming again.

Computers helped me through a tough childhood.

They awakened a passion for creative writing, for math, for science as a whole. I'm a bit messed up, a bit of a survivalist, but currently quite happy and content with my life.

I try to keep reminding people around me, especially those who have just become parents, that you might feel like your kids need a perfect childhood, worrying about social development, dragging them to soccer matches and expensive schools...

But the most important part is to just love them, even if (or especially when) life is harsh and imperfect. Show them you love them with small gestures, and give their dreams the chance to flourish using any of the little resources you have available.

Father bought a PC in 1997. Back then very few people had one. I learned to do things like accessing the internet and sending emails, among others. I remember adding a few years to my age on websites so I'd be allowed to sign up at times :P My sisters used to play games on it sometimes. The first few we had were Tomb Raider: The Last Revelation, Tomb Raider Chronicles, American McGee's Alice (which caused us to upgrade the PC xD)... and some others.

I have a memory of this pseudo-3D-looking game where you move in a maze and try answering questions. I want to remember its name, but I cannot :(

We literally have video evidence of me liking the computer as a child, yet my parents either say I'm addicted or deny I ever liked it. Not only that, but in their opinion, continuously limiting my time with the PC was never an obstacle to what I was trying to do. Funny how my parents think I've been at my worst these last few years, when they've hurt me so much in those years that our relationship is guaranteed not to work out. There were doubts in my head before, but now it's cemented and there is no going back. Father, for example, tells me it's too late to do anything with a PC now (and also that I've failed to make use of it: he watches footage of pro players on some TV show and says, „You've been unable to use your hobbies“, as if they had never screamed at me for perceived gaming without caring to check), and that I need to look for a „real“ job.

Sorry. I went to bed at 2:00 in the morning. I feel like a zombie because of ongoing, weirdly insufficient sleep, even though I sleep kinda more than normal. Even taking melatonin for it didn't help at all.

Childhood was where the beatings began. I was about 6/7. Right when I entered school. The first school that I attended was a private one and supposedly for „Wunderkinds“, while in reality I haven't seen a SINGLE teacher or psychologist approve of it, their argument being that children were basically drowned in work that wasn't age-appropriate (I don't mean anything bad. Just that teaching about galaxies and all in first grade isn't the brightest idea). There was always a mountain of homework to do and, unlike in some other countries, we had to do it on a day-to-day basis. We didn't have a week-long deadline. Predictably, I was not keeping up with it the way I could have, had it been a normal amount, so my parents decided I didn't want to study and began their methods of getting me to „study“. I have yet to see a person able to keep up with that school's tempo, no matter the age.

This place was also where I got bullied. I felt I had nowhere to be: At home, the parents' situation, at school, the bully. I never really went outside to play with other children, so I missed that part of childhood.

After the second year of school I was transferred to an advanced German school, called that because they taught German and not English there. I also got to learn a bit of Russian before they removed it from the curriculum. In that period I attended ballet, but for less than a year. And piano, which I remember having attended for quite a long while, some years, if my memory isn't fried. I quit because it had been forced on me. The last piece I ever played fully was Beethoven's Marmotte.

In this school I was once again the outcast of the class. I had some people to interact with. All of those interactions lasted a few years at most. Then, because of a part of my class choosing me as a laughing-stock N2 and another girl as the N1, I found my best friend, who I still have today. She's the only friend I have nearby.

Most of the time I hated myself. Even today I struggle with that sometimes.

After that came university. This is where I got something like a friend circle at last. But it still didn't last. I got in a relationship with one of the guys, but I was just attracted. There was another I didn't dare get close to. Turns out he also had something for me. Then he disappeared from our lives and a year after, I still cannot forget the person. If I want to, I have to deprive myself of my own personality. Not a thing I'm willing to give up. Then I broke up with the guy I was in a relationship with and completely disappeared from the friendship circle. To be honest, I had reasons to. They refused to even try to look for the guy, and they had called him a friend for years. My parents hitting me can still occur even today, but only if I REALLY piss them off.

Now I'm here and oh, my God, I'm officially an aunt now! My sister gave birth to a daughter this morning... She's in Berlin with mother and both she and the child are doing great. I just hope she manages to be a good mother.

https://git.kernel.org/…/ke…/... I'm sure some of you are working on the patches already; if you are, then let's connect, because I am an ardent researcher of the same as of now.

So here it goes:

As soon as the kernel page table isolation (KPTI) bug is out of embargo, WhatsApp and FB will be flooded with overnight kernel "shikhuritee" experts who will share shitty advice non-stop.

1. The bug under embargo is a side-channel attack, which exploits the fact that Intel chips come with speculative execution without proper isolation between user pages and kernel pages. Therefore, with careful scheduling, a timing attack will reveal some information from kernel pages while the code is running in user mode.

In easy terms, if you have a VPS, another person with a VPS on the same physical server may read memory being used by your VPS, which will result in unwanted data leakage. To make matters worse, malicious JS from an innocent-looking webpage might be (might be, because JS does not provide language constructs for such fine-grained control; at least none that I know of as of now) able to read kernel pages, and pwn you real hard, real bad.

2. The bug comes from too much reliance on Tomasulo's algorithm for out-of-order instruction scheduling. It is not yet clear whether the bug can be fixed with a microcode update (and if not, Intel has to fix this in silicon itself). As far as I can dig, there is nothing that hints that this bug is fixable in microcode, which makes matters much worse. Also, according to my understanding, a microcode update will be too trivial to fix this kind of hardware bug.

3. A software-only remedy is possible, and that is being implemented by all major OSs (including our lovely Linux) in kernel space. The patch forces the Translation Lookaside Buffer to flush if a context switch happens during a syscall (this is what I understand as of now). The benchmarks suggest that the slowdown will be somewhere between 5% (best case) and 30% (worst case).

4. Regarding point 3, which syscalls you use doesn't matter much. The only thing that matters is how many times syscalls are called. For example, if you are using read() or write() on 8MB buffers, you won't have too much slowdown; but if you are calling the same syscalls once per byte, a heavy performance penalty is guaranteed. All processes which are I/O heavy are going to suffer (hosting and databases are two common examples).

5. The patch can be disabled in Linux by passing an argument to the kernel during boot; however, it is not advised for pretty much obvious reasons.

6. For gamers: this is not going to affect games (because those are not I/O heavy)
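Point 4 can be made concrete by counting calls. The sketch below is only an illustration: it does not measure the KPTI slowdown itself, it just shows how many read() calls the same amount of data costs with a large buffer versus a one-byte buffer. The helper names `count_read_syscalls` and `demo`, and the 64 KiB file size, are made up for this example.

```python
import os
import tempfile

def count_read_syscalls(path, chunk_size):
    """Read a whole file with os.read() and return (bytes_read, calls_made)."""
    fd = os.open(path, os.O_RDONLY)
    total = 0
    calls = 0
    try:
        while True:
            chunk = os.read(fd, chunk_size)  # each call is one trip into the kernel
            calls += 1
            if not chunk:  # an empty result means end of file
                break
            total += len(chunk)
    finally:
        os.close(fd)
    return total, calls

def demo(size=64 * 1024):
    """Compare one big read against byte-by-byte reads of the same file."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"x" * size)
        path = tmp.name
    try:
        big = count_read_syscalls(path, size)  # buffer as large as the file
        tiny = count_read_syscalls(path, 1)    # one byte per call
    finally:
        os.unlink(path)
    return big, tiny

if __name__ == "__main__":
    big, tiny = demo()
    print("large buffer:", big)
    print("one byte at a time:", tiny)
```

Both runs move the same number of bytes; only the number of kernel entries differs, and that count is what the per-syscall overhead gets multiplied by.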

Meltdown: "Meltdown", targeted at desktop chips, can read kernel memory from the L1D cache. Only Intel is affected by this variant. Works only on Intel.

Spectre: Spectre is a hardware vulnerability in implementations of branch prediction that affects modern microprocessors with speculative execution, by allowing malicious processes access to the contents of other programs' mapped memory. Works on all chips including Intel/ARM/AMD.

For updates refer to the kernel tree: https://git.kernel.org/…/ke…/...

For further details and more chit-chat refer to: https://lwn.net/SubscriberLink/...

~Cheers~

(Originally written by Adhokshaj Mishra, edited by me.)

Someday my toaster is going to have an IP address. A bad automatic firmware update will most likely cause it to get stuck on the bagel setting until I plug a USB key in and reflash the memory.

Grandma's refrigerator will probably get viruses, lock itself and freeze all the food inside, demanding bitcoin before defrosting.

My blender will probably be used in a massive DDoS attack because Ninja's master MAC address list got leaked and the hidden control panel login is admin/admin.

Ovens will burn houses down when people call in to have them preheat on their way home from work.

Correlations between the number of times the lights are turned on and how many times the toilet is flushed will yield recommendations to run the dishwasher on Thursdays because it's simply more energy efficient.

My dog will tweet when he's hungry and my smart watch will recommend diet dog food in real-time because he's really been eating too much lately--"Do you want to set up a recurring order on Amazon Fresh?"

Sometimes living in a cave sounds nice...

I finally built a new PC with 8GB memory, i7 4790K and SSD for OS.

My old system was a Core 2 Duo with 2GB memory. Android Studio used to take 20 mins for a gradle sync and another 20 mins for signing apks. "Live preview" and "emulator" were things of the future for me. Never used them.

But now things have changed. This thing boots up in less than 5 sec and a studio gradle sync takes less than 30 sec. I'm so happy right now! It's like a dream come true! *cries in happiness*

Apple has a real problem.

Their hardware has always been overpriced, but at least before it had defenders pointing out that it was at least capable and well made.

I know, I used to be one of them.

Past tense.

They have jumped the shark.

They now make pretentious hipster crap that is massively overpriced and doesn't have the basic features (like hardware ports) to enable you to do your job.

I mean, who needs an ESC key? What is wrong with learning to type CTRL-[ instead? Muscle memory? What's that?

They have gone from "It just works" to "It just doesn't work" in no time at all.

And it is developers who are most pissed off. A tiny demographic who won't be visible on the financial bottom line until their newly absent software suddenly makes itself known two, three years down the line.

By which time it is too late to do anything.

But hey! Look how thin (and thermally throttled) my new laptop is!

I have been a mobile developer working with Android for about 6 years now. In that time, I have endured countless annoyances in the Android development space. I will endure them no more.

My complaints are:

1. Ridiculous build times. In what universe is it acceptable for us to wait 30 seconds for a build to complete? Yes, I've done all the optimisations mentioned on this page and then some. Don't even mention hot reload, as it doesn't work fast enough or just does not work at all. Also, buying better hardware should not be a requirement to build a simple Android app. Xcode builds in 2 seconds on an 8GB MacBook Air. A MacBook Air!

2. IDE. Android Studio is a memory hog even if you throw 32GB of RAM at it. The visual editors are janky as hell. If you use Eclipse, you may as well just chop off your fingers right now because you will have no use for them after you try and build an app afresh. I mean, just look at some of the posts in this subreddit where the common response is to invalidate caches and restart. That should only be used as a last resort, but it's thrown about as if it solves everything. Truth be told, it's Gradle's fault. Gradle is so annoying I've dedicated the next point to it.

3. Gradle. I am convinced that Gradle causes 50% of an Android developer's pain. From the build times to the integration into various IDEs to its insane package management system. Why do I need to manually exclude dependencies from other dependencies? The build tool should just handle it for me. C'mon, it's 2019. Gradle is so bad that it requires approx 54GB of RAM to work out that I have removed a dependency from the list of dependencies. Also, I cannot work out what properties I need to put in what block.

4. API. The Android API is over-bloated and hellish. How do I schedule a recurring notification? Oh, use an AlarmManager. Yes, you heard right, an AlarmManager... Not a NotificationManager, because that would be too easy. Also, has anyone ever tried running a long-running task? Or done an asynchronous task? Or dealt with closing/opening a keyboard? Or handling clicks from a RecyclerView? Yes, I know Android Jetpack aims to solve these issues, but over the years I have become so jaded by things that were meant to solve other broken things that there isn't much hope for Jetpack in my mind 😤

5. API 2. A not-insignificant number of Android users are still on Jelly Bean or KitKat! That means we, as developers, have to support some of your shitty API decisions (Fragments, Activities, ListView) from all the way back then!

6. Not reactive enough. Android has recently gained support for data binding, but this kind of stuff should have been there from the very start. Look at React or Flutter to see how easy it is to make shit happen without any effort.

7. Layouts. What the actual hell is going on here. MDPI, XHDPI, XXHDPI, mipmap, drawable. Fuck it, just chuck it all in the drawable folder. Seriously, Android should handle this for me. If I am designing for a larger screen then it should be responsive. I don't want to deal with 50 different layouts spread over 6 different folders.

8. Permission system. Why was this not included from the very start? Rogue apps have abused this and abused your user's privacy and security. Yet you ban us and not them from the Play Store. What's going on? We need answers.

9. In Android, building an app took me 3 months and I had a lot of work left to do but I got so sick of Android dev I dropped it in favour of Flutter. I built the same app in Flutter and it took me around a month and I completed it all.

10. XML.

If you're a new dev, for the love of all that is good in this world, do NOT get into Android development. Start with Flutter or even iOS. On Flutter, build times are insanely fast and the hot reload is consistently under 500ms. It's a breath of fresh air and will save you a lot of headaches AND it builds for iOS flawlessly.

To the people who build Android, advocate it and work on it, sorry to swear, but fuck you! You have created a mess that we have to work with on a day-to-day basis only for us to get banned from the app store! You have sold us a lie that Android development is amazing with all the sweet treat names and conferences that look bubbly and fun. You have allowed it to get so bad that we can't target an API higher than 18 because some Android users are still using devices that support that!

End this misery. End our pain. End our suffering. Throw this abomination away like you do with some of your other projects and migrate your efforts over to Flutter. Please!

#NoToGoogleIO #AndroidSummitBoycott #FlutterDev #ReactNative

When you create a bunch of objects in Java and it crashes because you're used to the memory usage of C's structs.


The stupid stories of how I was able to break my school's network just to get better internet, as well as more ridiculous fun. XD

1st year:

It was my freshman year in college. The internet sucked really, really, really badly! Too many people were clearly using it. I had to find another way to remedy this. Upon some further research through Google I found out that one can in fact turn their computer into a router. Now what’s interesting about this network is that it only works with computers that have downloaded the necessary software the network provides for you. Some weird software that actually looks through your computer and makes sure it’s ok to be added to the network. Unfortunately, routers can’t download and install that software, thus no internet… but a PC that can be changed into a router itself is a different story. I found that I could download the software, let it check the PC, and then turn on my router feature. Voilà, personal fast internet connected directly into the wall. No more sharing a single shitty router!

2nd year:

This was about the year when bitcoin mining was becoming a thing, and everyone was in on it. My shitty computer couldn’t possibly pull off mining for bitcoins. I needed something faster. How I found out that I could use my school’s servers was merely an accident.

I had been installing the software on every possible PC I owned, but alas all my PCs were just not fast enough. I decided to try it on the RDS server. It worked; the command window was pumping out coins! What I came to find out was that the RDS server had 36 cores. This thing was a beast! And it made sense that it could actually pull off mining for bitcoins. A couple of nights later I signed in remotely to the RDS server. I created a macro that would continuously move my mouse around in the Remote Desktop screen to keep my session alive at all times, and then I started my bitcoin mining operation. The following morning I woke up and my session was gone. How sad, I thought. I quickly tried to remote back in to see what I had collected. “Error, could not connect”. Weird… this usually never happened, maybe I did the remoting wrong. I went to my school’s website to do some research on my remoting problem. It was down. In fact, everything was down… I came to find out that I had accidentally shut down the school’s network because of my mining operation. I wasn’t found out, but I haven’t done any mining since then.

3rd year:

As an engineering student I found out that all engineering students get access to the school’s VPN. Cool, it is technically used to get around some wonky issues with remoting into the RDS servers. What I come to find out, after messing around with it frequently, is that I can actually use the VPN against the screwed up security on the network. Remember, how I told you that a program has to be downloaded and then one can be accepted into the network? Well, I was able to bypass all of that, simply by using the school’s VPN against itself… How dense does one have to be to not have patched that one?

4th year:

It was another programming day, and I needed access to my phone’s memory. Using some specially made apps I could easily connect to my phone from my computer and continue my work. But what I found out was that I could in fact travel around in the network. I discovered that I can, in fact, access my phone through the network from anywhere. What resulted was the discovery that the network spans the entirety of the school. I discovered that if I left my phone down in the engineering building and then went north to the biology building, I could still continue to access it. This seems like a very fatal flaw. My idea is to hook up a webcam to a robot, control it remotely from the RDS servers, and have this little robot go to my classes for me.

What crazy shit have you done at your University?

I had to open the desktop app to write this because I could never write a rant this long on the app.

This will be a well-informed rebuttal to the "arrays start at 1 in Lua" complaint. If you have ever said or thought that, I guarantee you will learn a lot from this rant and probably enjoy it quite a bit as well.

Just a tiny bit of background information on me: I have a very intimate understanding of Lua and its C API. I have used this language for years and love it dearly.

[START RANT]

"arrays start at 1 in Lua" is factually incorrect because Lua does not have arrays. From their documentation, section 11.1 ("Arrays"), "We implement arrays in Lua simply by indexing tables with integers."

From chapter 2 of the Lua docs, we know there are only 8 types of data in Lua: nil, boolean, number, string, userdata, function, thread, and table

The only unfamiliar thing here might be userdata. "A userdatum offers a raw memory area with no predefined operations in Lua" (section 26.1). Essentially, it's for the API to interact with Lua scripts. The point is, this isn't a fancy term for array.

The misinformation comes from the table type. Let's first explore, at a low level, what an array is. An array, in programming, is a collection of data items all in a line in memory (The OS may not actually put them in a line, but they act as if they are). In most syntaxes, you access an array element similar to:

array[index]

Let's look at C, so we have some solid reference. "array" would be the name of the array, but what it really does is keep track of the starting location in memory of the array. Memory in computers is addressed by number. In a very basic sense, the first sector of your RAM is memory location (referred to as an address) 0. "array" would be, for example, address 543745. This is where your data starts. Arrays can only be made up of one type; this is so that each element in that array is EXACTLY the same size. So, this is how indexing an array works. If you know where your array starts, and you know how large each element is, you can find the element at index 6 by starting at the start of the array and adding 6 times the size of the data in that array.

Tables are incredibly different. The elements of a table are NOT guaranteed to be in a line in memory; they can be all over the place depending on when you created them (and a lot of other things). Therefore, an array-style index is useless, because you cannot apply the above formula. In the case of a table, you need to perform a lookup: find the entry whose key matches the one you asked for. In Lua, you can do:

a = {1, 5, 9};

a["hello_world"] = "whatever";

a is a table that now holds 4 entries (the 4th has the key "hello_world" and the value "whatever"), but a[4] is nil because even though there are 4 items in the table, it looks for something "named" 4, not the 4th element of the table.
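The same point can be sketched outside Lua. The snippet below is only an analogy in Python, assuming a plain `dict` as a stand-in for a Lua table (they are not the same data structure, and `build_table` is a made-up helper name): the first three values get the keys 1, 2 and 3, and the fourth entry can only be reached through its string key.

```python
def build_table():
    """Mimic `a = {1, 5, 9}; a["hello_world"] = "whatever"` with a dict."""
    # Assumption: auto-assigned integer keys start at 1, as in Lua.
    table = {position: value for position, value in enumerate([1, 5, 9], start=1)}
    table["hello_world"] = "whatever"
    return table

a = build_table()
print(len(a))            # number of entries stored in the dict
print(a.get(4))          # nothing is keyed by 4, so this is None
print(a["hello_world"])  # the fourth entry is found by key, not by position
```

Asking for key 4 fails here for the same reason a[4] is nil in the Lua example: the request is matched against keys, not against positions.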

This is the difference between indexing and lookups. But you may say,

"Algo! If I do this:

a = {"first", "second", "third"};

print(a[1]);

...then "first" appears in my console!"

Yes, that's correct, in terms of computer science. Lua, because it is a nice language, makes keys in tables optional by automatically giving them an integer value key. This starts at 1. Why? Let's look at that formula for arrays again:

Given array "arr", size of data type "sz", and index "i", find the desired element ("el"):

el = arr + (sz * i)

This NEEDS to start at 0 and not 1 because otherwise, "sz" would always be added to the start address of the array and the first element would ALWAYS be skipped. But in tables, this is not the case, because tables do not have a defined data type size, and this formula is never used for keyed access. This is why indexing an actual array costs the same no matter its size, while a table access always has to pay for a key lookup.

That felt good to get off my chest. Yes, Lua could start the auto-key at 0, but that might confuse people into thinking tables are arrays... well, I guess there's no avoiding that either way.
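To see the formula do real work, here is a minimal sketch in Python that uses the standard `ctypes` module to get at raw addresses. The variable names `arr`, `sz` and `i` follow the formula above; `element_address` and `read_all` are hypothetical helpers written just for this illustration, and 32-bit integers are an arbitrary choice of element type.

```python
import ctypes

def element_address(arr, sz, i):
    """Apply the formula from the text: el = arr + (sz * i)."""
    return arr + sz * i

def read_all(values):
    """Read every int32 in a ctypes array using raw address arithmetic."""
    arr = ctypes.addressof(values)      # address of the first element
    sz = ctypes.sizeof(ctypes.c_int32)  # every element has the same size
    return [
        ctypes.c_int32.from_address(element_address(arr, sz, i)).value
        for i in range(len(values))
    ]

numbers = (ctypes.c_int32 * 4)(10, 20, 30, 40)
print(read_all(numbers))
```

With i = 0 nothing is added to the start address, so the first element is read from the start of the array itself, which is exactly why the index has to begin at 0 for this formula to work.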

So, some time ago, I was working for a complete puckered anus of a cosmetics company on their ecommerce product. Won't name names, but they're shitty and known for MLM. If you're clever, go you ;)

Anyways, over the course of years they brought in a competent firm to implement their service layer. I'd even worked with them in the past and it was designed to handle a frankly ridiculous-scale load. After they got the 1.0 released, the manager was replaced with some absolutely talentless, chauvinist cuntrag from a phone company that is well known for having 99% indian devs and not being able to heard now. He of course brought in his number two, worked on making life miserable and running everyone on the team off; inside of a year the entire team was ex-said-phone-company.

Watching the decay of this product was a sheer joy. They cratered the database numerous times during peak-load periods, caused $20M in redis-cluster cost overrun, ended up submitting hundreds of erroneous and duplicate orders, and mailed almost $40K worth of product to a random guy in Outer Mongolia who is, we can only hope, now enjoying his new life as an Instagram influencer. They even terminally broke the automatic metadata, and hired THIRTY PEOPLE to sit there and do nothing but edit Swagger. And it was still both wrong and unusable.

Over the course of two years, I ended up rewriting large portions of their infra surrounding the centralized service cancer to do things like, "implement security," as well as cut memory usage and runtimes down by quite literally 100x in the worst cases.

It was during this time I discovered a rather critical flaw. This is the story of what, how and how can you fucking even be that stupid. The issue relates to users and their reports and their ability to order.

I first found this issue looking at some erroneous data for a low value order and went, "There's no fucking way, they're fucking stupid, but this is borderline criminal." It was easy to miss, but someone in a top down reporting chain had submitted an order for someone else in a different org. Shouldn't be possible, but here was that order staring me in the face.

So I set to work seeing if we'd pwned ourselves as an org. I spend a few hours poring over logs from the log service and Dynatrace trying to recreate what happened. I first tested to see if I could get a user, not something that was usually done because auth identity was pervasive. I discover the user ids you request from the API are the same INCREMENTAL int values they used for ids in the database, so naturally I have a full list of users and their title and relative position, as well as reports and descendants in about 10 minutes.

I try the happy path of setting values for random, known payment methods and org structures similar to the impossible order, and submitting as a normal user, no dice. Several more tries and I'm confident this isn't the vector.

Exhausting that option, I look at the protocol for a type of order in the system that allowed higher level people to impersonate people below them and use their own payment info for descendant report orders. I see that all of the data for this transaction is stored in a cookie. Few tests later, I discover the UI has no forgery checks, hashing, etc, and just fucking trusts whatever is present in that cookie.
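For contrast, here is a minimal sketch of the kind of forgery check that cookie was missing, using an HMAC over the cookie value. Everything in it is an assumption for illustration: the secret, the `value|mac` layout and the `impersonate=...` payload are hypothetical and not taken from the system in the story.

```python
import hashlib
import hmac

# Assumption: a secret that only ever lives on the server.
SECRET_KEY = b"hypothetical-server-side-secret"


def sign(value: str) -> str:
    """Return the cookie value with an HMAC-SHA256 tag appended."""
    mac = hmac.new(SECRET_KEY, value.encode(), hashlib.sha256).hexdigest()
    return f"{value}|{mac}"


def verify(cookie: str):
    """Return the original value if the tag matches, otherwise None."""
    value, sep, mac = cookie.rpartition("|")
    if not sep:
        return None
    expected = hmac.new(SECRET_KEY, value.encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many characters matched.
    if hmac.compare_digest(mac.encode(), expected.encode()):
        return value
    return None


good = sign("impersonate=1042")
forged = good.replace("1042", "1", 1)   # client edits the user id

print(verify(good))     # the untouched cookie is accepted
print(verify(forged))   # the edited cookie is rejected
```

Signing only proves the cookie was not edited; the server would still need to check that the signed-in user is actually allowed to impersonate the target.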

An hour of tweaking later, I'm impersonating a director as a bottom rung employee. Score. So I fill a cart with a bunch of test items and proceed to checkout. There, in all its glory are the director's payment options. I select one and am presented with:

"please reenter card number to validate."

Bupkis. Dead end.

OR SO YOU WOULD THINK.

One unimportant detail I noticed during my log investigations that the shit slinging GUI monkeys who butchered the system didn't was, on a failed attempt to submit payment in the DB, the logs were filled with messages like:

"Failed to submit order for [userid] with credit card id [id], number [FULL CREDIT CARD NUMBER]"

One submit click later and the user's credit card number drops into lnav like a gacha prize. I dutifully rerun the checkout and get an email send notification in the logs for successful transfer to fulfillment. Order placed. Some continued experimentation later and the truth is evident:

With an authenticated user of any privilege, you could place any order, as anyone, using anyone's payment methods and have it sent anywhere.

So naturally, I pack the crucifixion-worthy body of evidence up and walk it into the IT director's office. I show him the defect, and he turns sheet fucking white. He knows there's no recovering from it, and there's no way his shitstick service team can handle fixing it. Somewhere in his tiny little grinchly manager's heart he knew they'd caused it, and he was to blame for being a shit captain to the SS Failboat. He replies quietly, "You will never speak of this to anyone, fix this discreetly." Straight up hitler's bunker meme rage.

-

Woohoo! 32k achieved!!! Finally I can post some new rant without risking some sudden overshoot 😁

So putting celebrations aside for a minute, a while ago I've noticed a tingle when I stroke my finger across metal areas of my tablet, or the sides of my phone (which probably has metal near it too) while it's charging. And it's been bugging me ever since.

Now, some things to note are that it only happens when my feet are touching the ground through slippers, and that the frequency is so low that I can actually feel the tingle when I slide my finger across the material. This to me at least seems like electricity flows through me into ground, and touching the ground directly provides a path so easy for the electrons to run away that I don't feel it at all. But if I lift my feet off the ground entirely, I just get charged up and after that, nothing else happens.

So those are my ideas. The answers on the subject on the other hand.. absolute cancer. Unsurprisingly, most of them came from Apple users. Here's some of them.

https://discussions.apple.com/threa...

- I've not noticed it, but if you're concerned bring the phone to Apple for evaluation.

- Me too facing same problem.. did u visit apple care?

And one good answer at least...

- google emf sensitivity, its real. You are right, there is a small current flowing through your body, try to limit your usage. The problem with this issue is those who aren't affected (lucky ones for now) will tell you these products are 100% safe. To a degree they are, i used my ipod touch for about 2 years straight with virtually no symptoms. then the tingling started and it gets worse. You will get more sensitive to progressively less powerful things. I dont want to scare you but just limit your usage like i didnt do 🙂

Overall that discussion was pretty good actually, aside from "bring it to the Genius Bar, they'll know for sure and not just sell you another unit". But then there's Reddit.

https://reddit.com/r/iphone/...

- Ok, real reason is probably that the extension cord and/or outlet is probably not grounded correctly. Either that or you are using a cheap knockoff charger.

Either use a surge protector and/or use the authentic Apple Charger.

- It's not the volts that hurt you, it's the amps

- I think you are in deep love with your phone. That tingling sensation is usually referred to as "love" in human language.

- Do less acid, I would advise.

Okay, so that's the real cancer. Grounding issue sounds reasonable despite it being wrong. Grounding is actually not needed when your charging appliance doesn't have any exposed metal parts. And isolation from high voltage to low voltage side actually happens through things like routing slots into the PCB, creating spark gaps, and using galvanic isolation through things like optocouplers. As for a surge protector? I'm using them to protect my PC and my servers, but the only purpose they serve is to protect from.. you guessed it.. voltage surges, like lightning bolts hitting the grid. They don't do shit for grounding or reducing this tingle! What a fucking tool.

It's not the volts that kill, it's the amps.. yeah I'm sure that the debunking of that is easy to find. Not gonna explain that here. And the rest of it.. yeah it's just fucking cancer.

Now what's the real issue with this tingle? It's actually a Class-Y rated (i.e. kV rated) capacitor that's on the transformer of any switch-mode power supply, including phone chargers. If memory serves me right, it helps with decoupling the switching noise and so on. But as it's connected to the primary side of the transformer, if the cap is sufficiently large and you are sufficiently sensitive, it can actually cause that tingle by passing a fraction of the mains electricity into your body. It's totally safe though, as the power that these caps pass is very small. But to some, it's noticeable.

Hope you found this interesting! And thanks a lot for bringing me to 2^15. I really appreciate it ♥️

-

I like memory hungry desktop applications.

I do not like sluggish desktop applications.

Allow me to explain (although, this may already be obvious to quite a few of you)

Memory usage is stigmatized quite a lot today, and for good reason. Not only is it an indication of poor optimization, but not too many years ago, memory was a much more scarce resource.

And something that started as a joke in that era is true in this era: free memory is wasted memory. You may argue, correctly, that free memory is not wasted; it is reserved for future potential tasks. However, if you have 16GB of free memory and don't have any plans to begin rendering a 3D animation anytime soon, that memory is wasted.

Linux understands this. Linux actually has three states for memory to be in: used, free, and available. Used and free memory are the usual. However, Linux automatically caches files that you use and places them in RAM as "available" memory. Available memory can be used at any time by programs, simply dumping out whatever was previously occupying the memory.
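A rough sketch of how to see that distinction for yourself. On Linux the kernel reports these figures in `/proc/meminfo` (the `MemTotal`, `MemFree` and `MemAvailable` fields are real); the sample text below uses made-up numbers so the snippet runs anywhere, and `parse_meminfo` is just a hypothetical helper.

```python
# Made-up sample in the /proc/meminfo format; real values will differ.
SAMPLE = """\
MemTotal:       16384000 kB
MemFree:         1024000 kB
MemAvailable:   12288000 kB
Buffers:          512000 kB
Cached:         10240000 kB
"""


def parse_meminfo(text: str) -> dict:
    """Map each field name to its value in kB."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info


info = parse_meminfo(SAMPLE)

# Memory that is in use as cache right now but can be handed to programs on demand.
reclaimable_kb = info["MemAvailable"] - info["MemFree"]
print(f"free: {info['MemFree']} kB, available: {info['MemAvailable']} kB")
print(f"roughly {reclaimable_kb} kB is doing useful caching work meanwhile")
```

On a real machine, replacing `SAMPLE` with `open("/proc/meminfo").read()` gives the live numbers.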

And as you well know, RAM is much faster than even an SSD. Programs which are memory heavy COULD (< important) be holding things in memory rather than having them sit on the HDD, waiting to be slowly retrieved. I'd much rather a web browser take up 4 GB of RAM than sit around waiting for it to read the cached image off my hard drive.

Now, allow me to reiterate: unoptimized programs still piss me off. There's no need for that electron-based webcam image capture app to take three gigs of memory upon launch. But I love it when programs use the hardware I spent money on to run smoother.

Don't hate a program simply because it's at the top of task manager.

-

This rant is particularly directed at web designers, front-end developers. If you match that, please do take a few minutes to read it, and read it once again.

Web 2.0. It's something that I hate. Particularly because the directive amongst webdesigners seems to be "client has plenty of resources anyway, and if they don't, they'll buy more anyway". I'd like to debunk that with an analogy that I've been thinking about for a while.

I've got one server in my home, with 8GB of RAM, 4 cores and ~4TB of storage. On it I'm running Proxmox, which is currently using about 4GB of RAM for about a dozen VM's and LXC containers. The VM's take the most RAM by far, while the LXC's are just glorified chroots (which nonetheless I find very intriguing due to their ability to run unprivileged). Average LXC takes just 60MB RAM, the amount for an init, the shell and the service(s) running in this LXC. Just like a chroot, but better.

On that host I expect to be able to run about 20-30 guests at this rate. On 4 cores and 8GB RAM. More extensive migration to LXC will improve this number over time. However, I'd like to go further. I was once able to build a Linux system that was just a kernel and busybox, backed by the musl C library. The thing consumed only 13MB of RAM, and since it ran as a VM, that whole 13MB of RAM consumption was dedicated entirely to the kernel. I could probably optimize it further with modularization, but at the time I didn't due to its experimental nature. On a chroot, the kernel of the host is used, meaning that said setup in a chroot would border on the kB's of RAM consumption. The busybox shell would be its most important RAM consumer, which is negligible.

I don't want to settle with 20-30 VM's. I want to settle with hundreds or even thousands of LXC's on 8GB of RAM, as I've seen first-hand with my own builds that it's possible. That's something that's very important in webdesign. Browsers aren't all that different. More often than not, your website will share its resources with about 50-100 other tabs, because users forget to close their old tabs, are power users, looking things up on Stack Overflow, or whatever. Therefore that 8GB of RAM now reduces itself to about 80MB only. And then you've got modern web browsers which allocate their own process for each tab (at a certain amount, it seems to be limited at about 20-30 processes, but still).. and all of its memory required to render yours is duplicated into your designated 80MB. Let's say that 10MB is available for the website at most. This is a very liberal amount for a webserver to deal with per request, so let's stick with that, although in reality it'd probably be less.

10MB, the available RAM for the website you're trying to show. Of course, the total RAM of the user is comparatively huge, but your own chunk is much smaller than that. Optimization is key. Does your website really need that amount? In third-world countries where the internet bandwidth is still in the order of kB/s, 10MB is *very* liberal. Back in 2014 when I got into technology and webdesign, there was this rule of thumb that 7 seconds is usually when visitors click away. That'd translate into.. let's say, 10kB/s for third-world countries? 7 seconds makes that 70kB of available network bandwidth.
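The back-of-the-envelope numbers above, written out. The inputs are the post's own round figures (8GB treated as 8000MB, 100 tabs, 10kB/s, 7 seconds); the 2000kB page in the last line is an assumed example, not a measurement.

```python
# Per-tab memory share: 8GB of RAM split across about 100 open tabs.
total_ram_mb = 8 * 1000          # the post rounds 8GB to 8000MB
open_tabs = 100
per_tab_mb = total_ram_mb / open_tabs

# Page weight budget: what fits down a slow link before visitors give up.
bandwidth_kb_per_s = 10
patience_s = 7
page_budget_kb = bandwidth_kb_per_s * patience_s


def load_time_s(page_kb: float, kb_per_s: float) -> float:
    """Seconds needed to transfer a page of the given size."""
    return page_kb / kb_per_s


print(per_tab_mb)                # MB of RAM per tab
print(page_budget_kb)            # kB that arrive within the 7 second window
print(load_time_s(2000, bandwidth_kb_per_s))  # an assumed 2000kB page on that link
```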

Web 2.0, taking 30+ seconds to load a web page, even on a broadband connection? Totally ridiculous. Make your website as fast as it can be, after all you're playing along with 50-100 other tabs. The faster, the better. The more lightweight, the better. If at all possible, please pursue this goal and make the Web a better place. Efficiency matters.

-

What an absolute fucking disaster of a day. Strap in, folks; it's time for a bumpy ride!

I got a whole hour of work done today. The first hour of my morning because I went to work a bit early. Then people started complaining about Jenkins jobs failing on that one Jenkins server our team has been wanting to decom for two years but management won't let us force people to move to new servers. It's a single server with over four thousand projects, some of which run massive data processing jobs that last DAYS. The server was originally set up by people who have since quit, of course, and left it behind for my team to adopt with zero documentation.

Anyway, the 500GB disk is 100% full. The memory (all 64GB of it) is fully consumed by stuck jobs. We can't track down large old files to delete because du chokes on the workspace folder with thousands of subfolders with no RAM to spare. We decide to basically take a hacksaw to it, deleting the workspace for every job not currently in progress. This of course fucked up some really poorly-designed pipelines that relied on workspaces persisting between jobs, so we had to deal with complaints about that as well.

So we get the Jenkins server up and running again just in time for AWS to have a major incident affecting EC2 instance provisioning in our primary region. People keep bugging me to fix it, I keep telling them that it's Amazon's problem to solve, they wait a few minutes and ask me to fix it again. Emails flying back and forth until that was done.

Lunch time already. But the fun isn't over yet!

I get back to my desk to find out that new hires or people who got new Mac laptops recently can't even install our toolchain, because management has started handing out M1 Macs without telling us and all our tools are compiled solely for x86_64. That took some troubleshooting to even figure out what the problem was because the only error people got from homebrew was that the formula was empty when it clearly wasn't.

After figuring out that problem (but not fully solving it yet), one team starts complaining to us about a GitHub problem because we manage the GitHub org. Except it's not a GitHub problem and I already knew this because they are a Problem Team that uses some technical authoring software with Git integration but they only have even the barest understanding of what Git actually does. Turns out it's a Git problem. An update for Git was pushed out recently that patches a big bad vulnerability and the way it was patched causes problems because they're using Git wrong (multiple users accessing the same local repo on a Samba share). It's a huge vulnerability so my entire conversation with them went sort of like:

"Please don't."

"We have to."

"Fine, here's a workaround, this will allow arbitrary code execution by anyone with physical or virtual access to this computer that you have sitting in an unlocked office somewhere."

"How do I run a Git command I don't use Git."

So that dealt with, I start taking a look at our toolchain, trying to figure out if I can easily just cross-compile it to arm64 for the M1 macbooks or if it will be a more involved fix. And I find all kinds of horrendous shit left behind by the people who wrote the tools that, naturally, they left for us to adopt when they quit over a year ago. I'm talking entire functions in a tool used by hundreds of people that were put in as a joke, poorly documented functions I am still trying to puzzle out, and exactly zero comments in the code and abbreviated function names like "gars", "snh", and "jgajawwawstai".

While I'm looking into that, the person from our team who is responsible for incident communication finally gets the AWS EC2 provisioning issue reported to IT Operations, who sent out an alert to affected users that should have gone out hours earlier.

Meanwhile, according to the health dashboard in AWS, the issue had already been resolved three hours before the communication went out and the ticket remains open at this moment, as far as I know.

-

I used to think Electron apps were gonna do great and make it more accessible for companies to produce high quality programs with ease.

Oh boy I was wrong. All it did is enable big companies with the ability to refactor all of their software to run 5 times slower, consume 10 times more memory and kill your battery 20 times faster.

I fucking hate all of this prototype fast optimize later bullshit. Can I get some value for my dollar? How come technology is just being degraded for the sake of "ease of programming"?

You save programming time but sacrifice end user time, cus our time just doesn't fucking matter.

-

I'll use this topic to segue into a related (lonely) story befitting my mood these past weeks.

This entire story is going to sound egotistical, especially this next part, but it's really not. (At least I don't think so?)

As I'm almost entirely self-taught, having another dev giving me good advice would have been nice. I've only known / worked with a few people who were better devs than I, and rarely ever received good advice from them.

One of those better devs was my first computer science teacher. Looking back, he was pretty average, but he held us to high standards and gave good advice. The two that really stuck with me were: 1) "save every time you've done something you don't want to redo," and 2) "printf is your best debugging friend; add it everywhere there's something you want to watch." Probably the best and most helpful advice I've ever received 😊

I've seen other people here posting advice like "never hardcode" or "modularity keeps your code clean" -- I had to discover these pretty simple concepts entirely on my own. School (and later college) were filled with terrible teachers and worse students, and so were almost entirely useless for learning anything new.

The only decent dev I knew had brilliant ideas (genetic algorithms, sandboxing, ...) before they were widely used, but could rarely implement them well because he was generally an idiot. (Idiot savant, I think? Definitely the idiot part.) I couldn't stand him. Completely bypassing a ridiculously long story, I helped him on a project to build his own OS from scratch; we made very impressive progress, even to this day. Custom bootloader, hardware interfacing, memory management, (semi) sandboxed processes, gui, example programs ...; we were in highschool. I'm still surprised and impressed with what we accomplished.

But besides him, almost every other dev I met was mediocre. Even outside of school, I went so many years without having another competent dev to work with. I went through various jobs helping other dev(s) on their projects (or rewriting them), learning new languages/frameworks almost every time: php, pascal, perl, zend, js, vb, rails, node, .... I learned new concepts occasionally (which was wonderful) but overall it was just tedious and never paid well because I was too young to be taken seriously (and female, further exacerbating it). On the bright side, it didn't diminish my love for coding, and I usually spent my evenings playing with projects of my own.

The second dev (and one of the best I've ever met) went by Novo. His approach to a game engine reminded me of General Relativity: Everything was modular, had a rich inheritance tree, and could receive user input at any point along said tree. A user could attach their view/control to any object. (Computer control methods could be attached in this way as well.) UI would obviously change depending on how the user could interact and the number of objects; admins could view/monitor any of these. Almost every object / class of object could talk to almost everything else. It was beautiful. I learned so much from his designs. (Honestly, I don't remember the code at all, and that saddens me.) There were other things, too, but that one amazed me the most.

I haven't met anyone like him ever again.

Anyway, I don't know if I can really answer this week's question. I definitely received some good advice while initially learning, but past that it's all been through discovering things on my own.

It's been lonely. ☹

-

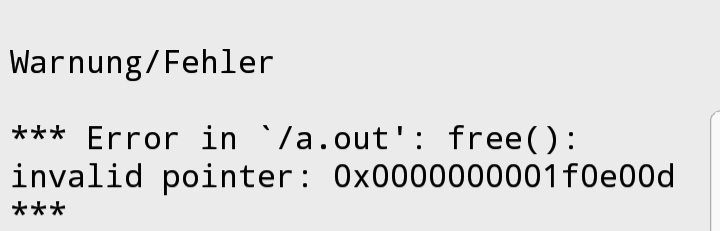

An overflow in C, the program was writing 16 Bytes in an array of length 10 due to some mistake.
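A toy model of that kind of overflow, using a Python `bytearray` as a stand-in for raw memory. The layout is an assumption made for illustration (a 10-byte array with another value stored right after it); real C gives no such guarantee, since writing past the end of an array is undefined behaviour.

```python
# Pretend this is a flat chunk of process memory.
memory = bytearray(32)

ARRAY_START, ARRAY_LEN = 0, 10   # the 10-byte array
OTHER_START = 10                 # assumed neighbour: data some other code relies on

# The neighbour holds a 4-byte little-endian integer.
memory[OTHER_START:OTHER_START + 4] = (1234).to_bytes(4, "little")


def unchecked_write(mem: bytearray, start: int, data: bytes) -> None:
    """Copy bytes with no bounds check against the array length, like a bad memcpy."""
    for offset, byte in enumerate(data):
        mem[start + offset] = byte


before = int.from_bytes(memory[OTHER_START:OTHER_START + 4], "little")
unchecked_write(memory, ARRAY_START, b"\xff" * 16)   # 16 bytes into a 10-byte array
after = int.from_bytes(memory[OTHER_START:OTHER_START + 4], "little")

print(before, after)   # the neighbour changed although nobody wrote to it directly
```

A bounds check of the form `len(data) <= ARRAY_LEN` before the copy would have caught it at the source.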

The problem was this ended up overwriting another place in the memory that was used by another algorithm to perform calculations... So we thought that the algorithm was buggy all along.

-

Perhaps you've seen my earlier post about the bottom half of a lamp post?

I've really stepped up my environment for this one. NOTE: Top half of the lamp post still not modeled.

Here, we see a 16K skybox, a reflective sphere, and a glass sphere, in addition to my original lamp post base.

It used 2.24 GB of memory to render. But only took 47 seconds.

-

It has been bugging the shit out of me lately... the sheer number of shit-tier "programmers" that have been climbing out of the woodwork the last few years.

I'm not trying to come across as elitist or "holier than thou", but it's getting ridiculous and annoying. Even on here, you have people who "only do frontend development" or some other lame ass shit-stain of an excuse.

When I first started learning programming (PHP was my first language), it wasn't because I wanted to be a programmer. I used to be a member (my account is still there, in fact) of "HackThisSite", back when I was about 12 years old. After hanging out long enough, I got the hint that the best hackers are, in essence, programmers.

Want to learn how to do SQL injection? Learn SQL - write a program that uses an SQL database, and ask yourself how you would exploit your own software.

Want to reverse engineer the network protocol of some proprietary software? Learn TCP/IP - write a TCP/IP packet filter.
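The SQL exercise above can be tried in a few lines with Python's built-in `sqlite3` module. This is a minimal sketch against a throwaway in-memory database; the table, the names and the payload are all invented for the example.

```python
import sqlite3

# Throwaway in-memory database with made-up data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)


def lookup_vulnerable(name: str):
    # User input is pasted straight into the SQL text: this is the bug.
    query = f"SELECT secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(name: str):
    # A placeholder keeps the input as data, never as SQL.
    return conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "nobody' OR '1'='1"
leaked = lookup_vulnerable(payload)   # the WHERE clause is now always true
safe = lookup_safe(payload)           # no user is literally named like the payload

print(len(leaked), len(safe))
```

Writing the vulnerable version first and then breaking it yourself is exactly the "exploit your own software" exercise the post describes.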

Back then, a programmer and a hacker were very much one and the same. Nowadays, some kid can download Python, write a "hello, world" program and they're halfway to freelancing or whatever.

It's rare to find a programmer - a REAL programmer, one who knows the systems he develops for better than the back of his hand.

These days, I find people want the instant gratification that these simpler languages provide. You don't need to understand how virtual memory works, hell many people don't even really understand C/C++ pointers - and that's BASIC SHIT right there.

Put another way, would you want to take your car to a brake mechanic that doesn't understand how brakes work? I sure as hell wouldn't.

Watching these "programmers" out there who don't have a fucking clue how the code they write does what it does, is like watching a grown man walk around with a kid's toolbox full of plastic toys calling himself a mechanic. (I like cars, ok?!)

*sigh*

Python, AngularJS, Bootstrap, etc. They're all tools and they have their merits. But god fucking dammit, they're not the ONLY damn tools that matter. Stop making excuses *not* to learn something, Mr."IOnlyDoFrontEnd".

Coding ain't Lego's, fuckers.

-

Life Before the Computer

An application was for employment

A program was a TV show

A cursor used profanity

A keyboard was a piano!

Memory was something that you lost with age

A CD was a bank account

And if you had a 3-inch floppy

You hoped nobody found out!

Compress was something you did to garbage

Not something you did to a file

And if you unzipped anything in public

You'd be in jail for awhile!

Log on was adding wood to a fire

Hard drive was a long trip on the road

A mouse pad was where a mouse lived

And a backup happened to your commode!

Cut - you did with a pocket knife

Paste you did with glue

A web was a spider's home

And a virus was the flu!

I guess I'll stick to my pad and paper

And the memory in my head

I hear nobody's been killed in a computer crash

But when it happens they wish they were dead!

-

I've optimised so many things in my time I can't remember most of them.

Most recently, something had to be the equivalent of `"literal" LIKE column` with a million rows to compare. It would take around a second on average to look up each literal, for a service that needs to be high load and low latency. This isn't an easy case to optimise, many people would consider it impossible.

It took me a couple of hours to reverse engineer the data and write a few hundred line implementation that would look it up in 1ms on average, with the worst possible case being very rare and not too distant from this.

In another case there was a lookup of arbitrary time spans that most people would not bother to cache because the input parameters are too short lived and variable to make a difference. I replaced the 50000+ line application acting as a middle man between the application and database with 500 lines of code that did the look up faster and was able to implement a reasonable caching strategy. This dropped resource consumption by at least a factor of ten. Misses were cheaper and it was able to cache most cases. It also involved modifying the client library in C to stop it unnecessarily wrapping primitives in objects for the high level language, which was causing it to consume excessive amounts of memory when processing huge data streams.

Another system would download a huge data set for every point of sale constantly, then parse and apply it. It had to reflect changes quickly but would download the whole dataset each time, containing hundreds of thousands of rows. I whipped up a system so that a single server (barring redundancy) would download it in a loop, parse it using C which was much faster than the traditional interpreted language, then use a custom data differential format, TCP data streaming protocol, binary serialisation and LZMA compression to pipe it down to points of sale. This protocol also used versioning for catchup and differential combination for additional reduction in size. It went from being 30 seconds to a few minutes behind to being able to keep up to within a second of changes. It was also using so much bandwidth that it would reach the limit on ADSL connections then get throttled. I looked at the traffic stats after and it dropped from dozens of terabytes a month to around a gigabyte or so a month for several hundred machines. Looking at the drop in the graphs, you'd think all the machines had been turned off. It could now happily run over GPRS or 56K.
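A much-simplified sketch of the "send only the difference, compressed" idea. It is not the system described above: it swaps in JSON for the custom binary format, leaves out versioning and streaming, and every name and data set in it is hypothetical. Only the `lzma` and `json` standard library calls are real.

```python
import json
import lzma


def make_delta(old: dict, new: dict) -> dict:
    """Describe how to turn old into new: changed or added keys, plus removed keys."""
    return {
        "set": {k: v for k, v in new.items() if k not in old or old[k] != v},
        "delete": [k for k in old if k not in new],
    }


def apply_delta(old: dict, delta: dict) -> dict:
    """Rebuild the new data set on the receiving side."""
    result = dict(old)
    result.update(delta["set"])
    for key in delta["delete"]:
        result.pop(key, None)
    return result


def encode(obj) -> bytes:
    return lzma.compress(json.dumps(obj, sort_keys=True).encode())


def decode(blob: bytes):
    return json.loads(lzma.decompress(blob))


# Made-up data set: 10000 rows, of which three change between versions.
old = {f"sku{i}": i for i in range(10000)}
new = dict(old)
new["sku42"] = 999
del new["sku7"]
new["sku-new"] = 1

full_blob = encode(new)                      # what "download everything" sends
delta_blob = encode(make_delta(old, new))    # what a differential update sends

print(len(full_blob), len(delta_blob))
assert apply_delta(old, decode(delta_blob)) == new
```

The saving comes almost entirely from the delta; compression then shaves the remainder, which is consistent with the order-of-magnitude drop in traffic the post reports.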

I was working on a project with a lot of data and noticed these huge tables and horrible queries. The tables were all the results of queries. Someone wrote terrible SQL, then to optimise it ran it in the background with all possible variable values and stored the results of joins and aggregates into new tables. On top of those tables they wrote more SQL. I wrote some new queries and query generation that wiped out thousands of lines of code immediately and operated on the original tables, taking things down from 30GB and rapidly climbing to a couple of GB.

Another time a piece of mathematics had to generate all possible permutations and the existing solution was factorial. I worked out how to optimise it to run in n*n, which believe it or not made a world of difference. Went from hardly handling anything to handling anything thrown at it. It was nice trying to get people to "freeze the system now".

I build my own frontend systems (admittedly rushed) that do what angular/react/vue aim for but with higher (maximum) performance, including an in-memory database to back the UI that had layered event-driven indexes and could handle referential integrity (overlay on the database only revealing items with valid integrity) or reordering and repositioning events very rapidly using a custom AVL tree. You could layer indexes over it (data inheritance) that could be partial and dynamic.

So many times have I optimised things on automatic just cleaning up code normally. Hundreds, thousands of optimisations. It's what makes my clock tick.

-

The GashlyCode Tinies

A is for Amy whose malloc was one byte short

B is for Basil who used a quadratic sort

C is for Chuck who checked floats for equality

D is for Desmond who double-freed memory

E is for Ed whose exceptions weren’t handled

F is for Franny whose stack pointers dangled

G is for Glenda whose reads and writes raced

H is for Hans who forgot the base case

I is for Ivan who did not initialize

J is for Jenny who did not know Least Surprise

K is for Kate whose inheritance depth might shock

L is for Larry who never released a lock

M is for Meg who used negatives as unsigned

N is for Ned with behavior left undefined

O is for Olive whose index was off by one

P is for Pat who ignored buffer overrun

Q is for Quentin whose numbers had overflows

R is for Rhoda whose code made the rep exposed

S is for Sam who skipped retesting after wait()

T is for Tom who lacked TCP_NODELAY

U is for Una whose functions were most verbose

V is for Vic who subtracted when floats were close

W is for Winnie who aliased arguments

X is for Xerxes who thought type casts made good sense

Y is for Yorick whose interface was too wide

Z is for Zack in whose code nulls were often spied

- Andrew Myers

-

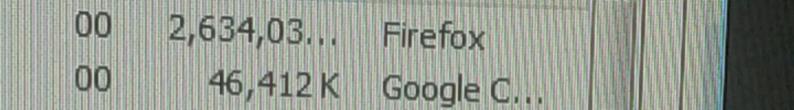

Our team makes software in Java, and for technical reasons we require 1GB of memory for the JVM (with the -Xmx switch).

If you don't have enough free memory, the app just exits without any sign, because the JVM couldn't bite off a big enough chunk of memory.

Many days later and you just stand there without a clue as to why the launcher does nothing.

Then you remember this constraint and start to close every memory heavy app you can think of. (I'm looking at you Chrome) No matter how important those spreadsheets or Illustrator files. Congratulations, you just freed up 4GB of memory, things should work now! WRONG!

But why, you might ask. You see, we are using the 32-bit version of Java because someone in upper management decided that it should run on any machine (even if we only test it on Win 7 and High Sierra) and 32 is smaller than 64 so it must be downwards compatible! We should use it! Yes, in 2019 we use 32-bit Java because some lunatic might want to run our software on a Windows XP 32-bit OS. But why is this so much of a problem?

Well.. the 32-bit version of Java requires CONTIGUOUS FREE SPACE IN MEMORY TO EVEN START... AND WE ARE REQUESTING ONE GIGABYTE!!

So you can shove your swap and closed applications up your ass but I bet you that you won't get 1GB contiguous memory that way!
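A toy model of why plenty of free space does not guarantee one contiguous 1GB block. The numbers are invented: a 2048MB address range with four small regions already occupied at assumed offsets. It models the general fragmentation problem only and makes no claim about how any particular JVM or OS lays things out.

```python
def largest_free_run(space_mb: int, used_regions: list) -> int:
    """Size of the biggest gap between occupied (start_mb, length_mb) regions."""
    largest = 0
    cursor = 0
    for start, length in sorted(used_regions):
        largest = max(largest, start - cursor)
        cursor = max(cursor, start + length)
    return max(largest, space_mb - cursor)


def total_free(space_mb: int, used_regions: list) -> int:
    """Total unoccupied space, assuming the regions do not overlap."""
    return space_mb - sum(length for _, length in used_regions)


SPACE_MB = 2048                                          # assumed address range
USED = [(0, 64), (700, 32), (1400, 32), (2000, 48)]      # hypothetical occupied regions

free_mb = total_free(SPACE_MB, USED)
biggest_gap_mb = largest_free_run(SPACE_MB, USED)

print(free_mb, biggest_gap_mb)
print("1GB heap fits:", biggest_gap_mb >= 1024)
```

In this made-up layout well over 1GB is free in total, yet no single gap is big enough, which is the failure mode being ranted about.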

Now there will be a meeting about this issue, and another related to the issues with the 32-bit JVM, tomorrow. The only problem is that this issue only occurs if you've used up most of your memory and then try to open our software. So upper management will probably deem this issue minor and won't allow us to upgrade to 64-bit... in 20fucking19

-

I haven't ranted for today, but I figured that I'd post a summary.

A public diary of sorts.. devRant is amazing, it even allows me to post the stuff that I'd otherwise put on a piece of paper and probably discard over time. And with keyboard support at that <3

Today has been a productive day for me. Laptop got restored with a "pacman -Syu" over a Bluetooth mobile data tethering from my phone, said phone got upgraded to an unofficial Android 9 (Pie) thanks to a comment from @undef, etc.

I've also made myself a reliable USB extension cord to be able to extend the 20-30cm USB-A male to USB-C male cord that Huawei delivered with my Nexus 6P. The USB-C to USB-C cord that allows for fast charging is unreliable.. ordered some USB-C plugs for that, in order to make some high power wire with that when they arrive.

So that plug I've made.. USB-A male to USB-A female, into which my short USB-C to USB-A wire can plug. It's a 1m wire, with 18AWG wire for its power lines and 28AWG wires for its data lines. The 18AWG power lines can carry up to 10A of current, while the 28AWG lines can carry up to 1A. All wires were cut into 1m pieces. This resulted in a very low impedance path for all of them, my multimeter measured no more than 200 milliohms across them, though I'll have to verify and fine-tune that on my oscilloscope with 4-wire measurement.

So the wire was good. Easy too, I just had to look up the pinout and replicate that on the male part.

That's where the rant part comes in.. in fact I've got quite uncomfortable with sentences that don't include at least one swear word at this point. All hail to devRant for allowing me to put them out there without guilt.. it changed my very mind <3

Microshaft WanBLowS.

I tried plugging my DIY extension cord into it, and then plugged my phone and some USB stick, whose filesystem I'd completely forgotten, into the cord. Windows certainly doesn't support it.. turns out that it was LUKS. More about that later.

Windows returned that it didn't support either of them, due to "malfunctioning at the USB device". So I went ahead and plugged in my phone directly.. works without a problem. Then I went ahead and troubleshot the wire I'd just made with a multimeter, to check for shorts.. none at all.

At that point I suspected that WanBLowS was the issue, so I booted up my (at the time) problematic Arch laptop and did the exact same thing there, testing that USB stick and my phone by plugging them in through the extension wire. Shit just worked like that. The USB stick was a LUKS medium and apparently a clone of the SanDisk rootfs that I was running Arch Linux from on my laptop at the time.. an unfinished migration project (SanDisk is unstable, my other DM sticks are quite stable). The USB stick consumed about 20mA so no big deal for any USB controller. The phone consumed about 500mA (which is standard USB 2.0 so no surprise) and worked fine as well.. although the HP laptop dropped the voltage to ~4.8V like that, unlike 5.1V which is nominal for USB. Still worked without a problem.

So clearly Windows is the problem here, and this provides me one more reason to hate that piece of shit OS. Windows lovers may say that it's an issue with my particular hardware, which maybe it is. I've done the Windows plugging solely through a USB 3.0 hub, which was plugged into a USB 3.0 port on the host. Now USB 3.0 is supposed to be able to carry up to 1A rather than 500mA, so I expect all the components in there to be beefier. I've also tested the hub as part of a review, and it can carry about 1A no problem, although it seems like its supply lines aren't shorted to VCC on the host, like a sensible hub would. Instead I suspect that it's going through the hub's controller.

Regardless, this is clearly a bad design. One of the USB data lines is biased to ~3.3V if memory serves me right, while the other is biased to 300mV. The latter could pose a problem.. but again, the current path was of a very low impedance of 200 milliohms at most. Meanwhile the direct connection that omits the ~200 milliohm extension wire worked just fine. Even 300mV wouldn't degrade significantly over such a resistance. So this is most likely a Windows problem.
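To put a number on "wouldn't degrade significantly", here's a quick Ohm's law sketch using the figures from above: at most 200 milliohms of wire, about 500mA for the phone and about 20mA for the stick. It assumes the 200 milliohms is the whole loop; if that reading was per conductor, double the results to account for the ground return.

```python
def voltage_drop(current_a, resistance_ohm):
    """Ohm's law: voltage lost across a resistance at a given current."""
    return current_a * resistance_ohm


WIRE_RESISTANCE_OHM = 0.2  # worst-case multimeter reading (assumed to be the full loop)
PHONE_CURRENT_A = 0.5      # ~500mA drawn by the phone
STICK_CURRENT_A = 0.02     # ~20mA drawn by the USB stick

phone_drop_v = voltage_drop(PHONE_CURRENT_A, WIRE_RESISTANCE_OHM)
stick_drop_v = voltage_drop(STICK_CURRENT_A, WIRE_RESISTANCE_OHM)

print(f"phone: {phone_drop_v * 1000:.0f} mV, stick: {stick_drop_v * 1000:.0f} mV")
```

So the cord costs on the order of 100mV at full phone load and next to nothing for the stick.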

That aside, the extension cord works fine in Linux. So I've used that as a charging connection while upgrading my Arch laptop (which as you may know had internet issues at the time) over Bluetooth, through a shared BNEP connection (Bluetooth tethering) from my phone. Mobile data since I didn't set up my WiFi in this new Pie ROM yet. Worked fine, fixed my WiFi. Currently it's back in my network as my fully-fledged development host. So that way I'll be able to work again on @Floydian's LinkHub repository. My laptop's the only one that currently holds the private key for signing commits for git$(rm -rf ~/*)@nixmagic.com, hence why my development has been impeded. My tablet doesn't have it. Guess I'll commit somewhere tomorrow.

(looks like my rant is too long, continue in comments)

-

Is obsidian a fucking joke?

Seriously, is it a joke? Why would you ever care so much about indexing literally everything, if the entire thing crashes and/or takes >5min to LITERALLY just open the fucking directory and/or (so help you) if that directory is full of projects/repos or whatever the fuck and the total size of said directory is like >5GB.

WHY THE FUCK WOULD YOU INDEX EVERYTHING? -- "Ohh obsidian's not supposed to be used as a fully fledged IDE, ohh obsidian should just handle MD files and normal sized projects, ohh the plugins and ease-of-use" -- Fuck.

There's no fucking real reason to index everything, BY DEFAULT. You open a directory with Obsidian? Doesn't matter, it's 1 byte, it's 100GB, you get indexed. Deal with it. It will use LITERALLY every resource your computer has. I'm surprised it doesn't go galaxy brain and ping if any other computers/devices are on the network and then attempt to connect and use their hardware (obsidian can be like a node!).

How shit can you be at understanding basic data structures and algorithms, where you just revert to based google-chrome brain and let the FUCKING TEXT EDITOR -- OBSIDIAN IS A FUCKING TEXT EDITOR HOLY SHIT -- hog all conceivable memory.

I swear to <some-deity> if anyone fucking says "Ohhhhhhhh actually, it's not a text editor, it has plugins and features and shit, it does all dis cool stff", OR, "Ohhhhh actually, obsidian indexes things for a very specific/rationale/apt/pragmatic/academic reason" OR "ohhhh, I have 100 iphones, 1000 ipads and a trillion desktop computers that each have 256GB of memory, why you hating on obsidian?" then go kick rocks. The fucking lot of you. Are you fucking kidding me.

-

Chrome, Firefox, and yes even you Opera, Falkon, Midori and Luakit. We need to talk, and all readers should grab a seat and prepare for some reality checks when their favorite web browsers are in this list.

I've tried literally all of them, in search for a lightweight (read: not ridiculously bloated) web browser. None of them fit the bill.

Yes Midori, you get a couple of bonus points for being the most lightweight. Luakit however.. as much as I like vim in my terminal, I do not want it in a graphical application. Not to mention that just like all the others you just use webkit2gtk, and therefore are just as bloated as all the others. Lightweight my ass! But programmable with Lua, woo! Not like Selenium, Chrome headless, ... does that for any browser. And that's it for the unique features as far as I'm concerned. One is slow, single-threaded and lightweight-ish (Midori) and another has vim keybindings in an application that shouldn't (Luakit).

Pretty much all of them use webkit2gtk as their engine, and pretty much all of them launch a separate process for each tab. People say this is more secure, but I have serious doubts about that. You're still running all these processes as the same user, and they all have full access to the X server they run under (this is also a criticism against user separation on a single X session in general). The only thing it protects against is a website crashing the browser, where only that tab and its process would go down. Which.. you know.. should a webpage even be able to do that?

But what annoys me the most is the sheer amount of memory that all of these take. With all due respect all of you browsers, I am not quite prepared to give 8 fucking gigabytes - half the memory in this whole box! - just for a dozen or so tabs. I shouldn't have to move my web browser to another lesser used 16GB box, just to prevent this one from going into fucking swap from a dozen tabs. And before someone has a go at the add-ons, there's 4 installed and that's it. None of them are even close to this complete and utter memory clusterfuck. It's the process separation. Each process consumes half a GB of memory, and there's around a dozen of them in a usual browsing session. THAT is the real problem. And I want to get rid of it.

Browsers are at their pinnacle of fucked up in my opinion, literally to the point where I'm seriously considering elinks. Being a sysadmin, I already live my daily life in terminals anyway. As such I also do have resources. But because of that I also associate every process with its cost to run it, in terms of resources required. Web browsers are easily at the top of the list.

I want to put 8GB into perspective. You can store nearly 2 entire DVD movies in that memory. However media players used to play them (such as SMPlayer) obviously don't do that. They use 60-80MB on average to play the whole movie. They also require far less processing power than YouTube in a web browser does, even when you download that exact same video with youtube-dl (either streamed within the media player or externally). That is what an application should be.
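The numbers above are easy to sanity-check. A back-of-the-envelope sketch, assuming a single-layer DVD at its nominal 4.7GB, decimal units throughout, and the upper end of the 60-80MB media player figure:

```python
DVD_SINGLE_LAYER_GB = 4.7  # nominal single-layer DVD capacity (assumption: single-layer)
BROWSER_GB = 8             # memory the browser session takes
PLAYER_MB = 80             # upper end of the 60-80MB media player figure
PROCESS_GB = 0.5           # per-tab process cost quoted earlier
PROCESS_COUNT = 12         # "around a dozen" processes

dvds_in_browser_memory = BROWSER_GB / DVD_SINGLE_LAYER_GB
browser_vs_player = BROWSER_GB * 1000 / PLAYER_MB
tabs_gb = PROCESS_GB * PROCESS_COUNT

print(f"DVDs that fit in browser memory: {dvds_in_browser_memory:.2f}")
print(f"Browser uses {browser_vs_player:.0f}x the media player's memory")
print(f"Dozen tab processes alone: {tabs_gb:.0f} GB")
```

That works out to about 1.7 DVDs, roughly a hundred media players, and around 6GB from the tab processes before counting anything else.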

Let's talk a bit about these "complicated" websites as well. I hate to break it to you framework web devs, but you're a dime a dozen. The competition is high between web devs for that exact reason. And websites are not complicated. The document itself is plain old HTML, yes even if your framework converts to it in the background. That's the skeleton of your document, where I would draw a parallel with documents in office suites that are more or less written in XML. CSS.. oh yes, markup. Embolden that shit, yes please! And JavaScript.. oh yes, that pile of shit that's been designed in half a day, and has a framework called fucking isEven (which does exactly what it says on the tin, modulo 2 be damned). Fancy some macros in your text editor? Yes, same shit, different pile.

Imagine your text editor being as bloated as a web browser. Imagine it being prone to crashing tabs like a web browser. Imagine it being so ridiculously slow to get anything done in your productivity suite. But it's just the usual with web browsers, isn't it? Maybe Gopher wasn't such a bad idea after all... Oh and give me another update where I have to restart the browser when I commit the heinous act of opening another tab, just because you had to update your fucking CA certs again. Yes please!

-