
I want to pay respects to my favourite teacher by far.

I turned up at university as a pretty arrogant person. This was because I had about 6 years of self-taught programming experience, and the classes started from the absolute basics. I turned up to my first classes and everything was extremely easy. I felt like I wouldn't learn anything for at least a year.

Then, I met one of my lecturers for the first time. He was about 50 to 60 years old and had been programming for his whole career. Everyone knew him to be really strict, and other lecturers told us that some people found it difficult to be his student.

His classes were awesome. He was friendly, but took absolutely no shit, and told everything as it was. He had great stories from his life, which he used to throw out during the more boring computer science topics. He had extremely strict rules for our programming style, and bloody good reasons for all of them. If we didn't follow a clear rule on an assignment, he'd give us 0%. To prove how well this worked, nobody got 0%.

We eventually learned that he was that way because he used to work on real-time systems for the military, where if something didn't work then people could die.

This was exactly what I needed. In around one semester I went from a capable self-taught kid to writing code that was clear, maintainable and fast, without being hacky.

I learned so much in just that small time, and I owe it all to him. So often when I write code now I think back to his rules. Even if I disagree with some, I learned to be strict and consistent.

Sadly, during the break between our first and second year, he passed away due to illness. There were so many lessons still to be learned from him, and there are now no teachers with enough knowledge to continue his best modules, like compiler writing.

He is greatly missed. I've never had greater respect for a teacher than for him.

The ability to convince the compiler that there are no errors.

"Shhhhhh.. trust me, there's such things as a duoble. Now just tell me the build was successful"

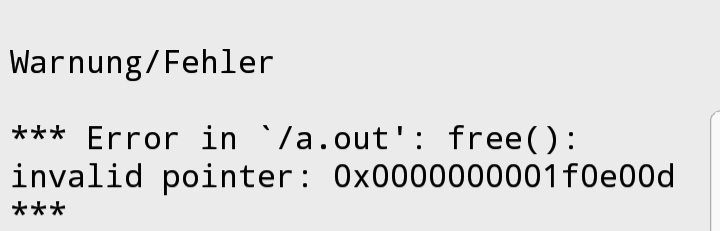

1. Have some issue with my code that spits out a cryptic compiler error.

2. Ask on Stack Overflow, Reddit, etc. for a solution.

3. Get scolded for "not reading the documentation" and "asking questions which could be answered by just Googling". Still no clue what I'm doing wrong, or what the solution would be.

4. Find someone else's vaguely related problem.

5. Post my problematic code as the answer, with an arrogant comment about OP being a retard for not figuring that out for themselves.

6. A dozen angry toxic nerds flock in to tell me how retarded and wrong I am, correcting me... solving my original problem.

7. Evil plan succeeded, my code compiles, and as a bonus I made the internet a worse place in the process.

I think if you tell a bunch of autistic neckbeards that "all coronaviruses are fundamentally incurable", you'd have a vaccine within a week.

When I was in the army I wasn't officially a dev. But one commander needed someone to develop a bunch of stuff and couldn't get a dev officially, so I ended up as his "assistant", which was an awesome job with about 60% time spent on software development.

Except I wasn't an official developer, so I wasn't afforded many of the privileges developers get, like a slightly more powerful machine, a copy of Visual Studio, or an internet connection. In this environment you couldn't even download files and transfer them to your computer without a long process, and I couldn't get development tools past that process anyway.

So I was stuck with whatever dev tools came pre-installed with Windows. Thankfully, I had the brand new Windows XP, so I had the .NET Framework installed, which comes with the command-line compiler csc. I got to work with Notepad and csc. My first order of business was to write an editor that could open multiple files and compile and run my project at the press of F5.

Being a noob at the time, with almost no actual experience and nobody supervising my work, I had a few brilliant ideas. For example, one day I realized I could map each property of an object to a field in a database table, and thus wrote a rudimentary OR/M. My database, I didn't mention, was Access, because that didn't need installation. I connected to it properly via ADO.NET, at least.

The most surprising thing though, in retrospect, is that the stuff I wrote actually worked.
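Here is a minimal sketch of the property-to-column idea. It is written in Java rather than the C# and ADO.NET the post used. It assumes a hypothetical `Person` class whose field names match the column names of a table with the same name. The sketch only builds the SQL text and the values to bind. Executing the statement (for example through JDBC's `PreparedStatement`) is left out.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical entity: each instance field stands for a column in a "Person" table.
class Person {
    String name;
    int age;

    Person(String name, int age) {
        this.name = name;
        this.age = age;
    }
}

// Hypothetical mini OR/M: reflection maps fields to columns.
class TinyOrm {
    // Builds a parameterized INSERT; the table is named after the class.
    static String insertSql(Object entity) {
        List<Field> fields = columns(entity.getClass());
        String names = fields.stream().map(Field::getName).collect(Collectors.joining(", "));
        String marks = fields.stream().map(f -> "?").collect(Collectors.joining(", "));
        return "INSERT INTO " + entity.getClass().getSimpleName()
                + " (" + names + ") VALUES (" + marks + ")";
    }

    // Field values in column order, ready to bind to the "?" placeholders.
    static List<Object> insertValues(Object entity) {
        List<Object> values = new ArrayList<>();
        for (Field f : columns(entity.getClass())) {
            try {
                f.setAccessible(true);
                values.add(f.get(entity));
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
        }
        return values;
    }

    // Instance fields only, sorted by name: reflection does not promise declaration order.
    private static List<Field> columns(Class<?> type) {
        return Arrays.stream(type.getDeclaredFields())
                .filter(f -> !Modifier.isStatic(f.getModifiers()) && !f.isSynthetic())
                .sorted(Comparator.comparing(Field::getName))
                .collect(Collectors.toList());
    }
}
```

Sorting by field name keeps the column order and the value order in sync. A real mapper would more likely read column names from annotations or from the table schema.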

*On a programming support forum*

Guy: My compiler keeps throwing null pointer exception at line 128.

Me: Ok. Can you post your code real quick so I could figure out what is null at line 128?

Guy: No I'm not going to show my code to someone on the internet. What if you want to steal my code?

My mind: "Dude wtf why would I steal someone's code on a support forum?"

Me: *Spend the next 15 minutes explaining that showing the code is necessary so that others can actually help him, and that no one on a support forum is going to steal his code.*

Guy: "You know what I'm more convinced that you want to copy my code. I might as well just try to fix this on my own."

What?

this.title = "gg Microsoft"
this.metadata = {
    rant: true,
    long: true,
    super_long: true,
    has_summary: true
}
// Also:
let microsoft = "dead" // please?

tl;dr: Windows' MAX_PATH is the devil, and it basically does not allow you to copy files with paths that exceed this length. No matter what. Even with official fixes and workarounds.

Long story:

So, I haven't had actual gainful employment in quite a while. I've been earning just enough to get behind on bills and go without all but basic groceries. Because of this, our electronics have been ... in need of upgrading for quite a while. In particular, we've needed new drives. (We've been down a server for two years now because its drive died!)

Anyway, I originally bought my external drive just for backup, but due to the above, I eventually began using it for everyday things. including Steam. over USB. Terrible, right? So, I decided to mount it as an internal drive to lower the read/write times. Finding SATA cables was difficult, the motherboard's SATA plugs are in a terrible spot, and my tiny case (and 2yo) made everything soo much worse. It was a miserable experience, but I finally got it installed.

However! It turns out the Seagate external drives use some custom drive header, or custom driver to access the drive, so Windows couldn't read the bare drive. ffs. So, I took it out again (joy) and put it back in the enclosure, and began copying the files off.

The drive I'm copying it to is smaller, so I enabled compression to allow storing a bit more of the data, and excluded a couple of directories so I could copy those elsewhere. I (barely) managed to fit everything with some pretty tight shuffling.

but. that external drive is connected via USB, remember? and for some reason, even over USB3, I was only getting ~20 MB/s transfer rate, so the process took 20-some hours! In the interim, I worked on some projects, watched Netflix, etc., then locked my computer, and went to bed. (I also made sure to turn my monitors and keyboard light off so it wouldn't be enticing to my 2yo.) Cue dramatic music ~

Come morning, I go to check on the progress... and find that the computer is off! What the hell! I turn it on and check the logs... and found that it lost power around 9:16am. aslkjdfhaslkjashdasfjhasd. My 2yo had apparently been playing with the power strip and its enticing glowing red on/off switch. So. It didn't finish copying.

aslkjdfhaslkjashdasfjhasd x2

Anyway, finding the missing files was easy, but what about any that didn't finish? File sizes don't match, so writing a script to check doesn't work. and using a visual utility like WinDirStat won't work either because of the excluded folders. Friggin' hell.

Also -- and rather the point of this rant:

It turns out that some of the files (70 in total, as I eventually found out) have paths exceeding Windows' MAX_PATH length (260 chars). So I couldn't copy those.
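A walk over the source tree can compare each path against MAX_PATH, which lists the offending files up front instead of discovering them through failed copies. This is a Java sketch under stated assumptions: `LongPathFinder` and `findTooLong` are hypothetical names. The limit applies to whatever path the copy has to open or create. So the sketch checks both the source path and the same path re-rooted under the destination.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class LongPathFinder {
    // MAX_PATH is 260 characters *including* the terminating NUL, so the
    // classic (non-prefixed) Win32 file APIs accept at most 259 visible characters.
    static final int MAX_PATH = 260;

    static boolean exceedsMaxPath(String path) {
        return path.length() >= MAX_PATH;
    }

    // Lists every file or directory under sourceRoot whose own path, or whose
    // path once re-rooted under destRoot (the copy target), is too long.
    static List<Path> findTooLong(Path sourceRoot, Path destRoot) throws IOException {
        try (Stream<Path> walk = Files.walk(sourceRoot)) {
            return walk
                    .filter(p -> exceedsMaxPath(p.toAbsolutePath().toString())
                            || exceedsMaxPath(destRoot.resolve(sourceRoot.relativize(p))
                                    .toAbsolutePath().toString()))
                    .collect(Collectors.toList());
        }
    }
}
```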

After some research, I learned that there's a Microsoft hotfix that patches this specific issue! for my specific version! woo! It's like. totally perfect. So, I installed that, restarted as per its wishes... tried again (via both drag and `copy`)... and Lo! It did not work.

After installing the hotfix. to fix this specific issue. on my specific os. the issue remained. gg Microsoft?

Further research.

I then learned (well, learned more about) the extended-length path prefix `\\?\`, which tells the Unicode Win32 APIs to skip their usual path parsing and send the path straight to the file system, thereby allowing paths of roughly 32k characters. I tried this with the native `copy` command; no luck. I tried this with `robocopy` and Cygwin's `cp`; they likewise failed. I tried it with Cygwin's `rsync`, but it sees `\\?\` as denoting a remote path, and therefore fails.
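Building the extended-length form of a path is plain string work. The Java sketch below assumes an absolute, backslash-separated Win32 path. Windows does not normalize prefixed paths, so forward slashes and `.`/`..` segments are not allowed. `ExtendedPath.toExtendedLength` is a hypothetical helper. UNC shares take the `\\?\UNC\` form instead of the plain prefix.

```java
class ExtendedPath {
    // Turns an absolute Win32 path into its extended-length form:
    //   C:\dir\file          ->  \\?\C:\dir\file
    //   \\server\share\file  ->  \\?\UNC\server\share\file
    static String toExtendedLength(String path) {
        if (path.startsWith("\\\\?\\")) {
            return path; // already prefixed
        }
        if (path.startsWith("\\\\")) {
            return "\\\\?\\UNC\\" + path.substring(2); // UNC share
        }
        if (path.length() >= 3 && Character.isLetter(path.charAt(0))
                && path.charAt(1) == ':' && path.charAt(2) == '\\') {
            return "\\\\?\\" + path; // drive-letter path
        }
        throw new IllegalArgumentException("not an absolute Win32 path: " + path);
    }
}
```

The result is meant for the Unicode Win32 file functions such as `CopyFileW`. As the post shows, whether a given tool accepts it is up to that tool.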

However, `dir \\?\C:\` works just fine?

So, apparently, Microsoft's own workaround for long pathnames doesn't work with its own utilities. unless the paths are shorter than MAX_PATH? gg Microsoft.

At this point, I was sorely tempted to write my own copy utility that calls the internal Windows APIs that support unicode paths. but as I lack a C compiler, and haven't coded in C in like 15 years, I figured I'd try a few last desperate ideas first.

For the hell of it, I tried making an archive of the offending files with WinRAR. Unsurprisingly, it failed to access the files.

... and for completeness' sake -- mostly to say I tried it -- I did the same with 7-Zip. I took one of the offending files and made a 7z archive of it in the destination folder -- and, much to my surprise, it worked perfectly! I could even extract the file! Hell, I could even work with paths >340 characters!

So... I'm going through all of the 70 missing files and copying them. with 7-Zip. because it's the only bloody thing that works. ffs

Third-party utilities work better than Microsoft's official fixes. gg.

...

On a related note, I totally feel like that person from http://xkcd.com/763 right now ;;

I had a mini stroke today. My project ended and I got delegated elsewhere.

"It's going to be fine, it's c++, you will find yourself there"

Suspicious, it's a project everybody was staying out of as hard as they can. But hey, it's cool, how bad can it be? what can go wrong with that?

Reality was brutal: a project that uses Boost C++ as its framework and bjam as its build tool. It builds only with a decent dose of luck, under special circumstances, and with one specific compiler version. No docs; a quarter of the code is in Fortran, just to use an ancient Lisp part, which makes up another quarter. The most senior dev around has no idea how it all works. Also, everything is inside one enormous try/catch block. Because of the reasons.

That's how people end up with severe alcoholism and meth addiction.

Me talking to a recruiter (even though I am not looking for a job)

Me: If I walk into an interview and they ask me to reverse a binary tree for a frontend React or Vue position, or something along those lines, I will end the call and/or walk away from it.

Him: I get similar feelings from other programmers, I don't quite understand why the notion is as common

Me: Because it is fucking useless. Knowing that serves no purpose for a dev building frontends with React. I link my GitHub profile, where they can find advanced backend/frontend projects and compiler and interpreter projects, plus the title I currently hold at my workplace and a bunch of other shit. I am not interviewing for a teaching position at an institute, but for an actual place of work, and if they want to know about DS and A, they can review my profile, which has a DS and A repo in about 5 different languages, including plain C++. I do not need to be offended by such notions, since they serve no purpose on the frontend, and neither do other devs. If anything, it should be a casual conversation during the interview, not a basis for employment.

Recruiter: .........thank you for explaining this to me, I am sure I can bring it up to the agencies doing the reviews and interviews. Are you still interested?

Me: Are they going to give me a coding assignment for a project or a bs question like what I mentioned?

Him: I don't know

Me: Then I am not interested.

At an interview, the first round was an online coding round. Two questions, one easy one hard, 90 minutes, easy peasy.

I solved the hard one first.

A bit of good logic, followed MVC pattern, all done. Worked flawlessly.

Submitted code. The online compiler threw an internal error saying java was an invalid command (JDK not found).

Called the invigilators. What I heard next, I couldn't believe this shit.

"We're not responsible for any errors you may be having. Figure it out yourself"

I was like WTF dude. This is not even a compilation or runtime error!

After a heated discussion, I made him look at the code.

Him - what is all this classes and all? Why haven't you written everything inside the main function?

Me - those are model classes. Those are different helper functions. That is a recursive function to avoid 5 for loops and use divide and conquer. Ever heard of OOP? what kind of person writes a 300 line program inside one function?

Him - no no we write it like that only. Correct this.

Me - I fit everything inside the main function. Still the same error, java not installed. Called the idiot to have a look at it.

Him - yeah your code is wrong.

Me - may I know what's wrong with it? Can you fix it please?

Him - no no we aren't allowed to see the code (he had already read it twice. It was compiling and running perfectly, locally) .

Yeah you solved only 1 problem, you were supposed to solve 2.

Me - yes, because the rest of the time I had the pleasure of your company. (It isn't every day that I see talking buffoons.)

My first poem for programmer girl 😘😘😘

My life is incomplete without you,

You are semicolon of my life

You are my increment operator,

you make my value increase

I am username and you are my password,

without you No one can access me

You are my initializer,

without you my life would point to nothing (NULL or “0”)

If I were a function you must be my parameter,

Because I will always need you

Can you be my private variable?

I want to be only one who can access you

You are my compiler,

My life wouldn’t start without you

You are my loop condition ,

I keep coming back to you

My love to you is like recursive function,

It will never end & will never enter an infinite loop

Forever and Ever

> le server suddenly stops working, no boot, no POST, no beeps, no video

*le frantic cursing on how perhaps that's why the fucking thing was only €60 🤬*

*takes out RAM*

> le server still not booting

*places RAM back without doing anything else*

> le server boots up again

🤔🤔🤔

Is this what they mean by things like "compile it again and somehow the compiler will not complain anymore after a while"?

I know a guy who writes everything in Haskell.

He started learning it because his parents got him into a math school (and math schools in Russia use either Python or Haskell), he liked it, but later he dropped out. Today, apart from Haskell, he only really knows HTML and CSS, and maybe some JavaScript.

He writes backend AND frontend in Haskell and uses some kind of JRPC stuff to manage all that. He told me that his life is a pure heaven. He IS RELEVANT (!!!!!!), his apps always run without bugs (because in Haskell you can mathematically prove that there are no bugs), they are performant, faster than C (because you can't write a complex enough app in C that will be as efficient as compiled Haskell, because it's you vs compiler). He doesn't have any problems in life whatsoever. He never got burned out, he never got anxiety or depression. He doesn't act pretentiously and stuff, he's just a normal person who rarely even mentions that he can program.

Science says it can't be done! You can't only know Haskell and be a relevant software engineer! You know what, he didn't _know_ it was impossible. He's like that grandpa from a meme: he got Alzheimer's, but because of it he forgot that he had Alzheimer's, and now remembers everything.

The fun thing is that he looks like a typical gopnik, with adidas suits and stuff.

What a gem of a person.

"Fatal Error"

Exceptions? No, let's just halt the entire program.

Apparently a CS professor wrote this code.

"Needed to keep the compiler happy"
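The original code is not reproduced here. The following Java sketch therefore only assumes a common shape of this pattern. A hypothetical `fatalError` helper exits the process, and it is followed by a dummy `return` that exists solely because javac cannot know the helper never returns. Throwing an exception removes both problems.

```java
// Hypothetical example; the code in the original post is not available.
class Ports {
    // The style the rant complains about: report, then kill the whole process.
    static void fatalError(String message) {
        System.err.println("Fatal Error: " + message);
        System.exit(1);
    }

    static int portHalting(String scheme) {
        if (scheme.equals("http")) return 80;
        if (scheme.equals("https")) return 443;
        fatalError("unknown scheme " + scheme);
        // javac cannot tell that fatalError never returns, so without this
        // line it reports "missing return statement".
        return -1;
    }

    // Throwing instead: javac knows a throw statement never completes normally,
    // so no dummy return is needed, and callers can catch and recover.
    static int port(String scheme) {
        if (scheme.equals("http")) return 80;
        if (scheme.equals("https")) return 443;
        throw new IllegalArgumentException("unknown scheme " + scheme);
    }
}
```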

Let the students use their own laptops. Even buy them one, instead of having computers on site that no one uses for coding, only for some multiple-choice tests and to browse Facebook.

Teach them ten-finger typing. (Don't be too strict and allow for personal preferences.)

Teach them text navigation and editing shortcuts. They should be able to scroll per page, jump to the beginning or end of the line or jump word by word. (I am not talking vi bindings or emacs magic.) And no, key repeat is an antifeature.

Teach them VCS before their first group assignment. Let's be honest, VCS means git nowadays. Yet teach them git != GitHub.

Teach git through the command line. They are allowed to use a gui once they aren't afraid to resolve a merge conflict or to rebase their feature branch against master. Just committing and pushing is not enough.

Teach them test-driven development ASAP. You can even give them assignments with a codebase of failing tests and their job is to make them pass in the beginning. Later require them to write tests themselves.

Don't teach the language, teach concepts. (No, if else and for loops aren't concepts you god-damn amateur! That's just syntax!)

When teaching object-oriented programming, I'd smack you if you do inane examples with vehicles, cars, bikes and a Mercedes Benz. Or animal, cat and dog for that matter. (I came from a self-taught imperative background. Those examples obfuscate more than they help.) Also, inheritance is overrated in OOP teaching.

Functional programming concepts should be taught earlier, as its ideas of avoiding side effects and writing pure functions can benefit even OOP code bases. (Also a great way to introduce testing, as a pure function always produces the same output for the same inputs.)
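Pure functions make a gentle introduction to testing. Here is a hypothetical Java method whose result depends only on its argument. Checking it needs nothing but an input and an expected value.

```java
import java.util.List;

class Grades {
    // Pure: the result depends only on the argument, and nothing outside
    // the method is read or modified, so a test needs no setup or mocks.
    static double average(List<Integer> scores) {
        if (scores.isEmpty()) {
            throw new IllegalArgumentException("no scores");
        }
        long sum = 0;
        for (int score : scores) {
            sum += score;
        }
        return (double) sum / scores.size();
    }
}
```

A test-first assignment could hand students exactly such checks, for example that `Grades.average(List.of(70, 80, 90))` equals `80.0`. Students would then write the method until the checks pass.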

Focus on one language in the beginning, it need not be Java, but don't confuse students with Java, Python and Ruby in their first year. (Bonus point if the language supports both oop and functional programming.)

And for the love of gawd: let them have a strictly typed language. Why would you teach with JavaScript!?

Use industry standards. Notepad, Atom and Eclipse might be open source and free; yet JetBrains community editions still beat them.

For grades, don't you dare demand that they write code on paper. (Pseudocode is fine.)

Don't let your students play compiler in their heads. It's not their job to know exactly what exception will be thrown by your contrived example. That's the compiler's job to complain about. Rather, teach them how to find solutions to these errors.

Teach them advanced google searches.

Teach them how to write an issue for a library on GitHub and similar sites.

Teach them how to ask a good Stack Overflow question :>

Online tutorial pet peeves

————————————

My top 10 points of unsolicited ranting/advice to those making video tutorials:

1. Avoid lots of pauses, saying “umm” too much, or other unnecessary redundancy in speech (listen to yourself in a recording)

2. If I can’t understand you at 1.5 - 2x playback speed and you don’t already speak relatively quickly and clearly, I’m probably not going to watch for long (mumbling, inconsistent microphone volume, and background noise/music are frequent culprits)

3. It’s ok to make mistakes in a tutorial, so long as you also fix them in the tutorial (e.g., the code that is missing a semicolon that all of a sudden has one after it compiles correctly — but no mention of fixing it or the compiler error that would have been received the first time). With that said, it’s fine to fix mistakes pertinent to the topic being taught, but don’t make me watch you troubleshoot your non-relevant computer issues or problems created by your specific preferences (e.g., IDE functionality not working as expected when no specific IDE was prescribed for the tutorial)

4. Don’t make me wait on your slow computer to do something in silence—either teach me something while it’s working or edit the video to remove the lull

5. You knew you were recording your screen. Close your email, chat, and other applications that create notifications before recording. Or at least please don’t check them and respond while recording and not edit it out of the video

6. Stay on topic. I’m watching your video to learn about something specific. A little personality is good, but excessive tangents are often a waste of my time

7. [Specific to YouTube] Don’t block my view of important content with annotations (and ads, if within your control)

8. If you aren’t uploading quality HD recordings, enlarge your font! Don’t make me have to guess what character you typed

9. Have a game plan (i.e., objectives) before hitting the record button

10. Remember that it’s easier to rant and complain than to do something constructive. Thank you for spending your time making tutorial videos. It’s better for you to make videos and commit all my pet peeves listed above than to not make videos at all. Don’t let one guy’s rant stop you from sharing your knowledge and experience (but if it helps you, you’re welcome, and you just might gain a new viewer!)

OK. Yesterday I finished building my compiler, and I have to say: it was a pretty darn big thing, with 7000 lines of code.

I did it alone and with almost no help.

I wanted to give some advice in case someone wants to program a compiler. I know it's useless in the age of lex and yacc, but anyway.

-have a good idea for the language

-learn about parser/lexer

-learn assembler

-do it like me: write the assembly to a file and let the standard Linux tools assemble and link it (call the commands)

-Have fun. Fun is essential in coding
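The outline above can be made concrete with a deliberately tiny example. The following Java sketch is a hypothetical compiler for integer expressions with `+`, `-`, `*` and parentheses. It uses a recursive-descent parser that emits x86-64 GNU assembler (AT&T syntax) for a simple stack machine. Following the last tip, it hands the `.s` file to `gcc` to assemble and link. It assumes an x86-64 Linux machine with gcc installed and Java 11 or later. It is not the author's 7000-line compiler.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical toy compiler: integer expressions -> x86-64 GNU assembler.
class TinyCompiler {
    private final String src;
    private int pos = 0;
    private final StringBuilder asm = new StringBuilder();

    private TinyCompiler(String src) {
        this.src = src;
    }

    // main() returns the expression's value, so it shows up as the exit status.
    static String compile(String expression) {
        TinyCompiler c = new TinyCompiler(expression);
        c.asm.append("    .text\n    .globl main\nmain:\n");
        c.expr();
        if (c.peek() != '\0') {
            throw new IllegalArgumentException("unexpected '" + c.peek() + "' at " + c.pos);
        }
        c.asm.append("    popq %rax\n    ret\n");
        return c.asm.toString();
    }

    // Writes the .s file next to the executable and lets gcc assemble and link it.
    static void build(String expression, Path exe) throws IOException, InterruptedException {
        Path s = Files.writeString(exe.resolveSibling(exe.getFileName() + ".s"), compile(expression));
        Process gcc = new ProcessBuilder("gcc", s.toString(), "-o", exe.toString()).inheritIO().start();
        if (gcc.waitFor() != 0) {
            throw new IOException("gcc failed");
        }
    }

    // expr := term (('+' | '-') term)*
    private void expr() {
        term();
        while (peek() == '+' || peek() == '-') {
            char op = src.charAt(pos++);
            term();
            asm.append("    popq %rcx\n    popq %rax\n")
               .append(op == '+' ? "    addq %rcx, %rax\n" : "    subq %rcx, %rax\n")
               .append("    pushq %rax\n");
        }
    }

    // term := factor ('*' factor)*
    private void term() {
        factor();
        while (peek() == '*') {
            pos++;
            factor();
            asm.append("    popq %rcx\n    popq %rax\n    imulq %rcx, %rax\n    pushq %rax\n");
        }
    }

    // factor := number | '(' expr ')'
    private void factor() {
        if (peek() == '(') {
            pos++;
            expr();
            if (peek() != ')') {
                throw new IllegalArgumentException("')' expected at " + pos);
            }
            pos++;
            return;
        }
        int start = pos;
        while (pos < src.length() && Character.isDigit(src.charAt(pos))) {
            pos++;
        }
        if (start == pos) {
            throw new IllegalArgumentException("number expected at " + pos);
        }
        asm.append("    pushq $").append(src, start, pos).append('\n');
    }

    // Skips whitespace and returns the next character, or '\0' at the end.
    private char peek() {
        while (pos < src.length() && Character.isWhitespace(src.charAt(pos))) {
            pos++;
        }
        return pos < src.length() ? src.charAt(pos) : '\0';
    }
}
```

For example, `TinyCompiler.build("2 * (3 + 4)", Path.of("expr"))` should produce an executable whose exit status (`echo $?`) is 14. Exit statuses keep only the low 8 bits, so larger results wrap around.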

I hope I was able to help people who want to build a compiler alone... You can always ask questions ;~)


I'm currently rewriting perfectly clean and functioning Scala code in Java (because "Enterprise", yay). The amount of unnecessary boilerplate I have to add is insane. I'm not even talking big complicated code but two liners or the lack of simple things like a range from 5 to 10.

Why do I have to write

List<Position> occupiedPositions = placedEntities.stream()
    .flatMap((pe) -> pe.occupiedPositions().stream())
    .collect(Collectors.toList());

instead of simply

val occupiedPositions = placedEntities.flatMap(_.occupiedPositions)

Why on earth does `occupiedPositions.distinct` suddenly become a monstrosity like `occupiedPositions.stream().distinct().collect(Collectors.toList())` where the majority of code is pure boilerplate? And this is supposed to be the new and better Java 8 API which people use as evidence that Java is now suddenly "functional" (yeah no, just no).
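For comparison, here is a Java sketch of both operations. It assumes Java 16 or later and uses hypothetical record stand-ins for the post's `Position` and entity types. Since Java 16, `Stream.toList()` can replace `collect(Collectors.toList())`; it returns an unmodifiable list. This trims a little of the boilerplate but does not remove the `stream()` round trip.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical stand-ins for the types in the rant.
record Position(int x, int y) {}

record PlacedEntity(List<Position> occupiedPositions) {}

class Occupancy {
    // Java 8 style, as in the rant.
    static List<Position> occupied(List<PlacedEntity> placedEntities) {
        return placedEntities.stream()
                .flatMap(pe -> pe.occupiedPositions().stream())
                .collect(Collectors.toList());
    }

    // Java 16+: Stream.toList() instead of the collector. distinct() relies on
    // equals/hashCode, which records generate automatically.
    static List<Position> occupiedDistinct(List<PlacedEntity> placedEntities) {
        return placedEntities.stream()
                .flatMap(pe -> pe.occupiedPositions().stream())
                .distinct()
                .toList();
    }
}
```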

Why do APIs that annotate parameters with @Nullable throw NullPointerExceptions when I pass a null? Why does the compiler not help prevent such stupidity? Why do we use static typing PLUS those annotations and it still crashes at runtime like every damn dynamic, interpreted language out there? That's not unfortunate, it's a complete waste of time.

Why is a simple idea like a range from x to 10 (in Scala literally `x to 10`) not included in Java by default? There's Guava's version of Range, which does not have a helper for integer ranges (even though they are the most used ones). Then there's the Apache Commons version, which _has_ a helper for integers, but is strangely not iterable (wtf I don't even...).
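The JDK has had inclusive integer ranges since Java 8 via `IntStream.rangeClosed`, just not as a `List` or `Iterable` out of the box. Here is a small Java sketch with hypothetical helper names.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

class Ranges {
    // Scala's `x to 10` includes 10, and so does IntStream.rangeClosed.
    // (IntStream.range excludes the upper bound, like Scala's `until`.)
    static List<Integer> to(int from, int toInclusive) {
        return IntStream.rangeClosed(from, toInclusive)
                .boxed()
                .collect(Collectors.toList());
    }

    // An Iterable view, usable in an enhanced for loop without building a list.
    // Each call to iterator() starts a fresh stream, so it can be iterated repeatedly.
    static Iterable<Integer> iterable(int from, int toInclusive) {
        return () -> IntStream.rangeClosed(from, toInclusive).iterator();
    }
}
```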

Speaking of Iterable: How difficult could it be to convert an abstract Iterable<T> into a concrete List<T>? In scala it's surprisingly `someIterable.toList`. I found nothing like that so I took to stackoverflow where I found a thread in which people suggested everything from writing your own ListUtils helper class, using Guava (which is a huge dependency!) to using the new Java8 features inline (which is still about three lines long). I didn't know this was such a hard problem in computer science, TIL.
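Here are two common ways to do the conversion in plain Java 8 or later with no extra dependency, sketched as hypothetical helpers. If the value is already a `Collection`, `new ArrayList<>(collection)` does it in one line.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.StreamSupport;

class Iterables {
    // Plain loop via Iterable.forEach: no streams involved.
    static <T> List<T> toList(Iterable<T> iterable) {
        List<T> result = new ArrayList<>();
        iterable.forEach(result::add);
        return result;
    }

    // Streams: wrap the Iterable's spliterator in a sequential (non-parallel) stream.
    static <T> List<T> toListViaStream(Iterable<T> iterable) {
        return StreamSupport.stream(iterable.spliterator(), false)
                .collect(Collectors.toList());
    }
}
```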

How anyone can be productive in this abomination of a language is beyond me now, even though I've used it for many years while learning to code (back then I didn't know there were much better ways to do things). The only good part is that I have to endure this nonsense for only about 3 more days, then I'm free again!

I'm working on a programming language with a "bytecode" interpreter and a compiler that translates source code to said bytecode and... it sort of actually works!

I want to recreate an Erlang-style environment, currently you can write functions, call C++ functions via wrappers, have immutable-only values, and it has no explicit control structure apart from statement sequencing and the if-expression because I want to make it as functional as possible. Next thing on the list is to add a green threads implementation and ability to spawn and send messages to processes.

Still a WIP and heck even design-in-progress.
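The post does not describe the bytecode itself, and the real interpreter is C++ with a Haskell compiler. What follows is only a generic Java sketch of the idea. It shows a stack-based loop over a flat `int[]` with a hypothetical instruction set. It also shows the kind of code a compiler might emit for an if-expression, where both branches leave a value on the stack. Processes and message passing are not modeled. The sketch assumes Java 14 or later for the arrow-style `switch`.

```java
import java.util.ArrayDeque;
import java.util.Deque;

class TinyVm {
    // Hypothetical instruction set; operands follow their opcode in the array.
    static final int PUSH = 0, ADD = 1, LESS = 2, JUMP_IF_FALSE = 3, JUMP = 4, HALT = 5;

    // Executes the bytecode and returns the value left on top of the stack at HALT.
    static int run(int[] code) {
        Deque<Integer> stack = new ArrayDeque<>();
        int pc = 0;
        while (true) {
            switch (code[pc++]) {
                case PUSH -> stack.push(code[pc++]);
                case ADD -> stack.push(stack.pop() + stack.pop());
                case LESS -> {
                    int b = stack.pop(), a = stack.pop();
                    stack.push(a < b ? 1 : 0);
                }
                case JUMP_IF_FALSE -> {
                    int target = code[pc++];
                    if (stack.pop() == 0) pc = target;
                }
                case JUMP -> pc = code[pc];
                case HALT -> { return stack.pop(); }
                default -> throw new IllegalStateException("bad opcode at " + (pc - 1));
            }
        }
    }

    // How a compiler might lower the if-expression `if a < b then 10 else 20`.
    static int[] lowerIf(int a, int b) {
        return new int[] {
            PUSH, a, PUSH, b, LESS, // 0..4: evaluate the condition
            JUMP_IF_FALSE, 11,      // 5..6: false -> else-branch
            PUSH, 10,               // 7..8: then-branch value
            JUMP, 13,               // 9..10: skip the else-branch
            PUSH, 20,               // 11..12: else-branch value
            HALT                    // 13
        };
    }
}
```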

Now for the rant:

I'm using CMake for building the C++ interpreter and Stack for the Haskell compiler, and I've been trying to get them to talk to each other for hours because I want CMake to manage the Stack build too and shove all the executables into one place. CMake documentation is weird and Stack isn't too helpful either, so I guess I'll just spend another few hours trying to get Stack to fuckin reveal its build directory to CMake and/or build to a given directory. Ugh.

❤️ Swift ❤️

for (i = 0; i < polygon.count; i++) {
    // some print statement
} **

This highly advanced and futuristic piece of code made the Swift compiler eat 14+ GB of RAM while trying to syntax-highlight, before crashing my 8 GB-equipped MacBook.

** yeah, "C-style 'for' loopz syntaxx deprecated since Swift 3 blah blah". Let's reinvent an industry standard for no goddam reason, because Swift is the FUTURE, oh, and because fuck you by the way.

First dev job: port Unix on Transputer, a (now defunct) bizarre processor with no stack, no registers and no compiler. That was fun! And that was in 1991 😎

I could bitch about XSLT again, as that was certainly painful, but that’s less about learning a skill and more about understanding someone else’s mental diarrhea, so let me pick something else.

My most painful learning experience was probably pointers, but not pointers in the usual sense of `char *ptr` in C and how they’re totally confusing at first. I mean, it was that too, but in addition it was how I had absolutely none of the background needed to understand them, not having any learning material (nor guidance), nor even a typical compiler to tell me what I was doing wrong. And on top of all of that, I could only run code on a device that would crash/halt/freak out whenever I made a mistake. It was an absolute nightmare.

Here’s the story:

Someone gave me the game RACE for my TI-83 calculator, but it turned out to be an unlocked version, which means I could edit it and see the code. I discovered this later on by accident while trying to play it during class, and when I looked at it, all I saw was incomprehensible garbage. I closed it, and the game no longer worked. Looking back I must have changed something, but then I thought it was just magic. It took me a long time to get curious enough to look at it again.

But in the meantime, I ended up playing with these “programs” a little, and made some really simple ones, and later some somewhat complex ones. So the next time I opened RACE again I kind of understood what it was doing.

Moving on, I spent a year learning TI-Basic, and eventually reached the limit of what it could do. Along the way, I learned that all of the really amazing games/utilities that were incredibly fast, had greyscale graphics, lowercase text, no runtime indicator, etc. were written in “Assembly,” so naturally I wanted to use that, too.

I had no idea what it was, but it was the obvious next step for me, so I started teaching myself. It was Z80 Assembly, and there were practically no documents or resources, nothing helpful online.

I found the specs, and a few terrible docs and other sources, but with only one year of programming experience, I didn’t really understand what they were telling me. This was before stackoverflow, etc., too, so what little help I found was mostly from forum posts, IRC (mostly got ignored or made fun of), and reading other people’s source when I could find it. And usually that was less than clear.

And here’s where we dive into the specifics. Starting with so little experience, and in TI-Basic of all things, meant I had zero understanding of pointers, memory and addresses, the stack, heap, data structures, interrupts, clocks, etc. I had mastered everything TI-Basic offered, which astoundingly included arrays and matrices (six of each), but it hid everything else except basic logic and flow control. (No, there weren’t even functions; it has labels and goto.) It has 27 numeric variables (A-Z and theta, each storing either a float or a complex number), 8 Lists (numeric arrays), 6 matrices (2D numeric arrays), 10 strings, and a few other things like “equations” and literal bitmap pictures.

Soo… I went from knowing only that to learning pointers. And pointer math. And data structures. And pointers to pointers, and the stack, and function calls, and all that goodness. And remember, I was learning and writing all of this in plain Assembly, in notepad (or on paper at school), not in C or C++ with a teacher, a textbook, SO, and an intelligent compiler with its incredibly helpful type checking and warnings. Just raw trial and error. I learned what I could from whatever cryptic sources I could find (and understand) online, and applied it.

But actually using what I learned? If a pointer was wrong, it resulted in unexpected behavior, memory corruption, freezes, etc. I didn’t have a debugger, an emulator, etc. I had notepad, the barebones compiler, and my calculator.

Also, iterating meant changing my code, recompiling, factory resetting my calculator (removing the battery for 30+ sec) because bugs usually froze it or corrupted something, then transferring the new program over, and finally running it. It was soo slowwwww. But I made steady progress.

Painful learning experience? Check.

Pointer hell? Absolutely.

EEEEEEEEEEEE Some fAcking languages!! Actually barfs while using this trashdump!

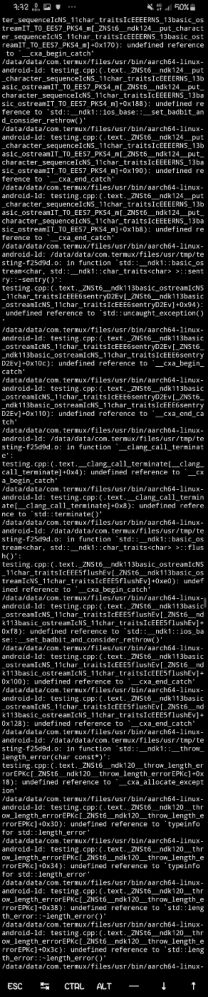

The gist: new job, position required adv C# knowledge (like f yea, one of my fav languages), we are working with RPA (using software robots to automate stuff), and we are using some new robot still in beta phase, but robot has its own prog lang.

The problem:

- this language is kind of like ASM (I think so, I'm venting here, it's ASM OK), with syntax that burns your eyes

- no function return values, but I can live with that, at least they have some sort of functions

- emojis for identifiers (like PHP's $var, but they only aim for shitty features so you use a heart.. ♥var)

- only jump and jumpif for control flow

- no foopin variable scopes at all (if you run multiple scripts at the same time they even share variables *pukes*)

- weird alt characters everywhere. define strings with regular quotes? nah let's be [some mental illness] and use prime quotes (‴ U+2034), and like ⟦ ⟧ for array indexing, but only sometimes!

- super slow interpreter, e.g. a regular loop to count to 10 (using jumps because yea no actual loops) takes more than 20 seconds to execute, approx. 700 ms to run 1 code row.

- it supports c# snippets (defined with these stupid characters: ⊂ ⊃) and I guess that's the only c# I get to write with this job :^}

- on top of that, outdated documentation, because yea it's beta, but so crappin tedious with this trial n error to check how every feature works

The question: why in the living fartfaces yolk would you even make a new language when it's so easy nowadays to embed compilers!?! the robot is apparently made in c#, so it should be no funcking problem at all to add a damn lua compiler or something. having a tcp api would even be easier got dammit!!! And what in the world made our company think this robot was a plausible choice?! Did they do a full fubbing analysis of the different software robots out there and accidentally sorted by ease of use in reverse order?? 'cause that's the only explanation i can imagine

Frillin stupid shitpile of a language!!! AAAAAHHH

see the attached screenshot of production code we've developed at the company for reference.

Disclaimer: I do not stand responsible for any eventual headaches or gauged eyes caused by the named image.

(for those interested, the robot is G1ANT.Robot, https://beta.g1ant.com/)

Interesting bug hunt!

Got called in because a co-team had a strange bug and couldn't make sense of it. After a compiler update, things had stopped working.

They had already hunted down the bug to something equivalent to the screenshot and put a breakpoint on the if-statement. The memory window showed the memory content, and it was indeed 42. However, the debugger would still jump over do_stuff(), both in single step and when setting a breakpoint on the function call. Very unusual, but the rest worked.

Looking closer, I noticed that the pointer's value (the address itself) was an odd number, but it was supposed to be of type uint32_t *. So I dug out the controller's manual and looked up in the instruction set what it would do with a 32-bit load from an unaligned address: the most braindead thing possible, it would just ignore the lowest two address bits. So the actual load happened from a different address, and that's why the comparison failed.

I think the debugger fetched the memory content bytewise because that would work for any kind of data structure with only one code path, that's how it bypassed the alignment issue. Nice pitfall!

Investigating further why the pointer was off, it turned out that it pointed into an underlying array of type char. The offset into the array was correctly divisible by 4, but the beginning had no alignment, and a char array doesn't need one. I checked the map files and indeed, the old compiler had put the array on a 4-byte boundary and the new one didn't.

Sure enough, after giving the array a 4-byte alignment directive, the code worked as intended.


[long]

When searching for internship via school I found this small startup with this cute project of building a teaching tool for programming. There were back then 2 programmers: the founder and the co-founder.

Then like 1 week before the internship started, the co-founder had a burnout and had to get off the project, while the company was so low on budget the founder, aka my new b0ss, had to work separate jobs to keep the company alive. (quite metal tbh)

It's funny because I'm a junior developer, 100%. I've been coding as a hobby for around 8 years now but I've never worked in a big company before. (No exception to this workplace either)

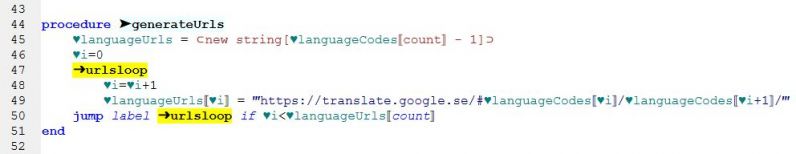

First project I get: rewrite the compiler. The Python compiler.

"But wait, why not just embed a real compiler in the first place?"

-nanananana it's never simple, as you probably know from your own projects.

The new compiler, as compared to existing embedded compiler solutions out there, needed these prime features:

- Walk through the code (debugger style), but programmatically.

- Show custom exceptions (ex: "A colon is needed at the end of an if-statement" instead of "Syntax error line 3")

- Have a "Did-you-mean this variable?" error for usage of unassigned variables.

- Be able to be embedded in Unity's WebGL build target

All for the use case of being a friendly compiler.

The last dash in the list is actually the biggest bottleneck, which excluded all existing open-source projects (I could find). To stay compatible with WebAssembly I can't use threads, among other things; IL2CPP has lots of restrictions, and Unity has some as well...

Oh and it should of course be built using test-driven development.

"Good luck!" - said the founder, first day of work as she then traveled to USA for **3 weeks**, leaving me solo with the to-be-made codebase and humongous list of requirements.

---

I just finished the 6th week of internship, boss has been at "HQ" for 3 weeks now, and I just hit the biggest milestone yet for this project.

Yes I've been succeeding! This project has gone so well, and I'm surprising myself how much code I've been pumping out during these weeks.

I'm up now at almost 40'000 lines of source and 30'000 lines of code. ‼

( Biggest project I've ever worked on previously was at 8'000 lines of code )

The milestone (that I finished today) was for loops! As I've been trying to showcase in the GIF.

---

It's such a giant project and I can honestly say I've done some good work here. Self-five. Over-performing is a thing.

The thing that makes me shiver, though, is that most people who use this application will never know the intricacies of its insides, and the brain work put into it.

The project is probably over-engineered. A lot. Having a home-made compiler gives us a lot of flexibility for our product as we're trying to make more of a "pedagogic IDE". But no matter that I reinvented the wheel for the 105Gth time, it's still the most fun I've had with a project to date.

---

Also btw if anyone wants to see source code, please give me good reasons as I'm actively trying to convince my boss to make the compiler open-source.

Cheers!

Another project with legacy code just got dusted off at work. Shit's fucked beyond recognition! We got:

- Rando variable names that mean nothing

- Timers running with a cycle time of 2.5ms if you start them with the multiplier 1.

- An interrupt routine that's 300 lines long.

- Another interrupt that's starting an ADC conversion and waiting for it to complete before returning.

- For loops that start with one and subtract one from the iterator in the loop

- Every value that would normally be expressed as a regular number is written down in Hex. Eg: if(val==0x05)

- State machine built without writing down which state is which. It's just a number. (In hex obviously!)

- All running on a microcontroller you can't debug on.

- Using a compiler no one has ever heard of before.

- Weird ass Port manipulations

- 15 different .hex and .elf files with no clue what's in them.

- No version control

- We tried explaining the code to a monkey and it hanged itself.

Is it just me who sees this? JS development in a somewhat more complex setting (like vue-storefront) is just a horrible mess.

I have 10+ years of experience in Java, C# and Python, and I've never needed more than a few hours to get into a new codebase, understand the overall system, and be able to guess where to fix a given problem.

But with JS (and also TS for that matter) I'm at my limits. Most of the files look like they don't do anything. There seems to be no structure, neither from a file system point of view nor from a code point of view.

It starts with little things like 300-char-long lines including various lambdas, closures and ifs with useless variable names, and goes on to overly generic and minified method/function names and inconsistent naming of files, classes and basically everything else.

I used to just set a breakpoint somewhere in my code (or in a compiled dependency), wait until it is hit, and go back and forth to learn how the system state changes.

This seems to be highly limited in JS. I didn't find the one way to simply debug everything. There are weird things like transpilers, compilers, minifiers, Babel and what not else. There is an error? Go f... yourself ...

And what do I find as the number one tip all across the internet? console.log?? Are you kidding me? Sure, just tell me you're kidding me, right?

If I would have to describe the JS world in one word, I would use "inconsistency". It's all just a pain in the ass.

I remember when I switched from VisualStudio/C# to Eclipse/Java, I felt like I was traveling back in time about 10 years. Everything seemed so ... old-schoolish, buggy, weird.

When I now switch from Java to JS it makes me feel the same way. It's all so highly unproductive, inconsistent, nondeterministic, cobbled together.

For one inconvenience the JS community seems to like to build huge shitloads of stuff around it, instead of fixing the obvious. And no one seems to see that.

It's like they are all blinded somehow. Currently I'm also trying to implement a small React app based on react-admin. The simplest things to develop and debug are a nightmare. There is so much boilerplate to write that most people on the internet just keep copying stuff, without even trying to understand what it actually does.

I've always been a guy who tries to understand what the fuck this code actually does. And for most of the parts I just think that the stuff there is useless or could be done in a way more readable way. But instead, all the devs out there just seem to choose the "copy and fix somehow-ish" way.

I'm all in for component-izing stuff. I like encapsulation, I'm an OOP guy at heart. But what React and similar frameworks do is just insane. It's just not right (for some part).

Especially when you have to remember so much stuff that is just mechanics/boilerplate without any actual "business logic function".

People always say Java is so verbose. I don't think it is; there is so little syntax that it almost reads like a prose story. When I look at JS and TS instead, I'm overwhelmed by all the syntax, wondering almost every second line what the actual fuck this could mean. The boilerplate/logic ratio seems way too off ..

So it really makes me wonder: are all you JS devs out there just so used to that stuff that you cannot imagine how it could be done better? I still remember my C# days, but I admit that I just got used to Java. So I can somehow understand all that. But JS is just another few levels deeper.

But maybe I'm just lazy and too old ...

Currently I'm working on 3D game engine and making a 3D minesweeper game with it.

I started creating a compiler not long ago using my own implementation (no Lex, no tools, nothing, just raw application of algorithms) so that hopefully some day I will be able to make a language that works on top of GLSL inside my game engine. I have a compiler design class this semester, which hasn't even started yet, and I've already made a lexical analyser generator. I also have another class about geographic information systems, for which I will use my engine to create some demos of 3D rendering techniques like level of detail, or maybe create something similar to ArcGIS, which we will be using.

Oh man, I have so much stuff I want to do.

Here is a gif showing the state of my minesweeper game. I clearly lack artistic skills lol. One thing I will be making is to model the sphere as squares not triangles.

Finally, I want to mention that months ago I saw someone here on devRant making a Voronoi diagram variant of this, which inspired me to make this.

I made a long post, so

TL;DR: having fun reinventing the wheel and learning 😀

Warning: Long rant ahead!

So we built an amazing system for managing swarms of drones, and we have flown hundreds of hours, testing, etc.

A client comes along and says that he wants to buy our system, but he wants to integrate it into a bigger system that is supposed to orchestrate many small systems.

Sounds like a deal.

So they send me on a week-long course (see previous rant: https://devrant.com/rants/2049071/...) to learn how to integrate our system into theirs.

I was sure that they had some API or something and it would be a breeze, but apparently they give us an SDK that includes all their files, and we have to build and run their entire system, and then build our own API inside of it!

And the reason we needed a week-long course, was to know all the paths where the XML configuration files exist!

So for the last month, I have been hacking away inside this huge program, navigating thousands of files in a language I don't know, in order to build an API for their system, so that I can use it on our side.

Yesterday they informed us that a new version is available.

And sure enough, waiting in my inbox this morning was a link to download a new SDK.

No Changelog, No Instructions, Just a zip file with over 25,000 files.

So I phone my contact in their company to ask how exactly I am supposed to update their files, and his answer was: diff them!

WHAT! 25,000 files, half of them built by the c++ compiler, tens of configuration files scattered in different places, linking all the new libraries from scratch, are they crazy or what?

And then he tells me that they have been working this way for 15 years. That's why everyone hates them, I guess.

going to have a long day...

P.S. many more rants to come from this integration.

See, static typing? that shit is for putos. You think you're so cool with your advanced intellisense being able to tell you "yo....dat shit ain't the type you think it is" or your compiler telling you "yo dumbass, you fucked this parameter up in here, you are doing <x> when in reality you should be doing #@$@#$@!<X at line !@#@#$#>"

pfffft static typing. Such a pansy ass thing to worry about.

Picture us, working outside of the safety net of static typing, as jungle explorers, walking slowly, with a machete in hand and our other hand clutched tightly at our hip pistol, not knowing what to shoot at, but eagerly prepared for when shit fucks up because whatever the fuck you did was not properly safeguarded by a compiler to tell you that you fucked up, even if the compiler message is unintelligible (looking at you C and C++)

We is men here, we is brave retarded adventurers.

As our sanity blips into oblivion and we look at our code that has no sort of type checking expecting our shitty intellisense extensions to protect us....

Edit: if you can't understand the sarcasm in here and the plea for sanity then you are obviously a retard and have no place in the world of development

I spent over a decade of my life working with Ada. I've spent almost the same amount of time working with C# and VisualBasic. And I've spent almost six years now with F#. I consider all of these great languages for various reasons, each with their respective problems. As these are mostly mature languages some of the problems were only knowable in hindsight. But Ada was always sort of my baby. I don't really mind extra typing, as at least what I do, reading happens much more than writing, and tab completion has most things only being 3-4 key presses irl. But I'm no zealot, and have been fully aware of deficiencies in the language, just like any language would have. I've had similar feelings of all languages I've worked with, and the .NET/C#/VB/F# guys are excellent with taking suggestions and feedback.

This is not the case with Ada, and this will be my story, since I've decided anonymity is no longer necessary.

First few years learning the language I did what anyone does: you write shit that already exists just to learn. Kept refining it over time, sometimes needing to do entire rewrites. Eventually a few of these wound up being good. Not novel, just good stuff that already existed. Outperforming the leading Ada company in benchmarks kind of good. At the time I was really gung-ho about the language. Would have loved to make Ada development a career. Eventually I build up enough of this, as well as a working but very poorly performing compiler, and decide to try to apply for a job at this company. I wasn't worried about the quality of the compiler, as anyone who's seriously worked with Ada knows, the language is remarkably complex with some bizarre rules in dark corners, so a compiler which passes the standards test indicates a very intimate knowledge of the language few can attest to.

I get told they didn't think I would be a good fit for the job, and that they didn't think I should be doing development.

A few months of rapid cycling between hatred and self loathing passes, and then a suicide attempt. I've got past problems which contributed more so than the actual job denial.

So I get better and start working even harder on my shit. Get the performance of my stuff up even better. Don't bother even trying to fix up the compiler, and start researching text parsing. Do tons of small programs to test things, and wind up learning a lot. I'm starting to notice a lot of languages really surpassing Ada in _quality of life_, with things like package managers and repositories for those, as well as social media presence and exhaustive tutorials from the community.

At the time I didn't really get programming language specific package managers (I do now), but I still brought this up to the community. Don't do that. They don't like new ideas. Odd for a language which at the time was so innovative. But social media presence did eventually happen with a Twitter account that is most definitely run by a specific Ada company masquerading as a general Ada advocate. It did occasionally draw interest to neat things from the community, so that's cool.

Since I've been using both VisualStudio and an IDE this Ada company provides, I saw a very jarring quality difference over the years. I'm not gonna say VS is perfect, it's not. But this piece of shit made VS look like a polished streamlined bug free race car designed by expert UX people. It. Was. Bad. Very few features, with little added over the years. Fast forwarding several years, I can find about ten bugs in five minutes each update, and I can't find bugs in the video games I play, so I'm no bug finder. It's just that bad. This from a company providing software for "highly reliable systems"...

So I decide to take a crack at writing an editor extension for VS Code, which I had never even used. It actually went well, and as of this writing it has over 24k downloads, and I've received some great comments from some people over on Twitter about how detailed the highlighting is. Plenty of bespoke advertising the entire time in development, of course.

Never a single word from the community about me.

Around this time I had also started a YouTube channel to provide educational content about the language, since there's very little, except large textbooks which aren't right for everyone. Now keep in mind I had written a compiler which at least was passing the language standards test, so I definitely know the language very well. This is a standard the programmers at these companies will admit very few people understand. YouTube channel met with hate from the community, and overwhelming thanks from newcomers. Never a shout out from the "community" Twitter account. The hate went as far as things like how nothing I say should be listened to because I'm a degenerate Irishman, to things like how the world would have been a better place if I was successful in killing myself (I don't talk much about my mental illness, but it shows up).

I'm strictly a .NET developer now. All code ported.

My neural networks journey so far:

Look up tutorials -> see that Python is a popular tool for ML -> install Python -> pip install scipy -> breaks with some weird error involving BLAS library code -> spend half an hour fixing it -> try installing Theano -> breaks because my USERNAME HAS A SPACE IN IT LIKE SERIOUSLY? WTF -> make new account without a space in the name -> repeat till Theano -> run tests, found out that I didn't install CUDA support -> scrap the install and redo with CUDA support -> CUDA libraries take forever to download on shitty internet -> run tests -> breaks with some weird Theano compiler error -> go crying to friend -> friend tells me about Anaconda -> scrap the previous install and download Anaconda over shitty connection -> mess up conda environments because noobishness -> scrap, retry -> YESS I FINALLY GOT IT WORKING TIME TO DO SOME LEARNI-crap it's 4 in the morning already.

I realize that I'm a Python noob (and also, uni computers with GPUs have preconfigured Windows installed only, no Linux), but is installing Python libraries always such a pain? Am I doing something wrong? Installing via Anaconda felt like cheating, tbh.

Here it is: get MythTV up and running.

In one corner, building from source, the granddaddy Debian!

In the other, prebuilt and ready to download, the meek but feisty Xubuntu!

Debian gets an early start, knowing that compiling on a single core VM won't break any records, and sends the compiler to work with a deft make command!

Xubuntu, relying on its user friendly nature, gets up and running quickly and starts the download. This is where the high-bandwidth internet really works in her favor!

Debian is still compiling as Xubuntu zooms past, and is ready to run!

MythTV backend setup leads her down a few dark alleys, such as asking where to put directories and then not making them, but she comes out fine!

Oh no! After choosing a country and language, the frontend commits suicide with no error message! A huge blow to Xubuntu, as this will take hours to diagnose!

Meanwhile, Debian sits in his corner, quietly chugging away on millions of lines of C++...

Xubuntu looks lost... And Debian is finished compiling! He's ready to install!

Who will win? Stay tuned to find out!

literally what the fuck is the point of C++

>takes 3 years to make anything half-functional

>language was made in like fucking 1902 so it's damn near fucking impossible to make anything that works without sifting through bumfuck retarded syntax/libraries

>error messages that tell you absolutely nothing of use and are indecipherable garbage 90% of the time

fuck C, fuck its retarded downie little brother C++, and fuck the stupid fucking boomers who say you're not a real programmer unless you force yourself to become a masochist by using either one of these stupid fucking languages

"oh but it's fast!!11!1!!" yeah but working with it sure as fuck isn't

half the fucking time if I just stop including certain headers in another file then the compiler throws like literally 400 fucking errors at me even though the thing(s) I excluded had no bearing on whatever the compiler decides it wants to loudly bitch and whine about

"oh but games were made on it!!!!111!" yeah not without fucking horrific spaghetti code and 900000 different libraries and dependencies designed just to make a single fucking window

Stop teaching people deprecated bulls*it.

I'm taking a "Web Design" course and the teacher wants us to use HTML attributes and the <font> tag to format pages. He doesn't allow us to use CSS. Says "We'll get to CSS later, right now I'm teaching you HTML". He taught us the <frameset> thing, which isn't even supported in HTML5. And of course no <header>, <footer>, <aside> etc.

Same thing in my C++ course. The computers don't even have a C++11 (or newer) compiler. Just an old version of Code::Blocks we're not allowed to update. It does support C++0x so you can still get some of the features, but still.

I propose that the study of Rust, and therefore the application of said programming language and all of the technology that comprises it, should be undertaken because the language is actually really fucking good. Reading and studying how it manages to manipulate and otherwise use memory without a garbage collector is something to be admired, illuminating in its own right.

BUT going for it because it is a "beTter C++" should not constitute a basis for its study.

Let me expand through anecdotal evidence, which is really not to be taken seriously, but at the same time what I am using for my reasoning behind this, please feel free to correct me if I am wrong, for I am a software engineer yes, I do have academic training through a B.S in Computer Science yes, BUT my professional life has been solely dedicated to web development, which admittedly I do not go on about technical details of it with you all because: I am not allowed to(1) and (2)it is better for me to bitch and shit over other petty development related details.

Anecdotal and otherwise non statistically supported evidence: I have seen many motherfuckers doing shit in both C and C++ that ADMIT not covering their mistakes through the use of a debugger. Mostly because (A) using a debugger and proper IDE is for pendejos and debugging is for putos GDB is too hard and the VS IDE is waaaaaa "I onlLy NeeD Vim" and (B) "If an error would have registered then it would not have compiled no?", thus giving me the idea that the most common occurrences of issues through the use of the C father/son languages come from user error, non formal training in the language and a nice cusp of "fuck it it runs" while leaving all sorts of issues that come from manipulating the realm of the Gods "memory".

EVERY manual and book, going all the way back to the K&R book, talks about memory and the way in which developers of these 2 languages are able to manipulate and work on it. EVERY new ISO standard of these languages deals, through community effort or standard documentation, with the new items introduced through features concerning MODERN usage (meaning, no, the shit you learned 20 years ago won't fucking cut it).

THUS if your ass is not constantly checking what the scalpel of electrical/circuitry/computational representation of algorithms CONDONES in what you are doing then YOU are the fucking problem.

Rust is thus no different from the original ideas of the developers behind Go when stating that their developers are not efficient enough to deal with X language. Rust protects you because it knows that you are a fucking moron, so the compiler, advanced and well made as it is, will give you warnings about your own idiotic tendencies, which would not have been required had you not been.....well....a fucking idiot.

Rust is a good language, but I feel one that came out from the necessity of people writing system level software as a bunch of fucking morons.

This speaks a lot more of our academic endeavors and current documentation than anything else. But to me DEALING with the idea of adapting Rust as a better C++ should come from a different point of view.

Do I agree with Linus's point of view of C++? fuck no, I do not, he is a kernel engineer, a damn good one at that regardless of what Dr. Tanenbaum believes(ed) but not everyone writes kernels, and sometimes that everyone requires OOP and additions to the language that they use. Else I would be a fucking moron for dabbling in the dictionary of languages that I use professionally.

BUT in terms of C++ being unsafe and insecure and a horrible alternative to Rust, I personally do not believe so. I see it as a powerful white canvas, on which you are able to paint software to the best of your ability, WHICH then requires thorough scrutiny from the entire team. NOT a quick replacement for something that protects you from your own stupidity BY impeding the use of what are otherwise unknown "safe" features.

To be clear: I am not diminishing Rust as the powerhouse of a language that it is; I am quite invested in the language myself. But I do not see the reason/need behind articles claiming it as the C++ killer.

I am currently heavily invested in C++ since I am trying a lot of different things for a lot of projects, and have been able to discern multiple pain points and unsafe features. Mainly the reason for this is documentation (your mother knows C++) and tooling, ide support, debugging operations, plethora of resources come from it and I have been able to push out to my secret project a lot of good dealings. WHICH I will eventually replicate with Rust to see the main differences.

Online articles stating that one will delimit or otherwise kill the other are, well....wrong to me. And not the proper approach.

Anyways, I like big tits and small waists.

TL;DR Pluralsight should be ashamed for taking 299 USD a year and writing some very low-quality quizzes.

I've always heard that Pluralsight is a great platform having some high quality courses, so I chose it as a benefit, as our company was giving us some budget for learning purposes. I've paid (or rather the company did it in the end) 299 USD for this year, which, I guess is not much for US standards, but it is a lot for Eastern European standards.

I didn't actually get to the point of watching any of the courses, but I started to use a feature called "Stack up", which is a long series of questions in a specific theme, like Java, Kotlin, C++, etc., accessible once a day. I must say, I'm amazed by the fact that people pay quite a great amount of money and get something so poorly made, with a lot of errors and stupid questions.

Take the question from the included image for example. Not only are 2 of the possible answers identical (and thus I failed to select the correct one from 2 equal answers), but the supposedly correct answer is also missing some type specifications. No Java compiler will compile it this way as far as I know. There would be at least 3 ways to fix it.

Then there is today's gem (should be included as the first comment) as well, where the answer is wrong in Chrome 96, Firefox 95 and Node v10. Heck, THIS IS one of the reasons why you should never use `var` in your JavaScript code, but always `let` and `const`!

So the courses on Pluralsight might be good, but I would be ashamed if I were to release something like this. People might actually try to solidify their knowledge by solving these quizzes, but instead of learning something useful, they will be left with some bullshit. I just don't get how they could release a feature with so much incorrect information, and I am kind of disappointed, even if I didn't try the courses yet.

Day 2 in ComSci class (following my last rant)

"Okay, so! All of the schoolwork and homework will be done on paper and pen, submit and I will grade it. Only once, no second chance"

Okay. Okay. This went over my head. What are you gonna do? OCR the code into the compiler, compile it and run to see if we fucked up to give us an F? What are you, god? Here's a brilliant idea, teach them Assembly! Guaranteed error to give us Fs! FUCK YOU

So... C++... Yeah.

> Manage to get a MinGW compiler working in VSCode, and all is "well and good".

> Have difficulties installing SDL, follow tutorial verbatim.

> Compile error.

> 7 hours later no progress.

> 10 hours later no progress.

> 16 hours later no progress.

*Throws laptop at wall*

wow... no kidding.... so C++ is like a language without a compiler holding ur hands and catching u when u screw up...

Buckle up, it's a long one.

Let me tell you why "Tree Shaking" is stupidity incarnate and why Rich Harris needs to stop talking about things he doesn't understand.

For reference, this is a direct response to the 2015 article here: https://medium.com/@Rich_Harris/...

"Tree shaking", as Rich puts it, is NOT dead code removal apparently, but instead only picking the parts that are actually used.

However, Rich has never heard of a C compiler, apparently. In C (or any systems language with basic optimizations), public (visible) members exposed to library consumers must have that code available to them, obviously. However, all of the other cruft that you don't actually use is removed - hence, dead code removal.

How does the compiler do that? Well, it does what Rich calls "tree shaking": it keeps every piece of code that is reachable along some code path from any of the exported symbols, not just from the "main module" (which doesn't exist in systems libraries).
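To make the "reachable from any exported symbol" idea concrete, here is a minimal TypeScript sketch of the mark phase of dead code removal. The call graph, the function names and `liveSymbols` are all hypothetical, and real compilers and linkers work on their own intermediate representations rather than a name-to-callees map, but the principle is the same: walk outward from every export, keep what you reach, drop the rest.

```typescript
// Hypothetical call graph: each function name maps to the names it calls.
type CallGraph = Map<string, string[]>;

// Mark phase: collect every function reachable from any exported symbol.
// Anything not in the returned set is dead code and can be dropped.
function liveSymbols(graph: CallGraph, exported: string[]): Set<string> {
  const live = new Set<string>();
  const stack = [...exported]; // every export is a root, no "main module" needed
  while (stack.length > 0) {
    const name = stack.pop()!;
    if (live.has(name)) continue; // already marked
    live.add(name);
    for (const callee of graph.get(name) ?? []) {
      if (!live.has(callee)) stack.push(callee);
    }
  }
  return live;
}

const graph: CallGraph = new Map<string, string[]>([
  ["parse", ["tokenize"]],
  ["tokenize", []],
  ["format", ["pad"]],
  ["pad", []],
  ["debugDump", ["format"]],
]);

// Only `parse` is exported, so the formatting helpers are unreachable.
const live = liveSymbols(graph, ["parse"]);
const dead = Array.from(graph.keys()).filter((name) => !live.has(name));
console.log("live:", Array.from(live).join(", ")); // live: parse, tokenize
console.log("dead:", dead.join(", ")); // dead: format, pad, debugDump
```

Nothing in this sketch treats one module as special: exporting `debugDump` as well would simply add another root and pull `format` and `pad` back in.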

It's the SAME FUCKING THING; he just hasn't researched it enough to fully fucking understand that. But sure, tell me how the JavaScript community apparently invented something ELSE that it REALLY just repackaged and made more bloated or downright wrong (React Hooks, webpack, WebAssembly, etc.)

Speaking of JavaScript, "tree shaking" is impossible to do there with any degree of confidence, unlike in statically typed, well-defined languages. This is because you can build references to values at runtime using string functions, which means that, given the right input, almost anything can end up being run.

How do you figure out what can and can't be run? You can't! Since the code path and decision tree depend on runtime data, you run into the halting problem, which cannot be solved in general.
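A small TypeScript sketch of the problem, using a hypothetical `helpers` object and `callByName` function: the source never mentions `shout` by name, yet that is the helper that runs, because the key is assembled from runtime data. A tool that removed `shout` as "unused" would break this call, so a conservative tool has to keep every member it cannot prove unused.

```typescript
// Hypothetical "library" object exposing several helpers.
const helpers = {
  greet: (name: string): string => `hello, ${name}`,
  shout: (name: string): string => `HELLO, ${name.toUpperCase()}`,
  whisper: (name: string): string => `psst, ${name}`,
};

type HelperName = keyof typeof helpers;

// The property name is built from runtime strings, so which helper runs
// cannot be determined by reading the source text alone.
function callByName(prefix: string, suffix: string, arg: string): string {
  const key = (prefix + suffix) as HelperName; // unchecked cast: key may not exist
  const fn = helpers[key];
  if (typeof fn !== "function") {
    throw new Error(`no helper named ${prefix + suffix}`);
  }
  return fn(arg);
}

// Pretend this came from a request body or user input.
const input = { prefix: "sh", suffix: "out" };
const result = callByName(input.prefix, input.suffix, "dev");
console.log(result); // HELLO, DEV
```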

With stricter languages such as C (which is where "dead code removal" is used quite aggressively), you can make very strong assertions at compile time about how code is used. This is simply why C is still thousands of times faster than JavaScript.

So no, Rich Harris, dead code removal is not "silly". Your entire premise about "live code inclusion" is technical jargon and buzzwordy drivel. Empty words at best.

This sort of shit is annoying and only feeds into this cycle of the web community not being Special enough and having to reinvent every single fucking facet of operating systems in your shitty bloated spyware-like browser and brand it with flashy Matrix-esque imagery and prose.

Fuck all of it.

-

So I legitimately, accidentally started building a compiler... Don't ask how, because I don't know...

(No, it's not a 'true' compiler that creates executables; it's just a compiler that turns some data into a form that's easier for my runtime to read.)

-

Best:

Really getting into Rust. It has taught me so many things.

1. Null is evil

2. Sum types are amazing

3. Compilers can actually have good error output

4. Multi threading is actually really scary if you don't have a compiler to back you up

Worst:

I had to deal with SSIS. It has also taught me many things:

1. No matter how 'mature' a product is, it can be awful. Simply dump a random error code; the user can figure out what went wrong. No need for good error messages.

2. The modern concept of the database is crap. It's a gigantic global state that is used by everyone and owned by no one.

3. Don't use tools that aren't made to be used with version control.

4. Even when you tell your team that it's bad, you will be ignored.

-

So, first time ranting, sorry if I mess anything up.

When I first started my current job and got introduced to the system we were coding in, something seemed a little fishy to me. I didn't like the system anyway, but at least the language is compiled, so it runs quite quickly, right?

In theory, yeah, if the lead dev liked the IDE that came with it. But he must REALLY fucking hate it, because rather than using it, he codes in plain text files. No syntax highlighting, no auto-indent, nothing. And he's built the entire damn system around doing that. Sadly, the compiler is only available inside the IDE, so what do we do? Copy the code from the plain text file into the IDE and compile it there? No, no, why would you. The language has a function you can use to compile code at runtime.

And so he does. Every. Single. Fucking. Script. There's a single main script that runs, finds the correct text file, and then compiles and executes it at runtime. So we effectively turned a compiled language into a massively unoptimized interpreted one.

I even mentioned that this might be a problem, but I was completely dismissed, so at that point it wasn't my problem anymore, and I've since switched to a different system anyway.

A couple of weeks later I heard the same guy complaining that the scripts were running almost all night, so we'd probably need better hardware or something.

Well, if only there were a really obvious solution that would improve performance by probably about a factor of 20...

-

I found this on a wiki page of Haskell humor... it's interesting...

How to Shoot Your Self in the Foot With Haskell: Putting the unsafe in unsafePerformIO!

You shoot the gun, but the bullet gets trapped in the IO monad.

Couldn't match expected type 'Deer' against inferred type 'Foot'.

While compiling your program the compiler produces a type error long enough to overflow a kernel buffer, overwrite the trigger control register and shoot you in the foot.

After trying to decipher the type errors from the compiler, your head explodes.

After you've finally found a way to circumvent the type system and shoot yourself in the foot, Oleg appears out of nothing and shoots you in the foot for coming up with it before him.

You shoot the gun but nothing happens (Haskell is pure, after all).

Your foot is fine, until you try to walk on it, at which point it becomes mangled.

You have a shootFoot function which you've proven correct. QuickCheck validates it for arbitrary you-like values. It will be evaluated only when you end up at the hospital. You hope this doesn't come to pass, as it actually returns a bullet-ridden copy of yourself and you don't want to be garbage-collected.

foreign import ccall "shootparts.h shootfoot" shoot_foot :: Gun -> Programmer -> IO ()

shootSelfInFoot = unsafePerformIO . shoot . foot $ self -- Shoot self in foot 0 or more times depending on evaluation order

No instance for (Target Foot)

arising from use of `shoot' at SelfInflictedInjury.hs:1:0

Possible fix: add an instance declaration for (Target Foot)

In the expression: shoot foot

You go to shoot yourself in the foot but the bullet is in the ST monad and the gun is in the IO monad, so you can't.

You ask Haskell to shoot you in the foot but by the rules of lazy evaluation you don't need the result yet so it doesn't happen.

You decide to shoot yourself in the foot but get distracted devising a ballistics algebra and wondering if you can do the calculations in the type system.

You want to shoot yourself in the foot but realize there is no Gun datatype so use Arrows instead.

You shoot in the direction of your foot, but since you are inside the STM monad you can just retry until you figure out what to do.

You shoot yourself in the foot, but you are perfectly fine as long as you just don't evaluate the foot.

You shoot yourself in the foot, but nothing happens unless you start walking.

Don't forget about memory consumption! If you don't look, the bullet causes heap overflow. If you look, the bullet causes stack overflow.

You *appear* to have deliberately shot yourself in the foot, and yet your program actually runs perfectly OK due to lazy evaluation. (So long as you remember to not look at your foot...)

You aim the gun at your foot, pull the trigger and remove the clip. When you look at your undamaged foot, the hammer clicks on an empty barrel.

-

My two cents: Java is fucking terrible for computer science. Why the fuck would you teach somebody such a verbose language with so many unwritten rules?

If you really want your students to learn about computers, why not C? Java has no pointers, no pass-by-reference, no manual memory management, and lots of obscure class structures and design patterns; this shit is garbage. Students will almost never come into contact with the compiler, and many don't even know a compiler exists.

Java is so enterprise-focused and just fucked up for educational purposes. And I say this as somebody who (still) uses it as my main language.

If you want your students to be productive and learn about software engineering, why not Python? Things are simple in Python and can be done way more easily without students becoming code monkeys (assuming they don't pull in a whole library for each task). I mean, Java takes a whole god damn class and an explicitly declared entry point (which is fucking verbose, btw) just to print something to the console.

Fuck Java.

-

I'm forced to work with C++ on Windows for a course.

0) C++ is fucking unusable without a central repo for managing dependencies. Maybe I'm just too used to Maven, but you shouldn't be downloading DLL files in 2018.

1) Visual Studio can go suck a fat one. I've got a fairly fast PC and it takes fucking forever to load anything. For comparison, Eclipse with all my plugins (and I have a lot) loads in ~10 seconds; VS2017 takes 35.

2) Why the fuck is there no cross-platform compilation for C++? It's supposed to run on everything, right? What's so hard about porting a fucking Linux compiler, so I don't suddenly realize I only have .exe files when I want to work on my laptop on the bus?

3) C++17 (? or whatever the newest is) syntax is like a deep barf on a hot summer day after eating a whole watermelon. It's fucking unreadable and autopointers simply don't work. And it's not even my lack of skill this time; it's the code the other members used, and it worked for half of them.

-

By constantly fucking around with things that interest me. If a topic fascinates me, I'll either look for shit on YouTube, read proper documentation or buy specialized books on it.

Most recently it has been compiler design. I wanna write my own language, for testing and learning more than anything.

I dunno, it keeps shit fun and interesting. Now, much of that shit ain't relevant to what I do in web dev, but it does help keep the mind fresh, and it gives me the chance to eventually invent my own language, write a large system with it, use it at the institution and have them pay me obscene amounts of money to maintain it.

It will be like VB6 or VBScript, but with {}s, immutable values by default and no looping, cuz I am evil AF

-

Coworker: I did not progress much but at least I managed to get rid of all compiler warnings.

Me: That's okay. What were they about?

Coworker: No idea.

Me: How did you get rid of them then??

Coworker: I removed the "-Wall" option when I run gcc.

-

When your new build is compiling and just scooting right along, so you think... sure, I could go for some food. No. Nope. Not even. It chooses the exact moment you leave to nope the fuck out completely with the most random compiler errors, ones you would never have seen had you just stayed in the chair. It's like it knows. Maybe next time I leave I'll promise to bring it back a taco.

-

MSBuild makes me want to blow my brains out.

I know things are much better with .NET Core, and all the lucky people who don't have to deal with .NET Framework can happily move on.

But here I am, a complete idiot. Expecting MSBuild to build the exact same way from the CLI as it does if I run a build in Visual Studio. Expecting the build server to consistently produce the same result as if I built my solution locally.

Demanding meaningful warnings and error messages that don't leave me completely perplexed as to what's actually going on in the compiler.

Fuck me and fuck .NET Framework. Thank you for coming to my TED talk.

-

Finally got TI to cough up their SDK, and I noticed there's no compiler or linker or anything. Turns out I need to use TASM.

...TASM is for MS-DOS or compatible. I'm on Linux.

Well, it went poorly, as usual, specifically like this:

- Tried to automate building with a DOSBox config and a Python script: the output binary was always corrupted. Repeated it manually: TASM mangles its output on DOSBox every time. No PCem or 86Box, and I'm on a Ryzen, so no KVM DOS. Out of luck there.

- TASM Linux build or wrapper? No build, but there is a wrapper! ...wait, it needs... 4 things written by random people, all built from source. I mean, that's not actually that bad... oh, after setting all of them up (and struggling through some autoconf/automake bullshit, since one of the programs only had source for a 2.x kernel and autoconf/automake were not happy about it), it fails because one project has been worked on a lot more and dropped support for working with the other 3... goddammit.

- Community SDK? Several options for this... but all of them need .NET 2 to run on Win9x, don't work in Wine, or require... hey look, TASM! GODDAMMIT!

- DOS on a real machine? It's a massive bitch to shuttle files to and from a real DOS machine quickly, and I can't afford 30 minutes per build when it only takes me 4 minutes to change enough to need testing again.

Why must I suffer like this

-

Learning Rust.

Holy brainfucking brain melt, those references, scoping and borrowing and cloning and whatnot, because there is no garbage collector, but also no direct memory management.

It's cool, but also hard for a noob coming from the JVM/Android. The compiler error messages are helpful, but I immediately found some cryptic ones that don't help me at all.

-

I was stuck on this error for 4-6 days. Did lots of research on Stack Overflow, Google, YT. Asked my peers, tried like hell. Finally, one of my friends told me: "You aren't giving it any input, so how can you expect any output? There's no error, neither in the compiler nor in the code."

Me: ;_;

-