Search - "unix"

-

So, I needed a package installed on one of our Unix servers. The package manager--which is obsolete garbage--was failing with a message which can only be described as a variant of "Go fuck yourself". A quick Google search didn't help.

3 espressos and an eternity later, I have descended into a manic state. My hair has turned grey and I have started lactating. As a last-ditch effort, I try a new search query on Google, and the first link takes me to a forum with a thread discussing a similar issue. The last post in the thread has a solution which works for me. After fixing the issue, everything in the world feels right and I decide to thank the generous poster, who is like an angel to me at this point.

Guess what? The poster is none other than me. 8 months back, I had created a user account on the forum just to post the solution to a similar issue I had on another server. -

One of my favorite quotes:

"UNIX is very simple, it just needs a genius to understand its simplicity."

- Dennis Ritchie -

I guess Unix was made by pervs, as I have to command it to Touch, Finger, Unzip, Strip, Mount, Fsck, Unmount and Sleep

-

"UNIX is basically a simple operating system, but you have to be a genius to understand the simplicity."

-- Dennis Ritchie -

My $1 Unix stickers took 4 days to arrive at my complex in my Bulgarian village. Wonderful Monday to you all, you fucks!

-

If you understand -_-

Why programmers like UNIX:

unzip, strip, touch, finger, grep, mount, fsck, more, yes, fsck, fsck, fsck, umount, sleep😋 -

Every Unix command eventually becomes an internet service.

grep -> Google

rsync -> Dropbox

man -> Stack Overflow

cron -> IFTTT -

!rant

Fun fact

Did you know that there is a UNIX command called "tac" which prints the contents of a file from bottom to top, unlike "cat", which prints them top to bottom? -
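For anyone curious what that looks like outside the shell, here is a minimal Python sketch of the same idea. The function name `tac` is borrowed from the command for illustration only; this is not how the real tool is implemented.

```python
def tac(text: str) -> str:
    """Return the lines of `text` in reverse order, last line first."""
    # keepends=True keeps each line's newline attached, so the
    # reversed output is still one line per row.
    lines = text.splitlines(keepends=True)
    return "".join(reversed(lines))


sample = "first\nsecond\nthird\n"
print(tac(sample), end="")
```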

I really wish I had known this before. Check this out, guys. You can type a Unix command and find out what each option means. Rather than man-paging the commands, this is an easier way to do it :)

-

On Thursday, May 20, 2088 at 9:55:59 PM UTC, my life will be complete: Unix Epoch time will equal 0xdeadbeef.
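The date is easy to check. A small sketch, assuming the timestamp counts whole seconds since 1970-01-01 00:00:00 UTC; the helper name `from_unix` is made up for this example.

```python
from datetime import datetime, timedelta, timezone

# The Unix epoch, as a timezone-aware datetime.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def from_unix(seconds: int) -> datetime:
    """Convert whole seconds since the epoch to a UTC datetime."""
    return EPOCH + timedelta(seconds=seconds)


deadbeef = from_unix(0xDEADBEEF)
print(deadbeef.isoformat())  # date and time in UTC
print(deadbeef.weekday())    # Monday is 0, so Thursday is 3
```

Note that the result is in UTC; in any other timezone the wall-clock time (and possibly the day) will differ.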

-

Facebook made my day: "Your email and resume say Unix-like systems, which is not exactly Unix."

Source: http://imgur.com/hw2pnDt -

My school computers are *the most secure machines* on the planet as per the network admins at school.

A simple Unix command like sudo -i allows you to break into the system with "root" as the password.

Pretty secure, right? -

Don't really have one, but I've got to say that I find it rather cool that Linus Torvalds thought "fuck it, we need an open Unix alternative" and that a very big portion of the world now runs on the kernel he wrote a big part of.

-

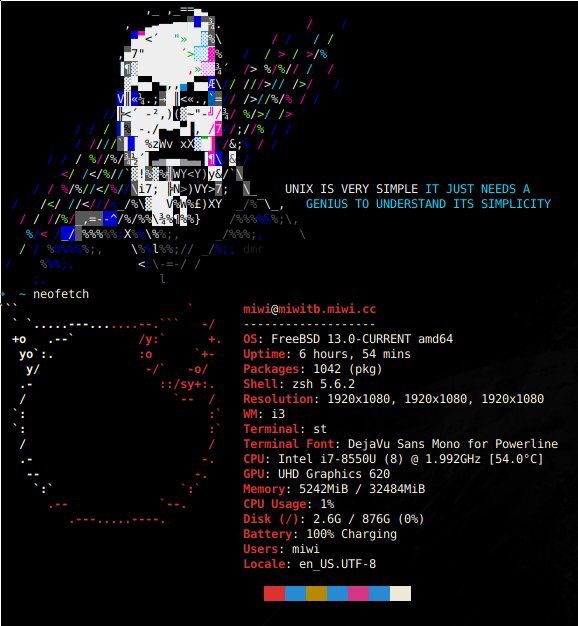

They: What do you do for a living?

Me: DevOps, FreeBSD Administration, Ruby, ...

They: hmm?

Me: administrator for UNIX systems

They: whut?

Me: I do stuff with computers

They: I really like Apple

Me: *sigh*

.... and every time, too... -

More Unix commands are becoming web services. What else can you think of?

Grep -> Google

rsync -> Dropbox

man -> stack overflow

cron -> ifttt -

Oh boy, my fellow devRanters, I just signed a 4-digit monthly salary (that's a lot in Lithuania) job contract. I'm a future Unix infrastructure engineer :o

As per the original concept of ranting, it's been almost two months since I wrote for the stickers and I didn't get a reply >:( -

Me: "Ugh. Soo insensitive.." *angry muttering*

Curious cousin: "Whom? What? Why?"

Me: "My stupid Mac is not case sensitive so I have to mount a Unix partition and reference it from somewhere else. Why wouldn't they just make a case sensitive filesystem like a proper Unix based OS?"

Clearly uninterested cousin: "seriously?! You called your laptop insensitive? I thought you were talking about a guy" ..

Filthy casuals. -

"There are two major products that come out of Berkeley: LSD and UNIX. We don’t believe this to be a coincidence." - Jeremy S. Anderson3

-

After accidentally breaking my database, I had a little fun with Cortana.

I asked her if she likes Linux.

She answered:

Linux, Unix, Tux.. I like words ending in x. It reminds me of the Xbox.

:D -

"Those who do not understand Unix are condemned to reinvent it, poorly."

— Henry Spencer

Usenet signature, November 1987 -

My first semester in college I had a six-week Saturday course on how to use UNIX that ran from 9-12. The professor had a habit of going at least an hour over time each week, so by the fourth week we were getting a bit tired of it.

That particular session, right at noon, he decided to teach us how to message other people on the network. Finally, we made our way over to the wall command a half hour later. Bored to tears, I type the following into my console:

wall "Are we done yet?"

Suddenly, the projector updates:

Kaji says:

"Are we done yet?"

Not realizing my name was going to be attached to it, I sank back into my seat a bit. The professor glared at me for about 5 seconds, then promptly wrapped up. Future class sessions ended on time. -

I am a Unix Creationist. I believe the world was created on January 1, 1970 and, as prophesied, will end on January 19, 2038.
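The prophecy comes from storing Unix time in a signed 32-bit integer. A rough sketch of the arithmetic, assuming second resolution and a counter that wraps to the most negative value on overflow; real systems differ in how they fail.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

INT32_MAX = 2**31 - 1   # largest value a signed 32-bit counter can hold
INT32_MIN = -(2**31)    # where the counter lands if it wraps around

# The last second a 32-bit time_t can represent.
end_of_time = EPOCH + timedelta(seconds=INT32_MAX)

# What a wrapped counter would claim the date is.
after_wrap = EPOCH + timedelta(seconds=INT32_MIN)

print(end_of_time.isoformat())
print(after_wrap.isoformat())
```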

-

"You use a Mac. How cute. You must not know anything about computers, Apple fanboy. Windows is far superior."

"Unix, bitch. Fuck you."

#atleastuselinuxffs -

"Can't we extract the UNIX from Linux and use it as our server operating system?"

fml

Linux isn't even based on UNIX, but this piece of shit of a bitch of a client was so freaking stubborn, and wanted a RAR file of UNIX from me -

How to reproduce:

human - F**k

process - Fork

Is that skeuomorphism of words/sounds by Unix developers? -

Since I've been seeing a lot of Linux distros, here's mine

Not a daily driver, but something to get me used to it -

When I was in college, our email was on a Unix server. We would login via serial connection or telnet over the network, and get a korn shell. The server was poorly secured. Everyone's login device was world writable. So people would just see who was online, see the username of someone they wanted to mess with, take note of the pts(network) or tty(serial) device their connection used, and cat ASCII penises to it.

cat animated_dong.txt > /dev/pts/4

It was a simpler time. -

Today I learned what the GNU acronym stands for:

GNU = GNU's Not Unix!

😂 Why doesn't Computer Science have more acronyms like that? -

Best advice for dev job hunting is work on your soft skills. Don't be a fucking hero, prove your teamwork ability.

Remember all the rules of all religions and social communities can be summed up in one line: "Don't be a dick!"1 -

Did you know..

There is an Easter egg in the Unix man command if you call it at exactly 30 minutes past midnight.

Then it prints "gimme gimme gimme" (all night long).. -

Had a great time yesterday explaining to a C++ dev on a UNIX box that yes, he actually has to shut down his machine before adding this new extra 8 GB of memory...

-

Back in my sysadmin days we had an IT zoo to look after. And I mean it... The Linux side was all right, but Unix.... Most unices were no longer supported. Some of their vendors' companies were already long gone.

There was a distant corner in our estate known to like 2 people only, both of whom had left the company long ago. And one server in that corner went down. It took 2 days to find any info about the device. And connecting to it looked like:

1 ssh to a jumpbox #1

2 ssh to a jumpbox #2

3 ssh to a dmz jumpbox

4 ssh to an aix workload

5 fire up a vnc server

6 open up a vnc client on my workstation, connect to that vnc server [forgot to mention, all ssh connections had to forward a vnc port to my pc]

7 in vnc viewer, open up a terminal

8 ssh to hp-uxes' jumpbox

9 ssh to the problematic hp-ux

..... -

Ever wonder why man prints 'gimme gimme gimme' only at 12:30? https://unix.stackexchange.com/q/...

-

First dev job: port Unix on Transputer, a (now defunct) bizarre processor with no stack, no registers and no compiler. That was fun! And that was in 1991 😎

-

When you're a multimillion dollar company that ships software used by oil and gas and you still haven't moved away from VB6 for extensions while in the 21st century... Well, you're certainly missing out on the accelerating telegram industry.

-

I was looking through old entries in my keepass, and I happened across this bit from when I worked in places that still had unix servers. I was so angry at the impossible input issues they had that I put this into my 'handy commands' section.

-

Just spent 15 minutes trying to explain Unix time and Y2K to a liberal arts major who wanted to know why 2038 is such a huge deal. It was technical, frustrating, and challenging. Kinda like debugging

-

Using a copyrighted image on a website not knowing it was copyrighted. That was stupid and humbling. So caught up in the roots lost sight of the leaves.

Lesson learned: Assume nothing, question everything. -

If I read a plugin description claiming that 777 permissions are required for it to work I swear I am going to fucking punch that idiot "developer" in the face and make sure they never touch a computer again.

If you don't understand the concept of Unix system permissions then stay the fuck away from anything related to it and start a career at the car wash instead of cluttering the web with your bullshit. -
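For anyone unsure why 777 is such a red flag: the three octal digits are the owner, group and "everyone else" bits, and 7 means read + write + execute. A small sketch using Python's standard `stat` module; the helper names `describe` and `world_writable` are made up for this example.

```python
import stat


def describe(mode: int) -> str:
    """Render permission bits the way `ls -l` shows them for a regular file."""
    return stat.filemode(stat.S_IFREG | mode)


def world_writable(mode: int) -> bool:
    """True if users who are neither owner nor group may write to the file."""
    return bool(mode & stat.S_IWOTH)


for mode in (0o777, 0o755, 0o644):
    print(oct(mode), describe(mode), "world-writable:", world_writable(mode))
```

With 777, any account on the machine can modify the file, which is rarely what a plugin actually needs.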

What Unix tool would you like to use in the real world? Like a superpower :)

I would choose grep.

- easy ads filtering with -f

- fast search in books -

Me after a long coding session with a well prepared working flow: I am such a great computer scientist, I can conquer the world.

Right after that I found a repository for computer science papers and got immediately hooked. Well, the level of knowledge and theory is so immense that it brought me back down to reality again: I know so little that it is almost ridiculous. Even if I read and code 16 hours a day, I may never understand computer science as a whole.

Le me sad. -

Dennis Ritchie.

Can't imagine life without C. I mean, what if C hadn't even been invented? What if Unix wasn't there?

Dayumn!!! -

Yknow what the best part about Unix is? (Not Linux. Like old school Unix. AIX, HPUX, or in this specific case: Solaris)

It never needs to be updated. Like, ever. Even when updates from 5 years ago added features that GNU has had for literally decades. Updates are for the weak. Because why should I be able to type "netstat -natup" when instead you can enjoy several hours of developing the nightmare one-liner that is:

pfiles /proc/* | awk '/^[0-9]/ {p=$0} /port/ {printf "%.4s %-30s %-8s %s\n", $1,$3,$5,p}' 2>/dev/null

Isn't that just so much more fun?!

Thanks guys. I'm going back to GNU now if you don't mind. -

Oldie but goldie:

"I started using vim a while ago and haven't stopped ever since. Mainly because I don't know how to exit..."4 -

Why devs like Linux/Unix :

unzip, strip, touch, finger, grep, mount, fsck, more, yes, fsck, fsck, fsck, umount, sleep -

I'm taking an entrepreneurship course and there is this one guy who talks empty words just to impress others.

He uses Linux and in every single class he opens the terminal just to run the commands "sudo apt update" and "sudo apt remove"; the rest of the time he is on Discord.

He always switches to the terminal so you can see what an 'expert' he is -

"Linux is more secure." Put on your tin-foil hats. As you can:

>Root over 50% of linux servers you encounter in the wild with two easy scripts,

Linux_Exploit_Suggester [0], and unix-privesc-check [1].

(sauce: Phineas Phisher - http://pastebin.com/raw/cRYvK4jb) -

In case anyone's interested: Unixstickers currently has a sale going on, where you can get the pro-pack for $1 with free worldwide shipping

https://unixstickers.com/products/... -

Idk if anyone here noticed.... sudo sounds like 速度 in Chinese, which means speed. So every time I use this command I just feel like I'm rushing the computer to do something for me

-

Is it just me, or does anyone miss logging into a Unix/Linux machine, doing a 'w' or 'who' and seeing a long list of folks all using the machine simultaneously? I still reflexively run 'who' as soon as I log into any real or virtual Unix or Linux machine and I am still slightly disappointed to find I'm all alone on it.3

-

What are your most used commands? Find out by running:

history | awk '{a[$2]++}END{for(i in a){print a[i] " " i}}' | sort -rn | head -1

-
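The one-liner above counts the second field of each `history` line (the command name) and sorts by frequency. Here is the same idea as a Python sketch, assuming each input line looks like `  42  git status`, i.e. a history number followed by the command. The function name `top_commands` and the sample lines are invented for illustration.

```python
from collections import Counter


def top_commands(history_lines, n=10):
    """Count how often each command name appears and return the n most common."""
    counts = Counter()
    for line in history_lines:
        fields = line.split()
        # fields[0] is the history number, fields[1] the command itself.
        if len(fields) >= 2:
            counts[fields[1]] += 1
    return counts.most_common(n)


sample = [
    "  1  ls -la",
    "  2  git status",
    "  3  ls",
    "  4  git commit -m x",
    "  5  git push",
]
for command, count in top_commands(sample, n=2):
    print(count, command)
```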

"Unix filenames are case-sensitive?! Hur hur, that must be really confusing!"

Well, no, if you're not a fucking mouth-breathing cretin it isn't. -

You know what? I realized something. And I'm gonna brag about it. I taught myself Laravel, Vue, JavaScript, basic Unix server admin stuff and more, all without ever asking a single question on a forum.

Basically out of laziness, and impatience, though.

Still, go me. -

Give everyone the ability to intuitively grasp the concept of unix timestamps.

No more timezones or DST, no more confusion about formats. -

So I am the resident Linux Guru and a contract manager asks me who wrote rm. I guessed and said Dennis Ritchie.

"I thought you were the UNIX Guy" he says. He goes on to claim that Robert Morris wrote it and named it rm after his initials. ... In front of the whole team he did this. Ok.

Did some research and even contacted Robert T Morris at MIT (his son) and he pointed me to a site with documentation from the initial UNIX research at Bell Labs, where his dad did in fact work with Dennis Ritchie and Ken Thompson. Turns out, I was right.

-

Me: *give my reviewer about Unix in our Operating Systems lesson for the quiz*

The friend I gave it to:

-

If you want to learn about sockets, I recommend reading this guide.

http://beej.us/guide/bgnet/...

It does require some previous knowledge of Unix things, but is easy to follow otherwise -

Master Foo and the Script Kiddie

(from the Rootless Root Unix Koans of Master Foo)

A stranger from the land of Woot came to Master Foo as he was eating the morning meal with his students.

“I hear y00 are very l33t,” he said. “Pl33z teach m3 all y00 know.”

Master Foo's students looked at each other, confused by the stranger's barbarous language. Master Foo just smiled and replied: “You wish to learn the Way of Unix?”

“I want to b3 a wizard hax0r,” the stranger replied, “and 0wn ever3one's b0xen.”

“I do not teach that Way,” replied Master Foo.

The stranger grew agitated. “D00d, y00 r nothing but a p0ser,” he said. “If y00 n00 anything, y00 wud t33ch m3.”

“There is a path,” said Master Foo, “that might bring you to wisdom.” The master scribbled an IP address on a piece of paper. “Cracking this box should pose you little difficulty, as its guardians are incompetent. Return and tell me what you find.”

The stranger bowed and left. Master Foo finished his meal.

Days passed, then months. The stranger was forgotten.

Years later, the stranger from the land of Woot returned.

“Damn you!” he said, “I cracked that box, and it was easy like you said. But I got busted by the FBI and thrown in jail.”

“Good,” said Master Foo. “You are ready for the next lesson.” He scribbled an IP address on another piece of paper and handed it to the stranger.

“Are you crazy?” the stranger yelled. “After what I've been through, I'm never going to break into a computer again!”

Master Foo smiled. “Here,” he said, “is the beginning of wisdom.”

On hearing this, the stranger was enlightened. -

Brush up on your Unix skills while supporting charity and O'Reilly Media: there's a Unix book humble bundle! Will not be equally interesting for all, but worth a look.

https://humblebundle.com/books/... -

Anyone else here who uses the 'clear' command excessively on Unix systems?

I hate it when I want to move on to the next thing I have to do with a messy screen full of text. cls and clear are my favourites -

Back in college, one of my professors, who was teaching an Introduction to UNIX class, said:

"head -n 2 file.txt" displays the first 2 lines of file.txt.

I asked: and to show the last 2?

"Don't be a fool, +n instead of -n," he answered.

RIP UNIX ... -
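For the record, the last lines of a file are usually shown with `tail -n 2 file.txt`, not with a different sign on `head`. The difference between the two is easy to sketch in Python; the function names below just mirror the commands and are not their real implementations.

```python
from collections import deque
from itertools import islice


def head(lines, n):
    """First n items: stop reading as soon as n lines have been seen."""
    return list(islice(lines, n))


def tail(lines, n):
    """Last n items: read everything, but only ever keep the newest n."""
    return list(deque(lines, maxlen=n))


lines = ["one", "two", "three", "four"]
print(head(lines, 2))
print(tail(lines, 2))
```

The `deque` with `maxlen` is the design choice worth noting: it lets `tail` work on a stream of any length while holding only `n` lines in memory.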

Guys, I know a programmer is never judged by the OS he uses, but fuck me, I want to bite off my fingernails when installing Windows... (never touched anything but Unix for the last 5 years) :( -

Professor: "I've been using java for many, many years and know close to everything."

Student: "How do I compile my code on the unix server?"

Professor: "uhh.... I don't know."

Let's start hiring people who actually know what they're doing. -

I love Unix, Linux all that shit (macOS can fuck off though). But why WHY WHY does every pissing update have to break something?! Guys I need fucking networking, not even intertubes just basic networking.

Anyway, I've come up with a solution. It is quicker for me to install a new OS and restore files from backup than to fix it, so that's what I do. -

The more I study IT and programming languages, the more I'm leaving Windows for Unix. Windows feels so heavy, I don't know why.

-

Every Unix command eventually becomes an internet service.

grep -> Google

rsync -> Dropbox

man -> Stack Overflow

cron -> IFTTT

Anything more you can think of? -

So Microsoft doesn't ship the ODBC drivers for Access on their new distros of Office because they run in an isolated environment... THEN WHAT GOOD IS YOUR FUCKING EXPORT TO ODBC OPTION? YOU'RE MAKING MY JOB SO MUCH HARDER THAN IT NEEDS TO BE.

-

If you need to relax, watch this documentary from the year 1982 about UNIX and C at Bell Labs with Dennis Ritchie, Ken Thompson and Mr. Super Cool Brian W. Kernighan.

https://youtu.be/tc4ROCJYbm0

amazing right? -

Writing 'echo var' instead of 'echo $var' in a shell has the result of the system successfully dad joking you

-

Dennis Ritchie and Ken Thompson, without whom there would be no C or Unix. Titans. Fountainheads of technology.

-

Biggest hurdle I have overcome is myself.

All my expectations, worries, fears, and doubts definitely caused major hurdles I had to crash through, trip and fall into, or they downright exploded into balls of fire as I would stand dumbfounded and burned by flames of regret.

Learning I was the blocker to greater achievement, success and ultimately happiness was a very hard lesson for me to learn, and a lesson and discipline that I still battle with today.

It is difficult to climb the seven story mountain of madness with heavy burdens, plodding with little progress.

Free the weight, and the natural warm air currents will lift high the spirit, and the body will follow.

"Angels fly because they take themselves lightly" ~GKC1 -

!rant

Found out about JetBrains student program and asp.net unix support and now I am the happiest person on earth!😊🙂😋 -

I don't know if we can be friends...

I don't like cable art, my desk is messy (no setup), no stickers on my Mac, don't care whether I have to use Mac or PC or Unix, I don't code at night.....anymore, I don't have a problem with ;

But....I love coffee. -

"One of my most productive days was throwing away 1,000 lines of code." - Ken Thompson (co-creator of Unix and Go)1

-

Cause heaps of YouTube tutorials are crap nowadays, I’d like to collect your favorites here. And when you are in need look up good channels here.

I’ll start with mine:

Jacob Sorber (C / Unix)

Creel (general low level stuff)

The Cherno (C++) -

UNIX is basically a simple operating system, but you have to be a genius to understand the simplicity.

-

So the Computer Science department at my university has a shelf where people can give away technical books they don't need anymore. I found a giant UNIX system administration book from 1995 there the other day, and I am blown away by how many useful things I could find in such an old book; basically all of the Unix flavours mentioned are long dead (with the exception of BSD and Linux ofc), and so is 99% of the software, but all of the core concepts and basic tasks still hold true in 2018... Isn't that amazing? :D Where else can you find a system that still works the same after 23 years?

-

Welcome to the Unix stack exchange network where ...

FUCK YOU! I AM THE UNIX KING AND YOU SHOULD BE ASHAMED FOR NOT KNOWING WHAT I KNOW -

“MacOS is derived from BSD/Unix while Linux is derived from Minix/Unix. They both have similar, but not identical, toolchains.”

So, what about them is similar and what about them is different? -

"This is the Unix philosophy: Write programs that do one thing and do it well. Write programs to work together. Write programs to handle text streams, because that is a universal interface." -Doug McIlroy

In today's context we can draw parallels with the microservice approach towards building software. -
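As a rough illustration of the quote, here is a toy pipeline in Python where each stage does one thing and hands a stream of text lines to the next, much like `grep unix | tr a-z A-Z | head -n 2` would. The stage names are invented for this sketch and are only loose analogues of the real tools.

```python
from itertools import islice


def keep_matching(needle, lines):
    """One job: pass through only the lines containing `needle`."""
    return (line for line in lines if needle in line)


def shout(lines):
    """One job: upper-case every line."""
    return (line.upper() for line in lines)


def first(n, lines):
    """One job: stop after n lines."""
    return islice(lines, n)


source = ["unix rocks", "windows", "unix time", "unix pipes"]
# Stages compose because they all speak the same interface: a stream of lines.
pipeline = first(2, shout(keep_matching("unix", source)))
result = list(pipeline)
print(result)
```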

Man, people have the weirdest fetishes for using the most unreadable acrobatic shell garbage you have ever seen.

Some StackOverflow answers are hilarious, like the question could be something like "how do I capture regex groups and put them in a variable array?".

The answer would be some multiline command using every goddamn character possible, no indentation, no spaces to make sense of the pieces.

Regex in unix is an unholy mess. You have sed (with its modes), awk, grep (and grep -P), egrep.

I'll take JS regex any time of day.

And every time you need to do one simple single goddamn thing, each time it's a different broken-ass syntax.

The resulting command that you end up picking is something that you'll probably forget in the next hour.

I like a good challenge, but readability is important too.

Or maybe I have very rudimentary shell skills. -
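For comparison, the "capture regex groups into an array" task from that hypothetical StackOverflow question is short in a language with a single regex dialect. A sketch in Python rather than shell or JS; the `key=value` input format and the names `PAIR` and `capture_pairs` are assumptions made up for the example.

```python
import re

# Two capture groups: a word-like key and a numeric value.
PAIR = re.compile(r"(\w+)=(\d+)")


def capture_pairs(text):
    """Return every (key, value) pair found in `text` as a list of tuples."""
    # With more than one group, findall returns one tuple per match.
    return PAIR.findall(text)


pairs = capture_pairs("cpu=93 mem=41 disk=7")
print(pairs)
```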

My Unix class

👨💻 using a nice-looking theme for VS Code to edit my bash script

Prof: That's a nice-looking theme (he thought it was a vim theme)

Me: um.. um.. It's VS Code, the new guy in town

Prof: uh! 🤔

Me: (5 sec silence) um, It's from Microsoft

Prof: GET OUT! -

I normally just have nightmares about the projects I'm working on, especially when I struggle with a bug for days. Those are usually about just me stressing out about it. However, I have a lot of dreams about computers/technology, not necessarily coding-related:

- datacenters were just potato fields. If you went to work the field, you'd go data mining

- in Biology, when being taught how having children works, you were only told that "parenting is only chmod-ing the rights of your children until they become the owners themselves"

- IP addresses with emojis instead of numbers were a standard now and they actually managed to replace IPv4, because everyone was so into emojis. They named it IPvE

- I witnessed a new Big Bang when the 32-bit Unix time overflowed in 2038, and we were all quantum bits -

Got my unix stickers today 🤓

Thanks to @gitlog for telling me about this and also to the person who posted it here for the first time -

Somebody has to say it.

React is a lot more trouble than it's worth and has fewer good ideas than people give it credit for. It's a great tool for any other context that's not the browser, and the only reason it's the new cool kid in town is because Facebook made it so, and because x-rays went nowhere. -

"An air sickness bag, printed with the phrase "UNIX barf bag", was inserted into the inside back cover of every copy by the publisher.”

https://en.m.wikipedia.org/wiki/... -

Translating win32 calls to whatever the hell there is in Unix and Unix-like OSes (well, most of them) in order to port a certain game net code library and dear god why did I volunteer myself for this task

At least pevents is there to help, but too bad cmake doesn’t want to compile it with the flag I need (“-DWFMO”) in order to make the “WaitForMultipleEvents” method work at all. Instead, no matter what options I give it on the command line or how I tell VS Code to do it, it seems to give me the finger to my fucking face.

Doing it for games on the cooler OSes... doing it for the community... come on... -

My coworker has a Windows machine and wants to work on Unix, and is bitching about all the distros and the ugliness of their interfaces and missing software, and I'm just here on my Mac like....

-

Well, they are just fucking kidding

Unix guru on entry level... That moment when you realise that the client is too lazy to pick the intermediate or expert option, but expects somebody to do everything -

Hi, I am a Linux/Unix newbie.

Are there any other command-line tools like grep?

I want to take a look at them and think about whether they would be good for a small school project or not. -

Recently got around to dual booting my home machine with Ubuntu.

Now every time I log in, in my head I hear the kid from Jurassic Park exclaim "this is a Unix system!" -

To all Linux-, Unix-, MacOS-Users here.

Stop whatever you're doing,

Install Elvish-Shell,

Forget what you wanted to do because you get distracted by this awesome piece of software,

Eventually continue what you were doing in a few hours. -

Hello! My name is opalqnka and I am a Windows user.

I followed the 12-step program and now I am a step away to being certified LPIC Linux Engineer.

As step 12 preaches, here I humbly share the Program:

1. We admitted we were powerless over Windows - that our lives had become unmanageable.

2. Came to believe that a Power greater than ourselves could restore us to sanity.

3. Made a decision to turn our will and our lives over to the care of Linus as we understood Him.

4. Made a searching and fearless moral inventory of ourselves.

5. Admitted to Linus, to ourselves and to another human being the exact nature of our wrongs.

6. Were entirely ready to have Linus remove all these defects of character.

7. Humbly asked Him to remove our shortcomings.

8. Made a list of all persons we had harmed, and became willing to make amends to them all.

9. Made direct amends to such people wherever possible, except when to do so would injure them or others.

10. Continued to take personal inventory and when we were wrong promptly admitted it.

11. Sought through bash scripting and kernel troubleshooting to improve our conscious contact with Linus as we understood Him, praying only for knowledge of His will for us and the power to carry that out.

12. Having had a spiritual awakening as the result of these steps, we tried to carry this message to Windows users and to practice these principles in all our affairs. -

*Emojis in UNIX*

- Open Cheese

- Open terminal

- Type :(){:| &};:

- See emojis formed live on Cheese -

Can't we already get to a point where we have one single fat datetime format in CS...

ISO this, RFC that, UNIX those -
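To make the complaint concrete, here is one and the same instant rendered three ways with Python's standard library. A sketch only: the function name `three_views` and the dictionary keys are made up, and "RFC" here means the RFC 2822-style date that `email.utils.format_datetime` produces.

```python
from datetime import datetime, timezone
from email.utils import format_datetime


def three_views(unix_seconds: int) -> dict:
    """Render one instant as ISO 8601, an RFC 2822-style date, and Unix time."""
    instant = datetime.fromtimestamp(unix_seconds, tz=timezone.utc)
    return {
        "iso8601": instant.isoformat(),
        # usegmt=True requires a UTC datetime and prints "GMT" as the zone.
        "rfc2822": format_datetime(instant, usegmt=True),
        "unix": int(instant.timestamp()),
    }


views = three_views(1_000_000_000)
for name, value in views.items():
    print(name, value)
```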

After the fucking graduation day, I got my Unix stickers. Man, I thought I would never get them; they re-sent them with a change of address. Wonderful service. -

GNU is a recursive acronym for "GNU's Not Unix", and ironically unixstickers.com is mostly selling GNU/Linux stickers.🤔

-

- scripting some Unix helper script

- searching google and stackoverflow for simple solutions:

"Just use 'sed --akeib )-£?"/'/#'. Can't you read man pages ffs?"

"Dude, awk is totally the thing you want to use for this kinda thing!"

"Honestly, have you ever heard of perl?"4 -

That moment when you've been working on unix based project with vim for several days and you get back to Eclipse and always type :wq to save your file ...

-

Generator functions should be treated like sorting algorithms: Not worth your time if all you have is 4 or fewer async instructions.

Callback hell is actually kind of nice and warm when you're just a few levels down. If you're really confused by your obfuscated code, you suck at node. -

Since Friday devRant posts where errors are introduced by very dumb things like commas have stood out to me.

Today's error fix. Line 1352. A string input defined in the spec file was set for 13 length. The body file had 12 dashes to represent this input.

Really, one dash, four days to solve.

Oh and Unix over Windows because my compiler on Windows didn't catch it but the Unix one sure did which is how I found it. -

Compiling on Windows feels like an Internet Browsing Simulator.

Really shows how incredible the central repository and system package management systems of Unix are.

Now... Back to downloading the remainder of the required libraries. -

https://thehackernews.com/2024/02/...

44 years after Unix, Windows gets sudo. I'm proud of the little guy. He's starting to grow up! -

So, you all may remember my rant about my visual impairment. This dude applied the UNIX philosophy to glasses: They do one thing, and one thing well. He actually calls them "task-specific glasses" but come on now, that's the OG UNIX concept!

No, I will not have to have surgery - supposedly. The glasses he builds are ~$25,000. He said that when we go over spring break, he'll determine if we can go from 98% to 100% positive. If this can be pulled off, my life could be forever changed, for the better, hopefully...3 -

Without Unix, there would have been no Minix (Tanenbaum et al.) or GNU (Richard Stallman et al.).

Without Minix, there would have been no inspiration to write Linux. Remember that Linus started his “project” because he didn’t like many of the design decisions Tanenbaum had taken in Minix, including the microkernel. In fact, Linus tried to submit some changes to the professor, and the latter rejected them. So the young chap decided to write his own kernel using his own design.

Without GNU, there would have been no open source tools for Linus to write, compile, test and distribute his project, which a few years later became a global phenomenon. Also, the fact that GNU was already an established Unix clone (minus a working kernel) at that time helped Linus focus on the missing part, the kernel. Otherwise, he would not have known where to start.

And finally, Unix was the template all of the above (and more) were trying to imitate. Without it, there would have been nothing to clone from.

-

Trying a new font for general use. This font was one of the options for powerline that's based on the terminal fonts from the mid 70's. It's kinda funny how much tech has changed and yet how little of it really has.

I won't use it for dev work though. That credit goes to Fira Code. -

Had a customer today who claimed that crontab did not work as it should, and that he had proof of that (in the form of some very abstract logs).

Well, I guess no one can rely on one of the most fundamental binaries in the Unix world. -

New job had me working on a Mac.. great! Let's give this a shot and see what the fuss is about.

One year later and I can now knowledgeably say that macOS is balls slow, the worst Unix I've used day to day, Debian/Ubuntu 4 lyf. -

When you learn to work with Unix but you forget what "man" stands for because English is not your native language, so you man man...

It's "manual" btw... -

Catastrophic Unix failure :(

Full server rebuild ( samba, Apache, cloud) adding back all little tweaks

Thank God for daily backups (full backup restore failed to solve the problem, probably some weird pam issue)

FML 😄 -

Must be K&R, Ken Thompson for being the inventors of Unix and C. Also, lots of authors of various books prescribed in college

-

Lost 1.5 hours on Java trying to write to a file until the user enters EOF. Filled the program with .flush(). Then @freddy6896 points out that I was using Ctrl+C instead of Ctrl+D, killing the process before the .close() :/
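The same trap can be sketched in Python instead of Java (a swapped-in language, not the poster's code). On a Unix terminal, Ctrl+D signals end of input, so the read loop finishes normally and the output gets flushed and closed. Ctrl+C sends SIGINT, which by default stops the program before it gets that far. The function name `copy_until_eof` and the sample text are made up for illustration.

```python
import io


def copy_until_eof(src, dst):
    """Copy lines from src to dst until src reports end of input.

    Returns the number of lines copied. The name is illustrative only.
    """
    count = 0
    # Iterating over a text stream stops at EOF, which is what
    # Ctrl+D produces on a Unix terminal when src is sys.stdin.
    for line in src:
        dst.write(line)
        count += 1
    dst.flush()
    return count


# Simulated input; a real program would pass sys.stdin and an open file.
typed = io.StringIO("first line\nsecond line\n")
saved = io.StringIO()
lines_copied = copy_until_eof(typed, saved)
```

Because the loop ends on EOF rather than on a signal, no amount of extra flushing is needed: the cleanup simply runs once the input is exhausted.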

-

Unix is so incredibly beautiful. Everyday I discover something new that makes me fall even deeper in love with it.

For real, I've become a huge YAGNI fan over the last few years and Unix is pure yagni. It's so beautifully pragmatic and simple yet flexible and powerful14 -

Was invited to interview by an agent for a 1yr contract position. Was accepted for a permanent position with a bigger salary instead. Now I’m a Unix Infrastructure Engineer

-

Can anyone suggest a good book for learning how an OS works

Working of microprocessor

Unix

C

C++

A book for complete software development from noob to expert -

Me writing Bash when I only knew Bash: ah yes ageless, timeless, forged in the crucible of 30 years of Unix production systems, an elegant weapon from a more civilized age

Me writing Bash after 6 months of Python: wtf is this shit2 -

What happens to the Unix timestamp on Friday, 11 April 2262 23:47:16.855? It reaches its maximum value.
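That date lines up with a signed 64-bit counter of nanoseconds since the epoch. The post does not name the unit, so treating it as nanoseconds is an assumption here; a 64-bit counter of whole seconds would last vastly longer. A rough check, with `max_nanosecond_timestamp` as a made-up helper name:

```python
from datetime import datetime, timedelta, timezone

MAX_INT64 = 2**63 - 1  # largest signed 64-bit value
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def max_nanosecond_timestamp():
    """Return the UTC moment at which a signed 64-bit nanosecond counter tops out.

    Python datetimes only hold microseconds, so the last three
    nanosecond digits are truncated.
    """
    seconds, nanos = divmod(MAX_INT64, 1_000_000_000)
    return EPOCH + timedelta(seconds=seconds, microseconds=nanos // 1000)


limit = max_nanosecond_timestamp()
```

Here `limit` falls on 11 April 2262 at 23:47:16 UTC with a fractional part of roughly 0.855 seconds, which agrees with the time quoted above.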

-

Q: What do you get when you create a homebrew query language that uses both the stream oriented principles of Unix data pipes and the relational ideas underlying an RDBMS and use incomplete documentation to support it?

A: A frustrated borderline homicidal engineer.2 -

Isn't it ironic that most operating systems today are based on Unix, while "Unix" sounds like the word "unique"?

-

So I had this JSON thingy, where I named the property containing a datetime string "timestamp".

For some reason, JS decided to convert that into a unix timestamp int on parse. Thx for nothing.6 -

I don't want to start a war here, but I love the power of vim.

I prefer vim.tiny because I can find it on practically any UNIX-based machine - out of the box. Copy over my .exrc and I'm already rolling.6 -

In Unix everything does one thing and does it well. Except emacs which does everything really badly1

-

Happy April Fools! Hope you all performed an obligatory `sudo rm -rf *` on at least one of your coworkers today!2

-

Next time you think you’re failing at a new open source venture, remember the stories of your UNIX ancestors (eunuchs ancestors?) ;)

Unix at 50: How the OS that powered smartphones started from failure

Today, Unix powers iOS and Android—its legend begins with a gator and a trio of researchers.

https://arstechnica.com/gadgets/...2 -

I’m still not sure whether /usr stands for Universal System Resources, Unix System Resources or User System Resources :)

-

Yes, you did nothing... You just restarted those 3 servers on Hyper-V without changing anything... And you did not change the settings of 2 Windows Servers to a static amount of RAM. And you also did not change the settings of the Unix server to a dynamic amount of RAM. And this has absolutely nothing to do with the constantly crashing apps on the Unix server since then, because it does not support Dynamic Memory.

Fuck all those people who claim that they did nothing, even though it's obvious. -

I love my Mac but damn, most macOS releases are so damn useless. I won't do a major OS overhaul (updating from Big Sur to Monterey) just to get SharePlay and the opportunity to watch movies together with my few Mac-using friends. I don't need those fucking marketing-driven bells and whistles, just give me a stable UNIX base, an efficient and good-looking UI and regular security patches and I'm good.

I would be happy to keep using Mavericks, but without a yearly macOS release how would Apple be able to convince normies to replace their 10-year-old MacBooks? -

Worst project: porting SCO Unix on Chorus Microkernel. That was in 1995. Great project BUT after months of efforts finally SCO pulled the plug, a few weeks before we were about to get the kernel to boot ... Aarrgghh

-

I don't know if I'll read them all, but I absolutely had to buy this fantastic Unix book bundle!!

http://bit.ly/2gCT3mo3 -

After dealing with npm libs access permissions for an hour, glorious chmod -R 777 came to the rescue.2

-

Any suggestions on (pls, cheap) books that'd enable me to understand the Unix/Linux environment at its most fundamental level?

I've been using Linux for some 10 years already, but just now I moved to a distro that doesn't do everything for me. So my basic idea is to get the basics so I'd have the fewest problems when transitioning to other distros/*ix systems, so pls, the less distro-specific it is, the better.

Thx5 -

The unix shell syntax is weird looking.

Gonna learn it next. Using Arch without knowing how some scripts work isn't optimal.1 -

By chance, anybody heard of any plans to move Windows over to a Unix based something? These back slashes are driving me crazy!!!!2

-

I want to look a bit into other unix-like operating-systems, apart from Linux, so BSDs and such.

Can any of you tell me what to start with, or where? -

So apparently the variable names foo and bar come from an old military term FUBAR which means f-ed up beyond all recognition.... Those OG UNIX guys hid their memes really well

-

Anyone got recommendations on a monitor setup above 1080p and 24" for developing in a Unix environment? Don't know if I should go vertical, dual, or widescreen. I've seen research that dual monitors create distraction. Thoughts?

-

Wow old MS, just wow. Why does running ASP.NET with VS in W7 on my potato laptop absolutely annihilate it, while running ASP.NET Core with VSCode (in UNIX) is blazingly fast?

-

I read. A lot. When I'm going to do something simple, even if I've done it before, I google a bit on "how to do x" and see if there are better ways to do it. And I follow the rabbit hole a bit to see where it goes.

Example: I just learned about the Unix command mktemp. I wasn't googling about making temporary files, but it was part of a solution to a different problem. I've discussed how to make temporary files with colleagues before, and this built-in Unix command has never come up. So many minutes wasted coming up with random filenames. -
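For the mktemp discovery above, a rough Python counterpart is `tempfile.mkstemp` from the standard library, which also picks a unique name and creates the file in one step. This is only a sketch: the helper name `write_scratch_file` and the `scratch-` prefix are invented for illustration.

```python
import os
import tempfile


def write_scratch_file(text):
    """Write text to a freshly created temporary file and return its path.

    The caller is responsible for deleting the file afterwards.
    """
    # mkstemp creates the file with a unique name and returns an open
    # OS-level descriptor plus the path, so no name has to be invented.
    fd, path = tempfile.mkstemp(prefix="scratch-", suffix=".txt")
    with os.fdopen(fd, "w") as handle:
        handle.write(text)
    return path


path = write_scratch_file("hello")
with open(path) as handle:
    content = handle.read()
os.remove(path)  # clean up, like rm after mktemp in a shell script
```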

Hey! I wrote "Memories of writing a parser for man pages". What do you think about it?

https://monades.roperzh.com/memorie... -

What if one day UNIX time reverses and starts counting down to January 1, 2070? When it reaches zero, it will be the end of the world.

-

I have a Macbook with 128GB PCIe SSD that's very pricey to upgrade. Considering switching to another UNIX distro but will that solve my storage problem or should I bite the bullet and just upgrade it? Or both?5

-

Hey guys, why would you use Linux over Mac OS X since they are both Unix-based? Are there things Linux can do and OS X can’t? Thx!

-

Can someone explain Linux to me? I can't see through Unix, Linux, GNU, mingw and all those things which somehow seem to be in the same context, but I would like a tl;dr to save my time :)

-

I am stuck on a UNIX legacy project. This is the perfect time to learn awk, sed, vim. I use them but I wanna get better.

I can read a book, course, but I WANT to write code, maybe something like the hackerrank challenges but harder.

How do I get advanced in awk, sed, vim?5 -

It is clear to everyone that Windows is a piece of crap in every possible way.

But I don't understand one thing. Unix existed before Windows. It has always been superb.

Why did Microsoft develop a whole new crappy OS, instead of keeping Xenix (I know it was licensed) or forking a Unix-like OS like Apple did with NeXTSTEP?

Imagine a world where every os is unix like. So wonderful.2 -

I learned to program at university. I remember my favourite practice exam was in AI, where I used Lisp on a Unix server to code a "find your way out of the labyrinth" problem. Everyone else used Pascal on PCs. I don't know why 😃

-

An example-driven overview of the jq command using NASA NeoWs API

https://monades.roperzh.com/weekly-... -

Passwordless Unix login leading to a console menu. You can then FTP in for free and remove ~/.login . Boom ! Shell access! And I already had a superuser access from another "dialog" asking to confirm a dangerous action with the superuser's password. Boom! Root access !!

-

Dear Lord, please stop people from enforcing standards and bypassing them themselves.

Take kubernetes for example. Since v1.24 CRI has been announced as the standard, and kubernetes is shifting to live by it.

But it's not.

Yes, it's got the CRI spec defined and the unix://cri.sock used for that standardised communication. What nobody's telling you is that that socket MUST be on the same runtime as the kube. I.e. you can't simply spin up a dockerd/containerd/cri-o server and share its CRI socket via CIFS/NFS/etc. Because kube-cp will assume that containerd is running on the same host as cp and will try to access its services via localhost.

So effectively you feed the container via a socket to another machine, it spins up the container and that container tries to

- bind to your local machine's IP (not the one the container is running on)

- access its dependencies via localhost:port, while they are actually running on your local machine (not the CRI host)

I HOPE this will change some day. And we'll have a clear cut between dependencies and dependents, separated by a single communications channel - a single unix socket. That'd be a solution I'd really enjoy working with. NOT the ip-port-connect-bind spaghetti we have now.4 -

!Rant

What's everyone's favorite operating system? I've been using Ubuntu for years now and I actually have no idea why as I have no real reason to be using it other than people tell me it is good.

What is everyone's favorite (unix) OS and why?1 -

Unix Epoch should have started in 2000, not 1970.

Those selfish people in the 1970s who made up the Unix epoch had little regard for the future. Thanks to their selfishness, the Unix date range is 1901 to 2038 with a signed 32-bit integer. Honestly, who needs dates from 1901 to 1970 these days? Or even to 1990? Perhaps some ancient CD-ROMs have 1990s file name dates, but after that?

Now we have an impending year 2038 problem that could have been delayed by 30 years.
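The range can be checked with a few lines of Python, assuming the counter is a signed 32-bit number of seconds. The lower bound works out to December 1901. The 2000-01-01 epoch below is the hypothetical one proposed in this post, and `int32_range` is a made-up helper name.

```python
from datetime import datetime, timedelta, timezone

INT32_MIN = -2**31
INT32_MAX = 2**31 - 1


def int32_range(epoch):
    """Return the earliest and latest moments a signed 32-bit
    seconds counter can represent relative to the given epoch."""
    return (epoch + timedelta(seconds=INT32_MIN),
            epoch + timedelta(seconds=INT32_MAX))


unix_epoch = datetime(1970, 1, 1, tzinfo=timezone.utc)
alt_epoch = datetime(2000, 1, 1, tzinfo=timezone.utc)  # hypothetical epoch

unix_low, unix_high = int32_range(unix_epoch)  # Dec 1901 .. Jan 2038
alt_low, alt_high = int32_range(alt_epoch)     # window shifted 30 years later
```

Moving the epoch does not widen the window, it only slides it: the rollover would land in 2068, at the cost of losing every date before the early 1930s.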

If it started on 2000-01-01, the Unix epoch would be the number of seconds elapsed since the turn of the century and millennium. -

I love linux but it doesn't run Windows applications natively, but Windows doesn't have the awesome UNIX shell so I'm kinda stuck6

-

A web application that let you read a file from a UNIX server. Problem: if you changed the URL, you could view any file, including any password file

-

What should my next book be? I’ve narrowed down to these—

A Commentary on Unix by John Lions

Clean Code by Robert C. Martin

Code Complete by Steve McConnell

SICP by Gerald Jay Sussman

Feel free to suggest any other book as well7 -

My first day of the "pool" at my school has ended. The first day was about learning how to properly use Unix systems. The "Moulinette" has to run and validate the results, but we can't call it when we want. It's like a debugger that only runs at fixed hours...

-

Hey pals, do you know any good RSS feeds about programming, Unix, hacking, ...?

Can be in German or English.8 -

f***ed with Nginx + php7.0 FPM

connect() to unix:/run/php/php7.0-fpm.sock failed (11: Resource temporarily unavailable) while connecting to upstream

#socket_vs_tcpip -

For me it must be the really specific things in the PHP core that are kept for historic reasons, especially this:

"easter_date — Get Unix timestamp for midnight on Easter of a given year" -

Spoonfeeding level 6666 and it's still failing !!!

Me: "...when that happens, press CTRL+C. When you start seeing the dollar sign you can enter the command."

Co-Worker: I already logged in to the Unix server using PuTTY and hardcoded the dollar sign, it's still not working !!

:/3 -

It only takes three commands to install Gentoo:

> cfdisk /dev/hda && mkfs.xfs /dev/hda1 && mount /dev/hda1 /mnt/gentoo/ && chroot /mnt/gentoo/ && env-update && . /etc/profile && emerge sync && cd /usr/portage && scripts/bootstrap.sh && emerge system && emerge vim && vi /etc/fstab && emerge gentoo-dev-sources && cd /usr/src/linux && make menuconfig && make install modules_install && emerge gnome mozilla-firefox openoffice && emerge grub && cp /boot/grub/grub.conf.sample /boot/grub/grub.conf && vi /boot/grub/grub.conf && grub && init 6

that's the first one2 -

After missing Linux, OS X (now macOS) and other Unix systems, I started installing WSL. Anyone know if I can change the shell to fish, install a package manager and point to repos?

-

Anyone here who knows stuff about Xenix? Would it be possible for Microsoft or any other party to build a modern operating system with it? Just theoretically.