
Search - "outputs"

-

> had an exam with a friend

> we both go to comp sci college

> had to write a fucked up algorithm in matlab

> he hates matlab

> he completes the task

> the variable that outputs the result is called holocaust

> he gets sent to dean

> expelled

-

Interviewer: "Please demonstrate a simple program that outputs hello world in C."

*sweating profusely* me:

C C CCCCC C C CC

C C C C C C C

CC CC CCCC C C C C

C C C C C C C

C C CCCCC CCCC CCCC CC

C C C C CCC C CCC

C C C C C C C C C

C C C C C CCC C C C

C c c C C C C C C C C

Cc cC CC C C CCC CCC

-

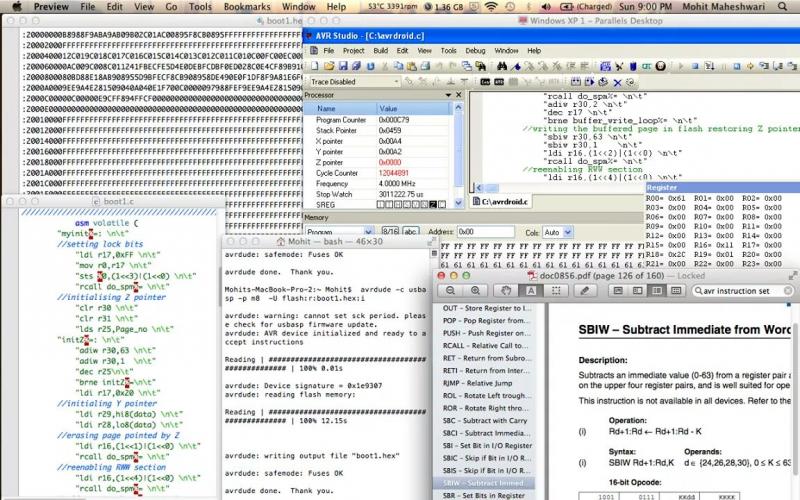

In my college days I was designing a bootloader for an AVR microcontroller. I had the idea to flash code wirelessly to the AVR over Bluetooth, and also to cross-compile the compiler for Android so that you could code on an Android device. Everything went well except one thing: code up to a certain size executed properly, but anything larger gave me weird outputs. So I had to dump the hex from the AVR (as flashed by the bootloader) and compare it with the original hex of the code. It got messy, as you can see. The most fun part of this bug is that the error could be anywhere: the cross compiler may be fucked up, the bootloader may be fucked up, or it may be my Bluetooth module. After 14 hours of staring at the hex code I figured out the mess: a bootloader instruction that was changing the page address for flashing.

When it worked it was 3 am. I literally burst into tears of joy, and the next day I bought myself a cake to celebrate.

-

I actually love Mr. Robot because at least they try to show the actual tools and code that can be used to hack, not the kind of random console output that doesn't even make sense in most TV series and movies.

-

React-Redux's connect() function inspired me to create the coolest way to add numbers in JS:

<script>
// add(2) returns a new function that remembers num = 2 (a closure)
function add(num) {
  return function (otherNum) {
    return num + otherNum;
  };
}

console.log(add(2)(3)); // Outputs 5
</script>

I didn't know you could do that. Just found it out!

-

Ooh this is good.

At my first job, I was hired as a C++ developer. The task seemed easy enough: it was a research project and the previous developer had died, leaving behind a lot of documentation and some legacy Fortran code. Now you might not know, but Fortran can be really easily converted to C, and then refactored to C++.

Fine, time to read the docs. The research was on pollen levels, can't really tell more. Mostly advanced maths. I dug through 500+ pages of algebra just to realize there's no way this would ever work. Okay, don't panic, I'm a data analyst, I can handle this.

Let's take a look at the Fortran code, maybe that makes more sense. Turns out it had nothing to do with the task. It looped through some external data I couldn't find anywhere, and that's it. Yay.

So I exported everything we had to a CSV file and wrote a Java program to apply a line fit with linear regression and filter out the bad records. After that I spent 2 days in a hot server room, hoping that the old Intel Xeon wouldn't break down from sending Java outputs directly to Haskell, but it held its own.

-

Me: knows how to program a neural network

Also me: doesn't know how to use it

Again me: bad at math so doesn't know how to use the outputs of a neural network

-

Teacher told me to write "I must do my homework" 100 times.

I wrote a for-loop that outputs "I must do my homework" 100 times.

-

Okay guys, this is it!

Today was my final day at my current employer. I am on vacation next week, and will return to my previous employer on January the 2nd.

So I am going back to full time C/C++ coding on Linux. My machines will, once again, all have Gentoo Linux on them, while the servers run Debian. (Or Devuan if I can help it.)

----------------------------------------------------------------

So what have I learned in my 15-month stint as a C++ Qt5 developer on Windows 10 using Visual Studio 2017?

1. VS2017 is the best ever.

Although I am a Linux guy, I have owned all Visual C++/Studio versions since Visual C++ 6 (1999) - if only to use for cross-platform projects in a Windows VM.

2. I love Qt5, even on Windows!

And QtDesigner is a far better tool than I thought. On Linux I rarely had to design GUIs, so I was happily surprised.

3. GUI apps are always inferior to CLI.

Whenever a colleague and I had worked on the same parts of the same libraries and hit the inevitable merge-conflict resolving session, we played a game: Who would push first? Him, with TortoiseGit and BeyondCompare? Or me, with MinTTY and kdiff3?

Surprise! I always won! 😁

4. Only shortly into Application Development for Windows with Visual Studio, I started to miss the fun it is to code on Linux for Linux.

No matter how much I like VS2017, I really miss Code::Blocks!

5. Big software suites (2,792 files) are interesting, but I prefer libraries and frameworks to work on.

----------------------------------------------------------------

For future reference, I'll answer a possible question I may have in the future about Windows 10: What did I use to mod/pimp it?

1. 7+ Taskbar Tweaker

https://rammichael.com/7-taskbar-tw...

2. AeroGlass

http://www.glass8.eu/

3. Classic Start (Now: Open-Shell-Menu)

https://github.com/Open-Shell/...

4. f.lux

https://justgetflux.com/

5. ImDisk

https://sourceforge.net/projects/...

6. Kate

Enhanced text editor I like a lot more than notepad++. Aaaand it has a "vim-mode". 👍

https://kate-editor.org/

7. kdiff3

Three way diff viewer, that can resolve most merge conflicts on its own. Its keyboard shortcuts (ctrl-1|2|3 ; ctrl-PgDn) let you fly through your files.

http://kdiff3.sourceforge.net/

8. Link Shell Extensions

Support hard links, symbolic links, junctions and much more right from the explorer via right-click-menu.

http://schinagl.priv.at/nt/...

9. Rainmeter

Neither as beautiful as Conky, nor as easy to configure or flexible. But it does its job.

https://www.rainmeter.net/

10. WinAeroTweaker

https://winaero.com/comment.php/...

Of course this wasn't everything. I also pimped Visual Studio quite heavily. Same question from my future self: What did I do?

1 AStyle Extension

https://marketplace.visualstudio.com/...

2 Better Comments

Simple patch to make different comment styles look different. Like obsolete ones being shown struck through, or important ones in bold red and such stuff.

https://marketplace.visualstudio.com/...

3 CodeMaid

Open Source AddOn to clean up source code. Supports C#, C++, F#, VB, PHP, PowerShell, R, JSON, XAML, XML, ASP, HTML, CSS, LESS, SCSS, JavaScript and TypeScript.

http://www.codemaid.net/

4 Atomineer Pro Documentation

Alright, it is commercial. But there is not another tool that can keep doxygen style comments updated. Without this, you have to do it by hand.

https://www.atomineerutils.com/

5 Highlight all occurrences of selected word++

Select a word, and all similar get highlighted. VS could do this on its own, but is restricted to keywords.

https://marketplace.visualstudio.com/...

6 Hot Commands for Visual Studio

https://marketplace.visualstudio.com/...

7 Viasfora

This ingenious invention colorizes brackets (aka "Rainbow brackets") and makes their inner space visible on demand. Very useful if you have to deal with complex flows.

https://viasfora.com/

8 VSColorOutput

Come on! 2018 and Visual Studio still outputs monochromatically?

http://mike-ward.net/vscoloroutput/

That's it, folks.

----------------------------------------------------------------

No matter how much fun it will be to do full time Linux C/C++ coding, and reverse engineering of WORM file systems and proprietary containers and databases, the thing I am most looking forward to is quite mundane: I can do what the fuck I want!

Being stuck in a project? No problem, any of my own projects is just a 'git clone' away. (Or fetch/pull more likely... 😜)

Here I am leaving a place where gitlab.com, github.com and sourceforge.net are blocked.

But I will also miss my colleagues here. I know it.

Well, part of the game I guess?

-

got given the job of removing a menu link on a site my company hadn't built today.

biggest pile of dung ever! the site had folders for 5 different back end languages all full of random files not in use.

I dug around and found it was using a big framework that produces a massive single variable and outputs it as the page.

Eventually I realised this wasn't in use either but was still being loaded in the site! in fact the site even has a database and an admin login but the stupid original dev hard coded all the content in and runs includes to files in the admin folder directly from config!

such a confusing, pointless, shit site! It's like building a car and driving it like Fred from the Flintstones....

-

In the first lesson at school the teacher mentioned the Fibonacci formula, and because I already had a little experience in programming I wrote a program which outputs a given amount of numbers following the Fibonacci formula. I showed it to the teacher, who didn't really show any reaction. At the end of my time at the school, during exam preparation, he told us that last year one part of the exam was to write a program for the Fibonacci formula. At this point I realized that my little experience in programming was already too much for the class, and why I did not learn anything in 2 years.
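A program like the one described might look like the following minimal Python sketch. The original program is not shown, so the function name `first_fibonacci` and the convention of starting the sequence at 0 and 1 are assumptions for illustration.

```python
def first_fibonacci(count):
    """Return the first `count` Fibonacci numbers, starting from 0 and 1."""
    numbers = []
    a, b = 0, 1
    for _ in range(count):
        numbers.append(a)
        # Each new number is the sum of the previous two.
        a, b = b, a + b
    return numbers


if __name__ == "__main__":
    print(first_fibonacci(10))
```

Returning a list instead of printing inside the loop keeps the sequence logic separate from the output, which makes it easy to check.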

Ps: sry for my bad English.

-

"Change your algorithm"

Answers like this are why Stack Overflow almost becomes worthless when asking questions. I asked for some clarification why my code, which reads some files and outputs another, was hitting System.OutOfMemory exceptions. And that was the response I got.

"Change your algorithm"

How? In what manner should I be seeking to change my algorithm? OBVIOUSLY I SHOULD CHANGE MY ALGORITHM YOU WASTE OF OXYGEN. That was a given by the exception my program threw!

I swear to god, SO has got to be one of the most unwelcoming, condescending sites on the internet.

-

People love fast moving green text with a black background.

I have Linux on my laptop and I have a script (literally just 1 line) that outputs random hex data to the command line.

I ran it while WebStorm was starting up and I had people instantly telling me to shut down all the PCs on the network xDD

-

My first post here, be merciful please.

So, I participate in game jams now and then. About two years ago, I was participating in one, and we were near the deadline. Our game was pretty much done, so we posted an "alpha" version and waited for feedback.

Just half an hour before the deadline, we got a comment on our alpha alerting us of a rather important typo: The instruction screen said "Press X to shoot" while X did nothing and Z was the correct key. "Good thing we caught that in time, thankfully an easy fix" I thought.

This project was written in python, and built using py2exe. If you know py2exe, the least error-prone method outputs a folder containing the .exe, plus ginormous amounts of dll's, pyc files, and various other crap. We would put the entire folder together with graphics and other resources into a .zip and tell the judges to look for the .exe.

Anyway, on this occasion I committed to source control and ran the build, and it seemed to work in my quick test. I uploaded the zip right before the deadline and sat back waiting for the results.

I had forgotten one final step.

I had not copied my updated files to the zip, which still contained the old version.

Anyway, I ended up losing a lot of points in "user friendliness" since the judges had trouble figuring out how to shoot. After I figured out why and how it happened, I had an embarrassing story to tell my teammates.

-

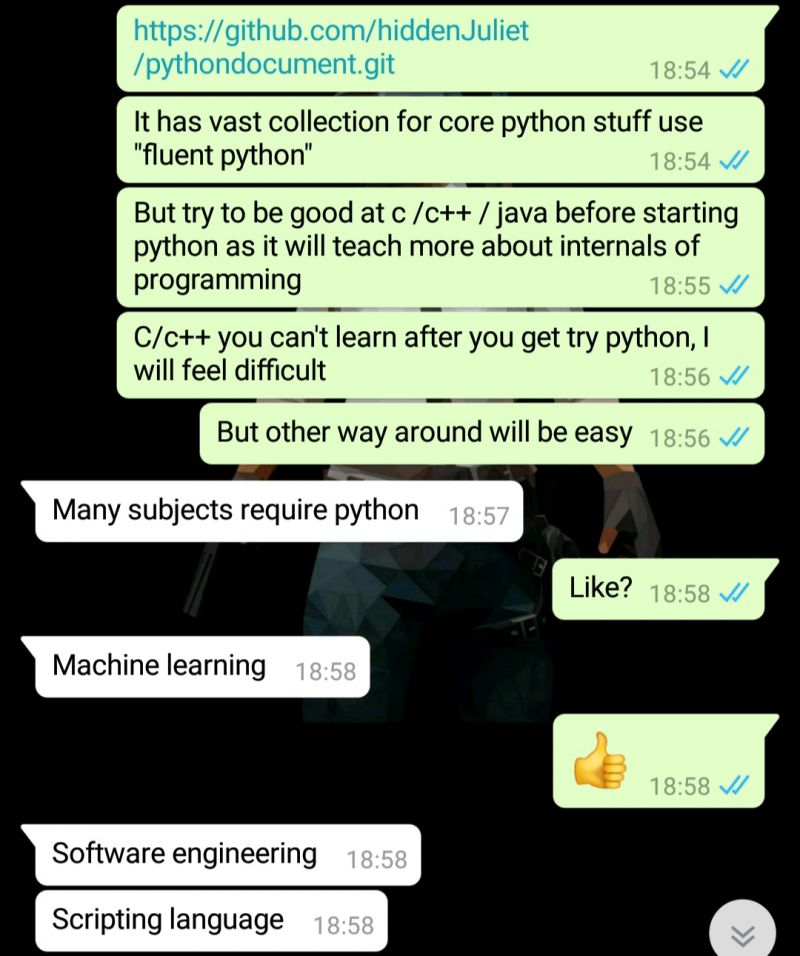

Why do people jump from C to Python so quickly? And it's all about machine learning. A few days back my cousin asked me for books to learn Python.

Trust me, you have to learn C before Python. People struggle going from Python to C. But no: ML, scripting,

and most importantly software engineering, wtf?

Software engineering is about how to run projects, yet it is compulsory to learn Python and there is no mention of git or any other VCS, wtf?

What the hell kind of college is that? Trust me, I am in no way saying Python is weak, but for learning purposes what matters is the depth of the language and concepts like pass by reference, memory leaks, and pointers.

And learning algorithms and data structures is more important than machine learning. Trust me, if you cannot model the data and get proper training and testing data, you will get screwed-up outputs. And then again, everyone who hypes this kind of stuff also thinks that ML with 100% accuracy is better than 90%, so they overfit the data and test the model on the training data. And mostly what they will learn in college is a few formulas by heart, that's it.

Learn a language (the concepts in a language) like C, then you will find most languages are easy.

Cool CS programmers are born today😖

-

Doing an exercise in college. The lecturer provided random number generator code that continuously outputs the number 10. But that's none of my business

-

my best use of Linux's ability to pipe together commands

git | cowsay | lolcats

I also have a custom bash script: if the message is longer than 50 lines, it cuts it off with a '...more' and outputs to a file where I can read the full details if need be.

-

For me the best of being a dev was described by Fred Brooks in his "The Mythical Man-Month":

...The programmer, like the poet, works only slightly removed from pure thought-stuff. He builds his castles in the air, from air, creating by exertion of the imagination. Few media of creation are so flexible, so easy to polish and rework, so readily capable of realizing grand conceptual structures....

Yet the program construct, unlike the poet's words, is real in the sense that it moves and works, producing visible outputs separate from the construct itself. […] The magic of myth and legend has come true in our time. One types the correct incantation on a keyboard, and a display screen comes to life, showing things that never were nor could be...

https://en.wikiquote.org/wiki/...

-

People nowadays don't understand the value of creativity. Being a developer means your creativity is also your productivity; it's your means to keep your job, pay rent, buy clothes and have something to eat. It's just sad to see people demoralize developers for "charging so much" for a project that people think is easy to do. We developers provide outputs and our creativity to the world. I think we deserve more than just a salary, but also a thank you for adding something to the world.

-

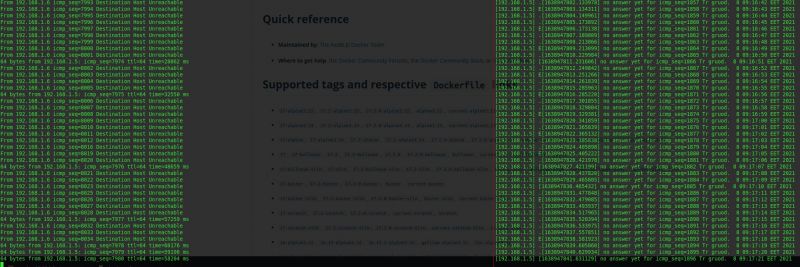

Dear router

It was nice having you in my house, but it's come to the point where our ways part. I must go on and you must be recycled. You've served me well all those 7 years, my friend.

It's not me, it's you. You've grown old and unreliable. Your capacitors must have dried out and can no longer serve reliable wifi connections. I keep on getting lost ICMP packets and connection outages altogether. While these things could happen to any router, definitely not every router has a 13-16 second long wifi outage every minute. I cannot have 2 peoples' work depend on a wifi connection where a ping to a LAN IP takes 58204ms. I just.. can't. You've become a liability to my family.

I'm pissed, because I cannot afford video calls with my colleagues.

I'm pissed, because my wife spends good 5 minutes every call asking "can you hear me? how about now?" and repeating herself over and over.

I'm pissed, because I can no longer watch Netflix or listen to YT Music uninterrupted by network outages.

I'm pissed, because my Cinnamon plugins freeze my UI, waiting for network response.

But most of all I'm pissed, because I was disconnected from BeatSaber multiplayer server when I scored a Full Combo in Expert "Camellia: Ghost" - right before I got a chance to see my score.

I gave you 2 second chances by factory-resetting you. I admit you got better. And then got back to terrible again.

I can no longer rely on you. It's time to say our goodbyes and part ways.

P.S. as proof of your unreliability I'm attaching outputs of ping to a LAN IP and pingloss to the same IP (pingloss: https://gitlab.com/-/snippets/...)

-

ffmpeg...

I FUCKING LOVE YOU!!

I CAN JUST STACK THOSE FILTERS WITH NO RESTRICTIONS OVER AND OVER WITH DIFFERENT INPUTS AND OUTPUTS!!

Also: it fucking works with still images, AND IT’S FUCKING FAST!!

It’s around FOUR (4, DO YOU REALISE THE IMPROVEMENT) times faster to GAUSSIAN blur an image and then composite an image over it with ffmpeg, than to composite the image with imagemagick (no blurring)!!

-

Designers!!

What do ya think

A dashboard for a website

The CPU temp progress bar uses jQuery/AJAX, which outputs the CPU temperature of the server every 3 seconds. The server is on a Raspberry Pi at the moment.

Does it look good for these days?

PS: used PHP for the backend

-

I just hate it when a co-worker says "AS LONG AS IT WORKS. It doesn't matter how you code it as long as it outputs what the client/user wants. They don't check how you code it anyway" *facepalm*

We effin' follow standards so that it will be convenient for everybody!

Bad code is still bad code if you don't follow standards even if it works.

-

Hi, I'm new to programming and coding, and my task is to make a program that outputs even numbers only (no odd numbers). So I wrote this code, but it's so complicated for me. Is there a better way to write it?

Thanks dears,
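The code the post refers to is not included in the text, so the following is only one possible simpler approach, sketched in Python. The function name `even_numbers` and the inclusive start/stop range are assumptions for illustration.

```python
def even_numbers(start, stop):
    """Return every even number from start to stop, inclusive."""
    # A number is even when dividing it by 2 leaves no remainder.
    return [n for n in range(start, stop + 1) if n % 2 == 0]


if __name__ == "__main__":
    for n in even_numbers(1, 20):
        print(n)
```

The single remainder check replaces any chain of special cases; the loop at the bottom only handles printing.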

-

Professors today in colleges don't know...

.

1. the proper denominations of outputs of basic shell commands like "ls -l", "cat", "cal" (pronounces linux as laynux)

.

2. how memory management works

.

3. how process scheduling actually takes place and not in the outdated bookish way.

.

4. how to compile a package from scratch, including digital signatures

.

5. how to read a man page properly, yet they come to take OS labs.

.

6. how to mount different hardware

.

7. how to check kernel build rules, forget about compiling a custom kernel.

.

.

.

n. ....

Yet we are expecting the engineers who are churned out of colleges to be NEXT GEN ?!

It is not entirely because of the syllabus, it's also because of professors who have not updated their knowledge since they got a job. Therefore they cannot impart proper basics to students.

If you want things to change, train students directly in the industry with versions of these professors UPDATED.

-

Why would anybody use dynamically typed languages? I mean it is lot safer to use static languages, no? No weird bugs, no weird outputs...

How do they manage to write such big applications in dynamic languages?

-

The Linux sound system scene looks like it was deliberately designed to be useless.

ALSA sees all my inputs and outputs, but it can't be used to learn (or control) anything about software and where their sound goes. Plus it's near impossible to identify inputs and outputs.

PulseAudio does all sorts of things automatically, but it's hard to configure and has high latency.

JACK is very convenient to configure, has great command line tools (like you'd expect from Linux), is scriptable, but it doesn't see things.

Generally, all of these see the others as a single output and a single input, which none of them are.

-

Frontend-Developer steps into my office and tells me that my code does not work and that he has checked everything.

>me confused, because it has worked every time so far<

So I spent one hour to check and recompile my code again and again. I created more console outputs than my whole last week... And at the end... It was not my fault, I had to find out that the front- and backcolor of his label are the same so the text was not visible.

Dear frontend developer,

I love your designs and most of the time you do a really good job, but please check something like this before you confuse a backend developer for one hour and make him doubt himself...

Thanks!

Sincerely,

Your backend dev

-

As I was browsing pornhub, I started reading articles about AI, dick still in hand, and went down the rabbit-hole (no pun intended) of self referential systems and proofs. This is something I do frequently (getting off track, not beating off, though I have been slacking recently).

Now I'm no expert but my neurotic DID personality which prompted this small reading binge DOES think it is an expert. And it got me thinking.

Godel’s second incompleteness theorem says that "no sufficiently strong proof system can prove its own consistency."

Then utilizing proof by contradiction, systems that are "sufficiently strong" should produce truth outputs that are monotonic. E.g. statements such as "this sentence is a lie."

Wouldn't monotonicity then be proof (soft or otherwise) that a proof system is 'sufficiently strong' in the sense that Godel's second theorem meant?

Edit: I WELCOME input, even if this post is utterly ignorant and vapid. I really don't know shit about formal systems or logic. Welcome any insight or feedback that could enlighten me.

-

Data Disinformation: the Next Big Problem

Automatic code generation LLMs like ChatGPT are capable of producing SQL snippets. Regardless of quality, those are capable of retrieving data (from prepared datasets) based on user prompts.

That data may, however, be garbage. This will lead to garbage decisions by lowly literate stakeholders.

Like with network neutrality and pii/psi ownership, we must act now to avoid yet another calamity.

Imagine a scenario where a middle-manager level illiterate barks some prompts to the corporate AI and it writes and runs an SQL query in company databases.

The AI outputs some interactive charts that show that the average worker spends 92.4 minutes on lunch daily.

The middle manager gets furious and enacts an Orwellian policy of facial recognition punch clock in the office.

Two months and millions of dollars in contractors later, and the middle manager checks the same prompt again... and the average lunch time is now 107.2 minutes!

Finally the middle manager gets a literate person to check the data... and the piece of shit SQL behind the number is sourcing from the "off-site scheduled meetings" database.

Why? because the dataset that does have the data for lunch breaks is labeled "labour board compliance 3", and the LLM thought that the metadata for the wrong dataset better matched the user's prompt.

Thus, given the very real-world scenario of mislabeled data, LLMs' inability to understand what they are saying or accessing, and the average manager's complete data illiteracy, we might have to wrangle some actions to prepare for this type of tomfoolery.

I don't think that access restriction will save our souls here, decision-flumberers usually have the authority to overrule RACI/ACL restrictions anyway.

Making "data analysis" an AI-GMO-Free zone is laughable, that is simply not how the tech market works. Auto tools are coming to make our jobs harder and less productive, tech people!

I thought about detecting new automation-enhanced data access and visualization, and enacting awareness policies. But it would be of poor help, after a shithead middle manager gets hooked on a surreal indicator value it is nigh impossible to yank them out of it.

Gotta get this snowball rolling, we must have some idea of future AI housetraining best practices if we are to avoid a complete social-media style meltdown of data-driven processes.

Does anyone care to pitch in?

-

I have been debugging for hours trying to figure out the cause of an unknown bug that was spoiling my UI by making my elements overlap.

I'm working on a unit converter that takes kWh and converts it to MWh. (Conversion: 1000 kWh = 1 MWh.)

Just an easy job, I thought. Using JavaScript, I passed the dynamically generated kWh value to a function that takes a maximum of 6 characters and multiplies it by 0.001 to get the required result, but this was where my problem started. All values came out as expected until my app hit a particular value (8575) and output a very long string (8.575000000000001). I couldn't figure out the cause until I checked my console log and found the culprit value. Then I changed the calculation from multiplying by 0.001 to dividing by 1000, and it came out as expected (8.575).

My question is that;

Is my math logically wrong or is this another JavaScript calculation setback?
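The math is fine; the difference comes from binary floating point. JavaScript numbers are IEEE 754 doubles, and 0.001 has no exact binary representation, so multiplying by it can carry a tiny error into the result, while 1000 is exactly representable and the division is rounded only once. Python floats use the same double format, so the effect can be sketched there. The helper names `kwh_to_mwh` and `format_mwh`, and the choice of three decimals for display, are assumptions for illustration.

```python
def kwh_to_mwh(kwh):
    """Convert kilowatt-hours to megawatt-hours (1000 kWh = 1 MWh)."""
    # 1000 is exactly representable as a double, so the division is
    # rounded only once; 0.001 is already an approximation before any
    # multiplication happens.
    return kwh / 1000


def format_mwh(kwh, decimals=3):
    """Format the converted value with a fixed number of decimals for display."""
    return f"{kwh_to_mwh(kwh):.{decimals}f}"


if __name__ == "__main__":
    print(8575 * 0.001)      # the multiplication reported in the post
    print(kwh_to_mwh(8575))  # division by 1000
    print(format_mwh(8575))  # fixed-length string for the UI
```

Formatting to a fixed number of decimals before display also keeps the string length predictable, whichever arithmetic is used.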

-

Operation people (devs, designers, content writers) said clients are being unreasonable.

Clients said the outputs and results are not satisfactory.

Now I understood the phrase I have seen a lot here.

-

Hey, been gone a hot minute from devrant, so I thought I'd say hi to Demolishun, atheist, Lensflare, Root, kobenz, score, jestdotty, figoore, cafecortado, typosaurus, and the raft of other people I've met along the way and got to know somewhat.

All of you have been really good.

And while I'm here its time for maaaaaaaaath.

So I decided to horribly mutilate the concept of bloom filters.

If you don't know what that is, you take two numbers, m and p, both prime, where m < p, and generate two random numbers a and b, which together define a function. That function is a hash.

Normally you'd have say five to ten different hashes.

A bloom filter lets you probabilistically say whether you've seen something before, with no false negatives.

It lets you do this very space efficiently, with some caveats.

Each hash function should be uniformly distributed (any value input to it is equally likely to be mapped to any output value).

Then you interpret these output values as bit indexes.

So Hi might output [0, 1, 0, 0, 0]

while Hj outputs [0, 0, 0, 1, 0]

and Hk outputs [1, 0, 0, 0, 0]

producing [1, 1, 0, 1, 0]

And if your bloom filter has bits set in all those places, congratulations, you've seen that number before.

It's used by big companies like google to prevent re-indexing pages they've already seen, among other things.
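As a reference point for what follows, a conventional Bloom filter can be sketched as below. This is a minimal Python illustration, not the script described in this post: the class name `BloomFilter`, the hash form `((a * x + b) % P) % num_bits`, the prime `P = 2**61 - 1`, and the restriction to non-negative integer items are all assumptions.

```python
import random


class BloomFilter:
    """Minimal Bloom filter for non-negative integers (illustrative only)."""

    P = 2**61 - 1  # a large prime, assumed bigger than any item stored

    def __init__(self, num_bits, num_hashes, seed=0):
        rng = random.Random(seed)
        self.num_bits = num_bits
        self.bits = [False] * num_bits
        # Each (a, b) pair defines one hash: ((a * x + b) % P) % num_bits.
        self.params = [
            (rng.randrange(1, self.P), rng.randrange(0, self.P))
            for _ in range(num_hashes)
        ]

    def _indexes(self, item):
        return [((a * item + b) % self.P) % self.num_bits for a, b in self.params]

    def add(self, item):
        for i in self._indexes(item):
            self.bits[i] = True

    def might_contain(self, item):
        # True may be a false positive; False is always correct.
        return all(self.bits[i] for i in self._indexes(item))


if __name__ == "__main__":
    seen = BloomFilter(num_bits=1024, num_hashes=5)
    for value in (3, 731, 64, 453):
        seen.add(value)
    print(seen.might_contain(731))  # added items are never reported missing
    print(seen.might_contain(999))  # usually False, but a false positive is possible
```

Because `add` only ever sets bits, an item that was added always finds all of its bits set, which is where the "no false negatives" property comes from.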

Well I thought, what if instead of using it as a has-been-seen-before filter, we mangled its purpose until a square peg fit in a round hole?

Not long after I went and wrote a script that 1. generates data, 2. generates a hash function to encode it. 3. finds a hash function that reverses the encoding.

And it just works. Reversible hashes.

Of course you can't use it for compression strictly, not under normal circumstances, but these aren't normal circumstances.

The first thing I tried was finding a hash function h0, that predicts each subsequent value in a list given the previous value. This doesn't work because of hash collisions by default. A value like 731 might map to 64 in one place, and a later value might map to 453, so trying to invert the output to get the original sequence out would lead to branching. It occurs to me just now we might use a checkpointing system, with lookahead to see if a branch is the correct one, but I digress, I tried some other things first.

The next problem was 1. long sequences are slow to generate. I solved this by tuning the amount of iterations of the outer and inner loop. We find h0 first, and then h1 and put all the inputs through h0 to generate an intermediate list, and then put them through h1, and see if the output of h1 matches the original input. If it does, we return h0, and h1. It turns out it can take inordinate amounts of time if h0 lands on a hash function that doesn't play well with h1, so the next step was 2. adding an error margin. It turns out something fun happens, where if you allow a sequence generated by h1 (the decoder) to match *within* an error margin, under a certain error value, it'll find potential hash functions hn such that the outputs of h1 are *always* the same distance from their parent values in the original input to h0. This becomes our salt value k.

So our hash-function generator, called encoder_decoder() or 'ed' (lol two letter functions), also calculates the k value and outputs that along with the hash functions for our data.

This is all well and good but what if we want to go further? With a few tweaks, along with taking output values, converting to binary, and left-padding each value with 0s, we can then calculate shannon entropy in its most essential form.
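One way to read that measurement is sketched below in Python: pad each value to a fixed bit width, concatenate the bits, and compute the entropy of the resulting 0/1 distribution. The function name `bit_entropy` and the choice to measure entropy per single bit (rather than per byte or per value) are assumptions; the post does not say which was used.

```python
import math


def bit_entropy(values, width):
    """Shannon entropy, in bits per bit, of values written as zero-padded binary."""
    # Left-pad every value with zeros to `width` binary digits and join them.
    # Values that need more than `width` digits are written in full.
    bits = "".join(format(v, f"0{width}b") for v in values)
    if not bits:
        return 0.0
    entropy = 0.0
    for symbol in "01":
        p = bits.count(symbol) / len(bits)
        if p > 0:
            entropy -= p * math.log2(p)
    return entropy


if __name__ == "__main__":
    print(bit_entropy([731, 64, 453], width=10))
```

A result of 1.0 means zeros and ones are equally frequent; 0.0 means the bit string is all zeros or all ones.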

Turns out with tens of thousands of values (and tens of thousands of bits), the output of h1 with the salt, has a higher entropy than the original input. Meaning finding an h1 and h0 hash function for your data is equivalent to compression below the known shannon limit.

By how much?

Approximately 0.15%

Of course this doesn't factor in the five numbers you need, a0, and b0 to define h0, a1, and b1 to define h1, and the salt value, so it probably works out to the same. I'd like to see what the savings are with even larger sets though.

Next I said, well what if we COULD compress our data further?

What if all we needed were the numbers to define our hash functions, a starting value, a salt, and a number to represent 'depth'?

What if we could rearrange this system so we *could* use the starting value to represent n subsequent elements of our input x?

And that's what I did.

We break the input into blocks of 15-25 items, because that's the fastest to work with and find hashes for.

We then follow the math, to get a block which is

H0, H1, H2, H3, depth (how many items our 1st item will reproduce), & a starting value or 1st item in this slice of our input.

x goes into h0, giving us y. y goes into h1 -> z, z into h2 -> y, y into h3, giving us back x.

The rest is in the image.

Anyway good to see you all again.

-

Hey guys, my gf and I want to do something with the Arduino we got. We are getting a CS degree, so programming is not a problem, but we have quite basic knowledge of electronics.

What could be a cool simple (but not too introductory) project we could do?

The Arduino came with a bunch of sensors (ultrasonic distance sensor, humidity, ...), some inputs (joystick, RFID reader/writer, buttons) and some outputs (LCD display, 8x8 LED matrix, bunch of color and RGB LEDs).

-

A software had been developed over a decade ago. With critical design problems, it grew slower and buggier over time.

As a simple change in any area could create new bugs in other parts, gradually the developers team decided not to change the software any more, instead for fixing bugs or adding features, every time a new software should be developed which monitors the main software, and tries to change its output from outside! For example, look into the outputs and inputs, and whenever there's this number in the output considering this sequence of inputs, change the output to this instead.

As all the patchwork is done from outside, auxiliary software are very huge. They have to have parts to save and monitor inputs and outputs and algorithms to communicate with the main software and its clients.

As this architecture becomes more and more complex, the company negotiates with users to convince them to change their habits a bit. For example, instead of receiving an email with the latest notifications, they download a CSV every day from a URL that gives them their notifications! Because that is easier for the developers to build.

As the project grows, the company hires more and more developers to work on this gigantic project. Then one day a young, talented developer arrives and realizes that if the company rebuilt the software from scratch, it could be 100 times smaller: no patchwork, no monitoring of inputs and outputs, no reverse engineering to figure out why the system behaves the way it does, and no arrangement with users to download weird CSV files, because there would be a fresh code base using the latest design patterns and a modern UI.

But the managers are unaware of the technical jargon and have no time to listen to a curious kid! They look at the payroll list and say: replacing something we spent millions of man-hours building is IMPOSSIBLE! Get back to your work or find another job!

Most people decide to remain silent, and so the madness continues with no resistance. That's why, when you buy a ticket from a public transport system, you see long delays and various unexpected behavior. That's why, when you are waiting to receive an SMS from your bank, you might end up requesting a letter by post instead!

Yet there are some rebel developers who stand and fight! They eventually get expelled from the famous, powerful system onto the streets. They are free to open their startups and develop their dream system. They do. But the government (the only client most of the time) looks at the budget and says: how can we replace a billion-dollar-a-year project with a toy built by a bunch of kids? And the madness continues.... Boeings crash, space programs stagnate, and banks take forever to process risks and react. This is our world.

-

Can't someone just write some sort of program that takes project adjectives as input and outputs some nice project names? That would be super awesome. How do you create your project names?
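A minimal sketch of such a generator in Python, for what it's worth. The function name `project_names` and the default noun list are made up for illustration; a real tool would want a bigger word list and some filtering.

```python
import itertools

def project_names(adjectives, nouns=("Forge", "Harbor", "Lantern")):
    """Combine every adjective with every noun into a CamelCase name.

    The noun list is a hypothetical placeholder, not a recommendation.
    """
    return [
        f"{adj.strip().capitalize()}{noun}"
        for adj, noun in itertools.product(adjectives, nouns)
    ]

names = project_names(["swift", "quiet"])
print(names)
```

Every adjective is paired with every noun, so two adjectives and three nouns give six candidates to pick from.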

-

Linux networking: A tragedy in three acts

ACT 1:

Wherein the system administrator writes their /etc/network/interfaces file as is the custom.

ACT 2:

Wherein the kafkaesque outputs of basic networking commands threaten basic sanity. Behold:

```
# ifup ens3:1
RTNETLINK answers: File exists
Failed to bring up ens3:1.
# ifdown ens3:1
ifdown: interface ens3:1 not configured
```

ACT 3:

Wherein all sanity is lost:

(╯°□°)╯︵ ┻━┻

-

FFS, I wasted my time reinstalling nodejs just to update my npm and tried npm i -g npm.

Outputs a success status but when I check via npm -v it's still the same old version.

Or maybe I'm just too dumb to know how to do it right.

-

Adaptive Latent Hypersurfaces

The idea is rather than adjusting embedding latents, we learn a model that takes

the context tokens as input, and generates an efficient adapter or transform of the latents,

so when the latents are grabbed for that same input, they produce outputs with much lower perplexity and loss.

This can be trained autoregressively.

This is similar in some respects to hypernetworks, but applied to embeddings.

The thinking is we shouldn't change latents directly, because any given vector will generally be orthogonal to any other, and changing the latents introduces variance for some subset of other inputs over some distribution that is partially or fully out-of-distribution to the current training and verification data sets, thus ultimately leading to a plateau in loss-drop.

Therefore, by autoregressively taking an input, and learning a model that produces a transform on the latents of a token dictionary, we can avoid this ossification of global minima, by finding hypersurfaces that adapt the embeddings, rather than changing them directly.

The result is a network that essentially acts as a compressor of all relevant use cases, without leading to overfitting on in-distribution data and underfitting on out-of-distribution data.

-

Does anyone else get annoyed when you're outside of an editor and you press the apostrophe/quote or parenthesis key, only for it to output a single character and not a pair?

Drives me insane

-

Fun fact. I work for a 20-year old company that does software which mostly does print outputs. 95% of our clients actually use it specifically and exclusively to print their invoice runs. There are over 25 printers in this office, 5 of which are within chair-rolling distance of my desk.

I don't know how to use or fix any of them. I must be a *really bad* developer. >.<

-

Taking an online test to practise programming. They give me code that takes two 3x3 matrices as inputs and outputs a third. I have to multiply them together and output the results.

Within minutes I've worked my head around it, got four lines of code to do it all. Output fails.

Twenty minutes later, nearly hitting the time limit, I find out that they couldn't output the array properly in C++

Are3[I, j];

;( What a hair puller.

-
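For reference, a minimal sketch of the task itself in Python (the helper name `matmul` is just for illustration). If the provided harness really indexed the result with `[i, j]`, then in C++ before C++23 that expression uses the comma operator and ends up indexing with `j` alone, which would explain the broken output.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]

a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
b = [[9, 8, 7], [6, 5, 4], [3, 2, 1]]
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

product = matmul(a, b)
for row in product:
    # Print each element by explicit row/column position
    print(" ".join(str(value) for value in row))
```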

I AM IN RAGE !!! MY MANAGER IS A FUCKIN SNAKE ASSHOLE!

FUCKER RATED ME 3/5 !

i feel like destroying my laptop and putting in my papers right away. this is an absolute shit hole of a company where corporate bullshit and multi level hierarchy run the system, ass licking is the norm, and still me, a lowly sde dev 1, was giving my 200% covering their bullshit to deliver outputs on time.

let me tell you some stats.

- our app has grown by 2x installs and 5x mau.

- only 3 devs worked on the app. the other 2 can vouch for my competence.

- we were handed an app with the ugliest possible code, full of duplication, random bugs and sudden ANRs. we improved the app to a good working level

- my manager/tl is such a crappy person that if asked about a feature out of random, he will reply "huh?" and will need 2 mins to tell anything about it.

- there is so much dependency on other teams and they want us to talk to them personally. like hell i care why backend is giving wrong responses. but i cared, i got so good at handling all this shit that people would directly contact me instead of himal and i would contact them. all work was getting done coz 1 stupid fellow was spending 90% of his time in coordinations

- i don't even know how to work with incompetence. my focus is : to do my task, fix anything that is broken that will relate to my task in any way and gather all the stuff needed to complete my task

i am done. i cannot change this company because its name is good and i am already feeling guilty about switching my previous jobs in 1 year but this is painful.

in my first company i happily took a 10% hike coz i was out of college and still learning.

in my 2nd company, i left due to change in policies ( they went from wfh to wfo and they were in a different state) , but even while leaving they gave a nice 30% hike

in my current company idk what the no. 3 equates to, but it's extremely frustrating knowing that a QA who was so incompetent he nearly cost us a DDOS got the same rating as me

------

PS : GIVE ME TIPS ON HOW TO BE INCOMPETENT WITHOUT GETTING CAUGHT

-

I like to say programming is the art of "creative logic". Much like architecture has an aesthetic to consider or cooking has well-defined procedures with greatly varying inputs and outputs, there has to be room for creativity, be it at the planning stage or during wild improvisation sessions.

Without that creative aspect, software development sounds dreary to me.

Where science meets art is where the magic happens.

If only the artists shared this view and actually took an interest in the technical side...

-

Someone figured out how to make LLMs obey context free grammars, so that opens up the possibility of really fine-grained control of generation and the structure of outputs.

And I was thinking, what if we did the same for something that consumed and validated tokens?

The thinking is that the option to backtrack already exists, so if an input is invalid, the system can backtrack and regenerate. The closest existing knobs are sampling settings such as 'temperature' and 'top-k', where the system scores many candidate next tokens and then selects from a subsample of them, usually one of the highest scoring.

But it occurs to me that a process could be run in front of that, one that conditions the input based on a grammar and takes as input the output of the base process. The instruction prompt to it would be a simple binary filter:

"If the next token conforms to the provided grammar, output it to stream, otherwise trigger backtracking in the LLM that gave you the input."

This is very much a compliance thing, but could be used for finer-grained control over how a machine examines its own output, rather than the current system where you simply feed-in as input its own output like we do now for systems able to continuously produce new output (such as the planners some people have built)
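A toy sketch of that binary filter in Python, under heavy assumptions: the "grammar" is just balanced parentheses, `toy_propose` is a made-up stand-in for an LLM that returns ranked candidate tokens, and "backtracking" is reduced to rejecting a candidate and trying the next one. It only shows the control flow, not a real grammar engine.

```python
def is_valid_prefix(tokens):
    """Toy grammar check: a ')' may never close more than has been opened."""
    depth = 0
    for tok in tokens:
        if tok == "(":
            depth += 1
        elif tok == ")":
            depth -= 1
            if depth < 0:
                return False
    return True

def toy_propose(prefix):
    """Hypothetical stand-in for the base model: ranked candidates, best first."""
    ranked = [")", "(", "x"]
    shift = len(prefix) % 3
    return ranked[shift:] + ranked[:shift]

def filtered_generate(propose, max_len):
    """Emit a token only if the grammar accepts it, otherwise try the next one."""
    out = []
    for _ in range(max_len):
        for candidate in propose(out):
            if is_valid_prefix(out + [candidate]):
                out.append(candidate)  # conforms: output it to the stream
                break
        else:
            break  # no candidate conforms, so stop generating
    return out

result = filtered_generate(toy_propose, 4)
print(result)
```

In this run the very first proposal, a lone `)`, is rejected and the filter falls through to the next candidate, which is the whole idea in miniature.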

link here:

https://news.ycombinator.com/item/...

-

This error, which took me a long time to find, demonstrates the importance of useful variable names.

Using the Wolfram Language:

pp = {};

For[i = 0, i <= Max[p], i++, If[Count[p, i] != 0, pp = Join[pp, {{i, Count[pp, i]}}], -1]];

pp

Outputs:

{{1, 0}, {2, 0}, {3, 0}, {4, 0}, {5, 0}, {6, 0}, {7, 0}, {8, 0}, {9, 0}, {10, 0}, {11, 0}, {12, 0}, {13, 0}, {14, 0}, {15, 0}, {16, 0}, {17, 0}, {18, 0}, {19, 0}, {20, 0}, {21, 0}, {22, 0}, {23, 0}, {24, 0}, {25, 0}, {26, 0}, {27, 0}, {28, 0}, {29, 0}, {30, 0}, {31, 0}, {32, 0}, {33, 0}, {34, 0}, {35, 0}, {36, 0}, {37, 0}, {38, 0}, {39, 0}, {40, 0}, {41, 0}, {42, 0}, {43, 0}, {44, 0}, {45, 0}, {46, 0}, {47, 0}, {48, 0}, {49, 0}, {50, 0}, {51, 0}, {52, 0}, {53, 0}, {54, 0}, {55, 0}, {56, 0}, {57, 0}, {58, 0}, {59, 0}, {60, 0}, {61, 0}, {62, 0}, {63, 0}, {64, 0}, {65, 0}, {66, 0}, {67, 0}, {68, 0}, {69, 0}, {70, 0}, {71, 0}, {72, 0}, {73, 0}, {74, 0}, {75, 0}, {76, 0}, {77, 0}, {78, 0}, {79, 0}, {80, 0}, {81, 0}, {82, 0}, {83, 0}, {84, 0}, {85, 0}, {86, 0}, {87, 0}, {88, 0}, {89, 0}, {90, 0}, {91, 0}, {92, 0}, {93, 0}, {94, 0}, {95, 0}, {96, 0}, {97, 0}, {98, 0}, {99, 0}, {100, 0}, {101, 0}, {103, 0}, {104, 0}, {105, 0}, {106, 0}, {107, 0}, {108, 0}, {111, 0}, {112, 0}, {116, 0}, {118, 0}, {122, 0}, {125, 0}, {136, 0}, {137, 0}}

As opposed to the expected output, which should have no 0s as the second values in any of the tuples.

I spent a large amount of time examining the code to generate p before realizing that the bug was in this line.

-
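For anyone skimming: the loop calls Count on pp (the list of pairs being built) instead of p (the data), and a bare integer i never matches a pair, so every count comes out 0. A rough Python equivalent of what the loop was meant to compute, with the hypothetical name `tally` and a made-up sample list:

```python
from collections import Counter

def tally(values):
    """Return [value, count] pairs for each distinct value, in ascending order."""
    counts = Counter(values)
    return [[value, counts[value]] for value in sorted(counts)]

sample = [3, 1, 3, 2, 3]  # made-up data standing in for p
pairs = tally(sample)
print(pairs)
```

Naming the two lists something like `values` and `pairs` makes the mix-up much harder to type by accident.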

Turns out you can treat a function mapping parameters to outputs as a product that acts as a *scaling* of continuous inputs to outputs, and that this sits somewhere between neural nets and regression trees.

Well that's what I did, and the MAE (mean absolute error) of this works out to about 0.5%, half a percentage point. Did training and a little validation, but the training set is only 2.5k samples, so it may just be overfitting.

The idea is you have X, y, and z.

z is your parameters. And for every row in y, you have an entry in z. You then try to find a set of z such that the product, multiplied by the value of yi, yields the corresponding value at Xi.

Naturally I gave it the ridiculous name of a 'zcombiner'.

Well, fucking turns out, this beautiful bastard of a paper just dropped in my lap, and it's been around since 2020:

https://mimuw.edu.pl/~bojan/papers/...

which does the exact god damn thing.

I mean they didn't realize it applies to ML, but it's the same fucking math I did.

z is the monoid that finds some identity that creates an isomorphism between all the elements of all the rows of y, and all the elements of all the indexes of X.

And I just got to say it feels good.

-

Here's some research into a new LLM architecture I recently built and have had actual success with.

The idea is simple, you do the standard thing of generating random vectors for your dictionary of tokens, we'll call these numbers your 'weights'. Then, for whatever sentence you want to use as input, you generate a context embedding by looking up those tokens, and putting them into a list.

Next, you do the same for the output you want to map to, lets call it the decoder embedding.

You then loop and generate a 'noise embedding'. For each vector (individual token) in the context embedding, you subtract that token's noise value from that token's embedding value or specific weight.

You find the weight index in the weight dictionary (one entry per word or token in your token dictionary) that's closest to this embedding. You use a version of cuckoo hashing where similar values are stored near each other, and the canonical weight values are actually the key of each key:value pair in your token dictionary. When doing this you align all random numbered keys in the dictionary (a uniform sample from 0 to 1), and look at hamming distance between the context embedding+noise embedding (called the encoder embedding) versus the canonical keys, with each digit from left to right being penalized by some factor f (because numbers further left are larger magnitudes), and then penalize or reward based on the numeric closeness of any given individual digit of the encoder embedding at the same index of any given weight i.

You then substitute the canonical weight in place of this encoder embedding, look up that weight's index (in my earliest version), and then use that index to look up the word|token in the token dictionary and compare it to the word at the current index of the training output to match against.

Of course by switching to the hash version the lookup is significantly faster, but I digress.
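A stripped-down sketch of that lookup loop in Python, to make the moving parts concrete. This is the slow brute-force nearest-weight version, not the hashing variant, and the names `build_weights`, `encode` and `nearest_token` plus the tiny vocabulary are all invented for illustration.

```python
import random

def build_weights(vocab, dim, seed=0):
    """One random vector per token, sampled uniformly from 0 to 1."""
    rng = random.Random(seed)
    return {tok: [rng.random() for _ in range(dim)] for tok in vocab}

def encode(tokens, weights, noise):
    """Context embedding minus a per-token noise embedding."""
    return [
        [w - n for w, n in zip(weights[tok], noise_vec)]
        for tok, noise_vec in zip(tokens, noise)
    ]

def nearest_token(vec, weights):
    """Brute-force search for the token whose canonical weight is closest."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(weights, key=lambda tok: sq_dist(vec, weights[tok]))

vocab = ["the", "cat", "sat"]
weights = build_weights(vocab, dim=4)
sentence = ["the", "cat", "sat"]
zero_noise = [[0.0] * 4 for _ in sentence]

# With zero noise every encoder embedding snaps back to its own token
decoded = [nearest_token(v, weights) for v in encode(sentence, weights, zero_noise)]
print(decoded)
```

Training would then amount to searching for noise vectors that make the decoded tokens match the target output instead of the input.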

That introduces a problem.

If each input token matches one output token, how do we get variable length outputs? How do we do n-to-m mappings of input and output?

One of the things I explored was using pseudo-markovian processes, where there's one node, A, with two links to itself, B and C.

B is a transition matrix, and A holds its own state. At any given timestep, A may use either the default transition matrix (training data encoder embeddings) with B, or it may generate new ones, using C and a context window of A's prior states.

C can be used to modify A, or it can be used as a noise embedding to modify B.

A can take on the state of both A and C or A and B. In fact we do both, and measure which is closest to the correct output during training.

What this *doesn't* do is give us variable length encodings or decodings.

So I thought a while and said, if we're using noise embeddings, why can't we use multiple?

And if we're doing multiple, what if we used a middle layer, let's call it the 'key', and took its mean over *many* training examples, and used it to map from the variance of an input (query) to the variance and mean of a training or inference output (value).

But how does that tell us when to stop or continue generating tokens for the output?

Posted on pastebin if you want to read the whole thing (DR wouldn't post for some reason).

In any case I wasn't sure if I was dreaming or if I was off in left field, so I went and built the damn thing, the autoencoder part, wasn't even sure I could, but I did, and it just works. I'm still scratching my head.

https://pastebin.com/xAHRhmfH

-

We need to update the slang "script kiddie" to "prompt enginot" or something.

So my boss's boss or someone even higher up drank the generative AI kool-aid and hired a 40-something kid to generate images for the marketing teams (or something like it).

Naturally, things soon went to shit.

The bloke already left, having stayed less than six months on the job.

Guess who got to handle all the shit-is-currently-on-fire the kiddie left behind?

First impression: apparently, muggles tried to Slack him some very broad descriptions of what they needed, and at first he actually tried to summarize those bark-speech pseudo-words into an actual prompt.

That does not seem to have gone on for too long, though.

After users requested changes to the AI outputs, he would update the prompts, all right. And the process seemed to go fast enough... until reaching near-to-completion status.

Then users would request the tiniest changes to the AI output...

And the bloke couldn't do it.

Seriously. Some things were as simple as "we need this slider to go all the way up to 180% instead of 100%" on a lame dashboard and *kid. could. not. do. it.*.

In many cases he literally just gave up and copied the Slack history into the AI prompt. No dice.

Bloke couldn't code a print('hello world') into a jupyter notebook cell, that's what i'm saying.

Apparently, he was "self taught", too. And was hired to "speed up the process of generating visual aids for usage in meetings and presentations". But then "the budget for this position was considered excessive" (meaning: shit results from a raw idea some executive crapped some day) and "the position was expanded to include the development of Business Intelligence Dashboards and Data Apps".

So now it is up to me (and my CRIMINALLY UNDERPAID team) to clean up his mess and maintain/fix/deprecate DOZENS of SHODDILY DESIGNED and MOSTLY USELESS but QUITE ACTIVE "data vis" PIECES OF SHIT.

Fuck "AI prompters", fucking snake oil script kiddies.

-

This is gonna be a long post, and inevitably DR will mutilate my line breaks, so bear with me.

Also I cut out a bunch because the length was overlimit, so I'll post the second half later.

I'm annoyed because it appears the current Stable Diffusion trend has thrown the baby out with the bath water. I'll explain that in a moment.

As you all know I like to make extraordinary claims with little proof, sometimes for shits and giggles, and sometimes because I'm just delusional apparently.

One of my legit 'claims to fame' is, on the theoretical level, I predicted most of the developments in AI over the last 10+ years, down to key insights.

I've never had the math background for it, but I understood the ideas I was working with at a conceptual level. Part of this flowed from powering through literal (god I hate that word) hundreds of research papers a year, because I'm an obsessive like that. And I had to power through them, because a lot of the technical low-level details were beyond my reach, but architecturally I started to see a lot of patterns, and began to grasp the general thrust of where research and development *needed* to go.

In any case, I'm looking at Stable Diffusion and what occurs to me is that we've almost entirely thrown out GANs. As some or most of you may know, a GAN is where networks compete, one to generate outputs that look real, another to discern which is real, and by the process of competition, improve the ability to generate a convincing fake, and to discern one. Imagine a self-sharpening knife and you get the idea.

Well, when we went to the diffusion method, upscaling noise (essentially a form of controlled pareidolia using autoencoders over seq2seq models) we threw out GANs.

We also threw out online learning. The models only grow on the backend.

This doesn't help anyone but those corporations that have massive funding to create and train models. They get to decide how the models 'think', what their biases are, and what topics or subjects they cover. This is no good in the long run, but that's more of an ideological argument. That's not the real problem.

The problem is they've once again gimped the research, chosen a suboptimal trap for the direction of development.

What interested me early on in the lottery ticket theory was the implications.

The lottery ticket theory says that part of the reason *some* RANDOM initializations of a network train/predict better than others is essentially down to a small pool of subgraphs that happened, by pure luck, to chance on an initialization that just so happened to be the right 'lottery numbers', as it were, for training quickly.

The first implication of this is that the bigger a network, the greater the chance of these lucky subgraphs occurring. Whether their density grows faster than the density of the 'unlucky' or average subgraphs is another matter.

From this though, they realized what they could do was search out these subgraphs, and prune many of the worst or average performing neighbor graphs, without meaningful loss in model performance. Essentially they could *shrink down* things like ChatGPT and BERT.

The second implication was more subtle and overlooked, and still is.

The existence of lucky subnetworks might suggest nothing additional--In which case the implication is that *any* subnet could *technically*, by transfer learning, be 'lucky' and train fast or be particularly good for some unknown task.

INSTEAD however, what has happened is we haven't really seen that. What this means is actually pretty startling. It has two possible implications, either of which will have significant outcomes on the research sooner or later:

1. there is an 'island' of network size, beyond what we've currently achieved, where networks that are currently state of the art at some things rapidly converge to state-of-the-art *generalists* in nearly *all* tasks, regardless of input. What this would look like at first is a gradual drop-off in gains of the current approach, characterized as a potential new "AI winter", or a "limit to the current approach", which wouldn't actually be the limit, but a saddle point in its utility across domains and its intelligence (for some measure and definition of 'intelligence').

-

Data wrangling is messy

I'm doing the vegetation maps for the game today, maybe rivers if it all goes smoothly.

I could probably do it by hand, but there's something like 60-70 ecoregions to chart, each with their own species, both fauna and flora. And each has an elevation range it's found at in real life, so I want to use the heightmap to dictate that. Who has time for that? It's a lot of manual work.

And the night prior I'm thinking "oh this will be easy."

yeah, no.

(Also why does Devrant have to mangle my line breaks? -_-)

Laid out the requirements, how I could go about it, and the more I look the more involved it gets.

So what I think I'll do is automate it. I already automated some of the map extraction, so I don't see why I shouldn't just go the distance.

Also it means, later on, when I have access to better, higher resolution geographic data, updating it will be a smoother process. And even though I'm only interested in flora at the moment, there's no reason I can't reuse the same system to extract fauna information.

Of course, in game design there are some things you'll want to fudge. When the players are exploring outside the Rockies in a mountainous area, maybe I still want to spawn the occasional mountain lion as a mid-tier enemy, even though our survivor might be outside the cat's natural habitat. This could even be the prelude to a task you have to do: go take care of a dangerous creature outside its normal hunting range. And who knows why it is there? Wild fire? Hunted by something *more* dangerous? Poaching? Maybe a nuke plant exploded and drove all the wildlife from an adjoining region?

who knows.

Having the extraction mostly automated goes a long way to updating those lists down the road.

But for now, flora.

For deciding plants and other features of the terrain what I can do is:

* rewrite pixeltile to take file names as input,

* along with a series of colors as a key (which are put into a SET to check each pixel against)

* input each region, one at a time, as the key, and the heightmap as the source image

* output only the region in the heightmap that corresponds to the ecoregion in the key.

* write a function to extract the palette from the outputted heightmap. (is this really needed?)

* arrange colors on the bottom or side of the image by hand, along with (in text) the elevation in feet for reference.
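A rough sketch of the masking and palette steps above in plain Python, using nested lists instead of real image files so it stays self-contained. The function names `mask_heightmap` and `extract_palette`, the RGB colors, and the tiny 2x3 "images" are all assumptions made up for illustration, not the actual pixeltile code.

```python
def mask_heightmap(region_map, heightmap, key_colors, fill=None):
    """Keep heightmap pixels only where the region map matches a key color."""
    key = set(key_colors)  # set membership keeps the per-pixel check cheap
    return [
        [h if color in key else fill for color, h in zip(region_row, height_row)]
        for region_row, height_row in zip(region_map, heightmap)
    ]

def extract_palette(masked, fill=None):
    """Collect the distinct elevation values that survived the mask."""
    return sorted({h for row in masked for h in row if h is not fill})

GREEN, YELLOW = (0, 128, 0), (200, 200, 0)  # hypothetical ecoregion colors
region_map = [[GREEN, GREEN, YELLOW],
              [GREEN, YELLOW, YELLOW]]
heightmap = [[10, 20, 30],
             [40, 50, 60]]

masked = mask_heightmap(region_map, heightmap, [GREEN])
palette = extract_palette(masked)
print(masked, palette)
```

Running it once per ecoregion key gives one masked heightmap and one elevation palette per region.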

For automating this entire process I can go one step further:

* Do this entire process with the key colors I already snagged by hand, outputting region IDs as the file names.

* setup selenium

* selenium opens a link related to each elevation-map of a specific biome, and saves the text links (so I don't have to hand-open them)

* I'll save the species and text by hand (assuming elevation data isn't listed)

* once I have a list of species and other details, I save it to csv, json, or another format

* then selenium opens this list, opens wikipedia for each, one at a time, and searches the text for elevation

* selenium saves out the species name (or an "unknown") for the species, and elevation, to a text file, along with the biome ID, and maybe the elevation code (from the heightmap) as a number or a color (probably a number, simplifies changing the heightmap later on)

Having done all this, I can start to assign species types, specific world tiles. The outputs for each region act as reference.

The only problem with the existing biome map (you can see it below, it's ugly) is that it has a lot of "inbetween" colors. There are a few things I can do here. I can treat those as a "mixing" between regions, dictating the chance of one biome's plants or the other's spawning. This seems a little complicated and dependent on a scraped-together standard rather than actual data. So I'm thinking instead I'll implement biome transitions in code, which makes more sense and decouples it from the underlying data. It also prevents species and terrain from generating in, say, towns on the borders of a region, where certain plants or terrain features would be unnatural. Part of what makes an ecoregion unique is that geography has led to relative isolation and evolutionary development of each region (usually thanks to mountains, rivers, and large impassable expanses like deserts).

Maybe I'll stuff it all into a giant bson file or maybe sqlite. Don't know yet.

As an entry level programmer I may not know what I'm doing, and I may be supposed to be looking for a job, but that won't stop me from procrastinating.

Data wrangling is fun.

-

I will need a laptop for uni and I thought you guys could give me some tips on good brands/models. I want it to have at least 16GB of RAM, preferably a newer generation i7 and a dedicated NVIDIA graphics card. The bigger the screen the better. I don't care too much about the battery, but I would like to have a few USB type A ports and some HDMI/DP outputs. The maximum price (shipping included) should be around 1000 - 1200€

-

After the long haul of designing, structuring and finally implementing, my side project is done. The only challenge I faced was to not lose interest or get distracted :p

I made this to get the hang of Haskell. It adds Haskell functionality to your shell and lets you apply functions to the outputs of other programs (ls, ps, df, etc.)

https://github.com/iostreamer-X/...

Your honest feedback is highly valued.

Thanks!

:D

-

!rant

Continuation from: https://devrant.com/rants/979267/...

My vision is to implement something that is inspired by Flow Based Programming.

The motivation for this is two fold

* Functional design - many advantages to this: pure functions mean consistent outputs for each input, and code that is testable, composable and easy to reason about. The functional reactive nature means events are handled as functions over time, thus eliminating statefulness

* Visual/Diagrammable - programs can be represented as diagrams, with components, connections and ports, there is a 1 to 1 relationship between the program structure and visual representation. This means high level analysis and design can happen throughout project development.

Just to be clear there are enough frameworks out there so I have no intentions of making a new one, this will make use of the least number of libraries I can get away with.

In my original post I used Highland.js as I've been following the project a while. But unfortunately documentation is lacking and it is a little bare bones; I need something that is a little more featureful to eliminate boilerplate code.

RxJS seems to be the answer: it is much better documented and provides WAY more functionality. And I have seen many reports of it being significantly easier to use.

Code speaks much louder, so stay tuned as I plan to produce a proof of concept (obligatory) todo app. Or if you're sick of those, feel free to make a request.

-

Hii devrant I'm new to programmings. I programmed my first program but it wrongly outputs this sentence. What is the error?

-

TIL python list variable assignment points to the original instance

I know this isn't reddit, but today I noticed that in python, when you assign a list variable to another variable, any change made through the new variable affects the original one. To copy a list you can assign a slice or use something that returns a new list:

l = [1,2,3,4]

l2 = l

l2.append(5)

print(l)

# Outputs [1, 2, 3, 4, 5]

l3 = list(l2) # also works with l2[:]

l3.append(6)

print("l2 = ", l2, "\nl3 = ", l3)

# Outputs l2 =  [1, 2, 3, 4, 5]

#         l3 =  [1, 2, 3, 4, 5, 6]

I wonder how the fuck I haven't encountered any bug when using lists while doing this. I guess I'm lucky I haven't used lists that way (which is strange, I know). I guess I still have a long way to go.

-
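One more gotcha worth knowing, sketched below: `list(...)` and `[:]` make shallow copies, so nested lists are still shared with the original. The variable names are just for illustration; `copy.deepcopy` from the standard library is what copies all the way down.

```python
import copy

nested = [[1, 2], [3, 4]]
alias = nested                  # same object, no copy at all
shallow = list(nested)          # new outer list, inner lists still shared
deep = copy.deepcopy(nested)    # fully independent copy

nested[0].append(99)            # mutates an inner list
nested.append([5])              # mutates the outer list

print(alias)    # follows every change
print(shallow)  # sees the inner change, not the outer one
print(deep)     # unchanged
```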

The human engineers are now about 1 or 2 steps away from creating strong AI. We now have decent drawing AI, decent voice actor AI and a confident bullshit AI. All components are already there. Actual human reasoning is in essence a confident bullshitter getting cross-checked a few times. Throw a few confident bullshitter AIs together, make them check each other's outputs, and you have basically human reasoning.

It is terrifying because of the coming implications for our society 🤡 At the same time, I am proud of the engineers who worked hard on the technical advancements for the AI and made amazing progress. I know how it feels to work tirelessly on a complicated technical task 💻🌙

-

Our software outputs some xml and a client has another company loading this xml into some data warehouse and doing reporting on it. The other company are saying we are outputting duplicate records in the data.

I look and see something like this:

<foo name="test">

<bar value="2" />

<bar value="3" />

</foo>

They say there are two foo records with the name test..

We ask them to send the xml file they are looking at. They send an xlsx (Excel!) file which looks like this:

name value

test 2

test 3

We try asking them how they get xlsx from the xml, but they just go back to our client asking us to find what we changed, because it was working before. Well, we didn't change anything. This foo has two bar elements inside it, which is valid data and valid xml. If you can't read xml just say so and we can output another format!

-
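A small Python sketch of what is probably going on, and it is only a guess since the other company never said how they convert the file: flattening each bar into its own row repeats the parent's name, which looks like duplicate foo records if you only read the spreadsheet.

```python
import xml.etree.ElementTree as ET

xml_text = """<foo name="test">
  <bar value="2" />
  <bar value="3" />
</foo>"""

root = ET.fromstring(xml_text)
# The sample has foo as the root element; a real file may wrap several foo
# elements in some container, which is assumed here
foos = [root] if root.tag == "foo" else root.findall("foo")

# One row per bar, with the parent's name copied onto each row
rows = [(foo.get("name"), bar.get("value")) for foo in foos for bar in foo.findall("bar")]

print(len(foos), "foo record(s)")
print(rows)
```

Counting foo elements gives one record, while counting flattened rows gives two.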

Dumb question time!

I'm writing a bash script that outputs some progress info to stdout (stuff like "Doing this... ok", "Doing that... ok") before outputting a list of names (to stdout too).

I'd like to be able to pipe this list of names to a second script for processing, by doing a simple :

~$ script1 | script2

Unfortunately, as you may have guessed, the progress info is piped as well, and is not displayed on the screen.

Is it considered a bad practice to redirect that progress info to stderr so it is not sent into the pipe ?

Is there any "design pattern" for this kind of use case, where you want to be able to choose what to display and what to pipe to a program that accepts input from stdin ?

-
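For what it's worth, sending progress and diagnostics to stderr and keeping stdout for the actual data is a common Unix convention. A minimal sketch of the idea in Python rather than bash (the function name `list_names` and the sample names are made up; the demo captures both streams in memory just to show the split):

```python
import io
import sys

def list_names(names, out=None, err=None):
    """Write progress messages to err and the data itself to out."""
    out = sys.stdout if out is None else out
    err = sys.stderr if err is None else err
    print("Doing this... ok", file=err)   # progress: shown on screen, not piped
    print("Doing that... ok", file=err)
    for name in names:
        print(name, file=out)             # data: this is what the pipe carries

# Demo: capture both streams separately
captured_out, captured_err = io.StringIO(), io.StringIO()
list_names(["alice", "bob"], out=captured_out, err=captured_err)
print(repr(captured_out.getvalue()))
```

With the defaults, piping the script into another program passes only the names along, while the progress lines still reach the terminal.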

WTF!! Function that returns multiple outputs!! Why not make a datetime object and return the whole fucking object!!

-

META-LUCK: A Pseudo-Ontology Of An Authentic Future

* * *

I think in the not-too-distant future we will abandon the idea of authenticity (messaging, corporate responsibility, ethos) in favor of other factors, such as cost. We won't abandon it and replace it with fakeness, so much as realize that we don't, as a society, favor it at all, not in the absolute sense, nor in the relative sense, like in relation to things like cost.

We will either abandon authenticity entirely, or alternatively, transition to a world where authenticity is the highest valued quality, being adjacent to truth.

Here's why. Authenticity, like all social qualities, can be 1. mimicked, 2. simulated, or 3. emulated.

In the first case, a corporation, product, leader, organization, or other, apes authenticity simply by its knowable, external features. It mimics the sounds, like a jungle bird copying a jack hammer to scare away predators or attract mates.

There is no understanding, let alone model, external or internal. The successful mimic

is little more than a lifeless, unthinking puppet.

In the second case, the attempted authentic simulates authenticity: That is, an external model is formed, or pattern, that is predictable, and archetypal. It may have an internal model even, a set of policies and processes for deciding the external-facing behavior.

But these policies and internal processes and models are all strictly outward facing. It is purely pathological in its goal, desiring, at minimum, only to achieve *externally attributed* authenticity (public opinion) rather than those internal changes that generate the true perception of the public--a perception not of surface behaviors and shrewd calculating policies and processes, but of a quality of authenticity for its own sake. This is in some sense the difference between the mundane and the atavistic, that the benefit, while not definable strictly, is assumed as a 'matter of course', culturally, within the organization or individual or company. It is to say, a *quality* of the thing, that *generates* outputs of a certain character and nature, rather than a *goal* that is attained 'after-the-fact' by behaviors generated for reasons *other* than being authentic.

Here we reach the limitation of definitions.

Finally, we arrive at case number three, the emulation. We have in part already described it, but let's try and summarize a bit.

The Authentic is an *originator* of behavior and outward appearances, being an internal quality of a person or organization. It originates behavior, rather than being the goal of behavior and outward appearances.

Its benefit is assumed, though not always nameable or definable, even though this sounds naive, superseding other factors like cost and profit. As such the authentic does not emerge in a cost-focused environment, not readily, not often, and not cheaply either.

It is in some sense an experimental state of being, of goal-seeking only after the fact of "being true to one's origins" is established above and beyond those goals--setting and achieving only those goals which ultimately align with the origin and intent of the authentic. -

short: The admin with enough xp is ill, there is no one else with Varnish xp, and after 1 restart Varnish outputs only 503s.

long: the original admin is ill, but he gave me a project to migrate a TYPO3 installation to a new server. That's ok.

Plan: I move 150 GB of data with rsync to the new server, let specialists do something, switch IPs between the new and old server, and clear Varnish with a restart.

Reality: +2 hours to migrate the data because of wrong info from the admin, 7 hours preparing the switch, 5 minutes for the switch, 3 hours to find out the F*****G Varnish is the single point of failure. The t3 guys and I agree to look at what went wrong the next day.

ALL HAPPENED TODAY!

Plan for tomorrow: speak with the boss about booking the extra hours on that day so I don't go over 10 hours, debug that fucking Varnish, and delete some servers from another project from the backup system and monitoring. -

A beginner learning Java here. I have been beating around the bush on the internet for the past decade. This is my understanding so far. Take a bottle of water: the bottle may be considered a CLASS and the water in it the objects (atoms); some objects may be of the same kind and others may differ in some properties. Another way of understanding it: Human Being is a CLASS, and Male and Female are objects of the class Human Being. Here again the objects may differ in properties such as gender, age, and body parts. Zoo might be a class, with animals (objects), elephants (objects), tigers (objects), and others too. The human properties above can also be added to the Zoo class: male, female, body parts, age, eating habits, crawlers, four-legged, two-legged, flying, water animals, mammals, herbivores, carnivores... whatever. This is my understanding so far. Corrections are always welcome. I will be happy if my answer is modified; comment below.

And for basic level.

Learn from input, output devices

Then memory: cache (quick access), RAM (temporary runtime memory), and hard disk (permanent memory) all sit in the CPU machine. To explain these as far as I know: right now I am writing this answer with mobile data on. Suppose I suddenly switch my phone off and on again. Cache provides instant access for navigation, network, etc. RAM is temporary: my Quora answer will be lost, as it was stored in RAM before the switch-off. But my Quora app, my gallery, and others are in permanent internal storage (on PCs, generally hard disks) and won't be affected. This all happens in the CPU, right? Okay, now one question: who manages all these commands, inputs, and outputs? That's software, which may be Windows, Mac, iOS, or Android for mobiles. These operating systems are the managers of the computer's component setup.

Java is a high-level language, whereas computers understand only binary, i.e. low-level code made of 0s and 1s. They understand only things like 00101, 1110000101, 0010, 1100 (let these be ABCD in binary). Numbers are coded in 0s and 1s, and so are lowercase letters and other symbols. The program we write is compiled to bytecode by the Java compiler, and the JVM (Java Virtual Machine) then acts as the interpreter for that bytecode. But not in C.

Let us C…

Do comment. Thank you -

I've been programming for quite a while. I know Java and C#, but I decided to pick up another language, C++, so I enrolled in a class at my college. My professor is GOD AWFUL. 4 weeks in and WE DIDN'T EVEN TOUCH THE #$@&% KEYBOARD. You'd think that we would at least learn inputs or outputs, right? Instead we've been busting ass learning how to format our homework. What a waste of time.

On that note, if there are any good C++ classes on Udemy and you've had a good experience with one, I would love your advice, since there are many to choose from. I'm gonna learn this one way or another, and Udemy looks more useful than that person I'm obligated to call "professor". -

FUCKING FUCK FUCK FUCK

So yesterday, following Java class, I went to my next class. Everything went well (or so I thought), and in my next class my phone blows up with notifications (changes in grades notify my phone). I look down and my Java grade goes from an A to a D in seconds, and I was just so confused. After he finished grading it goes up to a C, but I was still confused. So the next day I go into class and talk to him about my grade and he says, “You never fix your projects, so why would I grade any of them? I’ll just give you F’s.” I responded, “I am confused about what I’m doing wrong, my outputs are correct” (it was a few simple projects where I had to make shapes with stars, for example a triangle), and he responded with “Oh well, I can’t help you.” So now I have a C, and I did everything right, but of course because it wasn’t his way it was wrong.

He just makes me so mad. When a student asks for help, who decides to respond with “I can’t help”? He can, but he just won’t.

Fuck him. -

Hey everyone :-) - Hope you're all doing well & staying safe. I just have a question for you all: I have a project I am working on, a command line tool to track the storage on my PC & laptop. Right now it outputs my remaining space, used space and storage capacity :-), and it also shows these numbers on a bar chart & pie chart - I'm proud of it! :D It's written in Python too - would love to know what other things you guys would add to it? Any ideas? I'd really appreciate it :-) cheers! <3
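For readers wondering what the core of such a tool might look like, here is a minimal sketch using the standard library's `shutil.disk_usage`. It is not the poster's code: the function names, the GiB units and the text bar are assumptions made for illustration.

```python
import shutil

GIB = 1024 ** 3  # bytes per gibibyte


def storage_report(path="."):
    """Return capacity, used and free space for the disk holding `path`."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    percent_used = 100.0 * usage.used / usage.total if usage.total else 0.0
    return {
        "total_gib": usage.total / GIB,
        "used_gib": usage.used / GIB,
        "free_gib": usage.free / GIB,
        "percent_used": percent_used,
    }


def text_bar(percent, width=20):
    """Render a percentage as a fixed-width text bar, clamped to 0..100."""
    clamped = max(0.0, min(100.0, percent))
    filled = int(width * clamped / 100)
    return "[" + "#" * filled + "-" * (width - filled) + "]"


if __name__ == "__main__":
    report = storage_report()
    print(f"capacity: {report['total_gib']:.1f} GiB")
    print(f"used:     {report['used_gib']:.1f} GiB")
    print(f"free:     {report['free_gib']:.1f} GiB")
    print(text_bar(report["percent_used"]))
```

One idea that follows naturally from this shape: since `storage_report` takes a path, the same function can be called once per mount point or drive to report on several disks.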

-

So, I have updated tslint and now I have close to a hundred warnings related to order of imports. 😔

I tried several tools for import sorting, but none of them outputs the imports the way tslint expects. 😒

God damn it, I have to sort it all manually. 😩 -

This is how my macbook keybboard writes the letter "b". It’s not happening all the time though, sometimes it outputs b and other times bb, wtf, I only pressed it once. And it's happening randomly, fuck this shit, I can't even take it to apple customer service and leave it there for a week or more. I guess I'm living with it and won't bbother fixing it. fuck b errr

-

If you don't count meals/toilet breaks/shower then my record would be 15 hours straight (08:00-ish to midnight 24hr clock) for a crap-tier black-white Nonogram/Picross generator that outputs near unsolvable grids because I know sh[BLEEP] on the games' generation algorithm. Yey /s. Petition to open /r/shittyprograms in parallel to /r/shittyrobots to celebrate how shitty my piece of a generator is.

-

Have been working all day long on a dataset.. Worked like a charm in the end.. Went for dinner, now its outputs have changed altogether and I haven't touched the code, I think Skynet is here.

-

Can there be a happy rant?

This is going to be a bit of a rambling semi coherent story here:

So there's this customer who just doesn't know what their data schema is or how they use it (they're a conglomeration of companies, so maybe you get how that works out in a database). For every record there's like a ton of reference-number type things mapped all over the DB to fit each company's needs.

To each company the data means something different, they use the data differently, and despite their claims otherwise, I think there are some logical conflicts in there regarding things like "This widget is owned by company A, division B, user C.". I'm also pretty sure different companies actually don't agree on who owns what... but when I show them they just sort of dance around what they've said in the past...

So I write a report (just an SQL query that outputs ... somewhere ... I mean what isn't that?) that tells them about all the things that happened given X, Y, Z.

Then every damn morning they'd get all up in arms about how some things are 'missing' but sometimes they don't know what or why because they've no clue what the underlying data actually is / their own people don't enter the data in a consistent way. (garbage in garbage out man...)

So I've struggled with this for a few weeks and been really frustrated. Every morning when I'm trying to do something else ... emails about how something isn't working / missing.

In the meantime I'm also frustrated by inquiries about "hey this is just a simple report right?" (to be clear folks asking that aren't being jerks, and they're not wrong ... it really should be simple)

Anyway my boss being the good guy he is offers to take it over, so I can do some things. Also sometimes it helps just to have someone else own something / not just look it over.

So a few days into this.... yup, emails coming in about things 'missing' or 'wrong' every day.

Like it sucks, but it's nice to see it suck for someone else too as validation. -

I’m trying to update a job posting so that it’s not complete BS and doesn't deter juniors from applying... but honestly this is so tough... no wonder these postings get so much BS in them...

Maybe the devRant community can help me tackle this conundrum.

I am looking for a junior ML engineer. Basically somebody I can offload a bunch of easy menial tasks to, like “helping data scientists debug their docker containers”, “integrating some of our models with 3rd party REST APIs for governance”, “extend/debug our CI”, “write some preprocessing functions for raw data”. I’m not expecting the person to know any of the tech we are using, but they should at least be competent enough to google “what is docker” or how GitHub Actions work. I’ll be reviewing their work anyhow. Also the person should be able to speak to data scientists on topics relating to accuracy metrics and model inputs/outputs (not so much the deep end of how the models work).

In my opinion i need either a “mathy person who loves to code” (like me) or a “techy person who’s interested in data science”.

What do you think is a reasonable request for credentials/experience? -

I finally got the LSTM to a training and validation loss of < 0.05 for predicting the digits of a semiprime's factors.

I used selu activation with lecun normal initialization on a dense decoder, and compiled the model with Adam as the optimizer using mean squared error.

Selu is self-normalizing, meaning activations tend toward a mean of 0 and a standard deviation of 1 from layer to layer, which mitigates the exploding/vanishing gradient problem. And I can get away with this specifically because the decoder is dense: selu's self-normalizing property is *only* established for dense layers.
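The self-normalizing claim can be checked numerically. The sketch below is plain Python, not the model from this post: it applies selu to standard-normal inputs and measures the mean and standard deviation of the result. The two constants are the published SELU values; the sample size and seed are arbitrary choices for the illustration.

```python
import math
import random

# Published SELU constants (Klambauer et al., 2017).
ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805


def selu(x):
    """Scaled exponential linear unit."""
    if x > 0:
        return SCALE * x
    return SCALE * ALPHA * (math.exp(x) - 1.0)


def mean_and_std(values):
    """Population mean and standard deviation of a list of floats."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    return mean, math.sqrt(variance)


def demo(n=100_000, seed=0):
    """Apply selu to n standard-normal samples and summarize the output."""
    rng = random.Random(seed)
    activations = [selu(rng.gauss(0.0, 1.0)) for _ in range(n)]
    return mean_and_std(activations)


if __name__ == "__main__":
    mean, std = demo()
    # Both values should land close to 0 and 1 respectively.
    print(f"mean={mean:.3f} std={std:.3f}")
```

With zero-mean, unit-variance inputs the output statistics stay near (0, 1), which is the fixed point the constants were chosen for. This only illustrates the activation itself; it says nothing about how it interacts with the LSTM part of the network.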

I chose Adam, even though this isn't a sparse problem, because Adam excels on noisy problems and non-stationary objectives (definitely this), and because Adam typically doesn't require a lot of hyperparameter tuning it's ideal here, especially considering I don't know what the hyperparameters should be to begin with.

I did work out some general guidelines on training quantity vs validation, etc.