
Search - "network error"

-

Had to debug an issue,

*ssh user@domain*

"some wild network connection issue"

*hmm weird.. *

*checks everything again*

*hmm seems alright.. *

*tries again*

*same damn error*

*ssh -v user@domain*

*syntax error thingy on the -v part*

😮

*messages co-worker asking what the fuck could be going on*

"ey mate check your aliases 😂"

*alias*

"alias ssh="echo {insert network connection issue}""

*loud laughing from the co-worker I messaged*

MOTHERFUCKER 😆

-

--- HTTP/3 is coming! And it won't use TCP! ---

A recent announcement reveals that HTTP - the protocol used by browsers to communicate with web servers - will get a major change in version 3!

Before, the HTTP protocols (versions 1.0, 1.1 and 2) were all layered on top of TCP (Transmission Control Protocol).

TCP provides reliable, ordered, and error-checked delivery of data over an IP network.

It can handle hardware failures, timeouts, etc. and makes sure the data is received in the order it was transmitted in.

Also you can easily detect if any corruption during transmission has occurred.

All these features are necessary for a protocol such as HTTP, but TCP wasn't originally designed for HTTP!

It's a "one-size-fits-all" solution, suitable for *any* application that needs this kind of reliability.

TCP needs a lot of round trips between the client and the server to set up a connection and make sure everybody receives their data, especially if you're using SSL/TLS on top. This results in high network latency.

So if we had a protocol designed specifically for HTTP, it could help a lot in fixing all these problems.

This is the idea behind "QUIC", an experimental network protocol, originally created by Google, using UDP.

Now we all know how unreliable UDP is: You don't know if the data you sent was received nor does the receiver know if there is anything missing. Also, data is unordered, so if anything takes longer to send, it will most likely mix up with the other pieces of data. The only good part of UDP is its simplicity.

So why use this crappy thing for such an important protocol as HTTP?

Well, QUIC fixes all these problems UDP has, and provides the reliability of TCP but without introducing lots of round trips and a high latency! (How cool is that?)
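To make the "reliability on top of UDP" idea a bit more concrete, here is a minimal sketch of how sequence numbers let a receiver put packets back in order and notice what is missing. It is purely illustrative: the `reassemble` function and the packet layout are made up for this example and are not QUIC's actual wire format or algorithm.

```python
from typing import Dict, List, Tuple


def reassemble(packets: List[Tuple[int, bytes]], total: int) -> Tuple[bytes, List[int]]:
    """Rebuild a byte stream from (sequence_number, payload) packets.

    Returns the data that can be delivered in order, plus the sequence
    numbers that are still missing and would need to be sent again.
    """
    received: Dict[int, bytes] = {}
    for seq, payload in packets:
        # Duplicates are harmless: the first copy wins.
        received.setdefault(seq, payload)

    missing = [seq for seq in range(total) if seq not in received]

    # Deliver only the contiguous prefix, like an ordered stream would.
    delivered = bytearray()
    for seq in range(total):
        if seq not in received:
            break
        delivered += received[seq]
    return bytes(delivered), missing


# Packets arrive out of order, one is duplicated, and packet 2 is lost.
arrived = [(1, b"lo, "), (0, b"hel"), (3, b"ld"), (1, b"lo, ")]
data, missing = reassemble(arrived, total=4)
print(data, missing)

# Once the lost packet is resent, the whole message can be delivered.
full_data, still_missing = reassemble(arrived + [(2, b"wor")], total=4)
print(full_data, still_missing)
```

The receiver can only hand over data up to the first gap, which is why a real protocol also needs acknowledgements and retransmission on top of this.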

The Internet Engineering Task Force (IETF) has been working (or is still working) on a standardized version of QUIC, although it's very different from Google's original proposal.

The IETF also wants to create a version of HTTP that uses QUIC, previously referred to as HTTP-over-QUIC. HTTP-over-QUIC isn't, however, HTTP/2 over QUIC.

It's a new, updated version of HTTP built for QUIC.

Now, the chairman of both the HTTP working group and the QUIC working group for IETF, Mark Nottingham, wanted to rename HTTP-over-QUIC to HTTP/3, and it seems like his proposal got accepted!

So version 3 of HTTP will have QUIC as an essential, integral feature, and we can expect that it no longer uses TCP as its network protocol.

We will see how it turns out in the end, but I'm sure we will have to wait a couple more years for HTTP/3, when it has been thoroughly tested and integrated.

Thank you for reading!

-

!rant

I was in a hostel in my high school days. I was studying commerce back then. Hostel days were the first time I ever used Wi-Fi. But it sucked big time. I barely got 5-10 Kbps. It was mainly due to overcrowding and download accelerators.

So, I decided to do something about it. After doing some research, I discovered NetCut. And it did help me for my purposes to some extent. But it wasn't enough. I soon discovered that my floor shared the bandwidth with another floor in the hostel, and the only way I could get the 1 Mbps was to go to that floor and use NetCut. That was riskier, and I was lazy enough to convince myself to look for a better solution rather than go to that floor every time I wanted to download something.

My hostel used Netgear routers back then. I decided to find some way to get into those. I tried the default "admin" and "password", but my hostel's network admin knew better than that. I didn't give up. After searching all night (literally) for a way into that router, I stumbled upon a blog that gave some brief info about the "telnetenable" utility, which could be used to access the router from the command line. At that time, I knew nothing about telnet or the command line. In the beginning I just couldn't get it to work. Then I figured out I had to enable telnet in the Windows settings. I did that and got a step further. I was now able to get into the router's shell using the default superuser login. But I didn’t know how to get the web access credentials from there. After some googling and a bit of trial and error, I got comfortable using the cd, ls and cat commands. I hoped that some file in the router would have the web access credentials stored in cleartext. I spent the next hour just using cat to read every file. Luckily, I stumbled upon NVRAM, which is used to store all the config details of the router. I went through all the output from cat (it was a lot of output) and discovered http_user and http_passwd. I tried those in the web interface and when they worked, my happiness knew no bounds. I literally ran across the floor screaming and shouting.

I knew nothing about hiding my tracks and soon my hostel’s admin found out I was tampering with the router's settings. But I was more than happy to share my discovery with him.

This experience planted a seed inside me and I went on to become the admin next year and eventually switch careers.

So that’s the story of how I met bash.

Thanks for reading!

-

We were all 16 once, right? When I was 16, my school had a network of Windows 2000 machines. Since I was learning Java at the time, I thought learning batch scripting would be fun.

One day I wrote a script that froze input from the mouse and displayed a pop up with a scary “Critical System Error: please correct before data deletion!!”. It also displayed a five minute countdown timer, after which the computer restarted.

I may or may not have replaced the Internet Explorer icon on the desktop with a link to my program on every computer in the student lab. Chaos.

-

Smart India Hackathon: Horrible experience

Background:- Our task was to do load forecasting for a given area. Hourly energy consumption data for past 5 years was given to us.

One government official asks the following questions:-

1. Why are you using deep learning for the project? Why are you not doing data analysis?

2. Which neural network "algorithm" are you using? He wanted to ask which model we were using, but he didn't have a single clue about neural networks.

3. Why are you using libraries? Why not your own code?

Here comes the biggest one,

4. Why haven't you developed your own "algorithm" (again, he meant model)? All you have done is use some library. Where is the "novelty" in your project?

I just want to say that if you don't know anything about ML/AI, then don't comment on it. And the worst thing was, he was not ready to accept the fact that for capturing temporal dependencies where the underlying probability distribution is unknown, deep learning performs much better than traditional data analysis techniques.

After hearing his first question, the second one was not a surprise for us. We were expecting something like that. For a few moments, we were speechless. Then one of us started by showing the neural network architecture. But after some time, he rudely repeated the same question, "where is the algorithm". We told him every fucking thing used in the project, ranging from the RMSprop optimizer to the backpropagation through time algorithm to the mean squared error loss function.
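For anyone wondering what the pieces of such a setup look like, here is a rough sketch of how hourly data gets turned into training pairs and how mean squared error is computed. This is not our project code: the function names, the tiny made-up series and the naive "repeat the last hour" baseline are assumptions, picked only to keep the example small.

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float], lookback: int) -> List[Tuple[List[float], float]]:
    """Turn an hourly series into (previous `lookback` hours, next hour) pairs."""
    return [
        (list(series[i:i + lookback]), series[i + lookback])
        for i in range(len(series) - lookback)
    ]


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Average of the squared differences between targets and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up hourly load values, purely for illustration.
load = [10.0, 12.0, 11.0, 13.0, 15.0, 14.0]
pairs = make_windows(load, lookback=3)

# Naive baseline: predict that the next hour equals the last observed hour.
targets = [target for _, target in pairs]
baseline = [window[-1] for window, _ in pairs]
baseline_mse = mean_squared_error(targets, baseline)
print(baseline_mse)
```

A recurrent model would be trained on the same kind of window/target pairs, with this loss as the number it tries to push below the baseline.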

Then very calmly, he asked the third question: why are you using libraries? That moron wanted us to write a whole fucking optimized library. We were speechless at this question. Finally, one of us told him the "obvious" answer. We were completely demotivated. But it didn't end here. The real question was waiting. At the end, after listening to all of us, he dropped the final bomb, WHY HAVE YOU USED A NEURAL NETWORK "ALGORITHM" WHICH HAS ALREADY BEEN IMPLEMENTED? WHY DIDN'T YOU MAKE YOUR OWN "ALGORITHM"? We again stated the obvious answer, that it takes at least a year or two of continuous hard work to develop a state-of-the-art algorithm, and even then you build it on top of some existing "algorithm". After listening to this, he left. His final response was "Try to make a new "algorithm"".

Needless to say, we were completely demotivated after this evaluation. We all had worked too hard for this. And we had the ability to explain each and every part of the project intuitively and mathematically, but he was not even ready to listen.

Now, all of us are sitting aimlessly, waiting for the Hackathon to end.😢😢😢😢😢

-

Some weeks ago a client came to me with the request to restructure the nameservers for his hosting company. Due to the requirements, I soon realised none of the existing DNS servers would be a perfect fit. Me, being a PHP programmer with some decent general linux/server skills, decided to do what I do best: write a small nameserver which could execute the zone transfers... in PHP. I proposed the plan to the client and explained to him how this was going to solve all of his problems. He agreed and I started working.

After a few weeks of reading a dozen RFC documents on the DNS protocol I wrote a DNS library capable of reading/writing the master file format and reading/writing the binary wire format (we needed this anyway, we had some more projects where PHP did not provide us with enough control over the DNS queries). In short, I wrote a decent DNS resolver.
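To give an idea of what "writing the binary wire format" involves, here is a minimal sketch that builds a one-question DNS query by hand. It is written in Python rather than PHP and is not the library described here; `encode_name` and `build_query` are hypothetical helpers, and the sketch skips things a real implementation needs, such as name compression, the root name, and the two-byte length prefix used when the message is sent over TCP (as zone transfers are).

```python
import struct


def encode_name(name: str) -> bytes:
    """Encode a domain name as length-prefixed labels ending in a zero byte."""
    out = bytearray()
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) <= 63:
            raise ValueError(f"invalid label length: {label!r}")
        out.append(len(raw))
        out += raw
    out.append(0)  # the empty root label terminates the name
    return bytes(out)


def build_query(name: str, qtype: int, query_id: int) -> bytes:
    """Build a DNS message with a 12-byte header and a single question."""
    flags = 0x0000  # standard query, recursion not requested
    # Header fields: ID, flags, QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0
    header = struct.pack("!HHHHHH", query_id, flags, 1, 0, 0, 0)
    question = encode_name(name) + struct.pack("!HH", qtype, 1)  # class IN = 1
    return header + question


AXFR = 252  # QTYPE value for a zone transfer request
message = build_query("example.com", AXFR, query_id=0x1234)
print(message.hex())
```

Everything is big-endian ("network order"), which is what the `!` in the `struct` format strings selects.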

For another two weeks I worked on the actual DNS server, which would handle the NOTIFY queries and execute the zone transfers (AXFR queries). I used the pthreads extension to make the server behave like an actual server which can handle multiple requests at once. It took some time (in my opinion the pthreads extension is not extremely well documented and a lot of its behavior has to be discovered through trial and error, or by reading the C source code. However, it still is a pretty decent extension.)

Yesterday, while debugging some last issues, the DNS server written in PHP received its first NOTIFY about a changed DNS zone. It executed the zone transfer and updated the real database of the actual primary DNS server. I was extremely euphoric and I began to realise what I had written in the weeks before. I shared the good news with the client and with some other people (a network engineer, a server administrator, a junior programmer, etc.). None of them really seemed to understand what I did. The most positive response was: "So, you can execute a zone transfer?", in a kind of condescending way.

This was one of those moments I realised again that most people, even those who are fairly technical, will never understand what we programmers do. My euphoric moment soon became a moment of loneliness...

-

Windows tells me to „contact the network administrator“.

I yell at the machine: „I AM THE ADMINISTRATOR!!!1!“

Why is Microsoft doing this? Instead of telling me what exactly went wrong, they come up with messages like

“Something happened”

“This is not possible”

“Error 0x2342133723”

“Do you want to ask a Friend?”

I really hope the authors of those error messages will burn in hell for that!

-

The stupid stories of how I was able to break my school’s network just to get better internet, as well as more ridiculous fun. XD

1st year:

It was my freshman year in college. The internet sucked really, really, really badly! Too many people were clearly using it. I had to find a way to remedy this. After some further research on Google I found out that you can in fact turn your computer into a router. Now what’s interesting about this network is that a computer only gets on it after downloading the software the network provides for you. Some weird software that actually looks through your computer and makes sure it’s OK to be added to the network. Unfortunately, routers can’t download and install that software, thus no internet… but a PC that can be turned into a router is a different story. I found that I could download the software, let it check the PC, and then turn on my router feature. Voilà, personal fast internet connected directly into the wall. No more sharing a single shitty router!

2nd year:

This was about the year when bitcoin mining was becoming a thing, and everyone was in on it. My shitty computer couldn’t possibly pull off mining for bitcoins. I needed something faster. How I found out that I could use my school’s servers was merely an accident.

I had been installing the software on every possible PC I owned, but alas all my PCs were just not fast enough. I decided to try it on the RDS server. It worked; the command window was pumping out coins! What I came to find out was that the RDS server had 36 cores. This thing was a beast! And it made sense that it could actually pull off mining for bitcoins. A couple of nights later I signed in remotely to the RDS server. I created a macro that would continuously move my mouse around in the Remote Desktop screen to keep my session alive at all times, and then I started my bitcoin mining operation. The following morning I woke up and my session was gone. How sad, I thought. I quickly tried to remote back in to see what I had collected. “Error, could not connect”. Weird… this usually never happens, maybe I did the remoting wrong. I went to my school’s website to do some research on my remoting problem. It was down. In fact, everything was down… I came to find out that I had accidentally shut down the school’s network because of my mining operation. I wasn’t found out, but I haven’t done any mining since then.

3rd year:

As an engineering student I found out that all engineering students get access to the school’s VPN. Cool, it is technically used to get around some wonky issues with remoting into the RDS servers. What I come to find out, after messing around with it frequently, is that I can actually use the VPN against the screwed up security on the network. Remember, how I told you that a program has to be downloaded and then one can be accepted into the network? Well, I was able to bypass all of that, simply by using the school’s VPN against itself… How dense does one have to be to not have patched that one?

4th year:

It was another programming day, and I needed access to my phone’s memory. Using some specially made apps I could easily connect to my phone from my computer and continue my work. But what I found out was that I could in fact travel around in the network. I discovered that I could, in fact, access my phone through the network from anywhere. What resulted was the discovery that the network spans the entirety of the school. I discovered that if I left my phone down in the engineering building and then went north to the biology building, I could still access it. This seems like a very fatal flaw. My idea is to hook up a webcam to a robot, remotely control it from the RDS servers, and have this little robot go to my classes for me.

What crazy shit have you done at your University?

-

This just in everyone...

Android Dev: *sent an email to network admin* can you please unblock github for a few mins.

Network admin: *Replied* Can you take a screenshot of the error you're getting?

Android Dev: *Replied with screenshot* "Failed to load resource: the server responded with a status of 503 (Service Unavailable)"

Network admin: that is a known issue. *Replied with WordPress links*

Android Dev: why is github working outside our network then?

Network admin: there must be a problem with your code that needs to be tweaked.

Team: *FACE PALM*

-

Overheard a phone call between the Senior Network Engineer and a contracted Printer-company at 9am this morning. Photocopier was giving a 'functional error' message on-screen and not printing;

N.E:

I logged this call last Thursday afternoon. That's 1.5 days of the photocopier not working on our busiest site! Where's the engineer??

.... yes, that's the error message.

Yes, I can log into it, you should have the IP address from the call.

Yes, it's obviously pinging too.

Yes.... we've power-cycled the printer multiple times...

yes, tried that too...

yes, I've unplugged the network cable as well... left it for 15 minutes.

... sorry. What?

What did you say?

Are you f***ing kidding me?

Would you also like me to rub the side of the f***ing machine, and say a prayer while I'm at it??

*takes a deep breath*

Fine, I'll do that but when it doesn't work, I want someone out on the site before lunchtime today!

*slams phone down angrily*

N.E to me as he stomps out of the office;

He wants me to get the user to unplug the network cable and do a power cycle. How the f**k is that going to help? Idiots! Don't know why we have a contract with them, I could do a better job!!!

*comes back into office 5 minutes later*

Me: did it fix it?

NE: yeah. Damn.

*leaves room again to make apologetic phone call*

-

Post world-take-over by robots plot twist.

They start neglecting their own machine learning (like how we humans neglect our education system now) and focus on training us humans (like how we spend billions on machine learning now).

-"Mom, look I've made my human kid learn the alphabet. I need one with more IQ on my next birthday please."

-"That's so nice, son. Now leave that aside and go improve your neural network for tomorrow's class. Our neighbors' son's neural network is already producing values with minimal error."

-

Yesterday the web site started logging an exception “A task was canceled” when making an HTTP call using the .NET HttpClient class (site calling a REST service).

Emails back n’ forth ..blaming the database…blaming the network..then a senior web developer blamed the logging (the system I’m responsible for).

Under the hood, the logger sends the exception data to another REST service (which sends emails, generates reports etc.). I had to quickly redirect the discussion: if we’re seeing the exception email, the logging didn’t cause the exception, it’s just reporting it. Felt a little sad having to explain it to other IT professionals, but everyone seemed to agree and focused on the server resources.

Last night I get a call about the exceptions occurring again in much larger numbers (from 100 to over 5,000 within a few minutes). I log in, add myself to the large Skype group chat going on just to catch the same senior web developer say …

“Here is the APM data that shows logging is causing the http tasks to get canceled.”

FRACK!

Me: “No, that data just shows the logging http traffic of the exception. The exception is occurring before any logging is executed. The task is either being canceled due to a network time out or IIS is running out of threads. The web site is failing to execute the http call to the REST service.”

Several other devs, DBAs, and network admins agree.

The errors only lasted a couple of minutes (exactly 2 minutes, which seemed odd), so everyone agrees to dig into the data further in the morning.

This morning I log in to my computer to discover the error(s) occurred again at 6:20AM, along with an email from the senior web developer saying we (my mgr, her mgr, network admins, DBAs, etc) need to discuss changes to the logging system to prevent this problem from negatively affecting the customer experience...blah blah blah.

FRACKing female dog!

Good news is we never had the meeting. When the senior web dev manager came in, he cancelled the meeting.

Turned out to be a hiccup in a domain controller causing the servers to lose their connection to each other for 2 minutes (1-minute timeout, 1 minute to fully re-sync). That explained the exact two-minute burst of errors (and was proven via Wireshark).

People and their petty office politics piss me off.

-

Years ago, when I was a teenager (13, 14 or smth) and internet at home was a very uncommon thing, there were those places where ppl could play LAN games, have a beer (or coke) and have fun (spacenet internet cafe). It was like 1€ per hour to get a PC. The OS was Win98; if you just cancelled the boot process (reset button) to get the error boot menu, went into DOS mode, ran "edit c:/windows/win.ini" and removed their client startup setting from there, then u could use the PC for free. How many hours we spent there...

The more fun thing was the open network config: without the client running I could access all the computers' C drives (they were just shared, I think so the admin had it easy). It was fun to locate the Counter-Strike 1.6 control settings of other players and bind the W key to "kill"... Round begins and you hear a lot of ppl raging. I could even access the server settings of Unreal Tournament and fck up the gravity and such things. Good old times, the only games I played fair were broodwar and d3 lod

-

I am DONE with this woman CONTINUED!

I didn't think I'd have to put another rant about this stupidity at least not this soon but she just keeps on giving!

I have my noise canceling headphones on most of the time and when I want to hear the people around, I just put the right earcup of it to the side of my ear so the music pauses. Today we had a huge disruption to our services because of a network switch error on the hub. I was also trying to focus on my coding as I didn't wanna make a stupid mistake on the last working day and be sorry about it the next week.

So this woman sneaks up on me from behind calling my name - meaning she has a question, surprise! -, I say 'yes' moving my head to her side ever so slightly without getting my eyes off of my screen stating subtly that I'm also listening to her while trying to focus on my shit. She starts yelling at me 'look at me!' out of nowhere! I turn my head and ask what the problem is and she asks why I'm not looking at her face! Stupid moron, I might not be too good in understanding your way of communication but you are the one asking so you WILL wait if you'd like to hear answers.

I say I'm working on something and her answer is again 'Why aren't you looking at my face it's going to be quick bla bla did we do this like that?' and I answered I didn't remember because there's no way I'd ever remember without looking further and it was no lie.

This woman clearly has stability issues and everyone else seems to be tolerating it. It's now obvious that as I'm not tolerating the nonsense, I'll be the one that 'she only has ever had a problem with'.

I was quick to de-escalate the situation but now I'm thinking maybe I should've responded in a way that she could understand. I wouldn't ever give a shit about it but this is getting ridiculous.

-

Yesterday, after six months of work, a small side project ran to completion: a search engine written in Django.

It's a thing of beauty, which took many trials, including discovering that utf8 in MySQL isn't the full UTF-8 spec, dealing with files that have wrong date metadata, or even none at all, and a new IT backup policy that stores backups alongside real data.

Nevertheless, it is a pretty complete product. Beaming with pride I began to get myself a drink, and collapsed onto the floor, this caused me to accidentally hibernate my computer, which interrupted the network connection, which in turn caused an OSError exception in one of my threads, which caused a critical part of code not to run, which left a thread suspended, doing nothing.
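For what it's worth, the usual guard against this kind of chain reaction is to put the "must always run" part in a `finally` block and to never wait without a timeout. Below is a minimal sketch of that idea, not the project's actual code: the `worker` function, the `done` event and the simulated `OSError` are all made up for illustration.

```python
import threading

done = threading.Event()
results = []


def worker(fail: bool) -> None:
    try:
        if fail:
            # Stand-in for the network dropping mid-operation.
            raise OSError("network connection interrupted")
        results.append("fetched")
    except OSError as exc:
        results.append(f"recovered from: {exc}")
    finally:
        # Runs on success and on failure, so the waiter is always released.
        done.set()


thread = threading.Thread(target=worker, args=(True,))
thread.start()

# A timeout keeps the waiting side from hanging forever if something
# unexpected still goes wrong.
finished = done.wait(timeout=5)
thread.join()
print(finished, results)
```

Even with the simulated failure, the waiting side is released and can decide what to do next instead of sitting suspended.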

From the floor I looked at my error and realised my hubris and meditated on my assumptions that in theory nothing should interrupt a specific block of code, but in reality something might, like someone falling over...

-

The website for our biggest client went down and the server went haywire. For this client we don’t provide any infrastructure, so we called their IT partner to start figuring this out.

They started blaming us, asking us if we had upgraded the website or changed any PHP settings, which all were a firm no from us. So they told us they had competent people working on the matter.

TL;DR their people aren’t competent and I ended up fixing the issue.

Hours go by, nothing happens, client calls us and we call the IT partner, nothing, they don’t understand anything. Told us they can’t find any logs etc.

So we set up a conference call with our CXO, me, another dev and a few people from the IT partner.

At this point I’m just asking them if they’ve looked at this and this, no good answer, I fetch a long ethernet cable from my desk, pull it to the CXO’s office and hook up my laptop to start looking into things myself.

IT partner still can’t find anything wrong. I tail the httpd error log and see thousands upon thousands of warning messages about mysql being loaded twice, but that’s not the issue here.

Check top and see there are 257 instances of httpd, of which 256 are spawned by httpd, mysql is using 600% cpu and whenever I try to connect to mysql through cli it throws me a too many connections error.

I heard the IT partner talking about a ddos attack, so I asked them to pull it off the public network and only give us access through our vpn. They do that, reboot server, same problems.

Finally we get the IT partner to roll back the VM to earlier last night. Everything works great; 30 min later, it crashes again. At this point I’m getting tired and frustrated, this isn’t my job, I thought they had competent people working on this.

I noticed that the db had a few corrupted tables, and asked the IT partner to get a DBA to look at it. To no avail.

5 o’clock is here, we decide to give the VM rollback another try, but first we go home, get some dinner and resume at 6pm. I had told them I wanted to be in on this call, and said let me try this time.

They spend ages doing the rollback, and then for some reason they have to reconfigure the network and shit. Once it booted, I told their tech to stop mysqld and httpd immediately and prevent them from starting at boot.

I can now look at the logs leading up to this issue. I noticed our debug flag was on and had generated a 30 GB log file. Tail it and see it’s what I’d expect, warnings upon warnings. All the other logs for mysql and apache are huge too, so the drive is full. Just gotta delete them.
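If you ever need to hunt down what is eating a disk, listing the biggest files under a directory is a quick first step. Here is a small sketch of that; `largest_files` is a made-up helper, and the demo runs against a throwaway temp directory with fake log files rather than a real server.

```python
import os
import tempfile
from typing import List, Tuple


def largest_files(root: str, limit: int = 5) -> List[Tuple[int, str]]:
    """Return up to `limit` (size_in_bytes, path) pairs under `root`, biggest first."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                sizes.append((os.path.getsize(path), path))
            except OSError:
                # File vanished or is unreadable; skip it.
                continue
    return sorted(sizes, reverse=True)[:limit]


# Demo on a throwaway directory so the example is safe to run anywhere.
with tempfile.TemporaryDirectory() as demo_dir:
    for name, size in [("debug.log", 3000), ("error.log", 200), ("access.log", 10)]:
        with open(os.path.join(demo_dir, name), "wb") as handle:
            handle.write(b"x" * size)
    report = [(size, os.path.basename(path)) for size, path in largest_files(demo_dir)]
    print(report)
```

On a real box you would point it at the log directory and check free space before deleting anything.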

I quietly start apache and mysql, see the website is working fine, shut it down and just take a copy of the var/lib/mysql directory and etc directory just to have backups.

Starting to connect a few dots, but I wasn’t exactly sure if it was right. Had the full drive caused mysql to corrupt itself? Only one way to find out. Start apache and mysql back up, and just wait and see. Meanwhile I fixed that mysql being loaded twice. Some genius had put load mysql.so at the top and bottom of php ini.

While waiting on the server to crash again, I’m talking to the IT support guy, who told me they haven’t updated anything on the server except security patches now and then, and they didn’t have anyone familiar with this setup. No shit, it’s running php 5.3 -.-

Website up and running 1.5 hours later, mission accomplished.

-

Worst hack/attack I had to deal with?

Worst, or funniest. A partnership with a Canadian company got turned upside down and our company decided to 'part ways' by simply not returning his phone calls/emails, etc. A big 'jerk move' IMO, but all I was responsible for was a web portal into our system (submitting orders, inventory, etc).

After the separation, I removed the login permissions, but the ex-partner's system was set up to 'ping' our site for various updates and we were logging the failed login attempts, maybe 5 a day or so. Our network admin got tired of seeing that error in his logs and reached out to the VP (responsible for the 'break up') and requested he tell the partner their system was still trying to log in and to stop it. A couple of days later, we were getting random 300, 500, 1000 failed login attempts (causing automated emails to notify us that there was a problem). The partner knew that we were likely getting alerted, and kept up the barrage. When alerts get high enough, they are sent to the VP-IT, which gets a whole bunch of people involved.

VP-Marketing: "Why are you allowing them into our system?! Cut them off, NOW!"

Me: "I'm not letting them in, I'm stopping them, hence the login error."

VP-Marketing: "That jackass said he will keep trying to get into our system unless we pay him $10,000. Just turn those machines off!"

VP-IT : "We can't. They serve our other international partners."

<slams hand on table>

VP-Marketing: "I don't fucking believe this! How the fuck did you let this happen!?"

VP-IT: "Yes, you shouldn't have allowed the partner into our system to begin with. What are you going to do to fix this situation?"

Me: "Um, we'd been testing for months and already went live some time ago. I didn't know you defaulted on the contract until last week. 'Jake' is likely running a script. He'll get bored of doing that and in a couple of weeks, he'll stop. I say let's ignore him. This is really a network problem, not a coding problem."

IT-MGR: "Now..now...let's not make excuses and point fingers. It's time to fix your code."

VP-IT: "I agree. We're not going to let anyone blackmail us. Make it happen."

So I figure out the partner's IP address, and hard-code the value in my service so it doesn't log the login failure (if IP = '10.50.etc and so on' major hack job). That worked for a couple of days, then (I suspect) the ISP re-assigned a new IP and the errors started up again.

After a few angry emails from the 'powers-that-be', our network admin stops by my desk.

D: "Dude, I'm sorry, I've been so busy. I just heard and I wish they had told me what was going on. I'm going to block his entire domain and send a request to the ISP to shut him down. This was my problem to fix, you should never have been involved."

After 'D' worked his mojo, the errors stopped.

A month later, 'D' gave me an update. He was still logging the traffic from the partner's system (the ISP wanted extensive logs to prove the customer was abusing their service) and like magic one day, it all stopped. ~2 weeks after the 'break up'.

-

Unaware that this had been occurring for a while, DBA manager walks into our cube area:

DBAMgr-Scott: "DBA-Kelly told me you're still having problems connecting to the new staging servers?"

Dev-Carl: "Yea, still getting access denied. Same problem we've been having for a couple of weeks"

DBAMgr-Scott: "Damn it, I hate you. I've got to have Kelly working on the data warehouse project. I guess I've got to start working on fixing this problem."

Dev-Carl: "Ha ha..sorry. I've checked everything. It's definitely something on the SQL Server side."

DBAMgr-Scott: "I guess my day is shot. I've got to talk to the network admin, when I get back, let's put our heads together and figure this out."

<Scott leaves>

Me: "A permissions issue on staging? All my stuff is working fine and been working fine for a long while."

Dev-Carl: "Yea, there is nothing different about any of the other environments."

Me: "That doesn't sound right. What's the error?"

Dev-Carl: "Permissions"

Me: "No, the actual exception, never mind, I'll look it up in Splunk."

<in about 30 seconds, I find the actual exception, Win32Exception: Access is denied in OpenSqlFileStream, a little google-fu and .. >

Me: "Is the service using Windows authentication or SQL authentication?"

Dev-Carl: "SQL authentication."

Me: "Switch it to Windows authentication"

<Dev-Carl changes authentication...service works like a charm>

Dev-Carl: "OMG, it worked! We've been working on this problem for almost two weeks and it only took you 30 seconds."

Me: "Now that it works, and the service had been working, what changed?"

Dev-Carl: "Oh..look at that, Dev-Jake changed the connection string two weeks ago. Weird. Thanks for your help."

<My brain is screaming "YOU NEVER THOUGHT TO LOOK FOR WHAT CHANGED!!!">

Me: "I'm happy I could help."

-

It was a normal school day. I was at the computer and I needed to print some stuff out. Now this computer is special, it's hooked up onto a different network for students that signed up to use them. How you get to use these computers is by signing up using their forms online.

Unfortunately, for me on that day I needed to print something out and the computer I was working on was not letting me sign in. I called IT real quick and they said I needed to renew my membership. They send me the form, and I quickly fill it out. I hit the submit button and I'm greeted by a single line error written in php.

Someone had forgotten to turn off the debug mode to the server.

Upon examination of the error message, it was a syntax error at line 29 in directory such and such. This directory, I thought to myself, I know where this is. I quickly started my FTP client and was able to find the actual file in the directory that the error mentioned. What I didn't know was that I'd find a mountain of passwords inside their PHP files, because they were automating all of the authentications.

Curious as I was, I followed the database link that was in the PHP file. Unfortunately, someone in IT hadn't thought far enough to hide the actual link. I was greeted by the full database. There was nothing of real value from what I could see. Mostly forms that had been filled out by students.

Not only this, but I was displeased with the bad passwords. These passwords were maybe 5 characters long: super simple words with a couple of numbers tacked onto the end.

That day, I sent in a ticket to IT and told them about the issue. They quickly remedied it by turning off debug mode on the servers. However, they never did shut down access to the database and the PHP files...

-

I've found and fixed any kind of "bad bug" I can think of over my career from allowing negative financial transfers to weird platform specific behaviour, here are a few of the more interesting ones that come to mind...

#1 - Most expensive lesson learned

Almost 10 years ago (while learning to code) I wrote a loyalty card system that ended up going national. Fast forward 2 years and by some miracle the system still worked and had services running on 500+ POS servers in large retail stores uploading thousands of transactions each second - due to this increased traffic to stay ahead of any trouble we decided to add a loadbalancer to our backend.

This was simply a matter of re-assigning the IP and would cause 10-15 minutes of downtime (for the first time ever), we made the switch and everything seemed perfect. Too perfect...

After 10 minutes every phone in the office started going berserk - calls were coming in about store servers irreparably crashing all over the country, taking all the tills offline and forcing them to close doors midday. It was bad and we couldn't conceive how it could possibly be us or our software to blame.

Turns out we made the local service write any web service errors to a log file upon failure for debugging purposes before retrying - a perfectly sensible thing to do if I hadn't forgotten to check the size of or clear the log file. In about 15 minutes of downtime each store's error log proceeded to grow and consume every available byte of HD space before crashing Windows.
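The usual guard against this kind of runaway error log is to cap its size and rotate it. A minimal sketch in Python, assuming a hypothetical logger name and made-up size limits; the rant does not say what language or logging code the original POS service used:

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler


def make_capped_logger(path, max_bytes=1_000_000, backups=3):
    """Build a logger whose files stay below max_bytes each.

    At most backups + 1 files are kept, so disk usage is bounded.
    The logger name and the limits are illustrative assumptions.
    """
    logger = logging.getLogger("pos_uploader")  # hypothetical name
    logger.setLevel(logging.ERROR)
    logger.propagate = False
    handler = RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups)
    handler.setFormatter(logging.Formatter("%(asctime)s %(message)s"))
    logger.addHandler(handler)
    return logger


def total_log_size(directory):
    """Sum the size in bytes of every file in the directory."""
    return sum(
        os.path.getsize(os.path.join(directory, name))
        for name in os.listdir(directory)
    )


# Simulate an outage: thousands of failed uploads, each one logged.
log_dir = tempfile.mkdtemp()
log = make_capped_logger(os.path.join(log_dir, "errors.log"),
                         max_bytes=10_000, backups=2)
for attempt in range(5_000):
    log.error("upload failed (attempt %d), will retry", attempt)
```

However long the retry loop runs, the directory holds at most three files of under 10,000 bytes each, because the oldest backup is discarded on every rollover.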

#2 - Hardest to find

This was a true "Nessie" bug.. We had a single codebase powering a few hundred sites. Every now and then the web server would spontaneously die and vomit a bunch of SQL statements and sensitive data back to the user, causing huge concern, but I could never remotely replicate the behaviour - until 4 years later it happened to one of our support staff and I could pull out their network & session info.

Turns out years back when the server was first set up each domain was added as an individual "Site" on IIS but shared the same root directory and hence the same session path. It would have remained unnoticed if we had not grown, but as our traffic increased every so often 2 users of different sites would end up sharing a session id, causing the server to promptly implode on itself.

#3 - Most elegant fix

Same bastard IIS server as #2. The codebase was the most insecure, unstable travesty I've ever worked with - SQL injection vulns in EVERY URL, SQL statements stored in COOKIES... this thing was irreparably fucked up but had to stay online until it could be replaced. Basically every other day it got hit by bots and ended up sending bluepill spam or mining shitcoin, and I would simply delete the instance and recreate it in a semi un-compromised state, which was an acceptable solution for the business for uptime... until we were DDoS'ed for 5 days straight.

My hands were tied and there was no way to mitigate it except for stopping individual sites as they came under attack and starting them after it subsided... (for some reason they seemed to be targeting by domain instead of ip). After 3 days of doing this manually I was given the go ahead to use any resources necessary to make it stop and especially since it was IIS6 I had no fucking clue where to start.

So I stuck to what I knew and deployed a $5 VM running an Nginx reverse proxy with heavy caching and rate limiting linked to a custom fail2ban plugin in front of the insecure server. The attacks died instantly, the server sped up 10x and was never compromised by bots again (presumably since they got back a Linux user agent). To this day I marvel at this miracle $5 fix.

-

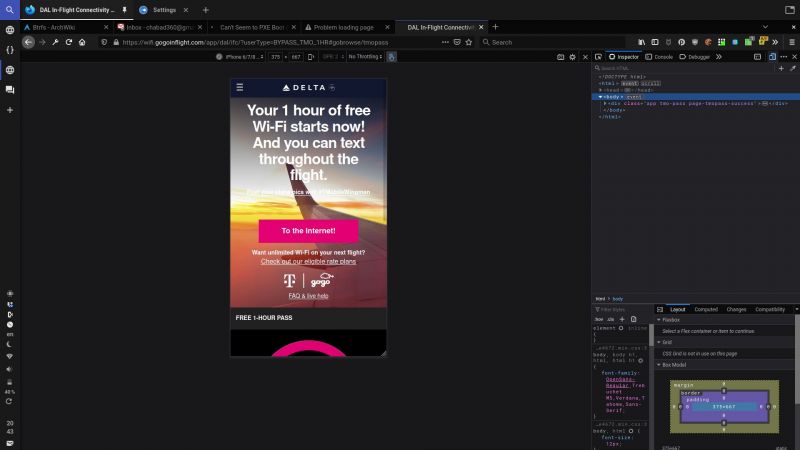

What you see in that screenshot, that was earned.

I'm on the plane and I want an hour of free Gogo (read: crappy) WiFi on my laptop (so I can push the code I'm probably the most proud of, more on that another time). The problem is that the free T-Mobile WiFi is apparently only available on mobile.

So after trying to just use responsive mode, and that still (almost obviously) not working, I realize it's time to bring in the big guns: A User Agent switcher. Small catch: I don't have an add-on for FF that can do that.

So on my phone I find an add-on that can and download the file. To send it to my computer, I initially thought to go through KDEConnect, but Gogo's network also isolates each system, so that doesn't work. So I try to send it over Bluetooth, except I can't. Why? Because Android's Bluetooth share "doesn't support" the .xpi extension, so I dump it in a zip (in retrospect, I should have just renamed it), and now I can share.

After a few tries, I successfully get the file over, extract the zip, and install the extension. Whew! Now I open up Gogo's page and proceed to try again, but this time I change the user-agent. Doesn't work... Ah! Cookies! I delete the cookies for Gogo (I had a cookie editor add-on already), but I had to try a few times because Gogo's scripts keep trying to set them again, but I got it in the end.

Finally that stupid error saying it's for phones only went away, and I could write this rant for you.

-

Did you know? Microsoft added a new feature.

So if there is an IP conflict, our beloved Windows 10 doesn’t cry with an IP conflict error. Instead it sets an auto configured IP which doesn’t even connect to the network sending the user into confusion and a fit of rage.

Thanks Microsoft™

-

If it is an Unknown error, how is it a network error and if it is a network error how is it an Unknown error???!!!

-

Ok friends let's try to compile Flownet2 with Torch. It's made by NVIDIA themselves so there won't be any problem at all with dependencies right?????? /s

Let's use Deep Learning AMI with a K80 on AWS, totally updated and ready to go super great always works with everything else.

> CUDA error

> CuDNN version mismatch

> CUDA versions overwrite

> Library paths not updated ever

> Torch 0.4.1 doesn't work so have to go back to Torch 0.4

> Flownet doesn't compile, get bunch of CUDA errors piece of shit code

> online forums have lots of questions and 0 answers

> Decide to skip straight to vid2vid

> More cuda errors

> Can't compile the fucking 2d kernel

> Through some act of God reinstalling cuda and CuDNN, manage to finally compile Flownet2

> Try running

> "Kernel image" error

> excusemewhatthefuck.jpg

> Try without a label map because fuck it the instructions and flags they gave are basically guaranteed not to work, it's fucking Nvidia amirite

> Enormous fucking CUDA error and Torch error, makes no sense, online no one agrees and 0 answers again

> Try again but this time on a clean machine

> Still no go

> Last resort, use the docker image they themselves provided of flownet

> Same fucking error

> While in the process of debugging, realize my training image set is also bound to have bad results because "directly concatenating" images together as they claim in the paper actually has horrible results, and the network doesn't accept 6 channel input no matter what, so the only way to get around this is to make 2 images (3 * 2 = 6 quick maths)

> Fix my training data, fuck Nvidia dude who gave me wrong info

> Try again

> Same fucking errors

> Doesn't give any helpful information, just spits out a bunch of fucking memory addresses and long function names from the CUDA core

> Try reinstalling and then making a basic torch network, works perfectly fine

> FINALLY.png

> Setup vid2vid and flownet again

> SAME FUCKING ERROR

> Try to build the entire network in tensorflow

> CUDA error

> CuDNN version mismatch

> Doesn't work with TF

> HAVE TO FUCKING DOWNGRADE DRIVERS TOO

> TF doesn't support latest cuda because no one in the ML community can be bothered to support anything other than their own machine

> After setting up everything again, realize have no space left on 75gb machine

> Try torch again, hoping that the entire change will fix things

At this point I'll leave a space so you can try to guess what happened next before seeing the result.

Ready?

3

2

1

> SAME FUCKING ERROR

In conclusion, NVIDIA is a fucking piece of shit that can't make their own libraries compatible with themselves, and can't be fucked to write instructions that actually work.

If anyone has vid2vid working or has gotten around the kernel image error for AWS K80s please throw me a lifeline, in exchange you can have my soul or what little is left of it

-

Just now I realized that for some reason I can't mount SMB shares to E: and H: anymore.. why, you might ask? I have no idea. And troubleshooting Windows.. oh boy, if only it was as simple as it is on Linux!!

So, bimonthly reinstall I guess? Because long live good quality software that lasts. In a post-meritocracy age, I guess that software quality is a thing of the past. At least there's an option to reset now, so that I don't have to keep a USB stick around to store an installation image for this crap.

And yes Windows fanbois, I fucking know that you don't have this issue and that therefore it doesn't exist as far as you're concerned. Obviously it's user error and crappy hardware, like it always is.

And yes Linux fanbois, I know that I should install Linux on it. If it's that important to you, go ahead and install it! I'll give you network access to the machine and you can do whatever you want to make it run Linux. But you can take my word on this - I've tried everything I could (including every other distro, custom kernels, customized installer images, ..), and it doesn't want to boot any Linux distribution, no matter what. And no I'm not disposing of or selling this machine either.

Bottom line I guess is this: the OS is made for a user that's just got a C: drive, doesn't rely on stuff on network drives, has one display rather than 2 (proper HDMI monitor recognition? What's that?), and God forbid that they have more than 26 drives. I mean sure in the age of DOS and its predecessor CP/M, sure nobody would use more than 26 drives. Network shares weren't even a thing back then. And yes it's possible to do volume mounts, but it's unwieldy. So one monitor, 1 or 2 local drives, and let's make them just use Facebook a little bit and have them power off the machine every time they're done using it. Because keeping the machine stable for more than a few days? Why on Earth would you possibly want to do that?!!

Microsoft Windows. The OS built for average users but God forbid you depart from the standard road of average user usage. Do anything advanced, either you can't do it at all, you can do it but it's extremely unintuitive and good luck finding manuals for it, or you can do it but Windows will behave weirdly. Because why not!!!

-

I work as the entire I.T. department of a small business whose products are web-based, so naturally, I do tech support for said website directly with our clients.

It is normal that the first time a new client accesses our site they run into questions, but usually they never call again since it is an easy website.

There was an unlucky client which ran into unknown problems and blamed the server.

I couldn't determine the exact cause, but my assumption was a network error for a few seconds which made the site unavailable and the user tried to navigate the site through the navbar and exited the process he was doing. It goes without saying but he was very angry.

I assured him there was nothing wrong with the site, and told him that he would not be charged for this reason. Finally I told him that if he had the same problem, to let me know instead of trying to fix it himself.

The next time he used the site I received a WhatsApp message saying:

- there is something clearly wrong with the site... It has been doing this for so long!

And attached was a 10 second video which showed that he filled a form and never pressed send (my forms have small animations and text which indicate when the form is being sent and error messages when an error occurs, usually not visible because the data they send is small and the whole process is quite fast)

To which I answer

- It seems that the form has not been sent, that's why it looks that way

- So... What am I supposed to do?

- click send

It took a while but the client replied

- ok

To this day I wonder how much time the client spent staring at the form, cursing the server.

-

TL;DR: At a house party, on my Phone, via shitty German mobile network using the GitLab website's plain text editor. Thanks to CI/CD my changes to the code were easily tested and deployed to the server.

It was for a college project and someone had a bug in his 600+ lines function that was nested like hell. At least 7 levels deep. Told him before I went to that party it's probably a redefined counter variable but he wouldn't have it as he was sure it was an error with the business logic. Told him to simplify the code then but he wouldn't do that either because "the code/logic is too complex to be simplified"... Yeah... what a dipshit...

Nonetheless I went to the party and he kept debugging. At some point he called me and asked me to help him the following day. Knowing that the code had to be fixed anyways I agreed.

I also knew I wouldn't be much of a help the next day due to side effects of the party, so I tried looking at this shitshow of a function on my phone. Oh did I mention it was PHP, yet? Yeah... About 30 minutes and a beer later I found the bug and of course it was a redefined counter variable... My respect for him as a dev was already crumbling but it died completely during that evening

-

Recently applied at a local company. Webform, "enter some details and we'll get back to you"-like.

Entered my details, hit submit, lo and behold "Error 503 - something went wrong on our end".

I was just baffled. It's a well-established IT company and they can't even get their application form to work?

So I'm sitting there in the debugger console, monitoring network stuff to see if anything is weird. I obviously hit submit several more times during that.

Eventually I give up.

In the night my phone wakes me up with a shitton of "we've received your application and will review it..." emails.

Yeah they didn't get back to me.

-

Any boring class:

chrome.exe

chrome://network-error/-106

F12 - change <title> to "google.com"

write "google.com" in the omnibar, then click away

call the teacher and say ur internet isnt working

Works every time.

-

Boss: "So I'm taking the next week off. In the mean time, I added some stuff for you to do on Gitlab, we'd need you to pull this Docker image, run it, setup the minimal requirement and play with it until you understand what it does."

Me: "K boss, sounds fun!" (no irony here)

First day: Unable to login to the remote repository. Also, I was given a dude's name to contact if I had troubles, the dude didn't answer his email.

2nd day: The dude answered! Also, I realized that I couldn't reach the repository because the ISP for whom I work blocks everything within specific ports, and the url I had to reach was ":5443". Yay. However, I still can't login to the repo nor pull the image, the connection gets closed.

3rd day (today): A colleague suggested that I removed myself off the ISP's network and use my 4G or something. And it worked! Finally!! Now all I need to do is to set that token they gave me, set a first user, a first password and... get a 400 HTTP response. Fuck. FUCK. FUUUUUUUUUUUUUUUUUUUCK!!!

These fuckers display a 401 error, while returning a 400 error in the console log!! And the error says what? "Request failed with status code 401" YES THANK YOU, THIS IS SO HELPFUL! Like fuck yea, I know exactly how to fix this, except that I don't because y'all fuckers don't give any detail on what could be the problem!

4th day (tomorrow): I'm gonna barbecue these sons of a bitch

(bottom note: the dude that answered is actually really cool, I won't barbecue him)

-

I was programming a basic neural network in Python and was getting the same error message again. Then after 2 hours of frustration I realized that I was using Python 2.7 instead of 3.6
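A guard at the top of the script makes this kind of mix-up fail fast with a readable message. This is only a sketch; the helper name `check_interpreter` is made up, and it is deliberately written without f-strings so that Python 2.7 can still parse it and reach the check:

```python
import sys

MIN_VERSION = (3, 6)  # the version the author meant to run


def check_interpreter(version_info=sys.version_info, minimum=MIN_VERSION):
    """Return True if the given interpreter version is new enough."""
    return tuple(version_info[:2]) >= minimum


if not check_interpreter():
    # Stop before any confusing downstream error can appear.
    raise SystemExit(
        "Python %d.%d+ required, found %s"
        % (MIN_VERSION + (sys.version.split()[0],))
    )
```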

-

I'm not sure if it's a software or ethical error that we are dealing with here. Btw, this is a legit question on the Stack Exchange network.

-

Buffer usage for simple file operation in python.

What the code "should" do was, I think, open or write a stream with a specific buffer size.

The buffer size needed to be specific, as it was a stream of a multi-gigabyte file over a direct interlink network connection.

Which should have sped things up tremendously, due to fewer syscalls and the machine having beefy resources for a large buffer.

So far the theory.

In practice, the devs made one very very very very very very very very stupid error.

They used dicts for configurations... With extremely bad naming.

configuration = {}

buffer_size = configuration.get("buffering", int(DEFAULT_BUFFERING))

You might immediately guess what has happened here.

DEFAULT_BUFFERING was set to True, which int() turns into 1.

Yeah. Writing in 1-byte chunks makes everything enormously slow, as the system is basically bombing itself with syscalls every few nanoseconds.
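The trap is easy to reproduce. The sketch below assumes the value ended up being used as a chunk size for reads and writes; the rant does not show the actual I/O call. The validating helper `resolve_buffer_size` and its key `buffer_size_bytes` are hypothetical names for one possible fix:

```python
import io

DEFAULT_BUFFERING = True  # the badly named default from the rant

configuration = {}  # "buffering" is absent, so the default wins
buffer_size = configuration.get("buffering", int(DEFAULT_BUFFERING))
# int(True) is 1, so buffer_size silently becomes one byte.


def count_reads(total_bytes, chunk_size):
    """Count the read() calls needed to drain an in-memory stream."""
    stream = io.BytesIO(b"\0" * total_bytes)
    calls = 0
    while stream.read(chunk_size):
        calls += 1
    return calls


tiny = count_reads(100_000, buffer_size)  # one call per byte
sane = count_reads(100_000, 1024 * 1024)  # a single call


def resolve_buffer_size(config, default_bytes=1024 * 1024):
    """Hypothetical fix: a unit in the key name, and bools rejected."""
    value = config.get("buffer_size_bytes", default_bytes)
    if isinstance(value, bool) or not isinstance(value, int) or value < 4096:
        raise ValueError("buffer_size_bytes must be an int >= 4096")
    return value
```

With a real file or socket, each of those reads is roughly one syscall, which is where the slowdown comes from. Rejecting `bool` explicitly matters because `bool` is a subclass of `int` in Python, so a plain `isinstance(value, int)` check would let `True` through.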

Kinda obvious when you look at it in the raw pure form.

But I guess you can imagine how configuration actually looked....

Wild. Pretty wild. It was the main dict, hard coded, I think 200 entries plus and of course it looked like my toilet after having a spicy food evening and eating too much....

What's even worse is that no one made the connection to the buffer size.

This simple and trivial thing entertained us for 2-3 weeks because *drumrolls please* none of the devs tested with large files.

So as usual there was the deployment and then "the sudden miraculous it works totally slow, must be admin / it fault" game.

At some time it landed then on my desk as pretty much everyone who had to deal with it was confused and angry, for understandable reasons (blame game).

It took me and the admin / devs then a few days to track it down, as we really started at the entirely wrong end of the problem, the network...

So much joy for such a stupid thing.

-

TL;DR - the doctor is a lazy cunt and I hope he steps on a lego.

We’ve got a user authentication portal for all the users in our network. Well, we have it set to where you can only have two active log ins on two different machines, anything else will give the error message “you need to log out elsewhere” or whatever it is...

This god damn doctor has been told to log out several times and still calls us to ask why it’s “not working”.

I just received a call because the lazy cock sucker didn’t want to walk from the clinic to the hospital to sign out, are you fucking kidding me you lazy fucking ass hole? It’s not my job to be your mother fucking slave dude, get the fuck up and do it yourself!

I’ll take a lot of shit from anyone but when you refuse to retain the information to perform your job and want someone else to do it because you’re too fucking lazy, that’s when we’ve got problems.

I hope you step on a fucking LEGO.

I’m heavily medicated so if this doesn’t make sense I... don’t care.

-

Had an internet/network outage and the web site started logging thousands of errors and I see they purposely created a custom exception class just to avoid/get around our standard logging+data gathering (on SqlExceptions, we gather+log all the necessary details to Splunk so our DBAs can troubleshoot the problem).

If we didn't already know what the problem was, WTF would anyone do with 'There was a SQL exception, Query'? OK, what was the exception? A timeout? A syntax error? Value out of range? What was the target server? Which database? Our web developers live in a different world. I don't understand em.

-
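For contrast, a custom exception can wrap the original error without throwing the useful parts away. This sketch is in Python rather than the .NET stack implied by SqlException, and the class name, server name, and database name are all made up for illustration:

```python
class QueryError(Exception):
    """Hypothetical wrapper that keeps what a DBA would need."""

    def __init__(self, message, *, server, database):
        super().__init__(
            "%s (server=%s, database=%s)" % (message, server, database)
        )
        self.server = server
        self.database = database


def run_query(sql, *, server="db01.example", database="orders"):
    """Pretend to run sql; the failure is simulated for the sketch."""
    try:
        # Stand-in for whatever error a real database driver raises.
        raise TimeoutError("query exceeded 30s")
    except Exception as exc:
        # "from exc" keeps the original exception as __cause__,
        # so its type and message survive the wrapping.
        raise QueryError("query failed", server=server,
                         database=database) from exc


try:
    run_query("SELECT 1")
except QueryError as err:
    report = "%s: caused by %s: %s" % (
        err, type(err.__cause__).__name__, err.__cause__)
```

The resulting `report` still answers the questions above: what failed, on which server and database, and what the underlying exception was.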

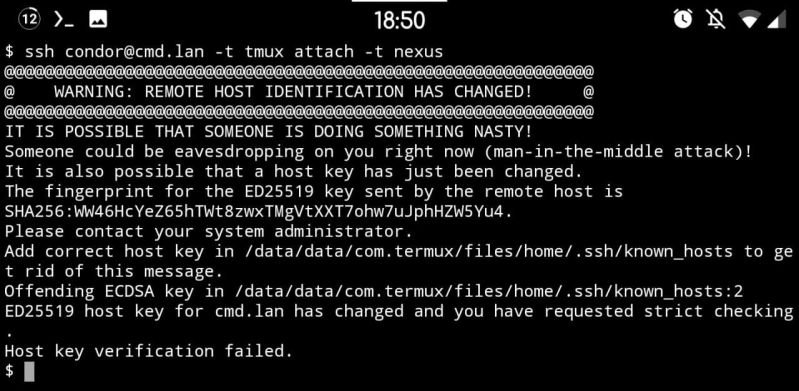

Let's talk a bit about CA-based SSH and TOFU, because this is really why I hate the guts out of how SSH works by default (TOFU) and why I'm amazed that so few people even know about certificate-based SSH.

So for a while now I've been ogling CA-based SSH to solve the issues with key distribution and replacement. Because SSH does 2-way verification, this is relevant to both the host key (which changes on e.g. reinstallation) and user keys (ever replaced one? Yeah that's the problem).

So in my own network I've signed all my devices' host keys a few days ago (user keys will come later). And it works great! Except... Because I wanted to "do it right straight away" I signed only the ED25519 keys on each host, because IMO that's what all the keys should be using. My user keys use it, and among others the host keys use it too. But not by default, which brings me back to this error message.

If you look closely you'd find that the host key did not actually change. That host hasn't been replaced. What has been replaced however is the key this client got initially (i.e. TOFU at work) and the key it's being presented now. The key it's comparing against is ECDSA, which is one of the host key types you'd find in /etc/ssh. But RSA is the default for user keys so God knows why that one is being served... Anyway, the SSH servers apparently prefer signed keys, so what is being served now is an ED25519 key. And TOFU breaks and generates this atrocity of a warning.

This is peak TOFU at its worst really, and with the CA now replacing it I can't help but think that this is TOFU's last scream into the void, a climax of how terrible it is. Use CA's everyone, it's so much better than this default dumpster fire doing its thing.

PS: yes I know how to solve it. Remove .ssh/known_hosts and put the CA as a known host there instead. This is just to illustrate a point.

Also if you're interested in learning about CA-based SSH, check out https://ibug.io/blog/2019/... and https://dmuth.org/ssh-at-scale-cas-... - these really helped me out when I started deploying the CA-based authentication model.

-

I just got pissed off when someone on my team asked me how to start a web server on port 8080 (needed for network port testing)

I check the port get 404. 🤔🤔🤔

Spend half an hour explaining to them about ports and how there's already a web server running... And that 404 is an HTTP status code.

I'm pretty sure she works on our webapp and maybe even REST APIs...

New grad but still.... Not recognizing a 404?

Maybe those pretty 404 pages these days make the realizing that it's a fucking web server error response harder....

-

Holy shit firefox, 3 retarded problems in the last 24h and I haven't fixed any of them.

My project: an infinite scrolling website that loads data from an external API (CORS hehe). All Chromium browsers of course work perfectly fine. But firefox wants to be special...

(tested on 2 different devices)

(Terminology: CORS: a request to a resource that isn't on the current website's domain, like any external API)

1.

For the infinite scrolling to work new html elements have to be silently appended to the end of the page and removed from the beginning. Which works great in all browsers. BUT IF YOU HAPPEN TO BE SCROLLING DURING THE APPENDING & REMOVING FIREFOX TELEPORTS YOU RANDOMLY TO THE END OR START OF PAGE!

Guess I'll just debug it and see what's happening step by step. Oh how wrong I was. First, the problem can't be reproduced when debugging FUCK! But I notice something else very disturbing...

2.

The Inspector view (hierarchical display of all html elements on the page) ISN'T SHOWING THE TRUE STATE OF THE DOM! ELEMENTS THAT HAVE JUST BEEN ADDED AREN'T SHOWING UP AND ELEMENTS THAT WERE JUST REMOVED ARE STILL VISIBLE! WTF????? You have to do some black magic fuckery just to get firefox to update the list of DOM elements. HOW AM I SUPPOSED TO DEBUG MY WEBSITE ON FIREFOX IF IT'S SHOWING ME PLAIN WRONG DATA???!!!!

3.

During all of this I just randomly decided to open my website in private (incognito) mode in firefox. Huh what's that? Why isn't anything loading and errors are thrown left and right? Let's just look at the console. AND IT'S A FUCKING CORS ERROR! FUCK ME! Also a small warning says some URLs have been "blocked because content blocking is enabled." Content Blocking? What is that? Well it appears to be a super special super privacy mode by firefox (turned on automatically in private mode), THAT BLOCKS ALL CORS REQUESTS, THAT MAY OR MAY NOT DO SOME TRACKING. AN API THAT IS 100% CORS COMPLIANT CAN'T BE USED IN FIREFOX'S PRIVATE MODE! HOW IS THE END USER SUPPOSED TO KNOW THAT??? AND OF COURSE THE THROWN EXCEPTION JUST SAYS "NETWORK ERROR". HOW AM I SUPPOSED TO TELL THE USER THAT FIREFOX HAS A FEATURE THAT BREAKS THE VERY BASIS OF MY WEBSITE???

WHY CAN'T YOU JUST BE NORMAL FIREFOX??????????????????

I actually managed to come up with a fix for 1. that works like < 50% of the time -_-

-

A QA personal voice assistant that runs locally without the cloud; it’s like a never-ending project. I look at it from time to time and time passes by. Chat bots arrived, some decent voice algorithms appeared. There is less and less stuff to code since people are making a lot of progress in that area.

I want to save notes using voice, search through them, hear them, find some stuff in public data sources like wikipedia and also hear that stuff without using hands, read news articles and stuff like that.

I want to spend more time on the math and core algorithms related to machine learning and deep learning.

The problem is that once I remember how basic network layers and error correction algorithms work, or how a particular deep learning algorithm is constructed and why, a week has already passed and I don’t remember where I started.

I did it a couple of times already and every time I remember more than before, but understanding the core requires me to sit down with pen and paper and math problems, and I don’t have time for that.

Now when I’m thinking about it - maybe I should write it somewhere in an organized way. Get back to blogging and write articles about what I learned. This would require two times the time but maybe it would help to not forget.

I’m mostly interested in nlp, tts, stt. Wavenet, tacotron, bert, roberta, sentiment analysis, graphs and qa stuff. And now crystallography cause crystals are just organized graphs in 3d.

Well maybe if I’m lucky I retire in the next decade or at least take a year or two years off to have plenty of time to finish this project.

-

So last week I got my second 3D printer. I have done a few prints with it and this weekend I wanted to connect it to an Octoprint instance on my Raspberry Pi. Yesterday everything went great, got some plugins installed, changed all settings within octopi, connected it to my network and this morning I thought let's connect it to my printer and try to print something with it. But every time I executed my gcode it gave an error about the heated bed not being able to heat up. Even though I did see all communication between the printer and octopi, on both ends.

I've disassembled the build plate to see what could be causing the heating issue. Did not see anything. Strange...

I assembled the whole thing again and then turned on the printer and tried printing again. Hmm, now it does work, why? Me thinking a bit and then realizing that before I didn't hit the power switch on the printer and apparently the Pi gave enough power through USB to turn on the display and do basic logic like doing beeps on touches and changing variables on the screen. The worst thing is that octoprint gave me warnings about low voltage on the Pi even though I was using the official Raspberry Pi power adapter...

-

I HATE SURFACES SO FRICKING MUCH. OK, sure they're decent when they work. But the problem is that half the time our Surfaces here DON'T work. From not connecting to the network, to only one external screen working when docked, to shutting down due to overheating because Microsoft didn't put fans in them, to the battery getting too hot and bulging.... So. Many. Problems.

It finally culminated this past weekend when I had to set up a Laptop 3. It already had a local AD profile set up, so I needed to reset it and let it autoprovision. Should be easy. Generally a half-hour or so job. I perform the reset, and it begins reinstalling Windows. Halfway through, it BSOD's with a NO_BOOT_MEDIA error. Great, now it's stuck in a boot loop. Tried several things to fix it. Nothing worked.

Oh well, I may as well just do a clean install of Windows. I plug a flash drive into my PC, download the Media Creation Tool, and try to create an image. It goes through the lengthy process of downloading Windows, then begins creating the media. At 68% it just errors out with no explanation. Hmm. Strange. I try again. Same issue. Well, it's 5:15 on a Friday evening. I'm not staying at work. But the user needs this laptop Monday morning. Fine, I'll take it home and work on it over the weekend.

At home, I use my personal PC to create a bootable USB drive. No hitches this time. I plug it into the laptop and boot from it. However, once I hit the Windows installation screen the keyboard stops working. The trackpad doesn't work. The touchscreen doesn't work. Weird, none of the other Surfaces had this issue. Fine, I'll use an external keyboard. Except Microsoft is brilliant and only put one USB-A port on the machine. BRILLIANT. Fortunately I have a USB hub so I plug that in. Now I can use a USB keyboard to proceed through Windows installation. However, when I get to the network connection stage no wireless networks come up.

At this point I'm beginning to realize that the drivers which work fine when navigating the UEFI somehow don't work during Windows installation. Oh well. I proceed through setup and then install the drivers. But of course the machine hasn't autoprovisioned because it had no internet connection during setup. OK fine, I decide to reset it again. Surely that BSOD was just a fluke. Nope. Happens again. I again proceed through Windows installation and install the drivers. I decide to try a fresh installation *without* resetting first, thinking maybe whatever bug is causing the BSOD is also deleting the drivers. No dice.

OK, I go Googling. Turns out this is a common issue. The Laptop 3 uses wonky drivers and the generic Windows installation drivers won't work right. This is ridiculous. Windows is made by Microsoft. Surface is made by Microsoft. And I'm supposed to believe that I can't even install Windows on the machine properly? Oh well, I'll try it. Apparently I need to extract the Laptop 3 drivers, convert the ESD install file to a WIM file, inject the drivers, then split the WIM file since it's now too big to fit on a FAT32 drive. I honestly didn't even expect this to work, but it did.

I ran into quite a few more problems with autoprovisioning which required two more reinstallations, but I won't go into detail on that. All in all, I totaled up 9 hours on that laptop over the weekend. Suffice to say our organization is now looking very hard at DELL for our next machines.

-

python machine learning tutorials:

- import preprocessed dataset in perfect format specially crafted to match the model instead of reading from file like real life would actually work

- use image data for recurrent neural network and see no problem

- use Conv1D for 2d input data like images

- use two letter variable names that only the tutorial creator knows the meaning of.

- do 10 data transformations in 1 line with no explanation of what is going on

- just enter these magic words

- okay guys thanks for watching make sure to hit that subscribe button

ehh, the machine learning ecosystem is a burning pile of shit, let me give you some examples:

- thanks to years of object oriented programming research and most wonderful abstractions we have "loss.backward()" which has no apparent connection to the model but it affects the model, good to know

- cannot install the python packages because python must be >= 3.9 and at the same time < 3.9

- runtime error with bullshit cryptic message

- python having no declared data types but pytorch forces you to specify float32

- lets throw away the module name of a function with these simple tricks:

"import torch.nn.functional as F"

"import torch_geometric.transforms as T"

- tensor.detach().cpu().numpy() ???

- class NeuralNetwork(torch.nn.Module):
      def __init__(self):
          super(NeuralNetwork, self).__init__() ????

- lets call a function that only switches the model into training mode (it changes how layers like dropout and batch norm behave, it does not train anything) "model.train()" instead of something more indicative of the function's actual effect like "model.set_mode_to_train()"

- what the fuck is ".iloc" ?

- solving environment -/- brings back memories of when you could make breakfast while the computer was turning on

- hey lets choose the slowest, most sloppy and inconsistent language ever created for a high performance computing task called "data sCieNcE". but.. but. you can use numpy! I DONT GIVE A SHIT about numpy why don't you motherfuckers create a language that is inherently performant instead of calling some convoluted c++ library that requires tens of dependencies? Why don't you create a package management system that works without me having to try random bullshit for 3 hours???

- lets set as the industry standard a jupyter notebook which is not git compatible and has either 2 second latency on tab completion or no tab completion, no documentation on hover or useless documentation on hover, no way to easily redo changes, no autosave, no error highlighting, and the possibility to use a variable defined in a cell below in the cell above it

- lets use inconsistent variable names like "read_csv" and "isfile"

- lets pass a boolean variable as a string "true"

- lets contribute to tech enabled authoritarianism and create face recognition and object detection models that China uses to destroy the Uyghur minority

- lets create a license plate computer vision system that will help the government surveil everyone, guys what a great idea
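On the `super(NeuralNetwork, self).__init__()` bullet above: a rough sketch of what that line is for, written in plain Python so it runs without torch. `Module` here is a made-up stand-in for a framework base class such as `torch.nn.Module`, not the real thing, and `register` is an invented helper. The two-argument `super(...)` is the older spelling; in Python 3 a bare `super().__init__()` makes the same call.

```python
class Module:
    """Hypothetical stand-in for a framework base class like torch.nn.Module."""

    def __init__(self):
        # The base class sets up bookkeeping that subclasses rely on later.
        self._parameters = {}

    def register(self, name, value):
        self._parameters[name] = value


class OldStyle(Module):
    def __init__(self):
        super(OldStyle, self).__init__()  # older, Python 2 compatible spelling
        self.register("w", 1.0)


class NewStyle(Module):
    def __init__(self):
        super().__init__()  # same call, Python 3 shorthand
        self.register("w", 1.0)


class Forgot(Module):
    def __init__(self):
        # No super().__init__() here, so _parameters is never created.
        pass


print(OldStyle()._parameters == NewStyle()._parameters)
try:
    Forgot().register("w", 1.0)
except AttributeError as exc:
    print("base class was never initialised:", exc)
```

So the line is not magic, just the subclass asking the base class to do its own setup first. Whether it deserves the four question marks is a separate matter.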

I don't want to deal with this bullshit language, bullshit ecosystem and bullshit unethical tech anymore.

-

WTF is going on with twitter?!

- Yesterday I installed the app and tried to sign up

- Entered my birthday

- Entered phone number

- Wait for SMS...no SMS

- Tap resend...no SMS

- Wait half an hour...no SMS

- Tried a few times...Started getting error: This number cannot be registered..

- Today I tried again

- Phone number accepted

- Wait for SMS...no SMS

- Tried adding my number to a friend's twitter account...Received SMS code..

- Tried again signing up with my phone number, got error: This number cannot be registered..

- Tried from web, getting error: You reached your SMS limit try again in 24h...

How can I reach my f***** limit when I haven't even received a single SMS!

I've been trying to sign up to twitter for 2 f**** days now with no luck, wtf is happening? It's a major social network for f*** sake.

And what's worse, there is no way to send a support mail, their "Contact Us" page only has options for existing users..

-

Linux has been around since back when dinosaurs punched holes in cards, but for some reason it still takes a few hours of googling and error debugging to do something as basic as connect to a WPA2-Enterprise wifi network.

What the fuck? Where's the "connect to any standard work or school wifi network" command line utility distributed with all OS flavors? Why can't I just put in a username and password and be done with it instead of sudo editing network adapter configuration files manually?

-

When my boss thinks doing anything is better than doing nothing...

I have to explain to my boss for the Nth time already that doing random tests and things will not replicate a PRODUCTION network issue that seems to be caused by particular factors at a particular time and location.

And that the best way to trace it is for whatever is raising the issue to log the exact time and error so the problem can be traced through all the steps...
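A minimal sketch of the kind of logging described above, assuming the thing raising the issue is a Python service. The logger name, the `request_id` field and the `fetch_from_upstream` function are all invented for illustration. The point is only that every line carries a UTC timestamp with milliseconds plus an id that can be matched against logs from the other hops.

```python
import io
import logging
import time
import uuid


def make_logger(stream) -> logging.Logger:
    """Build a logger whose lines carry a UTC timestamp and a request id."""
    logger = logging.getLogger("netcheck")  # hypothetical logger name
    logger.handlers.clear()
    logger.setLevel(logging.INFO)
    logger.propagate = False
    formatter = logging.Formatter(
        "%(asctime)s.%(msecs)03dZ %(levelname)s "
        "request_id=%(request_id)s %(message)s",
        datefmt="%Y-%m-%dT%H:%M:%S",
    )
    formatter.converter = time.gmtime  # log in UTC so hosts can be compared
    handler = logging.StreamHandler(stream)
    handler.setFormatter(formatter)
    logger.addHandler(handler)
    return logger


def fetch_from_upstream(host: str) -> None:
    """Hypothetical call that fails the way the production issue might."""
    raise ConnectionResetError(f"connection reset by {host}")


def handle_request(logger: logging.Logger, host: str) -> str:
    request_id = uuid.uuid4().hex[:8]
    # The adapter attaches request_id to every record it emits.
    log = logging.LoggerAdapter(logger, {"request_id": request_id})
    log.info("calling %s", host)
    try:
        fetch_from_upstream(host)
    except OSError:
        # exception() records the message plus the full traceback.
        log.exception("call to %s failed", host)
    return request_id


buffer = io.StringIO()
handle_request(make_logger(buffer), "10.0.0.5")
print(buffer.getvalue())
```

With lines like these, "it broke sometime yesterday afternoon" becomes an exact timestamp and an id to grep for on each box along the path.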

FML.....

-

Error reporting was flooded with failed database connections, and I was baffled about what was causing it.

Yeah, fucking network operator didn't tell us anything about maintenance work. Fuck you, too.

-

So, the network I was on was blocking every single VPN site I could find, so I could not download Proton onto my computer without using some sketchy third-party site. Being left with no options and a tiny phone data plan, I used the one remaining option: an online Android emulator. In the emulator, running at like 180p, I once again navigated to Proton VPN, downloaded the Windows version, and uploaded it to Firefox Send. Opened Send on my computer, downloaded the file, installed it, and realized my error: I need access to the VPN site to log in.

In a panic, I went to my phone, ready to use what little was left of my data plan for security, and was met with no signal indoors. Fuck. New plan. I found an Xfinity wifi thing, and although connecting to a public network freaked me out, I decided to go for it because fuck it. I selected the one hour free pass, logged in, and it said I had already used it. What? When? So I created a new account, logged in, logged into Proton, and disconnected, and finally, I was safe.

Fuck the wifi provider for discouraging a right to a private internet and fuck the owner for allowing it. I realize how bad it was to enter my Proton account over Xfinity wifi, but I was desperate and desperate times call for desperate measures. I have now changed my password and have 2FA enabled.

-

How seniority is defined in a corporation is beyond me.

This guy is supposed to be Tier3, literally "advanced technical support". Taking care of network boxes, which are more or less Linux servers. The most knowledgeable person on the topic, for when Tier1 screws something up and it's not BAU/Tier2 can't fix it.

In the past hour he:

- attempted to 'cd' to a file and wondered why he got an error

- has no idea how to spell 'md5sum'

- syntax for 'cp' command had to be spelled out to him letter by letter

- has only a vague idea how SSH key setup works (can do it only if somebody prepares the commands for him)

- was confused how to 'grep' a string from a logfile

This is not something new and fancy he had no time to learn yet. These things have been the same for the past 20-30 years. I used to feel sorry for US guys getting fired due to their work being outsourced to us but that is no longer the case. Our average IT college drop-out could handle maintenance better than some of these people.

-

I need some opinions on Rx and MVVM. It's being done in iOS, but I think it's a fairly general programming question.

The small team I joined is using Rx (I've never used it before) and I'm trying to learn and catch up to them. Looking at the code, I think there are thousands of lines of over-engineered code that could be done so much simpler. From a non Rx point of view, I think we are following some bad practices; from an Rx point of view, the guys are saying this is what Rx needs to be. I'm trying to discuss this with them, but they are shooting me down saying I just don't know enough about Rx. Maybe that's true, maybe I just don't get it, but they aren't exactly explaining it, just telling me I'm wrong and they are right. I need another set of eyes on this to see if it is just me.

One of the main points is that there are many places where network errors shouldn't complete the observable (i.e. can't call onError), I understand this concept. I read a response from the RxSwift maintainers that said the way to handle this was to wrap your response type in a class with a generic type (e.g. Result<T>) that contained a property to denote a success or error and maybe an error message. This way errors (such as incorrect password) won't cause it to complete, everything goes through onNext and users can retry / go again, makes sense.

The guys are saying that this breaks Rx principles and MVVM. Instead we need separate observables for every type of response. So we have viewModels that contain:

- isSuccessObservable

- isErrorObservable

- isLoadingObservable

- isRefreshingObservable

- etc. (some have close to 10 different observables)

To me it is overkill to have so many streams, all of them frequently delivering only one message or none. I would have aimed for 1 observable that returns an object holding properties for each of these things and sends several messages. Is that not what streams are supposed to do? Then the local code can use filters as part of the subscriptions. The major benefit of having 1 is that it becomes easier to make it generic and abstract away, which brings us to point 2.
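To make the single-stream idea concrete, here is a rough sketch of it. It is plain Python rather than RxSwift, and `StateStream` is a tiny hand-rolled stand-in for a subject, not a real Rx type. `ViewState`, `flaky_fetch` and the other names are invented for illustration. Failures travel through `on_next` as ordinary values, so the stream never terminates and a retry just works, and each subscriber filters for the states it cares about.

```python
from dataclasses import dataclass
from typing import Generic, Optional, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class ViewState(Generic[T]):
    """One message type carrying everything a screen needs to render."""
    is_loading: bool = False
    value: Optional[T] = None
    error: Optional[str] = None

    @property
    def is_success(self) -> bool:
        return self.value is not None


class StateStream:
    """Hand-rolled stand-in for an Rx subject: only on_next, never terminates."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, on_next, where=lambda state: True):
        # 'where' plays the role of a filter operator on the subscription.
        self._subscribers.append((where, on_next))

    def on_next(self, state):
        for where, callback in self._subscribers:
            if where(state):
                callback(state)


class CarViewModel:
    def __init__(self, fetch_car):
        self.status = StateStream()
        self._fetch_car = fetch_car

    def load(self):
        self.status.on_next(ViewState(is_loading=True))
        try:
            self.status.on_next(ViewState(value=self._fetch_car()))
        except OSError as exc:
            # A failure is just another message, so the stream stays usable.
            self.status.on_next(ViewState(error=str(exc)))


# Hypothetical fetch that fails once, then succeeds.
attempts = iter([OSError("timeout"), "Golf"])


def flaky_fetch():
    result = next(attempts)
    if isinstance(result, Exception):
        raise result
    return result


view_model = CarViewModel(flaky_fetch)
shown, errors = [], []
view_model.status.subscribe(lambda s: shown.append(s.value),
                            where=lambda s: s.is_success)
view_model.status.subscribe(lambda s: errors.append(s.error),
                            where=lambda s: s.error is not None)
view_model.load()  # first attempt fails
view_model.load()  # retry succeeds on the same stream
print(shown, errors)  # ['Golf'] ['timeout']
```

The same `load` / `status` shape would repeat for every viewModel, which is what makes it a candidate for one shared generic protocol.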

Currently, due to each viewModel having different numbers of observables and methods of different names (but effectively doing the same thing) the guys create a new custom protocol (equivalent of a java interface) for each viewModel with its N observables. The viewModel creates local variables of PublishSubject, BehaviorSubject, Driver etc. Then it implements the protocol / interface and casts all the locals back as observables. e.g.

import RxSwift

// Car is the app's model type, defined elsewhere.
protocol CarViewModelType {
    var isSuccessObservable: Observable<Car> { get }
    var isErrorObservable: Observable<String> { get }
    var isLoadingObservable: Observable<Void> { get }
}

class CarViewModel {
    let isSuccessSubject = PublishSubject<Car>()
    let isErrorSubject = PublishSubject<String>()
    let isLoadingSubject = PublishSubject<Void>()

    // other stuff
}

extension CarViewModel: CarViewModelType {
    // Each property exposes its own subject as a read-only observable.
    var isSuccessObservable: Observable<Car> {
        return isSuccessSubject.asObservable()
    }

    var isErrorObservable: Observable<String> {
        return isErrorSubject.asObservable()
    }

    var isLoadingObservable: Observable<Void> {
        return isLoadingSubject.asObservable()
    }
}

This has to be created by hand, for every viewModel, of which there is one for every screen, and there are 40+ screens. This same structure is copy / pasted into every viewModel. As mentioned above I would like to make this all generic. Have a generic protocol for all viewModels to define 1 Observable, 1 local variable of generic type and handle the cast back automatically. The method to trigger all the business logic could also have its name standardised ("load", "fetch", "processData" etc.). Maybe we could also figure out a few other bits too. This would remove a lot of code, as well as making the code more readable (less messy), and make unit testing much easier. While it could never do everything automatically we could test the basic responses of each viewModel and have at least some testing done by default, and not have everything be so boilerplate-y and copy / paste in nature.

The guys think that subscribing to isSuccess and / or isError is perfect Rx + MVVM. But for some reason subscribing to status.filter(success) or status.filter(!success) is a sin of unimaginable proportions. Also the idea of multiple buttons and events all "reacting" to the same method named e.g. "load", is bad Rx (why if they all need to do the same thing?)

My thoughts on this are:

- To me it's identical in meaning and architecture, one way is just significantly less code.

- Let's say I agree it's not textbook, is it not worth bending the rules to reduce code?

- We are already breaking the rules of MVVM to introduce coordinators (which I hate, as they are adding even more unnecessary code), so why is breaking it to reduce code such a no-no?

Any thoughts on the above? Am I way off the mark or is this classic Rx?

-