Search - "graphs"

-

As a developer, sometimes you hammer away on some useless solo side project for a few weeks. Maybe a small game, a web interface for your home-built storage server, or an app to turn your living room lights on and off.

I often see these posts and graphs here about motivation, about a desire to conceive perfection. You want to create a self-hosted Spotify clone "but better", or you set out to make the best todo app for iOS ever written.

These rants and memes often highlight how you start with this incredible drive, how your code is perfectly clean when you begin. Then it all oscillates between states of panic and surprise, sweat, tears and euphoria, and ends in a disillusioned stare at the tangled mess you created, left to gather dust forever in some private repository.

Writing a physics engine from scratch was harder than you expected. You needed a lot of ugly code to get your admin panel working in Safari. Some other shiny idea came along, and you decided to bite, even though you feel a burning guilt about the ever growing pile of unfinished failures.

All I want to say is:

No time was lost.

This is how senior developers are born. You strengthen your brain, the calluses on your mind provide you with perseverance to solve problems. Even if (no, *especially* if) you gave up on your project.

Eventually, giving up is good: it's a sign of wisdom and flexibility to focus on the broader domain again.

One of the things I love about failures is how varied they tend to be, how they force you to start seeing overarching patterns.

You don't notice the things you take back from your failures, they slip back sticking to you, undetected.

You get intuitions for strengths and weaknesses in patterns. Whenever you're matching two sparse ordered indexed lists, there's this corner of your brain lighting up on how to do it efficiently. You realize it's not the ORMs which suck, it's the fundamental object-relational impedance mismatch existing in all languages which causes problems, and you feel your fingers tingling whenever you encounter its effects in the future, ready to dive in ever so slightly deeper.
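To make the "matching two sparse ordered indexed lists" intuition concrete, here is a minimal Python sketch of a merge-style match. It assumes both inputs are lists of (index, value) pairs sorted by a unique index; the name `match_sorted` and the sample data are hypothetical and only there for illustration.

```python
def match_sorted(left, right):
    """Return (index, left_value, right_value) for indexes present in both lists.

    Assumption: both inputs are sorted by index and each index appears once.
    """
    i = j = 0
    matches = []
    while i < len(left) and j < len(right):
        left_key, left_value = left[i]
        right_key, right_value = right[j]
        if left_key == right_key:
            matches.append((left_key, left_value, right_value))
            i += 1
            j += 1
        elif left_key < right_key:
            i += 1  # left is behind, advance it
        else:
            j += 1  # right is behind, advance it
    return matches


pairs = match_sorted(
    [(1, "a"), (4, "b"), (9, "c")],
    [(2, "x"), (4, "y"), (9, "z"), (12, "w")],
)
print(pairs)  # [(4, 'b', 'y'), (9, 'c', 'z')]
```

Each list is walked once, so the cost grows with the combined length of the two lists rather than with their product.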

You notice you can suddenly solve completely abstract data problems using the pathfinding logic from your failed game. You realize you can use vector calculations from your physics engine to compare similarities in psychological behavior. You never understood trigonometry in high school, but while building a deficient robotic Arduino abomination it suddenly started making sense.

You're building intuitions, continuously. These intuitions are grooves which become deeper each time you encounter fundamental patterns. The more variation in environments and topics you expose yourself to, the more permanent these associations become.

Failure is inconsequential, failure even deserves respect, failure builds intuition about patterns. Every single epiphany about similarity in patterns is an incredible victory.

Please, for the love of code...

Start and fail as many projects as you can.

-

A super creepy webcrawler I built with a friend in Haskell. It uses social media, various reverse image searches from images and strategically picked video/gif frames, image EXIF data, user names, location data, etc to cross reference everything there is to know about someone. It builds weighted graphs in a database over time, trying to verify information through multiple pathways — although most searches are completed in seconds.

I originally built it for two reasons: Manager walks into the office for a meeting, and during the meeting I could ask him how his ski holiday with his wife and kids was, or casually mention how much I would like to learn his favorite hobby.

The other reason was porn of course.

I put further development in the freezer because it's already too creepy. I'd run it on some porn gif, and after a long search it had built a graph pointing to a residence in rural Russia with pictures of a local volleyball club.

It's insane to think that intelligence agencies probably have much better gathering tools.

-

1. Humans perform best if they have ownership over a slice of responsibility. Find roles and positions within the company which give you energy. Being "just another intern/junior" is unacceptable, you must strive to be head of photography, chief of data security, master of updating packages, whatever makes you want to jump out of bed in the morning. Management has only one metric to perform on, only one right to exist: Coaching people to find their optimal role. Productivity and growth will inevitably emerge if you do what you love. — Boss at current company

2. Don't jump to the newest technology just because it's popular or shiny. Don't cling to old technology just because it's proven. — Team lead at the Arianespace contractor I worked for.

4. "Developing a product you wouldn't like to use as an end user, is unsustainable. You can try to convince yourself and others that cancer is great for weight loss, but you're still gonna die if you don't try to cure it. You can keep ignoring the disease here to fill your wallet for a while, but it's worse for your health than smoking a pack of cigs a day." — my team supervisor, heavy smoker, and possibly the only sane person at Microsoft.

5. Never trust documentation, never trust comments, never trust untested code, never trust tests, never trust commit messages, never trust bug reports, never trust numbered lists or graphs without clearly labeled axes. You never know what is missing from them, what was redacted away. — Coworker at current company.8 -

Resize an image in a document in Microsoft Word................ 500 lines realign, border width changes, 4 graphs deleted, tsunami in ur city, earth's orbit shifts by 2 meters. u want to die.

-

Pi Project

It's pinging Google and measuring the response time every three seconds, then graphs the result on the LED SenseHat. It's graphing wifi stability.

-
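For the Pi project above, the graphing step can be sketched without any hardware. This is a minimal sketch, assuming the 8x8 LED matrix of the Sense HAT and an arbitrary 200 ms ceiling; `latency_to_column` and `push_column` are hypothetical helper names, and the actual pinging and LED drawing are left out.

```python
import math


def latency_to_column(latency_ms, max_ms=200.0, rows=8):
    """Map a ping time to a bar height between 0 and `rows`.

    Assumption: None means the ping failed, which is drawn as an empty column.
    """
    if latency_ms is None:
        return 0
    clamped = min(max(latency_ms, 0.0), max_ms)
    return max(1, math.ceil(clamped / max_ms * rows))


def push_column(history, height, width=8):
    """Append the newest bar and keep only the last `width` columns."""
    return (history + [height])[-width:]


history = []
for sample in [5, 100, None, 500]:
    history = push_column(history, latency_to_column(sample))
print(history)  # [1, 4, 0, 8]
```

Every three seconds the newest height would be pushed and the eight remembered columns redrawn, which gives the scrolling graph effect.

-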

The exact moment when I understood what programming actually was.

I was having a hard time in my third year of college, trying to implement a recursive sudoku solver in Python. The teacher spent a lot of time trying to explain things to me like referential transparency, recursion, returning a new value instead of modifying the old one, and everything related. I just couldn't get it.

I was one of the least productive students; I couldn't even understand merge sort.

I was struggling with for loops and indexes, and then suddenly something clicked in my head, like someone flipped a switch, and I understood everything that had been explained to me, all at once. It was like enlightenment, like pure magic.

I had the sudoku solver implemented by the end of the lecture. Linked lists, hash maps, sets, social graphs: I got all of these implemented later, and it wasn't a problem anymore. I later got an A for my diploma.

Thank you @dementiy, you were the reason my career blasted off.

-

I’m a graphs designer, hardware expert, free software generator, marketing evangelist, networking wizard, and troubleshooter bot

-

Me: spends 2 hours on a script that converts graphs into colorblind friendly mode

Friend: why didnt you just grayscale the image

Me:

Me: uh

-
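The friend's suggestion in the rant above boils down to one weighted sum per pixel. A minimal sketch, assuming 8-bit RGB tuples and the common Rec. 601 luma weights (0.299, 0.587, 0.114), done in integer arithmetic; `to_gray` is a hypothetical name, not the script from the rant.

```python
def to_gray(pixel):
    """Convert an (r, g, b) tuple of 0-255 ints to one 0-255 gray level."""
    r, g, b = pixel
    # Rec. 601 weights scaled by 1000; adding 500 rounds to the nearest int.
    return (299 * r + 587 * g + 114 * b + 500) // 1000


print(to_gray((255, 0, 0)))      # 76
print(to_gray((0, 255, 0)))      # 150
print(to_gray((255, 255, 255)))  # 255
```

Grayscale is not a free win, though: two series whose colors have similar brightness collapse into nearly the same gray, so a dedicated colorblind-friendly palette can still be the better tool for graphs with many lines.

-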

This story starts over two years ago... I think I'm doomed to repeat myself till the end of time...

Feb 2014

[I'm thrust into the world of Microsoft Exchange and get to learn PowerShell]

Me: I've been looking at email growth and at this rate you're gonna run out of disk space by August 2014. You really must put in quotas and provide some form of single-instance archiving.

Management: When we upgrade to the next version we'll allocate more disk, just balance the databases so that they don't overload in the meantime.

[I write custom scripts to estimate mailbox size patterns and move mailboxes around to avoid uneven growth]

Nov 2014

Me: We really need to start migration to avoid storage issues. Will the new version have Quotas and have we sorted out our retention issues?

Management: We can't implement quotas, it's too political and the vendor we had is on the nose right now so we can't make a decision about archiving. You can start the migration now though, right?

Me: Of course.

May 2015

Me: At this rate, you're going to run out of space again by January 2016.

Management: That's alright, we should be on track to upgrade to the next version by November so that won't be an issue 'cos we'll just give it more disk then.

[As time passes, I improve the custom script I use to keep everything balanced]

Nov 2015

Me: We will run out of space around Christmas if nothing is done.

Management: How much space do you need?

Me: The question is not how much space... it's when do you want the existing storage to last?

Management: October 2016... we'll have the new build by July and start migration soon after.

Me: In that case, you need this many hundreds of TB

Storage: It's a stretch but yes, we can accommodate that.

[I don't trust their estimate so I tell them it will last till November with the added storage but it will actually last till February... I don't want to have this come up during Xmas again. Meanwhile my script is made even more self-sufficient and I'm proud of the balance I can achieve across databases.]

Oct 2016 (last week)

Me: I note there is no build and the migration is unlikely since it is already October. Please be advised that we will run out of space by February 2017.

Management: How much space do you need?

Me: Like last time, how long do you want it to last?

Management: We should have a build by July 2017... so, August 2017!

Me: OK, in that case we need hundreds more TB.

Storage: This is the last time. There's no more storage after August... you already take more than a PB.

Management: It's OK, the build will be here by July 2017 and we should have the political issues sorted.

Sigh... No doubt I'll be having this conversation again in July next year.

On the up-shot, I've decided to rewrite my script to make it even more efficient because I've learnt a lot since the script's inception over two years ago... it is soooo close to being fully automated and one of these days I will see the database growth graphs produce a single perfect line showing a balance in both size and growth. I live for that Nirvana.

-

This happens so often!

*Lecturer teaching using a ppt*

A slide with literally one basic understandable sentence on it : unnecessarily discussed for 20 min.

A slide with actual important stuff, graphs, definitions, charts etc. : Skipped in 5 sec 😑

-

So yesterday our team got a new toy. A big ass 4k screen to display some graphs on. Took a while to assemble the stand, hang the TV on that stand, but we got there.

So our site admin gets us a new HDMI cable. A colleague told us his lappy supports huge screens, as he used to plug his home TV into his work lappy while WFHing. He grabs that HDMI, plugs one end into the screen, the other into his lappy and

.. nothing...

Windows does not recognize any new devices connected. The screen does not show any signs of any changes. Oh well..

Site IT admin installs all the updates, all the new drivers, upgrades BIOS and gives another try.

Nothing.

So naturally the cable is to blame. The port is working for him at home, so it's sure not port's fault. Also he uses his 2-monitor setup at work, so the port is 100% working!

I'm curious. What if..... While they are busy looking for another cable, I take that first one, plug it into my Linux (pretty much stock LinuxMint installation w/ X) lappy,

3.. 2.. 1..

and my desktop is now on the big ass 4k fat screen.

Folks. Enough bitching about Linux being picky about the hardware and Windows being more user friendly, having PnP and so. I'm not talking about esoteric devices. I'm talking about BAU devices that most of home users are using. A monitor, a printer, a TV screen, a scanner, wireless/usb speaker/mouse/keyboard/etc...

Linux just works. Face it

P.S. today they are still trying to make his lappy work with that TV screen. No luck yet.

-

The team had just created an analytics dashboard web application for a client. During the demo, client asks: "Can we have a download button that saves all the graphs in a powerpoint, 1 graph per slide with a title?"

-

This spring I was working on a library for an algorithms class at uni with some friends, and one of the algorithms was extremely slow. We were using Python to study graphs of roads on a map, and a medium example took about 6-7h of computation to finish (I never actually waited that long, so maybe more).

I got so pissed off at that code that I left the lab and went to eat. Once I got back I rewrote just the god-damned data structure we were using and the time got down to 300ms. Milliseconds!

Lessons learned:

- If you're pissed, go take a walk; when you come back it will be much easier;

- Don't generalize a library too much: the data structure I had written before was optimized for a different kind of usage and was complete garbage for this one;

- Never fucking use frozen sets in Python unless you really need them, they're so fricking slow!

-

Creating an anonymous analytics system for the security blog and privacy site together with @plusgut!

It's fun to see a very simple API come alive with querying some data :D.

Big thanks to @plusgut for doing the frontend/graphs side on this one!

-

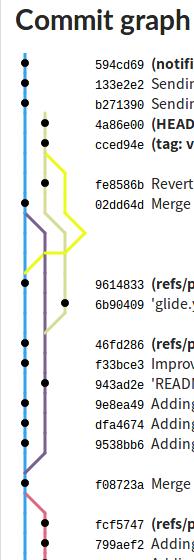

Oh boy, I think I need a new pair of pants.

GitLab (!GitHub) has improved their CI/CD pipelines to allow you to chain jobs 🥳🤤

https://about.gitlab.com/2019/08/...

-

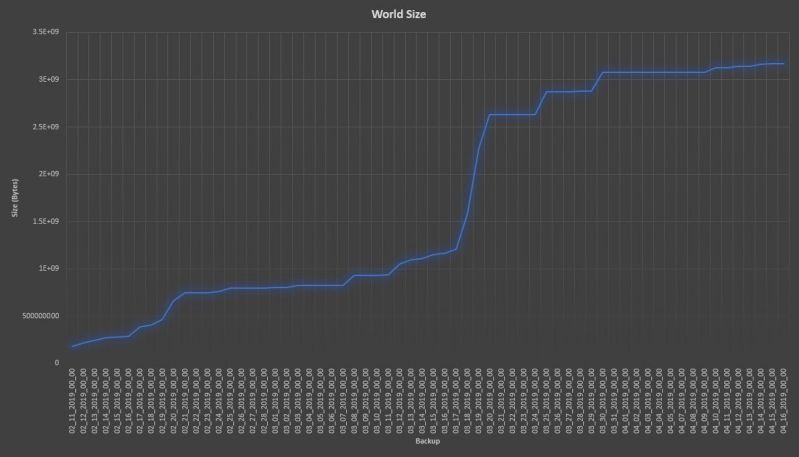

I made a survival Minecraft server for my friends and me a little while ago. We restarted the world today and since I love graphs, here’s a graph of the size of our world save over time!

-

I've optimised so many things in my time I can't remember most of them.

Most recently, something had to be the equivalent of `"literal" LIKE column` with a million rows to compare. It would take around a second on average to look up each literal, for a service that needs to be high load and low latency. This isn't an easy case to optimise; many people would consider it impossible.

It took me a couple of hours to reverse engineer the data and write a few hundred lines that would look it up in 1ms on average, with the worst possible case being very rare and not too far from this.

In another case there was a lookup of arbitrary time spans that most people would not bother to cache because the input parameters are too short lived and variable to make a difference. I replaced the 50000+ line application acting as a middle man between the application and database with 500 lines of code that did the lookup faster and was able to implement a reasonable caching strategy. This dropped resource consumption by at least a factor of ten. Misses were cheaper and it was able to cache most cases. It also involved modifying the client library in C to stop it unnecessarily wrapping primitives in objects for the high level language, which was causing it to consume excessive amounts of memory when processing huge data streams.

Another system would download a huge data set for every point of sale constantly, then parse and apply it. It had to reflect changes quickly but would download the whole dataset each time, containing hundreds of thousands of rows. I whipped up a system so that a single server (barring redundancy) would download it in a loop, parse it using C which was much faster than the traditional interpreted language, then use a custom data differential format, TCP data streaming protocol, binary serialisation and LZMA compression to pipe it down to points of sale. This protocol also used versioning for catchup and differential combination for additional reduction in size. It went from being 30 seconds to a few minutes behind to being able to keep up to within a second of changes. It was also using so much bandwidth that it would reach the limit on ADSL connections then get throttled. I looked at the traffic stats after and it dropped from dozens of terabytes a month to around a gigabyte or so a month for several hundred machines. From the drop in the graphs you'd think all the machines had been turned off. It could now happily run over GPRS or 56K.

I was working on a project with a lot of data and noticed these huge tables and horrible queries. The tables were all the results of queries. Someone wrote terrible SQL, then to optimise it ran it in the background with all possible variable values and stored the results of joins and aggregates into new tables. On top of those tables they wrote more SQL. I wrote some new queries and query generation that wiped out thousands of lines of code immediately and operated on the original tables, taking things down from 30GB and rapidly climbing to a couple GB.

Another time a piece of mathematics had to generate all possible permutations and the existing solution was factorial. I worked out how to optimise it to run in n*n, which believe it or not made the world of difference. Went from hardly handling anything to handling anything thrown at it. It was nice trying to get people to "freeze the system now".

I build my own frontend systems (admittedly rushed) that do what angular/react/vue aim for but with higher (maximum) performance including an in memory data base to back the UI that had layered event driven indexes and could handle referential integrity (overlay on the database only revealing items with valid integrity) or reordering and reposition events very rapidly using a custom AVL tree. You could layer indexes over it (data inheritance) that could be partial and dynamic.

So many times have I optimised things on automatic just cleaning up code normally. Hundreds, thousands of optimisations. It's what makes my clock tick.

-

In high school I took a special major in which we learned various computer and mathematics skills such as neural networks, fractals, etc.

One of the teachers there, who was also a mentor to me, is a physician. He taught us Python, which he didn't know very well (he wasn't that bad either), and science, which was his true passion.

My end project was to try to predict the stock market using a simple neural network and daily graphs of 50 NSDQ companies. The result reached 51% prediction on average, which was awful, but I couldn't forget the happiness and curiosity working on this project made me feel.

Now, 5 years later, I have a BSc and am finishing an MSc in Computer Science, and I sincerely want to thank this mentor for giving me the guts and will to accomplish this.

-

Remove all the outdated and unwanted topics which were taught during Indus Valley civilization like: 8080 microprocessor, Java 6, Software Testing principles etc. And add more interesting and realistic topics like: Algorithm design, graphs and other data structures, Java 8 (at least for now), big data, Basics about AI, etc.

-

HR: you didn’t write in your job experience that you know kubernetes and we need people who know it.

Me: I wrote k8s

HR: What’s that ?

…

Do you know docker ?

Do you know what docker is ?

Do you use cloud ?

Can you read and write ?

Are you able to open the door with your left hand ?

What if we cut your hands and tell you to open the doors, how would you do that ?

What are your salary expectations?

Do you have questions, I can’t answer but I can forward them. Ask question, ask question, questions are important.

What is minimal wage you will agree to work ?

You wrote you worked with xy, are you comfortable with yx ?

We have fast hiring process consisting of 10 interviews, 5 coding assessments, 3 talks and finally you will meet the team and they will decide if you fit.

Why do you want to work … here ?

Why you want to work ?

How dare you want to work ?

Just find work, we’re happy you’re looking for it.

What databases you know ?

Do you know nosql databases ?

We need someone that knows a,b,c,d….x,y,z cause we use 1,2,3 … 9,10.

We need someone more senior in this technology cause we have more junior people.

Are you comfortable with big data?

We need someone who spoke on conference cause that’s how we validate that people can speak.

I see you haven’t used xy for a while ( have 5 years experience with xy ) we need someone who is more expert in xy.

How many years of experience you have in yz ??? (you need to guess how many we want cause we look for a fortune teller )

Not much changed in job hunting, taking my time to prepare to leetcode questions about graphs to get a job in which they will tell me to move button 1px to the left.

Need to make up some stories about how I was bad person at work and my boss was angry and told me to be better so I become better and we lived happy ever after. How I argued with coworkers but now I’m not arguing cause I can explain. How bad I was before and how good I am now. Cause you need to be a better person if you want to work in our happy creepy company.

Because you know… the tree of DOOM… The DOMs day.

-

Had a LinkedIn recruiter contact me a few months ago. I usually get one of these a week at minimum, and usually more frequently the moment I start a new position. I hate that!

Anyway, story and rant:

The recruiter sent me a position that was pretty good, lots of benefits, not too far to drive, some remote days. With the usual list of responsibilities that they themselves dont know what half of them are but put them on anyway, I would automate those anyway if I wanted to work there.

All looks great, I ask if they can send me more details and the budget the company has for the position.

This was for a Senior position so I thought they would know what industry standard is.

The recruiter replies with a budget: $2000

I actually couldn't believe that they thought that was acceptable amount of money for the amount of responsibilities they wanted this new senior guy to do, no wonder the previous guy left.

I respond and told her that the amount is extremely low for what they want and I dont think they will find someone with the skills they need at that amount. I would be willing to talk for a minimum of $4000 and thats not guaranteed until I can go for a formal interview to find out exactly what the company needs.

The recruiter's reply was probably the rudest anyone has ever been to me online, lol! She insists it's industry standard and any Senior would be lucky to get such a great paycheck, the company has been in business for years and their developers have always been happy and paid industry standards.

I respond again and tell her that I'm getting $3800 at this small company where I currently am, and if the "international company with clients all over the world" wants to have my skill set, why is it that they can't pay premium salaries!? I also send her the graphs for my country showing the current industry standards for salaries in my industry.

She never replied, but I kept tabs on the company she was recruiting for. They are still looking for a senior dev, its been 8 months now and no one has applied.

I am so happy more developers are standing up for themselves and not taking agencies bullshit with low salaries, crazy overtime and bad technical specs.

Note: Amounts are made up, was just to show comparison.

-

Saturday/Me: "Sure buddy I'll make a website for your company"

Monday/Him: "talked with 10 venues today, told them we'd be live Friday. How's the project going?"

... my first Django project and I'm also looking for new jobs/in school

-

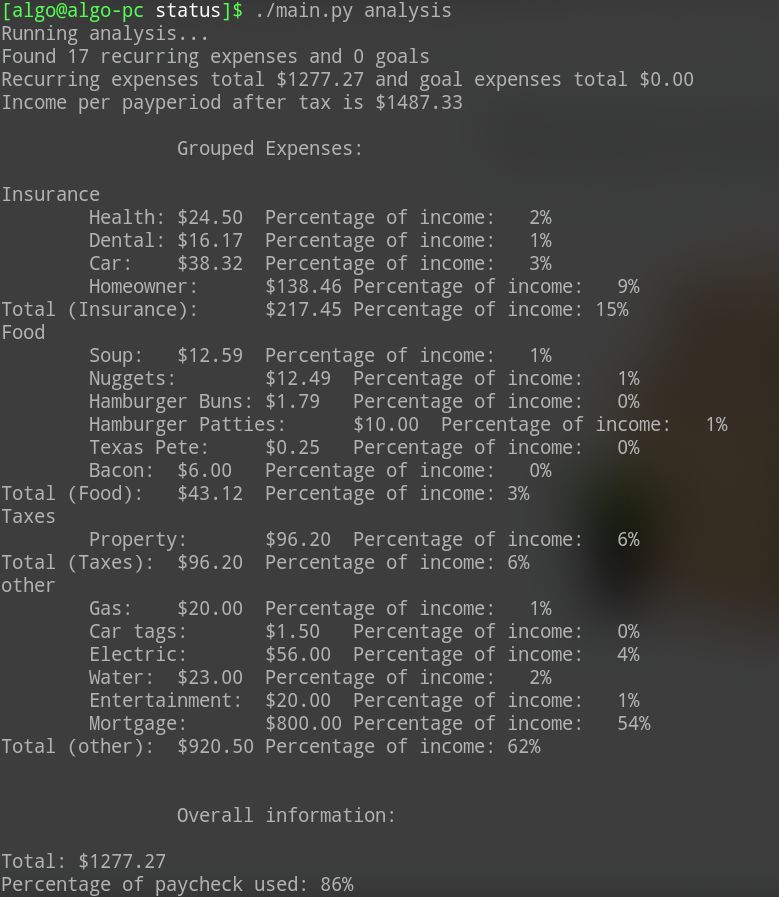

Here is the first step of my software that will keep track of "what bills am I currently paying?" throughout your day. This is analysis mode, you can specify your expenses and income (after tax) in a json file and it will build this report for you.

Here's an example with relatively realistic figures

The next step is to visualize it and have it render some charts and graphs. Yay!

-

At work the other day...

Guy: "Oh hey I was thinking if you could help me with an application to visualize some data."

Me: "Ooookay...what did you have in mind?"

Guy: "I think we have XML files that could be turned into graphs...oh and we could add some trend lines. (Getting more excited) And maybe we could supplement it with live data...oh hey and maybe we could add real time alerts via email..."

Me: *thinks to self...there is no way in hell I am starting to work on something that he is literally coming up with requirements as he's talking* "I need specifics...so go take some time, think it through and get back to me with concrete details and examples."

Guy: "Ok. That should be enough to get you started for now at least."

That would be a big fuck no, good sir. Haven't started and won't start it. He has never mentioned it to me again since then.

-

Hey !

A big question:

Assume we've got an Android app which graphs a sound file.

The point is: the user is able to zoom in/out, so the whole data must be read in the beginning, but as the file gets longer, the load time increases.

What can I do to prevent this?

-
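One common approach to the sound-graph question above is to precompute a coarse min/max overview of the samples, draw that when zoomed out, and only read the raw samples for the visible range when zoomed in. The sketch below is in Python for brevity rather than Android code, and it is an assumption about what would fit this app, not a tested solution; `build_overview` and the bucket size are hypothetical.

```python
def build_overview(samples, bucket_size):
    """Reduce a sample list to one (min, max) pair per bucket.

    Drawing a vertical line from min to max per bucket keeps the peaks
    visible while touching far fewer points than the raw data.
    """
    overview = []
    for start in range(0, len(samples), bucket_size):
        chunk = samples[start:start + bucket_size]
        overview.append((min(chunk), max(chunk)))
    return overview


print(build_overview([0, 3, -2, 5, 1, 1, -4], 3))
# [(-2, 3), (1, 5), (-4, -4)]
```

The overview can be built once in the background and cached next to the file, possibly at several bucket sizes, so opening the graph does not have to wait for the whole file to be processed.

-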

Okay, first rant here.

Spent most of my morning searching for a js file that was supposed to build some graphs in a report page in this legacy system (still in active development) just to find it embedded inside a random .php file being included inside a wall of if-elses (that shit has around 100 lines) on the index.php (that somehow manages to route all the nonsense that's going on there).. was it really that difficult to make it a proper .js file? and actually import it on the page that is using it? c'mon...

-

When I found out about the matplotlib function xkcd. "with plt.xkcd():".

Everything is so much better when you can plot the graphs for school in xkcd style.

-

So I found this consulting job a while ago thinking that some extra cash while studying would be nice to have.

I meet with the guy, a researcher trying to start a business up, good for him I think, maybe we'll hit it off, continue working, why not? Except he has no clue how to write working code, all he ever did was writing matlab scripts he says, thats why he hired me he says.

Okay, fine, you do your job I do mine.

He hands me the contract, its about comparing two libraries, finding out which one is better suited for his job, cool, plots and graphs everywhere.

Except this is an unpaid job. YOU WHAT?! It's a test job. FINE. At least it'll look good on my resume.

We talk about the paid part where I'm supposed to scale the two libraries, looks good, as expected from an ML engineering perspective. It comes to payment. The dude has no idea how taxes work, says he has a set amount to pay and not a penny more. I explain with examples how taxes are paid, how you get reimbursed for them and so on. Won't budge. Screws me over.

Opens the door for other jobs I think, he'll learn next time I think and take the job.

Fast forward a month, 90% of the job done, he adds a third thing to compare. Gives a github link to a repo with 2 authors, last commit a year ago. There are links to a 404, claiming compiled jars. Fuck.

Not my first rodeo, git clone that shit, make compile, the works. The thing uses libs that ain't in no repo, that would be too easy. Run, error, find lib, remake all the things, rinse repeat.

The scripts they got have hardcoded paths and filenames for 2 year old binaries, remake that shit.

It works, at least I get a prompt now. Try the example files they got, no luck, some missing unlinked binary somewhere, but not a name mentioned. Cross reference the shit outta the libs mentioned on readme, find the missing shit, down it.

Available versions are too new, THE MOLDING NUTCRACKER uses some bug in an old version of the lib.

I give up. Fuck this. This ain't worth the money OR time. Wanker...

-

So a follow up to my last Mathematica rant:

I have a JSON file made up of arrays of arrays of arrays with the outermost layer containing ~10,000 arrays.

So, my graphing works perfectly the first time for one of my graphs. I fix another unrelated graph, graph the whole file, and suddenly the first one stops working. The file read-in only reads in the array {2,13}. I double checked the contents of the file, they were as large as always.

Then, I proceed to look for bugs, find none, and decide to restart Mathematica. This doesn't help.

So I go back, find no bugs, and eventually am so fed up that I just restart Mathematica again, no changes.

Suddenly, the array reads in fine. Waiting for the graphs to come out but I think they'll be fine.

WTF Mathematica? Why must I restart TWICE to make bugs caused by your application go away?

-

Just right now:

Management: How's the feature going?

Me: The backend is done. Here's how the front end looks so far...

Management: What?! No! Where will they input the units? What about the input#2? and the graphs?! You were just not going to put that?

Me: ... this is how it's lookin so far. The deadline isn't until next week. I'm actually pretty ahead of schedule.

Management: But what about button #2 and #3? And input #4?

Me: Yes, it's all planned. It's not done yet. You asked me how I'm doing so far. Of course I haven't finished.

-

SVN.

Nothing good comes with GUI for version control except for the graphs of branches. And this little tortoise thinks it's the shit.

-

I love Unix systems because everything goes smoothly most of the time but today... Fuck me... I just wanted to see how many lines my script was with "wc -l" but I couldn't remember "m" or "w". 180 degrees separated despair and monotony, although I didn't know it yet. I did "mc -l" first and midnight logged an empty ftp buffer to my file. Goodbye Thursday's and Friday's work :) I should commit more often.

-

I was talking to my non-tech gf about how a colleague of mine didn't understand priority queues and showed her an example. During the explanation I fucked up the example and duplicated the priorities of 2 values, and they came out in an unexpected order. She wanted to find the logic in it and blamed the computer for being dumb, but it has been ~45 minutes, and she has Wikipedia pages about binary trees & linked lists open as well as simple graphs visualising both, armed with pen and paper, trying to understand how it all works..

Achievement Earned?

P.S I am either creating a monstrosity (Frankenstein style) or recruiting a fresh mind to our ranks, either way I am proud af 😢😊😍

-
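The duplicate-priority surprise in the rant above has a usual explanation: a binary heap makes no promise about the order of items with equal priority. A minimal sketch, assuming Python's standard `heapq` module and a hypothetical `StablePriorityQueue` wrapper that adds an insertion counter as a tie-breaker (the rant does not say which language or queue was used).

```python
import heapq
import itertools


class StablePriorityQueue:
    """Min-priority queue where equal priorities come out in insertion order."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # increasing tie-breaker

    def push(self, priority, item):
        # Entries compare by priority first, then by insertion number,
        # so the item itself never takes part in the comparison.
        heapq.heappush(self._heap, (priority, next(self._counter), item))

    def pop(self):
        priority, _, item = heapq.heappop(self._heap)
        return item

    def __len__(self):
        return len(self._heap)


queue = StablePriorityQueue()
for priority, item in [(2, "b"), (1, "a"), (2, "c"), (1, "d")]:
    queue.push(priority, item)
print([queue.pop() for _ in range(len(queue))])  # ['a', 'd', 'b', 'c']
```

Without the counter, ties would be decided by comparing the items themselves or, in other heap implementations, by wherever the items happen to sit in the tree, which is likely the "dumb computer" behaviour she ran into.

-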

*lunch time*

Designer: we want to put these graphs on the landing page.

Me: ok, well they are pretty simple I can recreate them in about an hour with JS, and they will look better and be inter active...

PM: we don't have time for that just use the images from the mock up

Me: ok...

*5hrs & dozens of emails later...*

PM: the graph doesn't look quite right, can we just build it in code so it looks better? Oh and we need to have it to the client to review by end of day...

Me: ...

-

Of course the shouting episodes all happened during the era I was doing WordPress dev.

So we were a team of consultants working on this elephant-traffic website. There were a couple of systems for managing content on a more modular level, the "best" being one dubbed MF, a spaghettified monstrosity that the 2 people who joined before me had developed.

We were about to launch that shit into production, so I was watching their AWS account, being the only dev who had operational experience (and not afraid to wipe out that macos piece of shit and dev on a real os).

Anyhow, we enable the thing, and the average number of queries per page load instantly jumps from ~30 (even vanilla WP is horrible) to 1000+. Instances are overloaded and the ASG group goes up from 4 to 22. That just moves the problem elsewhere as now the database server is overwhelmed.

Me: we have to enable database caching for this thing *NOW*

Shitty authors of the monstrosity (SAM): no, our code cannot be responsible for that, it's the platform that can't handle the transition.

Me: we literally flipped a single switch here and look at the jump in all these graphs.

SAM: nono, it's fine, just add more instances

Me: ARE YOU FUCKIN SERIOUS?

Me: - goes and enables database caching without any approvals to do so, explaining to mgmt. that failure to do so would impair business revenue due to huge loading times, so they have to live with some data staleness -

SAM: Noooo, we'll show you it's not our code.

SAM: - pushes a new release of the monstrosity that makes DB queries go above 2k / page load -

...

Tho on the bright side, from that point on I focused exclusively on performance, was building a nice fragment caching framework which made the site fly regardless of what shitty code was powering it, tuned the stack to no end and learned a ton of stuff in the process which allowed me to graduate from the tar pit of WP development.5 -

Written coding test, first question :

Form the minimum spanning tree of the given graph using Kruskal's algorithm.

Plot twist: No weights given. Assume unweighted graph. -
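For what it's worth, the question is still answerable: if every edge is treated as weight 1, any spanning tree is a minimum one and Kruskal's algorithm just picks edges that don't close a cycle. A rough sketch under that assumption (the function name `kruskal` and the edge format are illustrative choices, not from the test):

```python
def kruskal(num_vertices, edges):
    """Kruskal's algorithm; edges are (u, v) or (u, v, weight) tuples.

    A missing weight is treated as 1, which is the "unweighted" assumption.
    Returns the chosen tree edges as (u, v, weight).
    """
    parent = list(range(num_vertices))

    def find(x):
        # Walk up to the root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    weighted = [(e[2] if len(e) == 3 else 1, e[0], e[1]) for e in edges]
    tree = []
    for weight, u, v in sorted(weighted):
        root_u, root_v = find(u), find(v)
        if root_u != root_v:  # edge joins two components, so no cycle
            parent[root_u] = root_v
            tree.append((u, v, weight))
    return tree


if __name__ == "__main__":
    print(kruskal(4, [(0, 1), (1, 2), (0, 2), (2, 3)]))
```

With equal weights the sort does nothing useful, so the result depends only on edge order, but it always has n - 1 edges for a connected graph.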

Winter break university projects:

Option A: implement writing and reading floating point decimals in Assembly (with SSE)

Option B: reuse the reading and writing module from Option A, and solve a mathematical problem with SSE vectorization

Option C: Research the entirety of the internet to actually understand Graphs, then use Kruskal's algorithm to decide whether a graph is whole (connected, no separated groups) or not - in C++

Oh, and BTW there's one week to complete all 3...

I don't need life anyways... -
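Option C is smaller than it sounds. The union-find part of Kruskal's algorithm is enough to tell whether a graph is "whole": every edge that joins two separate groups reduces the group count by one, and the graph is connected when one group is left. The assignment wants C++; this is only a Python sketch of the idea, and `is_connected` plus its argument format are my own assumptions:

```python
def is_connected(num_vertices, edges):
    """True if the undirected graph has at most one connected component."""
    parent = list(range(num_vertices))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    components = num_vertices
    for u, v in edges:
        root_u, root_v = find(u), find(v)
        if root_u != root_v:
            parent[root_u] = root_v
            components -= 1  # two groups merged into one
    return components <= 1


if __name__ == "__main__":
    print(is_connected(4, [(0, 1), (1, 2), (2, 3)]))
    print(is_connected(4, [(0, 1), (2, 3)]))
```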

A few years ago my boss held a brainstorming meeting to go over features for an internal reporting app. I brought up we should have related business news stories scroll on the page header like Fox business or something. He laughed and said sure. Two things happened after that.

1. Found out the marquee tag still works in chrome.

2. Yeah you bet I put that shit in there.

Anyways a meeting was held a few days after where my boss chewed me out for actually doing it. He showed the app to his boss and got laughed at by his leadership team when they saw news headlines scroll over analytics graphs.

After writing this I realize this is more his embarrassment than mine. Have a great Tuesday fam.7 -

So we work on a VMware network. And besides the terrible network lag, the specs of that VM are one core (possibly one thread of a Xeon core) and 3 GB RAM.

What do we do on it?

Develop heavy ass Java GUI applications on Eclipse. It lags in every fucking task. Can't even use latest versions of browsers because the VM is a fucking snail ass piece of shit!

So, in the team meeting I proposed to my manager: hey, our productivity is down because of this POS VM. Please raise the specs!

He said mere words won't help. He needs proof.

Oh, you need proof? Sure. I coded up a script that all of my team ran for a week. It generates a CSV with CPU usage, mem left, and time, every 10 min. I used this data to show some motherfucking graphs because apparently all they understand is graphs and shit.

So there you go. Have your proof! Now give me the specs I need to fucking work!3 -
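A script like that can be tiny. Here is a hedged sketch of the logging loop only; the original script isn't shown, so `log_samples`, the column names and the `read_metrics` callback are all assumptions. In real use `read_metrics` could wrap something like the third-party psutil package (`psutil.cpu_percent()` and `psutil.virtual_memory().available`), which is left out here so the sketch stays stdlib-only:

```python
import csv
import io
import time


def log_samples(read_metrics, out_file, samples, interval_seconds=600,
                sleep=time.sleep):
    """Write `samples` rows of (timestamp, cpu, free memory) as CSV.

    read_metrics: callable returning (cpu_percent, mem_free_mb); hypothetical,
    supplied by the caller. interval_seconds defaults to 10 minutes.
    """
    writer = csv.writer(out_file)
    writer.writerow(["timestamp", "cpu_percent", "mem_free_mb"])
    for i in range(samples):
        cpu, mem = read_metrics()
        writer.writerow([time.strftime("%Y-%m-%d %H:%M:%S"), cpu, mem])
        if i < samples - 1:
            sleep(interval_seconds)  # no pointless wait after the last row


if __name__ == "__main__":
    buffer = io.StringIO()
    # Fake reader and zero interval, just to show the output shape.
    log_samples(lambda: (97.5, 212), buffer, samples=2, interval_seconds=0)
    print(buffer.getvalue())
```

The resulting file opens directly in any spreadsheet tool, which is where the manager-friendly graphs come from.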

@dfox I recently started playing around with Neo4j and find it really fun to work with. Would there be any way to get hold of parts of the devRant graph/graphs? Not private or secret stuff of course - only public parts of rants, tags and users. It would be fun to play around with and analyze.

-

A QA personal voice assistant that runs locally without the cloud. It’s like a never-ending project. I look at it from time to time and time passes by. Chat bots arrived, some decent voice algorithms appeared. There is less and less stuff to code since people are making a lot of progress in that area.

I want to save notes using voice, search through them, hear them, find some stuff in public data sources like Wikipedia and also hear that stuff without using hands, read news articles and stuff like that.

I want to spend more time on the math and core algorithms related to machine learning and deep learning.

The problem is that by the time I remember how basic network layers and error correction algorithms work, or how a particular deep learning algorithm is constructed and why, a week has already passed and I don’t remember where I started.

I’ve done it a couple of times already and every time I remember more than before, but understanding the core requires me to sit down with pen and paper and math problems, and I don’t have time for that.

Now that I’m thinking about it - maybe I should write it down somewhere in an organized way. Get back to blogging and write articles about what I learned. This would take twice the time but maybe it would help me not forget.

I’m mostly interested in nlp, tts, stt. Wavenet, tacotron, bert, roberta, sentiment analysis, graphs and qa stuff. And now crystallography cause crystals are just organized graphs in 3d.

Well maybe if I’m lucky I retire in the next decade or at least take a year or two years off to have plenty of time to finish this project. -

Today I was trying to solve a problem with my graph algorithm (find the shortest path) but I was not able to get almost anything done, so I asked a friend for help.

He tried to help me, but after a long line of reasoning we both stopped understanding what we were doing.

After a while, I decided to just run the code at random and... it worked! And that's not all, it worked better than what we were trying to do!

What a lucky shot😎

(Sorry if there are some errors, English isn't my first language) -

I suspected that our storage appliances were prematurely pulling disks out of their pools because of heavy I/O from triggered maintenance we've been asked to automate. So I built an application that pulls entries from the event consoles in each site, from queries it makes to their APIs. It then correlates various kinds of data, reformats them for general consumption, and produces a CSV.

From this point, I am completely useless. I was able to make some graphs with Gnumeric, LibreOffice Calc, and (after scraping out all the identifying info) Google Sheets, but the sad truth is that I'm just really bad at desktop office document apps. I wound up just sending the CSV to my boss so he can make it pretty. -

My Precalculus teacher has such overstrict rules on showing work.

1. On tests, degree signs must be shown in all work. This wouldn't be outrageous except that if the answer is right but a single degree sign is missing in the mandated shown work, the entire question is wrong even with a correct final answer because the "answer doesn't match up with the work".

2. We must show work in the exact form mandated in class. If even a single step of work is missing or wrong on even one homework problem, say, no credit, even if the entire rest of the sheet is correct and complete.

3. Never applied to me, but if a homework problem cannot be solved by a student, they must write a sentence describing how far they got and what wasn't doable, or no credit on the entire homework. Did I mention it is checked daily and is 2 unweighted points with 50-100 point tests?

4. On graphing calculator problems, one had to draw a rectangle representing the calculator screen, even for solving systems of equations without explicitly drawing graphs as part of the problem, because otherwise she had "no proof that a calculator was used". It isn't that hard to fake, and it was quite stupid.

5. Reference triangles were required even when completely unnecessary or the answers were assumed copied, even if a better method was shown in work.

And much, much more!4 -

This utilization shit is stupid! Seriously man what the hell! Yes yes it's an important number yes yes I don't even care. You want me to increase my utilization and at the same time be wary of the budgets, which are unrealistically tight to begin with. It's freaking impossible! Who comes up with this shit?

You know what? Half of this shit ain't even my fault! A project was set for 200 hours and a guy wasted half of that trying to figure out just HOW TO CONNECT TO THE API! Like the guy only wrote 30 lines in 100 HOURS! ARE YOU FREAKING KIDDING ME! THEN YOU PASS OVER THE PROJECT TO ME AND SAY YOU HAVE ONLY 100 HOURS LEFT TO CONNECT TO THE API, GET THE DATA (WHICH BTW DOESNT EVEN EXIST), PARSE IT, AND THEN CREATE GRAPHS AND A FULLY FUNCTIONAL SOFTWARE, WITH A USER INTERFACE THAT SHOULD RUN AS AN EXECUTABLE!!!! ME? ALONE?

MAN FUCK YOU!2 -

I'm working on a pretty Plotly map. To learn how to use bubble graphs, I've copy-pasted the example and modified it.

The example is ebola occurrence by year, Plague Inc style. So naturally I need to add some countries. For testing, of course.

Ebola for everyone! Yay.

I like my job.2 -

When you get to work with the Analytics side of the warehouse and one of the guys wants you to learn D3.js to turn a CSV into an HTML site.

Me: hell Yeah can't wait to make crazy circle graphs and line graphs for everyone in analytics

Analytics: Oh, we just need you to take the CSV files and copy the same Excel format to an HTML site. So, table, table, table, table.

Me: so...... No visualization graph

Analytics: No. 4

4 -

Wanna develop an Android app which plays and graphs heart sounds sent by a Bluetooth module!

Any helpful links? -

Just implemented A* and Dijkstra to get into pathfinding with graphs. Really hyped about this! TIL that Dijkstra is perfect to find the quickest subway route in my city 😏2

-
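Re the subway route rant above: for anyone wanting to try the same thing, Dijkstra over a station graph fits in a few lines. A minimal sketch, assuming the network is a dict of dicts with ride times in minutes (the station names, numbers and the name `shortest_travel_time` are all invented for illustration):

```python
import heapq


def shortest_travel_time(graph, start, goal):
    """Dijkstra's algorithm; graph maps station -> {neighbor: minutes}.

    Returns the smallest total ride time, or None if goal is unreachable.
    Edge weights must be non-negative.
    """
    best = {start: 0}
    queue = [(0, start)]
    while queue:
        minutes, station = heapq.heappop(queue)
        if station == goal:
            return minutes
        if minutes > best.get(station, float("inf")):
            continue  # stale queue entry, a shorter route was found already
        for neighbor, ride in graph.get(station, {}).items():
            candidate = minutes + ride
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor))
    return None


if __name__ == "__main__":
    network = {
        "Central": {"Museum": 4, "Harbor": 1},
        "Harbor": {"Museum": 2},
        "Museum": {"Airport": 5},
        "Airport": {},
    }
    print(shortest_travel_time(network, "Central", "Airport"))
```

A* is the same loop with a heuristic added to the queue key; transfer times between lines would have to be modelled as extra edges.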

Python devs and data analysts....

Do you recommend using PyCharm for working with Jupyter notebooks? I surely had a bad time with it.

I have been using many JetBrains products, and am a fan of their docs search and autocompletion. But I don't think there is full support for Jupyter in it, because sometimes my graphs made using matplotlib or seaborn just break.

And some libraries have a lot of functions taking parameters as " *args, **kwargs ". I don't know what that means, but those functions take a lot of "value" parameters, I guess? (like this: plt.figure(figsize=[13,6], axis=False) )

PyCharm also doesn't seem to have access to the list of those arguments...

Are you having such problems too? Have you found some better ide with autocompletions and support for jupyter? Do tell.

(Ps: i know jupyter can be run directly on a browser, but as i said "auto completions and documentations" )5 -
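On the `*args, **kwargs` question: `*args` collects extra positional arguments into a tuple and `**kwargs` collects extra keyword arguments into a dict. Libraries often use them to forward options to an inner function, which is also why an IDE may have nothing to autocomplete. A small sketch with made-up functions (`describe_call`, `make_figure` and `figure_wrapper` are illustrative only, not matplotlib's API):

```python
def describe_call(*args, **kwargs):
    """Show how Python packs the arguments it receives."""
    return {"positional": args, "keyword": kwargs}


def make_figure(figsize=(6.4, 4.8), dpi=100):
    """Hypothetical inner function with real, named parameters."""
    return f"{figsize[0]}x{figsize[1]} at {dpi} dpi"


def figure_wrapper(*args, **kwargs):
    """Forwards everything unchanged; its own signature says nothing useful."""
    return make_figure(*args, **kwargs)


if __name__ == "__main__":
    print(describe_call(1, 2, figsize=[13, 6]))
    print(figure_wrapper(figsize=[13, 6]))
```

Passing a keyword the inner function doesn't know (for example `figure_wrapper(colour="red")`) raises a `TypeError` at call time, so the real list of accepted options is whatever the inner function's documentation says.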

Back when I was doing my uni final year project, I was given the choice of either writing code or writing documentation (graphs, requirements and a whole lot of other made-up bullshit).

I decided to go with coding, signed up with Laracasts, watched a whole lot of screencasts, and BOOM, coding is easy - I was literally quoting bombastic technical terms like many-to-many relationship to my group mates after 2 videos. (didn't even know what Bootstrap was at that time) That was when I decided I wanna code for a living. Laravel made me a developer. -

Ffs, HOW!?!? Fuck! I need to get this rotten bs out.

RDS at its max capabilities from the top shelf, works OK until you scale it down and back up again. Code is the same, data is the same, load is the same, even the kitchen sink is the same, ffs, EVERYTHING is the same! Except the aws-managed db is torn down and created anew. From the SAME snapshots! But the db decides to stop performing - io tpt is shit, concurrency goes through the roof.

Re-scale it a few more times and the performance gets back to normal.

And aws folks are no better. Girish comes - says we have to optimize our queries. Rajesh comes - we are hitting the iops limit. Ankur comes - you're out of cpu. Vinod thinks it's gotta be the application to blame.

Come on guys, you are a complete waste of time for a premium fucking support!

Not to mention that 2 enhanced monitoring graphs show anything but the read throughput.

Ffs, Amazon, even my 12yo netbook is more predictable than your enterprise paas! And that support..... BS!

We're now down to troubleshooting aws perf issues rather than our client's.... -

Both these kits (for doing interactive graphs) are pretty cool:

one for chemical structures (RDKit):

https://rdkit.blogspot.com/

Making interactive graphs in python:

https://towardsdatascience.com/maki...1 -

I spent 2 weeks building a website for a friend for equity in his company. Different user types and views to serve his purposes. He changed it out with a Wix site yesterday... It is true my CSS was shitty but damn, I spent so much time on the backend. I think I learned something?4

-

Being asked to produce a graph for metrics a, b and c and then being invited to the two hour "a, b and c" meeting to explain what they mean to the people that asked me to graph them. That's rapidly becoming my "job description"...

-

Not in prod today, but was part of a group project that we handed in and which got us an A.

The project was to write a PID controller for a robot that would drive along a track using a sensor to follow markings on the floor. During development we were drawing graphs of the PID parameters and sensor input every tick, which caused a bit of lag but no worries - we'll turn it off for the trial runs.

Imagine our pikachu shock meme when we turned off the graphs and our calibrations were suddenly *way* off since we had been oversteering all along to compensate for the lag.

There wasn't enough time to optimize it before the deadline and using sleeps didn't produce the same "type" of lag, so we just made the graph minimize itself when it opened. To this day I wonder if the professor ever saw it or if we got the A despite it. -
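The reason the graph lag mattered: the integral and derivative terms of a PID controller both depend on the time between ticks, so gains tuned with a slow loop are wrong for a fast one. A minimal sketch of a PID update that takes the measured time step explicitly (the class name, gains and numbers are illustrative assumptions, not the project's code):

```python
class PIDController:
    """Textbook PID: output = kp*e + ki*integral(e) + kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.previous_error = None

    def update(self, error, dt):
        """error: sensor offset from the line; dt: seconds since last tick."""
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0  # no history on the first tick
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return (self.kp * error
                + self.ki * self.integral
                + self.kd * derivative)


if __name__ == "__main__":
    pid = PIDController(kp=2.0, ki=0.5, kd=0.1)
    print(pid.update(1.0, dt=0.1))
    print(pid.update(0.5, dt=0.1))
```

Feeding in the real `dt` each tick makes the math consistent when the loop speeds up, though it does not remove the extra delay between reading the sensor and steering, which is likely what the calibration had been compensating for.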

Last year, 2nd year of Uni, we had to create an app that read from a CSV file that contained info on the no. of ppl in each class and things like grades and such, and had to display graphs of all the info that you could then export as a PDF.

This also had to be done in a team. I, however, hate doing anything other than programming (no team leader, pm bullshit) so I tell them I want to be one of the programmers (basically split the roles, rather than each one doing a bit of everything like my professor wanted) and we did.

I program this bitch, everything works well, I am happy. Day of the presentation comes, one of the graphs is broken... FUCK. I then go past it and never discuss the error. We got a 70.

I swear to God it worked on my computer -.-

I also have to mention that our professor was the client and he had set an actual deadline until we can ask him questions. After the deadline I realized I didn't know what a variable in the csv file was for and when I went to ask him he said "You should've asked me this before. I can't tell you now". My team was not the only one that didn't know and he gave the exact answer to everybody else. Got the answer from another team. Turns out it was useless.

He was the worst client ever. Why tf would you put a deadline on when you can ask the client questions?! I should be able to fucking ask questions during production if you want the product as you want it >.<7 -

Had a production issue last night where db hung so today whole team was investigating.

I checked the graphs and noticed a huge spike in inserts during a few hours. Normally it's distributed evenly through the day.

Emailed team with screenshots and also mentioned it to someone but then forgot to follow up... I assumed they were looking into it (I don't work in the same office as them).

Someone just logged in and noticed the same thing happening right now... which made me remember.

So I asked him, did you see my email?

Silence....

Also got another guy doing a sort of code review on a util app I wrote that deletes certain records from our db, asking why I'm not just using SQL. I told him the most obvious way doesn't work, I tried, but he won't believe me, so I'm letting him try it himself.

Anyway, these few days just feels like "why doesn't anyone listen to me?" ... and just feeling overqualified and sort of not part of the team again....3 -

DevRant-Stats Site Update:

A little Christmas Present for you!

-> Graphs!

Added two Graphs now to view the stats of the last 30 days.

Unfortunately the system has only been running since yesterday, so there is not much data yet...

Little tip:

If you click on the labels above the graph you can disable them!7 -

Getting into trees and graphs in python.. quite frustrating at first ;-; especially with little OO experience..

-

About half a year ago, I literally fought with my DS teacher for half an hour in front of the whole class,

REASON:

That dude couldn't see the infinite while loop in his BINARY SEARCH algo hand-written on the board...

I mean come on dude, binary search is one of the simplest algos, and he didn't agree with me until he dry-ran his written code (PS: the only thing I liked about him... HE KNOWS HOW TO DRY RUN)....

Scariest part: He was supposed to teach us trees and graphs in about a month

And it was my first day in that class -
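The rant doesn't say what the bug on the board was. The classic way to get an infinite loop in binary search is updating a bound to `mid` instead of moving past it, so the range never shrinks. A sketch of a version that terminates, with that assumed failure mode marked in a comment (the function name is mine):

```python
def binary_search(sorted_items, target):
    """Return the index of target in sorted_items, or -1 if absent."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            # Writing `low = mid` here can loop forever: once low == mid,
            # the range [low, high] stops shrinking.
            low = mid + 1
        else:
            high = mid - 1
    return -1


if __name__ == "__main__":
    print(binary_search([1, 3, 5, 7, 9], 7))
    print(binary_search([1, 3, 5, 7, 9], 4))
```

A dry run on a two-element list with the target missing is usually enough to expose that kind of bug.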

Should i be posting on devRant to hire a fellow devRanter for a project in my company? (temporarily but may become constant if we click well)

More info:

Looking to hire a mostly frontend react dev.

The project is about graph visualisation and traversal.

Our stack is Python (Flask, AppBuilder, SQLAlchemy), React, react-d3-graph, Jest.

Must know how to use git and pull-requests.

(The software is based on Apache Superset)

The code is a bit of a mess. But let's be honest, which big project isn't?12 -

My first job as a student was at the institute. I was working really hard. Doing my best. Closing issues like a boss. All my code was reviewed by a senior.

Two other students had this simple program to make (GUI for some functions and some graphs). They had no idea how to make it. Their code was worse than spaghetti and in four months they didn't even come close to the end. No one even looked at their code.

We were paid the same money!1 -

"Most people don't know what files are and don't know how to type and read, so they need tools that look up those files for them, put the numbers and words in those files into graphs and colors, and replace the typing with little buttons."

– imbecile on Reddit6 -

My lead: Here's an epic to remove a framework from all our projects. I want you to write every planning step in a document before you make any tickets or do any work.

Me: Okay cool. Before I do that, I'm just gonna finish the removal from the project we're 99% done with so we can remove that from the planning stages. I already did it locally with no issues so we know it's a 1 pointer. That takes us from two dependency graphs down to one which will help immensely in planning.

Lead: No. Don't make any tickets, this is a spike. Just put it in the document so management will know how long we expect the whole thing to take. And make sure you pull in this engineer from a completely different team who has his own tickets and doesn't even know I'm doing this. Make sure you include him on everything.4 -

Started out with python, while meaning to learn javascript.

I am now competent in python. I'm still not sure how it happened.

Started python because I got tired of doing repetitive calculations by hand. I think I had like a phobia of problem solving with nested loops. Any time I thought a problem would require nesting, especially more than one nested loop, I would just avoid doing it, or end up doing it by hand.

Wrote so many goddamn loops though in the process of exploring graphs, doing things by hand seems like a nuisance. Thinking in loops has its own zen or something.

Now I just need to get over my fear of json-based CLI-enabled configuration-over-convention.1 -

Graphs and clustering changed my perception of the world. I caught myself thinking of random shit in clusters and edges, and now I realized I have been doing this for a while.

•I caught myself thinking the following a few minutes ago:

While taking a shower, for unexplained reasons, I started visualizing different groups of friends throughout my life and how each one's connection/relationship to their group (centroid AKA leader) had a significant influence on how each individual behaved. After doing this for five groups - I proceeded to label them in classes of behaviors and noticed why each friend behaved a certain way. Wtf right? 😂 -

Fuck, now I'm actually somewhat mad how much time those figma plugins could've saved me lol.

Especially things like generating a quick color palette, that immediately pastes them next to the element are so damn useful.

Generating real-life data into text elements, avatars pulled right from an API, auto fetched graphs for example data, all the goodies that make life easier.5 -

I had the idea that part of the problem of NN and ML research is we all use the same standard loss and nonlinear functions. In theory most NN architectures are universal approximators. But there's a big gap between symbolic and numeric computation.

But some of our bigger leaps in improvement weren't just from new architectures, but entire new approaches to how data is transformed, and how we calculate loss, for example KL divergence.

And it occurred to me all we really need is training/test/validation data and with the right approach we can let the system discover the architecture (been done before), but also the nonlinear and loss functions itself, and see what pops out the other side as a result.

If a network can instrument its own code as it were, maybe it'd find new and useful nonlinear functions and losses. Networks wouldn't just specify a conv layer here, or a maxpool there, but derive implementations of these all on their own.

More importantly with a little pruning, we could even use successful examples for bootstrapping smaller more efficient algorithms, all within the graph itself, and use genetic algorithms to mix and match nodes at training time to discover what works or doesn't, or do training, testing, and validation in batches, to anneal a network in the correct direction.

By generating variations of successful nodes and graphs, and using substitution, we can use comparison to minimize error (for some measure of error over accuracy and precision), and select the best graph variations, without strictly having to do much point mutation within any given node, minimizing deleterious effects, sort of like how gene expression leads to unexpected but fitness-improving results for an entire organism, while point-mutations typically cause disease.

It might seem like this wouldn't work out the gate, just on the basis of intuition, but I think the benefit of working through node substitutions or entire subgraph substitution, is that we can check test/validation loss before training is even complete.

If we train a network to specify a known loss, we can even have that evaluate the networks themselves, and run variations on our network loss node to find better losses during training time, and at some point let nodes refer to these same loss calculation graphs, within themselves, switching between them dynamically..via variation and substitution.

I could even envision probabilistic lists of jump addresses, or mappings of value ranges to jump addresses, or having await() style opcodes on some nodes that upon being encountered, queue-up ticks from upstream nodes whose calculations the await()ed node relies on, to do things like emergent convolution.

I've written all the classes and started on the interpreter itself, just a few things that need fleshed out now.

Here's my shitty little partial sketch of the opcodes and ideas.

https://pastebin.com/5yDTaApS

I think I'll teach it to do convolution, color recognition, maybe try mnist, or teach it step by step how to do sequence masking and prediction, dunno yet.6 -

My graph based programming language. It'll feature graphs as data types!... whenever I finish it. 😓2

-

Fucking hell my insights are late ones...

So I am working with fluid dynamics simulation. I went home fired up the laptop and started the calculations. This is how the events went:

9 PM: starting the calculation

10 PM: checking on the graphs to see whether everything will be alright if I leave it running. Then went to sleep.

2 AM: Waking up in shock, that I forgot to turn on autosave after every time step. Then reassured myself that this is only a test and I won't need the previous results anyway.

5 AM: waking up, everything seems to be fine. I paused the calculation, hibernated the laptop and went to work.

6:40 AM: on my way to the front door a stray thought struck my mind... What if it lost contact with the license server while entering the hibernated state? Bah, never mind... It will establish a new connection when I switch it back on.

6:45 AM: Switching on the laptop. Two error messages greet me.

1. Lost contact with license server.

2. Abnormal exit.

Looking at the tray, the paused simulation is gone. Since I hadn't enabled autosave, I have to start it all over again. Well. Lesson learned I guess. Too bad it cost 8 hours of CPU time. -

Was an internal auditor translating department process to a technical spec for a programmer. We were going to leverage an external company's API which would replace our need to use their slow and buggy web app.

During a meeting, an audit teammate suggested something be changed with the external service we were using. I said we could bring it up with the company but we shouldn't rely on it because we were a small customer even during our busiest month (200 from us vs 10000+ from big banks).

Teammate said we should have our programming team fix it. I made it clear that it was not our side and that to build out the service on our side was beyond our scope. Teammate continued to bring it up during the meeting then went back to desk after meeting and emailed us all marked up screenshots of the feature.

I ignored this and finished writing up the specs, sending them over to the programmer building out the service.

30 minutes later I get a call from programmer's manager who was quite angry at an expanded scope that was impossible (engineers were king at this company. Best not to anger them). Turns out my teammate had emailed his own spec to the programmers full of impossible features that did not reference the API docs.

I feel bad about it now but I yelled at my teammate quite loudly. I said he was spending time on something that was not reasonable or possible and when they continued to talk about their feature I yelled even louder.

Didn't get fired but it definitely tagged me as an asshole until I left. Fair enough :) -

There are a few constants in Software Development:

1) The requirements always change.

2) Don't trust input.

Silly me was so naive to ignore 1 and 2 and later I dealt with the consequences.

1) Oh, we have this new API and we're only going to build Google Maps interfaces with it. Nice, easy task. We won't have to address the other parts of the library, wooh! The next day: "Yeah guys, we kinda wanna use the other parts now". Me: sigh.

2) Simple task: I have my API accept CSV files so I can generate graphs out of them. What could go wrong? Provide wrong file? I caught that. Provide completely fucked up and garbled CSV? Whoops.1 -
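A sketch of what "don't trust input" can look like for the CSV case: parse strictly and reject anything that isn't a clean rectangular table of numbers before it gets near the graphing code. The function name and the exact rules (header row required, every cell numeric) are assumptions for illustration, not the API from the rant:

```python
import csv
import io


def parse_numeric_csv(text):
    """Parse CSV text into (header, rows of floats) or raise ValueError."""
    rows = list(csv.reader(io.StringIO(text)))
    if len(rows) < 2:
        raise ValueError("need a header and at least one data row")
    header = rows[0]
    data = []
    for line_number, row in enumerate(rows[1:], start=2):
        if len(row) != len(header):
            raise ValueError(
                f"line {line_number}: expected {len(header)} columns, "
                f"got {len(row)}"
            )
        try:
            data.append([float(cell) for cell in row])
        except ValueError as exc:
            raise ValueError(f"line {line_number}: non-numeric value") from exc
    return header, data


if __name__ == "__main__":
    print(parse_numeric_csv("x,y\n1,2\n3,4.5\n"))
```

Failing loudly with a line number is usually kinder to the caller than drawing a graph from half-parsed garbage.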

I'm slowly realizing how much goofy code I put in my branch and overlooked. This code review is going to be interesting...

Some examples:

import plots as lel

<h4 id="title">Crunchatize Me, Captain! </h4>

go.Scattergeo(name="cheese", ...)

webster = { ... }

The commit messages are even worse.

- 'horizontalize' link list

- very messily hack in <feature>

- partially refactor some of the awful code from previous

- Remove one annoying space

- make background color less annoying

- remove seemingly useless property

- minor fix

- Apparently it's possible to center a DIV. Who knew?

- Made some cool bar graphs

And then there's just a bunch of reverts.2 -

This is gonna be a long post, and inevitably DR will mutilate my line breaks, so bear with me.

Also I cut out a bunch because the length was overlimit, so I'll post the second half later.

I'm annoyed because it appears the current stablediffusion trend has thrown the baby out with the bath water. I'll explain that in a moment.

As you all know I like to make extraordinary claims with little proof, sometimes for shits and giggles, and sometimes because I'm just delusional apparently.

One of my legit 'claims to fame' is, on the theoretical level, I predicted most of the developments in AI over the last 10+ years, down to key insights.

I've never had the math background for it, but I understood the ideas I was working with at a conceptual level. Part of this flowed from powering through literal (god I hate that word) hundreds of research papers a year, because I'm an obsessive like that. And I had to power through them, because a lot of the technical low-level details were beyond my reach, but architecturally I started to see a lot of patterns, and began to grasp the general thrust of where research and development *needed* to go.

In any case, I'm looking at stablediffusion and what occurs to me is that we've almost entirely thrown out GANs. As some or most of you may know, a GAN is where networks compete, one to generate outputs that look real, another to discern which is real, and by the process of competition, improve the ability to generate a convincing fake, and to discern one. Imagine a self-sharpening knife and you get the idea.

Well, when we went to the diffusion method, upscaling noise (essentially a form of controlled pareidolia using autoencoders over seq2seq models) we threw out GANs.

We also threw out online learning. The models only grow on the backend.

This doesn't help anyone but those corporations that have massive funding to create and train models. They get to decide how the models 'think', what their biases are, and what topics or subjects they cover. This is no good long run, but that's more of an ideological argument. That's not the real problem.

The problem is they've once again gimped the research, chosen a suboptimal trap for the direction of development.

What interested me early on in the lottery ticket theory was the implications.

The lottery ticket theory says that, part of the reason *some* RANDOM initializations of a network train/predict better than others, is essentially down to a small pool of subgraphs that happened, by pure luck, to chance on initialization that just so happened to be the right 'lottery numbers' as it were, for training quickly.

The first implication of this, is that the bigger a network therefore, the greater the chance of these lucky subgraphs occurring. Whether the density grows faster than the density of the 'unlucky' or average subgraphs, is another matter.

From this though, they realized what they could do was search out these subgraphs, and prune many of the worst or average performing neighbor graphs, without meaningful loss in model performance. Essentially they could *shrink down* things like chatGPT and BERT.

The second implication was more subtle and overlooked, and still is.

The existence of lucky subnetworks might suggest nothing additional--In which case the implication is that *any* subnet could *technically*, by transfer learning, be 'lucky' and train fast or be particularly good for some unknown task.

INSTEAD however, what has happened is we haven't really seen that. What this means is actually pretty startling. It has two possible implications, either of which will have significant outcomes on the research sooner or later:

1. there is an 'island' of network size, beyond what we've currently achieved, where networks that are currently state of the art at some things, rapidly converge to state-of-the-art *generalists* in nearly *all* tasks, regardless of input. What this would look like at first, is a gradual drop off in gains of the current approach, characterized as a potential new "ai winter", or a "limit to the current approach", which wouldn't actually be the limit, but a saddle point in its utility across domains and its intelligence (for some measure and definition of 'intelligence'). -

FUCK YOU SYNCFUSION, JUST FUCK YOU!! TRYING TO USE YOUR FUCKING LINEAR GRAPHS AND THEY NEVER FUCKING WORK!!! THEY DON'T ADAPT THEIR OWN BOUNDS, THEY DON'T SHOW LABELS EVEN THOUGH I'M FUCKING TELLING YOU TO SHOW THEM AND EVEN WHEN I ADD HEADERS YOU REFUSE TO SHOW THEM!! AND FOR SOME GODDAMN FUCKING REASON, WHENEVER I USE A TABBED PAGE YOU JUST GO UP AND FUCKING THROW AN "UNKNOWN EXCEPTION" JUST FUCK IT FUCK YOU , FUCK YOUR GRAPHS, FUCK EVERYTHING!!!!!!

-

So the time has come for me to officially say "Fuck IE".

The potential client, one of the major hospital chains in the country, wants the site to work in Internet Explorer. Can't believe they are still clinging to stupid IE because Google Chrome is insecure 😂

There is no way all the charts and graphs we made would work in IE.

To top it off, the "bluffon" boss came up with the idea of using Flash to display these features on IE.

It's fucking 2017!! -

Studying trees prior to a technical interview tomorrow.

It's so daunting as I'm only recently delving into fundamentals of computer science - I studied something quite unrelated. I wish I could just sit down and build an Angular project... -

figured maybe you can specify dependencies specifically to be used in main.rs (as a standalone executable) or lib.rs (as a library)

since for some reason there's dev-dependencies which specifies they will only be used in tests or whatever

well rust actually doesn't compile code that wasn't ever called / wouldn't be run (and nags you about code you have but didn't use anywhere). this means binaries are smaller and all that. i've known about this but seemingly the AI insists nobody needs to specify dependency differences between main.rs and lib.rs because of this quirk of rust compiling

ok well then why the hell is there a dev dependencies and a normal dependencies then?

well no good reason.

- "intentionality" -- how about the clarity of intentionality between being an executable or a library?! no? guess not

- build optimization, because traversing usability graphs can be taxing especially in big projects. ok. again still applies to executable vs library problem

- "community and ecosystem practices". really? we've always done it this way? shove it 🙄😩. you try to innovate and then willfully inherit the problems of other languages that you had already solved... because that's how we've always done it. lame

double standards. so annoying -

One month ago I had to start a school project with some of my classmates. I managed all the infrastructure using Terraform and today, the day before the delivery, I noticed that the graphs used for monitoring had always been so quiet. I decided to ask my team what was going on and these are their replies:

- "I thought IaC was more describing the actual infrastructure"

- "I didn't know we have a database on AWS, I always used my local postgres instance"

- "Why do we need to host our web app on AWS? I can just run it from Visual Studio"

I don't think I want to live on this planet anymore -

So they asked if we want a wallboard or not and we answered with a NO. As you already guessed we still got a wall mounted LG TV with a small linux box.

So I started to tinker with the wallboard and created a horrible Python -> HTML5/JS thing which crawls the data worth showing and creates static pages with HTML5 canvas for graphs.

Later I found atlasboard, which is a discontinued dashboard from Atlassian, so I started to learn Node.js and rebuilt and added new widgets to show our smoketests, our mssql server metrics from zabbix, our sprint from Jira and some other servers' status.

Then I recreated the essential metrics in the VICE C64 emulator: I collected and exported the data in Python, created a PETSCII-compatible .prg file, and the **** Dashboard 64 **** loaded it and drew graphs in the emulator every minute.

That was awesome! BASIC V2 is slow as hell but still awesome. -

Finally success, I can die now.

How do you research a subject you literally know nothing about and are unprepared? That's the main question when creating a system like deep search (e.g. perplexity).

I have made a clone that comes pretty close to perplexity. Sadly, perplexity has some tools I can't build in that easily, especially not for cheap, like live voice that you can interrupt and stuff. I'll add support for image uploads later. It can show up-to-date source code examples based on searches (so, by stupid outdated models) and has syntax highlighting for every language. It also generates nice graphs (that actually make sense, took a while) to complement the data it finds.

Example of the application, try to search something yourself: https://diepzoek.app.molodetz.nl//... -

Trees -> declarative programming

Loops -> functional programming

Sequences -> imperative programming

Graphs -> dynamic programming

Good mapping, yeah or no? -

so i'm sitting here staring inwardly at the learning rate optimizer...

i think it works

but i find myself wanting to scream at the nuances of the method being hidden from me

I know it's probably fairly simple.

i want to write my own.

i want to plot neat graphs that give me metrics on the learning results for each epoch, showing how much closer the values are getting to the training data. some neat spiral of values and lines, and flashy too.

but i feel.

...well

strangely lackluster and a tad paralyzed for some reason. partly because it feels like i've done this all before... sigh.

on the topic of things I already did.

https://en.wikipedia.org/wiki/...

can you believe they made this bullshit into A TV SHOW ? IN THIS WEIRD ASS HYPERSENSITIVE ENVIRONMENT ? THIS RAPE WEIRDOS WET DREAM ? 5 SEASONS AT LEAST.

GOD WHAT IS WRONG WITH THIS COUNTRY ? ITS EITHER ADULT MEN WATCHING RUGRATS OR THIS SHIT ! -

Just found some pretty amazing stuff with TensorFlow and TensorBoard.

It is great to have views and graphs of your training nodes.

-

I was working as a lab tech/data analyst and wrote a bunch of macros to make my job less monotonous and decided I'd rather code all day than make graphs in Excel. A year-and-a-half later, I was 1 internship away from 2 associates degrees, so I quit my job and got the one I'm doing now. I love the work, but wish they'd pay me more.

-

There are two good ways to approach binary app distribution:

1. Every app has its own installer, shell-based or otherwise, that handles everything including choosing the right binary, downloading it, managing versions, updates, config files, etc — all of it.

2. Every app is distributed as source code. It's the user's responsibility to build it by following the guide provided in the README and to keep it updated.

In between those two pillars, there is an uncanny valley of orphaned dependency graphs, broken post-install scripts, vendor-locked enforced app stores, eCoSyStEmS of apps, Adobe Creative Cloud, and all the other shit. -

How do you feel about not creating database tables for objects that only exist in relations?

For example, I have made a wiki engine. Because nothing on wiki pages can actually change, they aren't an entity. Revisions are an entity, and they refer to the title of the page which was changed. The same application also includes two non-version-controlled directed graphs between the pages (element of category and navigation log), which are represented by tables that link two titles. Of course the indexes are all set up so that it works like a foreign key would, but there is no Page or Article table. -

I had to generate different kinds of graphs at compiletime and had to compile a graph and write down the code size for that specific width/height in addition to one of three implementations which all need to be evaluated. I computer scienced the shit out of it!

I wrote some Rust code that easily lets me build some graphs with the dimensions passed as input parameters. Then I wrote a method that converts the graph into the definition of the graph in a C header (sadly the only way) and wrote a bash script that executes that Rust code with all possible dimensions and saves the header into my source folder. Then I build the application and write the program size to a file.

In the next step I run a Python script that reads all the generated files with the sizes and creates a CSV file, which in turn can be used by Excel/Numbers to visualize the dependency between depth of graph and code size 😄
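A minimal sketch of what that last step could look like. The file naming (`size_<width>x<height>.txt`, one integer per file) and the helpers `collect_sizes` and `write_csv` are assumptions made up for illustration, not taken from the actual project.

```python
import csv
import re
from pathlib import Path

# Hypothetical naming scheme: size_<width>x<height>.txt, one integer per file.
SIZE_FILE = re.compile(r"size_(\d+)x(\d+)\.txt$")


def collect_sizes(folder):
    """Return sorted (width, height, code_size) rows found in `folder`."""
    rows = []
    for path in Path(folder).iterdir():
        match = SIZE_FILE.search(path.name)
        if match is None:
            continue  # ignore unrelated files
        width, height = int(match.group(1)), int(match.group(2))
        rows.append((width, height, int(path.read_text().strip())))
    return sorted(rows)


def write_csv(rows, out_path):
    """Write the rows to a CSV that Excel or Numbers can open."""
    with open(out_path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["width", "height", "code_size"])
        writer.writerows(rows)
```

Calling `write_csv(collect_sizes("sizes"), "sizes.csv")` would then produce one row per generated build, ready for charting.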

I had only some hours for it all, it is messy but works 😄 -

Previously, I half-assedly theorized that, given a timeline on which I'd store state mutations, with each mutation being an action taken ingame by either the player or computer, I could feasibly construct a somewhat generative narrative engine.

Basis: the system reads the current state, builds [some structure] holding possible choices, and prompts the player to take an action from those choices. The action modifies the state, and the loop begins anew, save that now it's the system "prompting itself", so to speak.
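The loop described above can be sketched roughly like this. Everything here is a stand-in: `State`, `build_choices`, `step`, `run`, and the `pick` callbacks are hypothetical names, and the choices are just a flat list because the real [some structure] is still undecided at this point.

```python
from dataclasses import dataclass, field


@dataclass
class State:
    """Game state plus the timeline of mutations applied to it."""
    values: dict = field(default_factory=dict)
    timeline: list = field(default_factory=list)


def build_choices(state):
    # Placeholder for [some structure]: a flat list of
    # (name, key, delta) actions that are legal in the current state.
    choices = [("rest", "energy", +1)]
    if state.values.get("energy", 0) > 0:
        choices.append(("explore", "energy", -1))
    return choices


def step(state, pick):
    """Run one turn: read state, build choices, apply the picked action."""
    choices = build_choices(state)
    name, key, delta = pick(choices)
    state.values[key] = state.values.get(key, 0) + delta
    state.timeline.append(name)  # every mutation lands on the timeline
    return state


def run(state, actors, turns):
    """Alternate between actors (player, computer, ...) for a fixed number of turns."""
    for turn in range(turns):
        step(state, actors[turn % len(actors)])
    return state
```

Here each actor is just a function that receives the list of choices and returns one of them, so the player and the computer go through exactly the same loop.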

Utterly barebones and abstract as it may be, it was useful to build this concept in my head as it gave me a way to reason about what I wanted to build. But there were two problems which I had to grapple with:

- What would [some structure] even be?

- How would the computer make choices based on an instance of [some structure]?

I found myself striking the philosopher pose for long hours on the toilet, deeply pondering these questions which I couldn't help but merge into one due to the shared incognita; silly brain wanted trees but I kept figuring out that's not going to work as the relationships between symbols are sometimes but not always hierarchical. Shhh, silly brain, it's not trees.

So what is the answer?

Well, can you guess it?

Graphs, of course it's fucking graphs. Specifically, a state transition graph. It was right in my face the whole time and I couldn't see it. Well, close enough.

It's ideal as the system in question is a finite state machine with strong emphasis on finite -- the whole point is narrowing down choices, which now that I think about it, can also come down to another graph. Let me explain.
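For illustration, a state transition graph can be as small as a dictionary of dictionaries. This is only a sketch: the states and actions (`camp`, `forest`, `explore`, and so on) are made up, not taken from the actual game.

```python
# Hypothetical states and actions; the graph maps state -> {action: next_state}.
TRANSITIONS = {
    "camp": {"explore": "forest", "rest": "camp"},
    "forest": {"fight": "wounded", "retreat": "camp"},
    "wounded": {"rest": "camp"},
}


def choices(state):
    """The legal actions are simply the outgoing edges of the current node."""
    return sorted(TRANSITIONS.get(state, {}))


def walk(start, actions):
    """Follow a sequence of actions, returning every state visited."""
    path = [start]
    for action in actions:
        path.append(TRANSITIONS[path[-1]][action])
    return path
```

Narrowing down choices then amounts to looking only at the edges leaving the current node.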

A 'symbol' or rather SIGIL is an individual in-game effect. To this FSM, it's an instruction. Sigils are used to compose actions, which you can think of as an encapsulation of some function, or better yet, an *undoable transaction* which causes some alteration in the game world.
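One way to picture an action as an undoable transaction made of sigils is the sketch below. It assumes, purely for illustration, that a sigil just adds a number to a named value in the world; the `Sigil` and `Action` classes are hypothetical, and real sigils would carry far more structure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sigil:
    """One in-game effect: add `delta` to the value stored under `key`."""
    key: str
    delta: int


class Action:
    """A group of sigils applied together and undoable as a unit."""

    def __init__(self, *sigils):
        self.sigils = sigils

    def apply(self, world):
        for sigil in self.sigils:
            world[sigil.key] = world.get(sigil.key, 0) + sigil.delta

    def undo(self, world):
        # Subtract each delta again, walking the sigils in reverse order.
        for sigil in reversed(self.sigils):
            world[sigil.key] = world.get(sigil.key, 0) - sigil.delta
```

Because every sigil records exactly what it changed, undoing an action is just replaying its sigils backwards with the sign flipped.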

But to form a narrative from a sequence of such transactions, and to allow the system to respond to them coherently, relationships need to be established between sigils in a manner that can be reasoned about in code. You may not realize this yet but this is both a language processing and text generation problem, so fuck me.

However, we have a big advantage in that we are not dealing with *natural* language, that is to say, each sigil is a structure from which we can extract valuable information on the nature of the state transformation applied.

This allows us to find relationships between sigils programmatically: two words are related if some comparison between the underlying structure -- and the transformation it describes -- holds true. Therefore, if we take the sigils that compose the last transformation in the timeline, fetch relationships for said sigils according to a given criterion, then eliminate all immediate relationships that are not shared between all members of the group, we end up with a new one that can be utilized as starting point to construct a reply.
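The elimination step above is essentially a set intersection. A rough sketch, assuming sigils can be reduced to a set of tags and that the criterion is "shares at least one tag"; both the tag vocabulary and the helper names `related` and `shared_relations` are invented for the example.

```python
# Hypothetical vocabulary: sigil name -> tags describing its transformation.
VOCAB = {
    "burn": {"fire", "damage"},
    "freeze": {"ice", "damage"},
    "scorch": {"fire", "damage"},
    "melt": {"fire", "ice"},
    "heal": {"restore"},
}


def shares_tag(a, b):
    """Example criterion: two sigils are related if their tags overlap."""
    return bool(VOCAB[a] & VOCAB[b])


def related(sigil, vocabulary, criterion):
    """All sigils in `vocabulary` that `criterion` links to `sigil`."""
    return {other for other in vocabulary
            if other != sigil and criterion(sigil, other)}


def shared_relations(group, vocabulary, criterion):
    """Keep only relationships common to every sigil in `group`."""
    sets = [related(sigil, vocabulary, criterion) for sigil in group]
    return set.intersection(*sets) if sets else set()
```

With this toy vocabulary, `shared_relations(["burn", "freeze"], VOCAB, shares_tag)` keeps only the sigils related to both members of the group, which would be the starting pool for a reply.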

More elimination of possibilities would have to be performed as this reply is constructed [*], but the point is that because the context (timeline) is itself made of previous transforms, the system *could* make such a reply coherent, or at the very least internally consistent.

Well... in the world of half-assed theory. I don't know whether I'm stupid, insane, both, pad for alignment, or this is an actual breakthrough. Maybe none of the above.

Anyway, it's another way to mentally model the problem which is very useful. New challenge would be the text generation part, extremely high chance of gibberish within existing vision; need more potty-pondering.

[*]: I'll break it into bits OK.

0. Determine intention. That's right, the reply isn't actually _fully_ generated, it's just making variations on a template. So pick a template depending on who is taking a turn and replying to who (think companion relationship score bullshit)

1. Sort the new group according to the number of connections the constituent sigils had to the context from which they were extracted, higher first.