Search - "works on my machine"


I’m a senior dev at a small company that does some consulting. This past October, some really heavy personal situation came up and my job suffered for it. I raised the flag and was very open with my boss about it and both him and my team of 3 understood and were pretty cool with me taking on a smaller load of work while I moved on with some stuff in my life. For a week.

Right after that, I got sent to a client. “One month only, we just want some presence there since it’s such a big client” alright, I guess I can do that. “You’ll be in charge of a team of a few people and help them technically.” Sounds good, I like leading!

So I get here. Let’s talk technical first: from being in a small but interesting project using Xamarin, I’m now looking at Visual Basic code, using Visual Studio 2010. Windows fucking Forms.

The project was made by a single dev for this huge company. She did what she could but as the requirements grew this thing became a behemoth of spaghetti code and User Controls. The other two guys working on the project have been here for a few months and they have very basic experience at the job anyways. The woman that worked on the project for 5 years is now leaving because she can’t take it anymore.

And that’s not the worst of it. It took from October to December for me to get a machine. I literally spent two months reading on my cellphone and just going over my shitty personal situation for 8 hours a day. I complained to everyone I could and nothing really worked.

Then I got a PC! But wait… no domain user. Cue an extra month in which I could see the Windows 7 (yep) login screen and nothing else. Then, finally! A domain user! I can log in! Just wait 2 extra weeks for us to give your user access to the Subversion repo and you’re good to go!

While all of this went on, I didn’t get an access card until a week ago. Every day I had to walk to the reception desk, show my ID and request they call my boss so he could grant me access. 5 months of this, both at the start of the day and after lunch. There was one day in particular, between two holidays, on which no one who could grant me access was at the office. I literally stood there until 11am, at which point I called my company and told them I was going home.

Now I’ve been actually working for a while, mostly fixing stuff that works like crap and trying to implement functions that should have been finished but aren’t even started. Did I mention this App is in production and being used by the people here? Because it is. Imagine if you will the amount of problems that an application that’s connecting to the production DB can create when it doesn’t even validate whether a field should receive numeric values only. Did I mention the DB itself is also a complete mess? Because it is. There’s an “INDEXES” table in which, I shit you not, the last ID of every other table is stored. There are no Identity fields anywhere, and instead every insert has to go to this INDEXES table, check the last ID of the table we’re working on, then create a new record in order to give you your new ID. It’s insane.
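For readers who have not met this pattern, here is a minimal sketch of why a hand-rolled INDEXES table is fragile compared with a database-generated key, plus the kind of numeric check the app skips. It uses Python's built-in sqlite3 purely for illustration; the rant does not show the real schema, so the table names, column names and the `parse_quantity` helper are all hypothetical.

```python
import sqlite3


def next_id_from_indexes(conn, table_name):
    """Emulate the hand-rolled pattern: read the last ID, bump it, store it back."""
    row = conn.execute(
        "SELECT last_id FROM indexes WHERE table_name = ?", (table_name,)
    ).fetchone()
    new_id = row[0] + 1
    # Between the SELECT above and this UPDATE, another client could read the
    # same last_id, so two inserts can end up with the same "new" ID.
    conn.execute(
        "UPDATE indexes SET last_id = ? WHERE table_name = ?", (new_id, table_name)
    )
    return new_id


def parse_quantity(raw):
    """Hypothetical helper: reject non-numeric input before it reaches the DB."""
    try:
        return int(raw.strip())
    except ValueError:
        raise ValueError(f"expected a whole number, got {raw!r}") from None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE indexes (table_name TEXT PRIMARY KEY, last_id INTEGER)")
conn.execute("INSERT INTO indexes VALUES ('orders', 41)")
# Database-generated key: the engine assigns the ID as part of the insert itself.
conn.execute(
    "CREATE TABLE orders_auto (id INTEGER PRIMARY KEY AUTOINCREMENT, qty INTEGER)"
)

manual_id = next_id_from_indexes(conn, "orders")
cursor = conn.execute(
    "INSERT INTO orders_auto (qty) VALUES (?)", (parse_quantity("3"),)
)
auto_id = cursor.lastrowid
print(manual_id, auto_id)
```

The manual version needs two round trips and some form of locking to be safe under concurrent inserts; the generated key needs neither, which is presumably why the lack of Identity fields hurts so much here.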

And, to boot, the new order from above is: We want to split this app in two. You guys will stick with the maintenance of half of it, some other dudes with the other. Still both targeting the same DB and using the same starting point, but each only working on the module that we want them to work in. PostmodernJerk, it’s your job now to prepare the app so that this can work. How? We dunno. Why? Fuck if we care. Kill you? You don’t deserve the swift release of death.

Also I’m starting to get a bit tired of comments that go ‘THIS DOESN’T WORK’ and ‘I DON’T KNOW WHY WE DO THIS BUT IT HELPS’ and my personal favorite ‘??????????????????????’

LONG RANT AHEAD!

In my workplace (dev company) I am the only dev using Linux on my workstation. I joined project XX, a senior dev onboarded me. Downloaded the code, built the source, launched the app,.. BAM - an exception in catalina.out. ORM framework failed to map something.

mvn clean && mvn install

Same thing happens again. I raise this with the sr dev and the response is "well.... it works on my machine and has worked for all other devs. It must be your environment issue. Prolly linux is to blame?" So I spend another hour trying to dig up the bug. Narrowed it down to a single data model with an ORM mapping annotation looking somewhat off. Fixed it.

mvn clean && mvn install

the app now works perfectly. Apparently this bug has been in the codebase for years and Windows used to mask it somehow w/o throwing an exception. God knows what undefined behaviour was happening in the background...

Months fly by and I'm invited to join another project. Sounds really cool! I get access, check out the code, build it (after crossing the hell of VPNs on Linux). Run component 1/4 -- all good. Run component 2,3/4 -- looks perfect. Run component 4/4 -- BAM: LinkageError. Turns out there is something wrong with the OSGi dependencies, as the ClassLoader attempts to load the same class twice, from 2 different sources. Coworkers with Windows and Macs have never seen this kind of exception and the lead dev replies with "I think you should use a normal environment for work rather than playing with your Linux". Wtf... It's Java. Every env is a "normal env" for the JVM!

I do some digging. One day passes by.. second one.. third.. the weekend.. The next Friday comes and I still haven't managed to launch component #4. Eventually I give up (since I cannot charge a client for a week I spent trying to set up my env) and walk away from that project. Ever since, this LinkageError was always on my mind; for some reason I could not let it go. It was driving me CRAZY!

So half a year passes by and one of the project devs gets a new MB Pro. 2 days later I get a PM: "umm.. were you the one who used to get LinkageError while starting component #4 up?". You guys have NO IDEA how happy his message made me. I mean... I was frickin HIGH: all smiling, singing, even dancing behind my desk!! Apparently the guy had the same problem I did. Except he was quite familiar with the project. It took 3 more days for him to figure out what was wrong and fix it. And it indeed was an error in the project -- not my "abnormal Linux env"! And again, for hell knows what reason, Windows was masking a mistake in the codebase and not popping an error where it should have popped. Linux on the other hand found the error and crashed the app immediately so the product would not be shipped with God knows what bugs...

I do not mean to bring up a flame war or smth, but it's obvious I've kind of saved 2 projects from "undefined magical behaviour" by just using Linux. I guess what I really wanted to say is that no matter how good a dev you are, whether you are a sr, lead or chief dev, if your coworker (be it another sr or a jr dev) says he gets an error and YOU cannot figure out what the heck is wrong, you should not blame the dev or the environment w/o knowing it for a fact. If something is not working - figure out the WHATs and WHYs first. Analyze, compare data to other envs,... Not only will you help a new guy join your team, but you'll also learn something new. And in some cases something crucial, e.g. a serious messup in the codebase.

Started being a Teaching Assistant for Intro to Programming at the uni I study at a while ago and, although it's not entirely my piece of cake, here are some "highlights":

* students were asked to use functions, so someone was ingenious (laughed my ass off for this one):

def all_lines(input):
    all_lines = input
    return all_lines

* "you need to use functions" part 2

*moves the whole code from main to a function*

* for Math-related coding assignments, someone was always reading the input as a string and parsing it, instead of reading it as numbers, and was incredibly surprised that he can do the latter "I always thought you can't read numbers! Technology has gone so far!"

* for an assignment requiring a class with 3 private variables, someone actually declared each variable needed as a vector and was handling all these 3 vectors as 3D matrices

* because the lecturer specified that the length of the program does not matter, as long as it does its job and is well-written, someone wrote a 100-lines program on one single line

* someone was spamming me with emails to tell me that the grade I gave them was unfair (on the reason that it was directly crashing when run), because it was running on their machine (they included pictures), but was not running on mine, because "my Python version was expired". They sent at least 20 emails in less than 2h

* "But if it works, why do I still have to make it look better and more understandable?"

* "can't we assume the input is always going to be correct? Who'd want to type in garbage?"

* *writes 10 if-statements that could be basically replaced by one for-loop*

"okay, here, you can use a for-loop"

*writes the for loop, includes all the if-statements from before, one for each of the 10 values the for-loop variable gets*

* this picture

N.B.: depending on how many others I remember, I may include them in the comments afterwards
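On the "my Python version was expired" dispute: a submission can state its own interpreter requirement instead of crashing somewhere in the middle. A minimal sketch, assuming a made-up minimum of Python 3.8 (the rant never says which versions were involved) and a hypothetical `check_python` helper:

```python
import sys

MIN_PYTHON = (3, 8)  # hypothetical minimum; the real assignment's is unknown


def check_python(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return an error message if the interpreter is too old, else None."""
    major, minor = version_info[0], version_info[1]
    if (major, minor) < minimum:
        return (
            f"This program needs Python {minimum[0]}.{minimum[1]} or newer, "
            f"but it is running on Python {major}.{minor}."
        )
    return None


problem = check_python()
print(problem or "Python version OK")
```

With a check like this at the top of the script, "it runs on my machine" turns into a readable message on the grader's machine rather than twenty emails.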

The Top 20 replies by programmers when their programs do not work:

20. "That's weird..."

19. "It's never done that before."

18. "It worked yesterday."

17. "How is that possible?"

16. "It must be a hardware problem."

15. "What did you type in wrong to get it to crash?"

14. "There is something funky in your data."

13. "I haven't touched that module in weeks!"

12. "You must have the wrong version."

11. "It's just some unlucky coincidence."

10. "I can't test everything!"

9. "THIS can't be the source of THAT."

8. "It works, but it hasn't been tested."

7. "Somebody must have changed my code."

6. "Did you check for a virus on your system?"

5. "Even though it doesn't work, how does it feel?"

4. "You can't use that version on your system."

3. "Why do you want to do it that way?"

2. "Where were you when the program blew up?"

And the Number One reply by programmers when their programs don't work:

1. "It works on my machine."

I just found new band called "localhost". They recently published their new album named "127.0.0.1" with an awesome song "It works on my machine".

It's awesome :D

My biggest dev blunder. I haven't told a single soul about this, until now.

👻👻👻👻👻👻

So, I was working as a full stack dev at a small consulting company. By this time I had about 3 years of experience and started to get pretty comfortable with my tools and the systems I worked with.

I was the person in charge of a system dealing with interactions between people in different roles. Some of this data could be sensitive in nature and users had a legal right to have data permanently removed from our system. In this case it meant remoting into the production database server and manually issuing DELETE statements against the db. Ugh.

As soon as my brain finishes processing the request to venture into that binary minefield and perform rocket surgery on that cursed database my sympathetic nervous system goes into high alert, palms sweaty. Mom's spaghetti.

Alright. Let's do this the safe way. I write the statements needed and do a test run on my machine. Works like a charm 😎

Time to get this over with. I remote into the server. I paste the code into Microsoft SQL Server Management Studio. I read through the code again and again and again. It's solid. I hit run.

....

Wait. I ran it?

....

With the IDs from my local run?

...

I stare at the confirmation message: "Nice job dude, you just deleted some stuff. Cool. See ya. - Your old pal SQL Server".

What did I just delete? What ramifications will this have? Am I sweating? My life is over. Fuck! Think, think, think.

You're a professional. Handle it like one, goddammit.

I think about doing a rollback but the server dudes are even more incompetent than me and we'd lose all the transactions that occurred after my little slip. No, that won't fly.

I do the only sensible thing: I run the statements again with the correct IDs, disconnect my remote session, and BOTTLE THAT SHIT UP FOREVER.

I tell no one. The next few days I await some kind of bug report or maybe a SWAT team. Days pass. Nothing. My anxiety slowly dissipates. That fateful day fades into oblivion and I feel confident my secret will die with me. Cool ¯\_(ツ)_/¯
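One guard that can catch some slips like this is to run the manual DELETE inside a transaction and only commit if the number of affected rows matches what you expected. A minimal sketch using Python's built-in sqlite3 rather than SQL Server; the `users` table and the `delete_users` helper are hypothetical, not anything from the system in the rant.

```python
import sqlite3


def delete_users(conn, user_ids, expected_rows):
    """Delete the given IDs, rolling back unless exactly expected_rows rows go away."""
    placeholders = ",".join("?" for _ in user_ids)
    try:
        cursor = conn.execute(
            f"DELETE FROM users WHERE id IN ({placeholders})", list(user_ids)
        )
        if cursor.rowcount != expected_rows:
            raise RuntimeError(
                f"expected to delete {expected_rows} rows, matched {cursor.rowcount}"
            )
        conn.commit()
        return cursor.rowcount
    except Exception:
        # Nothing is kept unless the check above passed.
        conn.rollback()
        raise


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)", [(1, "ann"), (2, "bob"), (3, "cy")]
)
conn.commit()

try:
    # ID 99 does not exist, so only one row matches and the delete is undone.
    delete_users(conn, [2, 99], expected_rows=2)
except RuntimeError as exc:
    print("rolled back:", exc)
remaining_after_failed = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]

deleted = delete_users(conn, [2], expected_rows=1)
remaining = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
```

A row-count check would not have helped if the local IDs happened to match the same number of production rows, so it is a seatbelt rather than a cure; selecting and eyeballing the rows before deleting them is still worth the extra minute.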

Worst dev team failure I've experienced?

One of several.

Around 2012, a team of devs was tasked with converting an ASPX service to WCF. The service had one responsibility: returning product data (description, price, availability, etc...simple stuff).

No complex searching, just pass the ID, you get the response.

I was the original developer of the ASPX service, whose API took an XML request and returned an XML response. The 'powers-that-be' decided anything XML was evil and had to be purged from the planet. If this thought bubble popped up over your head "Wait a sec...doesn't WCF transmit everything via SOAP, which is XML?", yes, but in their minds SOAP wasn't XML. That's not the worst WTF of this story.

The team, 3 developers, 2 DBAs, network administrators, several web developers, worked on the conversion for about 9 months using the Waterfall method (3~5 months was mostly in meetings and very basic prototyping) and using a test-first approach (their own flavor of TDD). The 'go live' was to occur at 3:00AM, and it was mandatory that nearly the entire department be on-site (including the department VP) and available to help troubleshoot any system issues.

3:00AM - Teams start their deployments

3:05AM - Thousands and thousands of errors from all kinds of sources (web exceptions, database exceptions, server exceptions, etc), site goes down, teams roll everything back.

3:30AM - The primary developer remembered he made a last minute change to a stored procedure parameter that hadn't been pushed to production, which caused a side effect across several layers of their stack.

4:00AM - The developer found his bug, but the manager decided it would be better if everyone went home and got a fresh look at the problem at 8:00AM (yes, he expected everyone to be back in the office at 8:00AM).

About a month later, the team scheduled another 3:00AM deployment (VP was present again), confident that introducing mocking into their testing pipeline would fix any database related errors.

3:00AM - Team starts their deployments.

3:30AM - No major errors, things seem to be going well. High fives, cheers..manager tells everyone to head home.

3:35AM - Site crashes, like white page, no response from the servers kind of crash. Resetting IIS on the servers works, but only for around 10 minutes or so.

4:00AM - Team rolls back, manager is clearly pissed at this point, "Nobody is going fucking home until we figure this out!!"

6:00AM - Diagnostics found the WCF client was causing the server to run out of resources, with a mix of clogging up server bandwidth, and a sprinkle of N+1 scaling problem. Manager lets everyone go home, but be back in the office at 8:00AM to develop a plan so this *never* happens again.
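For anyone unfamiliar with the N+1 problem mentioned in the 6:00AM entry, here is a minimal sketch of the general shape: one query for a list, then one more query per item, versus a single joined query. The rant does not say what the WCF client's calls actually looked like, so the schema, data and function names below are hypothetical, and sqlite3 stands in for whatever database was really involved.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE prices (product_id INTEGER, price REAL)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)", [(i, f"item{i}") for i in range(1, 6)]
)
conn.executemany(
    "INSERT INTO prices VALUES (?, ?)", [(i, i * 1.5) for i in range(1, 6)]
)

stats = {"queries": 0}


def run(sql, params=()):
    """Execute a query and count it, so the two strategies can be compared."""
    stats["queries"] += 1
    return conn.execute(sql, params).fetchall()


def load_n_plus_one():
    """One query for the product list, then one extra query per product."""
    result = {}
    for product_id, name in run("SELECT id, name FROM products"):
        rows = run("SELECT price FROM prices WHERE product_id = ?", (product_id,))
        result[name] = rows[0][0]
    return result


def load_joined():
    """The same data fetched with a single joined query."""
    rows = run(
        "SELECT p.name, pr.price FROM products p "
        "JOIN prices pr ON pr.product_id = p.id"
    )
    return dict(rows)


stats["queries"] = 0
slow = load_n_plus_one()
n_plus_one_queries = stats["queries"]

stats["queries"] = 0
fast = load_joined()
joined_queries = stats["queries"]

print(n_plus_one_queries, joined_queries)
```

With five products the difference is trivial, but the per-item version grows linearly with the catalogue, and over a network every one of those extra calls is a full round trip.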

About 2 months later, a 'real' development+integration environment was set up (previously, any+all integration tests were run on the developer's machine) and the team scheduled a 6:00AM deployment, but at a much, much smaller scale with just the 3 development team members.

Why? Because the manager 'froze' changes to the ASPX service, the web team still needed various enhancements, so they bypassed the service (not using the ASPX service at all) and wrote their own SQL scripts that hit the database directly and utilized AppFabric/Velocity caching to allow the site to scale. There were only a couple of client applications using the ASPX service that needed to be converted, so deploying at 6:00AM gave everyone a couple of hours before users got into the office. Service deployed, worked like a champ.

A week later the VP schedules a celebration for the successful migration to WCF. Pizza, cake, the works. The 3 team members received awards (and an envelope, which probably equaled some $$$) and the entire team received a custom Benchmade pocket knife to remember this project's success. Several others and I just stared at each other, not knowing what to say.

Later, my manager pulls several of us into a conference room

Me: "What the hell? This is one of the biggest failures I've been a part of. We got rewarded for thousands and thousands of dollars of wasted time."

<others expressed the same, with expletive sentiments>

Mgr: "I know..I know...but that's the story we have to stick with. If the company realizes what a fucking mess this is, we could all be fired."

Me: "What?!! All of us?!"

Mgr: "Well, shit rolls downhill. Dept-Mgr-John is ready to fire anyone he feels could make him look bad, which is why I pulled you guys in here. The other sheep out there will go along with anything he says and are more than happy to throw you under the bus. Keep your head down until this blows over. Say nothing."

We are transferring our infrastructure to Google Cloud and Docker. Last week one of our frontend developers told me:

But it works without Docker!

I really, honestly, am getting annoyed when someone tells me that "Linux is user-friendly". Some people seem to think that because they themselves can install Linux, that anyone can, and because I still use Windows I'm some sort of a noob.

So let me tell you why I don't use Linux: because it never actually "just works". I have tried, at the very least two dozen times, to install one distro or another on a machine that I owned. Never, not even once, not even *close*, has it installed and worked without failing on some part of my hardware.

My last experience was with Ubuntu 17.04, supposed to have great hardware and software support. I have a popular Dell Alienware machine with extremely common hardware (please don't hate me, I had a great deal through work with an interest-free loan to buy it!), and I thought for just one moment that maybe Ubuntu had reached the point where it just, y'know, fucking worked when installing it... but no. Not a chance.

It started with my monitors. My secondary monitor that worked fine on Windows and never once failed to display anything, simply didn't work. It wasn't detected, it didn't turn on, it just failed. After hours of toiling with bash commands and fucking around in x conf files, I finally figured out that for some reason, it didn't like my two IDENTICAL monitors on IDENTICAL cables on the SAME video card. I fixed it by using a DVI to HDMI adapter....

Then was my sound card. It appeared to be detected and working, but it was playing at like 0.01% volume. The system volume was fine, the speaker volume was fine, everything appeared great except I literally had no fucking sound. I tried everything from using the front output to checking if it was going to my display through HDMI to "switching the audio sublayer from alsa to whatever the hell other thing exists" but nothing worked. I gave up.

My mouse? Hell. It's a Corsair Gaming mouse, nothing fancy, it only has a couple extra buttons - none of those worked, not even the goddamn scrollwheel. I didn't expect the *lights* to work, but the "back" and "Forward" buttons? COME ON. After an hour, I just gave up.

My media keyboard that's like 15 years old and is of IBM brand obviously wasn't recognized. Didn't even bother with that one.

Of my 3 different network adapters (2 connectors, one wifi), only one physical card was detected. Bluetooth didn't work. At this point I was so tired of finding things that didn't work that I tried something else.

My work VPN... holy shit have you ever tried configuring a corporate VPN on Linux? Goddamn. On windows it's "next next next finish then enter your username/password" and on Linux it's "get this specific format TLS certificate from your IT with a private key and put it in this network conf and then run this whatever command to...." yeah no.

And don't get me started on even attempting to play GAMES on this fucking OS. I mean, even installing the graphic drivers? Never in my life have I had to *exit the GUI layer of an OS* to install a graphic driver. That would be like dropping down to MS-DOS on Windows to install Nvidia drivers. Holy shit what the fuck guys. And don't get me started on WINE, I ain't touching this "not an emulator emulator" with a 10-foot pole.

And then, you start reading online for all these problems and it's a mix of "here are 9038245 steps to fix your problem in the terminal" and "fucking noob go back to Windows if you can't deal with it" posts.

It's SO FUCKING FRUSTRATING, I spent a whole day trying to get a BASIC system up and running, where it takes a half-hour AT MOST with any version of Windows. I'm just... done.

I will give Ubuntu one redeeming quality, however. On the Live USB, you can use the `dd` command to mirror a whole drive in a few minutes. And when you're done fucking around with this piece of shit OS that refuses to do simple things like "playing audio", `dd` will restore Windows right back to where it was as if Ubuntu never existed in the first place.

Thanks, `dd`. I wish you were on Windows. Your OS is the LEAST user friendly thing I've ever had to deal with.

So I finally got my head out of my ass and decided to install some OS on that 500MB RAM legacy craptop from earlier.

*installs Tiny Core Linux*

Hmm.. how do I install extra packages into this thing again? *Googles how to install packages*

Aha, extensions it's called.. and you install them through their little package manager GUI, and then you also have to dick around with some TCE directory, and boot options for that. Well I ain't gonna do that. Why the fuck would I need to dick around with that? Just install the fucking files in /bin, /var, /etc and whatever the fuck you need to like a decent distro. I'll fucking load them whenever I need them, BY EXECUTING THE FUCKING BINARY. But no, apparently that's not how TCL works.

Also, why the fuck is this keyboard still set to US? I'm using a Belgian keyboard for fuck's sake.. "loadkeys be-latin1"

> Command not found.

Okay... (fucking piece of shit) how do I change the fucking keyboard layout for this shit?!

*does the jazz hand routine required for that*

So apparently I need to install a package for that as well. Oh wait, an EXTENSION!! My bad. And then you can use "loadkmap < /usr/share/kmap/something/something" to load the keyboard layout. Except that it doesn't change the fucking keymap at all! ONE FUCKING JOB, YOU PIECE OF SHIT!!!

That's fucking it. No more dicking around in TCL. If I wanted to fuck around with the system this much, I'd have compiled my own custom Linux system. Maybe I can settle with Arch Linux, that's a familiar distro to me.. I can easily install openbox in that and call it a day. But this is an i686 machine.. Arch doesn't support that anymore, does it?

*does another jazz hand routine on Arch Linux 32 and sees that there's a community-maintained project just for that*

Oh God bless you fine Arch Linux users for making a community fork!! I fucking love you.. thank you so much!! Arch it'll be then <3

*Doing a Peer Code Review of someone senior to me*

Me: This fix doesn't look like it will work, but maybe I don't understand. How does this fix the defect?

Senior Dev: *Blinks* It works on my machine

Me: But how does it work?

Senior Dev: It works when I run it on my machine...

Me: Do you know if this will fix the issue?

*Silence*

Never seen QA punt an issue back to development so fast.

Me and co-worker troubleshooting why he can't run the docker container for database.

Me: Check if the port is busy.

Co-worker: To my knowledge, it isn't.

Me: Strange, it just works fine for me and everyone else.

Me: And you're sure you didn't already start it previously?

*We verify that it isn't running*

Me: I'm pretty sure the port is busy from that error message. Try another port.

Co-worker: Already did, it didn't work.

Me: And by any chance restarting your machine won't solve anything?

*It doesn't solve anything*

Me: Alright, I have some work to do, but I'll get back to this. Tell me if you find a solution.

Co-worker: Alright.

*** Time passes, when I get back he has switched to windows, dualboot, same machine ***

Me: I don't think you'll have a better time running the docker image on windows.

Co-worker: Oh, that's not what I'm looking for. You see, I had a database on my windows partition recently and I thought maybe thats why it won't start.

Me (screaming internally) : WTF ARE YOU STUPID, WINDOWS AND LINUX AREN'T RUNNING AT THE SAME FUCKING TIME.

Me (actually saying): I don't think computers work like that.

Co-worker: My computer is magical. It does strange things.

Me: That's a logical conclusion.

*** More time passes ***

Co-worker solves the problem. The port was busy because Ubuntu was already running PostgreSQL on that port.

Third co-worker chimes in: Oh yeah, I had the exact same problem and it took me a long time to solve it.

Everyone is sitting in arms reach of each other.

So not only was I right from the start. Someone else heard this whole conversation and didn't chime in with his solution. And the troubleshooting step of booting into windows and looking if a database is running there ???? Wtf

Why was I put on this Earth?
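"Check if the port is busy" can be answered with a few lines instead of a guess. A minimal sketch using only the standard library; `port_in_use` is a hypothetical helper, and the demo opens its own throwaway listener so it does not depend on any database actually running.

```python
import socket


def port_in_use(port, host="127.0.0.1"):
    """Return True if something is already accepting TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(1.0)
        # connect_ex returns 0 on success instead of raising an exception.
        return probe.connect_ex((host, port)) == 0


# Demo: occupy an ephemeral port ourselves, then probe it before and after closing.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
listener.listen(1)
busy_port = listener.getsockname()[1]

while_listening = port_in_use(busy_port)
listener.close()
after_close = port_in_use(busy_port)

print(while_listening, after_close)
```

In the situation above, `port_in_use(5432)` (PostgreSQL's default port) returning True before the container starts would have pointed straight at the PostgreSQL instance Ubuntu was already running.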

Fuck code.org. Fuck code. Not code code, but "code" (the word "code"). I hate it. At least for teaching. Devs can use it as much as they want, they know what it means and know you can't hack facebook with 10 seconds of furiously typing "code" into a terminal. What the fuck are you thinking when you want me to hack facebook? No, when I program, it's not opening terminal, changing to green text and typing "hack <insert website name here, if none is given, this will result to facebook.com>" Can you just shut the fuck up about how you think that because you can change the font in google fucking docs you have the right to tell me what code can and can't do? No, fuck you. Now to my main point, fuck "code" (the string). It's an overused word, and it's nothing but a buzzword (to non devs, you guys know what you're talking about. how many times have you seen someone think they are a genius when they hear the word "code"?) People who don't know shit don't call themselves programmers or devs, they call themselves coders. Why? It fucking sounds cool, and I won't deny that, but the way it's talked about in movies, by people, (fucking) code.org, etc, just makes people too much of a bitch for me to handle. I want everyone reading this rant who has friends who respect the fact that YOU know code (I truly believe everyone on devRant does), how it works, and its/your limitations, AND that it takes hard work and effort, to thank god right now. If you're stuck with some people like me, I feel you. Never say "code" near them again. Say "program." I really hate people who think they know what an HTML tag is and go around calling themselves coders. Now onto my main point, code.org. FUCK IT. CAN YOU STOP RUINING MY FUCKING AP CS CLASS. NO CODE.ORG, I DON'T NEED TO WATCH YOUR TEN GODDAMN VIDEOS ON HOW TECHNOLOGY IS IMPORTANT, <sarcasm>I'VE BEEN LIVING UNDER A ROCK FOR THIRTY YEARS</sarcasm>. DO I REALLY NEED ANOTHER COPY OF SCRATCH? WAIT, NO, SCRATCH WAS BETTER.
YOU HAD FUCKING MICROSOFT, GOOGLE, AND OTHER TECHNOLOGICAL GIANTS AND YOU FUCKED UP SO BAD YOU MADE IT WORSE THAN SCRATCH. JUST LETMECODE (yes I said that) AND STOP TALKING ABOUT HOW SOME IRRELEVANT ROBOT ARM DEVELOPED BY MIT IS USING AI AND MACHINE LEARNING TO MAKE SOME ROBOT EVOLVE?! IF YOU SPEND ONE MORE SECOND SAYING "INNOVATION" I'LL SHOVE THAT PRINT STATEMENT YOU HAVE A SYNTAX ERROR IN UP YOUR ASS. DON'T GET ME FUCKING STARTED ON HOW IT'S IMPOSSIBLE TO DO ANYTHING FOR YOURSELF WHEN YOU'RE GETTING ALL THE ANSWERS WITHOUT DOING ANY WORK AND THE FACT THAT JAVASCRIPT IS YOUR FUCKING LANGUAGE. <sarcasm>GREAT IDEA, LET'S GET THESE NEW PROGRAMMERS INTO A PROFESSIONAL ENVIRONMENT BY ADDING A DRAG AND DROP CODE (obviously we can say it) EDITOR</sarcasm> MAYBE IF YOU GOT THIS SHIT UP YOUR ASS AND TO YOUR BRAIN YOU'D ACTUALLY GET TO PROGRAMMING IN YOUR ADVANCED AP COURSE. IT'S CALLED FUCKING CODE.ORG FOR A REASON

This rant means YOU if you are one of those people that "fix" their family's computers.

I was visiting my family over the holidays and while I managed to stay away from fixing their computers for the most time, I offered to help my grandfather to update the Garmin navigation device he wanted to gift my father. (They do not use smartphones for navigation, and my father doesn't want "these modern shitty phones".)

When booting up my grandfather's laptop, I realized something odd: Linux Mint boot screen. Wut?

And immediately I said: "It could be impossible to update your navigation device on this laptop."

And true enough, the Garmin Express update software requires either a Windows PC or a Mac; and even though I vaguely hoped it might be possible to upgrade through Linux, I just could not be bothered to find out that day.

What I wondered, though, is why my grandfather of all people ran Linux!?

Don't get me wrong, I use Linux myself on my work machine and I never want to work with something else when coding; yet my grandfather is an end user of the show-me-where-and-what-and-how-often-to-click-kind.

What could he gain by it?

As it turns out, my uncle's computer-nerd friend managed his PC. And my uncle and he decided unanimously that my grandfather had better run Linux. Is it something my grandfather needs? No. BUT IT'S RIGHT! Suck it up! (My father's laptop therefore also runs Linux Mint. So he can't upgrade his new device either.)

This is the ugly kind of entitled nerd-dom I truly detest.

When discussing things further, my grandfather told me that he has had problems with his printer ever since. Under Windows, he knew how to print on the special photo paper. Under Linux, all he can manage is to print on normal paper. Shame, printing photos was the only thing he liked doing on that device. What did my uncle's friend tell him?

"Get a decent printer!"

Fuck that guy.

It's fine if Linux works for you, but before you install it on a PC of a relative, you better make sure it fits their needs! If you have that odd member that only wants to write letters, read emails, use facebook, and wants to play that browser game, feel free to introduce them to Linux.

Yet if they have any special wish, don't stand in their way.

If they want to do something that requires a certain OS, don't just decide for them that their desire is wrong, but help them achieve their goal. If you can't align that with your ideology, then get the fuck out of my way and stop "helping".

For some people, a computer is a device to achieve a certain goal, a job. They only get hindered by your ill-advised attempts at virtue signalling.

3 rants for the price of 1, isn't that a great deal!

1. HP, you braindead fucking morons!!!

So recently I disassembled this HP laptop of mine to unfuck it at the hardware level. Some issues with the hinge that I had to solve. So I had to disassemble not only the bottom of the laptop but also the display panel itself. Turns out that HP - being the certified enganeers they are - made the following fuckups, with probably many more that I didn't even notice yet.

- They used fucking glue to ensure that the bottom of the display frame stays connected to the panel. Cheap solution to what should've been "MAKE A FUCKING DECENT FRAME?!" but a royal pain in the ass to disassemble. Luckily I was careful and didn't damage the panel, but the chance of that happening was most certainly nonzero.

- They connected the ribbon cables for the keyboard in such a way that you have to reach all the way into the spacing between the keyboard and the motherboard to connect the bloody things. And some extra spacing on the ribbon cables to enable servicing with some room for actually connecting the bloody things easily.. as Carlos Mantos would say it - M-m-M, nonoNO!!!

- Oh and let's not forget an old flaw that I noticed ages ago in this turd. The CPU goes straight to 70°C during boot-up but turning on the fan.. again, M-m-M, nonoNO!!! Let's just get the bloody thing to overheat, freeze completely and force the user to power cycle the machine, right? That's gonna be a great way to make them satisfied, RIGHT?! NO MOTHERFUCKERS, AND I WILL DISCONNECT THE DATA LINES OF THIS FUCKING THING TO MAKE IT SPIN ALL THE TIME, AS IT SHOULD!!! Certified fucking braindead abominations of engineers!!!

Oh and not only that, this laptop is outperformed by a Raspberry Pi 3B in performance, thermals, price and product quality.. A FUCKING SINGLE BOARD COMPUTER!!! Isn't that a great joke. Someone here mentioned earlier that HP and Acer seem to have been competing for a long time to make the shittiest products possible, and boy they fucking do. If there's anything that makes both of those shitcompanies remarkable, that'd be it.

2. If I want to conduct a pentest, I don't want to have to relearn the bloody tool!

Recently I did a Burp Suite test to see how the devRant web app logs in, but due to my Burp Suite being the community edition, I couldn't save it. Fucking amazing, thanks PortSwigger! And I couldn't recreate the results anymore due to what I think is a change in the web app. But I'll get back to that later.

So I fired up bettercap (which works at lower network layers and can conduct ARP poisoning and DNS cache poisoning) with the intent to ARP poison my phone and get the results straight from the devRant Android app. I haven't used this tool since around 2017 due to the fact that I kinda lost interest in offensive security. When I fired it up again a few days ago in my PTbox (which is a VM somewhere else on the network) and today again in my newly recovered HP laptop, I noticed that both hosts now have an updated version of bettercap, in which the options completely changed. It's now got different command-line switches and some interactive mode. Needless to say, I have no idea how to use this bloody thing anymore and don't feel like learning it all over again for a single test. Maybe this is why users often dislike changes to the UI, and why some sysadmins refrain from updating their servers? When you have users of any kind, you should at all times honor their installations, give them time to change their individual configurations - tell them that they should! - in other words give them a grace time, and allow for backwards compatibility for as long as feasible.

3. devRant web app!!

As mentioned earlier I tried to scrape the web app's login flow with Burp Suite but every time that I try to log in with its proxy enabled, it doesn't open the login form but instead just makes a GET request to /feed/top/month?login=1 without ever allowing me to actually log in. This happens in both Chromium and Firefox, in Windows and Arch Linux. Clearly this is a change to the web app, and a very undesirable one. Especially considering that the login flow for the API isn't documented anywhere as far as I know.

So, can this update to the web app be rolled back, merged back to an older version of that login flow or can I at least know how I'm supposed to log in to this API in order to be able to start developing my own client?

-

We are moving to kubernetes.

Nothing much has changed except we now get to say

it works on my cluster.

instead of

it works on my machine.

-

Hello again, everyone. As Sunday comes to a close, and Monday is fast approaching, I'll share with you the likely cause of my death by stroke and/or heart attack:

MONDAY MORNING COFFEE OF HORROR

Disclaimer: Do NOT try this. I am a professional addict. I am not responsible for anything this brew from hell causes to you and/or those around you.

So, I wake up, feeling like I haven't slept for days, or just notice the fucking alarm clock shrieking because I pulled an all-nighter.

Step 1: Silence alarm clock via mild violence.

Step 2: Get the coffee machine to brew some filter coffee (espresso works too)

Step 3: Get milk and ice cubes from the fridge (both are needed, I don't care if you don't like milk, trust me)

Step 4: Get 2 spoonfuls of instant coffee (not tea spoon, and actually FULL spoonfuls) into the biggest glass you have

Step 5: Pour just a little of the warm filter coffee into the glass, just to get the instant coffee wet enough, and start mixing, until the result looks like the horror you unleashed in your toilet a few minutes ago (and will do so again in a few)

Step 6: Mix in 25-50 ml milk, just for the aesthetic change of colour of the devil-brew, and to add the necessary amount of lactic acid to react with the coffee to produce chemical X

Step 7: Add ice cubes to taste (if you are new to this, add a lot)

Step 8: Slowly add the filter coffee while mixing furiously, so that the light brown paste at the bottom gets dissolved (it's harder than it sounds)

Now, take a deep breath. Before you is a disgusting brew undergoing a chemical reaction, and your moves need to be precise otherwise it will explode. Note that sugar or any other form of sweetener is FORBIDDEN, as it will block the reaction chain and the result won't be as potent.

Take a straw (a big one, not those needle-like ones that some cafeterias give to fool you into believing that the coffee is more than 150ml). Put it inside the mix, and check that the route to the bathroom is free of obstacles.

Now, clench your abs, close your nose if you are new to this, grab the straw and DRINK!

DRINK LIKE THERE IS NO TOMORROW!

THAT BROWN DEVIL'S BILE WILL HAVE YOUR INTESTINES SPASM AND DANCE THE MACARENA WHILE TWIRLING A HULA HOOP!

YOUR HEART WILL GO OVERDRIVE HARDER THAN YOUR PC'S CPU WHEN COMPILING ON ECLIPSE AND BROWSING WITH IE AT THE SAME TIME.

The combination of caffeine and lactic acid will bring out the perfectly disgusting combination of sour and bitter usually expected in rotting lemons. After you manage to chug it down (DON'T SPILL OR SPIT ANY!) you have 30 - 60 seconds max to run to the porcelain throne, where you will spend the next 30-60 minutes.

After that, nothing can stop you! You will fix bugs, write entire codebases from scratch, punch that annoying coworker, punch that boss! You will be a demigod among mortals for the next 6-8 hours!

Your recipes for Monday morning coffee?

-

tl;dr: Bossmang blaming my code for a database connection issue thrown from outside of my code. Bossmang doesn’t listen. Bossmang doesn’t want to believe it’s a connection issue.

———

Bossmang: The code you wrote is causing insane spec failures in the release branch! It’s hard to follow because it’s so insane, but the cause is your code not properly handling undefined settings! Look at this! <spec>

Me: Specs pass on my machine. I ran it with both a set and nil value. <screenshots>

Bossmang: It works when you set it to nil.

Me: But a setting that doesn’t exist returns nil? <screenshot>

Bossmang: Not seeming to.... So this is the spec failure from the release: “No connection pool with id primary found. <stacktrace that starts outside of my code>”

Me: ... That’s a DB connection error. It’s also being thrown outside of my code, and from a `super` call to Rails.

Bossmang: But <unrelated> and <unrelated> and <other spec> is failing, and if I set the version, it has <other failure> instead! That calls your code first.

Me: It’s a database error. Also: <explains probable, unrelated cause of other failures, like someone didn’t mock a fucking external api call>

Bossmang: But if I restore a DB backup, it fails again.

Me: Restoring uses a DB connection, which could be exhausting the pool depending on the daemons you have running.

Bossmang: perhaps.

...

Bossmang: I still think it’s related to spec ordering.

🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️

This is tiring.

-

more buzzword translations with a story (because the last one was pretty well liked):

"machine learning" -> an actual, smart thing, but you generally don't need any knowledge to use it as they're all libraries now

"a bitcoin" -> literally just a fucking number that everyone has

"powerful" -> it's umm… almost working (seriously i hate this word, it really has a meaning of null)

"hacking" -> watching a friend type in their facebook password with a black hoodie on, of course (courtesy of @GeaRSiX)

"cloud-based service" -> we have an extra commodore 64 and you can use it over the internet for an ever-increasing monthly fee

"analysis" -> two options: "it's not working" or "it's close enough"

"stress-free workplace" -> working from home without pants

now for a short story:

a few days ago in code.org "apscp" class, we learnt about how to do "top down design" (of course, whatever worked for you before was not an option for solving problems). we had to design a game. as the first "step" of "top down design," we had to identify three things we needed to do to make a game.

they were:

1. characters

2. "graphics"

3. "ai"

graphics is literally a png, but what the fuck do you expect for ai?

we have a game right? oh wait! it's getting boring. let's just sprinkle some fucking artificial intelligence on it like i put salt on french fries.

this is complete bullshit.

also, one of my most hated commercials:

https://youtu.be/J1ljxY5nY7w

"iot data and ai from the cloud"

yeah please shut the fuck up

🖕fucking buzzwords

-

Very specific and annoying situation here:

- Working on a machine learning project with other people

- I'm on Linux, they use Windows

- We code in python

- We generally use vscode for development, and its python extension

I implement some basic neural networks with tensorflow, and add a bunch of logging for it. I test it on my machine and it works fine.

But, my group mates report that "after a few seconds the entire client hangs".

Apparently it only happens on Windows?

We start debugging the hell out of the code I implemented, add 20 log messages and sit there for a solid hour.

Until I make one very odd realization: the issue doesn't happen when I run the script in my terminal instead of vscode with the debugger. So I try different debug settings; using an external terminal instead of vscode's built-in debug console seems to fix it too.

And I make another observation: In the debug console, some messages don't seem to appear at all, while the external terminal shows them just fine.

So it turns out that printing an epsilon character, “ε” (U+03B5), causes the entire thing to hang.
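A possible stopgap, sketched in Python since that's what the project uses: escape every non-ASCII character before it reaches the console. The helper name `ascii_safe` is made up for illustration, and this only sidesteps the hang by never sending “ε” to the debug console; it does nothing about the underlying bug.

```python
def ascii_safe(message: str) -> str:
    """Replace every non-ASCII character with a backslash escape."""
    # "backslashreplace" turns characters that ASCII cannot encode
    # into escapes such as \u03b5 instead of raising an error.
    return message.encode("ascii", errors="backslashreplace").decode("ascii")


# Hypothetical log line containing the offending character.
print(ascii_safe("ε = 0.1"))
```

The same function could be applied inside a logging formatter so that individual log calls don't have to change.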

It's the year 2020 and somehow we still can't do unicode.

I'm so done, what on earth.

-

Worst fight I've had with a co-worker?

Had my share of 'disagreements', but one that seemed like it could have gone to blows was a developer, 'T', that tried to man-splain me how ADO.Net worked with SQLServer.

<T walks into our work area>

T: "Your solution is going to cause a lot of problems in SQLServer"

Me: "No, it's not, your solution is worse. For performance, it's better to use ADO.Net connection pooling."

T: "NO! Every single transaction is atomic! SQLServer will prioritize the operation thread, making the whole transaction faster than what you're trying to do."

<T goes on and on about threads, made up nonsense about priority queues, on and on>

Me: "No it won't, unless you change something in the connection string, ADO.Net will utilize connection pooling and use the same SPID, even if you explicitly call Close() on the connection. You are just wasting code thinking that works."

T walks over, stands over me (he's about 6'5", 300+ pounds), maybe 6 inches away

T: "I've been doing .net development for over 10 years. I know what I'm doing!"

I turn my chair to face him, look up, cross my arms.

Me: "I know I'm kinda new to this, but let me show you something ..."

<I threw together a C# console app, simple connect, get some data, close the connection>

Me: "I'll fire up SQLProfiler and we can see the actual connection SPID and when sql server closes the SPID....see....the connection to SQLServer still has an active SPID after I called Close. When I exit the application, SQLServer will drop the SPID....tada...see?"

T: "Wha...what is that...SQLProfiler? Is that some kind of hacking tool? DBAs should know about that!"

Me: "It's part of the SQLServer client tools, it's on everyone's machine, including yours."

T: "Doesn't prove a damn thing! I'm going to do my own experiment and prove my solution works."

Me: "Look forward to seeing what you come up with ... and you haven't been doing .net for 10 years. I was part of the team that reviewed your resume when you were hired. You're going to have to try that on someone else."
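The pooling behavior argued about above can be illustrated with a toy sketch. This is plain Python, not ADO.Net, and `FakeConnection` and `ToyPool` are invented names. It only shows the general idea that "closing" a pooled connection hands the underlying session back for reuse instead of tearing it down.

```python
class FakeConnection:
    """Stand-in for a server session (hypothetical, not a real driver)."""

    _next_id = 0

    def __init__(self):
        FakeConnection._next_id += 1
        self.session_id = FakeConnection._next_id


class ToyPool:
    """Keeps closed connections around so later opens can reuse them."""

    def __init__(self):
        self._idle = []

    def open(self):
        # Reuse an idle session if one exists, otherwise create a new one.
        return self._idle.pop() if self._idle else FakeConnection()

    def close(self, conn):
        # "Closing" only returns the session to the pool; it stays alive.
        self._idle.append(conn)


pool = ToyPool()
first = pool.open()
pool.close(first)
second = pool.open()
print(first.session_id == second.session_id)
```

A real pool also has to deal with timeouts, maximum sizes and broken sessions, which this sketch leaves out.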

About 10 seconds later I hear him from across the room slam his keyboard on his desk.

100% sure he would have kicked my ass, but that day I let him know his bully tactics worked on some, but wouldn't work on me.

-

Company website with image paths relative to my localhost/

....

so everything works only on my machine.

the client and PM send me emails to fix the site, but when I open the URL everything works correctly.

after 5 days the PM attaches an image .. at that moment I was like 😖😖😖😖😖😖

-

Be me, new dev on a team. Taking a look through source code to get up to speed.

Dev: **thinking to self** why is there no package lock.. let me bring this up to boss man

Dev: hey boss man, you’ve got no package lock, did we forget to commit it?

Manager: no I don’t like package locks.

Dev: ...why?

Manager: they fuck up computer. The project never ran with a package lock.

Dev: ..how will you make sure that every dev has the same packages while developing?

Manager: don’t worry, I’ve done this before, we haven’t had any issues.

**a couple of weeks go by**

Dev: pushes code

Manager: hey your feature is not working on my machine

Dev: it’s working on mine, and the dev servers. Let’s take a look and see

**finds out he deletes his package lock every time he does npm install, so therefore he literally has the latest of like 50 packages with no testing**

Dev: well you see you have some packages here that updated and broke some of the features.

Manager: >=|, fix it.

Dev: commit a working package lock so we’re all on the same versions.

Manager: just set the package version to whatever works.

Dev: okay

**more weeks go by**

Manager: why are we having so many issues between devs, why are things working on some computers and not others??? We can’t be having this it’s wasting time.

Dev: **takes a look at everyone’s packages** we all have different packages.

Manager: that’s it, no one can use Mac computers. You must use these windows computers, and you must install npm v6.0 and node v15.11. Everyone must have the same system and software install to guarantee we’re all on the same page

Dev: so can we also commit package lock so we’re all having the same packages as well?

Manager: No, package locks don’t work.

**few days go by**

Manager: GUYS WHY IS THE CODE DEPLOYING TO PRODUCTION NOT WORKING. IT WAS WORKING IN DEV

DEV: **looks at packages**, when the project was built on dev on 9/1 package x was on version 1.1, when it was approved and moved to prod on 9/3 package x was now on version 1.2 which was a change that broke our code.

Manager: CHANGE THE DEPLOYMENT SCRIPTS THEN. MAKE PROD RSYNC NODE_MODULES WITH DEV

Dev: okay

Manager: just trust me, I’ve been doing this for years
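For what it's worth, the drift in this story is easy to make visible. Below is a rough Python sketch that compares the installed versions reported by several environments and lists the packages that disagree. The function name `find_drift` and the input shape are assumptions for illustration, and the sample data just mirrors the "package x" example from the story. It does not read real npm files.

```python
def find_drift(machines: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """Return the packages whose installed version differs between machines."""
    all_packages = set()
    for versions in machines.values():
        all_packages.update(versions)

    drift = {}
    for package in sorted(all_packages):
        # Record what each machine has, marking absent packages explicitly.
        seen = {name: versions.get(package, "missing")
                for name, versions in machines.items()}
        if len(set(seen.values())) > 1:
            drift[package] = seen
    return drift


# Hypothetical data: same project, built on two different days.
installed = {
    "dev": {"package-x": "1.1", "package-y": "2.0"},
    "prod": {"package-x": "1.2", "package-y": "2.0"},
}
print(find_drift(installed))
```

A committed lock file makes a check like this unnecessary, because every install resolves to the same recorded versions.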

Who the fuck put this man in charge?

-

I did it: I built up another PC identical to my machine (https://devrant.com/rants/2923002/...) for my SO and installed Linux Mint for her, too. That had been my primary motive for an easy and stable distro in the first place.

Now that didn't come out of the blue. We were discussing the end of Win 7 already two years ago where I brought up my concerns with Win 10 - mainly the forced, lousy updates and the integrated spyware, and that I was considering Linux as a way out.

I had expected quite some pushback because she had been exclusively on Windows since the 90s. However, I didn't sell Linux as an upgrade. It's just that Win 7 is over, progress under Windows as well, and we're in damage control mode. Went down pretty well.

Fast forward three weeks - remember, first time Linux user and no IT-geek:

- it just works, including web, videos, and music.

- she likes Cinnamon.

- nice desktop themes.

- Redshift is as good as f.lux.

- software installation is just like an app store.

- updates work via an easy tray icon.

- quote: "Linux is great!"

- given this alternative, she doesn't understand why people willingly put up with Win 10.

- no drive letters: already forgotten.

- popcorn for upcoming Win 10 disaster stories.

- why do Windows updates take that long?

- why does Windows need to reboot for every update?

- why does Windows hang in that update boot screen for so long?

I'm impressed that Linux has come so far that it's suitable for end users. Next in line is her father who wants to try Linux, but that will be a story for tomorrow.

-

Hey look, npm broke my project again. Surprise!

Code and dependencies on my local machine, all untouched for a couple of weeks, no longer work. I've no idea how it even managed that.

Oh, and `npm update` crashes.

eventually solved by upgrading npm and running `npm update --depth 500` because some arbitrary child dependencies changed without updating the parent packages, ofc. on my local machine. without me having run `npm update` for about a month.

because of course that makes sense.

Second time in two months, too.

isn't npm great?

-

My work laptop (windows) updated yesterday. Today my monitors keep flickering, hanging, and going black for a few seconds then come back with an error that my display drivers crashed. Since I have basically zero access to anything admin on this machine, I put in a help desk ticket with all the details, the error message, even screen shots which took forever to get because of all the crashes.

They finally respond after about an hour, and tell me that my computer does not support 3 screens so I will have to use 2, and that is what is causing the crash. Well I have been using 3 screens with this computer since I started there in 2014, and it has worked perfectly until the update, so I asked if they could revert the update.

He told me that they could not revert it, and not only that, but I couldn't have been using 3 monitors before because the computer doesn't support it and never has. REALLY??? I just freaking told you I have been doing that for over 3 years so obviously it does support it you deaf, stupid retard. Try using your brain for 2 seconds and work on a solution instead of calling me a liar and dismissing my issue without thought.

After going back and forth for about 5 minutes I gave up and hung up. Finally I fixed it by switching out my docking station with another one I found laying around. Not sure why that worked, but I'm back to working on all 3 monitors. I called the guy back to tell him it's working and sent a picture of my setup, his response: "Well I don't know why that works because your laptop is too old to support that."

Useless...

-

If you write a tutorial or a book with code samples please take the time to ensure that (a) you cover everything that is needed to get your samples to work properly and (b) that your samples actually work.

It is frustrating the bloody hell out of me typing your code character by character into my machine just to have my compiler screaming at me.

On that note: just wasted a week on rewriting a whole bloody library that was "broken" just to discover that the library works just fine but the freaking tutorial on the very page was faulty.

-

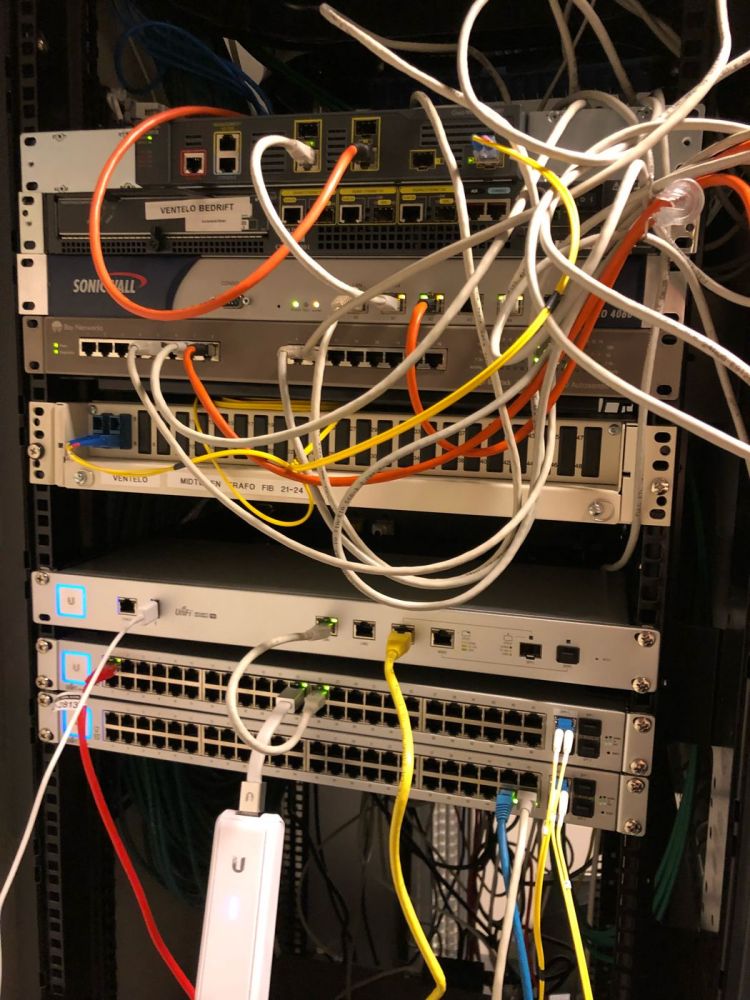

I’ve started the process of setting up the new network at work. We got a 1Gbit fibre connection.

Plan was simple, move all cables from old switch to new switch. I wish it was that easy.

The imbecile of an IT guy at work has set everything up in such a complex and unnecessarily stupid way that I’m baffled.

We got 5 older MacPros, all running MacOS Server, but they only have one service running on them.

Then we got 2x xserve raid where some external NAS enclosures and another mac are mounted. Both xserve raids have to be running and connected to the main macpro, which combines all this into a few different volumes.

Everything got a static public IP (we got a /24 block), even the workstations. The only thing that doesn’t get one IP per machine is the guest network.

The firewall is basically set to have all ports open, allowing for easy sniffing of what services we’re running.

The “dmz” is just a /29 of our ip range, no firewall rules so the servers in the dmz can access everything in our network.

Back to the xserve, it’s accessible from the outside so employees can work from home, even though no one does it. I asked our IT guy why he hadn’t set up a VPN. His explanation was first that he didn’t manage to set it up, then he said a VPN is something hackers use to hide who they are.

I’m baffled by this imbecile of an IT guy, one problem is he only works there 25% of the time because of some health issues. So when one of the NAS enclosures didn’t mount after a power outage, he wasn’t at work, and took the whole day to reply to my messages about logins to the xserve.

I can’t wait till I get my order from fs.com with new patching equipment and tonnes of cables, and once I can merge all storage devices into one large SAN. It’ll be such a good work experience.

-

I'm a DevOps engineer. It's my job to understand why this type of shit is broken, and when I finally figure it out, I get so mad at bullish players like AWS.

It's simple. Install Python3 from apt.

`apt-get update && apt-get install -y python3-dev`

I've done this thousands of times, and it just works.

Docker? Yup.

AWS AMI? Yup.

Automation? Nope.

WTF? Let's waste 2.5 hours and figure out why this morning.

In docker: `apt-cache policy python3-dev` shows us:

python3-dev:

http://archive.ubuntu.com/ubuntu focal/main amd64 Packages

But in AWS instance, we see we're reading from "http://us-east-1.ec2.archive.ubuntu.com/... focal/main" instead!

Ah, but why does it fail? AWS is just using a mirror, right? Not quite.

When the automation script is running, it's beating AWS to the apt mirror update! My instance, running on AWS is trying to access the same archive.ubuntu.com that the Docker container tried to use. "python3-dev" was not a candidate for installation! WTF Amazon? Shouldn't that just work, even if I'm not using your mirror?

So I try again, and again, and again. It works, on average, 1 out of every 5 times. I'm assuming this means we're seeing some strange shit configuration between EC2 racks where some are configured to redirect archive.ubuntu.com to the ec2 mirror, and others are configured to block. I haven't dug this far into the issue yet, because by the time I can SSH into the machine after automation, the apt list has already received its blessed update from EC2.

Now I have to build a graceful delay into my automation while I wait for AWS to mangle, I mean "fix up" my apt sources list to their whim.
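One shape such a delay could take, as a rough Python sketch: poll a condition with a timeout instead of sleeping for a fixed time. `wait_until` and its parameters are invented names, and the demo uses a fake check so it runs anywhere. What the real check should test (for example, whether `python3-dev` has an install candidate yet) is left open here.

```python
import time


def wait_until(check, timeout=120.0, interval=2.0,
               sleep=time.sleep, clock=time.monotonic):
    """Poll check() until it returns True or `timeout` seconds have passed.

    Returns True if the condition was met, False if the wait timed out.
    """
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


# Demo with a fake condition that becomes true on the third poll.
attempts = {"count": 0}


def fake_check():
    attempts["count"] += 1
    return attempts["count"] >= 3


print(wait_until(fake_check, timeout=10, interval=0))
```

Unlike a bare sleep, this returns as soon as the condition holds and reports a timeout explicitly, so the automation can fail loudly instead of carrying on with a broken package list.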

After completely blowing my allotted time on this task, I just shipped a "sleep" statement in my code. I feel so dirty. I'm going to go brew some more coffee to be okay with my life. Then figure out a proper wait statement.

-

> pic related

This is what I've been putting up with on my personal machine for months.

tl;dr: Suggestions for a wlan card/adapter for Debian9? My current RealTek wnic is barely functional, and my replacement (a TPLink... something) is completely incompatible.

I don't need anything super fancy, though I would ofc love support for AC/AD if at all possible.

I don't care (too much) about price, since I'm only going to buy one and very likely won't replace it for years.

------

I'm running Debian9 and have a RealTek card. Even when it's not arbitrarily dropping packets like in the screenie, it randomly caches them for up to 90 seconds and dumps them hilariously out of order. I can't play games like that. I can barely even browse devRant. Steam goes offline about once every 30 seconds, and therefore spams all of my friends with online/offline notifications. Streaming "works" on good days. Git works fine, however, so most days I don't notice the connection issues.

And yes, I'm using a community-patched driver (rtl8188ce) that's supposed to fix some of RealTek's more major screwups and increase the transmit power by ~20x. The driver helps, but only a little.

I've done some research on wlan linux support, but haven't found anything very reassuring. Mostly just forum posts saying things like "Intel cards usually work fine!" I don't want to gamble. I just want to buy a card that will work and be done with it. :(

Suggestions? Insight?

-

"I can't replicate it therefore your hotfix for the customer shouting at you is unnecessary"

WTF?! I had to lead this guy to the records where I'd replicated it myself in both the customer system and the demo one! There's a real sense that the core dev team in this place automatically disregards what the rest of us say (support had already mentioned it was replicable but clearly hadn't realised that they needed to spoon-feed this guy).

This place has a huge silo problem, glad I'm not staying much longer...

edit: these tags shouldn't be reordering themselves, not cool

-

Okay you bastards ya got me: I fucking enjoy using Linux as my dev environment.

There, I SAID IT -

BUT DON'T THINK FOR A SECOND IT MEANS THAT I STOPPED HATING IT

Oh the fucking love hate relationship to fucking Linux.

"Hey, ihatecomputers! How many hours per year did you spend fixing internet connectivity issues on Windows?" you ask. Well, close to fucking 0 you goddamned imbecile. But on Linux? I don't even want to talk about it.

And what about that time when I wanted to connect my bluetooth headphones so I could listen to music while studying? Well, by the time my headphones were connected to my machine (usually a one second operation) I had no time left for, you know, actual studying. Oh my god, it's the most trivial fucking thing.

Well, at least that particular issue got solved.

Unlike that fucking Ethernet connection which has been fucking out of commission since I started using fucking Linux. Wifi works just well enough to make it not worth pouring more time into troubleshooting that shit, but just barely though because my wifi IS FUCKING DOGSHIT ON LINUX

...

But fuck me if it isn't the leanest thing ever! It's the goddamned opposite of bloated. So smooth and snappy. And free as in slurred speech, or whatever. It makes me happy. When I'm not seething with rage, that is.

Yeah I guess that's it, thanks for tuning in.

~ihatecomputers

-

Everyone was a noob once. I am the first to tell that to everyone. But there are limits.

Where I work we got a new colleague, fresh from college, who claims to have extensive knowledge of Ansible and to know his way around a Linux system.... Or so he claims.

I desperately need some automation reinforcements since the project requires a lot of work to be done.

I have given a half day training on how to develop, starting from ssh keys setup and local machine, the project directory layout, the components, the designs, the scripts, everything...

I ask "Do you understand this?"

"Yes, I understand. " Was the reply.

I give a very simple task really. Just adapt get_url tasks in such a way that it accepts headers, of any kind.

It's literally a one line job.

A week passes by, today is "deadline".

Nothing works, guy confuses roles with playbooks, hardcodes secrets in roles, does not create inventory files for specifications, no playbooks, does everything on the testing machine itself, abuses SSH Keys from the Controller node.... It's a fucking ga-mess.

Clearly he does not understand at all what he is doing.

Today he comes "sorry but I cannot finish it"

"Why not?" I ask.

"I get this error" sends a fucking screenshot. I see the fucking disaster setup in one shot ...

"You totally have not done the things like I taught you. Where are your commits and what are your branch names?"

"Euuuh I don't have any"

Saywhatnow.jpeg

I get frustrated, but nonetheless I re-explain everything from top to bottom! I actually give him a working example of what he should do!

Me: "Do you understand now?"

Colleague: "Yes, I do understand now?"

Me: "Are you sure you understand now?"

C: "yes I do"

Proceeds to do fucking shit all...

WHY FUCKING LIE ABOUT THE THINGS YOU DONT UNDERSTAND??? WHAT KIND OF COGNITIVE MALFUNCTION IS HAPPENING IN YOUR HEAD THAT EVEN GIVEN A WORKING EXAMPLE YOU CANT REPLICATE???

WHY APPLY FOR A FUCKING JOB AND LIE ABOUT YOUR COMPETENCES WHEN YOU DON'T EVEN GET THE FUCKING BASICS!?!?

WHY WASTE MY FUCKING TIME?!?!?!

Told my "dear team leader" (see previous rants) that it's not okay to lie about that, we desperately need capable people and he does not seem to be one of them.

"Sorry about that NeatNerdPrime but be patient, he is still a junior"

YOU FUCKING HIRED THAT PERSON WITH FULL KNOWLEDGE ABOUT HIS RESUME AND ACCEPTED HIS WORDS AT FACE VALUE WITHOUT EVEN A PROPER TECHNICAL TEST. YOU PROMISED HE WAS CAPABLE AND HE IS FUCKING NOT, FUCK YOU AND YOUR PEOPLE MANAGEMENT SKILLS, YOU ALREADY FAIL AT THE START.

FUCK THIS. I WILL SLACK OFF TODAY BECAUSE WITHOUT ME THIS TEAM AND THIS PROJECT JUST CRUMBLES DOWN DUE TO SHEER INCOMPETENCE.

-

someone pushes code and breaks ci.

me: you broke the build

her: (ignoring the explicit error message) it works on my machine, travis is broken

me: it doesn't even work on my machine!

her: I forgot to push one file, sorry.

-

Ok friends let's try to compile Flownet2 with Torch. It's made by NVIDIA themselves so there won't be any problem at all with dependencies right?????? /s

Let's use Deep Learning AMI with a K80 on AWS, totally updated and ready to go super great always works with everything else.

> CUDA error

> CuDNN version mismatch

> CUDA versions overwrite

> Library paths not updated ever

> Torch 0.4.1 doesn't work so have to go back to Torch 0.4

> Flownet doesn't compile, get bunch of CUDA errors piece of shit code

> online forums have lots of questions and 0 answers

> Decide to skip straight to vid2vid

> More cuda errors

> Can't compile the fucking 2d kernel

> Through some act of God reinstalling cuda and CuDNN, manage to finally compile Flownet2

> Try running

> "Kernel image" error

> excusemewhatthefuck.jpg

> Try without a label map because fuck it the instructions and flags they gave are basically guaranteed not to work, it's fucking Nvidia amirite

> Enormous fucking CUDA error and Torch error, makes no sense, online no one agrees and 0 answers again

> Try again but this time on a clean machine

> Still no go

> Last resort, use the docker image they themselves provided of flownet

> Same fucking error

> While in the process of debugging, realize my training image set is also bound to have bad results because "directly concatenating" images together as they claim in the paper actually has horrible results, and the network doesn't accept 6 channel input no matter what, so the only way to get around this is to make 2 images (3 * 2 = 6 quick maths)

> Fix my training data, fuck Nvidia dude who gave me wrong info

> Try again

> Same fucking errors

> Doesn't give any helpful information, just spits out a bunch of fucking memory addresses and long function names from the CUDA core

> Try reinstalling and then making a basic torch network, works perfectly fine

> FINALLY.png

> Setup vid2vid and flownet again

> SAME FUCKING ERROR

> Try to build the entire network in tensorflow

> CUDA error

> CuDNN version mismatch

> Doesn't work with TF

> HAVE TO FUCKING DOWNGRADE DRIVERS TOO

> TF doesn't support latest cuda because no one in the ML community can be bothered to support anything other than their own machine

> After setting up everything again, realize have no space left on 75gb machine

> Try torch again, hoping that the entire change will fix things

At this point I'll leave a space so you can try to guess what happened next before seeing the result.

Ready?

3

2

1

> SAME FUCKING ERROR

In conclusion, NVIDIA is a fucking piece of shit that can't make their own libraries compatible with themselves, and can't be fucked to write instructions that actually work.

If anyone has vid2vid working or has gotten around the kernel image error for AWS K80s please throw me a lifeline, in exchange you can have my soul or what little is left of it.

-

Today a junior dev at the company where I'm working as a consultant suddenly shouted:

😤"why the hell does my software behave differently on every PC here in the office ... But it works on my machine? I'm sure there's something wrong with the OS/Framework"

🤔 let me think for a moment ...

* is it because the whole office keeps developing like the ancient Romans did?

* is it because that software is such a mess that it requires a wizard to manually change all the magic configuration strings?

* is it because every damn developer there has their own particular environment and the word "container" only reminds you of the show where people bid for unclaimed shit?

* is it because the "guru" at your company decided it was a super cool idea to wrap EVERY single external library (that just works out of the box) into some obscure static helper without even a single trace of documentation and clue of what's wrong?

🤗"I don't know... Must be a bug in the OS or framework for sure"

-

tired of my tor browser not letting me do my job (still configuring settings, but it's taking too long and i'm getting tired of it), and chrome doesn't show my mouse pointer (which works everywhere else on my machine).

Time to go back to firefox. downloading nightly this time, though. thoughts on it? any default settings I should change for security or to make it better in general?

-

I was asked to look into a site I haven't actively developed for about 3-4 years. It should be a simple side-gig.

I was told this site has been actively developed by the person who came after me, and this person had a few other people help out as well.

The most daunting task in my head was to go through their changes and see why stuff is broken (I was told functionality had been removed, things were changed for the worse, etc etc).

I ssh into the machine and it works. For SOME reason I still have access, which is a good thing since there's literally nobody to ask for access at the moment.

I cd into the project, do a git remote get-url origin to see if they've changed the repo location. Doesn't work. There is no origin. It's "upstream" now. Ok, no biggie. git remote get-url upstream. Repo is still there. Good.

Just to check, see if there's anything untracked with git status. Nothing. Good.

What was the last thing that was worked on? git log --all --decorate --oneline --graph. Wait... Something about the commit message seems familiar. git log. .... This is *my* last commit message. The hell?

I open the repo in the browser, login with some credentials my browser had saved (again, good because I have no clue about the password). Repo hasn't gotten a commit since mine. That can't be right.

Check branches. Oh....Like a dozen new branches. Lots of commits with text that is really not helpful at all. Looks like they were trying to set up a pipeline and testing it out over and over again.

A lot of other changes including the deletion of a database config and schema changes. 0 tests. Doesn't seem like these changes were ever in production.

...

At least I don't have to rack my head trying to understand someone else's code but.... I might just have to throw everything that was done into the garbage. I'm not gonna be the one to push all these changes I don't know about to prod and see what breaks and what doesn't break.

I feel bad for whoever worked on the codebase after me, because all their changes are now just a waste of time and space that will never be used.

-

Taking a database class, prof insists on using Microsoft SQL Server 2014. "Okay cool" said the Microsoft Surface fanboy inside me as I installed it. "Holy shit this is using 6 fucking GBs?? Eh it's okay I trust" again said my Microsoft fanboy self. Finished installing, made some queries and it works. Cool.

Go to run SQL Server again the next day and get an error (nothing displayed, just a box that pops up and then a crash). I use some Google skills, change a bunch of shit and still it persists. "Just uninstall it and reinstall again" says my prof. I do so, except I get random errors during installation saying SQL already exists even though I just uninstalled it. "Maybe it's some registry keys messing with it!" I do some digging, remove unneeded registry keys and try again. Installation finishes but a whack of features say they failed to install.

I sit and try to work this shit out for the next four hours (not paying attention to my class) and still can't get Sql to completely uninstall itself. I try iobit uninstaller, command line uninstalling, fucking everything but still not working. Slowly my fanboy side is wishing that the windows symbol on the back of my machine was an apple.

I ended up having to back up all my files and reinstall Windows to get it working properly. Holy sweet fuck. The worst part is when this class is done I'll probably need to reinstall yet again to save the 6 GB it's sucking up. So if you're not sure whether you need something as heavy as Microsoft SQL Server or not for your application, don't use it! It's a fucking virus that is super difficult to remove.

Tldr: lifelong Microsoft fanboy becomes Apple convert in a day of using Microsoft SQL Server.

-

Source code works on my local machine, even when I present it to the relevant users; nobody panics, it's all part of the plan. Place it on the server and it does not work AND EVERYONE LOSES THEIR MIND!

-

Raging here, overheating really. One spends thousands on technology that is promoted with the catch phrase "it just works", yet here I am, after updating my fancy new emoji maker (iPhone X) to 11.2, attempting to carry on working by compiling my code to test some new features. And...

Oh, what's this, Xcode? You have a problem? You can't locate something? You can't locate iOS 11.2 (15C114)... sorry, and you think that this "may not" be supported in the current version of Xcode?

Let me get this straight, you advanced piece of technological wizardry: you know you are missing something, you in fact know what it is, you can actually TELL me what is missing and yet, still, in 2017, you can't go FETCH it?????

Really? All you can do is sit, with that stupid look on your face, and watch the paint dry? You're stuck? That's it?

I hate you for the false pretense of advanced capability. and for your lack of a consistent dark theme so my eyes stop bleeding when reading your "I don't know what to do" messages...

By the way, maybe you can stop randomly crashing or pinwheeling. I get that you're bored as a machine designed to crunch numbers/data/code all day long and that for fun you feel you have to add some color to your subsistence. But stop it. Do what I'm told you can do, "JUST WORK" for once without me having to drag you forward kicking and screaming.

K. That feels better. Now for some whiskey.

-

As DevOps it’s my duty to make sure these excuses no longer exist. Software should just work everywhere all the time

-

I wrote an important test-case for this complicated/hard-to-understand program and I can proudly say "it doesn't work on my machine". On other machines it works as expected.

Should I really investigate this or focus on other tasks?

-

Buffer usage for simple file operation in python.

What the code "should" have done was, I think, open or write a stream with a specific buffer size.

Buffer size should be specific, as it was a stream of a multiple gigabyte file over a direct interlink network connection.

Which should have sped things up tremendously, due to fewer syscalls and the machine having beefy resources for a large buffer.

So far the theory.

In practice, the devs made one very very very very very very very very stupid error.

They used dicts for configurations... With extremely bad naming.

configuration = {}

buffer_size = configuration.get("buffering", int(DEFAULT_BUFFERING))

You might immediately guess what has happened here.

DEFAULT_BUFFERING was set to True, which int() turns into 1.

Yeah. Writing in 1-byte chunks is enormously slow, as the system is basically bombing itself with a syscall for every single byte.

Kinda obvious when you look at it in the raw pure form.
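To make the failure mode concrete, here is a minimal sketch. It assumes the value ends up as the chunk size of a plain read/write loop; the original code isn't shown, so `copy_stream` and the in-memory streams are made up for illustration. It just counts how many write calls each chunk size causes.

```python
import io

DEFAULT_BUFFERING = True  # per the rant: a boolean flag, not a size

configuration = {}  # the "buffering" key is missing, so the default kicks in

# int(True) == 1, so the "buffer size" silently becomes one byte
buffer_size = configuration.get("buffering", int(DEFAULT_BUFFERING))


def copy_stream(src, dst, chunk_size):
    """Hypothetical helper: copy src to dst in chunk_size pieces, return the write count."""
    writes = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        dst.write(chunk)
        writes += 1
    return writes


payload = b"x" * 100_000
slow_writes = copy_stream(io.BytesIO(payload), io.BytesIO(), buffer_size)
fast_writes = copy_stream(io.BytesIO(payload), io.BytesIO(), 64 * 1024)
print(buffer_size, slow_writes, fast_writes)
```

With a real unbuffered file or socket, every one of those writes would be its own syscall, which is where a multi-gigabyte transfer goes to die.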

But I guess you can imagine how configuration actually looked....

Wild. Pretty wild. It was the main dict, hard coded, I think 200 entries plus and of course it looked like my toilet after having a spicy food evening and eating too much....

What's even worse is that no one made the connection to the buffer size.

This simple and trivial thing entertained us for 2-3 weeks because *drumrolls please* none of the devs tested with large files.

So as usual there was the deployment and then the "sudden miraculous it works totally slow, must be admin / IT fault" game.

At some point it landed on my desk, as pretty much everyone who had to deal with it was confused and angry, for understandable reasons (blame game).

It took me and the admin / devs then a few days to track it down, as we really started at the entirely wrong end of the problem, the network...

So much joy for such a stupid thing.

-

Running a fucking conda environment on windows (an updated environment from the previous one that I normally use) gets to be a fucking pain in the fucking ass for no fucking reason.

First: Generate a new conda environment, for FUCKING SHITS AND GIGGLES, DO NOT SPECIFY THE PYTHON VERSION, just to see compatibility, this was an experiment, expected to fail.

Install tensorflow on said environment: It does not fucking work, not detecting cuda, the only requirement? To have the cuda dependencies installed, modified, and inside of the system path, check done, it works on 4 other fucking environments, so why not this one.

Still doesn't work, google around and found some thread on github (the errors) that has a way to fix it, do it that way, fucking magic, shit is fixed.

Very well, tensorflow is installed and detecting cuda, no biggie. HAD TO SWITCH TO PYTHON 3.8 BECAUSE 3.9 WAS GIVING ISSUES FOR SOME UNKNOWN FUCKING REASON

Ok no problem, done.

Install jupyter lab, which works in all 4 other environments. Guess what: a fuckload of errors upon executing the import of tensorflow. They go on a loop that does not fucking end.

The error: imPoRT eRrOr thE Dll waS noT loAdeD

Ok, fucking which one? who fucking knows.
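Since the error doesn't name the DLL, one rough way to narrow it down is to check which of the usual suspects are visible at all. This is only a sketch under two assumptions that may not hold here: that the missing file is one of the CUDA libraries, and that it is expected to be found through PATH. The name patterns and the `find_dlls` helper are illustrative, not something tensorflow provides.

```python
import fnmatch
import os

# Assumed name patterns for CUDA-related DLLs on Windows; adjust to your setup.
DLL_PATTERNS = ["cudart64_*.dll", "cudnn64_*.dll", "cublas64_*.dll"]


def find_dlls(patterns, search_path=None):
    """Map each pattern to the matching files found in the search-path directories."""
    if search_path is None:
        search_path = os.environ.get("PATH", "")
    found = {pattern: [] for pattern in patterns}
    for directory in search_path.split(os.pathsep):
        if not directory or not os.path.isdir(directory):
            continue
        try:
            names = os.listdir(directory)
        except OSError:
            continue  # unreadable directory, skip it
        for name in names:
            for pattern in patterns:
                if fnmatch.fnmatch(name.lower(), pattern):
                    found[pattern].append(os.path.join(directory, name))
    return found


if __name__ == "__main__":
    for pattern, hits in find_dlls(DLL_PATTERNS).items():
        print(pattern, "->", hits or "NOT FOUND on PATH")
```

Run it from inside the broken environment and from one of the 4 working ones, then diff the output; a pattern that shows up in one and not the other is at least a lead.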

I FUCKING HATE that the main language for this fucking bullshit is python. I get the benefits of the REPL, I do, but the python REPL is fucking HORSESHIT compared to the one you get on: Lisp, Ruby and fucking even NODE in which error messages are still more fucking intelligent than those of fucking bullshit ass Python.

Personally? I am betting on Julia devising a smarter environment, it is a better language already, on a second note: If you are worried about A.I taking your job, don't, it requires a team of fucktards working around common basic system administration tasks to get this bullshit running in the first place.

My dream? Julia or Scala (fuck you) for a primary language in machine learning and AI, in which entire environments, with aaaaaaaaaall of the required dlls and dependencies, can be downloaded, installed and then just fucking run. A single directory structure in which shit just fucking works (reason why I like live environments like Smalltalk, but fuck you on that too) and just run your projects from there, without setting a bunch of bullshit from environment variables, cuda dlls installation phases and what not. Something that JUST FUCKING WORKS.

I.....fucking.....HATE the level of system administration required to run fucking anything nowadays, the reason why we had to create shit like devops jobs, for the sad fuckers that have to figure out environment configurations on a box just to run software.

Fuck me man, development turned to shit; this is why go mod, node npm and php composer, with their strict folder structure pipelines, were created. Bitch all you want about npm, but if I could create a node_modules setup with all of the required dlls to run a project, even if this bitch weighs 2.5 GB for a project structure, you bet your fucking ass that I would.