
Search - "data storage"


Dear people who complain about spending a whole night hunting for a tiny syntax error: every time I read one of your rants, I feel like a part of me dies.

As a developer, your job is to create elegant optimized rivers of data, to puzzle with interesting algorithmic problems, to craft beautiful mappings from user input to computer storage and back.

You should strive to write code like a Michelangelo, not like a house painter.

You're arguing about indentation or getting annoyed by a project with braces on the same line as the method name. You're struggling with semicolons, misplaced braces or wrongly spelled keywords.

You're bitching about the medium of your paint, about the hardness of the marble -- when you should be lamenting the absence of your muse or the struggle to capture the essence of elegance in your work.

In other words:

Fix your fucking mindset, and fix your fucking tools. Don't fucking rant about your tabs and spaces. Stop fucking screaming about how your bloated Swiss-army-knife text editor is soooo much better than a purpose-built IDE, if it fails to draw something red and obnoxious around your fuck-ups.

Thanks.

As a developer, sometimes you hammer away on some useless solo side project for a few weeks. Maybe a small game, a web interface for your home-built storage server, or an app to turn your living room lights on and off.

I often see these posts and graphs here about motivation, about a desire to conceive perfection. You want to create a self-hosted Spotify clone "but better", or you set out to make the best todo app for iOS ever written.

These rants and memes often highlight how you start with this incredible drive, how your code is perfectly clean when you begin. Then it all oscillates between states of panic and surprise, sweat, tears and euphoria, and ends in a disillusioned stare at the tangled mess you created, left to gather dust forever in some private repository.

Writing a physics engine from scratch was harder than you expected. You needed a lot of ugly code to get your admin panel working in Safari. Some other shiny idea came along, and you decided to bite, even though you feel a burning guilt about the ever growing pile of unfinished failures.

All I want to say is:

No time was lost.

This is how senior developers are born. You strengthen your brain, the calluses on your mind provide you with perseverance to solve problems. Even if (no, *especially* if) you gave up on your project.

Eventually, giving up is good: it's a sign of the wisdom and flexibility to focus on the broader domain again.

One of the things I love about failures is how varied they tend to be, how they force you to start seeing overarching patterns.

You don't notice the things you take away from your failures; they stick to you, undetected.

You get intuitions for strengths and weaknesses in patterns. Whenever you're matching two sparse ordered indexed lists, there's this corner of your brain lighting up on how to do it efficiently. You realize it's not the ORMs which suck, it's the fundamental object-relational impedance mismatch existing in all languages which causes problems, and you feel your fingers tingling whenever you encounter its effects in the future, ready to dive in ever so slightly deeper.
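
The post doesn't say which technique it has in mind, but a common way to match two sparse, sorted index lists efficiently is a two-pointer merge that walks each list once, in O(len(a) + len(b)) time instead of comparing every pair. A minimal Python sketch, assuming both lists are sorted ascending; the function name `intersect_sorted` is illustrative:

```python
def intersect_sorted(a: list[int], b: list[int]) -> list[int]:
    """Return the indices present in both ascending lists, walking each list once."""
    i, j = 0, 0
    out: list[int] = []
    while i < len(a) and j < len(b):
        if a[i] == b[j]:
            # Match: record it and advance both pointers.
            out.append(a[i])
            i += 1
            j += 1
        elif a[i] < b[j]:
            # a is behind, so a[i] cannot appear later in b.
            i += 1
        else:
            # b is behind, so b[j] cannot appear later in a.
            j += 1
    return out


print(intersect_sorted([1, 4, 9, 20, 31], [2, 4, 20, 25, 31, 40]))
```

When one list is much shorter than the other, binary-searching (or galloping through) the longer list for each element of the shorter one is usually cheaper.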

You notice you can suddenly solve completely abstract data problems using the pathfinding logic from your failed game. You realize you can use vector calculations from your physics engine to compare similarities in psychological behavior. You never understood trigonometry in high school, but while building a deficient robotic Arduino abomination it suddenly started making sense.
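
The post doesn't specify which vector calculation it reused; cosine similarity is one standard way to compare two behavior profiles once each is encoded as a vector of numbers. A minimal sketch, where the profile values are hypothetical and only illustrate the call:

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between u and v: 1.0 = same direction, 0.0 = orthogonal."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Hypothetical behavior profiles (one score per trait), purely illustrative.
alice = [0.9, 0.1, 0.4]
bob = [0.8, 0.2, 0.5]
print(round(cosine_similarity(alice, bob), 3))
```

Because it ignores vector length, two profiles with the same proportions but different magnitudes count as identical, which is often what you want when comparing patterns rather than totals.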

You're building intuitions, continuously. These intuitions are grooves which become deeper each time you encounter fundamental patterns. The more variation in environments and topics you expose yourself to, the more permanent these associations become.

Failure is inconsequential, failure even deserves respect, failure builds intuition about patterns. Every single epiphany about similarity in patterns is an incredible victory.

Please, for the love of code...

Start and fail as many projects as you can.

Finally did it. Quit my job.

The full story:

Just came back from vacation to find out that pretty much all the work I had put in place has been either destroyed by "temporary fixes" or wiped clean in favour of buggy older versions. The reason, and this is a direct quote: "Ari left the code riddled with bugs prior to leaving".

Oh no. Oh no I did not you fucker.

Some background:

My boss wrote a major piece of software with another coder (over the course of a month and a half). This software was very fragile, as its intention was to demo specific features we want to adopt for a version 2 of it.

I was then handed this software (which was vanilla JS with Angular) and told to "clean it up": introduce a typing system, introduce a build system, add webpack for better module and dependency management, and learn Cordova (because it's essential and I had no idea how it works). As well as fix the billion issues with data storage in the software, add a web GUI, and set up multiple databases for data exports from the app, ensuring that transmission of the data is clean and valid.

What else? This software had ZERO documentation, and I had to sit down with my boss for a solid 3 hours, plus some occasional questions as I was developing, to get a clear idea of what's going on.

Took a bit over 3 weeks. But I had the damn thing ported over. Cleaned up. And partially documented.

During this period, I was supposed to work with 2 other coders ("my team"), but they were always pulled into other things by my boss.

During this period, I kept asking for code reviews (as I was handling a very large code base on my own).

During this period, I was asking for help from my boss to make sure that the visual aspect of the software meets the requirements (there are LOTS of windows, screens, panels etc, which I just could not possibly get to checking on my own).

At the end of this period. I went on vacation (booked by my brothers for my bday <3 ).

I come back. My work is null. The boss only looked at it on the Friday night leading up to my return, and decided to go back to v1 and fix whatever he didn't like there.

So this guy calls me. Calls me on a friggin SUNDAY. I like just got off the plane. Was heading to dinner with my family.

He and another coder have basically nuked my work and tied some things together in an extremely hacky way so that they sort of work. Moreover, the web GUIs that I set up for viewing the databases were EDITED ON THE PRODUCTION SERVER without git tracking!!

So on Monday I get bombarded with over 20 emails claiming that I left things in an unusable state with no documentation, and I get yelled at by my boss for introducing "unnecessary complicated shit".

For fuck's sake. I was the one who brought the word documentation into the vocabulary of this company. There are literally ZERO documented projects here, while all of mine are at least partially documented (only partially, due to lack of time).

For fuck's sake, during my time here I have basically been begging to pull in the coder who made the admin views for our software to clean up some of those views, so that no one will ever have to touch any database directly.

To say this story is the only reason I am done is so not true.

I dedicated over a year to this company. During this time I saw aspects of this behaviour attacking other coders as well as me. But never to this level.

I am so friggin happy that I quit. Never gonna look back.

Hey everyone,

We have a few pieces of news we're very excited to share with everyone today. Apologies for the long post, but there's a lot to cover!

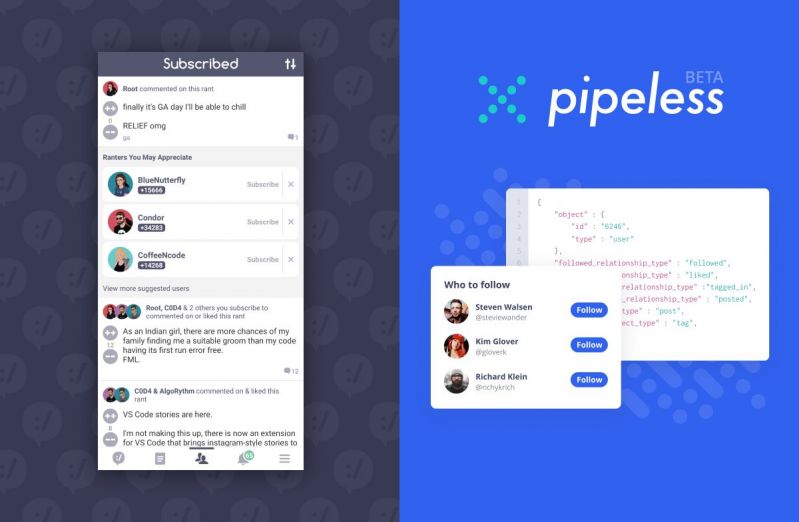

First, as some of you might have already seen, we just launched the "subscribed" tab in the devRant app on iOS and Android. This feature shows you a feed of the most recent rant posts, likes, and comments from all of the people you subscribe to. This activity feed is updated in real-time (although you have to manually refresh it right now), so you can quickly see the latest activity. Additionally, the feed also shows recommended users (based on your tastes) that you might want to subscribe to. We think both of these aspects of the feed will greatly improve the devRant content discovery experience.
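
The announcement doesn't describe how the feed is computed beyond relying on a graph database, and the code below is not the Pipeless API. As a rough in-memory illustration of what a "subscribed" feed computes, assuming each user's activity is already stored newest-first, a k-way merge of the followed users' streams yields the most recent items overall. `Activity` and `subscribed_feed` are hypothetical names:

```python
import heapq
from dataclasses import dataclass
from itertools import islice


@dataclass(frozen=True)
class Activity:
    user: str
    kind: str       # e.g. "rant", "like", "comment"
    timestamp: int  # seconds since epoch; larger = newer


def subscribed_feed(
    subscriptions: list[str],
    activity_by_user: dict[str, list[Activity]],
    limit: int = 10,
) -> list[Activity]:
    """Merge the followed users' activity (each list newest-first) into one newest-first feed."""
    streams = [activity_by_user.get(user, []) for user in subscriptions]
    # reverse=True because every input stream is sorted newest-first.
    merged = heapq.merge(*streams, key=lambda a: a.timestamp, reverse=True)
    return list(islice(merged, limit))


activity_by_user = {
    "alice": [Activity("alice", "rant", 300), Activity("alice", "like", 100)],
    "bob": [Activity("bob", "comment", 250)],
    "carol": [Activity("carol", "rant", 400)],  # not followed below
}
feed = subscribed_feed(["alice", "bob"], activity_by_user, limit=3)
print([(a.user, a.kind) for a in feed])
```

`heapq.merge` is lazy, so with `islice` it reads the first item of each stream plus roughly `limit` more, instead of sorting every activity.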

This new feature leads directly into this next announcement. Tim (@trogus) and I just launched a public SaaS API service that powers the features above (and can power many more use-cases across recommendations and activity feeds, with more to come). The service is called Pipeless (https://pipeless.io) and it is currently live (beta), and we encourage everyone to check it out. All feedback is greatly appreciated. It is called Pipeless because it removes the need to create complicated pipelines to power features/algorithms, by instead utilizing the flexibility of graph databases.

Pipeless was born out of the years of experience Tim and I have had working on devRant and from the desire we've seen from the community to have more insight into our technology. One of my favorite (and earliest) devRant memories is from around when we launched, and we instantly had many questions from the community about what tech stack we were using. That interest is what encouraged us to create the "about" page in the app that gives an overview of what technologies we use for devRant.

Since launch, the biggest technology powering devRant has always been our graph database. It's been fun discussing that technology with many of you. Now, we're excited to bring this technology to everyone in the form of a very simple REST API that you can use to quickly build projects that include real-time recommendations and activity feeds. Tim and I are really looking forward to hopefully seeing members of the community make really cool and unique things with the API.

Pipeless has a free plan where you get 75,000 API calls/month and 75,000 items stored. We think this is a solid amount of calls/storage to test out and even build cool projects/features with the API. Additionally, as a thanks for continued support, for devRant++ subscribers who were subscribed before this announcement was posted, we will give some bonus calls/data storage. If you'd like that special bonus, you can just let me know in the comments (as long as your devRant email is the same as Pipeless account email) or feel free to email me (david@hexicallabs.com).

Lastly, and also related, we think Pipeless is going to help us fulfill one of the biggest pieces of feedback we’ve heard from the community. It is now our goal to open source the various components of devRant. Although there have been a few reasons stated in the past for why we haven’t done that, one of the biggest was always the highly proprietary and complicated nature of our backend storage systems. Now Pipeless will allow us to start moving data there, and then everyone will have access to the same system/technology that is powering the devRant backend. The first step in this transition was building the new “subscribed” feed completely on top of Pipeless. We will be following up with more details about this open sourcing effort soon; we’re very excited about it and we think the community will be too.

Anyway, thank you for reading this and we are really looking forward to everyone’s feedback and seeing what members of the community create with the service. If you’re looking for a very simple way to get started, we have a full sample dataset (1 click to import!) with a tutorial that Tim put together (https://docs.pipeless.io/docs/...) and a full dev portal/documentation (https://docs.pipeless.io).

Let us know if you have any questions and thanks everyone!

- David & Tim (@dfox & @trogus)


My mentor/guider at my last internship.

He was great at guiding, only 1-2 years older than me, and delivered criticism constructively (there was only one very tiny thing in half a year, though). The team was forced to use Windows in a few production environments, but when it came to handling very sensitive data, they asked me for an opinion before him. When I answered that closed-source software wasn't a good idea and they all went against me, this guy dropped his nice-guy mode and went straight to dead-serious, backing me up.

I remember a specific occurrence:

Programmers in room (under him technically): so linuxxx, why not just use windows servers for this data storage?

Me: because it's closed source, you know why I'd say that that's bad for handling sensitive data

Programmers: oh come on not that again...

Me: no but really look at it from my si.....

Programmers: no stop it. You're only an intern, don't act like you know a lot about thi....

Mentor: no you shut the fuck up. We. Are. Not. Using. Proprietary. Bullshit. For. Storing. Sensitive. Data.

Linuxxx seems to know a lot more about security and privacy than you guys so you fucking listen to what he has to say.

Windows is out of the fucking question here, am I clear?

Yeah that felt awesome.

Also, there was the time a MySQL DB in prod went bad and they didn't really know what to do. I didn't have much experience, but I knew how to run a repair.

He called me in and asked me to have a look.

Me: *fixed it in a few minutes* so how many visitors does this thing get, few hundred a day?

Him: few million.

Me: 😵 I'm only an intern! Why did you let me access this?!

Him: because you're the one with the most Linux knowledge here and I trust you to fix it or give a shout when you simply can't.

Lastly he asked me to help out with iptables rules. I wasn't of much help but it was fun to sit there debugging iptables shit with two seniors 😊

He always gave good feedback, knew my qualities and put them to good use and kept my motivation high.

Awesome guy!

Call comes down from the CEO, through his "Yes Man", that some investors are coming by to visit and he wants to show off the data center and test servers. There are four full racks of storage servers filled with HDDs, and each server has 4 to 16 HDDs apiece.

I got told to "make all of the lights blink", which can be epic seeing it in action but my test cycles rarely aligned that way.

All morning I was striping RAID arrays and building short mixed I/O tests to maximize "LED blinking" for the boss's henchman.

Investors apparently live/die by blinking light progress and it was all on me to get everything working.

I am currently working for a client who has all their data in Google Sheets and Drive. I had to write code to fetch that data, and it's painful to query.

I can definitely relate with this.

PS: Their revenue last year was over US$2 Bn, and one of their sibling companies is among the top IT companies in the country.


And here comes the last part of my story so far.

After deploying the domain, configuring PCs, configuring the server, configuring the switch, installing software, checking that the correct settings have been applied, configuring MS Outlook (don't ask) and giving each and every user a d e t a i l e d tutorial on using the PC like a modern human and not as a Homo Erectus, I had to lock my door, put down my phone and disconnect the ship's announcement system's speaker in my room. The reasons?

- No one could use USB storage media, or any storage media, as per the security policy I had emailed them about.

- No one could use the ship's computers to connect to the internet. Again, as per policy.

- No one had any games on their Windows 10 Pro machines. As per policy.

- Everyone had to use a 10-character password, valid for 3 months, with certain restrictions. As per policy.
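
In a Windows domain these rules would normally be enforced through Group Policy rather than custom code; purely to make the two stated rules concrete, here is a minimal sketch. It assumes "3 months" means 90 days, and it leaves out the post's unspecified "certain restrictions". `password_problems` is a hypothetical helper:

```python
from datetime import date, timedelta

MIN_LENGTH = 10               # from the post
MAX_AGE = timedelta(days=90)  # assumption: "valid for 3 months" approximated as 90 days


def password_problems(password: str, last_changed: date, today: date) -> list[str]:
    """Return the policy violations for a password; an empty list means it passes."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    if today - last_changed > MAX_AGE:
        problems.append(f"older than {MAX_AGE.days} days, must be changed")
    # The post's "certain restrictions" are not specified, so they are not modelled here.
    return problems


print(password_problems("hunter2", date(2024, 1, 1), date(2024, 5, 1)))
```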

For the reasons mentioned above, I had to (almost) blackmail the CO into drafting an order enforcing those policies in writing (I know it's standard procedure for you, but for the military where I am it was a truly alien experience). Also, because I never trusted the users to actually back up their data locally, I had UrBackup clone their entire home folders, and a scheduled task executed a script copying them to the old online drive. Soon it became apparent why (for every sysadmin this is routine, but this was my first experience):

- People kept deleting their files, whining to me to restore them

- People kept getting locked out because they kept entering their password WRONG FIVE times IN a ROW because THEY had FORGOTTEN the CAPS lock KEY on. I had to go in three or four times during the weekend for that.

- People kept whining about the no-USB policy, despite offering e-mail and shared folders.

The final straw was the updates. The CO insisted that I set the updates to manual because some PCs must not restart on their own. The problem is, some users barely ever checked. One particular user, when I asked him to check and do the updates, claimed he did that yesterday. Meanwhile, on the WSUS console: PC inactive for over 90 days.

I blocked the ship's phone when I got reassigned.

Phew, finally I got all that off my chest! Thanks, guys. All of the rants so far remind me of one quote from Dave Barry:


In a user-interface design meeting over a regulatory compliance implementation:

User: “We’ll need to input a city.”

Dev: “Should we validate that city against the state, zip code, and country?”

User: “You are going to make me enter all that data? Ugh…then make it a drop-down. I select the city and the state, zip code auto-fill. I don’t want to make a mistake typing any of that data in.”

Me: “I don’t think a drop-down of every city in the US is feasible.”

Manager: “Why? There cannot be that many. Drop-down is fine. What about the button? We have a few icons to choose from…”

Me: “Uh..yea…there are thousands of cities in the US. Way too much data for anyone to realistically scroll through.”

Dev: “They won’t have to scroll, I’ll filter the list when they start typing.”

Me: “That’s not really the issue and if they are typing the city anyway, just let them type it in.”

User: “What if I mistype Ch1cago? We could inadvertently be out of compliance. The system should never open the company up for federal lawsuits”

Me: “If we’re hiring individuals responsible for legal compliance who can’t spell Chicago, we should be sued by the federal government. We should validate the data the best we can, but it is ultimately your department’s responsibility for data accuracy.”

Manager: “Now now…it’s all our responsibility. What is wrong with a few thousand item drop-down?”

Me: “Um, memory, network bandwidth, database storage, who maintains this list of cities? A lot of time and resources could be saved by simply paying attention.”

Manager: “Memory? Well, memory is cheap. If the workstation needs more memory, we’ll add more”

Dev: “Creating a drop-down is easy and selecting thousands of rows from the database should be fast enough. If the selection is slow, I’ll put it in a thread.”

DBA: “Table won’t be that big and won’t take up much disk space. We’ll need to set up stored procedures, and data import jobs from somewhere to maintain the data. New cities, name changes, etc.”

Manager: “And if the network starts becoming too slow, we’ll have the Networking dept. open up the valves.”

Me: “Am I the only one seeing all the moving parts we’re introducing just to keep someone from misspelling ‘Chicago’? I’ll admit I’m wrong or maybe I’m not looking at the problem correctly. The point of redesigning the compliance system is to make it simpler, not more complex.”

Manager: “I’m missing the point to why we’re still talking about this. Decision has been made. Drop-down of all cities in the US. Moving on to the button’s icon ..”

Me: “Where is the list of cities going to come from?”

<few seconds of silence>

Dev: “Post office I guess.”

Me: “You guess?…OK…Who is going to manage this list of cities? The manager responsible for regulations?”

User: “Thousands of cities? Oh no …no one in our area has time for that. The system should do it.”

Me: “OK, the system. That falls on the DBA. Are you going to be responsible for keeping the data accurate? What is going to audit the cities to make sure the names are properly named and associated with the correct state?”

DBA: “Uh..I don’t know…um…I can set up a job to run every night”

Me: “A job to do what? Validate the data against what?”

Manager: “Do you have a point? No one said it would be easy and all of those details can be answered later.”

Me: “Almost done, and this should be easy. How many cities do we currently have to maintain compliance?”

User: “Maybe 4 or 5. Not many. Regulations are mostly on a state level.”

Me: “When was the last time we created a new city compliance?”

User: “Maybe, 8 years ago. It was before I started.”

Me: “So we’re creating all this complexity for data that, realistically, probably won’t ever change?”

User: “Oh crap, you’re right. What the hell was I thinking…Scratch the drop-down idea. I doubt we’ll have a new city regulation anytime soon, and how hard is it to type in a city?”

Manager: “OK, are we done wasting everyone’s time on this? No drop-down of cities...next …Let’s get back to the button’s icon …”

Simplicity 1, complexity 0.
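
The simple alternative the meeting landed on can still catch a typo like "Ch1cago": since only "4 or 5" cities have their own regulations, a free-text field can be checked against that short list instead of a nationwide drop-down. A minimal sketch; `COMPLIANCE_CITIES` and `validate_city` are hypothetical, and the set would hold the department's real cities rather than this single example:

```python
# Hypothetical allowlist of (city, state) pairs with city-level regulations.
# Only Chicago comes from the conversation; the remaining 3-4 entries would be added here.
COMPLIANCE_CITIES = {
    ("chicago", "IL"),
}


def validate_city(city: str, state: str) -> bool:
    """True if the typed city/state pair is one of the few cities with its own regulations."""
    # Collapse stray whitespace and ignore case so " Chicago " and "chicago" both match.
    normalized_city = " ".join(city.split()).casefold()
    return (normalized_city, state.strip().upper()) in COMPLIANCE_CITIES


print(validate_city("  Chicago ", "il"))
print(validate_city("Ch1cago", "IL"))
```

A failed check can simply prompt the user to re-type the city; there is no nightly import job or nationwide list to maintain.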

Crap.. got myself into a fight with someone in a bar.

Hospitalized, turns out that my knee is bruised and my nose is broken. For some reason the knee hurts much more than the nose though.. very weird.

Just noticed that some fucker there stole my keychain USB stick too. Couldn't care less about the USB stick itself, I've got tons of those at home and even more hard drive storage (10TB), but the data on it was invaluable. On a LUKS-encrypted partition it held my GPG keys, revocation certificates, server backups and everything. Pretty much my entire digital identity.

I'm afraid that the thief might try to crack it. On the flip side, if it's just a common Windows user, plugging it in will prompt him to format it.. hopefully he'll do that.

What do you think.. take a leap with fate and see how strong LUKS really is or revoke all my keys and assume my servers' filesystems to be in the hands of some random person that I don't know?

Seriously though.. stealing a fucking flash drive, of what size.. 32GB? What the fuck is wrong with people?

I'm getting ridiculously pissed off at Intel's Management Engine (etc.), yet again. I'm learning new terrifying things it does, and about more exploits. Anything this nefarious and overreaching and untouchable is evil by its very nature.

(tl;dr at the bottom.)

I also learned that -- as I suspected -- AMD has their own version of the bloody thing. Apparently theirs is a bit less scary than Intel's since you can ostensibly disable it, but I don't believe that, because spy agencies exist and people are power-hungry and corrupt as hell when they get power.

For those who don't know what the IME is, it's hardware godmode. It's a black box running obfuscated code on a coprocessor that's built into Intel CPUs (all Intel CPUs from 2008 on). It runs code continuously, even when the system is in S3 (sleep) or powered off: as long as the PSU is supplying current, it's running. It has its own MAC and IP address, transmits out-of-band (so the OS can't see its traffic), some chips can even communicate via 3G, and it can accept remote commands, too. It has complete and unfettered access to everything, completely invisible to the OS. It can turn your computer on or off, use all hardware, access and change all data in RAM and storage, etc. And all of this is completely transparent: when the IME interrupts, the CPU stores its state, pauses, runs the SMM (System Management Mode) code, restores the state, and resumes normal operation. Its memory always returns 0xff when read by the OS, and all writes fail. So everything about it is completely hidden from the OS, though the OS can trigger the IME/SMM to run various functions through interrupts, too. But this system is also required for the CPU to even function, so killing it bricks your CPU. Which, of course, you can do via exploits. Or install ring -2 keyloggers. Or do fucking anything else you want to.

tl;dr IME is a hardware godmode, and if someone compromises it (and there have been many exploits), their code runs at ring -2 permissions (above the kernel (ring 0), above the hypervisor (ring -1)). They can do anything and everything on/to your system, completely invisibly, and can even install persistent malware that lives inside your bloody CPU. And guess who has keys for this? Go on, guess. You're probably right. Are they completely trustworthy? No? You're probably right again.

There is absolutely no reason for this sort of thing to exist, and its existence can only make things worse. It enables spying of literally all kinds, CPU-resident malware, bricking your physical CPU, reading/modifying anything anywhere, taking control of your hardware, etc. Literal godmode. And some of it cannot be patched, meaning more than a few exploits require replacing your CPU to protect against them.

And why does this exist?

Ostensibly to allow sysadmins to remote-manage fleets of computers, which it does. But it allows fucking everything else, too. And keys to it exist. And people are absolutely not trustworthy, especially those in power -- who are most likely to have access to said keys.

The only reason this exists is because fucking power-hungry doucherockets exist.

!(short rant)

Look, I understand online privacy is a concern and we should be very much aware of what data we are giving to whom. But when does it turn from being aware to just being paranoid and a maniac about it? I mean okay, I know Facebook has access to your data including your WhatsApp chats (presumably), Google listens to your conversations and snoops on your mail and shit, Amazon advertises that you must have their spy system (read: Alexa) installed in your home, and there are numerous other cases. But in the end it really boils down to "everyone wants your data, but who do you trust your data with?"

For me, Facebook and the so-called social media sites are a strict no-no, but I use WhatsApp as my primary chatting application. I like to use Google for my searches because, yaa, it gives me more accurate search results than DDG, since it has my search history. I use Gmail as my primary as well as my work email because it is convenient, and an ad here and there doesn't bother me. Their spam filters, the easy accessibility options, the storage they offer: everything is much more convenient for me. I use Linux for my work-related stuff (obviously) but I play my games on Windows. Alexa and similar products are again a big no-no for me, but I regularly shop on Amazon, and unless I am searching for some weird-ass shit (which, if you want to, do in incognito mode) I am fine with coming across some ads for things I searched for. Sometimes it reminds me of things I need to buy that I had put off and then forgot. I have an Amazon Prime account because Prime Video has some good shows. My primary web browser is Chrome because I simply love its developer tools and I have gotten used to it. So unless Chrome is really hogging my RAM, in which case I switch over to Firefox for some of my tabs, I am okay with using Chrome. I have a Motorola phone with stock Android, which means all the Google apps are pre-installed. I use Hangouts, Google Keep, Google Maps (cannot live without it now), heck, even Google Photos, but I also deny certain permissions to apps I find fishy: if you are a game, you should not have access to my GPS. I live in India, where we have Aadhaar cards (like the Social Security number in the USA), and the government has our fingerprints and all our data, because every damn thing now needs to be linked with your Aadhaar or your service will be terminated: your mobile number, your investment policies, your income tax, heck, even your marriage certificate needs to be linked with your Aadhaar card.
Here, I don't have any option but to give in, because somehow "it's in the interest of the nation". Not surprisingly, it recently came to light that you can get your hands on anyone's Aadhaar details, including their fingerprints, for just ₹50 ($1). Fuck that shit.

tl;dr

There are, and should always be, exceptions when it comes to privacy, because giving the other party your data sometimes makes your life much easier. On the other hand, people/services asking for your data with the sole purpose of infiltrating your private life, without providing anything useful, should just be boycotted. It all boils down to what extent you wish to share your data (ranging from literally installing a spying device in your house to them knowing that I want to understand how Spring Security works) and how much you trust the service with your data. Case in point: I just shared most of my private data in this rant with a group of unknown people and I am okay with it, because I know I can trust devRant with my posts (unlike Facebook).

One:

Had a stack of hard drives with my important data, two USB drives, a 4.7GB disc, and two or three cloud storage accounts.

Needed a restore:

Knocked the stack of hard drives onto the floor (all broken), stood on one of the flash drives, found the other one in the pocket of a pair of trousers that had just come out of the washing machine, the DVD was too scratched to read, and I couldn't verify my cloud storage account because I had lost the password to the connected email account, and the backup email account needed to verify that one didn't exist anymore. Fucking hell.

Two:

Production database with not that much data yet, but at least some production data, which wasn't backed up.

Friend: can I reboot the db machine?

Me: yup!

Friend: what's the LUKS crypt password?

Me: 😯😐😓😫😲😧😭

End of story 😅

For the record, the first one actually happened (I literally cried afterwards) and that taught me to update my recovery email addresses more often!

I have this little hobby project going on for a while now, and I thought it's worth sharing. Now at first blush this might seem like just another screenshot with neofetch.. but this thing has quite the story to tell. This laptop is no less than 17 years old.

So, a Compaq nx7010, a business laptop from 2004. It has had plenty of software and hardware mods alike. Let's start with the software.

It's running run-of-the-mill Debian 9 with a custom kernel. The reason it's running that version of Debian is bugs in the network driver (ipw2200) in Debian 10, causing it to disconnect after a day or so. That's less of an issue in Debian 9, and seemingly fixed by upgrading to a custom kernel. The kernel is actually one of the places where you can save heaps of space by doing it yourself. The kernel package itself is 8.4MB for this one, and the headers are 7.4MB. The stock kernels, on the other hand (4.19 at downstream revisions 9, 10 and 13), took up a whole GB of space combined. That is how much I've been able to remove, even from headless systems. The stock kernels are incredibly bloated for what they are.

Other than that, most of the data storage is done through NFS over WiFi, which is actually faster than what is inside this laptop (a CF card which I will get to later).

Now let's talk hardware. At age 17, you can imagine that it has seen quite a bit of maintenance. The easiest mod is probably the flash mod. These old laptops use IDE for storage rather than SATA. The nice thing about IDE is that it actually lives on to this very day, in CF cards: electrically they can speak the same interface (CF supports a "True IDE" mode). So you can use a passive IDE-CF adapter and plug in a CF card. Easy!

The next thing I want to talk about is the battery, and um.. why that one is a bad idea to mod. Finding replacements for such old hardware.. good luck with that. So your other option is something called recelling, where you disassemble the battery and, well, replace the cells. The problem is that those battery packs are built like tanks, and disassembly will likely break the battery housing (which you'll still need). Also, the controllers inside those battery packs are either too smart or too stupid to play nicely with new cells. On this laptop at least, the controller still reported the worn-out capacity of the old cells, while the voltage on the cells themselves obviously didn't change at all. The laptop thought the batteries were done for, despite them being chock full of juice. Then I tried to recalibrate them in the BIOS and fried the battery controller. Do not try to recell the battery unless you already have a spare. The controllers and battery housings are complete and utter dogshit.

Next up is the display backlight. Originally this laptop used a CCFL backlight, which is a tiny tube driven at around 2000 volts. Its controller takes either 7, 6, 4 or 3 wires, which are all related, as I'll explain. Signs of it dying are redshift and, eventually, the backlight going out until you close the lid and open it up again. The reason is that the voltage required to keep the CCFL "excited" rises over time, beyond what the controller can deliver.

So, the 7-pin configuration is 2x VCC (12V), 2x enable (on or off), 1x adjust (analog brightness), and 2x ground. The 6-pin one drops one enable line. Those are the configurations you'll find with CCFL. Then came LED backlighting, which requires much less power to run, so the 4-pin configuration drops a VCC and a ground line. And finally there's the 3-pin configuration, which drops the adjust line; you can just short adjust to the enable line.

There are some other mods but I'm running out of characters. Why am I telling you all this? Because this laptop doesn't feel any different to use than the ThinkPad X220 and IdeaPad Y700 I have on my desk (with 6c12t, 32G of RAM, ~1TB of SSDs and 2TB of HDDs). A hefty setup compared to a very dated one, yet they feel the same. It can do web browsing, I can chat on Telegram with it, and I can do programming on it. So, if you're looking for a hobby project, maybe with some restrictions on your hardware to spark the kind of creativity that makes code better, I can highly recommend it. I think I'm almost done with this project, and it was heaps of fun :D

-

TL;DR: disaster averted!

Story time!

About a year ago, the company I work for merged with another that offered complementary services. As is always the case, both companies had different ways of doing things, and that was true for the keeping of the financial records and history.

As the other company had a much larger financial database, after the merger we moved all the data of both companies into their software.

The said software is closed source, and was deployed on premises on a small server.

Even though it has a lot of restrictions and missing features, it gets the job done and had been stable for years.

But here comes the fun part: last week there was a power outage. We had no failsafe, no UPS, no recent backups and of course both the OS and the working database from the server broke.

Everyone was in panic mode, as our whole company needs the software for day to day activity!

Now, don't ask me how, but today we managed to recover all the data, got a new server with 2 RAID HDDs for the working copy of the DB, another pair for backups, and another machine with another dual HDD setup for secondary backups!

We still need a new UPS and off-site backup storage, but for now... disaster averted!

Time for a beer! Or 20...

That is all :)

-

Got laid off on Friday because of a workforce reduction. While I was in the office with my boss, someone went into my cubicle and confiscated my laptop. My badge was immediately revoked, as was my access to network resources such as email and file storage. I then had to pack up my cubicle, which filled up the entire bed of my pickup truck, with a chaperone from Human Resources looking suspiciously over my shoulder the whole time. They promised to get me a thumb drive of my personal data. This all happened before the holidays were over. I feel like I was speed-raped by the Flash and am only just now starting to feel less sick to the stomach. I wanted to stay with this company for the long haul, but I guess in the software engineering world, there is no such thing as job security and things are constantly shifting. Does anyone have stories/tips to make me feel better? Perhaps how you got through it? 😔😑😐

-

At the data restaurant:

Chef: Our freezer is broken and our pots and pans are rusty. We need to refactor our kitchen.

Manager: Bring me a detailed plan on why we need each piece of equipment, what we can do with each, three price estimates for each item from different vendors, a business case for the technical activities required and an extremely detailed timeline. Oh, and do not stop doing your job while doing all this paperwork.

Chef: ...

Boss: ...

Some time later a customer gets to the restaurant.

Waiter: This VIP wants a burger.

Boss: Go make the burger!

Chef: Our frying pan is rusty and we do not have most of the ingredients. I told you we need to refactor our kitchen. And that I cannot work while doing that mountain of paperwork you wanted!

Boss: Let's do it like this: fix the tech mumbo jumbo just enough to make this VIP's burger. Then we can talk about the rest.

The chef then runs to the grocery store and back, and prepares a hurried, health-hazard burger in a rusty pan.

Waiter: We got six more clients waiting.

Boss: They are hungry! Stop whatever useless nonsense you were doing and cook their requests!

Cook: Stop cooking the order of the client who got here first?

Boss: The others are urgent!

Cook: This one had said so as well, but fine. What do they want?

Waiter: Two more burgers, a new kind of modern gaseous dessert, two whole chickens and an eleven seat sofa.

Chef: Why would they even ask for a sofa?!? We are a restaurant!

Boss: They don't care about your Linux techno bullshit! They just want their orders!

Cook: Their orders make no sense!

Boss: You know nothing about the client's needs!

Cook: ...

Boss: ...

That is how I feel every time I have to deal with a boss who can't tell a PostgreSQL database from a robots.txt file.

Or every time someone assumes we have a pristine SQL table with every single column imaginable.

Or that a couple hundred terabytes of cold storage data must be scanned entirely in a fraction of a second on a shoestring budget.

Or that years of never-stored historical data can be retrieved from limbo.

Or when I'm told that refactoring has no ROI.

Fuck data stack cluelessness.

Fuck clients that lack basic logical skills.

-

I just can't understand what would lead a so-called software company, which serves my local government by the way, to use a cloud server (an AWS EC2 instance) as if it were a bare-metal machine.

They have had it running non-stop for over 4 years or so. Just one instance, running MySQL, PostgreSQL, Apache, PHP and an f* Tomcat server with no less than 10 HUGE apps deployed. I just can't believe this instance is still up.

By the way, they don't do backups, most of the data is on ephemeral storage, they use just one private key for every dev, no CI, no testing. Deployments are nightmares, using scp to upload the .war...

But still, they are running several apps for things like registering citizen complaints that come in through hotlines. The system is incredibly slow, as they just use Hibernate without query optimization to look up and search things (N+1 query problems).

They didn't even bother to get a proper domain. They use an IP address and expose the Tomcat port directly. No reverse proxy here! (No SSL either)

I've been out of this company for two years now; it was my first job as a developer, but they needed help with an app that I worked on during my time there. I was really surprised to see that everything was still the same. Even the old private key that they emailed me (?!?!?!?!) back then still worked. All the passwords were still the same too.

I have some good rants from the time I was there, and about the general level of the developers in my region. But I'll leave them for later!

Is it just me, or is this whole shit crazy af?

-

--- SUMMARY OF THE APPLE KEYNOTE ON THE 30TH OF OCTOBER 2018 ---

MacBook Air:

> Retina Display

> Touch ID

> 17% less volume

> 8GB RAM

> 128GB SSD

> T2 Chip

> Core i5 (1.6 GHz / 3.6 GHz in turbo mode)

Price starting at $1199

Mac Mini:

> T2 Chip

> up to 64GB RAM

> up to 2TB all-flash SSD

> better cooling than previous Mac Mini

> more ports than previous Mac Mini - even HDMI, so you can connect it to any monitor of your choice!

> stackable - yes, you can build a whole data center with them!

Price starting at $799

Both MacBook Air and Mac Mini are made of 100% recycled aluminium!

Good job, Apple!

iPad Pro:

> home-button moved to trash

> very sexy edges (kinda like iPhone 4, but better)

> all-screen design - no more ugly borders on the top and bottom of the screen

> 15% thinner and 25% less volume than previous iPads

> liquid retina display (same as the new iPhone XR)

> Face ID - The most secure way to log in to your iPad!

> A12X Bionic Chip - Insane performance!

> up to 1TB storage - Whoa!

> USB-C - Allows you to connect your iPad to anything! You can even charge your iPhone with your iPad! How cool is that?!

> new Apple Pencil that attaches to the iPad Pro and charges wirelessly

> new, redesigned physical keyboard

Price starting at $799

Also, Apple introduced "Today at Apple" - Hundreds of sessions and workshops hosted at Apple Stores everywhere in the world, where you can learn about photography, coding, art and more! (Using Apple devices of course)

-

The world of storage is going to change..

Yesterday, scientists successfully stored and retrieved an entire OS, a movie and some other files from DNA.

It is estimated that a single gram of DNA can store 215 million GB of data. (Storage could now be so small it's practically invisible.)

-

I would like to invite you all to test the project that a friend and I have been working on for a few months.

We aim to offer a fair, cheap and trustworthy alternative to proprietary services that perform data mining and sell information about you to other companies/entities.

Our goal is that users can (if they want) remain anonymous to us, because we are not interested in knowing who you are and what you do, like or want.

We also aim to offer a unique payment system that is fair and protects your integrity: you pay for the previous month, not the next one, so you never have to pay for a service you don't really like.

Please note that this is still Free Beta, and we need your valuable experience about the service and how we can improve it. We have no ETA when we will launch the full service, but with your help we can make that process faster.

For now, we want to offer the following with this service:

Nextcloud with 50 GB storage, yes you can mount it as a drive in Linux :)

Calendar

Email client that you can connect to your email service

SearX Instance

Talk ( voice and video chat )

Mirror for various linux distros

We are using free software for our environment: KVM + Ceph on our own hardware in our own facility. That means we have complete control over the hosting, and combined with one of the best ISPs in the world, Bahnhof, we believe that we can offer something unique and/or be a complement to your current services if you want more control over your data.

Register at:

https://operationtulip.com

Feel free to use our mirror:

https://mirror.operationtulip.com

Please send your feedback to:

feedback@operationtulip.com

-

The solution for this one isn't nearly as amusing as the journey.

I was working for one of the largest retailers in NA as an architect. Said retailer had over a thousand big box stores, IT maintenance budget of $200M/year. The kind of place that just reeks of waste and mismanagement at every level.

They had installed a system to distribute training and instructional videos to every store, as well as recorded daily broadcasts to all store employees, as a way of reducing the time management spent with employees in the morning. This system had cost a cool 400M USD for round 1, not including labor and upgrades. Round 2 was another 100M to add a storage buffer to each store, because they'd failed to account for the fact that neither the stores' internet connections nor the outbound pipe from the DC was capable of running the public-facing e-commerce and streaming all the video data to every store in realtime. Typical massive enterprise clusterfuck.

Then security gets involved. Each device at stores had a different address on a private megawan. The stores didn't generally phone home, home phoned them as an access control measure; stores calling the DC was verboten. This presented an obvious problem for the video system because it needed to pull updates.

The brilliant Infosys resources had a bright idea to solve this problem:

- Treat each device IP as an access key for that device (avg 15 per store).

- Verify the request ip, then issue a redirect with ANOTHER ip unique to that device that the firewall would ingress only to the video subnet

- Do it all with the F5

A few months later, the networking team comes back and announces that after months of work and tens of person-years, they can't implement the solution because iRules have a size limit and they would need more than 60,000 lines or 15,000 rules to implement it. Sad trombones all around.

Then a wild DBA appears, steps up to the plate and says he can solve the problem with the power of ORACLE! A few months later he comes back with some absolutely batshit solution that stored the individual octets of an IPv4 address and used multiple nested queries against the same table to emulate subnet masking through some temp-table-spanning voodoo. Time to complete: 2-4 minutes per request. He too eventually gives up the fight, sort of, in that backhanded way DBAs tend to do everything. I wish I had paid more attention to that abortion, because its rationale and mechanics were just staggeringly Rube Goldberg and should have been documented for posterity.

So I catch wind of this sitting in a CAB meeting. I hear them talking about how there's "no way to solve this problem, it's too complex, we're going to need a lot more databases to handle this." I tune in and gather that, since the ingress firewall is already handling the origin IP checks, all it really needs to do is convert the request IP to the video ingress IP, 302 and call it a day.

While they're all grandstanding and pontificating, I fire up Visual Studio and:

- write a method that encodes the incoming request IP into a single uint32

- write an HTTP module that keeps an in-memory dictionary of uint32 → string (request IP → response target), converts the request IP and 302s the call, with blackhole support

- convert all the mappings in the spreadsheet attached to the meetings into a CSV, dump to disk

- write a WPF application to allow for easily managing the IP database in the short term

- deploy the solution to one of our stage boxes

- add a TODO to eventually move this to a database
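The core trick in that list, turning the request IP into a single integer and looking it up in a flat in-memory table loaded from the CSV, can be sketched in Python. This is not the author's Visual Studio/HTTP-module code: the two-column CSV layout, the example addresses and URLs, and treating a blank target as a blackholed device that gets a 403 are all assumptions for illustration.

```python
import csv
import io
import ipaddress
from typing import Dict, Optional, Tuple


def ip_to_uint32(ip: str) -> int:
    """Encode a dotted-quad IPv4 address as one unsigned 32-bit integer."""
    return int(ipaddress.IPv4Address(ip))


def load_mappings(csv_text: str) -> Dict[int, Optional[str]]:
    """Build the lookup table from hypothetical CSV rows: request_ip,target.

    A blank target marks a blackholed device (assumption: no redirect).
    """
    table: Dict[int, Optional[str]] = {}
    for row in csv.reader(io.StringIO(csv_text)):
        if not row:
            continue
        target = row[1].strip() if len(row) > 1 else ""
        table[ip_to_uint32(row[0].strip())] = target or None
    return table


def route(table: Dict[int, Optional[str]], request_ip: str) -> Tuple[int, Optional[str]]:
    """Return (HTTP status, Location header) for one incoming request."""
    key = ip_to_uint32(request_ip)
    if key not in table:
        return 404, None  # unknown device
    target = table[key]
    if target is None:
        return 403, None  # blackholed device: refuse, no redirect
    return 302, target    # redirect to the device's video-ingress address


if __name__ == "__main__":
    mappings = load_mappings(
        "10.1.2.3,http://10.200.2.3/video\n"
        "10.1.2.4,\n"
    )
    print(route(mappings, "10.1.2.3"))  # (302, 'http://10.200.2.3/video')
    print(route(mappings, "10.1.2.4"))  # (403, None)
    print(route(mappings, "10.9.9.9"))  # (404, None)
```

Because the key is a plain integer, a subnet check also reduces to a bitwise AND (for a /24, `key & 0xFFFFFF00`), which is roughly what the octet-per-column Oracle design was trying to emulate.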

All this took about 5 minutes. I interrupt their conversation to ask them to retarget their test to the port I exposed on the stage box, then watch them stare in stunned silence as the crow grows cold.

According to a friend who still works there, that code is still running in production on a single node to this day. And still running on the same static file database.

#TheValueOfEngineers

-

On my former job we once bought a competing company that was failing.

Not for the code but for their customers.

But to make the transition easy we needed to understand their code and database to make a migration script.

And that was a real deep dive.

Their system was built on top of a home-grown platform intended to let customers design their own business flows, which meant it contained solutions for forms and workflow path design. But that never took off, so instead they used their own platform to design a new system for a more specific purpose.

This required some extra functionality, and had the platform still been meant for their customers, that functionality would have been added to the platform.

But since they had given up on that they took an easy route and started adding direct references between the code and the configuration.

That is, in the configuration they added explicit class names and method names to be used as data store or for actions.

This was of course never documented in any way.

And it also was a big contributing cause to their downfall as they hit a complexity they could not handle.

Even the slightest change required synchronizing the config in the db with the compiled code, which meant mistakes didn't show up at compile time, only when you tried out every form and action that touched what you changed.
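To make the failure mode concrete: when configuration rows name classes and methods as strings, nothing checks those references at compile time, so a typo only surfaces when someone exercises that exact form. The Python sketch below is hypothetical (the `FORM_CONFIG` rows, the `InvoiceStore` class and `REGISTRY` are made up, not the acquired company's code). Its `check_config` helper shows one cheap mitigation, assumed rather than taken from the story: resolving every row once at startup instead of clicking through every form.

```python
from typing import Dict, List, Tuple


class InvoiceStore:
    """Hypothetical class that a config row points at by name."""

    def save(self, record: dict) -> str:
        return f"saved invoice {record['id']}"


# Hypothetical registry of classes the config is allowed to reference.
REGISTRY: Dict[str, type] = {"InvoiceStore": InvoiceStore}

# Config rows as they might sit in the database: form -> (class name, method name).
FORM_CONFIG: Dict[str, Tuple[str, str]] = {
    "invoice_form": ("InvoiceStore", "save"),
    "refund_form": ("InvoiceStore", "svae"),  # typo: nothing flags it until used
}


def run_form(form: str, record: dict) -> str:
    """Resolve the configured class/method by name at runtime and call it."""
    class_name, method_name = FORM_CONFIG[form]
    instance = REGISTRY[class_name]()
    return getattr(instance, method_name)(record)


def check_config() -> List[str]:
    """Resolve every row up front and return the forms that point nowhere."""
    broken = []
    for form, (class_name, method_name) in FORM_CONFIG.items():
        cls = REGISTRY.get(class_name)
        if cls is None or not callable(getattr(cls, method_name, None)):
            broken.append(form)
    return broken


if __name__ == "__main__":
    print(run_form("invoice_form", {"id": 1}))  # saved invoice 1
    print(check_config())                       # ['refund_form']
    try:
        run_form("refund_form", {"id": 2})
    except AttributeError as exc:
        print("only caught at runtime:", exc)
```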

And without documentation or search tools, that also meant that no one new could work on the code; you had to know what used what to make any changes.

Luckily for us we mostly only needed to understand the storage in the database but even that took about a month to map out WITH the help of their developer ;)

It was not only the “inner platform”; it was abusing and breaking the inner platform in more ways than I can count.

If you are going down the inner-platform route, at least make sure you go all the way and build it as if it were for the customers; then you at least keep it consistent and keep a clear border between the platform and how it is used.

-

Well, it wasn't fun, but I switched jobs this month. And sadly, it was mostly because my old company started building custom applications for our larger customers. Now, normally that wouldn't be too bad (other than the fact that it distracts us from working on our main product...) but... it was decided that we would use the back end of our user-generated forms module as the data storage layer. Someone outside of my department thought it would be a great idea, and my boss kinda just rolled over without a fight because he always just figures he can "make it work" if he works hard enough...

You shoulda seen the database and SQL code...

Because of that decision, everything took at least 3x as long to write and there was always the looming possibility that the user could change the schema on a whim and break the app.

I think the reasoning behind it was to try and keep the customers tied to the aging flagship product (with a pricey subscription model), but IMO, it was not worth it. Our efforts could've had much greater impact somewhere else. Nobody seemed to care what I thought about it though...

I had to start over as a front-end dev, but I'm trying to look on the bright side and seeing it as an opportunity to sharpen my skills in that area. I'm already learning a lot. And although it's a little scary at times, it's also so refreshing to work at a place where I know I'm not the smartest guy in the room.

To the future!

-

My co-workers hate it when I ask this question in a technical interview, but my go-to one is "what is the difference between a varchar(max) and a varchar(8000) when they are both storing 8000 characters?"

Answer: you cannot use a varchar(max) column as an index key. A varchar(max) and a varchar(8000) both store the data in the row, but a varchar(max) value will go to blob (LOB) storage if it is greater than 8000 bytes.

No one ever knows the answer but I like to ask it to see how people think. Then I tell them that no one ever gets that right and it isn't a big deal that they don't know it, as I give them the answer.

-

Today is sprint demo day. As usual I'm only half paying attention since being a Platform Engineer, my work is always technically being "demoed" (shit's running ain't it? There you go, enjoy the EC2 instances.)

One team presents a new thing they built. I'm still half paying attention, half playing Rocket League on another monitor.

Then someone says

"We're storing in prod-db-3"

They have my curiosity.

"Storing x amount of data at y rate"

They now have my attention. I speak up "Do you have a plan to drop data after a certain period of time?"

They don't. I reply "Okay, then your new feature only has about 2 months to live before you exhaust the disk on prod-db-3 and we need to add more storage"
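The "two months to live" estimate is simple arithmetic: free space divided by daily ingest. With a retention policy, usage instead levels off at roughly ingest rate × retention window. The rant never gives the actual x and y, so the figures below (600 GB free, 10 GB/day) are made up, and the sketch ignores index, WAL and filesystem overhead.

```python
from typing import Optional


def days_until_full(free_gb: float, ingest_gb_per_day: float) -> float:
    """Runway with no retention policy: stored data only ever grows."""
    if ingest_gb_per_day <= 0:
        return float("inf")
    return free_gb / ingest_gb_per_day


def steady_state_gb(ingest_gb_per_day: float, retention_days: int) -> float:
    """With a retention window, stored data plateaus at rate x window."""
    return ingest_gb_per_day * retention_days


def fits(free_gb: float, ingest_gb_per_day: float, retention_days: Optional[int]) -> bool:
    """True if the feature can run indefinitely within the current free space."""
    if retention_days is None:
        return ingest_gb_per_day <= 0
    return steady_state_gb(ingest_gb_per_day, retention_days) <= free_gb


if __name__ == "__main__":
    # Hypothetical figures: 600 GB free on the volume, 10 GB/day of new data.
    print(days_until_full(600, 10))  # 60.0 -> about two months
    print(fits(600, 10, None))       # False: grows until the disk is full
    print(fits(600, 10, 30))         # True: plateaus at 300 GB
```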

I am asked if we can add more storage preemptively.

"Sure," I say. I then direct my attention to the VP: "{VP}, I'll make the change request to approve the spend for additional volume on prod-db-3"

VP immediately balks and asks why this wasn't considered before. I calmly reply "I'm not sure. This is the first time I'm learning of this new feature even coming to life. Had anyone consulted with the Platform team we'd have made sure the storage availability was there."

VP asks product guy what happened.

"We didn't think we'd need platform resources for this so we never reached out for anything".

I calmly mute myself, turn my camera back off and go back to Rocket League as the VP goes off about planning and collaboration.

"CT, we'll reach out to you next week about getting this all done"

*unmute, camera stays off* "Sounds good" *clears ball*

-

The newest iPhone (which is good spec-wise) is more expensive than the newest graphics cards that are coming out... h.o.l.y. s.h.i.t

and you know what? THE BEST MODEL IS 400 BUCKS MORE EXPENSIVE THAN A FUCKING 2080 TI!

And you know what?

WHAT'S UP WITH STORAGE!

you go from 64GB to 256 and 512 GIGABYTES OF DATA!!

Its like, Midrange storage to a Ton of Storage to HOLY SHIT WHO NEEDS THIS MUCH?!

and you know WHY they are doing this?

Because most of the people will buy the 256 or 512 model GUARANTEED! People behave like this! you dont believe me?

What if you offered a big popcorn box for 15 bucks and a small one for 5 bucks, which one would you buy? The smaller one (most of the time). And why? Because you only have 2 choices! What if I gave you 3 choices: 5 for small, 10 for medium and 15 for large?

You won't buy the smallest one, because it doesn't make sense! THAT'S WHY AND NOW FUCK OFF AND YOU TOO APPLE GO SUCK COCKS OF YOUR FUCKING FANBOYS WHICH WILL PAY MORE!

-

OCR (The exam board for my course) are fucking thick in the head when it comes to anything computing.

- I get a mark or two for saying open source software is worse than their proprietary counterparts

- ALL open source software forks must also be made open source. They spend so much time going over the legal stuff BUT HAVE NEVER HEARD OF OPEN SOURCE LICENSING!

- One exam paper had a NOT gate picture with 2 inputs...

- I have to differentiate between portable and handheld! YOU MEAN HANDHELD DEVICES ARE NOT PORTABLE!?!!?!?

- At level 2, OCR say 1 MB = 1024 KB; at level 3, they say 1 MB = 1000 KB and 1 MiB = 1024 KiB, and expect you to differentiate. Why do you expect the wrong answer at level 2!?

- INFORMATION FORMATS AND STYLES ARE COMPLETELY DIFFERENT THINGS! If you look up synonyms for "style", "form" is there, and if you look up synonyms for "format", "style" is there.

- When asked for storage devices, I have to say "smartphone", "tablet", "desktop PC" - I mean yeah they store data but when you ask me for storage devices I will say "hard disk drive", "solid state drive", "SD card", etc. >.>
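For reference, the level 3 definition is the standard one: SI prefixes (kB, MB, GB) are powers of 1000, while the IEC binary prefixes (KiB, MiB, GiB) are powers of 1024. A small Python sketch of the difference, using a made-up 500 GB disk as the example:

```python
# SI (decimal) prefixes: powers of 1000.
SI = {"B": 1, "kB": 1000, "MB": 1000**2, "GB": 1000**3, "TB": 1000**4}
# IEC (binary) prefixes: powers of 1024.
IEC = {"KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}
UNITS = {**SI, **IEC}


def convert(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a size between any two of the units above, going via bytes."""
    return value * UNITS[from_unit] / UNITS[to_unit]


if __name__ == "__main__":
    print(convert(1, "MB", "kB"))               # 1000.0
    print(convert(1, "MiB", "KiB"))             # 1024.0
    print(convert(1, "MiB", "B"))               # 1048576.0
    print(round(convert(500, "GB", "GiB"), 2))  # 465.66, why a "500 GB" disk looks smaller
```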

I could probably go on and on about this...

I sure do love being asked to copy-paste existing HTML/JS/CSS and being asked to just tweak it here and there, and then wait for other people's incompetence in copy-pasting... I sure do love being stuck with this sort of "education" ._.

-

!rant but history

I found this old microcontroller: the TMS 1000 (from 1974). The specs: 100-400kHz clock speed, 4-bit architecture, 1kB ROM and 32 bytes (!) of RAM. According to the data sheet, you sent the program to TI and they gave you a programmed controller back; the program could not be updated once uploaded, but an external memory chip was possible.

I'm glad we have computers with more processing power and storage (and languages other than assembler); on the other hand, it enforced good debugging before deployment and efficient code.

Data sheet: http://bitsavers.org/components/ti/...

-

Just had Windows pop up with a notification saying “We’ve turned on storage sense for you”. Love how the default is “When Windows Decides”. Was that why countless users lost their data back in October 2018???

I think I’ll turn that off permanently 😂😂😂

-

Man, most memorable has to be the lead devops engineer from the first startup I worked at. My immediate team/friends called him Mr. DW - DW being short for Done and Working.

You see, Mr. DW was a brilliant devops engineer. He came up with excellent solutions to a lot of release, deployment, and data storage problems faced at the company (small genetics firm that ships servers with our analysis software on them). I am still very impressed by some of the solutions he came up with, and wish I had more time to study and learn about them before I left that company.

BUT - despite his brilliance, Mr. DW ALWAYS shipped broken stuff. For some reason this guy thought that testing only the single happiest of happy-path scenarios for whatever he was developing meant "everything will work as expected!" As soon as he said it was "done", golly, was it "done" as far as he was concerned. By fucking God was that never the truth.

So, let me provide a basic example of how things would go:

my team: "Hey DW, we have a problem with X, can you fix this?"

DW: "Oh, sure. I bet it's a problem with <insert long explanations we don't care about we just want it fixed>"

my team: "....uhh, cool! Looking forward to the fix!"

... however long later...

DW: "OK, it's done. Here you go!"

my team: "Thanks! We'll get the fix into the processing pipelines"

... another short time later...

my team: "DW, this thing is broken. Look at all these failures"

DW: "How can that be? It was done! I tested it and it worked!"

my team: "Well, the failures say otherwise. How did you test?"

DW: "I just did <insert super basic thing>"

my team: "...... you know that's, like, not how things actually work for this part of the pipeline, right?"

DW: "..... But I thought it was XYZ?"

my team: "uhhhh, no, not even close. Can you please fix and let us know when it's done and working?"

DW: "... I'll fix it..."

And rinse and repeat the "it's done.. oh wait, it's broken" a good half dozen times on average. But, anyways, the birth of Mr. Done and Working - very often stuff was done, but rarely did it ever work!

I'm still friends with my team mates, and whenever we're talking and someone says something is done, we just have to ask if it's done AND working. We always get a laugh, sadly at the expense of Mr. DW, but he dug his own hole in this regard.

Little cherry on top: the above happened with one of my friends. Mr. DW created installation media for one of our servers that was deployed in China. He tested it and "it was done!" Well, my friend flies out to China for the on-site installation. He plugs the install medium in, starts the install, and it crashes and burns in a fire. Thankfully my friend knew the system well enough to get everything installed and configured correctly without the broken install media, but that was definitely the most insane example of "it's done!" but sure as hell "it doesn't work!" we had from Mr. DW.

-

I seriously don't know why some people are still using Google Drive or Dropbox. Pied Piper is much better

www.piedpiper.com

-

After working for this company for only a couple years, I was tasked with designing and implementing the entire system for credit card encryption and storage and token management. I got it done, got it working, spent all day Sunday updating our system and updating the encryption on our existing data, then released it.

It wasn't long into Monday before we started getting calls from our clients who couldn't void or credit payments once they had been processed. Looking through the logs, I found the problem: tokens were getting crossed between companies, resulting in the wrong companies getting the wrong tokens. I was terrified. Fortunately, I had included safeguards tying each token to a specific company, so they were not able to process the wrong cards. We fixed it that night.
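The safeguard in that last paragraph, tying every token to the company that created it, can be sketched in a few lines of Python. This is a hypothetical illustration, not the author's system: `TokenVault`, `VaultEntry` and `TokenMismatch` are invented names, tokens come from `secrets.token_urlsafe`, and `card_ref` stands in for card data that a real vault would keep encrypted. The point is that a crossed token fails the ownership check instead of touching the wrong card.

```python
import secrets
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class VaultEntry:
    company_id: str
    card_ref: str  # stand-in for encrypted card data (hypothetical)


class TokenMismatch(Exception):
    """Raised when a company presents a token it does not own."""


class TokenVault:
    """Hypothetical in-memory vault: token -> (owning company, card reference)."""

    def __init__(self) -> None:
        self._entries: Dict[str, VaultEntry] = {}

    def tokenize(self, company_id: str, card_ref: str) -> str:
        # Random, unguessable token; the owning company is stored alongside it.
        token = secrets.token_urlsafe(16)
        self._entries[token] = VaultEntry(company_id, card_ref)
        return token

    def resolve(self, company_id: str, token: str) -> str:
        entry = self._entries.get(token)
        if entry is None:
            raise KeyError("unknown token")
        if entry.company_id != company_id:
            # The safeguard: a token that ended up with the wrong company is refused.
            raise TokenMismatch(f"token does not belong to {company_id}")
        return entry.card_ref


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("acme", "card-123")
    print(vault.resolve("acme", token))  # card-123
    try:
        vault.resolve("globex", token)  # a crossed token, as in the Monday incident
    except TokenMismatch as exc:
        print("refused:", exc)
```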

2012 laptop:

- 4 USB ports or more.

- Full-sized SD card slot with write-protection ability.

- User-replaceable battery.

- Modular upgradeable memory.

- Modular upgradeable data storage.

- eSATA port.

- LAN port.

- Keyboard with NUM pad.

- Full-sized SD card slot.

- Full-sized HDMI port.

- Power, I/O, charging, network indicator lamps.

- Modular bay (for example Lenovo UltraBay)

- 1080p webcam (Samsung 700G7A)

- No TPM trojan horse.

2024 laptop:

- 1 or 2 USB ports.

- Only MicroSD card slot. Requires fumbling around and has no write-protection switch.

- Non-replaceable battery.

- Soldered memory.

- Soldered data storage.

- No eSATA port.

- No LAN port.

- No NUM pad.

- Micro-HDMI port or uses USB-C port as HDMI.

- Only power lamp. No I/O lamp so user doesn't know if a frozen computer is crashed or working.

- No modular bay

- 720p webcam

- TPM trojan horse (Jody Bruchon video: https://youtube.com/watch/... )

- "Premium design" (who the hell cares?!)

-

Oh fucking Huawei.

Fuck you.

Inventory:

- Honor 6x (BLN-L22C675)

- Has EMUI4.1 Marshmallow

- Cousin brother 'A' (has bricking XP!)

- Uncle 'K'

- Has Mac with Windows VM

Goal:

- Stock as LineageOS / AOSP

Procedure (fucking seriously):

- Find XDA link to root H6X

- Go to Huawei page and fill out form

- Receive and use bootloader code

- Find latest TWRP

- Flash latest TWRP

- TWRP not working? Bootloops

- XDA search "H6X boot to recovery"

- Find and try modded TWRP

- TWRP fails, no bootloop

- Find & flash TWRP 3.1.0

- Yay! TWRP works

- Find and download LineageOS and SuperSU

- Flash via TWRP

- Yay! Success.

- Attempt boot

- Boot fails. No idea why

- Go back to TWRP

- TWRP gives shitload of errors: "cannot mount /data, storage etc."

- Feel fucked up

- Notice that userdata partition exists, but FSTAB doesn't take

- Remembers SuperSU modded boot image and FSTABS!

- Fuck SuperSU

- Attempt to mod boot image

- Doesn't work (modded successfully, but no change)

- Discover Huawei DLOAD, the installer for "UPDATE.APP" OTAs (note: each full OTA is 2+ GB zipped)

- Find, download, fail on 4+ OTAs

- Discover "UPDATE.APP Extractor", which runs on Windows (note: UPDATE.APP is a custom format, different per H6X model)

- Uses 'K''s VM to test

- My H6X model does not have a predefined format

- Process to get format requires TWRP, which is not working

- FAIL HERE

- Discover "Firmware Finder", a Windows app to find Huawei firmwares

- Tries 'K''s VM

- Fails with 1 OTA

- Downloads another firmware ZIP

- Unzips and tries to use OTA

- Works?!

- Boots successfully?!

- Seems to have EMUI 5.0 Nougat

- Downloads, flashes TWRP

- TWRP not working AGAIN?

- Go back to XDA page

- Find that TWRP on EMUI 5 - NO

- Find rollbacks for EMUI5 -> EMUI4

- Test, fail 2-4 times (Massive OTAs)

- DLOAD accepts this one?!!!

- I HAVE ORIG AGAIN!!!

- Re-unlock and reflash TWRP

- Realise that ROMs aren't working on EMUI 4.1; find TWRPs for EMUI5

- Find and fail with 2-3 OTAs (note: had removed old OTAs to free space on the 32GB Chromebook)

- In anger, flash one with TWRP instead of DLOAD (which checks compatibility)

- Works! Same wasn't working with DLOAD

- Find and flash a custom TWRP, as old one still exists (not wiped in flash)

- Try flashing LineageOS

- LineageOS stuck in boot

- Try flashing AOSP

- Same

- Try flashing Resurrection Remix

- Same

- Realise that I need the stock EMUI5 vendor

- Realise that the firmware I installed wasn't for my device, so it's not working

- FUCK NO MORE LARGE DLs

- Try another custom TWRP

- Begin getting '/cust mounting' errs

- Try reflashing EMUI5 with TWRP

- Doesn't work

- Try DLOADing EMUI5

- Like before, incompatibility

- DLOAD EMUI4

- Reunlock and reflash TWRP

- WRITE THIS AS A BREAK

AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAARRRRRRRRRRRRRRRRRRRRRRRRGGGGGGGGGGGGGGGGGGGGGGGGGHHHHHHHHHHHHHH7 -

Necessary context for this rant if you haven't read it already: https://devrant.com/rants/2117209

I've just found my LUKS-encrypted flash drive. It was never stolen.. it somehow got buried in the depths of my pockets. No idea how I didn't look in my jacket the entire time since that incident happened... But I finally found it. None of my keys were ever compromised, and several backups that were stored on it have now been recovered too. Time to dd this flash drive onto a more permanent storage medium again for archival. Either way, it did get me thinking about the security of this drive, and I'll implement some improvements in the next iteration of it.
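For the "dd it onto a more permanent storage medium" step, a minimal Python sketch of a dd-style raw copy that also verifies the archive with SHA-256 might look like this. The function names and the 1 MiB chunk size are illustrative assumptions, not anything from the original post. Re-reading the image right after writing may be served from the page cache, so this mainly checks the copy itself; re-running `sha256_of` after a remount gives a stronger check of the medium.

```python
import hashlib
import os


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file or block device in fixed-size chunks so it never sits fully in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def archive_verified(device_path: str, image_path: str, chunk_size: int = 1 << 20) -> bool:
    """Copy device_path byte-for-byte to image_path (like `dd`) and verify the copy.

    The source is hashed while it is being copied, so it is only read once.
    Returns True when the written image hashes to the same SHA-256 value.
    """
    source_digest = hashlib.sha256()
    with open(device_path, "rb") as src, open(image_path, "wb") as dst:
        for chunk in iter(lambda: src.read(chunk_size), b""):
            dst.write(chunk)
            source_digest.update(chunk)
        dst.flush()
        os.fsync(dst.fileno())  # push the image to storage before re-reading it
    return source_digest.hexdigest() == sha256_of(image_path)
```

Usage would be something like `archive_verified("/dev/sdX", "flashdrive.img")` run as root, where `/dev/sdX` is a placeholder for whatever device node the drive actually gets.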

For now though.. happy ending. So relieved to see that data back...

Full quality screenshot: https://nixmagic.com/pics/...


Long rant ahead.. 5k characters pretty much completely used. So feel free to have another cup of coffee and have a seat 🙂

So.. a while back this flash drive was stolen from me, right. Well it turns out that other than me, the other guy in that incident also got to the police 😃

Now, let me explain the smiley face. At the time of the incident I was completely at fault. I had no real reason to throw a punch at this guy, and my only "excuse" would be that I was drunk as fuck; I've never drunk as much as I did that day. Needless to say, not a very good excuse, and I don't treat it as such.

But that guy, and whoever else he was with, was the one (or at least part of the group) that stole that flash drive from me.

Context: https://devrant.com/rants/2049733 and https://devrant.com/rants/2088970

So that's great! I thought that I'd lost this flash drive and most importantly the data on it forever. But just this Friday evening as I was meeting with my friend to buy some illicit electronics (high voltage, low frequency arc generators if you catch my drift), a policeman came along and told me about that other guy filing a report as well, with apparently much of the blame now lying on his side due to him having punched me right into the hospital.

So I told the cop, well, most of the blame is on me really, I shouldn't have started that fight to begin with, and for that matter shouldn't have drunk that much, yada yada yada.. Anyway, he said that this case could just be closed then, and walked away (good grief, I had that friend over to buy those electronics at that exact time!). Maybe I'd just come along to the police office next week to file a proper explanation, but maybe even that won't be needed.

So yeah, great. But for me there's more to it, of course: that other guy knows more about that flash drive and the data on it that I care about. So I figured, let's go to the police office and arrange an appointment with this guy. And I got thinking about the technicalities in case I get that drive back and want to recover its data.

So I've got 2 phones: 1 rooted but reliant on the other, unrooted one for a data connection to my home (because Android Q, and no bootable TWRP available for it yet). Theoretically there's also a laptop that I can put Arch on, no problem, but its display backlight is cooked. So if I want to bring that one I'd have to rely on a display from them. Good luck getting that done. No option. And then there's a flash drive that I can set up with a portable Arch install and boot from one of their machines, but on that, even more so: good luck getting that done. So my phones are my only option.

Just to be clear, the technical challenge is to read that flash drive and get as much data off of it as possible. The drive is 32GB and has about 16GB used, so I'll need at least that much on whatever I decide to store a copy on, assuming unchanged contents (unlikely). My Nexus 6P, which has a VPN profile to connect to my home network, has 32GB of storage. So theoretically I could use dd and pipe it to gzip to compress the zeroes. That'd give me a resulting file roughly the size of the actual usage on the flash drive. But just in case.. my OnePlus 6T has 256GB of storage, but it's got no root access.. so I don't have block access to an attached flash drive from it. Worst case, I'd have to open a WiFi hotspot on it and get an sshd going for the Nexus to connect to.

And there we have it! A large storage device, no root access, that nonetheless can make use of something else that doesn't have the storage but satisfies the other requirements.
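The "dd piped to gzip" idea can be sketched with only the Python standard library; it does roughly what `dd if=/dev/sdX bs=4M | gzip > image.gz` does. The 4 MiB chunk size and the function names are assumptions for illustration. One caveat: only regions that really are zeroes on the raw device shrink this much, while LUKS ciphertext looks random and may barely compress at all.

```python
import gzip
import shutil


def image_to_gzip(source_path: str, dest_path: str, chunk_size: int = 4 << 20) -> None:
    """Stream a block device (or any file) into a gzip image, like `dd | gzip`."""
    with open(source_path, "rb") as src, gzip.open(dest_path, "wb") as dst:
        shutil.copyfileobj(src, dst, chunk_size)  # constant memory, chunk by chunk


def restore_from_gzip(image_path: str, target_path: str, chunk_size: int = 4 << 20) -> None:
    """The reverse direction, like `gunzip -c image.gz | dd of=TARGET`."""
    with gzip.open(image_path, "rb") as src, open(target_path, "wb") as dst:
        shutil.copyfileobj(src, dst, chunk_size)
```

Streaming in chunks keeps memory use flat, which matters on a phone that could never hold a 32GB image in RAM.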

And then we have things like parted to read out the partition table (and if unchanged, cryptsetup to read out LUKS). Now, I don't know if Termux has these and frankly I don't care. What I need for that is a chroot. But I can't just install Arch x86_64 on a flash drive and plug it into my phone. Linux Deploy to the rescue! 😁

It can make chrooted installations of common distributions on arm64, and it comes extremely close to actual Linux. With some Linux magic I could make that able to read the block device from Android and do all the required sorcery with it. Just a USB-C to 3x USB-A hub required (which I have), with the target flash drive and one to store my chroot on, connected to my Nexus. And fixed!
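Before reaching for parted and cryptsetup inside the chroot, a quick read-only sanity check could be done in plain Python: parse the classic MBR partition table and look for the LUKS magic bytes (`LUKS\xba\xbe`, followed by a big-endian version number) at the start of the whole device or of each partition. This sketch assumes an MBR table with 512-byte sectors (a GPT disk would show a single protective entry of type 0xEE instead), and it is no replacement for `parted` or `cryptsetup luksDump`.

```python
import struct

SECTOR_SIZE = 512
LUKS_MAGIC = b"LUKS\xba\xbe"  # shared by LUKS1 and LUKS2 headers


def luks_version(header: bytes):
    """Return 1 or 2 if `header` starts with a LUKS header, otherwise None."""
    if len(header) < 8 or header[:6] != LUKS_MAGIC:
        return None
    (version,) = struct.unpack_from(">H", header, 6)  # big-endian uint16 after the magic
    return version


def read_mbr_partitions(first_sector: bytes):
    """Parse the four primary entries of a classic MBR partition table."""
    if len(first_sector) < SECTOR_SIZE or first_sector[510:512] != b"\x55\xaa":
        raise ValueError("no MBR boot signature found")
    partitions = []
    for index in range(4):
        entry = first_sector[446 + 16 * index : 446 + 16 * (index + 1)]
        part_type = entry[4]
        start_lba, sector_count = struct.unpack_from("<II", entry, 8)  # little-endian
        if part_type != 0:  # type 0 marks an unused slot
            partitions.append(
                {"index": index + 1, "type": part_type,
                 "start_lba": start_lba, "sectors": sector_count}
            )
    return partitions


def inspect_device(path: str):
    """Return (byte offset, LUKS version or None) for the device or each MBR partition."""
    with open(path, "rb") as dev:
        first_sector = dev.read(SECTOR_SIZE)
        whole_disk = luks_version(first_sector)
        if whole_disk is not None:  # LUKS written straight onto the device, no table
            return [(0, whole_disk)]
        results = []
        for part in read_mbr_partitions(first_sector):
            offset = part["start_lba"] * SECTOR_SIZE
            dev.seek(offset)
            results.append((offset, luks_version(dev.read(8))))
        return results
```

If the table and header look unchanged, that is a good hint the real tools will be able to open the volume.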

Let's see if I can get that flash drive back!

P.S.: if you're into electronics and worried about getting stuff like this stolen, customize it. I happen to know one particular property of that flash drive that I can use for verification, although it wasn't explicitly customized. But for instance, that flash drive had a decorative LED. Those are current-limited by a resistor. The factory default might be, say, 200 ohms; replace it with one of a higher value. That way you can verify without any doubt that it's yours. Along with other extra security additions, this is one of the things I'll be adding to my "keychain v2".
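The resistor trick is easy to sanity-check with Ohm's law: the LED current is the supply voltage minus the LED's forward voltage, divided by the series resistance. A small sketch, assuming a 5 V USB supply and a forward voltage of about 2 V (typical for a red LED, but an assumption here; the 200 ohm figure is the post's own example, and the tolerance check is a hypothetical helper):

```python
def led_current_ma(supply_v: float, forward_v: float, resistor_ohm: float) -> float:
    """LED current through a series resistor, in milliamps (Ohm's law)."""
    if resistor_ohm <= 0:
        raise ValueError("resistance must be positive")
    if supply_v <= forward_v:
        return 0.0  # below the forward voltage the LED effectively does not conduct
    return (supply_v - forward_v) / resistor_ohm * 1000.0


def looks_customised(measured_ohm: float, factory_ohm: float, tolerance: float = 0.05) -> bool:
    """True if the measured value lies outside the factory part's tolerance band."""
    return abs(measured_ohm - factory_ohm) > factory_ohm * tolerance
```

Under those assumptions, 200 ohms gives about 15 mA and a 1 kohm replacement about 3 mA, so the LED gets dimmer but stays lit; measuring the resistor with a multimeter is the more direct check.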

So, the uni hires a new CS lecturer. He is teaching 230, the second CS class in the CS major. Two weeks into the semester, he walks in and proceeds to do his usual fumbling around on the computer (with the projector on).

Then, he goes to his Google Drive, which is empty mostly, and tells us that he accidentally wrote a program that erased his entire hard drive and his internet storage drives (Google, box, etc.)...

I mean, way to build credibility, guy... Then he tells us that he has a backup of everything 500 miles away, where he moved from. He also says that he only knows C (we only had formally learned Java so far), but hasn't actually coded (correction: typed!) in 20+ years, because he had someone do that for him and he has been learning Java over the past two weeks.

The rest of the semester followed as expected: he never had any lecture material and would ramble for an hour. Every class, he would pull up a new .java file and type code that rarely ran, and he had no debugging skills. We would spend 15 minutes helping him with syntax issues (namely parentheses and semicolons) to get his program running, and then there would be a logic issue, in a data structures class.

He knew nothing of our course sequence or what we had learned up to that point, and would lecture about how we would be terrible programmers because we did not do something the way he wanted, though he failed to give us expectations or spend the five minutes to teach us basic things (run-time complexity, binary, pseudocode, etc.). His assignments were not related to the material, and when they were, they were a couple of weeks off. Also, he never knew which class we were and would ask whether we were 230 or 330 at the end of a lecture...

I learned next to nothing from him (though I ended up with a B+), but I'm thankful to be taking advanced data structures from someone who knows their stuff. He was awful. It was strange. Also, why did the uni not tell him what he needed to be teaching?

End rant.

I do not like the direction laptop vendors are taking.

New laptops tend to feature fewer ports, making the user more dependent on adapters. Similarly to smartphones, this is a detrimental trend initiated by Apple and replicated by the rest of the pack.

As of 2022, many mid-range laptops feature just one USB-A port and one USB-C port, resembling Apple's toxic minimalism. In 2010, mid-class laptops commonly had three or four USB ports. I have even seen an MSI gaming laptop with six USB ports. Now, much of the edge area is wasted "clean" space.

Sure, there are USB hubs, but those only work well with low-power devices. When attaching two external hard drives to transfer data between them, they might not be able to spin up due to insufficient power from the USB port or undervoltage caused by the impedance (resistance) of the USB cable between the laptop's USB port and hub. There are USB hubs which can be externally powered, but that means yet another wall adapter one has to carry.
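The power argument can be put into numbers. By default a USB 2.0 port only has to supply 500 mA and a USB 3.x port 900 mA, and the cable's resistance (VBUS and ground conductors together) drops I × R volts before the hub sees the supply. The sketch below adds that up. The 1 A spin-up current per drive, the 0.3 ohm round-trip cable resistance and the 4.5 V cut-off are illustrative assumptions, not measured values, and it ignores USB-C current advertisement and dedicated charging ports, which can allow more.

```python
# Default current a port must be able to supply, per the USB 2.0 and 3.x specs.
PORT_LIMIT_A = {"usb2": 0.5, "usb3": 0.9}


def hub_budget(port: str, loads_a, cable_ohm: float,
               supply_v: float = 5.0, min_v: float = 4.5) -> dict:
    """Estimate whether a bus-powered hub can run the given loads.

    loads_a   -- peak current of each attached device, in amps
    cable_ohm -- round-trip resistance of the laptop-to-hub cable
    min_v     -- hypothetical voltage below which a drive may fail to spin up
    """
    total_a = sum(loads_a)
    v_at_hub = supply_v - total_a * cable_ohm  # Ohm's law: V_drop = I * R
    return {
        "total_a": total_a,
        "over_port_limit": total_a > PORT_LIMIT_A[port],
        "v_at_hub": v_at_hub,
        "undervoltage": v_at_hub < min_v,
    }
```

For two hard drives spinning up at once on a USB 3 port, `hub_budget("usb3", [1.0, 1.0], 0.3)` reports 2 A against a 0.9 A budget and roughly 4.4 V at the hub, either of which could explain drives that refuse to spin up.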

Non-replaceable batteries, the shortest-lived component, mean difficult repairs and no more spare batteries or extra-sized battery packs. When the battery wears out, one might have to waste four hours at a repair shop for a replacement that would have taken a minute on a 2010 laptop.

The SD card slot is being replaced with an inferior MicroSD slot or removed entirely. This is especially bad for photographers and videographers, who frequently plug memory cards into their laptops. SD cards are far more comfortable to handle than MicroSD cards, and no, bulky external adapters that occupy the device's only USB port and protrude cannot replace an integrated SD card slot.

Most mid-range laptops in the early 2010s also had a LAN port for immediate interference-free connection. That is now reserved for gaming-class / desknote laptops.

Obviously, components like RAM and storage are far more difficult to upgrade in more modern laptops, or not possible at all if soldered in.

Touch pads increasingly have the buttons underneath the touch surface rather than separate, meaning one has to be careful not to move the pointer while clicking. Otherwise, it could cause an unwanted drag-and-drop gesture. Some touch pads are smart enough to detect when a user intends to click, and lock the movement, but not all. A right-click drag-and-drop gesture might not be possible due to the finger on the button being registered as touch. Tap-to-click can be unreliable and sluggish. While one should have external peripherals anyway, one might not always have brought them along. The fallback input device is now even less comfortable.

Some laptop vendors include a sponge sheet that they want users to put between the keyboard and the screen before folding it, "to avoid damaging the screen", even though making it two millimetres thicker could do the same without relying on a sponge sheet. So they want me to carry that bulky thing everywhere around? How about no?

That's the irony. They wanted to make laptops lighter and slimmer, but that made them adapter- and sponge sheet-dependent, defeating the portability purpose.

Sure, the CPU performance has improved. Vendors proudly show off in their advertisements which generation of Intel Core they have this time. As if that is something users especially care about. Hoo-ray, generation 14 is now yet another 5% faster than the previous generation! But what is the benefit of that if I have to rely on annoying adapters to get the same work done that I could formerly do without those adapters?

Microsoft has also copied Apple in demanding an internet connection before Windows 11 will set up. The setup screen says "You will need an Internet connection…": no, technically I would not. What technically stands in the way of Windows 11 setting up offline? After all, previous Windows versions like Windows 95 could do so 25 years earlier, and so could far more recent versions. Thankfully, Linux distributions do not do that.

If "new" and "modern" mean more locked-in, less practical and harder to repair, I would rather have "old" than "new".

You know the worst thing about being a freelancer? You're expected to wear every fucking hat and you don't get normal hours.

Over the past few days I have been working with a client of a client, attempting to fix his server. He's running CentOS on VMware and somehow ended up breaking the system.

Upon inspection there was no way to fix his system remotely. It wouldn't even boot in recovery mode. So we've been attempting to recover his data so that we can reinstall CentOS and not have to start completely from scratch.