Search - "memory"

-

iOS: Hey, human wanna hear a joke?

Me: Sure.

iOS: Out of Memory.

Me: What?

iOS: I ain't explaining shit.

-

New guy: There's a memory leak in my code.

Me: You need to free the memory you previously allocated.

New guy: Already did that, deleted everything from my "Downloads" folder and some stuff from my Desktop.

Me: *Long Pause* Have you tried "rm -rf /" yet?

-

A university that teaches students

C++ without teaching an understanding of memory management is pointerless.

-

After 2 hours of wiring/debugging/rewiring, I have my EEPROM programmer halfway done. Currently it can only read locations in memory. Next step: make it programmable.

(For those of you who don't know, EEPROM stands for Electrically Erasable Programmable Read-Only Memory.)

-

The time my Java EE technology stack disappointed me most was when I noticed some embarrassing OutOfMemoryError in the log of a server which was already in production. When I analyzed the garbage collector logs I got really scared seeing the heap usage was constantly increasing. After some days of debugging I discovered that the terrible memory leak was caused by a bug inside one of the Java EE core libraries (Jersey Client), while parsing a stupid XML response. The library was shipped with the application server, so it couldn't be replaced (short of installing a different server). I rewrote my code using the Restlet Client API and the memory leak disappeared. What a terrible week!

-

When you create a bunch of objects in Java and it crashes because you're used to the memory usage of C's structs.

3

3 -

My first personal computer in 1988: the ZX Spectrum +.

48 KBytes of memory.

The European opponent of Commodore 64. Sic!

-

Googled "prevent memory leaks in delphi".

Came across a library called TCondom.

Talk about naming your classes aptly.

-

Print 'Hello World' in ReactJS.

# Time - dies

# Memory - cries in silence

# C - gives an evil laugh

-

Long ago, like 5 years, I made an app in Symbian for my ex-GF to track her periods. The application predicted the date of the next period based on her cycle.

However, after 2 months of usage she told me that the application was flashing that she was pregnant. She scared the shit out of herself and scared the hell out of me as well.

Later I found out that the variable I used to store the number of days between the last period and the current date was not capable of storing a value of more than 40 (I don't know how), which caused a negative value to be shown.

Early days of my programming. Shit happens.

-

Application has had a suspected memory leak for years. Tech team got developers THE EXACT CODE that caused it. Few months of testing go by, telling us they're resolving their memory leak problem (finally).

Today: yeah, we still need restarts because we don't know if this new deployment will fix our memory leak, we don't know what the problem is.

WHAT THE FUCK WERE YOU DOING IN THE LOWER REGIONS FOR THREE FUCKING MONTHS?!?!?! HAVING A FUCKING ORGY???????????????

My friends took the time to find your damn problem for you AND YOU'RE GOING TO TELL ME YOU DON'T KNOW WHAT THE PROBLEM IS???

It was in lower regions for 3 MONTHS and you don't know how it's impacting memory usage?!?!?! DO YOU WANT TO STILL HAVE A JOB? BECAUSE IF NOT, I CAN TAKE CARE OF THAT FOR YOU. YOU DON'T DESERVE YOUR FUCKING JOB IF YOU CAN'T FUCKING FIX THIS.

Every time your app crashes, even though I don't need to get your highest level boss on anymore for approval to restart your server, I'M GOING TO FUCKING CALL HIM AND MAKE HIM SEE THAT YOU'RE A FUCKING IDIOT. Eventually, he'll get so annoyed with me, your shit will be fixed. AND I WON'T HAVE TO DEAL WITH YOUR USELESS ASS ANYMORE.

(Rant directed at project manager more than dev. Don't know which is to blame, so blaming PM)

-

Me and my girlfriend's pillow talk about memory leaks

Me: **... So garbage collection is a means of stopping a memory leak from occurring

Gf: what's a memory leak?

Me: a memory leak is like when you want a pizza, and the guy gives you pizza. But you don't eat the pizza and you ask for another pizza. You keep doing this repeatedly. Until the pizza guy realizes what you're doing and decides to kill you. He then takes back all his pizzas

Gf: why would you do that though?

Me: Lazy ass programmers who don't clean up after themselves.

-

When valgrind (C Memory allocation error detection tool) aborts due to a memory allocation error...

-

I like memory hungry desktop applications.

I do not like sluggish desktop applications.

Allow me to explain (although, this may already be obvious to quite a few of you)

Memory usage is stigmatized quite a lot today, and for good reason. Not only is it an indication of poor optimization, but not too many years ago, memory was a much more scarce resource.

And something that started as a joke in that era is true in this era: free memory is wasted memory. You may argue, correctly, that free memory is not wasted; it is reserved for future potential tasks. However, if you have 16GB of free memory and don't have any plans to begin rendering a 3D animation anytime soon, that memory is wasted.

Linux understands this. Linux actually has three states for memory to be in: used, free, and available. Used and free memory are the usual. However, Linux automatically caches files that you use and keeps them in RAM as "available" memory. Available memory can be claimed at any time by programs, which simply evicts whatever was previously occupying it.
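To make the used/free/available distinction concrete, here is a minimal C++ sketch that parses text in the `/proc/meminfo` layout and reports roughly how much memory the kernel is holding as cache that it could hand back on demand. The helper names `parse_meminfo` and `reclaimable_kb` are made up for this illustration, and treating `MemAvailable - MemFree` as "reclaimable" is a simplifying assumption, since `MemAvailable` is itself only a kernel estimate.

```cpp
#include <cassert>
#include <istream>
#include <map>
#include <sstream>
#include <string>

// Parse lines of the form "Key:   value kB" (the /proc/meminfo layout)
// into a map of key -> value. Lines that do not match are skipped.
std::map<std::string, long long> parse_meminfo(std::istream& in) {
    std::map<std::string, long long> fields;
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream row(line);
        std::string key;
        long long value = 0;
        if (row >> key >> value && !key.empty() && key.back() == ':') {
            key.pop_back();  // drop the trailing ':'
            fields[key] = value;
        }
    }
    return fields;
}

// Rough estimate (assumption of this sketch) of memory that is in use as
// cache but can be reclaimed on demand: MemAvailable minus MemFree, in kB.
long long reclaimable_kb(const std::map<std::string, long long>& fields) {
    assert(fields.count("MemAvailable") == 1 && fields.count("MemFree") == 1);
    return fields.at("MemAvailable") - fields.at("MemFree");
}
```

On a Linux machine the input stream would be a `std::ifstream` opened on `/proc/meminfo`; any text with the same layout works for experimenting.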

And as you well know, RAM is much faster than even an SSD. Programs which are memory heavy COULD (< important) be holding things in memory rather than having them sit on the HDD, waiting to be slowly retrieved. I would much rather a web browser take up 4 GB of RAM than sit around waiting for it to read the cached images off my hard drive.

Now, allow me to reiterate: unoptimized programs still piss me off. There's no need for that electron-based webcam image capture app to take three gigs of memory upon launch. But I love it when programs use the hardware I spent money on to run smoother.

Don't hate a program simply because it's at the top of task manager.

-

that's quite accurate :) ofc there are exceptions (looking at you two naughty bois, metaspace and off-heap allocations!), but it's true for the most of it :)

5

5 -

Woohoo! I fixed a huge memory leak in our app! ... In one class.

Time for noise cancelling headphones, 80s hacker music, tons of caffeine, and more leak hunting. :)

-

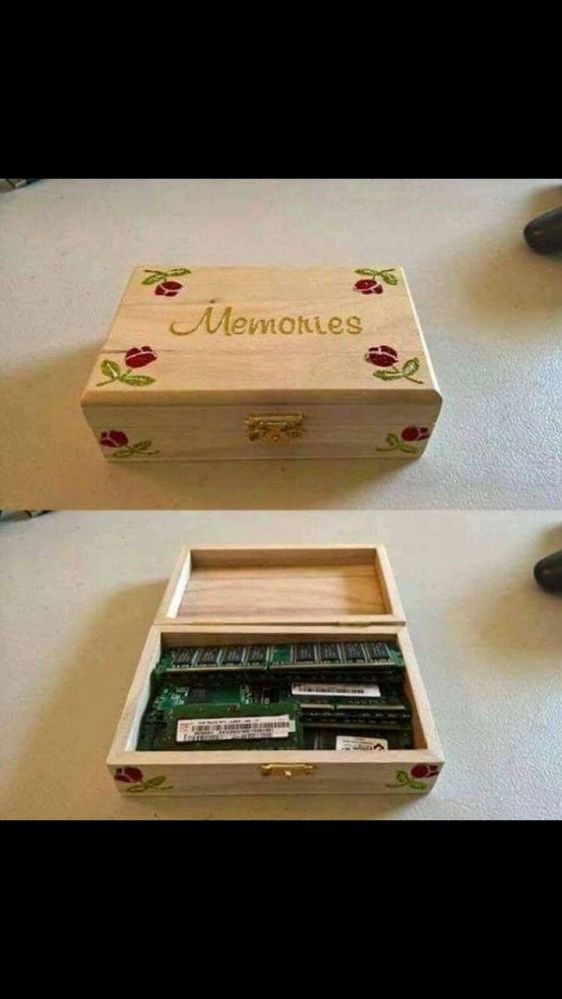

I found something really interesting depicted in the image.

It is because of memory alignment / padding.

I could explain it to you guys, but this url would do a far better job:

https://kalapos.net/Blog/ShowPost/...
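Since the image is not reproduced here, the following is only a generic C++ sketch of the padding effect, not a reconstruction of what the post showed. The struct names `Loose` and `Tight` and the helper `padding_bytes` are hypothetical; the exact sizes depend on the compiler and platform, so the code prints nothing and only exposes the numbers for inspection.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Members ordered small, large, small: the compiler typically inserts
// padding before `b` (to align it) and after `c` (to round up the size).
struct Loose {
    std::uint8_t  a;
    std::uint64_t b;
    std::uint8_t  c;
};

// Same members, largest first: the two small members can share the tail.
struct Tight {
    std::uint64_t b;
    std::uint8_t  a;
    std::uint8_t  c;
};

// Bytes of padding in T, given the summed size of its members.
template <typename T>
std::size_t padding_bytes(std::size_t payload_bytes) {
    assert(sizeof(T) >= payload_bytes);  // a struct is never smaller than its members
    return sizeof(T) - payload_bytes;
}
```

Both structs carry 10 bytes of payload. On a typical 64-bit target `sizeof(Loose)` comes out larger than `sizeof(Tight)`, which can be checked with `padding_bytes<Loose>(10)` and `padding_bytes<Tight>(10)`.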

-

Okay, just wrote a program with memory allocation inside an accidental infinite loop and by the time I was able to kill it, it had already claimed 86% of my memory. Scared the shit out of me because my OS was CRAWLING for a while.

-

Programmers then:

No problem NASA mate, we can use these microcontrollers to bring men to the moon no problem!

Programmers now:

Help Stack Overflow, my program is kill.. isn't 90GB (looking at you Evolution) and 400GB of virtual memory (looking at you Gitea) for my app completely normal? I thought that unused memory was wasted memory!1!

(400GB in physical memory is something you only find in the most high-end servers btw)

-

My brother is just like a f*cked up program:

Fortnite > Movies > TV Series > Fortnite > Movies > TV Series > F...

Yes, infinite loop and memory leak at its best.

-

Dev: Hi Guys, we've noticed on crashlytics that one of your screens has a small crash. Can you look?

Me: Ok we had a look, and it looks to us to be a memory leak issue on most of the other screens. Homepage, Search, Product page etc. all seem to have sizeable memory leaks. We have a few crashes on our screens saying iPhone 11s (which have 4 GB of RAM) are crashing with only 1% of RAM left.

What we think is happening is that we have weak references to avoid circular dependencies. Our weak references are most likely the only things the system would be able to free up, resulting in our UI not being able to contact the controller, breaking everything. Because of the custom libraries you built that we have to use, we can't really catch this.

There's not really a lot we can do. We are following Apple's recommendations to avoid circular dependencies and memory leaks. The instruments say our screens are behaving fine. I think you guys will have to fix the leaks. Sorry.

Dev 1: hhhmm, what if you create a circular dependency? Then the UI won't lose any of the data.

Dev 2: Have you tried looking at our analytics to understand how the user is getting to your screens?

=================================

I've been sitting here for 15 minutes trying to figure out how to respond before they come online. I am fucking horrified by those responses to "every one of your screens have memory leaks"

-

When you Valgrind your program for the first time for memory leaks and get "85000127 allocs, 85000127 deallocs, no memory leaks possible"

4

4 -

Today I have discovered that my fingers have become so accustomed to writing the word "vertex", that I can no longer write "vector" on the first try...

-

Fucking Windows... If you don't have enough memory for everything you're supposed to do, then kill whatever you want, but not the fucking graphics driver.

What should I do then? Close something different? Fuckin Monkeys.

-

Dear customer,

You pointed out that the program is failing due to insufficient memory.

"Memory" is the RAM in your laptop.

"Insufficient" means YOUR FUCKING RAM CAN'T HOLD THE ENTIRE 2 TB DATABASE!

GTFO and RTFM!

Best regards

me

-

Achievement unlocked: malloc failed

😨

(The system wasn't out of memory, I was just an idiot and allocated size*sizeof(int) to an int**)
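The mistake described above (sizing an `int**` allocation with `sizeof(int)`) is commonly avoided by letting the pointer itself determine the element size. The sketch below shows that idiom; `make_table` and `free_table` are hypothetical helpers written for this illustration, not the original poster's code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Free the first `rows` row buffers, then the row-pointer array itself.
void free_table(int** table, std::size_t rows) {
    for (std::size_t r = 0; r < rows; ++r) {
        std::free(table[r]);
    }
    std::free(table);
}

// Allocate a zero-initialised rows x cols table of ints.
// Returns nullptr (after cleaning up) if any allocation fails.
int** make_table(std::size_t rows, std::size_t cols) {
    assert(rows > 0 && cols > 0);
    // `sizeof *table` is sizeof(int*): the size follows the pointer's type,
    // so it stays correct even if the element type changes later. Writing
    // sizeof(int) here under-allocates wherever pointers are wider than int.
    int** table = static_cast<int**>(std::calloc(rows, sizeof *table));
    if (!table) return nullptr;
    for (std::size_t r = 0; r < rows; ++r) {
        table[r] = static_cast<int*>(std::calloc(cols, sizeof *table[r]));
        if (!table[r]) {
            free_table(table, r);  // rows 0..r-1 were allocated successfully
            return nullptr;
        }
    }
    return table;
}
```

In idiomatic C++ a `std::vector<std::vector<int>>` would sidestep the problem entirely; the `malloc`-family version is kept here because that is what the rant is about.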

I'd like to thank myself for this delightful exercise in debugging, the GNU debugger, Julian Seward and the rest of the valgrind team for providing the necessary tools.

But most of all, I'd like those three hours of my life back 😩

-

My last phone with a keyboard, bought not so long ago. I miss ssh to my srv over a phone sometimes. But I do not miss hacking Android to move graphics memory to standard memory to boost OS speed...

-

Our team makes software in Java, and for technical reasons we require 1GB of memory for the JVM (with the -Xmx switch).

If you don't have enough free memory the app just exits without any sign, because the JVM couldn't bite off a big enough chunk of memory.

Many days later and you just stand there without a clue as to why the launcher does nothing.

Then you remember this constraint and start to close every memory heavy app you can think of. (I'm looking at you Chrome) No matter how important those spreadsheets or Illustrator files. Congratulations, you just freed up 4GB of memory, things should work now! WRONG!

But why you might ask. You see we are using 32-bit version of java because someone in upper management decided that it should run on any machine (even if we only test it on win 7 and high sierra) and 32 is smaller than 64 so it must be downwards compatible! we should use it! Yes, in 2019 we use 32-bit java because some lunatic might want to run our software on a Windows XP 32-bit OS. But why is this so much of a problem?

Well.. the 32-bit version of Java requires CONTIGUOUS FREE SPACE IN MEMORY TO EVEN START... AND WE ARE REQUESTING ONE GIGABYTE!!

So you can shove your swap and closed applications up your ass but I bet you that you won't get 1GB contiguous memory that way!

Now there will be a meeting about this issue and another related to the issues with 32-bit JVM tomorrow. The only problem is that this issue only occurs if you have used up most of your memory and then try to open our software. So upper management will probably deem this issue minor and won't allow us to upgrade to 64-bit... in 20fucking19

-

- Let's write some code to check for memory leaks

- Oh shit, memory is leaking like crazy

- In fact the program crashes within 10 minutes

*Some hours of debugging and not finding the cause later*

- Starts thinking about the worst

- Hell yeah, the memory leak is caused by the code that checks for memory leaks. But fucking how

- Finds out the leak is caused by the implementation of the std C lib

- In the fucking printf() function

- Proceeds to cry

-

*Keyboard breaks*

*Calls Desktop Support*

Me: Hey, my keyboard is broken. I want to replace it.

*Support guy sends new keyboard*

*Calls support again*

Me: Hey, the shift key on the left side of the new keyboard is broken.

I don't know who came up with this keyboard design, but that person really wanted to see developers who write in camel case suffer while their muscle memory adapts.

-

Well done, Google Chrome, you ate most of my 16 GB of RAM! >:v

P.S. I said "most of" because of the memory allocation table

-

Yes of course, my problem is not having enough memory to throw an exception of not enough memory ...

5

5 -

God damn it LastPass, how the hell do you get a memory leak and an infinite loop in a fucking browser extension?! Using 7GB of RAM and all 8 cores @ 3.4 GHz!!

-

Windows 10 wants to ruin my life by consuming almost 70% of memory for itself from 4 gigs.

No application is running and still consuming that much of memory. Now I just hate the updates of windows 10 pro.

Any suggestions to get rid of this situation?

-

Me: "It's a balance between three things: you either optimize for computation, memory use, or programming effort. Computers don't have infinitely fast processors with an infinite amount of memory."

Coworker: "Did anybody tell Java?"

-

Q: What's a "muscle memory"?

A: It's when you open up devRant, skim through several posts, get bored, decide to visit some other website for more stimulation, close the tab with devRant, open a new tab and your hands type in devrant.com [ENTER] before you know it

-

When you thought web browsers use too much memory, introducing a document scanning app that uses only 2.7 GB of RAM.

Just a slight memory leak.

-

"Insufficient memory for Java Runtime Environment"

I'm using a host with 32 GB ROM, fuck you Java.

-

Kernel coming along slowly but surely. I can now fetch the memory map and use normal Rust println! calls to write to VGA text mode!

Next up is physical memory allocation and page maps

-

I confess that I know how to manage memory in assembly language, but I never knew how to use the memory button of my Casio calculator :'v Should I be ashamed?

-

Linker crashed while building LLVM from source AT FUCKING 97% ARE YOU FUCKING KIDDING ME?

(Antergos , GCC 7)

The error was that it exhausted the memory. How the fuck does a system with 16GB RAM and a swapfile run out of memory while building something? Dayum.

-

Here for you, just so you can't sleep tonight:

while (true) {

new long;

cout<<"Deal with it, motherfucker ";

}7 -

Researchers were able to store 3 bits of data per cell in phase-change memory, beating the previous limit of 1 bit per cell, IBM said. PCM competes with dynamic random-access memory.

5/19/2016.

-

I'm really enjoying Rust now. It was worth the struggles.

I was really surprised to see that a NodeJS server takes around 40-60MB of memory whereas a Rust (Actix web) server takes around 500KB-2MB :O whoa! Awesome!

-

Today I had an app throw an out of memory exception, while trying to throw an out of memory exception.

-

!rant

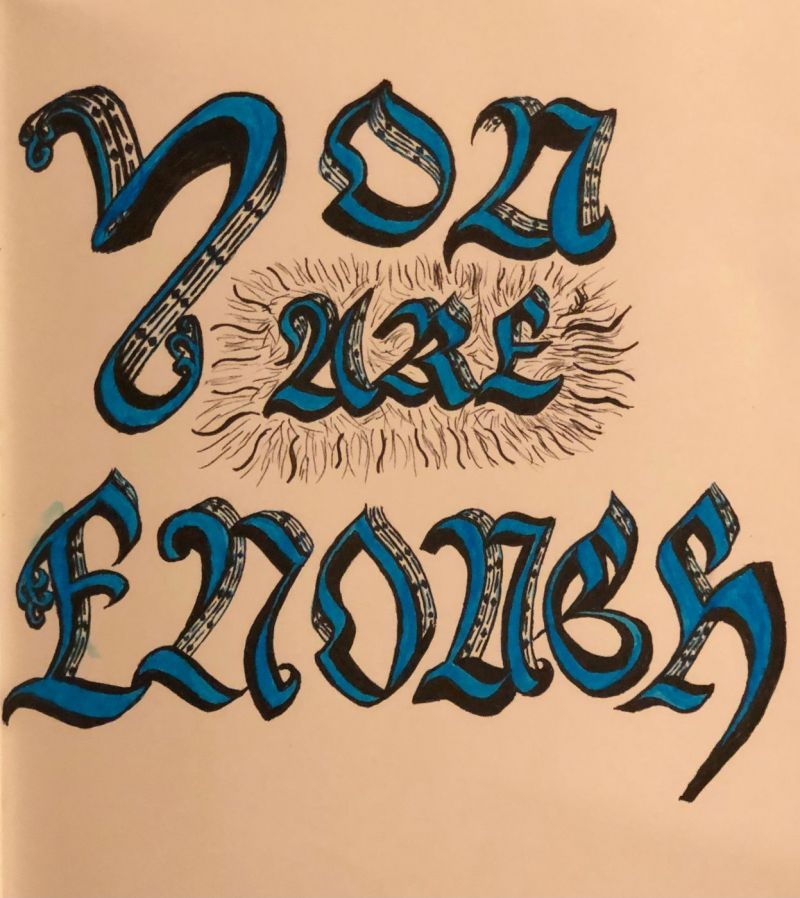

Human memory is fascinating. It’s interesting to think about the events that helped shape you into what you are. And how sometimes those events are exchanges with people who probably never gave the moment a second thought, but fundamentally changed how you relate to others.

For example: In ninth grade, I became friends with a group of seniors. Spent every lunch period together, auditioned and landed a part in a play to hang out with them. We never hung out over the weekends, but my dad had died two years before and I didn’t hang with anyone on weekends.

Then at the end of the school year, I’d actually got my mum to buy me a school yearbook and was super excited to have my friends sign it. But when I asked them to, one of them furrowed their brow and said, “Amy, we’re not really friends...”

And I haven’t trusted friendships since.

Anyway, for anyone who needs to read this right now:

-

Hello guys. Today I bring you my list of top 3 programs that use too much memory

🪟 Windows 10+

⚛️ Web browsers, Electron

🐋 Docker containers

Honorable mention: ☕Java

The developers of those programs should put more effort into optimizing memory usage

-

So I'm making a file uploader for a buddy of mine and I got an error that I had never seen before. Suddenly I had C++ code and some other weird shite in my terminal. Turns out that I got a memory leak and the first thing that sprung to mind was "Fuck yes, I get to do some NCIS ass debugging".

Now the app worked fine for smaller files, like 5MB - 10MB files, but when I tried with some Linux ISOs it would produce the memory leak.

Well I opened the app with --inspect and set some breakpoints and after setting some breakpoints I found it. Now, for this app I needed to do some things if the user uploads an already existing file. Now to do that I decided to take the SHA string of the file and store it in a database. To do this I used fs.readFile aaaaaaaaaand this is where it went wrong. fs.readFile doesn't read the file as a stream.
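The underlying point (read the file in chunks instead of loading it whole) is not specific to Node. As a language-neutral illustration, here is a C++ sketch that hashes a stream chunk by chunk so memory use is bounded by the chunk size. Two loud assumptions: `Fnv1a64` is a tiny non-cryptographic FNV-1a hash used only as a stand-in for a real SHA digest, and `hash_stream` is a hypothetical helper name; in the Node app itself the equivalent would be a read stream feeding the hash incrementally.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <istream>
#include <sstream>
#include <string>

// FNV-1a, 64-bit. Stand-in for a real digest such as SHA-256.
// NOT cryptographic; used here only because it needs no external library.
class Fnv1a64 {
public:
    void update(const char* data, std::size_t len) {
        for (std::size_t i = 0; i < len; ++i) {
            state_ ^= static_cast<unsigned char>(data[i]);
            state_ *= 1099511628211ULL;  // FNV prime
        }
    }
    std::uint64_t digest() const { return state_; }

private:
    std::uint64_t state_ = 14695981039346656037ULL;  // FNV offset basis
};

// Hash a stream in fixed-size chunks. Only one chunk is held in memory
// at a time, regardless of how large the input is.
std::uint64_t hash_stream(std::istream& in, std::size_t chunk_size = 64 * 1024) {
    assert(chunk_size > 0);
    std::string buffer(chunk_size, '\0');
    Fnv1a64 hasher;
    while (in) {
        in.read(&buffer[0], static_cast<std::streamsize>(buffer.size()));
        // gcount() is the number of bytes actually read (short on the last chunk).
        hasher.update(buffer.data(), static_cast<std::size_t>(in.gcount()));
    }
    return hasher.digest();
}
```

The result does not depend on the chunk size, so a multi-gigabyte ISO and a 5MB file go through the same code path with the same small buffer.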

Well when I found that, boy did I feel stupid :v

-

I was cleaning up my hard drive and deleted some old directories.

I was notified that my backup just started and wanted to look how far along it was.

However, instead of 'ls -l /mnt/DATA/Backup' my shitpile of muscle memory typed 'rm -rf /mnt/DATA/Backup'... That's when my harddrive suddenly had 750GB of free space and I decided that I probably need some sleep.

Before any of y'all wanna lecture me on off-site backups, funny thing: Today I implemented a new daily backup routine (praised be borg) and therefore deleted my somewhat chaotic Backups on my NAS "Because if shit hits the fan, I still have my local Backup"

-

When I was in college I was working on a game in Java using Slick2D. My folks were away on holiday so I had the ability to drink in the house (I was over 18). I worked on this coursework piece whilst drinking.

The next morning I went into college with my work and found that it had a massive memory leak that was introduced by the work that I’d done whilst under the influence.

The issue was fixed (quite easily tbh) but everyone in my class reminded me for the rest of the year...

-

it was not a technical interview.

just screening.

guy: tell me smth about redis.

me: key value, in memory storage.

guy: more

me: umm, the concept is similar to localStorage in browsers, key value storage, kinda in memory.

guy: so we use redis in browsers?

me: no, I mean the high level concept is similar.

guy: (internally: stupid, fail).

-

Most memorable co-worker was a daft idiot.

this was 10 years ago - I was working as a junior in my very first job, fresh out of uni, for a very small startup. It was me, and the 3 founders, for a very long time. Then this old (45, from my perspective then..) dev was hired.

This guy had no idea how to do the job. no common sense. the code confused him. the founders confused him. I was focusing on my work - and was unable to help him much with his. His only saving grace? He was a nice guy. Really nice.

But why was he so memorable, out of all the people I ever worked with? Simple. He had a short-term memory problem. Could not, even if he really tried, remember what he did yesterday... When I asked him what his issue was, he described his life as being like a car going in reverse in a heavy fog. "I can only see a short distance backwards, with no idea where I'm going".

Startup was sold to a big company. I became a teamlead/architect. He? someone decided he should be a PM. -

Fucking your fucking module allocates fucking memory fucking deallocate the fucking memory in your fucking module.

Don't fucking bullshit me!

-

!rant

I just stumbled upon the first game I ever programmed, back in high school. Oh the nostalgia and the urge to cringe. Apparently I thought programming a game in Visual Basic and leaving an enormous memory leak was a good idea. Well I guess you have to start somewhere.

-

My boss just now: "In a 32 bit machine the memory limit is 1.6 GB. After that programs routinely crash."

What really happens IMO? He writes programs that crash when they reach 1.6 GB allocated and the architecture is 32 bit. But it's a limit of his software, not one of the OS.

-

Ohhh, I get it. Apple thinks it isn't getting enough money from us by selling us one iMac, so it wants to sell us a second one by making the first one's memory physically non-upgradeable via soldered memory even though the software says it _could_ be upgraded.

-

!rant

I started learning to use Hadoop recently, and am running a VM with all I need installed on it (the HDF Sandbox to be exact). The VM wants 8GB to run and my laptop only has just that, except it also needs to run Windows at the same time...

At first I thought I was screwed, that I'd need a more capable computer to learn. I gave it a shot anyway, and told VirtualBox to give the VM 4GB, hoping the VM itself would use RAM swap to function. And it did!

What I didn't expect was Windows not slowing down even a bit. Turns out Windows can triple the computer's RAM with virtual memory that it keeps on disk.

So the bottom line is: my VM is using 4GB as if it was 8GB, and at the moment my Windows is using 8GB as if they were 14GB. All of this without breaking a sweat. The more you know!

-

After a lot of effort (connectivity and memory issues :p) finally got devRant installed. Feeling excited.

<"Hello Devs"/>

-

FUCKING HELL!

I just shut down my computer after deciding to leave the unfinished feature that I started a couple of hours ago for tomorrow.

Not 5 fucking minutes later I had found a solution in my head but now don’t want to spend the time to turn my computer on to fix it. Ugh

-

Fuck you React Native and your stupid memory leak on dev machine! You are even worse than Chrome 🤦🏼‍♂️

-

Valgrind is awesome. Today I fixed a lot of memory leakage / overflow bugs thanks to it. And guess what? Now, everything works!

-

WOW! Firefox you are worse than Chrome! From 10GB used memory down to 3GB when you are closed :|

(had a VM taking some of the memory, closing it made memory go down to 10GB from 14GB used)

-

Okay, friends. I have a challenge. Who can make the sneakiest memory leak example? I need to stump a class of students with something only valgrind can find and I'm having a hard time.
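One candidate, offered as a sketch rather than a definitive answer: a reference cycle between two `std::shared_ptr` objects. The code has no raw `new` or `delete` and looks like textbook modern C++, yet the two nodes are never destroyed, which a leak checker such as valgrind would be expected to flag. The `Node` type, the `live_nodes` counter, and both function names are hypothetical and exist only for this demonstration.

```cpp
#include <cassert>
#include <memory>

int live_nodes = 0;  // number of Node objects currently alive

struct Node {
    std::shared_ptr<Node> strong_peer;  // owning link: can form a cycle
    std::weak_ptr<Node>   weak_peer;    // non-owning link: cannot keep a peer alive
    Node()  { ++live_nodes; }
    ~Node() { --live_nodes; }
};

// Two nodes that own each other. Returns how many nodes are still alive
// after every local handle has gone out of scope.
int leak_with_cycle() {
    const int before = live_nodes;
    {
        auto a = std::make_shared<Node>();
        auto b = std::make_shared<Node>();
        a->strong_peer = b;
        b->strong_peer = a;          // cycle: neither count can drop to zero
        assert(a.use_count() == 2);  // held by `a` and by b->strong_peer
    }
    return live_nodes - before;
}

// Same shape, but the back link is weak, so both nodes are destroyed.
int no_leak_with_weak() {
    const int before = live_nodes;
    {
        auto a = std::make_shared<Node>();
        auto b = std::make_shared<Node>();
        a->strong_peer = b;
        b->weak_peer = a;            // observes `a` without owning it
    }
    return live_nodes - before;
}
```

`leak_with_cycle()` leaves two nodes behind while `no_leak_with_weak()` leaves none, which makes the difference between the two easy to show in class before pointing valgrind at it.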

-

I Google

I stack overflow

I am an index that references

The internet memory

The modern day code-er mantra -

Okay, so the recruiters are getting smarter. I just clicked on a "how well do you know WordPress" quiz (I know it's from a recruiter, I already entered a PHP quiz and might win a drone)

So the question is how to solve this issue:

Fatal error: Allowed memory size of 33554432 bytes exhausted (tried to allocate 2348617 bytes) in /home4/xxx/public_html/wp-includes/plugin.php on line xxx

A set memory limit to 256

B set memory limit to Max

C set memory limit to 256 in htaccess

D restart server

These all seem like bad answers to me.

I vote E don't use the plug-in, or the answer that trumps the rest, F don't use WordPress

-

HOW COCKSUCKING DIFFICULT CAN IT BE TO CHANGE THE FUCKING MEMORY LIMIT FOR NPM PACKAGES?!

HOLY MOTHERFUCKING SHIT.

-

A full 4 level page table on x86_64 is around 687 GB in size. Allocating it fixed size obviously won't work...
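For a sense of where a number of that magnitude comes from, here is a small worked calculation. It assumes the usual x86_64 layout of 512 entries per table and 4 KiB per table, and counts every table at every level; `full_page_table_bytes` is a hypothetical helper for this sketch. Under those assumptions the total is about 513 GiB (roughly 551 GB), the same order of magnitude as the figure quoted above; the exact number depends on what is counted.

```cpp
#include <cassert>
#include <cstdint>

// Size in bytes of a fully populated multi-level page table:
// 1 root table, then `entries_per_table` children per table at each level.
std::uint64_t full_page_table_bytes(unsigned levels,
                                    std::uint64_t entries_per_table,
                                    std::uint64_t table_bytes) {
    assert(levels > 0);
    std::uint64_t tables_at_level = 1;  // the single top-level table
    std::uint64_t total_tables = 0;
    for (unsigned level = 0; level < levels; ++level) {
        total_tables += tables_at_level;
        tables_at_level *= entries_per_table;  // each entry points at one child table
    }
    return total_tables * table_bytes;
}
```

With `full_page_table_bytes(4, 512, 4096)` the table count is 1 + 512 + 512² + 512³ = 134,480,385, and multiplying by 4096 gives 550,831,656,960 bytes. Either way the conclusion of the rant stands: tables have to be allocated lazily.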

So now when allocating virtual memory pages for an address space I sooner or later have to allocate one page to hold a new page table... which has to be referenced by the global page table for me to use... where there isn't any more space... which is exactly the reason we allocated a new page in the first place... so I'm fucked basically

-

So, vs2015 is crashing when the process gets to 2gb .... 32bit .net process memory limit strikes again!!! 80 projects in the solution & what looks like a runaway extension is taking the memory !!!

Come on M$ it should be 64bit on a 64bit system!!

Now the hunt for the extension that's causing it!! -

C++ might be very good at memory management but it's an absolute pain in the ass. When the src has thousands of lines of code it's close to impossible to manage the memory. The errors are so vague.

And porting the code to new hardware is an absolute nightmare.

-

Sooooo I'm learning C. Programmed an antivirus project last night, and there are 13,374 bytes of memory leak. (BTW the program crashes at return 0). *Rage and despair*

-

I have wasted my whole day searching for a bug with memory allocation in C++, and still don't know how to fix it! That moment when coding took me far less time than searching for that fucking bug... I feel like I missed something, but it all looks ok

I HATE C++ WITH ITS FUCKING POINTERS!!!!!

-

I've always thought I was somewhat lazy about not caring about plaintext password in RAM in WPF (or whatever) but then this guy made a super valid point...

I really think a hacker would just keylog at that point rather than trying to read your obscure program's memory for your password... especially if they have access to raw memory...

-

I'm trying to investigate why Chrome keeps crashing after I implemented web sockets in a web app.

I used Windows perfmon to see the memory usage overnight.

The usage between 17:30 and 01:50 is expected behaviour as this part of the app is a live data graph of the last 48 hours.

Now I have to find out why the app doubles in memory twice an hour.

2 -

C++. Damn the pointers. It's because I learned Java before C++ and its memory management. I never get it: the object creation, memory allocation, deallocation and everything.

-

Turns out the app was crashing because YouTube was hogging 8GB of memory and so queries started failing spontaneously.

-

You know a server is having a jolly'ol time when, while logging through the serial console, it lags... Then, a few seconds later, you get a message

[time.seconds] Out of memory: Kill process PID (login) score 0 or sacrifice child

[time.seconds] Killed process PID (login) total-vm:65400kB, anon-rss:488kB, file-rss:0kB

10/10, only way to bring the server back to life was by a hard-reset :|

-

Me: there are a lot of memory leaks in my application, I should do something

Inner me: teacher doesn't know that, submit the project

-

F**k companies whose apps use MySQL/MariaDB tables of the table engine MEMORY.

Seriously.

That engine *sucks* to work with as an admin. It's such a huge pain in the ass having to always dump the whole DB instead of taking a snapshot.

And if the replica restarts... Poof. Replication breaks. Cuz all the memory tables are suddenly empty!

Fml. Fmfl. Ugh.

-

When you keep receiving notifications from an app that you uninstalled because it's still loaded into memory.

-

When Ryzen released I bought a 1700X a little while after with 3600 CL15 memory.

Back then it only ran at 3.6 GHz CPU and 2800 MHz CL16 memory.

Now I'm on 3.75 GHz and 3333 MHz CL14.

I honestly didn't expect this to happen

-

Hello C++ / C programmers. I've noticed my professor putting the ASCII code of a character into an int instead of just using a char to store it. When he does this he's not doing math or anything with them, so is there any advantage to it? My TA mentioned something about memory alignment, but I'm not experienced enough to know how something being aligned differently in memory would help or hurt a program.
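One common reason for holding a character in an `int`, which may or may not be the professor's, is that character-reading functions return an `int` so that "end of input" can be reported as a value no real byte can have. The C++ sketch below illustrates that with `std::istream::get()`; the function name `count_ff_bytes` is made up for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>

// Count bytes with value 0xFF in a stream, reading one character at a time.
std::size_t count_ff_bytes(std::istream& in) {
    // EOF is negative, so it can never collide with a byte value 0..255.
    assert(std::char_traits<char>::eof() < 0);
    std::size_t count = 0;
    // get() returns an int: 0..255 for a byte, or EOF at the end of input.
    // If the result were stored in a (signed) char, byte 0xFF would become -1
    // and could be mistaken for EOF, ending the loop too early.
    for (int ch = in.get(); ch != std::char_traits<char>::eof(); ch = in.get()) {
        if (ch == 0xFF) {
            ++count;
        }
    }
    return count;
}
```

For a single local variable the memory cost of `int` versus `char` is usually negligible, since values are promoted to `int` in most expressions anyway; for large arrays of characters, `char` is the better fit.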

-

You know the PHP legacy code base is complete garbage when it requires a script memory limit of 1.5GB.

-

Thank you, company forced windows update! My 60 minutes reconfiguring rabbitmq and postgres were well invested instead of investigating the memory leak fucking hibernate causes.

I'm done. -

As I keep saying, we should spend less time developing "better, safer" tools and practices and more time making sure the developers that use them know what they're doing. The bugs caused by lack of memory safety are rare (although often more critical) compared to the bugs caused by developers not paying proper attention to what their code does in the first place.

https://theregister.com/2023/01/...

-

I've been looking for a rant that mentioned being paid to review code for something like 50 pounds an hour.

Can anyone help me find the person/rant/company that offers this service?

-

TIL don't rely too much on in memory databases if your client runs development and production environment on the same machine.

Just don't

-

How do you organize your downloads folder?

Personally, I make a new folder with some name (although the name actually being useful is rare) and just select all of my files and dump them there. Finding a file sucks so much though. I can never remember their names, so I just look through the folders at the icons and hope I find the file I'm looking for. This mess that is my downloads folder has led to me looking through a folder 5 times to find a file.

My DOS VM is more organized than that...

Speaking of DOS, managing memory in that is hell. I've never had memmaker detect 64MB of RAM; giving the VM 96MB of RAM made it detect 2 more MB or something.

-

Production goes down because there's a memory leak due to scale.

When you say it in one sentence, it sounds too easy. Being developers, we know how it all goes. It starts with an alert ping, then one server instance goes down, then the next. First you start debugging from your code, then the application servers, then the web servers, and by that time you're already on the tips of your toes. Then you realize that the application and application servers have been gradually losing memory over a period of time. If the application is one that doesn't get redeployed every so often, the complexity grows faster. No anomaly / change detection monitor can detect a gradual decrease of memory over a period of months.

-

Quick question...

Can you guys code without any reference?

I find it very hard to memorize all the functions etc...

-

Identifying when to start a project over because it has gotten out of hand with workarounds and memory management issues.

-

A friend asked me if I could test his program.

I helped him test his program and found a memory leak.

He investigated the issue...

After a few hours, he found out that his garbage collector had a memory leak :^)

-

The past couple of weeks I've been struggling with my laptop. It regularly ran out of memory, and when that happens everything runs at a snail's pace. I always thought 8GB would be enough for developing software, but I was terribly wrong.

So I ordered another 8GB and installed it yesterday. Later at work I looked at the ram usage and noticed that it was up to nearly 13GB!

I have no idea how I managed to get by with only 8 for so long. 🤔

FYI: I usually have 2 to 3 IDEs and a gazillion chrome tabs open 😅

-

I went to bed and before sleeping opened an app on my phone that controls the music on my PC.

At first glance it looked like something was different so my first thought was "Ooh, they updated the app" and then I remembered that no, I didn't. I'm a lazy sack of crap that didn't update that app in a while and I didn't even implement everything.

On the plus side, I did actually get the basics working so... :D -

Hey guys and gals, I built a silly little memory game! Comment with your best scores (no inspecting elements...that's cheating). Also, don't click too fast or it'll break. Lol

http://threetendesign.com/memory4 -

Finished work early, shutting down the PC, hit Ctrl+S instead. Fucking muscle memory still wants me to work?

-

Just accidentally remembered my first encounter with Discrete Math... who knew hell could be a memory.

-

To anyone with good knowledge of RxJs:

Should I be careful how many subscriptions I have open at a time? I'm specifically thinking about memory usage.

While obviously it's more, does it make a huge impact on memory usage, if I have 20 subscriptions active, compared to 2?5 -

Today in Amy can’t remember words, I forgot the word nostalgia and instead said “pangs of wistful memory”. You’re welcome.1

-

So to give you a feel for what evil, clusterfuck code it was in: this project's largest part was coded by a maniac, witty physicist confined in the factory for a month. Intended as a 'provisional' solution, of course it ran for years. The style was like C with a bit of classes.. and a big chunk of shared memory as a global mud of storage, communication and catastrophe. Optimistic or no locking of the memory between process barriers, arrays with self-implemented boundary checks that would give you the zeroth element on failure and write an error to the log, of which there were often dozens. But if that sounds terrifying already, it is only baseline uneasiness, which was largely surpassed by the sheer mass of code, special units, undocumented madness. And I had like three months to write a simulator of the physical factory and sensors to feed that behemoth with the 'right' inputs. I still don't know how I stood it through, but I resigned a short time afterwards.

Well, lastly to the bug: there was some central map in that shared memory that held, like, a view of the central customer data. And somehow - maybe not that surprisingly, given the surrounding codebase - it sometimes got corrupted. Once in a month, or two times a day. Tried to put in logging, more checks - but never really could pinpoint the problem... To this day I still get the haunting feeling of a lurking memory corruption beneath my feet when I get closer to the metal core of pure C.1 -

just wondering: how much of your programming / development is based on your memory and how much do you use Google just for a reminder and how much do you use Google for the entire code?2

-

It's 2022 and people still believe USB sticks and external card readers are a replacement for memory card slots.

They're not. SD cards have a standardized form factor and do not protrude from memory card slots, but external card readers and USB sticks do.

Just like smartphones, laptops are increasingly ditching the SD card slot or replacing it with microSD, which has less capacity, lower life expectancy and data retention span due to smaller memory transistors, worse handling, and no write-protection switch.

Not only should full-sized SD cards be brought back to laptops, but also brought to smartphones. There might soon be 2 TB SD cards, meaning not one second of worrying about running out of space for years. That would be wonderful.18 -

Why would somebody think that allocating a huge amount of objects in static memory makes any sense?? Why??? You need to allocate a bloody database context and all the allocations of your IoC containers, and it keeps increasing!!!

-

The problem with moving Docker containers from your decked-out dev machine to a VM on AWS when your boss has told you to keep costs down:

1. Start Micro instance, 1++ gig memory

2. Get Out of Memory error from app after 30 minutes

3. Goto 1 -

Linux.

Guys, I need some inspiration. How are you dealing with memory leaks, i.e. identifying which component of the system is leaking memory?

Regular method of dumping ps aux sorted by virtual memory usage is not working as all the processes are using the same amount of memory all the time. This is XEN dom0 memory leak, and I have no more ideas what to do.

Is it possible that guests could be eating the dom0 memory?15 -
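One thing that sometimes helps when `ps` shows nothing moving: memory held by the kernel itself is not attributed to any process, so comparing two snapshots of `/proc/meminfo` taken some time apart can show which counter is actually growing. This is only a sketch under that assumption, not a Xen-specific diagnosis; `parse_meminfo` and `biggest_changes` are hypothetical helper names.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {counter name: integer value}."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Name: value" line
        fields = rest.split()
        if fields and fields[0].isdigit():
            values[name.strip()] = int(fields[0])
    return values


def biggest_changes(before, after, top=5):
    """Counters present in both snapshots, ordered by absolute change."""
    deltas = {key: after[key] - before[key] for key in before.keys() & after.keys()}
    changed = [(key, delta) for key, delta in deltas.items() if delta != 0]
    return sorted(changed, key=lambda item: abs(item[1]), reverse=True)[:top]
```

In practice you would read `/proc/meminfo` once, wait long enough for the leak to move, read it again, and pass both parsed snapshots to `biggest_changes`.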

Fighting against a read-only-memory-write exception of a com object for two days. Feeling like Spartacus but without a result for now. Wanna only sit down and cry. 😢 by the way... Outdated machines with win7 and 2 gigs of ram 😨. This is my second I-hate-this-F*****g-world rant this month. I'm gonna really hate this world! 😬😈4

-

I usually like PHP, because it is easy to use, but FUCK! Can you just let me free the fucking memory by myself? Setting variable to null doesn't work, unset doesn't work either. I am still getting fucking memory exhausted error.

There is literally no data stored anywhere, because I unset every fucking thing.

gc_collect_cycles() doesn't work either, probably because this crap thinks there is a reference for this variable somewhere.12 -

I can't really figure out how I grew from learning_syntax -> remembering_function_names -> following_patterns -> developing_a_personal_style -> reading_the_doc -> getting_the_source.

Well I have a long memory problem, so I guess it happened overnight!

Wait, did the doctor say it was a memory problem? Hell no! -

Go to hell elastic cloud!

While true:

I can’t resize my elasticsearch instance to get memory because it’s stuck….

It’s stuck because it doesn’t have enough memory to actually start …

Wtf!2 -

That realization that you have a memory leak that invalidates all of your previous performance testing

-

Feel dirty writing in c. How do people even deal with unsafe pointer type casting/memory allocation/free? The codebase is plagued with memory leaks and there is no test.

I will just pretend I can't read c code and play dumb when shit happens12 -

When a C developer creates a memory leak, it's standard practice: valgrind time.

The day a web developer creates a memory leak is the day they start to learn JavaScript. -

So there's a proposal for C++ to zero initialize pretty much everything that lands on the stack.

I think this is a good thing, but I also think malloc and the likes should zero out the memory they give you so I'm quite biased.

What's devRant's opinion on this?

https://isocpp.org/files/papers/...14 -

Memory debugging iOS probably makes me more anxious and stressed out than anything. I have put 11 hours into attempting to figure out this crash, but still no progress. It's like I can feel management breathing down my neck to get it done asap. You ever get so stressed out while trying to figure something out at work?3 -

Ok ok.. I used a German keyboard so Y and Z are switched. I've never seen a picture of Jason Mraz but I really like his music so I wanted to YouTube him.. and my muscle memory did this.

2

2 -

Does anybody have experience with this? Is it worth the money?

"My Cloud network memory, automatically synchronize data, worldwide access" 4

4 -

What a mess ^^

From one moment to another unit-tests on my local machine stopped working.

There was a PHP fatal error, because of insufficient memory.

Actually, there was a ducking "unit"-test of a controller action "log".

This action returns the content of the projects log file...

Since this log file grew over time, PHP tried to assert the response of the controller action, which was about 400MB in size.

C'moooooon guys!

What were your thoughts behind this bullshit? ^^ -
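A common way around that kind of blow-up is to cap how much of the log the action ever returns. Here is a rough sketch in Python rather than PHP, assuming the action only needs the most recent part of the file; `read_log_tail` and the 64 KB default are invented for the example.

```python
import os


def read_log_tail(path, max_bytes=64 * 1024):
    """Return at most the last max_bytes of a file, decoded as text."""
    with open(path, "rb") as handle:
        handle.seek(0, os.SEEK_END)
        size = handle.tell()
        # Jump to the start of the tail instead of reading the whole file.
        handle.seek(max(0, size - max_bytes))
        return handle.read().decode("utf-8", errors="replace")
```

With a cap like this, a test on the action asserts against a bounded response no matter how large the log has grown.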

Does anybody use a Raspberry Pi as a Kubernetes node? In my experience, my Pis don't have enough memory with 1 GB. The system would run for about 5 minutes, and each second more memory would be allocated. After that, every node shut itself down. Is there anything I can do about that?2

-

sweaty_decision_meme.jpg

- Debugging some application locally (with debugger)

- 20-30 manual step-ins, tracking those values VERY closely

- debugger becomes a little sluggish

- move mouse to select a line to jump to

- cursor is lagging: all jumpy and everything

- CTRL+ALT+F1

- everything freezes.

sooo...either reboot the laptop and lose all the work, or wait for OOMK to kick in, which could be hours, depending on the level of memory starvation.13 -

I actually don't understand why most people like saying bad things about electron-js being a memory hog. I am not denying the fact that it sucks up system resources. Placing all the blame on electron-js is irrational, because most apps built on top of electron-js do not hog memory (vscode is a living testimony to that). When you use bloated frameworks and/or libraries you are bound to have memory issues. When you don't understand how to manage memory effectively (in a higher-level language you still have to do something for your value to be garbage collected) you are bound to be held captive in the chains of memory consumption.

Don't hate electron8 -

Seriously, screw whoever at Apple decided to make my Mac not have upgrade-able RAM. Late 2015 8GB slow Mac is slow.10

-

everyone has one function that they have to look up every time they use it. for me its str_replace() in php. i can never remember the order of the parameters...and ive been using it for almost 10 years....3

-

Customer: your app is not returning all the objects in my bucket

Support: check console log 500 server error, ssh into box check logs exhausted memory limit.

Sudo vim /etc/php.ini search memory limit

Update to a high number, restart Apache, sit back and think: fuck, did I set it too high, will it blow up my server.

Only time will tell!!! Sorted out the issue until the next user with millions of objects in their buckets -
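The usual alternative to raising the limit again is to walk the bucket page by page so memory stays bounded regardless of object count. A minimal sketch in Python (not the PHP from the story); `fetch_page` stands in for whatever listing call the storage API actually offers, and `make_fake_bucket` is a made-up in-memory stand-in so the example runs on its own.

```python
def iter_objects(fetch_page, page_size=1000):
    """Yield objects one page at a time instead of loading the whole bucket."""
    token = None
    while True:
        items, token = fetch_page(token, page_size)
        yield from items
        if token is None:
            break  # no continuation token means this was the last page


def make_fake_bucket(total):
    """Hypothetical in-memory bucket that serves integer 'objects' in pages."""
    def fetch_page(token, page_size):
        start = token or 0
        end = min(start + page_size, total)
        next_token = end if end < total else None
        return list(range(start, end)), next_token
    return fetch_page


count = sum(1 for _ in iter_objects(make_fake_bucket(2500), page_size=1000))
print(count)
```

Only one page is held in memory at a time, so the next user with millions of objects costs more requests rather than a bigger `memory_limit`.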

Back in game dev final year, working on GameCube kits, I encountered a weird rendering bug: half the screen was junk.

I was following the professor's work and was bewildered that mine was broken.

The order of the class (c++) was different...

I think there was a huge leak somewhere and the order of the class meant memory was leaking into VRAM. I never had the chance to bug hunt to the core of it... Took a while to realise it was that...

It opened my eyes to respect memory haha.2 -

It's a pain in the ass when you finally manage to free enough memory to keep your Android OS up to date and, just a few minutes after the update restart, get a message that there is an OS update which needs another 200mb.

It's a never-ending torture..4 -

Why TF does nodejs just eat 100mb of ram away for a simple application with ONE websocket connection? I've tried getting some heap snaps, memory allocation timelines and used memwatch-next, but to no result AT ALL! The heap stays small, but the rss memory grows like there is no tomorrow.

-

Git's fucked, I guess I have to retype my website from memory. :/

```

git restore --source=HEAD :/

``` 2

2 -

When was the last time you dealt with an evasive memory leak in JavaScript? How complicated was it and how long did it take to resolve?1

-

I was recently reading about memory leaks and profiling and found a really excellent article for people new to c# or best practices. It's a great article and well worth the read if you're still learning.

https://michaelscodingspot.com/find...6 -

You know what really grinds my fucking gears?

When I increment my pointer beyond the memory the operating system allocated it.

Who are you to define how much memory my pointer is allocated? You fucking bigot!1 -

How did mid-2000s computer users get along with just 1 GB of RAM or less?

As of today, anything less than 8 GB of RAM seems impractical. A handful of tabs in a web browser and file manager can quickly fill that up.

Shortly after booting, 2 GB of RAM are already eaten up on today's operating systems.

When I occasionally used an older laptop computer with 6 GB of RAM (because it has more ports and better repairability than today's laptops; before upgrading the memory), most of the time over 5 GB were in use, and that did not even include disk caching.

It appears that today's web browsers are far more memory-intensive than 2000s web browsers, even if we do similar things people did in the 2000s: browsing text-based pages with some photos here and there, watching videos, messaging and mailing, forum posting, and perhaps gaming. Tabbed browsing already was a thing in the 2000s. Microsoft added tabs to their pre-installed browser in 2006, back when an average personal computer had 1 GB of RAM, and an average laptop 512 MB!

Perhaps a difference is that people today watch in 720p or 1080p whereas in the 2000s, people typically watched at 240p, 360p, or 480p, but that still does not explain this massive difference. (Also, I pick a low resolution anyway when mostly listening to a video in background.)

One could create a swap file to extend system memory, though that is not healthy for an SSD in the long term. On computers, RAM is king.14 -

Why the ever loving fuck does Windows take MORE ram to close a fucking program, than to run it. I'm STOPPING a fucking operation. There is nothing about this concept that requires more processing or memory. When I click the fucking close button, you're supposed to free up memory and stop running shit. Not the opposite.3

-

I have a laptop powered by an i5 3230M with 6G of memory (1 x 4G and 1 x 2G). Sometimes I can feel the lag while I'm working in multiple IDEs. So, how much more memory do you guys suggest?

(2 x 4G) or (1 x 4G and 1 x 8G)19 -

They tried to mark him, they even tried compacting him and his children, but this old generation instance is not going down without a fight. He’s in a big heap of trouble, and he’s running out of time. You better count your references, because this summer’s stop-the-world event will have you staying at work all night: Memory Leak: Production is in your code NOW

-

had a blast helping a pal install arch (and setting up the necessities, like i3-gaps, neofetch, wal, etc...). tonight was awesome.

PS: i can recite any basic arch install by memory now, EFI or BIOS, and i'm slightly better at navigating vim now5 -

TL;DR When talking about caching, is it even worth trying to be as memory efficient as possible?

Context:

I recently chatted with a developer who wanted to improve a framework's memory usage. It's a framework for creating Discord bots, providing hooks to events such as message creation. He compared it to 2 other frameworks, where it ranked last with 240mb memory usage for a bot with around 10.5k users iirc. The best framework memory-wise used around 120mb, all running on the same number of users.

So he set out to reduce the memory consumption of that framework. He alone reduced the memory usage by quite a bit. Then he wanted to try out TTL for the cache, or rather a cache with expiration times, adding no overhead besides checking at every interval whether there are any records that should be deleted. (Somebody in the chat called that sort of cache a meme. Would be happy if you could also explain why that is so😅).

Afterwards the memory usage dropped down to 100mb after around 3-5 minutes.

The maintainer of the package won't merge his changes, because some of them really introduce stuff that might be troublesome later on, such as modifying the default argument for processes, something along these lines. Haven't looked at these changes.

So I'm asking myself whether it's worth saving that much memory. Because at the end of the day, it's cache. Imo a cache can be as big as it wants to be, but should stay within limits and of course return memory if needed. Otherwise there should be no problem.

But maybe I just need other people's points of view to consider. The other dev's reasoning was simply "it shouldn't consume that much memory", which doesn't really help, so I'm seeking you guys out😁 -
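For anyone who hasn't seen the idea, a cache with expiration times plus a periodic sweep looks roughly like this. It is a sketch of the general technique only, not the code from that framework or its pull request; the class name `TTLCache` and the injectable `clock` parameter are assumptions made so the example is easy to test.

```python
import time


class TTLCache:
    """Dictionary-backed cache whose entries expire ttl_seconds after being set."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._data = {}  # key -> (expires_at, value)

    def set(self, key, value):
        self._data[key] = (self._clock() + self._ttl, value)

    def get(self, key, default=None):
        entry = self._data.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # lazily drop an expired entry on access
            return default
        return value

    def sweep(self):
        """Remove every expired entry; meant to be called on a timer."""
        now = self._clock()
        expired = [key for key, (expires_at, _) in self._data.items() if now >= expires_at]
        for key in expired:
            del self._data[key]
        return len(expired)

    def __len__(self):
        return len(self._data)
```

The sweep is what actually gives memory back for entries nobody asks for again; without it, expired records only disappear when something happens to look them up.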

So, like, why doesn't Java let me do manual memory management? In C# if I want to screw up the code-base and everyone that comes after me with my half-informed experiments it totally lets me.21

-

The Crucial RAM in my MacBook Pro died after 4 years of use.

Any brand suggestions compatible with Mac ?7 -

I need to monitor the off heap memory of a spark on yarn application (executors, mostly) running in Java. Any tool/method that someone could suggest?

-

Teaching coworkers performance tuning: we have enough memory that you don't need to write to disk... Really, the data isn't even a MB....

-

1. Unending relentless quest for learning

2. Linear memory capacity

3. Uninterrupted autohealing capabilities like sleep -

Hi Everyone,

I am working as a jr front end developer and want to study more about performance profiling in Chrome and finding memory leaks using Dev tools. I searched online for a while and wasn't able to find a nice place to start. Can anyone help me out with a resource from where I can start debugging performance using Chrome Dev tools?

It would be very helpful. -

I have a personal opinion and correct me if I'm wrong

Why does a programmer need to learn behind-the-scenes stuff (memory allocation)?

I know it helps to understand the concepts better.

Why would I learn how the engine of a car works in order to drive a car ?.

And the only task required of you is to drive the car.

And literally you can code without knowing any of this stuff, since companies only need clean and efficient code.

Will it be really helpful to enhance your coding skills if you know behind the scenes stuff ?6 -

Lesson learned .. never use sailsjs

Magic data loss

Laggy as fuck (832ms)... php5 runs better than this(210ms)

memory leaks -

"Well GPU main memory is L2. CPU main memory is L3. Which is why GPUs are so much faster." - cs major in my college.

Someone please confirm if this is a common opinion.2 -

I still don't understand the difference between a virtual address and a logical address when we speak of RAM

-

Recently joined new Android app (product) based project & got source code of existing prod app version.

Product source code must be easy to understand so that it can be supported long term. In contrast to that, the existing source structure is very difficult to understand.

Package structure is flat only 3 packages ui, service, utils. No module based grouped classes.

No memory release is done. So on each screen launch new memory leaks keep going on & on.

Too much duplication of code. Some lazy developer in the past had not even made wrappers to avoid direct usage of core classes like Shared Preference etc. So at each place same 4-5 lines were written.

Too much if-else ladders (4-5 blocks) & unnecessary repetitions of outer if condition in inner if condition. It looks like the owner of this nested if block implementation has trust issues, like that person thought computer 'forgets' about outer if when inside inner if.

Too much misuse of broadcast receivers to track activities' state in the era of Android libraries for activity and app life cycles.

Sometimes I wonder why people waste soooo... much effort in the wrong direction & why they can't just use a library?!!

These things were found without even deep diving into the code; I don't know how many horrific things may come out of the closet.

This same app is being used by many companies in many different fields like banking, finance, insurance, govt. agencies etc.

Sometimes I'm surprised how this source passed review & reached production. -

Working on a batch image editor in python as my most recent time killer project. Started out using PIL for py2. Ported over to pillow on py3, and one of the core pillow functions exploded my computer.

It memory leaked and took every last kb of unused memory!

Guess I'm stuck using py2 -

That point where you start to think about declaring variables once globally to save space in memory ...1

-

Writing the general memory allocator for my hobby os. It's kludgy, but it works.

I add a single for-loop that executes well after that.

The frame allocator can't initialize.

fml -

Spent like 3 weeks mem-checking with valgrind and ASAN, because there seemed to be some leaks. So painful and scary. You lose all confidence in your software, the checking tool, your own sanity.

Some spurious result prevailed, could only move it around. Boss could not reproduce the problem on his machine; Ubuntu 18 with GCC 7, mine was Debian 9 with GCC 6, so I tried older Ubuntu with GCC 5. Also no problem.

Fuck it, I'm switching to clang. -

RetinaPixcom: Your Ultimate Camera Gear Destination in Delhi for Nikon, Sandisk, Sony, and Mobile Gimbals

Looking for the best camera store in Delhi? Look no further than RetinaPixcom, your one-stop shop for all things related to cameras, memory cards, and accessories. Whether you're a professional photographer, a budding content creator, or just someone who loves capturing memories, we offer high-quality products from top brands like Nikon, Sony, Sandisk, and a wide range of mobile gimbals. Our store, located at Ground Floor, B-10, Hauz Khas, Hauz Khas Market, Kharera, Hauz Khas, New Delhi, is ready to help you find the perfect gear for your photography and videography needs.

Nikon Store in Delhi: Professional Cameras for Every Photographer

Nikon is one of the most trusted names in the photography world, offering high-performance cameras and lenses for every type of photographer. Whether you're an amateur capturing family moments or a professional shooting high-end projects, Nikon delivers exceptional image quality, color accuracy, and a versatile lens selection.

At RetinaPixcom, we proudly serve as a leading Nikon store in Delhi, offering an extensive range of Nikon DSLR cameras, mirrorless cameras, and lenses. From the iconic Nikon D-series DSLRs to the innovative Nikon Z-series mirrorless cameras, we have something for every type of photography. Our expert team is always on hand to help you select the perfect Nikon camera based on your needs and budget, ensuring you get the most out of your investment.

Sandisk Memory Card: Reliable Storage for Your Photography Needs

In the world of digital photography, having reliable storage is essential. Sandisk is the global leader in memory cards and storage solutions, offering a wide variety of SD cards, microSD cards, USB drives, and external storage devices to keep your photos and videos safe.

At RetinaPixcom, we offer a variety of Sandisk memory cards, including the Sandisk Extreme and SanDisk Ultra series, known for their fast read and write speeds, which are perfect for high-definition photography and 4K video recording. Whether you’re shooting with a Nikon, Sony, or Canon camera, you’ll find the perfect Sandisk memory card to store your high-resolution images and videos efficiently. Our range of Sandisk memory cards ensures you never run out of space, and they come with reliable data protection for all your media files.

Sony Store in Delhi: Cutting-Edge Technology for Videographers and Photographers

Sony is synonymous with innovation in the world of digital cameras, offering state-of-the-art technology and unmatched performance. From Sony mirrorless cameras to professional video cameras, Sony continues to push the boundaries of what’s possible in the world of photography and videography.

As a Sony store in Delhi, RetinaPixcom brings you a comprehensive range of Sony Alpha mirrorless cameras, Cyber-shot compact cameras, and professional Sony video cameras. These cameras are known for their superior image quality, incredible autofocus systems, and groundbreaking video capabilities, making them a top choice for both professional filmmakers and content creators. Additionally, we offer a wide selection of Sony lenses and accessories to complement your Sony camera.

If you're a content creator looking for reliable equipment to elevate your videos, our Sony range of cameras, including models with 4K video recording and in-body stabilization, will help you take your craft to the next level.

Mobile Gimbal Shop in Delhi: Steady and Smooth Shots Every Time

In the world of mobile videography, mobile gimbals have become a must-have accessory for smooth and stable shots. Whether you're filming on your smartphone or capturing steady footage for your next vlog, a mobile gimbal can significantly improve your video quality by eliminating shaky footage.

At RetinaPixcom, we offer a variety of mobile gimbals from leading brands like DJI and Zhiyun. As a trusted mobile gimbal shop in Delhi, we provide options like the DJI Osmo Mobile 4 and the Zhiyun Smooth 4, which are perfect for stabilizing your smartphone while shooting high-quality video. These gimbals are designed for ease of use, portability, and smooth stabilization, helping you create professional-looking content on the go. Whether you’re vlogging, recording events, or creating cinematic footage, our selection of mobile gimbals will ensure that every shot is steady and smooth.

Why Choose RetinaPixcom?

Top-Quality Products: We stock cameras, memory cards, and accessories from world-renowned brands like Nikon, Sony, and Sandisk to ensure you have access to the best gear available.

Expert Guidance: Our team of professionals is always ready to help you choose the perfect equipment for your needs, whether you're a beginner or a professional.

Competitive Pricing: At RetinaPixcom, we offer competitive prices, ensuring that you get the best value for your investment. 1

1 -

Today I found out that memory =/= disk space...

I always referred to disk space as memory. Here's how a casual chat with my friend would be like:

Friend: Hey, you should get this game!

Me: How big is it? 50GB? I don't have enough memory on my laptop for that. -

What is it with web devs that can't write effective PHP applications that don't need a 1 GB of Memory Limit?

Where are the days that 32MBs of memory was fine per request? Ugh...2