Search - "save data"


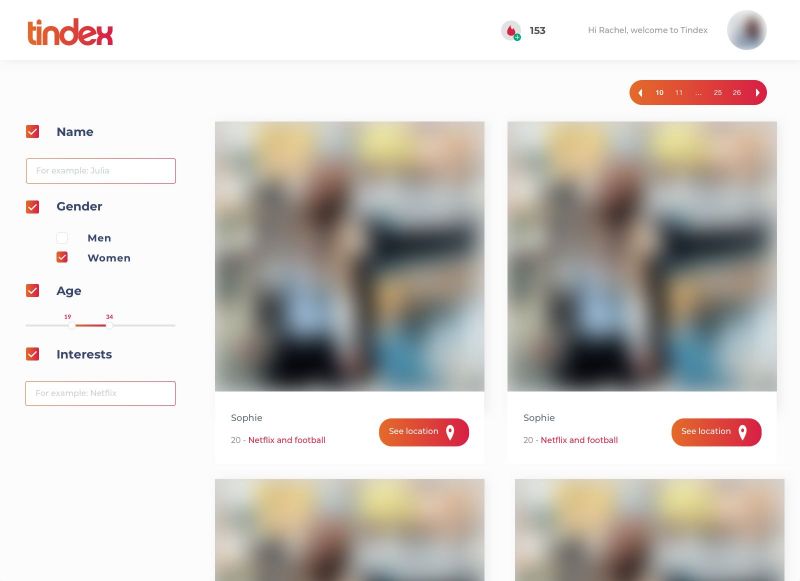

Last year I built the platform 'Tindex'. It was an index of Tinder profiles so people could search by name, gender and age.

We scraped the Tinder profiles through a Tinder API that had been discontinued not long before, but weirdly enough it was still intact, and one of my friends who was also working on it found out how to get API keys (somewhere in the network tab of Tinder Online).

Besides name, gender and age, we also got 3 distances, so we could calculate each user's location, save that location every 15 minutes, and put the coordinates on a map so Tindex users could easily see the current location of a specific Tinder user.

Fun note: we also got each Tinder user's Spotify data, so we could actually know at what time and in which location a user listened to a specific Spotify track.

Later on we started building it out: a chatbot that connected to Tinder so Tindex users could automatically send a pick-up line to their new matches (it was kinda buggy; sometimes it sent 3 pick-up lines at once).

Right when we started building a revenue model, we stopped the entire project because a friend of ours found out that we were basically violating almost all of the terms of service.

It was a great project. I learned a lot from it, and it actually had me thinking twice (or more) about online dating platforms.

The image above shows the user overview design I prototyped. The data is mock data.


In a meeting, after I explained that user passwords would be encrypted before we saved them in the database:

Them: "Please don't do that, we don't want to change our clients' data"

Me: "So we should save them in clear text?"

Them: "Yes"

😒
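For what it's worth, the usual practice for stored passwords is not reversible encryption but a salted, deliberately slow one-way hash, so nobody (not even the developers) can read them back. Below is a minimal sketch using only Python's standard library (`hashlib.pbkdf2_hmac` and `hmac.compare_digest`); the `pbkdf2_sha256$...` storage format and the iteration count are assumptions for illustration, and dedicated schemes such as bcrypt or Argon2 are common alternatives.

```python
import hashlib
import hmac
import os

# Assumed work factor; tune it so a single hash takes noticeable time on your server.
DEFAULT_ITERATIONS = 600_000


def hash_password(password: str, iterations: int = DEFAULT_ITERATIONS) -> str:
    """Return a self-describing salted PBKDF2-HMAC-SHA256 hash (never the password itself)."""
    salt = os.urandom(16)  # fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    # Keep scheme, work factor and salt next to the digest so it can be verified later.
    return f"pbkdf2_sha256${iterations}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    scheme, iterations, salt_hex, digest_hex = stored.split("$")
    if scheme != "pbkdf2_sha256":
        raise ValueError(f"unsupported hash scheme: {scheme}")
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), int(iterations)
    )
    return hmac.compare_digest(candidate, bytes.fromhex(digest_hex))
```

Because each call draws a new salt, hashing the same password twice gives two different strings; only `verify_password` can tell that they match.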

Frack, he did it again.

In a meeting with the department manager, going over a feature request *we had already discussed ad nauseam* that wasn’t technically feasible (doable, just not worth the effort):

DeptMgr: “I want to see the contents of web site A embedded in web site B”

Me: “I researched that and it’s not possible. I added links to the target APM dashboard instead.”

Dev: “Yes, it’s possible. Just use an IFrame.”

DeptMgr: “I thought so. Next sprint item …what’s wrong?…you look frustrated”

Me: “Um..no…well, I said it’s not possible. I tried it and it doesn’t work”

Dev: “It’s just an IFrame. They are made to display content from another site.”

Me: “Well, yes, from a standard HTML tag, but what you are seeing is rendered HTML from the content manager’s XML. It implemented its own IFrame under the hood. We already talked about it, remember?”

Dev: “Oh, that’s right.”

DeptMgr: “So it’s possible?”

Dev: “Yea, we’ll figure it out.”

Me: “No…wait…figure what out? It doesn’t work.”

Dev: “We can use a powershell script to extract the data from A and port it to B.”

DeptMgr: “Powershell, good…Next sprint item…”

Me: “Powershell what? We discussed not using powershell, remember?”

Dev: “It’s just a script. Not a big deal.”

DeptMgr: “Powershell sounds like the right solution. Can we move on? Next sprint item….are you OK? You look upset”

Me: “No, I don’t particularly care. We already discussed executing a powershell script that would have to cross two network DMZs. Bill from networking already raised his concern about opening another port and didn’t understand why we couldn’t just click a link. Then Mike from infrastructure griped about another random powershell script running on his servers just for reporting. He too raised his concern about all this work to save one person one click. Am I the only one who remembers this meeting? I mean, I don’t care, I’ll do whatever you want, but we’ll have to open up the same conversations with Networking again.”

Dev: “That meeting was a long time ago, they might be OK with running powershell scripts”

Me: “A long time ago? It was only two weeks ago.”

Dev: “Oh yea. Anyway, let’s update the board. You’ll implement the powershell script and I’ll …”

Me: “Whoa..no…I’m not implementing anything. We haven’t discussed what this mysterious powershell script is supposed to do and we have to get Mike and Bill involved. Their whole team is involved in the migration project right now, so we won’t see them come out into the daylight until next week.”

DeptMgr: “What if you talk to Eric? He knows powershell. OK…next sprint item..”

Me: “Eric is the one who organized the meeting two weeks ago, remember? He didn’t want powershell scripts hitting his APM servers. Am I the only one who remembers any of this?”

Dev: “I’m pretty good with powershell, I’ll figure it out.”

DeptMgr: “Good…now can we move on?”

GAAAHH! I WANT A FLAMETHROWER!!!

OK… feel better, thanks devRant.

I fucking love people like this.

Yesterday I met a 'friend' who I hadn't seen in a very long time. Just a guy I used to know tbh but let's call him Friend anyway. After a while in the conversation this happened...

*Friend doesn't know I have a degree in CS*

Friend: "WHAT?? YOU LIKE PROGRAMMING? NO WAY! ME TOO!"

Me: "THAT'S AWESOME! You've been programming for long?"

Friend: "A little over a year now. I know almost all languages now. C++, C#, Python, Java and HTML. Still a couple left to go. Once you're on the level I achieved programming becomes really, really easy. How long have you been programming?"

Me: "Almost a decade now"

Friend: "Damn dude you must know all languages by now I suppose?"

Me: "I've been mainly doing C++ so not really haha"

Friend: "I can always help when you're struggling with one language. C++ is pretty easy tbh. You should learn others too btw. HTML for example is pretty important because you can program websites with it"

Me: "Yeah... Thanks... So... What project are you working on right now?"

Friend: "I'm making a register page for my very own forum. The only problem I have is that PHP won't save the login details"

Me: "Hahaha I know the feeling. MySQL?"

Friend: "What?"

Me: "What do you use to save your data?"

Friend: "Just a txt file. It's easier that way."

Me: "Hahaha true. Who needs safety right? *smiles*"

Friend: "Actually it's 100% safe because only I can see the txt file so other people can not hack other users."

Me: "Yes! That's great! Cya!"

Friend: "I'm working on a mmorpg too btw! I can learn you to make games if you want. Just call me. Here's my number"

Me: "Alright... Thanks... Bye!"

*Arrives at home*

*Deletes number*

I am not making this up.

I can understand that someone who isn't in the CS industry doesn't take it too seriously and gets hyped when their "Hello World" program works.

I'm fine with that.

The thing that really triggers me is big-headed assholes like this. Like, how much more like an absolute dickhead could you possibly act? Fucking hate people like that.
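The friend's "just a txt file" approach is what a real database with a unique key and hashed passwords replaces. A minimal sketch, with Python's built-in `sqlite3` standing in for MySQL or any other database; the table layout, the function names and the iteration count are hypothetical.

```python
import hashlib
import hmac
import os
import sqlite3

ITERATIONS = 600_000  # assumed PBKDF2 work factor


def open_user_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    # PRIMARY KEY on username: the database itself rejects duplicate registrations.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users ("
        "username TEXT PRIMARY KEY, salt BLOB NOT NULL, pw_hash BLOB NOT NULL)"
    )
    return conn


def _hash(password: str, salt: bytes, iterations: int) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)


def register(conn: sqlite3.Connection, username: str, password: str,
             iterations: int = ITERATIONS) -> bool:
    """Store a new user; return False if the username is already taken."""
    salt = os.urandom(16)
    try:
        with conn:  # commits on success, rolls back on error
            # "?" placeholders: user input is never pasted into the SQL string.
            conn.execute(
                "INSERT INTO users (username, salt, pw_hash) VALUES (?, ?, ?)",
                (username, salt, _hash(password, salt, iterations)),
            )
    except sqlite3.IntegrityError:
        return False
    return True


def login(conn: sqlite3.Connection, username: str, password: str,
          iterations: int = ITERATIONS) -> bool:
    """Check a login attempt against the stored salt and hash."""
    row = conn.execute(
        "SELECT salt, pw_hash FROM users WHERE username = ?", (username,)
    ).fetchone()
    if row is None:
        return False
    salt, stored = row
    return hmac.compare_digest(_hash(password, salt, iterations), stored)
```

`register` and `login` must use the same iteration count; storing it per user, as a self-describing hash string would, is an easy extension.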

Friend: Dude, css is so cool and amazing. I love it

Me: Erm ye, okay...

Friend: I think, im gonna make a css program to save data to database. That would make it even cooler!

Me: ye, okay. Wait what?! Hahahahaha

Friend: ??? Why u laughing

So this was a couple of years ago now. Aside from software development, I also do nearly all the other IT-related stuff for the company, and I specialize in the installation and implementation of electrical data acquisition systems - primarily amperage and voltage meters. I also wrote the software that communicates with this equipment, monitors the incoming and outgoing voltage and current, and alerts various people if there's a problem.

Anyway, all of this equipment is installed into a trailer that goes onto a semi-truck as it's a portable power distribution system.

One time, the computer in one of these systems (we'll call it system 5) got fried and needed to be replaced. It was a very busy week for me, so I pulled the fried computer out without immediately replacing it with a working one. A few days later, system 5 left to work one of our biggest shows of the year - the Academy Awards. We make well over a million dollars from this one show alone.

Come the morning of show day, the CEO of the company is in system 5 (it was on a Sunday, my day off) and went to set up the data acquisition software to get the system ready to go, and finds there is no computer. I promptly get a phone call with lots of swearing and threats to my job. Let me tell you, I was sweating bullets.

After the phone call, I decided I needed to try to save my job. The CEO hadn't told me to do anything, but I went to work, grabbed an old Windows XP laptop that was gathering dust, and installed my software on it. I then had to build the configuration file specific to system 5 from memory. Each meter speaks the ModBus over TCP/IP protocol, so each meter has a different bus ID. Fortunately, I'm pretty anal about this and tend to follow a specific method of ID numbering.
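For readers who haven't touched ModBus over TCP/IP (Modbus TCP): every request carries a one-byte unit identifier in its MBAP header, which is where a per-meter bus ID ends up. A minimal sketch, using only `struct`, that builds a "Read Holding Registers" request (function code 0x03) and parses the reply; it opens no socket, and which registers hold amperage or voltage depends on the meter, so the addresses here are hypothetical.

```python
import struct
from typing import List

READ_HOLDING_REGISTERS = 0x03  # Modbus function code


def build_read_request(transaction_id: int, unit_id: int, start: int, count: int) -> bytes:
    """Build a Modbus TCP 'Read Holding Registers' request: MBAP header + PDU."""
    if not 1 <= count <= 125:
        raise ValueError("a single request may read 1 to 125 registers")
    # MBAP header: transaction id, protocol id (always 0), number of bytes that follow
    # (unit id + function code + start address + quantity = 6), unit id (the meter's bus ID).
    return struct.pack(">HHHBBHH", transaction_id, 0, 6, unit_id,
                       READ_HOLDING_REGISTERS, start, count)


def parse_read_response(frame: bytes, expected_unit: int) -> List[int]:
    """Return the 16-bit register values from a 'Read Holding Registers' reply."""
    if len(frame) < 9:
        raise ValueError("reply too short")
    _tid, protocol, _length, unit, function, byte_count = struct.unpack(">HHHBBB", frame[:9])
    if protocol != 0 or unit != expected_unit:
        raise ValueError("reply is not from the expected meter")
    if function == READ_HOLDING_REGISTERS | 0x80:
        # Exception replies carry an exception code where the byte count would be.
        raise RuntimeError(f"meter returned Modbus exception code {byte_count}")
    if function != READ_HOLDING_REGISTERS or len(frame) != 9 + byte_count:
        raise ValueError("malformed reply")
    return list(struct.unpack(f">{byte_count // 2}H", frame[9:]))
```

For example, `build_read_request(1, 5, 0, 2)` asks the meter with bus ID 5 for two registers starting at address 0. A consistent ID-numbering scheme, like the one described above, is what makes a per-system configuration reproducible from memory.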

Once I got the configuration file done and tested the software to see if it would even run properly on Windows XP (it did!), I called the CEO back and told him I had a laptop ready to go for system 5. I drove out to Hollywood and the CFO (who was there with the CEO) had to walk about a mile out of the security zone to meet me and pick up the laptop.

I told her I had put a fresh install of the data acquisition software on the laptop and that it was already configured for system 5 - it *should* just work once she plugged it in.

I didn't get any phone calls after dropping off the laptop, so once I got home I called the CFO and asked her if everything was working okay. She told me it worked flawlessly; it was Plug 'n Play, so to speak. She even said she was impressed: she had thought she'd have to call me to iron out one or two configuration issues to get it talking to the meters.

All in all, crisis averted! At work on Monday, my supervisor told me that my name was mud with the CEO that day, but I still work here!

Here's a picture of the inside of system 8 (similar to system 5 - same hardware).


Started talking with someone about general IT stuff. At some point we came to the subject of SSL certificates and he mentioned that 'that stuff is expensive' and so on.

I kindly told him about Let's Encrypt and that it's free, and he reacted: "Then I'd rather have no SSL, free certificates make you look like you're a cheap ass".

So I explained how login/registration forms work and said that they really need SSL, whether the certificate is free or not.

"Nahhh, then I'd still rather don't use SSL, it just looks so cheap when you're using a free certificate".

Hey you know what, what about you write that sentence on a whole fucking pack of paper, dip it into some sambal, maybe add some firecrackers and shove it up your ass? Hopefully that will bring some sense into your very empty head.

Not putting a secure connection on a website at all, especially when it has a FUCKING LOGIN/REGISTRATION FUNCTION (!?!?!?!!?!), is simply not fucking done in the year TWO THOUSAND FUCKING SEVENTEEN.

'Ohh but the NSA etc won't do anything with that data'.

Has it, for one tiny motherfucking second, come to mind that there's also a thing called hackers? Malicious hackers? If your users are on hacked networks, it's easy as fuck to steal their credentials, inject shit and even deliver fucking EXPLOIT KITS.

Oh, and you bet your ass the NSA will save that data; they have a whole motherfucking database of passwords they can search through with XKeyScore (Snowden leaks).

Motherfucker.
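On the "free certificates look cheap" point: a client validates a Let's Encrypt certificate the same way as any other domain-validated one, by checking the chain against its trusted roots and checking the hostname. The price never enters into it. A minimal sketch of the client side in Python's standard library; the `require_https` guard is a hypothetical helper, not part of any framework.

```python
import ssl
from urllib.parse import urlsplit


def require_https(url: str) -> str:
    """Refuse to send credentials anywhere but an https:// URL (hypothetical guard)."""
    if urlsplit(url).scheme.lower() != "https":
        raise ValueError(f"refusing to submit credentials over an insecure URL: {url}")
    return url


def verifying_tls_context() -> ssl.SSLContext:
    # create_default_context() loads the system's trusted CA certificates and
    # enables both certificate verification and hostname checking.
    return ssl.create_default_context()
```

Passing that context to an HTTPS client means a forged certificate on a hacked network fails the handshake instead of silently receiving the user's password.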

Ignorant salespeople and PMs who confuse a program's UI with the whole thing and ask you: why did it take you so long, you just had to add a save button?

Yeah, asshole, adding a call-to-action style save button only took me 10 minutes; making it save your fucking data reliably took me a whole week.
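One small piece of what "save reliably" can involve is making sure a crash mid-save never leaves a half-written file behind. A minimal sketch of the write-to-temp-then-rename pattern in Python; the JSON format and the function name are assumptions, and a real application would also have to think about concurrent writers and backups.

```python
import json
import os
import tempfile


def save_atomically(path: str, data: dict) -> None:
    """Write JSON so readers see either the old file or the complete new one."""
    directory = os.path.dirname(os.path.abspath(path))
    # Temp file in the same directory, so the final rename stays on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-", suffix=".json")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as tmp:
            json.dump(data, tmp)
            tmp.flush()
            os.fsync(tmp.fileno())  # push the bytes to disk before the rename
        # os.replace swaps the file in a single step (atomic on POSIX filesystems).
        os.replace(tmp_path, path)
    except BaseException:
        # Remove the temp file if anything failed before the rename.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise
```

Stricter durability guarantees would also fsync the containing directory after the rename; that step is platform-specific and left out here.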

"could you please just use the standard messaging/social networking thingies? That way it'll be way easier to communicate!!"

Oh, I don't mind using the standard tools/services everyone uses at all.

Just a few requirements: they don't save information that doesn't need to be saved, they leave users in control of their data (through end-to-end encryption, for example), and they aren't integrated into mass surveillance networks.

Aaaaaand all the standard options everyone uses are gone 😩

I have to let it out. It's been brewing for years now.

Why does MySQL still exist?

Really, WHY?!

It was lousy as hell 8 years ago, and since then it hasn't changed one bit. Why do people use it?

First off, it doesn't conform to standards, allowing you to aggregate without explicitly grouping, in which case you get god knows what type of shit in there, and then everybody asks why the numbers are so weird.

Second... it's $(CURRENT_YEAR), for fuck's sake! This is the time of large data sets and complex requirements on those data sets. Just an hour on SO will show you dozens of poor people trying to do with MySQL what MySQL just can't do because it's stupid.

Recursion? 4 lines in any other large RDBMS, and tough luck in MySQL. So what next? Are you supposed to use Lemograph alongside MySQL just because you don't know that PostgreSQL is free and super fast?
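For reference, this is roughly what "4 lines of recursion" looks like: a recursive common table expression that walks a parent/child tree. The sketch runs it through Python's built-in `sqlite3` so it is self-contained; the `category` table is hypothetical. (MySQL itself gained `WITH RECURSIVE` in version 8.0.)

```python
import sqlite3
from typing import List


def descendant_ids(conn: sqlite3.Connection, root_id: int) -> List[int]:
    """Return the ids of every node below root_id in a parent_id tree."""
    query = """
        WITH RECURSIVE subtree(id) AS (
            SELECT id FROM category WHERE id = ?          -- anchor: the starting node
            UNION ALL
            SELECT c.id FROM category AS c
            JOIN subtree AS s ON c.parent_id = s.id       -- step: children of rows found so far
        )
        SELECT id FROM subtree WHERE id <> ? ORDER BY id
    """
    return [row[0] for row in conn.execute(query, (root_id, root_id))]
```

`UNION ALL` assumes the tree has no cycles; switching to `UNION` discards duplicate rows, which makes the query terminate even on cyclic data.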

Window functions to mix rows and do neat stuff? Naaah, who the hell needs that, right? Who needs to find the products ordered by the customer with the biggest order anyway? Oh you need that actually? Well you should write 3-4 queries, nest them in an incredibly fucked up way, summon a demon and feed it the first menstrual blood of your virgin daughter.
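The "products ordered by the customer with the biggest order" question fits in one query with a window function. A minimal sketch via Python's `sqlite3` (window functions need SQLite 3.25 or newer; MySQL added them in 8.0); the `orders` table and its columns are hypothetical.

```python
import sqlite3
from typing import List


def products_of_biggest_orderer(conn: sqlite3.Connection) -> List[str]:
    """Products bought by whoever placed the single largest order (ties included)."""
    query = """
        WITH ranked AS (
            SELECT customer,
                   RANK() OVER (ORDER BY amount DESC) AS amount_rank  -- 1 = biggest order
            FROM orders
        )
        SELECT DISTINCT o.product
        FROM orders AS o
        JOIN ranked AS r ON r.customer = o.customer
        WHERE r.amount_rank = 1
        ORDER BY o.product
    """
    return [row[0] for row in conn.execute(query)]
```

`RANK()` rather than `ROW_NUMBER()` is a deliberate choice: if two customers tie for the biggest order, both customers' products are returned.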

There used to be some excuses in the past: "but but but, shared hosting only has MySQL". Which was wrong, by the way. It was only true for the big hosting names, and for people who didn't bother searching for alternatives. And now it's even better, since VPS and PaaS solutions are available at prices lower than shared hosting, and they give you better speed, performance and stability than shared hosting ever did.

"But but but WordPress uses MySQL" - well then kill it! There are other platforms out there that aren't just outrageously horrible on the inside and outside. WordPress is crap, and work on it pays crap. Learn Laravel, Symfony, Zend, or even Drupal. You'll be able to create much more value than those shitty WordPress sites that nobody ever visits or spends money on.

"But but but my client wants some static pages presented beside their online shop" - so why use WordPress then? Static pages are static pages. Whip up a basic MVC set-up in literally any framework out there, avoid MySQL, include a basic ACL package for that framework, create a controller where you add a CKEditor to edit page content, stick a nice template from ThemeForest on that page, and be done with that shit! Save the mock-up for later use if you do that stuff often. Or if you're too lazy to even do that, then take up Drupal.

But sure, this is going a bit beyond the scope. I actually don't care where you store content for your few pages. It can be a JSON file for all I care. But if I catch you building an e-commerce solution, or anything other than plain text storage, on MySQL, I'll literally start re-assessing your ability to think rationally.

// sorry, again a story not a rant

$category->type = 'Story';

$category->save();

Today at work I got a strange email

'about your msi laptop'

(Some background: a few months ago I went on vacation and left my work laptop at home. Long story short, someone broke in and stole my MSI laptop.)

So this email caught my interest. I opened it, and the content was something like:

Hi! My name is x, I clean/repair laptops part-time and I noticed your personal information on this laptop. Normally people wipe their data from their laptop before selling it, so this is just a double check: if the laptop was stolen, please call me on xxx

If I hear nothing, I'll assume it's alright and will wipe your data

So of course I immediately called him, and after the conversation I informed the police, who are now working on the case.

This motherfucker tried to fuck me!

Ok, here's the full story.

I applied for a quick freelance job. He told me I just had to implement the Stripe payment gateway. After I finished that, he asked me to also save the user data from the payment to the database. I added that. The whole time, he wanted me to work on his ugly project on a rotten server through cPanel, but I refused and instead uploaded a showcase environment to my own server.

After he tested my code and everything was working as expected, he again tried to make me put the code straight into his retarded project before payment. When I mentioned that he had to pay me first, he started bitching that he wouldn't pay in advance.

At this point I left that fucker. My gut feeling was right: this bitch never had any intention of paying for my work. He just wanted to steal my code.

Fuck you. I hope you get eaten in your bed by very hungry slugs one day. Like this one guy here on devRant.

"Hey nephew, why doesn't the FB app work. It shows blank white boxes?"

- It can't connect or something? (I stopped using the FB app in 2013.)

"What is this safe mode that appeared on my phone?!"

- I don't know. I don't hack my smartphone that much. Well, I actually do have a customised ROM. But stop! I'm pecking my keyboard most of the time.

"Which of my files should I delete?"

- Am I supposed to know?

"Where did my Microsoft Word Doc1.docx go?"

- It lets you choose the location before you hit save.

"What is 1MB?"

- Search these concepts on Google. (some of us did not have access to the Internet when we learned to do basic computer operations as curious kids.)

"What should I search?"

- ...

"My computer doesn't work.. My phone has a virus. Do you think this PC they are selling me has a good spec? Is this Video Card and RAM good?"

- I'm a programmer. I write code. I think algorithmically and solve programming problems efficiently. I analyse concepts such as abstraction, algorithms, data structures, encapsulation, resource management, security, software engineering, and web development. No, I will not fix your PC.

Citizens are advised not to use encryption as decrypting data takes too much time and is costly.

Please spread awareness and save money.

Thank you for cooperating, have a nice day :)

Actual rant time. And oh boy, is it pissy.

If you've read my posts, you've caught glimpses of this struggle. And it's come to quite a head.

First off, let it be known that WINDOWS Boot Manager ate GRUB, not the other way around. Windows was the instigator here. And when I reinstalled GRUB, Windows threw a tantrum and now won't boot. I went through every obvious fix, everything tech support would ever think of, before I called them. I just got this laptop this week, so it must be under warranty, right? Wrong. The reseller only accepts it unopened, and the manufacturer only covers hardware issues. I found this out after screaming my way past a pretty idiotic 'customer representative' ("Thank you for answering basic questions. Thank you for your patience. Thank you for repeating obvious information I didn't catch the first three times you said it. Thank you for letting me follow my script." For real. Are you tech support, or emotional support? You sound like a middle school counselor.) to an xkcd-shibboleth type 'advanced support'. All of this only to be told, "No, you can't fix it yourself, because we won't give you the license key YOU already bought with the computer." And we already know there's no way Microsoft is going to swoop in and save the day. It's their product that's so faulty in the first place. (Debian is perfectly fine.)

So I found a hidden partition with a single file called 'Image' and I'm currently researching how to reverse-engineer WIM and SWM files to basically replicate Dell's manufacturing process because they won't take it back even to do a simple factory reset and send it right back.

What the fuck, Dell.

As for you, Microsoft, you're going to make it so difficult to use your shit product that I have to choose between an arduous, dangerous, and likely illegal process to reclaim what I ALREADY BOUGHT, or just _not use_ a license key? (There's no penalty for that, by the way.) Why am I going so far out of my way to legitimize myself to you, when you're probably selling backdoors and private data of mine anyway? Why do I owe you anything?

Oh, right. Because I couldn't get Fallout 3 to run in Wine. Because the game industry follows money, not common sense. Because you marketed upon idiocy and cheapness and won a global share.

Fuck you. Fuck everything. Gah.

VS Code is pretty good, though.

I'm convinced code addiction is a real problem and can lead to mental illness.

Dev: "Thanks for helping me with the splunk API. Already spent two weeks and was spinning my wheels."

Me: "I sent you the example over a month ago, I guess you could have used it to save time."

Dev: "I didn't understand it. I tried getting help from NetworkAdmin-Dan, SystemAdmin-Jake, they didn't understand what you sent me either."

Me: "I thought it was pretty simple. Pass it a query, get results back. That's it"

Dev: "The results were not in a standard JSON format. I was so confused."

Me: "Yea, it's sort-of JSON. Splunk streams the result as individual JSON records. You only have to deserialize each record into your object. I sent you the code sample."

Dev: "Your code didn't work. Dan and Jake were confused too. The data I have to process uses a very different result set. I guess I could have used it if you wrote the class more generically and had unit tests."

<oh frack...he's been going behind my back and telling people smack about my code again>

Me: "My code wouldn't have worked for you, because I'm serializing the objects I need and I do have unit tests, but they are only for the internal logic."

Dev: "I don't know, it confused me. Once I figured out the JSON problem and wrote unit tests, I really started to make progress. I used a tuple for this ... functional parameters for that...added a custom event for ... Took me a few weeks, but it's all covered by unit tests."

Me: "Wow. The way you explained the project was: get data from Splunk and populate data in SQL Server. With the code I sent you, it sounded like a 15-minute project."

Dev: "Oooh nooo...its waaay more complicated than that. I have this very complex splunk query, which I don't understand, and then I have to perform all this parsing, update a database...which I have no idea how it works. Its really...really complicated."

Me: "The Splunk query returns what... 4 fields... and DBA-Joe provided the upsert stored procedure. Sounds like a 15-minute project."

Dev: "Maybe for you...we're all not super geniuses that crank out code. I hope to be at your level some day."

<frack you ... condescending a-hole ...you've got the same seniority here as I do>

Me: "No seriously, the code I sent would have got you 90% done. Write your deserializer for those 4 fields, execute the stored procedure, and call it a day. I don't think the effort justifies the outcome. Isn't the data for a report they'll only run every few months?"

Dev: "Yea, but Mgr-Nick wanted unit tests and I have to follow orders. I tried to explain the situation, but you know how he is."

<fracking liar..Nick doesn't know the difference between a unit test and breathalyzer test. I know exactly what you told Nick>

Dev: "Thanks again for your help. Gotta get back to it. I put a due date of April for this project and time's running out."

APRIL?!! Good Lord he's going to drag this intern-level project for another month!

After he left, I dug around, found the Splunk query and the upsert stored proc, and yep, in about 15 minutes I was done.
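The "15-minute project" from this exchange, sketched under stated assumptions: each line of the Splunk output is one JSON object whose row fields sit under a `result` key (as the record-by-record deserialization described above suggests), the 4 field names are hypothetical, and SQLite's `INSERT ... ON CONFLICT DO UPDATE` (SQLite 3.24+) stands in for SQL Server and DBA-Joe's upsert stored procedure.

```python
import json
import sqlite3
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class HostStat:
    """Hypothetical 4-field record; the real query's field names are not given."""
    host: str
    day: str
    errors: int
    requests: int


def parse_records(lines: Iterable[str]) -> Iterator[HostStat]:
    """Deserialize one JSON object per line instead of the whole body at once."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        result = record.get("result")
        if result is None:  # lines without a "result" object carry no row
            continue
        # int(): values may arrive as strings, so convert explicitly.
        yield HostStat(result["host"], result["day"],
                       int(result["errors"]), int(result["requests"]))


def upsert(conn: sqlite3.Connection, rows: Iterable[HostStat]) -> None:
    """Insert new rows or update existing ones (stand-in for the stored procedure)."""
    with conn:
        conn.executemany(
            "INSERT INTO host_stats (host, day, errors, requests) VALUES (?, ?, ?, ?) "
            "ON CONFLICT(host, day) DO UPDATE SET "
            "errors = excluded.errors, requests = excluded.requests",
            ((r.host, r.day, r.errors, r.requests) for r in rows),
        )
```

The `ON CONFLICT(host, day)` clause requires a primary key or unique index on those two columns in the target table.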

> be me

> install linux on encrypted drive

> takes 8 hours to fill the drive with fake data so theres no chance of data leakage

> save encryption password to phone

> phone doesnt actually save password

> realize you dont have access to pc anymore

> cry

> reinstall linux

My 1.5-year-old 1TB SSD (Samsung EVO 850) just died unexpectedly. It might still be under warranty.

Fortunately, the only data I lost was my Debian install, some Steam games, bookmarks, and a few unfinished projects.

I kept everything important on a backup spinning drive -- which is also dying. Joy. But I might be able to save its data.

Never gonna happen:

* Port our API to graphql. Or even make it just vaguely rest-compliant. Or even just vaguely consistent.

* Migrate from mysql to postgres. Or any sane database.

* Switch codebase from PHP to... well, anything else.

* Teach coworkers to not commit passwords, API keys, etc.

* Teach coworkers to write serious commit messages instead of emoji spam

* Get a silent work environment.

* Get my office to serve better snacks than fermented quinoa spinach bars and raw goat milk kale smoothies

* Find an open source IDE with good framework magic support. Jetbrains, I'll give you my left testicle if you join the light side of the force.

* Buy 2x3 equally sized displays. I'm using 6, but they're various sizes/resolutions.

* Master Rust.

* Finish building my house. I completely replaced the roof, but still have to dig out a cellar (to hide my dead coworkers).

* Repair/replace the foundation of my house (I think Rust is easier)

* Get slim and muscular.

Realistically:

* Get a comfortable salary increase, focus more on platform infrastructure, data design, coaching

* Get fat(ter). Eating, sitting, gaming, coding and sleeping are my hobbies after all.

* Save up for the inevitable mental breakdown-induced retirement.

Today was a bad day.

- only had 3 hours of sleep

- 1.5h exam in the morning

- work in the afternoon until 8pm

- 1 drive crashed in a RAID5 array

- wasted hours of data copying

- my hands and arms got really dirty from all that nasty trump-face-colored dust in the server room

- all quiet on the western front

- I have to get up in 4 hours again to start a new copying task

- I only knew it was friday today because the devRant meme game was reaching the weekly peak

- lists can save lives

- good night 😴
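Why a RAID 5 array survives one dead drive (and why recovery means hours of copying): each stripe stores a parity block that is the XOR of its data blocks, so any single missing block is the XOR of all the survivors. A simplified sketch in Python that ignores parity rotation across drives and real block sizes; the function names are hypothetical.

```python
from typing import List, Optional


def xor_blocks(blocks: List[bytes]) -> bytes:
    """XOR equal-sized blocks byte by byte."""
    size = len(blocks[0])
    if any(len(block) != size for block in blocks):
        raise ValueError("all blocks in a stripe must have the same size")
    out = bytearray(size)
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


def make_stripe(data_blocks: List[bytes]) -> List[bytes]:
    """Append the parity block: the XOR of all data blocks."""
    return data_blocks + [xor_blocks(data_blocks)]


def rebuild(stripe: List[Optional[bytes]]) -> List[bytes]:
    """Recover the single missing block (None) by XOR-ing the survivors."""
    missing = [i for i, block in enumerate(stripe) if block is None]
    if len(missing) != 1:
        raise ValueError("RAID 5 parity needs exactly one missing block to rebuild")
    survivors = [block for block in stripe if block is not None]
    restored = list(stripe)
    restored[missing[0]] = xor_blocks(survivors)
    return restored
```

A second drive failure before the rebuild finishes leaves two unknowns per stripe, which single parity cannot solve.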

I have this little hobby project going on for a while now, and I thought it's worth sharing. Now at first blush this might seem like just another screenshot with neofetch.. but this thing has quite the story to tell. This laptop is no less than 17 years old.

So, a Compaq nx7010, a business laptop from 2004. It has had plenty of software and hardware mods alike. Let's start with the software.

It's running run-of-the-mill Debian 9 with a custom kernel. It's on that version of Debian because of bugs in the network driver (ipw2200) in Debian 10 that cause it to disconnect after a day or so. The issue is less severe in Debian 9 and seemingly fixed by upgrading to a custom kernel. The kernel is also one of the places where you can save heaps of space by doing it yourself: the kernel package itself is 8.4MB for this one, and the headers are 7.4MB. The stock kernels (4.19 at downstream revisions 9, 10 and 13), on the other hand, took up a whole GB of space combined. That is how much I've been able to remove, even from headless systems. The stock kernels are incredibly bloated for what they are.

Other than that, most of the data storage is done through NFS over WiFi, which is actually faster than what is inside this laptop (a CF card which I will get to later).

Now let's talk hardware. At age 17, you can imagine that it has seen quite a bit of maintenance. The easiest mod is probably the flash mod. These old laptops use IDE for storage rather than SATA, and the nice thing about IDE is that it actually lives on to this very day in CF cards, which support a "True IDE" mode that uses the same signals. So you can use a passive IDE-CF adapter and plug in a CF card. Easy!

The next thing I want to talk about is the battery, and um.. why that one is a bad idea to mod. Finding replacements for such old hardware.. good luck with that. Your other option is something called recelling, where you disassemble the battery and, well, replace the cells. The problem is that those battery packs are built like tanks, and disassembly will likely result in a broken battery housing (which you'll still need). Also, the controllers inside those battery packs are either too smart or too stupid to play nicely with new cells. On this laptop at least, the new cells still had the perceived capacity of the old ones, while obviously the voltage of the cells themselves didn't change at all. The laptop thought the batteries were done for, despite them still being chock full of juice. Then I tried to recalibrate them in the BIOS and fried the battery controller. Do not try to recell the battery unless you already have a spare. The controllers and battery housings are complete and utter dogshit.

Next up is the display backlight. Originally this laptop used a CCFL backlight, which is a tiny tube driven at around 2000 volts. Its controller is connected with 7, 6, 4 or 3 wires depending on the configuration; these are all related, and I will get to them. Signs of it dying are a red shift in color and, eventually, the backlight going out until you close the lid and open it up again. The reason is that the voltage required to keep the CCFL "excited" rises over time, beyond what the controller can deliver.

So, the configurations are:

* 7-pin: 2x VCC (12V), 2x enable (on or off), 1x adjust (analog brightness), and 2x ground.

* 6-pin: the same, minus 1 enable line.

Those are the configurations you'll find with CCFL. Then came LED backlighting, which requires much less power to run:

* 4-pin: drops one VCC and one ground line from the 6-pin layout.

* 3-pin: also drops the adjust line, which you can just short to the enable line.

There are some other mods, but I'm running out of characters. Why am I telling you all this? Because this laptop doesn't feel any different to use than the ThinkPad x220 and IdeaPad Y700 I have on my desk (with 6c12t, 32G of RAM, ~1TB of SSDs and 2TB HDDs). A hefty setup compared to a very dated one, yet they feel the same. It can do web browsing, I can chat on Telegram with it, and I can do programming on it. So, if you're looking for a hobby project, maybe some kind of restrictions on your hardware to spark that creativity that makes code better, I can highly recommend it. I think I'm almost done with this project, and it was heaps of fun :D


Imagine, you get employed to restart a software project. They tell you, but first we should get this old software running. It's 'almost finished'.

A WPF application running on an SoC with a 10" touchscreen on Windows 10, an embedded solution to control a machine that has already been sold to customers. You think, 'OK, WTF, why is this happening?'

You open the old software - it crashes immediately.

You open it again, but this time you're clever enough to manually copy an XML file into the root folder, and you see all of its beauty for the first time (after waiting for the frozen GUI to become responsive):

* a static logo of the company, taking about 1/5 of the screen horizontally

* circle buttons

* and a navigation interface made by a child in the early 90s

So you click a button and - it crashes.

You restart the software.

You type something like 'abc' in a 'numberfield' - it crashes.

OK ... now you start the application again and try to navigate to another view - and? of course it crashes again.

You are excited to finally open the source code of this masterpiece.

Thank you, Jesus: the 'dev' who did this didn't forget to put all the business logic in the code-behind of the views.

He even managed to put 6 views into one and put all their logic in the code-behind!

He doesn't know what binding is or a pattern like MVVM.

But hey, there is also no validation of anything, not even checks for null.

He was clever enough to use the GUI as his data store, so there is a lot of parsing going on every time a value changes.

A thread must be something he never heard about - so thats why the GUI always freezes.

You tell them: It would be faster to rewrite the whole thing, because you wouldn't call it even an alpha. Nobody listenes.

Time passes by, new features must be implemented in this abomination, you try to make the cripple walk and everyone keeps asking: 'When we can start the new software?' and the guy who wrote this piece of shit in the first place, tries to give you good advice in coding and is telling you again: 'It was almost finished.' *facepalm*

And you? You would like to do him and humanity a big favour by hiting him hard in the face and breaking his hands, so he can never lay a hand on any keyboard again, to produce something no one serious would ever call code.4 -

Customer : c

Me : m

*Few weeks ago*

C: the server is slow, it sometimes takes 7 seconds before I see our data

(the project is 7+ years old and wasn't written by someone who is very good at SQL)

M: yeah I see that, our servers are busy with this one "process" (SQL query)

C: make it faster

M: well that's possible but it will take a few days (massive SQL spaghetti that I first have to untangle)

C: 😡 nvm then

*Yesterday*

C: server is down!

M: 🤔 *loads data from server and waits ~ 7 seconds*

M: Well what's the problem?

C: I need the data but it's so slow

WELL, YOU MINDLESS IMBECILE... Something being slow doesn't mean our goddamn production server is down!

That just means you have to give us a day or two so we can optimise the (ALSO AT YOUR REQUEST) rushed project... and save YOUR money that YOU waste on processing time on our server...

-

Short version:

Dear devRant diary,

today I was stupid.

The End.

Full version:

I am working on a messaging system, trying to keep the overhead of sending data as low as possible. So of course there are asynchronous calls and some templating. But that's just the setting of the rant: I designed an architecture to save conversations in a database. Working with transactions in PDO, I wrote a query which, in my eyes, should have worked fine. But the result just didn't appear in the table. So I started debugging the data. Recreated the table. Rewrote the query. Went to bed. Woke up. Kept trying to make it work. And in the end I realized I had simply forgotten to commit the transaction.
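The original was PHP's PDO, where the missing step is `$pdo->commit()` after `$pdo->beginTransaction()`. The same trap can be reproduced with Python's built-in sqlite3 module (a swapped-in illustration, not the author's code): a second connection sees nothing until the writer commits. The `message` table and the database file name are hypothetical.

```python
import os
import sqlite3
import tempfile


def demo_forgotten_commit() -> tuple[int, int]:
    """Return (rows a second connection sees before commit, rows it sees after)."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "chat.db")  # hypothetical database file
        writer = sqlite3.connect(path)
        reader = sqlite3.connect(path)
        try:
            writer.execute("CREATE TABLE message (id INTEGER PRIMARY KEY, body TEXT)")
            writer.commit()

            # The sqlite3 module implicitly opens a transaction before this INSERT,
            # much like an explicit PDO::beginTransaction() call in PHP.
            writer.execute("INSERT INTO message (body) VALUES (?)", ("hello",))
            before = reader.execute("SELECT COUNT(*) FROM message").fetchone()[0]

            # The step that was missing: without it the row never reaches the table.
            writer.commit()
            after = reader.execute("SELECT COUNT(*) FROM message").fetchone()[0]
            return before, after
        finally:
            writer.close()
            reader.close()
```

In PDO terms the fix is calling `$pdo->commit()` once the inserts succeed, and `$pdo->rollBack()` if any of them fail.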

How dumb can you be? Way too much time went into that mistake. Is there a hole somewhere? I want to crawl into it.

-

3 rants for the price of 1, isn't that a great deal!

1. HP, you braindead fucking morons!!!

So recently I disassembled this HP laptop of mine to unfuck it at the hardware level. It had some issues with the hinge that I had to solve, so I had to disassemble not only the bottom of the laptop but also the display panel itself. Turns out that HP, being the certified enganeers they are, made the following fuckups, with probably many more that I haven't even noticed yet.

- They used fucking glue to ensure that the bottom of the display frame stays connected to the panel. Cheap solution to what should've been "MAKE A FUCKING DECENT FRAME?!" but a royal pain in the ass to disassemble. Luckily I was careful and didn't damage the panel, but the chance of that happening was most certainly nonzero.

- They connected the keyboard's ribbon cables in such a way that you have to reach all the way into the space between the keyboard and the motherboard to connect the bloody things. And adding some extra slack to the ribbon cables, so there's enough room during servicing to actually connect them easily? As Carlos Mantos would say: M-m-M, nonoNO!!!

- Oh, and let's not forget an old flaw that I noticed ages ago in this turd. The CPU goes straight to 70°C during boot-up, but turning on the fan? Again, M-m-M, nonoNO!!! Let's just get the bloody thing to overheat, freeze completely and force the user to power cycle the machine, right? That's gonna be a great way to make them satisfied, RIGHT?! NO, MOTHERFUCKERS, AND I WILL DISCONNECT THE DATA LINES OF THIS FUCKING THING TO MAKE IT SPIN ALL THE TIME, AS IT SHOULD!!! Certified fucking braindead abominations of engineers!!!

Oh, and not only that, this laptop is outperformed by a Raspberry Pi 3B in performance, thermals, price and product quality. A FUCKING SINGLE-BOARD COMPUTER!!! Isn't that a great joke? Someone here mentioned earlier that HP and Acer seem to have been competing for a long time to make the shittiest products possible, and boy, they fucking do. If there's anything that makes both of those shit companies remarkable, that'd be it.

2. If I want to conduct a pentest, I don't want to have to relearn the bloody tool!

Recently I ran a Burp Suite test to see how the devRant web app logs in, but because my Burp Suite is the Community Edition, I couldn't save the project. Fucking amazing, thanks PortSwigger! And I couldn't reproduce the results anymore due to what I think is a change in the web app. But I'll get back to that later.

So I fired up bettercap (which works at lower network layers and can conduct ARP poisoning and DNS cache poisoning) with the intent to ARP poison my phone and get the results straight from the devRant Android app. I haven't used this tool since around 2017, because I kind of lost interest in offensive security. When I fired it up again a few days ago in my PTbox (a VM somewhere else on the network) and today again on my newly recovered HP laptop, I noticed that both hosts now have an updated version of bettercap in which the options have completely changed. It now has different command-line switches and an interactive mode. Needless to say, I have no idea how to use this bloody thing anymore and don't feel like learning it all over again for a single test. Maybe this is why users often dislike UI changes, and why some sysadmins refrain from updating their servers? When you have users of any kind, you should always honor their installations and give them time to change their individual configurations (tell them that they should!). In other words, give them a grace period, and allow for backwards compatibility for as long as feasible.

3. devRant web app!!

As mentioned earlier, I tried to capture the web app's login flow with Burp Suite, but every time I try to log in with its proxy enabled, it doesn't open the login form and instead just makes a GET request to /feed/top/month?login=1 without ever letting me actually log in. This happens in both Chromium and Firefox, on both Windows and Arch Linux. Clearly this is a change to the web app, and a very undesirable one, especially considering that the login flow for the API isn't documented anywhere as far as I know.

So, can this update to the web app be rolled back or merged back to an older version of that login flow, or can I at least find out how I'm supposed to log in to this API so I can start developing my own client?

-

For over a week I've been listening to a senior dev ("Bob") continually make fun of another not-quite-a-senior dev ("Tom") over a performance bug in his code. "If he did it right the first time...", "Tom refuses to write tests... that's his problem", "I would have wrote the code correctly..." all kinds of passive-aggressive put-downs. Bob then brags that without him helping Tom, the application would have been a failure (really building himself up).

Bob is out of town, and Tom asked me a question about logging performance data in his code. I look and see Bob has done nothing... nothing at all to help Tom. Tom wrote his own JSON and XML parsers (the data comes from two different sources) and all kinds of IO stream plumbing code.

I used Visual Studio's feature for generating classes from JSON/XML, and used the XmlSerializer and Newtonsoft.Json to handle the conversion plumbing.

With several hundred lines gone (down to one line each for XML/JSON -> object), I wrote unit tests around the business transaction and integration tests for the service and database access. Maybe a couple of hours' worth of work.
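The post's tools are C# (Visual Studio's generated classes, XmlSerializer, Newtonsoft.Json). As a rough Python analogue, not the author's code, the standard library's `json` and `xml.etree.ElementTree` modules give the same "one call per format into a typed object" shape. The `Sample` type and its fields are hypothetical, since the real payloads aren't shown.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class Sample:
    # Hypothetical record shape; the real JSON/XML schemas aren't in the post.
    name: str
    duration_ms: float


def sample_from_json(text: str) -> Sample:
    # One library call does all the parsing; we only map fields.
    data = json.loads(text)
    return Sample(name=data["name"], duration_ms=float(data["duration_ms"]))


def sample_from_xml(text: str) -> Sample:
    # ElementTree parses the document; findtext() reads each child element's text.
    root = ET.fromstring(text)
    return Sample(name=root.findtext("name"), duration_ms=float(root.findtext("duration_ms")))
```

With the parsing delegated, the remaining code is small enough that unit tests can target the business logic instead of stream plumbing.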

I'm 100% sure Bob knew Tom was heading in a bad direction (maybe even pushed him in that direction), just so he could swoop in and "save the day" in front of Tom's manager at some future point.

This morning's standup...

Boss: "You're helping Tom since Bob is on vacation? What are you helping with?"

Me: "I refactored the JSON and XML data access, wrote initial unit and integration tests. Tom will have to verify, but I believe any performance problem will now be isolated to the database integration. The problem Bob was talking about on Monday is gone. I thought spending time helping Tom was better than making fun of him."

<couple seconds of silence>

Boss: "Yeah... want to let you know, I really, really appreciate that."

Bob, put people first and everyone wins.

-

Forgive me, Father, for I have sinned. A lot, actually, but I'm here for technical sins. Okay, a particular series of technical sins. Sit your ass back down, padre, you signed up for this shit. Where was I? Right, it has been 11429 days since my last confession. May this serve as equal parts rant, confession, and record for the poor SOB who comes after me.

Ended up in a job where everything was done manually or controlled by rickety Access "apps". Many man-hours were wasted sitting and waiting for the main system to spit out a query download so it could be parsed by hand or loaded into one of the aforementioned apps, which had a nasty habit of locking up the aged hardware we were allowed. Updates to the system were done through an awful utility that tended to cut out silently, fail loudly and randomly, or post data horrifically wrong.

Fuck that noise. Floated the idea of automating downloads and uploads to bossman. This is where I learned that the main system had no SQL socket by default, but the vendor managing the system could provide one for an obscene amount of money. There was no buy-in from above; not worth the price.

Automated it anyway. The main system had a free-form entry field, ostensibly for handwriting SELECT queries. Using Python, AutoHotkey, and glorified copy-pasting, it worked after a fashion. Showed the time saved by not having to do downloads manually. Got us the buy-in we needed; bigwigs start negotiating with the vendor, and I'm told to start developing something based on some docs from the vendor. Keep the hacky solution running, as the team loves not having to waste time on downloads.

Found an SQLi vulnerability in the above free-form query system, brought it up to bossman to pass up the chain. Vulnerability still there months later. Test using it for automated updates. Works, and is orders of magnitude more stable than the update utility. Bring it up again and show the time we can save by exploiting it. Decision made to use it while it exists; saves more time. Team happier, able to actually develop solutions uninterrupted now. Using Python, AutoHotkey, glorified copy-pasting, and SQLi in the course of day-to-day, business-critical work. Ugliest hacky thing I've ever caused to exist.

Flash forward 6 years. The automation system is now in heavy use across two companies. Handles all automatic downloads for several departments, 1 million+ discrete updates daily with a lot of room for expansion, stuff runs 24/7 on schedule, and most former Access apps are now gone, rewritten sanely and managed by the automation system. It's on real hardware with real databases and security behind it.

It is still using AutoHotkey, copy-paste, and SQLi to interface with the main system. There never was and never will be an SQL socket. Keep this hellbeast I've spawned chugging along.

I've pointed out how many ways this can all go pear-shaped. I've pointed out that one day the vendor will get their shit together, and after some system update nothing will work anymore. I've pointed out the danger in continuing to use the system with such a glaring SQLi vulnerability.

No one cares. Won't be my problem soon enough.

In no particular order:

Fuck management for not fighting for a good system interface

Fuck the vendor for A) not having a SQL socket and B) leaving the SQLi vulnerability there this long

Fuck me for bringing this thing into existence.

-

!rant I read some documentation about Amazon, "save all the data in my butt".

- me laughing

* I installed the extension that replaces every instance of "the cloud" with "my butt". I'm easily entertained ;p

-

My first real "rant", okay...

So I decided today to hop back on the horse and open Android Studio for the first time in a couple months.

I decided I was going to make a random color generator. One of my favorite projects. Very excited.

Got all the layouts set up, and got a new color every tap with RGB and hex codes, too. Took more time to open Android Studio, really.

Excited by my speedy progress, I think "This'll be done in no time!". Text a friend and tell them what I'm up to. She's very nice, wants the app. "As soon as I'm done." I expected that to be within the hour.

I want to be able to save the colors for future reference. Got the longClickListener set up just fine. Cute little toast pops up every time. Now I just need to save the color to a file.

Easy, just a semicolon-delimited text file in my app's cache folder.

Three hours later, and my file still won't write any data. Friend has gone to sleep. Homework has gone undone. My hatred for Android is reborn.
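The Android code itself isn't shown, so this is only a language-neutral sketch in Python (not Android APIs) of the format described: random colours saved as hex codes in one semicolon-delimited text file. The function names and file path are hypothetical.

```python
import random
from pathlib import Path


def random_hex_color(rng: random.Random) -> str:
    # One random byte per RGB channel, formatted as #RRGGBB.
    r, g, b = (rng.randrange(256) for _ in range(3))
    return f"#{r:02X}{g:02X}{b:02X}"


def parse_colors(text: str) -> list[str]:
    # Split on ';' and drop the empty entry left after the trailing delimiter.
    return [c for c in text.split(";") if c]


def save_color(path: Path, color: str) -> None:
    # Append mode creates the file if needed and never clobbers earlier colours.
    with path.open("a", encoding="utf-8") as f:
        f.write(color + ";")


def load_colors(path: Path) -> list[str]:
    # A missing file simply means nothing has been saved yet.
    return parse_colors(path.read_text(encoding="utf-8")) if path.exists() else []
```

Writing a trailing delimiter after every entry keeps appends trivial; the parser only has to ignore the final empty field.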

Stay tuned, the adventure continues tomorrow...

-

Government Fucking Websites.

Slow as fuck, disorganized, errors from 2004, UI from 2001.

You have to use them at a time when you really don't feel like waiting 30 fucking seconds for each page load.

Or filling out a fucking form that, ok, they made SOME kind of attempt to save your data, but it's overly complex and annoying.

Government websites. Making tasks that should take 5 minutes, 5 hours, since 1998.

Assholes.

-

Am I the only one who will spend an hour writing a script that runs only once, to save myself from entering 20 minutes' worth of data manually?

-

I had an idiot as my boss once. The guy was a principal architect at the time, and thought it would be a good idea to demonstrate his/our project to the entire org in an auditorium. The project involved turning the user's phone into the car's entertainment unit. He spoke of all the bells and whistles, about how you can listen to music and watch videos while in the car. A guy expressed his concern about the cost and availability of 3G/4G data in India, our target market. He blatantly dismissed the concern, claiming one doesn't use data while watching videos, as you aren't downloading or saving anything; only if you save the video offline do you consume data. I have never seen a group of 200-odd people go silent that quickly. People looking around uncomfortably. And then this ass goes, "My team is sitting back there. Reach out to them if you have any doubts.."

I sank into my seat as low as I possibly could without falling down.

-

Root gets ignored.

I've been working on this monster ticket for a week and a half now (five days plus other tickets). It involves removing all foreign keys from mass assignment (create, update, save, ...), which breaks 1780 specs.

For those of you who don't know, this is part of how Rails works. If you create a Page object, you specify the book_id of its parent Book so they're linked. (If you don't, they're orphans.) Example: `Page.create(text: params[:text], book_id: params[:book_id], ...)` or more simply: `Page.create(params)`

Obviously removing the ability to do this is problematic. The "solution" is to create the object without the book_id, save it, then set the book_id and save it again. Two round trips. Bad.

I came up with a solution early last week that, while it doesn't resolve the security warnings, does fix the actual security issue: whitelisting which params users are allowed to send, and validating them (StrongParams + validation). I had a 1:1 with my boss today about this ticket, and I told him about that solution. He sort of hand-waved it away and said it wouldn't work because <lots of unrelated things>. Huh.
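In Rails the two steps are roughly `params.require(:page).permit(:text, :book_id)` followed by a validation that the referenced book is one the current user may attach pages to. A framework-free Python sketch of the same idea, not Rails code; `PERMITTED_PAGE_KEYS`, `allowed_book_ids` and `ForbiddenForeignKey` are hypothetical names.

```python
PERMITTED_PAGE_KEYS = frozenset({"text", "book_id"})  # hypothetical whitelist


class ForbiddenForeignKey(ValueError):
    """Raised when user input points a foreign key at a record the user may not use."""


def permit(params: dict, allowed: frozenset) -> dict:
    # Whitelist step: anything the client isn't allowed to send is dropped.
    return {k: v for k, v in params.items() if k in allowed}


def validate_book_id(attrs: dict, allowed_book_ids: set) -> dict:
    # Validation step: the foreign key must reference a book this user may use.
    book_id = attrs.get("book_id")
    if book_id is not None and int(book_id) not in allowed_book_ids:
        raise ForbiddenForeignKey(f"book_id {book_id!r} is not allowed")
    return attrs


def page_attributes(params: dict, allowed_book_ids: set) -> dict:
    # Safe to mass-assign in a single create call: no second save round trip.
    return validate_book_id(permit(params, PERMITTED_PAGE_KEYS), allowed_book_ids)
```

The point of the design is that the foreign key still arrives through mass assignment, so the single create call survives, while the attacker-controlled part of it is checked.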

He worked through a failed spec to see what the ticket was about, and eventually (20 minutes later) ran into the same issues I did, and said "there's no way around this" (meaning what security wants won't actually help).

I remembered that Ruby has `taint` tracking, and realized I could use it to write a super elegant drop-in solution: some Rack middleware or a StrongParams monkeypatch to mark all foreign keys from user input as tainted (so devs can validate and un-taint them), plus a monkeypatch of ActiveRecord's create/save/update/etc. to raise an exception when it sees tainted data. I brought this up, and he searched for it. We discovered someone had already built this (not surprising), but also that Ruby 2.7 deprecates the `taint` mechanism, literally "because nobody uses it." Joy. Boss also somehow thought I came up with it because I saw the other person's implementation, despite us searching for it because I brought it up? 🤨

Forgoing that, we looked up more possibilities, and he saw the whitelist+validation pattern quite a few more times, which he quickly dismissed as bad, and eventually decided that we "need to noodle on it for a while" and come up with something else.

Shortly (seriously 3-5 minutes) after the call, he said that the StrongParams (whitelist) plus validation makes the most sense and is the approach we should use.

ffs.

I came up with that last week and he said no.

I brought it up multiple times during our call and he said it was bad or simply talked over me. He saw lots of examples in the wild and said it was bad. I came up with a better, more elegant solution, and he credited someone else. then he decided after the call that the StrongParams idea he came up with (?!) was better.

jfc i'm getting pissy again.

-

So a few years ago, when I was getting started with programming, I had this idea to create "Steam but for mods". Just think about it: 13-and-a-half-year-old me, who had known C# for less than half a year, wanted to create a fairly sizable project. I wasn't even sure how while() or foreach() loops worked back then.

So I made a post about it on a Polish F1 Challenge '99-'02 game forum. A guy reached out to me and said: "Hey, I could help you out." This is where it all started.

I got in touch with him via Gadu-Gadu (a Polish equivalent of ICQ). So I sent him the source code... packed in a .ZIP file... via Zippyshare… And just think how BAD this code was. For instance, the data for the games you added was stored in text files. The game name was stored in one .txt file, the directory in another, the .exe file name in yet another, and so on. Back then I thought that was perfectly fine! I couldn't even get the game to start from this program, because I didn't know about the working directory.

The guy never replied to me again.

Of course back then it wasn't embarrassing to me at all, but now, when I think about it...

-

Well, fuck. The CTO of our startup decided to migrate the data of our hundred thousand customers from a stable, functioning platform to an unstable in-house platform with severe performance issues, to "save" costs, despite our repeated requests not to. He wouldn't let us have any contingency plans because he wanted to "motivate" us to complete the migration.

Result: we have a thousand customers reporting major issues daily, which is causing lost revenue for both us and them. The company ran out of funding. Most of the team members were fired. And he's expecting the rest of us to magically fix everything. Dunno what kind of office politics this is, where you sabotage Your Own company.

Looking for a new job now to get out of this hellhole. I really used to love this company. It's sad to see it ruined like this.

-

😐ctrl/cmd + s to sacrifice file.😑

Teacher: always save your file or else data will be lost. ctrl + s

Me: in word

__________

| |

| |

| |

| page 1 |

__________

ctrl + s ( 10 times)💾

Next day I open this file, my data is lost.

I swear to god I hit ctrl + s 10 times.

-

I don't think I could give the best advice on this since I don't follow all the best practices (lack of knowledge, mostly) but fuck it;

- learn how to use search engines. And no, not specifically Google, because I don't want to drag kids into using mass-surveillance networks, nor do I want to promote them (even if kids already use them).

- try not to give up too easily. This is one I'm still profiting from (I'm a stubborn motherfucker)

- start with open source technologies. Not just "because open source" but because open source, in general, gives one the ability to hack around and explore and learn more!

- Try to program securely and with privacy in mind (the less data you save, the less can be abused, compromised, leaked, etc)

- don't be afraid to ask questions

- enjoy it!

-

My preciousssss!!

Fucking assholes! Just spent 3h hunting bugs that weren't there... Our client insisted we roll back the whole update because the GUI was broken... After analysing the data and testing, I figured out that something must be 'wrong', since there was no data to copy from in the first place... so there should be no bug...

Aaand here comes the best part: they didn't want to report a missing-data bug, they just wanted one restriction removed, because it 'broke the GUI', to allow an empty value on save... WTF?! How can you insist that the GUI is buggy and that you don't want the update, when you just want a field to be optional?! Which was done immediately, one change in one JS file?! Dafaaaaaq?!

Kids, English is important!! Otherwise you end up debugging ghosts for 3+ hours without a cigarette... and waking up a coworker with the bad news of a rollback at half past eleven at night... Aaaaaaaargh!!!

Fucking hell!

-

Getting ready for GDPR at work. I had to explain to my bosses what it meant, especially regarding one of our projects where we store a lot of user data. Then I heard it: "this crap doesn't concern us. we have no sensitive data. we only save our users' names and basic details." I have no words.

-

So you want to collect and save sensitive data from psychologists' sessions and use WordPress. What could go wrong?

-

Coworker: so once the algorithm is done, I will add new columns to the table in the SQL database and insert the output there

Me: I don't like that. Can we put the output in a separate table and link it using a foreign key? Just to avoid touching the original data, you know, to avoid potential corruption.

C: Yes sure.

< Two days later - over text >

C: I finished the algo. I decided to append it to the original data to avoid redundancy and save space. I think this makes more sense.

Me: ahdhxjdjsisudhdhdbdbkekdh

No. Learn this principle:

" The original data generated by the client, should be treated like the god damn Bible! DO NOT EVER CHANGE ITS SCHEMA FOR A 3RD PARTY CALCULATION! "

Put simply: D.F.T.T.O

Don't. Fucking. Touch. The. Origin!

Fuck you, you fucking fuck, why would you change an API without any notification?

Background: I built an app for a customer; it needs to fetch data from an external API and save it to a DB.

Customer called: it's broken what did you do?!?

Me: I'll look into it.

Turned out the third party had just changed their API... Guess I should implement some kind of notification for when no messages come in for a while...
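A minimal sketch of that notification idea, assuming the app can record a timestamp whenever a message is saved and can run a periodic check. `StalenessWatchdog`, `max_silence` and the `alert` callback are hypothetical names; how the alert is delivered (email, chat, pager) is left open.

```python
import time
from typing import Callable


class StalenessWatchdog:
    """Calls `alert` once when no message has arrived for more than `max_silence` seconds."""

    def __init__(self, max_silence: float, alert: Callable[[float], None],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_silence = max_silence
        self.alert = alert
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.last_seen = clock()
        self.alerted = False

    def record_message(self) -> None:
        # Call from the fetch-and-save path each time a message is stored.
        self.last_seen = self.clock()
        self.alerted = False

    def check(self) -> bool:
        # Call periodically (cron, scheduler thread, ...); alerts once per silent stretch.
        silence = self.clock() - self.last_seen
        if silence > self.max_silence and not self.alerted:
            self.alerted = True
            self.alert(silence)
        return self.alerted
```

Alerting only once per silent stretch avoids a flood of notifications while the upstream API stays broken.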

Poorly written docs.

I've been fighting with the Epson TM-T88VI printer's WebConfig API for five hours now.

The official TM-T88VI WebConfig API User's Manual tells me how to configure their printer via the API... but it does so without complete examples. Most of it is there, but the actual format of the API call is missing.

It's basically: call `API_URL` with GET to get the printer's config data (works). Call it with PUT to set the data! ... except no matter what I try, I get either a 401:Unauthorized (despite correct credentials), 403:Forbidden (again...), or an "Invalid Parameter" response.

I have no idea how to do this.

I've tried literally every combination of params, nesting, json formatting, etc. I can think of. Nothing bloody works!

All it would have taken to save me so many hours of trouble is a single complete example. Ten minutes' effort on their part, tops.

asjdf;ahgwjklfjasdg;kh.

-

I am much too tired to go into details, probably because I left the office at 11:15pm, but I finally finished a feature. It doesn't even sound like a particularly large or complicated feature. It sounds like a simple, 1-2 day feature until you look at it closely.

It took me an entire fucking week, and all the while I was coaching a junior dev who had just picked up Rails and was building something very similar.

It's the model, controller, and UI for creating a parent object along with 0-n child objects, with default child suggestions, a fancy UI including the ability to dynamically add/remove children via buttons, and having the entire happy family save nicely and atomically on the backend. Plus a detailed-but-simple listing for non-technicals, including some absolutely nontrivial CSS acrobatics.

After getting about 90% of everything built, working and beautiful, I learned that Rails does quite a bit of this for you, through `accepts_nested_attributes_for :collection`. But that requires very specific form input namespacing, and building that out correctly is flipping difficult. It's not like I could find good examples anywhere, either. I looked for hours. I finally found a Rails tutorial video linked from a comment on an SO answer from five years ago, mashed its oversimplified and dated examples together with the newer documentation, and worked around the issues that of course arose from that disastrous pairing.

like.

I needed to store a template of the child object markup somewhere, yeah? The video had me trying to store all of the markup in a `data-fields=" "` attrib. wth? I tried storing it as a string and injecting it into javascript, but that didn't work either. parsing errors! yay! good job, you two.

So I ended up storing the markup (rendered from a rails partial) in an html comment of all things, and pulling the markup out of the comment and gsubbing its IDs on document load. This has the annoying effect of preventing me from using html comments in that partial (not that i really use them anyway, but.)
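Rails' nested-attributes forms name child inputs like `book[pages_attributes][0][text]`, and each dynamically added child needs its own unique index. The post does this in JavaScript on document load; this is a language-neutral Python sketch of the same string manipulation, assuming the blank template was rendered with a placeholder index. The `NEW_RECORD` token and the `book`/`pages` names are hypothetical.

```python
PLACEHOLDER = "NEW_RECORD"  # hypothetical child index baked into the blank template


def child_field_name(parent: str, association: str, index, field: str) -> str:
    # Naming scheme Rails' nested attributes expect:
    # parent[<association>_attributes][<index>][<field>]
    return f"{parent}[{association}_attributes][{index}][{field}]"


def instantiate_template(template: str, new_index: int) -> str:
    # Replace every placeholder (in name= and id= alike) with a fresh index,
    # so each added child is submitted as its own hash.
    return template.replace(PLACEHOLDER, str(new_index))
```

A timestamp or running counter works as the fresh index; the indices only need to be distinct within one form submission.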

Just.

Every step of the way on building this was another mountain climb.

* singular vs plural naming and routing, and named routes, and dealing with issues arising from existing incorrect pluralization.

* reverse polymorphic relation (child -> x parent)

* The testing suite is incompatible with the new Rails 6. There is no fix. None. I checked. Nope. Not happening.

* Rails 6 randomly and constantly crashes and/or caches random things (including arbitrary code changes) in development mode (and only development mode) when working with multiple databases.

* nested form builders

* styling a fucking checkbox

* Making that checkbox (rather, its label and container div) into a sexy animated slider

* passing data and locals to and between partials

* misleading documentation

* building the partials to be self-contained and reusable

* coercing form builders into namespacing nested html inputs the way Rails expects

* input namespacing redux, now with nested form builders too!

* Figuring out how to generate markup for an empty child when I'm no longer rendering the children myself

* Figuring out where the fuck to put the blank child template markup so it's accessible, has the right namespacing, and is not submitted with everything else

* Figuring out how the fuck to read an html comment with JS

* nested strong params

* nested strong params

* nested fucking strong params

* caching parsed children's data on parent when the whole thing is bloody atomic.

* Converting datetimes from/to milliseconds on save/load

* CSS and Bootstrap collisions

* CSS and Bootstrap stupidity

* Reinventing the entire multi-child / nested params / atomic creating/updating/deleting feature on my own before discovering Rails can do that for you.
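One item from that list, converting datetimes to and from milliseconds, is easy to get subtly wrong around time zones. A minimal Python sketch (not the author's Ruby), assuming the frontend works in UTC epoch milliseconds, as JavaScript's `Date.prototype.getTime()` does:

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_epoch_ms(dt: datetime) -> int:
    # Refuse naive datetimes: their meaning depends on the server's local time zone.
    if dt.tzinfo is None:
        raise ValueError("naive datetime; attach a time zone first")
    # timedelta // timedelta yields an int, avoiding float rounding of .timestamp() * 1000.
    return (dt - EPOCH) // timedelta(milliseconds=1)


def from_epoch_ms(ms: int) -> datetime:
    # Always returns an aware UTC datetime; convert for display elsewhere.
    return EPOCH + timedelta(milliseconds=ms)
```

Sub-millisecond precision is truncated on the way out, so a round trip only preserves the value to the millisecond.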

Just.

I am so glad it's working.

I don't even feel relieved. I just feel exhausted.

But it's done.

finally.

and it's done well. It's all self-contained and reusable, it's easy to read, has separate styling and reusable partials, etc. It's a two-line copy/paste drop-in for any other model that needs it. Two lines and it just works, and it even tells you if you screwed up.

I'm incredibly proud of everything that went into this.

But mostly I'm just incredibly tired.

Time for some well-deserved sleep.

-

I have a Windows machine sitting behind the TV, hooked up to two controllers, set up as basically a console for the big TV. It doesn't get a lot of use, and lately it mostly just churns out Folding@home work units. It's connected via wired Ethernet, and it has a local static IP for the sake of simplicity.

In January, Windows Update started throwing a nonspecific error and failing. After a couple weeks I decided to look up the error, and all the recommendations I found online said to make sure several critical services were running. I did, but it appeared to make no difference.

Yesterday, I finally engaged MS support. Priyank remoted into my machine and attempted all the steps I had already tried. I just let him go, so he could get through his checklist and get to the resolution steps. Well, his checklist began and ended with those steps, and he started rather insistently telling me that I had to reinstall, and that he had to do it for me. I told him no thank you, "I know how to reinstall windows, and I'll do it when I'm ready."

In his investigation though, I did notice that he opened MS Edge and tried to load Bing to search for something. But Edge had no connection. No pages would load. I didn't take any special notice of it at the time though, because of the argument I was having with him about reinstalling. And it was no great loss to me that Edge wasn't working, because that was literally the first time it'd ever been launched on that computer.

We got off the phone and I gave him top marks in the CS survey that was sent, as it appeared there was nothing he could do. It wasn't until a couple of hours later that I remembered the connectivity problem. I went back and checked again. Edge couldn't load anything. Firefox, the ping command, Steam, Vivaldi, Parsec and RDP all worked fine. The Windows Store couldn't connect either. That was when it occurred to me that Windows Update was likely just unable to reach the internet.

As I have no problem whatsoever with MS services being unable to call home, I began trying to set up an on-demand proxy for use when I want to update, and I noticed that when I filled out the proxy details in Internet Options, or in Windows 10's more Windows-10-ish UI for a system proxy, the "Save" button didn't respond to clicks. So I looked that problem up, and saw that it depends on a service called WinHttpAutoProxySvc, which I found itself depends on something called IP Helper, which led me to the root cause of all my issues: IP Helper now depends on the DHCP Client service, which I have explicitly disabled on non-Wi-Fi Windows installs since the '90s.

Just to see, I re-enabled DHCP Client, and boom! Everything came back on. Edge, the MS Store, and Windows Update all worked. So I updated, went through a couple of reboots (because that's the name of the game with Windows Update), and had a fully updated machine.

It occurred to me then that this is probably how MS sends all its spy data too, and since the things I actually use work just fine, I disabled DHCP Client again. I figure that's easier than navigating an intentionally annoying menu tree of privacy options that changes and resets with every major update.

But holy shit, Microsoft! How can you hinge the entire OS's connectivity on something that not everybody uses?

-

Win 10 is the best! I love how it just restarts without asking: no more hassle of having to confirm anything, or save data first. Finally, an OS that has the confidence to just do whatever the fuck it wants, so awesome!

-

Woohoo!!! I made it to 1000++s :) Now I feel less newbie-like around here :)

So... I don't want to shit-post, so in gratitude to all you guys for this awesome community you've built, especially @trogus and @dfox, I'll post a list of my ideas/projects for the future here, so you guys can have something to talk about or at least laugh at.

Here we go!

Current Project: Ensayador.

It's a webapp that aims to help students write essays. I'm making it with history students in mind, but it should also help with essay writing in other disciplines. It will store the thesis, arguments, keywords and bibliography so students can create a guideline before they start writing. It will also let students catalogue their reads with the same fields they'd use for an essay (thesis, arguments, keywords and bibliography) for later use in other essays. The bibliography field will consist of foreign keys to catalogued reads. The idea is to build upon the models' natural/logical relations.
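The post describes the data model fairly concretely, so here is a minimal Python/SQLite sketch of one way to lay it out. It assumes a relational store, which the post doesn't name. Essays and catalogued readings share the thesis/arguments/keywords fields, and an essay's bibliography is a link table of foreign keys into `reading`. All table, column and function names are hypothetical.

```python
from __future__ import annotations

import sqlite3

# Hypothetical schema sketch: table and column names are illustrative only.
SCHEMA = """
CREATE TABLE reading (
    id        INTEGER PRIMARY KEY,
    title     TEXT NOT NULL,
    thesis    TEXT,
    arguments TEXT,
    keywords  TEXT
);
CREATE TABLE essay (
    id        INTEGER PRIMARY KEY,
    title     TEXT NOT NULL,
    thesis    TEXT,
    arguments TEXT,
    keywords  TEXT
);
-- The bibliography is a set of foreign keys into the catalogued readings.
-- Readings could get an identical link table for their own bibliographies.
CREATE TABLE essay_bibliography (
    essay_id   INTEGER NOT NULL REFERENCES essay(id),
    reading_id INTEGER NOT NULL REFERENCES reading(id),
    PRIMARY KEY (essay_id, reading_id)
);
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite doesn't enforce FKs unless asked
    conn.executescript(SCHEMA)
    return conn


def bibliography(conn: sqlite3.Connection, essay_id: int) -> list[str]:
    """Titles of the catalogued readings an essay cites, alphabetically."""
    rows = conn.execute(
        """SELECT r.title FROM essay_bibliography b
           JOIN reading r ON r.id = b.reading_id
           WHERE b.essay_id = ? ORDER BY r.title""",
        (essay_id,),
    )
    return [title for (title,) in rows]
```

Storing the bibliography as a link table rather than a free-text field is what lets a reading catalogued once be reused across many essays. With foreign keys switched on, citing a reading that was never catalogued is rejected with `sqlite3.IntegrityError`.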

Apps: All the apps that come next could be integrated into just one big app that I would call "ChatPo" ("Po" is a contextual word we use in my country at the end of sentences; I think it derives from "Pues"). But I guess it's better to think about them as separate apps, just so I don't find myself lost in a never-ending side project.

A subchat-based chat app (subchats being similar to subreddits):

An app where people can join/create sub-chats to talk about things they're interested in. In my country, this is normally done by Facebook groups creating a WhatsApp group and posting the link in the Facebook group, but I think an integrated app would let people find/create/join groups more easily. I'm not sure if this should work with nicknames or with real names and phone numbers, but let's save that for the future.

A Slack clone:

Yes, you read that right. I want to make a Slack clone. You see, in my country, enterprise communications are shitty as hell: everything consists of emails and informal WhatsApp groups. Slack solves all these problems, but nobody over here even knows what it is. I think a more localized solution would be perfect to fill this void, and it would be cool to make it myself (with a team of friends, of course) and hopefully profit from it.

A labour chat-app marketplace:

This is a big hybrid I'd like to make, based on the premise of contracting services in a reliable manner and paying through the app. "Are you in need of a plumber, but don't know where to find a reliable one? Maybe you want a new look for your wall, but don't want to paint it yourself? Don't worry, we got you covered. In <Insert app name> you can find the perfect professional for your needs. Payment? It's just a tap away!" I guess you get the idea. I think WeChat made something like this; I wonder how it worked out.

*Why so many chat apps? Well... I want to learn Erlang. It's something close to mythical to me, and it's perfect for the backend of a comms app. So I want to learn it and put it into practice in one of these ideas.*

Videogames:

Flat-land arena: A top-down arena game based on the book "Flatland". Different symmetrical shapes will fight on a 2D plane of existence, each with different rotation and movement speeds and attack mechanics. For example, the triangle could have a "lance" on the front, making it aggressive but leaving the rest of it defenseless. The field of view will be small, but there'll be a 2D POV all around the screen, consisting of a line that fills with the colors of surrounding objects, scaling from dark colors to lighter colors to give a sense of distance.

This text could help you understand the concept better:

http://eldritchpress.org/eaa/...
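The "2D POV" line described for Flat-land arena is essentially a one-dimensional raycaster. You cast rays all around the player and colour each pixel by whatever each ray hits first, faded by distance. Below is a minimal Python sketch with several assumptions: every fighter is a circle, rays are evenly spaced, and distance fades a colour toward a light "fog" colour (the post only says dark to light). `Shape`, `render_strip` and `FOG` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]

# Assumption: the world fades into light fog, so far objects are blended
# toward this colour while nearby objects keep their own (darker) colour.
FOG: RGB = (255, 255, 255)


@dataclass
class Shape:
    """Hypothetical stand-in for a Flatland fighter: a coloured circle."""
    x: float
    y: float
    radius: float
    color: RGB


def ray_distance(ox: float, oy: float, angle: float, s: Shape) -> Optional[float]:
    """Distance along a ray from (ox, oy) to the circle's edge, or None on a miss."""
    dx, dy = math.cos(angle), math.sin(angle)
    fx, fy = ox - s.x, oy - s.y
    # Solve |o + t*d - c|^2 = r^2 for t, with |d| = 1.
    b = dx * fx + dy * fy
    c = fx * fx + fy * fy - s.radius * s.radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer intersection first
        if t >= 0:
            return t
    return None


def render_strip(ox: float, oy: float, shapes: List[Shape],
                 width: int = 8, max_dist: float = 100.0) -> List[RGB]:
    """One pixel per ray cast evenly around the player: a Flatlander's 1D 'eye'."""
    strip: List[RGB] = []
    for i in range(width):
        angle = 2 * math.pi * i / width
        nearest: Optional[Tuple[float, Shape]] = None
        for s in shapes:
            t = ray_distance(ox, oy, angle, s)
            if t is not None and t <= max_dist and (nearest is None or t < nearest[0]):
                nearest = (t, s)
        if nearest is None:
            strip.append(FOG)  # nothing in range: pure fog
            continue
        t, s = nearest
        fade = t / max_dist  # 0 at the player, 1 at the edge of view
        strip.append(tuple(round(c + (f - c) * fade) for c, f in zip(s.color, FOG)))
    return strip
```

The `width` parameter is the resolution of the strip drawn around the screen. A real game would use far more rays than 8 and would intersect polygons (triangles, squares) instead of circles.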

A 2D Dark Souls-like, class-based adventure: I've thought very little about this, but it's a project I'm considering building with my brothers. I hope we can make it.

Impossible/distant future projects:

History-reading AI: History is best taught when you start from a linguistic approach. That is, you first teach both the disciplinary vocabulary and the proper keywords, and from there you build on the logic of causality. It would be cool to make an AI recognize keywords and disciplinary vocabulary to make sense of historical texts, and maybe reformat them into another text/platform/database. (This is very close to the next idea.)

Extensive Historical DB: A database containing as many historical phenomena as possible, which is crazy, I know. It would be never-ending, iterative software that uses historical documents to store historical processes, events, dates, figures, etc. All this would then be presented in a webapp where you could query historical data and get it back in a Wikipedia-like manner, but much more concise and prioritized, with links to documents about the requested data. This could be automated to an extent by the History-reading AI.

I'm out of characters, but this was fun. Plus, I don't want this to be any more cringy than it already is.

-

Soo I am the only tech-guy in my family and it's a bit like:

Other: You do program?

Me: yes?

Other: pls repair my printer!

And you guys know how awful that is, don't you? But in my family it gets tougher...

Today my older sister asked me how to save data from a broken HDD. I said I know a guy who does forensics on HDDs and he could do that.

She's like: "but a friend of mine said it could be done more easily with software"

And yes, it can! But not as successfully...

Now here's the point where she killed me instantly!

She said: "he opened the HDD and said the disks look fine, they could easily be put into a new HDD"....

WHAT THE ACTUAL FUCK, I SAID, NOW YOUR DRIVE IS BROKEN FOREVER! AND THEN SHE INSULTED ME AND BLOCKED ME ON FUCKING WHATSAPP! SHE IS LEARNING WEBDESIGN, WHY THE FUCK DON'T THEY TEACH HER THE BASICS OF FUCKING COMPUTERS! Oh for fuck's sake....

-

Was just thinking of building a command line tool to ease development of some of my game's assets (just packing them all together), and seeing as I want to use GameMaker Studio 2, I thought my obsession with JSON would be perfect for use with its ds_map functions. So let's start understanding the backend of these functions to tie them into my CL tool...

*Sees ds_map_secure_save*

Oh this might be helpful, easily save a data structure with decent encryption...

*Looks at saved output and starts noticing some patterns*

Hmm, this looks kinda familiar... Hmmm, using UTF-8, always ends with =, seems to always have 8 random numbers at the start... almost like padding... Wait... this is just base64!
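Base64 is an encoding, not a cipher, so reversing it needs no key at all. Below is a minimal Python illustration using only the standard library. `saved` is a hypothetical stand-in built from made-up JSON, not real `ds_map_secure_save` output, and the sketch doesn't try to reproduce the prefix of extra numbers the post mentions. The trailing `=` is base64 padding, which appears when the input length isn't a multiple of 3 bytes. That makes it a strong hint, but not a guarantee.

```python
import base64
import binascii
import json


def looks_like_base64(text: str) -> bool:
    """Heuristic: only base64 alphabet characters, with correct padding."""
    try:
        base64.b64decode(text, validate=True)
    except (binascii.Error, ValueError):  # bad characters, bad padding, non-ASCII
        return False
    return True


# Hypothetical "securely" saved map: plain JSON run through base64.
saved = base64.b64encode(json.dumps({"hp": 100, "gold": 42}).encode("utf-8")).decode("ascii")

# Anyone can reverse it without a key, which is the point of the rant.
recovered = json.loads(base64.b64decode(saved))
print(recovered)
```

Actual protection would need a real cipher with a key kept off the client, and even then a determined player can usually pull the key out of the game binary.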

Now, YoYo Games, I understand encryption can be hard, but calling base64 'secure' is like me flopping my knob on the table and calling it a subtle flirt...

-

Disclaimer: This is not a Windows hate rant, as this problem has been (partially) solved by Microsoft.

I went to a hackathon last year at an engineering college. It wasn't as grand as the hackathons people have in the USA or Europe. I entered the competition trying to develop a medical app that asks users about their problems, then asks questions to match their symptoms to diseases. A guy (who isn't a coder) and I tried to build that app. He provided the disease data, and I tried to develop a kind of AI app from that data, but found the job too hard for a one-day hackathon. So I emailed ApiMedic about their API, which I was going to use. I then coded continuously for 4 hours in Android Studio on the Android app.

The event manager told us late in the day that a repo had been made for the hackathon and that we had to push our code before midnight. The repo was only provided very late that day, around 6. I made a big mistake by not creating my own repo on GitHub to save my code as I went. (Since this event, whatever I code, I save in a repo.) I was running Windows 10 on one of my laptops and Ubuntu on the other. Through some divine bad luck, I was using my Windows 10 laptop at that hackathon.

So around 10, I was about to wrap up the day and push the code to the repo. I went to get myself a cup of coffee and returned to find, lo and behold, a fucking BSOD. I was fucked. It was my first hackathon, so I'd made another mistake: using the emulator rather than my Android phone. My Android phone wasn't responding well that day, so I'd used the Android emulator.

From that day on I do three things:

1. Always push my projects to a GitHub repo.

2. Use my Android phone after running some minor tests on the emulator.

3. Never use Windows (happy Arch user till eternity).

You might be thinking that even after a BSOD, the work can be recovered. That didn't happen in my case: Windows reverted back to the state right after I had upgraded from Windows 8.1 to 10.

-

In my quest to find a nice dark-themed file manager, I stumbled upon this thing called Q-Dir. By default it looks like it came straight out of the '90s, but after a bit of tweaking here and there it actually turned out really nice!

If you're like me and want the dark theme before Redstone 5 finally arrives but don't want to gobble up all your data in Insiders either, this is actually a pretty solid replacement. Hopefully that'll save some poor sods from having to go through the trouble of finding the holy grail of the dark theme in file managers :)

http://softwareok.com//...

-

Me: why are we paying for OCR when the API offers the data in both JSON and PDF formats?

Manager: because we need to have the data in a PDF format for reporting to this 3rd party

Me: sure, but can't we just request both JSON and PDF from the vendor (it's the same data)? Send the JSON to the automated workflow (saving time and money, and getting better accuracy) and send the PDF to the 3rd party?

Manager: we made a commercial decision to use PDF, so we will use PDF as the format.

Me: but...

-

Best code performance increase I've made?

Many, many years ago our scaling strategy was to throw hardware at performance problems. The hardware consisted of a dedicated web server and a backing SQL Server box, so each site instance had two servers (plus data replication processes).

Two servers turned into 4, 4 into 8, 8 into around 16 (I don't remember exactly what we ended up with). With Windows Server and SQL Server licenses getting into the hundreds of thousands of dollars, the 'powers-that-be' were becoming very concerned about our IT budget. Our IT VP and other web managers, being hardware-centric, simply shrugged and told the company that's just the way it is.

Taking it upon myself, I started looking into utilizing web services, caching data (Microsoft's Velocity at the time), and a service that returned product data, which was the bottleneck for most of the performance issues: description, price, simple stuff. When I tested the scaling in our dev environment (a single web server and a single backing SQL Server), the service handled 10x the traffic with much better performance.

Since most of IT management was hardware-centric, they blew off the results, saying my tests were contrived and my solution wouldn't work in 'the real world'. They weren't 100% wrong: I had no idea what would happen when real traffic hit the site.

Our other hardware guys, concerned that the web hardware budget was tearing into everything else, helped convince the 'powers-that-be' to give my idea a shot.

Fast forward a couple of months (lots of web code changes): early one morning we started slowly turning on the new framework (3 load-balanced web service servers, 3 web servers, one SQL server). 5 minutes... no issues. 10 minutes... no issues. An hour... everything is looking great. Then (A is a network admin)...

A: "Umm...guys...hardly any of the other web servers are being hit. The new servers are handling almost 100% of the traffic."

VP: "That can't be right. Something must be wrong with the load balancers. Roll back!"

A: "No, everything is fine. The load balancer is working, and the performance spikes are coming from the old servers, not the new ones. Wow, this is awesome!"

<Web manager 'Stacey'>

Stacey: "We probably still need to roll back. We'll need to do a full analysis of why the performance improved and apply it to the current hardware setup."

A: "Page load times are now under 100 milliseconds, down from almost 3 seconds. Let's not roll back and see what happens."

Stacey: "I don't know, customers aren't used to such fast load times. They'll think something is wrong and go to a competitor. Roll back."

VP: "Agreed. We don't know why this is so fast. We'll need to replicate what is going on in the current architecture. Good try, guys."

<later that day>

VP: "We've received hundreds of emails this morning complimenting us on the web site performance, and more complaining that the site suddenly slowed down again. The CEO got wind of these emails and instructed us to move forward with the new framework."

After full implementation, we were able to scale back to only a few web servers and a single SQL server, saving an initial $300,000 with potential future savings of over $500,000. Taking other factors into account, budget analysis showed this would save the company over a million dollars over the next 7 years.