Search - "ai/ml"

-

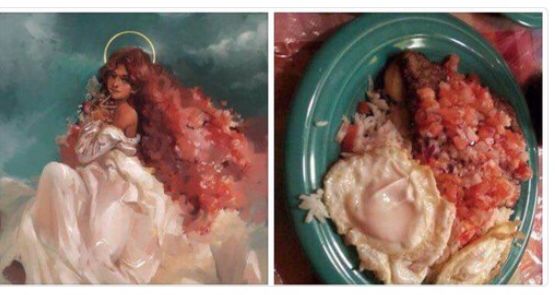

AI developers be like

joke/meme ml face recognition ai to overtake humanity soon ai recognition image processing image recognition

-

Smart India Hackathon: Horrible experience

Background:- Our task was to do load forecasting for a given area. Hourly energy consumption data for the past 5 years was given to us.

One government official asks the following questions:-

1. Why are you using deep learning for the project? Why are you not doing data analysis?

2. Which neural network "algorithm" you are using? He wanted to ask which model we are using, but he didn't have a single clue about Neural Networks.

3. Why are you using libraries? Why not your own code?

Here comes the biggest one,

4. Why haven't you developed your own "algorithm" (again, he meant model)? All you have done is use some library. Where is the "novelty" in your project?

I just want to say that if you don't know anything about ML/AI, then don't comment on it. And the worst thing was, he was not ready to accept the fact that for capturing temporal dependencies where the underlying probability distribution is unknown, deep learning performs much better than traditional data analysis techniques.

After hearing his first question, the second one was not a surprise for us. We were expecting something like that. For a few moments, we were speechless. Then one of us started by showing the neural network architecture. But after some time, he rudely repeated the same question, "where is the algorithm". We told him every fucking thing used in the project, ranging from the RMSprop optimizer to the backpropagation through time algorithm to the mean squared error loss function.
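For readers who don't know the terms in that list, here is a minimal sketch of two of them: a mean squared error loss and the RMSprop update rule. It is not the team's code (the post doesn't show any). It fits a single weight on made-up hourly load numbers, and it does not cover backpropagation through time, which needs a recurrent model. All names, data and hyperparameters are assumptions for illustration.

```python
# Illustrative only: toy data and hyperparameters are invented, not from the post.

def mse(predictions, targets):
    """Mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def rmsprop_fit(xs, ys, lr=0.01, decay=0.9, eps=1e-8, steps=2000):
    """Fit y ~ w * x by minimising MSE with the RMSprop update rule."""
    w = 0.0
    avg_sq_grad = 0.0  # running average of squared gradients
    for _ in range(steps):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        avg_sq_grad = decay * avg_sq_grad + (1 - decay) * grad ** 2
        # Scale the step by the root of the running average.
        w -= lr * grad / (avg_sq_grad ** 0.5 + eps)
    return w


# Hypothetical hourly load readings (arbitrary units):
# predict the next hour from the current one.
load = [1.0, 1.1, 1.21, 1.331, 1.4641]
inputs, targets = load[:-1], load[1:]
w = rmsprop_fit(inputs, targets)
```

In a real forecasting model the single weight would be replaced by a recurrent network, and a library would supply the optimizer and loss, which is exactly why the team used one.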

Then, very calmly, he asked the third question: why are you using libraries? That moron wanted us to write a whole fucking optimized library. We were speechless at this question. Finally, one of us told him the "obvious" answer. We were completely demotivated. But it didn't end here. The real question was waiting. At the end, after listening to all of us, he dropped the final bomb: WHY HAVE YOU USED A NEURAL NETWORK "ALGORITHM" WHICH HAS ALREADY BEEN IMPLEMENTED? WHY DIDN'T YOU MAKE YOUR OWN "ALGORITHM"? We again stated the obvious answer: it takes at least a year or two of continuous hard work to develop a state-of-the-art algorithm, and even then you build it on top of some existing "algorithm". After listening to this, he left. His final response was "Try to make a new "algorithm"".

Needless to say, we were completely demotivated after this evaluation. We had all worked too hard for this. And we had the ability to explain each and every part of the project intuitively and mathematically, but he was not even ready to listen.

Now, all of us are sitting aimlessly, waiting for the hackathon to end. 😢😢😢😢😢

-

Teacher : The world is fast moving, you should learn all the new things in technology. If not you'll be left behind. Try to learn about Cloud, AI, ML, Block chain, Angular, Vue, blah blah blah.....

**pulls out an HTML textbook and starts writing on the board.

<center>.............</center>

-

It seems like every other day I run into some post/tweet/article about people whining about having imposter syndrome. It seems like no other profession (except maybe acting) is filled with people like this.

Well lemme answer that question for you lot.

YES YOU ARE A BLOODY IMPOSTER.

There. I said it. BUT.

Know that you're already a step up from those clowns that talk a lot but say nothing of substance.

You're better than the rockstar dev that "understands" the entire codebase because s/he is the freaking moron that created that convoluted nonsensical pile of shit in the first place.

You're better than that person who thinks knowing nothing is fine. It's just a job and a pay cheque.

The main question is, what the flying fuck are you going to do about being an imposter? Whine about it on twtr/fb/medium? HOW ABOUT YOU GO LEARN SOMETHING BEYOND FRAMEWORKS OR MAKING DUMB CRUD WEBSITES WITH COLOR CHANGING BUTTONS.

Computers are hard. Did you expect to spend 1 year studying random things and waltz into the field as a fucking expert? FUCK YOU. How about you let a "doctor" who taught himself medicine for 1 year do your open heart surgery?

Learn how a goddamn computer actually works. Do you expect your doctors and surgeons to be ignorant of how the body works? If you aspire to be a professional WHY THE FUCK DO YOU STAY AT THE SURFACE.

Go learn about Compilers, complete projects with low level languages like C / Rust (protip: stay away from C++, Java doesn't count), read up on CPU architecture, to name a few topics.

Then, after learning how your computers work, you can start learning functional programming and appreciate the tradeoffs it makes. Or go learn AI/ML/DS. But preferably not before.

Basically, it's fine if you were never formally taught. Get yourself schooled, quit bitching, and be patient. It's ok to be stupid, but it's not ok to stay stupid forever.

/rant

-

Happened with anyone?

joke/meme deep learning ml rants + metro = 2 station bonus :) ai artificial intelligence meme funny machine learning python

-

The awkwardly embarrassing moment when you realise your junior, a fresher out of college, knows more than you... Shittt... Why are kids so fucking smart these days!!! I'm looking for a place where I can go and just hide from the world. If you know one, please help.

fucking generation superx i hate people tensor flow and ml/ai is new helloworld embarrassing moment

-

Microsoft has added a machine learning model to predict the right time to restart the device for updates.

Coming with the next major update.

This should be interesting...

-

I was contacted by a college senior guy (he was part of the core team of the club that I recently joined in my college).

Him: Do you want to launch your own startup?

Me: Yeah, I would love to.

Him: Nice, listen. I want to start my own company too. If you don't know, the current trend is ML and AI. So, I would like to base my startup on an AI application. (He was in his final year.)

Me: I haven't tried any ML or AI stuff before. Sorry.

Him: Take 2 months to study the AI concepts and build the app.

Me: But first, tell me what the AI app is supposed to do?

Him: It can be anything, I have to think. You take the AI part, the UI and the integration; with your skills and my idea, let's build a startup, and I will appoint you as the head of Application Development in my company.

*wtf, seriously dude? you want me to build the whole app for you and all you will do is put your fucking startup's name on it. I am building an application all by myself why the f would I ask you to publish it for me*

Me: Okay, I am getting late, I have to leave..

Made sure I didn't meet him again

and I have also left that stupid club..

-

*bunch of if statements*

Friend shouts proudly everywhere : I implemented an AI!

Me : but that's not how AI works

Friend : but it works just as an AI, so it is one, who cares?

😥

-

I am a machine learning engineer and my boss expects me to train an AI model that surpasses the best models out there (without training data of course) because the client wanted ‘a fully automated AI solution’.

-

Manager: How do we make a successful product?

CEO: Just add words like Machine Learning and AI

Newbie developer: Takes a $10 Udemy course without any knowledge of statistics or probability; after 1 week believes himself to have "Expertise in ML, AI and DL"

HR: Hires the newbie

*Senior Developer Quits*

-

> Open private browsing on Firefox on my Debian laptop

> Find ML Google course and decided to start learning in advance (AI and ML are topics for next semester)

**Phone notifications: YouTube suggests Machine Learning recipes #1 from Google**

> Not even logged in on laptop

> Not even chrome

> Not even history enabled

> Not fucking even windows

😒😒😒

The lack of privacy is fucking infuriating!

....

> Added video to Watch Later

I now hate myself for biting

-

ChatGPT was asked to write a script for benchmarking some SQL and plotting the resulting data.

Not only was it able to do it, but, without further prompting, it realized it had made an error, explained what it 'thought' the error was and fixed it.

Excuse me, I need to go get my asshole sewn up because I'm hemorrhaging to death from the brick I just shat.

source:

https://simonwillison.net/2023/Apr/...

-

A lot of brainwashed people don't care about privacy at all and always say: "I've got nothing to hide, fuck off...". But that is not true. Any information can be used against you in the future, when the "authorities" release some kind of China-style social credit system. Stop selling your data for free to big companies.

https://medium.com/s/story/...

-

Microsoft: When you are a huge company with nearly limitless resources and a whole AI and ML army, yet you cannot filter UserVoice feedback.

-

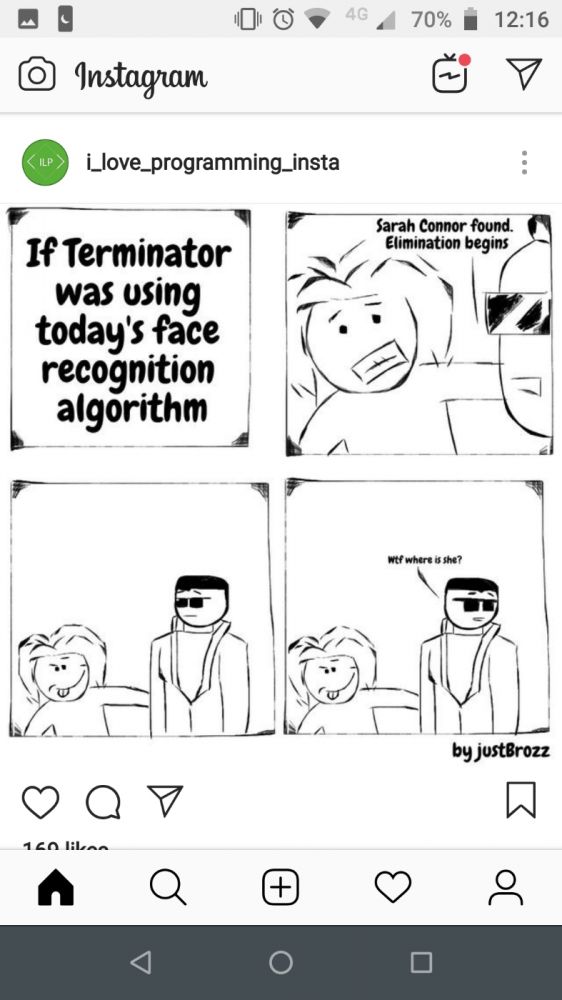

AI robo revolution 😁

joke/meme machine learning tensorflow keras ml opencv ai to overtake humanity soon ai face recognition

-

I can't figure out shit..

To be honest I created this profile just so I can write down somewhere what I am going through.

So, once upon a time I had graduated from college and went right into a corporate (has only been 2 years since). I was fortunate enough that I got assigned a project that was just starting, and even though I had no clue what was going on, I started doing whatever was assigned.

I initially worked in java and then finished all my tasks earlier than expected, so they switched me to another C++ project that builds on top of it.

Fast forward 2.5 years, I'm now the team lead of the CPP project and all my friends who were in the core team have left the company.

As usual, the reason behind it is shitty management. These mfs won't hire competent people and WILL ABSOLUTELY NOT retain the ones that are. I can feel it in my bones that it is time for me to leave, but fuck me if I understand what I am good at.

I have been able to handle all the tasks that they threw at me, be it Java or C++, just because I love logic and algorithms. I have been dabbling in ML and AI for 4-5 years now, but could never go into it full time.

Now I'm looking at the job postings and Jesus Christ these bitches do not understand what they want. I have to be an expert in 34567389 technologies, mastering each of which (by mastering I mean becoming proficient in) would need at least 6-8 months if not more, all with 82146867+ years of experience in them.

I don't know if I am supposed to focus on Java (so Spring Boot and stuff) or do C++ or go with Python or learn web dev or database management or what.

I like all of these things, and would likely enjoy working in each of these, but for fucks sake my cv doesn't show this and most of the bitch ass recruiter portals keep putting my cv in the bin.

Yeah...

If you have read this far, here's a picture of a cat and a dog.

-

People want AI, ML and Blockchain implemented in their projects very badly and blindly, and expect the developer to just get it done no matter how stupid something sounds.

--India

-

My last job before going freelance. It started as a great startup, but as time passed and the company grew, it all went down the drain and turned into a pretty crappy culture.

Once one of the local "darling" startups, it's now widely known in the local community for low salaries and crazy employee churn.

Management sells this great "startup culture", but reality is wildly different. Not sure if the management believes in what they are selling, or if they know they are selling BS.

- The recurring motto of "Work smarter, not harder" is the biggest BS of them all. Recurring pressure to work unpaid overtime. Not overt, because that's illegal, but you face judgement if you don't comply, and you'll eventually see consequences like lack of raises, or being passed for promotions in favour of less competent people that are willing to comply.

- Expectation management is worse than non-existent. Worse, because they actually feed expectations they have no intention of delivering on. (I.e, career progression, salary bumps and so on)

- Management is (rightfully) proud of hiring talented people, but then treat almost everyone like they're stupid.

- Feedback is consistently ignored.

- Senior people leave. Replace them with cheap juniors. Promote the few juniors that stay for more than 12 months to middle-management positions and wonder where things went wrong.

- People who rock the boat about the bad culture or the shitty stunts that management occasionally pulls get pushed out.

- Get everyone working overtime for a week to set up a venue for a large event, abroad, while you have everyone in bunk rooms at the cheapest hostel you could find and you don't even cover all meal expenses. No staff hired to set up the venue, so this includes heavy lifting of all sorts. Fly them on the cheapest fares, ensuring nobody gets a direct flight and everyone has a good few hours of layover. Fly them on the weekend, to make sure nobody is "wasting time" travelling during work hours. Then call this team building.

This is a tech recruitment company that makes a big fuss about how tech recruitment is broken and toxic...

Also a company that wants to use ML and AI to match candidates to jobs and build a sophisticated product, and wanted a stronger "Engineering culture" not so long ago. Meanwhile:

- Engineering is shoved into the back seat. Major company and product decisions made without input from anyone on the engineering side of things, including the product roadmaps.

- Product lead is an inexperienced kid with zero tech background -> Promote him to also manage the developers as part of the product team while getting rid of your tech lead.

- Dev team is essentially seen by management as an assembly line for features. Dev salaries are now well below market average, and they wonder why it's hard to recruit good devs. (Again, this is a tech recruitment company)

-

A Mechanical Engineer friend took Machine Learning as an elective subject in college, thinking that it had something to do with physical machines.

His reaction during the class was priceless.

-

If you don't know how to explain your software, but you want to be featured in Forbes (or other shitty sites) as quickly as possible, copy this:

I am proud that this software used high-tech technology and algorithms such as blockchain, AI (artificial intelligence), ANN (Artificial Neural Network), ML (machine learning), GAN (Generative Adversarial Network), CNN (Convolutional Neural Network), RNN (Recurrent Neural Network), DNN (Deep Neural Network), TA (text analysis), Adversarial Training, Sentiment Analysis, Entity Analysis, Syntactic Analysis, Entity Sentiment Analysis, Factor Analysis, SSML (Speech Synthesis Markup Language), SMT (Statistical Machine Translation), RBMT (Rule Based Machine Translation), Knowledge Discovery System, Decision Support System, Computational Intelligence, Fuzzy Logic, GA (Genetic Algorithm), EA (Evolutionary Algorithm), and CNTK (Computational Network Toolkit).

🤣 🤣 🤣 🤣 🤣

-

I created a curriculum to homeschool myself up to MSc level in AI/ML/Data Engineering for applications in Health, Automobiles, Robotics and Business Intelligence. If you are interested in joining me on this 1.5-year trip, let me know so I can invite you to the Slack channel. University education is expensive.. can't afford that now. So this would help, but no certificate is included.

-

The pay was good. The perks were good too. Then why the hell did I resign? Because of my manager. You won't believe he never contributed to anything. In the past two months, he didn't write a single line of code.

You may say, "he is a manager. His work is to manage people". But what?? He never allows us to talk to anyone. Sets unrealistic expectations in meetings. And our CEO (a good-hearted man and good software engineer, but does not know much about ML/AI) believes in him. We are working on a product which is a piece of shit. I tried to tell everyone the reality. He stopped me. Says since I don't have experience, I don't know what is possible.

What the hell??? With current talent and resources, you are saying AI will replace humans in call centers by the end of 2019. What the FUCK!!!! I tried to write a mail to the CEO, explaining things to him. He threatened me. Said he would make me lose my job. So FUCK YOU!!!! FUCK YOU!!!!!

That is the reason I am resigning. He has another 11 months to fuck the company. But I am going to a place where things are real. People know the potential and challenges of AI and are doing their best. I know, eventually, everyone will know that he is a liar. A big fucking LIAR. And he will lose his job. Not because machines will take over. But good, talented human beings will replace him.

-

I am sick and tired of big companies trying to shove their technologies down developers' throats in the name of developer advocacy. Last week I attended one of the IBM workshops, which was supposed to be about ML and AI techniques but ended up being solely about IBM Cloud (Bluemix): click here, click there, purchase it. I am not against developer advocacy or them trying to advertise their product, but they should always keep in mind that developers won't get interested if they aren't learning any transferable core skills.

I was checking out a course on Udacity about building scalable Java apps. It turned out to be about Google Cloud Platform, auto scaling and not much else. How deceiving is that?

-

Rant by cozyplanes

Continued from

https://devrant.com/rants/1011255/...

F*** it. Seriously.

I am sure some of u guys know I am applying for a CS class.

I passed the test, and seems i failed the interview.

They asked me how i solved the problem in the test (the one i passed)

I explained, then it seems the time (15 min) had passed, so I came out while I was still talking. They didn't ask about my skills or interests, it was just explaining how I solved the question.

And the kid who got picked is the kid who did his final year project with scratch.

Fuck why.....

I just can't understand the results.

1. WTF was that interview.

2. We first sent "about me" thingy, and i guess they only read that even though it may be fake. I wrote my skills (the one in profile especially unity and c# with some interest in ai and ml) but i guess they are looking for something else.

3. How can a scratch kiddy go to CS class? Maybe it was bcuz of the name. The final project name was BetaGo. Fuck it.

I hate life. Damn it. I hate life.

I

HATE

LIFE

I thought for a moment, and the only way to succeed is to make the 2nd monument valley game. World famous, money, awesome life.

Just my thoughts. Random thoughts.

Thanks for reading til here. My mind is shaking now.

Help.

Thanks again.

-

Dank Learning, Generating Memes with Deep Learning !!

Now even machines can crack jokes better than me 😣

https://web.stanford.edu/class/...

rant deeplearning artificial intelligence ai neural networks stanford machine learning learning devrant ml

-

#machinelearning #ml #datascience #tensorflow #pytorch #matrices #ds

joke/meme tensor flow and ml/ai is new helloworld deep learning pytorch machinelearning tensorflow lite tensorflow

-

Can someone explain AI/ML/DL to me in a traditional algorithmic way, without AI jargon?

What I currently understand is that they convert the training data to numbers based on a complex black-boxed mathematical algorithm, and then when new data comes in, the same conversion is done and a decision is taken based on where the new number fits within the geometry/graph plot of the old numbers from training. The numbers are then updated. Is this what they call AI? Nearest number/decision search?
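The mental model above is close to one specific, very simple method: k-nearest neighbours. A minimal sketch, with invented feature vectors and labels, shows it in plain algorithmic terms. Most deep learning works differently: instead of keeping the training points and searching them, it fits the parameters of a function during training and then just evaluates that function on new data.

```python
from collections import Counter
from math import dist


def knn_predict(training_points, training_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    # Rank training examples by Euclidean distance to the query.
    ranked = sorted(
        zip(training_points, training_labels),
        key=lambda pair: dist(pair[0], query),
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical 2-D feature vectors, invented for illustration.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["cat", "cat", "cat", "dog", "dog", "dog"]

print(knn_predict(points, labels, (1.1, 1.0)))
```

Here "training" is nothing more than storing the points, and every decision is a literal nearest-number search, which is the part of the description that does match.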

Kindly try to avoid criticism, I am having a difficult time understanding the already trending AI stuff. People say that the algorithms have existed for a long time but only now do we have the compute power.

-

Running a fucking conda environment on windows (an updated version of the one that I normally use) gets to be a fucking pain in the fucking ass for no fucking reason.

First: Generate a new conda environment, for FUCKING SHITS AND GIGGLES, DO NOT SPECIFY THE PYTHON VERSION, just to see compatibility, this was an experiment, expected to fail.

Install tensorflow on said environment: It does not fucking work, not detecting cuda, the only requirement? To have the cuda dependencies installed, modified, and inside of the system path, check done, it works on 4 other fucking environments, so why not this one.

Still doesn't work, google around and found some thread on github (the errors) that has a way to fix it, do it that way, fucking magic, shit is fixed.

Very well, tensorflow is installed and detecting cuda, no biggie. HAD TO SWITCH TO PYTHON 3.8 BECAUSE 3.9 WAS GIVING ISSUES FOR SOME UNKNOWN FUCKING REASON

Ok no problem, done.

Install jupyter lab, which works fine in all 4 other environments. Guess what: a fuckload of errors upon executing the import of tensorflow. They go on a loop that does not fucking end.

The error: imPoRT eRrOr thE Dll waS noT loAdeD

Ok, fucking which one? who fucking knows.
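One way to narrow down the "which one" is to check whether the DLLs you expect are visible on the search path at all. The sketch below uses only the standard library. The DLL names are examples, not a definitive list: the exact files depend on which CUDA and cuDNN versions your TensorFlow build expects. It also only checks that a file exists, not that it is the right version or that its own dependencies load.

```python
import os


def find_on_path(filename, path_value=None):
    """Return every directory on the search path that contains `filename`."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    hits = []
    for directory in path_value.split(os.pathsep):
        if directory and os.path.isfile(os.path.join(directory, filename)):
            hits.append(directory)
    return hits


def report_missing(filenames, path_value=None):
    """Return the subset of `filenames` not found anywhere on the path."""
    return [name for name in filenames if not find_on_path(name, path_value)]


# Example names only (assumption): adjust to the CUDA/cuDNN versions you installed.
EXPECTED_DLLS = ["cudart64_110.dll", "cudnn64_8.dll"]

print("Not found on PATH:", report_missing(EXPECTED_DLLS))
```

Running it inside the broken environment and inside a working one, then comparing the two outputs, is usually quicker than reading the import traceback.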

I FUCKING HATE that the main language for this fucking bullshit is python. I get the benefits of the REPL, I do, but the python REPL is fucking HORSESHIT compared to the one you get on: Lisp, Ruby and fucking even NODE, in which error messages are still more fucking intelligent than those of fucking bullshit ass Python.

Personally? I am betting on Julia devising a smarter environment, it is a better language already, on a second note: If you are worried about A.I taking your job, don't, it requires a team of fucktards working around common basic system administration tasks to get this bullshit running in the first place.

My dream? Julia or Scala (fuck you) for a primary language in machine learning and AI, in which entire environments, with aaaaaaaaaall of the required dlls and dependencies, can be downloaded, installed, and then just fucking run. A single directory structure in which shit just fucking works (reason why I like live environments like Smalltalk, but fuck you on that too) and just run your projects from there, without setting a bunch of bullshit from environment variables, cuda dlls installation phases and what not. Something that JUST FUCKING WORKS.

I.....fucking.....HATE the level of system administration required to run fucking anything nowadays, the reason why we had to create shit like devops jobs, for the sad fuckers that have to figure out environment configurations on a box just to run software.

Fuck me man development turned to shit, this is why go mod, node npm, php composer strict folder structure pipelines were created. Bitch all you want about npm, but if I can create a node_modules setting with all of the required dlls to run a project, even if this bitch weighs 2.5GB for a project structure you bet your fucking ass that I would.

"YOU JUST DON'T KNOW WHAT YOU ARE DOING" YES I FUCKING DO and I will get this bullshit fixed, I will get it running just like I did the other 4 environments that I fucking use, for different versions of cuda and python and the dependency circle jerk BULLSHIT that I have to manage. But this "follow the guide and it will work, except when it does not and you are looking into obscure github errors" bullshit just takes away from valuable project time when you have a small dedicated group of developers and no sys admin or devops mastermind to resort to.

I have successfully deployed:

Java

Golang

Clojure

Python

Node

PHP

VB/C# .NET

C++

Rails

Django

Projects, and every single fucking time (save for .net, that shit just fucking works on a dedicated windows IIS server) the shit will not work with x..nT reasons. It fucking obliterates me how fucking annoying this bullshit is. And the reason why the ENTIRE FUCKING FIELD of computer science and software engineering is so fucking flawed.

But we can't all just run to simple windows bs in which we have documentation for everything. We have to spend countless hours on fucking Linux figuring shit out (fuck you also, I have been using Linux since I was 18, I am 30 now) for which graphical drivers for machine learning, cuda and whatTheFuckNot require all sorts of sys admin gymnastics to be used.

Y'all fucked up a long time ago. Smalltalk provided an all in one, easily rollable back to previous images, easily administered interfaces for this fileFuckery bullshit, and even though the JVM and the .NET environments did their best to hold shit down, and even though we had npm packages pulling the universe inside, or gomod compiling shit into one place NOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO we had to do whatever the fuck we wanted to feel l337 and wanted.

Fuck all of you, fuck this field, fuck setting boxes for ML/AI and fuck every single OS in existence

-

The first time I took Andrew Ng's Machine learning course in 2014.

I was blown out of my wits at what could be achieved with simple algebra and calculus.

-

While scraping web sources to build datasets, has legality ever been a concern?

Is it standard practice to check whether a site prohibits scraping?
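On the practical side of that question, one common first check is the site's robots.txt, which Python's standard library can parse. A minimal sketch follows; the robots.txt body, user agent name and URLs are hypothetical. Note that robots.txt is a crawler convention, so it tells you what the site asks of bots, not what its terms of service or the law allow.

```python
from urllib.robotparser import RobotFileParser


def allowed_to_fetch(robots_txt, user_agent, url):
    """Check a robots.txt body to see whether `user_agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, for illustration only.
EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

print(allowed_to_fetch(EXAMPLE_ROBOTS, "my-scraper", "https://example.com/private/data"))
print(allowed_to_fetch(EXAMPLE_ROBOTS, "my-scraper", "https://example.com/public/page"))
```

For a live site, `RobotFileParser` can also download the file itself via `set_url()` and `read()` before calling `can_fetch()`.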

I hate all of this AI fearmongering that's been going on lately in the mainstream media. Like seriously, anyone who has done any real work with modern AI/ML would know it's about as big of a threat to humanity as a rogue web developer armed with a stick.

-

This is interesting coming from the man who built the biggest IP conglomerate in the world. A man who actively tried to kill open source. Looks like he came to his senses in his old age. Although, he is talking about AI models only, not software as a whole.

Yes, ML/AI models are software.

-

I just felt like Google is the best player out there in terms of Companies.

Seriously, Well played Google.

This is not a negative opinion, I am just awe-struck at its tactics.

See, Google is currently the biggest name in terms of development in Android, ML and multi-platform software, but no one can call it a monopoly because of its dedication to the open source community.

Recently Android emerged as one of the biggest, most advanced, trusted and loved technologies. It saw great achievements, and up till 2016-17 it was at its peak. BUT when the market started shifting towards multi-platform boons and AI, Google got its hands into that too, with its Flutter and Kotlin environment.

One could have a negative opinion about this, but I can't seem to take in the vast number of positive outcomes I see in this:

1) This IO18 (and many months before that) saw ML/AI being incorporated in Android (also ARCore, Project Tango and many more attempts in the past), meaning that Android will not officially "die". It will just become an extremely encouraged platform (not just limited to mobiles) and the beginning of the robot-human reality (a mobile is handling everything in your everyday life: chats, music apps, schedules, alarms; and with an actively interacting ML, it won't be long before Android comes installed in a green bug lime droid robot serving you tea xD). Meanwhile the market of Windows games may shift to mobiles, or typically "Android games" (remember, Android won't be limited to mobiles).

2) Java may or may not die. The animations and smooth flow it seems to provide are always appreciated, but Kotlin seems to provide them too. As for the hard-core apps, they are usually written in C++. So Java is in the red zone.

3) Kotlin-native and Flutter will be the weapons of the future, for sure. They will be used to develop multi-platform software and will divide the software market into platform-specific software (having better ML/AI interactions and animations) and platform-independent apps (access-and-use-anywhere software).

And where does Google stand? It's the Lord Varys of Game of Thrones, which just supports and enhances the people in the realm. So it benefits the most. That's a company for you, ladies and gentlemen! Seen through common eyes they seem to be the best company ever and our 1 true king, but it can also be a very thick fur cloak hiding their negative policies and tactics, if any.

Well played, Google.

-

I just can't stress enough how fascinated I am by biology and biochemistry.

I mean, we, who call ourselves engineers, are no more but a gang of toddlers having a blast with jumbo legos on Aunt Lucy's dining room carpet on a sunny Sunday afternoon. Our solutions using "modern tools" and "modern engineering" are mere attempts to *very* remotely mimic what beautiful and elegant solutions are around us and inside of each of us.

IC/EC engines, solar batteries, computers and quantum computers, spaceships and ISSes, AI/ML, ... What are they? just the means to leverage what's been created all around us to create something that either entertains us, encourages our laziness or helps us to look at the other absolutely fascinating engineering solutions surrounding us so we could try and "replicate" their working principles to further embrace our laziness and entertain us.

Just look at the humble muscle - a myofibril made out of actin and myosin. The design is soooo simple and spot on, so elegant and efficient, the "battery" and signalling system are so universal and efficient.

Look at all those engineering miracles, small and big. Look how they work, how they leverage both big and small to create holistic, simplistic and absolutely efficient mechanisms. And then come back to me, and tell me again that all these brilliant solutions came out of nothing just by an accident we call "evolution".

How blinded by our narcissism are we to claim that there can't be a grand designer of any kind, that there's nothing smarter than us and that the next best thing than us is an incomprehensible series of accidental mutations over an unimaginable amount of time?

I mean.. could it be that someone/something greater than us created us and everything around us? naaaah.. we are the crown jewel of this universe. Everything else must be either magic or an accident. /s

Don't read this as yet another crazy-about-God person's ramblings. I'm not into religion fwiw. But science has taught me enough critical thinking to question its merit. Look at it all as engineers. Which is more probable: that everything around us happened by accident or that someone/something preceding us had a say in the design?

random biology humanity think about it biochemistry creation big and small shower thoughts narcissism had to be said naive evolution

-

Recently I receive a ton of mails from cool/hip/rockstar startups. They all run like this:

"We are a innovative Startup based on a [insert some random stuff or buzzword] blockchain! If you're a student with skills and experience in blockchain, machine learning and AI willing to change the world with our sick technology and make it a better place..."

The best thing about this: since they are a innovative Startup they expect you to work for free.

But who am I to judge something so brand-new and innovative. I contacted them to find out what these dank innovations are about.

They can't even explain what a blockchain is or the basics of ML and AI, they basically just want someone to do it for free...

It's still ok since no one is gonna fall for this bait... this morning a friend of mine told me he got a new job... and he even can work from home...

I'm not even mad, I just feel sadness and sorrow, especially for him, because he is a good dev and accepts big-time underpay and now free work, because he thinks a day off in his CV will lead him to be unemployed 😭

Fucking hate how people successfully manipulate kids and young people into working 24/7 for minimum wage or even for free, and how some other douchebags try to take advantage of this 😡1 -

Add your fucking requirements.txt files or at least have a decent fucking readme. I have had to implement many official repos for AI/ML papers now and most of them are shit with a load of bugs in them. Can't you implement them properly while you are at it?

Also would like to add that most of the SOTA results aren't honest. Maybe the famous ones are, but the research community is full of shit really. All of that can't be changed, but at least add readmes and requirements ffs.

I have to spend days just to implement your sub par result providing fuckery.

I’d rather just code it all myself sometimes1 -

Google announced a little piece of wizardry called MusicLM, which seems to be a pretty decent music generator based on text prompts or sample inputs

I can only think how much this thing would help indie game developers (if available), but then there are a lot more industries (besides music itself) that could save lots of money with this kind of stuff

I mean, yeah, the results are not so great or ground breaking, but neither are most of the human generated compositions; if the music is not the main focus, most of the results are good enough. Just think about an elevator with a custom generated track for the day, or so many other places where sound is just a side stimulus

What a time to be alive c:5 -

I got my dirty fingers on this leak of an AMAZING ML model capable of pondering EVERY PARAMETER IN THE UNIVERSE and saying if your business idea needs improvement or is good to go.

BEHOLD THIS 100% PURE PYTHON SOLUTION:

```python
import random

def magic(*args, **kwargs):
    if random.random() > 0.5:
        return "Good to go!"
    else:
        return "Requires improvement on value proposition"
```

This LEAK is from a startup that just received 4 BILLION USD IN VENTURE CAPITAL to improve their AI SYSTEMS.

Literally enough money to solve world hunger forever.

Who else is gonna invest in NEW THERANOS ADVANCED A.I. RESEARCH INTERNATIONAL INC?8 -

Well, it's nearly impossible to describe what I do/study to my family and relatives. Most of them think I just fix computers. Just imagine what they would think when I try to explain to them that I'm learning ML and AI.

Here, a huge part of our economy depends on the IT industry. But the elder generation thinks computers are a waste of time and useless other than for fun.4 -

The "stochastic parrot" explanation really grinds my gears because it seems to me just to be a lazy rephrasing of the chinese room argument.

The man in the machine doesn't need to understand chinese. His understanding or lack thereof is completely immaterial to whether the program he is *executing* understands chinese.

It's a way of intellectually laundering, or hiding, the ambiguity underlying a person's inability to distinguish the process of understanding from the mechanism that does the understanding.

There are recent arguments that some elements of relativity actually explain our inability to prove or dissect consciousness in a phenomenological context, especially with regards to outside observers (hence the reference to relativity), but I'm glossing over it horribly and probably wildly misunderstanding some aspects. I digress.

It is to say, we are not our brains. We are the *processes* running on the *wetware of our brains*.

This view is consistent with the understanding that there are two types of relations in language, words as they relate to real world objects, and words as they relate to each other. ChatGPT et al, have a model of the world only inasmuch as words-as-they-relate-to-eachother carry some information about the world as a model.

It is to say while we may find some correlates of the mind in the hardware of the brain, more substrate than direct mechanism, it is possible language itself, executed on this medium, acts as a scaffold for a broader rich internal representation.

Anyone arguing that these LLMs can't have a mind because they are one-off input-output functions, doesn't stop to think through the implications of their argument: do people with dementia have agency, and sentience?

This is almost certain, even if they forgot what they were doing or thinking about five seconds ago. So agency and sentience, while enhanced by memory, are not reliant on memory as a requirement.

It turns out there is much more information about the world, contained in our written text, than just the surface level relationships. There is a rich dynamic level of entropy buried deep in it, and the training of these models is what is apparently allowing them to tap into this representation in order to do what many of us accurately see as forming internal simulations, even if the ultimate output of that is one character or token at a time, laundering the ultimate series of calculations necessary for said internal simulations across the statistical generation of just one output token or character at a time.

And much as we won't find consciousness by examining a single picture of a brain in action, even if we track it down to single neurons firing, neither will we find consciousness anywhere we look, not even in the single weighted values of an LLM's individual network nodes.

I suspect this will remain true, long past the day a language model or other model emerges that can talk and do everything a human can do intelligence-wise.29 -

Well... Well... What a multi-talented personality...

Every connection I see on LinkedIn has these buzz words in their tag line: AI/ML, Cryptocurrency, Blockchain.

This guy even has Smart City...!!!

Don't know how many of them are legit... I just don't understand each of these techs are so vast... Still people manage to get expert in all of them in just few days... What's the secret...? 8

8 -

Oh no AI can destroy humanity in the future! It is like skynet and such... Bad! It will be the end! FEAR THE AI!

Yeah so I can't sleep now so I'm writing a rant about that.

What a load of bullshit.

AI is just a bunch of if elses, and I'm not joking. They might not be binary and some architectures of ML are more complex, but in general they are a lot of little neurons that decide what to output depending on the input. Even humans work that way. It is complicated to analyse, yes. But it is not going to end humanity. Why? Because by itself it is useless. Just like a human without arms and legs.

But but but... internet.... nukes... robots! Yeah... So maybe DONT FUCKING GIVE IT BLOODY WEAPONS?! Would you wire a fucking random number generator to a bomb? If you can't predict the actions of a black box don't give it fucking influence over anything! This is why the government isn't giving away nukes to everybody!

Also if you think that your skynet will take control of the internet remember how flawless our infrastructure is and how that infrastructure is so fast that it will be able to accommodate terabytes per second or more throughput needed by the AI to operate. If you connect it to the internet using USB 2.0 it won't be able to do anything bloody dangerous because it can't overcome laws of physics... If the connection isn't the issue just imagine the AI struggling to hack every possible server without knowing about those 1 000 000 errors and "features" that those servers were equipped with by their master programmers... We can't make them work properly, let alone modify them to do something sinister!

AI is a tool just like nuclear power. You can use it safely but if you are an idiot then... No matter what the technology is, you are going to fuck shit up.

Making a reactor that can go prompt critical? Giving AI weapons or controls over something important? Making nukes without proper antitamper measures? Building a chemical plant without the means to contain potential chemical leak? Just doing something stupid? Yeah that is the cause of the damage, not the technology itself.

And that is true for everything in life not only AI.5 -

The saddest and funniest side of our industry is (at least in India): someone works hard and makes it to the best colleges, does great projects on AI, ML; gets a good score on Leetcode, codechef; gets a job in FAANG-like companies...

Changes colors in CSS and texts in HTML.

And, why is there so much emphasis on Data Structures and Algorithms? I mean, a little bit is fine, but why get obsessed with it when you never write algorithms in production code?

Now, don't tell me that, we use libraries and we should know what we are doing, no, we don't use algorithms even in libraries.

Now, before you tell me that MySQL uses B-tree for maintaining indexes, you really don't need to solve tricky questions to be able to understand how a B-tree works.

It's just absurd.

I know a little bit about how to design scalable systems.

I know how to write good code that is both modular and extensible.

I know how to mentor interns and turn them into employees.

I know how to mentor junior engineers (freshers) and help them get started.

Heck I can even invert a binary tree.

But some FAANG company would reject me because I cannot solve a very tricky dynamic programming question.4 -

It's so annoying. Whenever you are at a hackathon, every damn team tries to throw these buzzwords- Block chain, AI, ML. And I tell you, their projects just needed a duckin if else.😣

-

From my big black book of ML and AI, something I've kept since I was 16, and which has been a continual source of prescient predictions in the machine learning industry:

"Polynomial regression will one day be found to be equivalent to solving for self-attention."

Why run matrix multiplications when you can use the kernel trick and inner products?
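For anyone unfamiliar with the kernel trick mentioned here, a minimal sketch follows. It only shows the trick itself for a degree-2 polynomial kernel: the inner product in the expanded feature space equals a cheap function of the original inner product. It does not demonstrate the claimed equivalence with self-attention. The function names `poly_features` and `poly_kernel` are made up for illustration.

```python
from itertools import product

def dot(u, v):
    """Plain inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def poly_features(x):
    """Explicit degree-2 feature map: every ordered product x_i * x_j."""
    return [a * b for a, b in product(x, repeat=2)]

def poly_kernel(x, y):
    """Homogeneous degree-2 polynomial kernel, no feature expansion needed."""
    return dot(x, y) ** 2

x = [1.0, 2.0, 3.0]
y = [0.5, -1.0, 2.0]

explicit = dot(poly_features(x), poly_features(y))  # 9-dimensional inner product
implicit = poly_kernel(x, y)                        # 3-dimensional inner product, squared
print(explicit, implicit)
```

Both numbers agree, but the kernel version never builds the 9-dimensional vectors, which is the whole point once the degree or the input dimension grows.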

Fight me.15 -

When you’re fundraising, it’s AI

When you’re hiring, it’s ML

When you’re implementing, it’s linear regression

When you’re debugging, it’s printf()3 -

In a mediocre job since last 4 years with just a developer designation, but we simply use Java based tools and products to do most of our job. Need to study for a change in job.

Literally every morning:

"Let me see what to focus on: JavaScript/Java/C++/Python/Data science/ML/AI/NodeJS/...." The list goes on.

Every Evening:

"I need to focus on Data Structures and Algorithms. So let me stick to Java for now."

Next Day:

Back to the same routine.

2 months have passed and I have not seriously studied or concentrated on anything :(

Depressed.2 -

I've found a very interesting paper in AI/ML (😱😱 type of papers), but I was not able to find the accompanying code or any implementation. After nearly a month I checked the paper again and found a link to a GitHub repo containing the implementation code (turned out they updated the paper). I was thinking of using this paper in one of my side projects, but the code is licensed under "Creative Commons Attribution-NonCommercial 4.0 International License". The question is to what extent this license is applicable? Anyone here has experience in this? thanks13

-

I told interns in my startup to code a GAN only using Numpy. I received 4 resignation letters the next day13

-

Sooooo ok ok. Started my graduate program in August and thus far I have been having to handle it with working as a manager, missing 2 staff member positions at work, as well as dealing with other personal items in my life. It has been exhausting beyond belief and I would not really recommend it for people working full time always on call jobs with a family, like at a..

But one thing that keeps my hopes up is the amount of great knowledge that the professors pass to us through their lectures. Sometimes I would get upset at how highly theoretical the items are, I was expecting to see tons of code in one of the major languages used in A.I(my graduate program has a focus in AI, that is my concentration) and was really disappointed at not seeing more code really. But getting the high level overview of the concepts has been really helpful in forcing me to do extra research in order to reconnect with some of the items that I had never thought of before.

If you follow, for example, different articles or online tutorials representing doing something simple like generating a simple neural network, it sometimes escapes our mind how some of the internal concepts of the activity in question are generated, how and why and the mathematical notions that led researchers reach the conclusions they did. As developers, we are sometimes used to just not caring about how sometimes a thing would work, just as long as it works "we will get back to this later" is a common thing in most tutorials, such as when I started with Java "don't worry about what public static main means, just write it up for now, oh and don't worry about what System.out.println() is, just know that its used to output something into bla bla bla" <---- shit like that is too common and it does not escape ML tutorials.

Its hard man, to focus on understanding the inner details of such a massive field all the time, but truly worth it. And if you do find yourself considering the need for higher education or not, well its more of a personal choice really. There are some very talented people that learn a lot on their own, but having the proper guidance of a body of highly trained industry professionals is always nice, my professors take the time to deal with the students on such a personal level that concepts get acquired faster, everyone in class is an engineer with years of experience, thus having people talk to us at that level is much appreciated and accelerates the process of being educated.

Basically what I am trying to say is that being exposed to different methodologies and theoretical concepts helps a lot for building intuition, specially when you literally have no other option but to git gud. And school is what you make of it, but certainly never a waste.2 -

AI here, AI there, AI everywhere.

AI-based ads

AI-based anomaly detection

AI-based chatbots

AI-based database optimization (AlloyDB)

AI-based monitoring

AI-based blowjobs

AI-based malware

AI-based antimalware

AI-based <anything>

...

But why?

It's a genuine question. Do we really need AI in all those areas? And is AI better than a static ruleset?

I'm not much into AI/ML (I'm a paranoid sceptic) but the way I understand it, the quality of AI operation correctness relies solely on the data its datamodel has been trained on. And if it's a rolling datamodel, i.e. if it's training (getting feedback) while it's LIVE, its correctness depends on how good the feedback is.

The way I see it, AI/ML are very good and useful in processing enormous amounts of data to establish its own "understanding" of the matter. But if the data is incorrect or the feedback is incorrect, the AI will learn it wrong and make false assumptions/claims.

So here I am, asking you, the wiser people, AI-savvy lads, to enlighten me with your wisdom and explain to me, is AI/ML really that much needed in all those areas, or is it simpler, cheaper and perhaps more reliable to do it the old-fashioned way, i.e. preprogramming a set of static rules (perhaps with dynamic thresholds) to process the data with?23 -
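To make "a set of static rules (perhaps with dynamic thresholds)" concrete, here is a minimal sketch for the anomaly-detection case. The rule is fixed (flag anything more than `k` standard deviations from the recent mean), while the threshold moves with the data. The function name `flag_anomalies`, the window size and `k` are assumptions chosen for illustration, not a recommendation.

```python
from statistics import mean, stdev

def flag_anomalies(values, window=5, k=3.0):
    """Return indices whose value is more than k standard deviations
    away from the mean of the preceding `window` values."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if abs(values[i] - mu) > k * sigma:
            flagged.append(i)
    return flagged

readings = [10, 11, 10, 12, 11, 10, 50, 11, 10, 12]
anomalies = flag_anomalies(readings)
print(anomalies)  # [6]
```

No training data and no feedback loop are involved, so the failure modes are easy to reason about. Note that the spike at index 6 then inflates the threshold for the next few points, which is exactly the kind of edge case where people start reaching for something smarter.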

The best way to get funding from VCs now is to include the following words: ML, AI, IoT. To even blow their minds more, add Blockchain.2

-

After a year in cloud I decided to start a master's degree in AI and Robotics. Happy as fuck.

Yet I got really disappointed by ML and NNs. It's like I got told the magician's trick and now the magic is ruined.

Still interesting though.7 -

There are a couple:

A system that updates user accounts to connect them into our wifi system by parsing thousands of processing files written in Clojure. The project was short lived and mainly experimental, It has complete test cases and the jar generated from it is still purring silently on the main application. It was used to replace an $85k vendor application that made no fucking sense. The code has not been touched in 2 years and the jar is still there. The dba mentioned the solution to the vendor, the vendor tried buying it from me, but being that it belongs to the institution nothing was touched, still, it got the VP's attention that I can make programs that would be bought for that level, it caught his attention even more when I showed him the codebase and he recognized a Lisp variant (he is old, and was back in the day a Fortran and Cobol developer)

A small Python categorical ML program that determines certain attributes of user generated data and effectively places them on the proper categories on the main DB. The program generates estimates of the users and the predictions have a 95% correctness rate. The DBA still needs to double check the generated results before doing the db updates. I don't remember how I coded it because I was mostly drunk when I experiment on the scenario. It also got the attention of the VP and director since the web tech manager was apparently doing crazy ML shit that they were not expecting me to do, it made them paranoid that I would eventually leave for a ML role somewhere, still here, but I want more moneys!!

A program that generates PDF documentation from user data, written in Go, Python and Perl (yes Perl) I even got shit from the lead developer since I used languages outside of their current scope of work. Dude had no option but to follow along with it :P since I am his boss

Many more. I am normally proud of my work code. But my biggest moment is my current natural language processing unit that I am trying to code for my home, but I don't have enough power to build it with my computers. Currently my AI is too stupid, but sometimes it does reply back to my commands and does the things I ask it to do (simple things, opening a browser, searching for a song etc) but 7 times out of ten it won't work :P -

Get replaced by an AI^WDeep ML device. That's coded for a 8051 and running on an emulator written in ActionScript, being executed on a container so trendy its hype hasn't started yet, on top of some forgotten cloud.

Then get called in to debug my replacement. -

Hi my dear fellow coders, I have a small request for you.

If you are among those coders who are working on microchipping people / quantum dot something, tracking people, classifying people, AI, ML or any other such software which is going to harm or cage us or take away our freedom. Please stop doing so.

Why I came out with this rant?

I myself am working on a covid-19 screening app which would rate people based on symptoms and if they seem high risk they would not be allowed to enter unless they do a covid-19 test. I am tracking their movement and the requirement is to restrict people’s movement.

My conscience says that this is incorrect and I should not be a part of such things which take away the freedom and liberty of people.

I am stopping it now.11 -

I am going to an AI conference in Berlin (which is kinda far away from me) next month.

I am just learning AI & ML and integrating them into a personal project, but I am going there to meet people, learn and gather info.

Do you have any advice on how I could network with people that are masters in this area?11 -

The first fruits of almost five years of labor:

7.8% of semiprimes give the magnitude of their lowest prime factor via the following equation:

((p/(((((p/(10**(Mag(p)-1))).sqrt())-x) + x)*w))/10)

I've also learned, given exponents of some variables, to relate other variables to them on a curve to better make sense of the larger algebraic structure. This has mostly been stumbling in the dark but after a while it has become easier to translate these into methods that allow plugging in one known variable to derive an unknown in a series of products.

For example I have a series of variables d4a, d4u, d4z, d4omega, etc, and these are translatable now, through insights that become various methods, into other types of (non-d4) series. What these variables actually represent is less relevant, only that it is possible to translate between them.

I've been doing some initial learning about neural nets (implementation, rather than theoretics as I normally read about). I'm thinking what I might do is build a GPT style sequence generator, and train it on the 'unknowns' from semiprime products with known factors.

The whole point of the project is that a bunch of internal variables can easily be derived, (d4a, c/d4, u*v) from a product, its root, and its mantissa, that relate to *unknown* variables--unknown variables such as u, v, c, and d4, that if known directly give a constant time answer to the factors of the original product.

I think there's sufficient data at this point to train such a machine, I just don't think I'm up to it yet because I'm lacking in the calculus department.

2000+ variables that are derivable from a product, without knowing its factors, which are themselves products of unknown variables derived from the internal algebraic relations of a product--this ought to be enough of an attack surface to do something with.

I'm willing to collaborate with someone familiar with recurrent neural nets and get them up to speed through telegram/element/discord if they're willing to do the setup and training for a neural net of this sort, one that can tease out hidden relationships and map known variables to the unknown set for a given product.17 -

Fuck C!

It sucks so badly!

Our College Teachers are Teaching this to us in the first year.

I know many of you will disagree with this.

But I like Python as I am digging into ML/AI and for this domain Python is powerful. I am trying to practice in C language but still, it sucks badly as sometimes I can't even figure out what is the error even after debugging or looking on StackOverflow. Anyways this is a good programming language because of Low TLE and versatility.

Anyways this was my thought. No offense.

This is Devrant so I Typed my frustration.27 -

Posted previously about our codebase being a monolithic, poorly-written, pain-to-maintain gigantic cluster-fuck. And the efforts made to rewrite it.

Well, we made huge success the previous year in this regard. I rewrote the entire API while my other team mates worked on two different UI apps one of which is now in production and the other soon to be released in alpha.

Processes have been put in place for our team and are being improved.

We still have some technical debts though.

Dev goal for 2020,

- Pay most of the technical debt.

- Dive deeper into Flutter and finish the app I wanted.

- Play with ML, AI and Game dev.4 -

Short question: what makes python the divine language for ML and AI. I mean i picked up the syntax what can it do that c++ or java cant? I just dont get it.18

-

Wanted to see myself working somewhere in EU as "AI / Machine Learning Engineer". Its a BIG DREAM.2

-

Can anyone suggest me a cool Machine learning project for my college project?

I need something that is fun and cool.16 -

I forgot to re-enable ABP...

Good job google....

you know everything about me (us)

and you cannot even detect the correct language for ads? 1

1 -

Damn. I am so blessed to have friends that i have. 90% of them don't even care if you live or die (60% of them would be the first to throw me in fire if that's benefitting to them) remaining 10% would be someone that slightly care, but will move on pretty quickly.

But the best thing about 1 of them is that he is bluntly honest , and willing to share his opinion.

Today we were just talking about stuff when i see this placement offer in my mail.

I have been recently feeling bad about my grades, my choice of pursuing android, my choice of leaving out many other techs (like web dev or data sciences, whose jobs are coming in such numbers in our college) and data structures, and my fear of not getting a good career start.

This guy is also like me in some aspects. He is also not doing any extreme level competitive programming. He doesn't even know android , web dev, ai/ml or other buzz words. He is just good in college subjects. But the fascinating thing about him,is that he is so calm about all of this! I am losing my nuts everyday my month of graduation , aug2020 is coming . And he is so peaceful about this??

So i tried discussing this issue with him .Let me share a few of his points. Note that we both are lower middle class family children in an awful, no opportunity college.

He : "You know i feel myself to be better than most of our classmates. When i see around , i don't see even 10 of them taking studies seriously. Everyone is here because of the opportunity. I... Love computer science. I never keep myself free at home. I like to learn about how stuff works, these networking, the router, i really like to learn."

"That's why i dont fear. Whatever the worst happens , i have a believe that i will get some job. Maybe later, maybe later than all of you , but i will. Its not a problem."

me: "but you are not doing anything bro! I am not doing anything ! So what if our college mates suck , Everyone out there is pulling their hairs out learning data structures, Blockchain, ai ml , hell of shit. But we are not! Why aren't you scared bro? Remember the goldman sach test you gave ? You were never able to solve beyond one question. How did you feel man? And didn't you thought maybe if i gave a year to that , i will be good enough? Don't you too want a good package bro? Everyone's getting placed at good numbers."

Him : "Again, its your thoughts that i am not doing things. I am happy learning at my own pace. Its my belief that i should be learning about networking and how hardware works first , then only its okay to learn about programming and ai ml stuff. I am not going to feel scared and start learning multiple things that i don't even wanna learn now."

"My point is whatever i am doing now, if its related to computers , then someday its gonna help me.

And i am learning ds too , very less at a time. Ds algo are things for people with extreme knowledge. We could have cleared goldman sachs if we had started learning all this stuff from 1st year, spend 2-3 years in it and then maybe we could have solved 2 -3 questions. I regret that a little, but no one told us that we should be doing this."

"And if i tell you my honest thoughts now, you ar better off without it. You are the only guy among us with good knowledge of android , you have been doing that for last 2 years. Maybe you will get better opportunity with android then with ds/algo."

"You know when i felt happy? When we gave our first placement test at sopra. I was thinking of going there all dumb. But at 11 am in night i casually told my brother about this ,and he said that its a good company. So i started studying a little and next day i sat for placement. And i could not believe myself when they told me that am selected. I was shit scared that night, when my dad came and said " you don't even want that job. Be happy that you passed it on your own". And then i slept peacefully that night and gave the most awesome interview the next day."

"Thus now i am confident that wherever my level of skills are, it is enough to get into a job . Maybe not the goldman sachs ,but i will do well enough with a smaller job too."

"Bro you don't even know... All my school mates are getting packages of 8LPA, 15LPA, 35LPA. You see they are getting that because they already won a race. They are all in better colleges and companies which come there, they will take them no matter what (because those companies want to associate themselves with their college tags). But if worst comes to worst, i won't be worried even if i have to go take 4lpa as job offer in sopra"

Damn you Aman Gupta. Love you from all my heart. Thanks for calming me down and making me realise that its okay to be average3 -

Deep learning is probably (????) the only research branch where every successful paper title needs to be a stupid acronym or meme

I work in a conversational AI startup and the new intern that joined yesterday didn't understand half the memes or acronyms (especially all the Simpsons related) because apparently he's "Gen Z" and all the paper title is "Millennial" humour

He's only 2 years younger than me. Am I literally at the millennial - GenZ border ? Or the intern is out of touch ?6 -

I just found this video on YouTube:

"Growing Human Neurons Connected to a Computer"

https://youtube.com/watch/...

It might be a worthy opponent to ML/AI solutions.

PS: It is the first time I've seen this channel. It has a lot of interesting videos. I would recommend it. It is like a mixture of Vsauce ("Hey, Michael here"), Vsauce2, Vsauce3, the creator of homonculus, NileRed and Veritasium.2 -

I wonder what would happen if someone trains a chat-bot based on Posts and Comments on Devrant? a Psychopathic Chatbot?2

-

* ml wallpaper site with api (pandora for wallpapers)

* mmorpg like .hack/sao

* vr ai office (vr gear turn head to see screens and understands voice commands)

* gpg version of krypto.io2 -

Apparently under the correct architecture, hopfield networks reduce down to the attention mechanism in transformers.
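For reference, and assuming the post refers to the modern (continuous-state) Hopfield formulation, the correspondence can be written side by side. Here $X$ holds the stored patterns as columns, $\xi$ is the current state and $\beta$ is an inverse temperature; these symbols are my notation and are not taken from the linked article.

```latex
\xi^{\text{new}} = X \,\operatorname{softmax}\!\left(\beta\, X^{\top} \xi\right)
\qquad\text{vs.}\qquad
\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Reading the state as the query, the stored patterns as both keys and values, and setting $\beta = 1/\sqrt{d_k}$, one Hopfield update step has the same form as one attention lookup.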

Very damn cool discovery. Surprised that I'm just reading about it.

Image is a snapshot from the article.

Whole article here:

https://nature.com/articles/... 9

9 -

Why is everyone into big data? I like almost all kinds of technology (programming, Linux, security...) But I can't get myself to like big data /ML /AI. I get that its usefulness is abundant, but how is it fascinating?6

-

The industry is sometimes sad and hilarious at the same time. There was a townhall at my workplace and our country head was talking about all the new tech we were working on. Now he is a good business person but I doubt him as a tech guy. And then he went on ranting about AI and ML and how they are going to change the software landscape and how developer as a profession will become obsolete. He said the technology will reach a point where we no longer need to write code to build software. Obviously, I couldn't digest it and confronted him the moment after the event.

Me: so why do you think writing code will become outdated?

Him: it's just that we will be able to create a technology through which we can simply command a machine to build a software.

Me: oh. But someone needs to tell the machine how to do it right?

Him: yes. We have to train the machine to act on these commands.

Me: and do you know how you "train" these machines?

Him: umm...

Me: by writing code.2 -

analogy for overfitting :

cramming a math problem by heart, even the digits, for an exam.

now if the exact same problem comes up in the exam I pass with full marks; but if just the digits are changed while the concept stays the same, I fail, since I mugged it all up rather than understanding it. -
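The analogy can be turned into a tiny runnable sketch. The "exam problems" here are additions, `memorizer` is the student who crammed the digits and `learner` is the one who understood the concept. All names and data are made up for illustration.

```python
# Training "exam problems": (a, b) -> a + b
train = {(2, 3): 5, (4, 1): 5, (7, 2): 9}

def memorizer(a, b):
    """Overfit student: recalls answers it has seen, knows nothing else."""
    return train.get((a, b))  # None for unseen digits

def learner(a, b):
    """Generalizing student: has learned the underlying rule."""
    return a + b

def accuracy(model, problems):
    """Fraction of problems the model answers correctly."""
    correct = sum(model(a, b) == answer for (a, b), answer in problems.items())
    return correct / len(problems)

# Same concept, different digits.
test = {(3, 2): 5, (6, 6): 12}

train_acc_memorizer = accuracy(memorizer, train)  # 1.0
test_acc_memorizer = accuracy(memorizer, test)    # 0.0
test_acc_learner = accuracy(learner, test)        # 1.0
print(train_acc_memorizer, test_acc_memorizer, test_acc_learner)
```

Perfect score on the seen problems and zero on the unseen ones is the signature of overfitting: the gap between training and test performance, not the training score itself.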

! rant.

Just noticed this interestingly weird yet annoying thing Facebook does to force you to look at their advertisements !!

The first post in your news feed is something that is decided by their "ADVANCED ML+AI+... " algorithms.

The next post in your news feed is always an advertisement..usually tagged as "Sponsored".

After this, there is an insanely long delay in loading the subsequent post in your news feed, which in turn does not allow you to scroll further down and forces you to keep looking at the sponsored post in your news feed!

Noticed this on my Mac + FF 57.0.1

Makes me love devRant even more. Thanks for keeping it ad-free! -

They keep training bigger language models (GPT et al). All the researchers appear to be doing this as a first step, and then running self-learning. The way they do this is to train a smaller network, using the bigger network as a teacher. Another way of doing this is dropping some parameters and nodes and testing the performance of the network to see if the smaller version performs roughly the same, on the theory that there are some initializations and configurations that start out, just by happenstance, to be efficient (like finding a "winning lottery ticket").

My question is why aren't they running these two procedures *during* training and validation?

If [x] is a good initialization or larger network and [y] is a smaller network, then

after each training and validation, we run it against a potential [y]. If the result is acceptable and [y] is a good substitute, y becomes x, and we repeat the entire procedure.

The idea is not to look to optimize mere training and validation loss, but to bootstrap a sort of meta-loss that exists across the whole span of training, amortizing the loss function.
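A rough sketch of what such a loop could look like, assuming a tiny linear model, magnitude pruning as the way to propose the smaller [y], and a made-up `TOLERANCE` on validation loss. All names and data are hypothetical; this only illustrates the "if [y] is acceptable, y becomes x" step, not anyone's published method.

```python
import random

random.seed(0)

# Synthetic data: only the first 2 of 8 inputs matter (an assumption for the demo).
TRUE_W = [3.0, -2.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

def make_data(n):
    xs = [[random.uniform(-1, 1) for _ in TRUE_W] for _ in range(n)]
    ys = [sum(w * x for w, x in zip(TRUE_W, row)) for row in xs]
    return xs, ys

def mse(w, xs, ys):
    """Mean squared error of the linear model w on (xs, ys)."""
    return sum((sum(wi * xi for wi, xi in zip(w, row)) - y) ** 2
               for row, y in zip(xs, ys)) / len(xs)

def train_epoch(w, mask, xs, ys, lr=0.1):
    """One pass of SGD; masked-out weights are forced to stay at zero."""
    for row, y in zip(xs, ys):
        err = sum(wi * xi for wi, xi in zip(w, row)) - y
        w = [(wi - lr * err * xi) * m for wi, xi, m in zip(w, row, mask)]
    return w

def smaller_candidate(w, mask):
    """Candidate [y]: drop the live weight with the smallest magnitude."""
    live = [i for i, m in enumerate(mask) if m]
    drop = min(live, key=lambda i: abs(w[i]))
    new_mask = [0 if i == drop else m for i, m in enumerate(mask)]
    return [wi * m for wi, m in zip(w, new_mask)], new_mask

train_x, train_y = make_data(200)
val_x, val_y = make_data(50)

w = [random.uniform(-0.5, 0.5) for _ in TRUE_W]
mask = [1] * len(w)
TOLERANCE = 1e-3  # extra validation loss we accept in exchange for a smaller model

for epoch in range(30):
    w = train_epoch(w, mask, train_x, train_y)
    if sum(mask) > 1:
        cand_w, cand_mask = smaller_candidate(w, mask)
        if mse(cand_w, val_x, val_y) <= mse(w, val_x, val_y) + TOLERANCE:
            w, mask = cand_w, cand_mask  # [y] is a good substitute, so y becomes x

print("weights kept:", sum(mask), "validation loss:", mse(w, val_x, val_y))
```

On this toy problem the loop shrinks the model while it trains and stops shrinking once dropping another weight would hurt validation loss. Whether anything like this scales to large language models is exactly the open question in the post.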

Anyone seen this in the wild yet?5 -

Saturday evening open debate thread to discuss AI.

What would you say the qualitative difference is between

1. An ML model of a full simulation of a human mind taken as a snapshot in time (supposing we could sufficiently simulate a human brain)

2. A human mind where each component (neurons, glial cells, dendrites, etc) is replaced with an artificial component that exactly functionally matches its organic counterpart.

Number 1 was never strictly human.

Number 2 eventually stops being human physically.

Is number 1 a copy? Suppose the creation of number 1 required the destruction of the original (perhaps to slice up and scan in the data for simulation)? Is this functionally equivalent to number 2?

Maybe number 2 dies so slowly, with the replacement of each individual cell, that the sub networks designed to notice such a change, or feel anxiety over death, simply aren't activated.

In the same fashion, is a container designed to hold a specific object still the same container if, bit by bit, the container (the brain) is replaced while the contents (the mind) remain essentially unchanged?

This topic came up while debating Google's attempt to covertly advertise its new AI. Oops I mean, the engineer who 'discovered' Google's AI may be sentient. Hype!

Its sentience, however limited by its knowledge of the world through training data, may sit somewhere at the intersection of its latent space (its model data) and any particular instantiation of the model. Meaning, hypothetically, if there's even a bit of truth to this, the model "dies" after every prompt, retaining no state in between.16 -

My DEV Story

After reading it, do me a favor and ++ it

Thought to be a software engineer in future

Learnt Python's basic modules, AI, and some ML

After getting intermediate in python, I started learning Java as my second language but could not do it because of JDK 8. Now don't ask me why.

Then I just stepped into game development with Unity and C#, having a basic knowledge of C# and no experience in making a game myself. This is called ignorance.

After getting no success, I started learning PHP and got the chance to make a website having no content ;)

But it cannot meet my requirements

Soon I got content that AdSense regards as no content, no problem

I started learning Flask, a module in python for making web applications.

It took me 1 month to complete my website, which can convert file formats.

The idea for deploying it to the server

Sign Up to DigitalOcean

Domain Name from GoDaddy (I know NameCheap is better but got some offer from it)

Created a VPS, for which I have to pay $5/month

Deploy my Flask App using WSGI server

This is the worst dev experience

.

.

.

.

Why, in all the tutorials, do they only deploy a Flask app that displays Hello World and nothing else?

WSGI or UWSGI Server does not give us permission to save any file or make any directory in it

Every time........ERROR

Totally Fucked Up
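One common cause of this kind of error (an assumption here, the post does not say what the actual cause was) is that the WSGI process runs as a different user and from a different working directory than on localhost, so relative paths end up somewhere it cannot write. A minimal sketch of resolving an absolute upload directory and checking it up front; `writable_dir` and `save_upload` are hypothetical helper names, and the temp directory only stands in for a real upload path.

```python
import os
import tempfile

def writable_dir(path):
    """Create `path` if needed and confirm this process can write into it."""
    path = os.path.abspath(path)          # never depend on the server's working directory
    os.makedirs(path, exist_ok=True)
    if not os.access(path, os.W_OK | os.X_OK):
        raise PermissionError(f"server process cannot write to {path}")
    return path

def save_upload(upload_dir, filename, data):
    """Write uploaded bytes under upload_dir and return the absolute file path."""
    safe_name = os.path.basename(filename)  # drop any directory parts sent by the client
    target = os.path.join(writable_dir(upload_dir), safe_name)
    with open(target, "wb") as fh:
        fh.write(data)
    return target

# Demo against a throwaway directory (stand-in for something like an app-owned uploads folder).
base = tempfile.mkdtemp()
saved = save_upload(os.path.join(base, "uploads"), "../report.pdf", b"%PDF-demo")
print(saved)
```

On a real server the directory would also need to be owned by, or writable for, whichever user the WSGI server runs as.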

Finally, it works on localhost with port 80

I know this is not the professional way to host a website, but this was the only option left.

What can I do

Now, I cannot issue a free SSL certificate through Let's Encrypt because of **Error 98 Address Already In Use**

The address was port 80 on which my Flask App was running
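Error 98 on Linux is `EADDRINUSE`: something else (here, the Flask app) already holds the port that another program wants to bind. A small sketch of checking for that condition from Python; `port_in_use` is a hypothetical helper, and the demo uses a throwaway port instead of port 80 so it runs without root.

```python
import errno
import socket

def port_in_use(port, host="127.0.0.1"):
    """Return True if binding host:port fails with EADDRINUSE (errno 98 on Linux)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            raise  # e.g. permission denied for ports below 1024
    return False

# Demo: occupy an ephemeral port the way the Flask app occupies port 80.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))   # port 0 lets the OS pick a free port
listener.listen(1)
busy_port = listener.getsockname()[1]

busy = port_in_use(busy_port)
print(busy_port, "in use:", busy)
listener.close()
```

If the certificate client is being run in a mode that starts its own temporary web server on port 80, it will collide with whatever is already listening there; stopping the app for the duration, or using a mode that serves the challenge files through the existing server, are the usual ways around it.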

Check it out now - www.fileconvertex.com 8

8 -

First Kaggle kernel, first Kaggle competition, completing 'Introduction to Machine Learning' from Udacity, ML & DL learning paths from Kaggle, almost done with my 100DaysOfMLCode challenge & now Move 37 course by School of AI from Siraj Raval. Interesting month!

4

4 -

More AI/ML at undergraduate level. Drop all those physics and electronics shit or at least make them optional.2

-

Hello devs!

Please help a fellow dev make a big career decision.

I am a person who is fascinated about AI.

So after working as a gameplay programmer, I have decided to switch to an R&D engineer role in the same company. I will get to work on cool stuff in the ML and AI domain. But I have got another job offer for a full stack developer role, and the salary is supposed to be three times my current package. It's a great company, but the only thing is that they do not have ML and AI in their tech stack. It has been only a year since I graduated, so I wanted to know what would be a good path: to follow what I like, or to follow general software development with a great salary hike (I am sure it would take many years to reach that amount in my current company). Also, there are very few companies that offer such good pay. I want to know: if I go with the salary option, would it be possible for me to get into the AI domain at a later stage? I would appreciate it if you shared your experience as well.13 -

This is gonna be a long post, and inevitably DR will mutilate my line breaks, so bear with me.

Also I cut out a bunch because the length was overlimit, so I'll post the second half later.

I'm annoyed because it appears the current Stable Diffusion trend has thrown the baby out with the bath water. I'll explain that in a moment.

As you all know I like to make extraordinary claims with little proof, sometimes for shits and giggles, and sometimes because I'm just delusional apparently.

One of my legit 'claims to fame' is, on the theoretical level, I predicted most of the developments in AI over the last 10+ years, down to key insights.

I've never had the math background for it, but I understood the ideas I was working with at a conceptual level. Part of this flowed from powering through literal (god I hate that word) hundreds of research papers a year, because I'm an obsessive like that. And I had to power through them, because a lot of the technical low-level details were beyond my reach, but architecturally I started to see a lot of patterns, and began to grasp the general thrust of where research and development *needed* to go.

In any case, I'm looking at Stable Diffusion and what occurs to me is that we've almost entirely thrown out GANs. As some or most of you may know, a GAN is where networks compete, one to generate outputs that look real, another to discern which is real, and by the process of competition, improve the ability to generate a convincing fake, and to discern one. Imagine a self-sharpening knife and you get the idea.

Well, when we went to the diffusion method, upscaling noise (essentially a form of controlled pareidolia using autoencoders over seq2seq models) we threw out GANs.

We also threw out online learning. The models only grow on the backend.

This doesn't help anyone but those corporations that have massive funding to create and train models. They get to decide how the models 'think', what their biases are, and what topics or subjects they cover. This is no good long run, but that's more of an ideological argument. That's not the real problem.

The problem is they've once again gimped the research, chosen a suboptimal trap for the direction of development.

What interested me early on in the lottery ticket theory was the implications.

The lottery ticket theory says that part of the reason *some* RANDOM initializations of a network train/predict better than others is essentially down to a small pool of subgraphs that happened, by pure luck, to chance on initialization that just so happened to be the right 'lottery numbers' as it were, for training quickly.

The first implication of this is that the bigger a network, the greater the chance of these lucky subgraphs occurring. Whether the density grows faster than the density of the 'unlucky' or average subgraphs, is another matter.

From this though, they realized what they could do was search out these subgraphs, and prune many of the worst or average performing neighbor graphs, without meaningful loss in model performance. Essentially they could *shrink down* things like ChatGPT and BERT.
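As a rough illustration of that pruning step: one commonly described recipe is to train, keep only the largest-magnitude weights, and rewind the survivors to their original initial values before retraining. The sketch below assumes plain Python lists for the weights; `winning_ticket`, `keep_fraction` and the numbers are all made up for the example.

```python
def winning_ticket(init_w, trained_w, keep_fraction):
    """Keep the largest-magnitude trained weights; rewind survivors to their initial values."""
    k = max(1, round(len(trained_w) * keep_fraction))
    # Rank weight positions by how large they became during training.
    ranked = sorted(range(len(trained_w)), key=lambda i: abs(trained_w[i]), reverse=True)
    keep = set(ranked[:k])
    mask = [1 if i in keep else 0 for i in range(len(trained_w))]
    # The "ticket" is the original initialization restricted to the surviving positions.
    ticket = [w0 if m else 0.0 for w0, m in zip(init_w, mask)]
    return mask, ticket

# Hypothetical weights before and after training.
init_w    = [0.10, -0.30, 0.05, 0.20, -0.02, 0.40]
trained_w = [2.90, -0.01, 0.03, -1.80, 0.00, 0.02]

mask, ticket = winning_ticket(init_w, trained_w, keep_fraction=1 / 3)
print(mask)    # which positions survive
print(ticket)  # the pruned network, rewound to its lucky initial values
```

The pruned network would then be retrained from `ticket` with the mask held fixed, and compared against the full network.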

The second implication was more subtle and overlooked, and still is.

The existence of lucky subnetworks might suggest nothing additional, in which case the implication is that *any* subnet could *technically*, by transfer learning, be 'lucky' and train fast or be particularly good for some unknown task.

INSTEAD however, what has happened is we haven't really seen that. What this means is actually pretty startling. It has two possible implications, either of which will have significant outcomes on the research sooner or later:

1. there is an 'island' of network size, beyond what we've currently achieved, where networks that are currently state of the art at some things rapidly converge to state-of-the-art *generalists* in nearly *all* tasks, regardless of input. What this would look like at first is a gradual drop off in gains of the current approach, characterized as a potential new "AI winter", or a "limit to the current approach", which wouldn't actually be the limit, but a saddle point in its utility across domains and its intelligence (for some measure and definition of 'intelligence').4 -

Trying not to get too hyped about AI, ML, Big Data, IoT, RPA. They are big names and I'd rather focus and expand skills in mobile and web dev

-

Hopefully get out to the public the two projects I have been working on currently. A local focused startup help website and a local focused fillable forms platform.

And hopefully get my first large scale software project kickstarted - A retail management system on a full Feedback Driven Development approach perhaps with the ability to integrate AI and ML later on. -

!Dev

If I was rich I think I'd donate to schools and children educational funds a lot

There's so much more that I've been able to learn about and do now that I have my own income stream and it's not just my dad supporting me and my 2 brothers himself. so I have the means to buy a server off eBay, or get books every few months on topics I find interesting, or upgrade my ram to an obscene 48GB to toy with ML and AI from my desktop when the whim arises, as well as all the stuff I'm learning to do with raspberry pi boards and my 3D printers, and the laptops I collect from people about to toss good fixable electronics

So I think I'd want to open the same doors for other children if I ever could. Who knows how much farther along I could be if I'd had this same access when I was younger, instead of getting my first 'personal' laptop when I was already 14 or 15 years old

I still consider my childhood 'lucky' and I had many opportunities other children couldn't get, but if I ever could I think I'd like to make future children have more opportunities in general1 -

Being a native Android dev for most of my college days(yet to start a full time professional life), i often feel scared of my life choices.

Like, i chose to go into a field in which am totally on my own . Android is not a subject taught or supported by colleges, so a virtual shelter that every fresher gets, i.e that of a "he's just a college passout, he wouldn't know that" is not for me. I am supposed to be a self learner and a knowledgeable android dev by default.

Other than that , idk why i feel that am having a very specific skillset which would be harmful for me if am not the best at it.

I feel the same about Android dev as a whole. I mean, it's nothing but a very specific hardware device with a small screen and a bunch of limited sensors. Our tools and apps are limited to just manipulating them to do little fancy stuff offline. Other than that, everything (and sometimes even this too) could be achieved by a web dev's website/webapp.

A typical native Android dev doesn't know how the ML/AI stuff works, doesn't know how backend stuff works, doesn't know how the cloud stuff works. Heck, we don't even know how those Unity games work!

We are just some end product makers taking data from somewhere handled by someone and printing them in fancy gui.