Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

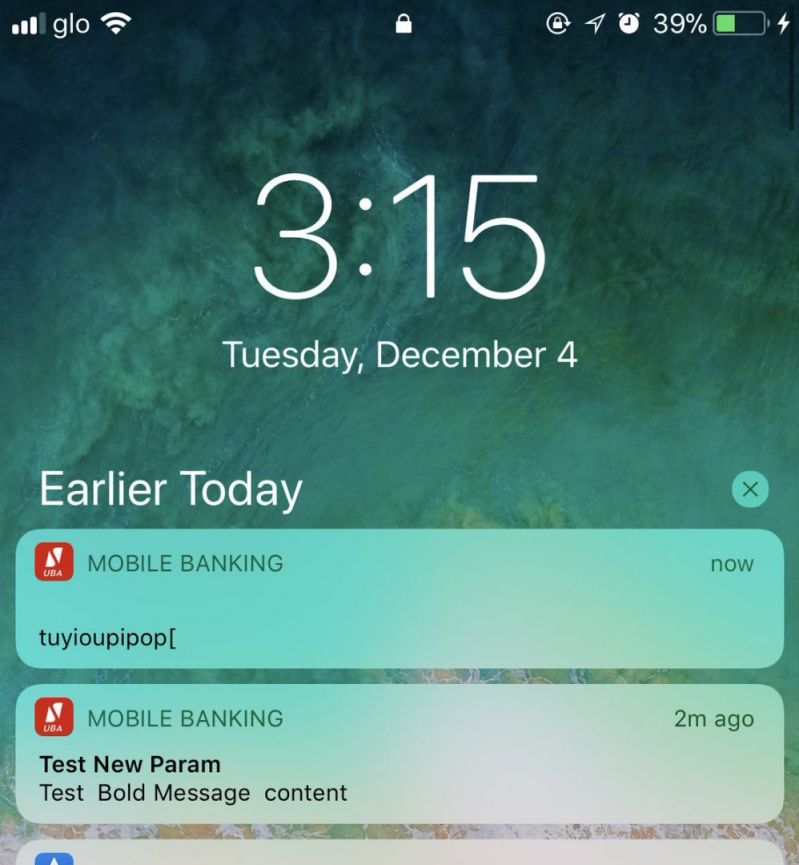

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Search - "testing in production"

I worked with a good dev at one of my previous jobs, but one of his faults was that he was a bit scattered and would sometimes forget things.

The story goes that one day we had this massive bug on our web app, and a large portion of our dev team was trying to figure it out. We thought we had narrowed the issue down to a very specific part of the code, but something weird happened. No matter how often we looked at the piece of code where we all knew the problem had to be, no one could see anything wrong with it. And there wasn't anything even close to explaining how we could be seeing the issue we were seeing in production.

We spent hours going through this. It was driving everyone crazy. All of a sudden, my co-worker (the one referenced above) gasps “oh shit.” And we’re all like, what’s up? He proceeds to tell us that he thinks he might have been testing a line of code on one of our prod servers, left it in there by accident and never committed it into the actual codebase. Just to explain this: we had a great deploy process at this company, but every so often a dev would need to test something quickly on a prod machine, so we’d allow it as long as they did it and removed it quickly. It was meant only for a select few tasks that required a prod server, and was only ever supposed to be a single line to test something. Bad practice, but it was fine because everyone had been extremely careful with it.

Until this guy came along. After he said he thought he might have left a line change in the code on a prod server, we had to manually go into 12 web servers and check. Eventually, we found the one that had the change, and finally the issue at hand made sense. We never thought for a second that the committed code in the git repo we were looking at would be inaccurate.

Needless to say, he was never allowed to touch code on a prod server ever again.

Oh, man, I just realized I haven't ranted one of my best stories on here!

So, here goes!

A few years back the company I work for was contacted by an older client regarding a new project.

The guy was now pitching to build the website for the Parliament of another country (not gonna name it, NDAs and stuff) and was planning on outsourcing the development, as he had no team and was only aiming to take care of the client service/project management side of the project.

Out of principle (and also to preserve our mental integrity), we have purposely avoided working with government bodies of any kind, in any country, but he was a friend of our CEO and pleaded until we signed on board.

Now, the project itself was way bigger than we expected, as they wanted more of an internal CRM, centralized document archive, event management, internal planning, multi-interface, role-based-access-restricted monster of an administration interface, complete with a regular user website, also packed with all kinds of features, dashboards and so on.

Long story short, a lot bigger than what we were expecting based on the initial brief.

The development period was hell. New features were coming in on a weekly basis. Already implemented functionality was constantly being changed or redefined. None of our requests for clarifications, materials or information were ever answered on time.

They also somehow bullied the guy who brought us the project into including the data migration from the old website into the new one we were building, and we somehow ended up having to extract meaningful, formatted, sanitized content by parsing static HTML files and connecting them to downloadable files (almost every page on the old website had files available to download) that we also needed to include in a sane way.

Now, don't think the files were simple URL paths we could trace to a folder/file path, oh no!!! The links were some form of hash combination that had to be exploded and tested against some kind of database relationship tables that only had hashed indexes relating to other tables, which also only had hashed indexes relating to some other tables that kept a database of the website pages' HTML file naming. So what we had to do was identify the files based on a combination of hashed indexes and re-hashed HTML file names that in the end would give us a filename for a real file, which we then had to search for inside a list of over 20 folders not related to one another.

So we did this. We created a script that processed the hell out of over 10,000 HTML files, database entries and files, and re-indexed and renamed all this shit into a meaningful database of sane data and well-organized files.

So, with this we were nearing the finish line for the project, which by now had exceeded the estimated time more than twice over.

We test everything, retest it all again for good measure, pack everything up for deployment, simulate on a staging environment, give the final client access to the staging version, get them to accept that all requirements are met, finish writing the documentation for the codebase, write a detailed deployment procedure, include some automation and testing tools (also for good measure), and recommend a production setup, hardware specs, software versions, server-side optimizations like caching and load balancing, and everything else we could think of that would ever be useful, all with more documentation and instructions.

As the project was built on PHP/MySQL (as requested), we recommended a Linux environment for production. Oh, I forgot to tell you that over the development period they kept asking us to also include steps for Windows procedures along with our regular documentation. It was a bit strange, but we added it in there just so we could finish and close the damn project.

So, we send them all the above and go get drunk as fuck in celebration of getting rid of them once and for all...

Next day: hung over, I get to the office, open my laptop and see one new email. I only had the one new mail, so I open it to see what it's about.

Lo and behold! The fuckers over in the other country that called themselves "IT guys", and were the ones making all the changes and additions to our requirements, were not capable enough to follow step by step instructions in order to deploy the project on their servers!!!

[Continues in the comments]

I just sent an automated email titled "Gary is a Dinosaur!" to a lot of humourless clients because the ancient application I was testing assumed I was in the production environment. 🙃

Lesson to self: stop using bogus names in testing.

Still, it could've been a lot worse... 😂

Doot doot.

My day: Eight lines of refactoring around a 10-character fix for a minor production issue. Some tests. Lots of bloody phone calls and conference calls filled with me laughing and getting talked over. Why? Read on.

My boss's day: Trying very very hard to pin random shit on me (and failing because I'm awesome and fuck him). Six hours of drama and freaking out and chewing and yelling that the whole system is broken because of that minor issue. No reading, lots of misunderstanding, lots of panic. Three-way called me specifically to bitch out another coworker in front of me. (Coworker wasn't really in the wrong.) Called a contractor to his house for testing. Finally learned that everything works perfectly in QA (duh, I fixed it hours ago). Desperately waited for me to push to prod. Didn't care enough to do production tests afterwards.

My day afterwards: hey, this Cloudinary transform feature sounds fun! Oh look, I'm done already. Boo. Ask boss for update. Tests still aren't finished. Okay, whatever. Time for bed.

what a joke.

Oh, I talked to the accountant after all of this bullshit happened. Apparently everyone that has quit in the last six years has done so specifically because of the boss. Every. single. person.

I told him it was going to happen again.

I also told him the boss is a druggie with a taste for psychedelics. (It came up in conversation. Absolutely true, too.) It's hilarious because the company lawyer is the accountant's brother.

So stupid.

Hey, Root? How do you test your slow query ticket, again? I didn't bother reading the giant green "Testing notes:" box on the ticket. Yeah, could you explain it while I don't bother to listen and talk over you? Thanks.

And later:

Hey Root. I'm the DBA. Could you explain exactly what you're doing in this ticket, because I can't understand it. What are these new columns? Where is the new query? What are you doing? And why? Oh, the ticket? Yeah, I didn't bother to read it. There was too much text filled with things like implementation details, query optimization findings, overall benchmarking results, the purpose of the new columns, and I just couldn't care enough to read any of that. Yeah, I also don't know how to find the query it's running now. Yep, I have complete access to the console and DB and query log. Still can't figure it out.

And later:

Hey Root. We pulled your urgent fix ticket from the release. You know, the one that SysOps and Data and even execs have been demanding? The one you finished three months ago? Yep, the problem is still taking down production every week or so, but we just can't verify that your fix is good enough. Even though the changes are pretty minimal, you've said it's 8x faster, and you provided benchmark findings, we just ... don't know how to get the query it's running out of the code. Or how to check the query logs to find it. So. We just don't know if it's good enough.

Also, we goofed up when deploying and the testing database is gone, so now we can't test it since there are no records. Never mind that you provided snippets to remedy exactly this scenario in the ticket description you wrote three months ago.

And later:

Hey Root: Why did you take so long on this ticket? It has sat for so long now that someone else filed a ticket for it, with investigation findings. You know it's bringing down production, and it's kind of urgent. Maybe you should have prioritized it more, or written up better notes. You really need to communicate better. This is why we can't trust you to get things out.

*twitchy smile*

Why are job postings so bad?

Like, really. Why?

Here are four I found today, plus an interview with a trainwreck from last week.

(And these aren't even the worst I've found lately!)

------

Ridiculous job posting #1:

* 5 years React and React Native experience -- the initial release of React was in May 2013, apparently. ~5.7 years ago. React Native only came out in March 2015.

* Masters degree in computer science.

* Write clean, maintainable code with tests.

* Be social and outgoing.

So: you must have either worked at Facebook or adopted and committed to both React and React Native basically immediately after release. You must also be in academia (with a masters!), and write clean and maintainable code, which... basically doesn't happen in academia. And on top of (and really: despite) all of this, you must also be a social butterfly! Good luck ~

------

Ridiculous job posting #2:

* "We use Ruby on Rails"

* A few sentences later... "we love functional programming and write only functional code!"

Cue Inigo Montoya.

------

Ridiculous job posting #3:

* 100% remote! Work from anywhere, any time zone!

* and following that: You must have at least 4 work hours overlap with your coworkers per day.

* two company-wide meetups per quarter! In fancy places like Peru and Tibet! ... TWO PER QUARTER!?

Let me paraphrase: "We like the entire team being remote, together."

------

Ridiculous job posting #4:

* Actual title: "Developer (noun): Superhero poised to change the world (apply within)"

* Actual excerpt: "We know that headhunters are already beating down your door. All we want is the opportunity to earn our right to keep you every single day."

* Actual excerpt: "But alas. A dark and evil power is upon us. And this… ...is where you enter the story. You will be the Superman who is called upon to hammer the villains back into the abyss from whence they came."

I had already applied to this company some time before (...surprisingly...) and found that the founder/boss is both an ex-cowboy dev and... more than a bit of a loon. If that last part isn't obvious already? Sheesh. He should go write bad fantasy metal lyrics instead.

------

Ridiculous interview:

* Service offered for free to customers

* PHP fanboy angrily asking only PHP questions despite the stack (Node+Vue) not even freaking including PHP! To be fair, he didn't know anything but PHP... so why (and how) is he working there?

* Actual admission: No testing suite, CI, or QA in place

* Actual admission: Testing sometimes happens in production due to tight deadlines

* Actual admission: Company serves ads and sells personally-identifiable customer information (with affiliate royalties!) to cover expenses

* Actual admission: Not looking for other monetization strategies; simply trying to scale their current break-even approach.

------

I find more of these every time I look. It's insane.

Why can't people be sane and at least semi-intelligent?

Worst dev team failure I've experienced?

One of several.

Around 2012, a team of devs was tasked to convert an ASPX service to WCF that had one responsibility: returning product data (description, price, availability, etc.; simple stuff).

No complex searching, just pass the ID, you get the response.

I was the original developer of the ASPX service, whose API took an XML request and returned an XML response. The 'powers-that-be' decided anything XML was evil and had to be purged from the planet. If this thought bubble popped up over your head, "Wait a sec... doesn't WCF transmit everything via SOAP, which is XML?", yes, but in their minds SOAP wasn't XML. That's not the worst WTF of this story.

The team (3 developers, 2 DBAs, network administrators and several web developers) worked on the conversion for about 9 months using the Waterfall method (3~5 months of that was mostly meetings and very basic prototyping) and a test-first approach (their own flavor of TDD). The 'go live' was scheduled for 3:00AM, and nearly the entire department (including the department VP) was required to be on-site and available to help troubleshoot any system issues.

3:00AM - Teams start their deployments

3:05AM - Thousands and thousands of errors from all kinds of sources (web exceptions, database exceptions, server exceptions, etc), site goes down, teams roll everything back.

3:30AM - The primary developer remembered he had made a last-minute change to a stored procedure parameter that hadn't been pushed to production, which caused a side effect across several layers of their stack.

4:00AM - The developer found his bug, but the manager decided it would be better if everyone went home and took a fresh look at the problem at 8:00AM (yes, he expected everyone to be back in the office at 8:00AM).

About a month later, the team scheduled another 3:00AM deployment (VP was present again), confident that introducing mocking into their testing pipeline would fix any database related errors.

3:00AM - Team starts their deployments.

3:30AM - No major errors, things seem to be going well. High fives, cheers... manager tells everyone to head home.

3:35AM - Site crashes, like white page, no response from the servers kind of crash. Resetting IIS on the servers works, but only for around 10 minutes or so.

4:00AM - Team rolls back, manager is clearly pissed at this point, "Nobody is going fucking home until we figure this out!!"

6:00AM - Diagnostics found the WCF client was causing the server to run out of resources, with a mix of clogging up server bandwidth, and a sprinkle of N+1 scaling problem. Manager lets everyone go home, but be back in the office at 8:00AM to develop a plan so this *never* happens again.

About 2 months later, a 'real' development+integration environment was set up (previously, any and all integration tests ran on developer machines), and the team scheduled a 6:00AM deployment, but at a much, much smaller scale, with just the 3 development team members.

Why? Because the manager had 'frozen' changes to the ASPX service, and the web team still needed various enhancements, so they bypassed the service (not using the ASPX service at all) and wrote their own SQL scripts that hit the database directly and used AppFabric/Velocity caching to allow the site to scale. There were only a couple of client applications using the ASPX service that needed to be converted, so deploying at 6:00AM gave everyone a couple of hours before users got into the office. Service deployed, worked like a champ.

A week later the VP schedules a celebration for the successful migration to WCF. Pizza, cake, the works. The 3 team members received awards (and an envelope, which probably held some $$$) and the entire team received a custom Benchmade pocket knife to remember this project's success. Myself and several others just stared at each other, not knowing what to say.

Later, my manager pulls several of us into a conference room.

Me: "What the hell? This is one of the biggest failures I've been a part of. We got rewarded for thousands and thousands of dollars of wasted time."

<others expressed the same, with expletive sentiments>

Mgr: "I know... I know... but that's the story we have to stick with. If the company realizes what a fucking mess this is, we could all be fired."

Me: "What?!! All of us?!"

Mgr: "Well, shit rolls downhill. Dept-Mgr-John is ready to fire anyone he feels could make him look bad, which is why I pulled you guys in here. The other sheep out there will go along with anything he says and will be more than happy to throw you under the bus. Keep your head down until this blows over. Say nothing."

My first testing job in the industry. Quite the rollercoaster.

I had found this neat little online service with a community. I signed up for an account and participated. I sent in a lot of bug reports. One of the community supervisors sent me a message saying that most things in FogBugz had my username all over them.

After a year, I got cocky and decided to try SQL injection. In a production environment. What can I say. I was young, not bright, and overly curious. I was never malicious; I never damaged data, exposed sensitive data or borked services.

I reported it.

Not long after, I got phone calls. I was pretty sure I was getting charged with something.

I was offered a job.

Three months into the job, they asked if I wanted to do Python and work with the automators. I said I don't know what that is but sure.

They hired me a private instructor for a week to learn the basics, then flew me to the other side of the world for two weeks to work directly with the automation team to learn how they do it.

It was a pretty exciting era in my life and my dream job.

Every so often I remember that the code I wrote is running in production and real customers are using it, and I feel a little bit sick.

Sex talk between programmers.

She: I'm a virgin.

He: Don't worry. They call me the virginslayer007.

She: Oh! So how many virgins have you slayed till now?

He: That would be ONE in a few minutes.

She: So u r also a virgin then..

He: Don't worry. I watched so many video tutorials. We just have to do exactly as they did. Best thing is that it can be done both for testing and production purposes.

She: Let's stick to testing purposes for now.

Client: "I did not receive the email that should be sent after that event. Please fix."

Me:

* Checks code - ok

* Tests feature locally - ok

* Tests feature in production - ok

* checks values in database - ok

* 2 hours wasted - ok

"Please help me dear CTO, idk what else I could check or how I should even respond to this."

CTO: "hmm, the client's account uses an administrative email address for testing. Let me just check if it is in the mailbox."

*checks* "Yeah, that's the email you're looking for, right?"

Me: *experiences relief, anger, blood lust and disappointment at the same time* "Could you please respond to the client for me, I need a break. Thanks"

In my current work, I have two systems to work on (let's name em Systems A and B). Both basically do the same thing; both allow users to book facilities available to them.

System A is already in production. My job is to fix any bugs that come up on said system. System B is an improved version that they wanted me to develop. This would follow a different framework etc. I am already halfway through this system.

Now, here's the fucked up part. The code for system A is a massive clusterfuck. It has unused commented-out code dating back to ancient times when men had the brains of apes.

And don't get me started on the fucking logic. One part of the code was supposed to retrieve and display the timeslots available for a chosen facility. The code to do that alone takes up 500++ fucking lines, filled with ajax commands, html manipulation and commented-out, unused code... AND THAT'S JUST THE FRONTEND!

The fucking backend was not a problem of smelly code anymore. Nope. It was like a programmer had code diarrhea and shat his backend code all over the project. If I had a pin board, I would have made a crazy wall just to understand what some fucknut was trying to achieve.

Anyway, my supervisor told me to fix some bugs on System A. Knowing how the code was, I told her that I could refactor the code. Since I've already achieved that function on System B, with shorter and cleaner code, I could just copy that and use it on System A. But nope. She SPECIFICALLY told me to just "do whatever to fix the bugs. I don't want to waste time on System A." Okay. Makes sense to me. Whatever. I didn't wanna fuck my head up looking through that mess of a cesspool. So, I came up with a few hacks, not thinking of clean code, and fixed whatever bugs there were. I then just pushed to the repo (after testing, of course).

This bloody morning, supervisor came in and gave me more bugs to fix. When I thought she was done, she said "Hey. I saw the fix you made to the system. The bugs are fixed but the retrieval of the timeslots is now pretty slow. Could you see what is the problem?"

Slow.. She said that it was slow. And asked if I could fix it. I had already told her what the problem was, and she did not want me to waste time on it. But she wants me to fix it. WHAT THE FUCK IS WRONG IN HER BLOODY HEAD! I SWEAR TO GOD... UGHHHHH I swear I was already waterboarding her in my head. YOU WANT FAST?? How bout fucking allowing me to refactor the code?? Fucking shit head. I think I should take up yoga.

Some years back I was working in a project that essentially dealt with all things related to foreigners and foreign affairs in Switzerland. You could manage entry visas, work permits, citizenship, international warrants, Interpol requests, etc.

One of the test managers (from client side - i.e. the government) was once manually "testing" and mixed up the production and test instance, to both of which he was logged in at the time.

The test case then ended up setting up an entry ban against himself, as he used his own name for testing...

Next time he returned from vacation the border control at the airport were like "Uhm, Sir, we can't let you into the country. Please come with us." :D :D

(He managed to clear that up in the end. I dare say, though, that he learned his lesson.)

FUCKING TELEGRAM FUCK YOU STAY IN YOUR FUCKING API DOCUMENTATION AND STOP FUCKING TESTING YOUR SHIT ON A PRODUCTION SYSTEM WHY WOULD YOU DO THAT FUCK OFF WHY AM I EVEN DEVELOPING SHIT FOR YOUR PLATFORM ANYMORE WHEN FOLLOWING YOUR DOCUMENTATION LEADS TO FUCKING ERRORS AND WE HAVE TO DECOMPILE AND REVERSE ENGINEER YOUR FUCKING "OPEN SOURCE" APPS BECAUSE YOU DONT EVEN BOTHER TO FUCKING UPDATE THE SOURCE CODE ONCE A YEAR WHAT THE FUCK

Thank you for your attention

So I took on a fairly big project and poured my heart and soul into it; it was the biggest thing I'd done yet. I kept on sending betas to the customer after each change for review! Kept on insisting that they review it, and the answer was always "this looks amazing, keep doing what you're doing"! Then I finished and pushed everything to production.

They didn't use it for nearly 6 months! And then out of the blue they call me saying that half of the app is wrong.. WTF? Where was this information during testing! I informed them that the changes would take some time since I need to do migrations and change the whole database schema.

To which they replied, "but you already finished it once, won't changing things make it easier? We shouldn't pay for your mistakes"

I don't know how I handled that, but they should be thankful they were halfway across the country 😠😠😠😠

Be me, new dev on a team. Taking a look through source code to get up to speed.

Dev: **thinking to self** why is there no package lock.. let me bring this up to boss man

Dev: hey boss man, you’ve got no package lock, did we forget to commit it?

Manager: no I don’t like package locks.

Dev: ...why?

Manager: they fuck up computer. The project never ran with a package lock.

Dev: ..how will you make sure that every dev has the same packages while developing?

Manager: don’t worry, I’ve done this before, we haven’t had any issues.

**a couple of weeks go by**

Dev: pushes code

Manager: hey your feature is not working on my machine

Dev: it’s working on mine, and the dev servers. Let’s take a look and see

**finds out he deletes his package lock every time he does npm install, so he literally has the latest versions of like 50 packages with no testing**

Dev: well you see, you have some packages here that updated and broke some of the features.

Manager: >=|, fix it.

Dev: commit a working package lock so we’re all on the same page.

Manager: just set the package version to whatever works.

Dev: okay

**more weeks go by**

Manager: why are we having so many issues between devs, why are things working on some computers and not others??? We can’t be having this it’s wasting time.

Dev: **takes a look at everyone’s packages** we all have different packages.

Manager: that’s it, no one can use Mac computers. You must use these Windows computers, and you must install npm v6.0 and node v15.11. Everyone must have the same system and software installed to guarantee we’re all on the same page

Dev: so can we also commit package lock so we’re all having the same packages as well?

Manager: No, package locks don’t work.

**few days go by**

Manager: GUYS WHY IS THE CODE DEPLOYING TO PRODUCTION NOT WORKING. IT WAS WORKING IN DEV

DEV: **looks at packages**, when the project was built on dev on 9/1, package x was on version 1.1; when it was approved and moved to prod on 9/3, package x was on version 1.2, which included a change that broke our code.

Manager: CHANGE THE DEPLOYMENT SCRIPTS THEN. MAKE PROD RSYNC NODE_MODULES WITH DEV

Dev: okay

Manager: just trust me, I’ve been doing this for years

Who the fuck put this man in charge.
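The drift in this story (package x resolving to 1.1 on 9/1 and to 1.2 on 9/3) is exactly what a committed package-lock.json is meant to stop. Below is a minimal Python sketch of that effect, not npm's actual resolver: it assumes a hypothetical registry with made-up publish dates, a simplified caret (`^`) range rule that ignores 0.x versions and pre-releases, and models the lock file as a single pinned version.

```python
from __future__ import annotations

from datetime import date

# Hypothetical publish history for "package x" (version -> publish date).
REGISTRY = {
    "1.0.0": date(2021, 8, 1),
    "1.1.0": date(2021, 8, 20),
    "1.2.0": date(2021, 9, 2),  # hypothetical release that broke the code
}


def parse(version: str) -> tuple[int, int, int]:
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def satisfies_caret(version: str, base: str) -> bool:
    """Simplified npm caret rule for base >= 1.0.0: same major, not older than base."""
    v, b = parse(version), parse(base)
    return v[0] == b[0] and v >= b


def resolve(caret_base: str, on: date, locked: str | None = None) -> str:
    """Return the version an install of "^caret_base" would pick on a given day."""
    if locked is not None:
        return locked  # the lock file pins the exact version, ignoring newer releases
    candidates = [
        v for v, published in REGISTRY.items()
        if published <= on and satisfies_caret(v, caret_base)
    ]
    return max(candidates, key=parse)  # without a lock, the newest match wins


print(resolve("1.1.0", date(2021, 9, 1)))                    # 1.1.0 (dev build)
print(resolve("1.1.0", date(2021, 9, 3)))                    # 1.2.0 (prod build)
print(resolve("1.1.0", date(2021, 9, 3), locked="1.1.0"))    # 1.1.0 (pinned)
```

The same `^1.1.0` range gives different trees two days apart, while the pinned install gives the same one both days, which is the consistency the manager later tried to get by rsyncing `node_modules` from dev.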

Rather than singling out one person, I wanna present what I see as incompetent/stupid/ignorant:

- no will to learn

- failure to follow the very specific instructions & later asking for help when they FUBAR sth & not even knowing what they did to fuck up in the first place

- asking how to solve stuff, then ignoring the suggestions & doing sth totally against recommendations

- failure to remember most basic stuff, especially if not writing it down to look at later when needed

- failure to check logs & 'google' stuff before asking why something isn't working the way they want it

- after two weeks, asking me how feature xy works, mind you they coded it, not me

- asking me why they did something in a specific way - WTF, am I a mind reader?! Who designed that crap?! Me or you?!!

- being passive/aggressive & snarky when told to do something or being asked why isn't it done already

- not testing their shit properly

- not making backups when upgrading (production) servers

- not checking the input value, no validation.. even after many many debacles on production with null ref exceptions

- failure to admit they fucked up

- not learning from (their) mistakes

So today was the worst day of my whole (just started) career.

We have a huge client like 700k users. Two weeks ago we migrated all their services to our aws infrastructure. I basically did most of the work because I'm the most skilled in it (not sure anymore).

Today I discovered:

- Mail cron was configured the wrong way, so 3000 emails were waiting to be sent.

- The Elasticsearch service wasn't whitelisted yet, so it didn't work for two weeks.

- The cron which syncs data between the production db and the testing db only partly worked.

Just fucking end me. Makes me wonder what other things are broken. I still have a lot to learn... And I might have fucked their trust in me for a bit.

[3:18 AM] Me: Heya team, I fixed X, tested it and pushed to production. Lemme know what you think when you wake up.

[6:30 AM] Me: Yo, I just checked X and everything is peachy. Let me know if it works on your end.

[9:14] Colleague A: Whoop! Yeah! Awesome!

[9:15] Boss: Nice.

[9:30] A: X doesn't work for me.

Me: OK, did you do M as I told you.

A: yes

Me: *checks logs and database, finds no trace of M*

Me: A, you sure you did M on production? Send me a screenshot plz.

A: yeah, I'm sure it's on production.

Me: *opens screenshot, gets slapped in the face by https://staging.app.xyz*

Me: A, that's staging, you need to test it on production.

A: right, OK.

[10:46] A: works, yeah! Awesome, whoop!

[10:47] Boss: Nice.

Me: Ok! A, thanks for testing...

Me: *... and wasting my time*.

[10:47:23] Boss: Yo, did you fix Y?

Courageous/snarky me: *Hey boss, see, I knew you'd ask this right after I fixed X, knowing that I could not have done anything else while troubleshooting A's testing snafu since you said 'Nice' twice. So, yesterday, I cloned myself and put me to work in parallel on Y in order to fulfill your unreasonable expectations come morning.*

Real me: No, that's planned for tomorrow.

"Everybody has a testing environment. Some people are lucky enough to have a totally separate environment to run production in."

-Unknown

Old boss story. This guy was nice but a terrible boss. Also relevant, he has a background in IT so should know better.

Him: So when you wanna check a password is correct you just unhash it in the database?

Me: *facepalm*

Me: Hey we should be doing unit and integration testing at a minimum to lower bugs.

Him: We don't need those, we're not a bank. If a problem comes up we just fix it and push to production.

(A while later)

Him (in email): Why do we keep getting bugs reported? Don't you devs test your code?

I was mildly annoyed at that one.

Him: We're always over budget on projects, how can we fix this.

Me: What if we increase our quotes.(technically there are other ways as well but not really possible at that time)

Him: We can't do that, clients won't want to pay.

Me: *finishing off my handover as I'm leaving for a new job*

Him: Wow, you do a lot of work
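On the "unhash it" question: a password hash is one-way, so a login check re-hashes the submitted password with the stored salt and compares the results; nothing is ever unhashed. A minimal sketch using Python's standard library (PBKDF2-HMAC-SHA256) follows; the salt length and iteration count are illustrative assumptions, and dedicated schemes such as bcrypt or Argon2 are common alternatives.

```python
from __future__ import annotations

import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative work factor; tune for your hardware


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key); store both, never the plain password."""
    salt = os.urandom(16)  # random per-user salt
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Re-derive the key from the candidate password and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored_key)


salt, key = hash_password("hunter2")
print(verify_password("hunter2", salt, key))  # True
print(verify_password("hunter3", salt, key))  # False
```

`hmac.compare_digest` is used instead of `==` so the comparison time does not leak how many leading bytes matched.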

STOP. TESTING. IN. PRODUCTION.

STOP. TESTING. IN. PRODUCTION.

STOP. TESTING. IN. PRODUCTION.

STOP. TESTING. IN. PRODUCTION.

WordPress does not suck. If you know how to work it.

Lately I've seen so many rants on WP. My rant is that it is not 100% WP's fault. Yes, there are serious structural problems in WP, but that does not mean you cannot create top-notch websites.

At my work we create those top-notch WP sites. Blazing fast and manageable. Seriously, we got a customer request to make the site slower because it loaded pages too fast (i.e. you could hardly see that you'd switched pages).

- We ONLY use a strict set of plugins that we consider stable and useful.

- We manage all plugins with Composer (and our own Satis repository).

- We use custom themes & classes. Our code is MVC with Twig.

- Our track record: 0 hacked websites in the past 2 years.

- Everything runs stably 24/7.

- We have OTAP (development, testing, acceptance & production environments).

- We patch really fast.

These are sites starting at $15k, and we know our shit.

Don't hate on WP if you have no clue what you are doing yourself.

That is my rant.

Manager: What are the estimates for tasks A and B?

Me: Task A: 3 months for 1 person, and task B: 2 months.

Manager: ok, u can have a fresher and finish task A, and u urself can pick task B; u can train him and bring him up to speed...

Me: (trying to recalibrate my estimations)...

Manager: oh and u have 3 weeks to deliver production ready scalable quality code with junits, documentation and testing done...

Me: then why the fuck did u bother for the estimates?

Manager: Oh, that is just for process compliance... I don't want any trouble in the audit...

Me: Hey boss, if you ever need someone to get into doing DevOps related tasks for the team, I'd be more than happy to take that on.

Boss: We don't really need any dedicated person to work on that, but if we do in the future, I'll let you know.

Fast forward a few days: I am now unable to deploy bug fixes to our testing environment, now in the cloud, because all access has been blocked for everyone except the two numbskulls who thought it'd be a great idea to move EVERYTHING over (apps, configuration manager, proxies, etc) first.

Oh, and this bug is affecting production.


So, a few years ago I was working at a small state government department. After we had suffered a major development infrastructure outage (another story), I was so outspoken about what a shitty job the infrastructure vendor was doing that the IT Director put me in charge of managing the environment and the vendor, even though I was actually a software architect.

Anyway, a year later, we get a new project manager, and she decides that she needs to bring in a new team of contract developers because she doesn't trust us incumbents.

They develop a new application, but won't use our test team, insisting that their "BA" can do the testing themselves.

Finally it goes into production.

And crashes on Day 1. And keeps crashing.

"It's the infrastructure!" goes the cry from her office. "Do something about it!"

I check the logs, can find nothing wrong, just this application keeps crashing.

I and another dev ask for the source code so that we can see if we can help find their bug, but we are told in no uncertain terms that there is no bug, they don't need any help, and we must focus on fixing the hardware issue.

After a couple of days of this, she called a meeting with all the PMs, the whole of the other project team, and me and my mate. And she started laying into us about how we were letting them all down.

We insist that they have a bug, they insist that they can't have a bug because "it's been tested".

This ends up in a shouting match when my mate lost his cool with her.

So, we went back to our desks, got the exe and the pdb files (yes, they had published debug info to production), and reverse engineered it back to C# source, and then started looking through it.

Around midnight, we spotted the bug.

We took it to them the next morning, and it was like "Oh". When we asked how they could have tested it, they said, ah, well, we didn't actually test that function as we didn't think it would be used much....

What happened after that?

Not a happy ending. Six months later the IT Director retires, she gets shoehorned in as the new IT Director, and then she starts a bullying campaign against the two of us until we quit.

I just blocked some of the top management from connecting to our WLAN because I was testing a verification feature for said WiFi that kicks all devices not listed in the DB.

It happened while my boss/senior/guidance was trying to show them the advantages of a centrally managed infrastructure.

He covered my ass well and tried to sell it to them as proof of a secure solution, that unknown devices couldn't log in.

I feel like human trash right now, but that's what you get for testing in production.

My worst devSin was testing in production once because I was too lazy to set up the dev environment locally.

Never will I make that mistake again!

So my department is "integrating CI/CD"

Right now, there's a very anti-automation culture in the deployment process, and out of our many applications, almost none have automated testing. My group is the only one that uses feature branching, and one of the few groups that uses branching at all beyond "master, dev".

So yeah... You could see how this is already ENTIRELY fucked from the very beginning.

First thing they want to do is add better support for a process... Which goes directly against CI/CD.

The process is that to deploy to production (even after it is manually approved by manager), someone in another department needs to press a button to manually deploy. This, as far as I can tell, is for business rule reasons rather than technical ones.

They want us to improve that (the system will stay exactly the same with some streamlined options for said button pressers)

I'm absolutely astounded at the way our management wants to do something but goes in exactly the opposite direction. It's like they found an article on what CI/CD was and then took notes on exactly what not to do.

Well the clown strikes again,

How do u break production and a testing environment in one night?

One full month prepping for the same thing, which revolves around one config file, and he assured us he was confident,

He wasn't

he managed to fuck it up so bad for the team d brass lost d plot,

I'm not one for condemning people but my God, Dante's Inferno woulda had an extra circle if he worked with this buck,

The stupidity has shattered my belief in sunshine and rainbows

Last night my boss played with our access points in the warehouse for a client. He messed something up and they stopped working.

I asked a person from our service to fix them

Service: he fucked something up again?

Me: yup

S: can you fix them?

M: yup

S: then why ask me?

M: it's not my job 😂

He swapped them, and got mad.

"Who needs a staging server, test suites and continuous integration anyways haha"

-company I just joined

Freelance project I was working on was deployed. Without my knowledge. At 11pm. Their in-house "tech guy" thought that the preview build I gave them was good enough for deployment. Massive bug, broke their API endpoints.

Got a call at 2 in the morning, asking for a fix. I told them it was their fault and that the app they deployed had TESTING written right on the main screen.

They promised additional payment to get me to fix it asap.

Went through the commit history (thank goodness their tech guy knew git, fuck him for committing on production though) and the crash reports.

Removed three lines. All became right with the world again. 😎

Somebody at Samsung is testing something in production 🤭

It's "Find My Mobile", which is preinstalled by default on Samsung devices.


Friend of mine: So, how do you test your applications at the startup?

Me: testing? *grabs his coffee laughing*

Actually we have a complete build pipeline from commit/pull-request to dev and production environments. No tests. Really. We are in rapid product development / research state.

We change technologies and approaches like our underwear (and yeah, that's frequently). If we ever settle down and understand the basic problems of the whole feature palette, we'll talk about tests again.

I'm coming off a lengthy staff augmentation assignment awful enough that I feel like I need to be rehabilitated to convince myself that I even want to be a software developer.

They needed someone who does .NET. It turns out what they meant was someone to copy and paste massive amounts of code that their EA calls a "framework." Just copy and paste this entire repo, make a whole ton of tweaks that for whatever reason never make their way back into the "template," and then make a few edits for some specific functionality. And then repeat. And repeat. Over a dozen times.

The code is unbelievable. Everything is stacked into giant classes that inherit from each other. There's no dependency inversion. The classes have default constructors with a comment "for unit testing" and then the "real" code uses a different one.

It's full of projects, classes, and methods with weird names that don't do anything. The class and method names sound like they mean something but don't. So after a dozen times I tried to refactor, and the EA threw a hissy fit. Deleting dead code, reducing three levels of inheritance to a simple class, and renaming stuff to indicate what it does are all violations of "standards." I had to go back to the template and start over.

This guy actually recorded a video of himself giving developers instructions on how to copy and paste his awful code.

Then he randomly invents new "standards." A class that reads messages from a queue and processes them shouldn't process them anymore. It should read them and put them in another queue, and then we add more complication by reading from that queue. The reason? We might want to use the original queue for something else one day. I'm pretty sure rewriting working code to meet requirements no one has is as close as you can get to the opposite of Agile.

I fixed some major bugs during my refactor, and missed one the second time after I started over. So stuff actually broke in production because I took points off the board and "fixed" what worked to add back in dead code, variables that aren't used, etc.

In the process, I asked the EA how he wanted me to do this stuff, because I know that he makes up "standards" on the fly and whatever I do may or may not be what he was imagining. We had a tight deadline and I didn't really have time to guess, read his mind, get it wrong, and start over. So we scheduled an hour for him to show me what he wanted.

He said it would take fifteen minutes. He used the first fifteen insisting that he would not explain what he wanted, and besides he didn't remember how all of the code he wrote worked anyway so I would just have to spend more time studying his masterpiece and stepping through it in the debugger.

Being accountable to my team, I insisted that we needed to spend the scheduled hour on him actually explaining what he wanted. He started yelling and hung up. I had to explain to management that I could figure out how to make his "framework" work, but it would take longer and there was no guarantee that when it was done it would magically converge on whatever he was imagining. We totally blew that deadline.

When the .NET work was done, I got sucked into another part of the same project where they were writing massive 500-line SQL stored procedures that no one could understand. They would write a dozen before sending any to QA, then find out that there was a scenario or two not accounted for, and rewrite them all. And repeat. And repeat. Eventually it consisted of, once again, copying and pasting existing procedures into new ones.

At one point one dev asked me to help him test his procedure. I said sure, tell me the scenarios for which I needed to test. He didn't know. My question was the equivalent of asking, "Tell me what you think your code does," and he couldn't answer it. If the guy who wrote it doesn't know what it does right after he wrote it and you certainly can't tell by reading it, and there's dozens of these procedures, all the same but slightly different, how is anyone ever going to read them in a month or a year? What happens when someone needs to change them? What happens when someone finds another defect, and there are going to be a ton of them?

It's a nightmare. Why interview me with all sorts of questions about my dev skills if the plan is to have me copy and paste stuff and carefully avoid applying anything that I know?

The people are all nice except for their evil XEB (Xenophobe Expert Beginner) EA who has no business writing a line of code, ever, and certainly shouldn't be reviewing it.

I've tried to keep my sanity by answering stackoverflow questions once in a while and sometimes turning evil things I was forced to do into constructive blog posts to which I cannot link to preserve my anonymity. I feel like I've taken a six-month detour from software development to shovel crap. Never again. Lesson learned. Next time they're not interviewing me. I'm interviewing them. I'm a professional.

Continuation of https://devrant.com/rants/2165509/...

So, it's been a week since that incident and things were uneventful.

Yesterday, the "Boss" came looking for me...I was working on some legacy code they have.

He asked, "What are you doing?"

Me, "I am working on the extraction part for module x"

He, "Show me your code!"

Me(😓), shows him.

Then he begins... "Have you even seen production-grade code? What is this naming sense?" (I was using upper and lower camel case for methods and variables.)

I said, "sir, this is a naming convention used everywhere"

He, "Why are there so many useless lines in here?"

Me, "Sir, I have been testing with different lines and commenting them out, and mostly they are documentation"

He, "We have separate docs for all, no need to waste your time writing useless things into the code"

Me, 😨, "But how can anyone use my code if I don't comment or document it?"

He, "We don't work like that..." (basically screaming) "If you work here, you follow the rules. I don't want to hear any excuses. Work like you are asked to."

Me, 😡🤯, Okay...nice.

Got up and left.

Mailed him my resignation letter, CCed it to upper management, and I'm now preparing for an interview next Monday.

When a tech lead says you should not comment your code or document it, you know where your team and the organisation are heading.

Sometimes I wonder how this person made himself a tech lead and how this company survived for 7 years!!

I don't know what his problem was with me; I met him for the first time in that office (not sure if he saw the previous post, and I don't care anymore).

Well, whatever, right now I am happy that I left that firm. I hope he gets what he deserves.

After months of development, testing, testing and even more testing the app was ready for deployment to production. Happy days, the end was in sight!

I had a week's leave so I handed over the preparation for deployment to my Senior Developer and left it in his capable hands while I enjoyed the sun and many beers.

I came back on the day of deployment and proudly pressed the deploy button. Hurrah!

Not long after I got loads of phone calls from around the country as the app wasn't working. What madness is this?! We tested this for months!

Turns out my Senior didn't like the way I'd written the SQL queries so he changed them. Which is obviously both annoying and unprofessional, but even worse he got a join wrong so the memory usage was a billion times more and it drained the network bandwidth for the whole site when I tried to debug it.

I got all the grief for the app not working and for causing many other incidents by running queries that killed the network.

So...much... rage!!!
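The wrong join in this rant is a classic blow-up: if the ON clause doesn't actually relate the two tables, every row pairs with every row of the other table. A hypothetical sketch with Python's built-in sqlite3 module; the tables, sizes and the exact mistake (`o.customer_id = o.customer_id`) are invented for illustration, since the rant doesn't show the real query.

```python
import sqlite3
from typing import Tuple


def join_row_counts(n_customers: int = 200, orders_per_customer: int = 10) -> Tuple[int, int]:
    """Return (rows from a correct join, rows from a broken join) on toy tables."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER NOT NULL)")
    conn.executemany(
        "INSERT INTO customers (id, name) VALUES (?, ?)",
        [(i, f"customer-{i}") for i in range(n_customers)],
    )
    conn.executemany(
        "INSERT INTO orders (customer_id) VALUES (?)",
        [(i % n_customers,) for i in range(n_customers * orders_per_customer)],
    )
    # Correct: each order matches exactly one customer.
    correct = conn.execute(
        "SELECT COUNT(*) FROM orders o JOIN customers c ON o.customer_id = c.id"
    ).fetchone()[0]
    # Broken: the condition never mentions customers, so it is true for every pair (a cross join).
    broken = conn.execute(
        "SELECT COUNT(*) FROM orders o JOIN customers c ON o.customer_id = o.customer_id"
    ).fetchone()[0]
    conn.close()
    return correct, broken


if __name__ == "__main__":
    print(join_row_counts())  # (2000, 400000)
```

With 200 customers and 2,000 orders, the broken query yields 400,000 rows instead of 2,000; at production table sizes, that multiplication is where the memory and network bandwidth go.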

If you're going to request CRITICAL changes to thousands of records in the database, and approve them through testing done on an exact replica of production, and then tell me after they've been implemented that they were done incorrectly, when you didn't actually review the changes you requested to the data or business logic, then you are an idiot. Our staging environment is there to ensure all the changes are accurate, you useless human. It's the data you provided; I didn't just magically pull it from thin air to make your job and mine a pain in the ass.


Worst collaboration experience story?

I was not directly involved, it was a Delphi -> C# conversion of our customer returns application.

The dev manager was out to prove that waterfall was the only development methodology that could convert the monolithic app into a lean, multi-tier, enterprise-worthy application.

Starting out with a team of 7 (3 devs, 2 DBAs, the team mgr, and the dev department mgr), they spent around 3 months on design, meetings, and more meetings. Armed with a 50+ page specification Word document (not counting the countless Visio workflow diagrams and Microsoft Project timeline/Gantt charts), the team was ready to start coding.

The database design, workflow, and UI design (using Visio) were well done and thought out, but problems started on day one.

- The team mgr and dev mgr split up the 3 devs: 1 dev wrote the database access library tier, 1 wrote the service tier, and the other dev wrote the UI (I'll add this was the dev's first experience with WPF).

- Per the specification, all the layers wouldn't be integrated until all of them met the standards (unit tested, free from errors from VS's code analyzer, etc)

- By the time the devs were ready to code, the DBAs had already been tasked with other projects, so the Returns app was prioritized as "when we get around to it".

Fast forward 6 months: all the devs were 'done' coding, having had very little or no communication with one another, and then came the integration. The service and database layers assumed different design patterns and different database relationships, and the UI layer required functionality neither layer anticipated (e.g. multiple users and the service maintaining some sort of state between them).

Those issues took about a month to work out, then the app began beta testing with real end users. The app didn't make it 10 minutes before users gave up: numerous UI logic errors, runtime errors, and poor overall stability. Because the UI was so bad, the dev mgr brought in one of the web developers (she was pretty good at UI design). You can guess how useful someone is when they're dropped into a complex project months after the fact and told "Fix it!".

After a couple of months of UI redesign and many other changes, the app was ready for beta testing.

In the meantime, the company hired a new customer service manager. When he saw the application, he rejected it because he had redesigned the entire returns process to be more efficient. The application UI was written to the exact step-by-step old returns process with little or no deviation.

With a tremendous amount of push-back (TL;DR), the dev mgr promised to change the app, but only after it was deployed into production (using "we can fix it later" excuse).

Still plagued with numerous bugs, the app was finally deployed. In attempts to save face, there was a company-wide party to celebrate the 'death' of the "old Delphi returns app" and the birth of the new. Cake, drinks, certificates of achievements for the devs, etc.

By the end of the project, the devs hated each other. Finger pointing, petty squabbles, out-right "FU!"s across the cube walls, etc. All the team members were re-assigned to other teams to separate them, leaving a single new hire to fix all the issues.

Got pulled out of bed at 6 am again this morning, our VMs were acting up again. Not booting, running extremely slow, high disk usage, etc.

This was the 6th time in as many weeks this had happened. And the marching orders were always the same: find the bug, smash the bug, get it working with the least effort. I've dumped hundreds of hours into maintaining this broken shitheap of a system, putting off other duties to keep mission-critical stations running.

The culprits? Scummy consultants, Windows 10 1709, and Citrix Studio.

Xen Server performed well enough, likely due to its open source origins and CentOS architecture.

Whelp. DasSeahawks was good and pissed. Nothing like getting rousted out of bed after a few scant hours rest for patching the same broken system.

DasSeahawks lost his temper. Things went flying. Exorcists were dispatched and promptly eaten.

Enough. No consultants, no analysts, and no experts touched it. No phone calls, no manuals, not even a google search. Just a very pissed admin and his minion declaring blitzkrieg.

We made our game plan, moved the users out, smoked our cigs, chugged monster, and queued a gnu-metal playlist on spotify.

Then we took a wrecking ball to the whole setup. User docs were saved, all else was rm -r * && shred && summon -u Poseidon -beast Land_Cracken.

Started at 3pm and finished just after midnight. Rebuilt all the vms with RDP, murdered citrix studio (and their bullshit licenses), completely blocked Windows 10 updates after 1607, and load balanced the network.

So what do we get when all the experts are fired? Stabbed lightning. VMs boot in less than 10 seconds, apps open instantly, and server resources are half their previous usage state. My VMs are now the fastest stations in our complex, as they should be.

Next to do: install our MxGPU, script up snapshots and heartbeat, destroy Windows ads/telemetry, and set up PDQ. Damn, it's good to be good!

What I learned: never allow testing to go to production, consultants will fuck up your shit for a buck, and vendors are half as reliable as consultants. Windows works great without Microsoft, thin clients are overpriced, and getting pissed gets things done.

This, my friends, is why admins are assholes.

I was asked to look into a site I haven't actively developed in about 3-4 years. It should be a simple side gig.

I was told this site has been actively developed by the person who came after me, and this person had a few other people help out as well.

The most daunting task in my head was to go through their changes and see why stuff is broken (I was told functionality had been removed, things were changed for the worse, etc etc).

I ssh into the machine and it works. For SOME reason I still have access, which is a good thing since there's literally nobody to ask for access at the moment.

I cd into the project, do a git remote get-url origin to see if they've changed the repo location. Doesn't work. There is no origin. It's "upstream" now. Ok, no biggie. git remote get-url upstream. Repo is still there. Good.

Just to check, see if there's anything untracked with git status. Nothing. Good.

What was the last thing that was worked on? git log --all --decorate --oneline --graph. Wait... Something about the commit message seems familiar. git log. .... This is *my* last commit message. The hell?

I open the repo in the browser, login with some credentials my browser had saved (again, good because I have no clue about the password). Repo hasn't gotten a commit since mine. That can't be right.

Check branches. Oh....Like a dozen new branches. Lots of commits with text that is really not helpful at all. Looks like they were trying to set up a pipeline and testing it out over and over again.

A lot of other changes including the deletion of a database config and schema changes. 0 tests. Doesn't seem like these changes were ever in production.

...

At least I don't have to rack my brain trying to understand someone else's code, but... I might just have to throw everything that was done into the garbage. I'm not gonna be the one to push all these changes I don't know about to prod and see what breaks and what doesn't.


I feel bad for whoever worked on the codebase after me, because all their changes are now just a waste of time and space that will never be used.

In one of my first jobs I developed an (ugly and heavily underpaid) e-commerce/media platform for a customer.

That customer was constantly making fun of his bald partner, saying he was gay, liked dicks, etc., drawing dicks and bananas as sample website logos or uploading dildo/penis pictures as sample images. He was always like this.

Once the website was ready for production, I removed all the "testing" posts and images and told the client to insert some real content and alert me when it was ready for release.

Well some time after the release i got a call from that client, for the first time he was serious:

C: Hi, why are there dildo images on the server? (The website in production was full of dildo/penis images instead of actual product images; he had even photoshopped the head of his partner onto a penis and uploaded it!!!)

R: Ehm... I told you it was in production and to stop uploading bad content....

C: Ummm ok, please fix it immediately, thanks!

How to NOT write unit tests:

A colleague of mine has developed a new software package that many of our new projects are going to use. In his presentation of the new functionality, he also showed us that he used unit tests to cover some of his code. So I asked him to show me that all the tests pass.

He: I can show you, but one test suite will currently fail.

Me: Why?? You told us, everything is finished and works fine.

He: That's right, but they will fail because I'm currently not in the customer VPN.

Me: Excuse me, WHAT??

He: Yes, I'm not in the VPN that connects me to this one customer's facility in Hungary, where the counterpart of the software is running live.

Me: YOU WROTE UNIT TESTS THAT TEST AGAINST A RUNNING LIVE FACILITY??

He: Yes, so I can check that the telegrams I send are right. If I get back the right acknowledgement, the telegram structure is right and my code is working.

Me: You know that is not the purpose of unit tests? You know that these tests should run in any environment?

He: But they prove that my code is working. Every time I change something, I connect to the customer and let the tests run.

Me: ...

Despite the help of some other developers, we could not convince him that this was not good and that he should remove them. So now this package is used in 2 new projects, and this test suite still fails every time you execute all unit tests.
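What the other devs were presumably getting at: the telegram layout can be checked against known bytes, and the live facility can be replaced by a fake in unit tests, leaving the real round trip to a separately run integration test. A minimal sketch using Python's unittest; the telegram format, `encode_telegram`, `TelegramClient` and `FakeTransport` are all hypothetical, since the rant doesn't describe the actual protocol.

```python
import struct
import unittest
from typing import Protocol

# Hypothetical telegram layout, invented for this sketch:
# big-endian 2-byte type, 4-byte sequence number, 2-byte payload length, then the payload.
HEADER = struct.Struct(">HIH")


def encode_telegram(msg_type: int, seq: int, payload: bytes) -> bytes:
    """Build one telegram frame: header followed by the raw payload."""
    return HEADER.pack(msg_type, seq, len(payload)) + payload


class Transport(Protocol):
    """Anything that can send a frame and return the peer's reply."""

    def send(self, data: bytes) -> bytes: ...


class TelegramClient:
    def __init__(self, transport: Transport) -> None:
        self.transport = transport  # real link in production, a fake in unit tests
        self.seq = 0

    def send(self, msg_type: int, payload: bytes) -> bool:
        """Send a telegram and return True if the reply acknowledges its sequence number."""
        self.seq += 1
        reply = self.transport.send(encode_telegram(msg_type, self.seq, payload))
        return reply == b"ACK" + struct.pack(">I", self.seq)


class FakeTransport:
    """Stands in for the live facility: records frames and acknowledges them."""

    def __init__(self) -> None:
        self.sent = []

    def send(self, data: bytes) -> bytes:
        self.sent.append(data)
        _, seq, _ = HEADER.unpack_from(data)
        return b"ACK" + struct.pack(">I", seq)


class TelegramTests(unittest.TestCase):
    def test_frame_layout_matches_spec(self) -> None:
        # Structure is checked against known bytes, not against a customer's machine.
        self.assertEqual(encode_telegram(7, 1, b"hi"), b"\x00\x07\x00\x00\x00\x01\x00\x02hi")

    def test_client_sends_frame_and_accepts_ack(self) -> None:
        fake = FakeTransport()
        client = TelegramClient(fake)
        self.assertTrue(client.send(7, b"hi"))
        self.assertEqual(fake.sent, [encode_telegram(7, 1, b"hi")])
```

Run it with `python -m unittest <file>`; it passes with or without the customer VPN.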

How could I only name one favorite dev tool? There are a *lot* I could not live without anymore.

# httpie

I have to talk to external APIs a lot and curl is painful to use. HTTPie is super human-friendly and helps with bootstrapping or testing calls to unknown endpoints.

https://httpie.org/

# jq

grep|sed|awk for JSON documents. So powerful, so handy. I have to google the specific syntax a lot, but once you have it working, it works like a charm.

https://stedolan.github.io/jq/

# ag-silversearcher

Finding strings in projects has never been easier. It's fast, it has meaningful defaults (no results from vendors and .git directories) and powerful options.

https://github.com/ggreer/...

# git

Lifesaver. 'Nuff said.

And tweak your command line to show the current branch and git to have tab-completion.

# JetBrains flavored IDE

No matter if the flavor is PhpStorm, IntelliJ, WebStorm or PyCharm, these IDEs are really worth their money and have saved me so much time and so many keystrokes, it's totally awesome. They also have an amazing plugin ecosystem; I adore the Symfony and IdeaVim plugins.

# vim

Steep learning curve, but it really pays off in the end, and I still consider myself a novice user.

# vimium

Chrome plugin to browse the web with vi keybindings.

https://github.com/philc/vimium

# bash completion

Enable it. Tab-increase your productivity.

# Docker / docker-compose

Even if you aren't pushing Docker images to production, having a Dockerfile that re-creates the live server is so easy to set up, and it has made bootstrapping the development process a joy. Virtual machines are slow and take up a lot of space. If you can, use Alpine-based images as a starting point, reuse the official ones on Docker Hub for common applications, and keep them simple.

# ...

I will post this now and then regret not naming all the tools I didn't mention.

> Worst work culture you've experienced?

It's a tie between my first two employers.

First: A career's dead end.

Bosses hardly ever told the truth, sugar-coated everything and told you just about anything to get what they wanted. E.g. a coworker of mine was sent on a business trip to another company. They had told him this was his big chance! He'd attend a project kick-off meeting, maybe become its lead permanently. When he got there, the other company was like "So you're the temporary first-level supporter? Great! Here's your headset".

And well, devs were worth nothing anyway. For every dev there were 2-3 "consultants" that wrote detailed specifications, including SQL statements and pseudocode. The dev's job was just to translate that to working code. Except for the two highest senior devs, who had perfect job security. They had cooked up a custom Ant-based build system, had forked several high-profile Java projects (e.g. Hibernate) and their code was purposely cryptic and convoluted.

You had no chance to make changes to their projects without involuntarily breaking half of it. And then you'd have to beg for a bit of their time. And doing something they didn't like? Forget it. After I suggested to introduce automated testing I was treated like a heretic. Well of course, that would have threatened their job security. Even managers had no power against them. If these two would quit half a dozen projects would simply be dead.

And finally, the pecking order. Juniors, like me back then, didn't get taught shit. We were just there for the work the seniors didn't want to do. When one of the senior devs had implemented a patch on the master branch, it was the junior's job to apply it to the other branches.

Second: A massive sweatshop, almost like a real-life caricature.

It was a big corporation. Managers acted like kings, always taking the best for themselves while leaving crumbs for the plebs (=devs, operators, etc). They had the spacious single offices, we had the open plan (so awesome for communication and teamwork! synergy effects!). When they got bored, they left meetings just like that. We... well don't even think about being late.

And of course most managers followed the "kiss up, kick down" principle. Boy, was I getting kicked because I dared to question a decision of my boss. He made my life so hard I got sick for a month, being close to burnout. The best part? I gave notice a month later, and _he_still_was_surprised_!

Plebs weren't allowed anything below perfection, bosses on the other hand... So, I got yelled at by some manager. Twice. For essentially nothing; things just bruised his fragile ego. My boss's response? "Oh, he's just human". No, the plebs were expected to obey the powers that be. Something you didn't like? That just meant your attitude needed adjustment. Like with the open plan offices: I criticized the noise and distraction. Well, that's just my _opinion_, right? Everyone else is happily enjoying it! Why can't I just be like the others? And most people really had given up, working like on a production line.

The company itself, while big, was a big ball of small, isolated groups, held together by office politics. In your software you'd need to call a service made by a different team, sooner or later. It wasn't documented, and no one was ever willing to help. To actually get help, you needed to get your boss to talk to their boss. Only then would you have a chance at all.

Oh, and the red tape. Say you needed a simple cable. You know, like those for $2 on Amazon. You'd open a support ticket and a week later everyone involved had signed it off. Probably. Like your boss, the support's boss, the internal IT services' boss, and maybe some other poor sap who felt important. Or maybe not, because the justification for needing that cable wasn't specific enough. I mean, just imagine the potential damage if our employees owned a cable they shouldn't!

You know, after these two employers I actually needed therapy. Looking back now, hooooly shit... that's why I can't say often enough that we devs put up with way too much bullshit.

Testing in a production environment is like closing a door to kill a snake and then the electricity goes off 📴


I used to work on a production management team whose job was, among other things, safeguarding access to production. Dev teams would send us requests all the time to "run a quick SQL script."

Invariably, the SQL would include "SELECT * FROM db_config."

We would push the tickets back, and the devs would call us, enraged. I learned pretty quickly that they didn't have any real interest in dev, test, or staging environments, and just wanted to do everything in prod and see if it worked.

But whenever they were upset that I wouldn't run their SQL, they gave up their protests pretty fast once I offered to let them speak to a manager.

We developed an application and deployed it to production (but hadn't launched it yet).

And the business team had already created a lot of garbage/dummy data. The reporting systems are a huge pile of bars, stats and shit.

Now we have to wipe and clean production.

I had already advised them to do their experiments and testing in dev or staging.

Damn. First time in my career I've experienced this: having to delete production.

My co-worker, still studying but working as a "senior dev", just decided that we don't need a test/staging environment anymore. We just "validate" (we also don't use the word "test" anymore) newly created features in production.

Makes absolute sense...

Thank god I start a new job in February!

Young user @Mizukuro asked a few days ago for ways to improve his JavaScript skills.

I wasn't sure what to say at the moment, but then I thought of something.

Lodash is the most depended-upon package in npm. 90k packages depend on it, more than double the second most depended-upon package (request, with 40k).

Lodash was also created 6 years ago.

This means lodash has been heavily tested, and is production ready.

This means that reading and understanding its code will be very educational.

Also, every lodash function lives in its own file, and those files are usually very short.

This means it's also easy to understand the code.

You could start with one of the "is..." functions (e.g. isArray, isFunction).

The reason for such choice is that it's very easy to understand what these functions do from their name alone.

And you also get to see how a good coder deals with JS types (which can be very unpredictable sometimes).
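To make the point about JavaScript types concrete, here is a small sketch of the kind of checks the "is..." helpers have to deal with. It is not lodash's actual source (read the linked isFunction file for that); the names isFunctionSketch, isNonArrayObjectSketch and getTagSketch are hypothetical and only illustrate why a bare typeof check can mislead.

```javascript
// Hypothetical, simplified type checks in the spirit of lodash's "is..."
// helpers. NOT lodash's implementation; names are illustrative only.

// typeof reports 'function' for regular, arrow, async and generator
// functions, and also for classes.
function isFunctionSketch(value) {
  return typeof value === 'function';
}

// typeof null === 'object' and typeof [] === 'object', so a plain
// typeof check cannot tell null, arrays and objects apart.
function isNonArrayObjectSketch(value) {
  return value !== null && typeof value === 'object' && !Array.isArray(value);
}

// Object.prototype.toString exposes a more precise tag, e.g. '[object Date]'.
function getTagSketch(value) {
  return Object.prototype.toString.call(value);
}

console.log(typeof null);                      // prints object
console.log(isFunctionSketch(async () => {})); // prints true
console.log(isNonArrayObjectSketch([]));       // prints false
console.log(getTagSketch(new Date()));         // prints [object Date]
```

Comparing a sketch like this with the real helper is a good exercise in spotting which edge cases a production-grade library bothers to handle.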

And to learn even more, read the test file for that function (located in tests/<original file name>.js). For the most part, the tests are very readable and are examples of very good testing code.

Here's the isFunction code

https://github.com/lodash/lodash/...

Here's the test for isFunction

https://github.com/lodash/lodash/...

The one thing you won't learn here is ES5, ES6, or whatever.

-not commenting

-leaving console logs behind in production

-not testing if it works in IE

-using root too much

-using if instead of switch

-never staying consistent with naming conventions

-starting projects and never finishing
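For the "console logs left behind in production" habit, one common mitigation is to route debug output through a small wrapper that goes quiet in production. A minimal sketch, assuming a Node.js app whose deployment sets NODE_ENV to 'production'; createDebugLog and debugLog are hypothetical names, not from any library:

```javascript
// Hypothetical debug logger: forwards to console.log everywhere except
// when the environment is 'production'. Not a real library API.
function createDebugLog(env = process.env.NODE_ENV) {
  const enabled = env !== 'production';
  return function debugLog(...args) {
    if (enabled) {
      console.log(...args);
    }
    // Report whether anything was printed, so callers and tests can check.
    return enabled;
  };
}

const debugLog = createDebugLog();
debugLog('cart contents:', { items: 3 });
```

Linting with ESLint's no-console rule is another way to catch stray console.log calls before they ship.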

Borrowed from Reddit and Twitter:

Everybody has a testing environment. Some people are lucky enough to have a totally separate environment to run production in.

Yet another day at work:

My job is to write test libraries for web services and test other people's code. Yes, I know how to code, and I have a niche in software testing.

Sometimes developers (whose code I find bugs in) get so defensive and scream in emails and meetings if I point out an issue in their code.

Today, when I pointed out a bug in his repo, a developer questioned me in an email, asking if I even understood his code and saying that, as a tester, I shouldn't look at his code and should only blackbox test it.

I wish I could teach the defensive developer that sometimes it's okay to make mistakes and be corrected. That's how we deliver services that don't suck in production.

Ever had a day that felt like you're shoveling snow from the driveway? In a blizzard? With thunderstorms & falling unicorns? Like you shovel away one m² & turn around and no footprints visible anymore? And snow built up to your neck?

Today my work day was like that.. except with shit instead of pretty & puffy snow!!

Working on things a & b, trying not to mess either one up, then comes shit x: a coworker was updating production.. ofc something went wrong.. again no testing after the update.. then me 'to da rescue'.. :/ barely patched things up so it works.. in a way.. feature c still missing due to needed workarounds.. going back to a and b.. got disrupted by the same coworker who is never listening, but always asking too much..

And when I think I finally have the b thing figured out, an f-ing blocker from one of our biggest clients.. The whole system is unresponsive.. Needless to say, the same guy does support for two companies (on their end), so they filed the Jira blocker under the wrong customer, one that doesn't have an SLA, so no urgent emails.. and then the phone calls.. and then all hell broke loose.. checking what is happening.. After frantic calls from our DBA to anyone who even knows our customer exists, asking if they were doing sth on the db.. noup, not a single one was fucking with the prod db.. The hell! A materialised view created 10 mins ago that blocked everything.. set to recreate every 10 minutes.. with a query that I'm guessing couldn't even select all that data in under 15.. dafaaaq?! Then we kill it.. and again it is there.. We found out that the customer's DBAs were testing something on the live environment, oblivious that they had managed to block the entire db..

FML, I'm going pokemon hunting.. :/ codename for ingress n beer..

Hello DevRant community! It’s been a while, almost 5 years to be exact. The last time I posted here, I was a newbie, grappling with the challenges of a new job in a completely new country. Oh, how time flies!

Fast forward to today, and it’s been quite the journey. The codebase that once seemed like an indecipherable maze is now my playground. The bugs that used to keep me up at night are now my morning coffee puzzles. And the team, oh the team! We’ve moved from awkward nods to inside jokes and shared victories.

But let’s talk about the real hero here - the coffee machine. The unsung hero that has fueled late-night coding sessions and early morning stand-ups. It’s seen more heated debates than the PR comments section. If only it could talk, it would probably write its own rant about the indecisiveness of developers choosing between cappuccino and latte.

And then there are the unforgettable ‘learning opportunities’ - moments like accidentally shutting down the production server or dropping the customer database. Yes, they were panic-inducing crises of apocalyptic proportions at that time, but in hindsight, they were valuable lessons. Lessons about the importance of thorough testing, proper version control, reliable backup systems, and most importantly, owning up to our mistakes.

So here’s to the victories and failures, the bugs and fixes, the refactorings and ‘wontfix’es. Here’s to the incredible journey of growth and learning. And most importantly, here’s to this amazing community that’s always been there with advice, sympathy, humor, and support.

Can’t wait to see what the next 5 years bring! 🥂

Startup-ing 101, from Fitbit:

- spy on users

- sell data

- cut production costs

- mutilate people's bodies, leaving burn scars that will never heal

- announce the recall, get PR, and make the refund process impossibly convoluted

- never give actual refunds

- claim that yes, fitbit catches fire, but only the old discontinued device, just to mess with search results and make the actual info (that all devices catch fire) hard to find

- try hard to obtain the devices in question, so people who suffered have no evidence

- give bogus word salad replies to the press

This is what one of the people burned has to say:

"I do not have feeling in parts of my wrist due to nerve damage and I will have a large scar that will be with me the rest of my life. This was a traumatic experience and I hope no one else has to go through it. So, if you own a Fitbit, please reconsider using it."

Ladies and gentlemen, let the cringefest begin. One of Fitbit's replies:

"Fitbit products are designed and produced in accordance with strict standards and undergo extensive internal and external testing to ensure the safety of our users. Based on our internal and independent third party testing and analysis, we do not believe this type of injury could occur from normal use. We are committed to conducting a full investigation. With Google's resources and global platform, Fitbit will be able to accelerate innovation in the wearables category, scale faster, and make health even more accessible to everyone. I could not be more excited for what lies ahead".

In the future, corporate speech will be autogenerated.

(If you wear a Fitbit, just be aware of this.)

I know politics is not allowed here, but I have to share this gem with you.

One day before the election for the European parliament the website of the German city Bochum showed a wrong bar diagram with false results of the election for a few seconds.

Everyone was loling. But I was like WTF? They were testing in production. And they also included data where the party AfD had about 50% of the votes. Are they retarded or what?

Ok, here goes...

I was once asked to evaluate upgrade options for an online shop platform.

The thing was built on Zend 1, but that's not the problem.

The geniuses that worked on it before didn't have any clue about best practices, framework convention, modular thinking, testing, security issues...nothing!

There were some instances when querying was done using a rudimentary excuse for a model layer. Other times, they would just use raw queries and ignore the previous method. Sometimes the database calls were made in strange function calls inside randomly loaded PHP files from folders all over the place. Sometimes they used JOINs to get the data from multiple tables; sometimes they would do a bunch of single-table queries and then loop over every data set, using multiple for loops, to format it.

And, best of all, there were some parts of the app that would just ignore any idea of frameworks, conventions and all that, and would just be a huge PHP file full of spaghetti code splashed around, sometimes with no apparent logic to it. Queries, processing, HTML... everything crammed into one file...

The most amazing thing was that this code base somehow managed to function in production for more than 5 years and people actually used it...

Imagine the reaction I got from the client the moment I said we should burn it to the ground and rebuild the whole thing from scratch...

Good thing my boss trusted me and backed me up (he is a great guy by the way) and we never had to go along with that Frankenstein monster...

Whoops! I was debugging a particularly snarky issue in Production the other day. This morning I realized, about 60 minutes into coding something new, that I hadn't changed my profile back. I had been testing form submissions on a live customer's site.

Testing an attendance machine's API calls one by one so I know what does what.

And there’s an API call for wiping all the attendance data stored in the machine.

I didn’t realize it until I pushed the damn button.

The attendance machine was suddenly fresh like new 😱😨😰

Shit.

A testing session just became an extreme sport.

Thankfully the IT guy has a backup, but only up to last month.

Well, it’s better than nothing, isn’t it?

He just told his boss that the machine had run out of memory and the attendance data for the current month was not saved.

And he asked him to buy me a machine for testing.

Yes, I was living on the edge by testing on the production machine.

Things that seem "simple" but end up taking a long ass time to actually deploy into production:

1. Using a new payment processor:

"It's just a simple API, I'll be done in 2 hours"

LOL sure it is, but testing orders, setting up a sandbox, making sure you have the credentials right, and then switching from test to live and retesting, and then... fuck

2. Making changes to admin stats.

"I just have to add this column and remove that one... maybe like a couple of hours"

YOU WISH

3. Anything Javascript

"Hah, what, that's like a button, np"

125 minutes later...

console.log('before foo');

console.log(this.foo);

etc..

Me: *asks boss for the id of his test store so I can apply experimental schema changes to test out a new dashboard app*

Boss: *gives me production store id and doesn't say anything*

Fate: … "You got lucky this time."

This is the CEO of the company btw. Startups. *sigh*

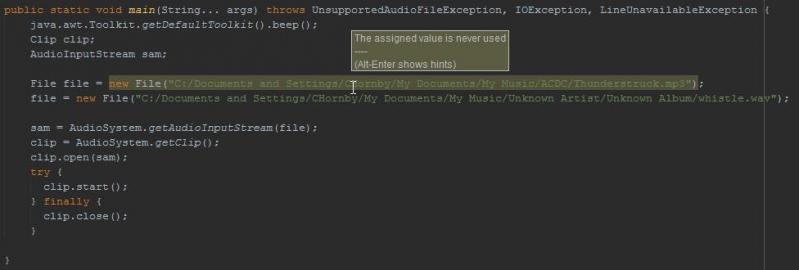

So, awesome clip to use for testing... Problem is, I found this in the codebase for a production app.

*Facepalm*