Search - "gpu"

-

Manager: Hey what was that that you closed on your screen just now?

Dev: That popup? That’s NVIDIA letting me know that a new driver for my GPU is available.

Manager: Isn’t that for video games?

Dev: I mean that’s the reason many people opt into having a GPU but It’s not the on—

Manager: You are NOT allowed to play video games on your work computer!

Dev: This is my personal computer. It’s just an older GPU I popped onto this computer since otherwise it was just sitting in a drawer. My work computer is out of commission.

Manager: Well where is your work computer? How come you are not using it?

Dev: …Because of that blue screen of death issue we talked about yesterday.

Manager: Ok but that doesn’t give you permission to play VIDEO GAMES on your *WORK* computer.

Dev: …

-

So I cracked prime factorization. For real.

I can factor a 1024-bit product in 11 hours on an i3.

No GPU acceleration, no massive memory overhead. Probably a lot faster with parallel computation on a better cpu, or even on a gpu.

4096 bits in 97-98 hours.

Verifiable. Not shitting you. My heart's beating out of my fucking chest. Maybe it was an act of god, I don't know, but it works.

What should I do with it?

-
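Whatever the method behind a claim like the one above, checking it is cheap: multiply the claimed factors back together. A minimal sketch in Python, assuming a hypothetical helper name and toy numbers standing in for a real 1024-bit product. It does not test whether the factors are prime.

```python
from math import prod


def is_valid_factorization(n: int, factors: list[int]) -> bool:
    """Return True if every factor is a non-trivial divisor and their product is n.

    Hypothetical helper for illustration; primality of the factors is NOT checked.
    """
    if not factors:
        return False
    # Reject trivial "factors" such as 1 or n itself.
    if any(f <= 1 or f >= n for f in factors):
        return False
    return prod(factors) == n


# Toy example; a real check would use the published product and the claimed primes.
print(is_valid_factorization(3233, [61, 53]))  # True
print(is_valid_factorization(3233, [61, 59]))  # False
```

Python integers are arbitrary precision, so the same check works unchanged on 1024-bit or 4096-bit values.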

Most of the code I write nowadays is for GPUs using a dialect of C. Anyways, due to the hardware of GPUs there is no convenient debugger and you can't just print to console either.

Most bugs are solved staring at the code and using pen and paper.

I guess one could call that a quirk.

-

Finally finished my AMD Watercooled build 🤤

Now my code can run cooler 😂

CPU - Ryzen 3900X - stock

RAM - Corsair Vengeance 32 GB 3600 Mhz

Mobo - Asus ROG Crosshair Formula VIII

GPU - MSI GTX 1080ti 11GB GDDR5

-

My new workstation. i7-4790k, MSI h81m-p33, 16gb DDR3, AORUS RX580, ADATA 128gb SSD, WD 1tb Black Drive, CoolerMaster V1000 1000W PSU.

OS: Ubuntu 16.04.3 LTS and Windows 10 Pro

-

If a CPU were an employee...

CPU: Hey boss, I'm seeing you are giving me a lot of mathematical tasks that would really profit from splitting into parallel calculations. GPUs are great for that, we should get one.

Boss: But you can still do them, right? If you can do it, I'm pretty sure you can do it at GPU speeds. We gotta save up so I can buy another car!

----------------------

Boss: Why is this taking so long?

CPU: I'm overloaded with work, so I'm overheating. Maybe you could buy a GPU to help me out, or at least a fan...

Boss: You're overheating? Your personal problems should not affect your professional life. Learn to get your shit together or we will hire someone who will

CPU: *melts*

-

So, this baby just arrived :)

Now every game I can think of... Nahh, we all know that this helps you in programming by giving you some power

-

So you are telling me I can run tensorflow.js in the browser and use the user's GPU to power my models!

Oh Yeaaaaaaaaaaah!

-

My "new desktop PC".

6 core xeon CPU with hyperthreading x2 (24 virtual cores) over clocked to 3.9ghz

128gb ddr3 ecc ram

256gb SCSI hard drives in RAID 0

256 Samsung ssd

AMD r9 something something 8gb ddr5 vram gpu

Got it for £120 on eBay (except for the ssd and gpu). Still missing a sound card :(

I have no idea what to use it for yet except for development but I thought it'd be cool to build a pretty good "desktop pc" for around £350-£400.

-

And once again:

18:00: *writing a Mandelbrot algo in glsl for the GPU*

19:00: "This should be working now..."

22:00: "why isn't it working??!"

22:30: "Oh my uniform vectors become zero when they arrive on the GPU"

01:00: "Oh. I uploaded them as matrices..."

I wasted about 4 fucking hours because I suck dick.

-

*Notices that SMPlayer takes a lot of the iGPU and CPU*

*Relaunches SMPlayer to use dedicated Nvidia GPU*

> I don't really want to play videos anymore when running on this card.

> MPlayer crashed by bad usage of CPU/FPU/RAM.

(that last one is an actual log btw)

Alright, got it. I bought this fucking PC just for its fucking "powerful" GPU. It already locked me into using WanBLowS on that piece of garbage. Yet now that NvuDiA piece of garbage is gonna act worse than a fucking paperweight?! Seriously?!!

FUCK YOU NVIDIA!!! Linus Torvalds called your shitty cards shit on Linux.. I call it shit on every fucking OS out there!!! MOTHERFUCKERS!!!

-

Just tested my GPU code vs my non-GPU code.

It's a simple game of life implementation. My test is on an 80 x 40 grid running for 100,000 cycles.

The normal code took 117 seconds.

The CUDA code took 2 seconds.

Holy fuck this is terrifying.

-
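The speedup in the Game of Life rant above comes from the shape of the problem: every cell's next state depends only on the previous grid, so all cells can be updated independently. A minimal single-threaded sketch in Python, assuming wrap-around edges (the rant does not say which boundary rule was used) and a hypothetical function name:

```python
def life_step(grid):
    """Advance a Game of Life grid (list of lists of 0/1) by one generation.

    Assumes wrap-around (toroidal) edges; this is an illustrative choice.
    """
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Count the eight neighbours. On a GPU each (r, c) cell could be
            # handled by its own thread, because cells only read the old grid.
            n = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            # Birth on exactly 3 neighbours, survival on 2 or 3.
            new[r][c] = 1 if n == 3 or (n == 2 and grid[r][c]) else 0
    return new


# A "blinker" flips between a horizontal and a vertical bar.
blinker = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
after = life_step(blinker)
for row in after:
    print(row)
```

Writing into a separate `new` grid rather than updating in place is what makes the per-cell work independent, and it is the same double-buffering a CUDA version would typically need.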

How I see GPU brands:

- Team blue, Intel:

Oldschool autistic engineers, working GPU but not practical

- Team red, AMD:

Competitive but anarchist, horrid Windows drivers, but good on opensource, anti-mainstream

- Team green, nVidia:

Way too greedy cunts circlejerking each others off during breaks (like Apple employees), delivering on performance, but wayyy overpriced and scammy tactics

-

Advertising your laptops as "linux-laptops" but shipping only with nvidia-gpu should be punishable by death.

-

Ladies and gentlemen... Can we please have a moment of silence for my PC which seems to be on her last legs...

CPU thermal throttling at 40 degrees Celsius (CPU is on its way out)

RAM is randomly having allocation errors

PSU isn't delivering optimal current

GPU only displays out of 2 DP ports

and for the very first time... Have had an SSD fail...

Pray for me people... Please

-

A situation i just dealt with on my tech help Discord server:

Beginning:

"my gpu isn't working and i need it to update my bios"

Ending:

"i have to have a cpu and ram to update my bios?"

this kid thought he only needed a mobo, GPU and PSU

he's 19

why

-

I'm 4 days into my new job, and so far I am absolutely loving it. Here's my setup. Yes, they gave me 3 monitors plus a laptop, so my setup has 5 screens! Now I can die happy :D

Definitely worth noting as well, since it caught me by surprise - the company-supplied laptop is a powerhouse. High-end i7 CPU, mid-to-high-end NVIDIA GPU, tons of ports, 1TB HD, 4K display, and 48 GB RAM. Yes, 48 GB. I am truly blessed, starting off my career with this. ^_^

-

Well something happened to my Ubuntu and suddenly.... <poof> it supports my Nvidia GPU now </poof>

-

Just opened my laptop to see if I can upgrade my ram, and yes I can

And I've got space for a 2.5 drive as well!

Fuck yeah!

All I need is a 1080 inside of this :P

But I've just got a shitty built-in gpu...

-

Bought four GPUs for our server, only to find our motherboard can only fit two GPUs despite having 4 PCIe slots...

-

dammit. I fucking hate it when I get stuck because of low level computing concepts and there is no explanation on Google.

like.. I understand the difference between an int and a float, but no one ever explains how you convert 32bit signed vectors to floats. or how bgra and rgba differ. or how to composite two images on a GPU. etc. the internet is great and all, but fuck, sometimes it seems as if everyone is just as dumb as I am.

-
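For the three questions in the rant above, here is a minimal CPU-side sketch in Python. The function names are hypothetical, and the integer-to-float mapping shown is the "signed normalized" convention common in graphics APIs, which is only an assumption about what the poster needed. Compositing uses the standard "over" operator on straight (non-premultiplied) alpha.

```python
def snorm_to_float(value: int, bits: int = 32) -> float:
    """Map a signed integer to -1.0..1.0 (signed-normalized convention, assumed)."""
    return max(value / ((1 << (bits - 1)) - 1), -1.0)


def bgra_to_rgba(pixel):
    """BGRA and RGBA hold the same four channels; only the order differs."""
    b, g, r, a = pixel
    return (r, g, b, a)


def over(src, dst):
    """Composite one straight-alpha RGBA pixel (floats in 0..1) over another."""
    sr, sg, sb, sa = src
    dr, dg, db, da = dst
    out_a = sa + da * (1.0 - sa)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)  # both pixels fully transparent

    def blend(s, d):
        # Weight each colour by its alpha, then undo the weighting.
        return (s * sa + d * da * (1.0 - sa)) / out_a

    return (blend(sr, dr), blend(sg, dg), blend(sb, db), out_a)


print(snorm_to_float(2**31 - 1))                              # 1.0
print(bgra_to_rgba((10, 20, 30, 40)))                         # (30, 20, 10, 40)
print(over((1.0, 0.0, 0.0, 0.5), (0.0, 0.0, 1.0, 1.0)))       # (0.5, 0.0, 0.5, 1.0)
```

On a GPU the `over` arithmetic would run once per pixel in a shader or be configured as a fixed-function blend mode; the math is the same.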

My Razer laptop died due to a hard drive failure so I filed an RMA. They didn't have the replacement part in stock, so they're replacing the laptop altogether. Mine is a 2019 model with a 2060, but the current equivalent model has an RTX 3070, so I'll get that instead. I'm not complaining 😏

-

Prof: "Hey, you can take a look at the source code that we used last year in this research paper"

Me: (surprised, because other papers usually don't share source code) "Okay"

A few weeks later:

Me: "Prof, if you use method A instead of method B, you can get better performance by 20%. Here's the link"

Prof:"The source link that you mentioned is for another instrument, not GPU"

Me:"Yeah, but I tested it on GPU and I found it also applies to my device"

Prof:"That's interesting."

-----------------------------------------------------

This, folks, is why sharing the source code you used in scientific papers is important.

-

Fucking Windows, could you please stop "updating" my fucking GPU drivers to an older version after I just updated them myself to the newest version.

You little dipshit, is a version check really that fucking hard for you?

-

Seriously, Ubuntu can go burn in hell far as I care.

I've spent the better part of my morning attempting to set it up to run with the correct Nvidia drivers, Cuda and various other packages I need for my ML-Thesis.

After countless random freezes, updates, downgrades and god-knows-what, I'm going back to Windows 10 (yes, you read that right). It's not perfect but at least I don't have to battle with my laptop to get it running. The only thing which REALLY bothers me about it is the lack of GPU pass-through, meaning local docker containers rely solely on the CPU. In itself not a huge issue if only I didn't NEED THE GOD DAMN GPU FOR THE TRAINING

-

WHY THE FUCK! WHY THE FUCKING FUCK! DO I HAVE TO WAIT 3 FUCKING DAYS TO GET A FUCKING VIDEO RENDERED! i didnt buy a new fucking 2080TI for this! WHY THE FUCK DOES THE CPU RENDER THE FUCKING VIDEO!

I mean, we can do fucking REAL TIME RAY-TRACING! And yet, no fucking idiot came up with the idea, "hmm we could let the GPU do its intended purpose and not use the CPU that much." I MEAN, IT HAPPENED, BUT FUCK IT! FUCK ALL OF THIS! FUCKING 74 HOURS!! FOR AN HOUR CLIP!

(It's 4K tho)

Fuck.

-

Exploring my work machine today

- Nvidia control panel icon in the taskbar

Why is that there? *Click*

- No screens connected to an Nvidia device.

- Goes to system information

- GTX 1050

- Look around back, all of the displays are plugged into system video on the motherboard.

- Facepalm, plug into the GPU

-

Ok I give up. I concede. I can't take one more second of trying to get ANY window manager to run under Arch with an Nvidia GPU.

Back to Debian it is...

-

My new motherboard (MSI Z170 GAMING AC) reports temps for the memory, CPU, GPU and hard drive in °C. And the motherboard temp in °F.

Almost had a fucking heart attack the first time I opened Speccy for benchmarking. -

Skills required for ML :

Math skills:

|====================|

Programming skills :

|===|

Skills required for ML with GPU:

Math skills:

|====================|

Programming skills :

|====================|

/*contemplating career choice*/

: /

-

"How we use Tensorflow, Blockchain, Cloud, nVidia GPU, Ethereum, Big Data, AI and Monkeys to do blah blah... "

-

There's nothing like cleaning out a cupboard of stuff you have long since moved on from only to find this hidden in the corner.

I once had a Mac, it's still a piece of shit and I'm not convinced Apple ever made life easier to use one of these 😅

-

What was your most ridiculous story related to IT?

Mine was when I was quite small (11yo) and wanted a graphics card (the epoch of ATI Radeon 9800), looked at the invoice to know what kind of ports I had in the pc (did not open it), then proceeded to brat to my dad to get me a new GPU

So we were in Paris, we went to a shop, vendor asked me "PCI or AGP?" and I said AGP.

Paris > London > Isle of Skye roadtrip followed, then as my dad brought me back home in Switzerland, we opened my pc...

And we couldn't fit the GPU in the basic old PCI port. My Dad was pissed. He frustratedly tried fitting the GPU in the PCI slot, but nope. (He's a software engineer though)

At least the GPU had 256 mb of ram :D

Gave it to my brother 6 months later at family gathering

To this day, my Dad still thinks I cannot handle hardware, although I have successfully built 10+ PCs, and still cringes with a laughing smile when I talk to him about it haha

Ah well.

-

I know I'm late to the party with GPU passthrough, but holy crap is it great! I first tried to set it up with an old GeForce 1050 ti, but damned if nvidia isn't completely worthy of that middle finger Linus gave them. I switched to an older Radeon(rx570) and it worked PERFECTLY. I have a fully accelerated windows desktop running as a VM now that I can connect to via parsec.

Big fan.

-

Nvidia might stop being a douchebag about Linux: https://developer.nvidia.com/blog/... Right now that doesn't change much, but it opens a possible road.

-

Finally dumped windows from my tower/gaming rig!!!

I'm now running Ubuntu Budgie and Windows 10 inside a qemu/kvm VM with gpu passthrough. That way I have almost-native performance and no additional setup effort for installing games.

-

Well, the rant is because I have to go to Madrid twice a month, which is annoying.

But now I'm writing your GPU drivers, bitches 😂.

More concretely, for those who may care, gonna work in HLSL to spir-v conversion.

-

I wrote something to make QEMU VMs with GPU passthrough easy, thought I may share.

Feel free to look and uhh help me improve it, I really have no idea how some things in QEMU work. PRs and stuff appreciated

https://github.com/sr229/nya

-

Can't decide whether to talk about how TeamViewer banned me because they think me helping my girlfriend all the time was commercial use,

or when she saw my porn while abusing my computer's GPU for training her model

-

An undetectable ML-based aimbot that visually recognizes enemies and your crosshairs in images copied from the GPU head, and produces emulated mouse movements on the OS-level to aim for you.

Undetectable because it uses the same api to retrieve images as gameplay streaming software, whereas almost all existing aimbots must somehow directly access the memory of the running game.

-

Got a Radeon RX 570 to complete my light gaming and rendering setup. This is easily the most powerful GPU I have ever owned, super hyped to try out all my games on this now. Works great after it crashed under Linux a few times for god knows what reasons but hey, not disappointed at all so far.

All AMD setup here (Ryzen + Radeon), feels good to be back on AMD after a long Intel/Nvidia stint.

-

Oi mates!

Little #ad (Not annoying don't worry - it's a cool project)

Just wanted to let y'all know about the awesome project from Stanford University named Folding@Home!

Basically you donate CPU/GPU power and they use it for researching cancer/alzheimer's/etc.

All you need to do is install some software on your server/computer.

Then the software downloads so-called "Work Units" (no big bandwidth required - really small packets) and simulates/calculates some stuff. Afterwards the client sends the results back to their server.

This way they are able to create a "supercomputer" that is spread all over the world.

You don't need to pay anything except maybe some increased electricity bills (but you can change some settings to use only a small part of the CPU/GPU and therefore create less heat).

Of course the program only uses the CPU/GPU power that's not required by any other software on the computer. I can literally play games while the client is running. No performance decrease.

That's a short intro by me. I suggest you visit their website and maybe even start folding yourself!

> https://foldingathome.com

Also @cr78, @kescherRant and me are in a team together. If you want to join our team as well just use our Team ID:

235222

Teams?

Yup, there's this little stats site (https://stats.foldingathome.com) where all teams can compete against each other. Nothing big.

I hope I convinced at least some of you!

Feel free to ask questions in the comments!

See ya.

-

Making my own game console.

I work with FPGAs and have access to a bunch of pretty nice dev boards, so building a workable CPU and GPU is definitely possible. I even have architectures for both in mind, and have planned ways to get compiler support (i.e. just use RISC-V as the ISA as much as possible so that I can reuse an existing RISC-V compiler with minimal changes) so that I don't need to write assembly.

But, lack of time. Sigh.

-

Discovered pro tip of my life :

Never trust your code

Achievements unlocked :

Successfully running C++ GPU accelerated offscreen rendering engine with texture loading code having faulty validation bug over a year on production for more than 1.5M daily Android active users without any issues.

History : Recently I was writing a new rendering engine that uses our GPU pipeline engine.. and our prototype android app benchmark test always fails with black rendering frame detection assertion.

Practice:

Spend more than a month to debug a GPU pipeline system based on directed acyclic graph based rendering algorithm.

New abilities added :

Able to debug OpenGL ES code on Android using print statement placed in source code using binary search.

But why?

I was aware of the issue for over a month and just ignored it, thinking it was a driver bug in my android device.. but when the api was used by one of the Android devs, he reported the same issue. That same night at 2:59AM ....

Satan came to me and told me that " ok listen man, here is what I am gonna do with you today, your new code will be going production in a week, and the renderer will give you just one black frame after random time, and after today 3AM, your code will not show GL Errors if you debug or trace. Buhahahaha ahhaha haahha..... Puffff"

And he was gone..

Thanks satan for not killing me.. I will not trust stable production code anymore even though every line is documented and peer reviewed. -

Finally bought a macbook for my android app development. Paid 1600 EUR for 2015 MID mbpro with 512GB SSD, 16gb ram, 2.5ghz quad core i7 cpu and dedicated gpu. I know I overpaid but I couldn't fkin stand working on windows anymore. I spent one year with fcking windows building android apps. Making apps on windows is fking impossible. And don't even get me started on new crap macbooks starting from 2016 :/

-

Ok i post it a bit late but what the hell.

This is my monster now! I now shall conquer the world!

MSI GL62 7RD

with that configuration:

CPU: i5 7300HQ

RAM: 8GB DDR4

GPU: gtx1050

HDD: WD blue (small laptop one) 1TB

Ok i already had that configuration for a while... but it was sloooowwwww D:

That is why for my birthday/Christmas i bought myself additional 8GB of ram and a tiny nvme ssd to make everything 1000x faster! 😎

1 ++ for a person who reads how big the ssd is...

-

Planning a telegram bot + home web server, possibly using the Bluetooth to combine with an android and make a self driving go kart, but that seems a bit ambitious, especially considering most of the GPU acceleration isn't supported with Raspberry Pi.

-

Me working in High Performance Computing :

CPU/GPU in full throttle ... go brrrr...

Me working on an app:

Should put sleep() in the while loop so as not to overwork the CPU

😑😑

-

My Windows installation has been acting up the last few days. Something with permissions is messing with my gpu it seems.

Of all the errors I have gotten, this must have been the best one

-

I haven't built a new computer in 9 years, but I finally, finally got together enough money to go big.

POWER: Seasonic Focus Plus 850W 80+ Gold

MOTHERBOARD: NZXT N7 Z370 ATX LGA1151 (Black)

GPU: EVGA GTX 1080 FTW GAMING

CPU: INTEL Core i7-8700K 3.7GHz 6-Core

RAM: G.SKILL TridentZ 3000 Mhz 32GB (2 x 16 GB)

SSD: SAMSUNG 860 EVO 1TB

CPU COOLING: NZXT Kraken X62

I can't fucking wait for it to arrive.

-

Protip: always account for endianness when using a library that does hardware access, like SDL or OpenGL :/

I spent an hour trying to figure out why the fucking renderer was rendering everything in shades of pink instead of white -_-

(It actually looked kinda pretty, though...)

I'd used the pixel format corresponding to the wrong endianness, so the GPU was getting data in the wrong order.

(For those interested: use SDL_PIXELFORMAT_RGBA32 as the pixel type, not the more "obvious" SDL_PIXELFORMAT_RGBA8888 when making a custom streaming SDL_Texture)

-
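The pink-renderer bug above comes down to a "packed" 32-bit pixel value and a byte array not agreeing on byte order. A minimal sketch in Python of how the same packed RGBA value lands in memory on each kind of CPU; the helper name and the test colour are illustrative assumptions, not anything from SDL itself.

```python
def memory_bytes(packed: int, byteorder: str) -> bytes:
    """Return the four bytes a 32-bit pixel occupies in memory for a given byte order."""
    return packed.to_bytes(4, byteorder)


# Packed RGBA8888 value with distinct channels: R=0x11, G=0x22, B=0x33, A=0xFF.
pixel = 0x112233FF

print(memory_bytes(pixel, "little").hex())  # ff332211 -> memory order A, B, G, R
print(memory_bytes(pixel, "big").hex())     # 112233ff -> memory order R, G, B, A
```

If the code writes bytes in R, G, B, A order on a little-endian machine but tells the library the data is packed RGBA8888, the channels are read back reversed. A byte-order-aware alias such as SDL_PIXELFORMAT_RGBA32 exists to sidestep exactly that.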

So I bought a gaming laptop a while back, and Cyberpunk 2077 binaries got leaked a few days ago... So I wanted to play it, kinda. It looks really good from the screenshots. Friend asks me "what CPU / GPU do you have"?

My gaming laptop is a Y700 so an i7-6700HQ and a GTX 960M. Turns out that even at low settings this thing probably won't pull even 30 FPS.

So even with a gaming laptop, you don't get to do any gaming. 10/10 would buy again! I'll enjoy Super Mario because imagine caring about gameplay rather than stunning graphics that you need tomorrow's hardware for, and buy it yesterday! And have it already obsolete today.

Long story short, I kinda hate the gaming scene. I'm not a gamer either by any means. Even this laptop just runs Linux and I bought it mostly because some of its hardware is better than my x220's. Are gamers expected to spring the money for the latest and greatest nugget every other month? When such a CPU and GPU alone would already cost most people's entire monthly wage?

What's the point of having a game that nobody can play? Even my friends' desktop hardware which is quite a bit better still - it only pulls 45 FPS according to him. Seriously, what's the point?

-

SPECS:

- Doogee X5 max (worst phone ever, can't recommend, randomly shuts off, displays advertisements, gets super hot)

- Bottle of coke light (so I don't get fat)

- Auna Mic 900-b (I used to do videos on youtube, though they were so bad i've deleted them lol)

- Two HP 24es screens (one of them broke when I let it fall while switching overheating cables)

- Mech keyboard with MX - Red

- Razer Naga 2014 (I regret buying that already)

- Wacom intuos small (I wanted to become a designer for a game with @Qcat)

- Computer with

CPU: ryzen i1600. 3.8ghz, 4ghz with boost, 12 threads 6 cores

RAM: 16 gig

Storage: 250gb SSD, 1tb hdd

Stickers: Generously donated by @gelomyrtol

Cooler: alpenföhn brocken

GPU: ATI 560 (something like that. I took the cheapest as I needed to fit a gpu into the budget, ryzen doesn't have integrated graphics units)

OS: fedora GNU/Linux with KDE as de (though i'm not sure whether i'll stay with it. I recently used cinnamon but it was too slow.)

If i'm not on my desk, i'm either doing music studies, sleeping or i'm at school.

When on my desk, I do

1) programming

2) Reading

3) watch nicob's danganronpa let's plays

4) programming.

My current projects:

clinl.org

github.com/wittmaxi/zeneural

-

If linux is used and maintained mostly by professional power users, why on earth does it never get good support for basic things Windows does?

Screen dims while playing video, configuring lock screen to work with DM sucks as hell, you even have to define most keyboard shortcuts manually, Optimus GPU setup always buggy, you have to spend some time just configuring power management to work just fine.

We really need to fix this. I mean I am a Linux addict but time is money too.

-

Fuck this piece of shit that calls itself a Macbook Pro. Bought myself a Mac in 2011 because I wanted something durable. Spent 2k € on it. Now it's more annoying than any Windows machine I ever had because I have this fucking GPU glitch that Keeps. Crashing. My. Machine. I can't work that way.

Yeah, already had it repaired. Didn't work. Use gfxCardStatus to keep it on integrated. Doesn't work. Well, I could just buy a new one, couldn't I? Fuck Apple, fuck you.

-

Great

Updated GPU drivers, turned my PC off while i was getting something to eat and watch YouTube videos in the living room, decided to go work on some project, turn my PC back on, and... Nothing works...

Neither of my monitors displays anything and not even my keyboard turns on... Didn't even get to see the splash screen of my mobo vendor

Why does this shit always happen to me?

-

My laptop is screwed. The laptop's monitor doesn't light up at all. An external monitor works with heavy artifacts.

-

Wtf is this shit .... why the hell is so much fees even accepted as normal

(Ps - you can get a decent i5 with amd gpu and dos for 40k)

-

It's black Friday and I have a 380€ gift card at Netonnet, what should I get? I'm thinking of a new GPU since I have a gtx 650 atm.

Any ideas? 🤔

-

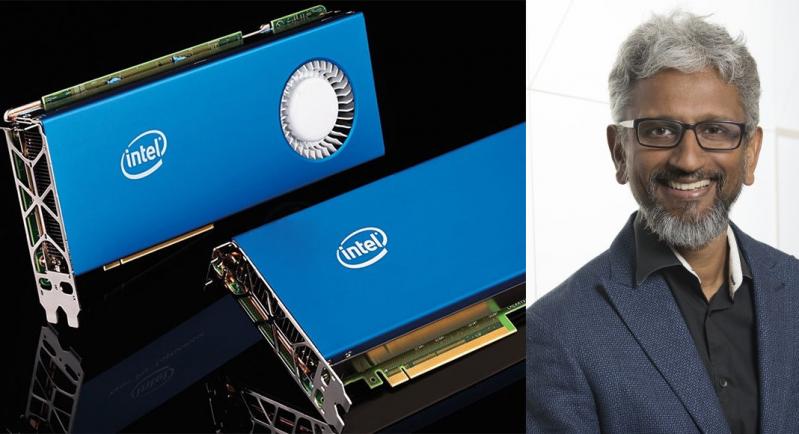

So... has anyone yet made a comment about AMD's now ex-head of the GPU division, Raja Koduri, joining Intel?

Now this is awkward after I made this OC image not so long ago :/

https://devrant.com/rants/896872/...

Also in other news can we comment that Systemd has pretty much taken over most linux distros? is this the new NSA backdoor? (before someone points out it's open source, has anyone been able to properly audit it?)

-

I have finally decided to stop helping people setup a proper machine learning environment inside of their machines with Proper GPU support.

I-fucking-give-up.

Goggle Colab, EVERYONE is getting dey ass sent to Colab. I DON'T GIVE A FUCK about privacy and shit like that at this fucking moment, getting TIRED af of getting messages about someone somehow fucking up their CUDA installation, and/or their entire machine (had one dude trying to run native GPU support through WSL 2, their machine did not have the windows update version 2004 and he was on an older build, upon update he fucked everything up EVEN THOUGH I TOLD HIM NOT TO DO IT YET)

.......fuck it, I am sending everyone to Colab. YES I UNDERSTAND THAT PRIVACY IS A THING and Goggle bad and all that jazz......but if you believe in Roko's Basilisk then I AM DOING THEM A FAVOR

I work hard to get our robot overlords into function, let it be known here, I support our robot overlords and will do as much as possible to bring them to life and have me own 2b big tiddy with a nice ass android.

I should also mention that I've had a few drinks on me already and keep getting these messages.

-

I love Nvidia's apathetic attitude to Linux support. It makes it so much more economical for me every time I need to upgrade my GPU - the mid-upper tier previous generation cards are always available for around the same price as a lower tier current generation card. There is no buyer's remorse when the only things I'm missing out on by not going bleeding edge are kernel panics and random reboots.

-

When my senior told me his program is kill because not enough processing unit in our 1080Ti.

Man, your Linux runs way more than 8 processes, and you only have two processes that run with CUDA... -

Just tried out Minecraft's shader mod SEUS and wondering what the fuck am I doing with my life being a web dev and not working on graphics.

If you have an nvidia gpu, please give it a try.

This is an example with PBR textures, it's mind blowing https://youtu.be/RbM5w9CBDIw

INB4 comment like "peasant web dev wants to do graphics lmao"

-

So who knows about this beauty / THE BEAST

Hint : Nvidia

Comment if you are a fanboy of GPU tech! I certainly am 😎

-

So recently I had an argument with gamers on memory required in a graphics card. The guy suggested 8GB model of.. idk I forgot the model of GPU already, some Nvidia crap.

I argued on that, well why does memory size matter so much? I know that it takes bandwidth to generate and store a frame, and I know how much size and bandwidth that is. It's a fairly simple calculation - you take your horizontal and vertical resolution (e.g. 2560x1080 which I'll go with for the rest of the rant) times the amount of subpixels (so red, green and blue) times the amount of bit depth (i.e. the amount of values you can set the subpixel/color brightness to, usually 8 bits i.e. 0-255).

The calculation would thus look like this.

2560*1080*3*8 = the resulting size in bits. You can omit the last 8 to get the size in bytes, but only for an 8-bit display.

The resulting number you get is exactly 8100 KiB or roughly 8MB to store a frame. There is no more to storing a frame than that. Your GPU renders the frame (might need some memory for that but not 1000x the amount of the frame itself, that's ridiculous), stores it into a memory area known as a framebuffer, for the display to eventually actually take it to put it on the screen.

Assuming that the refresh rate for the display is 60Hz, and that you didn't overbuild your graphics card to display a bazillion lost frames for that, you need to display 60 frames a second at 8MB each. Now that is significant. You need 8x60MB/s for that, which is 480MB/s. For higher framerate (that's hopefully coupled with a display capable of driving that) you need higher bandwidth, and for higher resolution and/or higher bit depth, you'd need more memory to fit your frame. But it's not a lot, certainly not 8GB of video memory.
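The arithmetic in the paragraphs above can be reproduced in a few lines. A minimal sketch in Python, with a hypothetical helper name; it only covers the size and scan-out rate of one finished frame, as the rant does.

```python
def frame_bytes(width: int, height: int, channels: int = 3, bits_per_channel: int = 8) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * channels * bits_per_channel // 8


size = frame_bytes(2560, 1080)
print(size)                    # 8294400 bytes
print(size / 1024)             # 8100.0 KiB
print(size * 60 / 1024 ** 2)   # 474.609375 MiB/s at 60 Hz
```

The exact figure at 60 Hz is about 475 MiB/s; the 480 MB/s quoted above comes from rounding the frame up to 8 MB before multiplying.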

Question time for gamers: suppose you run your fancy game from an iGPU in a laptop or whatever, with 8GB of memory in that system you're resorting to running off the filthy iGPU from. Are you actually using all that shared general-purpose RAM for frames and "there's more to it" juicy game data? Where does the rest of the operating system's memory fit in such a case? Ahhh.. yeah it doesn't. The iGPU magically doesn't use all that 8GB memory you've just told me that the dGPU totally needs.

I compared it to displaying regular frames, yes. After all that's what a game mostly is, a lot of potentially rapidly changing frames. I took the entire bandwidth and size of any unique frame into account, whereas the display of regular system tasks *could* potentially get away with less, since most of the frame is unchanging most of the time. I did not make that assumption. And rapidly changing frames is also why the bitrate on e.g. screen recordings matters so much. Lower bitrate means that you will be compromising quality in rapidly changing scenes. I've been bit by that before. For those cases it's better to have a huge source file recorded at a bitrate that allows for all these rapidly changing frames, then reduce the final size in post-processing.

I've even proven that driving a 2560x1080 display doesn't take oodles of memory because I actually set the timings for such a display in order for a Raspberry Pi to be able to drive it at that resolution. Conveniently the memory split for the overall system and the GPU respectively is also tunable, and the total shared memory is a relatively meager 1GB. I used to set it at 256MB because just like the aforementioned gamers, I thought that a display would require that much memory. After running into issues that were driver-related (seems like the VideoCore driver in Raspbian buster is kinda fuckulated atm, while it works fine in stretch) I ended up tweaking that a bit, to see what ended up working. 64MB memory to drive a 2560x1080 display? You got it! Because a single frame is only 8MB in size, and 64MB of video memory can easily fit that and a few spares just in case.

I must've sucked all that data out of my ass though, I've only seen people build GPUs out of discrete components and gone down to the realms of manually setting display timings.

Interesting build log / documentary style video on building a GPU on your own: https://youtube.com/watch/...

Have fun!

-

Was training a model for an hour on paid GPU after which it showed a deprecation error and crashed my Jupyter notebook.

-

I wish laptops were more easily modifiable. RAM upgrade? GPU upgrade? 10-GBit ethernet? Maybe I should just buy a desktop...

-

Just installed Keras, theano, PyTorch and Tensorflow on Windows 10 with GPU and CUDA working...

Took me 2 days to do it on my PC, and then another two days of cryptic compiler errors to do it on my laptop. It takes an hour or so on Linux... But now all of my devices are ready to train some Deep Deep Learning models )

I don't think even here many people will understand the pain I had to go through, but I just had to share it somewhere since I am now overcome with peace and joy.4 -

Stories like the one I'm about to tell you are just another reason why people hate Windows. I know I usually preach 'Don't hate everything' and shit, but this is a real big fucking deal when it hits your desktop for no reason.

Now, onto the actual story...

Background: Playing with my Oculus, fixing issues like forgetting to use USB3 and stuff. I learned about an issue with Nvidia GPUs, where in Windows, they can only support 4 simultaneous displays per GPU. I only have the one GPU in my system, Nova, so I have to unplug a monitor to get Oculus and its virtual window thingy working. Alright, friend gave me idea of using my old GPU to drive one of my lesser used monitors, my right one. Great idea I thought, I'll install it a bit later.

A bit later...

I plug the GPU in (after 3 tries of missing the PCI-E slot, fuckers) and for some reason I'm getting boot issues. It's booting to the wrong drive, sometimes it'll not even bother TRYING to boot, suddenly one of my hard drives isn't even being recognized in BIOS, fuck. Alright, is the GPU at least being recognized? Shit, it isn't. FUCKFUCKFUCK.

Oh wait. I just forgot the power cable. Duh. Plug that in, same issues. Alright, now I have no idea. Try desperately to boot, but it just won't. I start getting boot error 0xc000000f. Critical device not found. Alrighty then. Fuck my life, eh?

Remove the GPU, look around a bit while frantically trying to boot the system, and I notice an oddly bent SATA cable. I look at it and the bastard is FRAYED AT THE END! Fuck, that's my main SSD! I finally replace the SATA cable and boot, still the same error... Boot into a recovery environment, and guess what?

Windows has decided to change my boot partition, ya know, the FUCKING C: DRIVE, from NTFS to RAW format, stripping it of formatting! What the actual fuck Microsoft? You just took a shit on yourself while having a seizure on the fucking MOON! Fine, fuck you, I have recovery USB! Oh, shit, that won't boot... I have an old installation! Boot ITS recovery, try desperately to find a fix online... CHKDSK C: /F... alright, repairing, awesome! Repaired, I can see data, but not boot. So now I'm at the point where I'm waiting for a USB installer to be created over USB 2.0. Wheeeeeeeeee. FML.

THESE are the times I usually hate Windows a lot. And I do. But it gets MOST of my work done. Except when it does this.

I'm already pissed, so don't go into the comments and just hate on Windows completely. Just a little. The main post is for the main hate. Deal with it. And I know that someone is going to come at me with "Ohhhhh, you need FUCKIN LIIIIIIINUUUUUUUXXXXXXXX!" Want to know my response to that?

No.3 -

What is more essential? A good CPU or a good GPU?

Me --- A good ergonomic chair.

After working 16 hours at a stretch I got a fucking bad back pain ..

Oh godddd!!!!!!! Kill me Please !!!!!3 -

I have an Acer with dual GPUs, one of them Nvidia. Guess what: Gnome + Nvidia = bugs. Yeah, I probably should have known that, but I didn't, and I spent the last hour recovering my PC after installing the Nvidia drivers. Why can't you guys (Gnome, Nvidia) agree on something?5

-

People ask me why I prefer Windows: decided to install Mint, it crashed upon choosing a timezone. Had to not set a timezone to move past that bug. Then the install hangs. Turns out I should've edited the grub boot settings because I'm using an nvidia GPU. What the actual fuck? How is anyone supposed to know that?12

-

Argh! (I feel like I start a fair amount of my rants with a shout of frustration)

Tl;Dr How long do we need to wait for a new version of xorg!?

I've recently discovered that Nvidia driver 435.17 (for Linux of course) supports PRIME GPU offloading, which, for the unfamiliar, is where you're able to render only specific things on a laptop's discrete GPU (vs. all or nothing). This makes it significantly easier (and more power efficient) to use the GPU in practice.

There used to be something called bumblebee (which was actually more power efficient), but it became so slow that one could actually get better performance out of Intel's integrated GPU than that of the Nvidia GPU.

This feature is also already included in the nouveau graphics driver, but (at least to my understanding) it doesn't have very good (or any) support for Turing GPUs, so here I am.

Now, being very excited for this feature, I wanted to use it. I have Arch, so I installed the nvidia-beta drivers, and compiled xorg-server from master, because there are certain commits that are necessary to make use of this feature.

But after following the Nvidia instructions, it doesn't work. Oops, I realize, xorg probably didn't pick up the Nvidia card, let's restart xorg. And boom! Xorg doesn't boot, because obviously the modesetting driver isn't meant for the Nvidia card, it's meant for the Intel one, but xorg is too stupid for that...

So here I am back to using optimus-manager and the ordinary versions of Nvidia and xorg because of some crap...

If you have a (good) idea of what to do to make it work, I'd welcome hearing it.6 -

Convo between me and my friend today (I purposefully left out my opinions and reactions):

Friend: i want to learn c#

Me: sounds good, but I'd go java if i were you

F: yeah but i want to do unity

M: sounds good, but I'd go with unreal engine if I were you

F: what language is unreal engine?

M: C++, but if you want to make apps, go with unity

F: yeah I want to make an android app

M: sounds good, but I'd try out renderscript if I were you

F: yeah I've used that before

M: oh really? What does it do?

F: I don't know

M: it's for gpgpu because android game devs needed better performance

F: yeah I've used that

M: what does gpgpu stand for?

F: umm… i know what gpu stands for

M: okay dude, you didn't use it

F: yes I did, I made a cypher

M: dude, you didn't use it

F: yes I did!

M: what does gpgpu stand for?

F: *left*

*five minutes later*

M: *checks phone*

M: *sees text from friend*

Text from friend: dude it was general purpose gpu1 -

I made the bad decision to buy a pretty pricey laptop with an Nvidia card. Lenovo Legion Y520.

So yeah, have you heard about optimus technology and how much one can hate nvidia?

> Debian is working, nice.

> Let's try nvidia-driver.

> 48hours later: WOoooooah glxgears at 120 fps!

> Installed some fonts. "Could not load gpu driver". HDMI port stops working. Unable to repair. Entering despair.

> Surviving on dual-booted windows.

halp3 -

Noname Russian $17 wireless charger somehow makes less high pitched coil noise than my fancy nomad charger.

Yes it’s ugly. Yes the led is blasting and yes I painted over the led with a black nail polish.

I disassembled the nomad charger and located the coils that were making noise. I’m going to either fill them with epoxy (a common technique used by gpu enthusiasts to get rid of coil noise) or replace them completely.

TL;DR:

nomad — bouba

noname russian charger — kiki 4

4 -

Friend : Bro, give me the game you were playing yesterday.

( Witcher 3)

Me : OK, tell me your system specs.

He: 1 TB hard drive and 8 GB ram.

Me : GPU?

He : 2 GB Nvidia.

Me: Model?

He: Don't know something like 740m ( M you heard that right)

Me : Won't work. GPU is not powerful enough.

He: You don't know anything, it will work. You also play on same GPU with 2 GB.

Me: -.- ( I had gtx 770)6 -

Authors of this textbook that I'm holding should replace their GPU because those graphical glitches are bad

2 -

The graphics card supply is complete madness. Even after the crypto crash it is not possible to buy RTX cards at a price anywhere close to MSRP.

But today my jaw dropped when I saw an auction on a popular online portal where some guy was selling a place in a queue at another store. The title was "Rights to buy RTX 3080" and for 400$ you could potentially buy a GPU for a price close to MSRP. I think this was too much, and after a few hours the advert was taken down.5 -

As interesting as embedded Linux is, if you ever try to do something with GPU acceleration: stop! Go organize a spot with a psychiatrist first. You will need it!4

-

And Linus goes best GPU under 500$ bla bla bla. In other countries, you times that price by the exchange rate, then tax, and fuck it's 2k. And I make like 3k per month as a dev. That's not even 1k USD..

-

The Gold Rush of 1849.... everyone went to the west coast to mine gold.

The Bitcoin Rush of 2009.... everyone went to the GPU to mine Bitcoin.5 -

*writes programs with variables for arguments*

Runs.. Crashes

*removes variables places exact same code in parameter list*

Runs.. Succeeds.

I don't understand you my GPU -

Holy mother of butts. Two weeks. Two weeks I've been on and off trying to get hardware rendering to work in xorg on a laptop with an integrated nvidia hybrid gpu.

I know the workarounds and it's what I've been using otherwise. Nouveau without power management or forced software rendering works fine. I also know it's a known issue, this is just me going "but what the hell, it HAS to be possible".

The kicker is that using Nvidia's official tools will immediately break it and overwrite your xorg.conf with an invalid configuration.

I've never bought an nvidia gpu but all my work laptops have had them. Every time i set one up I can't resist giving this another shot, but I always hit a brick wall where everything is set up right but launching X produces a black screen where I can't even launch a new tty or kill the current one. I assume it's the power management tripping over itself.

The first time I tried getting this to work was about 3 or 4 years ago on a different laptop and distro. It's not a stretch to say that it would be better if nvidia just took down their drivers for now to save everyone's time.5 -

My Vega56 gpu is mining a bit faster than usual after the last reset. Not by a huge amount, but a little faster. Enough for it to be noticeable.

I don't wanna touch it. I just wanna let it sit there and happily chew away those hashes.

But I will have to touch it eventually, if I wanna turn my monitor off. If I wanna go to sleep.

Doing that will trigger a restart of the miner.

And I will never know if it will have that extra lil speed bump ever again.

Why is life so cruel2 -

Completed a Python project; it started out of interest but ended up as an academic project.

Smart surveillance system for a museum

Requirements

To run this you need a CUDA enabled GPU on your computer. (Highly recommended)

It will also run on computers without a GPU, i.e. it will run on your processor, giving you very poor FPS (around 0.6 to 1 FPS). You can use AWS too.

About the project

One needs to collect lots of images of the artifacts or objects for training the model.

Once the training is done you can simply use the model by editing the 'options' in webcam files and labels of your object.

Features

It continuously tracks the artifact.

Alarm triggers when artifact goes missing from the feed.

It marks the location where it was last seen.

Captures the face from the feed of suspects.

Alarm triggering when artifact is disturbed from original position.

Multiple feed tracking(If artifact goes missing from feed 1 due to occlusion a false alarm won't be triggered since it looks for the artifact in the other feeds)

Project link https://github.com/globefire/...

Demo link

https://youtu.be/I3j_2NcZQds

2 -

so I asked a question on linus tech tips discord channel that wasn't GPU related and the whole chat went quiet.

I mean, it's a tech channel are GPUs the only thing boys talk about now?

the new dicksize contest? lol11 -

i bought a new laptop and i can't fucking install ubuntu on it

and my supervisor asked me to show him ubuntu running on this laptop

before anyone points out that i m a noob

I have already installed ubuntu, arch, mint on my pc

here are the specs:

amd a9

radeon gpu

8gb ram

lenovo model 320 ideapad

ubuntu 18.04, 16.04, 17.04

and the ubuntu fucking hangs as soon as i get to the gui screen

found something with nomodeset, going to use that

I can't install arch cause I need to show ubuntu running on this laptop to him10 -

It seems like a really good deal to buy the card, then flip it and keep the games. What do you guys think 🤔

13 -

The year is 2025.

WSL2 still makes it a major, excruciating pain in the ass to expose a port over the network without having to look up the fucking command and think about the stupid cancerous networking model every time.

All I wanted to do was run some experiments with AI models on my gaming laptop using its GPU, and expose an API to my other laptop, having the latter as a client.

Guess I have to get fucking rid of Windows forever if I want a fucking usable computer.4 -
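For reference, the command in question is usually a `netsh interface portproxy` rule plus a firewall rule, both run on the Windows side from an elevated prompt. The sketch below only builds the command lines; it does not run them. It assumes WSL2's default NAT networking; the helper name, the rule name, the example IP and the port are all made up for illustration. The WSL address typically changes after a restart, so it has to be looked up again (for example with `hostname -I` inside WSL) each time.

```python
def portproxy_commands(wsl_ip, port, rule_name="wsl2-api"):
    """Build (not run) the Windows commands that forward a TCP port to WSL2.

    wsl_ip, port and rule_name are illustrative values supplied by the caller.
    """
    # Forward connections arriving on any Windows interface to the WSL2 VM.
    forward = [
        "netsh", "interface", "portproxy", "add", "v4tov4",
        f"listenport={port}", "listenaddress=0.0.0.0",
        f"connectport={port}", f"connectaddress={wsl_ip}",
    ]
    # Allow inbound traffic on that port through the Windows firewall.
    firewall = [
        "netsh", "advfirewall", "firewall", "add", "rule",
        f"name={rule_name}", "dir=in", "action=allow",
        "protocol=TCP", f"localport={port}",
    ]
    return [forward, firewall]


if __name__ == "__main__":
    # Example values only: replace with the real WSL2 address and API port.
    for cmd in portproxy_commands("172.20.0.2", 8000):
        print(" ".join(cmd))
```

Printing the commands instead of executing them keeps the sketch harmless; they can be pasted into an administrator shell once the address and port are right.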

Robbery of nearby future :

A broke dev decided to do a robbery by stealing the whole DAVE -2 system from the Tesla S3 model

When asked why he chose such a drastic path, he said "My client wanted the training to be ready within 2 days and I couldn't arrange that much GPU on such short notice, so I decided to do what I did.*ignored(But I reinstalled it back in the car)*

As you can see, clients have turned into money hungry, cock sucking, fist fucking, and God-knows-what-fetish wanting pieces of shit"

Over to you, Clara

3 -

I'm wondering if devs would still play that russian roulette in linux if the one drawing the short straw gets its GPU fried 🤔

Or at least something like that.2 -

Got a new win 10 laptop and installed creators update on it. It's running well so far, but a few friends told me that Creators update causes crashes and hugs and that I should roll back.

Anyone here with creators update experiences?

I have a laptop with win 10 home 1703, 8GB ram and Nvidia GPU, if any of that matters.13 -

You know apple has its dick deep inside your ass when you buy a Macbook pro with no discrete gpu for game development(unreal engine) and then shell out $1500 for a fried cpu.18

-

Question / Suggestions.

CPU : AMD or Intel ?

GPU : AMD or Nvidia ?

I want to build a High-end PC (Desktop).18 -

Name one thing more fun than atomically writing values into a gpu buffer and them mysteriously vanishing into the aether immediately after the compute shader invocation

I can literally see them in the buffer using RenderDoc and then as soon as I go to the next command the buffer is completely filled with zeros again as if the values never existed

?? like how ??8 -

!rant

hey devs,

I need help choosing a laptop.

I will need it for deep learning.

DELL G7 7588

config:

pro : i9-8950HK

gpu : GTX 1060

ram : 16 gb ddr4

ssd : 512

hdd : 1TB

your reviews please42 -

I finally got the refurbished laptop I ordered and..

wrong CPU, wrong number of cores

wrong GPU

only 1 USB port, I bought 3

battery is DOA

fuck aaa_pcs at ebay. they better replace this with what I bought or imma call Karen to talk to their manager

maybe I should check for spyware/backdoors/etc while I'm at it just because I'm pissed.

any suggestions? nothing is too petty if it doesn't void warranty6 -

Will the MacBook Pro 15 2018 be any good for Machine Learning. I know it's got an AMD (omg why?) And most ML frameworks only support CUDA but is it possible to utilise the AMD gpu somehow when training models / predicting?5

-

The feeling when you are very interested in AI, ML and want to do projects for college using TensorFlow and OpenAI........

But you only have i3 with 4GB RAM plus 0 GPU.

That sucks.... 😶4 -

TIL Dell servers from 2018 use a 20-year-old GPU because it's reliable. I honestly did not know this.

https://en.wikipedia.org/wiki/...

8 -

I'm going to build a PC. Everything is done besides the GPU.

I wanted to go full team red, but it turns out that the RX 5700 XT has a lot of driver issues.

I watched a hell lot of YouTube videos, benchmarks, forum posts, reddit, ...

Everyone is complaining about driver issues that haven't been fixed since the launch of the product.

That makes me want to go to team green and buy the NVIDIA 2070 SUPER GPU.

I post this as some sort of rant, but also as some sort of advice seeking post to double check my decision before I buy the 2070 SUPER.14 -

I've decided to switch my engine from OpenGL to Vulkan and my god damn brain hurts

Loader -> Instance -> Physical Devices -> Logical Device (Layers | Features | Extensions) | Queue Family (Count | Flags) -> Queues | Command Pools -> Command Buffers

Of course each queue family only supports some commands (graphics, compute, transfer, etc.) and everything is asynchronous so it needs explicit synchronization (both on the cpu and with gpu semaphores) too4 -

So if I buy this stuff, word has it that I will have "a computer." Is this enough to get to play with CUDA on a little tiny GPU?

23 -

!rant - it's a THANK YOU!

Had the problem so far that I could not start some apps in docker containers with GPU support (e. g. Chromium).

After a long search and a lot of help from the community, today's update of xf86-video-ati (1:7.8.0-2 -> 1:7.10.0-1) has finally fixed the bug. Yay!

Thank you very much Arch Linux and all the great maintainers. You're doing an awesome job.2 -

Best budget laptop for development?

(Or at least under $999 USD)

Would like:

- Web Development without lag.

- Be able to run Visual Studio without lag.

- Light, I don’t want to carry around a bricc (Ultraportable any good?).

- Thunderbolt 3 Support? (would like to attach a decent external GPU enclosure for home gaming every now and then).

Or basically something I’ll get my moneys worth and isn’t shit.

All preferences and suggestions are welcomed, just don’t be a douche about it 😘❤️8 -

FUCK NVIDIA FUCK FUCKFUJCK FMUJCKFU IKC JCF THFUCKJ TEUFKCJ TUFKC TUCFKFD TFUCJ RUF FCUKC FUCK THEM ALL TO HELL

I'M WASTING MY GODDAMN FUCKIJJNG WEEKEND TRYING TO GET THE STUPID SHITTYAS SS GTX 1050 LAPTOP GPU TO FUCKING WORK on MANJARO AND IT SEEMS LIKE THERE'S NO FUCKING WAY IN HELL I'LL BE ABLE TO DO IT

FUCK THIS GODDAMN COMPANY

HEY, NVDA STOCK IS GOING DOWN SOON, YOU SHOULD ALL FUCKING SELL IT RIGHT NOW AND WARN OTHERS TO DO THE SAME10 -

When i once came to the crib of a random girl to bang her ofcourse she had an asus laptop and that laptop was about 17" screen I think, the specs were fuckin brutal and i was shocked, i wanted to touch it and find out more about the laptop and specs and gpu and what model was it and then i realized i came there to fck her but i was staring at the laptop like an asshole1

-

~rant

Hey all! M gonna b buying a new laptop for programming.

I need something with like 16 gigs RAM, decent processor, SSD.

I can't buy thinkpad because well... It shud have been ~$750 but in my country, it costs $1200. And that is for the 8GB RAM config... E470... 570 isn't even available.

Hence since the lack of laptops without dedicated GPU but high configs, I basically can find 2 options:

Dell XPS

MacBook

So I wanna ask what would you guys prefer? I code in C/C++ pretty much exclusively. And I definitely like butter smooth functioning of OS.

If it ain't a MacBook, i'll b using Arch Linux.

Finally, I live in india.

So... Which one do I pick? And if u have a recommendation, I m open for that too. It shud just have good specs BUT NO DEDICATED GPU.

Thanks 😄8 -

Been spending the past two days setting up linux on my new work laptop which happens to have a hybrid nvidia GPU, in addition to requiring a usermade driver to get the docking station working. Both the (proprietary!) nvidia driver and displaylink driver demanded to rebuild the kernel, and the nvidia ones crashed during it.

I had no idea it could be this much of a hassle. Had I bought it myself I would have taken it back. Never buying a laptop with a discrete GPU ever again. Sweet butts. -

I finally gave up with windows (especially 10)

Used to be behind it and support it but the last bunch of updates is causing more of a headache and dont get me started on the "oh you disabled automatic rebooting..... going to reboot anyway and give you a 10 minute warning while you are asleep".

How bad did it get?

I have built a plan for my linux install that will allow me to play most games via linux (thanks to Wine and DXVK), and as I love playing Sea of Thieves, I got a few things set up so that when I start the windows vm, it will unload my gpu and give it to the vm. When I shut the vm down, it will reload it back into linux (thanks to joeknock90 if he's on here)

Tested this with my laptop with an external crappy GPU and works surprisingly well

Sources where i got where i am:

https://github.com/joeknock90/...

https://lutris.net/

https://looking-glass.hostfission.com/...

and yes Linus's vid also gave me a little push as well6 -

Any laptop suggestions? My budget is around £400, preferably AMD but Intel is acceptable

RAM isn't an issue as I have a spare 4GB ram stick

It's mainly just for programming, so a fancy GPU isn't important11 -

So I just found out that GL_MAX as a blend func is supported pretty much universally (~92%). Pretty nice considering I kinda gave up on my barycentrics-based anti-aliasing technique months ago7
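For anyone unfamiliar with it: in desktop OpenGL, GL_MAX is selected with `glBlendEquation`, and the result is the per-component maximum of the incoming fragment color and the color already in the framebuffer, with the blend factors ignored. The snippet below is a plain-Python sketch of that rule only, not real GL code; the function name and color values are invented for illustration, and how the post's anti-aliasing technique actually uses it is not stated in the source.

```python
def blend_max(src, dst):
    """Per-component max of two RGBA tuples, mimicking the GL_MAX equation."""
    return tuple(max(s, d) for s, d in zip(src, dst))


# Two overlapping fragments written to the same pixel (illustrative values).
fragment_a = (0.2, 0.8, 0.1, 1.0)
fragment_b = (0.5, 0.3, 0.1, 0.4)

if __name__ == "__main__":
    # The result does not depend on draw order, unlike ordinary alpha blending.
    print(blend_max(fragment_a, fragment_b))
    print(blend_max(fragment_b, fragment_a))
```

That order independence is one reason max blending is handy for things like coverage values, where overlapping primitives should not accumulate.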

-

Hey everyone, I need some help/opinions. I have almost no time anymore for a lot of things, especially sharing news here (not the Intel mess; I reported some of that stuff before it exploded). I share most of that news on my site, though. I really wanted to ask people here who work for hosting companies whether they know about Nvidia's idiotic change to the Terms of Service for GPU usage (https://legionfront.me/posts/1936), and I also want to know whether users here are looking for dedicated servers, mainly GPU servers, for their work, and what you're looking for (specs and such), or rather where

-

!rant

I have a Surface 3 for home dev stuff. I wanted to get back into C++ for graphic/GPU programming. However it uses an Intel Graphics 5000 chipset so I can't do CUDA and the Intel Media Server can't upgrade the graphics driver because it's a Surface.

What should I do? I would rather not build a system just to play.5 -

The display power savings feature on Intel GPU drivers is retarded. It reduces backlight brightness when you're viewing dark content and pumps it back up when you have something white on the screen. This is the complete opposite of what you need for keeping the image viewable with minimum backlight brightness.

The dark parts of the dark image can be kept the same brightness by reducing the backlight, but the white text you're reading will actually be darker, so you need to manually set the display brighter. And then you need to reduce brightness when you switch to a white window because now that's too bright. And as a bonus, the backlight brightness will keep adjusting when you change windows, which is super distracting.1 -

Any ideas what to do with this old computer?

CPU: AMD Athlon 64 X2 5000+ 2.60 GHz

RAM: 5 GB

GPU: Nvidia GeForce 9800 GT

Motherboard: ASUS M2N-E SLI

HDD: 150 GB17 -

So the tests for the AMD RX 7000 GPUs are out. Business as usual: superb for non-RT gaming given the price, crap at everything else - including energy efficiency in FPS/W.

Pro tip to the AMD marketing: you don't highlight features like energy where you suck relative to the competition. You point out your strong points. Admittedly, you don't have much to work with here.3 -

hard choice guys help me out

AI development needed, but little to no resources. Also, should I use a gpu or a cpu for these calculations

tesla k70 gpu -

google cloud - $0.7/hr

aws - $0.9/hr but full support upfront if any device issues and device switch instantly

dwave 2000q - don't know because too extreme for medium to large scale apps, also it's a quantum computer, prices might not be by the hour but by month/year3 -

Hardware Nerd Rant...

Spent nearly a year waiting for a super high end developer laptop. Tricked out MacBook Pro 2016: $4200+, Tricked out Surface Book 2/i7, $3700+

No 32GB ram because of Intel power consumption pre-Cannonlake. Mac doesn't have a touchscreen at its price point...

My gut ultimately says: NOPE.JPG

General hope is for GPU Docks like the Razer Core to come to Apple in 2017. Thoughts?5 -

Some hardware experts here? Looking to upgrade my PC soon, and would like some opinion on the parts I chose. I'm going for a minimalistic Mini-ITX productivity build, but gaming also.

- CPU: Ryzen 7 2700X

- CPU Cooler: AMD Wraith Prism

- GPU: MSI RX 570 8GB Armor (already have it)

- RAM: 2x 8GB TridentZ RGB

- 1. SSD: Corsair Force MP510 240GB M2 SSD

- 2. SSD: Samsung EVO 860 1TB SSD

- PSU: Corsair CX550M

- MoBo: Asus ROG Strix B350-L Mini-ITX

- Fans: 6x Thermaltake Riing 12 RGB

- Case: NZXT H200i24 -

An eventful day:

Because of my recent amateur thermopaste application onto a heatpipe that connects a laptop CPU and a discrete GPU and *ingenious* HP ProBook engineering my Radeon graphics have fried yesterday.

On the bright side, got the Nougat update for my Samsung S6, with bright hopes that it will help restore the state of an unresponsive fingerprint scanner... nope, that is still broken.

Summer is near, exams finished, time for some DIY on used and abused tech! :D2 -

Finally got around to some real video encoding work on my new computer but noticing it's not blazing fast...

And more work is still handled by the CPU... But I thought video processing is handled by the GPU, which seems to be barely used at all. I'm using Handbrake but I thought the whole point of dedicated GPU was for intensive graphics and video processing?6 -

Bought fucking nvidia gpu to test speed of some fucking machine learning models that generate speech.

6 hours wasted already for installing fucking dependencies

cuda, fucking tensorflow gpu, bazel and other shit

Fucking resetting password to download deb with cudnn,

really ??????? fucking emails are not delivered to my fucking mailbox

After mass click of send email and multiple account ban and unban I figured out I should login to nvidia website and then allow access to fucking developer every time I want to log in there - fuck shit

Uninstalling everything now looking for fucking compatible versions between software.

10 years in this business still fucking installation of dependencies is most difficult part

Fucking corporate business and their shitty installation instructions to fuck up peoples lives and switch them to the cloud.

Same was with fucking kubernetes

Fucking software dependency hell

It’s worse than ever before.

Fuck ....2 -

"The more GPUs you buy, the more money you save" - Jensen Huang. ASUS finally promoting GPU mining app on their RTX official webpage! Good luck with saving money!!!

6 -

I am thinking about building new PC, so I made a list of components. What do you think? Any suggestions?

I don't know which GPU model/brand to get yet. Would like to play at 1440p >60Hz so GTX1070 is not enough if I don't wanna buy new GPU in a few years (probably 4-5 years as I always do).

- CPU: AMD RYZEN 7 2700X

- GPU: GTX 1080 Ti / GTX 1080

- HDD: WD Gold 2TB

- SSD: Samsung 860 EVO 250 GB

- RAM: Patriot Viper RGB Series 16 GB KIT DDR4 3200 MHz CL16 DDR4 Black

- MB: ASUS ROG STRIX B450-F GAMING

- CAS: NZXT H500 Black

- PSU: Corsair HX75012 -

I'm about to buy a new desktop PC and am looking for one that can run Visual Studio 2015 without problems; I also use NetBeans and MySQL. I was thinking of an amd fx 8230 e (8 core) with 16 gb ram and a 1gb video card ... or an amd a10 7860k (4 core / integrated gpu), also with 16 gb ram. What do you guys think? What's better for software development? I'd appreciate some devs' advice17

-

Can anyone recommend a good notebook for mobile/desktop development? Mainly Java, Android, .NET and Unity3D projects. Dedicated GPU is a big plus.13

-

Anybody has a good recommendation for a laptop for mostly full stack web development?

I think I should look for following features:

- minimum 16G ram

- Although it's 2021, just in case, I'll add: USB-C to connect to a dock with two screens, and an SSD

- I'll run several docker containers at once

- time to time I make non-exhaustive work on c++

- good screen dpi

- I use linux

- portable. No need for the lightest on the market, but easy to carry in a bag. Good battery.

- not too expensive

I can save on:

- I don't need the latest processor, just a good one

- I'm not a gamer. I don't need the latest GPU. However, some GPU is appreciated. I don't need colorful LEDs either.

Do you have any recommendations on laptops and/or features to search for/avoid?8 -

What a pain it is getting Linux/Arch setup perfectly on a MacBook Pro. Overheating like a mofo. ACPI shitstorm, integrated GPU disabled by default and need a hack to enable it outside of macos, fan control is wack.

Solved most of this crap but still can't completely disable the Nvidia GPU, so both integrated and dedicated are powered on. Frustrated AF.2 -

Anyone know of any GPU accelerated Machine Learning libraries that DON'T need Python, something maybe using C/C++, C# or Java?12

-

How can you tell that your PC is under a heavy load?

When, despite having your high-quality active noise canceling headphones on and playing games, you can still hear what must be your GPU having a heatstroke and having its fans spin at 100%.

Never knew it can be this loud. Or maybe the headphones are just crap.3 -

So I bought a gtx1650 gpu for my old phenom II X4 pc. It didn’t work – the screen went black about five minutes after powering up the pc.

I was disappointed, but instead of returning the gpu, I bought all the other components to build a new pc on a ryzen cpu. Including the gpu, it all was like $400 and I still have all my old parts to sell.

Now I’m here, playing all the latest games like doom and wolfenstein on ultra in 1080p 60fps and I’m more than happy.

I basically found a way to convert my bad experience into good experience. I’m just off my therapy, so all that bad experiences that may seem insignificant are a big deal for me.

I didn’t know it was possible to make good emotions out of bad emotions that easily. If only I knew a way to apply this strategy to any arbitrary situation.

(please miss me with that boomer bullshit like “nothing is wrong stop whining and get over it” etc. I’ve been there, I’ve done that and I needed medical treatment afterwards. “Getting over it” just doesn’t work)6 -

Took my first PTO today since joining the new company to get my wisdom teeth extracted. Already took care of that first thing this morning. Now I actually get to kick back and listen to some records until my new GPU is delivered, and then I get to fuck off the rest of the weekend with new games because my wife says I can't do any yard work this weekend.

Only had the top two extracted because I was born without the bottoms I guess. But less pain on my wallet and my jaw, I'll happily trade in the narcotics they gave me and stick to my scotch.

Not a bad 3 day weekend in my book. I'll have to go back and figure out who/what broke the projection runner in the sandbox environment when I go back, but eh. That's a Monday problem now. 😎4 -

N64 emulation possible on Nintendo hardware? Are you SUUUUUUURE???

According to GBATemp:

Wii:

CPU: Single-core PPC, 729MHz

GPU: 243 MHz single-core

RAM: 88MB

Possible? Yes (always full-speed)

New 3DS:

CPUs: 2, Quad-core ARM11 (804MHz), Single-core ARM9 (134MHz)

GPU: 268MHz single-core

RAM: 256MB

Possible? No

Switch:

CPU: Octa-core (2x Quad-core ARMv8 equivalent), 1.02GHz

GPU: 307.2MHz-768MHz (max. 384MHz when undocked)

RAM: 4GB

Possible? Barely (5FPS or less constant, only half the instruction set possible to implement)

this all makes total fucking sense

7 -

Finally getting to really try out WSL2.

Something must have changed since I last tried it because it's working great running my Jetbrains IDEs graphically and I am able to run NGINX and MySQL no problem. I even have caja installed and it runs great too.

It feels so much snappier than VirtualBox, even though the GPU acceleration is still not really working.

I'm mixed on finally learning Docker. It's a kind of insidious evil that is damaging the industry in a hundred ways, but it is an industry staple so not learning it would be foolish.3 -

Had a work project I needed to do in After Effects but I didn't have my laptop with me. I ended up rendering my project on a 5th gen i5 laptop with no GPU. The room smells like burnt plastic now. Someone send me a GPU.

6 -

I need a powerhouse laptop: top CPU, loads of RAM and a decent GPU to run games occasionally (doesn't have to be ultra high graphics). Any brand recommendations? I love building computers, but buying laptops is really overwhelming.17

-

I've recently managed to install Linux on my laptop (after endless GPU-related errors solved by a BIOS update) and have been using it since (kept Windows only for gaming). Booting into Windows after one month feels like it's gonna make my laptop explode slowly2

-

Has anyone (who does either Data Analysis, ML/DL, NLP) had issues using AMD GPUs?

I'm wondering if it's even worth considering or if it's too early to think about investing in computers with such GPUs.10 -

Any idea how to build a server based on a GPU? Yeah, like the movie? We'd need to build the whole machine; it would be impossible to swap a CPU for a GPU in the current architecture... just a thought... however, the problem I would have with making a home server is not even the hardware or the electricity bill, but my unstable internet connection..1

-

Fuck this Apple Macbook Pro 16" which takes 20W to drive two simple full HD displays in idle mode off the dedicated GPU with fans running at 3000 RPM.

I would love to get back my 2013 MBP which worked flawlessly without hearing the fans even in the hardest conditions while compiling stuff.

Even the most basic and cheap Windows notebooks are able to drive two displays with 1W and no fans whatsoever in idle mode.

Damn Apple. Fuck this notebook.12 -

So, I bought a gaming laptop to have a desktop replacement on the move.

Issue is, when I stress it, it's... Loud af, and runs really hot (~90°C)

Is that normal for gaming laptops? I dunno if I should return it as faulty or just get used to it.

It's Asus ROG Strix Scar III G531GW - An i7 9750H and 2070.

The temp issues only seem to be about the cpu, gpu runs around 80°C and is fine...12 -

I need to reinstall Linux due to reasons. When I set this PC up I went for Kubuntu, but I've been having a lot of trouble with my Nvidia graphics card.

Is there a distro that you'd recommend that works nicely with an Nvidia GPU? I'm open to all alternatives.13 -

Hey Guys,

I am planning on getting a Vega 56 for Linux. Does anyone know how it performs? I heard a lot of good stuff about the Vega 64 on Linux. But the power consumption is quite high and I am not sure if the drivers work well.

So I was wondering if it's actually worth it or not.6 -

What actual pain is: trying to install CUDA on Windows (which is a pain in itself) and, after 3 hours, realizing your lappie doesn't support it (GeForce 820M)! There goes my dream of Theano, PyCUDA and tensorflow-gpu ...6

-

Setting up an expo react / react native is a far worse feeling than installing GPU drivers + cuda toolkit for pytorch.

I have no idea how react devs are dealing with this shit. This is so horrible. Wtf is babel ? wtf is expo ? Wtf is SDK ? Wtf is eas ????????????????????2 -

So I'm planning to build a PC, which I will mainly use for dev and for gaming in my free time. My main components will be:

CPU: INTEL 8700K

GPU: GTX 1080 msi or gigabyte?

SSD: 860 EVO

RAM: 16GB 3200MHZ

MOTHERBOARD: should i go with msi or gigabyte, which one is better?

PSU: 650W or 700W deepcooler?

Also for the cpu cooler do i get water cooling or a standard cpu fan?

P.S: i plan to overclock the cpu and gpu at some point.

Also what's your opinion on RGB lighting on the GPU and motherboard, and is there a point in getting a mobo with SLI support (is it worth buying a second GPU at some point, or better to upgrade the existing one)?4 -

I have one question to everyone:

I am basically a full stack developer who works with cloud technologies for platform development. For the past 5-6 months I have been working on a product which uses machine learning algorithms to generate metadata from video.

One of the algorithms uses TensorFlow to predict the locale from an image. It takes an image of ~500 KB and needs around 15 sec to predict the 5 possible locales from a pre-trained model. Now when I send more than 100 requests to the code concurrently, it stops working and TensorFlow throws some error. I am using a 32-core vCPU machine with 120 GB RAM. When I ask the decision scientists on my team, they say that the processing load is high. A lot of calculation is happening behind the scenes. It requires a GPU.

As far as I understand, GPUs make sense while training, but for prediction or testing I do not think we need such heavy infra. Please help me understand if I am wrong.

PS: all the decision scientists in the team are basically dumb fucks, and they always have one answer: use a GPU.8 -

Imma guess the answer is no but has anyone ever heard of an external CUDA-enabled GPU you can plug into your laptop? and maybe a separate power jack lol4

-

Do any of you use Fedora with an Nvidia GPU?

The distro seems pretty cool, but I doubt I'll get everything to work, since Fedora doesn't offer premade packages for proprietary drivers.3 -

For learning purposes, I made a minimal TensorFlow.js re-implementation of Karpathy’s minGPT (Generative Pre-trained Transformer). One nice side effect of having the 300-lines-of-code model in a .ts file is that you can train it on a GPU in the browser.

https://github.com/trekhleb/...

The Python and Pytorch version still seems much more elegant and easy to read though...

2 -

After ten reinstallations, my first fully operational FreeBSD virtual machine is finally ready.

An OS full of surprises for an Arch-fag like me.

It's just like Arch, but without simple things being complicated. Even though the base system doesn't come with anything installed, in ten minutes you have a fully operational system, even with a configured desktop environment.

The downside: drivers. Not every machine can host FreeBSD; for example, AMD GPUs can't send audio through the HDMI port.

Personally i love the BSD License, so i think this OS will be my permanent one after Windows 101 -

My department just installed a new high performance GPU, so :

1. Good bye, my old laptop GPU!

2. Let's play around and break the shiny thing! 😎

(more likely I will be the one who breaks down due to frustration though 😬)1 -

Man i really need to get off my windows host.

My productivity takes a nosedive whenever im on windows idk why.

I'd love to use linux fully but my fav game Overwatch has shit performance running on linux.

So the best solution would be to pass through my gpu to a windows vm for gaming.

But that would require a new gpu for the host system as the ryzen 7 1700 does not have a gpu.

I don't have any experience with passing through GPUs. But could I make 2 VMs that access the same GPU, ofc not at the same time? That way I could have a gaming VM and maybe use another Linux VM if I wanted to do something which profits off GPU acceleration.11 -

Hello my fellow dev ranters! I've been speaking with my dad and he has been complaining about how slow his old Windows machine is. I was thinking of moving it to a Linux distro, but I don't know which.

The machine has 2 GB of RAM, an OS disk of about 200 GB, an ATI Radeon 2400 GPU and an Intel dual-core CPU.

With these specs, and taking into account that the final user is a person who mostly uses the PC for web browsing, sports streams and movies, what would be a good, lightweight and simple to use Linux distro?

Thank you in advance!3 -

Fuck sake, had to change the cooling system on my desktop, figured out the new cooler (Noctua) is too big to fit the GPU back in, changed the HDMI output to the motherboard (my CPU is an APU) and now it's a black screen all the time :(

Is it fucking normal that as soon as the old GPU is missing, the system isn't capable of switching to the embedded one? Fuck me.10 -

I'm pissed at my laptop. It's using the onboard graphics instead of the dedicated graphics card. I've searched everywhere and can't find a solution. I've tried just disabling the onboard graphics in the device manager, but then I can't plug in external monitors. And on top of that, when opening a game it doesn't even use the GPU, it uses the CPU.

Anyone have advice? The onboard is AMD Radeon R7 and the card is an AMD RX 560 -

When you go to "Oh they do it cheap", don't expect results...

Changed my PC build around 2 years ago.

Went from Core i7 / Nvidia to Ryzen 9 / AMD

Welp, AMD is totally unstable.

I've invested 5k $ so I'm gonna ride it, but NEVER, EVER EVER again I'm buying AMD CPU or GPU.

Shit is unstable as fuck. I have latency issues, CPU issues, Video issues almost every week.

With the Intel/nVidia combo I had before, I had issues maybe once every 3-4 months.

So yeah, buy low cost AMD, you pay the price later in usability. Fuck them.20 -

So one of my first rants was about me being unable to set up Debian with (lightdm) Cinnamon to work on an Optimus laptop and to make the damn HDMI port work, where the port is attached to the Nvidia GPU (VGA passthrough?)

I have to try it with another distro because the dual-booted Windows greatly feeds my procrastination. (Like ... Factorio, Stellaris, Rimworld and etc. type of procrastination, it's getting somewhat severe. )

So what would you people of devrant recommend me to try? I am thinking a lot about Arch but I am afraid there will be a lot more problems with the lenovo drivers for various things.

The next one is classic Ubuntu; in the end this distro looks like it's at least trying to work, compared to other distros.

Also thought about Fedora because yum and RedHat. ( ..lol )

Thx ppl.2 -

Okay, so because my desktop has an APU (AMD A8-3850) and a dedicated GPU (AMD R9 380) in it, and i'm finally getting a (small, probably 240GB because budget) SSD for it, what Linux distro should I use? I'm planning on doing libvirt passthrough for Windows using my APU because fuck running it as a main anymore, it breaks too often. As far as I can tell, my options are as such, family-wise:

- Debian kernel: amdgpu doesn't like that I have an APU and GPU and refuses to see a screen (yes, even after all the Xorg configs and xrandr bullshit and kernel flags and...)

- RHEL: a lot of Red Hat-based distros (mainly Fedora) have packages that are broken out-of-repo and out-of-box recently, but maybe it'll like my hardware? (It's been a few Fedora releases since I last tried it, is this fixed? CentOS has such old packages that it's not even worth bothering with for my needs.)

- Arch kernel: go fuck yourself, i don't wanna take 1000 hours to get it running for a week, nor would the updates be any better than Windows' current problem (or even more so, as slightly more often than not Windows' broken updates just add annoyances and don't hose the system.)

did I miss any?25 -

Why is there no language that can run on a GPU? Not counting GLSL and the like, as they're shader languages and are only used for that.5

-

Anybody got any tips on how to not get kicked out of the Google colab books? Everything I’ve found is from like 2020ish

-