Search - "out of memory"

-

Being paid to rewrite someone else's bad code is no joke.

I'll give the dev this, the use of gen 1,2,3 Pokemon for variable names and class names is beyond fantastic in terms of memory and childhood nostalgia. It would be even more fantastic if he spelt the names correctly, or used it to make a Pokemon game and NOT A FUCKING ACCOUNTANCY PROGRAM.

There's no correspondence in name according to type, or even number. Dev has just gone batshit, left zero comments, and now somehow Ryhorn is shitting out error codes because of errors existing in Charmeleon's asshole.

The things I do for money...

-

Toilets and race conditions!

A co-worker asked me what issues multi-threading and shared memory can have. So I explained locking to him. He wasn't quite sure whether he got it.

Me: imagine you go to the toilet. You check whether there's enough toilet paper in the stall, and it is. BUT now someone else comes in, does business and uses up all paper. CPUs can do shit very fast, can't they? Yeah and now you're sitting on the bowl, and BAMM out of paper. This wouldn't have happened if you had locked the stall, right?

Him: yeah. And with a single thread?

Me: well if you're alone at home in your apartment, there's no reason to lock the door because there's nobody to interfere.

Him: ah, I see. And if I have two threads, but no shared memory, then it is as if my wife and I are at home, each with a toilet of our own, so we don't need to lock either.

Me: exactly!

-
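The toilet-stall analogy above can be sketched in a few lines of Python. This is only an illustration, not anything from the original rant: the `Stall` class, the `simulate` helper and all the numbers are made up. The point is that the "check the paper" step and the "use the paper" step happen behind the same locked door, so nobody can empty the roll in between.

```python
import threading


class Stall:
    """Hypothetical shared resource: one stall with a limited paper supply."""

    def __init__(self, sheets: int) -> None:
        self.sheets = sheets
        self.turned_away = 0
        self.lock = threading.Lock()  # the door lock

    def visit(self, needed: int) -> bool:
        # Holding the lock makes check-then-use a single step that no
        # other thread can interleave with.
        with self.lock:
            if self.sheets >= needed:
                self.sheets -= needed
                return True
            self.turned_away += 1
            return False


def simulate(visitors: int = 50, sheets: int = 100, needed: int = 3) -> Stall:
    """Send `visitors` threads into one stall and wait for all of them."""
    stall = Stall(sheets)
    threads = [
        threading.Thread(target=stall.visit, args=(needed,))
        for _ in range(visitors)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stall
```

With two threads and no shared `Stall` (each thread owning its own), the lock would be unnecessary, which is the co-worker's "a toilet of our own" case.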

We had a Commodore64. My dad used to be an electrical engineer and had programs on it for calculations, but sometimes I was allowed to play games on it.

When my mother passed away (late 80s, I was 7), I closed up completely. I didn't speak, locked myself into my room, skipped school to read in the library. My dad was a lovely caring man, but he was suffering from a mental disease, so he couldn't really handle the situation either.

A few weeks after the funeral, on my birthday, the C64 was set up in my bedroom, with the "programmers reference guide" on my desk. I stayed up late every night to read it and try the examples, thought about those programs while in school. I memorized the addresses of the sound and sprite buffers, learnt how programs were managed in memory and stored on the cassette.

I worked on my own games, got lost in the stories I was writing, mostly scifi/fantasy RPGs. I bought 2764 eproms and soldered custom cartridges so I could store my finished work safely.

When I was 12 my dad disappeared, was found, and hospitalized with lost memory. I slipped through the cracks of child protection, felt responsible to take care of the house and pay the bills. After a year I got picked up and placed in foster care in a strict Christian family who disallowed the use of computers.

I ran away when I was 13, rented a student apartment using my orphanage checks (about €800/m), got a bunch of new and recycled computers on which I installed Debian, and learnt many new programming languages (C/C++, Haskell, JS, PHP, etc). My apartment mates joked about the 12 CRT monitors in my room, but I loved playing around with experimental networking setups. I tried to keep a low profile and attended high school, often faking my dad's signatures.

After a little over a year I was picked up by child protection again. My dad was living on his own again, partly recovered, and in front of a judge he agreed to be provisional legal guardian, despite his condition. I was ruled to be legally an adult at the age of 15, and got to keep living in the student flat (nation-wide foster parent shortage played a role).

OK, so this sounds like a sob story. It isn't. I fondly remember my mom, my dad is doing pretty well, enjoying his old age together with a nice woman in some communal landhouse place.

I had a bit of a downturn from age 18-22 or so, lots of drugs and partying. Maybe I just needed to do that. I never finished any school (not even high school), but managed to build a relatively good career. My mom was a biochemist and left me a lot of books, and I started out as lab analyst for a pharma company, later went into phytogenetics, then aerospace (QA/NDT), and later back to pure programming again.

Computers helped me through a tough childhood.

They awakened a passion for creative writing, for math, for science as a whole. I'm a bit messed up, a bit of a survivalist, but currently quite happy and content with my life.

I try to keep reminding people around me, especially those who have just become parents, that you might feel like your kids need a perfect childhood, worrying about social development, dragging them to soccer matches and expensive schools...

But the most important part is to just love them, even if (or especially when) life is harsh and imperfect. Show them you love them with small gestures, and give their dreams the chance to flourish using any of the little resources you have available.

-

Like many others, my favourite shameless hack is a cronjob to restart our app server at 2am, thus preventing out of memory exceptions.

-

iOS: Hey, human wanna hear a joke?

Me: Sure.

iOS: Out of Memory.

Me: What?

iOS: I ain't explaining shit.

-

A guy on another team who is regarded by non-programmers as a genius wrote a python script that goes out to thousands of our appliances, collects information, compiles it, and presents it in a kinda sorta readable, but completely non-transferable format. It takes about 25 minutes to run, and he runs it himself every morning. He comes in early to run it before his team's standup.

I wanted to use that data for apps I wrote, but his impossible format made that impractical, so I took apart his code, rewrote it in perl, replaced all the outrageous hard-coded root passwords with public keys, and added concurrency features. My script dumps the data into a memory-resident backend, and my filterable, sortable, taggable web "frontend"(very generous nomenclature) presents the data in html, csv, and json. Compared to the genius's 25 minute script that he runs himself in the morning, mine runs in about 45 seconds, and runs automatically in cron every two hours.

Optimized!

-

So this was a couple years ago now. Aside from doing software development, I also do nearly all the other IT related stuff for the company, as well as specialize in the installation and implementation of electrical data acquisition systems - primarily amperage and voltage meters. I also wrote the software that communicates with this equipment and monitors the incoming and outgoing voltage and current and alerts various people if there's a problem.

Anyway, all of this equipment is installed into a trailer that goes onto a semi-truck as it's a portable power distribution system.

One time, the computer in one of these systems (we'll call it system 5) had gotten fried and needed replaced. It was a very busy week for me, so I had pulled the fried computer out without immediately replacing it with a working system. A few days later, system 5 leaves to go work on one of our biggest shows of the year - the Academy Awards. We make well over a million dollars from just this one show.

Come the morning of show day, the CEO of the company is in system 5 (it was on a Sunday, my day off) and went to set up the data acquisition software to get the system ready to go, and finds there is no computer. I promptly get a phone call with lots of swearing and threats to my job. Let me tell you, I was sweating bullets.

After the phone call, I decided I needed to try and save my job. The CEO hadn't told me to do anything, but I went to work, grabbed an old Windows XP laptop that was gathering dust and installed my software on it. I then had to build the configuration file that is specific to system 5 from memory. Each meter speaks the Modbus over TCP/IP protocol, and thus each meter has a different bus id. Fortunately, I'm pretty anal about this and tend to follow a specific method of id numbering.

Once I got the configuration file done and tested the software to see if it would even run properly on Windows XP (it did!), I called the CEO back and told him I had a laptop ready to go for system 5. I drove out to Hollywood and the CFO (who was there with the CEO) had to walk about a mile out of the security zone to meet me and pick up the laptop.

I told her I put a fresh install of the data acquisition software on the laptop and it's already configured for system 5 - it *should* just work once you plug it in.

I didn't get any phone calls after dropping off the laptop, so I called the CFO once I got home and asked her if everything was working okay. She told me it worked flawlessly - it was Plug 'n Play so to speak. She even said she was impressed, she thought she'd have to call me to iron out one or two configuration issues to get it talking to the meters.

All in all, crisis averted! At work on Monday, my supervisor told me that my name was Mud that day (by the CEO), but I still work here!

Here's a picture of the inside of system 8 (similar to system 5 - same hardware)

-

https://git.kernel.org/…/ke…/... sure some of you are working on the patches already, if you are then lets connect cause, I am an ardent researcher for the same as of now.

So here it goes:

As soon as the kernel page table isolation (KPTI) bug is out of embargo, WhatsApp and FB will be flooded with overnight kernel "shikhuritee" experts who will share shitty advice non-stop.

1. The bug under embargo is a side channel attack, which exploits the fact that Intel chips come with speculative execution without proper isolation between user pages and kernel pages. Therefore, a carefully scheduled timing attack can reveal some information from kernel pages while the code is running in user mode.

In easy terms, if you have a VPS, another person with a VPS on the same physical server may read memory being used by your VPS, which will result in unwanted data leakage. To make matters worse, malicious JS from an innocent looking webpage might be (might be, because JS does not provide language constructs for such fine grained control; at least none that I know as of now) able to read kernel pages, and pwn you real hard, real bad.

2. The bug comes from too much reliance on Tomasulo's algorithm for out-of-order instruction scheduling. It is not yet clear whether the bug can be fixed with a microcode update (and if not, Intel has to fix this in silicon itself). As far as I can dig, there is nothing that hints that this bug is fixable in microcode, which makes the matter much worse. Also according to my understanding a microcode update will be too trivial to fix this kind of a hardware bug.

3. A software-only remedy is possible, and that is being implemented by all major OSs (including our lovely Linux) in kernel space. The patch forces Translation Lookaside Buffer to flush if a context switch happens during a syscall (this is what I understand as of now). The benchmarks are suggesting that slowdown will be somewhere between 5%(best case)-30%(worst case).

4. Regarding point 3, individual syscalls don't matter much. The only thing that matters is how many times syscalls are made. For example, if you are using read() or write() on 8MB buffers, you won't have too much slowdown; but if you are calling the same syscalls once per byte, a heavy performance penalty is guaranteed. All processes which are I/O heavy are going to suffer (hosting and databases are two common examples).

5. The patch can be disabled in Linux by passing argument to kernel during boot; however it is not advised for pretty much obvious reasons.

6. For gamers: this is not going to affect games (because those are not I/O heavy)
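Point 4 above is about the number of kernel entries, not the amount of data. As a rough sketch (not from the original post; `write_in_chunks` and `compare` are made-up helper names, and the 4096-byte payload is arbitrary), the following counts how many `os.write` calls it takes to write the same data one byte at a time versus in a single buffer. It does not measure the KPTI slowdown itself, only how many user-to-kernel transitions each approach pays for.

```python
import os
import tempfile
from typing import Tuple


def write_in_chunks(path: str, data: bytes, chunk_size: int) -> int:
    """Write `data` in `chunk_size` pieces; return the number of write calls."""
    calls = 0
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        view = memoryview(data)
        offset = 0
        while offset < len(data):
            # Each os.write is one trip into the kernel.
            written = os.write(fd, view[offset:offset + chunk_size])
            offset += written
            calls += 1
    finally:
        os.close(fd)
    return calls


def compare(size: int = 4096) -> Tuple[int, int]:
    """Return (calls when writing per byte, calls when writing one buffer)."""
    data = bytes(size)
    with tempfile.TemporaryDirectory() as tmp:
        per_byte = write_in_chunks(os.path.join(tmp, "per_byte.bin"), data, 1)
        one_shot = write_in_chunks(os.path.join(tmp, "one_shot.bin"), data, size)
    return per_byte, one_shot
```

Whatever extra cost the patch adds per kernel entry is paid thousands of times in the per-byte case and once in the single-buffer case, which is why buffered I/O is expected to suffer far less.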

Meltdown: "Meltdown", targeted at desktop chips, can read kernel memory from the L1D cache. Only Intel is affected by this variant.

Spectre: Spectre is a hardware vulnerability in implementations of branch prediction that affects modern microprocessors with speculative execution, by allowing malicious processes access to the contents of other programs' mapped memory. Works on all chips including Intel/ARM/AMD.

For updates refer to the kernel tree: https://git.kernel.org/…/ke…/...

For further details and more chit-chats refer: https://lwn.net/SubscriberLink/...

~Cheers~

(Originally written by Adhokshaj Mishra, edited by me.)

-

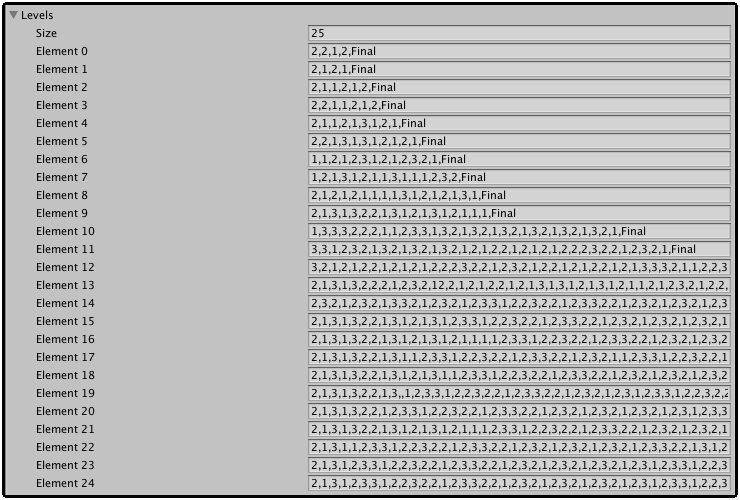

So I cracked prime factorization. For real.

I can factor a 1024 bit product in 11hours on an i3.

No GPU acceleration, no massive memory overhead. Probably a lot faster with parallel computation on a better cpu, or even on a gpu.

4096 bits in 97-98 hours.

Verifiable. Not shitting you. My heart's beating out of my fucking chest. Maybe it was an act of god, I don't know, but it works.

What should I do with it?

-

In a user-interface design meeting over a regulatory compliance implementation:

User: “We’ll need to input a city.”

Dev: “Should we validate that city against the state, zip code, and country?”

User: “You are going to make me enter all that data? Ugh…then make it a drop-down. I select the city and the state, zip code auto-fill. I don’t want to make a mistake typing any of that data in.”

Me: “I don’t think a drop-down of every city in the US is feasible.”

Manager: “Why? There cannot be that many. Drop-down is fine. What about the button? We have a few icons to choose from…”

Me: “Uh..yea…there are thousands of cities in the US. Way too much data for anyone to realistically scroll through”

Dev: “They won’t have to scroll, I’ll filter the list when they start typing.”

Me: “That’s not really the issue and if they are typing the city anyway, just let them type it in.”

User: “What if I mistype Ch1cago? We could inadvertently be out of compliance. The system should never open the company up for federal lawsuits”

Me: “If we’re hiring individuals responsible for legal compliance who can’t spell Chicago, we should be sued by the federal government. We should validate the data the best we can, but it is ultimately your department’s responsibility for data accuracy.”

Manager: “Now now…it’s all our responsibility. What is wrong with a few thousand item drop-down?”

Me: “Um, memory, network bandwidth, database storage, who maintains this list of cities? A lot of time and resources could be saved by simply paying attention.”

Manager: “Memory? Well, memory is cheap. If the workstation needs more memory, we’ll add more”

Dev: “Creating a drop-down is easy and selecting thousands of rows from the database should be fast enough. If the selection is slow, I’ll put it in a thread.”

DBA: “Table won’t be that big and won’t take up much disk space. We’ll need to set up stored procedures, and data import jobs from somewhere to maintain the data. New cities, name changes, etc.”

Manager: “And if the network starts becoming too slow, we’ll have the Networking dept. open up the valves.”

Me: “Am I the only one seeing all the moving parts we’re introducing just to keep someone from misspelling ‘Chicago’? I’ll admit I’m wrong or maybe I’m not looking at the problem correctly. The point of redesigning the compliance system is to make it simpler, not more complex.”

Manager: “I’m missing the point to why we’re still talking about this. Decision has been made. Drop-down of all cities in the US. Moving on to the button’s icon ..”

Me: “Where is the list of cities going to come from?”

<few seconds of silence>

Dev: “Post office I guess.”

Me: “You guess?…OK…Who is going to manage this list of cities? The manager responsible for regulations?”

User: “Thousands of cities? Oh no …no one in our area has time for that. The system should do it”

Me: “OK, the system. That falls on the DBA. Are you going to be responsible for keeping the data accurate? What is going to audit the cities to make sure the names are properly named and associated with the correct state?”

DBA: “Uh..I don’t know…um…I can set up a job to run every night”

Me: “A job to do what? Validate the data against what?”

Manager: “Do you have a point? No one said it would be easy and all of those details can be answered later.”

Me: “Almost done, and this should be easy. How many cities do we currently have to maintain compliance?”

User: “Maybe 4 or 5. Not many. Regulations are mostly on a state level.”

Me: “When was the last time we created a new city compliance?”

User: “Maybe, 8 years ago. It was before I started.”

Me: “So we’re creating all this complexity for data that, realistically, probably won’t ever change?”

User: “Oh crap, you’re right. What the hell was I thinking…Scratch the drop-down idea. I doubt we’ll have a new city regulation anytime soon and how hard is it to type in a city?”

Manager: “OK, are we done wasting everyone’s time on this? No drop-down of cities...next …Let’s get back to the button’s icon …”

Simplicity 1, complexity 0.

-

Lads, I will be real with you: some of you show absolute contempt to the actual academic study of the field.

In a previous rant, another ranter brought up being asked to implement a binary search.

For a senior in the field of software engineering and computer science, such a question should have a simple answer, depending on the type of job application in question. Especially if you are applying as a SENIOR.

I am tired of this strange self-learner mentality that those that have a degree or a deep grasp of these fundamental concepts are somewhat beneath you because you learned to push out a website using the New Boston tutorials on youtube. FOR every field THAT MATTERS a license or degree is held in high regard.

"Oh I didn't go to school, shit is for suckers, but I learned how to chop people up and kinda fix it from some tutorials on youtube" <---- try that for a medical position.

"Nah it's cool, I can fix your brakes, learned how to do it by reading blogs on the internet" <--- maintenance shop

"Sure can write the controller processing code for that Boeing plane! Just got done with a low level tutorial on some websites! what can go wrong!"

(The same goes for military devices which in the past have actually killed mfkers in the U.S)

Just recently a series of people were sent to jail because of a bug in software. Industries NEED to make sure a mfker has aaaall of the bells and whistles needed for running and creating software.

During my masters degree, it fucking FASCINATED me how many mfkers were absolutely completely NEW to the concept of testing code, some of them with years in the field.

And I know what you are thinking "fuck you, I am fucking awesome" <--- I AM SURE YOU BLOODY WELL ARE but we live in a planet with billions of people and millions of them have fallen through the cracks into software related positions as well as complete degrees, the degree at LEAST has a SPECTACULAR barrier of entry during that intro to Algos and DS that a lot of bitches fail.

NOTE: NOT knowing the ABSTRACTIONS over the tools that we use WILL eventually bite you in the ASS because you do not fucking KNOW how these are implemented internally.

Why do you think compiler designers, kernel designers and embedded developers make the BANK they make? Because they don't know memory efficient ways of deploying a product with minimal overhead without proper data structures and algorithmic thinking? NOT EVERYTHING IS SHITTY WEB DEVELOPMENT

SO, if a mfker talks shit about a so called SENIOR for not knowing that the first mamase mamasa bloody simple as shit algorithm THROWN at you in the first 10 pages of an algo and ds book, then y'all should be offended at the mkfer saying that he is a SENIOR, because these SENIORS are the same mfkers that try to at one point in time teach other people.

These SENIORS are the same mfkers that left me a FUCKING HORRIBLE AND USELESS MESS OF SPAGHETTI CODE

Especially to most PHP developers (my main area) y'all would have been well motherfucking served in learning how not to forLoop the fuck out of tables consisting of over 50k interconnected records, WHAT THE FUCK

"LeaRniNG tHiS iS noT neeDed!!" yes IT fucking IS

being able to code a binary search (in that example) from scratch lets me know fucking EXACTLY how well your thought process is when facing a hard challenge, knowing the basemotherfucking case of a LinkedList will damn well make you understand WHAT is going on with your abstractions as to not fucking violate memory constraints, this-shit-is-important.
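For reference, the binary search being argued about fits in a dozen lines. This is a plain textbook version written for this page (iterative, over a sorted sequence of integers), not the code from the rant that started the argument:

```python
from typing import Optional, Sequence


def binary_search(items: Sequence[int], target: int) -> Optional[int]:
    """Return the index of `target` in sorted `items`, or None if absent."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1   # target can only be in the right half
        else:
            hi = mid - 1   # target can only be in the left half
    return None            # search range is empty: not found
```

The loop halves the search range on every pass, so a list of 50k records needs at most about 16 comparisons instead of a full scan.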

So, will your royal majesties at least for the sake of completeness look into a couple of very well made youtube or book tutorials concerning the topic?

You can code an entire website, fine as shit, you will get tested by my ass in terms of security and best practices, run these questions now, and it had very motherfucking well better be as efficient as I think it should be (I HIRE, NOT YOU, or your fucking blog posts concerning how much MY degree was not needed, oh and btw, MY degree is what made sure I was able to make SUCH decisions)

This will make a loooooooot of mfkers salty, don't worry, I will still accept you as an interview candidate, but if you think you are good enough without a degree, or better than me (has happened, told that to my face by a candidate) then get fucking ready to receive a question concerning: BASIC FUCKING COMPUTER SCIENCE TOPICS

* gays away into the night

-

I don’t usually recommend movies to a lot of people, but if you like going to the movies, I highly, highly recommend you see “Searching”. Its wide release in the U.S. is today and it will be out internationally soon I think.

It’s probably the best thriller movie I’ve ever seen, and the best movie I’ve seen in the last few years, and I see a lot of them. The tech in it is awesome, and how the movie is presented really is a trip down tech memory lane. The movie has a bit of everything. An awesome story, awesome suspense, and great use of technology and social media.

10/10, definitely see it!

-

These facts are killing me

"During his own Google interview, Jeff Dean was asked the implications if P=NP were true. He said, "P = 0 or N = 1." Then, before the interviewer had even finished laughing, Jeff examined Google’s public certificate and wrote the private key on the whiteboard."

"Compilers don't warn Jeff Dean. Jeff Dean warns compilers."

"gcc -O4 emails your code to Jeff Dean for a rewrite."

"When Jeff Dean sends an ethernet frame there are no collisions because the competing frames retreat back up into the buffer memory on their source nic."

"When Jeff Dean has an ergonomic evaluation, it is for the protection of his keyboard."

"When Jeff Dean designs software, he first codes the binary and then writes the source as documentation."

"When Jeff has trouble sleeping, he Mapreduces sheep."

"When Jeff Dean listens to mp3s, he just cats them to /dev/dsp and does the decoding in his head."

"Google search went down for a few hours in 2002, and Jeff Dean started handling queries by hand. Search Quality doubled."

"One day Jeff Dean grabbed his Etch-a-Sketch instead of his laptop on his way out the door. On his way back home to get his real laptop, he programmed the Etch-a-Sketch to play Tetris."

"Jeff Dean once shifted a bit so hard, it ended up on another computer."

-

At the age of 20, I got hired as a junior dev at a mobile gaming company. We were 2 junior devs hired at the same time and one of our senior colleagues pulled a prank: he came into the office before us and rearranged our offices in a "funny" manner.

Two days later I waited for him to go home. I opened his PC case, removed the power button cable from the motherboard and then re-arranged everything back to normal. Well, I couldn't resist...

Next day he came into the office and, well, surprise... the PC was not starting. He went to the IT department and they spent 4 hours trying to figure out why it was not working. They replaced the CPU, the RAM, and even the PSU.

I had to go and tell them: "maybe it's the power button jack?!".

I got into some trouble for that prank. Indeed I crossed a line, but what the hell... that was a bad IT department.

-

Apple has a real problem.

Their hardware has always been overpriced, but at least before it had defenders pointing out that it was at least capable and well made.

I know, I used to be one of them.

Past tense.

They have jumped the shark.

They now make pretentious hipster crap that is massively overpriced and doesn't have the basic features (like hardware ports) to enable you to do your job.

I mean, who needs an ESC key? What is wrong with learning to type CTRL-[ instead? Muscle memory? What's that?

They have gone from "It just works" to "It just doesn't work" in no time at all.

And it is Developers who are most pissed off. A tiny demographic who won't be visible on the financial bottom line until their newly absent software suddenly makes itself known two, three years down the line.

By which time it is too late to do anything.

But hey! Look how thin (and thermally throttled) my new laptop is!

-

I'll get to my four words in a sec, but let me set the background first.

This morning, at breakfast, I fired up my trusty laptop only to get a fan failure warning.

Finally, after the three year old is asleep tonight, I'm able to start dismantling the case to get to the fan. I'm hoping it just needs cleaned out.

Hard drive, memory, and keyboard spread out over the kitchen table. I'm not even halfway done.

Guess what? Now I'm one of the lucky 3500 people to have a power outage at 9 pm. Estimated restore time: 2 am.

Sigh.

"All those tiny screws"

And a three year old in the house...

-

Senior development manager in my org posted a rant in slack about how all our issues with app development are from

“Constantly moving goalposts from version to version of Xcode”

It took me a few minutes to calm myself down and not reply. So I’ll vent here to myself as a form of therapy instead.

Reality Check:

- You frequently discuss the fact that you don’t like following any of Apple’s standards or app development guidelines. Bit rich to say the goalposts are moving when you have your back to them.

- We have a custom everything (navigation stack handler, table view like control etc). There’s nothing in these that can’t be done with the native ones. All that wasted dev time is on you guys.

- Last week a guy held a session about all the memory leaks he found in these custom libraries/controls. Again, your teams don’t know the basic fundamentals of the language or programming in general really. Not sure how that’s Apple’s fault.

- Your “great emphasis on unit testing” has gotten us 21% coverage on iOS and an Android team recently said to us “yeah looks like the tests won’t compile. Well we haven’t touched them in like a year. Just ignore them”. Stability of the app is definitely on you and the team.

- Having half the app in react-native and half in native (split between objective-c and swift) is making nobody’s life easier.

- The company forces us to use a custom built CI/CD solution that regularly runs out of memory, reports false negatives and has no specific mobile features built in. Did Apple force this on us too?

- Shut the fuck up

-

Story about an obscure bug: https://twitter.com/mmalex/status/...

"We had a ‘fun’ one on LittleBigPlanet 1: 2 weeks to gold, a Japanese QA tester started reliably crashing the game by leaving it on over night. We could not repro. Like you, days of confirmation of identical environment, os, hardware, etc; each attempt took over 24h, plus time differences, and still no repro.

"Eventually we realised they had an eye toy plugged in, and set to record audio (that took 2 days of iterating) still no joy.

"Finally we noticed the crash was always around 4am. Why? What happened only in Japan at 4am? We begged to find out.

"Eventually the answer came: cleaners arrived. They were more thorough than our cleaners! One hour of vacuuming near the eye toy- white noise- caused the in game chat audio compression to leak a few bytes of memory (only with white noise). Long enough? Crash.

"Our final repro: radios tuned to noise, turned up, and we could reliably crash the game. Fix took 5 minutes after that. Oh, gamedev...."

-

My team handles infrastructure deployment and automation in the cloud for our company, so we don't exactly develop applications ourselves, but we're responsible for building deployment pipelines, provisioning cloud resources, automating their deployments, etc.

I've ranted about this before, but it fits the weekly rant so I'll do it again.

Someone deployed an autoscaling application into our production AWS account, but they set the maximum instance count to 300. The account limit was less than that. So, of course, their application gets stuck and starts scaling out infinitely. Two hundred new servers spun up in an hour before hitting the limit and then throwing errors all over the place. They send me a ticket and I log in to AWS to investigate. Not only have they broken their own application, but they've also made it impossible to deploy anything else into prod. Every other autoscaling group is now unable to scale out at all. We had to submit an emergency limit increase request to AWS, spent thousands of dollars on those stupidly-large instances, and yelled at the dev team responsible. Two weeks later, THEY INCREASED THE MAX COUNT TO 500 AND IT HAPPENED AGAIN!

And the whole thing happened because a database filled up the hard drive, so it would spin up a new server, whose hard drive would be full already and thus spin up a new server, and so on into infinity.

That's probably the only WTF moment that resulted in me actually saying "WTF?!" out loud to the person responsible, but I've had others. One dev team had their code logging to a location they couldn't access, so we got daily requests for two weeks to download and email log files to them. Another dev team refused to believe their server was crashing due to their bad code even after we showed them the logs that demonstrated their application had a massive memory leak. Another team arbitrarily decided that they were going to deploy their code at 4 AM on a Saturday and they wanted a member of my team to be available in case something went wrong. We aren't 24/7 support. We aren't even weekend support. Or any support, technically. Another team told us we had one day to do three weeks' worth of work to deploy their application because they had set a hard deadline and then didn't tell us about it until the day before. We gave them a flat "No" for that request.

I could probably keep going, but you get the gist of it.

-

My git password is only muscle memory at this point.

If I accidentally try to think about what I'm typing I end up locking myself out for the rest of the day.

-

Experience that made me feel like a dev badass?

Users requested the ability to 'send' information from one application to another. A couple of our senior devs started out saying it would be impossible (there is no way to pass objects across a machine's memory boundary), then entertained the idea of utilizing the various messaging frameworks such as Microsoft's ServiceBus and RabbitMQ, but came up with a plan to use 2 WebAPI services (one messenger, one receiver) along with a homegrown messaging API (the clients would 'poll' the services looking for messages) because ServiceBus, RabbitMQ, etc might not be able to scale to our needs. Their initial estimates were about 6 months development for the two services, hardware requirement for two servers, MSSQL server licenses, and padded an additional 6 months for client modifications. Very...very proud of their detailed planning.

I thought ...hmmm...I've done memory maps and created simple TCP/IP hosts that could send messages back and forth between other apps (non-UI), WPF couldn't be that much different.

In an afternoon, I came up with this (see attached), and showed the boss. Guess which solution we're going with.

The two devs are still kinda pissed at me. One still likes to say as I walk into the room "our hero returns"....frack him.

11 -

The stupid stories of how I was able to break my school's network just to get better internet, as well as more ridiculous fun. XD

1st year:

It was my freshman year in college. The internet sucked really, really, really badly! Too many people were clearly using it. I had to find another way to remedy this. Upon some further research through Google I found out that one can in fact turn their computer into a router. Now what’s interesting about this network is that it only works with computers by downloading the necessary software that this network provides for you. Some weird software that actually looks through your computer and makes sure it’s ok to be added to the network. Unfortunately, routers can’t download and install that software, thus no internet… but a PC that can be changed into a router itself is a different story. I found that I could download the software, let it check the PC, and then turn on my router feature. Voilà, personal fast internet connected directly into the wall. No more sharing a single shitty router!

2nd year:

This was about the year when bitcoin mining was becoming a thing, and everyone was in on it. My shitty computer couldn’t possibly pull off mining for bitcoins. I needed something faster. How I found out that I could use my school’s servers was merely an accident.

I had been installing the software on every possible PC I owned, but alas all my PCs were just not fast enough. I decided to try it on the RDS server. It worked; the command window was pumping out coins! What I came to find out was that the RDS server had 36 cores. This thing was a beast! And it made sense that it could actually pull off mining for bitcoins. A couple nights later I signed in remotely to the RDS server. I created a macro that would continuously move my mouse around in the Remote desktop screen to keep my session alive at all times, and then I’d start my bitcoin mining operation. The following morning I woke up and my session was gone. How sad, I thought. I quickly tried to remote back in to see what I had collected. “Error, could not connect”. Weird… this usually never happens, maybe I did the remoting wrong. I went to my school’s website to do some research on my remoting problem. It was down. In fact, everything was down… I came to find out that I had accidentally shut down the school’s network because of my mining operation. I wasn’t found out, but I haven’t done any mining since then.

3rd year:

As an engineering student I found out that all engineering students get access to the school’s VPN. Cool, it is technically used to get around some wonky issues with remoting into the RDS servers. What I come to find out, after messing around with it frequently, is that I can actually use the VPN against the screwed up security on the network. Remember, how I told you that a program has to be downloaded and then one can be accepted into the network? Well, I was able to bypass all of that, simply by using the school’s VPN against itself… How dense does one have to be to not have patched that one?

4th year:

It was another programming day, and I needed access to my phone’s memory. Using some specially made apps I could easily connect to my phone from my computer and continue my work. But what I found out was that I could in fact travel around in the network. I discovered that I can, in fact, access my phone through the network from anywhere. What resulted was the discovery that the network spans the entirety of the school. I discovered that if I left my phone down in the engineering building and then went north to the biology building, I could still continue to access it. This seems like a very fatal flaw. My idea is to hook up a webcam to a robot, remotely control it from the RDS servers, and have this little robot go to my classes for me.

What crazy shit have you done at your University?9 -

About two years ago I got roped into something when someone was requesting an $8000 laptop to run a "program" that they wrote in Excel to pull data from our mainframe.

In reality they were using our normal application that interacts with the mainframe and screen scraping it to populate several Excel spreadsheets.

So this guy kept saying that he needed the expensive laptop because he needed the extra RAM and processing power for his application. At the time we only supported 32 bit Windows 7 so even though I told him ten times that the OS wouldn't recognize more than 3.5 GB of RAM he kept saying that increasing the RAM would fix his problem. I also explained that even if we installed the 64 bit OS we didn't have approval for the 64 bit applications.

So we looked at the code and we found that rather than reusing the same workbook he was opening a new instance of a workbook during each iteration of his loop and then not closing or disposing of them. So he was running out of memory due to never disposing of anything.
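For anyone who hasn't hit this kind of leak before, here is a minimal sketch of the pattern in Python. `FakeWorkbook` is a made-up stand-in (the rant doesn't show the real code or say which Excel API was used); it only counts how many handles are open so the difference between the two loops is visible.

```python
class FakeWorkbook:
    """Hypothetical stand-in for a workbook handle; not a real Excel API."""
    open_count = 0  # number of handles currently open

    def __init__(self):
        FakeWorkbook.open_count += 1

    def close(self):
        FakeWorkbook.open_count -= 1

    def __enter__(self):
        return self

    def __exit__(self, *exc):
        self.close()
        return False


def leaky_loop(iterations):
    """Opens a new workbook every iteration and never closes it."""
    FakeWorkbook.open_count = 0
    for _ in range(iterations):
        FakeWorkbook()  # handle is created and then simply forgotten
    return FakeWorkbook.open_count


def reusing_loop(iterations):
    """Opens one workbook, reuses it, and closes it when done."""
    FakeWorkbook.open_count = 0
    peak = 0
    with FakeWorkbook() as workbook:
        for _ in range(iterations):
            # rows would be written to `workbook` here
            peak = max(peak, FakeWorkbook.open_count)
    return peak


if __name__ == "__main__":
    print("leaky loop, handles left open:", leaky_loop(100))
    print("reusing loop, peak open handles:", reusing_loop(100))
```

The leaky version ends with one open handle per iteration, while the reusing version never holds more than one, which is why extra RAM would only have delayed the crash.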

Even better than all of that, he wanted a faster processor to speed up the processing, but he had about 5 seconds of thread sleeps in each loop so that the place he was screen scraping from would have time to load. So it wouldn't matter how fast the processor was, in the end there were sleeps and waits in there hard coded to slow down the app. And the guy didn't understand that a faster processor wouldn't have made a difference.
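A quick back-of-the-envelope sketch of why the CPU upgrade is pointless here. The 5 second sleep is from the story; the iteration count and the 0.2 seconds of actual compute per loop are numbers I made up for illustration.

```python
def run_time_seconds(iterations, sleep_s, compute_s, cpu_speedup=1.0):
    """Total wall time: the sleep is fixed, only the compute part scales with the CPU."""
    return iterations * (sleep_s + compute_s / cpu_speedup)


if __name__ == "__main__":
    # Assumed figures: 1000 iterations, 0.2 s of real work per iteration.
    baseline = run_time_seconds(1000, sleep_s=5.0, compute_s=0.2)
    twice_as_fast = run_time_seconds(1000, sleep_s=5.0, compute_s=0.2, cpu_speedup=2.0)
    saved = (baseline - twice_as_fast) / baseline
    print(f"baseline: {baseline:.0f} s, 2x CPU: {twice_as_fast:.0f} s, saved: {saved:.1%}")
```

Under those assumptions a processor twice as fast shaves off less than 2% of the run, because almost all of the time is spent sleeping.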

The worst thing is a "dev" that thinks they know what they are doing but they don't have a clue.7 -

Today, I was told to investigate why the software doesn't work on "some" computers. I had no previous experience with that particular software but I just had to make some tests... easy, right? As soon as I ran the software, my computer crashed (I literally had to restart the pc). I asked my colleagues if I did something wrong but the set up seemed ok.

Later, in a random discussion about the software I found out it does "a little memory allocation". I opened the performance tab in task manager and ran the software again. In an instant, the RAM went from 1.3GB to 7.66GB (my pc has 8GB of RAM).

In an attempt to find how such a monstrosity was created, I found out the developer that made the software had 16GB of RAM on his pc.

I have found something that eats RAM more than Chrome... brace yourselves.8 -

Every single one of them, and every one that will come after them.

Google, it started out as 2 people in their garage, wanting to make a search engine that was better than the others. Nothing else, nothing evil. Just make the world a little bit better. And look what it's become now. A megacorporation with little to no regards for their user base. Because who cares about users anyway?

Microsoft, it started out with Bill Gates - young high school computer nerd - who wanted to make an operating system for the world to use. Something that's better than the competition. And boy did he do so. Well "better than the competition" aside, he did make it for the world to use. And the world adopted it. And look what it's become now. A megacorporation with little to no regards for their user base. Because who cares about users anyway?

See where I'm going here?

Apple, it started out with Steve Jobs and Steve Wozniak in their garage, just like Google did, wanting to make hardware that was better than the others. Nothing else, nothing evil. Just to make the world a little bit better. And look what it's become now. Planned obsolescence has been baked into it, just like it is in every other piece of technology. Quality control and thinking through the design has become a thing of the past. User choice, yeah who cares about that.

Samsung, it started out the better part of a century ago actually, and I don't really remember the details of it.. ColdFusion has a video on it if memory serves me right. Do watch it if you're interested. Anyway, just like all the others they started out as a company which wanted to make the world a little bit better. And damn right did they do so.. initially. Look what they've become now. Forcing their stupid TouchWiz UI upon their customers (or products?), a Bixby button that can't even be reprogrammed.. and the latest thing.. Knox, advertised as a security feature, but as everyone who likes rooting their devices and mucking with it knows, it is an anti-feature that only serves for lockdown. Why shouldn't you be able to turn in a phone for RMA when a hardware error occurs, when all you've personally modified is the software? Why should changing the software blow that eFuse, so that you can be sure that you can't replace it without specialized equipment and a very steady hand?

I could go on and on forever about more of the tech giants out there, but I feel like this suffices for now. Otherwise I won't have anything else left for future rants! But one thing I know for sure. Every tech company started, starts, and will start out with a desire to make the world a better place, and once they gain a significant customer base, they will without exception turn into the same kind of Evil Megacorp., just like the ones before them. Some may say that capitalism itself is to blame for this, the greed for more when you already have a lot. Who knows? I'd rather say that the very human nature itself is to blame for it. We're by design greedy beings, and I hate it. I hate being human for that. I don't want humans to be evil towards one another, and be greedy for ever more. But I guess that that's just the way it is, and some things do actually never change...17 -

I have just concluded a post-mortem on one of my servers.

Cause of death: out of memory due to a tiny memory leak in a VPN service triggered by 66 different IPs brute-forcing the creds at the same time. Mostly from China, of course.

Dear bot writers: you made me put aside my spaghetti and write iptables rules. I hate iptables. And I love spaghetti. You should be ashamed of yourself! Did momma not teach you basic OpSec? Don't crash the target and never, ever, interrupt the sysadmin during dinner!6 -

I taught my 9yo sister to SSH from my Arch Linux system to an Ubuntu system, she was amazed to see terminal and Firefox launching remotely. Next I taught her to murder and eat all the memory (I love Linux, as Batman, one should also know the weaknesses). Now she knows rm -rf / --no-preserve-root and the forkbomb. She's amazed at the power of one liners. Will be teaching her python as she grew fond of my Raspberry Pi zero w with blinkt and phat DAC, making rainbows and playing songs via mpg123.

I had her play with Makey Makey when it first came out but it isn't as interesting. Drop your suggestions for what could be good for her learning phase?13 -

Yeah Mozilla fuck merit and fuck you too!

This, this is what I was talking about when the fucking CoC came out and everyone (including its author) started using it as a political weapon.

You castrated fucking virgins! Mozilla, I want to support you I really don't like chrome but you always manage to disappoint everyone. I'm tired, tired of you morally superior socialists infecting my fucking workplace, entertainment and news.

This is just an excuse for lazy assholes to have their cake and eat it too and it's damn fucking INSULTING to us "minorities", I can work to get nice things just like anyone else bitch! having another skin color is not a disability!

Worst of all, you seem to have straight out millennial retards making these decisions seeing as it's based on an article from a washed up "gender research" professor that thinks Barbie Doctor is problematic, the most biased and dumb source you can possibly pull out of your ass.

Two classmates were murdered this morning, do you really think we care about what your diversity and inclusion Dept thinks is problematic? You delusional halfwits, the only comforting thought is that your soft bigotry will perish alongside your product when it inevitably diminishes its quality for the sake of "equality".

Want to make better products? Ditch your useless diversity and inclusion department and start optimizing the memory consumption on firefox.

Want to help minorities? Start paying your outsourced developers decently.

I hope this helps people who thought including politics in software development wouldn't have dire consequences to open their eyes; if not, oh well I guess people will get it when mozilla keeps going down the drain and they get fired because they just outsourced their work in the name of "diversity" just to save money.

https://blog.mozilla.org/inclusion/...

95 -

Here are the reasons why I don't like IPv6.

Now I'll be honest, I hate IPv6 with all my heart. So I'm not supporting it until inevitably it becomes the de facto standard of the internet. In home networks on the other hand.. huehue...

The main reason why I hate it is because it looks in every way overengineered. Or rather, poorly engineered. IPv4 has 32 bits worth, which translates to about 4 billion addresses. IPv6 on the other hand has 128 bits worth of addresses.. which translates to.. some obscenely huge number that I don't even want to start translating.

That's the problem. It's too big. Anyone who's worked on the internet for any amount of time knows that the internet on this planet will likely not exceed a number of machines that needs about 1 or 2 extra bits (roughly 8.6B and 17.2B addresses respectively). Now of course 33 or 34 bits in total is unwieldy, it doesn't go well with electronics. From 32 you essentially have to go up to 64 straight away. That's why 64-bit processors are.. well, 64 bits. The memory grew larger than the 4GB that a 32-bit processor could support, so that's what happened.

The internet could've grown that way too. Heck it probably could've become 64 bits in total of which 34 are assigned to the internet and the remaining bits are for whatever purposes large IP consumers would like to use the remainder for.

Whoever designed IPv6 however.. nope! Let's give everyone a /64 range, and give them quite literally an IP pool far, FAR larger than the entire current internet. What's the fucking point!?
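For the curious, the numbers being thrown around here are just powers of two, so they are easy to check. A small sketch (the helper name `address_count` is mine):

```python
def address_count(bits):
    """Number of distinct addresses that fit in an address of the given width."""
    return 2 ** bits


if __name__ == "__main__":
    for bits in (32, 33, 34, 64, 128):
        print(f"{bits:>3} bits -> {address_count(bits):,} addresses")

    # A single /64 leaves 64 bits for hosts, so compare it to all of IPv4.
    ratio = address_count(64) // address_count(32)
    print(f"one /64 holds {ratio:,} times the entire IPv4 address space")
```

So a single /64 is 2^32 copies of the whole IPv4 address space, which is the size gap the rant is complaining about.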

The IPv6 standard is far larger than it should've been. It should've been 64 bits instead of 128, and it should've been separated differently. What were they thinking? A bazillion colonized planets' internetworks that would join the main internet as well? Yeah that's clearly something that the internet will develop into. The internet which is effectively just a big network that everyone leases and controls a little bit of. Just like a home network but scaled up. Imagine or even just look at the engineering challenges that interplanetary communications present. That is not going to be feasible for connecting multiple planets' internets. You can engineer however you want but you can't engineer around the hard limit of light speed. Besides, are our satellites internet-connected? Well yes but try using one. And those whizz around at only a few hundred to a few tens of thousands of km above sea level. The latency involved makes it barely usable. Imagine communicating to the ISS, the moon or Mars. That is not going to happen at an internet scale. Not even close. And those are only the closest celestial objects out there.
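To put rough numbers on the light speed limit: the sketch below computes the one-way delay for a signal travelling in a straight line at the speed of light. The distances are approximate figures I'm supplying, not from the post (geostationary altitude about 35,786 km, average Earth-Moon distance about 384,400 km, Mars at roughly 54.6 million km at its closest and around 401 million km at its farthest), and real links add routing and processing delay on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s


def one_way_delay_s(distance_km):
    """Best-case one-way signal delay over a straight-line distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S


# Approximate distances in km (assumed round figures, see the note above).
DISTANCES_KM = {
    "geostationary satellite": 35_786,
    "Moon (average)": 384_400,
    "Mars (closest)": 54_600_000,
    "Mars (farthest)": 401_000_000,
}

if __name__ == "__main__":
    for name, km in DISTANCES_KM.items():
        delay = one_way_delay_s(km)
        print(f"{name}: {delay:.2f} s one way, {2 * delay:.2f} s round trip")
```

A geostationary hop is a fraction of a second, the Moon is over a second each way, and Mars is minutes each way even in the best case, before any packet has been retransmitted.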

So why was IPv6 engineered with hundreds of years of development and likely at least a stage 4 civilization in mind? No idea. Future-proofing or poor engineering? I honestly don't know. But as a stage 0 or maybe stage 1 person, I don't think that I or civilization for that matter is ready for a 128-bit internet. And we aren't even close to needing so many bits.

Going back to 64-bit processors and memory. We've passed 32 bit address width about a decade ago. But even now, we're only at about twice that size on average. We're not even close to saturating 64-bit address width, and that will likely take at least a few hundred years as well. I'd say that's more than sufficient. The internet should've really become a 64-bit internet too.34 -

Consequences Associated with Burnout:

- sleep deprivation ✅

- change in eating habits ✅

- increased illness due to weakened immune system ✅

- difficulty concentrating and poor memory/attention ✅

- lack of productivity ✅

- poor performance ✅

- avoidance of responsibilities ✅

- loss of enjoyment ✅

Have I just been burnt out and living it as my norm for the past 5 years? 🤡3 -

Idea: Emoji passwords

Bdixbsufhdbe HEAR ME OUT

I know, I know, emojis belong with teenage girls on Snapchat but there are some theoretical benefits to emoji passwords.

Brute Force attacks are useless! With such a wide range of characters and so many different combinations, they just wouldn't be viable.

Dictionary attacks are less useful! Because those require...words.

They can be easier to remember. Tell a story with your emojis. Images are easier to commit to memory than combinations of letters and numbers.

Users would adopt the feature! For whatever reason, the general population fucking loves these things. So emoji passwords probably won't take very long to see use.

I don't know much about this last one, so I saved it for last, but I would imagine that cracking would be more difficult if the range of available values is quite vast. I dunno how rainbow tables and hash defucking works so I'll just put this here as a "maybe"
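The brute force argument can be put into numbers: the size of the search space is alphabet size to the power of password length, usually quoted in bits. A rough sketch, where the 95 is the count of printable ASCII characters and the 3000 is just an assumed round figure for "how many emoji a user can pick from" (I haven't counted them):

```python
import math


def keyspace_bits(alphabet_size, length):
    """Bits of brute-force search space for a uniformly random password."""
    return length * math.log2(alphabet_size)


if __name__ == "__main__":
    PRINTABLE_ASCII = 95    # letters, digits, punctuation and space
    ASSUMED_EMOJI = 3000    # hypothetical round number, not an exact count
    for length in (4, 8, 12):
        ascii_bits = keyspace_bits(PRINTABLE_ASCII, length)
        emoji_bits = keyspace_bits(ASSUMED_EMOJI, length)
        print(f"length {length}: ASCII {ascii_bits:.1f} bits, emoji {emoji_bits:.1f} bits")
```

This only holds if the symbols are picked at random. People telling a "story" with a handful of popular emoji would shrink the real search space a lot, the same way dictionary words do for normal passwords.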

😀33 -

kernel: Out of memory: Kill process 3396 (postgres) score 167 or sacrifice child

Anyone has a child that I can borrow?5 -

I like memory hungry desktop applications.

I do not like sluggish desktop applications.

Allow me to explain (although, this may already be obvious to quite a few of you)

Memory usage is stigmatized quite a lot today, and for good reason. Not only is it an indication of poor optimization, but not too many years ago, memory was a much more scarce resource.

And something that started as a joke in that era is true in this era: free memory is wasted memory. You may argue, correctly, that free memory is not wasted; it is reserved for future potential tasks. However, if you have 16GB of free memory and don't have any plans to begin rendering a 3D animation anytime soon, that memory is wasted.

Linux understands this. Linux actually has three states for memory to be in: used, free, and available. Used and free memory are the usual. However, Linux automatically caches files that you use and keeps them in RAM, and that cache counts toward "available" memory. Available memory can be handed to programs at any time, simply dumping out whatever was previously occupying it.
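If you want to see the free versus available split yourself, the kernel exposes it in /proc/meminfo as `MemFree` and `MemAvailable`. Here is a minimal parser sketch; the sample text is made up so the snippet runs anywhere, and the values in it are not from a real machine.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of field name -> integer value."""
    fields = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[name.strip()] = int(parts[0])  # most values are in kB
    return fields


# Made-up sample in the /proc/meminfo format (values are illustrative only).
SAMPLE = """MemTotal:       16314208 kB
MemFree:         1203456 kB
MemAvailable:    9876543 kB
Cached:          7654321 kB
"""

if __name__ == "__main__":
    info = parse_meminfo(SAMPLE)
    print("free:     ", info["MemFree"], "kB")
    print("available:", info["MemAvailable"], "kB")
```

On a Linux box you would pass in the real thing with `parse_meminfo(open("/proc/meminfo").read())`; a large gap between the two numbers is mostly file cache that can be dropped on demand.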

And as you well know, RAM is much faster than even an SSD. Programs which are memory heavy COULD (< important) be holding things in memory rather than having them sit on the HDD, waiting to be slowly retrieved. I'd much rather have a web browser take up 4 GB of RAM than sit around waiting for it to read the cached image off my hard drive.

Now, allow me to reiterate: unoptimized programs still piss me off. There's no need for that electron-based webcam image capture app to take three gigs of memory upon launch. But I love it when programs use the hardware I spent money on to run smoother.

Don't hate a program simply because it's at the top of task manager.6 -

Not just another Windows rant:

*Disclaimer* : I'm a full time Linux user for dev work having switched from Windows a couple of years ago. Only open Windows for Photoshop (or games) or when I fuck up my Linux install (Arch user) because I get too adventurous (don't we all)

I have hated Windows 10 from day 1 for being a rebel. Automatic updates and generally so many bugs (especially the 100% disk usage on boot for idk how long) really sucked.

It's got ads now and it's generally much slower than probably a Windows 8 install..

The pathetic memory management and the overall slower interface really ticks me off. I'm trying to work and get access to web services and all I get is hangups.

Chrome is my go-to browser for everything and the experience is sub par. We all know it gobbles up RAM but even more on Windows.

My Linux install on the same computer flies with a heavy project open in Android Studio, 25+ tabs in Chrome and a 1080p video playing in the background.

Up until the creators update, UI bugs were a common sight. Things would just stop working if you clicked them multiple times.

But you know what I'm tired of more?

The ignorant pricks who bash it for being Windows. This OS isn't bad. Sure it's not Linux or MacOS but it stands strong.

You are just bashing it because it's not developer friendly and it's not. It never advertises itself like that.

It's a full fledged OS for everyone. It's not dev friendly but you can make it as much as possible but you're lazy.

People do use Windows to code. If you don't know that, you're ignorant. They also make a living by using Windows all day. How bout tha?

But it tries to make you feel comfortable with the recent bash integration and the plethora of tools that Microsoft builds.

IIS may not be Apache or Nginx but it gets the job done.

Azure uses Windows and it's one of the best web services out there. It's freaking amazing with dead simple docs to get up and running with a web app in 10 minutes.

I saw many rants against VS but you know it's one of the best IDEs out there and it runs the best on Windows (for me, at least).

I'm pissed at you - you blind hater you.

Research and appreciate the good qualities in something instead of trying to be the cool but ignorant dev who codes with Linux/Mac but doesn't know shit about the advantages they offer.22 -

Story, !rant.

This memory came up as I was commenting on another rant, and thought it was worthy of a better retelling.

So about a year or two ago, I had just gotten a Software Defined Radio, and was tinkering with it and looking around for cool stuff I could do with it. After stalking planes for a while (caught a 747 over my area 😎) I saw this program that decoded satellite images of earth, coming from the NOAA satellites. I thought this was amazing.

So I waited until one was over my area and let the software do its magic. The image was not great, since I had this set up on the first floor and there was a lot of material between me and the satellite.

So I came to the brilliant conclusion that I'd leave the program on automatic mode (it will start sampling when the satellite is near) on my terrace, which should yield better results, right?

Perhaps. Who knows. Anyways, couple hours pass and we are running late to a family dinner. So we book it. Family dinner was great, good food and all, and was having fun, so never thought about my poor laptop, sitting alone in the night.

But then, when I was walking home in the rain... It hit me. I started running. I couldn't believe what I had done. Fast forward five minutes, and I'm out of breath, but home. I run upstairs, and see the laptop just sitting there, lid open, no lights on, and of course soaked right through.

I couldn't believe it. My only piece of tech at the time, and my only avenue for programming, gone. And I was 15, so I wasn't getting another one any time soon. Took it inside and drained the water out of it, and just left it there lying on its side.

Next day it worked just fine 🤣 the battery on my laptop only lasted max one hour, so by sheer luck it had lost power before the rain came. That is the one time I have to thank that battery for being such utter trash.7 -

What an absolute fucking disaster of a day. Strap in, folks; it's time for a bumpy ride!

I got a whole hour of work done today. The first hour of my morning because I went to work a bit early. Then people started complaining about Jenkins jobs failing on that one Jenkins server our team has been wanting to decom for two years but management won't let us force people to move to new servers. It's a single server with over four thousand projects, some of which run massive data processing jobs that last DAYS. The server was originally set up by people who have since quit, of course, and left it behind for my team to adopt with zero documentation.

Anyway, the 500GB disk is 100% full. The memory (all 64GB of it) is fully consumed by stuck jobs. We can't track down large old files to delete because du chokes on the workspace folder with thousands of subfolders and no RAM to spare. We decide to basically take a hacksaw to it, deleting the workspace for every job not currently in progress. This of course fucked up some really poorly-designed pipelines that relied on workspaces persisting between jobs, so we had to deal with complaints about that as well.
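Not what the team in this story did (they went with the hacksaw), but for situations like this a streaming walk that only ever remembers the N biggest files can get by on very little memory. A minimal sketch using only the Python standard library; `largest_files` is a name I made up, and I can't say whether it would have survived that particular server.

```python
import heapq
import os


def largest_files(root, top_n=10):
    """Walk `root` and return the top_n (size, path) pairs, largest first."""
    heap = []       # min-heap, never holds more than top_n entries
    stack = [root]  # directories still to visit
    while stack:
        current = stack.pop()
        try:
            with os.scandir(current) as entries:
                for entry in entries:
                    try:
                        if entry.is_dir(follow_symlinks=False):
                            stack.append(entry.path)
                        elif entry.is_file(follow_symlinks=False):
                            size = entry.stat(follow_symlinks=False).st_size
                            if len(heap) < top_n:
                                heapq.heappush(heap, (size, entry.path))
                            elif size > heap[0][0]:
                                heapq.heapreplace(heap, (size, entry.path))
                    except OSError:
                        continue  # file vanished or is unreadable, skip it
        except OSError:
            continue  # directory vanished or is unreadable, skip it
    return sorted(heap, reverse=True)


if __name__ == "__main__":
    for size, path in largest_files(".", top_n=5):
        print(f"{size:>12} {path}")
```

The only things that grow are the list of pending directories and the fixed-size heap, so the memory footprint stays small even with thousands of workspace folders.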

So we get the Jenkins server up and running again just in time for AWS to have a major incident affecting EC2 instance provisioning in our primary region. People keep bugging me to fix it, I keep telling them that it's Amazon's problem to solve, they wait a few minutes and ask me to fix it again. Emails flying back and forth until that was done.

Lunch time already. But the fun isn't over yet!

I get back to my desk to find out that new hires or people who got new Mac laptops recently can't even install our toolchain, because management has started handing out M1 Macs without telling us and all our tools are compiled solely for x86_64. That took some troubleshooting to even figure out what the problem was because the only error people got from homebrew was that the formula was empty when it clearly wasn't.

After figuring out that problem (but not fully solving it yet), one team starts complaining to us about a Github problem because we manage the github org. Except it's not a github problem and I already knew this because they are a Problem Team that uses some technical authoring software with Git integration but they only have even the barest understanding of what Git actually does. Turns out it's a Git problem. An update for Git was pushed out recently that patches a big bad vulnerability and the way it was patched causes problems because they're using Git wrong (multiple users accessing the same local repo on a samba share). It's a huge vulnerability so my entire conversation with them went sort of like:

"Please don't."

"We have to."

"Fine, here's a workaround, this will allow arbitrary code execution by anyone with physical or virtual access to this computer that you have sitting in an unlocked office somewhere."

"How do I run a Git command I don't use Git."

So that dealt with, I start taking a look at our toolchain, trying to figure out if I can easily just cross-compile it to arm64 for the M1 macbooks or if it will be a more involved fix. And I find all kinds of horrendous shit left behind by the people who wrote the tools that, naturally, they left for us to adopt when they quit over a year ago. I'm talking entire functions in a tool used by hundreds of people that were put in as a joke, poorly documented functions I am still trying to puzzle out, and exactly zero comments in the code and abbreviated function names like "gars", "snh", and "jgajawwawstai".

While I'm looking into that, the person from our team who is responsible for incident communication finally gets the AWS EC2 provisioning issue reported to IT Operations, who sent out an alert to affected users that should have gone out hours earlier.

Meanwhile, according to the health dashboard in AWS, the issue had already been resolved three hours before the communication went out and the ticket remains open at this moment, as far as I know.5 -

Okay, just wrote a program with memory allocation inside an accidental infinite loop and by the time I was able to kill it, it had already claimed 86% of my memory. Scared the shit out of me because my OS was CRAWLING for a while3

-

That moment when you thought you've fortified yourself with enough RAM for the future (32GB) and Blender fails to work with a large project because...it runs out of memory (just in the loading phase, building them intermediate data structures pushes it over the edge I guess).

Fml.

It was kind of fascinating to watch the memory usage indicator creep up though. Morbid fascination.3 -

I have to rant a bit about the toxic reactions to a constructive Q&A website.

People keep complaining that they get downvotes and corrections, or stuff like that.

Are you fucking kidding me?

So you expect people to spend their own time for absolutely free, to help you, while you don't even want to invest in describing the issue you're having properly? And then complain that people are having issues in understanding your questions?

Let's look at this scientifically. Let's gather up some questions that have been received badly on SO in the last few hours. From the top (simply put https://stackoverflow.com/questions... in front of the id):

47619033 - person wants a discussion about an algorithm while not providing any information about what worked and what failed. "Please write a program for me". Breaking at least 2 rules.

47619027 - "check out my videos" spam

47619030 - "Here's the manual that has my answer but I can't find my answer in it".

47619004 - "how do I keep variables in memory"

47618997 - debug this exception, I'll give you no info on what I tried and failed. Screw this, you guys figure this out, I'm going out for beer.

47618993 - expects everyone to guess what the input is, what the expected output is, and whether he has read what HashMap is in the manual. But sure, this question is so far the best out of all the bad ones.

47618985 - please write code according to my specifications

Should I go on? There wasn't a single clear question about problems in code in this entire small set. Feel free to continue searching, let me know if you find something that:

1. You understand what's being asked

2. Answer is clear and non-ambiguous (ex. NOT "which language is the coolest?")

3. Not asking someone to write a program for them.

4. Answer is not found in the most basic form of manuals (ex. php.net)

5. Is about programming.

The point is:

If you get downvoted on Stackoverflow - then you wrote a shitty question. Instead of coming over here and venting uselessly, simply address the concerns and at least TRY to write a clear question if you expect any answers.5 -

I want a case/skin/idk for my lappy after I finally leave this company. I have this awful habit of associating things with memories. If the memory is bad, seeing the object reminds me of it, and e.g. makes me feel burned out again. So, I want to add a really pretty case to my lappy so it feels like my laptop instead of the company's.

I've found a few really beautiful ones on Etsy and Pinterest, but they're so ridiculously expensive! I really don't want to pay $90 🙁

Does anyone know where I can find alternatives?11 -

Help.

I'm a hardware guy. If I do software, it's bare-metal (almost always). I need to fully understand my build system and tweak it exactly to my needs. I'm the sorta guy that needs memory alignment and bitwise operations on a daily basis. I'm always cautious about processor cycles, memory allocation, and power consumption. I think twice if I really need to use a float there and I consider exactly what cost the abstraction layers I build come at.

I had done some web design and development, but that was back in the day when you knew all the workarounds for IE 5-7 by heart and when people were disappointed there wasn't going to be a XHTML 2.0. I didn't build anything large until recently.

Since that time, a lot has happened. Web development has evolved in a way I didn't really fancy, to say the least. Client-side rendering for everything the server could easily do? Of course. Wasting precious energy on mobile devices because it works well enough? Naturally. Solving the simplest problems with a gigantic mess of dependencies you don't even bother to inspect? Well, how else are you going to handle all your sensitive data?

I was going to compare this to the Arduino culture of using modules you don't understand in code you don't understand. But then again, you don't see consumer products or customer-specific electronics powered by an Arduino (at least not that I'm aware of).

I'm just not fit for that shooting-drills-at-walls methodology for getting holes. I'm not against either easy or pretty-to-look-at solutions, but it just comes across as wasteful to me nowadays.

So, after my hiatus from web development, I've now been in a sort of internet platform project for a few months. I'm now directly confronted with all that you guys love and hate, frontend frameworks and Node for the backend and whatever. I deliberately didn't voice my opinion when the stack was chosen, because I didn't want to interfere with the modern ways and instead get some experience out of it (and I am).

And now, I'm slowly starting to feel like it was OKAY to work like this.7 -

Soooo I think I have finally come to the point that I may have to create a YouTube channel to teach software engineering from the ground up... and teach it the way the universities and everyone else should be teaching it, so that folks have a solid foundation. Throwing hello world, loops, and variables at folks out of the box, without any of the environment context or any understanding of low-level embedded registers or even logic gates, doesn't give them one.

That lack of understanding is why soooo many college students and younger folks are actually pretty shitty engineers. Everything is high-level languages and theoretical concepts to them. Nothing practical. That’s why there are sooo many Python and Java developers that can’t for the life of them understand memory management, low-level hardware interfacing, etc.: the colleges don’t teach it the way it used to be taught.

I seriously fear 30 years from now, or sooner, when only a few embedded engineers are left until retirement, as without those folks the whole pyramid of electronics falls to pieces.

Java, C#, python, all that shit don’t run on the bare metal... there’s this magical layer of C, and assembler that does all the work just so folks can abstract their thoughts.

One of two situations will happen. Either the price of electronics will rise because the embedded guys are few and far between and therefore salaries skyrocket... OR everything starts running shit like Java on the metal, where there is an overabundance of developers. Their salaries will be low because there are soo many of them, but the processing power, space, and energy needed to run Java natively cause electronics costs to increase.

but regardless 30 years from now if those script kiddies are building everything I fear it cuz there’s gonna be memory leaks, and overflow issues everywhere.. shit be blowing up more than 4th of July.. lol

Soooo in an effort to prevent that and keep the embedded engineers up, or at least properly educate the script kiddies, I’m gonna make that YouTube channel.. 1 maybe 2 videos a week, 1-2 hour sessions each.. starting at the fucken ground and building up.39 -

I could bitch about XSLT again, as that was certainly painful, but that’s less about learning a skill and more about understanding someone else’s mental diarrhea, so let me pick something else.

My most painful learning experience was probably pointers, but not pointers in the usual sense of `char *ptr` in C and how they’re totally confusing at first. I mean, it was that too, but in addition I had absolutely none of the background needed to understand them, no learning material or guidance, and not even a typical compiler to tell me what I was doing wrong. On top of all of that, I could only run code on a device that would crash/halt/freak out whenever I made a mistake. It was an absolute nightmare.

Here’s the story:

Someone gave me the game RACE for my TI-83 calculator, but it turned out to be an unlocked version, which means I could edit it and see the code. I discovered this later on by accident while trying to play it during class, and when I looked at it, all I saw was incomprehensible garbage. I closed it, and the game no longer worked. Looking back I must have changed something, but then I thought it was just magic. It took me a long time to get curious enough to look at it again.

But in the meantime, I ended up playing with these “programs” a little, and made some really simple ones, and later some somewhat complex ones. So the next time I opened RACE I kind of understood what it was doing.

Moving on, I spent a year learning TI-Basic, and eventually reached the limit of what it could do. Along the way, I learned that all of the really amazing games/utilities that were incredibly fast, had greyscale graphics, lowercase text, no runtime indicator, etc. were written in “Assembly,” so naturally I wanted to use that, too.

I had no idea what it was, but it was the obvious next step for me, so I started teaching myself. It was z80 Assembly, and there were practically no documents, resources, or anything else helpful online.

I found the specs, and a few terrible docs and other sources, but with only one year of programming experience, I didn’t really understand what they were telling me. This was before Stack Overflow, etc., too, so what little help I found was mostly from forum posts, IRC (where I mostly got ignored or made fun of), and reading other people’s source when I could find it. And usually that was less than clear.

And here’s where we dive into the specifics. Starting with so little experience, and in TI-Basic of all things, meant I had zero understanding of pointers, memory and addresses, the stack, heap, data structures, interrupts, clocks, etc. I had mastered everything TI-Basic offered, which astoundingly included arrays and matrices (six of each), but it hid everything else except basic logic and flow control. (No, there weren’t even functions; it has labels and goto.) It has 27 numeric variables (A-Z and theta, each able to store either a float or a complex number), 8 Lists (numeric arrays), 6 matrices (2d numeric arrays), 10 strings, and a few other things like “equations” and literal bitmap pictures.

Soo… I went from knowing only that to learning pointers. And pointer math. And data structures. And pointers to pointers, and the stack, and function calls, and all that goodness. And remember, I was learning and writing all of this in plain Assembly, in notepad (or on paper at school), not in C or C++ with a teacher, a textbook, SO, and an intelligent compiler with its incredibly helpful type checking and warnings. Just raw trial and error. I learned what I could from whatever cryptic sources I could find (and understand) online, and applied it.

But actually using what I learned? If a pointer was wrong, it resulted in unexpected behavior, memory corruption, freezes, etc. I didn’t have a debugger, an emulator, etc. I had notepad, the barebones compiler, and my calculator.

Also, iterating meant changing my code, recompiling, factory resetting my calculator (removing the battery for 30+ sec) because bugs usually froze it or corrupted something, then transferring the new program over, and finally running it. It was soo slowwwww. But I made steady progress.

Painful learning experience? Check.

Pointer hell? Absolutely.4 -

A few years ago I was browsing Bash.org, and a user posted that he'd physically lost a machine.

A few weeks ago, I'd switched my router out for OPNSense. I figured it was time to start cleaning up my network.

Over the course of tracking down IP addresses and assigning statics to MAC addresses, I spotted an IP I didn't recognize.

Being a home network, I'm pretty familiar with everything on the network by IP, so was a little taken aback.

I did some testing, found out that it was a Linux box. Cool.

I can SSH into it. Ok.

Logs show that it's running fine, no CPU/memory/hard drive issues. Nice.

So where is it?

Traceroute shows it's connected directly to the router... Maybe over an unmanaged switch...

Hostname is "localhost"... That's no help.

I've walked the network 4 times now, and God knows where it is.

I think maybe I'll just leave it alone. If it ain't broke...9 -

I've been coding for over 8 years, and whenever a recruiter says they'll have me do coding challenges or recite an algorithm from memory, I say, "You know, the longer you've been programming, the less you remember how to do this stuff, because you don't use it in real life." They say, "Well we just want to see how you think and how you solve problems." B.S.

These types of algorithmic programming challenges, apart from the simpler ones, don't show how you think. A lot of stuff like dynamic programming and other optimization problems was solved by PhD professors after many years of research. Nobody would think up these solutions on their own.

These programming challenges weed out experienced developers unless they want to take the time to re-learn this stuff. It explains why Google, Facebook or Amazon are filled with young and inexperienced developers, why it takes so many thousands of them to get anything done, and why they still have buggy products...19 -

I think Suicide Linux is a little heavy handed. Really? One mistake erases your hard drive? Please, let's make things interesting.

I would rather use Casino Linux.... try your luck. Any mistake sets a random single bit on your computer to 0. You could be lucky and it would set unused memory to 0, doing nothing. You could be an unlucky bastard and corrupt everything. You could be a super unlucky bastard and corrupt something important and only find out much, much too late.
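For anyone curious what one "spin" would amount to, here is a toy sketch. It assumes a `bytearray` standing in for memory; `clear_random_bit` is a made-up name, and nothing here touches real RAM.

```python
import random


def clear_random_bit(memory: bytearray, rng: random.Random) -> tuple[int, int]:
    """Force one randomly chosen bit of `memory` to 0.

    Returns (byte_index, bit_index) so the caller can see what was hit.
    """
    byte_index = rng.randrange(len(memory))
    bit_index = rng.randrange(8)
    # AND with a mask that has every bit set except the chosen one.
    memory[byte_index] &= ~(1 << bit_index) & 0xFF
    return byte_index, bit_index


# Hypothetical "machine": four unused (zeroed) bytes, four bytes of live data.
memory = bytearray(b"\x00" * 4 + b"\xff" * 4)
before = bytes(memory)
hit = clear_random_bit(memory, random.Random())
# If the bit was already 0, nothing changes: that is the lucky case.
print(hit, "corrupted" if bytes(memory) != before else "lucky")
```

Clearing a bit that is already 0 is a no-op, which is why hitting zeroed memory does nothing.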

I'm asking for $90,000 to start my business for a 2% share of my company9 -

Dev: Hi Guys, we've noticed on crashlytics that one of your screens has a small crash. Can you look?

Me: Ok we had a look, and it looks to us to be a memory leak issue on most of the other screens. Homepage, Search, Product page etc. all seem to have sizeable memory leaks. We have a few crashes on our screens saying iPhone 11s (which have 4 GB of RAM) are crashing with only 1% of RAM left.

What we think is happening is that we have weak references to avoid circular dependencies. Our weak references are most likely the only things the system would be able to free up, resulting in our UI not being able to contact the controller, breaking everything. Because of the custom libraries you built that we have to use, we can't really catch this.

There's not really a lot we can do. We are following Apple's recommendations to avoid circular dependencies and memory leaks. The instruments say our screens are behaving fine. I think you guys will have to fix the leaks. Sorry.
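A rough illustration of the failure mode being described, a UI object holding only a weak reference to its controller. It is written in Python rather than Swift, and the class names `Controller` and `View` are made up. It only shows a weak reference going dead once nothing holds the object strongly; it does not model memory pressure or the custom libraries mentioned above.

```python
import gc
import weakref


class Controller:
    def handle_tap(self) -> str:
        return "handled"


class View:
    def __init__(self, controller: Controller) -> None:
        # Weak back-reference: avoids a reference cycle, but the view
        # does not keep the controller alive on its own.
        self._controller = weakref.ref(controller)

    def tap(self) -> str:
        controller = self._controller()  # None once the controller is gone
        if controller is None:
            return "controller gone"
        return controller.handle_tap()


controller = Controller()
view = View(controller)
first = view.tap()    # controller is still strongly referenced here

del controller        # drop the last strong reference
gc.collect()          # not needed on CPython, harmless elsewhere
second = view.tap()   # the weak reference is now dead

print(first, "/", second)
```

Dev 1's suggestion amounts to making `_controller` a strong reference, which keeps the data reachable but brings back the cycle the weak reference was there to avoid.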

Dev 1: hhhmm, what if you create a circular dependency? Then the UI won't lose any of the data.

Dev 2: Have you tried looking at our analytics to understand how the user is getting to your screens?

=================================

I've been sitting here for 15 minutes trying to figure out how to respond before they come online. I am fucking horrified by those responses to "every one of your screens have memory leaks"2 -

I've optimised so many things in my time I can't remember most of them.

Most recently, something had to be the equivalent of `"literal" LIKE column` with a million rows to compare. Each literal took around a second on average to look up, for a service that needs to handle high load at low latency. This isn't an easy case to optimise; many people would consider it impossible.

It took me a couple of hours to reverse engineer the data and write a few-hundred-line implementation that would look it up in 1ms on average, with the worst possible case being very rare and not too far off that.

In another case there was a lookup of arbitrary time spans that most people would not bother to cache because the input parameters are too short-lived and variable to make a difference. I replaced the 50000+ line application acting as a middle man between the application and database with 500 lines of code that did the lookup faster and was able to implement a reasonable caching strategy. This dropped resource consumption by at least a factor of ten. Misses were cheaper and it was able to cache most cases. It also involved modifying the client library in C to stop it unnecessarily wrapping primitives in objects for the high-level language, which was causing it to consume excessive amounts of memory when processing huge data streams.

Another system would constantly download a huge data set for every point of sale, then parse and apply it. It had to reflect changes quickly but would download the whole dataset each time, containing hundreds of thousands of rows. I whipped up a system so that a single server (barring redundancy) would download it in a loop, parse it using C, which was much faster than the traditional interpreted language, then use a custom data differential format, TCP data streaming protocol, binary serialisation and LZMA compression to pipe it down to points of sale. This protocol also used versioning for catchup and differential combination for additional reduction in size. It went from being 30 seconds to a few minutes behind to being able to keep up to within a second of changes. The old system was also using so much bandwidth that it would reach the limit on ADSL connections and then get throttled. I looked at the traffic stats afterwards and it had dropped from dozens of terabytes a month to around a gigabyte or so a month for several hundred machines. From the drop in the graphs you'd think all the machines had been turned off. It could now happily run over GPRS or 56K.
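To give a feel for the "send only what changed, compressed" idea, here is a minimal sketch. It is not the actual system: JSON stands in for the custom binary serialisation, the snapshots are plain dicts keyed by row id, there is no versioning or streaming, and `make_delta` / `apply_delta` are invented names.

```python
import json
import lzma


def make_delta(old: dict, new: dict) -> bytes:
    """Encode only the rows that differ between two snapshots, LZMA-compressed."""
    delta = {
        # Rows that are new or whose value changed.
        "upsert": {k: v for k, v in new.items() if k not in old or old[k] != v},
        # Rows that disappeared.
        "delete": [k for k in old if k not in new],
    }
    return lzma.compress(json.dumps(delta, sort_keys=True).encode("utf-8"))


def apply_delta(old: dict, payload: bytes) -> dict:
    """Rebuild the new snapshot on the point of sale from its old one plus a delta."""
    delta = json.loads(lzma.decompress(payload).decode("utf-8"))
    deleted = set(delta["delete"])
    result = {k: v for k, v in old.items() if k not in deleted}
    result.update(delta["upsert"])
    return result


# Hypothetical price lists (row id -> price in cents).
old_snapshot = {"sku-1": 100, "sku-2": 250, "sku-3": 999}
new_snapshot = {"sku-1": 100, "sku-2": 275, "sku-4": 50}

payload = make_delta(old_snapshot, new_snapshot)
rebuilt = apply_delta(old_snapshot, payload)
print(rebuilt == new_snapshot)
```

With hundreds of thousands of rows and only a handful changing per update, the delta carries just those few rows, which is where a bandwidth drop of that kind would come from. For a tiny example like this one the compression container overhead dominates, so the payload size here is not representative.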