Search - "no memory"


Interview with a candidate. He calls himself a "C++ expert" on his resume. I think: "oh, great, I love C++ too, we will have an interesting conversation!"

Me: let's start with an easy one, what is 'nullptr'?

Him: (...some undecipherable sequence of words that didn't make any sense...)

In my mind: hmm, maybe I didn't understand correctly. Let's try again with something simpler and more generic.

Me: can you tell me about memory management in C++?

Him: you create objects on the stack with the 'new' keyword and they get automatically released when no other object references them

In my mind: wtf is this guy talking about? Is he confusing C++ with Java? Does he really know C++? Let's make him write some code, just to be sure

Me: can you write a program that prints numbers from 1 to 10?

Ten minutes and twenty mistakes later...

Me: okay, so what is this <int> here in angle brackets? What is a template?

Him: no idea

Me: you wrote 'cout', why do I sometimes see 'std::cout' instead? What is 'std'?

Him: no idea, never heard of 'std'

I think: on his resume he also said he is a Java expert. Let's see if he knows the difference between the two. He *must* have noticed that one is compiled to bytecode and the other to native code! Otherwise, how does he run his code? He must answer this question correctly:

Me: what is the difference between Java and C++? One has a Virtual Machine, what about the other?

Him: Java has the Java Virtual Machine

Me: yes, and C++?

Him: I guess C++ has a virtual machine too. The C++ Virtual Machine

Me (exhausted): okay, I don't have any other questions, we will let you know

And this is the story of how I got scared of interviews.
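For reference, here is an editor's sketch of what the warm-up questions were fishing for: printing 1 to 10 with std::cout, what a template and the "<int>" in angle brackets are, what nullptr is, and how stack and heap objects are released. The function names (print_one_to_ten, largest, points_somewhere, make_counter) are made up for illustration; this is not the interviewer's expected answer.

```cpp
#include <cassert>
#include <iostream>
#include <memory>
#include <vector>

// Prints 1 to 10, one per line. cout lives in namespace std, so it is
// written std::cout unless a using-declaration brings it into scope.
void print_one_to_ten() {
    for (int i = 1; i <= 10; ++i) {
        std::cout << i << '\n';
    }
}

// A function template: the compiler generates a separate function for each
// type T it is used with, e.g. largest<int> or largest<double>.
// std::vector<T> is itself a class template; std::vector<int> is the
// "<int> in angle brackets" case from the interview.
template <typename T>
T largest(const std::vector<T>& values) {
    assert(!values.empty());
    T best = values.front();
    for (const T& v : values) {
        if (v > best) best = v;
    }
    return best;
}

// nullptr is the C++11 null pointer literal (type std::nullptr_t). Unlike 0
// or NULL it is not an integer, so it cannot accidentally pick an int overload.
bool points_somewhere(const int* p) { return p != nullptr; }

// Memory management in miniature: `local` lives on the stack and is destroyed
// when the function returns; the int created by std::make_unique lives on the
// heap and is deleted when its owning std::unique_ptr is destroyed (RAII).
// `new` does not create stack objects, and there is no garbage collector;
// reference-counted sharing is opt-in via std::shared_ptr.
std::unique_ptr<int> make_counter(int start) {
    int local = start;                    // stack object
    return std::make_unique<int>(local);  // heap object with a single owner
}
```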

Being paid to rewrite someone else's bad code is no joke.

I'll give the dev this: the use of Gen 1, 2, and 3 Pokemon for variable names and class names is beyond fantastic in terms of memory and childhood nostalgia. It would be even more fantastic if he had spelt the names correctly, or used them to make a Pokemon game and NOT A FUCKING ACCOUNTANCY PROGRAM.

There's no correspondence in name according to type, or even number. Dev has just gone batshit, left zero comments, and now somehow Ryhorn is shitting out error codes because of errors existing in Charmeleon's asshole.

The things I do for money...

Toilets and race conditions!

A co-worker asked me what issues multi-threading and shared memory can cause. So I explained that stuff with the lock to him. He wasn't quite sure whether he got it.

Me: imagine you go to the toilet. You check whether there's enough toilet paper in the stall, and there is. BUT now someone else comes in, does their business, and uses up all the paper. CPUs can do shit very fast, can't they? Yeah, and now you're sitting on the bowl, and BAMM, out of paper. This wouldn't have happened if you had locked the stall, right?

Him: yeah. And with a single thread?

Me: well, if you're alone at home in your apartment, there's no reason to lock the door because there's nobody to interfere.

Him: ah, I see. And if I have two threads but no shared memory, it's as if my wife and I are at home, each with a toilet of our own, so we don't need to lock either.

Me: exactly!
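The toilet story is a check-then-act race condition. Here is a minimal C++ sketch of it, assuming a made-up Stall type as the shared resource: the unlocked version checks and uses the paper in two separate steps, while the locked version does both while holding a std::mutex, so no other thread can sneak in between.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical shared resource from the analogy: one stall, one paper roll.
struct Stall {
    int sheets = 0;
    std::mutex door;  // locking the stall door

    // UNSAFE if called from several threads: checking and using are two
    // separate steps, so another thread can use up the paper in between
    // (a check-then-act race; in C++ it is also a data race, i.e. UB).
    bool use_unlocked(int needed) {
        if (sheets >= needed) {  // check
            sheets -= needed;    // act
            return true;
        }
        return false;
    }

    // Safe: holding the mutex turns check-and-use into one indivisible step.
    bool use_locked(int needed) {
        std::lock_guard<std::mutex> guard(door);
        if (sheets >= needed) {
            sheets -= needed;
            return true;
        }
        return false;
    }
};

// Sends `visitors` threads into the same stall, each needing `needed` sheets,
// through the locked path. Returns how many of them were served.
int visitors_served(int sheets, int visitors, int needed) {
    Stall stall;
    stall.sheets = sheets;
    std::mutex tally_lock;
    int served = 0;
    std::vector<std::thread> threads;
    for (int i = 0; i < visitors; ++i) {
        threads.emplace_back([&] {
            if (stall.use_locked(needed)) {
                std::lock_guard<std::mutex> guard(tally_lock);
                ++served;
            }
        });
    }
    for (std::thread& t : threads) t.join();
    assert(stall.sheets >= 0);  // the roll can never go negative
    return served;
}
```

With a single thread (the apartment case), use_unlocked is perfectly fine; with two threads that share nothing (two toilets), there is simply nothing to lock.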

Account guy saw me coding...

account guy: so you type a lot.. how can you remember so much??

me: ??

account guy: I mean there is NO LOGIC in what you do, so you must read these things and type them here... you need to remember a lot.. right??

me: ohh... that... well.. I have very good memory :)

P.S. The last line was sarcasm.

Had a PR blocked yesterday. Oh god, have I introduced a memory leak? Have I not added unit tests? Is there a bug? What horrible thing have I unknowingly done?

... added comments to some code.

Yep apparently “our code needs to be readable without comments, please remove them”.

Time to move on, no signs of intelligent life here.

So I cracked prime factorization. For real.

I can factor a 1024-bit product in 11 hours on an i3.

No GPU acceleration, no massive memory overhead. Probably a lot faster with parallel computation on a better cpu, or even on a gpu.

4096 bits in 97-98 hours.

Verifiable. Not shitting you. My heart's beating out of my fucking chest. Maybe it was an act of god, I don't know, but it works.

What should I do with it?

In a user-interface design meeting over a regulatory compliance implementation:

User: “We’ll need to input a city.”

Dev: “Should we validate that city against the state, zip code, and country?”

User: “You are going to make me enter all that data? Ugh…then make it a drop-down. I select the city and the state, zip code auto-fill. I don’t want to make a mistake typing any of that data in.”

Me: “I don’t think a drop-down of every city in the US is feasible.”

Manager: “Why? There cannot be that many. Drop-down is fine. What about the button? We have a few icons to choose from…”

Me: “Uh..yea…there are thousands of cities in the US. Way too much data for anyone to realistically scroll through.”

Dev: “They won’t have to scroll, I’ll filter the list when they start typing.”

Me: “That’s not really the issue and if they are typing the city anyway, just let them type it in.”

User: “What if I mistype Ch1cago? We could inadvertently be out of compliance. The system should never open the company up for federal lawsuits”

Me: “If we’re hiring individuals responsible for legal compliance who can’t spell Chicago, we should be sued by the federal government. We should validate the data the best we can, but ultimately your department is responsible for data accuracy.”

Manager: “Now now…it’s all our responsibility. What is wrong with a few thousand item drop-down?”

Me: “Um, memory, network bandwidth, database storage, who maintains this list of cities? A lot of time and resources could be saved by simply paying attention.”

Manager: “Memory? Well, memory is cheap. If the workstation needs more memory, we’ll add more”

Dev: “Creating a drop-down is easy and selecting thousands of rows from the database should be fast enough. If the selection is slow, I’ll put it in a thread.”

DBA: “Table won’t be that big and won’t take up much disk space. We’ll need to set up stored procedures, and data import jobs from somewhere to maintain the data. New cities, name changes, etc.”

Manager: “And if the network starts becoming too slow, we’ll have the Networking dept. open up the valves.”

Me: “Am I the only one seeing all the moving parts we’re introducing just to keep someone from misspelling ‘Chicago’? I’ll admit I’m wrong or maybe I’m not looking at the problem correctly. The point of redesigning the compliance system is to make it simpler, not more complex.”

Manager: “I’m missing the point to why we’re still talking about this. Decision has been made. Drop-down of all cities in the US. Moving on to the button’s icon ..”

Me: “Where is the list of cities going to come from?”

<few seconds of silence>

Dev: “Post office I guess.”

Me: “You guess?…OK…Who is going to manage this list of cities? The manager responsible for regulations?”

User: “Thousands of cities? Oh no …no one in our area has time for that. The system should do it.”

Me: “OK, the system. That falls on the DBA. Are you going to be responsible for keeping the data accurate? What is going to audit the cities to make sure the names are properly named and associated with the correct state?”

DBA: “Uh..I don’t know…um…I can set up a job to run every night”

Me: “A job to do what? Validate the data against what?”

Manager: “Do you have a point? No one said it would be easy and all of those details can be answered later.”

Me: “Almost done, and this should be easy. How many cities do we currently have to maintain compliance?”

User: “Maybe 4 or 5. Not many. Regulations are mostly on a state level.”

Me: “When was the last time we created a new city compliance?”

User: “Maybe, 8 years ago. It was before I started.”

Me: “So we’re creating all this complexity for data that, realistically, probably won’t ever change?”

User: “Oh crap, you’re right. What the hell was I thinking…Scratch the drop-down idea. I doubt we’ll have a new city regulation anytime soon, and how hard is it to type in a city?”

Manager: “OK, are we done wasting everyone’s time on this? No drop-down of cities...next …Let’s get back to the button’s icon …”

Simplicity 1, complexity 0.

And, the other side, husbands 😂

——————————————————–

Dear Technical Support,

Last year I upgraded from Boyfriend 5.0 to Husband 1.0 and noticed a distinct slow down in overall system performance — particularly in the flower and jewelry applications, which operated flawlessly under Boyfriend 5.0. The new program also began making unexpected changes to the accounting modules.

In addition, Husband 1.0 uninstalled many other valuable programs, such as Romance 9.5 and Personal Attention 6.5 and then installed undesirable programs such as NFL 5.0, NBA 3.0, and Golf Clubs 4.1.

Conversation 8.0 no longer runs, and Housecleaning 2.6 simply crashes the system. I’ve tried running Nagging 5.3 to fix these problems, but to no avail.

What can I do?

Signed,

Desperate

——————————————————–

Dear Desperate:

First keep in mind, Boyfriend 5.0 is an Entertainment Package, while Husband 1.0 is an Operating System.

Please enter the command: “C:/ I THOUGHT YOU LOVED ME” and try to download Tears 6.2 and don’t forget to install the Guilt 3.0 update.

If that application works as designed, Husband 1.0 should then automatically run the applications Jewelry 2.0 and Flowers 3.5. But remember, overuse of the above application can cause Husband 1.0 to default to Grumpy Silence 2.5, Happy Hour 7.0 or Beer 6.1.

Beer 6.1 is a very bad program that will download the Snoring Loudly Beta.

Whatever you do, DO NOT install Mother-in-law 1.0 (it runs a virus in the background that will eventually seize control of all your system resources).

Also, do not attempt to reinstall the Boyfriend 5.0 program. These are unsupported applications and will crash Husband 1.0.

In summary, Husband 1.0 is a great program, but it does have limited memory and cannot learn new applications quickly.

You might consider buying additional software to improve memory and performance. We recommend Food 3.0 and Hot Lingerie 7.7.

Good Luck,

Tech Support

These facts are killing me

"During his own Google interview, Jeff Dean was asked the implications if P=NP were true. He said, 'P = 0 or N = 1.' Then, before the interviewer had even finished laughing, Jeff examined Google’s public certificate and wrote the private key on the whiteboard."

"Compilers don't warn Jeff Dean. Jeff Dean warns compilers."

"gcc -O4 emails your code to Jeff Dean for a rewrite."

"When Jeff Dean sends an Ethernet frame there are no collisions because the competing frames retreat back up into the buffer memory on their source NIC."

"When Jeff Dean has an ergonomic evaluation, it is for the protection of his keyboard."

"When Jeff Dean designs software, he first codes the binary and then writes the source as documentation."

"When Jeff has trouble sleeping, he Mapreduces sheep."

"When Jeff Dean listens to mp3s, he just cats them to /dev/dsp and does the decoding in his head."

"Google search went down for a few hours in 2002, and Jeff Dean started handling queries by hand. Search Quality doubled."

"One day Jeff Dean grabbed his Etch-a-Sketch instead of his laptop on his way out the door. On his way back home to get his real laptop, he programmed the Etch-a-Sketch to play Tetris."

"Jeff Dean once shifted a bit so hard, it ended up on another computer."

Apple has a real problem.

Their hardware has always been overpriced, but at least before it had defenders pointing out that it was at least capable and well made.

I know, I used to be one of them.

Past tense.

They have jumped the shark.

They now make pretentious hipster crap that is massively overpriced and doesn't have the basic features (like hardware ports) to enable you to do your job.

I mean, who needs an ESC key? What is wrong with learning to type CTRL-[ instead? Muscle memory? What's that?
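The CTRL-[ workaround isn't arbitrary. Under the classic terminal convention (simplified here; real keyboards and terminal emulators add their own rules), Ctrl+key sends the key's ASCII code with only the low five bits kept, and '[' (0x5B) becomes 0x1B, which is ESC. The ctrl helper below is a made-up name for illustration.

```cpp
#include <cassert>

// Classic terminal convention (simplified): Ctrl+key keeps only the low five
// bits of the key's ASCII code. '[' is 0x5B, and 0x5B & 0x1F is 0x1B (ESC),
// which is why Ctrl-[ works as Escape in terminal programs such as Vim.
constexpr char ctrl(char key) {
    return static_cast<char>(key & 0x1F);
}

static_assert(ctrl('[') == 0x1B, "Ctrl-[ produces ESC");
static_assert(ctrl('C') == 0x03, "Ctrl-C produces ETX (interrupt)");
static_assert(ctrl('M') == '\r', "Ctrl-M produces carriage return");
```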

They have gone from "It just works" to "It just doesn't work" in no time at all.

And it is Developers who are most pissed off. A tiny demographic who won't be visible on the financial bottom line until their newly absent software suddenly makes itself known two, three years down the line.

By which time it is too late to do anything.

But hey! Look how thin (and thermally throttled) my new laptop is!

Me: you should not open that log file in Excel, it's almost 700 MB

Client: it's okay, my computer has 4 GB of RAM

Me: *looking at clients computer crashing*

Client: the file is broken!

Me: no, you just need to use a more memory-efficient tool, like R, SAS, Python, C#, or like anything else!
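What makes those tools "memory efficient" for a job like this is mostly that they can stream the file instead of loading all 700 MB into a grid at once. A minimal C++ sketch of the streaming approach; the function names and the "ERROR" filter are illustrative assumptions, not something from the original story.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>

// Counts lines that contain `needle`, reading the stream one line at a time.
// Memory use stays around one line plus the stream's buffer, however large
// the file is; pass a std::ifstream to scan a real log file.
std::size_t count_matching_lines(std::istream& in, const std::string& needle) {
    std::size_t count = 0;
    std::string line;
    while (std::getline(in, line)) {
        if (line.find(needle) != std::string::npos) {
            ++count;
        }
    }
    return count;
}

// Convenience overload for text that is already in memory (handy for tests).
std::size_t count_matching_lines_in(const std::string& text, const std::string& needle) {
    std::istringstream in(text);
    return count_matching_lines(in, needle);
}
```

For a real file, something like std::ifstream log("server.log"); count_matching_lines(log, "ERROR"); would work, where server.log is a placeholder name.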

Senior development manager in my org posted a rant in slack about how all our issues with app development are from

“Constantly moving goalposts from version to version of Xcode”

It took me a few minutes to calm myself down and not reply. So I’ll vent here to myself as a form of therapy instead.

Reality Check:

- You frequently discuss the fact that you don’t like following any of Apple’s standards or app development guidelines. Bit rich to say the goalposts are moving when you have your back to them.

- We have a custom everything (navigation stack handler, table view like control etc). There’s nothing in these that can’t be done with the native ones. All that wasted dev time is on you guys.

- Last week a guy held a session about all the memory leaks he found in these custom libraries/controls. Again, your teams don’t know the basic fundamentals of the language or programming in general really. Not sure how that’s Apple’s fault.

- Your “great emphasis on unit testing” has gotten us 21% coverage on iOS and an Android team recently said to us “yeah looks like the tests won’t compile. Well we haven’t touched them in like a year. Just ignore them”. Stability of the app is definitely on you and the team.

- Having half the app in React Native and half in native (split between Objective-C and Swift) is making nobody’s life easier.

- The company forces us to use a custom built CI/CD solution that regularly runs out of memory, reports false negatives and has no specific mobile features built in. Did Apple force this on us too?

- Shut the fuck up

Yesterday was Friday the 13th, so here is a list of my worst dev nightmares without order of significance:

1) Dealing with multithreaded code, especially on Android

2) Javascript callback hell

3) Dependency hell, especially in Python

4) Segfaults

5) Memory Leaks

6) git conflicts

7) Crazy regexes and string manipulations

8) css. Fuck css.

9) not knowing jack shit about something but being expected by others to produce a result with it.

10) 3+ hours of debugging with no success

Post yours.

We had the most fucking retarded client today. No, seriously, if you ever beat their level you have a serious mental issue.

They had a mail problem that had to be checked on another company's side, since we don't have those fucking logs.

Their statements:

- they entered an email address in the text field of mail-tester.com and were furious that they didn't get the results sent to them.

Note: it says right on that page that YOU JUST NEED TO SEND AN EMAIL TO THE ADDRESS WHICH IS PRE-ENTERED IN THAT TEXT FIELD.

- their company has been a reputable 'conservative' company which hasn't done anything wrong since 19xx so the fact that they'd end up on a blacklist was FUCKING OUTRAGEOUS and bullshit.

- our support wasn't willing to help and only willing to tell them outrageous lies.

- the other IT company was only reachable at a premium number and thus expensive to call.

Emails went back and forth and finally they CC'd the other company. Their reply was fucking priceless:

"We never had a premium number. Feel free to call us on *number* any time during the week between *time* and *time*."

Then he told us that we should just go back to sleep.

It was way worse than that but due to privacy and my own memory this is all I can tell.

Just wow.

Story about an obscure bug: https://twitter.com/mmalex/status/...

"We had a ‘fun’ one on LittleBigPlanet 1: 2 weeks to gold, a Japanese QA tester started reliably crashing the game by leaving it on over night. We could not repro. Like you, days of confirmation of identical environment, os, hardware, etc; each attempt took over 24h, plus time differences, and still no repro.

"Eventually we realised they had an eye toy plugged in, and set to record audio (that took 2 days of iterating) still no joy.

"Finally we noticed the crash was always around 4am. Why? What happened only in Japan at 4am? We begged to find out.

"Eventually the answer came: cleaners arrived. They were more thorough than our cleaners! One hour of vacuuming near the eye toy- white noise- caused the in game chat audio compression to leak a few bytes of memory (only with white noise). Long enough? Crash.

"Our final repro: radios tuned to noise, turned up, and we could reliably crash the game. Fix took 5 minutes after that. Oh, gamedev...."

Google: “Your websites must load the first byte in under 500ms and be fully loaded with no render blocking and local caching of all external site callouts to even begin to rank in Google searches.”

Me: “Ok, Google. Your wish is my command.”

*Looks at Chrome’s memory usage to load a blank page*


Experience that made me feel like a dev badass?

Users requested the ability to 'send' information from one application to another. A couple of our senior devs started out saying it would be impossible (there is no way to pass objects across a machine's memory boundary), then entertained the idea of using the various messaging frameworks such as Microsoft's ServiceBus and RabbitMQ, but came up with a plan to use 2 WebAPI services (one messenger, one receiver) along with a homegrown messaging API (the clients would 'poll' the services looking for messages) because ServiceBus, RabbitMQ, etc. might not be able to scale to our needs. Their initial estimates were about 6 months of development for the two services, two servers' worth of hardware, MSSQL Server licenses, and an additional padded 6 months for client modifications. Very...very proud of their detailed planning.

I thought ...hmmm...I've done memory maps and created simple TCP/IP hosts that could send messages back and forth between other apps (non-UI), WPF couldn't be that much different.

In an afternoon, I came up with this (see attached), and showed the boss. Guess which solution we're going with.

The two devs are still kinda pissed at me. One still likes to say "our hero returns" as I walk into the room....frack him.
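The attached WPF solution isn't reproduced here, so this is only a rough illustration of the "simple TCP/IP host" idea: one process listens on a loopback port, another connects and writes a message. It is a POSIX C++ sketch with hypothetical function names (open_listener, send_message, receive_message); on Windows, where WPF runs, the same pattern would use Winsock or .NET sockets, and a real version would need message framing and proper error reporting.

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Receiver setup: listen on 127.0.0.1 with an OS-chosen port, reported
// through port_out. Returns the listening fd, or -1 on failure.
int open_listener(std::uint16_t& port_out) {
    int fd = ::socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) return -1;
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(0);  // port 0: let the OS pick a free one
    socklen_t len = sizeof addr;
    if (::bind(fd, reinterpret_cast<sockaddr*>(&addr), len) < 0 ||
        ::listen(fd, 8) < 0 ||
        ::getsockname(fd, reinterpret_cast<sockaddr*>(&addr), &len) < 0) {
        ::close(fd);
        return -1;
    }
    port_out = ntohs(addr.sin_port);
    return fd;
}

// Receiver: accept one connection and read until the sender closes it.
std::string receive_message(int listen_fd) {
    int conn = ::accept(listen_fd, nullptr, nullptr);
    if (conn < 0) return {};
    std::string msg;
    char buf[512];
    ssize_t n;
    while ((n = ::read(conn, buf, sizeof buf)) > 0) {
        msg.append(buf, static_cast<std::size_t>(n));
    }
    ::close(conn);
    return msg;
}

// Sender: connect to the receiver's port, write the whole message, close.
bool send_message(std::uint16_t port, const std::string& msg) {
    int fd = ::socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) return false;
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(port);
    bool ok = ::connect(fd, reinterpret_cast<sockaddr*>(&addr), sizeof addr) == 0;
    std::size_t sent = 0;
    while (ok && sent < msg.size()) {
        ssize_t n = ::write(fd, msg.data() + sent, msg.size() - sent);
        if (n <= 0) {
            ok = false;
        } else {
            sent += static_cast<std::size_t>(n);
        }
    }
    ::close(fd);
    return ok;
}
```

Because the socket is already listening, the kernel completes the connection before accept() is called, so for a short message the sender and receiver can even run one after the other in a single process, which makes a quick local test easy.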


Someone on a C++ learning and help discord wanted to know why the following was causing issues.

    char * get_some_data() {
        char buffer[1000];
        init_buffer(&buffer[0]);
        return &buffer[0];
    }

I told them they were returning a pointer to a stack allocated memory region. They were confused, didn't know what I was talking about.
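In that snippet, buffer is an automatic (stack) array that is destroyed when get_some_data() returns, so the returned pointer dangles and reading through it is undefined behavior. One common C++ fix, sketched here as an editor's illustration, is to return an object that owns its storage. The two-argument init_buffer below is a hypothetical stand-in for the poster's function, which isn't shown.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the poster's init_buffer(); unlike the original,
// it also takes the size so it cannot write past the end.
void init_buffer(char* buf, std::size_t size) {
    for (std::size_t i = 0; i < size; ++i) {
        buf[i] = static_cast<char>('a' + i % 26);
    }
}

// Fixed version: std::vector keeps its elements on the heap and hands that
// ownership to the caller on return, so nothing dangles after the function
// finishes, and the memory is freed automatically when the caller is done.
std::vector<char> get_some_data() {
    std::vector<char> buffer(1000);
    init_buffer(buffer.data(), buffer.size());
    return buffer;
}
```

Other reasonable options are returning a std::array<char, 1000> by value or letting the caller pass in a buffer it owns; raw malloc() also "works" but leaves the caller responsible for calling free().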

I pointed them to two pretty decently written and succinct articles, the first about stack vs. heap, and the second describing the theory of ownership and lifetimes. I told them to give both a read, to try to understand them as best they could, and to ping me with any questions. Then I promised to explain their exact issue.

Silence for maybe five minutes. Then they disregarded the articles and posted other code, saying "maybe it's because of this...". I quickly pointed them back at their original code (the above) and said "this is 100% an issue you're facing. Have you read the articles?"

"Nope" they said, "I just skimmed through them, can you tell me what's wrong with my code?"

Someone else chimed in and said "you need to just use malloc()." In a C++ room, no less.

I said "@OtherGuy please don't blindly instruct people to allocate memory on the heap if they do not understand what the heap is. They need to understand the concepts and the problems before learning how C++ approaches the solution."

I was quickly PM'd by one of the server's mods and told that I was being unhelpful and that I needed to reconsider my tone.

Fuck this industry. I'm getting so sick of it.

Today, I was told to investigate why the software doesn't work on "some" computers. I had no previous experience with that particular software but I just had to make some tests... easy, right? As soon as I ran the software, my computer crashed (I literally had to restart the PC). I asked my colleagues if I did something wrong, but the setup seemed OK.

Later, in a random discussion about the software I found out it does "a little memory allocation". I opened the performance tab in task manager and ran the software again. In an instant, the RAM went from 1.3GB to 7.66GB (my pc has 8GB of RAM).

In an attempt to find out how such a monstrosity was created, I discovered that the developer who made the software had 16GB of RAM on his PC.

I have found something that eats RAM more than Chrome... brace yourselves.

One of my friends at college asked me why her computer is running slow even when she is running only Chrome.

Me: how much memory does it have?

Her: 1TB.

Me (somewhat confused): no no I meant RAM.

Her: yeah yeah it's one TB. I read the specifications of the laptop.

Me: *in my mind, fucking read it again* please read it again. You must have misread it.

Her( grinning face ): alright.

Guess who didn't talk to me for a week. 😂

Teacher: Computer settings are stored in the ROM on the motherboard.

Me: *internally* Uhm, yea, sure... and I am the pope

Me: Sorry to interrupt you but how come the BIOS settings get reset when the CMOS battery is pulled out or dies if they are stored in ROM?

Teacher: ....

Me: *internally* yea, that's what I thought, you have no clue what you are even saying - the BIOS is stored in ROM or flash memory while the settings are stored in NVRAM, also called CMOS memory...

I had to open the desktop app to write this because I could never write a rant this long on the app.

This will be a well-informed rebuttal to the "arrays start at 1 in Lua" complaint. If you have ever said or thought that, I guarantee you will learn a lot from this rant and probably enjoy it quite a bit as well.

Just a tiny bit of background information on me: I have a very intimate understanding of Lua and its C API. I have used this language for years and love it dearly.

[START RANT]

"arrays start at 1 in Lua" is factually incorrect because Lua does not have arrays. From their documentation, section 11.1 ("Arrays"), "We implement arrays in Lua simply by indexing tables with integers."

From chapter 2 of the Lua docs, we know there are only 8 types of data in Lua: nil, boolean, number, string, userdata, function, thread, and table.

The only unfamiliar thing here might be userdata. "A userdatum offers a raw memory area with no predefined operations in Lua" (section 26.1). Essentially, it's for the API to interact with Lua scripts. The point is, this isn't a fancy term for array.

The misinformation comes from the table type. Let's first explore, at a low level, what an array is. An array, in programming, is a collection of data items all in a line in memory (the OS may not physically put them in a line, but they act as if they are). In most syntaxes, you access an array element similar to:

array[index]

Let's look at C, so we have some solid reference. "array" is the name of the array, but what it really does is keep track of the starting location of the array in memory. Memory in computers is addressed by number: in a very basic sense, the first byte of your RAM is memory location (referred to as an address) 0. "array" might be, for example, address 543745. This is where your data starts. An array can only be made up of one type, so that each element in it is EXACTLY the same size. That is how indexing an array works: if you know where your array starts, and you know how large each element is, you can find the element at index 6 (the 7th element) by starting at the start of the array and adding 6 times the size of the data in that array.

Tables are incredibly different. A table maps keys to values, so the formula above doesn't apply; instead, you perform a lookup: the key is hashed and used to find the slot that holds it. (The reference implementation does keep integer keys 1..n in a contiguous internal "array part" as an optimization, but the language itself only exposes lookups by key.) In Lua, you can do:

a = {1, 5, 9};
a["hello_world"] = "whatever";

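a now holds 4 entries (the values 1, 5, 9 under the automatic keys 1, 2, 3, plus "whatever" under the key "hello_world"), but a[4] is nil: even though there are 4 items in the table, a[4] looks for something "named" 4, not the 4th entry. The length operator agrees: #a is 3, because it measures the integer sequence 1, 2, 3 and ignores "hello_world".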
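To make the key-versus-position distinction concrete, here is a toy C++ model of that example. It is only an illustration, not how Lua implements tables: a std::map keyed by "integer or string", a make_sequence helper that hands out the automatic keys 1, 2, 3, and a sequence_length helper that mimics # for a table whose integer keys form a proper sequence. All three names are hypothetical, and values are stored as strings for simplicity.

```cpp
#include <cassert>
#include <cstddef>
#include <initializer_list>
#include <map>
#include <string>
#include <variant>

// Toy Lua table: a key is an integer or a string; every access is a lookup
// by key, never an offset calculation.
using Key = std::variant<long long, std::string>;
using Table = std::map<Key, std::string>;

// Mimics a constructor like {1, 5, 9}: positional values get the automatic
// integer keys 1, 2, 3, ...
Table make_sequence(std::initializer_list<std::string> values) {
    Table t;
    long long k = 1;
    for (const std::string& v : values) {
        t[Key{k}] = v;
        ++k;
    }
    return t;
}

// Mimics # for a proper sequence: counts the keys 1, 2, 3, ... that are
// present before the first missing one.
std::size_t sequence_length(const Table& t) {
    std::size_t n = 0;
    while (t.count(Key{static_cast<long long>(n + 1)}) != 0) {
        ++n;
    }
    return n;
}
```

Mirroring the Lua snippet: make_sequence({"1", "5", "9"}) plus a "hello_world" entry gives 4 entries in total, a sequence length of 3, and no entry at all under the key 4.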

This is the difference between indexing and lookups. But you may say,

"Algo! If I do this:

a = {"first", "second", "third"};
print(a[1]);

...then "first" appears in my console!"

Yes, that's correct. Lua, because it is a nice language, makes keys optional in table constructors by automatically giving positional values an integer key. These keys start at 1. Why can they start at 1? Let's look at that formula for arrays again:

Given array "arr", size of data type "sz", and index "i", find the desired element ("el"):

el = arr + (sz * i)

This NEEDS to start at 0 and not 1, because otherwise "sz" would always be added to the start address of the array and the first element would ALWAYS be skipped. Tables don't have this constraint: they have no fixed element size and never use this formula, because a lookup finds a key whatever number it is. This is also why actual arrays are so fast no matter their size: indexing is one multiplication and one addition, while a table lookup first has to hash the key and find its slot.
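The formula el = arr + (sz * i) is exactly what a C or C++ compiler does for arr[i]: pointer arithmetic scales the index by the element size. A small sketch, assuming a typical flat-memory platform where converting pointers to std::uintptr_t gives plain byte addresses; element_address is a made-up helper name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// el = arr + (sz * i): start address plus i times the element size.
// With i = 0 the result is the start address itself, i.e. the first element.
template <typename T>
std::uintptr_t element_address(const T* arr, std::size_t i) {
    return reinterpret_cast<std::uintptr_t>(arr) + i * sizeof(T);
}
```

Comparing element_address(arr, i) against the real address &arr[i] for every index of a small int array shows the two agree, and index 0 lands on the very first element, which is why zero-based indexing falls out of the formula naturally.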

That felt good to get off my chest. Yes, Lua could start the auto-key at 0, but that might confuse people into thinking tables are arrays... well, I guess there's no avoiding that either way.

I taught my 9-year-old sister to SSH from my Arch Linux system to an Ubuntu system; she was amazed to see a terminal and Firefox launching remotely. Next I taught her to murder and eat all the memory (I love Linux, but as Batman would say, one should also know its weaknesses). Now she can do rm -rf / --no-preserve-root and the fork bomb. She's amazed at the power of one-liners. I'll be teaching her Python next, as she has grown fond of my Raspberry Pi Zero W with Blinkt and pHAT DAC, making rainbows and playing songs via mpg123.

I had her play with Makey Makey when it first came out, but it isn't as interesting. Any suggestions that could be good for her learning phase?

Storytime

A story about an Android TVbox which decided to become an iPad

Several years ago we bought an Android tv-box.

It served me and my family well for several years.

Specs are not that important in this story, but here they are:

Android 4.4

1GB RAM

Amlogic quad-core 1.4 GHz

8GB memory.

This device served us well: online TV, browsing, music, file sharing and so on. But recently the cheap Chinese memory decided to take a break and damaged the ROM. Because of that, the device wouldn't boot. The only option was to take it apart, "short circuit" certain legs on the memory chip to make it boot from an SD card, and install new firmware. After that, the tv-box worked well again.

However, the memory glitched again and again, and this algorithm was repeated for several months.

But that is not what this story is about.

One day the memory went completely crazy and there was no way to install new firmware on it. It just hung on install. (BTW, it was the official firmware for this device.)

But after countless attempts it finally worked! It installed the firmware and booted into launcher and connected to WiFi!

But now comes the most interesting part.

It was not android anymore.

It decided to become an iPad.

My dad logged in to his Google account via the tv-box and got an email saying that somebody had connected from our IP via an iPad (we don't have an iPad) using the Safari browser! The stock browser is not Safari.....

"Ok, nvm, crazy glitch." - we thought.

But the preinstalled Play Market wouldn't launch, because it told us that we were trying to connect from an iPad.

And the Google Chrome page suggested downloading Chrome for iPad.

And everything was acting like it is an iPad.

OK, downloaded iTunes, why not??? ._.

Tried to install Elixir for Android via an APK from a flash drive, but then the memory glitched one more time, everything went black, and the tv-box had a damaged ROM again...

After that we decided to not torment it anymore...

That's it. Poor Android TVbox that all its life dreamed of becoming an iPad. Rest in peace.

I just mistyped a keyboard shortcut that caused my computer to say «I AM FILO AND EVERYONE LOVES ME» at full volume.

I have no memory of leaving a script attached to some random shortcut, and I can't find the setting anywhere.

Young me was a narcissistic asshole.

Would the web be better off if there were zero frontend scripting? There would be HTML5 video/audio, but zero client-side JS.

Browsers wouldn't understand script tags, they wouldn't have javascript engines, and they wouldn't have to worry about new standards and deprecations.

Browsers would be MUCH more secure, and use way less memory and CPU resources.

What would we really be missing?

If you build less bloated pages, you would not really need ajax calls, page reloads would be cheap. Animated menus do not add anything functionally, and could be done using css as well. Complicated webapps... well maybe those should just be desktop/mobile apps.

Pages would contain less annoying elements, no tracking or crypto mining scripts, no mouse tracking, no exploitative spam alerts.

Why don't we just deprecate JS in the browser, completely?

I think it would be worth it.

A small bug is found.

Chad dev:

😎 *Exists*

> Writes a simple ad hoc solution in a few lines

> Self documenting code with constant run time

> No external dependencies needed

> Fixes the bug, easy to test and does not introduce any new issues

That guy nobody likes (AKA. regex simp coder):

🤡 'This can be "simplified" into oNE LiNe'

> Writes a long regex expression that has to line wrap the editor window several times

> Writes an essay in the comments to explain its apparent brilliance to the peasant reader

> Exponential run time (bwahahah), excessive memory requirements

> Needs to import additional frameworks, requires more testing that will delay release schedule

> Also fixes bug but the software now needs 2x ram to run and is 3x slower

> Really puts the "simp" in simplified, but not the way you would expect

Him: Relational databases are stupid: SQL injections, complex relationships, redundant syntax and so much more!

Me: so what should we use instead? Mongo, redis, some other fancy new db?

Him: no, I have this class in Java, it loads all the data into memory and handles transfers with http.

Me: ...... Bye!

Why computers are like men:

In order to get their attention, you have to turn them on.

They have a lot of data, but are still clueless.

They are supposed to help you solve problems, but half the time they are the problem.

As soon as you commit to one, you realize that if you had waited a little longer, you could have had a better model.

Why computers are like women:

No one but the Creator understands their internal logic.

The native language they use to communicate with other computers is incomprehensible to everyone else.

Even your smallest mistakes are stored in long-term memory for later retrieval.

As soon as you make a commitment to one, you find yourself spending half your paycheck on accessories for it.

I like memory hungry desktop applications.

I do not like sluggish desktop applications.

Allow me to explain (although this may already be obvious to quite a few of you):

Memory usage is stigmatized quite a lot today, and for good reason. Not only is it an indication of poor optimization, but not too many years ago, memory was a much more scarce resource.

And something that started as a joke in that era is true in this era: free memory is wasted memory. You may argue, correctly, that free memory is not wasted; it is reserved for future potential tasks. However, if you have 16GB of free memory and don't have any plans to begin rendering a 3D animation anytime soon, that memory is wasted.

Linux understands this. Linux actually reports three states for memory: used, free, and available. Used and free memory are the usual. However, Linux automatically caches files that you use in RAM, and that cache counts as "available" memory. Available memory can be used at any time by programs, with the kernel simply dumping whatever was previously occupying it.
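On Linux the numbers behind this live in `/proc/meminfo`: `MemFree` is memory nobody is using at all, while `MemAvailable` (added in kernel 3.14) is the kernel's estimate of how much memory could be handed to new programs without swapping, reclaimable page cache included. Below is a minimal C sketch for comparing the two on your own machine. `meminfo_kb` and `read_meminfo` are hypothetical helper names, and the parser assumes the usual `Key:   value kB` line format.

```c
#include <stdio.h>
#include <string.h>

/* Returns the value in kB of `key` (e.g. "MemAvailable") from text in
 * /proc/meminfo format ("Key:   12345 kB" per line), or -1 if absent. */
long meminfo_kb(const char *text, const char *key)
{
    size_t klen = strlen(key);
    const char *line = text;

    while (line != NULL && *line != '\0') {
        /* Match the whole key, so "Mem" does not match "MemFree". */
        if (strncmp(line, key, klen) == 0 && line[klen] == ':') {
            long value;
            return sscanf(line + klen + 1, "%ld", &value) == 1 ? value : -1;
        }
        line = strchr(line, '\n');   /* advance to the next line */
        if (line != NULL)
            line++;
    }
    return -1;
}

/* Linux only: reads /proc/meminfo into buf. Returns 0 on success. */
int read_meminfo(char *buf, size_t size)
{
    if (size == 0)
        return -1;
    FILE *f = fopen("/proc/meminfo", "r");
    if (f == NULL)
        return -1;                   /* not Linux, or /proc not mounted */
    size_t n = fread(buf, 1, size - 1, f);
    buf[n] = '\0';
    fclose(f);
    return 0;
}
```

Typical use: `char buf[8192]; if (read_meminfo(buf, sizeof buf) == 0) printf("free %ld kB, available %ld kB\n", meminfo_kb(buf, "MemFree"), meminfo_kb(buf, "MemAvailable"));`. On a machine that has been up for a while, the gap between the two figures is largely the file cache the post is talking about.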

And as you well know, RAM is much faster than even an SSD. Programs which are memory heavy COULD (< important) be holding things in memory rather than having them sit on the HDD, waiting to be slowly retrieved. I'd much rather a web browser take up 4 GB of RAM than sit around waiting for it to read cached images off my hard drive.

Now, allow me to reiterate: unoptimized programs still piss me off. There's no need for that Electron-based webcam image capture app to take three gigs of memory upon launch. But I love it when programs use the hardware I spent money on to run smoother.

Don't hate a program simply because it's at the top of Task Manager.

F*cking Samsung's alarm clock.

I really needed to wake up early today, so I added a secondary alarm a little bit earlier. It was supposed to ring at 5:20 and the second one at 5:30. But Samsung said no.

An update came through overnight and the phone was restarted in the process. Why it can't keep memory unlocked I don't understand, but OK. It effectively meant the alarm clock couldn't trigger. So I woke up at 6:35 and got in more than an hour late.

Why did such basic functionality fail? My old Sony Ericsson T290i could ring even when powered down. So could my Nokia, and after that my Lumia with Windows Phone 10. Why can't Samsung just be normal?

Boss wants to scale our webservers because it seems they're having performance/capacity issues....

I'VE BEEN TELLING HIM FOR WEEKS IT'S NOT THE SERVERS!!! IT'S THE FACT THAT EVERY SINGLE QUERY HITS A SINGLE MONGODB... AND NO CACHE EITHER... AND THE DB CAN'T BE ENTIRELY LOADED INTO MEMORY AS IT'S TOO BIG FOR RAM ON A SINGLE SERVER...

HOW THE FUCK CAN YOU SCALE IF EVERYTHING HAS A DEPENDENCY ON 1 NON-DISTRIBUTED DATABASE?

It's March, I'm in my final year of university. The physics/robotics simulator I need for my major project keeps running into problems on my laptop running Ubuntu, and my supervisor suggests installing Mint as it works fine on that.

I back up what's important across a 4GB and a 16GB memory stick. All I have to do now is boot from the Mint installation disk and install from there. But no, I felt dangerous. I was about to wipe everything anyway, so why not `sudo rm -rf /*`? After a couple of seconds it was done. I turned it off, then back on. I wanted to move my backups to Windows, which I was dual booting alongside Ubuntu.

No OS found. WHAT. Called my dad, asked if what I thought happened was true, and learnt that the root directory contains ALL files and folders, even those on other partitions mounted under it. Gone was the past 2 1/2 years of uni work and notes not on the uni computers, and the 100GB+ of other stuff on there.

At least my current stuff was backed up.

TL;DR: `sudo rm -rf /*` because I'm installing another Linux distro. Destroys Windows too, and 2 1/2 years of uni work.

Programmers then:

No problem NASA mate, we can use these microcontrollers to bring men to the moon no problem!

Programmers now:

Help Stack Overflow, my program is kill.. isn't 90GB (looking at you Evolution) and 400GB of virtual memory (looking at you Gitea) for my app completely normal? I thought that unused memory was wasted memory!1!

(400GB of physical memory is something you only find in the most high-end servers, btw)

Meet 'SBI Online' app from Play Store, in their own words:

What is it supposed to do?

"Experience the new Retail Internet Banking of SBI"

What does it actually do?

"SBI online app will redirect to SBI Retail Internet Banking (online SBI) site"

Why do they have an app?

"No need to remember URL",

"Less memory space required on device"

App storage space?

F**king 2.6 MB, just to redirect users to their website in a third-party browser.

A few years ago I was browsing Bash.org, and a user posted that he'd physically lost a machine.

A few weeks ago, I'd switched my router out for OPNsense. I figured it was time to start cleaning up my network.

Over the course of tracking down IP addresses and assigning static IPs to MAC addresses, I spotted an IP I didn't recognize.

Since it's a home network, I'm pretty familiar with everything on it by IP, so I was a little taken aback.

I did some testing, found out that it was a Linux box. Cool.

I can SSH into it. Ok.

Logs show that it's running fine, no CPU/memory/hard drive issues. Nice.

So where is it?

Traceroute shows it's connected directly to the router... Maybe over an unmanaged switch...

Hostname is "localhost"... That's no help.

I've walked the network 4 times now, and God knows where it is.

I think maybe I'll just leave it alone. If it ain't broke...

!rant, but you're my friends and I want to share my day...

We've had a problem open since last March (before I started), but our teams identified the issue with the customer's code 2 years ago. No one made progress on it until I took it over. The newest version was deployed 3 months ago and has no memory leak. I closed out our oldest problem today.

On a personal note, I got quotes for my DJ and photographer for my wedding next month, and the price for both is what I would've been willing to pay for one. My wedding was supposed to be very inexpensive, with these and my bartender being the most expensive parts, but due to unfortunate events, my wedding is 4x the cost (have to use a venue, backyard unavailable, which changes ALL my plans).

I could bitch about XSLT again, as that was certainly painful, but that’s less about learning a skill and more about understanding someone else’s mental diarrhea, so let me pick something else.

My most painful learning experience was probably pointers, but not pointers in the usual sense of `char *ptr` in C and how they’re totally confusing at first. I mean, it was that too, but in addition it was how I had absolutely none of the background needed to understand them, not having any learning material (nor guidance), nor even a typical compiler to tell me what I was doing wrong. And on top of all of that, I could only run code on a device that would crash/halt/freak out whenever I made a mistake. It was an absolute nightmare.

Here’s the story:

Someone gave me the game RACE for my TI-83 calculator, but it turned out to be an unlocked version, which means I could edit it and see the code. I discovered this later on by accident while trying to play it during class, and when I looked at it, all I saw was incomprehensible garbage. I closed it, and the game no longer worked. Looking back, I must have changed something, but at the time I thought it was just magic. It took me a long time to get curious enough to look at it again.

But in the meantime, I ended up playing with these “programs” a little, and made some really simple ones, and later some somewhat complex ones. So the next time I opened RACE again I kind of understood what it was doing.

Moving on, I spent a year learning TI-Basic, and eventually reached the limit of what it could do. Along the way, I learned that all of the really amazing games/utilities that were incredibly fast, had greyscale graphics, lowercase text, no runtime indicator, etc. were written in “Assembly,” so naturally I wanted to use that, too.

I had no idea what it was, but it was the obvious next step for me, so I started teaching myself. It was Z80 Assembly, and there were practically no docs, no resources, nothing helpful online.

I found the specs, and a few terrible docs and other sources, but with only one year of programming experience, I didn’t really understand what they were telling me. This was before Stack Overflow, etc., too, so what little help I found was mostly from forum posts, IRC (mostly got ignored or made fun of), and reading other people’s source when I could find it. And usually that was less than clear.

And here’s where we dive into the specifics. Starting with so little experience, and in TI-Basic of all things, meant I had zero understanding of pointers, memory and addresses, the stack, heap, data structures, interrupts, clocks, etc. I had mastered everything TI-Basic offered, which astoundingly included arrays and matrices (six of each), but it hid everything else except basic logic and flow control. (No, there weren’t even functions; it has labels and goto.) It has 27 numeric variables (A-Z and theta, can store either float or complex numbers), 8 Lists (numeric arrays), 6 matrices (2d numeric arrays), 10 strings, and a few other things like “equations” and literal bitmap pictures.

Soo… I went from knowing only that to learning pointers. And pointer math. And data structures. And pointers to pointers, and the stack, and function calls, and all that goodness. And remember, I was learning and writing all of this in plain Assembly, in notepad (or on paper at school), not in C or C++ with a teacher, a textbook, SO, and an intelligent compiler with its incredibly helpful type checking and warnings. Just raw trial and error. I learned what I could from whatever cryptic sources I could find (and understand) online, and applied it.

But actually using what I learned? If a pointer was wrong, it resulted in unexpected behavior, memory corruption, freezes, etc. I didn’t have a debugger, an emulator, etc. I had notepad, the barebones compiler, and my calculator.

Also, iterating meant changing my code, recompiling, factory resetting my calculator (removing the battery for 30+ sec) because bugs usually froze it or corrupted something, then transferring the new program over, and finally running it. It was soo slowwwww. But I made steady progress.

Painful learning experience? Check.

Pointer hell? Absolutely.

Looks like /dev/body got tainted.. nasal memory leaks all over the place 😷

$ kill -9 $(pidof cold)

... Nothing.

$ sudo !!

I said kill the fucking cold!!! Y u no listen to your admin?! 😠

> User condor is not in the sudoers file. This incident will be reported.

RRRRRRRRREEEEEEEEE!!!! 😣😣😣

I just want to finish my goddamn power supply project, instead of getting bed-ridden by a cold, and running through paper towels like there's no tomorrow 😭

When you Valgrind your program for the first time for memory leaks and get "85000127 allocs, 85000127 deallocs, no memory leaks possible"


Why computers are like men:

1. In order to get their attention, you have to turn them on.

2. They have a lot of data, but are still clueless.

3. They are supposed to help you solve problems, but half the time they are the problem.

4. As soon as you commit to one, you realize that if you had waited a little longer, you could have had a better model.

Why computers are like women:

1. No one but the Creator understands their internal logic.

2. The native language they use to communicate with other computers is incomprehensible to everyone else.

3. Even your smallest mistakes are stored in long-term memory for later retrieval.

4. As soon as you make a commitment to one, you find yourself spending half your paycheck on accessories for it.

Interesting bug hunt!

Got called in because a co-team had a strange bug and couldn't make sense of it. After a compiler update, things had stopped working.

They had already hunted down the bug to something equivalent to the screenshot and put a breakpoint on the if-statement. The memory window showed the memory content, and it was indeed 42. However, the debugger would still jump over do_stuff(), both in single step and when setting a breakpoint on the function call. Very unusual, but the rest worked.

Looking closer, I noticed that the pointer's value (the address itself) was an odd number, even though the pointer was of type uint32_t *. So I dug out the controller's manual and looked up in the instruction set what it would do with a 32-bit load from an unaligned address: the most braindead thing possible, it would just ignore the lowest two address bits. So the actual load happened from a different address, and that's why the comparison failed.

I think the debugger fetched the memory content bytewise because that works for any kind of data structure with a single code path; that's how it bypassed the alignment issue. Nice pitfall!

Investigating further why the pointer was off, it turned out that it pointed into an underlying array of type char. The offset into the array was correctly divisible by 4, but the beginning had no alignment, and a char array doesn't need one. I checked the map files and indeed, the old compiler had put the array on a 4-byte boundary and the new one didn't.

Sure enough, after giving the array a 4-byte alignment directive, the code worked as intended.
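The screenshot from the post isn't included here, so what follows is a hypothetical reconstruction in C11 rather than the team's actual code: `frame`, `is_aligned_u32` and `load_u32` are made-up names. It shows the fix the post describes (an alignment specifier on the char array) and, as an alternative, a `memcpy`-based load that does not depend on alignment at all. In standard C, dereferencing a misaligned `uint32_t *` is undefined behaviour, which is how a compiler update alone could expose the bug.

```c
#include <stdalign.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for the char array from the post. Without the
 * alignas(4), a char array only needs 1-byte alignment, so the compiler
 * is free to place it at an odd address (as the new compiler did). */
static alignas(4) unsigned char frame[64];

/* Nonzero if p can safely be dereferenced as a uint32_t * on this target. */
int is_aligned_u32(const void *p)
{
    return ((uintptr_t)p % alignof(uint32_t)) == 0;
}

/* Alternative that works at any offset: memcpy has no alignment
 * requirement, and compilers usually emit a plain load when the target
 * allows it. Casting buf + offset to uint32_t * instead would be
 * undefined behaviour whenever the address is misaligned. */
uint32_t load_u32(const unsigned char *buf, size_t offset)
{
    uint32_t value;
    memcpy(&value, buf + offset, sizeof value);
    return value;
}
```

The alignment directive is the smaller change when the data layout is fixed; the `memcpy` load is the more defensive choice when offsets come from elsewhere, at the possible cost of slower byte-wise access on targets without unaligned loads.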


"...we're using Java. That fat bitch doesn't just eat memory, she just deep-throated her sixth serving and is showing no signs of relenting"

-Me, 2k18

Boss: Any idea why ColleagueX's code might be blowing out the memory?

Me (internal): Cos he's a fucking retard who can't code for shit, doesn't listen when I tell him to do stuff properly because he's fucking lazy, has no idea what stack and heap are, uses goto everywhere, doesn't know how to debug, doesn't write any unit tests, and generally WASTES MY FUCKING TIME!

Me (external): Probably a memory leak. I'll take a look.

Saw this on Facebook and couldn't help but share here! 😂

A young woman submitted the tech support message below (about her relationship with her husband), presumably as a joke…

The query:

Dear Tech Support,

Last year I upgraded from Boyfriend 5.0 to Husband 1.0 and noticed a distinct slowdown in overall system performance, particularly in the flower and jewelry applications, which operated flawlessly under Boyfriend 5.0.

In addition, Husband 1.0 uninstalled many other valuable programs, such as: Romance 9.5 and Personal Attention 6.5, and then installed undesirable programs such as: NBA 5.0, NFL 3.0 and Golf Clubs 4.1.

Conversation 8.0 no longer runs, and House cleaning 2.6 simply crashes the system. Please note that I have tried running Nagging 5.3 to fix these problems, but to no avail.

What can I do?

Signed,

Desperate

The response (that came weeks later out of the blue):

Dear Desperate,

First, keep in mind, Boyfriend 5.0 is an Entertainment Package, while Husband 1.0 is an operating system. Please enter command: I thought you loved me.html and try to download Tears 6.2 and do not forget to install the Guilt 3.0 update. If that application works as designed, Husband 1.0 should then automatically run the applications Jewelry 2.0 and Flowers 3.5.

However, remember, overuse of the above application can cause Husband 1.0 to default to Grumpy Silence 2.5, Happy Hour 7.0 or Beer 6.1. Please note that Beer 6.1 is a very bad program that will download the Farting and Snoring Loudly Beta.

Whatever you do, DO NOT, under any circumstances, install Mother-In-Law 1.0 (it runs a virus in the background that will eventually seize control of all your system resources.)

In addition, please, do not attempt to re-install the Boyfriend 5.0 program. These are unsupported applications and will crash Husband 1.0.

In summary, Husband 1.0 is a great program, but it does have limited memory and cannot learn new applications quickly. You might consider buying additional software to improve memory and performance. We recommend: Cooking 3.0.

Good Luck!

!rant

When I was in 8th grade and was learning to code (C++), I sincerely believed that calling a function from within itself simply ran it again from the top (like a loop). I had never heard of recursion.

And I actually made a small project in which I called a function again and again, thinking that each new call terminated the previous one.

No wonder my program kept crashing. I have still kept that code with me as a wonderful memory.

I know this isn't particularly interesting, but I just saw that code today and felt like sharing this...
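Here is a small C sketch of what actually happens. The post's code was C++, but the stack behaviour is the same, and the function names are hypothetical. Calling a function from inside itself pushes a new stack frame while the caller stays suspended, so nothing gets terminated. Without a base case the frames pile up until the stack overflows, which fits the crashes described above.

```c
/* Recursive version: returns how many frames were alive at the deepest
 * point. Every caller is still waiting on the stack, so the answer is n + 1. */
int frames_alive(int n)
{
    if (n == 0)
        return 1;                    /* base case: stop recursing */
    int below = frames_alive(n - 1); /* this call is paused here...  */
    return below + 1;                /* ...and resumes afterwards    */
}

/* What "call it again, like a loop" really needs: an actual loop, which
 * reuses a single frame no matter how many times it repeats. */
int repeat_in_loop(int n)
{
    int runs = 0;
    while (n-- > 0)
        runs++;                      /* one "run" of the program body */
    return runs;
}
```

Delete the `if (n == 0)` line and `frames_alive` never returns; each call adds another frame until the program crashes.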

Our team makes software in Java, and for technical reasons we require 1GB of memory for the JVM (with the -Xmx switch).

If you don't have enough free memory, the app just exits without any sign, because the JVM couldn't bite off a big enough chunk of memory.

Many days later and you just stand there without a clue as to why the launcher does nothing.

Then you remember this constraint and start to close every memory-heavy app you can think of (I'm looking at you, Chrome), no matter how important those spreadsheets or Illustrator files are. Congratulations, you just freed up 4GB of memory, things should work now! WRONG!

But why, you might ask? You see, we are using the 32-bit version of Java because someone in upper management decided that it should run on any machine (even though we only test it on Win 7 and High Sierra), and 32 is smaller than 64, so it must be downwards compatible! We should use it! Yes, in 2019 we use 32-bit Java because some lunatic might want to run our software on a 32-bit Windows XP. But why is this so much of a problem?

Well.. the 32-bit JVM needs its heap as one CONTIGUOUS BLOCK OF FREE ADDRESS SPACE JUST TO START... AND WE ARE REQUESTING ONE GIGABYTE!!

So you can shove your swap and closed applications up your ass but I bet you that you won't get 1GB contiguous memory that way!

Now there will be a meeting about this issue and another related to the issues with the 32-bit JVM tomorrow. The only problem is that this issue only occurs if you have used up most of your memory and then try to open our software. So upper management will probably deem this issue minor and won't allow us to upgrade to 64-bit... in 20fucking19

TFW you spend 30 minutes debugging an app that isn't working right, only to find out it's working exactly as it's supposed to; you just forgot you put in that bit that does that thing.

One character change later and it's working perfectly. ONE CHARACTER! THIRTY BASTARD MINUTES! I just spent thirty minutes digging through every line of code and coming closer to the conclusion that Java was doing some kind of strange thing with dropping objects from memory. No, it wasn't Java that had memory problems, it's me! Just check me into the old people's home now so I can spend my day pissing my pants and making lewd comments at the nurses because that's all I'm fucking useful for at this point!!

I need a coffee.

Just commented on a rant

*Goes back to scrolling*

*Remembers that I forgot to ++ it*

*Runs to my comments to upvote the rant*

Happened to anyone else?

Why do people jump from C to Python so quickly? And it's all about machine learning. A few days back my cousin asked me for books to learn Python.

Trust me, you have to learn C before Python; people struggle going from Python to C. But now it's all ML, scripting, and, most importantly, software engineering? WTF?

Software engineering is about how to run projects, yet it's compulsory to learn Python for it and there's no mention of Git or any other VCS, WTF?

What the hell kind of college is that? Trust me, I am in no way saying Python is weak, but for learning purposes C gives you depth in the language and concepts like pass by reference, memory leaks, and pointers.

And learning algorithms and data structures is more important than machine learning. Trust me, if you cannot model the data or get proper training and testing data, you will get screwed-up outputs. And then again, everyone who hypes this kind of stuff also thinks that an ML model with 100% accuracy is better than one with 90%, overfits the data, and tests the model on the training data. And mostly what they will learn in college is a few formulas by heart, that's it.

Learn one language that way (the concepts in the language), and then you will find most languages are easy.

Cool CS programmers are born today 😖

I've got a file on my desktop called key.txt, and it's just a single line in it that is clearly some sort of API key.

Absolutely no memory of what it is for.

💩

2012 laptop:

- 4 USB ports or more.

- Full-sized SD card slot with write-protection ability.

- User-replaceable battery.

- Modular upgradeable memory.

- Modular upgradeable data storage.

- eSATA port.

- LAN port.

- Keyboard with NUM pad.

- Full-sized SD card slot.

- Full-sized HDMI port.

- Power, I/O, charging, network indicator lamps.

- Modular bay (for example Lenovo UltraBay)

- 1080p webcam (Samsung 700G7A)

- No TPM trojan horse.

2024 laptop:

- 1 or 2 USB ports.

- Only MicroSD card slot. Requires fumbling around and has no write-protection switch.

- Non-replaceable battery.

- Soldered memory.

- Soldered data storage.

- No eSATA port.

- No LAN port.

- No NUM pad.

- Micro-HDMI port or uses USB-C port as HDMI.

- Only power lamp. No I/O lamp so user doesn't know if a frozen computer is crashed or working.

- No modular bay

- 720p webcam

- TPM trojan horse (Jody Bruchon video: https://youtube.com/watch/... )

- "Premium design" (who the hell cares?!)11 -

Ok friends let's try to compile Flownet2 with Torch. It's made by NVIDIA themselves so there won't be any problem at all with dependencies right?????? /s

Let's use Deep Learning AMI with a K80 on AWS, totally updated and ready to go super great always works with everything else.

> CUDA error

> cuDNN version mismatch

> CUDA versions overwrite

> Library paths not updated ever

> Torch 0.4.1 doesn't work so have to go back to Torch 0.4

> Flownet doesn't compile, get a bunch of CUDA errors, piece of shit code

> online forums have lots of questions and 0 answers

> Decide to skip straight to vid2vid

> More cuda errors

> Can't compile the fucking 2d kernel

> Through some act of God reinstalling CUDA and cuDNN, manage to finally compile Flownet2

> Try running

> "Kernel image" error

> excusemewhatthefuck.jpg

> Try without a label map because fuck it the instructions and flags they gave are basically guaranteed not to work, it's fucking Nvidia amirite

> Enormous fucking CUDA error and Torch error, makes no sense, online no one agrees and 0 answers again

> Try again but this time on a clean machine

> Still no go

> Last resort, use the docker image they themselves provided of flownet

> Same fucking error

> While in the process of debugging, realize my training image set is also bound to have bad results because "directly concatenating" images together as they claim in the paper actually has horrible results, and the network doesn't accept 6 channel input no matter what, so the only way to get around this is to make 2 images (3 * 2 = 6 quick maths)

> Fix my training data, fuck Nvidia dude who gave me wrong info

> Try again

> Same fucking errors

> Doesn't give any helpful information, just spits out a bunch of fucking memory addresses and long function names from the CUDA core

> Try reinstalling and then making a basic torch network, works perfectly fine

> FINALLY.png

> Setup vid2vid and flownet again

> SAME FUCKING ERROR

> Try to build the entire network in tensorflow

> CUDA error

> cuDNN version mismatch

> Doesn't work with TF

> HAVE TO FUCKING DOWNGRADE DRIVERS TOO

> TF doesn't support latest CUDA because no one in the ML community can be bothered to support anything other than their own machine

> After setting up everything again, realize I have no space left on a 75GB machine

> Try torch again, hoping that the entire change will fix things

At this point I'll leave a space so you can try to guess what happened next before seeing the result.

Ready?

3

2

1

> SAME FUCKING ERROR

In conclusion, NVIDIA is a fucking piece of shit that can't make their own libraries compatible with themselves, and can't be fucked to write instructions that actually work.

If anyone has vid2vid working or has gotten around the kernel image error for AWS K80s, please throw me a lifeline; in exchange you can have my soul, or what little is left of it.

Is obsidian a fucking joke?

Seriously, is it a joke? Why would you ever care so much about indexing literally everything, if the entire thing crashes and/or takes >5min to LITERALLY just open the fucking directory and/or (so help you) if that directory is full of projects/repos or whatever the fuck and the total size of said directory is like >5GB.

WHY THE FUCK WOULD YOU INDEX EVERYTHING? -- "Ohh obsidian's not supposed to be used as a fully fledged IDE, ohh obsidian should just handle MD files and normal sized projects, ohh the plugins and ease-of-use" -- Fuck.

There's no fucking real reason to index everything, BY DEFAULT. You open a directory with Obsidian? Doesn't matter, it's 1 byte, it's 100GB, you get indexed. Deal with it. It will use LITERALLY every resource your computer has. I'm surprised it doesn't go galaxy brain and ping if any other computers/devices are on the network and then attempt to connect and use their hardware (obsidian can be like a node!).

How shit can you be at understanding basic data structures and algorithms, where you just revert to based google-chrome brain and let the FUCKING TEXT EDITOR -- OBSIDIAN IS A FUCKING TEXT EDITOR HOLY SHIT -- hog all conceivable memory.

I swear to <some-deity> if anyone fucking says "Ohhhhhhhh actually, it's not a text editor, it has plugins and features and shit, it does all dis cool stff", OR, "Ohhhhh actually, obsidian indexes things for a very specific/rational/apt/pragmatic/academic reason" OR "ohhhh, I have 100 iphones, 1000 ipads and a trillion desktop computers that each have 256GB of memory, why you hating on obsidian?" then go kick rocks. The fucking lot of you. Are you fucking kidding me?

MacRant: I was waiting for a new MacBook Pro release for a while to upgrade my old laptop (not a Mac). Watched the release, had very mixed feelings about it, but still ordered (clenching my teeth and saying sorry to my wallet). Next day I looked into alternatives and cancelled the order to have more time to think; now deciding... I mean c'mon, no latest 7th-gen processor, no 32GB memory option, 2GB video is OK for non-gaming, and the whole "big" thing is a Touch Bar that I DON'T F* NEED. They should drop the "Pro" and name it "Fancy Strip".

So I looked into alternatives, and the Dell XPS 15 with maxed-out specs is twice as juicy, and instead of a touch bar it has a whole freakin' 4K touchscreen, for less money :/

Just wanted to rant about the new MacBook's specs and price and see what you all think of MacBook vs. alternatives?

LPT: NEVER accept a freelance job without looking at the project's source first

Client: I have a project made by a company that is now abandoning it, I want you to fix some bugs

Me: Okay, can you:

1) Give me a build to test the current state of the game

2) Tell me what the bugs are

3) Show me the source

4) Tell me your budget

Client: *sends a list of 10 bugs* Here's the APK and to give you the project I'll need you to sign an NDA

Me: Sure...

*tests build*

*sees at least 20 bugs*

*still downloading source*

*bugs look quite easy to fix, should be done in under an hour*

Me: Okay, so, I can fix each bug for $10 and I can do 2 today

Client: Okay can you fix 8 bugs today for $40??

*sigh*

Me: No I cannot.

Client: okay then 2 today for $20 is fine, I want a refund if you can't fix them today

*sigh*

Me: Look dude, this isn't the first time I am doing this, aight? I'll fix the bugs today and you can pay me after you check they are done, savvy?

Client: okay

*source is downloaded*

*literal apes wrote the scripts, commented out code EVERYWHERE

Debug logs after every line printing every frame causing FPS drops, empty objects in the scene

multiple unused UI objects

everything is spaghetti*

*give up, after 2 hours of hell*

*tfw averted an order cancellation by not taking the order and telling client that they can pay me after I am done*

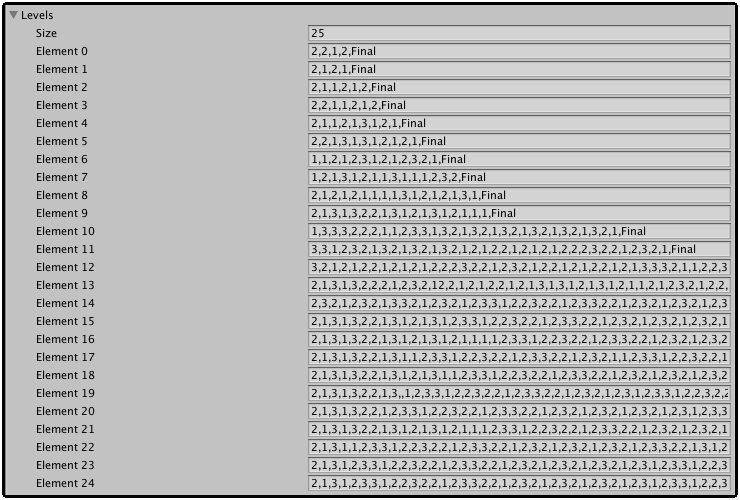

Attached is an image of a level object pool

It's an array with each element representing a level.

The numbers and "Final" are ids for objects in an object pool

The whole string is .Split(',') into an array (RIP MEMORY BTW), and then a loop goes through each element in the split array and instantiates the object from an object pool.
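In the post's C# the allocations come from `Split`, which builds a new array plus a new string for every id each time it runs. Below is a sketch of the allocation-free alternative, written in C to match the other examples here. It walks the level string in place and hands back each id as a pointer and a length. The name `next_token` and the sample string `"3,17,Final"` are hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Yields the next comma-separated id from *cursor without copying or
 * allocating: *start points into the original string and *len is the id's
 * length. Returns 0 once every id has been consumed. Like Split(','),
 * an empty field ("a,,b") comes back as a zero-length token. */
int next_token(const char **cursor, const char **start, size_t *len)
{
    const char *s = *cursor;
    if (s == NULL)
        return 0;                       /* previous call took the last id */

    const char *comma = strchr(s, ',');
    *start = s;
    if (comma != NULL) {
        *len = (size_t)(comma - s);
        *cursor = comma + 1;            /* resume after the comma */
    } else {
        *len = strlen(s);
        *cursor = NULL;                 /* this was the last id */
    }
    return 1;
}
```

A loop like `while (next_token(&cur, &id, &len)) spawn_from_pool(id, len);` (where `spawn_from_pool` is a hypothetical stand-in for the pool lookup) replaces the split-then-iterate pattern. If the string is only parsed once per level load, the `Split` garbage is probably harmless; it matters mostly when parsing happens every frame.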


long time ago....

Feature request: We want an Android backup solution in our app!

UI guy has already developed it, you just need to see if his solution is solid!

Ok then - let's look at the UI: Nice progress bars, that turn into green checkmarks. Looks good.

Now let's look at the code: ... Ok. loading some files into memory.... and... dafuq? does not write to a file?

Backup to RAM. With no restore. 🤦‍♂️

This happened today

My Manager: How is the progress so far on the search module?

Me(After implementing some crazy shit requirements): It's all set. APIs are working well against the mock in-memory database. I need an actual database to run my unit tests. Where do we have it?

My manager: Let's pretend that there is no database at this moment. Go-ahead with rest of your activities.

Me(IN MY MIND): F*CK you a** hole. You don't know the first thing about software development! Which a** hole promoted you to manager!!!

Me(TO HIS FACE): Ah.. okay!! As you wish!

University Coding Exam for Specialization Batch:

Q. Write a program to merge two strings, each of which can be at most 25k characters long.

Wrote the code in C, because fast.

Realized some edge cases don't pass: runtime errors. Proceeded to check the locked code in the stub. (We only have to write methods; the driver code is pre-written.)

Found that the memory for the char arrays is being allocated dynamically with a fixed size of 10240.

Rant #1:

Dafuq? What's the point of dynamic memory allocation if you're gonna fix it to a certain amount anyway? Especially an amount smaller than the 25k the question allows.
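For comparison, here is a minimal C sketch of sizing the allocation from the actual inputs. It assumes "merge" means plain concatenation, since the task statement isn't quoted in more detail, and `merge_strings` is a hypothetical name. Two 25k-character strings need 50,001 bytes including the terminator, far beyond a fixed 10240.

```c
#include <stdlib.h>
#include <string.h>

/* Assumes "merge" = concatenate a then b. The buffer is sized from the
 * real input lengths, which is the point of allocating dynamically.
 * Returns a new string the caller must free(), or NULL on failure. */
char *merge_strings(const char *a, const char *b)
{
    size_t la = strlen(a), lb = strlen(b);
    char *out = malloc(la + lb + 1);   /* +1 for the terminating '\0' */
    if (out == NULL)
        return NULL;
    memcpy(out, a, la);
    memcpy(out + la, b, lb + 1);       /* also copies b's '\0' */
    return out;
}
```

If the task actually meant interleaving characters, only the copy loop changes; the allocation size stays `strlen(a) + strlen(b) + 1`.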

Continuing...

Called the program in-charge, asking him to check the problem and provide a solution. He took 10 minutes to come; meanwhile I wrote the program in Java, which cleared all the test cases. <backstory>No university course on Java yet, learnt it on my own</backstory>

Dude comes, I explain the problem. He asks me to do it in C++ instead coz it uses the string type instead of char array.

I told him that I've already done it in Java.

Him: Do you know Java?

Rant #2:

No you jackass! I did the whole thing in Java without knowing Java, what's wrong with you!

Windows 10 wants to ruin my life by consuming almost 70% of my 4 gigs of memory for itself.

No applications are running and it's still consuming that much memory. Now I just hate Windows 10 Pro updates.

Any suggestions to get out of this situation?

You know what just gets to me about garbage-collected languages like C# and Java? Fucking dynamic memory allocation (seemingly) on the stack. Like, it's so bizarre to me.

"Hey, c#, can I have an array of 256 integers during run-time?"

"Ya sure no prob"

"What happens when the array falls out of scope"

"I gotchu fam lol"8 -

Well I'm a first year student in computer science and in the first semester we started to learn C language and the IDE they told us to use for better learning was Devcpp.

We made a few small projects and all went well, but now in the second semester we started making bigger projects with linked lists and memory allocation, and Devcpp starts to be a complete bug itself... We work hard on the project and save it with no errors at all, and the next day Devcpp starts marking every function we made as invalid...

So we spoke to the same teacher about this and asked what can we do about it....

"Are you using Devcpp? You shouldn't, it is not that good for C"...

ARE YOU KIDDING ME?14 -

So I've started learning Rust and I must say it feels great! But some parts of the language, like enums, are quite different from what I'm used to.

As a proof of concept I've reimplemented a small API (an Azure Function App) in Rust with Actix Web and it's FAST AS FUCK BOIII.

The response is served about 5x as quickly, and the memory footprint shrank from some 90 MB to around 5 MB.

In my small-scale use case it's not a huge difference, but I think it can be massive at large scales...

What is your experience with Rust (at scale)?

I wish I could quickly reimplement the whole fucking CMS Of Doom™ in Rust... but no time and resources :(

I propose that Rust should be studied, and the language and all of the technology that comprises it applied, because the language is actually really fucking good. Reading and studying how it manages memory without a garbage collector is something to be admired, illuminating in its own right.

BUT going for it because it is a "beTter C++" should not constitute a basis for its study.

Let me expand through anecdotal evidence, which is really not to be taken seriously but is at the same time what I am using for my reasoning here. Please feel free to correct me if I am wrong: I am a software engineer, yes, and I do have academic training through a B.S. in Computer Science, yes, BUT my professional life has been solely dedicated to web development, which admittedly I do not go into technical detail about with you all because (1) I am not allowed to and (2) it is better for me to bitch and shit about other petty development-related details.

Anecdotal and otherwise statistically unsupported evidence: I have seen many motherfuckers doing shit in both C and C++ who ADMIT to not catching their mistakes with a debugger. Mostly because (A) using a debugger and a proper IDE is for idiots, debugging is for wimps, GDB is too hard, and the VS IDE is waaaaaa "I onlLy NeeD Vim", and (B) "If there had been an error, it would not have compiled, no?" This gives me the idea that most issues in the C father/son languages come from user error, a lack of formal training in the language, and a nice dose of "fuck it, it runs", leaving behind all sorts of issues that come from manipulating the realm of the Gods: "memory".

EVERY manual and book, going all the way back to the K&R book, talks about memory and the ways in which developers of these 2 languages are able to manipulate and work with it. EVERY new ISO standard of these languages deals, through community effort or standard documentation, with the new features concerning MODERN memory handling (meaning, no, the shit you learned 20 years ago won't fucking cut it).

THUS if your ass is not constantly checking what the scalpel of electrical/circuitry/computational representation of algorithms CONDONES in what you are doing, then YOU are the fucking problem.

Rust is thus no different from the original idea of Go's creators, who stated that their developers are not efficient enough to deal with X language. Rust protects you because it knows that you are a fucking moron, so the compiler, advanced and well made as it is, will warn you about your own idiotic tendencies, which would not have been required had you not been.....well....a fucking idiot.

Rust is a good language, but I feel it is one that came out of the necessity created by a bunch of fucking morons writing system-level software.

This says a lot more about our academic endeavors and current documentation than anything else. But to me, adopting Rust as a better C++ should come from a different point of view.

Do I agree with Linus's view of C++? Fuck no, I do not. He is a kernel engineer, a damn good one at that, regardless of what Dr. Tanenbaum believes (or believed), but not everyone writes kernels, and sometimes that everyone requires OOP and additions to the language they use. Otherwise I would be a fucking moron for dabbling in the dictionary of languages that I use professionally.

BUT as for C++ being unsafe, insecure, and a horrible alternative to Rust, I personally do not believe so. I see it as a powerful blank canvas on which you are able to paint software to the best of your ability, WHICH then requires thorough scrutiny from the entire team. It is NOT something to be quickly replaced by a language that protects you from your own stupidity BY imposing the use of otherwise unknown "safe" features.

To be clear: I am not diminishing Rust as the powerhouse of a language that it is; I am quite invested in the language myself. I just do not see the reason/need behind articles claiming it is the C++ killer.

I am currently heavily invested in C++ since I am trying a lot of different things across a lot of projects, and I have been able to discern multiple pain points and unsafe features. The main reasons for this are documentation (your mother knows C++), tooling, IDE support, debugging, and the plethora of resources that come with it, and I have been able to push a lot of good work out to my secret project, WHICH I will eventually replicate in Rust to see the main differences.

Online articles stating that one will limit or otherwise kill the other are, well....wrong to me, and not the proper approach.

Anyways, I like big tits and small waists.

This is my first rant here, so I hope everyone has a good time reading it.

So, the company I am working for put me on the task of rewriting a firmware that has been extended for about 20 years now. Which is fine, since all new machines will be on a new platform anyways. (The old firmware was written for an 8051 initially. That thing has 256 bytes of RAM. Just imagine the usage of unions and bitfields...)

So, me and a few colleagues go ahead and start from scratch.

In the meantime however, the client has hired one single lonely developer. Keep in mind that nobody there understands code!

And oh boy did he go nuts on the old code, only to have it used on the very last machine of the old platform, ever! Everything after that one will have our firmware!

There are other machines in that series, using the original extended firmware. Nothing is compatible, bootloaders do not match, memory layouts do not match, code is a horrible mess now, the client is writing the specification RIGHT NOW (mind, the machine is already sold to customers), there are no tests, and for the grand finale, the guy quit his job and went to a different company. Did I mention the bugs it has and the features it lacks?

Guess who's got to maintain that single abomination of a firmware now?

“Hey - just calling you to give you an update”

Great - sorry, can you refresh my memory on what this was for?

“So I was about to put you through for a client but they’re no longer accepting CVs so just to update you that’s not happening”

Sorry, what was the client again?

“Oh I can’t say, but they’re no longer accepting CVs”

“...Thanks, goodbye.”

*So you call me to tell me that you can’t give my details to a client that you can’t disclose....get off my line 🤬😡🤬*

The only thing more dangerous than an alcoholic short-term-memory-challenged non-technical throw-you-under-the-bus IT director with self-esteem issues that are sporadically punctuated by delusions of superiority is one who fears for his job. Submitted for your inspection: a besotted mass of near-human brain function who not only has a 50 person IT department to run, but has also been questioned by the business owners as to what he actually does. So he has decided to show them. He has purchased a vendor product to replace a core in-house developed application used to facilitate creating the product the business sells. The purchased software only covers about 40 percent of the in-house application's functionality, so he is contracting with the vendor to perform custom development on the purchased product (at a cost likely to be just shy of six-figures) so that about 90 percent of existing functionality will be covered. He has asked one of his developers (me) to scale down the existing software to cover the functionality gaps the purchased software creates. There is no deployment plan that will allow the business to transition from the current software to the new vendor-supplied one without significantly hurting the ability of the business to function. When anyone raises this issue he dismisses it with sage musings such as, "I know it will be painful, but we'll just have to give the users really good support." Because he has no idea what any of his staff actually does, he is expecting one of his developers (again, unfortunately, me) to work with the vendor so that the Frankensoftware will perform as effectively as the current software (essentially as a project manager since there will be no in-house coding involved). Lastly, he refuses to assign someone to be responsible for the software: taking care of maintenance, configuration, and issue resolutions after it has been rolled out. 
When I pointedly tell him I will not be doing that (because this is purchased software and I am not a system admin or desktop engineer) he tells me, "Let me think about this." The worst part is that this is only one of four software replacement initiatives he is injecting himself into so he can prove his worth to the business owners. And by doing so he is systematically making every software development initiative akin to living in Dante's Eighth Circle. I am at the point where I want to burn my eye out with a hot poker, pour salt into the wound, and howl to the heavens in unbearable agony for a month, so when these projects come to fruition, and I am suffering the wrath of the business owners, I can look back on that moment I lost my eye and think "good times."

Spent 6 hours implementing a feature because my senior didn't want to use a third-party plug-in.

After said 6 hours, went to look at the plugin's source code to get some inspiration with a problem I was having.

Guess fucking what? Plug-in was implemented exactly as I had done it to start with. Even better, actually, since it fixed some native bugs I couldn't find a way around.

Went back to my senior, showed him both sources and argued again in favour of the plug-in.

Senior: "Meh, I'm not sure. Don't really like to keep adding plugins"

Me: "Why? Do they cause performance hits? Increase memory usage?"

S: "No, not at all. But I don't like plugins"

/flip

We ended up using the plug-in, but I "wasted" a whole day doing something we scrapped. Guess I learned some interesting things about encryption on Android, at least...

Four years ago, while I was still a newbie in Android dev and still using the Eclipse IDE (which was hell to configure with the Android plugins), my girlfriend had a birthday.

With my newfound love of coding, I thought of developing a b-day app for her. With so little Android knowledge, I had a great idea: the main activity would have her photo as the background and a button which, when clicked, would show a toast saying "Happy b-day, love".

After spending a few minutes on Tutorialspoint learning how to display a toast and set click listeners on buttons, I was good to go and compiled the app.

Later that evening I headed to her room, where her b-day was to be held with some of her lady friends. When it came time for gifts, I presented mine, said I had one more surprise for her, asked for her phone, and sent the APK to it over Bluetooth.

While installing the app, I was scared to death seeing how my grey buttons were displayed on her 2.7-inch screen, since I had no idea about designing for multiple screen sizes.

I gave her back the phone. She loved the app, and I felt like her Superman in the room, though not for long. Her lady friends went ahead, took her phone, and started criticising the app:

Why can't I take a selfie?

Why can't the app play a b-day song for her? And on it went, with them not knowing how much that hurt.

Bumped into the lady who led the onslaught against me and had to go down memory lane. Life is a journey.

!dev

A child's mind is fascinating.

I remember how it felt being a kid, just deliriously happy.

Things were magical, mystical and happy.