Search - "fetch"

-

1: Get a dog

2: Name dog Sudo

3: Teach Sudo to fetch my mail

4: Invite Linux-friend over

5: Yell "sudo fetchmail"

6: ?

7: Profit

-

When you stare into git, git stares back.

It's fucking infinite.

Me 2 years ago:

"uh was it git fetch or git pull?"

Me 1 year ago:

"Look, I printed these 5 git commands on a laptop sticker, this is all I need for my workflow! branch, pull, commit, merge, push! Git is easy!"

Me now:

"Hold my beer, I'll just do git format-patch -k --stdout HEAD..feature -- script.js | git am -3 -k to steal that file from your branch, then git rebase master && git rebase -i HEAD~$(git rev-list --count master..HEAD) to clean up the commit messages, and a git branch --merged | grep -v "\*" | xargs -n 1 git branch -d to clean up the branches, oh let's see how many words you've added with git diff --word-diff=porcelain | grep -e '^+[^+]' | wc -w, hmm maybe I should alias some of this stuff..."

Do you have any git tricks/favorites which you use so often that you've aliased them?

-

An area sales rep once rang me to tell me his iPhone screen was cracked and was going blurry around some sections.

I told him to fetch me to look, and I will see what I can do.

5 minutes later I get a SCREENSHOT from his phone asking if I can see the crack and blurry edges.

HOW FUCKING DUMB ARE THESE PEOPLE!!!

I mean, come on. He seriously said when I called him: "But I can see the crack and blurry bits on the screenshot on my phone"

-

Me : Let's just use CDN.

Former boss (fb) : What's that?

Me : You fetch JS and CSS files online. Faster.

Fb: No! Download it!

Me: Why?

Fb: What if there's no internet?!

Me: ... it's a website...

-

I am currently working for a client who has all their data in Google Sheets and Drive. I had to write code to fetch that data, and it's painful to query.

I can definitely relate with this.

PS: Their revenue last year was over US$2 Bn and one of their sibling companies is among the top IT companies in the country.

-

I sometimes write code by first putting comments and then writing the code.

Example

#fetch data

#apply optimization

#send data back to server

Then I put the code in between the comments so that I can understand the flow.
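For anyone who hasn't tried it, here is a rough sketch of what that can look like once the code is filled in. The function name handle_request and the "optimization" (dedupe and sort) are made up purely for illustration; the three comments are the ones from the outline above.

```python
def handle_request(raw_records):
    """Comment-first skeleton: the comments were written first, the code after."""
    # fetch data
    # (hypothetical: the caller hands us the records instead of a real query)
    records = list(raw_records)

    # apply optimization
    # (hypothetical: here "optimization" just means dropping duplicates and sorting)
    unique_sorted = sorted(set(records))

    # send data back to server
    # (hypothetical: return the payload instead of doing a real network call)
    return {"status": "ok", "data": unique_sorted}
```

The comments stay in place afterwards, so the flow still reads top to bottom even after the body grows.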

Anyone else have this habit?

-

*starts coding in c#*

Me: hmm this bit of functionality requires some good ol inheritance

*has flashback to uni lecture on c++ *

Lecturer: And so you can use inheritance with friends for xyz, you know what they say, friends can touch each other's privates

*end of flashback*

Me: Guh! No, not the puns ! Guh!

-

Client: I have lost everything on the cloud holy crap!

Me: Are you signed into your google drive, and within the folder?

Client: No, how do I do that again? I obviously can't be bothered reading your well-formatted and instructional guide and would rather contact you at 6pm on a Saturday night

-

Jack and Jill

Pulled down from git

To fetch aPaleOfWater.c

Jack made some changes

And then pushed them all up

A merge conflict occurred

Jill decided his changes sucked

And push --force over his

Jack was enraged

For history was changed

And force pushed Jill

down a hill

-

*starts coding by 7pm in the evening.

*remembers that he would soon have to go fetch something to eat but keeps on coding.

*tells himself he would get food by 10pm.

*checks the time - it's past 12am.

*codes all night long on an empty belly but doesn't care.

-

A friend's brother came to me and asked me to teach him C, as it was the most important subject in high school CS. I agreed and started with data types, conditional statements, loops and other topics that were mostly exam oriented. He was doing well. Then I thought of teaching him a life lesson and introduced him to pointers (questions about pointers are very rare in exams). As soon as I started on pointers, things got pretty boring, and he went off topic and started talking about a girl he has a crush on. He said he wanted to know when her birthday was so that he could give her a gift and get ahead of the crowd trying to impress her. I thought I'd help him out; after all, he's like a younger brother to me. The results of his previous exam were out by then, and providing a symbol number on the Examination Board's website would do the trick, because it returned a student's full result data, which included the birthday. I modified a script I'd written earlier to fetch the results for his school and write the data to a file. They've been together for the last few months. He reminds me from time to time that my code is what got them together.

-

I fucking LOVEEEEE that there's at least 50 different ways to set the mysql root password, depending on what weather, what time and what version you're lucky to fetch from the repos.

-

Why do some non-devs treat professional app development like some kids craft-making hobby that requires zero skill and knowledge or brain?

A friend (with ZERO knowledge about coding) said to me today, teach me, or tell me how to learn this app development, I'll learn it within a month and make my own apps plus do freelance app work in free time, apps fetch plenty of money easily. Blah blah.

Not the first time, other non dev friends have talked in the same way on other instances.

It's insulting and infuriating. I don't even know what to reply.

-

Wrote a python script to fetch details of amazon products to monitor price differences.

The script is only 50 lines, which is why I love python!

-

⚡️ devRantron v1.4.1 ⚡️

I strongly urge all the users of the devRantron to upgrade their app. We have added some major features and made a lot of bugfixes. For example:

1. Edit Rants and Comments

2. Browse Weekly

3. Save drafts of rants so that you can edit and post them later. Also, the app now autosaves when you are typing a new rant and will keep it until you post it.

4. Fixed macOS startup. Previously the app used to open a terminal in the background to launch the app. That has been removed.

5. Confirmation before deleting a rant or comment

6. Huge performance optimization. We have upgraded to React 16 and also changed the way our compiler compiles the application. The way we fetch the notifications has also been changed and it uses less bandwidth.

7. The app will only have a single instance now. If you accidentally open the app again, it will just switch to the currently running instance.

8. We now show a release info dialogue before updating. Linux and macOS users will now receive an update notification for new updates.

9. Added the ability to select rant types.

You can get it from here: https://devrantron.firebaseapp.com/

macOS users, please remove the devRantron from "Login Items" in Settings > Users and Groups.

We would like to thank all our users for their feedback. If you like the app, you can show your appreciation by giving a star to the repo.

Thank you!

-

#2 Worst thing I've seen a co-worker do?

Back before we utilized stored procedures (and had an official/credentialed DBA), we used embedded/in-line SQL to fetch data from the database.

var sql = @"Select

FieldsToSelect

From

dbo.Whatever

Where

Id = @ID"

In attempts to fix database performance issues, a developer, T, started putting all the SQL on one line of code (some sql was formatted on 10+ lines to make it readable and easily copy+paste-able with SSMS)

var sql = "Select ... From...Where...etc";

His justification was that putting all the SQL on one line makes the code run faster.

T: "Fewer lines of code runs faster, everyone knows that."
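(For the record, a quick sketch of why that claim doesn't hold up. Line breaks and indentation inside a SQL string are just whitespace to the parser, so both forms are the same query. The sketch below uses Python's built-in sqlite3 as a stand-in for the real database, and the Whatever table and its Name column are made up for illustration.)

```python
import sqlite3

# The readable, copy+paste-able form.
MULTI_LINE = """
Select
    Name
From
    Whatever
Where
    Id = :id
"""

# The "optimized" one-line form.
ONE_LINE = "Select Name From Whatever Where Id = :id"


def run(sql):
    """Run the query against a throwaway in-memory table and return the rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("Create Table Whatever (Id Integer Primary Key, Name Text)")
    conn.execute("Insert Into Whatever (Id, Name) Values (1, 'example')")
    rows = conn.execute(sql, {"id": 1}).fetchall()
    conn.close()
    return rows
```

Calling run(MULTI_LINE) and run(ONE_LINE) gives back the same rows; the layout of the string only matters to the humans reading it.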

Mgmt bought it.

This process took him a few months to complete.

When none of the effort proved to increase performance, T blamed the in-house developed ORM we were using (I wrote it, it was a simple wrapper around ADO.Net with extension methods for creating/setting parameters)

T: "Adding extra layers causes performance problems, everyone knows that."

Mgmt bought it again.

Removing the ORM, again took several months to complete.

By this time, we hired a real DBA and his focus was removing all the in-line SQL to use stored procedures, creating optimization plans, etc (stuff a real DBA does).

In the planning meetings (which I was not a part of), T was selected to lead because of his coding optimization skills.

DBA: "I've been reviewing the execution plans, is all the SQL code on one line? What a mess. That has to be the worst thing I ever saw."

T: "Yes, the previous developer, PaperTrail, is incompetent. If the code was written correctly the first time using stored procedures, or even formatted so people could read it, we wouldn't have all these performance problems."

DBA didn't know me (yet) and I didn't know about T's shenanigans (aka = lies) until nearly all the database perf issues were resolved and T received a recognition award for all his hard work (which also equaled a nice raise).

-

Long story short, I'm unofficially the hacker at our office... Story time!

So I was hired three months ago to work for my current company, and after the three weeks of training I got assigned a project with an architect (who only works on the project very occasionally). I was tasked with revamping and implementing new features for an existing API, some of the code dated back to 2013. (important, keep this in mind)

So at one point I was testing the existing endpoints, because part of the project was automating tests using postman, and I saw something sketchy. So very sketchy. The method I was looking at took a POJO as an argument, extracted the ID of the user from it, looked the user up, and then updated the info of the looked up user with the POJO. So I tried sending a JSON with the info of my user, but the ID of another user. And voila, I overwrote his data.

Once I reported this (which took a while to be taken seriously because I was so new) I found out that this might be useful for sysadmins to have, so it wasn't completely horrible. However, the endpoint required no Auth to use. An anonymous curl request could overwrite any user's data.

As this mess unfolded and we notified the higher ups, another architect jumped in to fix the mess and we found that you could also fetch the data of any user by knowing his ID, and overwrite his credit/debit cards. And well, the users' IDs were alphanumeric strings, which I thought would make it harder to abuse, but then I realized all the IDs were sequentially generated... Again, these endpoints required no authentication.
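To make the hole concrete, here is a minimal sketch of the check those endpoints were missing. It is not the actual fix that was shipped; the USERS store, the Forbidden exception and the update_user function are all hypothetical, and a real API would take the caller's identity from a session or token rather than a plain argument.

```python
# Hypothetical in-memory user store standing in for the real database.
USERS = {"u1": {"name": "Alice"}, "u2": {"name": "Bob"}}


class Forbidden(Exception):
    """Raised when the caller may not touch the requested record."""


def update_user(authenticated_user_id, payload):
    # Reject anonymous callers outright (the original endpoints did not).
    if authenticated_user_id is None:
        raise Forbidden("authentication required")

    # Never trust the ID inside the request body on its own:
    # it must match the identity established by authentication.
    target_id = payload["id"]
    if target_id != authenticated_user_id:
        raise Forbidden("cannot modify another user's record")

    changes = {key: value for key, value in payload.items() if key != "id"}
    USERS[target_id].update(changes)
    return USERS[target_id]
```

With a check like this in place, guessable or sequential IDs stop being enough on their own, because knowing someone's ID no longer grants the right to read or overwrite their record.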

So anyways. Panic ensued, systems people at HQ had to work that weekend, two hot fixes had to be delivered, and now they think I'm a hacker... I did go on to discover some other vulnerabilities, but nothing major.

It still amuses me they think I'm a hacker 😂😂 when I know about as much about hacking as the next guy at the office, but anyways, makes for a good story and I laugh every time I hear them call me a hacker. The whole thing was pretty amusing, they supposedly have security audits and QA, but for five years, these massive security holes went undetected... And our client is a massive company in my country... So, let's hope no one found it before I did.

-

Not having finished any education, and writing code during interviews.

I have a pretty nice resume with good references, and I think I'm a reasonably good & experienced dev.

But I'm absolutely unable to write code on paper, and really wonder how some devs can just write out algorithms using a pen and reason about it, without trying/failing/playing/fixing in an IDE.

Education I think.

I can transform the theory on a complex Wikipedia page about math/algorithm into code, I can translate a Haskell library into idiomatic python... but what I haven't done is write out sorting functions or fibonacci generators a million times during Java class.

I don't see the point either... but I still feel utterly worthless during an interview if they ask "So you haven't even finished highschool? Can you at least solve this prime number problem using a marker on this whiteboard? Could you explain in words which sorting algorithm is faster and why?"

"Uh... let me fetch a laptop with an IDE, stackoverflow and Wikipedia?"

-

Don't do "git pull" quickly. Always do a "git fetch" THEN "git log HEAD..origin" OR "git log -p HEAD..origin". It is like previewing first what you will "git pull".

OR something like (example):

- git fetch

- git diff origin/master

- git pull --rebase origin master

Sometimes it is a trap, you will pull other unknown or unwanted files that will cause some errors after quickly doing a git pull when working in a team. Better safe than sorry.
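If you'd rather script that preview than type it every time, here is a small sketch in Python. The helper names preview_commands and run_preview are made up, the remote and branch default to origin and master as in the example above, and the --oneline and --stat flags are just my choice to keep the output short.

```python
import subprocess


def preview_commands(remote="origin", branch="master"):
    """Build the git commands to run before pulling (nothing is executed here)."""
    upstream = f"{remote}/{branch}"
    return [
        # Download new commits without touching the local branch.
        ["git", "fetch", remote],
        # Commits that exist upstream but not in the current checkout.
        ["git", "log", "--oneline", f"HEAD..{upstream}"],
        # Summary of files changed upstream since the common ancestor.
        ["git", "diff", "--stat", f"HEAD...{upstream}"],
    ]


def run_preview(remote="origin", branch="master"):
    """Run the preview inside the current repository; raises if a command fails."""
    for argv in preview_commands(remote, branch):
        subprocess.run(argv, check=True)
```

Run run_preview() from inside the repository, read the output, and only then decide whether to git pull.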

Other related tips and tricks are welcome 😀

Credits: https://stackoverflow.com/questions...

-

Inexperienced digital immigrant: "Let's make a website, can you do this?"

Me: "Yeah."

"But you gotta use wordpress. We want to be able to change the content easily. Also we want to have that website done fast."

"Meh, ok."

one day later

"Are you done yet?"

Me: "No, I got to find a good way for the lightbox I'm still implementing to fetch data from posts blabla"

"Why are you not done yet? Just take a plugin, even I can do that. Wordpress is so easy, it's just 1, 2, 3 and done."

Yeah I have an idea. Why don't you just make the website yourself.

-

Me to my family :

Family: so this printer not working

Me: have you installed its software

Family: no, can you do it?

Me: I could travel 1 hour or you can just google and download it, it's really quite simp--

Family: yeah this is too complicated for me, I'll need you to come over

-

Not really a bug, but I once tried to learn how to build an AJAX function per table so each one loads asynchronously, instead of calling all of them at once. I spent a couple of hours on trial and error. It wasn’t needed at the time, but then I suddenly needed to fetch something separately because of a new feature. I just wrote a couple of lines and it was done.

-

So the water dispenser in the kitchen does not have sparkling water, which I love. But there's one in the meeting room down the corridor that has sparkling water!

Like any regular employee of course I filed a request with the site manager to upgrade the kitchen dispenser... NOT!

I wrote an app that sits in the taskbar when minimized and shows a traffic light with the status of the meeting room availability so I know when it's clear to go fetch me some of that bubbly goodness!

-

!rant.

Here's some useful git tricks. Use with care and remember to only rewrite history when no one's looking.

- git rebase: powerful history rewriting. Combine commits, delete commits, reorder commits, etc.

- git reflog: unfuck yourself. Move back to where you were even if where you were was destroyed by rebasing or deleting. Git keeps reflog entries for 90 days by default (30 days for commits that are no longer reachable), and nothing is actually deleted until a GC happens, so you can save yourself quite often.

- git cherry-pick: steal a commit into another branch. Useful for pulling things out of larger changesets.

- git worktree: checkout a different branch into a different folder using the same git repository.

- git fetch: get latest commits and origin HEADs without impacting local branches.

- git push --force-with-lease: force push without overwriting others' changes

-

I spent an hour arguing with the CTO, pushing for having all our new products' data in the database (wow) with an API I could hit to fetch said data (wow) prior to displaying it on our order page.

He never actually agreed with me, but he finally acquiesced and wrote the migrations, API, and entered my (rather contrived) placeholder data. (I've been waiting on the boss for details and copy for three days.)

Anyway, it's now live on QA. but. I don't know where QA is for this app, and it's been long enough that i'm kind of afraid to ask.

Does that sound strange?

well.

We have seven (nine?) live applications (three of which share a database), and none of their repos match their URLs, nor even their Heroku app names. (In some of these Heroku names, "db" is short for the app's namesake, while in the rest it's short for "database").

So, I honestly have no idea where "dbappdev" points to, and I don't have access to the DNS records to check. -.-

What's more: I opened "dbappdev" on Heroku and tested out his new API -- lo and behold! it returns nada. Not a single byte. (Given his history I expected a 500, so this is an improvement, I think. Still totally useless, however.)

And furthermore: he didn't push the code to github, so I cannot test (or fix) it locally.

just. UGH.

every day with this guy, i swear.

-

I worked for over 13 hours yesterday on super-urgent projects. I got so much done it's insane.

Projects:

1) the printer auto-configuration script.

2) changing Stripe from test mode to live mode in production

3) website responsiveness

I finished two within five minutes and pushed to both QA and Production. actually urgent, actually necessary. Easy change.

The printer auto-configure script was honestly fun to write, if very involved. However, the APIs I needed to call to fetch data, create a printer client, etc... none of them were tested, and they were _all_ broken in at least two ways. The CTO (api guy in my previous rant) was slow at fixing them, so getting the APIs working took literally four hours. One of them (test print) still doesn't work.

Responsiveness... this was my first time making a website responsive. Ever. Also, one of the pages I needed to style was very complicated (nested fixed-aspect-ratio + flexbox); I ended up duplicating the markup and hacking the styling together just to make it work. The code is horrible. But! "Friday's the day! it's going live and we're pushing traffic to it!" So, I invested a lot of time and energy into making it ready and as pretty as I could, and finally got it working. That page alone took me two hours.

The site and the printer script (and obv the Stripe change as well) absolutely needed to be done by this morning. Super important.

well.

1) Auto-configure script. Ostensibly we would have an intern come in and configure the printers. However, we have no printers that need configuring, so she did marketing instead. :/ Also, the docs Epson sent us only work for the T88V printer (we have exactly one, which we happened to set up and connect to). They do not work for the T88VI printers, which is what we ordered. and all we'll ever be ordering. So. :/ I'll need to rewrite a large chunk of my code to make this work. Joy :/

2) Stripe Live mode. Nobody even seemed to notice that we were collecting info in Test mode, or that I fixed it. so. um. :/

3) Responsiveness.

Well. That deadline is actually next Wednesday. The marketing won't even start until then, and I haven't even been given the final changes yet (like come on). Also! I asked for a QA review last night before I'd push it to production. One person glanced at it. Nobody else cared. Nobody else cared enough to look in the morning, either, so it's still on QA. Super-important deadline indeed. :/

Honestly?

I feel like Alice (from Dilbert) after she worked frantically on urgent projects that ended up just being cancelled. (That one where Wally smells that lovely buttery-popcorn scent of unnecessary work.)

I worked 13 hours yesterday.

for nothing.

fucking. hell.

-

Github Inc. (Feel good inc. parody)

=========================

Ha-ha-ha-ha-ha-ha-ha-ha-ha-ha-ha-ha-ha.

Github.

Fetch it, fetch it, fetch it, Github.

Fetch it, fetch it, fetch it, Github.

Fetch it, fetch it, fetch it, Github.

Fetch it, fetch it, fetch it, Github.

Fetch it, fetch it, fetch it, Github.

Fetch it, fetch it, fetch it, Github.

(change) Fetch it (change), Fetch it (change), Fetch it (change), Github

(change) fetch it (change), fetch it (change), fetch it (change), Github

Repos breaking down on pull request

Juniors have to go cause they don't know wack

So while you filling the commits and showing branch trees

You won't get paid cause it's all damn free

You set a new linter and a new phenomenal style

Hoping the new code will make you smile

But all you wanna have is a nice long sleep.

But your screams they'll keep you awake cause you don't get no sleep no.

git-blame, git-blame on this line

What the f*ck is wrong with that

Take it all and recompile

It is taking too lonnng

This code is better. This code is free

Let's clone this repo you and me.

git-blame, git-blame on this line

Is everybody in?

Laughing at the class past, fast CRUD

Testing them up for test cracks.

Star the repos at the start

It's my portfolio falling apart.

Shit, I'm forking in the code of this here.

Compile, breaking up this shit this y*er.

Watch me as I navigate.

Ha-ha-ha-ha-ha-ha.

Yo, this repo is Ghost Town

It's pulled down

With no clowns

You're in the sh*t

Gon' bite the dust

Can't nag with us

With no push

You kill the git

So don't stop, git it, git it, git it

Until you're the maintainers

And watch me criticize you now

Ha-ha-ha-ha-ha.

Break it, break it, break it, Github.

Break it, break it, break it, Github.

Break it, break it, break it, Github.

Break it, break it, break it, Github.

git-blame, git-blame on this line

What the f*ck is wrong with that

Take it all and recompile

It is taking too lonnng

This code is better. This code is free

Let's clone this repo you and me.

git-blame, git-blame on this line

Is everybody in?

Don't stop, shit it, git it.

See how your team updates it

Steady, watch me navigate

Aha-ha-ha-ha-ha.

Don't stop, shit it, git it.

Peep at updates and reconvert it

Steady, watch me git reset now

Aha-ha-ha-ha-ha.

Github.

Push it, push it, push it, Github.

Push it, push it, push it, Github.

Push it, push it, push it, Github.

Push it, push it, push it, Github.

-

Long rant ahead.. so feel free to refill your cup of coffee and have a seat 🙂

It's completely useless. At least in the school I went to, the teachers were worse than useless. It's a bit of an old story that I've told quite a few times already, but I had a dispute with said teachers at some point after which I wasn't able nor willing to fully do the classes anymore.

So, just to set the stage.. le me, die-hard Linux user, and reasonably initiated in networking and security already, to the point that I really only needed half an ear to follow along with the classes, while most of the time I was just working on my own servers to pass the time instead. I noticed that the Moodle website that the school was using to do a big chunk of the course material with, wasn't TLS-secured. So whenever the class begins and everyone logs in to the Moodle website..? Yeah.. it wouldn't be hard for anyone in that class to steal everyone else's credentials, including the teacher's (as they were using the same network).

So I brought it up a few times in the first year, teacher was like "yeah yeah we'll do it at some point". Shortly before summer break I took the security teacher aside after class and mentioned it another time - please please take the opportunity to do it during summer break.

Coming back in September.. nothing happened. Maybe I needed to bring in more evidence that this is a serious issue, so I asked the security teacher: can I make a proper PoC using my machines in my home network to steal the credentials of my own Moodle account and mail a screencast to you as a private disclosure? She said "yeah sure, that's fine".

Pro tip: make the people involved sign a written contract for this!!! It'll cover your ass when they decide to be dicks.. which spoiler alert, these teachers decided they wanted to be.

So I made the PoC, mailed it to them, yada yada yada... Soon after, next class, and I noticed that my VPN server was blocked. Now I used my personal VPN server at the time mostly to access a file server at home to securely fetch documents I needed in class, without having to carry an external hard drive with me all the time. However it was also used for gateway redirection (i.e. the main purpose of commercial VPN's, le new IP for "le onenumity"). I mean for example, if some douche in that class would've decided to ARP poison the network and steal credentials, my VPN connection would've prevented that.. it was a decent workaround. But now it's for some reason causing Moodle to throw some type of 403.

I asked the teacher I had routers and switches class with at the time.. why is my VPN server blocked? He replied that "yeah we blocked it because you can bypass the firewall with that and watch porn in class".

Alright, fair enough. I can indeed bypass the firewall with that. But watch porn.. in class? I mean I'm a bit of an exhibitionist too, but in a fucking class!? And why right after that PoC, while I've been using that VPN connection for over a year?

Not too long after that, I prematurely left that class out of sheer frustration (I remember browsing devRant with the intent to write about it while the teacher was watching 😂), and left while looking that teacher dead in the eyes.. and never have I been that cold to someone while calling them a fucking idiot.

Shortly after I've also received an email from them in which they stated that they wanted compensation for "the disruption of good service". They actually thought that I had hacked into their servers. Security teachers, ostensibly technical people, if I may add. Never seen anyone more incompetent than those 3 motherfuckers that plotted against me to save their own asses for making such a shitty infrastructure. Regarding that mail, I not so friendly replied to them that they could settle it in court if they wanted to.. but that I already knew who would win that case. Haven't heard of them since.

So yeah. That's why I regard those expensive shitty pieces of paper as such. The only thing they prove is that someone somewhere with some unknown degree of competence confirms that you know something. I think there's far too many unknowns in there.

Nowadays I'm putting my bets on a certification from the Linux Professional Institute - a renowned and well-regarded certification body in sysadmin. Last February at FOSDEM I did half of the LPIC-1 certification exam, next year I'll do the other half. With the amount of reputation the LPI has behind it, I believe that's a far better route to go with than some random school somewhere.

-

Preface: i'm pretty... definitely wasted. rum is amazing.

anyway, I spent today fighting with ActionCable. but as per usu, here's the rant's backstory:

I spent two or three days fighting with ActionCable a few weeks ago. idr how long because I had a 102*f fever at the time, but I managed to write a chat client frontend in React that hooked up to API Guy's copypasta backend. (He literally just copy/pasted it from a chat app tutorial. gg). My code wasn't great, but it did most of what it needed to do. It set up a websocket, had listeners for the various events, connected to the ActionCable server and channel, and wrote out updates to the DOM as they came in. It worked pretty well.

Back to the present!

I spent today trying to get the rest to work, which basically amounted to just fetching historical messages from the server. Turns out that's actually really hard to do, especially when THE FKING OFFICIAL DOCUMENTATION'S EXAMPLES ARE WRONG! Seriously, that crap has scoping and (coffeescript) syntax errors; it doesn't even run. but I didn't know that until the end, because seriously, who posts broken code on official docs? ugh! I spent five hours torturing my code in an effort to get it to work (plus however many more back when I had a fever), only to discover that the examples themselves are broken. No wonder I never got it working!

So, I rooted around for more tutorials or blogs or anything else with functional sample code. Basically every example out there is the same goddamn chat app tutorial with their own commentary. Remember that copy/paste? yeah, that's the one. Still pissed off about that. Also: that tutorial doesn't fetch history, or do anything other than the most basic functionality that I had already written. Totally useless to me.

After quite a bit of searching, the only semi-decent resource I was able to find was a blog from 2015 that's entirely written in Japanese. No, I can't read more than a handful of words, but I've been using it as a reference because its code is seriously more helpful than what's on official Rails docs. -_-

Still never got it to work, though. but after those five futile hours of fighting with the same crap, I sort of gave up and did something else.

zzz.

Anyway.

The moral of the story is that if you publish broken code examples because you didn't even fking bother to test them first, some extremely pissed off and vindictive and fashionable developer will totally waterboard the hell out of you for the cumulative total of her wasted development time because screw you and your goddamn laziness.

-

The website for our biggest client went down and the server went haywire. Though for this client we don’t provide any infrastructure, so we called their it partner to start figuring this out.

They started blaming us, asking us if we had upgraded the website or changed any PHP settings, to which the answer was a firm no from us. So they told us they had competent people working on the matter.

TL;DR their people aren’t competent and I ended up fixing the issue.

Hours go by, nothing happens, client calls us and we call the it partner, nothing, they don’t understand anything. Told us they can’t find any logs etc.

So we setup a conference call with our CXO, me, another dev and a few people from the it partner.

At this point I’m just asking them if they’ve looked at this and this, no good answer, I fetch a long ethernet cable from my desk, pull it to the CXO’s office and hook up my laptop to start looking into things myself.

IT partner still can’t find anything wrong. I tail the httpd error log and see thousands upon thousands of warning messages about mysql being loaded twice, but that’s not the issue here.

Check top and see there are 257 instances of httpd, 256 of which were spawned by httpd itself, mysql is using 600% cpu and whenever I try to connect to mysql through cli it throws me a too many connections error.

I heard the IT partner talking about a ddos attack, so I asked them to pull it off the public network and only give us access through our vpn. They do that, reboot server, same problems.

Finally we get the it partner to rollback the vm to earlier last night. Everything works great, 30 min later, it crashes again. At this point I’m getting tired and frustrated, this isn’t my job, I thought they had competent people working on this.

I noticed that the db had a few corrupted tables, and asked the it partner to get a dba to look at it. To no avail.

5’o’clock is here, we decide to give the vm rollback another try, but first we go home, get some dinner and resume at 6pm. I had told them I wanted to be in on this call, and said let me try this time.

They spend ages doing the rollback, and then for some reason they have to reconfigure the network and shit. Once it booted, I told their tech to stop mysqld and httpd immediately and prevent them from starting at boot.

I can now look at the logs that are leading to this issue. I noticed our debug flag was on and had generated a 30gb log file. Tail it and see it’s what I’d expect, warnings upon warnings. And all the other logs for mysql and apache are huge, so the drive is full. Just gotta delete them.

I quietly start apache and mysql, see the website is working fine, shut it down and just take a copy of the var/lib/mysql directory and etc directory just to have backups.

Starting to connect a few dots, but I wasn’t exactly sure if it was right. Had the full drive caused mysql to corrupt itself? Only one way to find out. Start apache and mysql back up, and just wait and see. Meanwhile I fixed that mysql being loaded twice. Some genius had put load mysql.so at the top and bottom of php ini.

While waiting on the server to crash again, I’m talking to the it support guy, who told me they haven’t updated anything on the server except security patches now and then, and they didn’t have anyone familiar with this setup. No shit, it’s running php 5.3 -.-

Website up and running 1.5 later, mission accomplished.

-

Just saw a repository with branch name - 👶

bitbucket gives this - git fetch && git checkout 👶 for checking it out,

wondering how anyone would check out this branch without copy-pasting the above line from the web xD

-

I forgot to git fetch, so I spent quite a while doing exactly WHAT MY TEAMMATE HAD DONE JUST HOURS AGO

-

Insomnia: yeah, nice cors header

Postman: neat cors header mate

Fetch in browser: where the FUCK is the cors header you retard

-

Spent an hour figuring out an ERR_NAME_NOT_RESOLVED on a fetch Post call.

Turns out I was calling locahost instead of localhost.........

Only figured it out because I zoomed in on the console output by accident.

fml

-

“sEniOr tEcHniCiaN”: “I don’t know what Blazor is. I write my projects in ASP.NET. You should just use ASP.NET”

Me: …”Blazor *is* ASP. This project is running on ASP.NET 6.”

“seNioR tEchNiCiaN”: “As previously stated, I don’t use Blazor. I don’t care what version it is.”

Yes, this is a real exchange from my ongoing problems with this idiot.

His attitude is what ticks me off the most.

He doesn’t know what CORS is.

He doesn’t understand that “ASP.NET” covers Blazor, Razor Pages, the old MVC stuff, web APIs, and more.

He doesn’t understand the difference between a web request being initiated from the browser via Fetch and a web request being initiated from the server. (“My ASP site is shown in the browser, so requests to the third party API aren’t originating from the server.”)
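To make that distinction concrete: a browser applies the CORS check to the response of a cross-origin Fetch call, while a server-side HTTP client just receives the response. Here is a deliberately simplified model of the browser-side check. It ignores preflight requests and credentials, and the function name and parameters are made up for illustration:

```javascript
// Simplified model of the browser's CORS check for a simple cross-origin GET.
// Assumptions: no preflight, no credentials, header names already lower-cased.
function browserWouldExposeResponse(pageOrigin, requestUrl, responseHeaders) {
  const target = new URL(requestUrl);
  // Same-origin requests are not subject to CORS at all.
  if (target.origin === pageOrigin) return true;
  // Cross-origin: the server has to opt in through this response header.
  const allowed = responseHeaders["access-control-allow-origin"];
  return allowed === "*" || allowed === pageOrigin;
}
```

A request made from server-side code never goes through a check like this, which is why the same third-party API can answer the server fine and still fail when called from the page.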

And yet has the arrogance to repeatedly talk down to me while I try to explain basic concepts to him in the least condescending way possible.

After going around and around in circles with him, he finally admitted to me that “he doesn’t actually know what the CORS configuration looks like or how to modify it, to be honest.”

I just wanna go home.

-

Okay guys, this is it!

Today was my final day at my current employer. I am on vacation next week, and will return to my previous employer on January the 2nd.

So I am going back to full time C/C++ coding on Linux. My machines will, once again, all have Gentoo Linux on them, while the servers run Debian. (Or Devuan if I can help it.)

----------------------------------------------------------------

So what have I learned in my 15 months stint as a C++ Qt5 developer on Windows 10 using Visual Studio 2017?

1. VS2017 is the best ever.

Although I am a Linux guy, I have owned all Visual C++/Studio versions since Visual C++ 6 (1999) - if only to use for cross-platform projects in a Windows VM.

2. I love Qt5, even on Windows!

And QtDesigner is a far better tool than I thought. On Linux I rarely had to design GUIs, so I was happily surprised.

3. GUI apps are always inferior to CLI.

Whenever a colleague of mine and I had worked on the same parts in the same libraries, and hit the inevitable merge conflict resolving session, we played a game: Who would push first? Him, with TortoiseGit and BeyondCompare? Or me, with MinTTY and kdiff3?

Surprise! I always won! 😁

4. Only shortly into Application Development for Windows with Visual Studio, I started to miss the fun it is to code on Linux for Linux.

No matter how much I like VS2017, I really miss Code::Blocks!

5. Big software suites (2,792 files) are interesting, but I prefer libraries and frameworks to work on.

----------------------------------------------------------------

For future reference, I'll answer a possible question I may have in the future about Windows 10: What did I use to mod/pimp it?

1. 7+ Taskbar Tweaker

https://rammichael.com/7-taskbar-tw...

2. AeroGlass

http://www.glass8.eu/

3. Classic Start (Now: Open-Shell-Menu)

https://github.com/Open-Shell/...

4. f.lux

https://justgetflux.com/

5. ImDisk

https://sourceforge.net/projects/...

6. Kate

Enhanced text editor I like a lot more than notepad++. Aaaand it has a "vim-mode". 👍

https://kate-editor.org/

7. kdiff3

Three way diff viewer, that can resolve most merge conflicts on its own. Its keyboard shortcuts (ctrl-1|2|3 ; ctrl-PgDn) let you fly through your files.

http://kdiff3.sourceforge.net/

8. Link Shell Extensions

Support hard links, symbolic links, junctions and much more right from the explorer via right-click-menu.

http://schinagl.priv.at/nt/...

9. Rainmeter

Neither as beautiful as Conky, nor as easy to configure or flexible. But it does its job.

https://www.rainmeter.net/

10 WinAeroTweaker

https://winaero.com/comment.php/...

Of course this wasn't everything. I also pimped Visual Studio quite heavily. Same question from my future self: What did I do?

1 AStyle Extension

https://marketplace.visualstudio.com/...

2 Better Comments

Simple patch to make different comment styles look different. Like obsolete ones being shown struck through, or important ones in bold red and such stuff.

https://marketplace.visualstudio.com/...

3 CodeMaid

Open Source AddOn to clean up source code. Supports C#, C++, F#, VB, PHP, PowerShell, R, JSON, XAML, XML, ASP, HTML, CSS, LESS, SCSS, JavaScript and TypeScript.

http://www.codemaid.net/

4 Atomineer Pro Documentation

Alright, it is commercial. But there is not another tool that can keep doxygen style comments updated. Without this, you have to do it by hand.

https://www.atomineerutils.com/

5 Highlight all occurrences of selected word++

Select a word, and all similar get highlighted. VS could do this on its own, but is restricted to keywords.

https://marketplace.visualstudio.com/...

6 Hot Commands for Visual Studio

https://marketplace.visualstudio.com/...

7 Viasfora

This ingenious invention colorizes brackets (aka "Rainbow brackets") and makes their inner space visible on demand. Very useful if you have to deal with complex flows.

https://viasfora.com/

8 VSColorOutput

Come on! 2018 and Visual Studio still outputs monochromatically?

http://mike-ward.net/vscoloroutput/

That's it, folks.

----------------------------------------------------------------

No matter how much fun it will be to do full time Linux C/C++ coding, and reverse engineering of WORM file systems and proprietary containers and databases, the thing I am most looking forward to is quite mundane: I can do what the fuck I want!

Being stuck in a project? No problem, any of my own projects is just a 'git clone' away. (Or fetch/pull more likely... 😜)

Here I am leaving a place where gitlab.com, github.com and sourceforge.net are blocked.

But I will also miss my colleagues here. I know it.

Well, part of the game I guess?

-

Fuck you you fucking fuck, why would you change an api without any notification?

Background: built an app for a customer, it needs to fetch data from an external API and save it to a db.
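For an integration like this, two small checks can make a silent upstream change show up early: one for "nothing has arrived for too long" and one for "the payload no longer has the fields we save". This is only a sketch under assumptions; the function names, the field list and the thresholds are all hypothetical, not part of the app described here:

```javascript
// Hypothetical watchdog: true when no message arrived within maxSilenceMs.
// All times are plain millisecond timestamps (e.g. from Date.now()).
function isFeedStale(lastMessageAt, now, maxSilenceMs) {
  return now - lastMessageAt > maxSilenceMs;
}

// Hypothetical shape check: returns the required fields missing from a payload.
function missingFields(payload, requiredFields) {
  if (payload === null || typeof payload !== "object") return [...requiredFields];
  return requiredFields.filter((name) => !(name in payload));
}
```

Either result could feed whatever alerting is already in place, for example an email when `isFeedStale(...)` is true or when `missingFields(...)` is not empty.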

Customer called: it's broken what did you do?!?

Me: I'll look into it.

Turned out the third party just changed their api... Guess I should implement some kind of notification, if no messages come in for some time...

-

"I'll just fetch the data from Wikipedia, it must be really simple using their API"

I've never been so wrong.

-

a tale of daily frustration:

git fetch

*yup I'm up-to-date ...*

git add -p .

*hack in beautiful patch ...*

git status -bs

*correct branch, didn't forget any files ...*

git diff --cached

*yep, that is what I mean to commit ...*

git commit -m"[TKT-NUM] Meaningful commit message"

git log -p -1

*double-checking ... looks good ...*

git push remote tkt-num-etc

*for a brief moment feel accomplished ...*

*notice typo in commit message ...*

I don't have a funny image or punchline to sum this post up. But know that if you recognise this feeling, then I am your brother in git.

-

Some of the penguin's finest insults (Some are by me, some are by others):

Disclaimer: We all make mistakes and I typically don't give people that kind of treatment, but sometimes, when someone is really thick, arrogant or just plain stupid, the aid of the verbal sledgehammer is necessary.

"Yeah, you do that. And once you fucked it up, you'll go get me a coffee while I fix your shit again."

"Don't add me on Facebook or anything... Because if any of your shitty code is leaked, ever, I want to be able to plausibly deny knowing you instead of doing Seppuku."

"Yep, and that's the point where some dumbass script kiddie will come, see your fuckup and turn your nice little shop into a less nice but probably rather popular porn/phishing/malware source. I'll keep some of it for you if it's good."

"I really love working with professionals. But what the fuck are YOU doing here?"

"I have NO idea what your code intended to do - but that's the first time I saw RCE and SQLi in the same piece of SHIT! Thanks for saving me the hassle."

"If you think XSS is a feature, maybe you should be cleaning our shitter instead of writing our code?"

"Dude, do I look like I have blue hair, overweight and a tumblr account? If you want someone who'd rather lie to your face than insult you, go see HR or the catholics or something."

"The only reason for me NOT to support you getting fired would be if I was getting paid per bug found!"

"Go fdisk yourself!"

"You know, I doubt the one braincell you have can ping localhost and get a response." (That one's inspired by the BOFH).

"I say we move you to the blockchain. I'd volunteer to do the cutting." (A marketing dweeb suggested to move all our (confidential) customer data to the "blockchain").

"Look, I don't say you suck as a developer, but if you were this competent as a gardener, I'd be the first one to give you a hedgetrimmer and some space and just let evolution do its thing."

"Yeah, go fetch me a unicorn while you're chasing pink elephants."

"Can you please get as high as you were when this time estimate came up? I'd love to see you overdose."

"Fuck you all, I'm a creationist from now on. This guy's so dumb, there's literally no explanation how he could evolve. Sorry Darwin."

"You know, just ignore the bloodstain that I'll put on the wall by banging my head against it once you're gone."

-

Well... I had in over 15 years of programming a lot of PHP / HTML projects where I asked myself: What psychopath could have written this?

(PHP haters: Just go trolling somewhere else...)

In my current project I've "inherited" a project which was running around ~ 15 years. Code Base looked solid to me... (Article system for ERP, huge company / branches system, lot of other modules for internal use... All in all: Not small.)

The original goal was to port to PHP 7 and to give it a fresh layout. Seemed doable...

The first days passed by - porting to an asset system, cleaning up the base system (login / logout / session & cookies... you know the drill).

And that was where it all went haywire.

I really have no clue how someone could have been so ignorant to not even think twice before setting cookies or doing other "header related" stuff without at least checking the result codes...

Basically the authentication / permission system was fully fucked up. It relied on redirecting the user via header modification to the login page with an error set in a GET variable...

Uh boy. That ain't funny.

Ported to session flash messages, checked if headers were sent, hard exit otherwise - redirect.

But then I got to the first layers of the whole "OOP class" related shit...

It's basically "whack a mole".

Whoever wrote this, was as dumb and as ignorant to build up a daisy chain of commands for fixing corner cases of corner cases of the regular command... If you don't understand what I mean, take the following example:

Permissions are based on group (accumulation of single permissions) and single permissions - to get all permissions from a user, you need to fetch both and build a unique array.

Well... The "names" for permissions are not unique. I'd never have expected someone to be so stupid. Yes. You could have two permissions named "article_search" - while relying on uniqueness.

All in all all permissions are fetched once for lifetime of script and stored to a cache...

To fix this corner case… There is another function that fetches the results from the cache and returns simply "one" of the rights (getting permission array).

In case you need to get the ID of the other (yes... two identifiers used in the project for permissions - name and ID (auto increment key))...

Let's write another function on top of the function on top of the function.
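For contrast, here is roughly what "fetch both and build a unique array" could look like when the auto-increment ID is treated as the only identifier. This is a sketch and not the legacy code or the rewrite; the `id` and `name` fields are assumed from the description above:

```javascript
// Merge group-derived and directly assigned permissions, de-duplicating by ID.
// Names are NOT used as keys because, as described, they are not unique.
function mergePermissions(groupPermissions, singlePermissions) {
  const byId = new Map();
  for (const permission of [...groupPermissions, ...singlePermissions]) {
    if (!byId.has(permission.id)) byId.set(permission.id, permission);
  }
  return [...byId.values()];
}
```

With a single key there is no need for a second lookup function to find "the other" identifier.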

My brain is seriously in deep fried mode.

Untangling this mess is basically like getting pumped up with pain killers and trying to solve logic riddles - it just doesn't work....

So... From redesigning and porting to PHP 7, I've gone to basically rewriting the whole base system to MVC, porting and touching every script, untangling this dumb shit of "functions" / "OOP" [or whatever you call this garbage] and then hoping everything works...

A huge thanks to AURA. http://auraphp.com/

It's incredibly useful in this case, as it has no dependencies and makes it very easy to get a solid ground without writing a whole framework by myself.

Amen.

-

The day I had to Translate this javascript:

fetch(url).then(r => r.json()).then(r => r.data)

To Visual Basic

-

During job interview

Me : Am I going to maintain old solution?

Interviewer : Of course not.

2nd day

PM : Please fetch project X from SVN

-

Did some updates to an older Web Forms website built by a previous SENIOR developer who is a notoriously horrible developer.

Now before I start, you have to understand this guy studied at a University and had been working for at least two years before I even started working. He is supposed to know the basic shit mentioned below.

This also happened a couple of days ago, so I have calmed down since then so I apologise for the relaxed tone. My next rant will contain a lot more swearing.

This fucking guy did the stupidest shit imaginable.

On the details view of a post|page|article|product|anything that would require a details view this jackass would load the data from the DB.

Using an OleDbConnection, OleDbDataAdapter, DataTable and the most poorly written fucking sql statements you have ever seen. All of these declared in the Page_Load method.

There was literally no reason for him to use OleDb instead of Sql, but he simply did not know any better.

He especially liked: "select * from tbl where id = " & Request("T") & ""

ZERO fucking checks to see if the value is even passed or valid, nothing. He did not even check whether the DataTable had any rows.
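For anyone wondering what the missing checks amount to, here is a sketch (in JavaScript rather than the VB of the original site, and not tied to any real database driver). The `?` placeholder style is an assumption, since placeholder syntax differs between drivers; `tbl` and `Heading` are taken from the rant, everything else is made up:

```javascript
// Accept only a plain non-negative integer; anything else is rejected.
function parseId(raw) {
  if (typeof raw !== "string" || !/^[0-9]+$/.test(raw)) return null;
  const id = Number(raw);
  return Number.isSafeInteger(id) ? id : null;
}

// Build a parameterized query description instead of concatenating the input.
// Returns null when the id is missing or invalid, so the caller can show a 404.
function buildDetailQuery(rawId) {
  const id = parseId(rawId);
  if (id === null) return null;
  return { text: "SELECT Heading FROM tbl WHERE id = ?", values: [id] };
}
```

The value travels separately from the SQL text, so something like `1 OR 1=1` never becomes part of the statement.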

He then proceeded to use only the Heading column of the returned row to change the page's title.

Stupidly I assumed the aspx page will be in a better state. Fuck NO!

This fucktard went, added server tags to the opening of the asp:Content tag, copied that shit he used to fetch the data and pasted it between the server tags.

He did not know how to access the DataTable mentioned above from the aspx page!

He did this on every fucking project he worked on. Any place that required <%= %> to display data instead of using asp server controls, this cunt copied whatever was written in the code behind and pasted everything between server tags.

Fuck I could go on forever, but I think this is enough for my first rant.

-

Thanks to @sain2424, we found a really weird bug in devRantron.

One of the users that commented on or upvoted his rant has deleted that account. So when the notification component was trying to fetch the user's avatar to show the notification, it was failing and causing the app to crash.
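A defensive lookup along these lines avoids that class of crash. To be clear, this is only a sketch and not devRantron's actual fix; the `avatarUrl` field and the placeholder argument are hypothetical:

```javascript
// Return the user's avatar if there is one, otherwise a placeholder.
// Handles a deleted account (user is null/undefined) without throwing.
function avatarForNotification(user, placeholder) {
  if (user && typeof user.avatarUrl === "string" && user.avatarUrl !== "") {
    return user.avatarUrl;
  }
  return placeholder;
}
```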

@Cyanite the bugs you reported are fixed as well. Please update 🙃 🙃

-

Got pretty peeved with EU and my own bank today.

My bank was loudly advertising how "progressive" they were by having an Open API!

Well, it just so happened I got an inkling to write me a small app that would make statistics of the payments going in and out of my account, without relying on anything third-party. It should be possible, right? Right?

Wrong...

The bank's "Open API" can be used to fetch the locations of the bank's physical branches and ATMs, so, completely useless for me.

The API I was after was one apparently made obligatory (don't quote me on that) by EU called the PSD2 - Payment Services Directive 2.

It defines three independent APIs - AISP, CISP and PISP, each for a different set of actions one could perform.

I was only after AISP, or the Account Information Service Provider. It provides all the account and transactions information.

There was only one issue. I needed a client SSL certificate signed by a specific local CA to prove my identity to the API.

Okay, I could get that, it would cost like.. $15 - $50, but whatever. Cheap.

First issue - These certificates for the PSD2 are only issued to legal entities.

That was my first source of hate for politicians.

Then... As a cherry on top, I found out I'd also need a certification from the local capital bank which, you guessed it, is also only given to legal entities, while also being incredibly hard to get in and of itself, and so far, only one company in my country got it.

So here I am, reading through the documentation of something, that would completely satisfy all my needs, yet that is locked behind a stupid legal wall because politicians and laws gotta keep the technology back. And I can't help but seethe in anger towards both, the EU that made this regulation, and the fact that the bank even mentions this API anywhere.

Seriously, if 99.9% of programmers would never ever get access to that API, why bother mentioning it on your public main API page?!

It... It made me sad more than anything...

-

Man, what a way to start the week. Our mailserver went nuts (something about a Shellbot virus, I don't know) and we were forced to migrate to a new one. Clients calling in panic and threatening to sue us and shit. I was the one tasked to fix the problem (I am a developer mind you, my sysadmin knowledge is limited to google searching and contacting support). At the same time, Turkish hackers attacked our other server and forced me to fetch backups and clear spamming scripts. And to top it all, I was forced to answer the phone calls and respond to the threats. Man, I must have been a complete prick in my previous lifetime to deserve this.

-

Why does Firefox have the shittiest dev tools?

Working on my website and it kept throwing "TypeError: Failed to fetch"

with no other info

Opened Chrome and that thing gave me the entire error without even modifying my logs code, and now I can peacefully solve the problem -.-

-

Halloween is coming so I made this constructed unicorn mask with a paper mache base and EL wire. Does it require any coding skills? Nope, but I bet people are going to be surprised that I know how to build wireframes and paper mache!

-

TL;DR : do we need a read-only git proxy

Guys, I just thought about something and this potential gitpocalypse.

There is no doubt anymore that regardless of Microsoft's decisions about Github, some projects will or already have migrated to the competition.

I'm thinking : some projects use the git link to fetch the code. If a dependency gets migrated, it won't be updated anymore, or worse, if the previous repo gets deleted, it can break the project.

Hence my idea : create some repository facade to any public git repository (regardless of their actual location).

Instead of using github.com/any/thing.git, we could use opensourcegit.com/any/thing.git. (fake url for the sake of the example).

It would redirect to the right repository (for public read only), and the owner could change the location of the actual repository in case of a migration.
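The core of such a facade could be as small as a lookup table. This is a sketch of the idea only: the registry contents, the function name and the choice of a 302 status are assumptions, and a real service would also need owner authentication for updating entries:

```javascript
// Resolve a facade path to the repository's current location.
// `registry` is a Map from facade path to the real clone URL.
function resolveRepository(requestPath, registry) {
  const target = registry.get(requestPath);
  if (target === undefined) return { status: 404 };
  return { status: 302, location: target };
}
```

On a migration the owner would only change the registry entry; projects depending on the facade URL would keep working.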

What do you think ? If I get enough ++'s, I'll create a git repo about this.

-

Why do simple errors take the longest to fucking find!

Was using the geolocation api (js) to get the current longitude and latitude of my location. Stored them in an object to use in a fetch(). Every time I ran the fetch it was giving me the wrong location!

1hr later I realized I had used.

Fetch(https${longitude}blablabla${longitude})
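A duplicated variable like that is a bit easier to spot when each query parameter is set by name instead of being spliced into one long template string. A sketch, assuming a made-up endpoint and made-up parameter names `lat` and `lon` (the real API's names are not given in the rant):

```javascript
// Build the request URL with named query parameters.
function buildLocationUrl(baseUrl, latitude, longitude) {
  const url = new URL(baseUrl);
  url.searchParams.set("lat", String(latitude));
  url.searchParams.set("lon", String(longitude));
  return url.toString();
}

// Usage (hypothetical endpoint):
// fetch(buildLocationUrl("https://api.example.com/weather", coords.latitude, coords.longitude))
```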

After realizing this mistake and everything that led up to that moment I closed my MacBook and took my ass to sleep.

Moral of this story is...take fucking breaks.

Goodnight

-

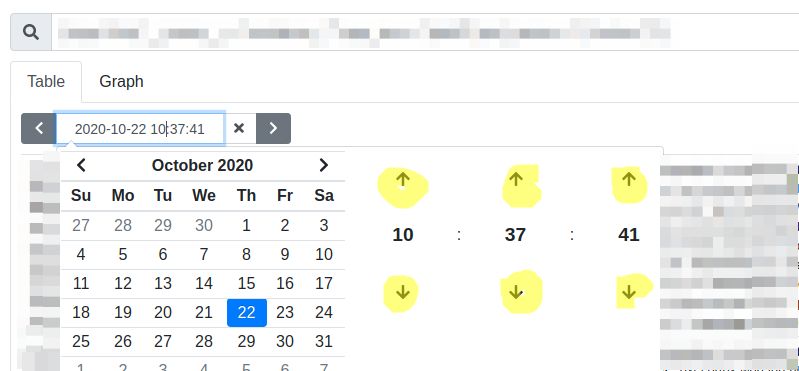

Let's talk about input forms.

Please don't do that!

Setting time with the arrow buttons in the UI.

Each time change causes the page to re-fetch all the records. This is fetching, parsing, rendering tens of thousands of entries on every single click. And I want to set a very specific time, so there's gonna be a lot of gigs of traffic wasted for /dev/null.
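The usual remedy for this is to debounce the handler, so only the last click in a burst triggers a re-fetch. A minimal sketch follows; the 300 ms wait in the usage line and the `reloadRecords` name are made up, and the optional `timers` argument only exists so the behaviour can be tested without waiting:

```javascript
// Return a wrapper that calls fn only after waitMs without further calls.
function debounce(fn, waitMs, timers = {
  set: (callback, ms) => setTimeout(callback, ms),
  clear: (handle) => clearTimeout(handle),
}) {
  let handle = null;
  return function debounced(...args) {
    // A new call cancels the pending one, so only the latest value survives.
    if (handle !== null) timers.clear(handle);
    handle = timers.set(() => {
      handle = null;
      fn(...args);
    }, waitMs);
  };
}

// Usage (hypothetical): const onTimeChange = debounce(reloadRecords, 300);
```

Ten quick clicks on the arrow then cost one request instead of ten.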

Do I hear you say "just type the date manually you dumbass!"? I would indeed be a dumbass if I didn't try that. You know what? Typing the date in manually does nothing. Apparently, the handler is not triggered if I type it in manually/remove the focus/hit enter/try to jump on 1 leg/draw a blue triangle in my notebook/pray

-

(New account because my main account is not anonymous)

Let's rant!

I'm 3 exams away from my CS degree, I've chosen to do some internship instead of another exam, thinking it was a great idea.

Now I'm in this company, where I've never met anyone because of pandemic. A little overview:

- No git, we exchange files on whatsapp (spicy versioning)

- Ideas are foggy, so they ask for changes even if I meet their requirements, because they change from one day to the next

- My thesis supervisor is not in the IT field, he understands nothing

The first (and only) task they gave me was a web page to make requests to their server, fetch data etc.

Two months passed trying to meet their requests, there was a lot of dynamic content changing on the page, so I asked if I could use some rendering framework to make the code less shitty, no answers.

I continued doing shitty code in plain JS.

Another intern guy graduated, so I have to maintain his code. This guy once asked me "Why have you created 8 js modules to accomplish the web page job?" I just answered that it was my way of working; since we're on the same level in the company I didn't feel like explaining things like usability, maintainability etc. It's like I have a bit of imposter syndrome, so I'm never 100% sure that my knowledge is correct.

Now we came to the point where I've got his code to maintain, and guess what:

900 lines of JS module that does everything from rendering to fetching data..

I do my tasks on his code, then a bug arises so the "managers" ask him what's happened (why don't you ask me, since I'm maintaining his code!?!?), and he fixes the bug even though he has finished his internship. So we had two copies of the same work, one with my job done and still with his bug, and another one without my work and without the bug.

I ask how to merge, and they send me the lines changed (the line numbering was different in my file ofc, remember: no git...)

Now we arrive today, after a month that they haven't assigned any task to me and they say:

"Ok, now let's re-do everything with this spicy fancy stunning frontend framework".

A very "indie" Framework that now I've to study to "translate" my work. A thing that could be avoided when I've asked for a framework, 2/3 MONTHS AGO.

-

Not about favorite language but about why PHP is not my favorite language.

I recently launched a web shop built on Prestashop. I found that some product pages are so god damn slow, like taking 50 fuckin' seconds to load. So I started investigating and analyzing the problem. Turns out that for some products we have so many different combinations that it results in a cartesian product totalling about 75K of unique combinations.

Prestashop did a real bad job coding the product controller because for every combination they fetch additional data. So that results in 75K queries being executed for just 1 product detail page. Crazy, even more when you know that the query that loads all these combinations, before iterating through them, takes 7 fuckin' seconds to execute on my dev machine which is a very very fast high end machine.

That said I analyzed the query and now I broke the query down into 3 smaller queries that execute in a much faster 400 ms (in total!) fetching the exact same data.
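The general cure for the one-query-per-combination pattern is to load the extra data once and index it in memory, then do lookups inside the loop. The following is a sketch of that idea and not the actual Prestashop change described above; the `combinationId` field is hypothetical:

```javascript
// Index rows from ONE batched query by combination, so the loop over
// combinations does Map lookups instead of issuing a query per combination.
function groupRowsByCombination(rows) {
  const byCombination = new Map();
  for (const row of rows) {
    const list = byCombination.get(row.combinationId) || [];
    list.push(row);
    byCombination.set(row.combinationId, list);
  }
  return byCombination;
}
```

With 75K combinations that is 75K in-memory lookups after a handful of queries, instead of 75K round trips to the database.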

So what does this have to do with PHP? As PHP is also OO why the fuck would you always put stuff in these god damn associative arrays, that in turn contain associative arrays that contain more arrays containing even more arrays of arrays.

Yes I could do the same in C# and other languages as well but I have never ever encountered that in other languages but always seem to find this in PHP. That's why I hate PHP. Not because of the language but all those fucking retarded assholes putting everything in arrays. Nothing OO about that.

-

!rant

Did you ever have that feeling of "what the fuck - how did I do THAT" - I just had one; I was fine with the algorithm taking like 15/25 minutes to fetch, process, save all data, now it shreds through 10 times of the previous amount in like 7 minutes.

-

Raging here, overheating really. One spends thousands on technology that is promoted with the catch phrase "it just works", yet here I am, after updating my fancy new emoji maker (iphone x) to 11.2 and then attempt to carry on working by compiling my code to test some new features. And...

oh, whats this xCode? You have a problem? You can't locate something? You can't locate iOS 11.2 (15C114)... sorry and you think that this "May not" be supported in current version of Xcode?

Let me get this straight you advanced piece of technological wizardry, you know you are missing something, you in fact know what it is, you can actually TELL me what is missing and yet, still, in 2017, you can't go FETCH it?????

Really? All you can do is sit, with that stupid look on your face, and watch the paint dry? You're stuck? That's it?

I hate you for the false pretense of advanced capability. and for your lack of a consistent dark theme so my eyes stop bleeding when reading your "I don't know what to do" messages...

By the way, maybe you can stop randomly crashing, or pinwheeling, I get that you're bored as a machine designed to crunch numbers/data/code all day long and that for fun you feel you have to add some color to your subsistence. But stop it. Do what I'm told you can do, "JUST WORK" for once without me having to drag you forward kicking and screaming.

K. that feels better. Now for some whiskey.

-

I feel I should open a github repo, for people to contribute privacy policy parts into, have say folders like "google analytics" and then whenever people encounter those in the wild, add examples there, so people could fetch together a full privacy policy for free, as all those new cashgrab websites are just fucking insane. But I am not really sure, if that would find any contributors tbh sadly.

P.S: I seem to have developed now a third sense, when the devrant post cooldown is down, so I can rant more lol, because whenever I feel like posting the thought or rant, the cooldown is just about to expire

-

Don't you just love it when you ask Hibernate to fetch some data from DB and it does that for you? And also updates a few more tables on its way. And inserts a few more rows. And updates another couple of fields..

Isn't that just amazing? I mean.. What could be more satisfying than getting an "ORA-00001: unique constraint (some.constraint) violated" while issuing a FUCKING **GET** METHOD?

-

*Today*

Me: coding is fun , la la la, nothing can go wrong~~ ♡

*moment later*

Me: ahhhhh my back!! Why was i sitting in that terrible unergonomic position

Moral of the story , practice good seating posture and you won't get muscle knots! ;-;

-

ME: Here's an endpoint to get all the textual info about the entity. And this one fine endpoint is to fetch entity's files

FrontEnd: This is no good. I need all entity info in a single JSON

ME: but files could be quite heavy, are you sure you wan...

FE: Yes, Just give me all the info in a single JSON

ME: okay... I hope you know what you're doing..

ME: <implemented as requested>

ME: <opens a webpage with 2 files attached>

Browser: <takes 30 seconds to open a page and downloads 30MB of data in the JSON>

ME: As mentioned before, your approach is a performance killer

FE: No worries, we'll fix that in the next version. First let's see if anyone will be using this feature at all - maybe it's not even worth working on

ME: <thinking> I know I would NOT be using an app if it takes over half a minute to open up a chat channel. FFS I wouldn't even be using Slack if it took 30 seconds to open some other conversation, because for some reason it wanted to fetch all the uploaded files along with all the messages each time a channel is clicked on.....

ME: <thinking> this project is doomed :(

-

ATTENTION @dfox!!!!

I found a bug in the devRant REST server. When you upload a rant with a vertical image (when the height is bigger than the width), the server seems to flip between the width and the height (I don't know if it happens all the time), and when I fetch the rant, the JSON data for image width and height is flipped! (in the JSON, the attached image section contains width and height information about the image, and they are flipped in their values, meaning that the width key is equal to the height, and the height key is equal to the width).

An explanation on the image below:

The small window on the left shows the real info about the image. Notice how the height is 800 and width is 600.

The window at the bottom shows the data fetched from devRant servers. Notice how the width returned from the server is 800 and the height returned from the server is 600.

-

Kernel coming along slowly but surely. I can now fetch the memory map and use normal Rust printlns to the vga text mode!

Next up is physical memory allocation and page maps

-

As a pretty solid Angular dev getting thrown a react project over the fence by his PM I can say:

FUCK REACT!

It is nigh impossible to write well structured, readable, well modularized code with it and not twist your mind in recursion from "lift state up" and "rendercycle downwards only"

Try writing a modular modal as a modern function component with interchangeable children (passable to the component as it should be) that uses portals and returns the result of the passed children components.

Closest I found to it is:

codesandbox.io/s/7w6mq72l2q

(and it's a fucking nightmare logic wise and readability wise)

And also I still wouldn't know right off the bat how to get the result from the passed child components with all the oneway binding CLUSTERFUCK.

And even if you manage to there is no chance to do it async as it should be.

You HAVE to write a lot of "HTML" tags in the DOM that practically should not be anywhere but in async functions.

In Angular this is a breeze and works like a charm.

Its not even much gray matter to it...

I can´t comprehend how companies decide to write real big web apps with it.

They must be a MESS to maintain.

For a small "four components that show a counter and fetch user images" - OK.

But for a big webapp with a big team etc. etc.?

Asking stuff about it on Stackoverflow I got edited unsolicited as fuck and downvoted as fuck in an instant.

Nobody explained anything or even cared to look at my Stackblitz.

Unsolicited edit, downvote, closevote and off they go - no help provided whatsoever.

Its completely fine if you don't have time to help strangers - but then at least do not stomp on beginners like that.

I immediately regretted asking a toxic community like this something that I genuinely seem to not understand. Wasn't SO about helping people?

I deleted my post there and won't be coming back and doing something productive there anytime soon.

Out of respect for my client's budget I'm now doing it the ugly React way and forgetting about my software architecture standards, but as soon as I can I will advise switching to Angular.

If you made it here: WOW

Thank you for giving me a vent to let off some steam :)13 -

So after our original idea got scrapped during a hackathon this week, we created a Slack bot to fetch the most relevant answers from StackOverflow based on the user's input. All the user had to do was input a few words; the bot handled all typos, links etc. and returned the link as well as the most upvoted or the accepted answer after scraping it from the website.
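
A minimal sketch of that answer-picking step, assuming each scraped answer has already been reduced to a dict. The `score`, `accepted` and `link` keys are hypothetical names for illustration, and preferring the accepted answer over the most upvoted one is an assumption, not something the bot is known to do:

```python
from __future__ import annotations


def pick_answer(answers: list[dict]) -> dict | None:
    """Return the accepted answer if there is one, else the most upvoted."""
    if not answers:
        return None
    for answer in answers:
        if answer.get("accepted"):
            return answer
    # No accepted answer: fall back to the highest score.
    return max(answers, key=lambda a: a.get("score", 0))


# Made-up sample data standing in for scraped results.
sample = [
    {"link": "https://example.invalid/a/1", "score": 42, "accepted": False},
    {"link": "https://example.invalid/a/2", "score": 7, "accepted": True},
]
best = pick_answer(sample)
```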

The average time to find an answer was around 2 seconds, and we also said that we were planning to use Flask to deploy a web application for the same.

After the presentation, one of the judge-guys called me over and told me that "It isn't good enough, will not be used widely" and "It's similar to Quora".

Never have I wanted to punch a son of a bitch in the balls this badly.3 -

Happened just yesterday.

At 7:00pm some shared office colleagues rocked up at the building drunk, to inform me that they were having their own Christmas party, since they couldn't be bothered attending ours the day before. -

Just now, while watching one of the many anime I've saved onto my file server, I noticed something.. all of their files are incomplete, and so are the copies on the NTFS mirror on this WanBLowS host. The files got corrupted. I recall that I used robocopy to copy the files back and forth, and yet again it lives up to my expectations of it being a motherfucking piece of Winshit. FUCK YOU ROBOCOPY!!! If I wanted to fetch that anime yet again just to deal with your developers' incompetence, I'd have watched it online!! Meanwhile tell me, HOW DIFFICULT IS IT TO DEAL WITH A NETWORK FILE TRANSFER THAT EVEN USES YOUR OWN SHITFEST OF A PROTOCOL, FUCKING SMB?!! MSFT certified pieces of shit!!!!7

-

I'm having difficulty treating HR like human beings. I mean yes, I spammed you to fetch me my payslip, but why didn't you check why I am not getting it automatically in the first place? And your response is that you are busy and HR requests take a minimum of 48 hours to process? THE FUCK? I mean, how hard can it be to type my ID in the system and send me my payslip?

I really need to learn how to "play nice" before I get in trouble.3 -

A little late but whatever.

About half a year ago, I started working on setting up self hosted (slippy) maps. For one, because of privacy reasons, for two, because it'd be in my own control and I could, with enough knowledge, be entirely in control of how this would work.

While the process has been going on for hours every day for about half a year (with regular exceptions), I'll briefly lay out what I've accomplished.

I started with the OpenMapTiles project and tried to implement it myself. This went well but there were two major pitfalls:

1. It was based on a postgres database. This is fine but when you want to have the entire world.... the queries took insanely long (minutes, at lower zoom levels) and quite intimate postgres/tooling knowledge was required, which I don't have.

2. Due to the long queries and such, the performance was so bad that the maps could take minutes to render and when you'd want that in production... yeah, no.

After quite some time I finally let that idea sail and started looking into the MBTiles solution; generating sqlite databases of geojson features. Very fast data serving but the rendering can take quite some time.
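
For context, an MBTiles file is a plain SQLite database. The MBTiles spec describes a `tiles` table (or view) with `zoom_level`, `tile_column`, `tile_row` and `tile_data` columns, with rows stored in TMS order, so the y coordinate is flipped relative to the usual slippy-map XYZ scheme. A rough sketch of a tile lookup, using an in-memory database and a made-up placeholder blob instead of a real render:

```python
import sqlite3


def xyz_to_tms_row(zoom: int, y: int) -> int:
    """Flip a slippy-map (XYZ) row into the TMS row that MBTiles stores."""
    return (1 << zoom) - 1 - y


def read_tile(db: sqlite3.Connection, zoom: int, x: int, y: int):
    """Return the tile blob for XYZ coordinates, or None if it is missing."""
    row = db.execute(
        "SELECT tile_data FROM tiles "
        "WHERE zoom_level = ? AND tile_column = ? AND tile_row = ?",
        (zoom, x, xyz_to_tms_row(zoom, y)),
    ).fetchone()
    return row[0] if row else None


# In-memory stand-in for an .mbtiles file; the blob is a placeholder.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE tiles (zoom_level INTEGER, tile_column INTEGER, "
    "tile_row INTEGER, tile_data BLOB)"
)
db.execute(
    "INSERT INTO tiles VALUES (?, ?, ?, ?)",
    (2, 1, xyz_to_tms_row(2, 0), b"placeholder-tile"),
)
```

Because reads are a single indexed lookup like this, serving is cheap; the expensive part is producing the blobs in the first place.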

After some more months, I finally got the hang of it to the point that I automated 50-70 percent of the entire process. The one problem? It takes a shitload of resources and time to generate a worldwide mbtiles database.

After countless rounds of trial and error, I figured out that one can divide a 'render' (mbtiles aka sqlite database) into multiple layers (one for building data, one for water, one for roads and so on), so I started doing renders that way.

Result? Styling became way easier and more logical and one could pick specific data to display; only want to display the roads? It's way simpler this way. (Not impossible otherwise but figuring out how that works... Good luck).

Started rendering all the countries, continents and such this way and while this seemed like a great idea; the entire world is at 3-4 percent after about a month. And while 40-70 percent generates 10 times as fast, that's still way too slow.

Then, I figured out that you can fetch data per individual layer/source. Thus, I could render every layer separately which is way faster.

Tried that with a few very tiny datasets and bam, it works. (And still very fast).

So, now, I'm generating all layers per continent. I want to do it world based but figured out that that's just not manageable with my resources/budget.

Next to that, I'm working on an API which will have exactly the features I want/need!13 -

If we had a devRant vote on the most annoying word of 2018, I'd vote for "token".

Token here, token there, token yourself in the rear!

Some project I'm currently working on has to fetch 4 different tokens for these syphilis-ridden external CUNTful APIs.

Your mother inhales dicks at the train station toilet for one token!2 -

Years ago, we were setting up an architecture where we fetch certain data as-is and throw it in CosmosDb. Then we run a daily background job to aggregate and store it as structured data.

The problem is the volume. The calculation step is so intense that it will bring down the host machine, and the insert step will bring down the database in a manner where it takes 30 min or more to become accessible again.

Accommodating this would need a fundamental change in our setup. Maybe rewriting the queries, the data structure, containerizing it for auto scaling, whatever. Back then, this wasn't on the table due to time constraints, and nobody wanted to be the person to open that Pandora's box of turning things upside down when it "basically works".

So the hotfix was to do a 1-second thread sleep for every iteration where needed. It makes the job take upwards of 12 hours where - if the system could endure it - it would normally take a couple of minutes.
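
As a back-of-the-envelope check on those numbers, here is a sketch that assumes the sleep dominates each iteration. The 5 ms of real work per iteration is a made-up figure chosen to land on "a couple of minutes", not a measurement from the actual job, and `run_throttled` is a hypothetical stand-in for the loop:

```python
import time


def estimated_hours(iterations: int, sleep_s: float, work_s: float = 0.0) -> float:
    """Total runtime in hours for a loop that works, then sleeps, per item."""
    return iterations * (sleep_s + work_s) / 3600


def run_throttled(items, handle, sleep_s=1.0, sleep=time.sleep):
    """Process items one by one, pausing after each to spare the database."""
    results = []
    for item in items:
        results.append(handle(item))
        sleep(sleep_s)  # the hotfix: trade wall-clock time for stability
    return results


# 12 hours of 1-second sleeps corresponds to roughly 43,200 iterations.
iterations = 12 * 3600
with_sleep = estimated_hours(iterations, sleep_s=1.0)
without_sleep = estimated_hours(iterations, sleep_s=0.0, work_s=0.005)
```

Passing `sleep` in as a parameter keeps the loop testable without actually waiting.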

The solution has grown around this behavior ever since, making it even harder to properly fix now. Whenever there is a new team member there is this ritual of explaining this behavior to them, then discussing solutions until they realize how big of a change it would be, and concluding that it needs to be done, but...

not right now.2 -

TL;DR: Fuck Wordpress and their shitty “editor”.

Client told me the Wordpress editor was unusually slow on their site. I inspected the network traffic while fiddling around in the admin pages. What I found was an even worse nightmare than expected. Somehow the fucktard of an “engineer” decided to implement the spell check module so that it parses all other text areas on the page - even the fucking image sources. The result is a browser sending a GET request to fetch the images from the server every time an author triggers a keyup event. I disabled the spell check and everything was back to budget-ineffective-feces-Wordpress normal.3 -

When you want only 10 rows of query result.

Mysql: select * from foo limit 10.. 😁

Sql server: select top 10 * from foo.. 😁

PostgreSQL: select * from foo limit 10.. 😁

Oracle: select * from foo FETCH NEXT/FIRST 10 ROWS ONLY. 🌚
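
For reference, a small sketch of the row-limiting syntax per engine (MySQL uses LIMIT rather than TOP, and Oracle's FETCH FIRST clause needs 12c or later). The `foo` table comes from the rant; the dialect keys and helper name are made up for illustration, and only the SQLite variant is actually executed here:

```python
import sqlite3

# "First n rows" templates per engine.
FIRST_N = {
    "mysql": "SELECT * FROM foo LIMIT {n}",
    "postgresql": "SELECT * FROM foo LIMIT {n}",
    "sqlserver": "SELECT TOP {n} * FROM foo",
    "oracle": "SELECT * FROM foo FETCH FIRST {n} ROWS ONLY",
    "sqlite": "SELECT * FROM foo LIMIT {n}",
}


def first_n_query(dialect: str, n: int) -> str:
    """Build the 'first n rows' query for the given dialect."""
    return FIRST_N[dialect].format(n=int(n))


# Run the SQLite flavour against a throwaway in-memory table of 25 rows.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE foo (id INTEGER)")
db.executemany("INSERT INTO foo VALUES (?)", [(i,) for i in range(25)])
rows = db.execute(first_n_query("sqlite", 10)).fetchall()
```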

Oracle, are you trying to be more expressive/verbose because if that's the case then your understanding of verbosity is fucked up just like your understanding of clean-coding, user experience, open source, productivity...

Etc.6 -

!rant

My best friend and I joined a hackathon way back when we were in college. The task was to fetch JSON data from a REST API, and we were given a sample link so we could compare the expected output with our own. But the response from the actual API was not in JSON format, it was a string, so we needed to do dozens of string manipulations to match the expected output.

To submit our work we were given our own subdomain to upload our work to and set up the environment, and then the URL had to be submitted. We knew how to complete the challenge but time was running out and we were in panic mode, so my friend mistakenly submitted the URL used to compare the output. We already expected to fail the challenge but what the fuck, we got a perfect score and won the challenge.1 -

Damn, Gitea is such a great piece of software, yet it lacks so much of what GitLab has. Granted, they are completely different sizes and all, but damn, I would kill for the repo import feature of GitLab to be in Gitea, and maybe even automatic pipelines to fetch a remote automatically..

I could most likely hack together a solution that does the import and remote fetching automatically, but I doubt it would hold up against any sort of update; it would be absolutely brutally murdered by any change.4 -

When your egghead boss (who is a dev, BTW) fails miserably in understanding that JavaScript fetch does not behave like the default synchronous nature of requests in Python.

After I failed to make him understand the asynchronous nature of JavaScript promises, he ended the discussion by saying "that's why Python is better than JS"
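
The difference can be sketched in Python itself, using `asyncio` as a rough stand-in for JavaScript promises. `fake_fetch` is a hypothetical placeholder that does no real network I/O; the point is only that calling it hands back a pending object rather than the response, much like `fetch()` hands back a promise:

```python
import asyncio
import inspect


async def fake_fetch(url: str) -> dict:
    """Pretend to fetch a URL; yields to the event loop once, then returns."""
    await asyncio.sleep(0)
    return {"url": url, "status": 200}


def demo():
    # Calling the async function does NOT run it to completion.
    pending = fake_fetch("https://example.invalid/data")
    looks_like_response = isinstance(pending, dict)
    is_pending = inspect.iscoroutine(pending)
    # Only awaiting it (here via asyncio.run) produces the actual value.
    response = asyncio.run(pending)
    return looks_like_response, is_pending, response


looks_like_response, is_pending, response = demo()
```

Treating `pending` as if it were the response is the same mistake as using the return value of `fetch()` without `await` or `.then()`.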

*facepalm*2 -

A (work-)project i spent a year on will finally be released soon. That's the perfect opportunity to vent out all the rage i built up during dealing with what is the javascript version of a zodiac letter.

Everything went wrong with the beginning. 3 people were assigned to rewrite an old flash-application. Me, A and B. B suggested a javascript framework, even though me and A never worked with more than jquery. In the end we chose react/redux with rest on the server, a classic.

After some time i got the hang of it; around that time B left and a new guy, C, was hired soon after that. He didn't know about react/redux either. The perfect start to a burning pile of smelly code.

Today this burning pile turned into a wasteland of code quality, a house of cards with a storm approaching, a rocket with leaks ready to launch, you get the idea.

We got 2 dozen files with 200-500 loc, each in the same directory and each with the same 2 word prefix, which makes finding the right one a nightmare on its own. We have an i18n-library used only for ~10 textfields, copy-pasted code you never know if it's used or not, fetch-calls with no error-handling, and many other code smells that turn this fire into a garbage fire. An eternal fire. 3 months ago i reduced the linter-warnings on this project to 1, now i can't keep count anymore.

We use the reactabular-module which gives us headaches because IT DOESN'T DO WHAT IT'S SUPPOSED TO DO AND WE CAN'T USE IT WELL EITHER. All because the client can't be bothered to have the table header scroll along with the body. We have methods which do two things because passing another callback somehow crashed in the browser. And the only thing about indentation is that it exists. Copy pasting from websites, other files and indentation wars give the files the unique look that makes you wonder if one of the devs hides his whitespace code in the files.

All of this is the result of missing time, results over quality and the worst approach of all, used by A: if A wants an ui-component similar to an existing one, he copies the original and edits the copy until it does what he wants. A knows about classes, modules, components, etc. Still, he can't bring himself to spend his time on creating superclasses... his approach gives results much faster.

Things got worse when A tried redux; luckily A prefers the component's local state. WHICH IS ANOTHER PROBLEM. He doesn't understand redux and loads all of the data directly from the server and puts it into the local state. The point of redux is that you don't have to do this. But there are only 1 or 2 examples of how this practice has hurt us so far, so i'm gonna have to let this slide. IF HE AT LEAST WOULD UPDATE THE DATA PROPERLY. Changes are just sent to the server and then all of the data is re-fetched. I programmed the rest-endpoints to return the updated objects for this very reason. But no, fuck me.
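
The idea behind returning the updated object from the endpoint can be sketched as a reducer-style merge. This is written in Python for brevity rather than as actual redux code, and the `id` key and the shape of the state are assumptions for illustration:

```python
def apply_update(items: list, updated: dict) -> list:
    """Return a new list with the item matching updated['id'] replaced."""
    return [updated if item["id"] == updated["id"] else item for item in items]


state = [{"id": 1, "name": "old"}, {"id": 2, "name": "keep"}]

# Pretend this dict is the body the REST endpoint returned after a save.
server_response = {"id": 1, "name": "new"}

# One object is swapped in place; nothing else has to be re-fetched.
new_state = apply_update(state, server_response)
```

The original list is left untouched and unchanged items are reused as-is, which is the same immutable-update pattern a reducer would follow.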