
I have an array of 1037 records. The SOAP service only accepts 100 at a time. So I write code to send 100 records at a time to the SOAP service in a loop, and I get back a response of "The maximum number (100) of records allowed for this operation has been exceeded." Well, I'll try 99 records then. Nope, same error. I'll try 50 records. Nope. I'll just bang my head on the desk now, since the documentation and the error both say the limit is 100 records. 😠

Looked at my code again. I was grabbing 100 records out of the array of 1037 and storing them in a new array, but in the loop I was sending the original 1037-record array instead of the temporary one. 😢 I'm going to bed.
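Here is a minimal Python sketch of the batching loop. The rant doesn't show the original code or language, so `send_batch` is a hypothetical stand-in for the SOAP client call. The point is that each call receives the slice, never the full list:

```python
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def chunks(items: Sequence[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive slices of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


def send_all(records: Sequence[T], send_batch: Callable[[List[T]], None], limit: int = 100) -> int:
    """Send `records` in batches of at most `limit`; return how many calls were made."""
    calls = 0
    for batch in chunks(records, limit):
        send_batch(batch)  # pass the slice, never the full `records` list
        calls += 1
    return calls
```

With 1037 records and the 100-record limit, this makes 11 calls, and the last one carries 37 records.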

So a few days ago I felt pretty h*ckin professional.

I'm an intern and my job was to get the last 2003 server off the racks (It's a government job, so it's a wonder we only have one 2003 server left). The problem being that the service running on that server cannot just be placed on a new OS. It's some custom engineering document server that was built in 2003 on a 1995 tech stack and it had been abandoned for so long that it was apparently lost to time with no hope of recovery.

"Please redesign the system. Use a modern tech stack. Have at it, she's your project, do as you wish."

Music to my ears.

First challenge is getting the data off the old server. It's a 1995 .mdb file, so the most recent version of Access that would be able to open it is 2010.

Option two: There's an "export" button that literally just vomits all 16,644 records into a tab-delimited text file. Since this option didn't require scavenging up an old version of Access, I wrote a Python script to just read the export file.

And something like 30% of the records were invalid. Why? Well, one of the fields allowed newline characters. This was an issue because records were separated by newlines, so any record with a newline inside that field became invalid.

This did not stop me, though. Not even close. I figured it out and fixed it in about 10 minutes, and all records read into the program without issue.
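The rant doesn't say how the newline problem was fixed. Below is one way to do it, sketched in Python under two assumptions: every record has a known, fixed number of tab-separated fields, and tabs never appear inside a field. The idea is to keep gluing physical lines together until a full record's worth of fields has been seen:

```python
from typing import Iterable, List, Optional


def read_records(lines: Iterable[str], n_fields: int) -> List[List[str]]:
    """Rebuild tab-delimited records whose fields may contain embedded newlines.

    Assumes each record has exactly `n_fields` fields and no field contains a tab.
    Limitation: a newline inside the *last* field cannot be detected this way,
    because the record already looks complete before the continuation line.
    """
    records: List[List[str]] = []
    buffer: Optional[str] = None
    for raw in lines:
        line = raw.rstrip("\r\n")
        buffer = line if buffer is None else buffer + "\n" + line
        fields = buffer.split("\t")
        if len(fields) > n_fields:
            raise ValueError(f"too many fields in record: {buffer!r}")
        if len(fields) == n_fields:
            records.append(fields)
            buffer = None
    if buffer is not None:
        raise ValueError(f"incomplete record at end of input: {buffer!r}")
    return records
```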

Next was designing the database. My stack is MySQL and NodeJS, which my supervisors approved. A lot of the data looked like it would fit into an integer, but one or two odd records would have something like "1050b", which meant that just a few items prevented me from having as slick a database design as I wanted. I designed the tables with about 18 columns per record, mostly varchar(64).

Next challenge was putting the exported data into the database. At first I thought of doing it record by record from my Python script: connect to the MySQL server and just iterate over all the data I had. But what I actually ended up doing was generating a .sql file and running that on the server. This took a few tries thanks to a lot of inconsistencies in the data, but eventually I got all 16k records into the new database, and I had never been so happy.
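For the .sql-file route, here is a hedged Python sketch of turning parsed rows into `INSERT` statements. It assumes MySQL's default `sql_mode`, where backslash is an escape character inside string literals. The table and column names are made up. The field-count check is one way inconsistent rows can surface before the file is ever run:

```python
from typing import Iterator, Optional, Sequence


def sql_literal(value: Optional[str]) -> str:
    """Render a value as a MySQL string literal, or NULL.

    Assumes NO_BACKSLASH_ESCAPES is off (MySQL's default), so backslashes
    must be doubled as well as single quotes.
    """
    if value is None:
        return "NULL"
    escaped = value.replace("\\", "\\\\").replace("'", "''")
    return "'" + escaped + "'"


def insert_statements(
    table: str, columns: Sequence[str], rows: Sequence[Sequence[Optional[str]]]
) -> Iterator[str]:
    """Yield one INSERT per row; identifiers are assumed to be trusted constants."""
    col_list = ", ".join(f"`{c}`" for c in columns)
    for row in rows:
        if len(row) != len(columns):
            raise ValueError(f"expected {len(columns)} fields, got {len(row)}: {row!r}")
        values = ", ".join(sql_literal(v) for v in row)
        yield f"INSERT INTO `{table}` ({col_list}) VALUES ({values});"
```

Each yielded statement can be written to the .sql file on its own line.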

The next two hours were very productive: I designed a very clean front end. I had just enough time to build a rough prototype that works entirely off AJAX requests. I want to keep it that way so that other services can access this data, as it may be useful to have an engineering data API.

Anyways, that was my win story of the week. I was handed a challenge: an old, decaying server full of important data. Despite the hitches one might expect from archaic data, I was able to rescue every byte. I will probably be presenting my prototype to the higher-ups in Engineering sometime this week.

Happy Algo!

Ever had a 'why in FUCKS name would you do that?!?' moment with another programmer?

In my first year of study we learned about PHP and how to write a login system. Most people would either do a 'select count(something like id) from users where username = username and password = password' or select the values based on the username/email and check whether the password matched.

This guy selected everything from the table and FOREACHed over the records, comparing the username/password with an if inside that loop.

I couldn't get him to understand how fucked up that system would become once there were loads and loads of users 😅
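Here is a Python/SQLite sketch of the approach that scales. The original was PHP, and the table layout, iteration count and helper names here are illustrative. The database does the lookup through a unique index on `username`, so only the matching row comes back. The sketch also stores a salted PBKDF2 hash, because the classroom queries above appear to compare raw passwords in SQL:

```python
import hashlib
import hmac
import os
import sqlite3

ITERATIONS = 100_000  # illustrative PBKDF2 work factor; tune for your hardware


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


def create_schema(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE users ("
        " id INTEGER PRIMARY KEY,"
        " username TEXT NOT NULL UNIQUE,"  # UNIQUE also creates an index for the lookup
        " salt BLOB NOT NULL,"
        " pw_hash BLOB NOT NULL)"
    )


def create_user(conn: sqlite3.Connection, username: str, password: str) -> None:
    salt = os.urandom(16)
    conn.execute(
        "INSERT INTO users (username, salt, pw_hash) VALUES (?, ?, ?)",
        (username, salt, hash_password(password, salt)),
    )


def check_login(conn: sqlite3.Connection, username: str, password: str) -> bool:
    # One indexed lookup for one row, instead of SELECT * and a loop over every user.
    row = conn.execute(
        "SELECT salt, pw_hash FROM users WHERE username = ?", (username,)
    ).fetchone()
    if row is None:
        return False
    salt, stored_hash = row
    return hmac.compare_digest(hash_password(password, salt), stored_hash)
```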

So it was my first day at a new job... Boss takes me around to meet everyone. One employee, stuck editing a file by typing in new record data by hand, calls the boss for help.

Boss to me: "I like to get handsy with data from time to time."

*me smiling, watching how he copies and pastes the new records*

Me to boss: "Why don't you just write a script to update all the records?"

Boss: "I don't trust the automation of input."

Me: "What about human error?"

*crowd of other employees gathers around awaiting the answer*

Boss: "We include a margin of error in our disclaimer to the client..."

*He hears himself*

Boss: "...and we bill by the hour, why would we work faster for less money?"

*me grinning, going to remember that line next time I need a deadline extension*

Me *murmurs*: "Master has presented Dobby with a sock."

*Girl in the next cubicle snickers, clearly caught the reference*

Going to love it here.

I found a healthCheck function while troubleshooting an old application for a large auto manufacturer today. The healthCheck function was running several times a day on a timer. The function tries to insert a record into the database and returns whether or not it was successful. It was written in 1999 and has to date inserted over 2.5 million records into the database! A third of the data for this application was the same record.

How the hell did nobody notice this for 20 years!!!
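A health check normally shouldn't leave data behind. Below is a hedged Python/SQLite sketch of two options: a read-only probe, and, if the write path really must be exercised, an insert that is always rolled back. The `healthcheck` table and `checked_at` column are hypothetical:

```python
import sqlite3


def read_probe(conn: sqlite3.Connection) -> bool:
    """True if the database answers a trivial read-only query."""
    try:
        return conn.execute("SELECT 1").fetchone() == (1,)
    except sqlite3.Error:
        return False


def write_probe(conn: sqlite3.Connection, table: str = "healthcheck") -> bool:
    """True if an INSERT succeeds; the row is always rolled back, so nothing accumulates.

    `table` must be a trusted constant, never user input.
    """
    try:
        conn.execute(f"INSERT INTO {table} (checked_at) VALUES (CURRENT_TIMESTAMP)")
        return True
    except sqlite3.Error:
        return False
    finally:
        conn.rollback()
```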

haveibeenpwned: MASSIVE SECURITY BREACH AT COMPANY X, MILLIONS OF RECORDS EXPOSED AND SOLD, YOUR DATA IS AT RISK, please change your password!

Company X website: Hey your password expired! Please change it. Everything's fine, wanna buy premium? The sun is shining. Great day.

Today it was revealed that the Dutch government doesn't even fucking use SPF records for their email. Someone found out by sending an email in the Dutch government's name, and it actually fucking worked.

I hope they fire the people responsible for NOT implementing the most BASIC FUCKING EMAIL SECURITY/VALIDITY MEASURES IN FUCKING HISTORY.

Incompetent cocksucking motherfucking fucks.

Co-worker: hey, can you create an email?

Me: yeah, who needs one? There are no records indicating any new people starting for another two weeks.

Cw: It's for Stan, he started today. Also he needs a computer set up.

Me: who the hell is Stan and why are there no records of this person?

Cw: he's new, he started today so we didn't need an email or computer before today.

Me: I get that they're new, but what happened to giving the IT department at least 3 days' notice on new hires so I can make sure things get taken care of?

Cw: you know how it is around here, nobody gets notice for anything. So can you get that email and computer setup for me, he can't work without them.

Me: I get that we don't actually plan for anything around here and that 90% of my job is fixing that failure, but hiring someone isn't like a system failing. People don't just show up and say "I start today"; they have to go through interviews and background checks and other stuff. Someone besides this person knew they started today, so I don't think it's too much to ask that I get an email when the offer is extended, so I can prepare a system.

Cw: well we interviewed him two weeks ago and he accepted the offer last week, he's here and waiting so just as soon as you can please.

Me: well here's an email, the computer is gonna have to wait, I have a lot going on today and I don't have any computers ready right now.

*Seriously tempted to make them wait till next week to cover the 3 days' notice I've asked for 100 times*

Seriously, god bless Laravel and Taylor Otwell.

I've just had a customer foolishly delete all their user accounts. The customer was seriously stressed about this and as it usually goes, this stress was echoed in the call.

I explained how they can easily restore the deleted records in a single click, as I have configured Laravel's "soft delete" functionality site-wide, i.e. when they delete a record it isn't really deleted. Functionality to physically delete the record is hidden away outside the client's user level.

Customer was seriously grateful and paid for 2 hours of my time (even though the call took 15 mins) and generally gave me lots of kudos.

Laravel, awesome.
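Laravel's soft deletes work by setting a `deleted_at` timestamp instead of removing the row, and excluding those rows from normal queries. Here is the same idea as a small, framework-free Python/SQLite sketch. The table and method names are illustrative and are not Laravel's API, and commits are left to the caller:

```python
import sqlite3
from datetime import datetime, timezone
from typing import List


class UserStore:
    """Soft-delete sketch: 'deleting' stamps deleted_at; normal reads skip those rows."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        conn.execute(
            "CREATE TABLE IF NOT EXISTS users ("
            " id INTEGER PRIMARY KEY, name TEXT NOT NULL, deleted_at TEXT)"
        )

    def add(self, name: str) -> int:
        return self.conn.execute("INSERT INTO users (name) VALUES (?)", (name,)).lastrowid

    def delete(self, user_id: int) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.conn.execute(
            "UPDATE users SET deleted_at = ? WHERE id = ? AND deleted_at IS NULL",
            (stamp, user_id),
        )

    def restore_all(self) -> int:
        """Undo every soft delete in one step; returns how many rows came back."""
        return self.conn.execute(
            "UPDATE users SET deleted_at = NULL WHERE deleted_at IS NOT NULL"
        ).rowcount

    def active_names(self) -> List[str]:
        rows = self.conn.execute("SELECT name FROM users WHERE deleted_at IS NULL ORDER BY id")
        return [name for (name,) in rows]

    def force_delete(self, user_id: int) -> None:
        """The irreversible path, kept out of the client's reach in the rant."""
        self.conn.execute("DELETE FROM users WHERE id = ?", (user_id,))
```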


Client: We need to add a field to the model that serves as a unique identifier

Dev: You already have one, it’s the _id property

Client: We want another! This one is for a task number so we can make a connection between the database record and our ERP.

Dev: Ah I see. I can add that for you. Is this truly a unique identifier or will you be using the same ERP identifier for multiple database records?

Client: I already said it’s a unique identifier. One ERP record to one database record, end of story! To do otherwise would be absolutely ridiculous! You should think for yourself before you ask silly questions.

Dev: My apologies I just want to make sure to clarify exactly what the requirements are.

**6 months later**

Client: HOW COME I CAN’T ASSIGN THE SAME UNIQUE IDENTIFIER TO MULTIPLE DATABASE RECORDS??? CAN’T PROGRAMMERS GET ANYTHING RIGHT EVER??

Dev: …
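This is why the question mattered: "unique" is usually enforced by the database, not just by convention. The original seems to use a document store with `_id`, so treat the schema in this Python/SQLite sketch as illustrative. Once `erp_task_number` has a `UNIQUE` constraint, assigning the same ERP number to a second record is rejected. Allowing it later means a schema change, not a bug fix:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tasks ("
    " id INTEGER PRIMARY KEY,"
    " title TEXT NOT NULL,"
    " erp_task_number TEXT UNIQUE)"  # the client's "one ERP record to one database record"
)


def assign_erp_number(conn: sqlite3.Connection, task_id: int, erp_number: str) -> bool:
    """Set the ERP number; return False if another task already uses it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "UPDATE tasks SET erp_task_number = ? WHERE id = ?", (erp_number, task_id)
            )
        return True
    except sqlite3.IntegrityError:
        return False
```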

Best "error" I've ever seen today.

We have a very large database with millions of people, some of those records are duplicates. So I had a long project to write something that would automatically merge duplicate records while allowing employees to review them. Today we had a duplicate show up in the list which should not have.

Same name (apart from one letter), address, employee ID (off by 1 number), same manager, title, phone number, birthdate. But we figured out it wasn't the same person and therefore wasn't a good match.

Turns out they are twin sisters who live together and were hired by the same manager for the same position at the same time. What are the odds...
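Here is a hedged Python sketch of the kind of scoring such a merge tool might use. The fields, the 0.85 threshold and the use of `difflib` are assumptions, not the author's system. It also shows why the human review step matters: twins with near-identical names, the same address and the same birthdate pass every check:

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class Person:
    name: str
    birthdate: str  # ISO date string, e.g. "1990-04-01"
    address: str


def name_similarity(a: str, b: str) -> float:
    """difflib ratio in [0, 1]; 1.0 means identical after lowercasing."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def is_candidate_duplicate(a: Person, b: Person, threshold: float = 0.85) -> bool:
    """Flag a pair for human review; never merge on this alone."""
    return (
        name_similarity(a.name, b.name) >= threshold
        and a.birthdate == b.birthdate
        and a.address.lower() == b.address.lower()
    )
```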

Client: I need html code for this search bar *attaches png*

Me: Ok. I will send you the code. HTML is just for front end views.

Client: ok. I will place an order, you can send it over *places order*

Me: *copy 6 lines of code from bootsnipp*

*make some changes*

*submit to client*

Client: Thanks but when I press the search icon it does not display the relevant records.

Me: *smh*

"hi, we have some dns records we'd like to change, they're in the attachment. Could you send a message when it's done? Thanks in advance!"

No, fuck off. Fucking cunts.

Something is not working with PTR DNS records right now.

It's getting really frustrating and I'm starting to DuckDuckGo the issue.

Just noticed that I typed this:

"how to setup a fucking ptr record".

I didn't type the 'fucking' intentionally.

😆😅
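For reference, a PTR record doesn't live in your forward zone. It lives under a reverse name derived from the IP address, and that reverse zone is normally controlled by whoever holds the address block (often the hosting provider or ISP). Python's standard library can compute that name:

```python
import ipaddress


def ptr_owner_name(ip: str) -> str:
    """Reverse-DNS name under which the PTR record for `ip` must be published."""
    return ipaddress.ip_address(ip).reverse_pointer
```

For example, `ptr_owner_name("192.0.2.1")` gives `1.2.0.192.in-addr.arpa`. For IPv6, the name is built from the address's nibbles in reverse order under `ip6.arpa`.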

Ok you fucks that don't believe in documentation - me included.

Document your shit, because one day, one day some dumb fuck is going to have to recreate your over engineered bullshit of a system and scale it up.

What would fucking be useful right now is ANY god forsaken insight into what in the flying fuck your code is doing, or not doing, or why it makes queries to a database with no fucking records in it 🤦‍♂️ and then attempts to use that data... in case it did exist.

There's nothing like unpicking a mess of bullshit, and documenting it, and then have to remake it on a new platform.

Documentation saves lives, kids. Maybe your own life one day 😬

I'm nearly done importing almost half a million records to Shopify, and they just sent me a formal email asking me to stop hitting their API so hard.
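A client-side throttle is the usual fix for bulk imports like this. Here is a minimal Python sketch that spaces calls evenly. The rate is a placeholder: use the limits the API provider actually documents, and still back off when it answers HTTP 429. The clock and sleep functions are injectable so the behavior can be checked without waiting:

```python
import time
from typing import Callable, Optional


class Throttle:
    """Client-side rate limit: consecutive wait() calls return at least 1/rate seconds apart."""

    def __init__(
        self,
        calls_per_second: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        if calls_per_second <= 0:
            raise ValueError("calls_per_second must be positive")
        self.min_interval = 1.0 / calls_per_second
        self.clock = clock
        self.sleep = sleep
        self.next_allowed: Optional[float] = None

    def wait(self) -> None:
        """Block until the next request is allowed, then reserve the following slot."""
        now = self.clock()
        if self.next_allowed is not None and now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.min_interval
```

In an import loop, call `throttle.wait()` right before each API request.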


A recent project actually taught me how HORRIBLY STUPID it is to store large bodies of text in a SQL Server database. There were millions of records with pages of compressed text each.

More and more text records pile on every single day. Needless to say it was becoming super slow and backups were taking WAY too long.

After refactoring them out as compressed files to disk storage (I love you, micro-services) and dropping them completely from the database, the backup size went from 90 GB to 3 GB!

It's not every day you get to see a dramatic result like that from a refactor.

Lesson learned, and yes it was quite cool.
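Here is a hedged Python sketch of the pattern: compress each text body to a file and keep only a short key in the database row. Content-addressed file names (a SHA-256 of the text) and gzip are choices made for this example, not details from the project:

```python
import gzip
import hashlib
from pathlib import Path


def store_text(root: Path, text: str) -> str:
    """Write `text` gzip-compressed under `root`; return the relative key to store in the DB row."""
    data = text.encode("utf-8")
    digest = hashlib.sha256(data).hexdigest()
    rel = Path(digest[:2]) / f"{digest}.txt.gz"  # two-level fan-out keeps directories small
    path = root / rel
    if not path.exists():  # identical text is stored only once
        path.parent.mkdir(parents=True, exist_ok=True)
        with gzip.open(path, "wb") as fh:
            fh.write(data)
    return rel.as_posix()


def load_text(root: Path, key: str) -> str:
    """Read back the text for a key previously returned by store_text."""
    with gzip.open(root / key, "rb") as fh:
        return fh.read().decode("utf-8")
```

The database then holds a short string column instead of pages of text, which is what keeps backups small.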

5 years ago, in my first week at this particular job, the CTO casually mentioned they'd been struggling with a bug for years. Basically, in the last few days of the year, records seemed to jump a year ahead, with no rhyme or reason. It happened every year, and wasn't linked with them deploying new code. (Their code was a mess with no sane way to unit test it, but that was a separate issue.)

I happened to know immediately what might be causing it - so I ran a case-sensitive search in the codebase for "YYYY", pointed out the issue, explained it, then committed a fix all in about 2 minutes.

I was told I'd officially passed my probation.

(Search for "week year vs year" if you're curious & the above doesn't ring any bells.)
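In Java's `SimpleDateFormat` and `DateTimeFormatter` patterns, `YYYY` is the week-based year and `yyyy` is the calendar year, and the two can differ in the last few days of December. Python's `strftime` shows the same effect with `%G` (ISO 8601 week-based year) versus `%Y`. Java's week-year rules depend on the locale, so its exact rollover dates may not match ISO's:

```python
from datetime import date
from typing import Tuple


def calendar_and_week_year(d: date) -> Tuple[str, str]:
    """Return (calendar year via %Y, ISO 8601 week-based year via %G) for the same date."""
    return d.strftime("%Y"), d.strftime("%G")
```

`calendar_and_week_year(date(2019, 12, 30))` returns `("2019", "2020")`, because Monday 30 December 2019 starts ISO week 1 of 2020. A few days earlier, both values are "2019".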

I had a manager who was a completely incompetent idiot (as well as a fucking backstabber). He left the company ~3 weeks ago, yet I believe it will take 5 years to get rid of his legacy.

Today I discovered that one of his "genius ideas" led to the loss of months of data. This is already bad, but it's even more upsetting given that the records that have been lost are exactly the ones I needed to prove the validity of my project.

That fucking man keeps fucking with me even when he's not here, YOU DAMN ASSHOLE!!

It's such an elating feeling when you implement multithreading perfectly.

I had a task where I read data from one table, searched on it and added the searched data to another table. The table had 0.22 million records.

On a single thread it took the entire night to do half of the job.

I divided it into 8 threaded jobs, pushed my quad-core to 100% and voilà, it was done in 2.5 hours.

Just like Hannibal would have said, "I love it when a plan comes together"
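Here is a minimal Python sketch of the split-into-8-jobs approach using `concurrent.futures`. The `worker` is a hypothetical stand-in for "search and insert" on one chunk. Python threads only speed this up when the work is I/O-bound, such as database round-trips. CPU-bound work would need `ProcessPoolExecutor`, and each worker should open its own database connection:

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def split(items: Sequence[T], parts: int) -> List[Sequence[T]]:
    """Split into `parts` contiguous slices whose sizes differ by at most one."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    size, extra = divmod(len(items), parts)
    slices, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        slices.append(items[start:end])
        start = end
    return slices


def run_in_parallel(
    items: Sequence[T], worker: Callable[[Sequence[T]], List[R]], parts: int = 8
) -> List[R]:
    """Run `worker` on each slice in its own thread; results keep the input order."""
    with ThreadPoolExecutor(max_workers=parts) as pool:
        return [r for chunk in pool.map(worker, split(items, parts)) for r in chunk]
```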

So this fucktard decided to write the most inefficient way to collect thousands of records.

The system I am working on allows users to book facilities. There is one feature where an admin can generate reports on the bookings made between any two dates. A report for bookings made between January and April generates 7878 records.

So this shithead, after making a call to the server and receiving 7878 records decides to put it through 4 fucking foreach loops (this takes around 44.94 seconds).

After doing that, he passes it to the controller to go through ANOTHER foreach loop to convert those records into a JSON string, using..string..manipulation. (this takes bloody 1 minute and 30 seconds).

Now, my dear, dear supervisor is asking me to fix this saying that there must be a typo somewhere. Typo my arse. This system has been up for more than a year. What have they been doing all this time??? Bloody hell. Fucking idiots everywhere. I now have to refactor

..fucking refactor.
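Building JSON by string manipulation is slow and breaks on quotes and other special characters. Here is a hedged Python sketch of the usual alternative, a single serializer call. The booking fields are made up, and dates are converted to ISO strings through the `default` hook:

```python
import json
from datetime import date
from typing import Any, Dict, List


def _json_default(value: Any) -> str:
    """Fallback for types json can't encode natively: dates become ISO-8601 strings."""
    if isinstance(value, date):  # also covers datetime, a subclass of date
        return value.isoformat()
    raise TypeError(f"Object of type {type(value).__name__} is not JSON serializable")


def bookings_to_json(rows: List[Dict[str, Any]]) -> str:
    """Serialize all booking rows in one call instead of concatenating strings."""
    return json.dumps(rows, default=_json_default)
```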

Guys, when you have to manually edit records in the prod DB, please at least make sure that your overweight cat isn't rolling around on the keyboard...

In the Soviet Union, people cut X-ray films into circles and used them to make records of popular Western music that wasn't available because of the Iron Curtain. Sound quality was atrocious, but that wasn't the point. I have several such records in my vinyl collection; it was my grandma who was involved in this culture when she was young.

https://en.wikipedia.org/wiki/...

Got an email...

Colleague A (who acts like he supervises me because he's been here longer than I have, but he doesn't) : Webpage is broken. Please fix.

(5 minutes later)

Colleague A : Sorry I didn't pull the *other* file you committed (Yeah I know we're still on cvs...)

Another email...

Colleague B (who really is my boss) : Webpage doesn't show all records. Please fix.

(5 minutes later)

Colleague B : Sorry, I forgot to check page 2.

That's all my development team. Right, development, not designers, or anything.

FML...

This was WAY back in my first job as a programmer, where I was working on a custom-built CMS that we took over from another dev shop. A standard feature was, of course, pagination for a section that had well over 400,000 records. The client would always complain about this section being very slow to load. My boss at that job told me not to look at the problem, as it wasn't part of the scope.

But being a young, enthusiastic programmer, I decided to delve into the problem anyway. What I discovered was that the pagination was simply selecting all 400,000 records and then looping through the entire dataset until it got to the slice it needed to display.

So I fixed the pagination and page loads went from around 1 min to only a few seconds. I felt pretty proud about that. But I later got told off by my boss as he now can't bill for that fix. Personally I didn't care since I learned a bit about SQL pagination, and just how terrible some developers can be.
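Here is a hedged Python/SQLite sketch of letting the database do the slicing, with a hypothetical `items` table. `LIMIT`/`OFFSET` is the simple fix. The database still walks past skipped rows, though, so very deep pages can slow down. Keyset ("seek") pagination avoids that by continuing after the last id already shown:

```python
import sqlite3
from typing import List, Optional, Tuple

Row = Tuple[int, str]


def page_by_offset(conn: sqlite3.Connection, page: int, per_page: int = 50) -> List[Row]:
    """Fetch one page; the database skips rows instead of the application looping over all of them."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    return conn.execute(
        "SELECT id, title FROM items ORDER BY id LIMIT ? OFFSET ?",
        (per_page, (page - 1) * per_page),
    ).fetchall()


def page_after(conn: sqlite3.Connection, last_id: Optional[int], per_page: int = 50) -> List[Row]:
    """Keyset pagination: continue after the last id already shown.

    Assumes positive integer ids; with an index on id, deep pages stay as fast as the first.
    """
    return conn.execute(
        "SELECT id, title FROM items WHERE id > ? ORDER BY id LIMIT ?",
        (last_id if last_id is not None else 0, per_page),
    ).fetchall()
```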

App idea!

A normal social media app. But every time a user taps on the opposite gender's profile pic, it secretly records his face during that activity and then tweets that recording.

@his/her_username

@username_of_person_he_was_looking_at

Fucking Gmail!!!! I hate you so much!!!

My mail server is fucking perfect: I have all the records in my DNS and even have a 10/10 score on mail-tester.com.

But this fucking Gmail keeps putting me in the spam folder! Why do you hate me so much?

When debugging and testing programs with huge databases, I accidentally started breaking records at this company.

I had my biggest curl command with 5000 UUIDs (which just made my terminal crash, never happened before), my longest curl request (over an hour) and now GitHub... What were your most ridiculous proportions in coding?

Testing demands a “bug” be fixed. It isn’t a bug. It’s a limit: when the number of records updated in a single request gets large enough, it overloads the pod’s RAM and the request fails. I say, “That isn’t a bug. It fits within the engineering spec, is known and accepted by the PO, and the service sending requests never has a case for that scale. We can make an improvement ticket and let the PO prioritize the work.”

Testing says, “IF IT BREAKS IT BUG. END STORY”

Your hubcaps stay on your car at 100km/h? Have you tried them at 500km/h? Did something else fail before you got to 500km/h? Operating specs are not bugs.

I accidentally triggered a reindex on a database with 14 million records in it. It prevented hundreds of people from doing their jobs for several hours. Probably cost the company tens of thousands of dollars. Didn't get fired for it, but man, it didn't feel good...

There are two records in this table.

I can confirm that the second one is currently being pulled.

One of the values I see getting pulled is different from both of the records.

😶

Web Developer with no common sense: “I’m going to query the currency calculator API for each of the 1000 records to convert.”

Web Developer with common sense: “I’m going to query the currency rates API and use the calculation to convert each of the 1000 records.”
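Here is the second approach in Python. It assumes a hypothetical `rates` mapping (units of each currency per one unit of a common base currency) fetched once from a rates API, after which all 1000 conversions are done locally. `Decimal` avoids binary floating-point rounding on money:

```python
from decimal import ROUND_HALF_UP, Decimal
from typing import Dict, List, Tuple

CENT = Decimal("0.01")


def convert_records(
    records: List[Tuple[Decimal, str]], rates: Dict[str, Decimal], target: str
) -> List[Decimal]:
    """Convert (amount, currency) pairs to `target` using one snapshot of rates.

    rates[c] = units of currency c per 1 unit of the base currency.
    """
    converted = []
    for amount, currency in records:
        in_base = amount / rates[currency]
        converted.append((in_base * rates[target]).quantize(CENT, rounding=ROUND_HALF_UP))
    return converted
```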

I've been training a client for a few months now to not use Slack for sharing passwords and other secure materials.

I really thought I had made great progress. I even had him using a password manager. Then out of nowhere he sends the wildcard SSL key pair to me and a handful of other devs in a Slack thread.

At least we aren't storing important information like medical records. Oh wait, that's exactly what we're doing.

Coworker: since the last data update this query kinda returns 108k records, so we gotta optimize it.

Me: The api must return a massive json by now.

C: Yeah we gotta overhaul that api.

Me: How big do you think that json response is? I'd say 300 KB

C: I guess 1.2 MB

C: *downloads json response*

Filesize: 298 KB

Me: Hell yeah!

PM: Now start giving estimates this accurate!

Me: 😅😂

Okay, so I'm in rage mode right now :/

Last week a client of mine absolutely insisted on removing the "irritating delete popups" as they phrased it, against my advice.

In short, when deleting a record, I had a sexy "swal" confirmation appear (see https://limonte.github.io/sweetaler...) with some key data from the record, that prompted the user to confirm the action.

The client has now emailed me with the subject "URGENT, please read ASAP!!!". The email says his staff has deleted lots of records incorrectly.

*** face palm ***.

This is EXACTLY why we include delete confirmation prompts.

As I've used Laravel with soft deletes (luckily for my client), it shouldn't be a huge issue to restore around 400 deleted records. However, I'm charging my client for half a day's work out of principle.

Perfect example of my client not listening to me :(

TLDR - you shouldn't expect common sense from idiots who have access to databases.

I joined a startup recently. I know startups are not known for their stable architecture, but this was next level stuff.

There is one prod mongodb server.

The db has 300 collections.

200 of those 300 collections are backups/test collections.

25 collections are used to store LOGS!! They decided to store millions of logs in a NoSQL DB because setting up a MySQL server requires effort; why do that when you've already set up MongoDB? Lol 😂

Each field is indexed separately in the log.

One collection is 2 TB and has more than 1 billion records.

Out of the 1 billion records, only 1 million are required; the rest are obsolete. Each field has an index. Apparently the asshole DBA never knew there's such a thing as capped collections or partial indexes.

I've been trying to get approval to clean up the DB for 3 months, but fucking bureaucracy. Extremely high server costs, plus every week the DB goes down because some idiot runs a query on this mammoth collection. There's one single set of credentials for everything. Everyone from applications to interns uses the same creds.

And the asshole DBA left, putting me in charge of handling this shit now. I am trying to fix this but am stuck waiting for approval from business management. Devs like these make me sad: they have zero respect for their work and no ability to listen to people trying to improve the system.

Going to leave this place really soon. No point in working somewhere where you are expected to show up for 8 hours, irrespective of whether you even switch on your laptop.

Wish me luck, folks.

"WTF? These records should have been inserted into the table!"

...Hours of checking code, trying to figure out how this is possible, can't find a way to have this scenario happen...

...Add additional debug and troubleshooting code, add more verbose logging, redeploy to all the containers, reset all the tables, many apologies to the boss for the delay....

...Co-worker comes in: "oh, hey, sorry, accidentally deleted some stuff from the database last night before I left."

"I can't replicate it therefore your hotfix for the customer shouting at you is unnecessary"

WTF?! I had to lead this guy to the records where I'd replicated it myself in both the customer system and the demo one! There's a real sense that the core dev team in this place automatically disregards what the rest of us say (support had already mentioned it was replicable but clearly hadn't realised that they needed to spoon-feed this guy).

This place has a huge silo problem, glad I'm not staying much longer...

edit: these tags shouldn't be reordering themselves, not cool

MySQL fell down the stairs again and dropped tables all over the datacenter

the affected servers tried to notify me in advance but spamd wasn't able to scan their messages so IT JUST DIDN'T SEND THEM

EVEN IF IT COULD, NAMED COULDN'T RESOLVE ANY MX RECORDS

BECAUSE BEFORE MYSQL CRASHED IT CONVERTED /ETC/RESOLV.CONF INTO AN INNODB TABLE

(AND THEN DROPPED IT)

(ON PURPOSE)

Three months into a new job as a senior developer (12+ years of experience), I updated an import application.

One small update query didn't account for a possible NULL value in a parameter, so it updated all 65 million records instead of the 15 that belonged to that user.

It took 3 people and 4 days to put all the data back to its original state.

We went right back to using the old version of the application, still running 2 years later. It's spaghetti code from hell with SQL jobs and multiple stored procedures creating dynamic SQL, but I'm never touching it again.
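The rant doesn't show the query, so this is only one common way it happens, sketched in Python/SQLite with a hypothetical `imports` table. An "optional filter" written as `(? IS NULL OR user_id = ?)` matches every row when the parameter is NULL. (A plain `user_id = NULL` would match nothing, since comparisons with NULL are never true.) The safer version refuses to run without the parameter:

```python
import sqlite3
from typing import Optional


def mark_done_risky(conn: sqlite3.Connection, user_id: Optional[int]) -> int:
    # Optional-filter pattern: with user_id = None, "? IS NULL" is true for every row.
    cur = conn.execute(
        "UPDATE imports SET status = 'done' WHERE (? IS NULL OR user_id = ?)",
        (user_id, user_id),
    )
    return cur.rowcount


def mark_done(conn: sqlite3.Connection, user_id: Optional[int]) -> int:
    """Scoped update that fails loudly instead of touching every row."""
    if user_id is None:
        raise ValueError("user_id is required; refusing to update every row")
    cur = conn.execute("UPDATE imports SET status = 'done' WHERE user_id = ?", (user_id,))
    return cur.rowcount
```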

!rant

Convinced the boss we should move to .NET 5 because *future proofing*

and *security*.

Now I get to use records and all that fancy syntactic sugar.

Life's good.

So one rant reminded me of a situation I went through like 10 years ago...

I'm not a dev, but I do small programs from time to time...

One time I was hired to transfer a phone book list from paper to an MS Access 97 database...

On my old laptop I could only add 3 to 5 records, because MS Access doesn't clean up after itself and would crash...

So I made an app (in VB6) to easily create records. It was fast, light and well tabbed.

But now I needed a form to edit the last record when I made a mistake...

Then I wanted a form to check all the records I had made.

Well, that gave me an idea, so I presented the software to the client... A cheap price was agreed for my first freelance sale...

After a month of making it perfect, and knowing the problems the client would have, I made an admin form to merge all the databases and check whether each record already existed... I knew the client would have trouble merging hundreds of databases...

When it was done... the client told me he didn't need the software anymore... So I gave it to a friend to use as client database software... It was perfect for him.

One month later the client called me because he couldn't merge the databases...

I told him I was already working at a company, and that my software had been ready to solve his problem, but I had gotten mad and deleted everything...

He had to pay almost 20 times more for a software company to make the same software, but worse... Mine would merge and check all the databases in a folder... Theirs had to pick them one by one and didn't check for duplicates... So he had to pay even more for another program to delete duplicates...

That's why I didn't pursue programming as a freelancer... Lots of regrets today...

I could be working from home; instead I had a burnout this week because of overwork...

Sorry for the long rant.
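The merge-and-check step can be sketched in a few lines of Python. Reading the Access files themselves isn't shown, since the standard library can't open .mdb files. Each source here is just an iterable of (name, phone) pairs, and the duplicate rule (case- and whitespace-insensitive name plus digits-only phone) is an assumption:

```python
from typing import Iterable, List, Set, Tuple

Entry = Tuple[str, str]  # (name, phone)


def dedup_key(name: str, phone: str) -> Tuple[str, str]:
    """Assumed duplicate rule: same name ignoring case/extra spaces, same phone digits."""
    return " ".join(name.lower().split()), "".join(ch for ch in phone if ch.isdigit())


def merge_sources(sources: Iterable[Iterable[Entry]]) -> List[Entry]:
    """Merge every source in order, keeping the first occurrence of each duplicate."""
    seen: Set[Tuple[str, str]] = set()
    merged: List[Entry] = []
    for source in sources:
        for name, phone in source:
            key = dedup_key(name, phone)
            if key not in seen:
                seen.add(key)
                merged.append((name, phone))
    return merged
```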

Client: can you filter boats by location?

Me: Let me see... As you know, there are three remote systems that feed data into your database. I'd have to make a connection between the location records. But I can't rely on coordinates, name, ID or anything else. You'd have to create those links manually for me using the remote systems' record IDs, telling me that record XY from system A is identical to record YX from system B, etc...

Client: How many records are we talking about?

Me: 504.

Three days later...

Client: Got it, is that enough for you in Excel?

Me: Let me see... Very nice work, I can work with that.

Client: I almost died on it!

An hour later...

Me: Got it, test it and let's run it on the production version.

Client: It works beautifully.

A minute later...

Client: Can we filter the ships by ports?

Me: Let me see... Yes, it's theoretically possible, but it's the same situation as with places...

Client: How many records are we talking about?

Me: 12,647.

Skype relayed to me the sound of something heavy falling, something grunting. Something dying.
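The manual linking the client did in Excel amounts to a crosswalk. In this hedged Python sketch, each spreadsheet row is a pair of (system, record ID) keys saying "these are the same place". A small union-find turns those pairs into one canonical location ID per group, so links from A to B and from B to C put A and C in the same group as well. System names and IDs are made up:

```python
from typing import Dict, Iterable, Tuple

Key = Tuple[str, str]  # (source system, record id in that system)


def build_crosswalk(links: Iterable[Tuple[Key, Key]]) -> Dict[Key, int]:
    """Map every linked (system, id) key to a canonical location number.

    Union-find merges links transitively: A~B and B~C put A, B and C in one group.
    """
    parent: Dict[Key, Key] = {}

    def find(key: Key) -> Key:
        parent.setdefault(key, key)
        while parent[key] != key:
            parent[key] = parent[parent[key]]  # path halving keeps trees shallow
            key = parent[key]
        return key

    for a, b in links:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    canonical: Dict[Key, int] = {}
    group_ids: Dict[Key, int] = {}
    for key in list(parent):
        root = find(key)
        canonical[key] = group_ids.setdefault(root, len(group_ids))
    return canonical
```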

The other day, a customer of ours asked me to try to improve the performance of a particular method in one of his applications. The method in question was taking more than 5 minutes.

I took a look at what it did in the profiler, and it shocked me. More than 100k selects to the database, to retrieve 116 records...

I took a look at the code... Scores of selects in nested loops inside other nested loops inside of... That seemed normal to them...

In the end, after we improved its performance, it took 3 seconds...

What shocks me the most is that the customer is a developer himself, really knowledgeable, with an order of magnitude more experience than I have. Am I too anti "worthless database round-trips"? Is that normal? :S
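What's described is the classic "N+1 query" pattern. This hedged Python/SQLite sketch uses hypothetical `orders` and `order_lines` tables. The first function issues one query per order, and the second fetches the same data in a single joined query. Both return the same result; only the number of round-trips differs:

```python
import sqlite3
from typing import Dict, List


def lines_per_order_n_plus_one(conn: sqlite3.Connection) -> Dict[int, List[str]]:
    """One query for the orders, then one more query per order (N+1 round-trips)."""
    result: Dict[int, List[str]] = {}
    for (order_id,) in conn.execute("SELECT id FROM orders ORDER BY id").fetchall():
        rows = conn.execute(
            "SELECT sku FROM order_lines WHERE order_id = ? ORDER BY id", (order_id,)
        ).fetchall()
        result[order_id] = [sku for (sku,) in rows]
    return result


def lines_per_order_joined(conn: sqlite3.Connection) -> Dict[int, List[str]]:
    """Same result from a single LEFT JOIN, grouped in Python."""
    result: Dict[int, List[str]] = {}
    rows = conn.execute(
        "SELECT o.id, l.sku FROM orders AS o"
        " LEFT JOIN order_lines AS l ON l.order_id = o.id"
        " ORDER BY o.id, l.id"
    )
    for order_id, sku in rows:
        skus = result.setdefault(order_id, [])
        if sku is not None:  # orders without lines still appear, with an empty list
            skus.append(sku)
    return result
```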

If you're going to request CRITICAL changes to thousands of records in the database, and approve them through testing that is done on an exact replica of production, and then tell me after it has been implemented that it was done incorrectly, when you didn't actually review the changes you requested to the data or the business logic, then you are an idiot. Our staging environment is there to ensure all the changes are accurate, you useless human. It's the data you provided; I didn't just magically pull it from thin air to make your job and mine a pain in the ass.

There's nothing like sitting on the edge of your seat when you see a monster batch of record updates get sent.

This system was built 5 years ago, and its "peak" batch size has been under 400 records in a day; it usually sits under 100.

It's not a big system and just runs in the background. So yeah, small numbers for this guy.

Today, though, I thought something had fallen over and shit itself: someone decided to add a few thousand records to this thing and update a fuck tonne of data (for this system, anyway) 😬

The damn thing is standing its ground and churning, but fuck, the scale of things is beyond what we ever thought it would have to deal with at any one time.

Build for the insane benchmarks, kids. One day... someone's going to drop an elephant on it.

Who the fuck writes a 200-line method with 52 if/else statements, 3 try-catches, 6 loops and only 1 comment saying //Array of system records? No, dipshit, I thought that was a fucking interface. What happened to the whole keep-it-simple notion?!

Let's talk about input forms.

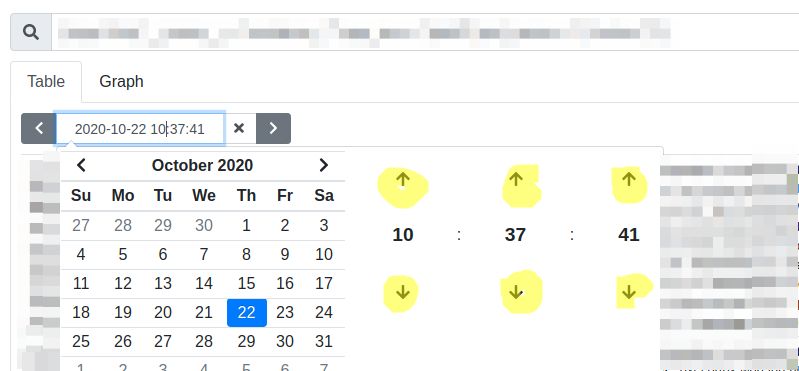

Please don't do that!

Setting time with the arrow buttons in the UI.

Each time change causes the page to re-fetch all the records. That means fetching, parsing and rendering tens of thousands of entries on every single click. And I want to set a very specific time, so there's gonna be a lot of gigs of traffic wasted to /dev/null.

Do I hear you say "just type the date manually, you dumbass!"? I would indeed be a dumbass if I didn't try that. You know what? Typing the date in manually does nothing. Apparently, the handler is not triggered if I type it in manually/remove the focus/hit enter/try to jump on 1 leg/draw a blue triangle in my notebook/pray

Hey I see that you're trying to access your account. That sucks, we don't have your phone number in our records. But that's Ok because we're going to send you the confirmation letter by snail mail...

Fucking What?

I mean, I guess that's secure... but seriously though 5-10 days until I can see what is happening with my taxes? This is insanity


That moment of chills down your spine when you delete a few records in a huge production DB, think "strange, this is taking longer than it should?", and suddenly realise that you forgot to include the "where" clause in the statement...
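One cheap safety net, sketched here in Python/SQLite, is to run the DELETE inside a transaction, check how many rows it affected, and roll back if that's more than expected. The `max_rows` limit is a hypothetical per-operation choice. (MySQL also has a `sql_safe_updates` setting that rejects UPDATE and DELETE statements lacking a key-based WHERE clause or a LIMIT.)

```python
import sqlite3


class TooManyRows(Exception):
    """Raised when a statement would affect more rows than the caller allowed."""


def guarded_delete(
    conn: sqlite3.Connection, sql: str, params: tuple = (), max_rows: int = 10
) -> int:
    """Execute a DELETE and commit only if at most `max_rows` rows were affected.

    Relies on the sqlite3 module's default transaction handling, which opens a
    transaction implicitly before DML; the caller must not have uncommitted work.
    """
    cur = conn.execute(sql, params)
    if cur.rowcount > max_rows:
        conn.rollback()
        raise TooManyRows(f"{cur.rowcount} rows affected (limit {max_rows}); rolled back")
    conn.commit()
    return cur.rowcount
```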


I find a poor tester copy/pasting data from the test environment to the live one, as he had accidentally broken it. I ask the DBA, "Why isn't syncing SQL records part of the deployment pipeline?"

"You're front end. This is my job. Go do your job."

"... but it's an easy query, and you're exposing us to human error."

"You need to go sit down."

I'm debugging someone else's 10-year-old legacy .asp web application (shoot me now), and I'm trying to find the most recent records in a database table.

Why is the most recent record from September of last year?

Oh.

Because they're storing the datetime value as varchar (40).

Good thing they were smart enough not to waste disk space by using varchar (255)!
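
One plausible reason the "latest" row looks like last September: text columns sort character by character, not chronologically. A minimal Python illustration, assuming (hypothetically) that the values were stored in a US-style M/D/YYYY format; the sample timestamps are made up.

```python
from datetime import datetime

# Hypothetical timestamps stored as plain text, as in a varchar column.
stored = ["9/30/2017 10:15:00", "12/5/2017 08:00:00", "3/14/2018 16:45:00"]

# MAX()/ORDER BY on text compares character by character, so "9/..." wins
# over "3/..." and "12/..." simply because '9' > '3' > '1'.
latest_as_text = max(stored)

# Parsing first compares actual points in time.
FMT = "%m/%d/%Y %H:%M:%S"
latest_as_date = max(stored, key=lambda s: datetime.strptime(s, FMT))

print(latest_as_text)  # 9/30/2017 10:15:00
print(latest_as_date)  # 3/14/2018 16:45:00
```

Storing the value in a real date/time column (or at least as an ISO 8601 string such as 2018-03-14 16:45:00, which sorts correctly as text) avoids the problem.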

How's your internet connection?

I got home and my net broke every possible speed record.

(NAJWIĘCEJ, Polish for "the most": top speed)

Have a query that runs in 01:58 and returns 517860 records. Rewrite it a bit for performance reasons, try running again, now it runs in 01:17 and returns 517870 records. Where the hell did I pick up those extra 10 records on that many total records?

I hate optimization...

De-duping drama continues. Background: stakeholder marked a bunch of records as “do not use” and didn’t realize/didn’t care about the impact on other systems. Many of those are active user accounts.

Stakeholder: What if we ask the user to create a new website account?

Me: They can’t register a new account if the email was used already. Are you expecting me to delete all those web accounts so the users can start over with their current email? Or are you saying you’re going to email 400 people and tell them to get a new email address and create a new account? Don’t force users to do extra steps to fix your mess.

Continued from: https://devrant.com/rants/5403991/...

I accidentally started a reindex on a collection that had 14 million records in the middle of the day. Caused an outage in a major portion of our applications for about 3 hours. Worst thing was that once I pressed enter, I realized that it was for the production database, and not the staging database like I intended. I immediately went to tell the DevOps lead, and he basically said, "Whelp, let's just sit back and watch the world burn. Not much we can do about it."

Our ex-employee wrote an amazing SQL SELECT-query consisting of 6449 characters. It has 11 JOINS and takes a solid minute to execute.

The table it fetches from has 16 records, the SQL query returns 46857 records, and it was production code lmao

I updated a date field on a table with about 4 million records in it.

Only meant to update about 1000 of them.

This field is used to generate QBRs. So the executive level would notice first.

But then.... They didn't. Nobody noticed. Not for almost a year.

Then someone asked about it and I told them what happened, and they never brought it up again.

Operations: Can you exclude some user records for the website? These are obsolete and we don’t want users to access these anymore.

Me: So what are you using to indicate the record is obsolete?

Ops: We changed the last name field to say “shell record - do not use.” Sometimes it’s in the first name. Actually, it gets truncated to “shell record - do not u”.

Me: A…text field…and you’re totally ok with breaking user accounts…ok ok cool cool

Not cool 😳😬🤬 I’m not causing more chaos because your record keeping has gotten messy

I am tired of people shi**ng all over Windows and Microsoft. Microsoft runs probably the biggest website and cloud environment in the world. And guess what? On Windows servers. The company I work for has 200,000 employees and many massive data centers with trillions of client records. And we run a 100% Microsoft environment.

I would imagine that any sys admin or developer can appreciate such scale and reliability.

I'm gonna turn this topic on its head a little and mention the MOST NECESSARY feature that was never implemented in one of my projects.

It was an iOS client for a medical records system. Since it contained actual confidential medical information, some patient records could be “restricted”. This meant that if you tried to open them, you would be prompted for a reason, and this would be audited.

We already had 2 different iOS apps with this feature in place, matching the web app. But for some reason, with the 3rd app they just decided not to bother. I discovered that it was because the PO in charge of that project didn't consider it important enough for the demo. So we have one app where you can just bypass the whole auditing process and open restricted patient records freely.

!rant

Question time for you very few Salesforce devs out there, yea I know there’s some.

Seeing as Google is not my friend today: I’m trying to get SOQL to return null-valued fields back to a REST API, something this hunk of shit won’t do, and short of looping back through all the records and injecting these fields back in, I’m at a loss... any advice is welcome 🤯

So I had this internship in high school for some marketing company, creating simple databases for them to help out with their business.

When I came back from college for, I think, winter break, they asked if I would come in to help with a task that was going to take all day, so they wanted me to come early. I agreed and showed up the next morning.

They had an Excel spreadsheet with about 5000 records in it and one of the fields was the name of the customer. They told me that the records came in as lastName, firstName or as lastName,firstName.

They wanted the field to look like firstName lastName. For a minute or two they had someone show me how they had been doing this, which was just by hand. I don't really work with Excel, so I'm not too familiar with macros. But it took me about 1 Google and 30 seconds to find someone with a similar macro to achieve this; I altered it a bit and let it go through all the records.

It was an awesome feeling when I went to the boss to let them know I was done (it had only been 10 mins); they almost didn't believe me.

Funny how one line of code can turn a day's work into a matter of minutes.
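
The actual fix was an Excel macro, which isn't shown here. As a rough equivalent of the same transformation, here is a minimal Python sketch; the function name `to_first_last` is hypothetical, and it assumes every name has at most one comma, with the last name first.

```python
def to_first_last(name: str) -> str:
    """Turn 'lastName, firstName' or 'lastName,firstName' into 'firstName lastName'.

    Values without a comma are returned unchanged (apart from trimming).
    """
    if "," not in name:
        return name.strip()
    last, first = name.split(",", 1)  # split on the first comma only
    return f"{first.strip()} {last.strip()}"


for raw in ["Doe, Jane", "Doe,John", "Cher"]:
    print(to_first_last(raw))
# Jane Doe
# John Doe
# Cher
```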

Was showing the new guy how to write a fairly simple database query with a couple of joins.

Spent 3 hours trying to figure out why it didn't work.

Finally discovered that I had randomly chosen one of the 3 records (out of a possible 15,000+) that had leading white space.

Ctrl-Z back to the first query I wrote (3 hours ago), and it works perfectly.

New guy learns a more valuable lesson than I originally intended.
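
A small reproduction of that trap using Python's sqlite3 module; the `customers`/`orders` tables and their values are made up. An equality join silently drops the row whose key has a leading space, while trimming the key makes it match again.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, code TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_code TEXT);
    INSERT INTO customers (code) VALUES ('ACME'), ('GLOBEX');
    -- note the leading space in the second order's key
    INSERT INTO orders (customer_code) VALUES ('ACME'), (' GLOBEX');
""")

# Plain equality: ' GLOBEX' <> 'GLOBEX', so order 2 silently disappears.
exact = conn.execute("""
    SELECT o.id FROM orders o JOIN customers c ON c.code = o.customer_code
""").fetchall()

# Trimming the key on the dirty side makes both orders match.
trimmed = conn.execute("""
    SELECT o.id FROM orders o JOIN customers c ON c.code = TRIM(o.customer_code)
""").fetchall()

print(len(exact), len(trimmed))  # 1 2
```

Wrapping a column in TRIM() generally keeps the database from using an index on it, so cleaning the data once is usually the better long-term fix.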

I went for an interview yesterday and everything went fine; today I was asked to complete a technical test. I have done plenty of these in the past, but never have I come across something as invasive as interviewzen.

It records every keystroke and plays it back to the interviewer; I assume they are watching on the webcam and recording audio too. I find the whole concept horrendous.

I closed that shit straight away and will tell them I'm no longer interested.

Has anyone used that site before?

I just want to say,

wow the Cloudflare API is awesome.

In less than an hour (from a blank file to automated and tested) I was able to set up a DDNS task that basically just pulls my public IP (see https://devrant.com/rants/2050450/... for details), compares it to the current DNS records and updates them if anything has changed in the past 30 minutes.

So kudos to these guys for giving me, in next to no time, a simple yet elegant way of dealing with my missing static IP.

Why can’t all APIs be this simple?

I freelanced for a startup one time and found out they had tens of thousands of records stored in their DB about dental patients, including name, address, social security #, some medical history, etc. All in plain text. Worst part is they hired me after a 20 min phone call, and didn't even sign an NDA!

Makes me paranoid to use the Internet knowing what some of these companies do.

I just released the first version of my most successful project. :)

It's a Salesforce data migration tool that replaces AutoRabit for our company. The tool includes its own programming language to freely manipulate records, and compared to AutoRabit, which needs 12 hours for a full migration, my tool needs 8.

Minutes.

A total of 18k fucking LOC.

My parents think everything I do on the computer is magic. They're too scared to touch any of my stuff because I convinced them it records live video and biometrics to my phone.

Sometimes life is good.

I dunno about coolest, but I did sort of cement my reputation as the "database guy" in my first job because of this.

My first job was with a group maintaining a series of websites. Because of the nature of the websites, every morning we had to pull the records from one database on one network, sneaker net the data to a database on another network, and import the data via custom data import function.

However, the live site would crash after 100 or so records were imported. The DBA at the live site had to write a custom data partitioning script to do his daily duties, but it definitely messed up his productivity.

Turns out, the custom mass import function had recycled the standard import function, which was only used to import 1 record at a time, and it never closed its database connections, because it never needed to. A one-line fix to production code was delivered 6 months later (because that was our release cycle), and I came up with the temporary workaround, which was basically removing the connection limit. It would still crash with the workaround, but only with multiple days' worth of data. So basically only on Monday. I also developed the test set for the import (15k+ records).
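
The general lesson, sketched in Python with the standard sqlite3 module. The original system's stack isn't stated, and SQLite has no server-side connection limit, so this shows the pattern rather than reproducing the crash: release every connection deterministically, and give a bulk import one connection rather than one per record. Function, table and file names are hypothetical.

```python
import os
import sqlite3
import tempfile
from contextlib import closing

def import_one(db_path: str, payload: str) -> None:
    """Single-record import that always releases its connection."""
    # closing() calls conn.close() even if the INSERT raises;
    # "with conn" commits on success and rolls back on an exception.
    with closing(sqlite3.connect(db_path)) as conn:
        with conn:
            conn.execute("INSERT INTO records (payload) VALUES (?)", (payload,))

def import_many(db_path: str, payloads: list[str]) -> None:
    """Bulk import over ONE connection instead of one connection per record."""
    with closing(sqlite3.connect(db_path)) as conn:
        with conn:
            conn.executemany("INSERT INTO records (payload) VALUES (?)",
                             [(p,) for p in payloads])

db_path = os.path.join(tempfile.mkdtemp(), "import_demo.db")
with closing(sqlite3.connect(db_path)) as conn:
    conn.execute("CREATE TABLE records (id INTEGER PRIMARY KEY, payload TEXT)")
    conn.commit()

import_one(db_path, "single")
import_many(db_path, [f"row-{i}" for i in range(1000)])

with closing(sqlite3.connect(db_path)) as conn:
    print(conn.execute("SELECT COUNT(*) FROM records").fetchone()[0])  # 1001
```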

-click-

MySQL: 'the table does not exist'

I just fucking made the temp table, dude, that's literally what you do in step 1... how could it not...

-click-

MySQL: 'Records: 1 Duplicates: 0 Warnings: 0'

Me: "Oh there we g---"

-click-

MySQL: 'the table does not exist'

Me: "Hey you just worked!"

-click-

MySQL: 'the table does not exist'

GOD DAMN IT

-click-

-click-

-click-

-click-

-click-

-click-

MySQL: 'Records: 1 Duplicates: 0 Warnings: 0'

Me: Uh you're working now?

-click-

MySQL: 'Records: 1 Duplicates: 0 Warnings: 0'

-click-

MySQL: 'Records: 1 Duplicates: 0 Warnings: 0'

-click-

MySQL: 'Records: 1 Duplicates: 0 Warnings: 0'

Guess that API just needed breaking in...
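
A plausible (unconfirmed) explanation for the flip-flopping: temporary tables are visible only to the connection/session that created them. If the tool behind the button hands each click to whichever pooled connection happens to be free, the temp table only "exists" when a click lands on the connection that created it. MySQL documents this per-session visibility for TEMPORARY tables; SQLite's TEMP tables behave the same way, so Python's sqlite3 is used here as a runnable stand-in. The pooling setup is an assumption.

```python
import os
import sqlite3
import tempfile

db_path = os.path.join(tempfile.mkdtemp(), "session_demo.db")

# Two connections to the same database, standing in for two pooled
# connections that different clicks might be routed to.
conn_a = sqlite3.connect(db_path)
conn_b = sqlite3.connect(db_path)

conn_a.execute("CREATE TEMP TABLE staging (id INTEGER)")  # lives only in conn_a's session
conn_a.execute("INSERT INTO staging VALUES (1)")
conn_a.commit()

print(conn_a.execute("SELECT COUNT(*) FROM staging").fetchone()[0])  # 1

try:
    conn_b.execute("SELECT COUNT(*) FROM staging")
except sqlite3.OperationalError as err:
    print(err)  # no such table: staging
```

If that is what's happening, the usual fixes are to run the create-and-use steps on one pinned connection, or to use a regular staging table instead of a temporary one.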

Lead developer wants to put SQL statements into records of the database for the code to execute (in Rails). This smells really bad to me; am I overreacting?

!Rant

Wrote a crawler, and it now has 18 million records in the queue. About 500,000 files with metadata.

1 month until the deadline and we have a shitload of things to do.

Now we discover we have a flaw in our crawler (I don't see it as a bug). We don't know how much metadata we missed, but now we have to write a script that scrapes every webpage we've already visited to get that metadata.

What's the flaw, you ask? Some people find it funny to put capital letters in their attribute names... *cough* Microsoft.com!! *cough*

And what didn't we do? We didn't lowercase each entire webpage and then, only then, search the webpage for data...
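
Rather than lowercasing the whole page (which would also lowercase the metadata values themselves), one option is an HTML parser that normalises only tag and attribute names. Python's standard html.parser.HTMLParser already lowercases them before calling your handlers. The `MetaCollector` class and the sample page are hypothetical; this is not the crawler from the rant.

```python
from html.parser import HTMLParser

class MetaCollector(HTMLParser):
    """Collect <meta name=... content=...> pairs regardless of tag/attribute case."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag and attribute names already lowercased,
        # so <META NAME="..." CONTENT="..."> arrives as meta/name/content.
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = attributes.get("name")
        if name is not None and "content" in attributes:
            self.meta[name.lower()] = attributes["content"]  # value keeps its case

page = ('<html><head><META Name="Description" CONTENT="Hello World">'
        '<meta name="author" content="Jane"></head></html>')
parser = MetaCollector()
parser.feed(page)
print(parser.meta)  # {'description': 'Hello World', 'author': 'Jane'}
```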

I had to write a script to clean some crap from a database.

In particular it had some records containing multiple names and I had to split them.

It was really a nightmare because the separator was not always the same, e.g. "John, Mark and Bob" or "Alice+Mary".

«Ok, let's use a fucking regex: ",|(and)|\\+|/|&"»

Then, I realized there were some "Alessandro" in the database. Yeah, Aless(and)ro. Shit.

So I eventually ended up adding more crap to the database.
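
The trap is that `and` also matches inside words. A sketch of one fix using Python's re module: require `and` to be a whole word with `\b` word boundaries, and use a non-capturing group so `re.split` doesn't put the separators into the output. The sample names are made up, and a name that genuinely contains a standalone "and" would still need manual review.

```python
import re

# The original pattern: "and" matches anywhere, including inside "Alessandro",
# and the capturing group makes re.split return the separator too.
naive = re.compile(r",|(and)|\+|/|&")

# Whole-word "and", surrounding whitespace swallowed, non-capturing group.
SEP = re.compile(r"\s*(?:,|\band\b|\+|/|&)\s*")

def split_names(field: str) -> list[str]:
    """Split a multi-name field on , / + & or the word 'and'."""
    return [part for part in SEP.split(field) if part]

print(naive.split("Alessandro"))          # ['Aless', 'and', 'ro']
print(split_names("John, Mark and Bob"))  # ['John', 'Mark', 'Bob']
print(split_names("Alice+Mary"))          # ['Alice', 'Mary']
print(split_names("Alessandro"))          # ['Alessandro']
```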

Back when SharePoint was still foreign to me, and I didn't know the pain of administering it, I had the idea that files were copied to my local machine. I saw no need to preserve backups from before I started, especially since they already existed on the server, so I got rid of them.

Also hooked up to SharePoint was an email handler. Whenever a case was created or deleted, an email went out to the entire department. Guess what happened when I deleted 250,000 records?

Fortunately, SharePoint has a recycle bin. Unfortunately, restoring those files generated another 250,000 emails. To the whole department.

I bought many donuts to appease the crowd baying for my blood.

I remember it was Friday, 30 minutes before leaving the office, when suddenly someone from upper management directly asked for my help to mass-update something important. At that time our CMS wasn't capable of doing this, so I had to do it straight in the live database.

It was an UPDATE query, and I decided to type it in Notepad first. When I pasted it into the terminal, I didn't notice I had missed the WHERE part, so I mass-updated the status of all our records dating back 3 years.

Fuck... please take note: it was on a Friday night.

Wrote a SQL stored procedure today to do a complicated query. Decided to make it so that I could pass multiple records into the stored procedure in comma-separated format, but the damned thing would only pull the first record. The query worked fine outside the procedure, but it wouldn't pull anything more than the first record. After deleting and recreating it and spending 30 minutes trying to figure out what was wrong, I realized I had changed the length of the wrong parameter. Set the correct one to varchar(max) and it was all good. 30 minutes of my life I will never get back. 🐘💨

I always thought the hate on senior developers doing stupid stuff was exaggerated... Mine just pulled an entire table, then used 4 for loops to filter the records by criteria... I don't think he knows what the WHERE clause in SQL is!

I once inherited a project that had been outsourced for more than 6 months to a company at the other end of the world. Although the PM had almost daily contact with the developer, the project wasn't technically followed up.

I had already recommended code reviews 3 months before I inherited the project. But of course these had never happened.

The project contained all the nice-to-have features, but the core wasn't working. Loading the home page (with 20 records from a DB) took 15 minutes.

We then had roughly a month to get the project straight.

In my 2nd year programming professionally, I designed, coded, and released a PCI-compliant credit card encryption system, including updating all 7 million records (at the time) in our existing database to utilize the new system. By some miracle, it worked with only one small hiccup (see previous rant).

About today, when I was trying to migrate 2 million+ records simultaneously!

Ran two migrations at once and this happened!

PS: On restart everything is back to normal, but I lost the data in 2 tables, which needs re-migration!

Smartphone camera applications need to show this notification whenever a user records a video vertically.

Otherwise, many smartphone users will never learn to drop the vertical filming habit and to film horizontally.

This is a major benefit of dedicated cameras and camcorders: their user interface is aligned for being held horizontally, so people do so habitually. This is not the case with mobile phones.

In the first month of the project, we suggest testing that Entity Framework generates reasonable DB queries, because the system will need to handle a lot of records. “Not a priority in this sprint because we need features.” Devs try to get it into every sprint. In the last week of the project, they want us to dump in a ton of records so they can test it. The N+1 SELECT query issue is in the main queries. It is so bad and slow with more records that a single simple query causes the container management to auto-scale the application. They can have at most 8 users in the system at a time, and a simple page refresh takes 10 seconds.

They get on our case and we dredge up all of the correspondence where they completely ignored our advice. Fix it now! We need another sprint. Fix it free! No.

Just checked the source code of our backend project with that tight deadline. So far, the backend consists of an in-memory database, 2 records and no API. 🙃

There is also no documentation on what it should look like 🤡

-Applying for internship

-the "previous work/employee records" field is there

-can't leave it blank

-so I put "------" in its various fields: work position, start date of job, end date of job, salary, etc.

-warning says "can't leave that field empty"

HOW THE FUCK DOES ONE APPLY TO AN INTERNSHIP WITH WORK EXPERIENCE WHEN THERE IS NONE?

When you were clever and improved the data quality of the address database by using your company's web service in a batch job for 10M records, just to find out that each call costs 10 cents...

Cursors.

WHY U DO DIS?

I know I'm impatient, but 20 minutes _and still going_ on an update is madness, even at this volume of records, and it's all because one of the numerous dependent triggers is running A CURSOR. Genuinely thought the query had just put itself out of its misery, as I am tempted to do.

While I was writing software for records compilation for a high school, the principal asked if the software would be able to mark the students' answer sheets.

I'm talking non-multiple-choice answer sheets.

Handwritten.

How?

On the last day of an agile project, I get asked for confirmation that the alpha system can handle 100000 records. We have had no load testing requests, only feature pushes every sprint.

I see the back-end guys have used EF in a search function that eager joins a bunch of tables. Then the results get sorted and filtered in application code. It works fine for a few hundred records but the customer will do about 100k new records a year.

Yeah, this won’t meet requirements. I wish they had asked for some load testing before the last day. They aren’t going to like it when I tell them that, by the end of the year, one person will be able to do a search every 15 seconds. FML

Just found a unit test in a legacy project that is over 300 lines long, creates files on the server and multiple records in the database, then deletes them all. It takes over two minutes to run.

Madness

At an NYU doctor's office that forces me to register a temp account to use their WiFi.

I get an email saying my medical records have changed, so I try logging into the site to check.

The site can't be accessed on the network...

So today I accidentally updated more than 3000 records in a table and wasn’t using transactions, so I couldn’t roll back. LOL. Had the whole team freak out this Monday morning, and we had to pull a backup.

Me: Alright Derwent, don't fuck up this database update. There's no undo button and no way to import a database backup, so you gotta be extra careful, or you're going to have to spend hours writing a whole bunch of regular expressions and SQL statements to sift through an 11 MB database dump and figure out how to restore 59 thousand records to the correct state. Let's practice this transition on a staging server first and make sure we get it right.

Me: I got you fam *presses the wrong button*

Why aren't there any 2K 32-inch 144 Hz monitors out there that are... flat? I want to upgrade my home setup from a 24" setup, which is, you know, flat. Even back in the days of the CRT monstrosities I paid a premium for a flat panel, as the outward curvature was a technical obstacle to overcome.

I don't understand the need to curve the display. It distorts the lines, hinders other people looking at your screen as you have to be in the right spot, and every camera records on a flat surface. Why should it be a good thing to go curved?

I am reminded of the 3D craze.

-----------Jr Dev Fucked by Sr Dev RANT------

Huge data set (300X) that looks like this:

(primary_key, group_id, 100 more columns)

The dataset has to be split into X-sized files such that all primary_key(s) with the same group_id go in the same file.

An SDE2 with an MS from Australia and 12 years of 'experience' comes up with an 'algo'. 70% of test cases FAILED.

I write a bin packing algo that passes 100% of test cases and raise a pull request to MASTER in < 1 day. The same SDE2 does not approve it, blocking the same-day release.

|-_-| What the fuck |-_-| Incompetent people getting 2x my salary with <.5x my work
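
The rant doesn't include either algorithm, but the constraint it describes (every group_id must stay together, and each file holds at most X rows) maps onto classic bin packing. A minimal first-fit-decreasing sketch in Python, assuming group sizes are row counts and treating a single group larger than X as an error. The `pack_groups` name and sample data are hypothetical. First-fit decreasing is a heuristic, so it doesn't always find the minimum number of files.

```python
from collections import Counter

def pack_groups(group_ids, capacity):
    """Assign whole groups to files holding at most `capacity` rows each.

    First-fit decreasing: place the largest groups first, each into the
    first file that still has room, opening a new file when none does.
    Returns a list of {group_id: row_count} dicts, one per file.
    """
    sizes = Counter(group_ids)  # rows per group_id
    files, free = [], []        # group assignments and remaining room per file
    for gid, size in sorted(sizes.items(), key=lambda kv: kv[1], reverse=True):
        if size > capacity:
            raise ValueError(f"group {gid!r} has {size} rows, more than {capacity}")
        for i, room in enumerate(free):
            if size <= room:
                files[i][gid] = size
                free[i] -= size
                break
        else:  # no existing file had room for this group
            files.append({gid: size})
            free.append(capacity - size)
    return files

rows = ["a"] * 4 + ["b"] * 3 + ["c"] * 3 + ["d"] * 2  # group_id column of 12 rows
print(pack_groups(rows, capacity=6))
# [{'a': 4, 'd': 2}, {'b': 3, 'c': 3}]
```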

When you mess up the repo, the best friend to help out is named "stash".

Personal record: 9 times stash in a row with no commits.

Comment to share your personal records...

I'm doomed.

My first production worker script is creating multiple active attributes for a user. My script should be able to deactivate the old attributes if there is a new one.

Months ago, this issue occurred. My teammate from team A took over the script to investigate, since I was busy working with team B.

Yesterday, I found out that I, myself, overwrote the fix my teammate made for that because of a new feature.

I have to clean up the affected records on production on Monday... and I have to explain it to my manager. T.T

LPT: ALWAYS PULL THE REPO before developing a new feature...

I work in a large organization that previously didn't have its own development team. Therefore, various business areas have built their own solutions to solve problems, which mostly involve Access and Excel.

Many of these applications still exist and we are expected to resolve any issues with them and update them when necessary performing this support role while still expected to meet our (very tight) development timelines.

I can't tell you how much of a pain in the tits it is to be trying to power through a priority development only to be interrupted with an urgent instruction to fix a 17 year old Access database that's running slow.

Of course it's pissing running slow, it's 17 years old, has nearly a million records and you have multiple users accessing it across the country!! I think it's time to peacefully let it die.

Why does email suck so much, oh my god? I don't want a fucking lesson in the kinds of domain records. I can set a TXT record to prove that I control the DNS, I have a TLS certificate, what the fuck else would I possibly need to prove!? None of this is contributing anything to security! Just fucking figure it out, it's the internet, not an international border, jesus.

I created a tool where you just click a button and it records your in-time and keeps it in the browser's local storage.

It shows your remaining time in the office, plus when your half day or short day would be, based on your in-time, whenever you check it during the day.

For motivation, I phrase the remaining time in the office like "10 more minutes in the office" or "1 more hour".

I shared this with one or two of my colleagues, and within a week I saw it open on systems in the sales, HR and IT departments.

That day I got a taste of true entrepreneurship.

Had harsh words for a recruitment agency a few days ago who have been emailing me completely irrelevant job specs for weeks. (Side note: I don't know these people or how I ended up on the list in the first place; I'm not looking.)

Got an apology this morning from one of the agents. Their manager also just sent me a mail to apologise, as she can see from the records that I've received a lot of crap... but she also took a moment to let me know she does have a role that might actually suit my skill set.

Are you fucking kidding me?

"Why doesn't anyone reply to my emails?" Because you are the devil and can't be trusted.

Records Person: Can you look at this member renewal issue for system A? It’s happening on the website you maintain. Here are some recent errors to debug.

Me (web developer): I can’t reproduce the error you’re reporting. Is there something I’m missing? And is there an example in the staging environment?

RP: There’s another team that will manually reconcile the records in system A if they don’t match what’s in system B. So this gives users two active memberships when it should only be one.

Me: 😑 So you already know the issue is human intervention messing with the records and causing the renewal issue. This is not a website issue. It’s a data issue.

Ever have one of those moments where you're running a service you built to update about a decade worth of police records, realize about halfway through that you fucked the loop and you're copying data from the first record onto every other record, and then just really wish that you had checked things better in test before running this on the prod server?

I'm sure the only reason I'm still here is because the audit log contained the original values and I'm good at pulling data out of it.

Nude and stranded while fighting off a group of polar bears and wolves in the Arctic, or attempting to try and explain to a web designer what glue records are and why their DNS is fucked...

Easy choice 🌫❄⛄🐺🐧

Me: *asks for sample data for all tables to test database transactions*

Team member: *gives over 45000 records only for city*

🤔 😐

Kafka lead after a perf test: "We have amazing performance, processing 43 records a second 😄"

Me: WTF!!!! 🤬

Last night was dreadful: at 11 pm a dev on my team makes a change to a form with 2 million plus records without informing me, and leaves to go out without confirming the change was successful. I get a call from the data center at midnight because the app is down. Didn't get done till midnight, and now the data center boss is blaming me.

'Google knows everything about you.'

'Facebook records conversations.'

'Personalized ads manipulate you.'

Guess that's why YouTube showed me an ad in Spanish yesterday. Or why I've never clicked on an ad. Or why only about 1 in 10 ads is somewhat relevant to me (and that's with me explicitly stating my interests in the ad personalization options).

I don't have anything against shielding your privacy. It's your right not to use a product if you don't want to, but I don't see how people can make these statements without providing solid proof.

Just my opinion.

Books. I love them, I buy them, wherever I go. My favorites are the Discworld novels by Terry Pratchett, but I will read any sci-fi/fantasy-styled book I come across. I would attach an image, but my phone's camera is pretty shitty, so just imagine some shelves filled with books.

Music is important to me too. There will always be music playing when I'm around. I'm trying to make some myself. It's not that good, but still fun. I am also a collector of vinyl records.

And then there are games, of course, because sitting at a PC just for coding is not enough :D

I updated all the records, 1000+ of them, because I forgot to write the WHERE clause in the UPDATE query, and it updated every fucking single row with the same values.

~God bless backups :)

I wasn't hired to do a dev's job (handled sales) but they asked me to help the non-HQ end with sorting transaction records (a country's worth) for an audit.

Asked HQ if they could send the data they took so I wouldn't need to request the data. We get told sure, you can have it. Waits for a month. Nothing. Apparently, they've forgotten.

Asks for data again. They churn it out in 24 hours. Badly parsed. Apparently they just put a mask of a UI on it and stored all fields as one entire string (with no separators). The horror!

Ended up wasting most of a week simply fixing the parsing by brute force since we had no time.

Good news(?): We ended up training the front desk people to end their fields with semicolons to force the backend into a possibly parsed state.

So my database professor decided that we should design a database with like 4 tables and hundreds of records, and we had to write like 100 queries to produce a specific output from the tables we designed. All in less than a week. This is the first time I'm learning about databases, mind you.

I was asked to update the whole confidential, financial database by exporting it as excel, and using Macros to edit its content. Much akin to adding one extra attribute per row.

The truth is, the table originally had 6.3k records. After updating it and putting the data back into the NoSQL database, I realized I had ended up creating 7k rows of data. Yet it works just perfectly!

*HAILS TO ALMIGHTY FOR THE MIRACLE*

Sometimes I still wonder where those effin 700 rows came from, even after I skipped an Excel while uploading.

Just found a breach somewhere in the university's meal booking system that exposes a good 60K records of students', professors' and staff's orders and payments.

It's just that I am stuck behind this shitty web UI with a 20-rows-per-page table as the only option.

Now how 1337 is that?

You made a very important device used in pharmaceutical labs which stores important data, but for some fucking reason you decided to write the communication protocol so poorly that I want to cry.

You don't fucking have unique IDs for important records, but you still ask me for the "INDEX" (not a unique ID, a fucking INDEX) to delete a particular one. YOU HAVE IT IN THE MEMORY, WHY DON'T YOU USE IT?!

How the fuck did you make such a stupid decision… it's a device that communicates over USB, so theoretically I could unplug it for a moment, remove records, add them, plug it in again, and then delete the wrong one.

I can't fucking check every 2 seconds whether it's still the correct one and the user isn't an asshole, because this dumb device takes about 3 seconds for each request.

WHY?

Why do I, developing a third-party system, have to be responsible for these dumb vulnerabilities you've created?

To fetch 100 users at once, I used the JPA/Hibernate findAll() method. Simple fucking enough. I realized this shit is slow. Takes a while to fetch, and 100 records ain't even a lot!

This shit needs over 265 ms to fetch 100 users

About 75 ms to fetch 20 users

That shit's terrible!

Then I wrote a custom JDBC class with custom SQL queries to fetch the exact same shit.

Now it fetched 100 users in 7 ms, roughly 37x faster

I haven't even optimized indexing or done shit. I just avoided using JPA/Hibernate

Someone explain this to me

Every time I see the N+1 query problem in people's implementations, I feel like crying. Especially when it's dealing with large data sets of something like 1000 records.

I have a GitLab instance behind a reverse proxy at gitlab.mydomain.pizza (yeah, my TLD is .pizza 😎🍕). I have a personal site hosted on GitHub Pages. I have a CNAME record in the GitHub repo pointing to mydomain.pizza. I have 4 A records at my domain registrar pointing to the GitHub Pages server IP addresses. Now both mydomain.pizza and myusername.github.io go to my GitLab instance??¿¿ what the fuuuuuckkkkk?¿?¿

Customer: "There are only 'X' values in COLUMN_D, your - report - import is wrong!"

Me: select count(*) from table_a where column_d not in ('X') -> returns more than a thousand... Yeah, please only scroll through a couple hundred records in your shitty SQL client GUI without making any queries. Fuckhead.

How can a shitty student information system that already costs $20k/yr have an optional shitty SOAP API module, that only allows read access to records, that has an initial setup fee of $5k plus $5k/yr?!

Me: You decided some records in system A should be obsolete, but the records are tied to active user accounts on the website. Now, I have users emailing and asking why their profile’s last name field says “shell record - do not use.”

Stakeholder: Oh…can’t you stop those profiles from loading? Or redirect the users to the right record in system A? In system A, we set up a relationship between the shell record and the active one.

Me: 😵 Um, no and no. If I stop a user’s profile from loading on the website, that’s just going to cause more confusion. And the only way to identify those shell records is to look at the last name field, a text field, for that shell record wording. Also, the website uses an API to query data from system A by user id. Whatever record relationship you established isn’t reflected in the vendor’s API. The website can’t get the right record from system A if it doesn’t have the right user id.

I have a bit of a love/hate relationship with Brian Goetz. He's undoubtedly capable as an engineer, but he's also one of those 90's style neck beard jerks who is incapable of having a conversation with another human being and not being condescending AF.

That out of the way, this proposition and explanation is why I keep paying attention to him (well, maybe not entirely, he owns the direction of java, so yeah).

https://github.com/openjdk/...

It's reasonable, well thought out, and gives credit where it's due. While a bit non-committal, it speaks to what good has happened to java since it moved out from under the original manager (though the original owner was still far superior).

Here's hoping we see more proposals that parallel this direction.

-

As a webdev, having to tell a system admin that he should set up the DNS records before moving the domain name, so that the email keeps working... It does bother me.

-

Why are some people incapable of reading documentation? THE "DUPLICATE RECORDS" IN OUR KAFKA TOPIC ARE BECAUSE IT'S AN EVENT STREAM AND NOT A DATABASE. THIS IS LITERALLY ON THE FIRST PAGE OF THE GUIDE YOU ABSOLUTE MORONS.
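To make the stream-versus-table point concrete, here is a minimal Python sketch with no Kafka client involved: an event stream keeps every event, including repeated keys, while a database-style view keeps only the latest value per key (roughly what you get when you read a topic into a table). The `(key, value)` event shape and the example events are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, str]  # hypothetical (key, value) event shape


def materialize_latest(events: Iterable[Event]) -> Dict[str, str]:
    """Fold an append-only event stream into a latest-value-per-key view."""
    table: Dict[str, str] = {}
    for key, value in events:
        # A later event for the same key overwrites the earlier one;
        # in the stream itself, both events remain.
        table[key] = value
    return table


if __name__ == "__main__":
    # Hypothetical events: order-1 appears twice because its status changed.
    stream: List[Event] = [
        ("order-1", "created"),
        ("order-2", "created"),
        ("order-1", "shipped"),
    ]
    print(len(stream))                 # 3
    print(materialize_latest(stream))  # {'order-1': 'shipped', 'order-2': 'created'}
```

The "duplicates" are the history; the table is a view derived from it.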

-

So I was searching for how to improve the page speed of my site, which is built on CodeIgniter (the CSS and JS are already minified), and I read this:

"CI active records are garbage, use classic queries"

:'( I used active records all over my app

-

don't use natural keys

I accidentally registered with the NHS with the wrong date of birth. NHS records are keyed by date of birth among other things. This will have devastating consequences.

-

Opening (and closing) 400 tabs/hour in Google Chrome means Chrome will now memflood all 32GB of my RAM in less than an hour! ... someone call Guinness World Records

-

So the on-site team sent us an OOM (Out of Memory) issue in our morning.

Everyone analysed it as a code issue, since we were bringing too much data into the runtime process. The analysis was done on the heap dumps. The number of records reported by the user was 1k. At the end of the day, it turned out the number of records was actually 100k+.

Why do people jump to conclusions without thinking about the obvious? :-(

-

Ok Visio. You have a Database Wizard that allows me to associate shapes with database records. Cool! You do not allow me to automate this through VBA? NOT COOL

-

So this is my first experience of shitty code written by a colleague

God, for a REST API she used ?id=<int>

Not only that,

if the route was /cms

she used the GET method on /cms/get/?id= to get a single record, and

/cms/getAll, again with GET, to get all records

Damn

-

I know a doctor's practice which gives you your first name as a default password for your account. Watertight security for all these medical records :)

-

DEAR NON TECHNICAL 'IT' PERSON, JUST CONSUME THE FUCKING DATA!!!!

Continuation of this:

https://devrant.com/rants/3319553/...

So essentially my theory was correct: their concern about the data not being up to date is almost certainly... the spreadsheet is old, not the data.... But I'm up against this wall of a god damn "IT PERSON" who has no technical or logic skills, and for some reason this person doesn't think "man, I'm confused, I should talk to my other IT people"; rather, they just eat my time with vague and weird requests expressed with NO PRECISION WHATSOEVER, arbitrary hold-ups, etc.

Like it's pretty damn obvious your spreadsheet was likely created before you got the latest update, it's not a mystery how this might happen. But god damn I tell them to tell me or go find out when the spreadsheet was generated and nothing happens.

Meanwhile their other IT people 'cleaned the database' and now a bunch of records are missing and they want me to just rando update a list of records. Like wtf is 'clean the database' all about!?!?!?

I'm all "hey how about I send you all records between these dates and now we're sure you've got all the records you need up to date and I'll send you my usual updates a couple times a day using the usual parameters".

But this customer is all "oh man that's a lot of records", what even is that?

It's like maybe 10k fucking records at most. Are you loading this in MS Access or something (I really don't know MS Access limits, just picking an old weird system) and it's choking??!?! Just fucking take the data and stick it in the damn database, how much trouble can it be?!!?!?

Side theory: I kinda wonder if, after they put it in the DB, every time someone wants the data they have some API on their end that just goes "HERE'S ALL THE FUCKING DATA" and their client application chokes, and that's why there's a concern about database size with these guys.

I also wonder if their whole 'it's out of date' shit is actually them not updating records properly and they're sort of grooming the DB size to manage all these bad choices....

Having said all that, it makes a lot more sense to me how we get our customers. A lot of what we do is: the customer sends us their data and we feed it back to them after doing surprisingly basic stuff to it... like, guys, your own tools do th---- wait, never mind....

-

Customer asks us to add an exception report to a file upload process, to show which users failed to be added, and why.

File has 4 fields per record: id, first name, last name, and email.

Customer: "some of these records aren't uploading, and when I look at the new report, it says 'email required' for those users. I don't understand. Does that mean they can't be uploaded without an email?"

-

Anyone else here who needs to deal with GDPR on the software level? I'll go nuts until we're compliant in every aspect.

I've been developing a consent library for the last few days. It even automatically links expressions of explicit consent to current screenshots of the relevant forms (because you need to do that too), and past records are immutable. Well, unless the whole database gets fucked somehow, then it's not.

-

#devrant, you really need to do something about it. The GitHub account is literally named "cheating wife"

https://devrant.com/rants/4336831/...

-

Boss explaining a bug found in my senior developer's (yes, that one) application...

Boss: "This shouldn't happen, this presents a security issue since these records should not be visible at this point."

"Senior" Developer: "You're right. Hmm, what should I do about that?"

Me: *face palm*

-

**Wrongly edited last time, made it confusing. Deleted. Posting again. Apologies.**

So, the scenario was like this: my friends and I were chatting on WhatsApp and talking about Germany, as one of us has recently moved there. After a 30-40 minute chat, I opened Google to search for some company (say 'xyz').... Now, searching for the company and chatting with friends are totally separate events. But when I typed 'xyz', Google suggested "xyz career Germany" and 'xyz Germany glassdoor'.

My question is: is it possible that Google keeps records of what I type anywhere (I have an Android phone) and uses that to decide which suggestions to show me? Or am I thinking too much? 😌

-

Truncated a database of around 14 million records today... Had 2 minutes of silence after it with my buddies @hahaha1234 and @papierbouwer1

-

This is why code reviews are important.

Instead of loading a relevant dataset from the database once, the developer was querying the database for every field, every time the method interacted with it.

What should have been one call for 200k records ended up as 50+ calls for 200k records for every one of 300+ users.

The whole production application server was locked.

Looking for ideas here...

OK, customer runs a manufacturing business. A local web developer solicits them, convinces them to let him move their website onto his system.

He then promptly disappears. No phone calls, no e-mail, no anything for 3 months by the time they called me looking to fix things.

Since we have no access to FTP or anything except the OpenCart admin, we agree to a basic rebuild of the website and a redeployment onto a SiteGround account that they control. Dev process goes smoothly, customer is happy.

Come time to launch and...naturally, the previous dev pointed the nameservers to his account, which will not allow the business to make changes because they aren't the account owner.

"We can work around this," I figure, since all we *really* need to do is change the A records, and we can leave the e-mail set up as it is (hopefully).

Well, that hopefully is kind of true—turns out instead of being set up in GoDaddy (where the domain is registered) it's set up in Gmail—and the customer doesn't know which account is the Google admin account associated with the domain. For all we know it could be the previous developer—again.

I've been able to dig up the A, MX, and TXT records, and I'm seeing references to dreamhost.com (where the nameservers are) in the SPF data in the TXT records. Am I going to have to update these records, or will it be safe to just leave them as they are and simply update the A record as originally planned?

-

Yesterday morning I was working on importing records to a Shopify store. A few thousand records in, their API started returning status code 420 with the message "Unavailable Shop", same for the admin panel.

I called support and they created a ticket, but it's been almost 24 hours and our shop's API and admin panel are still on a smoke break, apparently.

-

The company I worked for had to do deletion runs of customer data (files and database records) every year, mainly for legal reasons. Two months before the next run they found out that the next year would bring multiple times the amount of objects, because a decade ago they had introduced a new solution whose data would be eligible for deletion for the first time.

The existing process was not able to cope with those amounts of objects and froze to death, gobbling up every bit of RAM on the testing system. So my task was to rewrite the existing code and optimize the API calls, and somehow I ended up multithreading the whole process. It worked and is most probably still in production today. 💨

-

Tonight I will delete 10M records of personal data after the inspection by the Data Protection Authority.

-

This kind of BS makes me mad

" - The password must have 6 digits

- It must have at most 2 repeated digits and 3 sequentials"

RIGHT, because 293417 is SO much safer than 999123

Btw, this is a phone company, so with this password you could probably have access to someone's phone number, phone records, address, and much more. WTF

-

I FINALLY GOT A FUCKING MAIL SERVER GOING

DMARC, SPF, MX, WHATEVER

My zonefile is full of crap generated by Stalwart Mail that I don't understand, but I DON'T CARE. I've been trying to do this since high school. I sank WEEKS into it. I can finally die in peace.

Also, I'm now able to self-host shit like GitLab that relies on a mailer for correct operation.

God. FUCKING Damn.

Naturally, now that it's done, I've no idea what took so long, and the only problems I appreciate are the ones I solved in the last few weeks.

-

There are days I like to pull my hair out and create a dynamic 4D map that holds a list of records. 🤯

Yes, there's a valid reason to build this map. Generally I'm against this kind of depth (2 or 3 levels is usually where I draw the line), but I need something searchable against multiple indexes that doesn't entail querying the database over and over again, since it will be used against large dynamic datasets, and the only thing I could come up with was a tree to filter down on as required.
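A rough sketch of the kind of tree described here, assuming records are Python dicts and using four hypothetical index keys (`region`, `year`, `category`, `status`). Each key becomes one level of nested dicts, leaves hold the matching records, and a query passes one value per level, or `None` to match anything at that level. This illustrates the idea; it is not the author's actual structure.

```python
from typing import Any, Dict, List, Optional, Sequence

Record = Dict[str, Any]

# Hypothetical index keys, one per tree level.
KEYS = ("region", "year", "category", "status")


def build_tree(records: Sequence[Record], keys: Sequence[str]) -> Dict[Any, Any]:
    """Nest records under one dict level per key; leaves are lists of records."""
    tree: Dict[Any, Any] = {}
    for rec in records:
        node = tree
        for key in keys[:-1]:
            node = node.setdefault(rec[key], {})
        node.setdefault(rec[keys[-1]], []).append(rec)
    return tree


def search(tree: Dict[Any, Any], criteria: Sequence[Optional[Any]]) -> List[Record]:
    """Filter down the tree. criteria has one entry per key; None matches any value."""

    def walk(node: Any, depth: int) -> List[Record]:
        if depth == len(criteria):
            return list(node)  # reached a leaf list of records
        wanted = criteria[depth]
        if wanted is None:
            children = list(node.values())  # wildcard: descend into every branch
        else:
            children = [node[wanted]] if wanted in node else []
        found: List[Record] = []
        for child in children:
            found.extend(walk(child, depth + 1))
        return found

    return walk(tree, 0)


if __name__ == "__main__":
    records = [
        {"id": 1, "region": "EU", "year": 2023, "category": "A", "status": "open"},
        {"id": 2, "region": "EU", "year": 2024, "category": "B", "status": "closed"},
        {"id": 3, "region": "US", "year": 2024, "category": "A", "status": "open"},
    ]
    tree = build_tree(records, KEYS)
    print([r["id"] for r in search(tree, ("EU", None, None, None))])  # [1, 2]
    print([r["id"] for r in search(tree, (None, 2024, "A", None))])   # [3]
```

Lookups that pin down the upper levels prune most of the tree early, while a wildcard near the top means walking every branch below it. If queries often skip the leading keys, keeping one flat index per field and intersecting the matching ID sets is a common alternative.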

Waiting for DNS records to update...

It's always a difficult choice: do I work on something else, or do I hope the record will be updated in a few minutes...

I always choose wrong, will keep you updated :p

-

So I inherited this buggy application my company developed to process state rosters for health care. The daily process fails often and I haven’t been able to figure out why. Then I notice one little thing... it’s essentially using SQL injection as a method of updating records from a file that we receive from outside... there’s no check on the validity of the statements or on whether they’re safe to execute. Just a for-in loop calling a stored procedure to execute the query text under elevated permissions.
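A minimal sketch of a safer shape for this kind of import. It assumes, hypothetically, that the incoming file can be reduced to a CSV of `member_id,status` rows and that the target is a `roster` table. Each value is validated and then passed to a fixed statement as a bound parameter, instead of executing text taken from the file. It uses Python's built-in `csv` and `sqlite3`; the column names and allowed statuses are made up for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical whitelist of values the roster file is allowed to set.
ALLOWED_STATUSES = {"active", "inactive", "pending"}


def apply_roster_updates(conn: sqlite3.Connection, csv_text: str) -> int:
    """Apply member_id/status rows from an external file using bound parameters.

    Values are validated, then passed as parameters; the file's contents are
    never executed as SQL text. The whole batch runs in one transaction.
    """
    updated = 0
    with conn:  # commits on success, rolls back if any row raises
        for row in csv.DictReader(io.StringIO(csv_text)):
            member_id = int(row["member_id"])  # raises ValueError on non-integer ids
            status = row["status"].strip()
            if status not in ALLOWED_STATUSES:
                raise ValueError(f"unexpected status {status!r} for member {member_id}")
            cur = conn.execute(
                "UPDATE roster SET status = ? WHERE member_id = ?",
                (status, member_id),
            )
            updated += cur.rowcount
    return updated
```

A status like `x'; DROP TABLE roster; --` is rejected by the whitelist, and even without the whitelist it would only be bound as a string value, never executed. Because the batch runs inside `with conn:`, one bad row rolls back the whole file instead of leaving it half applied.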

-

I just joined the team for a product where a user can have multiple roles. While getting familiar with the flow, I noticed that the previous developer, instead of creating a many-to-many relation between users and roles, added 6 records for the same user with the same email, each with a different role id.
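For comparison, a minimal many-to-many sketch using Python's built-in `sqlite3`: one row per user, one row per role, and a `user_roles` join table with one row per (user, role) pair. The table and column names, the helper functions, and the example email and role names are hypothetical.

```python
import sqlite3

SCHEMA = """
CREATE TABLE users (
    id INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE roles (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
-- Join table: one row per (user, role) pair, never a duplicated user.
CREATE TABLE user_roles (
    user_id INTEGER NOT NULL REFERENCES users(id),
    role_id INTEGER NOT NULL REFERENCES roles(id),
    PRIMARY KEY (user_id, role_id)
);
"""


def grant_role(conn: sqlite3.Connection, email: str, role: str) -> None:
    """Link a user to a role, creating either side if missing; repeats are no-ops."""
    conn.execute("INSERT OR IGNORE INTO users (email) VALUES (?)", (email,))
    conn.execute("INSERT OR IGNORE INTO roles (name) VALUES (?)", (role,))
    conn.execute(
        """INSERT OR IGNORE INTO user_roles (user_id, role_id)
           SELECT u.id, r.id FROM users u, roles r
           WHERE u.email = ? AND r.name = ?""",
        (email, role),
    )


def roles_for(conn: sqlite3.Connection, email: str) -> list:
    """Return the sorted role names for one user."""
    cur = conn.execute(
        """SELECT r.name FROM roles r
           JOIN user_roles ur ON ur.role_id = r.id
           JOIN users u ON u.id = ur.user_id
           WHERE u.email = ? ORDER BY r.name""",
        (email,),
    )
    return [name for (name,) in cur.fetchall()]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    for role in ("admin", "editor", "admin"):  # granting twice is a no-op
        grant_role(conn, "dev@example.com", role)
    print(roles_for(conn, "dev@example.com"))  # ['admin', 'editor']
```

The `UNIQUE` constraint on `email` makes the "same user, six rows" situation impossible at the schema level.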

-

"I think.."