Search - "data set"

-

Long rant ahead, but it's worth it.

I used to work with a professor (let's call him Dr. X) and developed a backend + acted as sysadmin for our team's research project. Two semesters ago, they wanted to revamp the front end + do some data visualization, so a girl (let's call her W) joined the team and did all that. We wanted to merge the two sites and host on azure, but due to issues and impending conferences that required our data to be online, we kept postponing. I graduate this semester and haven't worked with the team for a while, so they have a new guy in charge of the azure server (let's call him H), and yesterday my professor sent me (let's call me M), H and W an email telling us to coordinate to have the merge up on azure in 2-3 days, max. The following convo was what I had with H:

M: Hi, if you just give me access to azure I'll be able to set everything up myself, also I'll need a db set up, and just send me the connection string.

H: Hi, we won't have dbs because that is extra costs involved since we don't have dynamic content. Also I can't give you access, instead push everything on git and set up the site on a test azure server and I will take it from there.

M: There is proprietary data on the site...

H: Oh really? I don't know what's on it.

<and yet he knows we have no dynamic data>

M: Fine, I'll load the data some other way, but I have access to all the data anyway, just talk to Dr. X and you'll see you can give me access. Delete my access after if you want.

H: No, just do what I said: git then upload to test azure account.

Fine, he's a complete tool, but I like Dr. X, so I message W and tell her we have to merge, she tells me that it's not that easy to set it up on github as she's using wordpress. She sends me instructions on what to do, and, lo and behold, there's a db in her solution. Ok, I go back to talking to H:

M: W is using a db. Talk to her so we can figure out whether we need a database or not.

H: We can't use a database because we want to decrease costs.

M: Yes I know that, so talk to her because that probably means she has to re-do some stuff, which might take some time. Also there might be dynamic content in what she's doing.

H: This is your project, you talk to her.

<I'm starting to get mad right now>

M: I don't know what they had her do apart from how it interfaces with what I've done.

H: We still can't have databases.

M: Listen, I don't do wordpress, and I'm not gonna mess with it, you talk to her

H: I won't do any development

<So you won't do any dev, but you won't give me access to do it either?>

M: Man, the bottleneck isn't the merging right now, it's the fact that W needs a db

H: I know, so talk to her

M: THE RESTRICTION TO NOT HAVE DATABASES IS NOT MINE, IT'S YOURS, YOU TALK TO HER. I can't evaluate whether it's a reasonable enough reason or not since I don't know the requirements or what they're willing to spend.

H: It's your project.

M: Then give me fucking access to azure and I'll handle it, you know you'll have to set up wordpress again regardless whether we set it up the first time.

H: Man just do your job.

At this point I lost it. WHAT A FUCKING TOOL. He doesn't wanna do dev work, wants me to go through the trouble of setting up on a test subscription first, and doesn't want to give me access to azure. What's more, he did shit all and doesn't want to do anything else. Well fuck you. I googled him, to see if he's anyone important, if he's done anything notable which is why he's being so God damn condescending. MY INTERNSHIP ALONE ECLIPSES HIS ENTIRE CV. Then what the fuck?

There's also this that happened sometime during our talk:

M: You'll have to talk to Dr. Y so he'll change the DNS to point to the azure subscription instead of my server.

H: Yea don't worry, too early for that.

M: DNS propagation takes 24 hours...

H: Yea don't worry.

DNS propagation allows the entire web to know that your website is hosted on a different server so it can change where it's pointing to. We have to do this in 2-3 days. Why do work in parallel? Nah let's wait.

I went over his head and talked to the professor directly, and despite wanting to tell him that he was both drunk and high the day he hired that guy, I kept it professional. He hasn't replied yet, but this fucker's pompous attitude is just too much for me alone, so I had to share.

PS: I named his contact as Annoying Prick 4 minutes into our chat. Gonna rename him cz that seems tooooooo soft a name right now.

-

Four months ago I replaced a laptop's failing HDD and gave it back to the customer. Today I got a 30-minute call because the computer "is not like before the repair, it doesn't work well".

*Thinks* Well, s#*@, before the repair the HDD had more than 2000 damaged clusters, which prevented the OS from even starting.

*Says* "Could you describe the issue to me?"

....

...

[Basically, he was saying that since he started using IE as a browser, he no longer had Google.com set as the home page and had to Bing it.]

...

"When did the 'issue' start, If I may ask?"

>"Two weeks ago. "

Two weeks ago.

Two f*""#ng weeks ago.

Setting aside the nature of the issue, you blame my repair from four months ago for an issue that started two weeks ago?

"The computer was better before"?

THAT F###ING MACHINE WAS LIKE A BURNING HOUSE - I RESCUED DATA FOR 2 DAYS JUST BEFORE THE HDD STARTED TO CRANK AT EVERY SPIN - I REBUILT IT TO GIVE IT BACK TO YOU FULLY WORKING AFTER YOU USED IT AS A FOOTBALL, AND NOW YOU BLAME ME BECAUSE THE BROWSER ISN'T SET UP THE WAY YOU LIKE

No, seriously, it's like I rebuilt a burned house from scratch and now the owner blames me because the hot water tap on the kitchen sink is on the right side instead of the left.

Seriously, wtf.

-

I think I've shown in my past rants and comments that I'm pretty experienced. Looking back though, I was really fucking stupid. Since I haven't posted a rant yet on the weekly topics, I figure I would share this humbling little gem.

Way back in the ancient era known as 2009, I was working my first desk job as a "web designer". Apparently the owner of this company didn't know the difference between "designer", which I'm not, and "developer", which I am, nor the responsibilities of each role.

It was a shitty job paying $12/hour. It was such a nightmare to work at. I guess the silver lining is that this company now no longer exists as it was because of my mistake, but it was definitely a learning experience I hold in high regard even today. Okay, enough filler...

I was told to wipe the Dev server in order to start fresh and set up an entirely new distro of Linux. I was to swap out the drives with whatever was available from the non-production machines, set up the RAID 5 array and route it through the router and firewall, as we needed to bring this Dev server online to allow clients to monitor the work. I had no idea what any of this meant, but I was expected to learn it that day because the next day I would be commencing with the task.

Astonishingly, I managed to set up the server and everything worked great! I got a pat on the back and the boss offered me a 4 day weekend with pay to get some R&R. I decided to take the time to go camping. I let him know I would be out of town and possibly unreachable because of cell service, to which he said no problem.

Tuesday afternoon I walked into work and noticed two of the field techs messing with the Dev server I built. One was holding a drive while the other was holding a clipboard. I was immediately called into the boss's office.

He told me the drives on the production server failed during the weekend, resulting in the loss of the data. He then asked me where I got the drives from for the Dev server upgrade. I told him that they came from one of the inactive systems on the shelf. What he told me next through the deafening screams rendered me speechless.

I had gutted the drives from our backup server that was just set up the week prior. Every Friday at midnight, it would turn on through a remote power switch on a schedule, then the system would boot and proceed to copy over the production server's files into an archive for that night and shut down when it completed. Well, that last Friday night/Saturday morning, the machine kicked on, but guess what didn't happen? The files weren't copied. Not only were they not copied, but the existing files that got backed up previously were gone. Why? Because I wiped those drives when I put them into the Dev server.

I wound up quitting because the conversation was very hostile and I couldn't deal with it. The next week, I was served with a suit for damages to this company. Long story short, the employer was found in the wrong from emails I saved of him giving me the task and not once stating that machine was excluded from the inactive machines I could salvage drives from. The company sued me because they were being sued by a client, whose entire company presence was hosted by us and we lost the data. In total just shy of 1TB of data was lost, all because of my mistake. The company filed for bankruptcy as a result of the lawsuit against them and someone bought the company name and location, putting my boss and its employees out of a job.

If there's one lesson I have learned that I take with the utmost respect to even this day, it's this: Know your infrastructure front to back before you change it, especially when it comes to data.

-

In a user-interface design meeting over a regulatory compliance implementation:

User: “We’ll need to input a city.”

Dev: “Should we validate that city against the state, zip code, and country?”

User: “You are going to make me enter all that data? Ugh…then make it a drop-down. I select the city and the state, zip code auto-fill. I don’t want to make a mistake typing any of that data in.”

Me: “I don’t think a drop-down of every city in the US is feasible.”

Manager: “Why? There cannot be that many. Drop-down is fine. What about the button? We have a few icons to choose from…”

Me: “Uh..yea…there are thousands of cities in the US. Way too much data for anyone to realistically scroll through.”

Dev: “They won’t have to scroll, I’ll filter the list when they start typing.”

Me: “That’s not really the issue and if they are typing the city anyway, just let them type it in.”

User: “What if I mistype Ch1cago? We could inadvertently be out of compliance. The system should never open the company up for federal lawsuits”

Me: “If we’re hiring individuals responsible for legal compliance who can’t spell Chicago, we should be sued by the federal government. We should validate the data the best we can, but it is ultimately your department’s responsibility for data accuracy.”

Manager: “Now now…it’s all our responsibility. What is wrong with a few thousand item drop-down?”

Me: “Um, memory, network bandwidth, database storage, who maintains this list of cities? A lot of time and resources could be saved by simply paying attention.”

Manager: “Memory? Well, memory is cheap. If the workstation needs more memory, we’ll add more”

Dev: “Creating a drop-down is easy and selecting thousands of rows from the database should be fast enough. If the selection is slow, I’ll put it in a thread.”

DBA: “Table won’t be that big and won’t take up much disk space. We’ll need to set up stored procedures, and data import jobs from somewhere to maintain the data. New cities, name changes, etc.”

Manager: “And if the network starts becoming too slow, we’ll have the Networking dept. open up the valves.”

Me: “Am I the only one seeing all the moving parts we’re introducing just to keep someone from misspelling ‘Chicago’? I’ll admit I’m wrong or maybe I’m not looking at the problem correctly. The point of redesigning the compliance system is to make it simpler, not more complex.”

Manager: “I’m missing the point to why we’re still talking about this. Decision has been made. Drop-down of all cities in the US. Moving on to the button’s icon ..”

Me: “Where is the list of cities going to come from?”

<few seconds of silence>

Dev: “Post office I guess.”

Me: “You guess?…OK…Who is going to manage this list of cities? The manager responsible for regulations?”

User: “Thousands of cities? Oh no …no one in our area has time for that. The system should do it”

Me: “OK, the system. That falls on the DBA. Are you going to be responsible for keeping the data accurate? What is going to audit the cities to make sure the names are properly named and associated with the correct state?”

DBA: “Uh..I don’t know…um…I can set up a job to run every night”

Me: “A job to do what? Validate the data against what?”

Manager: “Do you have a point? No one said it would be easy and all of those details can be answered later.”

Me: “Almost done, and this should be easy. How many cities do we currently have to maintain compliance?”

User: “Maybe 4 or 5. Not many. Regulations are mostly on a state level.”

Me: “When was the last time we created a new city compliance?”

User: “Maybe, 8 years ago. It was before I started.”

Me: “So we’re creating all this complexity for data that, realistically, probably won’t ever change?”

User: “Oh crap, you’re right. What the hell was I thinking…Scratch the drop-down idea. I doubt we’ll have a new city regulation anytime soon and how hard is it to type in a city?”

Manager: “OK, are we done wasting everyone’s time on this? No drop-down of cities...next …Let’s get back to the button’s icon …”

Simplicity 1, complexity 0.

-

At my previous job we had the rule to lock your PC when you leave. Makes sense of course.

We were not programmers but application engineers, still, we worked with sensitive data.

One colleague always claimed to be the most intelligent and always demanded the "senior" title, which he obviously did not deserve.

Multiple times a day he forgot to lock his workstation and we had to do it for him.

My last week working there, I'd had it. He forgot it again... So I took a screenshot of his current environment. Closed everything. Set the screenshot as his new background and killed explorer (Windows). Then finally I locked his PC.

When he came back he panicked that his PC froze. He couldn't do shit anymore. Not knowing what to do... 😂

Which makes him a senior of course.

But seriously, first thing I would do is open the task manager and notice that explorer wasn't running... Thus my background with the taskbar isn't real.... My colleagues must be pranking me!

Nope... The "senior" knew little.

-

Biggest scaling challenge?

The imaginary scaling issues from clients.

Client : How do you cope with data that's a billion times bigger than our current data set? Can you handle that? How much longer will it take to access some data then?

I could then give a speech about optimizing internal data structures and access algorithms that work with O(log n) complexity, but that wouldn't help, non-tech people will not understand that.
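To put a rough number on that speech: a minimal sketch, assuming lookups are a binary search over sorted keys (the rant doesn't say which structure is actually used; `lookup_steps` and the starting size are hypothetical). A data set a billion times bigger costs only about 30 more comparisons per lookup.

```python
def lookup_steps(n: int) -> int:
    """Worst-case comparisons for a binary search over n sorted items.

    This equals floor(log2(n)) + 1, which is the bit length of n.
    """
    return max(1, n.bit_length())


current = 1_000_000                 # hypothetical current data set size
future = current * 1_000_000_000    # "a billion times bigger"

# Difference in worst-case comparisons between the two sizes.
extra = lookup_steps(future) - lookup_steps(current)
print(lookup_steps(current), lookup_steps(future), extra)
```

The absolute numbers depend on the real data structure, but the shape of the growth is the point: multiplying n by a billion adds roughly log2(10^9) steps.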

And telling someone that the system will be outdated and hopefully replaced by the time that amount of data is reached would be misinterpreted as "Our system cannot handle it".

So the usual answer is: "No problem, our algorithms are optimized so they can handle any amount of data"

-

I’ve been thinking lately, what is it that devRant devs do?

So after a couple of days of pulling data from devRant's APIs and filtering through the inconsistent skill set data of about 500 users (seriously guys, the comma is your friend), I've found an interesting set of languages being used by everyone.

I've limited this to just languages, as delving into frameworks, libraries and everything else just grows exponentially. I've also only included languages with at least 5 users out of the pool.

Sorry, you brainfuck guys.

-

--- GitHub 24-hour outage post mortem ---

As many of you will remember; Github fell over earlier this month and cracked its head on the counter top on the way down. For more or less a full 24 hours the repo-wrangling behemoth had inconsistent data being presented to users, slow response times and failing requests during common user actions such as reporting issues and questioning your career choice in code reviews.

It's been revealed in a post-mortem of the incident (link at the end of the article) that DB replication was the root cause of the chaos after a failing 100G network link was being replaced during routine maintenance. I don't pretend to be a rockstar-ninja-wizard DBA but after speaking with colleagues who went a shade whiter when the term "replication" was used - It's hard to predict where a design decision will bite back and leave you untangling the web of lies and misinformation reported by the databases for weeks if not months after everything's gone a tad sideways.

When the link was yanked out of the east coast DC undergoing maintenance - Github's "Orchestrator" software did exactly what it was meant to do; It hit the "ohshi" button and failed over to another DC that wasn't reporting any issues. The hitch in the master plan was that when connectivity came back up at the east coast DC, Orchestrator was unable to (un)fail-over back to the east coast DC due to each cluster containing data the other didn't have.

At this point it's reasonable to assume that pants were turning funny colours - Monitoring systems across the board started squealing, firing off messages to engineers demanding they rouse from the land of nod and snap back to reality, that was a bit more "on-fire" than usual. A quick call to Orchestrator's API returned a result set that only contained database servers from the west coast - none of the east coast servers had responded.

Come 11pm UTC (about 10 minutes after the initial pant re-colouring) engineers realised they were well and truly backed into a corner, the site was flipped into "Yellow" status and internal mechanisms for deployments were locked out. 5 minutes later an Incident Co-ordinator was dragged from their lair by the status change and almost immediately flipped the site into "Red" status, a move I can only hope was accompanied by all the lights going red and klaxons sounding.

Even more engineers were roused from their slumber to help with the recovery effort, By this point hair was turning grey in real time - The fail-over DB cluster had been processing user data for nearly 40 minutes, every second that passed made the inevitable untangling process exponentially more difficult. Not long after this Github made the call to pause webhooks and Github Pages builds in an attempt to prevent further data loss, causing disruption to those of us using Github as a way of kicking off our deployment processes (myself included, I had to SSH in and run a git pull myself like some kind of savage).

Glossing over several more "And then things were still broken" sections of the post mortem; Clever engineers with their heads screwed on the right way successfully executed what I can only imagine was a large, complex and risky plan to untangle the mess and restore functionality. Github was picked up off the kitchen floor and promptly placed in a comfy chair with a sweet tea to recover. The enormous backlog of webhooks and Pages builds was caught up with and everything was more or less back to normal.

It goes to show that even the best laid plan rarely survives first contact with the enemy, in this case a failing 100G network link somewhere inside an east coast data center.

Link to the post mortem: https://blog.github.com/2018-10-30-...

-

Devs: We need access to PROD DB in order to provide support you're asking us for.

Mgmt: No, we cannot trust you with PROD DB accesses. That DB contains live data and is too sensitive for you to fuck things up

Mgmt: We'll only grant PROD DB access to DBAs and app support guys

Mgmt: <hire newbies to app support>

App_supp: `update USER set invoice_directory = 54376; commit;`
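For anyone wondering why that one-liner hurts: an UPDATE with no WHERE clause rewrites every row in the table. A minimal sketch of a guard against it, using an in-memory SQLite database as a stand-in (the real PROD DB engine isn't named here; the `users` table, its contents and the `guarded_update` helper are hypothetical): run the statement, check how many rows it touched, and commit only if that matches what you expected.

```python
import sqlite3


def guarded_update(conn, sql, params, expected_rows):
    """Run an UPDATE and commit only if it touched the expected number of rows."""
    cur = conn.execute(sql, params)
    if cur.rowcount != expected_rows:
        conn.rollback()  # undo the statement instead of committing it
        raise RuntimeError(
            f"expected {expected_rows} row(s), statement touched {cur.rowcount}"
        )
    conn.commit()
    return cur.rowcount


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, invoice_directory INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, 100), (2, 200), (3, 300)])
conn.commit()

# Same shape as the statement above: no WHERE clause, so it would hit every row.
try:
    guarded_update(conn, "UPDATE users SET invoice_directory = ?", (54376,), 1)
except RuntimeError as err:
    print("rolled back:", err)

# The presumably intended change, scoped to a single user.
guarded_update(conn, "UPDATE users SET invoice_directory = ? WHERE id = ?", (54376, 2), 1)
rows = conn.execute("SELECT id, invoice_directory FROM users ORDER BY id").fetchall()
print(rows)
```

The same habit works by hand in any SQL console: run the statement inside a transaction, look at the affected-row count, and only then decide between commit and rollback.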

----------------

I have nothing left to say....

-

A few years ago:

In the process of transferring MySQL data to a new disk, I accidentally rm'ed the actual MySQL directory, instead of the symlink that I had previously set up for it.
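A minimal sketch of one way to avoid that kind of slip, assuming the cleanup is scripted rather than typed by hand (the paths and the `remove_symlink_only` helper are hypothetical, and how the original mistake happened isn't stated): refuse to delete anything that isn't actually a symlink, and remove only the link itself.

```python
import os
import tempfile


def remove_symlink_only(path: str) -> None:
    """Delete path only if it is a symlink; never touch the link's target."""
    # A trailing slash would make the path resolve to the target directory
    # instead of the link, so strip it before checking.
    path = path.rstrip(os.sep)
    if not os.path.islink(path):
        raise ValueError(f"{path} is not a symlink, refusing to delete")
    os.unlink(path)  # removes the link itself, not what it points to


with tempfile.TemporaryDirectory() as tmp:
    data_dir = os.path.join(tmp, "mysql-data")   # hypothetical real data directory
    link = os.path.join(tmp, "mysql")            # hypothetical symlink to it
    os.mkdir(data_dir)
    os.symlink(data_dir, link)

    remove_symlink_only(link + os.sep)
    link_gone = not os.path.lexists(link)
    data_kept = os.path.isdir(data_dir)

    # Pointing the helper at the real directory is refused.
    try:
        remove_symlink_only(data_dir)
        refused = False
    except ValueError:
        refused = True

print(link_gone, data_kept, refused)
```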

My guts felt like dropping through to the floor.

In a panic, I asked my colleague: "What did those databases contain?"

C: "Raw data of load tests that were made last week."

Me: "Oh.. does that mean that they aren't needed anymore?"

C: "They already got the results, but might need to refer to the raw data later... why?"

Me: "Uh, I accidentally deleted all the MySQL files... I'm in Big Trouble, aren't I?"

C: "Hmm... with any luck, they might forget that the data even exists. I got your back on this one, just in case."

Luck was indeed on my side, as nobody ever asked about the data again.

-

You know side projects? Well, I took one on. An old customer asked me to come and take over his latest startup's tech. Why not, I thought. The idea is sound. The customer base is ripe and ready to pay.

I start digging and the hardware part is awesome. The guys doing the soldering and embedded work are geniuses. I was impressed AF.

I commit and meet up with CEO. A guy with a vision and sales orientation/contacts. Nice! This shit is gonna sell. Production lines are also set.

Website? WTF is this shit. Owner made it. Gotta give him the credit. Dude doesn't do computers and still managed to online something. He is still better at sales so we agree that he's gonna stick with those and I'll handle the tech.

I bootstrap a new one in my own simplistic style and online it. I like it. The owner likes it. He made me to stick to a tacky logo. I love CSS and bootstrap. You can make shit look good quick.

But I still don't have access to the soul of the product. The DB's millions of rows of data and the source for the app are still behind the guy who has been doing this for over a year.

He has been working on a new version for quite some time. He granted access to the new version's source, but the back end and DB are still out of reach. Now over a month has passed and there's still no new version or access to the data.

The source has no documentation and is written in a flavor of JS framework I'm not familiar with. A weekend of crazy cramming later, I'm up to speed and it's clear I can't get further without the friggin data.

The V2 is a scramble of bleeding-edge alpha tech that isn't ready for production and is clearly just a paid training period for the dev. And clearly it isn't going so well because the release is a month late. I try to contact him, but no reaction. The owner is clueless.

Disheartening. A good idea is going to waste because of some "dev" dropping the ball and stonewalling the backup.

I fucking give him till the end of next week before I make the hardware team a new API to push the data to, refactor the whole thing in proper technologies and cut him off.

Please. If you are a dev and don't have the time to concentrate on the solution, don't take it on and kill off the idea. You guys are the key to making things happen and work. Demand your cut, but also deserve it by delivering, or at least have the balls to say you are not up for it.

-

I'm convinced code addiction is a real problem and can lead to mental illness.

Dev: "Thanks for helping me with the splunk API. Already spent two weeks and was spinning my wheels."

Me: "I sent you the example over a month ago, I guess you could have used it to save time."

Dev: "I didn't understand it. I tried getting help from NetworkAdmin-Dan, SystemAdmin-Jake, they didn't understand what you sent me either."

Me: "I thought it was pretty simple. Pass it a query, get results back. That's it"

Dev: "The results were not in a standard JSON format. I was so confused."

Me: "Yea, it's sort-of JSON. Splunk streams the result as individual JSON records. You only have to deserialize each record into your object. I sent you the code sample."
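A minimal sketch of what "deserialize each record" can look like, assuming the stream carries one JSON object per line (the actual Splunk payload layout and the original code sample aren't shown here; the `Record` fields and `parse_stream` helper are hypothetical):

```python
import json
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Record:
    # Hypothetical field names; the real query's fields are not given.
    host: str
    status: int


def parse_stream(lines: Iterable[str]) -> Iterator[Record]:
    """Deserialize one JSON document per line, skipping blank lines."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        obj = json.loads(line)
        yield Record(host=obj["host"], status=int(obj["status"]))


# Stand-in for a streamed response body: several JSON objects, not one JSON array.
sample = '{"host": "web-1", "status": "200"}\n\n{"host": "web-2", "status": "503"}\n'
records = list(parse_stream(sample.splitlines()))
print(records)
```

Feeding the whole body to a single `json.loads` call would fail, because back-to-back objects are not one valid JSON document. Parsing line by line sidesteps that and keeps memory use flat for large result sets.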

Dev: "Your code didn't work. Dan and Jake were confused too. The data I have to process uses a very different result set. I guess I could have used it if you wrote the class more generically and had unit tests."

<oh frack...he's been going behind my back and telling people smack about my code again>

Me: "My code wouldn't have worked for you, because I'm serializing the objects I need and I do have unit tests, but they are only for the internal logic."

Dev:"I don't know, it confused me. Once I figured out the JSON problem and wrote unit tests, I really started to make progress. I used a tuple for this ... functional parameters for that...added a custom event for ... Took me a few weeks, but it's all covered by unit tests."

Me: "Wow. The way you explained the project was; get data from splunk and populate data in SQLServer. With the code I sent you, sounded like a 15 minute project."

Dev: "Oooh nooo...it's waaay more complicated than that. I have this very complex splunk query, which I don't understand, and then I have to perform all this parsing, update a database...which I have no idea how it works. It's really...really complicated."

Me: "The splunk query returns what..4 fields...and DBA-Joe provided the upsert stored procedure..sounds like a 15 minute project."

Dev: "Maybe for you...we're all not super geniuses that crank out code. I hope to be at your level some day."

<frack you ... condescending a-hole ...you've got the same seniority here as I do>

Me: "No seriously, the code I sent would have got you 90% done. Write your deserializer for those 4 fields, execute the stored procedure, and call it a day. I don't think the effort justifies the outcome. Isn't the data for a report they'll only run every few months?"

Dev: "Yea, but Mgr-Nick wanted unit tests and I have to follow orders. I tried to explain the situation, but you know how he is."

<fracking liar..Nick doesn't know the difference between a unit test and breathalyzer test. I know exactly what you told Nick>

Dev: "Thanks again for your help. Gotta get back to it. I put a due date of April for this project and time's running out."

APRIL?!! Good Lord he's going to drag this intern-level project for another month!

After he left, I dug around and found the splunk query, the upsert stored proc, and yep, in about 15 minutes I was done.

-

Me: So what if this field has no info?

NonDev Manager: There should always be data in that field.

Me: *Shows the field has default set as null*

NonDev Manager: *thinks thinks thinks*, but they are always added...how...if...

Me: I'll default to X behavior.

NonDev Manager: ...Yeah...do that.

I know what should happen but it's so fun to see non-devs scratch their heads with business logic edge cases that seem nonsensical to them. Yeah I'm a bit of a dick.

-

After work I wanted to come home and work on a project. I have a few ideas for a few things I want to do, so I started a Trello board with the ideas to start mapping things out. But there were guys redoing the kitchen tile and it was noisy as fuck. So I packed up and headed to the library.

So I get all set up, and start plugging away. Currently working on a database design for a project that is a form for some user data collection for my dad, for an internal company thing. I am not contracted for this - I just know the details so I am using it as a learning exercise. Anyway...

I'm fucking about in a VM in MySQL and I feel someone behind me. So I turn and it's this girl looking over my shoulder. She asks what I am doing, and it turned into a 2 hour conversation. She is only a few years older than me (21) but she was brilliant. She (unintentionally) made me feel SO stupid with her scope of knowledge and giant brain. I learned quite a bit from talking to her and she offered to help me further, if I liked.

And she was really cute. We exchanged phone numbers...

-

*creates table in database*

*writes query to retrieve data*

*gets error and Googles the problem for 2 hours but no luck*

*in frustration, takes a half hour break*

*checks database for set up issues*

*realizes that the database is the wrong fucking database*

*face palm & quits fucking life*

I make dumb fucking mistakes like this way too much

-

I’m so mad I’m fighting back anger tears. This is a long rant and I apologize but I’m so freaking mad.

So a few weeks ago I was asked by my lead staff person to do a data analysis project for the director of our dept. It was a pretty big project, spanning thousands of users. I was excited because I love this sort of thing and I really don’t have anything else to do. Well I don’t have access to the dataset, so I had to get it from my lead and he said he’d do it when he had a chance. Three days later he hadn’t given it to me yet. I approach him and he follows me to my desk, gives me his login and password to login to the secure freaking database, then has me clone it and put it on my computer.

So, I start working on it. It took me about six hours to clean the database, 2 to set up the parameters and plan of attack, and two or three to visualize the data. I realized about halfway through that my lead wasn’t sure about the parameters of the analysis, and I mentioned to him that the director had asked for more information than what he was having me do. He tells me he will speak with director.

So, our director is never there, so I give my lead about a week to speak with her, in the mean time I finish the project to the specifications that the director gave. I even included notes about information that I would need to make more accurate predictions, to draw conclusions, etc. It was really well documented.

Finally, exasperated, and with the project finished but just sitting on my computer for a week, I approached my director on a Saturday when I was working overtime. She confirmed that I needed to do what she said in the project specs (duh), and also mentioned she needed a bigger data set than what I was working with if we had one. She told me to speak to my lead on Monday about this, but said that my work looked great.

Monday came and my lead wasn’t there so I spoke with my supervisor and she said that what I was using was the entire dataset, and that my work looked great and I could just send it off. So, at this point 2/3 of my bosses have seen the project, reviewed it, told me it was great, and confirmed that I was doing the right thing.

I sent it off to the director to disseminate to the appropriate people. Again, she looked at it and said it was great.

A week later (today) one of the people that the project was sent to approaches me and tells me that I did a great job and thank you so much for blah blah blah. She then asks me if the dataset I used included blahblah, and I said no, that I used what was given to me but that I’d be happy to go in and fix it if given the necessary data.

She tells me, “yeah the director was under the impression that these numbers were all about blahblah, so I think there was some kind of misunderstanding.” And then implied that I would not be the one fixing the mistake.

I’m being taken off of the project for two reasons:

1. It took too long to get the project out in the first place.

2. It didn’t even answer the questions that they needed answered.

I fucking told them in the notes and ALL THROUGH THE VISUALIZATIONS that I needed additional data to compare these things. I’m so fucking mad. I’m so mad.

-

Q: Your data migration service from old site to new site costs money.

A: Yes, I have to copy data from old database and import to the new one.

Q: Can I just provide you content separately so you don’t need to do that?

A: Yes, but I will have to charge you for copying and pasting your 100 pages of content manually.

Q: Can it come with part of the web development service and not as an additional service?

A: Yes, but the price for web development service will have to be increased to combine the two. If you don’t want to pay for it, I can just set up a few sample pages with the layout and you can handle your own content entry. Does that work for you?

Q: Well, but then I will have to spend extra time to work on it.

A: Yes you will. (At this point I think she starts to understand the concept of Time = Money...)3 -

Every step of this project has added another six hurdles. I thought it would be easy, and estimated it at two days to give myself a day off. But instead it's ridiculous. I'm also feeling burned out, depressed (work stress, etc.), and exhausted since I'm taking care of a 3 week old. It has not been fun. :<

I've been trying to get the Google Sheets API working (in Ruby). It's for a shared sales/tracking spreadsheet between two companies.

The documentation for it is almost entirely for Python and Java. The Ruby "quickstart" sample code works, but only for 3-legged auth (meaning user auth), and I need 2-legged auth (server auth with non-expiring credentials). Took a while to figure out that variant even existed.

After a bit of digging, I discovered I needed to create a service account. This isn't the most straightforward thing, and setting it up honestly reminds me of setting up AWS, just with less risk of suddenly and surprisingly becoming a broke hobo by selecting confusing option #27 instead of #88.

I set up a new google project, tied it to my company's account (I think?), and then set up a service account for it, with probably the right permissions.

After downloading its creds, figuring out how to actually use them took another few hours. Did I mention there's no Ruby documentation for this? There's plenty of Python and Java example code, but since they use very different implementations, it's almost pointless to read them. At best they give me a vague idea of what my next step might be.

I ended up reading through the code of google's auth gem instead because I couldn't find anything useful online. Maybe it's actually there and the past several days have been one of those weeks where nothing ever works? idk :/

But anyway. I read through their code, and while it's actually not awful, it has some odd organization and a few very peculiar param names. Figuring out what data to pass, and how said data gets used requires some file-hopping. e.g. `json_data_io` wants a file handle, not the data itself. This is going to cause me headaches later since the data will be in the database, not the filesystem. I guess I can write a monkeypatch? or fork their gem? :/
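One possible way around the file-handle requirement, without a monkeypatch or a fork, is to wrap the stored JSON string in an in-memory IO object. Ruby's `StringIO` does this; the sketch below shows the same idea in Python with `io.StringIO`. `load_credentials` is a hypothetical stand-in for any loader that insists on a readable handle, and the JSON content is made up.

```python
import io
import json


def load_credentials(json_key_io):
    """Hypothetical loader that, like a `json_data_io`-style param, wants a readable handle."""
    return json.load(json_key_io)


# Pretend this string came out of a database column rather than a file.
stored_json = '{"client_email": "svc@example.invalid", "private_key_id": "abc123"}'

# Wrap the string so it behaves like an open file.
creds = load_credentials(io.StringIO(stored_json))
print(creds["client_email"])
```

Whether the real gem accepts such an object depends on which methods it calls on the handle, so treat this as something to try rather than a confirmed fix.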

But I digress. I finally managed to set everything up, fix the bugs with my code, and I'm ready to see what `service.create_spreadsheet()` returns. (now that it has positively valid and correctly-implemented authentication! Finally! Woo!)

I open the console... set up the auth... and give it a try.

... six seconds pass ...

... another two seconds pass ...

... annnd I get a lovely "unauthorized" response.

asjdlkagjdsk.

> Pic related.

rant it was not simple. but i'm already flustered damnit it's probably the permissions documentation what documentation "it'll be simple" he said google sheets google "totally simple!" she agreed it's been days. days!19 -

Me: I built you this beautiful site. It's super modular, it's really straightforward.

Client: urm we aren't tech people if you could..... Set up all the pages for us using the modules so we can just input the data

Me: 😡 yes I could do that, or you could take 5 minutes to learn this system. It's simple 😡 see that title there, "left image right title module". I've done the sample for the templates. So if you need to you can duplicate it! There's even a duplicate button!

Client: can you do it I don't want to waste time learning it right now since we are on a tight deadline

Me in head: fuck off you supreme bitch, you tried to get my mate's dad fired! Now I've done you this huge favour getting you out of the shit 😡 and you won't take 5 minutes to just look at the admin section. Your old site was Wix ffs.

My next move (not yet done): here is a Word document that outlines what you need to do 😐

If after this she asks again, I'm asking to work with someone else or quitting the project.2 -

Writing more infrastructure than product.

Look, my application requests and transforms data from a single external API endpoint, it's just one GET request...

But I made an intelligent response caching middleware to prevent downtime when the parent API goes down, I made mocks and tests for everything, the documentation is directly generated from the code and automatically hosted for every git branch using hooks, responses are translated into JSONschema notation which automatically generate integration tests on commit, and the transformations are set up as a modular collection of composable higher order lenses!

Boss: Please use less amphetamine.5 -

Dev: We need a better name than “Data” for this class. It’s used for displaying a set of tiles with certain coordinates so maybe TileMap would be a bit more declarative?

Manager: No I don’t like that. Data is perfectly fine, this class is for managing data so it’s perfectly declarative you just need to get better at reading code. If you have to change it then DataObject or DataObjectClass might be a bit more specific.

Dev: …14 -

My first real "rant", okay...

So I decided today to hop back on the horse and open Android Studio for the first time in a couple months.

I decided I was going to make a random color generator. One of my favorite projects. Very excited.

Got all the layouts set up, and got a new color every tap with RGB and hex codes, too. Took more time to open Android Studio, really.

Excited with my speedy progress, I think "This'll be done in no time!". Text a friend and tell them what I'm up to. She's very nice, wants the app. "As soon as I'm done". I expected that to be within the hour.

I want to be able to save the colors for future reference. Got the longClickListener set up just fine. Cute little toast pops up every time. Now I just need to save the color to a file.

Easy, just a semicolon-delimited text file in my app's cache folder.
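For reference, the file format itself really is small. The app would be Java or Kotlin on Android, so this is only a sketch of the semicolon-delimited layout in Python; the `R;G;B;#RRGGBB` column order, the file name, and the temp directory standing in for the cache folder are all assumptions.

```python
import csv
import tempfile
from pathlib import Path


def save_color(path, r, g, b):
    """Append one color as `R;G;B;#RRGGBB` to a semicolon-delimited file."""
    hex_code = f"#{r:02X}{g:02X}{b:02X}"
    with open(path, "a", newline="", encoding="utf-8") as handle:
        csv.writer(handle, delimiter=";").writerow([r, g, b, hex_code])
    return hex_code


def load_colors(path):
    """Read every saved color back as a list of string fields."""
    with open(path, newline="", encoding="utf-8") as handle:
        return list(csv.reader(handle, delimiter=";"))


# A temp directory stands in for the app's cache folder.
cache_file = Path(tempfile.mkdtemp()) / "saved_colors.txt"
save_color(cache_file, 255, 128, 0)
save_color(cache_file, 0, 0, 0)
print(load_colors(cache_file))
```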

Three hours later, and my file still won't write any data. Friend has gone to sleep. Homework has gone undone. My hatred for Android is reborn.

Stay tuned, the adventure continues tomorrow...11 -

I think I will ship a free open-source messenger with end-to-end encryption soon.

With zero maintenance cost, it’ll be awesome to watch it grow and become popular or remain unknown and become an everlasting portfolio project.

So I created a Heroku account with a free NodeJS dyno ($0/mo), set up UptimeRobot for it to not fall asleep ($0/mo), plugged in MongoDB (around 700mb for free) and Redis for api rate limiting (30 mb of ram for free, enough if I’m going to purge the whole database every three seconds, and there’ll be only api hit counters), set up GitHub auto deployment.

So, backend will be in nodejs, cryptico will manage private/public keys stuff, express will be responsible for api, I also decided to plug in Helmet and Sqreen, just to be sure.

Actual data will be stored in mongo, rate limit counters – in redis.

Frontend will probably be implemented in React, hosted for free at GitHub pages. I also can attach a custom domain there, let’s see if I can attach it to Freenom garbage.

So, here we go, starting up modern nosql-nodejs-react application completely for free.

If it blasts off, I’m moving to Clojure + Cassandra for backend.

And the last thing. It’ll be end-to-end encrypted. That means if it blasts off, it will probably attract the evil russian government. They’ll want me to give them the keys. It’ll be impossible, you know. But they don’t accept that answer. So if I accidentally stop posting there, please tell my girl that I love her and I’m probably dead or captured28 -

Dear Managers,

This is not efficient:

Boss: * calls *

Me: * answers *

B: there's a bug in feature ABC! The form doesn't work!

M: ABC uses a lot of forms. Is it Form A, B, or C?

B: Umm... let's just go on a Zoom call!

* 5 minutes trying to set up a Zoom call *

* 3 more minutes trying to find the form *

B: This form in here.

M: It works fine for me. What data are you inputting?

B: * takes 5 minutes trying to reproduce the bug * (in the meantime, the call is basically an awkward silence)

You spent 5 minutes wasting both of our time trying to set up a Zoom meeting, and another 8 wasting MY time trying to find the bug.

This is efficient:

B: There is a bug in form C. If I try to upload this data, it malfunctions.

M: Thank you. I'll look into it.

You saved me 8 minutes of staring at a screen and saved us both another 5 minutes of setting up a meeting.6 -

I tried to convince my boss that using 3d rendering to display information on a webpage is an unnecessary luxury.

The web browser would hang if the user is using an average pc and there is too much data to render.

This product is aimed at the average Joe, but he argues that computers in foreign countries are high end devices ONLY.

Such bullshit.

I asked what if someone with low spec laptop tries to view the webpage.

He said, we will set a min spec requirements for using the website.

Are you fucking kidding me?! RAM and Graphics requirements for a webpage?!

My instinct says that the thing I'm working on would probably end up as waste of time.

But I'd probably learn cool tricks of threejs.5 -

!rant

We just did a massive update to our prod db environment that would impact damn near all systems on our servers....on a friday.

Luckily for us, our DBA is a badass rockstar mfking hero that was planning this shit for a little over a year with the assistance of yours truly as backup following the man's lead...and even then I didn't do SHIT

My boy did great, tested everything and the switch was effortless, fast (considering that it went on during working hours) and painless.

I salute my mfking dude, if i make my own company I am stealing this mfker. Homie speaks in SQL, homie was prolly there when SQL was invented and was already speaking in sql before shit was even set in spec, homie can take a glance at a huge db and already cast his opinion before looking at the design and architecture, homie was Data Science before data science was a thing.

Homie is my man crush on the number one spot putting mfking henry cavill on second place.

Homie wakes up and pisses greatness.

Homie is the man. Hope yall have the same mfking homie as I do5 -

EXCEL YOU FUCKING PIECE OF SHIT! don't get me wrong, it's useful and it works, usually... Buckle up, you're in for a ride. SO HERE WE FUCKING GO: TRANSLATED FORMULA NAMES? SUCKS BUT MANAGEABLE. WHAT'S REALLY FUCKED UP IS THE GERMAN VERSION!

DID YOU HEAR ABOUT .csv? It stands for MOTHERFUCKING COMMA SEPARATED VALUES! GUESS WHAT SOME GENIUS AT MICROSOFT FIGURED? Hey guys let's use a FUCKING SEMICOLON INSTEAD OF A COMMA IN THE GERMAN VERSION! LET'S JUST FUCK EVERYONE EXPORTING ANY DATA FROM ANY WEBSITE!
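If you control the code that reads these files, one defensive option is to guess the delimiter instead of trusting the name "CSV". The sketch below is a deliberately naive heuristic in Python (count the candidates in the header line and take the most frequent one); the function names and the sample data are made up for illustration.

```python
import csv
import io


def guess_delimiter(header_line, candidates=(",", ";", "\t")):
    """Pick whichever candidate occurs most often in the header line."""
    return max(candidates, key=header_line.count)


def read_rows(text):
    """Parse delimited text after guessing the delimiter from its first line."""
    header = text.splitlines()[0]
    delimiter = guess_delimiter(header)
    return list(csv.reader(io.StringIO(text), delimiter=delimiter))


# Semicolon-separated export with decimal commas, next to a comma-separated one.
german_export = "name;city;amount\nMüller;Zürich;12,50\n"
english_export = "name,city,amount\nSmith,Leeds,12.50\n"

print(read_rows(german_export))
print(read_rows(english_export))
```

This breaks down when header fields themselves contain the delimiter characters, so it is a starting point, not a parser to rely on blindly.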

The workaround is to go to your computer settings, YOU CAN'T FUCKING ADJUST THIS IN EXCEL!, change the language of the OS to English, open the file and change it back to German. I mean, come on guys, what is this shit?

AND DON'T GET ME STARTED ON ENCODING! äöü and that stuff usually works, but in Switzerland we also use French stuff, that then usually breaks the encoding for Excel if the OS language is set to German (both on Windows and Mac, at least they are consistent...)

To whoever approved, implemented or tested it: FUCK YOU, YOU STUPID SHITFUCK, with love: me7 -

So my in-laws got a new computer 😑

Yup you know where this is going. Ok so after I transferred all of their data set them all up etc.

They wanted to use "word" and could I set it up for free for them. I said no Microsoft office is not free you lost your license and disk and your old computer is trashed so the better choice would be Google services . So I explained the value of using Google drive, docs,sheets etc.. today and told them how much better it is everything would be on their Google drive so if I got hit by a bus they could get a new computer again and still have access to their data etc... So they said great and so I did.

Two weeks later... Can you set up word for us on our computer. Me annoyed at this point " sure no problem"

I made a shortcut on their desktop to Google docs. Them: oh boy this is great see John all you have to do is click on google docs to go to word! Thanks so much!

🤫🤓5 -

Not only do I write software, but now I help the managers view and understand our analytics, just like in kindergarten.

Now I'm forced to help them essentially fake data so investors are satisfied 🤡🔫

"Delete metrics X, Y, and Z for now, we don't want anyone to see them!"

"Change the label of this metric to 'unique user' views! (not total!)"

"Set all charts to cumulative so it looks like they are all up and to the right!"

Sigh.

This isn't what I signed up for.17 -

Just thought I'd share my current project: Taking an old ISA sound card I got off eBay and wiring it up to an Arduino to control its OPL3 synth from a MIDI keyboard. I have it mostly working now.

No intention to play audio samples, so I've not bothered with any of the DMA stuff - just MIDI (MPU-401 UART) and OPL3.

It has involved learning the pinout of the ISA bus connectors, figuring out which ones are actually used for this card, ignoring the standards a little (hello, amplifier chip that is wired up to the +12V line but which still happily works at +5V...)

Most of the wires going to it are for each bit of the 16-bit address and 8-bit data. Using a couple of shift registers for the address, and a universal shift register for the data. Wrote some fairly primitive ISA bus read/write code, but it was really slow. Eventually found out about SPI and re-wrote the code to use that and it became very fast. Had trouble with some timings, fixed those.

The card is an ISA Plug and Play card, meaning before I could use it I had to tell it what resources to use. Linux driver code and some reverse-engineering of the official Windows/DOS drivers got me past this stage.

Wired up IRQ 5 to an Arduino interrupt to deal with incoming MIDI data, with a routine that buffers it. Ran into trouble with the interrupt happening during I/O and needing to do some I/O inside the handler and had to set a flag to decide whether to disable/re-enable interrupts during I/O.

It looks like total chaos, but the various wires going across the breadboard are mainly to make it easier to deal with the 16-bit address and 8-bit data lines. The LEDs were initially used to check what addresses/data were being sent, but now only one of them is connected and indicates when the interrupt handler is executing.

There's still a lot to do after that though - MIDI and OPL3 are two completely different things so I had to write some code to manage the different "channels" of the OPL3 chip. I have it playing multiple notes at the same time but need to make it able to control the various settings over MIDI. Eventually I might add some physical controls to it and get a PCB made.

The fun part is, I only vaguely know what I'm doing with the electronics side of this. I didn't know what a "shift register" was before this project, nor anything about the workings of the ISA bus. I knew a bit about MIDI (both the protocol and generally how the MPU-401 UART works) along with the operation of a sound card from a driver/software perspective, but everything else is pretty new to me.

As a useful little extra, I made some "fake" components that I can build the software against on a PC, to run some tests before uploading it to the Arduino (mostly just prints out the addresses it is going to try and write to).46 -

Yep. So the dev team's boss says it's fine to run a production environment on a single Windows instance with the db on that same instance, which they already totally lost once from a reboot after an auto update before I came along tasked with fixing the cluster fuck they created.

This from a man who somehow runs a dev team while using gmail via the web because he can't use an email client, uses email to track tasks but can't because they get lost amongst his 3000+ unread emails, has a screen dirtier than a hookers vag on half priced Tuesday, and got a new laptop but had to get his daughter to set it up and transfer his data because he couldn't.

But ok... you have a degree, You must know what you're doing.

It's ok though, I'll keep covering your incompetent ass while you keep raping the company because no one listens.

People's ignorance and arrogance astound me.4 -

Client called the office in an angry voice complaining about how he could not see the data in the latest generated Excel sheets. Calmly trying to figure out what could be the problem. Asked him to send over the file so I could check it. Works perfectly on my end. Ask him to open the file again on his computer and tell me what he is seeing. Error message, empty Excel file. He starts describing to me a directory full of files and folders. 15 minutes later I finally figured out what it is.

The guy had set WinZip as the default program for Excel files. How do these people work behind a PC every day? Are they like "I hope this magic box with screen and buttons does everything right today"?4 -

FUCK THE RECRUITERS WHO ASK US TO MAKE AN ENTIRE PROJECT AS A CODE TEST.

Oh you need to scrape this website and then store the data in some DB. Apply sentiment analysis on the data set. On the UI, the user should be able to search the fields that were scraped from the website. Upon clicking it should consume a REST API which you have to create as well. Oh and also deploy it somewhere... Oh I almost forgot, make the UI look good. If you could submit it in one week, we will move towards further rounds if we find you fit enough.

YOU KNOW WHAT, FUCK YOU!

I can apply to 10 other companies in one week and get hired with half the effort of making this whole project for you, which you are going to use on your website YOU SADIST MOTHERFUCK

I CURSE YOUR COMPANY WITH THE ETERNITY OF JS CALLBACK HELL 😡😤😣9 -

When migrating from MySQL server to MariaDB and having a query start returning a completely different result set than what was expected, purely because MariaDB corrected a bug with sub selects being sorted.

It took several days to identify all that was needed on that sub select was “limit 1” to get that thing to return the correct data, felt like an idiot for only having to do 7 character commit 😆4 -

I'm moving some old data into a new database.

It contains some dates that *should* be in ISO 8601...

This is some of the trash that I found:

01/01/70

2010-11-05T08:06:48T08:06:03.7

2007-09-13T

Moreover, it has a column which *should* contain numbers, but it has been defined as varchar, so it also contains some wonderful 'NaN' values.
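A basic validation pass before the import could look something like this Python sketch. The set of accepted timestamp layouts and the decision to map bad values to `None` are assumptions on my part, not something taken from the actual migration.

```python
import math
from datetime import datetime

# Layouts treated as valid here; anything else is rejected (an assumption).
ACCEPTED_FORMATS = ("%Y-%m-%dT%H:%M:%S.%f", "%Y-%m-%dT%H:%M:%S", "%Y-%m-%d")


def parse_timestamp(raw):
    """Return a datetime if `raw` matches an accepted layout, else None."""
    for fmt in ACCEPTED_FORMATS:
        try:
            return datetime.strptime(raw, fmt)
        except ValueError:
            continue
    return None


def parse_number(raw):
    """Return a finite float, or None for junk such as 'NaN'."""
    try:
        value = float(raw)
    except (TypeError, ValueError):
        return None
    return value if math.isfinite(value) else None


for sample in ["01/01/70", "2010-11-05T08:06:48T08:06:03.7", "2007-09-13T", "2010-11-05T08:06:03.7"]:
    print(repr(sample), "->", parse_timestamp(sample))

print(parse_number("NaN"), parse_number("42.5"))
```

Rows that come back as `None` can then be logged and reviewed by hand instead of being silently copied into the new database.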

I really would like to beat the person who set up all this stuff without some basic validation policies.9 -

I've optimised so many things in my time I can't remember most of them.

Most recently, something had to be the equivalent of `"literal" LIKE column` with a million rows to compare. It would take around a second on average to look up each literal, for a service that needs to be high load and low latency. This isn't an easy case to optimise, many people would consider it impossible.

It took me a couple of hours to reverse engineer the data and write a few hundred lines that would look it up in 1ms on average, with the worst possible case being very rare and not too distant from this.

In another case there was a lookup of arbitrary time spans that most people would not bother to cache because the input parameters are too short lived and variable to make a difference. I replaced the 50000+ line application acting as a middle man between the application and database with 500 lines of code that did the look up faster and was able to implement a reasonable caching strategy. This dropped resource consumption by at least a factor of ten. Misses were cheaper and it was able to cache most cases. It also involved modifying the client library in C to stop it unnecessarily wrapping primitives in objects for the high level language, which was causing it to consume excessive amounts of memory when processing huge data streams.

Another system would download a huge data set for every point of sale constantly, then parse and apply it. It had to reflect changes quickly but would download the whole dataset each time, containing hundreds of thousands of rows. I whipped up a system so that a single server (barring redundancy) would download it in a loop, parse it using C which was much faster than the traditional interpreted language, then use a custom data differential format, TCP data streaming protocol, binary serialisation and LZMA compression to pipe it down to points of sale. This protocol also used versioning for catchup and differential combination for additional reduction in size. It went from being 30 seconds to a few minutes behind to being able to keep up to within a second of changes. It was also using so much bandwidth that it would reach the limit on ADSL connections then get throttled. I looked at the traffic stats after and it dropped from dozens of terabytes a month to around a gigabyte or so a month for several hundred machines. From the drop in the graphs you'd think all the machines had been turned off. It could now happily run over GPRS or 56K.

I was working on a project with a lot of data and noticed these huge tables and horrible queries. The tables were all the results of queries. Someone wrote terrible SQL then to optimise it ran it in the background with all possible variable values then store the results of joins and aggregates into new tables. On top of those tables they wrote more SQL. I wrote some new queries and query generation that wiped out thousands of lines of code immediately and operated on the original tables taking things down from 30GB and rapidly climbing to a couple GB.

Another time a piece of mathematics had to generate all possible permutations and the existing solution was factorial. I worked out how to optimise it to run in n*n, which believe it or not made a world of difference. Went from hardly handling anything to handling anything thrown at it. It was nice trying to get people to "freeze the system now".

I build my own frontend systems (admittedly rushed) that do what angular/react/vue aim for but with higher (maximum) performance including an in memory data base to back the UI that had layered event driven indexes and could handle referential integrity (overlay on the database only revealing items with valid integrity) or reordering and reposition events very rapidly using a custom AVL tree. You could layer indexes over it (data inheritance) that could be partial and dynamic.

So many times have I optimised things on automatic just cleaning up code normally. Hundreds, thousands of optimisations. It's what makes my clock tick.4 -

Yesterday I got to the point where all changes that customer support and backend asked for were set, and i could start rebuilding the old models from their very base with the changes and the new, disjoint data the company expansion brought.

So making the start of our main script and the first commit, only having that, had high importance... At least to me.3 -

Working on a project with 2 other students. One of them makes a C# "super class" with 50 fields, and manually creates getters and setters for each and every one. Then he proceeds to write a constructor that accepts 50 parameters, because why not.

I comment on the git commit, telling him that he can just write " get; set; " in C# and that he should model the problem in smaller, more manageable classes ( this class had 270 lines and did everything from displaying data to calculating stuff). Tried to explain to him that OOP works kind of differently from how he did it.

....

His answer: "Yeah, I don't really care. If it works once, it's okay for me".

This after the most beautiful code review I have ever done...

Fml8 -

Currently, a classmate and I are working on our technical thesis.

It is all about industry 4.0, IIoT, big data and stuff.

This week, we presented our interim results to our supervisor. He is very pleased with our work and made the following suggestion:

He thinks it would be awesome to publish our work on our own GitHub repository and make it open source because he is convinced that this thesis is able to kind of "set a new standard" in some specific fields of using big data analysis in production processes.

I guess I'm kind of proud :)4 -

I am interviewing people for a job position with python knowledge.

My first question is how to reverse a string and the second one is what's the difference between a set and a list.

So far no one knows.

To be fair, I am only asking basic questions about what a decorator, generator, or lambda is. Also some basic data structure questions.

Is it too hard?
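For anyone wondering how basic these really are, here is a short Python sketch covering each question. The function names are just illustrative, not what I expect candidates to write.

```python
import functools


def reverse_string(text):
    """Slicing with a step of -1 walks the string backwards."""
    return text[::-1]


# A list keeps order and duplicates; a set keeps only unique, unordered items.
as_list = [3, 1, 3, 2]
as_set = set(as_list)


def shout(func):
    """Decorator: wraps a function and upper-cases its return value."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        return func(*args, **kwargs).upper()
    return wrapper


@shout
def greet(name):
    return f"hello {name}"


def countdown(n):
    """Generator: yields values lazily, one per iteration."""
    while n > 0:
        yield n
        n -= 1


# Lambda: an anonymous single-expression function.
square = lambda x: x * x

print(reverse_string("devRant"), len(as_list), len(as_set))
print(greet("bob"), list(countdown(3)), square(4))
```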

I lost my faith in humanity.15 -

"We use WSDL and SOAP to provide data APIs"

- Old-fashioned but ok, gimme the service def file

(The WSDL services definition file describes like 20 services)

- Cool, I see several services. I need those X data entities.

"Those will all be available through the Data service endpoint"

- What you mean "all entities in the same endpoint"? It is a WSDL, the whole point is having self-documented APIs for each entity format!

"No, you have a parameter to set the name of the data entity you want, and each entity will have its own format when the service returns it"

- WTF you need the WSDL for if you will have a single service for everything?!?

"It is the way we have always done things"

Certain companies are some outdated-ass backwater tech wannabes.

Usually those that have dominated the market of an entire country since the fucking Perestroika.

The moment I turn on the data pipeline, those fuckers are gonna be overloaded into oblivion. I brought popcorn.6 -

Poorly written docs.

I've been fighting with the Epson T88VI printer webconfig api for five hours now.

The official TM-T88VI WebConfig API User's Manual tells me how to configure their printer via the API... but it does so without complete examples. Most of it is there, but the actual format of the API call is missing.

It's basically: call `API_URL` with GET to get the printer's config data (works). Call it with PUT to set the data! ... except no matter what I try, I get either a 401:Unauthorized (despite correct credentials), 403:Forbidden (again...), or an "Invalid Parameter" response.

I have no idea how to do this.

I've tried literally every combination of params, nesting, json formatting, etc. I can think of. Nothing bloody works!

All it would have taken to save me so many hours of trouble is a single complete example. Ten minutes' effort on their part, tops.

asjdf;ahgwjklfjasdg;kh.5 -

Sad story:

User : Hey , this interface seems quite nice

Me : Yeah, well I’m still working on it ; I still haven’t managed to work around the data limit of the views, so for now I’ve set the time limit to a couple of days

Few moments later

User : Why does it give me that it can’t connect to the data?

Me : what did you do ?

User : I tried viewing the last year of entries and compare it with this one

Few comas later

100476 errors generated

False cert authorization

Port closed

Server down

DDOS on its way1 -

AT&T: We've fixed your voicemail setup issue, use <APP> to set it up

Me: About fucking time

*uses app*

AT&T: Voicemail setup failed. Also, we've eaten 300MB of your 500MB of mobile data.15 -

<just got out of this meeting>

Mgr: “Can we log the messages coming from the services?”

Me: “Absolutely, but it could be a lot of network traffic and create a lot of noise. I’m not sure if our current logging infrastructure is the right fit for this.”

Senior Dev: “We could use Log4Net. That will take care of the logging.”

Mgr: “Log4Net?…Yea…I’ve heard of it…Great, make it happen.”

Me: “Um…Log4Net is just the client library, I’m talking about the back-end, where the data is logged. For this issue, we want to make sure the data we’re logging is as concise as possible. We don’t want to cause a bottleneck inside the service logging informational messages.”

Mgr: “Oh, no, absolutely not, but I don’t know the right answer, which is why I’ll let you two figure it out.”

Senior Dev: “Log4Net will take care of any threading issues we have with logging. It’ll work.”

Me: “Um..I’m sure…but we need to figure out what we need to log before we decide how we’re logging it.”

Senior Dev: “Yea, but if we log to SQL database, it will scale just fine.”

Mgr: “A SQL database? For logging? That seems excessive.”

Senior Dev: “No, not really. Log4Net takes care of all the details.”

Me: “That’s not going to happen. We’re not going to set up an entire sql database infrastructure to log data.”

Senior Dev: “Yea…probably right. We could use ElasticSearch or even Redis. Those are lightweight.”

Mgr: “Oh..yea…I’ve heard good things about Redis.”

Senior Dev: “Yea, and it runs on Linux and Linux is free.”

Mgr: “I like free, but I’m late for another meeting…you guys figure it out and let me know.”

<mgr leaves>

Me: “So..Linux…um…know anything about administrating Redis on Linux?”

Senior Dev: ”Oh no…not a clue.”

It was all I could do to keep from doing physical harm to another human being.

I really hate people playing buzzword bingo with projects I’m responsible for.

Only good piece is he’s not changing any of the code.3 -

I managed to accidentally clear everybody's usernames and email addresses from an SQL table once. I only recovered it because a few seconds before, I'd opened a tab with all the user data displayed as an HTML table. I quickly copied it into Excel, then a text editor (saving multiple times!), then managed to write a set of queries to paste it all back in place. If I'd refreshed the tab it would have all gone!2

-

Context: we analyzed data from the past 10 years.

So the fuckface who calls himself head of research tried to put blame on me again, what a surprise. He asked for a tool that basically adds a lot of numbers together with some tweakable stuff, doesn't really matter. Now of course all the data was available already so I just grabbed it off of our API, and did the math thing. Then this turdnugget spends 4 literal weeks tryna feed a local CSV file into the program, because he 'wanted to change some values'. One, this isn't what we agreed on, he wanted the data from the original. When I told him this, he denied it so I had to dig out a year-old email. Two, he never explicitly specified anything so I didn't use a local file because why the fuck would I do that. Three, I clearly told him that it pulls data from the server. Four, what the fuck does he wanna change past values for, getting ready for time travel? Five, he ranted for like 3 pages, when a change can be done by currentVal - changedVal + newVal, even a fucking 10 year old could figure that out. Also, when I allowed changes in a temporary API, he bitched about how the additional info, which was calculated from yet another original dataset, doesn't get updated when he fucking just randomly changes values in the end set. Pinnacle of professionalism.2 -
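To spell out the currentVal - changedVal + newVal bit, here is a tiny Python sketch. The numbers are made up, and `apply_change` is a hypothetical helper name, not something from the actual tool.

```python
def apply_change(current_total, changed_value, new_value):
    """Update a running total when one of its inputs is replaced."""
    return current_total - changed_value + new_value


values = [10, 20, 30]
total = sum(values)

# Replace the second value (20) with 25 without re-adding everything.
total = apply_change(total, values[1], 25)
values[1] = 25

print(total, sum(values))
```

Both numbers printed at the end match, which is the whole point: the total can be patched in place instead of recomputed from a hand-edited file.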

When working with hardware some mistakes can be literally painful. Thankfully this was all during undergrad and I'm only around computer hardware now lol.

>Misprogrammed a software kill switch so a sensor that should not have been sending data was actually sending data which caused the system to activate a piston that went WHAM! into the face of a teammate working on replacing some part of it...

>Misprogrammed a controller so it drew too much power from the supply and the puny supply wires literally burst into flame and fell across my arm.

>Spun a 9000rpm CNC spindle the wrong way and caused an attached screw to go rocketing upwards instead of downwards and almost break the (pretty expensive) thing (uh...we were trying to use it as a power screwdriver essentially but I set the rpm to about 100x what I wanted and the direction wrong so yeah).

>Switched a -1 with a +1 in a robot's control system sending it careening into a teammate's leg... let's just say mecanum wheels are paaaainful.

-

The Hungarian public transport company launched an online shop (created by T-Systems), which was clearly rushed. Within the first days people found out that you could modify the headers and buy tickets for whatever price you set, and you could log in as anyone else without knowing their password. And they sent out password reminders in plain text in non-encrypted emails. People reported these to the company, which claims to have fixed the problems.

Instead of being ashamed of themselves, now they're suing those who pointed out the flaws. Fucking dicks, if anyone, they should be sued for treating confidential user data (such as national ID numbers) like idiots.

-

Fuck Android Oreo and everyone who thought that the following ideas are useful:

- xy app is running in the background notification, which can't be disabled

- xy app is overlaying other apps, click here if you wish to disable it. But you can't disable the notification, you can only disable the app.

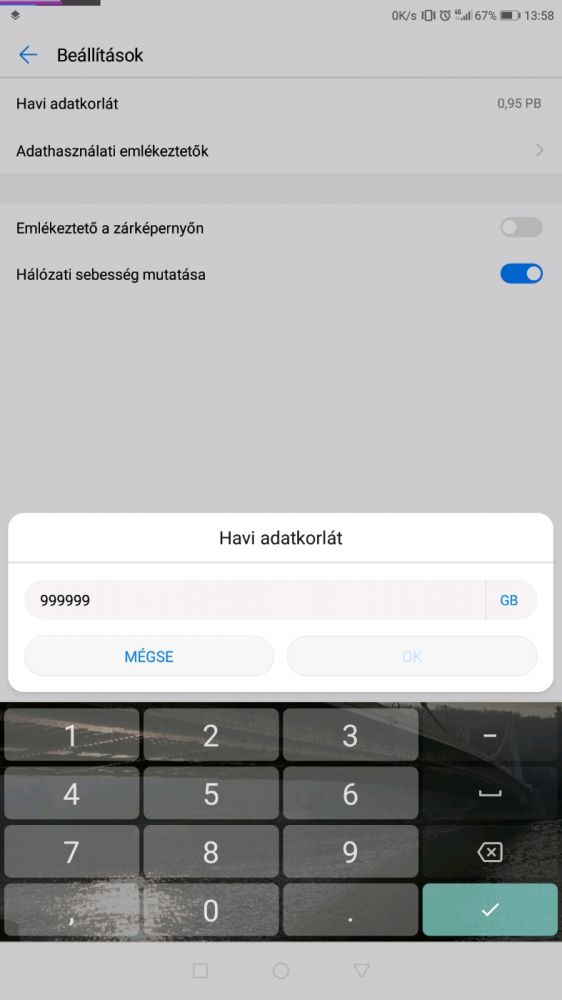

- the un-zeroable data limit. It can't be disabled, you can only set it to a retarded high number to avoid annoying notifications

Go suck a veiny one, Android devs. Fucking cunt faces.

-

Fuck me in the ass, but do it harder than this API just so I can feel some love 😖

It's one of those days where you have to migrate from SOAP to REST, only the REST API doesn't have the same structure or search parameters as the SOAP API, so there's this entire fucking application sending requests at a brick wall, and expecting a purple throbbing 12 inch cock of XML to be pushed into a multidimensional array and pushed through to the views to derange the mess, only you have to create that fucking 12 inch cock from several 2 inch dipsticks that have a different hierarchy, different field names, and merge the shit together with a glue gun...

Good thing it's only an unexpected prod problem... right? 🤷‍♂️

Ah, the woes of a Monday on the legacy app adventures.

Tags: rant, bullshit applications, over engineered, using a view to build a data set from hell, adopt a piece of shit day

-

Client: "Let's code a prototype to show our intents to the customer. We have 96 screens to implement as a native app in 6 weeks"

~Prototype delivered in 6 weeks, despite some changes in the design on-the-go. Few months later~

Client: "The customer likes the prototype a lot. They would like to see some changes (another set of > 40 screens). It's still a prototype and the changes should be implemented in a month, but this time it has to use real data from external APIs. We don't know which APIs yet, but it should also go live on the app store"

Like, seriously?

-

That feeling when the business wants you to allow massive chunks of data to simply be missing or not required for "grandfathered" accounts, but required for all new accounts.

Our company handles tens of thousands of accounts and at some point in the past during a major upgrade, it was decided that everyone prior to the upgrade just didn't need to fill in the new data.

Now we are doing another major upgrade that is somewhat near completion and we are only just now being told that we have to magically allow a large set of our accounts to NOT require all of this new required data. The circumstances are clear as mud. If the user changes something in their grandfathered account or adds something new, from that point on that piece of data is now required.

But everything else that isn't changed or added can still be blank...

But every new account has to have all the data required...

WHY?!

-
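One possible reading of the grandfathering rule in the rant above, sketched under assumptions: new accounts must have every required field, while grandfathered accounts only need the required fields the user has changed or added. The field names, the `Account` class, and the `touched` set are all hypothetical; the rant does not say how the real system tracks any of this.

```python
from dataclasses import dataclass, field

# Hypothetical set of fields that the upgrade made mandatory.
REQUIRED_FIELDS = {"phone", "tax_id", "billing_address"}


@dataclass
class Account:
    data: dict
    grandfathered: bool = False
    # Fields the user has changed or added since the upgrade (assumed to be tracked).
    touched: set = field(default_factory=set)


def missing_fields(account):
    """Return the required fields that are blank but must be filled in."""
    if account.grandfathered:
        # Only the fields the user has touched become required.
        must_have = REQUIRED_FIELDS & account.touched
    else:
        must_have = REQUIRED_FIELDS
    return sorted(name for name in must_have if not account.data.get(name))


old = Account(data={"phone": ""}, grandfathered=True, touched={"phone"})
new = Account(data={"phone": "555-0100"})

print(missing_fields(old))  # ['phone']
print(missing_fields(new))  # ['billing_address', 'tax_id']
```

Keeping the rule in one function at least means the "clear as mud" part lives in a single place instead of being scattered across every form.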

So these motherfuckers... they have stored the queries to generate the reports... fucking guess where. Just guess.

They stored the queries AS FUCKING STRING DATA IN THE TABLE. And you know how they get the parameters? A FUCKING JOIN WHERE THEY HAVE STORED THE PARAMS AS DATA IN ANOTHER TABLE.

So you query set the params to query to get the query to get that is joined with a query to get the reports.

If God is a programmer after all, y’all are fuuuuuuuuuuuuuucked

-

This is the craziest shit... MY FUCKING SERVER JUST SET ON FIRE!!!

Like seriously it's hot news (can't resist the puns), it's actually really bad news and I'm just in shock (it's not every day you find out you're running the hottest stack in the country :-P)... I thought it was slow as fuck this morning but the office internet was also on the fritz so I carried on with my life until EVERYTHING went down (completely down - poof gone) and within 2 minutes I had a technician from the data centre telling me that something to do with fans had failed and they caught fire, melted and have become one with the hardware. WTF? The last time I went to the data centre it was so cold I pissed sitting down for 2 days because my dick vanished.

I'm just so fucking torn right now because initially I was absolutely fucking ecstatic - 1 week ago after a year of doomsday bitching about having a single point of failure and me not being a sysadmin only to have them look at me like I'm some kind of techie flat earther I finally got approval to spend around 5x more per month and migrate all our software to containerized micro services.

I'll admit this is a bit worse than I expected but thanks to last week at least I have recent off-site images of the drives - because big surprise I have to set this monolithic beast back up (no small feat - it's gonna be a long night) on a fresh VPS. I also have to do it on premises or the data will only finish uploading sometime next week.

Pro Tip: If you're also pleading for more resources/a better production environment only to be stonewalled the second you mention there's a cost attached, be like me - I gave them an ultimatum: either I deploy the software on a stack that's manageable or they man the fuck up and pay a sys admin (this idea got them really amped up until they checked how much decent sys admins cost).

Now I have very flexible pockets because even if I go Rambo the max server costs would only be 15-20% of a sys admin's paycheck, even though that is 13x more than our current costs.

-

My apprentice is driving me nuts with his failed attempts at gold-plating.

The task "Get the data and export it to a file" becomes "After many attempts to get the data via a different query than we worked out together I now finally got it and it makes sense if it was displayed but only one set of data at a time and it should also be selected what data should be exported and I have no idea how to do that so Cero, can you help me?".

Dang it dude, just show me for once that you can do 1 clearly described task, where you have many examples to work with, and NOT try to add any extras!

I am now working on how to tell him this in a nicer way...

-

Always back up your data.

I came to my computer earlier today to find it on my Linux login screen. This could only mean one thing: something went horribly wrong.

Let me explain.

I have my BIOS set up to boot into Windows automatically. The exception is when it reboots, or when something horrible happens and the computer crashes. Then, it boots me into Linux. Due to a hardware issue I never looked into, I have to be present to push F1 to allow the computer to start. The fact that it rebooted successfully, without me present, into *Linux*, could only mean one thing:

My primary hard drive died and was no longer bootable.

The warning was the BIOS telling me the drive was likely to fail ("Device Error" doesn't really tell me anything to be fair).

The massive wave of panic hit me.

I rebooted in hopes of reviving the drive. No dice.

I rebooted again. The drive appeared.

Let's see how much data I can recover from it before I can no longer mount it. Hopefully, I can come out of this relatively unscathed.

The drive in question is a 10-year-old 1.5 TB Seagate drive that came with the computer. It served me well.

Press F to pay respects I guess.

On the bright side, I'll be getting an SSD as a replacement (probably a Samsung EVO).

-

The guy who became my manager just pushes to the prod branch.

On a repo where another team clearly set up development and production branches.

This guy has been pushing code like crazy and I always wanted to take my time setting things up properly in our team: TDD, CI/CD, etc.

Because he pushed so much he became my manager and I was seen as unproductive.

Data Science and software development best practices just don't coexist, it seems.

Yeah yeah, it's up to me to start introducing good practices, but atm "getting it done" takes priority over the real based shit.

-

"I found this tool that we should use because I'm a manager and it's simple enough that my tiny little manager brain could set it up!"

Oh wow good for you, Mr. Manager! And what, pray tell, does the tool require?

"All proprietary and cost-inefficient products: MSSQL Server and Windows IIS! What do you mean we have to get the data out in order for it to be scalable? Look at it! I set up a website by clicking on an EXE I downloaded from GitHub!"

Amazing, Mr. Manager. So you violated our security practices AND want to pocket even MORE of our budget?

Kindly fuck right off and start suggesting things instead of making people embarrass you into stopping your fight for your tool (has happened on more than one occasion).

-

I spent two weeks writing a WordPress plugin to take some form data, process it through another website, and take the result to Salesforce. The client had a "Salesforce specialist" doing everything on the Salesforce side and refused to give me access to see if the data was properly pushed through.

Finally I got it in working condition when suddenly it stopped working. I hadn't changed anything since it worked, so I asked if he could have possibly changed something. We argued for over 4 hours about who changed something; the whole time I was looking for the error in my code. At 6pm I finally told him I would need to take a look at it tomorrow.

Overnight he sent me an email:

"Hey, sorry about the confusion yesterday, I set Salesforce to deny duplicate email entries, looks like once I removed that everything is working."

-

Current task:

Somehow, one of my predecessors made some sort of custom hook tied to WooCommerce checkout that pipes some data into a nightmarish spaghetti fuck pile of undocumented wild west Visual Basic bullshit. It does this, presumably, via a set of parameters passed as plaintext in a URL. I know this because I found the singleton that declares this. Helpfully, Mr. Fuckass named the class "Default", so I only have around 30k instances being kicked back by my IDE when I search for it. The only reason I "need" to find this is so that I can just change the button to an href pointing at my own MS for shipping, and I need to change the fifteen params being passed to just one - a customer ID, which should be stored in the session, and referenced by a cookie. Once that is done, I should be able to freely delete a couple of gigs worth of bullshit. Been stuck on this for three days now. God forbid we have a test environment or something.

I'm tired. Can't even get angry anymore really. Can't even think of anything funny to say about it either, I just can't wait until this is done and I can go back to sleep.

-

Because sharing is caring.

For anyone who cares, I've extracted the coronavirus data for total infected / deaths from the World Health Organization and shoved it into applicable CSV files per day.

You can find the complete data set here:

https://github.com/C0D4-101/...

-

Every language ever:

"You can't compare objects of type A and B"

Swift, on the other hand: