
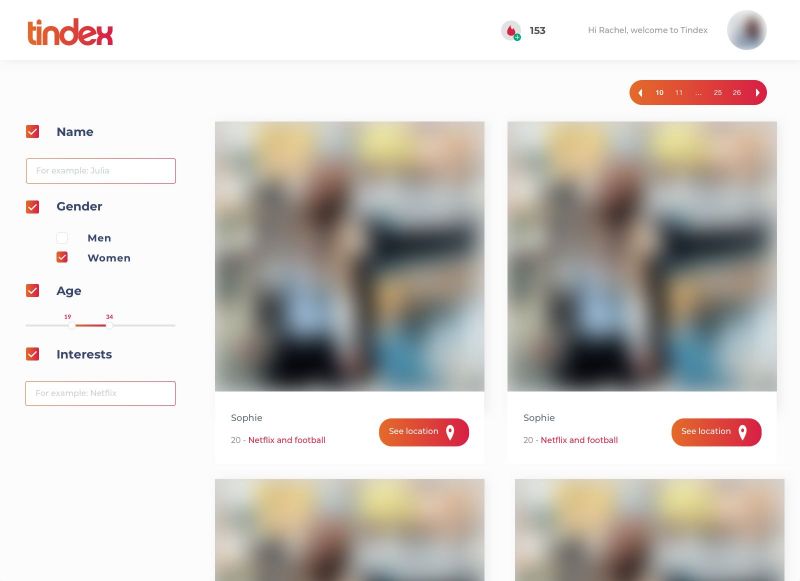

Last year I built the platform 'Tindex'. It was an index of Tinder profiles so people could search by name, gender and age.

We scraped the Tinder profiles through a Tinder API that had been discontinued not long before, but weirdly enough it was still intact, and one of my friends who was also working on it found out how to get API keys (somewhere in the network tab of Tinder Online).

Besides name, gender and age, we also got three distances, so we could calculate each user's location, save that location every 15 minutes and put the coordinates on a map, so Tindex users could easily see the current location of a specific Tinder user.

Fun note: we also got the Spotify data of each Tinder user, so we could actually know at what time and at which location a user listened to a specific Spotify track.

Later on we started building it out: a chatbot that connected to Tinder so Tindex users could automatically send a pick-up line to their new matches (it was kinda buggy; sometimes it sent 3 pick-up lines at once).

Right when we started building a revenue model, we stopped the entire project because a friend of ours found out that we were basically violating almost all of the terms.

It was a great project. I learned a lot from it, and it actually had me thinking twice (or more) about online dating platforms.

Below is an image of the user overview design I prototyped. The data is mock data.


I always like to approach a new coding project by concentrating on the data model first. I've seen a lot of projects built on extremely convoluted database structures and it really hurts because it makes it hard to add new features to the project.

So I look at the requirements of the new project and try to come up with a basic data model. Then I like to think about what logical future additions to the project could be. And using those, I try to see if the data model is flexible enough to be able to handle those additions fairly easily or if complex migrations or hacks would be needed to account for new use cases and features.

I think once you have a solid data structure and database technology, planning out an API or the rest of the software is pretty straightforward. I like to create reusable pieces of middleware early on in the project, which makes it easy to apply consistent functionality to different API endpoints.
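A minimal sketch of the reusable-middleware idea, assuming a toy setup where a handler is a plain function from a request dict to a response dict. `require_api_key`, `require_json`, `chain` and the `X-Api-Key` header are hypothetical names, not any particular framework's API.

```python
import json
from typing import Any, Callable, Dict, Set

Request = Dict[str, Any]
Response = Dict[str, Any]
Handler = Callable[[Request], Response]
Middleware = Callable[[Handler], Handler]


def require_api_key(valid_keys: Set[str]) -> Middleware:
    """Reject requests that lack a known key in the (hypothetical) X-Api-Key header."""
    def middleware(handler: Handler) -> Handler:
        def wrapped(request: Request) -> Response:
            if request.get("headers", {}).get("X-Api-Key") not in valid_keys:
                return {"status": 401, "body": "missing or invalid API key"}
            return handler(request)
        return wrapped
    return middleware


def require_json(handler: Handler) -> Handler:
    """Parse the raw body into request["json"], answering 400 if it is not valid JSON."""
    def wrapped(request: Request) -> Response:
        try:
            parsed = json.loads(request.get("body") or "null")
        except json.JSONDecodeError:
            return {"status": 400, "body": "invalid JSON"}
        return handler({**request, "json": parsed})
    return wrapped


def chain(*middlewares: Middleware) -> Middleware:
    """Compose middlewares; the first one listed is the outermost and runs first."""
    def apply(handler: Handler) -> Handler:
        for middleware in reversed(middlewares):
            handler = middleware(handler)
        return handler
    return apply


# One reusable stack, applied the same way to every endpoint that needs it.
authenticated_json = chain(require_api_key({"secret-key"}), require_json)


@authenticated_json
def create_item(request: Request) -> Response:
    return {"status": 201, "body": request["json"]}
```

Because `chain` returns an ordinary decorator, adding logging or rate limiting later means writing one more middleware and listing it once, instead of touching every endpoint.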

"there's a problem with your API"

Me: "why?"

"I get no data"

Me: "what response code are you getting?"

"405 - Method not allowed. But only on the /version endpoint"

Me: "Soo... What request are you sending?"

"POST"

WHY THE FUCK WOULD YOU SEND A POST REQUEST TO AN ENDPOINT THAT **GETS** THE VERSION OF MY API???!!!!

Me: "Read the documentation. It's there for a reason."
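The 405 in this exchange is the server working as intended. A sketch of how a router can make that answer self-explanatory, assuming a hypothetical route table (not the author's actual code, and the version string is made up): HTTP requires a 405 response to carry an `Allow` header, so a client that POSTs to `/version` is at least told that only GET is supported.

```python
from typing import Callable, Dict, Tuple

Response = Tuple[int, Dict[str, str], str]

# Hypothetical route table: path -> {HTTP method -> handler}. The version string is made up.
ROUTES: Dict[str, Dict[str, Callable[[], Response]]] = {
    "/version": {
        "GET": lambda: (200, {"Content-Type": "application/json"}, '{"version": "1.0.0"}'),
    },
}


def dispatch(method: str, path: str) -> Response:
    handlers = ROUTES.get(path)
    if handlers is None:
        return 404, {}, "Not Found"
    handler = handlers.get(method.upper())
    if handler is None:
        # HTTP (RFC 9110) requires a 405 response to include an Allow header
        # listing the methods the resource does support.
        return 405, {"Allow": ", ".join(sorted(handlers))}, "Method Not Allowed"
    return handler()
```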

USER: I can't see any data in the page...!

ME: ok, I'll do a check

ME: API calls get no data back. Boss, did you change anything and put it in production?

BOSS: Absolutely not, I just renamed what used to be the "Family" parameter to "Type".

ME: Seems legit. Totally agree. I'm going to lunch. Can you check in the meantime why calling the API with "Family" returns nothing? Thanks.

I’ve been thinking lately, what is it that devRant devs do?

So after a couple of days of pulling data from devRant's API and filtering through the inconsistent skill set data of about 500 users (seriously guys, the comma is your friend), I've found an interesting set of languages being used by everyone.

I've limited this to just languages, as delving into frameworks, libraries and everything else just grows exponentially. I've also only included languages with at least 5 users out of the pool.

Sorry, you brainfuck guys.
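The counting step described here can be sketched roughly like this, assuming the skill fields arrive as free-form strings. The whitelist in `LANGUAGES` and the function name are illustrative; only the 5-user threshold comes from the post.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Hypothetical whitelist: only languages are counted, so anything not listed here
# (frameworks, libraries, tools) is ignored.
LANGUAGES = {"python", "javascript", "typescript", "java", "c", "c++", "c#",
             "php", "go", "rust", "ruby", "brainfuck"}


def count_languages(skill_strings: Iterable[str], min_users: int = 5) -> Dict[str, int]:
    counts: Counter = Counter()
    for raw in skill_strings:
        # Skill lists are inconsistently separated, so split on commas, slashes,
        # semicolons, pipes and whitespace alike. Multi-word names would need extra handling.
        tokens = {token.lower() for token in re.split(r"[,/;|\s]+", raw) if token}
        counts.update(tokens & LANGUAGES)  # each language counts at most once per user
    return {lang: n for lang, n in counts.items() if n >= min_users}
```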


Boss: Our customer's data is not syncing with XYZ service anymore!

Me: Ok, let me check. Did the tokens not refresh? Hmm, the tokens are refreshing fine, but the API still says that we do not have permissions. The scopes are fine too. I'll use our test account... it's... cancelled? Hey boss, why is our XYZ account cancelled?

Boss: Oh, "I haven’t paid since I didn’t think we needed it" (verbatim)

😐

I was working on the REST API for the privacy site.

Needed a break and started searching for a good movie or documentary.

Found a documentary about big data/mass surveillance.

I now have loads of motivation to work on this again, as it showed me the importance of secure services/software.

Why the bloody hell do people (some devs too...) think that devs have a hard time fixing the semicolon issue? We have a lot of other issues to figure out, like data structure, code fragmentation, API creation, invalid data handling and injection prevention. But no, since we are developers, we must be having sleepless nights because of one fucking semicolon? FUCKING NO, it hardly takes 30 seconds to figure out that there is a missing semicolon. Really, people, stop the ; thing!


ARE YOU FUCKING KIDDING ME. I SPENT HOURS INVESTIGATING INCOMING & OUTGOING DATA. I CHECKED ALL THE CODE, I EVEN TEAMVIEWED A CUSTOMER WHO WAS HAVING SOME ISSUES WITH MY APP.

TURNS OUT I FORGOT A FUCKING '/' IN MY FUCKING CODE. WHICH MEANS THE WHOLE GODDAMN API URL MAKES NO SENSE.

WHY THE FUCK DO I ALWAYS OVERCOMPLICATE SHIT LIKE THIS.

FUCK
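A single missing '/' is an easy bug to make because URL joining is less intuitive than it looks. A small sketch in Python (the host `api.example.com` is a placeholder): the standard library's `urljoin` follows relative-reference rules, while a hypothetical `join_url` helper normalizes slashes so the result is the same with or without a trailing slash on the base.

```python
from urllib.parse import urljoin


def join_url(base: str, *segments: str) -> str:
    """Join a base URL and path segments with exactly one '/' between each part."""
    parts = [base.rstrip("/")] + [segment.strip("/") for segment in segments if segment.strip("/")]
    return "/".join(parts)


# urljoin() resolves the second argument as a relative reference (RFC 3986), so a missing
# trailing slash on the base silently replaces its last path segment:
#   urljoin("https://api.example.com/v1", "users")  -> "https://api.example.com/users"
#   urljoin("https://api.example.com/v1/", "users") -> "https://api.example.com/v1/users"
```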

So we hired a junior engineer. 1 year of experience, this is his second job.

First task: Send some data to a web service using its REST API. Let me know when you've finished.

Two hours later I go to check on him.

- "I'm trying to decode this weird format the server uses"

He was writing a JSON parser in Python from scratch.

:/
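For the record, no hand-written parser is needed for this task: Python ships a JSON encoder/decoder in its standard library. A minimal sketch using only the stdlib; the service URL is a placeholder, since the post doesn't name the web service, and the function names are illustrative.

```python
import json
import urllib.request


def build_post(url: str, payload: dict) -> urllib.request.Request:
    """Serialize payload as JSON and wrap it in a POST request (not sent yet)."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": "application/json"}
    )


def parse_response(raw: bytes) -> dict:
    """Decode a JSON response body; json.loads accepts UTF-8 bytes directly."""
    return json.loads(raw)
```

Sending it is then a matter of `with urllib.request.urlopen(build_post(url, data)) as resp:` followed by `parse_response(resp.read())`.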

Update on my student job:

Today they put me on a new project.

Basically, I have to update a database of buildings owned or rented by the company. They provided me with a lot of data, including the address of each building, but I need the GPS coordinates.

They wanted me to do it manually, copy-pasting from Google Maps (for reference: there are 75 buildings).

So I wrote a small script that uses an API to automate it; it took me 20 minutes.

My colleagues were like "how the fuck is that possible? We always do it manually, and it always takes ages!"
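The post doesn't say which API the script used. As an assumption, here is a sketch against OpenStreetMap's public Nominatim search endpoint, using only the Python standard library. The function names and the User-Agent value are hypothetical; the public instance asks for an identifying User-Agent and at most one request per second, which matters even for 75 addresses.

```python
import json
import time
import urllib.parse
import urllib.request
from typing import Dict, Iterable, Optional, Tuple

NOMINATIM_SEARCH = "https://nominatim.openstreetmap.org/search"


def geocode_url(address: str) -> str:
    query = urllib.parse.urlencode({"q": address, "format": "json", "limit": 1})
    return f"{NOMINATIM_SEARCH}?{query}"


def parse_coordinates(raw: bytes) -> Optional[Tuple[float, float]]:
    """Return (lat, lon) of the best match, or None if the address was not found."""
    results = json.loads(raw)
    if not results:
        return None
    # Nominatim returns lat/lon as strings.
    return float(results[0]["lat"]), float(results[0]["lon"])


def geocode_all(addresses: Iterable[str], user_agent: str) -> Dict[str, Optional[Tuple[float, float]]]:
    coordinates = {}
    for address in addresses:
        request = urllib.request.Request(geocode_url(address), headers={"User-Agent": user_agent})
        with urllib.request.urlopen(request) as response:
            coordinates[address] = parse_coordinates(response.read())
        time.sleep(1)  # the public instance's usage policy allows at most one request per second
    return coordinates
```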

Although I already give some privacy-related advice here and there on here, I'm planning on renting a server dedicated to privacy-related, tiny, simple tools for devRant.

I've got the following in mind:

- Host the privacy website

- Put a Pi-hole server on it for everyone to use

- My own IP lookup API, which would return the result in a few data formats.

Any other ideas?
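A rough sketch of the "few data formats" idea for the IP lookup API: build one record, then serialize it on demand. Python standard library only; the record fields, the `ip_lookup` element name and the format keywords are assumptions, and in a real service the IP would come from the connection (or a trusted proxy header) rather than a function argument.

```python
import ipaddress
import json
from xml.etree import ElementTree as ET


def describe_ip(ip: str) -> dict:
    """Build the lookup record; the chosen fields are illustrative."""
    address = ipaddress.ip_address(ip)  # raises ValueError for malformed input
    return {"ip": str(address), "version": address.version, "private": address.is_private}


def render(record: dict, fmt: str) -> str:
    """Serialize the same record as JSON, XML or plain text."""
    if fmt == "json":
        return json.dumps(record)
    if fmt == "xml":
        root = ET.Element("ip_lookup")
        for key, value in record.items():
            text = str(value).lower() if isinstance(value, bool) else str(value)
            ET.SubElement(root, key).text = text
        return ET.tostring(root, encoding="unicode")
    if fmt == "text":
        return record["ip"]
    raise ValueError(f"unsupported format: {fmt}")
```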

FUCK!!! FUCK IT ALL. FUCK YOU AND YOUR CRAPPY BULLSHT UNDOCUMENTED AND OUTDATED API.

YOUR DATABASE SERVER BACK-END HAS TO BE THE ONE MANAGING THE DISPLAY DATA FOR ITS WEB AND MOBILE CLIENTS. NOT THE OTHER WAY AROUND, DAMN IT.

I'M NOT GONNA SIT HERE ALL DAY HARD-CODING ALL YOUR SERVER'S INADEQUACY.

MAKES ME WONDER: DO YOU EVER USE DESIGN PATTERNS OR APPLY DESIGN PRINCIPLES? DRY AT LEAST? DON'T FUCKING REPEAT YOURSELF, DAMN IT.

I CAN'T WAIT TO LEAVE THIS PLACE FOR GOOD.

As a Java developer, reasons to kill other programmers:

- static mutable variables

- WRITING to static mutable variables

- API call with Framework X didn't work. Add Framework Y along with X and try that. Wrap X in try/catch statement. Catch block fires framework Y.

- six, seven, ten levels of nested code. Zero thought put in organization

- 6K LOC Java files

- Spring (singleton? Maybe) objects assigning values to static mutables (see pt. 1)

- a couple of unit tests in code base that no longer work. Zero unit tests in new code

- unit testing disabled in CI pipeline

- empty catch blocks

- pass mutable data between threads. Modify it in various places concurrently.

My team was sharing an API key to our company's microservice containing all our customer data.

I say "was" because one team member accidentally published the key online so the security team disabled our key and won't give us a new one.

I don't know whether to laugh or cry.

I wrote a database migration to add a column to a table and populated that column upon record creation.

But the code is so freaking convoluted that it took me four days of clawing my eyes out to manage this.

BUT IT'S FINALLY DONE.

FREAKING YAY.

Why so long, you ask? Just how convoluted could this possibly be? Follow my lead ~

There's an API to create a gift. (Possibly more; I have no bloody clue.)

I needed the mobile dev contractor to tell me which APIs he uses, because there are lots of unused ones, with no rhyme or reason to their naming and no comments telling me what they do.

This API takes the supplied gift params, cherry-picks a few bits of useful data out (by passing both hashes by reference to several methods), replaces a couple of them with lookups / class instances (more pass-by-reference nonsense). After all of this, it logs the resulting (and very different) mess, and happily declares it the original supplied params. Utterly useless for basically everything, and so very wrong.

It then uses this data to call GiftSale#create, which returns an instance of GiftSale (that's actually a Gift; more on that soon).

GiftSale inherits from Gift, and redefines three of its methods.

GiftSale#create performs a lot of validations / data massaging, some by reference, some not. It uses `super` to call Gift#create which actually maps to the constructor Gift#initialize.

Gift#initialize calls Gift#pre_init (passing the data by reference again), which does nothing and returns null. But remember: GiftSale inherits from Gift, meaning GiftSale#pre_init supersedes Gift#pre_init, so that one is called instead. GiftSale#pre_init returns a Stripe charge object upon success, or a Gift (and a log entry containing '500 Internal') upon failure. But this is irrelevant because the return value is never actually used. Pass by reference, remember? I didn't.

We're now back at Gift#initialize, where Rails finally creates a Gift object using the args modified [mostly] in-place by all of the above.

Another step back and we're at GiftSale#create again. This method returns either the shiny new Gift object or an error string (???), and the API logic branches on its type. For further confusion: not all of the method's returns are explicit, and those implicit return values are nested three levels deep. (In Ruby, a method will return the last executed line's return value automatically, allowing e.g. `def add(a,b); a+b; end`)

So, to summarize: GiftSale#create jumps back and forth between Gift five times before finally creating a Gift instance, and each jump further modifies the supplied params in-place.

Also. There are no rescue/catch blocks, meaning any issue with any of the above results in a 500. (A real 500, not a fake 500 like last time. A real 500, with tragic consequences.)

If you're having trouble following the above... yep! That's why it took FOUR FREAKING DAYS! I had no tests, no documentation, no already-built way of testing the API, and no idea what data to send it, especially considering it requires data from Stripe. It also requires an active session token + user data, and I likewise had no login API tests, documentation or logging, and no idea how to create a user... fucking hell, it's a mess.

Also, and quite confusingly:

There's a class for GiftSale, but there's no table for it.

Gift and GiftSale are completely interchangeable except for their #create methods.

So, why does GiftSale exist?

I have no bloody idea.

All it seems to do is make everything far more complicated than it needs to be.

Anyway. My total commit?

Six lines.

IN FOUR FUCKING DAYS!

AHSKJGHALSKHGLKAHDSGJKASGH.
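The two traps in this chain, a base-class constructor that calls a method the subclass overrides and helpers that communicate by mutating the caller's hash, aren't specific to Ruby. A stripped-down sketch in Python with hypothetical names mirroring the story (it is not the app's actual code; `amount` and `amount_cents` are made-up fields), followed by a more explicit alternative that returns new data instead of editing its input.

```python
class Gift:
    def __init__(self, params: dict):
        # Dynamic dispatch: on a GiftSale this calls GiftSale.pre_init, not Gift.pre_init.
        self.pre_init(params)  # return value discarded; only in-place edits to params survive
        self.attrs = dict(params)

    def pre_init(self, params: dict) -> None:
        return None


class GiftSale(Gift):
    def pre_init(self, params: dict) -> str:
        # Mutates the caller's dict in place, which is the only reason this has any effect.
        params["amount_cents"] = round(params.pop("amount") * 100)
        return "charge"  # stand-in for the Stripe charge object; nobody reads it


def build_gift_attrs(params: dict) -> dict:
    """Explicit alternative: derive new attributes without touching the caller's dict."""
    attrs = {key: value for key, value in params.items() if key != "amount"}
    attrs["amount_cents"] = round(params["amount"] * 100)
    return attrs
```

With the explicit version, the data flow is visible at the call site, and the caller's params still look the way they did when they were logged.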

We've built a web app, and now a client wants VPN access to the web app's database. When asked why, they said they want to occasionally pull some data out. 😱

We said no, and this is what they wrote:

"We’ve got live VPN access to every other web database we work with – why is this different?"

Well, because maybe we know that we can build you an export of whatever you want or prepare API calls for getting data into your CRM, but hell, I'm not giving you access to the production DB.

I'M SO PROUD, I WROTE A FULLY-FUNCTIONAL JSON PARSER!

I used some data from the devRant API to test it :D

(There's a lot of useful tests in the devRant API like empty arrays, mixed arrays and objects, and nested objects)

Here's the devRant feed with one rant, parsed by Lua!

You can see the type of data (automatically parsed) before the name of the data, and you can see nested data represented by indentation.

The whole thing is about 200 lines of code, and as far as I can tell, it's fully featured.
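The display format described above (type before name, nesting shown by indentation) is easy to reproduce on top of any parsed JSON value. A sketch in Python, assuming the standard `json` module does the parsing rather than a hand-written Lua parser; `describe` and `type_name` are illustrative names, and the sample document only imitates the shape of a devRant feed response.

```python
import json
from typing import Any, List


def type_name(value: Any) -> str:
    if isinstance(value, bool):  # check bool first: in Python, bool is a subclass of int
        return "boolean"
    if value is None:
        return "null"
    if isinstance(value, dict):
        return "object"
    if isinstance(value, list):
        return "array"
    if isinstance(value, str):
        return "string"
    return "number"  # json.loads only produces int or float for the remaining case


def describe(value: Any, name: str = "root", depth: int = 0) -> List[str]:
    """One line per value: indentation shows nesting, the JSON type precedes the name."""
    lines = ["  " * depth + f"{type_name(value)} {name}"]
    if isinstance(value, dict):
        for key, child in value.items():
            lines += describe(child, key, depth + 1)
    elif isinstance(value, list):
        for index, child in enumerate(value):
            lines += describe(child, f"[{index}]", depth + 1)
    return lines


sample = json.loads('{"success": true, "rants": [{"id": 1, "tags": [], "text": "hi"}]}')
print("\n".join(describe(sample)))
```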


Started a development job.

Boss: "let's start you off with something very easy. There's this third party we need data from. They have an API, just get the data and place it on our messaging bus."

Me: "sure, sounds easy enough"

The third-party API turns out to have the most absurd conversation protocol. We need a service to receive data on, while also having a client to register for the service, with a lot of timed actions like 'send this message every five minutes' and 'check whether our last message was sent more than 11 minutes ago'.

Due to us needing a service, we also need special permissions through the company firewall. So I have to go around the company to get these permissions, FOR EVERY DATA STREAM WE NEED!

But the worst of it all is... This whole API is SOAP-based!!

Also, hey devRant!
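The timing rules quoted here are easy to get subtly wrong, so it can help to isolate them from the SOAP plumbing. A minimal sketch, assuming a single outgoing message stream; `SessionClock` and its methods are hypothetical, only the 5- and 11-minute values come from the post, and the actual message exchange is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

SEND_INTERVAL = timedelta(minutes=5)   # "send this message every five minutes"
STALE_AFTER = timedelta(minutes=11)    # "...last message was sent more than 11 minutes ago"


@dataclass
class SessionClock:
    last_sent: Optional[datetime] = None

    def heartbeat_due(self, now: datetime) -> bool:
        """True if nothing was sent yet or the send interval has elapsed."""
        return self.last_sent is None or now - self.last_sent >= SEND_INTERVAL

    def record_send(self, now: datetime) -> None:
        self.last_sent = now

    def is_stale(self, now: datetime) -> bool:
        """True once the last message is older than the 11-minute limit."""
        return self.last_sent is not None and now - self.last_sent > STALE_AFTER
```

Passing `now` in, instead of calling `datetime.now()` inside, keeps the rules testable without waiting real minutes.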

Writing more infrastructure than product.

Look, my application requests and transforms data from a single external API endpoint, it's just one GET request...

But I made an intelligent response-caching middleware to prevent downtime when the parent API goes down, I made mocks and tests for everything, the documentation is generated directly from the code and automatically hosted for every git branch using hooks, responses are translated into JSON Schema notation that automatically generates integration tests on commit, and the transformations are set up as a modular collection of composable higher-order lenses!

Boss: Please use less amphetamine.
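Joking aside, the piece in this list that directly addresses "downtime when the parent API goes down" is a cache that serves stale data when the upstream call fails. A minimal in-memory sketch, assuming a synchronous fetch function; the class name and TTL semantics are illustrative, not taken from the author's setup.

```python
import time
from typing import Any, Callable, Dict, Tuple


class StaleOnErrorCache:
    """Cache upstream responses; serve the last good copy when the upstream call fails."""

    def __init__(self, fetch: Callable[[str], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh hit, upstream not contacted
        try:
            value = self._fetch(key)
        except Exception:
            if entry is not None:
                return entry[1]  # upstream down: fall back to the stale copy
            raise  # nothing cached yet, so the failure has to surface
        self._entries[key] = (now, value)
        return value
```

During an outage every request past the TTL still tries upstream once before falling back; adding a short backoff after a failure would reduce that load.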

Somebody asked me for my API docs.

I don't have any API at all.

I will lie: I'll write a Swagger specification in a few hours and send it to them.

They will try to read and understand it, and after maybe a week, when they ask to test an endpoint, I'll pretend to be on holiday for 2 weeks.

By then 3-4 weeks will be gone already, and I checked: they should be on holiday at that point. Only then will I answer with a fake endpoint serving fake data.

I'll get another 2 weeks if I'm lucky.

When they discover the fake data, I'll say there is a bug.

In total, if I play it well, I have 2 to 2.5 months to implement some kind of API server with a more or less real implementation.

Thanks to Swagger. Swag

Had to implement an API reporting on exabytes of data, scaling up eventually to a zettabyte.

I'd never even heard of these words until I started on the project.

Me: *Demoed my search API which supports multiple database implementations at the backend*

My Manager: Great!! Is the API independent of DB? Can you plug this API to any DB?

Me: Yes

My Manager: How can the user specify the DB at runtime?

Me: Why would the user be interested in the DB used at the backend? He will just query the API for data.

My Manager: Let's just assume he wants to select a database at runtime.

Me: While searching for a movie on Netflix, do you specify which DB you wanna stream the movie from?

My Manager: *Confused and pissed*
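DB independence like this is usually a wiring-time choice rather than a per-request one: the API depends on an interface, and the concrete backend is picked when the service starts. A sketch in Python with two interchangeable, hypothetical backends (an in-memory list and SQLite via the standard `sqlite3` module); `MovieStore`, the `movies` table and `search_api` are illustrative names.

```python
import sqlite3
from typing import List, Protocol


class MovieStore(Protocol):
    def search(self, term: str) -> List[str]: ...


class InMemoryStore:
    def __init__(self, titles: List[str]):
        self._titles = titles

    def search(self, term: str) -> List[str]:
        return sorted(t for t in self._titles if term.lower() in t.lower())


class SqliteStore:
    def __init__(self, conn: sqlite3.Connection):
        self._conn = conn

    def search(self, term: str) -> List[str]:
        # SQLite's LIKE is case-insensitive for ASCII; '%' and '_' in term are not escaped here.
        rows = self._conn.execute(
            "SELECT title FROM movies WHERE title LIKE ? ORDER BY title", (f"%{term}%",)
        )
        return [row[0] for row in rows]


def search_api(store: MovieStore, term: str) -> dict:
    # The API layer only knows the MovieStore interface; which backend it gets is decided at startup.
    return {"query": term, "results": store.search(term)}
```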

I spent an hour arguing with the CTO, pushing for having all our new products' data in the database (wow) with an API I could hit to fetch said data (wow) prior to displaying it on our order page.

He never actually agreed with me, but he finally acquiesced and wrote the migrations, API, and entered my (rather contrived) placeholder data. (I've been waiting on the boss for details and copy for three days.)

Anyway, it's now live on QA. but. I don't know where QA is for this app, and it's been long enough that I'm kind of afraid to ask.

Does that sound strange?

well.

We have seven (nine?) live applications (three of which share a database), and none of their repos match their URLs, nor even their Heroku app names. (In some of these Heroku names, "db" is short for the app's namesake, while in the rest it's short for "database").

So, I honestly have no idea where "dbappdev" points to, and I don't have access to the DNS records to check. -.-

What's more: I opened "dbappdev" on Heroku and tested out his new API -- lo and behold! it returns nada. Not a single byte. (Given his history I expected a 500, so this is an improvement, I think. Still totally useless, however.)

And furthermore: he didn't push the code to GitHub, so I cannot test (or fix) it locally.

just. UGH.

every day with this guy, i swear.

Currently working on the privacy site CMS REST API.

For the curious ones, building a custom thingy on top of the Slim framework.

As for those wondering about security, I'm working out a content-filtering layer (as in security/database compatibility) right now.

Once data enters the API, it will first go through the filtering system, which will filter based on data type, string length and so on.

If that all checks out, it will be sent to the data handling library, which basically performs all database interactions.

If everything goes the way I want it to (very highly unlikely), I'll have some of the API actions done by tonight.

But I've got the whole weekend reserved for the privacy site!
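The filtering step described above (check type and string length before anything reaches the data layer) can be sketched as a small rule table. The author is building on Slim/PHP; this Python version only illustrates the idea, and the field names, limits and `FieldRule` type are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Type


@dataclass(frozen=True)
class FieldRule:
    type_: Type
    max_length: Optional[int] = None
    required: bool = True


# Hypothetical rules for a CMS "article" resource.
ARTICLE_RULES: Dict[str, FieldRule] = {
    "title": FieldRule(str, max_length=120),
    "body": FieldRule(str, max_length=20_000),
    "published": FieldRule(bool, required=False),
}


def filter_input(data: Dict[str, Any], rules: Dict[str, FieldRule]) -> List[str]:
    """Return validation errors; an empty list means the data may go on to the data layer."""
    errors = [f"{name}: unexpected field" for name in sorted(data.keys() - rules.keys())]
    for name, rule in rules.items():
        if name not in data:
            if rule.required:
                errors.append(f"{name}: missing")
            continue
        value = data[name]
        if not isinstance(value, rule.type_):
            errors.append(f"{name}: expected {rule.type_.__name__}")
        elif rule.max_length is not None and len(value) > rule.max_length:
            errors.append(f"{name}: longer than {rule.max_length}")
    return errors
```

Type and length checks like these complement, rather than replace, parameterized queries in the data handling library.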

Yesterday I received the API documentation from an external company. Over half of the endpoints are either wrong or send invalid data, and even the given test requests are fucking failing.

It's a nightmare. We have to finish a website by Friday, that company did nothing for 2 months, and now we have 2 days left.

The sheer incompetence is too damn high.

My boss said it would have been much better if we had implemented the API on our own. Damn right.

This is one of the biggest hacks of my life:

We had a database project in our 2nd year of university where we had to create a complex database and input a lot of random data like postcodes, names, weather, ages etc.

While everyone was struggling to copy-paste random data into the SQL file, I used a website's API from Java to generate all the data.

People came to me thinking I was a brilliant person who gets his projects done on time. I never told anyone.

Trying to set up a local Overpass API and Nominatim server (OpenStreetMap data stuff).

The docker overpass image has been downloading a 38gb file for a little more than an hour now and it's coming closer and closer...

3..........

2............

1...............

100% YEAAAA.......

*docker continues to initiate the download of a new file*

"Hmm this can't be THAT bi......"

25gb

D:

Let's wait yet again..... I was so excited :(

So my first job is also my current one. I am a computer science student and for my course we had to do a project for an actual client. The client was a consultancy company and after working my ass off, their software development partner decided to hire me and a classmate.

The company is pretty small (there are six of us now) and the general atmosphere is very nice. I've only been working there for a few weeks and I feel very welcome. The work isn't too hard (mainly web development with geographic features/data).

Roughly speaking, the stack always consists of a Java REST API and an Angular frontend that retrieves the data from the API.

So far I have learned a ton and I am really happy that I have this opportunity. Lunch is provided and we always eat together, we crack jokes, have fun, play games in the break. Coffee machine next to my desk. I'd love to work here all my life :d

Since I'm still in school, I can't go to the office every day. Instead I'm at the office every Monday, and on other days I try to work from school or home.

Just managed to set up a tiny, simple, privacy-friendly analytics system.

You basically call an API from your backend with the API key and all the headers you received from the browser (PHP and Apache or nginx in my case), and the analytics API extracts useful stuff from that data without sacrificing privacy.

I get a little more insight into my websites' usage, and the client doesn't give up any identifiable information!

I've been wanting to make this fucker for fucking months.
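The post doesn't say which signals the analytics API derives, so this is only a sketch of the general idea, assuming it reduces raw request headers to coarse, non-identifying fields (browser family, primary language, referrer host) and drops IP-related headers, cookies and the full user-agent string. Function names and the chosen fields are hypothetical.

```python
from typing import Dict, Optional
from urllib.parse import urlsplit


def browser_family(user_agent: str) -> str:
    # Crude substring checks; order matters because Chrome's UA also contains "Safari/"
    # and Edge's contains "Chrome/".
    for token, family in (("Edg/", "Edge"), ("Firefox/", "Firefox"),
                          ("Chrome/", "Chrome"), ("Safari/", "Safari")):
        if token in user_agent:
            return family
    return "other"


def anonymize(headers: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Keep only coarse fields; cookies, X-Forwarded-For and the raw UA are never copied."""
    lowered = {name.lower(): value for name, value in headers.items()}
    referrer = lowered.get("referer")
    language = lowered.get("accept-language", "")
    return {
        "browser": browser_family(lowered.get("user-agent", "")),
        "language": language.split(",")[0].split(";")[0].strip() or None,
        "referrer_host": urlsplit(referrer).hostname if referrer else None,
    }
```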

Creating an anonymous analytics system for the security blog and privacy site together with @plusgut!

It's fun to see a very simple API come alive with querying some data :D.

Big thanks to @plusgut for doing the frontend/graphs side on this one!

Boss: it’s all wrong, this was working last week.

Me: we have moved to a new data API, and I’m in the process of moving the views over, as its new data has different names and more detail.

Boss: well fix it now I have a meeting with the client tomorrow morning. (It’s 3pm)

About 30mins later.

Boss: I guess I can say that we are migrating over to the new API; they should be fine with that.

FUCK THE RECRUITERS WHO ASK US TO MAKE AN ENTIRE PROJECT AS A CODE TEST.

Oh, you need to scrape this website and then store the data in some DB. Apply sentiment analysis on the data set. On the UI, the user should be able to search the fields that were scraped from the website. Upon clicking, it should consume a REST API which you have to create as well. Oh, and also deploy it somewhere... Oh, I almost forgot, make the UI look good. If you can submit it within one week, we will move on to further rounds if we find you fit enough.

YOU KNOW WHAT, FUCK YOU!

I can apply to 10 other companies in one week and get hired with half the effort of making this whole project for you, which you are going to use on your website, YOU SADIST MOTHERFUCK

I CURSE YOUR COMPANY WITH AN ETERNITY OF JS CALLBACK HELL 😡😤😣

I'm thinking about doing a live coding stream on twitch this saturday, late afternoon or evening (CET).

I've never done a live stream before.

Do you have any suggestions or interests?

I'm thinking about something like a small RESTful API with Angular 4/TypeScript (frontend, single-page application) and CraftCMS/PHP (backend), with some basic theory about HTTP requests/responses, redirecting, data transfer, interfaces et cetera...

The duration will be around 2-4 hours, maybe longer if I have enough Mate & Beer.

But it's all just an idea at the moment. 😉

I will create an empty project for the stream on my GitHub and push to it during streaming, so you can pull it live or later.

Management's definition of an MVP:

- Integrate our backend and database with a similar-ish, older internal system built on a different tech stack and different rules.

- Merge the functionality and delete the old one.

- Modify our system to accept 2 types of logged in users.

- Have 2 versions of our API that return different values.

- Update our mobile app to render different data based on which user is logged in.

- Onboard the old system users to this new system.

My definition of an MVP:

- Tell the store we are taking over, that they have to print their labels from our tool, and onboard the users to our app.

Finally set up my port honeypot today, including port 22, then wrote a custom dashboard for some data tracking. It feels great to have it open on my screen and see the bans roll in with every 2-second refresh. As expected, the most hits come from China, Russia and India. I also filed ~700 reports, and 300 have already been banned from their service (mainly Microsoft Azure, for whatever reason).

I wanted to automate that first (or at least the reporting to various IP blacklists via their APIs), but then I was afraid that one day I'd be stupid enough to somehow get myself banned - don't want to get reported myself lol

So, I was gonna rant about how it can be difficult to design event-based Microservices.

I was gonna say some shit about gateway APIs and some other stuff about data aggregation and keeping things idempotent.

I was going to do all this but then as I was stretching out the old ranting fingers I decided to draw a diagram to maybe go along with the rant.

Now I’m not here to really rant about all that Jazz...

I’m here to give you all a first class opportunity to tear apart my architecture!

A few things to note:

Using a gateway API (Kong) to separate the mobile from the desktop.

This traffic is directed through to an intermediate API. This way the same microservices can provide different data, and even functionality, for each device.

Most Microservices currently built in golang.

All services are event based, and all data is built on-the-fly by events generated and handled by each microservice.

RabbitMQ used as a message broker.

And finally, it is hosted in Google Cloud Platform.

The currently hosted version is built with microservices too, but this diagram shows the updated version of things.

So, feel free to rip it apart or add anything you think should change.

Also, feel free to tell me to fuck right off if that’s your cup of tea as well.

Peace ✌🏼
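On the "keeping things idempotent" point: brokers such as RabbitMQ generally give at-least-once delivery, so a consumer can see the same event twice (for example after a redelivery). A minimal sketch of an idempotent consumer that remembers processed event IDs, assuming each event carries a unique ID; the names are hypothetical, and a real service would persist the processed IDs in the same transaction as the state change rather than in memory. (The services here are written in Go; Python is used only to keep the sketch short.)

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass(frozen=True)
class Event:
    event_id: str      # assumed unique per event, assigned by the producer
    account_id: str
    amount: int


@dataclass
class BalanceProjection:
    balances: Dict[str, int] = field(default_factory=dict)
    processed: Set[str] = field(default_factory=set)

    def handle(self, event: Event) -> bool:
        """Apply an event exactly once; return False for a duplicate delivery."""
        if event.event_id in self.processed:
            return False
        self.balances[event.account_id] = self.balances.get(event.account_id, 0) + event.amount
        self.processed.add(event.event_id)
        return True
```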


-Welcome to our entry-level position at Xyz company. I know we told your recruiter we are very hands-on with developers. But we aren’t. Also, you will be the only developer, and there is no team.

-uh…. Okay..

-for the first part of your interview, we are going to have you write a program in Node that will reach out to our API and sort medical data with our clients.

-so you want me to create something live, and you’re going to be using it in the actual workplace before you hire me?

-if it works, yes. Then we will decide whether to hire you or not.

Wtaf?

Coworker: since the last data update this query kinda returns 108k records, so we gotta optimize it.

Me: The API must return a massive JSON by now.

C: Yeah, we gotta overhaul that API.

Me: How big do you think that JSON response is? I'd say 300 KB.

C: I guess 1.2 MB.

C: *downloads JSON response*

File size: 298 KB

Me: Hell yeah!

PM: Now start giving estimates this accurate!

Me: 😅😂

"I'll just fetch the data from Wikipedia, it must be really simple using their API"

I've never been so wrong.

Fuck you, you fucking fuck, why would you change an API without any notification?

Background: I built an app for a customer; it needs to fetch data from an external API and save it to a DB.

Customer called: it's broken, what did you do?!?

Me: I'll look into it.

Turned out the third party had just changed their API... Guess I should implement some kind of notification if no messages come in for some time...

This might actually be my first real rant.

Whatever fucking cockgoblin decided that making Dynamics GP so fucking confusing was a good idea needs to suck a big bag of dicks. I'm so fucking tired of having to google every damned table name and column name, because nothing makes any motherfucking sense.

Am I supposed to instinctively know what PM20201 does? What data it holds? I don't mind reading documentation. But it's hard to even know where to start when the shitbird API and database are more complicated than calculating orbital fucking decay.

I am done. Fuck you, GP. Fuck you and your nonsense. I guess our sales people don't get to know when an invoice was paid.

Poorly written docs.

I've been fighting with the Epson TM-T88VI printer's WebConfig API for five hours now.

The official TM-T88VI WebConfig API User's Manual tells me how to configure their printer via the API... but it does so without complete examples. Most of it is there, but the actual format of the API call is missing.

It's basically: call `API_URL` with GET to get the printer's config data (works). Call it with PUT to set the data! ... except no matter what I try, I get either a 401:Unauthorized (despite correct credentials), 403:Forbidden (again...), or an "Invalid Parameter" response.

I have no idea how to do this.

I've tried literally every combination of params, nesting, json formatting, etc. I can think of. Nothing bloody works!

All it would have taken to save me so many hours of trouble is a single complete example. Ten minutes' effort on their part, tops.

asjdf;ahgwjklfjasdg;kh.

Fuck (some of) you backend developers who think regurgitating JSON makes for a good API.

"It's all in JSON. iOS can read JSON, right?"

A well-trained simian can read JSON, still doesn't mean it can do something with it. Your shitty API could be spitting out fucking ancient Egyptian for all I care, just make it be the same ancient Egyptian everywhere!

Don't create one endpoint that spits out the URL for the next endpoint (completely different domain, completely different path structure). Are you fucking kidding me?

As if that wasn't enough, endpoints receive data structured in one way, but return results in another!! "It's all JSON", but it's still dong.

How do I abstract that, you piece of shit? Now I have to write ever so slightly different code in multiple places instead of writing it only once.

How the fuck do I even model that in a database?

Have a crash course on implementing APIs on the client side and only come back when you're done.

Morons.

"The aim is to develop highly robust data streams so we have the flexibility to build and evolve the user interface without having to change code in the API"

Oh, is that all you need?

me: “Realistically, the only way to pull in this data without replicating and without an API feed is to scrape it from the site”

manager -> to the client: “basically he’s got to hack your system to do it”

Found out that one of our company's internal APIs (I hope it's only internal) is exposing some personal data. After finally getting the right people involved, they said they'd fix it 'immediately'.

5 days later I check, and now there is more personal data exposed... including personal security questions and the hashed answers to said questions.

And of course they are using a secure hashing mechanism... right? Wrong. MD5, no salt.

Sigh...
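For contrast, a sketch of salted, deliberately slow hashing using only Python's standard library (`hashlib.pbkdf2_hmac`). The storage format string and the answer normalization are assumptions for illustration; the default iteration count follows OWASP's Password Storage Cheat Sheet recommendation for PBKDF2-HMAC-SHA256 at the time of writing, and Argon2 or bcrypt (third-party packages) are common alternatives.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # OWASP's current suggestion for PBKDF2-HMAC-SHA256; tune for your hardware


def normalize_answer(answer: str) -> str:
    # Assumption: security answers are compared case- and whitespace-insensitively.
    return " ".join(answer.lower().split())


def hash_answer(answer: str, iterations: int = ITERATIONS) -> str:
    salt = os.urandom(16)  # unique random salt per stored answer
    digest = hashlib.pbkdf2_hmac("sha256", normalize_answer(answer).encode(), salt, iterations)
    return f"pbkdf2_sha256${iterations}${salt.hex()}${digest.hex()}"


def verify_answer(answer: str, stored: str) -> bool:
    _, iterations, salt_hex, digest_hex = stored.split("$")
    digest = hashlib.pbkdf2_hmac(
        "sha256", normalize_answer(answer).encode(), bytes.fromhex(salt_hex), int(iterations)
    )
    return hmac.compare_digest(digest.hex(), digest_hex)  # constant-time comparison
```

Because every answer gets its own salt, identical answers produce different stored values, so precomputed MD5 lookup tables are useless against them.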

Context: we analyzed data from the past 10 years.

So the fuckface who calls himself head of research tried to put the blame on me again, what a surprise. He asked for a tool that basically adds a lot of numbers together with some tweakable stuff; doesn't really matter. Of course all the data was already available, so I just grabbed it from our API and did the math thing. Then this turdnugget spends 4 literal weeks trying to feed a local CSV file into the program, because he 'wanted to change some values'. One, this isn't what we agreed on; he wanted the data from the original. When I told him this, he denied it, so I had to dig out a year-old email. Two, he never explicitly specified anything, so I didn't use a local file, because why the fuck would I do that. Three, I clearly told him that it pulls data from the server. Four, what the fuck does he want to change past values for, getting ready for time travel? Five, he ranted for like 3 pages, when a change can be done with `currentVal - changedVal + newVal`; even a fucking 10-year-old could figure that out. Also, when I allowed changes in a temporary API, he bitched about how the additional info, which was calculated from yet another original dataset, doesn't get updated when he just fucking randomly changes values in the end set. Pinnacle of professionalism.
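The update rule mentioned here is an O(1) incremental recomputation of a sum: subtract the value being replaced and add the new one. A small sketch in Python; the class name and sample keys are illustrative.

```python
from typing import Dict


class RunningTotal:
    """Keep a sum over named values and update it in O(1) when one value changes."""

    def __init__(self, values: Dict[str, float]):
        self.values = dict(values)
        self.total = sum(self.values.values())

    def update(self, key: str, new_value: float) -> float:
        old_value = self.values[key]
        self.total = self.total - old_value + new_value  # currentVal - changedVal + newVal
        self.values[key] = new_value
        return self.total
```

With floats, many incremental updates can drift slightly from a fresh `sum()`, so an occasional full recomputation is a reasonable safeguard.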

While trying to integrate a third-party service:

Their Android SDK accepts almost anything as a UID, even floats and doubles. Which is odd, who uses those as UIDs? I pass an Integer instead. No errors. Seems like it's working. User shows up on their dashboard.

Next, let's move on to using their data import API. Plug in everything just like I did on mobile. Whoa, got an error: "UIDs must be a string". What. Uh, but the SDK accepts everything with no error. Ok, fine. Change both the SDK and API calls to send the UID as a string. No errors returned after changing the UIDs.

Check dashboard for user via UID. Uh, properties haven't been updating. Check search properties. Find out that UIDs can only be looked up as Integers. What? Why do you ask me to send it as a string via the API then? Contact support. Find out it created two distinct records with the UID, one as a string and the other as an Integer.

GFG. -
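The vendor's storage internals are unknown, but the symptom (one UID stored once as a string and once as an integer) is easy to reproduce with any key-value store. A minimal Python sketch of a defensive client-side fix, assuming you control every call site: normalize each UID to one canonical string before it reaches either the SDK or the import API. `normalize_uid` is a hypothetical helper, not part of any vendor SDK.

```python
def normalize_uid(uid: object) -> str:
    """Coerce a UID to one canonical string before sending it anywhere."""
    if isinstance(uid, bool):  # bool is a subclass of int; reject it explicitly
        raise TypeError("booleans are not valid UIDs")
    if isinstance(uid, float):
        if not uid.is_integer():
            raise ValueError(f"non-integral UID: {uid!r}")
        uid = int(uid)  # 42.0 -> 42, so it matches the int and str forms
    if not isinstance(uid, (int, str)):
        raise TypeError(f"unsupported UID type: {type(uid).__name__}")
    return str(uid)


if __name__ == "__main__":
    # Mixed key types silently create two separate entries...
    records = {42: {"plan": "free"}, "42": {"plan": "pro"}}
    print(len(records))     # 2
    # ...while normalized keys collapse to a single entry.
    normalized = {normalize_uid(k): v for k, v in records.items()}
    print(len(normalized))  # 1
```

A string such as "042" passes through unchanged and would still not match the integer 42, so leading zeros need a consistent policy too.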

R is the worst language.

* Indices start at 1, so you have to fix all your calculations with either +1 or -1. It sucks.

* Vectors and Lists are both neither vectors nor lists

* Data frames don't have a proper API. Simple operations like add or remove are a pain.

* The naming „conventions“ suck. Why on earth would you add dots to your identifiers? You never know if it's an object, a value or a function.

* The namespace is cluttered. If you import two libraries that deal with the same problem domain, it is likely that they define functions with clashing names that overwrite each other on import. -

Me: why are we paying for OCR when the API offers both json and pdf format for the data?

Manager: because we need to have the data in a PDF format for reporting to this 3rd party

Me: sure, but can we not just request both json and PDF from the vendor (it’s the same data). send the json for the automated workflow (save time, money and get better accuracy) and send the PDF to the 3rd party?

Manager: we made a commercial decision to use PDF, so we will use PDF as the format.

Me: but ... -

The GitHub GraphQL API is pretty neat, mostly because it's a great example of a product where GraphQL has advantages over REST. As a code reviewer for repos with hundreds of simultaneous PRs, I use it to filter through branches for stuff that needs my attention the most.

NewRelic's NRQL API is also quite nice, as it provides an unusual but very direct interface into the underlying application metrics.

I'm also a big fan of launchlibrary, purely because I love spaceflight, and their API is an extremely rich and actively maintained resource. This makes it a great data source for playing around with plotting & statistics libraries — when I'm learning new languages or tools, I prefer to make something "real" rather than following a tutorial, and I often use launchlibrary as a fun and useful data backend. -

I am speechless! Assigned back to a project after leaving it for four months, went to see tasks, and they are like this:

Q1: Why didn't you do this for the app?

A1: Because your team has not yet provided the API; how is my team supposed to implement it?

Q2: Why having this in the app? either x or y not both!

A2: You guys wanted both

Q3: Why is the app showing data that must not be displayed?

A3: Because your server is sending me the data based on the criteria I sent? What else do you expect?

and the list goes on.... -

So I wrote an application that loads data from a 3rd party API. It allows the user to enter a record locator number and pull it up. By design, the value can be a partial match and it will pull up the record still.

The first API call I made only took 2-3 seconds, so I didn't see an issue as it's loading most of the data the app needs. I keep the filters/fields as they are and move on.

Fast forward 6 months. The user is complaining that the records are taking 30-45 seconds to load. Sure enough, load times are terrible. I've made lots of changes to what fields I'm loading through the API, and I'm calling several additional APIs, so I start pulling pieces of code out to see if anything improves. They all barely make any difference--still 30+ second load times. I end up removing everything except the first API call I developed that was taking 2-3 seconds before. Still taking 30+ seconds.

The 3rd party API allows you to filter using "starts with" or "contains". I used "contains" initially and had no issue, but I decided to try "starts with" since it should fit most use cases.

Load time is less than one second. I add back everything else. Load time is just over a second.

It seems that the 3rd party updated the API and multiplied load times by 10 when using that particular filter. I spent almost an hour on this since the platform doesn't support performance or debugging tools very well, and it all came down to a one-line fix. -
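The 3rd party's internals aren't known, so this is only an illustration of one common reason the two filters can behave so differently: a "starts with" match can use a sorted index, while "contains" generally has to examine every record. A minimal Python sketch with a sorted list standing in for an index; both function names are hypothetical.

```python
import bisect


def starts_with(sorted_keys: list[str], prefix: str) -> list[str]:
    """Prefix match on an already-sorted list: O(log n + k)."""
    # Every key that starts with `prefix` sorts at or after `prefix`, and all
    # such keys are contiguous, so binary-search the start and walk forward.
    i = bisect.bisect_left(sorted_keys, prefix)
    matches = []
    while i < len(sorted_keys) and sorted_keys[i].startswith(prefix):
        matches.append(sorted_keys[i])
        i += 1
    return matches


def contains(keys: list[str], fragment: str) -> list[str]:
    """Substring match: the sort order does not help, so every key is checked (O(n))."""
    return [key for key in keys if fragment in key]


if __name__ == "__main__":
    keys = sorted(["ABC123", "XABC12", "ABD999", "ABC999"])
    print(starts_with(keys, "ABC"))  # ['ABC123', 'ABC999']
    print(contains(keys, "ABC"))     # ['ABC123', 'ABC999', 'XABC12']
```

The two filters also differ in meaning: "starts with" misses matches in the middle of a locator ("XABC12" above), which is the trade-off accepted when switching filters.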

Have to use a 3rd party API which responds to all requests like

{
  status: 200,
  data: {
    status: 'fail' / 'pass',
    data: { data }
  }
}

Should I be sad?

P.S. They ask for a 'userName'. -
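With two status layers, the client has to check both before trusting the payload. A minimal Python sketch, assuming the response shape quoted in the rant ("pass"/"fail" strings nested under an outer 200); the real API's field names and values may differ, and `unwrap`/`ApiError` are hypothetical names.

```python
class ApiError(Exception):
    """Raised when either status layer reports a failure."""


def unwrap(envelope: dict):
    """Return the innermost payload of a doubly wrapped response, or raise.

    Assumed shape: {"status": 200, "data": {"status": "pass" | "fail", "data": {...}}}
    """
    if envelope.get("status") != 200:
        raise ApiError(f"outer status: {envelope.get('status')!r}")
    inner = envelope.get("data") or {}
    # The outer 200 says nothing about success on the inside.
    if inner.get("status") != "pass":
        raise ApiError(f"inner status: {inner.get('status')!r}")
    return inner.get("data")


if __name__ == "__main__":
    ok = {"status": 200, "data": {"status": "pass", "data": {"userName": "alice"}}}
    print(unwrap(ok))  # {'userName': 'alice'}
```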

Thank God the week 233 rants are over - was getting sick of elitist internet losers.

The worst security bug I saw was when I first started work as a dev in Angular almost a year ago. Despite the code being a couple of years old, the links to the data on Firebase had 0 rules concerning user access (all data was basically publicly available), the API keys were uploaded to GitHub, and even the auth guard didn't work. A proper mess that still gives me the night spooks to this day. -

>be my team

>developing a mobile app

>I'm responsible for developing a "RESTful" API to interface communications between the app and the database

>there's also an "admin" web application which the client themselves will use to manage some shit in the database

>I've developed the API, it works with the mobile app

>instead of just making it simply a front-end app that makes requests to the API like the mobile app does, the guy responsible for the admin app completely ignores my API and implements his own with a certain messy dollar symbol language and a certain bloated piece of server software, accessing the same database directly, and does some operations in his own special way that will break what I've implemented

>now data inserted via admin app is inaccessible to the server API, and I'm expected to "fix" my code so it's consistent with this guy's shit, but the only way to do it is introducing interdependency between the actual API and the admin app's back end

Fuck my life, now I'm the one responsible for the app being broken because no way the guy who's used to kludging unmaintainable shit together fast would ever fuck anything up -

Google just emailed me to tell me that I should, "take action against suspicious apps that can access your data"... but the app in question was a Google Drive API token I made for a thing I am personally developing .-.

-

So at the moment I'm developing a RESTful API for an internal project at work, and I'm starting to learn about and understand HTTP status codes.

So I started incorporating proper HTTP response statuses etc., but my co-workers don't understand what any of it means. They think that just sending a JSON response with some message in it should be enough. I think this mindset stems from people who just do simple AJAX calls in JavaScript to get or store data.

It's these kinds of developers that I find are lazy or have no motivation to improve themselves, which is disappointing. -
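What "proper response statuses" buys the client: the status code alone says what happened, so nobody has to parse a message string to find out. A framework-agnostic Python sketch using the standard library's `http.HTTPStatus`; the handler, its `(status, body)` return convention and the user store are hypothetical.

```python
import json
from http import HTTPStatus


def respond(status: HTTPStatus, body: dict) -> tuple[int, str]:
    """Pair a JSON body with the HTTP status code that describes the outcome."""
    return int(status), json.dumps(body)


def get_user(users: dict[int, dict], user_id: str) -> tuple[int, str]:
    # Hypothetical handler: each failure mode gets its own status code.
    if not user_id.isdigit():
        return respond(HTTPStatus.BAD_REQUEST, {"error": "user_id must be numeric"})
    user = users.get(int(user_id))
    if user is None:
        return respond(HTTPStatus.NOT_FOUND, {"error": f"no user {user_id}"})
    return respond(HTTPStatus.OK, user)


if __name__ == "__main__":
    users = {1: {"name": "Ada"}}
    print(get_user(users, "1"))    # (200, '{"name": "Ada"}')
    print(get_user(users, "7"))    # (404, '{"error": "no user 7"}')
    print(get_user(users, "abc"))  # (400, '{"error": "user_id must be numeric"}')
```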

Me: hey backend, I'd like you to make three external API calls and a system call, then based on the result can you sort out the output and add it to the data base

Backend: sure *goes and does its thing*

Me: hey html/css, can I please have a square in the middle of the screen and a rectangle on the left that takes up the full height minus 6px of the border?

html/css: *starts loading*

Me: ok cool thanks *anticipation*

html/css: *displays something resembling a 5-year-old who just found out about rulers*

Me: oh ffs is it that hard? -

Just received a test for a job I'm interviewing for. I was interviewing for a C++ position. Practice test: Create a REST API using SpringBoot, Spring Data, document with Swagger and implement continuous integration testing.

To be fair, I also mentioned I'm fluent in Java. But I've never touched SpringBoot or done any backend webdev, since my intention was to never get near it.

Deadline: Sunday. Game on... -

"Our company encourages cryptocurrency big data agile machine learning, empowerment diversity, celebrate wellness and synergy, unpack creative cloud real-time front-end bleeding edge cross-platform modular success-driven development of digital signage, powered by an unparalleled REST API backend, driven by a neural network tail recursion AI on our cloud based big data linux servers which output real time data to our Wordpress template interactive dynamic website TypeScript applet, with deep learning tensor flow capabilities.

Don't get what the fuck I just said? Udemy offers countless courses on python based buzzwords. Be the first out of 13 people to sell your soul and private information, and you'll get the first three minutes of the course free!" -

EoS1: This is the continuation of my previous rant, "The Ballad of The Six Witchers and The Undocumented Java Tool". Catch the first part here: https://devrant.com/rants/5009817/...

The Undocumented Java Tool, created by Those Who Came Before to fight the great battles of the past, is a swift beast. It reaches systems unknown and impacts many processes, unbeknownst even to said processes' masters. All from within its lair, a foggy Windows Server swamp of moldy data streams and boggy flows.

One of The Six Witchers, the Wild One, scouted ahead to map the input and output data streams of the Unmapped Data Swamp. Accompanied only by his animal familiars, NetCat and WireShark.

Two others, bold and adventurous, raised their decompiling blades against the Undocumented Java Tool beast itself, to uncover its data processing secrets.

Another of the witchers, of dark complexion and smooth speak, followed the data upstream to find where the fuck the limited Excel sheets that feed The Beast come from, since its handlers only know that "every other day a new one appears on this shared active directory location". WTF, why do people often have NPC-levels of unawareness about their own fucking jobs?!?!

The other witchers left to tend to the Burn-Rate Bonfire, for The Sprint is dark and full of terrors, and some bigwigs always manage to shoehorn their whims/unrelated stories into an otherwise lean sprint.

At the dawn of the new year, the witchers reconvened. "The Beast breathes a currency conversion API" - said The Wild One - "And its claws and fangs strike mostly at two independent JIRA clusters, sometimes upserting issues. It uses a company-deprecated API to send emails. We're in deep shit."

"I've found The Source of Fucking Excel Sheets" - said the smooth witcher - "It is The Temple of Cash-Flow, where the priests weave the Tapestry of Transactions. Our Fucking Excel Sheets are but a snapshot of the latest updates on the balance of some billing accounts. I spoke with one of the priestesses, and she told me that The Oracle (DB) would be able to provide us with The Data directly, if we were to learn the way of the ODBC and the Query"

"We struck at the beast" - said the bold and adventurous witchers, now deserving of the bragging rights to be called The Butchers of Jarfile - "It is actually fewer than twenty classes and modules. Most are API-drivers. And less than 40% of the code is ever even fucking used! We found fucking JIRA API tokens and URIs hard-coded. And it is all synchronous and monolithic - no wonder it takes almost 20 hours to run a single fucking Excel sheet".

Together, the witchers figured out that each new billing account was morphed by The Beast into a new JIRA issue, if none was open yet for it. Transactions were used to update the outstanding balance on the issues regarding the billing accounts. The currency conversion API was used too often, and its purpose was only to give a rough estimate of the total balance in each Jira issue in USD, since each issue could have transactions in several currencies. The Beast would consume the Excel sheet, do some cryptic transformations on it, and for each resulting line access the currency API and upsert a JIRA issue. The secrets of those transformations were still hidden from the witchers. When and why The Beast would send emails was still a mystery.

As the Witchers Council approached an end and all were armed with knowledge and information, they decided on the next steps.

The Wild Witcher, known in every tavern in the land and by the sea, would create a connector to The Red Port of Redis, where every currency conversion is already updated by other processes and can be quickly retrieved inside the VPC. The Greenhorn Witcher is to follow him and build an offline process to update balances in JIRA issues.

The Butchers of Jarfile were to build The Juggler, an automation that should be able to receive a parquet file with an insertion plan and asynchronously update the JIRA API with scores of concurrent requests.

The Smooth Witcher, proud of his new lead, was to build The Oracle Watch, an order that would guard the Oracle (DB) at the Temple of Cash-Flow and report every qualifying transaction to parquet files in AWS S3. The Data would then be pushed to cross The Event Bridge into The Cluster of Sparks and Storms.

This Witcher Who Writes is to ride the Elephant of Hadoop into The Cluster of Sparks and Storms, to weave the signs of Map and Reduce and with speed and precision transform The Data into The Insertion Plan.

However, how exactly is The Data to be transformed is not yet known.

Will the Witchers be able to build The Data's New Path? Will they figure out the mysterious transformation? Will they discover the Undocumented Java Tool's secrets on notifying customers and aggregating data?

This story is still afoot. Only the future will tell, and I will keep you posted. -
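The Juggler's core idea (many concurrent requests to the JIRA API, without flooding it) can be sketched in Python with `asyncio` and a semaphore. This is a hypothetical illustration, not the witchers' design: `upsert_issue` fakes the HTTP call, the cap of 20 in-flight requests is arbitrary, and reading the parquet insertion plan is left out (rows are assumed to be already loaded as dicts).

```python
import asyncio


async def upsert_issue(row: dict) -> str:
    """Hypothetical stand-in for one JIRA "create or update issue" request."""
    await asyncio.sleep(0.01)  # simulate network latency
    return row["account"]


async def juggle(plan: list[dict], max_in_flight: int = 20) -> list[str]:
    """Run one upsert per plan row concurrently, at most `max_in_flight` at a time."""
    limit = asyncio.Semaphore(max_in_flight)

    async def worker(row: dict) -> str:
        async with limit:  # waits here while max_in_flight requests are running
            return await upsert_issue(row)

    # gather() returns results in the same order as the input rows.
    return await asyncio.gather(*(worker(row) for row in plan))


if __name__ == "__main__":
    plan = [{"account": f"ACC-{i}"} for i in range(100)]
    results = asyncio.run(juggle(plan))
    print(len(results), results[0])  # 100 ACC-0
```

Compared with the old synchronous, one-line-at-a-time Beast, total time becomes roughly (rows / cap) times the latency of one call, while the semaphore keeps the load on the API predictable.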

You build a system that integrates with an API to save the client hours of data entry per day, reducing the number of fields that need to be filled in manually by 75% by querying for the rest of the data and filling in the blanks. It took weeks of building and researching and bug fixing, and when you're finally done the client looks at you unimpressed.

The same client gets a small piece of JS that gets the user's location (by IP address) and uses it to customize a hello message on the home page, and they think 'yer a wizard, Harry!' and jump for joy over the "cool factor" of this simple hack. -

My god the wall looks really punchable right now. Let me tell you why.

So I’m working on a data mining project, and I’m trying to get data from google trends. Unfortunately, there have been a lot of roadblocks for what should have been an easy task.

First it won’t give a raw search volume, only relative “interest”.

Fortunately it lets me compare search terms, which would work for my needs however it will only let me compare a few at a time. I need to compare 300.

So my solution is simple: compare all the terms relative to one term. Simple enough, but it would be time consuming so I figured I’d write a program to get the data.

But then I learned that they don’t have an official api. There’s a node module for this very thing based on a python module that reverse engineers the api endpoints. I thought as long as it works I’d use it.

It does work... But then I discovered that google heavily rate limits the endpoints.

So... I figured I’d build a system to route the requests through different Tor nodes to get around the rate limit. Good solution, right? Well, like a slap to the face, after spending way too much time getting requests through Tor working, I discovered that THEY FUCKING BLOCKED TOR IPS.

So I gave up, and resigned to wait 5 hours for my program to get the data... 1 comparison at a time... 60s interval between requests. They, of course, don’t tell you the rate limit threshold, so this is more or less a guess (I verified that 30s interval was too short and another person using the module suggested 60s).

Remember when I said the discovery that they blocked Tor came like a slap to the face? This came as a sledgehammer to the face: for some reason my program didn’t dump the data at the end. I waited 5 fucking hours to get nothing.

I am so mad right now. I am so fucking mad. -
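The two painful lessons here (an undocumented rate limit and a single dump at the very end) suggest a different loop shape: pause between requests and persist every result the moment it arrives. A minimal Python sketch; `fetch` is a hypothetical callable standing in for whatever module queries Trends, and the 60-second default is only the guess quoted above.

```python
import json
import sys
import time
from typing import Callable, TextIO


def fetch_all(terms: list[str], fetch: Callable[[str], object], out: TextIO,
              interval_s: float = 60.0,
              sleep: Callable[[float], None] = time.sleep) -> list[object]:
    """Fetch one comparison per term with a fixed pause between requests.

    Each result is written and flushed as soon as it arrives (one JSON object
    per line), so a crash or a missing final dump loses at most one request.
    """
    results = []
    for i, term in enumerate(terms):
        if i:
            sleep(interval_s)  # fixed gap; the real threshold is undocumented
        result = fetch(term)
        out.write(json.dumps({"term": term, "result": result}) + "\n")
        out.flush()
        results.append(result)
    return results


if __name__ == "__main__":
    # Hypothetical fetcher; a real one would call the Trends endpoint.
    fetch_all(["term a", "term b"], lambda t: {"interest": len(t)}, sys.stdout, interval_s=1.0)
```

In a real run, `out` would be a file opened in append mode, so a restart picks up where the last run stopped instead of starting over.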

!rant

So my PM gave me a task and estimated it at 6 days. I was like, well, that's a vacation for me, isn't it :). I started it 3 days later and read the description... Get these APIs into this app..etc..mvp and all... so I worked on the views first. Later I found out that the APIs were totally incompatible, and no such data was found or COULD BE MADE for the app. That was day 1 :)

I kept publishing apks with empty views, nice empties If I do say so, and just said we have to wait for backend to make tokens and data. Vacation starts, (sorry boss if you're reading this :D)

On day 6, the PMs were just rushing up and down, contacting backend, back to me, then backend, office ping pong (a lovely sight), til the senior SysAdmin said it's impossible. Of course I knew this, buuuut, who would miss such a lovely opportunity.

PS: to all PMs, keep on dreaming those impossible ideas :) -

TL;DR

Why do such shitty "APIs" exist that are even harder to write than proper ones? D:

Trying to hack my ventilation at home.

This API is so horrible D:

The API is based only on POST requests, whether you want to write values or read them, and the response only contains XML with cryptic values like:

<?xml version="1.0" encoding="UTF-8"?>
<PARAMETER>
<LANG>de</LANG>
<ID>v01306</ID>
<VA>00011100000000000000000010000001</VA>
<ID>v00024</ID>
<VA>0</VA>
<ID>v00033</ID>
<VA>2</VA>
<ID>v00037</ID>
<VA>0</VA>

Also there are multiple API routes like

POST /data/werte1.xml
POST /data/werte2.xml
POST /data/werte3.xml
POST /data/werte4.xml

And actually the real API route is only given in the request body and not in the path.

Why is this so shitty? D:<

Btw, in terms of security this is also top-notch. Once any one computer sends the login password, it just saves the login globally.

I mean why even ask for a password then? D:

That made me end up with a cronjob to send a login request so I don't have to login on any device.

PS:

You see, great piece of German engineering. -
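Since the response pairs each value with its ID only by position, a small parser at least turns it into a lookup table. A Python sketch using the standard library's `xml.etree.ElementTree`; it assumes every `<VA>` directly follows its `<ID>` as in the excerpt above, and it makes no attempt to decode what the IDs or bit-string values mean. `parse_parameters` is a hypothetical name.

```python
import xml.etree.ElementTree as ET


def parse_parameters(xml_text: str) -> dict[str, str]:
    """Turn the flat <ID>/<VA> sibling sequence into an {id: value} dict."""
    root = ET.fromstring(xml_text)
    values: dict[str, str] = {}
    current_id = None
    for el in root:  # direct children of <PARAMETER>
        if el.tag == "ID":
            current_id = el.text
        elif el.tag == "VA" and current_id is not None:
            # Each <VA> belongs to the <ID> that immediately precedes it.
            values[current_id] = el.text or ""
            current_id = None
    return values


if __name__ == "__main__":
    sample = """<PARAMETER>
<LANG>de</LANG>
<ID>v00024</ID><VA>0</VA>
<ID>v00033</ID><VA>2</VA>
</PARAMETER>"""
    print(parse_parameters(sample))  # {'v00024': '0', 'v00033': '2'}
```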

Boss"So, we need to get some data about the users using the APIs from this list of sites."

Me"Alright, sounds feasible enough"

Navigating to first site.

M"Hold on, where's the API?"

B"What do you mean? You're looking at it."

M"This is a website with a search bar, not an API"

B"Same thing. Get to scraping that data."

M"I-It's written in a JS framework to be reactive in a half-assed way."

B"We need that data"

M"The data is not even consistent!"

B"That's why we need to join it with all these different sources."

The API was a lie. None of the sites had anything remotely similar to an API.

Having to use bloody Selenium with chrome driver to scrape all the information because of course, it has to be done programmatically every week from now on.

I just hope no captcha of any kind is installed before I finish this project. -

So here I am testing some python code and writing to a file. No big deal. But damn is it taking a long time to get data back from this API. Ah it's fine I'll let it work in the background.

40 minutes later.

Oh! The requests timed out. No big deal. I'll just cut out the parts that are already done.

1st request in.

I wonder what the file is looking like.

Only showing 1 request.

waitaminute.jpeg

I should have more than that.

*Suddenly realizes that I was writing to the file and not appending.

Fuuuuuuuuuuuuuuuuuuck -
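The whole bug comes down to the file mode: `"w"` truncates the file every time it is opened, while `"a"` keeps what is already there and adds to the end. A minimal Python illustration; `save_batch` is a hypothetical helper.

```python
import os
import tempfile


def save_batch(path: str, lines: list[str], append: bool = True) -> None:
    """Write one batch of results to `path`.

    Mode "w" would replace everything written by earlier batches;
    mode "a" adds this batch after them.
    """
    mode = "a" if append else "w"
    with open(path, mode, encoding="utf-8") as f:
        for line in lines:
            f.write(line + "\n")


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "results.txt")
    save_batch(path, ["request 1"])
    save_batch(path, ["request 2"])                # appended
    with open(path, encoding="utf-8") as f:
        print(f.read().splitlines())               # ['request 1', 'request 2']
    save_batch(path, ["request 3"], append=False)  # truncates first
    with open(path, encoding="utf-8") as f:
        print(f.read().splitlines())               # ['request 3']
```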

Facebook is a giant piece of shit. Not only is their platform a massive contributor to mental illness, even their APIs are fucking garbage. I'm trying to use their ads API and what it does is it hijacks the entire fucking request so you can't even extract data from the request after calling it. Fuck Facebook and everything they've ever "contributed" to society.

-

This is what happened today in our daily:

Lead: We need to profile our software

Me: You can use the chrome devtools as remote profiler, even on prod, or make HAR files for later inspection.

Lead: Yeah but no that’s just collecting data on every tick, we need something like “has been called x times”

Me: Yeah but you can filt -

Lead: Yeah no, so back when I wrote code in Delphi...

Me: *oh god no not this again*

Lead: ... We could have clicked a button in our IDE and it would wrap the function call with the API call to profile that function ...

Me, to the secret dev group in Slack: don't a simple method decorator and the Node performance API help with that?

The people in the group: We had this topic last Friday all day...

Me: oh well *gets coffee and ignores lead* -
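The suggestion from the Slack group (a decorator that counts calls and measures time) works in most languages. This Python sketch uses `time.perf_counter` in place of Node's performance API, which the original refers to; `profiled` is a hypothetical name, and the counters are plain attributes on the wrapper rather than a real profiler's report.

```python
import functools
import time


def profiled(func):
    """Count calls to `func` and accumulate their wall-clock time."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Runs even if func raises, so failed calls are counted too.
            wrapper.calls += 1
            wrapper.total_s += time.perf_counter() - start

    wrapper.calls = 0
    wrapper.total_s = 0.0
    return wrapper


@profiled
def square(x: int) -> int:
    return x * x


if __name__ == "__main__":
    for i in range(5):
        square(i)
    print(f"square: {square.calls} calls, {square.total_s:.6f} s total")
```

This gives exactly the "has been called x times" number the lead asked for, with no IDE button required.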

What the actual fuck...

What kind of API does not do data integrity validation, and allows me to subscribe a user to a newsletter list with a non-existent list ID?

That's some fucking bullshit. fucktards at www.make.as -

Look honey, I wrote this little function that calls an api and submits 80% of the data to my submit form based on the input you give it, ain't that cool?

Her: "Yeah that's okay"

Me: Yeah, yeah it is.. -

Android dev job question:

"Describe the activity lifecycle and write an application that does x,y,z in accordance with it"

Fullstack dev job question:

"Write some code that interacts with our API and does x,y,z, put the data into our database and build a web interface"

Java backend dev interview:

"BUILD AN ELEVATOR ALGORITHM WITH LESS THAN O(N LOG N), FIND NEIGHBORS IN A BINARY TREE, WHAT IS THE DIFFERENCE BETWEEN AN INTERFACE AND AN ABSTRACT CLASS?"

Why? -

Fuck everything about Microsoft Dynamics. I'm supposed to use the REST API to make a web front-end. I notice all of the data comes back codified.

null == 0.

boolean true == 100000000

boolean false == 100000001

except sometimes when

boolean false == 100000000

boolean true == 100000001

or other times

string "Yes" == 100000000

string "No" == 100000001

string "Maybe" == 100000003

Hang on. Is the system representing a 1 bit value with base 10 numbers? Did the client set this up like this? Holy crap every number corresponds to a unique record in a table somewhere. That means it only returns numeric values instead of strings and I have to figure out what the number means in the context of the table.

A "key" is user-typed? So every time someone starts to make a new record it saves a new "key" without a record? So I can pull a bunch of "0" records if I pull sequentially? So basically I need to see all of the data in Dynamics to have any context at all for what is returned from the Dynamics API? Fuuuuuuuuuu -
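Unless the API can be made to return readable values itself, the client ends up keeping a lookup table per field, because the same integer means different things in different fields. A Python sketch of that approach; every field name and mapping here is hypothetical, loosely copied from the codes in the rant, and a real table would be built from the system's own option definitions.

```python
# Hypothetical per-field lookup tables. One global map cannot work,
# because 100000000 means True in one field and False in another.
OPTION_SETS: dict[str, dict[int, object]] = {
    "is_active": {100000000: True, 100000001: False},
    "is_archived": {100000000: False, 100000001: True},
    "answer": {100000000: "Yes", 100000001: "No", 100000003: "Maybe"},
}


def decode(record: dict[str, object]) -> dict[str, object]:
    """Replace option-set codes with their meaning, field by field."""
    decoded = {}
    for field, raw in record.items():
        table = OPTION_SETS.get(field)
        # Unknown fields and unknown codes pass through unchanged for inspection.
        decoded[field] = table.get(raw, raw) if table is not None else raw
    return decoded


if __name__ == "__main__":
    print(decode({"answer": 100000003, "is_active": 100000001, "name": "Acme"}))
    # {'answer': 'Maybe', 'is_active': False, 'name': 'Acme'}
```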

management logic.

dev : calling api on every product scroll is a stupid idea. we shouldn't do it. what if user has 100s of products bought?

mgmt : it isn't a practical scenario. in prod, we checked the data and we rarely have customers with more than 20 products

dev : 😮🤷♂️

dev : this is a rare issue that only happens for very old devices from this specific manufacturer. even manufacturers have acknowledged this.

mgmt : we don't care. fix it, as per data this error has been logged more than 12 times (from 1 user only)

dev : 😮😢 -

Oh I have quite a few.

#1 a BASH script automating ~70% of all our team's work back in my sysadmin days. It was like a Swiss army knife. You could even do `ScriptName INC_number fix` to fix a handful of types of issues automagically! Or `ScriptName server_name healthcheck` to run HW and SW healthchecks. Or things like `ScriptName server_name hw fix` to run HW diags, discover faulty parts, schedule a maintenance timeframe, raise a change request to the appropriate DC and inform service owners by automatically chasing them for CHNG approvals. Not to mention you could `ScriptName -l "serv1 serv2 serv3 ..." doSomething` and similar shit. I am VERY proud of this util. My employer liked it as well and gave me an award. Bought a nice set of Swarovski earrings for my wife with that award :)

#2 a JAVA sort-of-lib - a ModelMapper - able to map two data structures with a single util method call. Defining datamodels like https://github.com/netikras/... (note the @ModelTransform anno) and mapping them to my DTOs like https://github.com/netikras/... .

#3 a @RestTemplate annotation processor / code generator. Basically this dummy class https://github.com/netikras/... will be a template for a REST endpoint. My anno processor will read that class at compile-time and build: a producer (a Controller with all the mappings, correct data types, etc.) and a consumer (a class with the same methods as the template, except when called these methods will actually make the required data transformations and make a REST call to the producer and return the API response object to the caller) as a .jar library. Sort of a custom swagger, just a lil different :)

I had #2 and #3 open-sourced but accidentally pushed my Nexus password to GitLab. Ever since, my utils have been in a private repo :/ -

Be me

Need to fetch data from a website for project I'm working on

Look for an API with no luck

Spend 2 weeks developing a ridiculously overengineered and slow webscraping program with Selenium

Find an API

Fuck this -

Developing a notification API, sends emails to subscribers, email API can take only 100 IDs at once, so partitioned the email list and send mails in blocks of 100.

Forgot to reset the list after every block, so each new partition got appended to the existing list and kept going on.

Ran it against a test DB, which was recently refreshed with near-prod data!!! Thousands of emails went out of the app server in one shot, and everybody received numerous duplicate emails, especially the ones in the very first partition.

Got an incident raised by the CEO himself regarding the flurry of emails. But things were out of our hands, quite literally. All emails were queued up in the Exchange server.

Called up the exchange server team, purged the queued emails. No other emails were sent/received during this whole episode.

Thanks to Iterables.partition in the present day. -
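Guava's `Iterables.partition` is Java; a Python equivalent of the fix is a generator that yields a fresh chunk each time, so nothing from one batch leaks into the next (Python 3.12+ also ships `itertools.batched`, which yields tuples). A minimal sketch with a hypothetical recipient list:

```python
from typing import Iterator, Sequence


def partition(items: Sequence[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive chunks of at most `size` items.

    Each chunk is a new list built from a slice, unlike the buggy version,
    which kept appending to one list that was never cleared between blocks.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


if __name__ == "__main__":
    recipients = [f"user{i}@example.com" for i in range(250)]
    for batch in partition(recipients, 100):
        print(len(batch))  # prints 100, then 100, then 50
```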

Wtf Firefox? Are you serious?

I made an extension (https://addons.mozilla.org/en-US/...) that uses the Storage API to store preferences. On their website, the permissions section displayed "Access your data for all websites". Some guy gave it 1 star and left this message: "This does not need all my browsing information."

For Firefox, I'm worse than Facebook. Get your facts right. -

Neat: MongoDB. Fairly easy to use, intuitive-ish JSON API. Thinking about using it on a project. Excitement.

Neater: Data validation. You can have it drop writes that don't match a schema. Excitement intensifies.

Braindead: It absolutely will not tell you exactly *why* the write doesn't meet the schema, leaving you to figure that out on your own, smart guy. Mongo smugly crosses its arms and tells you to go back and do it right without actually telling you what the problem is.

Fucking braindead: This has been an open feature request since year of our lord two-thousand-and-fucking-fifteen. https://jira.mongodb.org/browse/... -

>uni project

>6 people in group

>3 devs (including me)

I am in charge of electronics and software to control it as well as the application that will use them.

2 other "devs" in charge of a simple website.

Literally, static pages, a login/registration, and a dump of data when users are logged in.

Took on writing the api for the data as well, since I didn't fully trust the other 2.

Finished api, soldered all electronics, 3d printed models.

Check on the website.

Ugly af, badly written html and css.

No function working yet.

Project is due next week Thursday.

Guess who's not having a weekend and gonna be pulling 2 all-nighters -

While in the banking world, I had a project where I had to automate an import into a shit system called CRAWiz. The data had to come from multiple archaic loan systems with no API and tons of shit data.

After implementing, the shit data came to light. Instead of fixing shit data (and using their loan systems correctly), they decided to go back to digging through physical files and manually importing. They blamed CRAWiz and decided to go with a new system to import their shit data into. I warned them repeatedly that a new system would not fix the shit data but they couldn't accept it. I left at that point. 😂 -

Testing an attendance machine API one by one so I know what does what.

And there’s an API for wiping all the attendance data stored in the machine.

I didn’t realize it until I pushed the damn button.

The attendance machine just became fresh like new 😱😨😰

Shit.

A testing session just became an extreme sport.

Thankfully the IT guy has a backup, but only up to last month.

Well, it’s better than nothing, isn’t it?

He just told his boss that the machine ran out of memory and the attendance data for the current month was not saved.

And he asked him to buy me a machine for testing.

Yes, I was living on the edge by testing on the production machine. -

A government website that I wanted to try and scrape data from to make a better app, I've actually found to be the pinnacle of a demonstration of what NOT to do...

It contains a JavaScript file that not only has its code copied 3 times (changed the tiniest bit in each), once per environment, but ALSO has the API keys for all 3 environments, AND the APIs they've made it call from there pass FULL SQL right in the query string...

What. The. Actual. Fuck?! -

-- This is my first rant so sorry if it's bad--

We have a nice project that I am working on that needs to store and interact with location data. It is a .NET Core API using Entity Framework Core to interact with the database. All good and well. Until today when I started working on the implementation of storing location data we retrieve from mobile devices.

SQL has a nice data type named: "Geography" which can store a location and do calculations on it with queries. Such as proximity and distance which is what we need.

But then it turns out that Entity Framework Core does not have support for the spatial data types, even though version 6 did have spatial support.

Then i found the following issue on GitHub: https://github.com/aspnet/...

Turns out this feature has been requested since 2014 and is even on the "High-priority" list and is still not implemented to this day. Even though in the issue many people are asking to have this implemented.

WHY IS THIS TAKING SO LONG MICROSOFT!!

So now I have to figure out how to work around this. But that is an issue for tomorrow. -

Was writing a functional test in AdonisJS that queries an API endpoint with data and my test stays red with a dainty `expected 500 to equal 200` assertion failure.

In frustration, I yelled "What must I fuchen do to get my 500 to become a 200". Then my dev friend, an absolute fuchen genius tells me, "Subtract 300." I hope the prat stays debugging his code for a week!! -

Got myself into Facebook's Graph-API...

...everything is so easy and well optimized.

NOT! Now I have to optimize the request to reduce load time. Why is their data so fractured? -

I would like to know if anyone has created a CSV file which has 10,000,000 objects?

1) The data is received via an API call.

2) The maximum data received is 1000 objects at once. So it needs to be in some loop to retrieve and insert the data. -
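A minimal Python sketch of that loop, assuming a hypothetical `fetch_page(offset, limit)` call (the real API may paginate with cursors or page tokens instead). Rows are streamed into the CSV one page at a time rather than collected in memory first, so memory use stays bounded by a single page of 1000 objects. Open the output file with `newline=""`, as the `csv` module documentation recommends.

```python
import csv
from typing import Callable, TextIO

PAGE_SIZE = 1000  # per the post: at most 1000 objects per call


def export_to_csv(fetch_page: Callable[[int, int], list[dict]], out: TextIO,
                  fieldnames: list[str]) -> int:
    """Page through the API and stream rows straight into a CSV file.

    Returns the number of rows written.
    """
    writer = csv.DictWriter(out, fieldnames=fieldnames)
    writer.writeheader()
    offset = total = 0
    while True:
        page = fetch_page(offset, PAGE_SIZE)
        if not page:
            break
        writer.writerows(page)
        total += len(page)
        if len(page) < PAGE_SIZE:  # a short page means we've reached the end
            break
        offset += len(page)
    return total


if __name__ == "__main__":
    fake_rows = [{"id": i, "name": f"obj{i}"} for i in range(2500)]

    def fetch(offset: int, limit: int) -> list[dict]:
        # Hypothetical offset/limit fetcher backed by an in-memory list.
        return fake_rows[offset:offset + limit]

    with open("export.csv", "w", newline="", encoding="utf-8") as f:
        print(export_to_csv(fetch, f, ["id", "name"]))  # 2500
```

At 1000 objects per call, 10,000,000 objects means 10,000 requests, so retries and a resumable offset are worth adding before a real run.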

My gods, fuck WordPress in the backend!

Why did my company decide on writing a data collection api with many dynamic pages on top of WordPress. It's a blog, not a wonder tool you dumbwits.

Never have I had more fuck this moments per hour.. -

Working on a new payment gateway for one of my customers, and it turns out that instead of just specifying the parameters for what to include in the API call they want you to use their drop-in module for it...which is still written in PHP 4 and hasn't been updated since 2011. Also turns out that they only accept data formatted in XML.

Not insurmountable, but more than I feel like dealing with right this moment... -

When you're trying to find out which API endpoint a page gets its data from, you put breakpoints on every endpoint, and none of them get hit when the page loads. -

I'm debugging a script...

It takes over a minute to start because it loads data from a remote API, and apparently loading 80k objects takes a lot of time, even though I only need the headers.

I could optimize it. Like, add a local cache. But I will not.

Instead I will waste 1 minute, then another minute, then another minute, each time hoping it's the last pass, but no. I will waste the whole day on it and at the end of the day I will still NOT have the slightest idea why it is slow. That is what will happen, I predict it.

Good times.
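
For the record, the local cache being declined here can be small. A minimal sketch, assuming the remote payload is JSON-serializable; `load_remote` is a hypothetical stand-in for the slow API call, and the one-hour freshness window is an arbitrary choice.

```python
import json
import os
import time
from typing import Any, Callable


def cached_load(path: str, load_remote: Callable[[], Any], max_age_s: float = 3600) -> Any:
    """Return data from a local JSON cache if it is fresh enough, else fetch and cache it."""
    try:
        if time.time() - os.path.getmtime(path) < max_age_s:
            with open(path, encoding="utf-8") as f:
                return json.load(f)
    except (OSError, ValueError):
        pass  # no cache yet, unreadable, or corrupt JSON: fall back to the remote call
    data = load_remote()  # the slow part (over a minute for 80k objects, per the post)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(data, f)
    return data
```

Usage would look like `objects = cached_load("objects_cache.json", fetch_objects)`, where `fetch_objects` is whatever currently hits the API. Since only the headers are needed, caching just those would shrink the file further.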

I work in a dev company. One of our clients hired us to help them out as their devs are failing with their deadlines.

I had to expose app services via an API. I did it. The client company's devs didn't like the way I did it, because I rewrote their data models and declared them API-use-only. They demanded that I return the backend services' data structures instead.

I didn't agree and waited until the deadline to submit my code.

Now they are honestly thanking me for what I did as I've saved them from a forever-mutating-api-and-angry-integration-customers hell.
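
What "API-use-only" models buy you can be sketched in a few lines. This is a minimal illustration, not the author's code: `UserRecord` and `UserDto` are hypothetical names, and the point is only that an explicit mapping keeps internal changes from leaking into the public contract.

```python
from dataclasses import asdict, dataclass


@dataclass
class UserRecord:
    """Hypothetical internal back-end model: free to change at any time."""
    id: int
    first_name: str
    last_name: str
    password_hash: str


@dataclass(frozen=True)
class UserDto:
    """API-only model: the stable contract that integrators code against."""
    id: int
    display_name: str


def to_dto(record: UserRecord) -> UserDto:
    # The explicit mapping is the firewall: renamed or added internal fields
    # stop here instead of leaking into every integration customer's client.
    return UserDto(id=record.id, display_name=f"{record.first_name} {record.last_name}")


payload = asdict(to_dto(UserRecord(1, "Ada", "Lovelace", "not-a-real-hash")))
print(payload)  # {'id': 1, 'display_name': 'Ada Lovelace'}
```

Returning the back-end structures directly would have exposed `password_hash` and turned every internal refactor into a breaking API change.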

I'm not sure whether I should be happy or worried. I forced my solution onto them, which isn't professional. But the customer is happier now than they would have been otherwise.

What do you do in such situations?

This utilization shit is stupid! Seriously man, what the hell! Yes, yes, it's an important number, yes, yes, I don't even care. You want me to increase my utilization and at the same time be wary of the budget, which is unrealistically tight to begin with. It's freaking impossible! Who comes up with this shit?

You know what? Half of this shit ain't even my fault! A project was set for 200 hours and a guy wasted half of that trying to figure out just HOW TO CONNECT TO THE API! Like, the guy only wrote 30 lines in 100 HOURS! ARE YOU FREAKING KIDDING ME! THEN YOU PASS THE PROJECT OVER TO ME AND SAY YOU HAVE ONLY 100 HOURS LEFT TO CONNECT TO THE API, GET THE DATA (WHICH BTW DOESN'T EVEN EXIST), PARSE IT, AND THEN CREATE GRAPHS AND A FULLY FUNCTIONAL SOFTWARE, WITH A USER INTERFACE THAT SHOULD RUN AS AN EXECUTABLE!!!! ME? ALONE?

MAN, FUCK YOU!

My first rant in ages

I'm working on a new project at a new company. We have a bunch of front-end clients talking to an API.

I suggested that the API only communicate in terms of view models, to bring some kind of standardisation to the project, since at the time the GETs and POSTs were either DB entities, view models, or just whatever the dev at the time decided.

I got a no, but was told we could do POSTs and GETs with just database entities. OK, better than nothing...

In the front-end Angular app, I implemented a generic form component and a generic data table component. The models passed to them to build the components need to implement a view model interface.

Now we have the problem that the API doesn't give us view models while the front end needs them, so I put together a way to handle this in the front end.

My colleague with 8 years of experience asks for my help and I'm happy to oblige. It turns out a model should have multiple child models in the database, but the database entity models don't reflect this, so there is no way to build the view models. The data just isn't there from the API... Still, I show him what the front-end model should look like and write all the front-end code for him to handle that.
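
Why the back end has to model the relationship can be shown with a small sketch. The `Order`/`OrderLine` names are hypothetical and Python stands in for the TypeScript/back-end code in the story: a view model with a list of children can only be built if the entity actually carries all of its child rows.

```python
from dataclasses import dataclass, field


@dataclass
class OrderLineEntity:
    product: str
    quantity: int


@dataclass
class OrderEntity:
    id: int
    # One-to-many: the entity carries *all* of its child rows.
    # A one-to-one shape (a single `line` attribute) could only ever hold one.
    lines: list = field(default_factory=list)


@dataclass
class OrderViewModel:
    id: int
    items: list
    total_quantity: int


def to_view_model(order: OrderEntity) -> OrderViewModel:
    """Build the view model purely from data the entity actually has."""
    return OrderViewModel(
        id=order.id,
        items=[f"{line.quantity} x {line.product}" for line in order.lines],
        total_quantity=sum(line.quantity for line in order.lines),
    )
```

No front-end mapping can conjure the missing children if the entity only exposes one of them; the fix has to happen where `lines` is loaded.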

2 days later he asks for my help again. It's exactly the same problem. Instead of fixing the backend and setting up the one-to-many relationship, he has ignored the problem, retrieved a one-to-one relationship model, and is just trying to force it to work, even though the data isn't there. He has also commented out or removed all the code I helped him write, and overwritten a file of TypeScript models that gets autogenerated to keep us in sync with the backend...

I actually felt bad afterwards, but I got frustrated as hell and he could tell...

When the API you are supposed to use for fetching data returns a different item when the item you were looking for is not found! Dudes, WTF? Ever heard of 404 or something?
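
What the rant expects is a plain 404 for a missing item. A minimal sketch using only the standard library's `http.server`; the in-memory `ITEMS` store and the `/items/<id>` route are hypothetical. The lookup never substitutes a different item when the id is unknown.

```python
import json
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory store standing in for the real data source
ITEMS = {"1": {"id": "1", "name": "first"}, "2": {"id": "2", "name": "second"}}


def lookup(path: str) -> tuple:
    """Resolve /items/<id> to (status, body); unknown ids get 404, not a fallback item."""
    prefix = "/items/"
    if path.startswith(prefix):
        item = ITEMS.get(path[len(prefix):])
        if item is not None:
            return HTTPStatus.OK, item
    return HTTPStatus.NOT_FOUND, {"error": "not found"}


class ItemHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = lookup(self.path)
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Run the demo server until interrupted (not called automatically)."""
    HTTPServer(("127.0.0.1", port), ItemHandler).serve_forever()
```

With `serve()` running, `GET /items/1` returns the item and `GET /items/99` returns 404, so clients can tell "missing" apart from "here is something else".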


Yahoo Finance shut down all their historical data URLs and I'm out of options. No API or package works. I have a report to hand in on Tuesday for my viva and this is shit. Fuck. Months of my work just wasted.

It works locally, it works in Dev, it works in Test, but fails to deploy in UAT. Is it a data issue? I don't know, I don't have permissions to see the UAT database. Literally all I know is that this API is returning 500 instead of what it's supposed to return, but only sometimes.

Guess I'll sit here all day and try to solve the problem telepathically, as there is literally no way of troubleshooting other than scrolling through the code and hoping that a cartoon lightbulb appears above my head.

Finally got my anime api somewhat working.

Tomorrow (or in a couple of hours 😅) I'll try to register my first domain and get my first VPS(?) up and running.

The API features the data from /r/animethemes, so it'll have 2000+ anime entries with opening/ending URLs.

I've also tried to implement some form of searching ('%term%' stuff 🤣), but you'd better know your anime by its romanized name, or you're gonna have a bad time, since I have no alternative names per anime yet.
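
A minimal sketch of that `'%term%'` search with Python's built-in `sqlite3`, including where alternative names could plug in later. The schema, the `alt_names` table, and the two sample rows are hypothetical; the search term is bound as a parameter rather than pasted into the SQL string.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE anime (id INTEGER PRIMARY KEY, romanized_name TEXT NOT NULL);
    CREATE TABLE alt_names (anime_id INTEGER REFERENCES anime(id), name TEXT NOT NULL);
""")
# Hypothetical sample data
conn.executemany("INSERT INTO anime VALUES (?, ?)",
                 [(1, "Shingeki no Kyojin"), (2, "Boku no Hero Academia")])
conn.executemany("INSERT INTO alt_names VALUES (?, ?)",
                 [(1, "Attack on Titan"), (2, "My Hero Academia")])


def search(term: str) -> list:
    """Substring match on romanized OR alternative names (SQLite LIKE ignores ASCII case)."""
    pattern = f"%{term}%"
    rows = conn.execute(
        """
        SELECT DISTINCT a.romanized_name
        FROM anime a LEFT JOIN alt_names n ON n.anime_id = a.id
        WHERE a.romanized_name LIKE ? OR n.name LIKE ?
        ORDER BY a.romanized_name
        """,
        (pattern, pattern),
    ).fetchall()
    return [row[0] for row in rows]
```

`search("titan")` finds "Shingeki no Kyojin" through its alternative name. Note that `%` or `_` typed by a user still act as wildcards unless escaped with an `ESCAPE` clause.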

Today we start working on an app that learns biometric data from the user for extra security, so if someone else uses my account, the system will know and shut the bad user out. Although we use an API for the biometric data collection, it's still epic! 😀😀😀

The only bad thing is that the deadline is next week.

Meeting at 'Derp & Co'. The topic was which data model the back end should send to the front end & app via API calls:

- Coworker: 'we should send the data structured like this for reasons'.

- Me: 'Yeah, this nested object.object.object should do the trick for the front end, but it will be a pain in the ass to convert to POJOs. Why not use, idk, a better structure?'

<Mad/intrigued faces>

- CoworkerS: 'Why you need to use POJOs?'

- Me: <More Mad> 'cause I work with java in android... and we have/need/like objects?

<Captain Obvious left the room>

- CoworkerS: 'Oh yeah, well... we can do it the way you say'.

Why you need Objects... what's next?

- Git? For what? Didn't you have the USB key from day one?

Company A: Oh yes, we work with this huge tech company all the time and our APIs are just amazingly well made! DON'T WORRY!

Company B: Yeah, we've worked together once or twice and nothing seemed to go wrong the last time. DON'T WORRY!

Reality: 11 API warnings, no data transfer, and a SQL error, meaning nothing I've been working on actually worked. #Rantover

Client: I need you to integrate with this API.

Me: ok cool, but what are we doing with it (where does the data go/styling)?

Client: what do you think we should do?

Me: well it would be really cool if we did it like this *short and sweet explanation of really cool functionality and design*

Client: I LOVE IT! Let's do it, oh also I need it done by tomorrow...

Me: *GOD DAMNIT, why do you always do this...don't you dare say ok* ok, yeah I can totally do that.

...now at the market stocking up on Red Bull

Me, converting shit to JSON between a data pull and an API call even though I don't have to, just because the data only makes sense to my brain that way.

Just successfully converted my entire app from web-scraping-based data fetching to a direct API, by reverse-engineering their Android app to get at their private API.

The app is running much faster and more stably now. Feels good.

Me: I need some stickers

devRant: Give us some programming jokes

Me:

```python
# Take as many as you want
import requests

# API endpoint
URL = "http://devrant.com/jokes/"

# Send a GET request and save the response as a response object
r = requests.get(url=URL, params="funnyprogrammingjoke")

# Extract the data in JSON format
joke = r.json()

# Print the output
print(joke)
```

Boss: so we've got to call an app to verify data in this project. But I've got no more info and I'm on holiday next week. Please contact GuyA next week.

Me: ok I guess?

*writes email to GuyA*

GuyB: GuyA is on holiday, please hold the line

*1 week later*

GuyA: we need more time it's not ready yet

*2 weeks later*

Me: so?

GuyA: yeah, it's ready, here's the WSDL etc. Your client already has the password

*1 week later*

Me: yeah, so I got the data, but the API says my auth isn't working

GuyB: yeah, your user isn't activated on the test system. I'm gonna forward that and get back to you

*1 week later*

GuyA: so we're going live in about 2 weeks, how's testing going?

Me: well I'm still waiting for the response and activation

*suddenly it works*

Me: yeah, so auth is working but I can't find any data. Is there any special test data?

GuyA: oh no, there is NO test data on the test system. You need to wait for GuyB, but he is not here today...

Me: are you fking kidding me?????

...no response since then, and it's been days....

TL;DR: I was editing the wrong file. Let's go to bed.

We have this huge system that receives data from an API endpoint, does a whole bunch of stuff, going through three other servers, and then, via some calculation based on the data received from the UI and the data received from the endpoint, finally sends the calculated fields to the UI via websocket.

Poor me sitting for over 4 hours debugging and changing values in the logic file trying to understand why one of the fields ends up being null.

Of course, every change needs a restart of all 4 servers involved and a hard refresh of the UI.

I even tried to search for the word null in that file, but to no avail.

After scattering hundreds of console logs and pulling my hair out, I found out that I was editing the wrong file.

I guess it's time for some sleep.

Bloody fucking Crystal. Lame fucking excuse for a reporting engine product.

Can’t change data source from API for a report containing List of Values at runtime.

What the actual fuck! A reporting system where I can’t change the data source.

Die in hell, you fucking bloodsucking leeches. Die of malaria!

I am writing an API that gets data from another API, mixes it with some data from a database, and then sends it back.

I am using Node.js (JavaScript) to write it, but I would like to learn something new. Can you recommend any languages I could use for my backend? I was thinking maybe Go, but I am open to ideas.

I would like to present the new super API I have the "pleasure" of working with. The documentation (very poorly written, in a *.docx without a table of contents) says that communication is JSON <-> JSON, which is not entirely true. I have to POST the request as x-www-form-urlencoded with one field that contains the data encoded as JSON.

The response is JSON, but they set the Content-Type header to text/html, so Postman didn't prettify the body by default...

I'm attaching a screenshot as evidence.

I can't understand why people don't use frameworks and instead make other people's lives harder :-/
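
Working with such an API from code is doable with the standard library alone. A minimal sketch: the JSON payload goes into a single form field, and the body is parsed as JSON regardless of the `text/html` Content-Type. The endpoint URL and the field name `data` are hypothetical, since the post names neither.

```python
import json
import urllib.parse
import urllib.request

API_URL = "https://api.example.com/endpoint"  # hypothetical endpoint
FIELD = "data"  # hypothetical name of the single form field


def build_request(payload: dict) -> urllib.request.Request:
    """Wrap the JSON payload in one x-www-form-urlencoded field, as the API demands."""
    body = urllib.parse.urlencode({FIELD: json.dumps(payload)}).encode("ascii")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def parse_response(raw: bytes, charset: str = "utf-8") -> dict:
    """Decode the body as JSON, ignoring the (wrong) text/html Content-Type."""
    return json.loads(raw.decode(charset))


def call_api(payload: dict) -> dict:
    with urllib.request.urlopen(build_request(payload), timeout=30) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return parse_response(resp.read(), charset)
```

Keeping request building and response parsing separate from the network call makes the quirks easy to test without hitting the real service.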


How do I make my manager understand that something isn’t doable no matter how much effort, time and perseverance are put into it?

———context———

I’ve been tasked with optimizing a process that goes through a list of sites using the API that manages said sites. The main bottleneck of the process is the requests made to the API. I went as far as making multiple accounts so multiple tokens could fetch the data, balancing the load across the different accounts, making requests in parallel, and spawning dedicated subprocesses for each chunk. None of this helps much, since we end up getting rate limited anyway. As for the maintainer of the API, it’s a straight no-can-do if we ask them to relax the rate limit for us.

Essentially I did everything you could possibly do to optimize the process, and yet… it’s not enough: it doesn’t fit the 2-day maximum processing time spec I was given. So I decided to tell them that the spec doesn’t match what’s possible, but they insist on 2 days.
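
The argument to the manager is really arithmetic: under a fixed per-token rate limit, no amount of parallelism beats total requests divided by the aggregate allowed rate. A minimal sketch; all the figures below are hypothetical, since the post gives none.

```python
import math


def min_hours(sites: int, requests_per_site: int, limit_per_hour: int, tokens: int) -> float:
    """Lower bound on run time when each token may make at most limit_per_hour requests.

    Parallel requests, sub-processes and load balancing can only approach this
    bound; none of them can get below it.
    """
    total_requests = sites * requests_per_site
    return total_requests / (limit_per_hour * tokens)


def tokens_needed(sites: int, requests_per_site: int, limit_per_hour: int,
                  deadline_hours: float) -> int:
    """Smallest token count that could, in theory, meet the deadline."""
    return math.ceil(sites * requests_per_site / (limit_per_hour * deadline_hours))


# Hypothetical figures: 50,000 sites x 20 requests each,
# 5,000 requests/hour per token, 4 tokens, 48-hour deadline.
hours = min_hours(50_000, 20, 5_000, 4)
print(f"{hours:.0f} h = {hours / 24:.1f} days")  # 50 h = 2.1 days
print(tokens_needed(50_000, 20, 5_000, 48))      # 5
```

Plugging in the real site count, requests per site and rate limit turns "it isn't doable" into a number the spec can be checked against.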

I’ve even proposed a valid alternative, but they don’t like it either. Admittedly it’s not the best, as it’s marked as “deprecated”, but it would allow us to process data in real time instead of iterating over each site.